#check column SQL

Explore tagged Tumblr posts

Text

Checking for the Existence of a Column in a SQL Server Table

To check if a column exists in a SQL Server table, you can use the INFORMATION_SCHEMA.COLUMNS system view, which provides information about all columns in all tables in a database. Here’s a SQL query that checks if a specific column exists in a specific table: IF EXISTS ( SELECT 1 FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_NAME = 'YourTableName' AND COLUMN_NAME = 'YourColumnName' ) BEGIN PRINT…

View On WordPress

#check column SQL#INFORMATION_SCHEMA.COLUMNS#SQL Server column existence#SQL Server table structure#validate SQL column

0 notes

Text

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a distributed computing system like Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For Free Tutorials for Programming Languages Visit-https://www.tpointtech.com/

2 notes

·

View notes

Text

Hmm. Not sure if my perfectionism is acting up, but... is there a reasonably isolated way to test SQL?

In particular, I want a test (or set of tests) that checks the following conditions:

The generated SQL is syntactically valid, for a given range of possible parameters.

The generated SQL agrees with the database schema, in that it doesn't reference columns or tables which don't exist. (Reduces typos and helps with deprecating parts of the schema)

The generated SQL performs joins and filtering in the way that I want. I'm fine if this is more a list of reasonable cases than a check of all possible cases.

Some kind of performance test? Especially if it's joining large tables

I don't care much about the shape of the output data. That's well-handled by parsing and type checking!

I don't think I want to perform queries against a live database, but I'm very flexible on this point.

5 notes

·

View notes

Text

LDAP testing & defense

LDAP Injection is an attack used to exploit web based applications that construct LDAP statements based on user input. When an application fails to properly sanitize user input, it's possible to modify LDAP statements through techniques similar to SQL Injection.

LDAP injection attacks are common due to two factors:

The lack of safer, parameterized LDAP query interfaces

The widespread use of LDAP to authenticate users to systems.

How to test for the issue

During code review

Please check for any queries to the LDAP escape special characters, see here.

Automated Exploitation

Scanner module of tool like OWASP ZAP have module to detect LDAP injection issue.

Remediation

Escape all variables using the right LDAP encoding function

The main way LDAP stores names is based on DN (distinguished name). You can think of this like a unique identifier. These are sometimes used to access resources, like a username.

A DN might look like this

cn=Richard Feynman, ou=Physics Department, dc=Caltech, dc=edu

or

uid=inewton, ou=Mathematics Department, dc=Cambridge, dc=com

There are certain characters that are considered special characters in a DN. The exhaustive list is the following: \ # + < > , ; " = and leading or trailing spaces

Each DN points to exactly 1 entry, which can be thought of sort of like a row in a RDBMS. For each entry, there will be 1 or more attributes which are analogous to RDBMS columns. If you are interested in searching through LDAP for users will certain attributes, you may do so with search filters. In a search filter, you can use standard boolean logic to get a list of users matching an arbitrary constraint. Search filters are written in Polish notation AKA prefix notation.

Example:

(&(ou=Physics)(| (manager=cn=Freeman Dyson,ou=Physics,dc=Caltech,dc=edu) (manager=cn=Albert Einstein,ou=Physics,dc=Princeton,dc=edu) ))

When building LDAP queries in application code, you MUST escape any untrusted data that is added to any LDAP query. There are two forms of LDAP escaping. Encoding for LDAP Search and Encoding for LDAP DN (distinguished name). The proper escaping depends on whether you are sanitising input for a search filter, or you are using a DN as a username-like credential for accessing some resource.

Safe Java for LDAP escaping Example:

public String escapeDN (String name) {

//From RFC 2253 and the / character for JNDI

final char[] META_CHARS = {'+', '"', '<', '>', ';', '/'};

String escapedStr = new String(name);

//Backslash is both a Java and an LDAP escape character,

//so escape it first escapedStr = escapedStr.replaceAll("\\\\\\\\","\\\\\\\\");

//Positional characters - see RFC 2253

escapedStr = escapedStr.replaceAll("\^#","\\\\\\\\#");

escapedStr = escapedStr.replaceAll("\^ | $","\\\\\\\\ ");

for (int i=0 ; i < META_CHARS.length ; i++) {

escapedStr = escapedStr.replaceAll("\\\\" + META_CHARS[i],"\\\\\\\\" + META_CHARS[i]);

}

return escapedStr;

}

3 notes

·

View notes

Text

Top SQL Interview Questions and Answers for Freshers and Professionals

SQL is the foundation of data-driven applications. Whether you’re applying for a data analyst, backend developer, or database administrator role, having a solid grip on SQL interview questions is essential for cracking technical rounds.

In this blog post, we’ll go over the most commonly asked SQL questions along with sample answers to help you prepare effectively.

📘 Want a complete, updated list of SQL interview questions? 👉 Check out: SQL Interview Questions & Answers – Freshy Blog

🔹 What is SQL?

SQL (Structured Query Language) is used to communicate with and manipulate databases. It is the standard language for relational database management systems (RDBMS).

🔸 Most Common SQL Interview Questions

1. What is the difference between WHERE and HAVING clause?

WHERE: Filters rows before grouping

HAVING: Filters groups after aggregation

2. What is a Primary Key?

A primary key is a unique identifier for each record in a table and cannot contain NULL values.

3. What are Joins in SQL?

Joins are used to combine rows from two or more tables based on a related column. Types include:

INNER JOIN

LEFT JOIN

RIGHT JOIN

FULL OUTER JOIN

🔸 Intermediate to Advanced SQL Questions

4. What is the difference between DELETE, TRUNCATE, and DROP?

DELETE: Removes rows (can be rolled back)

TRUNCATE: Removes all rows quickly (cannot be rolled back)

DROP: Deletes the table entirely

5. What is a Subquery?

A subquery is a query nested inside another query. It is used to retrieve data for use in the main query.

6. What is normalization?

Normalization is the process of organizing data to reduce redundancy and improve integrity.

🚀 Get a full breakdown with examples, tips, and pro-level questions: 👉 https://www.freshyblog.com/sql-interview-questions-answers/

🔍 Bonus Questions to Practice

What is the difference between UNION and UNION ALL?

What are indexes and how do they improve performance?

How does a GROUP BY clause work with aggregate functions?

What is a stored procedure and when would you use one?

✅ Tips to Crack SQL Interviews

Practice writing queries by hand

Focus on real-world database scenarios

Understand query optimization basics

Review basic RDBMS concepts like constraints and keys

Final Thoughts

Whether you're a fresher starting out or an experienced developer prepping for technical rounds, mastering these SQL interview questions is crucial for acing your next job opportunity.

📚 Access the full SQL interview guide here: 👉 https://www.freshyblog.com/sql-interview-questions-answers/

#SQLInterviewQuestions#SQLQueries#DatabaseInterview#DataAnalytics#BackendDeveloper#FreshyBlog#SQLForFreshers#TechJobs

0 notes

Text

DBMS Tutorial Explained: Concepts, Types, and Applications

In today’s digital world, data is everywhere — from social media posts and financial records to healthcare systems and e-commerce websites. But have you ever wondered how all that data is stored, organized, and managed? That’s where DBMS — or Database Management System — comes into play.

Whether you’re a student, software developer, aspiring data analyst, or just someone curious about how information is handled behind the scenes, this DBMS tutorial is your one-stop guide. We’ll explore the fundamental concepts, various types of DBMS, and real-world applications to help you understand how modern databases function.

What is a DBMS?

A Database Management System (DBMS) is software that enables users to store, retrieve, manipulate, and manage data efficiently. Think of it as an interface between the user and the database. Rather than interacting directly with raw data, users and applications communicate with the database through the DBMS.

For example, when you check your bank account balance through an app, it’s the DBMS that processes your request, fetches the relevant data, and sends it back to your screen — all in milliseconds.

Why Learn DBMS?

Understanding DBMS is crucial because:

It’s foundational to software development: Every application that deals with data — from mobile apps to enterprise systems — relies on some form of database.

It improves data accuracy and security: DBMS helps in organizing data logically while controlling access and maintaining integrity.

It’s highly relevant for careers in tech: Knowledge of DBMS is essential for roles in backend development, data analysis, database administration, and more.

Core Concepts of DBMS

Let’s break down some of the fundamental concepts that every beginner should understand when starting with DBMS.

1. Database

A database is an organized collection of related data. Instead of storing information in random files, a database stores data in structured formats like tables, making retrieval efficient and logical.

2. Data Models

Data models define how data is logically structured. The most common models include:

Hierarchical Model

Network Model

Relational Model

Object-Oriented Model

Among these, the Relational Model (used in systems like MySQL, PostgreSQL, and Oracle) is the most popular today.

3. Schemas and Tables

A schema defines the structure of a database — like a blueprint. It includes definitions of tables, columns, data types, and relationships between tables.

4. SQL (Structured Query Language)

SQL is the standard language used to communicate with relational DBMS. It allows users to perform operations like:

SELECT: Retrieve data

INSERT: Add new data

UPDATE: Modify existing data

DELETE: Remove data

5. Normalization

Normalization is the process of organizing data to reduce redundancy and improve integrity. It involves dividing a database into two or more related tables and defining relationships between them.

6. Transactions

A transaction is a sequence of operations performed as a single logical unit. Transactions in DBMS follow ACID properties — Atomicity, Consistency, Isolation, and Durability — ensuring reliable data processing even during failures.

Types of DBMS

DBMS can be categorized into several types based on how data is stored and accessed:

1. Hierarchical DBMS

Organizes data in a tree-like structure.

Each parent can have multiple children, but each child has only one parent.

Example: IBM’s IMS.

2. Network DBMS

Data is represented as records connected through links.

More flexible than hierarchical model; a child can have multiple parents.

Example: Integrated Data Store (IDS).

3. Relational DBMS (RDBMS)

Data is stored in tables (relations) with rows and columns.

Uses SQL for data manipulation.

Most widely used type today.

Examples: MySQL, PostgreSQL, Oracle, SQL Server.

4. Object-Oriented DBMS (OODBMS)

Data is stored in the form of objects, similar to object-oriented programming.

Supports complex data types and relationships.

Example: db4o, ObjectDB.

5. NoSQL DBMS

Designed for handling unstructured or semi-structured data.

Ideal for big data applications.

Types include document, key-value, column-family, and graph databases.

Examples: MongoDB, Cassandra, Redis, Neo4j.

Applications of DBMS

DBMS is used across nearly every industry. Here are some common applications:

1. Banking and Finance

Customer information, transaction records, and loan histories are stored and accessed through DBMS.

Ensures accuracy and fast processing.

2. Healthcare

Manages patient records, billing, prescriptions, and lab reports.

Enhances data privacy and improves coordination among departments.

3. E-commerce

Handles product catalogs, user accounts, order histories, and payment information.

Ensures real-time data updates and personalization.

4. Education

Maintains student information, attendance, grades, and scheduling.

Helps in online learning platforms and academic administration.

5. Telecommunications

Manages user profiles, billing systems, and call records.

Supports large-scale data processing and service reliability.

Final Thoughts

In this DBMS tutorial, we’ve broken down what a Database Management System is, why it’s important, and how it works. Understanding DBMS concepts like relational models, SQL, and normalization gives you the foundation to build and manage efficient, scalable databases.

As data continues to grow in volume and importance, the demand for professionals who understand database systems is also rising. Whether you're learning DBMS for academic purposes, career development, or project needs, mastering these fundamentals is the first step toward becoming data-savvy in today’s digital world.

Stay tuned for more tutorials, including hands-on SQL queries, advanced DBMS topics, and database design best practices!

0 notes

Text

Top 10 Power BI terms Every New User should know

If you are just getting started with Power BI, understanding the key terms will help you navigate the platform more effectively. Here are the top 10 Power BI terms every new user should know:

1) Dataset

A dataset in Power BI refers to information that has been imported from Excel, SQL or cloud services. Reports and dashboards can only be created by first having the necessary datasets. They save everything, including the raw data and the designs, relationships, measures and calculated columns we’ve built in Power BI. Creating, handling and updating datasets are basic skills needed because data push in analytics, give instant business insights and bind different data sources.

2) Report

A Power BI report is made up of charts, graphs and tables, all built from data in a dataset. With reports, we have an interactive tool to explore your notes, click deeper and review additional information. Every report page is designed to communicate a certain story or point out a specific part of your data. To ensure your organization makes good decisions, it helps to know how to create and customize reports.

3) Dashboard

The dashboard in Power BI are a grouping of visual components and metrics. It gives a general and joined-up look at important observations, unlike reports that just provide a detailed view of each report or group of data. They offer business leaders an easy way to monitor and check business KPIs at any time. Managers and executives benefit from dashboards, since they can get the needed performance updates without going through many detailed reports.

4) Visualization

A visualization in Power BI presents the data using images. It is sometimes known as a visual or chart. Bar charts, pie charts, line graphs, maps, tables and similar options are part of visualizations. They change difficult-to-read information into clear graphics that can show trends, unusual values and details quickly. With Power BI, users have many ways to change how the visualizations look for their organization. To make exciting reports and dashboards, we should learn to use visualizations well.

5) Measure

In Power BI, measures are calculations designed to study data, and we can build them using DAX (Data Analysis Expressions). Unlike regular columns, measures can refresh their values at any time based on reports or what a user selects. It is easy to find the total, average, ratio, percentage and complex groups with measures. Working on efficient measures helps we turn your data into useful insights and boosts your analytical skills within Power BI.

6) Calculated Column

To create a calculated column, we add a DAX formula to some input fields in your data. We can add new information to your data using calculated columns such as grouping, marking special items or defining values for ourselves. Unlike measures, these columns are evaluated when the data refreshes rather than at query time. Having calculated columns in our data model ensures that your reports become richer and better fit your company’s specific needs.

7) Filter

We can narrow the data in your charts and graphs using filters in Power BI. They can be used at the report, page or graphical levels. Using filters, we can narrow down your analysis to a particular time, type or area of the business. Additional options available include slicers, filters for dates and filters for moving down to the next page. Adding the right filters makes your reports more relevant, so stakeholders can easily spot the main insights.

8) Data Model

Any Power BI project depends on a solid data model as its core structure. It shows the connections between data tables by using relationships, Primary Keys and Foreign Keys. In addition, the data model has calculated columns, measures and hierarchies to organize your data for use properly.

With a proper data model in place, our analysis results are correct, queries are fast, and your visualizations are easy to interact with. To create stable and scalable Power BI projects, one must learn about modelling.

9) DAX is Data Analysis Expressions

Power BI provides DAX as a language for creating your calculations in measures, calculated columns and tables. DAX has functions, operators and values that make it possible to create advanced rules for a business.

When we master DAX, we can use analytics for many purposes, including complex grouping, analyzing time and transforming the data. Knowing the basics of DAX will help new Power BI users make the most of the product’s reporting capabilities.

10) Power Query

The purpose of Power Query is to prepare your data for use in Power BI. The platform enables users to bring data from various locations, clean it, format it and transform it before it enters the data model. An easy-to-use interface and the M language in Power Query make it simple to merge, filter duplicates, organize columns and fill out repetitive tasks on the table. By using Power Query, it becomes smoother to ETL your data and it is always accurate and easy to analyze.

Conclusion

Understanding the core terminology of Power BI is essential for anyone new to the platform. These top 10 terms, like datasets, reports, dashboards, DAX, and visualizations—form the building blocks of how Power BI works and how data is transformed into meaningful insights. I suggest you learn Power BI from the Tpoint Tech website. It provides a Power BI tutorial, interview questions that helps you to learn Power BI features and tools in easier way.

0 notes

Text

What’s the function of Tableau Prep?

Tableau Prep is a data preparation tool from Tableau that helps users clean, shape, and organize data before it is analyzed or visualized. It is especially useful for data analysts and business intelligence professionals who need to prepare data quickly and efficiently without writing complex code.

The core function of Tableau Prep is to simplify the data preparation process through an intuitive, visual interface. Users can drag and drop datasets, apply filters, rename fields, split or combine columns, handle null values, pivot data, and even join or union multiple data sources. These actions are displayed in a clear, step-by-step workflow, which makes it easy to understand how data is transformed at each stage.

Tableau Prep includes two main components: Prep Builder, used to create and edit data preparation workflows, and Prep Conductor, which automates the running of flows and integrates with Tableau Server or Tableau Cloud for scheduled data refreshes. This automation is a major advantage, especially in dynamic environments where data updates regularly.

Another significant benefit is real-time previews. As users manipulate data, they can instantly see the effects of their actions, allowing for better decisions and error checking. It supports connections to various data sources such as Excel, SQL databases, and cloud platforms like Google BigQuery or Amazon Redshift.

Tableau Prep’s seamless integration with Tableau Desktop means that once data is prepped, it can be directly pushed into visualization dashboards without exporting and re-importing files.

In short, Tableau Prep helps streamline the otherwise time-consuming process of cleaning and preparing data, making it more accessible to analysts without deep programming knowledge.

If you’re looking to master tools like Tableau Prep and enter the analytics field, consider enrolling in a data analyst course with placement for hands-on training and career support.

0 notes

Text

This SQL Trick Cut My Query Time by 80%

How One Simple Change Supercharged My Database Performance

If you work with SQL, you’ve probably spent hours trying to optimize slow-running queries — tweaking joins, rewriting subqueries, or even questioning your career choices. I’ve been there. But recently, I discovered a deceptively simple trick that cut my query time by 80%, and I wish I had known it sooner.

Here’s the full breakdown of the trick, how it works, and how you can apply it right now.

🧠 The Problem: Slow Query in a Large Dataset

I was working with a PostgreSQL database containing millions of records. The goal was to generate monthly reports from a transactions table joined with users and products. My query took over 35 seconds to return, and performance got worse as the data grew.

Here’s a simplified version of the original query:

sql

SELECT

u.user_id,

SUM(t.amount) AS total_spent

FROM

transactions t

JOIN

users u ON t.user_id = u.user_id

WHERE

t.created_at >= '2024-01-01'

AND t.created_at < '2024-02-01'

GROUP BY

u.user_id, http://u.name;

No complex logic. But still painfully slow.

⚡ The Trick: Use a CTE to Pre-Filter Before the Join

The major inefficiency here? The join was happening before the filtering. Even though we were only interested in one month’s data, the database had to scan and join millions of rows first — then apply the WHERE clause.

✅ Solution: Filter early using a CTE (Common Table Expression)

Here’s the optimized version:

sql

WITH filtered_transactions AS (

SELECT *

FROM transactions

WHERE created_at >= '2024-01-01'

AND created_at < '2024-02-01'

)

SELECT

u.user_id,

SUM(t.amount) AS total_spent

FROM

filtered_transactions t

JOIN

users u ON t.user_id = u.user_id

GROUP BY

u.user_id, http://u.name;

Result: Query time dropped from 35 seconds to just 7 seconds.

That’s an 80% improvement — with no hardware changes or indexing.

🧩 Why This Works

Databases (especially PostgreSQL and MySQL) optimize join order internally, but sometimes they fail to push filters deep into the query plan.

By isolating the filtered dataset before the join, you:

Reduce the number of rows being joined

Shrink the working memory needed for the query

Speed up sorting, grouping, and aggregation

This technique is especially effective when:

You’re working with time-series data

Joins involve large or denormalized tables

Filters eliminate a large portion of rows

🔍 Bonus Optimization: Add Indexes on Filtered Columns

To make this trick even more effective, add an index on created_at in the transactions table:

sql

CREATE INDEX idx_transactions_created_at ON transactions(created_at);

This allows the database to quickly locate rows for the date range, making the CTE filter lightning-fast.

🛠 When Not to Use This

While this trick is powerful, it’s not always ideal. Avoid it when:

Your filter is trivial (e.g., matches 99% of rows)

The CTE becomes more complex than the base query

Your database’s planner is already optimizing joins well (check the EXPLAIN plan)

🧾 Final Takeaway

You don’t need exotic query tuning or complex indexing strategies to speed up SQL performance. Sometimes, just changing the order of operations — like filtering before joining — is enough to make your query fly.

“Think like the database. The less work you give it, the faster it moves.”

If your SQL queries are running slow, try this CTE filtering trick before diving into advanced optimization. It might just save your day — or your job.

Would you like this as a Medium post, technical blog entry, or email tutorial series?

0 notes

Text

"Power BI Training 2025 – Learn Data Analytics from Scratch | Naresh i Technologies"

🌐 Introduction: Why Power BI Matters in 2025

With data now being a central asset for every industry—from retail to healthcare—tools that simplify data analysis and visualization are essential. Power BI by Microsoft has emerged as one of the top tools in this space. It’s more than just charts—Power BI transforms data into decisions.

Whether you’re a beginner, a working professional, or planning a career transition into data analytics, this guide offers a practical roadmap to becoming proficient in Power BI.

📅 Want to join our latest Power BI training batch? Check all new batches and register here

🔍 What is Power BI?

Power BI is a cloud-based business intelligence platform by Microsoft that helps you visualize data, build interactive dashboards, and generate actionable insights. It's known for being user-friendly, scalable, and deeply integrated with Microsoft services like Excel, Azure, and SQL Server.

🧠 Key Features of Power BI

📊 Custom dashboards & reports

🔄 Real-time data streaming

🔍 AI-powered insights

🔌 Connects to 100+ data sources

🔐 Enterprise-grade security

These features make Power BI a top choice for companies looking to turn data into decisions—fast.

🧩 Types of Power BI Tools Explained

Power BI Tool What It Does Ideal For Power BI Desktop Free tool for creating and designing reports Analysts, developers Power BI Service (Pro) Online collaboration & sharing reports Teams, SMEs Power BI Premium Dedicated cloud capacity & advanced AI features Enterprises Power BI Mobile View dashboards on-the-go Managers, execs Power BI Embedded Embed visuals in your apps or web apps Software developers Power BI Report Server On-premise deployment for sensitive data Government, finance sectors

🧭 How to Learn Power BI in 2025 (Beginner to Advanced Path)

Here's a practical learning roadmap:

✅ Step 1: Start with Basics

Understand the UI and connect to Excel or CSV files.

Learn what datasets, reports, and dashboards are.

✅ Step 2: Learn Data Cleaning (Power Query)

Transform messy data into clean, structured tables.

✅ Step 3: Master DAX (Data Analysis Expressions)

Create measures, calculated columns, KPIs, and time intelligence functions.

✅ Step 4: Build Projects

Work on real-life dashboards (Sales, HR, Finance, Marketing).

✅ Step 5: Publish & Share Reports

Use Power BI Service to collaborate and distribute your insights.

✅ Step 6: Get Certified

Earn Microsoft’s PL-300: Power BI Data Analyst Associate certification to boost your credibility.

🎓 Explore our Power BI Training Programs & Enroll Today

Power BI Career Path in 2025:

As more companies prioritize data to guide their decisions, professionals who can interpret and visualize that data are in high demand. Power BI, Microsoft’s business analytics tool, has quickly become a preferred skill for analysts, developers, and business teams across the world.

But what does a career in Power BI actually look like?

Let’s break it down.

🛤️ A Realistic Power BI Career Progression

🔹 1. Data Analyst (Beginner Level)

If you're just starting out, you’ll likely begin as a data analyst using Power BI to create basic dashboards, import data from Excel, and build reports for stakeholders.

Tools to learn: Power BI Desktop, Excel, Power Query

Skills needed: Data cleaning, basic visualization, storytelling with charts

Typical salary: ₹3–5 LPA (India) | $60,000–75,000 (US)

🔹 2. Power BI Developer (Mid Level)

With 1–2 years of experience, you’ll be developing complex dashboards and working with large datasets. Here, DAX (Data Analysis Expressions) becomes essential.

Tools to learn: DAX, SQL, Power BI Service, Azure Data Sources

Responsibilities: Data modeling, report optimization, data refresh automation

Typical salary: ₹6–12 LPA (India) | $80,000–100,000 (US)

🔹 3. Business Intelligence Consultant / Sr. Analyst

At this stage, you’ll work on enterprise-scale BI projects, helping organizations plan, deploy, and manage full BI solutions using Power BI alongside cloud platforms like Azure.

Additional skills: Azure Synapse, Dataflows, Row-Level Security (RLS), Power BI Gateway

Salary range: ₹12–20+ LPA (India) | $100,000–130,000+ (US)

🛠 Must-Know Tools & Skills Alongside Power BI

Skill/Tool Why It’s Useful Excel Easily integrates and helps with modeling SQL Useful for custom queries and joining data Power Query Data cleaning and transformation DAX Metrics, logic, and analytics Azure Synapse or SQL Server Common Power BI data sources Python/R For statistical or advanced data science workflows

📌 Conclusion: Why Start Power BI Now?

Power BI is more than just a skill—it’s a career accelerator. Whether you're entering data analytics, trying to land a job abroad, or upskilling in your current role, Power BI is your go-to tool in 2025.

🎉 Ready to learn Power BI and land your next role? View all our upcoming batches and enroll now

🎓 Naresh i Technologies – One Destination for All In-Demand Courses

Naresh i Technologies doesn’t just offer Power BI—they provide a full spectrum of career-building IT courses, both online and in-classroom, guided by real-time professionals.

Whether you're interested in Power BI, cloud computing, software testing, or core development, Naresh has you covered.

🟢 Popular Courses at Naresh i Technologies:

✅ DevOps with Multi-Cloud Training in KPHB – Learn CI/CD, AWS, Azure, and real-world deployment.

✅ Full Stack Software Testing Training – Covers manual, automation (Selenium), API testing & more.

✅ Core Java Training in KPHB – Master Java OOPs, multithreading, JDBC, and more for strong backend foundations.

💬 Frequently Asked Questions

Q1. Is Power BI better than Tableau? Depends on your needs—Power BI is better for Microsoft ecosystem integration and affordability. Tableau is strong in flexibility and advanced visuals.

Q2. Can I learn Power BI in one month? Yes, if you dedicate consistent daily time, you can cover the basics and build a simple project within 30 days.

Q3. Is Power BI coding-based? Not

#PowerBITraining#LearnPowerBI#PowerBI2025#DataAnalyticsTraining#NareshTechnologies#BusinessIntelligence#DAX#PowerQuery#MicrosoftPowerBI#DataVisualization#BItools#KPHBTraining#ITCoursesHyderabad

0 notes

Text

Batch Address Validation Tool and Bulk Address Verification Software

When businesses manage thousands—or millions—of addresses, validating each one manually is impractical. That’s where batch address validation tools and bulk address verification software come into play. These solutions streamline address cleansing by processing large datasets efficiently and accurately.

What Is Batch Address Validation?

Batch address validation refers to the automated process of validating multiple addresses in a single operation. It typically involves uploading a file (CSV, Excel, or database) containing addresses, which the software then checks, corrects, formats, and appends with geolocation or delivery metadata.

Who Needs Bulk Address Verification?

Any organization managing high volumes of contact data can benefit, including:

Ecommerce retailers shipping to customers worldwide.

Financial institutions verifying client data.

Healthcare providers maintaining accurate patient records.

Government agencies validating census or mailing records.

Marketing agencies cleaning up lists for campaigns.

Key Benefits of Bulk Address Verification Software

1. Improved Deliverability

Clean data ensures your packages, documents, and marketing mailers reach the right person at the right location.

2. Cost Efficiency

Avoiding undeliverable mail means reduced waste in printing, postage, and customer service follow-up.

3. Database Accuracy

Maintaining accurate addresses in your CRM, ERP, or mailing list helps improve segmentation and customer engagement.

4. Time Savings

What would take weeks manually can now be done in minutes or hours with bulk processing tools.

5. Regulatory Compliance

Meet legal and industry data standards more easily with clean, validated address data.

Features to Expect from a Batch Address Validation Tool

When evaluating providers, check for the following capabilities:

Large File Upload Support: Ability to handle millions of records.

Address Standardization: Correcting misspellings, filling in missing components, and formatting according to regional norms.

Geocoding Integration: Assigning latitude and longitude to each validated address.

Duplicate Detection & Merging: Identifying and consolidating redundant entries.

Reporting and Audit Trails: For compliance and quality assurance.

Popular Batch Address Verification Tools

Here are leading tools in 2025:

1. Melissa Global Address Verification

Features: Supports batch and real-time validation, international formatting, and geocoding.

Integration: Works with Excel, SQL Server, and Salesforce.

2. Loqate Bulk Cleanse

Strengths: Excel-friendly UI, supports uploads via drag-and-drop, and instant insights.

Ideal For: Businesses looking to clean customer databases or mailing lists quickly.

3. Smarty Bulk Address Validation

Highlights: Fast processing, intuitive dashboard, and competitive pricing.

Free Tier: Great for small businesses or pilot projects.

4. Experian Bulk Address Verification

Capabilities: Cleans large datasets with regional postal expertise.

Notable Use Case: Utility companies and financial services.

5. Data Ladder’s DataMatch Enterprise

Advanced Matching: Beyond address validation, it detects data anomalies and fuzzy matches.

Use Case: Enterprise-grade data cleansing for mergers or CRM migrations.

How to Use Bulk Address Verification Software

Using batch tools is typically simple and follows this flow:

Upload Your File: Use CSV, Excel, or database export.

Map Fields: Match your columns with the tool’s required address fields.

Validate & Clean: The software standardizes, verifies, and corrects addresses.

Download Results: Export a clean file with enriched metadata (ZIP+4, geocode, etc.)

Import Back: Upload your clean list into your CRM or ERP system.

Integration Options for Bulk Address Validation

Many vendors offer APIs or direct plugins for:

Salesforce

Microsoft Dynamics

HubSpot

Oracle and SAP

Google Sheets

MySQL / PostgreSQL / SQL Server

Whether you're cleaning one-time datasets or automating ongoing data ingestion, integration capabilities matter.

SEO Use Cases: Why Batch Address Tools Help Digital Businesses

In the context of SEO and digital marketing, bulk address validation plays a key role:

Improved Local SEO Accuracy: Accurate NAP (Name, Address, Phone) data ensures consistent local listings and better visibility.

Better Audience Segmentation: Clean data supports targeted, geo-focused marketing.

Lower Email Bounce Rates: Often tied to postal address quality in cross-channel databases.

Final Thoughts

Batch address validation tools and bulk verification software are essential for cleaning and maintaining large datasets. These platforms save time, cut costs, and improve delivery accuracy—making them indispensable for logistics, ecommerce, and CRM management.

Key Takeaways

Use international address validation to expand globally without delivery errors.

Choose batch tools to clean large datasets in one go.

Prioritize features like postal certification, coverage, geocoding, and compliance.

Integrate with your business tools for automated, real-time validation.

Whether you're validating a single international address or millions in a database, the right tools empower your operations and increase your brand's reliability across borders.

youtube

SITES WE SUPPORT

Validate Address With API – Wix

0 notes

Text

Advanced Database Design

As applications grow in size and complexity, the design of their underlying databases becomes critical for performance, scalability, and maintainability. Advanced database design goes beyond basic tables and relationships—it involves deep understanding of normalization, indexing, data modeling, and optimization strategies.

1. Data Modeling Techniques

Advanced design starts with a well-thought-out data model. Common modeling approaches include:

Entity-Relationship (ER) Model: Useful for designing relational databases.

Object-Oriented Model: Ideal when working with object-relational databases.

Star and Snowflake Schemas: Used in data warehouses for efficient querying.

2. Normalization and Denormalization

Normalization: The process of organizing data to reduce redundancy and improve integrity (up to 3NF or BCNF).

Denormalization: In some cases, duplicating data improves read performance in analytical systems.

3. Indexing Strategies

Indexes are essential for query performance. Common types include:

B-Tree Index: Standard index type in most databases.

Hash Index: Good for equality comparisons.

Composite Index: Combines multiple columns for multi-column searches.

Full-Text Index: Optimized for text search operations.

4. Partitioning and Sharding

Partitioning: Splits a large table into smaller, manageable pieces (horizontal or vertical).

Sharding: Distributes database across multiple machines for scalability.

5. Advanced SQL Techniques

Common Table Expressions (CTEs): Temporary result sets for organizing complex queries.

Window Functions: Perform calculations across a set of table rows related to the current row.

Stored Procedures & Triggers: Automate tasks and enforce business logic at the database level.

6. Data Integrity and Constraints

Primary and Foreign Keys: Enforce relational integrity.

CHECK Constraints: Validate data against specific rules.

Unique Constraints: Ensure column values are not duplicated.

7. Security and Access Control

Security is crucial in database design. Best practices include:

Implementing role-based access control (RBAC).

Encrypting sensitive data both at rest and in transit.

Using parameterized queries to prevent SQL injection.

8. Backup and Recovery Planning

Design your database with disaster recovery in mind:

Automate daily backups.

Test recovery procedures regularly.

Use replication for high availability.

9. Monitoring and Optimization

Tools like pgAdmin (PostgreSQL), MySQL Workbench, and MongoDB Compass help in identifying bottlenecks and optimizing performance.

10. Choosing the Right Database System

Relational: MySQL, PostgreSQL, Oracle (ideal for structured data and ACID compliance).

NoSQL: MongoDB, Cassandra, CouchDB (great for scalability and unstructured data).

NewSQL: CockroachDB, Google Spanner (combines NoSQL scalability with relational features).

Conclusion

Advanced database design is a balancing act between normalization, performance, and scalability. By applying best practices and modern tools, developers can ensure that their systems are robust, efficient, and ready to handle growing data demands. Whether you’re designing a high-traffic e-commerce app or a complex analytics engine, investing time in proper database architecture pays off in the long run.

0 notes

Text

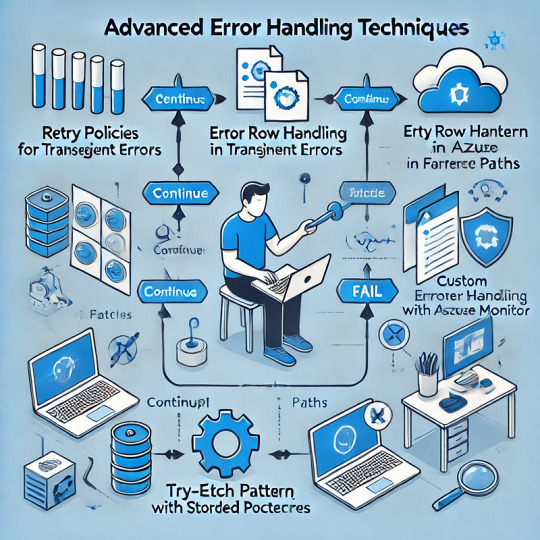

Advanced Error Handling Techniques in Azure Data Factory

Azure Data Factory (ADF) is a powerful data integration tool, but handling errors efficiently is crucial for building robust data pipelines. This blog explores advanced error-handling techniques in ADF to ensure resilience, maintainability, and better troubleshooting.

1. Understanding Error Types in ADF

Before diving into advanced techniques, it’s essential to understand common error types in ADF:

Transient Errors — Temporary issues such as network timeouts or throttling.

Data Errors — Issues with source data integrity, format mismatches, or missing values.

Configuration Errors — Incorrect linked service credentials, dataset configurations, or pipeline settings.

System Failures — Service outages or failures in underlying compute resources.

2. Implementing Retry Policies for Transient Failures

ADF provides built-in retry mechanisms to handle transient errors. When configuring activities:

Enable Retries — Set the retry count and interval in activity settings.

Use Exponential Backoff — Adjust retry intervals dynamically to reduce repeated failures.

Leverage Polybase for SQL — If integrating with Azure Synapse, ensure the retry logic aligns with PolyBase behavior.

Example JSON snippet for retry settings in ADF:jsonCopyEdit"policy": { "concurrency": 1, "retry": { "count": 3, "intervalInSeconds": 30 } }

3. Using Error Handling Paths in Data Flows

Data Flows in ADF allow “Error Row Handling” settings per transformation. Options include:

Continue on Error — Skips problematic records and processes valid ones.

Redirect to Error Output — Routes bad data to a separate table or storage for investigation.

Fail on Error — Stops the execution on encountering issues.

Example: Redirecting bad records in a Derived Column transformation.

In Data Flow, select the Derived Column transformation.

Choose “Error Handling” → Redirect errors to an alternate sink.

Store bad records in a storage account for debugging.

4. Implementing Try-Catch Patterns in Pipelines

ADF doesn’t have a traditional try-catch block, but we can emulate it using:

Failure Paths — Use activity dependencies to handle failures.

Set Variables & Logging — Capture error messages dynamically.

Alerting Mechanisms — Integrate with Azure Monitor or Logic Apps for notifications.

Example: Using Failure Paths

Add a Web Activity after a Copy Activity.

Configure Web Activity to log errors in an Azure Function or Logic App.

Set the dependency condition to “Failure” for error handling.

5. Using Stored Procedures for Custom Error Handling

For SQL-based workflows, handling errors within stored procedures enhances control.

Example:sqlBEGIN TRY INSERT INTO target_table (col1, col2) SELECT col1, col2 FROM source_table; END TRY BEGIN CATCH INSERT INTO error_log (error_message, error_time) VALUES (ERROR_MESSAGE(), GETDATE()); END CATCH

Use RETURN codes to signal success/failure.

Log errors to an audit table for investigation.

6. Logging and Monitoring Errors with Azure Monitor

To track failures effectively, integrate ADF with Azure Monitor and Log Analytics.

Enable diagnostic logging in ADF.

Capture execution logs, activity failures, and error codes.

Set up alerts for critical failures.

Example: Query failed activities in Log AnalyticskustoADFActivityRun | where Status == "Failed" | project PipelineName, ActivityName, ErrorMessage, Start, End

7. Handling API & External System Failures

When integrating with REST APIs, handle external failures by:

Checking HTTP Status Codes — Use Web Activity to validate responses.

Implementing Circuit Breakers — Stop repeated API calls on consecutive failures.

Using Durable Functions — Store state for retrying failed requests asynchronously.

Example: Configure Web Activity to log failuresjson"dependsOn": [ { "activity": "API_Call", "dependencyConditions": ["Failed"] } ]

8. Leveraging Custom Logging with Azure Functions

For advanced logging and alerting:

Use an Azure Function to log errors to an external system (SQL DB, Blob Storage, Application Insights).

Pass activity parameters (pipeline name, error message) to the function.

Trigger alerts based on severity.

Conclusion

Advanced error handling in ADF involves: ✅ Retries and Exponential Backoff for transient issues. ✅ Error Redirects in Data Flows to capture bad records. ✅ Try-Catch Patterns using failure paths. ✅ Stored Procedures for custom SQL error handling. ✅ Integration with Azure Monitor for centralized logging. ✅ API and External Failure Handling for robust external connections.

By implementing these techniques, you can enhance the reliability and maintainability of your ADF pipelines. 🚀

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

0 notes

Text

Relational vs. Non-Relational Databases

Introduction

Databases are a crucial part of modern-day technology, providing better access to the organization of information and efficient data storage. They vary in size based on the applications they support—from small, user-specific applications to large enterprise databases managing extensive customer data. When discussing databases, it's important to understand the two primary types: Relational vs Non-Relational Databases, each offering different approaches to data management. So, where should you start? Let's take it step by step.

What Are Databases?

A database is simply an organized collection of data that empowers users to store, retrieve, and manipulate data efficiently. Organizations, websites, and applications depend on databases for almost everything between a customer record and a transaction.

Types of Databases

There are two main types of databases:

Relational Databases (SQL) – Organized in structured tables with predefined relationships.

Non-Relational Databases (NoSQL) – More flexible, allowing data to be stored in various formats like documents, graphs, or key-value pairs.

Let's go through these two database types thoroughly now.

Relational Data Base:

A relational database is one that is structured in the sense that the data is stored in tables in the manner of a spreadsheet. Each table includes rows (or records) and columns (or attributes). Relationships between tables are then created and maintained by the keys.

Examples of Relational Databases:

MySQL .

PostgreSQL .

Oracle .

Microsoft SQL Server .

What is a Non-Relational Database?

Non-relational database simply means that it does not use structured tables. Instead, it stores data in formats such as documents, key-value pairs, graphs, or wide-column stores, making it adaptable to certain use cases.

Some Examples of Non-Relational Databases are:

MongoDB (Document-based)

Redis (Key-value)

Cassandra (Wide-column)

Neo4j (Graph-based)

Key Differences Between Relational and Non-relational Databases.

1. Data Structure

Relational: Employs a rigid schema (tables, rows, columns).

Non-Relational: Schema-less, allowing flexible data storage.

2. Scalability

Relational: Scales vertically (adding more power to a single server).

Non-Relational: Scales horizontally (adding more servers).

3. Performance and Speed

Relational: Fast for complex queries and transactions.

Non-Relational: Fast for large-scale, distributed data.

4. Flexibility

Relational: Perfectly suitable for structured data with clear relationships.

Non-Relational: Best suited for unstructured or semi-structured data.

5. Complex Queries and Transactions

Relational: It can support ACID (Atomicity, Consistency, Isolation, and Durability).

Non-Relational: Some NoSQL databases can sacrifice consistency for speed.

Instances where a relational database should be put to use:

Financial systems Medical records E-commerce transactions Applications with strong data integrity When to Use a Non-Relational Database: Big data applications IoT and real-time analytics Social media platforms Content management systems

Selecting the Most Appropriate Database for Your Project

Check the following points when considering relational or non-relational databases:

✔ Data structure requirement

✔ Scalability requirement

✔ Performance expectation

✔ Complexity of query

Trend of future in databases

The future of the database tells a lot about the multi-model databases that shall host data in both a relational and non-relational manner. There is also a lean towards AI-enabled databases that are to improve efficiency and automation in management.

Conclusion

The advantages of both relational and non-relational databases are different; they are relative to specific conditions. Generally, if the requirements involve structured data within a high-class consistency level, then go for relational databases. However, if needs involve scalability and flexibility, then a non-relational kind would be the wiser option.

Location: Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#Best Computer Classes in Iskon-Ambli Road Ahmedabad#Differences between SQL and NoSQL#Relational vs. Non-Relational Databases#TCCI-Tririd Computer Coaching Institute#What is a relational database?

0 notes

Text

Tips for Understanding Computer Databases for Homework Assignments

In today’s digital world, databases play a crucial role in managing and organizing vast amounts of information. Whether you're a student learning database concepts or working on complex assignments, understanding computer databases can be challenging. This blog will guide you through essential tips for mastering computer databases and help you complete your homework efficiently. If you're looking for computer database assistance for homework, All Assignment Experts is here to provide expert support.

What is a Computer Database?

A computer database is a structured collection of data that allows easy access, management, and updating. It is managed using a Database Management System (DBMS), which facilitates storage, retrieval, and manipulation of data. Popular database systems include MySQL, PostgreSQL, MongoDB, and Microsoft SQL Server.

Why is Understanding Databases Important for Students?

Databases are widely used in industries like banking, healthcare, and e-commerce. Students pursuing computer science, information technology, or data science must grasp database concepts to build a strong foundation for future careers. Database knowledge is essential for managing large data sets, developing applications, and performing data analysis.

Tips for Understanding Computer Databases for Homework Assignments

1. Master the Basics First

Before diving into complex queries, ensure you understand basic database concepts like:

Tables and Records: Databases store data in tables, which contain rows (records) and columns (fields).

Primary and Foreign Keys: Primary keys uniquely identify each record, while foreign keys establish relationships between tables.

Normalization: A technique to eliminate redundancy and improve database efficiency.

2. Learn SQL (Structured Query Language)

SQL is the standard language for managing databases. Some essential SQL commands you should learn include:

SELECT – Retrieve data from a database.

INSERT – Add new records to a table.

UPDATE – Modify existing records.

DELETE – Remove records from a table.

JOIN – Combine data from multiple tables.

Using online SQL playgrounds like SQL Fiddle or W3Schools can help you practice these commands effectively.

3. Use Online Resources and Tools

Numerous online platforms provide computer database assistance for homework. Websites like All Assignment Experts offer professional guidance, tutorials, and assignment help to enhance your understanding of databases. Other useful resources include:

W3Schools and TutorialsPoint for database tutorials.

YouTube channels offering step-by-step database lessons.

Interactive coding platforms like Codecademy.

4. Work on Real-Life Database Projects

Practical experience is the best way to solidify your knowledge. Try creating a small database for:

A library management system.

An online store with customer orders.

A student database with courses and grades.

This hands-on approach will help you understand real-world applications and make it easier to complete assignments.

5. Understand Database Relationships

One of the biggest challenges students face is understanding database relationships. The three main types include:

One-to-One: Each record in Table A has only one corresponding record in Table B.

One-to-Many: A record in Table A relates to multiple records in Table B.

Many-to-Many: Multiple records in Table A relate to multiple records in Table B.

Using Entity-Relationship Diagrams (ERDs) can help visualize these relationships.

6. Debug SQL Queries Effectively

If your SQL queries aren’t working as expected, try these debugging techniques:

Break queries into smaller parts and test them individually.

Use EXPLAIN to analyze how queries are executed.

Check for syntax errors and missing table relationships.

7. Seek Expert Assistance When Needed

If you find yourself struggling, don’t hesitate to seek help. All Assignment Experts offers computer database assistance for homework, providing expert solutions to your database-related queries and assignments.

8. Stay Updated with Advanced Database Technologies

The database field is constantly evolving. Explore advanced topics such as:

NoSQL Databases (MongoDB, Firebase): Used for handling unstructured data.

Big Data and Cloud Databases: Learn about databases like AWS RDS and Google BigQuery.

Data Security and Encryption: Understand how databases protect sensitive information.

Conclusion

Understanding computer databases is crucial for students handling homework assignments. By mastering basic concepts, practicing SQL, utilizing online resources, and working on real projects, you can excel in your database coursework. If you need professional guidance, All Assignment Experts provides top-notch computer database assistance for homework, ensuring you grasp key concepts and score better grades.

Start applying these tips today, and you’ll soon develop a solid understanding of databases!

#computer database assistance for homework#computer database assistance#education#homework#do your homework

1 note

·

View note

Text

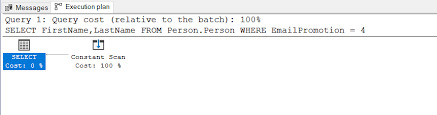

How to use CHECK in SQL

In this article, you will learn how to use the CHECK keyword to the column in SQL queries.

What is CHECK in SQL?

CHECK is a SQL constraint that allows database users to enter only those values which fulfill the specified condition. If any column is defined as a CHECK constraint, then that column holds only TRUE values.

0 notes