#validate SQL column


Checking for the Existence of a Column in a SQL Server Table

To check whether a column exists in a SQL Server table, you can query the INFORMATION_SCHEMA.COLUMNS view, which returns one row for each column the current user can access in the current database. Here's a query that checks whether a specific column exists in a specific table:

IF EXISTS (
    SELECT 1
    FROM INFORMATION_SCHEMA.COLUMNS
    WHERE TABLE_NAME = 'YourTableName'
      AND COLUMN_NAME = 'YourColumnName'
)
BEGIN
    PRINT…
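SQLite has no INFORMATION_SCHEMA, but you can sketch the same existence check with Python's built-in sqlite3 module and SQLite's pragma_table_info table-valued function (SQLite 3.16 and later). This is a swapped-in analogue for experimenting locally, not the SQL Server query itself. The table and column names are placeholders.

```python
import sqlite3


def column_exists(conn: sqlite3.Connection, table: str, column: str) -> bool:
    """Return True if `column` exists in `table`.

    SQLite analogue of querying INFORMATION_SCHEMA.COLUMNS: pragma_table_info()
    yields one row per column, and both names are bound as parameters rather
    than concatenated into the SQL text.
    """
    row = conn.execute(
        # SQLite identifiers are case-insensitive, so compare with NOCASE.
        "SELECT 1 FROM pragma_table_info(?) WHERE name = ? COLLATE NOCASE",
        (table, column),
    ).fetchone()
    return row is not None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE YourTableName (id INTEGER, YourColumnName TEXT)")
print(column_exists(conn, "YourTableName", "YourColumnName"))  # True
print(column_exists(conn, "YourTableName", "NoSuchColumn"))    # False
```

On SQL Server itself, also filtering on TABLE_SCHEMA keeps the check from matching a same-named table in a different schema.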


#check column SQL#INFORMATION_SCHEMA.COLUMNS#SQL Server column existence#SQL Server table structure#validate SQL column


some of the tables I deal with have a bunch of columns named "user_defined_field_1" or named with weird abbreviations, and our sql table index does not elaborate upon the purposes of most columns. so I have to filter mystery columns by their values and hope my pattern recognition is good enough to figure out what the hell something means.

I've gotten pretty good at this. the most fucked thing I ever figured out was in this table that logged histories for serials. it has a "source" column where "R" labels receipts, "O" labels order-related records and then there's a third letter "I" which signifies "anything else that could possibly happen".

by linking various values to other tables, source "I" lines can be better identified, but once when I was doing this I tried to cast a bunch of bullshit int dates/times to datetime format and my query errored. it turned out a small number of times were not valid time values. which made no sense because that column was explicitly labeled as the creation time. I tried a couple of weird time format converters hoping to turn those values into ordinary times but nothing generated a result that made sense.

anyway literally the next day I was working on something totally unrelated and noticed that the ID numbers in one table were the same length as INT dates. then I was like, how fucked would it be if those bad time values were not times at all, but actually ID numbers that joined to another table? maybe they could even join to the table I was looking at right then!

well guess what.
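Here's a minimal Python sketch of the two steps, for anyone who wants to repeat this kind of detective work:

1. Flag int "times" that don't parse as valid times.
2. Check whether those values match IDs in another table.

It assumes the times are stored as HHMMSS integers (e.g. 143015 for 14:30:15). The table and column names (serial_history, created_time, other_records) are hypothetical stand-ins, and an in-memory SQLite database replaces the real one.

```python
import sqlite3
from datetime import time
from typing import Dict, Optional


def parse_int_time(value: int) -> Optional[time]:
    """Interpret an int such as 143015 as 14:30:15 (assumed HHMMSS encoding).
    Returns None when the digits cannot be a valid clock time."""
    if value < 0:
        return None
    hours, rest = divmod(value, 10000)
    minutes, seconds = divmod(rest, 100)
    try:
        return time(hours, minutes, seconds)
    except ValueError:  # e.g. hour 24 or more, minute 60 or more
        return None


def find_time_suspects(conn: sqlite3.Connection) -> Dict[int, bool]:
    """Map each unparseable created_time on source 'I' rows to whether it
    also appears as an id in other_records."""
    values = {v for (v,) in conn.execute(
        "SELECT created_time FROM serial_history WHERE source = 'I'")}
    suspects = sorted(v for v in values if parse_int_time(v) is None)
    return {
        v: conn.execute("SELECT 1 FROM other_records WHERE id = ?", (v,)).fetchone() is not None
        for v in suspects
    }


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE serial_history (source TEXT, created_time INTEGER);
CREATE TABLE other_records (id INTEGER PRIMARY KEY);
INSERT INTO serial_history VALUES ('R', 91500), ('I', 143015), ('I', 987654), ('I', 246810);
INSERT INTO other_records VALUES (987654);
""")
print(find_time_suspects(conn))  # {246810: False, 987654: True}
```

A True only means the "time" is plausibly a key into that table. It's a lead to confirm by hand, not proof.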


How to Prevent

Preventing injection requires keeping data separate from commands and queries:

The preferred option is to use a safe API that avoids the interpreter entirely or provides a parameterized interface, or to migrate to Object Relational Mapping (ORM) tools. Note: even when parameterized, stored procedures can still introduce SQL injection if PL/SQL or T-SQL concatenates queries and data or executes hostile data with EXECUTE IMMEDIATE or exec().

Use positive (allowlist) server-side input validation. This is not a complete defense, because many applications, such as those with text areas or APIs for mobile apps, must accept special characters.

For any residual dynamic queries, escape special characters using the escape syntax specific to that interpreter. Note: SQL structures such as table names and column names cannot be escaped, so user-supplied structure names are dangerous. This is a common issue in report-writing software.

Use LIMIT and other SQL controls within queries to prevent mass disclosure of records in case of SQL injection.
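The four recommendations can be combined in one small query function. Below is a minimal sketch using Python's sqlite3 module and a hypothetical users table:

- Values are bound as parameters.
- The search input is checked against an allowlist pattern.
- The sort column, an identifier that can't be bound or escaped, is picked from a fixed mapping.
- LIMIT caps how many rows a single call can return.

```python
import re
import sqlite3

# Positive validation: letters and digits only (LIKE treats % and _ as wildcards).
NAME_PREFIX = re.compile(r"[A-Za-z0-9]{1,32}")

# Identifiers cannot be parameterized, so user choices map onto a fixed allowlist.
SORT_COLUMNS = {"name": "name", "created": "created_at"}

MAX_ROWS = 50  # hard cap on rows returned, whatever the caller asks for


def search_users(conn: sqlite3.Connection, prefix: str, sort_key: str, limit: int) -> list:
    if not NAME_PREFIX.fullmatch(prefix):
        raise ValueError("invalid name prefix")
    column = SORT_COLUMNS.get(sort_key)
    if column is None:
        raise ValueError("invalid sort key")
    limit = max(1, min(limit, MAX_ROWS))
    # Only the allowlisted column name is formatted into the SQL text;
    # the search value and the limit travel as bound parameters.
    sql = f"SELECT name FROM users WHERE name LIKE ? ORDER BY {column} LIMIT ?"
    return [name for (name,) in conn.execute(sql, (prefix + "%", limit))]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, created_at TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "2024-01-02"), ("alan", "2024-01-01"), ("bob", "2024-01-03")])
print(search_users(conn, "al", "name", 10))  # ['alan', 'alice']
```

Input such as `x' OR '1'='1` or a sort key like `name; DROP TABLE users` is rejected with ValueError before any SQL runs.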

bonus question: what should the query in the image above look like? the answer will be in the comments.


Hmm. Not sure if my perfectionism is acting up, but... is there a reasonably isolated way to test SQL?

In particular, I want a test (or set of tests) that checks the following conditions:

The generated SQL is syntactically valid, for a given range of possible parameters.

The generated SQL agrees with the database schema, in that it doesn't reference columns or tables which don't exist. (Reduces typos and helps with deprecating parts of the schema)

The generated SQL performs joins and filtering in the way that I want. I'm fine if this is more a list of reasonable cases than a check of all possible cases.

Some kind of performance test? Especially if it's joining large tables

I don't care much about the shape of the output data. That's well-handled by parsing and type checking!

I don't think I want to perform queries against a live database, but I'm very flexible on this point.
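One way to cover most of this without a live server, sketched in Python. It assumes SQLite is an acceptable stand-in for the real engine: it won't catch dialect-specific syntax, and its planner is not your production planner. The steps are:

1. Build the schema in an in-memory database.
2. Prepare each generated query with EXPLAIN, which compiles it without running it, so bad syntax and unknown tables or columns raise errors.
3. Check joins and filters against a small, hand-checked fixture.
4. Read EXPLAIN QUERY PLAN as a rough performance signal.

build_query and the schema are hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
CREATE INDEX idx_orders_customer ON orders(customer_id);
"""


def build_query(filter_region: bool) -> str:
    """Hypothetical stand-in for the code that generates SQL."""
    sql = ("SELECT c.region, SUM(o.total) FROM orders o "
           "JOIN customers c ON c.id = o.customer_id")
    if filter_region:
        sql += " WHERE c.region = ?"
    return sql + " GROUP BY c.region ORDER BY c.region"


def check_compiles(conn: sqlite3.Connection, sql: str) -> None:
    """Raise sqlite3.OperationalError on syntax errors or unknown names.
    Binds NULL for every '?' (naive count: assumes no '?' in string literals)."""
    conn.execute("EXPLAIN " + sql, (None,) * sql.count("?"))


def run_checks() -> list:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    for flag in (False, True):  # each generator parameter combination worth covering
        check_compiles(conn, build_query(flag))
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "east"), (2, "west")])
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                     [(1, 1, 10.0), (2, 1, 5.0), (3, 2, 7.0)])
    # Join and filter behaviour on a fixture small enough to verify by hand.
    assert conn.execute(build_query(False)).fetchall() == [("east", 15.0), ("west", 7.0)]
    assert conn.execute(build_query(True), ("west",)).fetchall() == [("west", 7.0)]
    # Rough performance signal: return the plan's detail strings so a test can
    # assert, for example, that the join uses an index or key lookup (SEARCH).
    plan = conn.execute("EXPLAIN QUERY PLAN " + build_query(True), ("west",)).fetchall()
    return [row[-1] for row in plan]


if __name__ == "__main__":
    print(run_checks())
```

The same structure drops into pytest or unittest: one test per generated query shape, all sharing the in-memory schema.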


ETL and Data Testing Services: Why Data Quality Is the Backbone of Business Success | GQAT Tech

Data drives decision-making in the digital age. Businesses use data to build strategies, gain insights, and measure performance as they plan for growth. However, data-driven decisions are only as good as the data behind them: it must be clean, complete, accurate, and trustworthy. This is where ETL and Data Testing Services are useful.

GQAT Tech provides ETL (Extract, Transform, Load) and Data Testing Services so your data pipelines run smoothly. Whether you are migrating legacy data, building a data warehouse, or integrating data from other sources, GQAT Tech services help ensure your data is an asset and not a liability.

What is ETL and Why Is It Important?

ETL (extract, transform, load) is a process for data warehousing and data integration, which consists of:

Extracting data from different sources

Transforming the data to the right format or structure

Loading the transformed data into a central system, such as a data warehouse.
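To make the three steps concrete, here is a toy pipeline in Python:

- It extracts rows from a CSV export.
- It transforms them: typed IDs, amounts converted to integer cents, upper-case currency codes.
- It loads them into a SQLite table standing in for the warehouse.

The column names and rules are invented for illustration. Production pipelines usually rely on dedicated ETL tools.

```python
import csv
import io
import sqlite3

# Hypothetical source extract, with inconsistent whitespace and casing on purpose.
RAW_CSV = """order_id,amount,currency
1, 19.99 ,usd
2,5,USD
"""


def extract(text: str) -> list:
    """Extract: read the source rows as dicts keyed by header name."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list) -> list:
    """Transform: apply typing and formatting rules to each row."""
    return [
        (int(r["order_id"]),
         round(float(r["amount"]) * 100),  # store money as integer cents
         r["currency"].strip().upper())
        for r in rows
    ]


def load(conn: sqlite3.Connection, records: list) -> None:
    """Load: write the transformed records into the target in one transaction."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, currency TEXT)")
    with conn:
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 1999, 'USD'), (2, 500, 'USD')]
```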

Although ETL can simplify data processing, it also introduces risks: data can be lost, misformatted, or corrupted, and transformation rules can be misapplied. This is why ETL testing is so important.

The purpose of ETL testing is to ensure that the data is:

Correctly extracted from the source systems

Accurately transformed according to business logic

Correctly loaded into the destination systems.
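The three checks above are often automated as source-to-target reconciliation queries. Below is a minimal sketch in Python with SQLite. It makes two assumptions:

- Both tables are reachable from one connection.
- Amounts are stored as integers, so totals compare exactly.

The table and column names are hypothetical. They are formatted into the SQL, so they must come from trusted test configuration, never from user input.

```python
import sqlite3


def reconcile(conn: sqlite3.Connection, source: str, target: str,
              key: str, measure: str) -> dict:
    """Compare row counts, a column total, and key coverage between two tables.
    Identifiers are interpolated, so they must come from trusted config."""
    def count_and_total(table: str):
        return conn.execute(
            f"SELECT COUNT(*), TOTAL({measure}) FROM {table}").fetchone()

    src, tgt = count_and_total(source), count_and_total(target)
    missing = [k for (k,) in conn.execute(
        f"SELECT {key} FROM {source} EXCEPT SELECT {key} FROM {target} ORDER BY 1")]
    return {
        "row_count_match": src[0] == tgt[0],
        "total_match": src[1] == tgt[1],
        "missing_in_target": missing,
    }


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src_orders (id INTEGER PRIMARY KEY, amount INTEGER);
CREATE TABLE tgt_orders (id INTEGER PRIMARY KEY, amount INTEGER);
INSERT INTO src_orders VALUES (1, 10), (2, 20), (3, 30);
INSERT INTO tgt_orders VALUES (1, 10), (2, 20);
""")
print(reconcile(conn, "src_orders", "tgt_orders", "id", "amount"))
# {'row_count_match': False, 'total_match': False, 'missing_in_target': [3]}
```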

Why Choose GQAT Tech for ETL and Data Testing?

At GQAT Tech, we combine exceptional technical expertise, premier technology, and custom-built frameworks to ensure your data is accurate and verified as correct.

1. End-to-End Data Validation

We validate your data across the entire ETL process (extract, transform, and load) to confirm that the source and target systems are 100% consistent.

2. Custom-Built Testing Frameworks

Every company has its own data workflow. We build testing frameworks that fit your proprietary data environments, business rules, and compliance requirements.

3. Automation + Accuracy

We automate as much as possible, using tools such as QuerySurge, Talend, Informatica, and SQL scripts. This reduces testing effort and helps avoid human error.

4. Compliance Testing

Data privacy and compliance are mandatory today. We help you comply with regulations such as GDPR, HIPAA, and SOX.

5. Industry Knowledge

GQAT has years of experience with clients in Finance, Healthcare, Telecom, eCommerce, and Retail, which we apply to every data testing assignment.

Types of ETL and Data Testing Services We Offer

Data Transformation Testing

We ensure your business rules are implemented accurately as part of the transformation process. Don't risk incorrect aggregations, mislabels, or logical errors in your final reports.

Data Migration Testing

Whether you are moving to the cloud or migrating from legacy to modern systems, we ensure that all data is transferred completely, accurately, and securely.

BI Report Testing

We validate that both dashboards and business reports reflect the correct numbers by comparing visual data to actual backend data.

Metadata Testing

We validate schema, column names, formats, data types, and other metadata to ensure compatibility of source and target systems.
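Metadata checks like these can be partly automated by comparing column names and declared types between source and target. Here's a sketch in Python against SQLite's pragma_table_info. Other databases expose the same information through their own catalog views, for example INFORMATION_SCHEMA.COLUMNS. The table names are hypothetical.

```python
import sqlite3


def column_types(conn: sqlite3.Connection, table: str) -> dict:
    """Map each column name (lower-cased) to its declared type (upper-cased)."""
    rows = conn.execute("SELECT name, type FROM pragma_table_info(?)", (table,))
    return {name.lower(): col_type.upper() for name, col_type in rows}


def compare_schemas(conn: sqlite3.Connection, source: str, target: str) -> dict:
    """Report columns missing from or extra in the target, and type mismatches."""
    src, tgt = column_types(conn, source), column_types(conn, target)
    shared = src.keys() & tgt.keys()
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "type_mismatches": sorted(c for c in shared if src[c] != tgt[c]),
    }


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src_customers (id INTEGER, email TEXT, signup_date TEXT);
CREATE TABLE tgt_customers (id INTEGER, email VARCHAR(100), created TEXT);
""")
print(compare_schemas(conn, "src_customers", "tgt_customers"))
# {'missing_in_target': ['signup_date'], 'unexpected_in_target': ['created'], 'type_mismatches': ['email']}
```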

Key Benefits of GQAT Tech’s ETL Testing Services

1. Increase Data Security and Accuracy

We guarantee that only valid and necessary data will be transmitted to your system, reducing data leakage and security exposure.

2. Better Business Intelligence

Good data means quality outputs; dashboards and business intelligence you can trust, allowing you to make real-time choices with certainty.

3. Reduction of Time and Cost

By automating data testing, we reduce manual mistakes, shorten timelines, and lower rework costs.

4. Better Customer Satisfaction

Decisions based on good data lead to better customer experiences, better insights, and improved services.

5. Regulatory Compliance

By implementing structured testing, you can ensure compliance with data privacy laws and standards in order to avoid fines, penalties, and audits.

Why GQAT Tech?

With more than a decade of experience, we are passionate about delivering world-class ETL and Data Testing Services. Our purpose is to help you work from clean, reliable data so you can act with confidence and scale, innovate, and compete more effectively.

Visit Us: https://gqattech.com Contact Us: [email protected]

#ETL Testing#Data Testing Services#Data Validation#ETL Automation#Data Quality Assurance#Data Migration Testing#Business Intelligence Testing#ETL Process#SQL Testing#GQAT Tech


DBMS Tutorial for Beginners: Unlocking the Power of Data Management

In this "DBMS Tutorial for Beginners: Unlocking the Power of Data Management," we will explore the fundamental concepts of DBMS, its importance, and how you can get started with managing data effectively.

What is a DBMS?

A Database Management System (DBMS) is a software tool that facilitates the creation, manipulation, and administration of databases. It provides an interface for users to interact with the data stored in a database, allowing them to perform various operations such as querying, updating, and managing data. DBMS can be classified into several types, including:

Hierarchical DBMS: Organizes data in a tree-like structure, where each record has a single parent and can have multiple children.

Network DBMS: Similar to hierarchical DBMS but allows more complex relationships between records, enabling many-to-many relationships.

Relational DBMS (RDBMS): The most widely used type, which organizes data into tables (relations) that can be linked through common fields. Examples include MySQL, PostgreSQL, and Oracle.

Object-oriented DBMS: Stores data in the form of objects, similar to object-oriented programming concepts.

Why is DBMS Important?

Data Integrity: DBMS ensures the accuracy and consistency of data through constraints and validation rules. This helps maintain data integrity and prevents anomalies.

Data Security: With built-in security features, DBMS allows administrators to control access to data, ensuring that only authorized users can view or modify sensitive information.

Data Redundancy Control: DBMS reduces redundancy by storing data centrally and, in a well-designed (normalized) schema, keeping each fact in one place, which lowers the risk of duplication and inconsistency.

Efficient Data Management: DBMS provides tools for data manipulation, making it easier for users to retrieve, update, and manage data efficiently.

Backup and Recovery: Most DBMS solutions come with backup and recovery features, ensuring that data can be restored in case of loss or corruption.
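The data-integrity point is easiest to see when the database refuses bad data. Below is a minimal sketch using Python's built-in sqlite3 module, in which NOT NULL, UNIQUE, CHECK, and FOREIGN KEY constraints each reject an invalid insert. Two notes:

- It uses SQLite, not the MySQL used later in this tutorial.
- SQLite only enforces foreign keys after PRAGMA foreign_keys = ON.

The tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    quantity INTEGER NOT NULL CHECK (quantity > 0)
);
""")


def try_insert(sql: str, params: tuple) -> str:
    """Run one INSERT in its own transaction and report whether it was accepted."""
    try:
        with conn:
            conn.execute(sql, params)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected ({exc})"


print(try_insert("INSERT INTO customers (email) VALUES (?)", ("a@example.com",)))
print(try_insert("INSERT INTO customers (email) VALUES (?)", ("a@example.com",)))     # UNIQUE
print(try_insert("INSERT INTO customers (email) VALUES (?)", (None,)))                # NOT NULL
print(try_insert("INSERT INTO orders (customer_id, quantity) VALUES (?, ?)", (1, 0)))   # CHECK
print(try_insert("INSERT INTO orders (customer_id, quantity) VALUES (?, ?)", (99, 1)))  # FOREIGN KEY
```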

Getting Started with DBMS

To begin your journey with DBMS, you’ll need to familiarize yourself with some essential concepts and tools. Here’s a step-by-step guide to help you get started:

Step 1: Understand Basic Database Concepts

Before diving into DBMS, it’s important to grasp some fundamental database concepts:

Database: A structured collection of data that is stored and accessed electronically.

Table: A collection of related data entries organized in rows and columns. Each table represents a specific entity (e.g., customers, orders).

Record: A single entry in a table, representing a specific instance of the entity.

Field: A specific attribute of a record, represented as a column in a table.

Step 2: Choose a DBMS

There are several DBMS options available, each with its own features and capabilities. For beginners, it’s advisable to start with a user-friendly relational database management system. Some popular choices include:

MySQL: An open-source RDBMS that is widely used for web applications.

PostgreSQL: A powerful open-source RDBMS known for its advanced features and compliance with SQL standards.

SQLite: A lightweight, serverless database that is easy to set up and ideal for small applications.

Step 3: Install the DBMS

Once you’ve chosen a DBMS, follow the installation instructions provided on the official website. Most DBMS solutions offer detailed documentation to guide you through the installation process.

Step 4: Create Your First Database

After installing the DBMS, you can create your first database. Here’s a simple example using MySQL:

Open the MySQL command-line client or a graphical interface such as MySQL Workbench, then run the following command to create a new database:

CREATE DATABASE my_first_database;

Select the database so that subsequent commands run against it:

USE my_first_database;

Step 5: Create Tables

Next, you’ll want to create tables to store your data. Here’s an example of creating a table for storing customer information:

CREATE TABLE customers (
    customer_id INT AUTO_INCREMENT PRIMARY KEY,
    first_name VARCHAR(50),
    last_name VARCHAR(50),
    email VARCHAR(100),
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

In this example, we define a table named customers with fields for customer ID, first name, last name, email, and the date the record was created.

Step 6: Insert Data

Now that you have a table, you can insert data into it. Here’s how to add a new customer:

INSERT INTO customers (first_name, last_name, email)
VALUES ('John', 'Doe', '[email protected]');

Step 7: Query Data

To retrieve data from your table, you can use the SELECT statement. For example, to get all customers:

SELECT * FROM customers;

You can also filter results using the WHERE clause:

SELECT * FROM customers WHERE last_name = 'Doe';

Step 8: Update and Delete Data

You can update existing records using the UPDATE statement:

UPDATE customers SET email = '[email protected]' WHERE customer_id = 1;

To delete a record, use the DELETE statement:

DELETE FROM customers WHERE customer_id = 1;
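If you'd rather practise the create, insert, query, update, and delete steps above without installing a server, here is a sketch using Python's built-in sqlite3 module. SQLite's syntax differs slightly from MySQL's: it spells AUTO_INCREMENT as INTEGER PRIMARY KEY AUTOINCREMENT and has no USE statement. The email addresses are placeholders. Values are passed as ? parameters rather than pasted into the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway database that lives in memory

# Step 5: create the table (AUTOINCREMENT is SQLite's spelling of AUTO_INCREMENT).
conn.execute("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY AUTOINCREMENT,
    first_name TEXT,
    last_name TEXT,
    email TEXT,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
)""")

with conn:  # Step 6: insert; committed when the block exits
    conn.execute("INSERT INTO customers (first_name, last_name, email) VALUES (?, ?, ?)",
                 ("John", "Doe", "john.doe@example.com"))

# Step 7: query with a WHERE filter.
found = conn.execute("SELECT customer_id, first_name, email FROM customers "
                     "WHERE last_name = ?", ("Doe",)).fetchall()
print(found)  # [(1, 'John', 'john.doe@example.com')]

with conn:  # Step 8a: update
    conn.execute("UPDATE customers SET email = ? WHERE customer_id = ?",
                 ("john@example.org", 1))
updated = conn.execute("SELECT email FROM customers WHERE customer_id = 1").fetchone()
print(updated)  # ('john@example.org',)

with conn:  # Step 8b: delete
    conn.execute("DELETE FROM customers WHERE customer_id = ?", (1,))
remaining = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(remaining)  # 0
```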

Conclusion

In this "DBMS Tutorial for Beginners: Unlocking the Power of Data Management," we’ve explored the essential concepts of Database Management Systems and how to get started with managing data effectively. By understanding the importance of DBMS, familiarizing yourself with basic database concepts, and learning how to create, manipulate, and query databases, you are well on your way to becoming proficient in data management.

As you continue your journey, consider exploring more advanced topics such as database normalization, indexing, and transaction management. The world of data management is vast and full of opportunities, and mastering DBMS will undoubtedly enhance your skills as a developer or data professional.

With practice and experimentation, you’ll unlock the full potential of DBMS and transform the way you work with data. Happy database management!


PHP with MySQL: Best Practices for Database Integration

PHP and MySQL have long formed the backbone of dynamic web development. Even with modern frameworks and languages emerging, this combination remains widely used for building secure, scalable, and performance-driven websites and web applications. As of 2025, PHP with MySQL continues to power millions of websites globally, making it essential for developers and businesses to follow best practices to ensure optimized performance and security.

This article explores best practices for integrating PHP with MySQL and explains how working with expert PHP development companies in the USA can help elevate your web projects to the next level.

Understanding PHP and MySQL Integration

PHP is a server-side scripting language used to develop dynamic content and web applications, while MySQL is an open-source relational database management system that stores and manages data efficiently. Together, they allow developers to create interactive web applications that handle tasks like user authentication, data storage, and content management.

The seamless integration of PHP with MySQL enables developers to write scripts that query, retrieve, insert, and update data. However, without proper practices in place, this integration can become vulnerable to performance issues and security threats.

1. Use Modern Extensions for Database Connections

One of the foundational best practices when working with PHP and MySQL is using a modern database extension. The original mysql_* functions were deprecated in PHP 5.5 and removed in PHP 7.0. Use MySQLi or PDO instead; both support prepared statements, better error handling, and more secure connections.

Modern tools provide better performance, are easier to maintain, and allow for compatibility with evolving PHP standards.

2. Prevent SQL Injection Through Prepared Statements

Security should always be a top priority when integrating PHP with MySQL. SQL injection remains one of the most common vulnerabilities. To combat this, developers must use prepared statements, which ensure that user input is not interpreted as SQL commands.

This approach significantly reduces the risk of malicious input compromising your database. Implementing this best practice creates a more secure environment and protects sensitive user data.

3. Validate and Sanitize User Inputs

Beyond protecting your database from injection attacks, all user inputs should be validated and sanitized. Validation ensures the data meets expected formats, while sanitization cleans the data to prevent malicious content.

This practice not only improves security but also enhances the accuracy of the stored data, reducing errors and improving the overall reliability of your application.

4. Design a Thoughtful Database Schema

A well-structured database is critical for long-term scalability and maintainability. When designing your MySQL database, consider the relationships between tables, the types of data being stored, and how frequently data is accessed or updated.

Use appropriate data types, define primary and foreign keys clearly, and ensure normalization where necessary to reduce data redundancy. A good schema minimizes complexity and boosts performance.

5. Optimize Queries for Speed and Efficiency

As your application grows, the volume of data can quickly increase. Optimizing SQL queries is essential for maintaining performance. Poorly written queries can lead to slow loading times and unnecessary server load.

Developers should avoid requesting more data than necessary and ensure queries are specific and well-indexed. Indexing key columns, especially those involved in searches or joins, helps the database retrieve data more quickly.
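Whether a query can use an index is something you can check rather than guess. The sketch below uses Python's sqlite3 and SQLite's EXPLAIN QUERY PLAN only because they run anywhere. With MySQL, the equivalent tool is the EXPLAIN statement. The table is hypothetical, and the exact wording of plan rows varies between SQLite versions.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list:
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, bio TEXT)")

# Ask only for the column you need instead of SELECT *.
query = "SELECT id FROM users WHERE email = ?"

before = plan(conn, query, ("a@example.com",))
conn.execute("CREATE INDEX idx_users_email ON users(email)")
after = plan(conn, query, ("a@example.com",))

print(before)  # a full-table SCAN of users
print(after)   # a SEARCH that uses idx_users_email
```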

6. Handle Errors Gracefully

Handling database errors in a user-friendly and secure way is important. Error messages should never reveal database structures or sensitive server information to end-users. Instead, errors should be logged internally, and users should receive generic messages that don’t compromise security.

Implementing error handling protocols ensures smoother user experiences and provides developers with insights to debug issues effectively without exposing vulnerabilities.
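Here's a minimal sketch of that split: the full error goes to an internal log, and the caller only sees a generic message. It is written in Python with sqlite3 standing in for PHP and MySQL; in PHP the same idea applies to PDO exceptions. The function and table names are hypothetical.

```python
import logging
import sqlite3

logger = logging.getLogger("app.db")

GENERIC_ERROR = "Something went wrong. Please try again later."


def fetch_email(conn: sqlite3.Connection, user_id: int):
    """Return (email, message_for_user); exactly one of them is None."""
    try:
        row = conn.execute("SELECT email FROM users WHERE id = ?", (user_id,)).fetchone()
    except sqlite3.Error:
        # Full details, including the traceback, stay in the server-side log.
        logger.exception("email lookup failed for user_id=%s", user_id)
        return None, GENERIC_ERROR
    if row is None:
        return None, "No such user."
    return row[0], None


conn = sqlite3.connect(":memory:")
# The users table does not exist yet, so the lookup fails. Because logging is
# not configured here, the log record and traceback go to stderr.
print(fetch_email(conn, 1))  # (None, 'Something went wrong. Please try again later.')
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users VALUES (1, 'a@example.com')")
print(fetch_email(conn, 1))  # ('a@example.com', None)
```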

7. Implement Transactions for Multi-Step Processes

When your application needs to execute multiple related database operations, using transactions ensures that all steps complete successfully or none are applied. This is particularly important for tasks like order processing or financial transfers where data integrity is essential.

Transactions help maintain consistency in your database and protect against incomplete data operations due to system crashes or unexpected failures.
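Here's the pattern in miniature, again sketched with Python's sqlite3 rather than PHP. With PDO, the equivalent calls are beginTransaction(), commit(), and rollBack(). The accounts table is hypothetical. The point is that a failure in the second step also undoes the first.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, from_id: int, to_id: int, amount: int) -> None:
    """Move money between two accounts: both updates apply, or neither does."""
    with conn:  # commits on success, rolls back if an exception escapes
        debit = conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, from_id))
        credit = conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, to_id))
        if debit.rowcount != 1 or credit.rowcount != 1:
            raise ValueError("unknown account")  # rolls back the debit too


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, "
             "balance INTEGER NOT NULL CHECK (balance >= 0))")
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 0)])

transfer(conn, 1, 2, 30)        # succeeds
for bad in [(1, 99, 10),        # second step fails: account 99 doesn't exist
            (1, 2, 500)]:       # first step fails: CHECK forbids a negative balance
    try:
        transfer(conn, *bad)
    except (ValueError, sqlite3.IntegrityError):
        pass

print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [(1, 70), (2, 30)]
```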

8. Secure Your Database Credentials

Sensitive information such as database usernames and passwords should never be hard-coded in the application's source files. Use environment variables or configuration files stored securely outside the public web directory.

This keeps credentials safe from attackers and reduces the risk of accidental leaks through version control or server misconfigurations.
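As an illustration of the environment-variable approach, here is a Python sketch; PHP reads the same variables with getenv(). The variable names DB_HOST, DB_USER, DB_PASSWORD, and DB_NAME are hypothetical. The values live outside the code, and a missing variable fails fast without echoing any secret.

```python
import os


def database_config() -> dict:
    """Read connection settings from environment variables (hypothetical names)."""
    try:
        return {
            "host": os.environ.get("DB_HOST", "localhost"),
            "user": os.environ["DB_USER"],
            "password": os.environ["DB_PASSWORD"],
            "database": os.environ["DB_NAME"],
        }
    except KeyError as exc:
        # Name the missing variable, never the values of the ones that exist.
        raise RuntimeError(f"missing environment variable {exc.args[0]}") from None
```

The variables themselves are set by the deployment environment (a shell profile, a service manager, or a secrets store), so they never appear in version control.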

9. Backup and Monitor Your Database

No matter how robust your integration is, regular backups are critical. A backup strategy ensures you can recover quickly in the event of data loss, corruption, or server failure. Automate backups and store them securely, ideally in multiple locations.

Monitoring tools can also help track database performance, detect anomalies, and alert administrators about unusual activity or degradation in performance.

10. Consider Using an ORM for Cleaner Code

Object-relational mapping (ORM) tools can simplify how developers interact with databases. Rather than writing raw SQL queries, developers can use ORM libraries to manage data through intuitive, object-oriented syntax.

This practice improves productivity, promotes code readability, and makes maintaining the application easier in the long run. While it’s not always necessary, using an ORM can be especially helpful for teams working on large or complex applications.

Why Choose Professional Help?

While these best practices can be implemented by experienced developers, working with specialized PHP development companies in the USA ensures your web application follows industry standards from the start. These companies bring:

Deep expertise in integrating PHP and MySQL

Experience with optimizing database performance

Knowledge of the latest security practices

Proven workflows for development and deployment

Professional PHP development agencies also provide ongoing maintenance and support, helping businesses stay ahead of bugs, vulnerabilities, and performance issues.

Conclusion

PHP and MySQL remain a powerful and reliable pairing for web development in 2025. When integrated using modern techniques and best practices, they offer unmatched flexibility, speed, and scalability.

Whether you’re building a small website or a large-scale enterprise application, following these best practices ensures your PHP and MySQL stack is robust, secure, and future-ready. And if you're seeking expert assistance, consider partnering with one of the top PHP development companies in the USA to streamline your development journey and maximize the value of your project.


Reading and Importing Data in SAS: CSV, Excel, and More

In the world of data analytics, efficient data importation is a fundamental skill. SAS (Statistical Analysis System), a powerful platform for data analysis and statistical computing, offers robust tools to read and import data from various formats, including CSV, Excel, and more. Regardless of whether you are a beginner or overseeing analytics at an enterprise level, understanding how to import data into SAS is the initial step towards obtaining valuable insights.

This article breaks down the most common methods of importing data in SAS, along with best practices and real-world applications—offering value to everyone from learners in a Data Analyst Course to experienced professionals refining their workflows.

Why Importing Data Matters in SAS

Before any analysis begins, the data must be accessible. Importing data correctly ensures integrity, compatibility, and efficiency in processing. SAS supports a range of formats, allowing analysts to work with data from different sources seamlessly. The most common among these are CSV and Excel files due to their ubiquity in business and research environments.

Understanding how SAS handles these files can drastically improve productivity, particularly when working with large datasets or performing repetitive tasks in reporting and modelling.

Importing CSV Files into SAS

Comma-Separated Values (CSV) files are lightweight, easy to generate, and commonly used to exchange data. In SAS, importing CSVs is straightforward.

When importing a CSV file, SAS treats each line as an observation and each comma as a delimiter between variables. This format is ideal for users who deal with exported data from databases or web applications.

Best Practices:

Clean your CSV files before importing—ensure no missing headers, extra commas, or encoding issues.

Use descriptive variable names in the first row of the CSV to streamline your SAS workflow.

Always review the imported data to verify that variable types and formats are interpreted correctly.
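The first check in that list can be scripted before the file ever reaches SAS. This is a minimal Python sketch, not SAS code. It flags:

- missing header names
- duplicate header names
- rows with the wrong number of fields, which is how stray commas usually show up

It compares names case-insensitively because SAS variable names are case-insensitive. Encoding problems are out of scope here.

```python
import csv
import io


def precheck_csv(text: str) -> list:
    """Return a list of problems that commonly break or distort an import."""
    reader = csv.reader(io.StringIO(text))
    header = [name.strip() for name in next(reader, [])]
    problems = []
    if not header or any(not name for name in header):
        problems.append("missing header name")
    if len({name.lower() for name in header}) != len(header):
        problems.append("duplicate header name")
    for row in reader:
        if row and len(row) != len(header):  # blank lines yield [] and are skipped
            problems.append(f"line {reader.line_num}: expected {len(header)} "
                            f"fields, found {len(row)}")
    return problems


print(precheck_csv("id,Name,name\n1,Ann\n2,Bob,x\n"))
# ['duplicate header name', 'line 2: expected 3 fields, found 2']
```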

Professionals undertaking a Data Analyst Course often begin with CSV files due to their simplicity, making this an essential foundational skill.

Importing Excel Files into SAS

Excel files are the go-to format for business users and analysts. They often contain multiple sheets, merged cells, and various data types, which adds complexity to the import process.

SAS provides built-in tools for reading Excel files, including the XLSX engine (used with PROC IMPORT or a LIBNAME statement) and interactive tools such as the Import Wizard and the import utilities in SAS Studio and Enterprise Guide. These tools allow users to preview sheets, specify ranges, and even convert date formats during import.

Key Considerations:

Ensure the Excel file is not open during import to avoid access errors.

Use consistent formatting in Excel—SAS may misinterpret mixed data types within a single column.

If your Excel workbook contains multiple sheets, decide whether you need to import one or all of them.

Advanced users and those enrolled in a Data Analytics Course in Mumbai often work with Excel as part of larger data integration pipelines, making mastery of these techniques critical.

Importing Data from Other Sources

Beyond CSV and Excel, SAS supports numerous other data formats, including:

Text files (.txt): Often used for raw data exports or logs.

Database connections: Through SAS/ACCESS, users can connect to databases like Oracle, SQL Server, or MySQL.

JSON and XML: Increasingly used in web-based and API data integrations.

SAS Datasets (.sas7bdat): Native format with optimised performance for large datasets.

Each format comes with its own import nuances, such as specifying delimiters, encoding schemes, or schema mappings. Familiarity with these enhances flexibility in working with diverse data environments.

Tips for Efficient Data Importing

Here are a few practical tips to improve your SAS data importing skills:

Automate repetitive imports using macros or scheduled jobs.

Validate imported data against source files to catch discrepancies early.

Log and document your import steps—especially when working in team environments or preparing data for audits.

Stay updated: SAS frequently updates its procedures and import capabilities to accommodate new formats and security standards.

Learning and Upskilling with SAS

Importing data is just one piece of the SAS puzzle. For aspiring data professionals, structured training offers the advantage of guided learning, hands-on practice, and industry context. A data analyst training program will typically begin with data handling techniques, setting the stage for more advanced topics like modelling, visualisation, and predictive analytics.

For learners in metro regions, a Data Analytics Course in Mumbai can provide local networking opportunities, expert mentorship, and exposure to real-world projects involving SAS. These programs often include training in data import techniques as part of their curriculum, preparing students for the demands of modern data-driven roles.

Final Thoughts

Reading and importing data into SAS is a vital skill that underpins all subsequent analysis. Whether you're working with CSV files exported from a CRM, Excel spreadsheets from finance teams, or direct connections to enterprise databases, mastering these tasks can significantly enhance your efficiency and accuracy.

By understanding the nuances of each data format and leveraging SAS's powerful import tools, you’ll be better equipped to manage data workflows, ensure data quality, and drive valuable insights. And for those committed to building a career in analytics, a course could be the stepping stone to mastering not just SAS but the entire data science pipeline.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai

Address: 304, 3rd Floor, Pratibha Building. Three Petrol pump, Lal Bahadur Shastri Rd, opposite Manas Tower, Pakhdi, Thane West, Thane, Maharashtra 400602

Phone: 09108238354

Email: [email protected]


Accounting Data Disasters: Common Mistakes and How to Avoid Them

Ever feel like you're drowning in spreadsheets? You're not alone. As financial data grows more complex, even seasoned accountants make critical errors that can ripple through an entire business.

The Data Danger Zone: 5 Common Accounting Mistakes

1. Trusting Data Without Verification

We recently worked with a London retailer who based an entire quarter's strategy on sales projections from unreconciled data. The result? A £45,000 inventory mistake that could have been prevented with basic data validation.

Quick Fix: Implement a "trust but verify" protocol—cross-reference data from multiple sources before making significant decisions. At Flexi Consultancy, we always triangulate data from at least three sources before presenting insights to clients.
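A cross-reference of this kind can be as simple as lining up the same figure from each source and flagging disagreements. Here is a minimal Python sketch. The source names, periods, and amounts are invented for illustration, and a real check would also reconcile individual transactions, not only totals.

```python
from collections import defaultdict


def triangulate(sources: dict, tolerance: float = 0.0) -> dict:
    """Flag periods where sources disagree by more than `tolerance` or where a
    source has no figure. `sources` maps source name -> {period: total}."""
    by_period = defaultdict(dict)
    for source, totals in sources.items():
        for period, amount in totals.items():
            by_period[period][source] = amount
    flagged = {}
    for period, amounts in sorted(by_period.items()):
        missing = sorted(set(sources) - set(amounts))
        spread = max(amounts.values()) - min(amounts.values())
        if missing or spread > tolerance:
            flagged[period] = {"amounts": amounts, "missing_from": missing}
    return flagged


figures = {
    "pos_system":    {"2024-Q1": 120_500, "2024-Q2": 98_000},
    "ledger":        {"2024-Q1": 120_500, "2024-Q2": 112_000},
    "bank_deposits": {"2024-Q1": 120_500},
}
print(triangulate(figures))
# {'2024-Q2': {'amounts': {'pos_system': 98000, 'ledger': 112000}, 'missing_from': ['bank_deposits']}}
```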

2. Excel as Your Only Tool

Excel is brilliant, but it's not designed to be your only data analysis tool when working with large datasets. Its limitations become dangerous when handling complex financial information.

Quick Fix: Supplement Excel with specialized data tools like Power BI, Tableau, or industry-specific financial analytics platforms. Even basic SQL knowledge can transform how you handle large datasets.

3. Ignoring Data Visualization

Numbers tell stories, but only if you can see the patterns. Too many accountants remain stuck in endless rows and columns when visual representation could instantly reveal insights.

Quick Fix: Learn basic data visualization principles and create dashboard summaries for all major reports. Your clients will thank you for making complex data digestible.

4. Overlooking Metadata

The context around your data matters just as much as the numbers themselves. When was it collected? Who entered it? What methodology was used?

Quick Fix: Create standardized metadata documentation for all financial datasets. Something as simple as "last modified" timestamps can prevent major reporting errors.

5. Manual Data Entry (Still!)

We're shocked by how many London accounting firms still manually transfer data between systems. Beyond being inefficient, this practice introduces errors at an alarming rate.

Quick Fix: Invest in proper API connections and automated data transfer protocols between your accounting systems. The upfront cost is nothing compared to the errors you'll prevent.

The Real Cost of Data Mistakes

These aren't just technical issues—they're business killers. One of our clients came to us after their previous accountant's data analysis error led to a six-figure tax miscalculation. Another lost investor confidence due to inconsistent financial reporting stemming from poor data management.

Beyond immediate financial implications, data mistakes erode trust, which is the currency of accounting.

Beyond Fixing: Building a Data-Strong Accounting Practice

Creating reliable financial insights from large datasets requires more than avoiding mistakes—it demands a systematic approach:

Document your data journey: Track every transformation from raw data to final report

Create repeatable processes: Standardize data handling procedures across your practice

Build data literacy: Ensure everyone touching financial information understands basic data principles

Implement peer reviews: Fresh eyes catch mistakes others miss

Need Help Navigating Your Data Challenges?

If you're struggling with financial data management or want to elevate your approach, reach out to our team. We specialize in helping London businesses transform financial data from a headache into a strategic asset.

This post was brought to you by the data nerds at Flexi Consultancy who believe financial insights should be both accurate AND actionable. Follow us for more practical accounting and financial management tips for London SMEs and startups.


Batch Address Validation Tool and Bulk Address Verification Software

When businesses manage thousands—or millions—of addresses, validating each one manually is impractical. That’s where batch address validation tools and bulk address verification software come into play. These solutions streamline address cleansing by processing large datasets efficiently and accurately.

What Is Batch Address Validation?

Batch address validation refers to the automated process of validating multiple addresses in a single operation. It typically involves uploading a file (CSV, Excel, or database) containing addresses, which the software then checks, corrects, formats, and appends with geolocation or delivery metadata.

Who Needs Bulk Address Verification?

Any organization managing high volumes of contact data can benefit, including:

Ecommerce retailers shipping to customers worldwide.

Financial institutions verifying client data.

Healthcare providers maintaining accurate patient records.

Government agencies validating census or mailing records.

Marketing agencies cleaning up lists for campaigns.

Key Benefits of Bulk Address Verification Software

1. Improved Deliverability

Clean data ensures your packages, documents, and marketing mailers reach the right person at the right location.

2. Cost Efficiency

Avoiding undeliverable mail means reduced waste in printing, postage, and customer service follow-up.

3. Database Accuracy

Maintaining accurate addresses in your CRM, ERP, or mailing list helps improve segmentation and customer engagement.

4. Time Savings

What would take weeks manually can now be done in minutes or hours with bulk processing tools.

5. Regulatory Compliance

Meet legal and industry data standards more easily with clean, validated address data.

Features to Expect from a Batch Address Validation Tool

When evaluating providers, check for the following capabilities:

Large File Upload Support: Ability to handle millions of records.

Address Standardization: Correcting misspellings, filling in missing components, and formatting according to regional norms.

Geocoding Integration: Assigning latitude and longitude to each validated address.

Duplicate Detection & Merging: Identifying and consolidating redundant entries.

Reporting and Audit Trails: For compliance and quality assurance.

Popular Batch Address Verification Tools

Here are leading tools in 2025:

1. Melissa Global Address Verification

Features: Supports batch and real-time validation, international formatting, and geocoding.

Integration: Works with Excel, SQL Server, and Salesforce.

2. Loqate Bulk Cleanse

Strengths: Excel-friendly UI, supports uploads via drag-and-drop, and instant insights.

Ideal For: Businesses looking to clean customer databases or mailing lists quickly.

3. Smarty Bulk Address Validation

Highlights: Fast processing, intuitive dashboard, and competitive pricing.

Free Tier: Great for small businesses or pilot projects.

4. Experian Bulk Address Verification

Capabilities: Cleans large datasets with regional postal expertise.

Notable Use Case: Utility companies and financial services.

5. Data Ladder’s DataMatch Enterprise

Advanced Matching: Beyond address validation, it detects data anomalies and fuzzy matches.

Use Case: Enterprise-grade data cleansing for mergers or CRM migrations.

How to Use Bulk Address Verification Software

Using batch tools is typically simple and follows this flow:

Upload Your File: Use CSV, Excel, or database export.

Map Fields: Match your columns with the tool’s required address fields.

Validate & Clean: The software standardizes, verifies, and corrects addresses.

Download Results: Export a clean file with enriched metadata (ZIP+4, geocode, etc.)

Import Back: Upload your clean list into your CRM or ERP system.
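Before uploading a file, it can help to do the "Map Fields" step and some basic de-duplication locally. The sketch below uses only Python's standard library. The input headers (`Street`, `City`, `Postcode`) and the target field names are hypothetical; each real tool defines its own. The sketch also does not verify anything against postal data, which is what the commercial services above are for.

```python
import csv
import io

# Hypothetical mapping from a client's CSV headers to the field names a
# validation tool might expect (real tools define their own field names).
FIELD_MAP = {"Street": "address_line1", "City": "locality", "Postcode": "postal_code"}


def normalize(value: str) -> str:
    """Collapse repeated whitespace and upper-case the value for comparison."""
    return " ".join(value.split()).upper()


def map_and_dedupe(csv_text: str) -> list:
    """Rename columns per FIELD_MAP, normalize values, and drop exact duplicates."""
    seen = set()
    cleaned = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        record = {FIELD_MAP[k]: normalize(v) for k, v in row.items() if k in FIELD_MAP}
        key = tuple(sorted(record.items()))
        if key not in seen:  # keep only the first occurrence of each record
            seen.add(key)
            cleaned.append(record)
    return cleaned


if __name__ == "__main__":
    sample = (
        "Street,City,Postcode\n"
        "10 Downing St,London,SW1A 2AA\n"
        "10  Downing St ,london,sw1a 2aa\n"
        "221B Baker St,London,NW1 6XE\n"
    )
    for rec in map_and_dedupe(sample):
        print(rec)
```

In this sample the second row differs from the first only in spacing and case, so it is dropped as a duplicate.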

Integration Options for Bulk Address Validation

Many vendors offer APIs or direct plugins for:

Salesforce

Microsoft Dynamics

HubSpot

Oracle and SAP

Google Sheets

MySQL / PostgreSQL / SQL Server

Whether you're cleaning one-time datasets or automating ongoing data ingestion, integration capabilities matter.

SEO Use Cases: Why Batch Address Tools Help Digital Businesses

In the context of SEO and digital marketing, bulk address validation plays a key role:

Improved Local SEO Accuracy: Accurate NAP (Name, Address, Phone) data ensures consistent local listings and better visibility.

Better Audience Segmentation: Clean data supports targeted, geo-focused marketing.

Lower Email Bounce Rates: Often tied to postal address quality in cross-channel databases.

Final Thoughts

Batch address validation tools and bulk verification software are essential for cleaning and maintaining large datasets. These platforms save time, cut costs, and improve delivery accuracy—making them indispensable for logistics, ecommerce, and CRM management.

Key Takeaways

Use international address validation to expand globally with fewer delivery errors.

Choose batch tools to clean large datasets in one go.

Prioritize features like postal certification, coverage, geocoding, and compliance.

Integrate with your business tools for automated, real-time validation.

Whether you're validating a single international address or millions in a database, the right tools empower your operations and increase your brand's reliability across borders.


Empowering Your Data Skills: Power BI Training in Riyadh by Simfotix

In today’s data-driven world, the ability to extract insights and create meaningful visualizations from raw data is an invaluable skill. Whether you're a business analyst, a manager, or a decision-maker, Microsoft Power BI has emerged as the go-to tool for interactive data visualization and business intelligence. If you're looking to master this powerful platform, enrolling in Power BI Training in Riyadh could be your smartest move—and Simfotix offers some of the most comprehensive and hands-on training programs in the region.

Why Power BI Training in Riyadh is a Game-Changer

Riyadh, Saudi Arabia’s thriving capital, is becoming a hub for digital transformation. As organizations in both the public and private sectors embrace big data and AI, professionals with Power BI skills are in high demand. Power BI Training in Riyadh not only equips you with the knowledge to create visually rich reports but also empowers you to make data-driven decisions that drive business success.

Simfotix: Your Trusted Partner for Power BI Training in Riyadh

Simfotix is a well-established professional training company with a reputation for delivering cutting-edge programs in data analytics, business intelligence, and digital transformation. Their Power BI Training in Riyadh stands out for its practical approach, real-world case studies, and expert trainers with industry experience.

The training is tailored for all skill levels—whether you’re a beginner looking to understand the basics of Power BI or an advanced user aiming to optimize your dashboards and DAX formulas. Simfotix ensures that every participant walks away with tangible skills and confidence to work on real-world data projects.

What to Expect from Power BI Training in Riyadh

Hands-On Learning: Power BI is a tool best learned by doing. Simfotix’s Power BI Training in Riyadh offers interactive sessions where you’ll get your hands dirty with live datasets. From connecting various data sources like Excel, SQL Server, and web data, to building dashboards and publishing reports, the program is highly immersive.

DAX and Data Modeling: The training covers advanced topics like Data Analysis Expressions (DAX) and data modeling. You’ll learn how to create calculated columns, measures, and KPIs that allow you to extract deeper insights from your data.

Custom Visualizations: Not all data stories can be told with standard charts. Power BI Training in Riyadh also introduces you to custom visuals, enabling you to tailor your reports to specific business needs.

Real-World Business Scenarios: Simfotix emphasizes real-world business use cases during the training. This practical exposure helps participants immediately apply their learning in their work environments.

Certification of Completion: Upon successfully finishing the course, participants receive a certificate from Simfotix validating their expertise in Power BI, a valuable addition to any professional’s resume.

Who Should Attend Power BI Training in Riyadh?

Power BI Training in Riyadh is ideal for:

Business Analysts

Financial Analysts

Data Scientists

Project Managers

Marketing Professionals

IT and Database Professionals

Anyone looking to enhance their data storytelling skills

Why Choose Simfotix for Power BI Training in Riyadh?

Simfotix’s commitment to quality, their experienced instructors, and flexible training schedules make them a top choice for professionals in Riyadh. They offer both in-person and virtual options, allowing participants to choose a mode of learning that suits their lifestyle.

Moreover, Simfotix's focus on post-training support sets them apart. Participants can reach out for mentorship and guidance even after the course ends—a feature that’s incredibly valuable for applying Power BI knowledge in real business scenarios.

Final Thoughts

If you’re serious about taking your data analysis and visualization skills to the next level, enrolling in Power BI Training in Riyadh with Simfotix is a step in the right direction. The training equips you with tools that are not just industry-relevant but also future-proof in a world where data is the new oil.

Start your journey toward data mastery today by visiting https://simfotix.com/ and explore their upcoming Power BI Training in Riyadh sessions. Invest in yourself—because mastering Power BI could be the smartest career move you’ll make this year.


Advanced Database Design

As applications grow in size and complexity, the design of their underlying databases becomes critical for performance, scalability, and maintainability. Advanced database design goes beyond basic tables and relationships—it involves deep understanding of normalization, indexing, data modeling, and optimization strategies.

1. Data Modeling Techniques

Advanced design starts with a well-thought-out data model. Common modeling approaches include:

Entity-Relationship (ER) Model: Useful for designing relational databases.

Object-Oriented Model: Ideal when working with object-relational databases.

Star and Snowflake Schemas: Used in data warehouses for efficient querying.

2. Normalization and Denormalization

Normalization: The process of organizing data to reduce redundancy and improve integrity (up to 3NF or BCNF).

Denormalization: In some cases, duplicating data improves read performance in analytical systems.

3. Indexing Strategies

Indexes are essential for query performance. Common types include:

B-Tree Index: Standard index type in most databases.

Hash Index: Good for equality comparisons.

Composite Index: Combines multiple columns for multi-column searches.

Full-Text Index: Optimized for text search operations.
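A composite index helps most when queries filter on its leading column. The sketch below shows this with SQLite, which ships with Python. The `orders` table and the index name are hypothetical. SQLite's ordinary indexes are B-trees, and the exact wording of `EXPLAIN QUERY PLAN` output varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT, total REAL)"
)
# Composite (multi-column) B-tree index: leading column customer_id, then order_date.
conn.execute("CREATE INDEX idx_orders_customer_date ON orders (customer_id, order_date)")


def plan(sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


# Equality on the leading column plus a range on the second: SQLite can SEARCH the index.
print(plan("SELECT id FROM orders WHERE customer_id = ? AND order_date >= ?", (42, "2025-01-01")))
# Filtering only on the second column cannot seek into the index, so SQLite SCANs
# (either the table or the whole index).
print(plan("SELECT id FROM orders WHERE order_date >= ?", ("2025-01-01",)))
```

Column order therefore matters. An index on `(customer_id, order_date)` serves "orders for this customer in a date range", but not "all orders after a date".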

4. Partitioning and Sharding

Partitioning: Splits a large table into smaller, manageable pieces (horizontal or vertical).

Sharding: Distributes data across multiple machines (shards) for scalability.
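One common way to route rows to shards is to hash a shard key. The minimal sketch below makes two assumptions: the shard key is a customer ID string, and the shard count is fixed. It uses SHA-256 because Python's built-in `hash()` is randomized per process for strings and would not give a stable mapping.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard key to a shard number in [0, num_shards) using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer, then take the modulus.
    return int.from_bytes(digest[:8], "big") % num_shards


if __name__ == "__main__":
    for customer in ["cust-001", "cust-002", "cust-003"]:
        print(customer, "-> shard", shard_for(customer, 4))
```

Simple modulo routing moves most keys whenever the shard count changes. Schemes such as consistent hashing exist to reduce that reshuffling.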

5. Advanced SQL Techniques

Common Table Expressions (CTEs): Temporary result sets for organizing complex queries.

Window Functions: Perform calculations across a set of table rows related to the current row.

Stored Procedures & Triggers: Automate tasks and enforce business logic at the database level.
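CTEs and window functions often appear together. The sketch below runs against SQLite through Python's `sqlite3` module and assumes a bundled SQLite of version 3.25 or later, which window functions require. The `sales` table and its values are made up for illustration. The CTE produces monthly totals, and the window function adds a running total per region.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, month TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("North", "2025-01", 100), ("North", "2025-02", 150),
     ("South", "2025-01", 80), ("South", "2025-02", 120)],
)

query = """
WITH monthly AS (                      -- CTE: a named, temporary result set
    SELECT region, month, SUM(amount) AS total
    FROM sales
    GROUP BY region, month
)
SELECT region, month, total,
       SUM(total) OVER (               -- window function: running total per region
           PARTITION BY region ORDER BY month
       ) AS running_total
FROM monthly
ORDER BY region, month
"""

for row in conn.execute(query):
    print(row)
```

Unlike `GROUP BY`, the window function keeps every row and attaches the calculation alongside it.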

6. Data Integrity and Constraints

Primary and Foreign Keys: Enforce relational integrity.

CHECK Constraints: Validate data against specific rules.

Unique Constraints: Ensure column values are not duplicated.
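Here is a small SQLite sketch showing the database itself rejecting bad rows. The table and column names are hypothetical. One SQLite-specific detail: foreign keys are enforced only after `PRAGMA foreign_keys = ON` has been set on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when this is on
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE                              -- unique constraint
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),  -- foreign key
    total       REAL CHECK (total >= 0)                     -- CHECK constraint
);
""")
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")


def try_insert(sql, params):
    """Run an INSERT and report whether a constraint rejected it."""
    try:
        conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


print(try_insert("INSERT INTO customers (id, email) VALUES (?, ?)", (2, "a@example.com")))  # duplicate email
print(try_insert("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (99, 10.0)))       # unknown customer
print(try_insert("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, -5.0)))        # negative total
print(try_insert("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, 25.0)))        # valid row
```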

7. Security and Access Control

Security is crucial in database design. Best practices include:

Implementing role-based access control (RBAC).

Encrypting sensitive data both at rest and in transit.

Using parameterized queries to prevent SQL injection.

8. Backup and Recovery Planning

Design your database with disaster recovery in mind:

Automate daily backups.

Test recovery procedures regularly.

Use replication for high availability.
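The "test recovery" step can be automated. The sketch below uses the online backup method of Python's `sqlite3` module (`Connection.backup`, Python 3.7+). It then checks that the copy matches the source. The `accounts` table is hypothetical, and a real setup would back up to a file or remote storage rather than to memory.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
source.executemany("INSERT INTO accounts (balance) VALUES (?)", [(100.0,), (250.0,)])
source.commit()

# Take an online backup of the whole database into another connection.
backup = sqlite3.connect(":memory:")
source.backup(backup)


def table_fingerprint(conn):
    """A cheap consistency check: row count and sum of balances."""
    return conn.execute("SELECT COUNT(*), SUM(balance) FROM accounts").fetchone()


# "Test recovery": the restored copy must be readable and match the source.
assert table_fingerprint(backup) == table_fingerprint(source)
print("backup verified:", table_fingerprint(backup))
```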

9. Monitoring and Optimization

Tools like pgAdmin (PostgreSQL), MySQL Workbench, and MongoDB Compass help in identifying bottlenecks and optimizing performance.

10. Choosing the Right Database System

Relational: MySQL, PostgreSQL, Oracle (ideal for structured data and ACID compliance).

NoSQL: MongoDB, Cassandra, CouchDB (great for scalability and unstructured data).

NewSQL: CockroachDB, Google Spanner (combines NoSQL scalability with relational features).

Conclusion

Advanced database design is a balancing act between normalization, performance, and scalability. By applying best practices and modern tools, developers can ensure that their systems are robust, efficient, and ready to handle growing data demands. Whether you’re designing a high-traffic e-commerce app or a complex analytics engine, investing time in proper database architecture pays off in the long run.


Remediation of SQLi

Defense Option 1: Prepared Statements (with Parameterized Queries)

Prepared statements ensure that an attacker cannot change the intent of a query, even if they insert SQL commands into the input. For example, suppose an attacker enters the userID tom' or '1'='1. A parameterized query is not vulnerable to this: it simply looks for a username that literally matches the entire string tom' or '1'='1.
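Here is that example as a minimal sketch, using Python's `sqlite3` module and its `?` placeholders. The `users` table and both function names are hypothetical. The unsafe version shows what goes wrong when input is pasted into the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, email TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("tom", "tom@example.com"), ("ann", "ann@example.com")])


def find_user_unsafe(user_id):
    # VULNERABLE: user input is concatenated into the SQL text.
    return conn.execute(f"SELECT username FROM users WHERE username = '{user_id}'").fetchall()


def find_user_safe(user_id):
    # SAFE: the ? placeholder sends user_id as data, never as SQL.
    return conn.execute("SELECT username FROM users WHERE username = ?", (user_id,)).fetchall()


payload = "tom' or '1'='1"
print(find_user_unsafe(payload))  # the injected OR clause matches every row
print(find_user_safe(payload))    # looks for a user literally named "tom' or '1'='1"
```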

Defense Option 2: Stored Procedures

The difference between prepared statements and stored procedures is that the SQL code for a stored procedure is defined and stored in the database itself, and then called from the application.

Both techniques are equally effective at preventing SQL injection, so it is reasonable to choose whichever approach makes the most sense for you. Stored procedures are not always safe from SQL injection, however. Certain standard stored procedure constructs have the same effect as parameterized queries when implemented safely, that is, when the stored procedure does not include any unsafe dynamic SQL generation. That is the norm for most stored procedure languages.

Defense Option 3: Allow-List Input Validation

Various parts of SQL queries aren't legal locations for the use of bind variables, such as the names of tables or columns, and the sort order indicator (ASC or DESC). In such situations, input validation or query redesign is the most appropriate defense. For the names of tables or columns, ideally those values come from the code, and not from user parameters.

If user parameter values are used to select different table or column names, map those values to the legal, expected table or column names so that unvalidated user input never ends up in the query. Note that this is a symptom of poor design, and a full rewrite should be considered if time allows.
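A minimal sketch of that mapping for a user-chosen sort column and direction follows. The option names and columns are hypothetical. The key point is that only strings from the code's own dictionaries are ever placed into the SQL text.

```python
import sqlite3

# Hypothetical mapping from user-facing sort options to known column names.
SORT_COLUMNS = {"name": "username", "joined": "created_at"}
SORT_ORDERS = {"asc": "ASC", "desc": "DESC"}


def build_user_query(sort_key: str, order: str) -> str:
    """Build an ORDER BY clause only from allow-listed identifiers."""
    column = SORT_COLUMNS.get(sort_key)
    direction = SORT_ORDERS.get(order.lower())
    if column is None or direction is None:
        raise ValueError("unsupported sort option")
    # Only values from the dictionaries above reach the SQL text.
    return f"SELECT username, created_at FROM users ORDER BY {column} {direction}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, created_at TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("tom", "2024-05-01"), ("ann", "2023-02-10")])
print(conn.execute(build_user_query("joined", "desc")).fetchall())
```

Anything outside the allow-list raises an error, rather than being escaped and passed through.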

Defense Option 4: Escaping All User-Supplied Input

This technique should only be used as a last resort, when none of the above are feasible. Input validation is probably a better choice as this methodology is frail compared to other defenses and we cannot guarantee it will prevent all SQL Injection in all situations.

This technique escapes user input before it is placed in a query. It is usually recommended only for retrofitting legacy code when implementing input validation isn't cost effective.

3 notes

·

View notes

Text

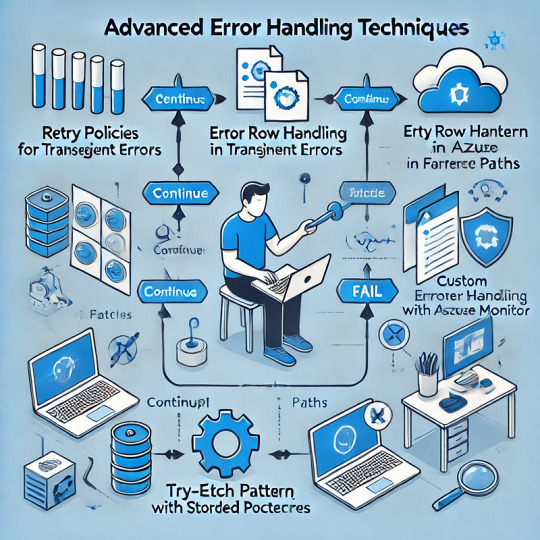

Advanced Error Handling Techniques in Azure Data Factory

Azure Data Factory (ADF) is a powerful data integration tool, but handling errors efficiently is crucial for building robust data pipelines. This blog explores advanced error-handling techniques in ADF to ensure resilience, maintainability, and better troubleshooting.

1. Understanding Error Types in ADF

Before diving into advanced techniques, it’s essential to understand common error types in ADF:

Transient Errors — Temporary issues such as network timeouts or throttling.

Data Errors — Issues with source data integrity, format mismatches, or missing values.

Configuration Errors — Incorrect linked service credentials, dataset configurations, or pipeline settings.

System Failures — Service outages or failures in underlying compute resources.

2. Implementing Retry Policies for Transient Failures

ADF provides built-in retry mechanisms to handle transient errors. When configuring activities:

Enable Retries — Set the retry count and interval in activity settings.

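Use Exponential Backoff — The built-in activity retry waits a fixed interval between attempts, so delays that grow between attempts have to be built into the pipeline itself (for example, with an Until loop and a Wait activity).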

Leverage Polybase for SQL — If integrating with Azure Synapse, ensure the retry logic aligns with PolyBase behavior.

Example JSON snippet for retry settings on an ADF activity (3 retries, 30 seconds apart):

    "policy": {
        "retry": 3,
        "retryIntervalInSeconds": 30
    }
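The backoff pattern itself is easiest to see outside ADF. The Python sketch below is generic and illustrative: `TransientError`, the delay values, and the `call` argument are hypothetical and are not ADF settings. Each retry waits roughly twice as long as the previous one, up to a cap, plus a little random jitter.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical stand-in for a retryable failure (timeout, throttling)."""


def backoff_delays(retries, base=2.0, cap=60.0):
    """Delays in seconds before each retry: base, 2*base, 4*base, ... capped."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


def call_with_retries(call, retries=3, base=2.0, cap=60.0, sleep=time.sleep):
    """Run call(); on TransientError wait with exponential backoff and retry."""
    delays = backoff_delays(retries, base, cap)
    for attempt in range(retries + 1):
        try:
            return call()
        except TransientError:
            if attempt == retries:
                raise  # out of retries: surface the failure
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delays[attempt] + random.uniform(0, 0.1 * delays[attempt]))


print(backoff_delays(5))  # [2.0, 4.0, 8.0, 16.0, 32.0]
```

The `sleep` parameter is there so the retry loop can be exercised in tests without actually waiting.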

3. Using Error Handling Paths in Data Flows

Mapping Data Flows in ADF provide “Error row handling” settings on database sinks. The options come down to:

Continue on Error — Skips problematic records and processes valid ones.

Redirect to Error Output — Routes bad data to a separate table or storage for investigation.

Fail on Error — Stops the execution on encountering issues.

Example: Redirecting bad records written by a sink.

In the Data Flow, select the sink and open its settings.

Under “Error row handling”, choose “Continue on error” and enable output of rejected data.

Store bad records in a storage account for debugging.

4. Implementing Try-Catch Patterns in Pipelines

ADF doesn’t have a traditional try-catch block, but we can emulate it using:

Failure Paths — Use activity dependencies to handle failures.

Set Variables & Logging — Capture error messages dynamically.

Alerting Mechanisms — Integrate with Azure Monitor or Logic Apps for notifications.

Example: Using Failure Paths

Add a Web Activity after a Copy Activity.

Configure Web Activity to log errors in an Azure Function or Logic App.

Set the dependency condition to “Failure” for error handling.

5. Using Stored Procedures for Custom Error Handling

For SQL-based workflows, handling errors within stored procedures enhances control.

Example:

    BEGIN TRY
        INSERT INTO target_table (col1, col2)
        SELECT col1, col2 FROM source_table;
    END TRY
    BEGIN CATCH
        INSERT INTO error_log (error_message, error_time)
        VALUES (ERROR_MESSAGE(), GETDATE());
    END CATCH

Use RETURN codes to signal success/failure.

Log errors to an audit table for investigation.

6. Logging and Monitoring Errors with Azure Monitor

To track failures effectively, integrate ADF with Azure Monitor and Log Analytics.

Enable diagnostic logging in ADF.

Capture execution logs, activity failures, and error codes.

Set up alerts for critical failures.

Example: Query failed activities in Log Analytics:

    ADFActivityRun
    | where Status == "Failed"
    | project PipelineName, ActivityName, ErrorMessage, Start, End

7. Handling API & External System Failures

When integrating with REST APIs, handle external failures by:

Checking HTTP Status Codes — Use Web Activity to validate responses.

Implementing Circuit Breakers — Stop repeated API calls on consecutive failures.

Using Durable Functions — Store state for retrying failed requests asynchronously.

Example: Configure Web Activity to log failures:

    "dependsOn": [
        {
            "activity": "API_Call",
            "dependencyConditions": ["Failed"]
        }
    ]
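A circuit breaker usually lives in the code that calls the API (for example, inside an Azure Function) rather than in pipeline JSON. The Python class below is a minimal, generic sketch of the pattern. The class name, threshold and cool-down values are illustrative, and it is not an ADF or Azure API. After a set number of consecutive failures it stops calling the API. Once a cool-down has passed, it lets a single trial call through.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are blocked because the circuit is open."""


class CircuitBreaker:
    """Opens after N consecutive failures, then rejects calls until a
    cool-down period has passed; one trial call is allowed after that."""

    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            self.opened_at = None  # cool-down over: allow a trial ("half-open") call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # any success closes the circuit again
        return result
```

If the trial call fails, the failure count is still at the threshold, so the circuit reopens immediately. Injecting `clock` makes the timing testable.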

8. Leveraging Custom Logging with Azure Functions

For advanced logging and alerting:

Use an Azure Function to log errors to an external system (SQL DB, Blob Storage, Application Insights).

Pass activity parameters (pipeline name, error message) to the function.

Trigger alerts based on severity.

Conclusion

Advanced error handling in ADF involves:

✅ Retries and Exponential Backoff for transient issues.

✅ Error Redirects in Data Flows to capture bad records.

✅ Try-Catch Patterns using failure paths.

✅ Stored Procedures for custom SQL error handling.

✅ Integration with Azure Monitor for centralized logging.

✅ API and External Failure Handling for robust external connections.

By implementing these techniques, you can enhance the reliability and maintainability of your ADF pipelines. 🚀

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Exploring the Key Facets of Data in Data Science

Data is at the heart of everything in data science. But it’s not just about having a lot of data; it’s about knowing how to handle it, process it, and extract meaningful insights from it. In data science, we work with data in many different forms, and each form has its own unique characteristics. In this article, we’re going to take a closer look at the important aspects of data that data scientists must understand to unlock its full potential.

1. Types of Data: Understanding the Basics

When you dive into data science, one of the first things you’ll encounter is understanding the different types of data. Not all data is created equal, and recognizing the type you’re working with helps you figure out the best tools and techniques to use.

Structured Data: This is the kind of data that fits neatly into rows and columns, like what you'd find in a spreadsheet or a relational database. Structured data is easy to analyze, and tools like SQL are perfect for working with it. Examples include sales data, employee records, or product inventories.

Unstructured Data: Unstructured data is a bit trickier. It’s not organized in any set format. Think about things like social media posts, emails, or videos. This type of data often requires advanced techniques like natural language processing (NLP) or computer vision to make sense of it.

Semi-structured Data: This type of data is somewhere in the middle. It has some structure but isn’t as organized as structured data. Examples include XML or JSON files. You’ll often encounter semi-structured data when pulling information from websites or APIs.

Knowing the type of data you’re dealing with is crucial. It will guide you in selecting the right tools and methods for analysis, which is the foundation of any data science project.

2. Data Quality: The Foundation of Reliable Insights

Good data is essential to good results. Data quality is a huge factor in determining how reliable your analysis will be. Without high-quality data, the insights you generate could be inaccurate or misleading.

Accuracy: Data needs to be accurate. Even small errors can distort your analysis. If the numbers you're working with are wrong, the conclusions you draw won’t be reliable. This could be something like a typo in a database entry or an incorrect sensor reading.

Consistency: Consistency means the data is uniform across different sources. For example, you don't want to have "NY" and "New York" both used to describe the same location in different parts of your dataset. Consistency makes it easier to work with the data without having to constantly fix small discrepancies.

Completeness: Missing data is a common issue, but it can be a real problem. If you’re working with a dataset that has missing values, your model or analysis might not be as effective. Sometimes you can fill in missing data or remove incomplete rows, but it’s important to be mindful of how these missing pieces affect your overall results.
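The "NY" versus "New York" problem is often handled with a lookup of known variants. This is a minimal sketch; the variant table is hypothetical and in practice would be built from the values actually found in your dataset.

```python
# Hypothetical lookup of known variants -> one canonical spelling.
CANONICAL_LOCATIONS = {"ny": "New York", "nyc": "New York", "new york": "New York"}


def canonical_location(value: str) -> str:
    """Return the canonical name for known variants; otherwise the trimmed input."""
    cleaned = value.strip()
    return CANONICAL_LOCATIONS.get(cleaned.lower(), cleaned)


records = ["NY", "New York", " nyc ", "Boston"]
print([canonical_location(r) for r in records])
```

Values that aren't in the lookup pass through unchanged, so they can be reviewed later rather than silently altered.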

Data scientists spend a lot of time cleaning and validating data because, at the end of the day, your conclusions are only as good as the data you work with.

3. Transforming and Preprocessing Data

Once you’ve got your data, it often needs some work before you can start drawing insights from it. Raw data usually isn’t in the best form for analysis, so transforming and preprocessing it is an essential step.

Normalization and Scaling: When different features of your data are on different scales, it can cause problems with some machine learning algorithms. For example, if one feature is measured in thousands and another is measured in ones, the algorithm might give more importance to the larger values. Normalizing or scaling your data ensures that everything is on a comparable scale.

Feature Engineering: This involves creating new features from the data you already have. It’s a way to make your data more useful for predictive modeling. For example, if you have a timestamp, you could extract features like day of the week, month, or season to see if they have any impact on your target variable.

Handling Missing Data: Missing data happens. Sometimes it’s due to errors in data collection, and sometimes it’s just unavoidable. You might choose to fill in missing values based on the mean or median, or, in some cases, remove rows or columns that have too many missing values.
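The three steps above can be sketched with nothing but Python's standard library. Libraries such as pandas and scikit-learn offer equivalents, but are not used here. The income values and the timestamp are made up. Min-max scaling is only one normalization choice, and median filling is only one imputation strategy.

```python
from datetime import datetime
from statistics import median


def min_max_scale(values):
    """Rescale numbers to the range [0, 1] (min-max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid division by zero for constant columns
    return [(v - lo) / (hi - lo) for v in values]


def fill_missing_with_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    m = median(observed)
    return [m if v is None else v for v in values]


def date_features(ts: str):
    """Derive simple features from an ISO-8601 timestamp string."""
    dt = datetime.fromisoformat(ts)
    return {"day_of_week": dt.weekday(), "month": dt.month}  # weekday(): Monday == 0


incomes = [42000, None, 58000, 150000, None]
filled = fill_missing_with_median(incomes)
print(filled)                                   # [42000, 58000, 58000, 150000, 58000]
print(min_max_scale(filled))
print(date_features("2025-03-14T09:30:00"))     # {'day_of_week': 4, 'month': 3}
```

Here missing values are filled before scaling, so the scaler never sees a gap.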

These steps ensure that your data is clean, consistent, and ready for the next stage of analysis.

4. Integrating and Aggregating Data

In real-world projects, you rarely get all your data in one place. More often than not, data comes from multiple sources: databases, APIs, spreadsheets, or even data from sensors. This is where data integration comes in.

Data Integration: This process involves bringing all your data together into one cohesive dataset. Sometimes, this means matching up similar records from different sources, and other times it means transforming data into a common format. Integration helps you work with a unified dataset that tells a full story.

Data Aggregation: Sometimes you don’t need all the data. Instead, you might want to summarize it. Aggregation involves combining data points into a summary form. For example, if you're analyzing sales, you might aggregate the data by region or time period (like daily or monthly sales). This helps make large datasets more manageable and reveals high-level insights.
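Aggregating sales by region and month can be sketched as follows. The records are made up, and dates are assumed to be ISO strings (YYYY-MM-DD), so the month is simply the first seven characters.

```python
from collections import defaultdict

sales = [
    {"region": "North", "date": "2025-01-05", "amount": 120.0},
    {"region": "North", "date": "2025-01-20", "amount": 80.0},
    {"region": "South", "date": "2025-01-11", "amount": 200.0},
    {"region": "North", "date": "2025-02-02", "amount": 50.0},
]


def monthly_totals_by_region(rows):
    """Sum amounts per (region, YYYY-MM) pair."""
    totals = defaultdict(float)
    for row in rows:
        month = row["date"][:7]  # "YYYY-MM" prefix of an ISO date string
        totals[(row["region"], month)] += row["amount"]
    return dict(totals)


print(monthly_totals_by_region(sales))
```

Four transaction rows collapse into three region-month totals. The same idea scales up to SQL `GROUP BY` or a dataframe group-by on large datasets.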

When you're dealing with large datasets, integration and aggregation help you zoom out and see the bigger picture.

5. The Importance of Data Visualization

Data visualization is all about telling the story of your data. It’s one thing to find trends and patterns, but it’s another to be able to communicate those findings in a way that others can understand. Visualization is a powerful tool for data scientists because it makes complex information more accessible.

Spotting Trends: Graphs and charts can help you quickly see patterns over time, like a rise in sales during the holiday season or a dip in customer satisfaction after a product launch.

Identifying Outliers: Visualizations also help you identify anomalies in the data. For instance, a bar chart might highlight an unusually high spike in sales that warrants further investigation.

Making Sense of Complex Data: Some datasets are just too large to process without visualization. A heatmap or scatter plot can distill complex datasets into something much easier to digest.

Effective data visualization turns raw data into insights that are easier to communicate, which is a critical skill for any data scientist.

6. Data Security and Privacy: Ethical Considerations

In today’s data-driven world, privacy and security are huge concerns. As a data scientist, it’s your responsibility to ensure that the data you’re working with is handled ethically and securely.

Data Anonymization: If you’re working with sensitive information, it’s crucial to remove personally identifiable information (PII) to protect people's privacy. Anonymizing data ensures that individuals’ identities remain secure.

Secure Data Storage: Data should always be stored securely to prevent unauthorized access. This might mean using encrypted storage solutions or following best practices for data security.

Ethical Use of Data: Finally, data scientists need to be mindful of how the data is being used. For example, if you’re using data to make business decisions or predictions, it’s essential to ensure that your models are not biased in ways that could negatively impact certain groups of people.

Data security and privacy are not just legal requirements—they’re also ethical obligations that should be taken seriously in every data science project.

Conclusion

Data is the foundation of data science, but it's not just about having lots of it. The true power of data comes from understanding its many facets, from its structure and quality to how it’s processed, integrated, and visualized. By mastering these key elements, you can ensure that your data science projects are built on solid ground, leading to reliable insights and effective decision-making.

If you’re ready to dive deeper into data science and learn more about handling data in all its forms, seeking out a data science course nearby might be a great way to gain hands-on experience and expand your skill set.
