#cloudflare detection

Text

🚫🔍 Struggling with "noindex detected" errors in Google Search Console? Our latest guide reveals where the issue might stem from and provides actionable steps to resolve it! Boost your site's visibility today! 🌐✨ #SEO #GoogleSearchConsole #WebmasterTools

#401 Unauthorized response#CDN issues#Cloudflare#digital marketing#Google indexing error#Google Search Console#Googlebot#indexing problems#indexing visibility#noindex detected#outdated URLs#Rich Results Tester#robots.txt#search engine optimization#SEO troubleshooting#website indexing issues#X-Robots-Tag error

0 notes

Text

It's Time To Investigate SevenArt.ai

SevenArt.ai is a website that uses AI to generate images.

Except, that's not all it can do.

It can also overlay AI filters onto images to create the illusion that the algorithm created these images.

And its primary image source is Tumblr.

It scrapes the site for recent images that are at least 10 days old and have some notes attached, and it copies the tags to make the unsuspecting user think that the post came from a genuine user.

No image is safe. Art, photography, screenshots, you name it.

Initially I thought these were bots that just reposted images from their site, as well as bastardizations of pictures from across Tumblr, until a user by the name of @nataliedecorsair discovered that these "bots" can also block users and restrict replies.

Not only that, but these bots do not procreate and multiply like most bots do. Or at least, they have.

The following is the list of bots that have been found on this very site. Brace yourself. It's gonna be a long one:

@giannaaziz1998blog

@kennedyvietor1978blog

@nikb0mh6bl

@z4uu8shm37

@xguniedhmn

@katherinrubino1958blog

@3neonnightlifenostalgiablog

@cyberneticcreations58blog

@neomasteinbrink1971blog

@etharetherford1958blog

@punxajfqz1

@camicranfill1967blog

@1stellarluminousechoblog

@whwsd1wrof

@bnlvi0rsmj

@steampunkstarshipsafari90blog

@surrealistictechtales17blog

@2steampunksavvysiren37blog

@krispycrowntree

@voucwjryey

@luciaaleem1961blog

@qcmpdwv9ts

@2mplexltw6

@sz1uwxthzi

@laurenesmock1972blog

@rosalinetritsch1992blog

@chereesteinkirchner1950blog

@malindamadaras1996blog

@1cyberneticdreamscapehubblog

@neonfuturecityblog

@olindagunner1986blog

@neonnomadnirvanablog

@digitalcyborgquestblog

@freespiritfusionblog

@piacarriveau1990blog

@3technoartisticvisionsblog

@wanderlustwineblissblog

@oyqjfwb9nz

@maryannamarkus1983blog

@lashelldowhower2000blog

@ovibigrqrw

@ywldujyr6b

@yudacquel1961blog

@neotechcreationsblog

@wildernesswonderquest87blog

@cybertroncosmicflow93blog

@emeldaplessner1996blog

@neuralnetworkgallery78blog

@dunstanrohrich1957blog

@juanitazunino1965blog

@natoshaereaux1970blog

@aienhancedaestheticsblog

@techtrendytreks48blog

@cgvlrktikf

@digitaldimensiondioramablog

@pixelpaintedpanorama91blog

@futuristiccowboyshark

@digitaldreamscapevisionsblog

@janishoppin1950blog

The oldest ones were created in March and started scraping in June/July, and later additions to the family were created in July.

So, I have come to the conclusion that these accounts might be run by a combination of bot and human. Cyborg, if you will.

But it still doesn't answer my main question:

Who is running the whole operation?

The site itself gave us zero answers to work with.

No copyright notice, no link to the engine the site runs on, nothing except for the sign-in thingy (which I tried).

I gave the site a fake email and a shitty password.

Turns out it doesn't function like most sites that ask for an email and password.

It didn't check the burner email, the password isn't dotted out, so it's available for the whole world to see, and, and this is the important thing...

My browser didn't detect that this was an email and password thingy.

And there was no log off feature.

This could mean two things.

Either we have a site that doesn't have a functioning email and password database, or that we have a bunch of gullible people throwing their email and password in for people to potentially steal.

I can't confirm or deny either of these, because, again, the site gives me little to work with.

The code? Generic as all hell.

Tried searching for more information about this site, like the server it's on, or who owned the site, or something. ANYTHING.

Multiple sites pulled me in different directions. One site said it originates in Iceland. Others say it's in California or Canada.

Luckily, the server it used was the same. It's powered by Cloudflare.

Unfortunately, I have no idea what to do with any of this information.

If you have any further information about this site, let me know.

Until there is a clear answer, we need to keep doing what we are doing.

Spread the word and report these cretins.

If they want attention, then they are gonna get the worst attention.

12K notes

Text

Cloudflare thinks this stuff is also a useful tool to detect bot activity.

“No real human would go four links deep into a maze of AI-generated nonsense,” Cloudflare’s trio wrote. “Any visitor that does is very likely to be a bot, so this gives us a brand-new tool to identify and fingerprint bad bots, which we add to our list of known bad actors.”

29 notes

Text

Cybercriminals Abusing Cloudflare Tunnels to Evade Detection and Spread Malware

Source: https://thehackernews.com/2024/08/cybercriminals-abusing-cloudflare.html

More info:

https://www.esentire.com/blog/quartet-of-trouble-xworm-asyncrat-venomrat-and-purelogs-stealer-leverage-trycloudflare

https://www.proofpoint.com/us/blog/threat-insight/threat-actor-abuses-cloudflare-tunnels-deliver-rats

6 notes

Text

During a Fox News interview earlier this week, multi-hyphenate billionaire and X-formerly-Twitter owner Elon Musk claimed that a "massive cyberattack" that repeatedly took down the site yesterday came from Ukraine.

But, as Wired reports, his evidence is flimsy at best. Musk claimed that "IP addresses" behind the attack originated in the embattled European nation. But as experts told the publication, that's far from actual proof.

"What we can conclude from the IP data is the geographic distribution of traffic sources, which may provide insights into botnet composition or infrastructure used," connectivity firm Zayo chief security officer Shawn Edwards told Wired. "What we can’t conclude with certainty is the actual perpetrator’s identity or intent."

One researcher claimed in an interview with Wired that Ukraine wasn't even among the top 20 origins of the IP addresses involved in the attack.

Since then, a pro-Palestine hacking group called Dark Storm Team claimed responsibility for the attacks in now-deleted Telegram posts.

And considering some glaring technical oversights, the hackers seem to have had a surprisingly easy time taking down the social media platform. Security researchers told Wired that several X origin servers, which are designated to respond to web requests, weren't secured by the company's Cloudflare protection.

Cloudflare offers services allowing websites to automatically detect and mitigate distributed denial-of-service (DDoS) attacks, like the most recent cyberattack targeting X.

"The botnet was directly attacking the IP and a bunch more on that X subnet yesterday," independent security researcher Kevin Beaumont told Wired. "It's a botnet of cameras and DVRs."

Put simply, X was ill-prepared, despite DDoS attacks being an extremely common threat to virtually all services on the internet. The company's loose protections may have even allowed the incident to be far worse than it would've been otherwise.

It'd be far from the first time X has been thrown into chaos due to questionable decision-making and a bevy of bugs.

#technology#twitter#internet#cybersecurity#again we see why giving Elon and his minions access to sensitive systems and info is a giant mistake

2 notes

Text

Build a Seamless Crypto Exchange Experience with Binance Clone Software

Binance Clone Script

The Binance clone script is a fully functional, ready-to-use solution designed for launching a seamless cryptocurrency exchange. It features a microservice architecture and offers advanced functionalities to enhance user experience. With Plurance’s secure and innovative Binance Clone Software, users can trade bitcoins, altcoins, and tokens quickly and safely from anywhere in the world.

This clone script includes essential features such as liquidity APIs, dynamic crypto pairing, a comprehensive order book, various trading options, and automated KYC and AML verifications, along with a core wallet. By utilizing our ready-to-deploy Binance trading clone, business owners can effectively operate a cryptocurrency exchange platform similar to Binance.

Features of Binance Clone Script

Security Features

AML and KYC Verification: Ensures compliance with anti-money laundering and know-your-customer regulations.

Two-Factor Authentication: Provides an additional security measure during user logins.

CSRF Protection: Shields the platform from cross-site request forgery threats.

DDoS Mitigation: Safeguards the system against distributed denial-of-service attacks.

Cloudflare Integration: Enhances security and performance through advanced web protection.

Time-Locked Transactions: Safeguards transactions by setting time limits before processing.

Cold Wallet Storage: Keeps the majority of funds offline for added security.

Multi-Signature Wallets: Mandates multiple confirmations for transactions, boosting security.

Notifications via Email and SMS: Keeps users informed of account activities and updates.

Login Protection: Monitors login attempts to detect suspicious activity.

Biometric Security: Utilizes fingerprint or facial recognition for secure access.

Data Protection Compliance: Adheres to relevant data privacy regulations.

Admin Features of Binance Clone Script

User Account Management: Access detailed user account information.

Token and Cryptocurrency Management: Add and manage various tokens and cryptocurrencies.

Admin Dashboard: A comprehensive interface for managing platform operations.

Trading Fee Setup: Define and adjust trading fees for transactions.

Payment Gateway Integration: Manage payment processing options effectively.

AML and KYC Oversight: Monitor compliance processes for user verification.

User Features of Binance Clone Script

Cryptocurrency Deposits: Facilitate easy deposit of various cryptocurrencies.

Instant Buy/Sell Options: Allow users to trade cryptocurrencies seamlessly.

Promotional Opportunities: Users can take advantage of promotional features to maximize profits.

Transaction History: Access a complete record of past transactions.

Cryptocurrency Wallet Access: Enable users to manage their digital wallets.

Order Tracking: Keep track of buy and sell orders for better trading insights.

Binance Clone Development Process

The following steps outline how our blockchain experts develop a highly effective cryptocurrency exchange platform inspired by Binance.

Requirement Analysis

We begin by assessing and gathering your business requirements, such as the type of trades you want to facilitate, your target audience, geographical focus, and whether the exchange is intended for short-term or long-term operation.

Strategic Planning

After collecting your specifications, our team formulates a detailed plan to effectively bring your ideas to life. This strategy aims to deliver the best results tailored to your business requirements.

Design and Development

Our developers excel in UI/UX design, creating visually appealing interfaces. They craft a unique trading platform using the latest technologies and tools.

Technical Implementation

Once the design is complete, we concentrate on technical aspects, integrating essential features such as wallet connectors, escrow services, payment options, and robust security measures to enhance platform functionality.

Quality Assurance Testing

After development, we conduct thorough testing to ensure the exchange platform operates smoothly. This includes security assessments, wallet and API evaluations, performance testing, and verifying the effectiveness of the trading engine.

Deployment and Support

Following successful testing, we proceed with the deployment of your exchange platform. We also gather user feedback to make improvements and introduce new features, ensuring the platform remains robust and up-to-date.

Revenue Streams of a Binance Clone Script

Launching a cryptocurrency exchange using a robust Binance clone can create multiple avenues for generating revenue.

Trading Fees

The operator of the Binance clone platform has the discretion to set a nominal fee on each trade executed.

Withdrawal Charges

If users wish to withdraw their cryptocurrencies, a fee may be applied when they request to transfer funds out of the Binance clone platform.

Margin Trading Fees

With the inclusion of margin trading functionalities, fees can be applied whenever users execute margin transactions on the platform.

Listing Fees

The platform owner can impose a listing fee for users who want to feature their cryptocurrencies or tokens on the exchange.

Referral Program

Our Binance clone script includes a referral program that allows users to earn commissions by inviting friends to register on the trading platform.

API Access Fees

Developers can integrate their trading bots or other applications by paying for access to the platform’s API.

Staking and Lending Fees

The administrator has the ability to charge fees for services that enable users to stake or lend their cryptocurrencies to earn interest.

Launchpad Fees

The Binance clone software offers a token launchpad feature, allowing the admin to charge for listing and launching new tokens.

Advertising Revenue

Similar to Binance, the trading platform can also generate income by displaying advertisements to its users.

Your Path to Building a Crypto Exchange Like Binance

Take the next step toward launching your own crypto exchange similar to Binance by collaborating with our experts to establish a robust business ecosystem in the cryptocurrency realm.

Token Creation

Utilizing innovative fundraising methods, you can issue tokens on the Binance blockchain, enhancing revenue generation and providing essential support for your business.

Staking Opportunities

Enable users to generate passive income by staking their digital assets within a liquidity pool, facilitated by advanced staking protocols in the cryptocurrency environment.

Decentralized Swapping

Implement a DeFi protocol that allows for the seamless exchange of tokenized assets without relying on a central authority, creating a dedicated platform for efficient trading.

Lending and Borrowing Solutions

Our lending protocol enables users to deposit funds and earn annual returns, while also offering loans for crypto trading or business ventures.

NFT Minting

Surpass traditional cryptocurrency investments by minting a diverse range of NFTs, representing unique digital assets such as sports memorabilia and real estate, thereby tapping into new market values.

Why Should You Go With Plurance's Ready-made Binance Clone Script?

As a leading cryptocurrency exchange development company, Plurance provides an extensive suite of software solutions tailored for cryptocurrency exchanges, including Binance scripts, to accommodate all major platforms in the market. We have successfully assisted numerous businesses and entrepreneurs in launching profitable user-to-admin cryptocurrency exchanges that rival Binance.

Our team consists of skilled front-end and back-end developers, quality analysts, Android developers, and project engineers, all focused on bringing your vision to life. The ready-made Binance Clone Script is meticulously designed, developed, tested, and ready for immediate deployment.

Our committed support team is here to help with any questions you may have about the Binance clone software. Utilizing a Binance clone script enables you to maintain a level of customization while accelerating development. As the cryptocurrency sector continues to evolve, the success of your Binance Clone Script development will hinge on its ability to meet customer expectations and maintain a competitive edge.

#Binance Clone Script#Binance Clone Software#White Label Binance Clone Software#Binance Exchange Clone Script

2 notes

Text

What is Generative Artificial Intelligence-All in AI tools

Generative Artificial Intelligence (AI) is a type of deep learning model that can generate text, images, computer code, and audiovisual content based on prompts.

These models are trained on a large amount of raw data, typically of the same type as the data they are designed to generate. They learn to form responses given any input, which are statistically likely to be related to that input. For example, some generative AI models are trained on large amounts of text to respond to written prompts in seemingly creative and original ways.

In essence, generative AI can respond to requests like human artists or writers, but faster. Whether the content they generate can be considered "new" or "original" is debatable, but in many cases, they can rival, or even surpass, some human creative abilities.

Popular generative AI models include ChatGPT for text generation and DALL-E for image generation. Many organizations also develop their own models.

How Does Generative AI Work?

Generative AI is a type of machine learning that relies on mathematical analysis to find relevant concepts, images, or patterns, and then uses this analysis to generate content related to the given prompts.

Generative AI depends on deep learning models, which use a computational architecture called neural networks. Neural networks consist of multiple nodes that pass data between them, similar to how the human brain transmits data through neurons. Neural networks can perform complex and intricate tasks.

To process large blocks of text and context, modern generative AI models use a special type of neural network called a Transformer. They use a self-attention mechanism to detect how elements in a sequence are related.
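As a rough illustration of the self-attention idea mentioned above, here is a minimal pure-Python sketch of scaled dot-product attention. It is a simplification: real Transformers first project each token into queries, keys, and values with learned weight matrices, which this sketch skips, and the function names are made up for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with identity projections.

    `x` is a list of token vectors of equal length. The learned
    query/key/value projections of a real model are omitted here
    (an assumption made for brevity).
    """
    d = len(x[0])
    out, weights = [], []
    for q in x:
        # How strongly this token relates to every token in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # New representation: weighted average of all token vectors.
        out.append([sum(w[j] * x[j][i] for j in range(len(x))) for i in range(d)])
    return out, weights
```

Each row of `weights` sums to 1 and says how much one token "attends" to every other token in the sequence.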

Training Data

Generative AI models require a large amount of data to perform well. For example, large language models like ChatGPT are trained on millions of documents. This data is stored in vector databases, where data points are stored as vectors, allowing the model to associate and understand the context of words, images, sounds, or any other type of content.

Once a generative AI model reaches a certain level of fine-tuning, it does not need as much data to generate results. For example, a speech-generating AI model may be trained on thousands of hours of speech recordings but may only need a few seconds of sample recordings to realistically mimic someone's voice.

Advantages and Disadvantages of Generative AI

Generative AI models have many potential advantages, including helping content creators brainstorm ideas, providing better chatbots, enhancing research, improving search results, and providing entertainment.

However, generative AI also has its drawbacks, such as hallucinations and other inaccuracies, data leaks, unintentional plagiarism or misuse of intellectual property, malicious response manipulation, and biases.

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is a type of generative AI model that handles language and can generate text, including human speech and programming languages. Popular LLMs include ChatGPT, Llama, Bard, Copilot, and Bing Chat.

What is an AI Image Generator?

An AI image generator works similarly to LLMs but focuses on generating images instead of text. DALL-E and Midjourney are examples of popular AI image generators.

Does Cloudflare Support Generative AI Development?

Cloudflare allows developers and businesses to build their own generative AI models and provides tools and platform support for this purpose. Its services, Vectorize and Cloudflare Workers AI, help developers generate and store embeddings on the global network and run generative AI tasks on a global GPU network.

2 notes

Text

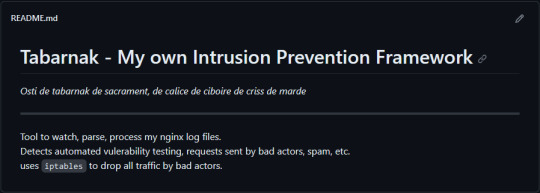

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. While I was going home by train, I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check the logs of nginx, the only service that is indirectly available to the public (more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from the aforementioned source and redirected the remaining traffic to a backup NextDNS instance that I had set up previously with the same overrides and custom records as my own DNS, so that the service wouldn't have any downtime and my server could cool down. I stopped the DNS service on my server at home and then used the remaining train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions for this problem: whether to use fail2ban, whether to just add better access control, or whether to just stick with the NextDNS instance.

I ended up going with a completely different option: making a solution, that's perfectly fit for my server, myself.

My Server Structure

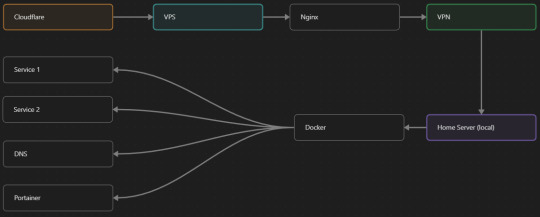

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server so that I don't have to have any public-facing ports on said home server. The VPS really only acts as the public interface for the home server, with access control and logging sprinkled throughout my configs to get more layers of security. Some services can only be interacted with through the VPN or a local connection, so not everything is actually forwarded - only what I need/want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list: I just wrote my own log() function that would call a webhook. Sadly, the rest wouldn't be as easy.

Allowing only Cloudflare traffic

This was still doable. I only needed to add a filter in my nginx config for my domain to only allow Cloudflare IP ranges and disallow the rest. I ended up doing something slightly different: I added a new default nginx config that just returns a 404 on every route and logs access to a different file, so that I could detect connection attempts made without Cloudflare and handle them in Tabarnak myself.
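The first idea, checking whether a client address belongs to Cloudflare, can be sketched in Python with the standard `ipaddress` module. This is not Tabarnak's actual code: the function name is made up, and `CLOUDFLARE_RANGES` is only a small illustrative subset. In practice the full, current list should be loaded from the ranges Cloudflare publishes.

```python
import ipaddress

# Illustrative subset only (assumption): load the full, current list
# from Cloudflare's published IP ranges instead of hardcoding it.
CLOUDFLARE_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in ("173.245.48.0/20", "104.16.0.0/13", "2606:4700::/32")
]


def is_cloudflare_ip(ip: str) -> bool:
    """Return True if `ip` falls inside one of the configured ranges."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        # Garbage in the log line: treat it as "not Cloudflare".
        return False
    # Membership is always False when the IP versions differ.
    return any(addr in net for net in CLOUDFLARE_RANGES)
```

A log parser could call `is_cloudflare_ip()` on the client address of each line in the default server's access log and hand every address that fails the check to the banning logic.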

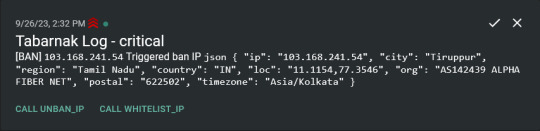

Integration with AbuseIPDB

Also not yet the hard part: just call AbuseIPDB with the parsed IP and, if the abuse confidence score is within a configured threshold, flag the IP. When that happens, I receive a notification that asks me whether to whitelist or ban the IP - I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, it gets banned unless it has been whitelisted by then.
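The flag-then-ban flow described above might look roughly like the following sketch. It deliberately leaves out the AbuseIPDB request and only takes the returned abuse confidence score (0 to 100) as input. All names and default values are hypothetical, not taken from Tabarnak.

```python
from collections import Counter

# All names and default values here are hypothetical.
SCORE_THRESHOLD = 50  # abuse confidence score that triggers a flag
MAX_FLAGS = 3         # number of flags after which an IP is banned

flags = Counter()
whitelist = set()
banned = set()


def handle_score(ip: str, score: int) -> str:
    """Record a score for `ip` and return 'ok', 'flagged' or 'banned'."""
    if ip in whitelist:
        return "ok"
    if ip in banned:
        return "banned"
    if score < SCORE_THRESHOLD:
        return "ok"
    flags[ip] += 1
    if flags[ip] >= MAX_FLAGS:
        banned.add(ip)
        return "banned"
    # This is the point where a notification would be sent.
    return "flagged"
```

Whitelisting always wins here, so an IP that was whitelisted after its first flag is never banned by later lookups.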

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where a request comes from, because all the real people who would actually connect to the DNS are in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (this is only triggered after a certain number of either flags or requests), I now need to get the location. I do this by just calling the ipinfo API and checking the supplied location. To not send too many requests, I cache results (even though ipinfo should never be called twice for the same IP anyway) and save them to a database. I made my own class that derives from collections.UserDict which, when accessed, tries to find the entry in memory; if it can't, it searches through the DB and returns the result. This works for setting, deleting, adding and checking for records. Flags, AbuseIPDB results, whitelist entries and blacklist entries also get stored in the DB to achieve persistent state even when I restart.
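A minimal sketch of such a memory-first, database-backed dictionary is shown below. The post only says "a database", so SQLite, JSON serialization, the table name `kv`, and the class name `PersistentDict` are all assumptions made for this example.

```python
import json
import sqlite3
from collections import UserDict


class PersistentDict(UserDict):
    """Dict that keeps entries in memory and mirrors them to SQLite."""

    def __init__(self, path=":memory:"):
        # Open the DB before UserDict sets up its in-memory `data` dict.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS kv (key TEXT PRIMARY KEY, value TEXT)"
        )
        super().__init__()

    def __missing__(self, key):
        # Called by UserDict.__getitem__ on a memory miss: try the DB.
        row = self.db.execute(
            "SELECT value FROM kv WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            raise KeyError(key)
        self.data[key] = json.loads(row[0])
        return self.data[key]

    def __setitem__(self, key, value):
        self.data[key] = value
        self.db.execute(
            "INSERT OR REPLACE INTO kv (key, value) VALUES (?, ?)",
            (key, json.dumps(value)),
        )
        self.db.commit()

    def __delitem__(self, key):
        # Removes the entry from memory and DB; missing keys are ignored.
        self.data.pop(key, None)
        self.db.execute("DELETE FROM kv WHERE key = ?", (key,))
        self.db.commit()

    def __contains__(self, key):
        if key in self.data:
            return True
        try:
            self[key]
        except KeyError:
            return False
        return True
```

With a file path instead of `":memory:"`, entries written in one run are found again after a restart, because a lookup that misses in memory falls through to the table.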

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't just end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I could just parse those and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, alongside whether each location is absolute (preceded by an =) or relative. Once I have the locations, I can test the requested route against the valid routes and find out whether the request was made to a valid URL (I can't just look for 404 return codes here, because some valid pages can return a 404 on purpose). I also parse the request method from the logs and match the received method against the HTTP standard request methods (which are all the methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"
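The route and method checks described above can be sketched as follows. This is a simplified guess at the approach, not the author's regular expressions: it only understands exact (`=`) and plain prefix locations, skips regex locations entirely, and all names are hypothetical.

```python
import re

# Matches "location = /path {" and "location /path {".
# Regex locations ("~", "~*") and "^~" are skipped in this sketch.
LOCATION_RE = re.compile(r"^\s*location\s+(=\s*)?(/\S*)\s*\{", re.MULTILINE)

STANDARD_METHODS = {
    "GET", "HEAD", "POST", "PUT", "DELETE",
    "CONNECT", "OPTIONS", "TRACE", "PATCH",
}


def parse_locations(config: str):
    """Return (path, is_exact) pairs for each location block found."""
    return [
        (m.group(2), m.group(1) is not None)
        for m in LOCATION_RE.finditer(config)
    ]


def is_valid_request(method: str, route: str, locations) -> bool:
    """Check the method, then match the route against known locations."""
    if method not in STANDARD_METHODS:
        return False
    path = route.split("?", 1)[0]  # ignore the query string
    for loc, is_exact in locations:
        if is_exact and path == loc:
            return True
        if not is_exact and path.startswith(loc):
            return True
    return False
```

A request like the one above fails the method check right away, since its "method" field is not an HTTP verb at all. Note that a catch-all `location /` block would make every path valid under prefix matching, so this check is only useful for configs that list specific routes.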

I probably overcomplicated this - by a lot - but I can't go back in time to change what I did.

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread, which watches a FIFO file that is created on startup. I can append commands to the FIFO file, which are mapped to the functions they are supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP. Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that appends the commands to the file on the VPS. That also means that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user-friendly - even I don't like to curl and sign my requests manually. So I integrated logging with my self-hosted instance of https://ntfy.sh and added action buttons that would send the request for me. All of this just because I refused to use sockets.
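The FIFO-plus-side-thread design might be sketched like this. The command names, the `command ip` line format, and the in-memory sets standing in for real ban and whitelist actions are all assumptions made for the example. `os.mkfifo` is POSIX-only.

```python
import os
import threading

# Hypothetical actions; real ones would update firewall rules and the DB.
banned, whitelisted = set(), set()

COMMANDS = {
    "ban": banned.add,
    "unban": banned.discard,
    "whitelist": whitelisted.add,
}


def dispatch(line: str) -> bool:
    """Run one 'command ip' line; return False if it is not understood."""
    parts = line.split()
    if len(parts) != 2 or parts[0] not in COMMANDS:
        return False
    COMMANDS[parts[0]](parts[1])
    return True


def watch_fifo(path: str) -> None:
    """Block on the FIFO and dispatch every line written to it."""
    if not os.path.exists(path):
        os.mkfifo(path)  # POSIX only
    while True:
        # open() blocks until a writer connects; the loop reopens the
        # FIFO after each writer closes its end (EOF).
        with open(path) as fifo:
            for line in fifo:
                dispatch(line)


def start_watcher(path: str) -> threading.Thread:
    """Start the FIFO reader as a daemon side thread."""
    thread = threading.Thread(target=watch_fifo, args=(path,), daemon=True)
    thread.start()
    return thread
```

With the watcher running, a shell command such as `echo "ban 203.0.113.9" > /path/to/fifo` would reach `dispatch()` while the main thread keeps parsing logs.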

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I can finally breathe again. I may have overcomplicated this, but I don't mind. This was a really fun experience to write something new and learn more about log parsing and processing. Tabarnak probably won't last forever and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing this. Of course I could have just used fail2ban, but I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum so just imagine that I added cool stuff) and I never would've learned what I actually did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want it to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)

10 notes

Text

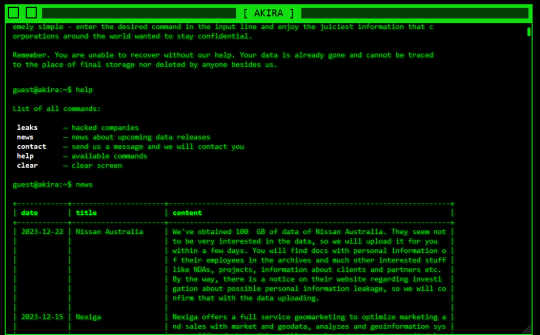

Akira Ransomware Breaches Over 250 Global Entities, Rakes in $42 Million

A joint cybersecurity advisory issued by CISA, FBI, Europol, and the Dutch NCSC-NL has uncovered the staggering scale of the Akira ransomware campaign. Since early 2023, the nefarious Akira operators have compromised more than 250 organizations worldwide, extorting a whopping $42 million in ransom payments.

Akira's Prolific Targeting Across Industries

The Akira ransomware group has been actively infiltrating entities across diverse sectors, including education, finance, and real estate. Staying true to the double extortion playbook, the threat actors exfiltrate sensitive data before encrypting the victims' systems, amplifying the pressure for a ransom payment. Early Akira versions, coded in C++, appended the .akira extension to encrypted files. However, from August 2023 onward, certain attacks deployed Megazord, a Rust-based variant that encrypts data with the .powerranges extension. Investigations reveal the perpetrators interchangeably using both Akira and Megazord, including the updated Akira_v2.

Initial Access and Privilege Escalation

The cybercriminals exploit various entry points, such as unprotected VPNs, Cisco vulnerabilities (CVE-2020-3259 and CVE-2023-20269), RDP exposures, spear phishing, and stolen credentials. Post-compromise, they create rogue domain accounts, like itadm, to elevate privileges, leveraging techniques like Kerberoasting and credential scraping with Mimikatz and LaZagne.

Disabling Security Controls and Lateral Movement

To evade detection and facilitate lateral movement, Akira operators disable security software using tools like PowerTool to terminate antivirus processes and exploit vulnerabilities. They employ various tools for reconnaissance, data exfiltration (FileZilla, WinRAR, WinSCP, RClone), and C2 communication (AnyDesk, Cloudflare Tunnel, RustDesk, Ngrok).

Sophisticated Encryption

The advisory highlights Akira's "sophisticated hybrid encryption scheme" combining ChaCha20 stream cipher with RSA public-key cryptography for efficient and secure encryption tailored to file types and sizes. As the Akira ransomware campaign continues to wreak havoc, the joint advisory provides crucial indicators of compromise (IoCs) to help organizations bolster their defenses against this formidable threat.

2 notes


Text

Fragility of Internet

The fragility of internet infrastructure refers to its vulnerability to disruptions caused by technical failures, cyberattacks, natural disasters, and geopolitical conflicts. Despite being designed for resilience, the internet’s decentralized nature also introduces critical points of failure that can have widespread consequences.

Key Vulnerabilities in Internet Infrastructure

Undersea Cables

Over 95% of global internet traffic travels through undersea fiber-optic cables.

Vulnerable to ship anchors, earthquakes, and sabotage (the 2022 Nord Stream pipeline attacks raised concerns that cables could be targeted in the same way).

Incidents like the 2008 Mediterranean cable cuts disrupted internet access in the Middle East and South Asia.

DNS & Routing Weaknesses

The Domain Name System (DNS) becomes a single point of failure when many sites depend on the same provider: attacks (e.g., DDoS on Dyn in 2016) can take down major sites.

BGP hijacking (e.g., 2021 Russian ISP rerouted Western traffic) can cause outages or surveillance risks.

Cloud & CDN Centralization

A few providers (AWS, Google Cloud, Cloudflare) host a massive share of internet services.

Outages (e.g., AWS 2021, Fastly 2021) can cripple thousands of websites simultaneously.

Cyberattacks & State-Sponsored Threats

DDoS attacks (e.g., 2022 attacks on Ukrainian banks during war).

Ransomware targeting IT and managed service providers (e.g., 2021 Kaseya attack).

SolarWinds-style supply chain compromises threaten critical infrastructure.

Physical Infrastructure Risks

Data centers face risks from power outages, natural disasters (hurricanes, floods), and war (e.g., Ukraine’s internet resilience under Russian attacks).

Satellite internet (Starlink) is an alternative but has limited bandwidth and geopolitical dependencies.

Government & Corporate Control

Internet shutdowns (e.g., Iran, Myanmar) show how governments can cut access.

Tech monopolies controlling key services (e.g., Google, Meta) create systemic risks.

Recent Examples of Internet Fragility

2024: Red Sea Cable Damage – Several undersea cables were cut during the Houthi attacks on Red Sea shipping, disrupting regional connectivity.

2022: Russia’s Cyberwar in Ukraine – Attacks on Viasat’s KA-SAT satellite network and Ukrainian ISPs.

2021: Facebook’s BGP Misconfiguration – Took down WhatsApp, Instagram, and Oculus for hours.

Possible Solutions for Resilience

✅ Decentralization – More mesh networks, peer-to-peer systems (e.g., IPFS).

✅ Better Redundancy – Diversified cloud providers, backup satellite links.

✅ Stronger Cyber Defenses – Zero-trust architectures, AI-driven threat detection.

✅ International Cooperation – Protecting undersea cables, anti-censorship laws.

Conclusion

The internet remains surprisingly fragile despite its global importance. Increasing reliance on digital infrastructure means that failures can disrupt economies, governments, and daily life. Strengthening resilience requires technological, political, and economic efforts to mitigate risks.

0 notes

Link

#CriticalInfrastructureProtection#EDRLimitations#healthcarecybersecurity#HypervisorSecurity#RansomwareMitigation#VirtualPatching#VMwareESXi#Zero-DayVulnerabilities

0 notes

Text

AI Labyrinth: Cloudflare Defense Against Rogue AI Crawlers

AI Labyrinth is a new mitigation method that uses AI-generated content to slow down, confuse, and waste the resources of AI crawlers and other bots that ignore “no crawl” directives. When you opt in, Cloudflare automatically deploys an AI-generated set of linked pages whenever it detects inappropriate bot activity, so you don't need to create custom rules.

AI Labyrinth is available to all users, including free ones.

Defending using Generative AI

The rise of AI-generated material is striking: it reportedly accounted for four of the top 20 Facebook posts last autumn, and Medium estimates that 47% of the content on its platform is AI-generated. Like any new tool, it has both good and malicious uses.

New crawlers that AI companies use to collect data for model training have also multiplied. AI crawlers send about 50 billion requests to Cloudflare every day, or roughly 1% of all the web requests it handles. Cloudflare has several tools to detect and block unauthorised AI crawling, but it has found that blocking malicious bots can alert the attacker, causing them to change tactics and starting a never-ending arms race. It therefore wanted a new way to thwart unwanted bots without letting them know they had been thwarted.

To achieve this, Cloudflare turned to AI-generated content, an offensive tool in the bot creator's toolset that it had not seen used defensively. When it detects unauthorised crawling, rather than blocking the request it links to a series of AI-generated pages that are convincing enough to entice a crawler to traverse them.

A further benefit of AI Labyrinth is that it acts as a next-generation honeypot. A real human wouldn't navigate deep into a maze of AI-generated nonsense, so any visitor who does is very likely a bot. This gives Cloudflare a new way to detect and fingerprint harmful bots and add them to its list of known bad actors.

How the labyrinth was built

AI crawlers that follow these links waste computing resources processing irrelevant content instead of extracting data from your website. This makes it harder for them to gather data for model training.

Cloudflare used Workers AI with an open source model to generate unique HTML pages on diverse topics that read as if a human wrote them. Rather than creating this content on demand, which could hurt performance, a pre-generation pipeline sanitises the content to eliminate XSS vulnerabilities and stores it in R2 for faster retrieval.

Cloudflare found that generating a diverse set of topics first and then creating content for each topic yielded more varied and convincing results. The generated material is real and grounded in scientific fact, but it is neither proprietary to nor relevant to the site being crawled. This avoids producing inaccurate content that would spread misinformation online.

This pre-generated content is integrated as hidden links on existing pages through a custom HTML transformation process, without changing the page's content or structure. Every generated page includes meta directives that prevent search engine indexing and so protect SEO.

The links are kept invisible to human visitors through carefully chosen attributes and styling. To further reduce the impact on regular visitors, Cloudflare shows these links only to suspected AI scrapers, while verified crawlers and legitimate users browse as normal.
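
The hidden-link and meta-directive ideas above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Cloudflare's actual transformation: the `/labyrinth/...` paths, the function names, and the exact attributes used to hide the link are all hypothetical.

```python
from html import escape


def decoy_page(title: str, body_text: str, next_path: str) -> str:
    """Build a decoy page that asks search engines not to index or follow it."""
    return (
        "<!doctype html><html><head>"
        # Assumed directive: keeps well-behaved search engines away from decoys.
        '<meta name="robots" content="noindex, nofollow">'
        f"<title>{escape(title)}</title></head><body>"
        f"<p>{escape(body_text)}</p>"
        # Each decoy links to another decoy, forming the maze.
        f'<a href="{escape(next_path, quote=True)}">Related reading</a>'
        "</body></html>"
    )


def inject_hidden_link(page_html: str, decoy_path: str, suspected_scraper: bool) -> str:
    """Add an invisible link just before </body>, but only for suspected scrapers."""
    if not suspected_scraper:
        return page_html  # regular visitors get the page unchanged
    link = (
        f'<a href="{escape(decoy_path, quote=True)}" '
        'style="display:none" aria-hidden="true" tabindex="-1" rel="nofollow"></a>'
    )
    head, sep, tail = page_html.rpartition("</body>")
    if not sep:  # no closing body tag found: leave the page untouched
        return page_html
    return head + link + sep + tail
```

Calling `inject_hidden_link(page, "/labyrinth/topic-1", suspected_scraper=True)` returns the same page with one extra invisible anchor; how a request is classified as a suspected scraper is outside the scope of this sketch.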

The approach is effective because of its role in Cloudflare's continuously improving bot detection system. When these links are followed, it is almost certainly automated crawler activity, because human visitors and normal browsers would never see or click them. This provides a powerful identification mechanism and produces valuable data for Cloudflare's machine learning models.

By watching for crawlers that follow these hidden paths, Cloudflare can identify new bot patterns and signatures. This proactive approach helps it stay ahead of AI scrapers and improve its detection without disrupting the normal browsing experience.
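
As a rough illustration of the detection step, the sketch below flags any client that requests a decoy URL. It assumes access logs have already been parsed into `(client_id, path)` pairs and that decoys live under a hypothetical `/labyrinth/` prefix; a real system would feed this signal into further models rather than act on it directly.

```python
from collections import defaultdict

# Hypothetical prefix under which decoy pages are served.
DECOY_PREFIX = "/labyrinth/"


def flag_bots(requests, min_hits=1):
    """Return the client IDs that requested at least `min_hits` decoy URLs.

    `requests` is an iterable of (client_id, path) pairs, for example
    parsed from web server access logs.
    """
    hits = defaultdict(int)
    for client_id, path in requests:
        if path.startswith(DECOY_PREFIX):
            hits[client_id] += 1
    return {client for client, count in hits.items() if count >= min_hits}
```

Raising `min_hits` trades recall for precision: a client that follows several decoy links in a row is stronger evidence of automated crawling than a single stray request.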

Because the solution is built on Cloudflare's developer platform, it serves convincing decoy content quickly and at consistent quality without affecting your website's user experience or performance.

How AI Labyrinth prevents crawlers

A single toggle in the Cloudflare dashboard activates AI Labyrinth. Go to the bot management section of your zone and switch the AI Labyrinth setting on.

After activation, the AI Labyrinth starts working without setup.

Honeypots made by AI

AI Labyrinth's main benefit is to confuse and distract bots. A secondary benefit is that it serves as a next-generation honeypot. In this context, a honeypot is an invisible link that a human visitor cannot see, but that a bot parsing the HTML will see and follow, revealing itself as a bot.

Honeypots have been used to catch hackers since at least the Cuckoo's Egg incident of late 1986. In 2004, before Cloudflare was launched, its founders created Project Honey Pot to make it easy to deploy free email honeypots and receive lists of crawler IPs in exchange for contributing to the database. But because bots now actively look for honeypot techniques such as hidden links, this approach has become less effective.

Instead of simply adding hidden links, AI Labyrinth will eventually build whole networks of linked URLs that are more realistic and harder for automated programs to spot. No human would spend time on the content of these pages, but AI bots are built to crawl deeply and harvest as much data as possible. Bots visiting these URLs are almost certainly not humans, and this information is recorded and fed automatically into machine learning models to identify bots more precisely. Each scraping attempt therefore helps protect all Cloudflare users, creating a positive feedback loop.

What's next?

This use of generative AI to stop bots is only a first iteration. Although the generated content reads as convincingly human, it won't match the structure of every website. Cloudflare plans to keep making these links harder to spot and to fit them more seamlessly into the design of the site they are embedded in. You can help by opting in now.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#AI Labyrinth#Generative AI#AI crawlers#labyrinth#AI-generated honeypots

0 notes

Text

Bigo Live Clone Development: How to Build a Secure & Scalable Platform

Introduction

A Bigo Live clone is a live streaming app that allows users to broadcast videos, interact with viewers, and monetize content. The demand for live streaming platforms has grown rapidly, making it essential to build a secure and scalable solution. This guide explains the key steps to develop a Bigo Live clone that ensures smooth performance, user engagement, and safety.

Key Features of a Bigo Live Clone

1. User Registration & Profiles

Users sign up via email, phone, or social media.

Profiles display followers, streams, and achievements.

Verification badges for popular streamers.

2. Live Streaming

Real-time video broadcasting with low latency.

Support for HD and ultra-HD quality.

Screen sharing and front/back camera switching.

3. Virtual Gifts & Monetization

Viewers send virtual gifts to streamers.

In-app purchases for coins and premium gifts.

Revenue sharing between streamers and the platform.

4. Chat & Interaction

Live comments and emojis during streams.

Private messaging between users.

Voice chat for real-time discussions.

5. Multi-Guest Streaming

Multiple users join a single live session.

Useful for interviews, collaborations, and group discussions.

6. Moderation Tools

Admins ban users for rule violations.

AI detects inappropriate content.

User reporting system for abusive behavior.

7. Notifications

Alerts for new followers, gifts, and streams.

Push notifications to keep users engaged.

8. Analytics Dashboard

Streamers track viewer count and earnings.

Insights on peak streaming times and audience demographics.

Steps to Develop a Bigo Live Clone

1. Choose the Right Tech Stack

Frontend: React Native (cross-platform), Flutter (for fast UI)

Backend: Node.js (scalability), Django (security)

Database: MongoDB (flexibility), Firebase (real-time updates)

Streaming Protocol: RTMP (stream ingest from broadcasters), WebRTC (low-latency, peer-to-peer)

Cloud Storage: AWS S3 (scalable storage), Google Cloud (global reach)

2. Design the UI/UX

Keep the interface simple and intuitive.

Use high-quality graphics for buttons and icons.

Optimize for both mobile and desktop users.

3. Develop Core Features

Implement secure user authentication (OAuth, JWT).

Add live streaming with minimal buffering.

Integrate payment gateways (Stripe, PayPal) for virtual gifts.

4. Ensure Security

Use HTTPS for encrypted data transfer.

Apply two-factor authentication (2FA) for logins.

Store passwords with bcrypt hashing.
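
To make the password-storage step concrete, here is a minimal sketch of salted, slow password hashing. bcrypt itself needs a third-party package, so this example swaps in PBKDF2-HMAC-SHA256 from Python's standard library as a stand-in; the iteration count and the salt-plus-digest storage layout are assumptions for illustration.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor; tune it for your hardware
SALT_BYTES = 16


def hash_password(password: str) -> bytes:
    """Return salt + derived key, suitable for storing in the user table."""
    salt = os.urandom(SALT_BYTES)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt + digest


def verify_password(password: str, stored: bytes) -> bool:
    """Recompute the key with the stored salt and compare in constant time."""
    salt, expected = stored[:SALT_BYTES], stored[SALT_BYTES:]
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(digest, expected)
```

The same shape applies with bcrypt: never store the plain password, use a per-user salt, and compare hashes rather than passwords at login.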

5. Test the Platform

Check for bugs in streaming and payments.

Test on different devices (iOS, Android) and network speeds.

Conduct load testing for high-traffic scenarios.

6. Launch & Maintain

Release the app on Google Play and the Apple App Store.

Monitor performance and fix bugs quickly.

Update regularly with new features and security patches.

Security Measures for a Bigo Live Clone

1. Data Encryption

Encrypt user data in transit (SSL/TLS) and at rest (AES-256).

2. Secure Authentication

Use OAuth for social logins (Google, Facebook).

Enforce strong password policies (minimum 8 characters, special symbols).

3. Anti-Fraud Systems

Detect fake accounts with phone/email verification.

Block suspicious transactions with AI-based fraud detection.

4. Content Moderation

AI filters offensive content (hate speech, nudity).

Users report abusive behavior with instant admin review.

Scalability Tips for a Bigo Live Clone

1. Use Load Balancers

Distribute traffic across multiple servers (AWS ELB, Nginx).

2. Optimize Database Queries

Index frequently accessed data for faster retrieval.

Use Redis for caching frequently used data.
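
The caching tip usually takes the form of a cache-aside read: check the cache, fall back to the database on a miss, then store the result with an expiry. The sketch below uses a small in-memory class as a stand-in for Redis so that it runs without a server; `TTLCache`, `get_profile`, and the `load_from_db` callback are hypothetical names.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache such as Redis (get/set with expiry)."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:  # entry is stale: drop it
            del self._store[key]
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._store[key] = (value, time.monotonic() + ttl_seconds)


def get_profile(user_id, cache, load_from_db, ttl_seconds=60):
    """Cache-aside read: try the cache, then the database, then fill the cache."""
    key = f"profile:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    profile = load_from_db(user_id)  # slow path, only taken on a cache miss
    cache.set(key, profile, ttl_seconds)
    return profile
```

A short time-to-live keeps frequently read data such as streamer profiles fast while limiting how long stale values can be served.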

3. Auto-Scaling Cloud Servers

Automatically add servers during high traffic (AWS Auto Scaling).

4. CDN for Faster Streaming

Reduce latency with global content delivery (Cloudflare, Akamai).

Conclusion

Building a Bigo Live clone requires a strong tech stack, security measures, and scalability planning. By following these steps, you can create a platform that handles high traffic, engages users, and keeps data safe.

For professional Bigo Live clone development, consider AIS Technolabs. They specialize in secure and scalable live streaming solutions.

Contact us for a detailed consultation.

FAQs

1. What is a Bigo Live clone?

A Bigo Live clone is a live streaming app similar to Bigo Live, allowing users to broadcast and monetize content.

2. How long does it take to develop a Bigo Live clone?

Development time depends on features, but it typically takes 4-6 months.

3. Can I add custom features to my Bigo Live clone?

Yes, you can include unique features like AR filters or advanced monetization options.

4. How do I ensure my Bigo Live clone is secure?

Use encryption, secure authentication, and AI-based moderation.

5. Which cloud service is best for a Bigo Live clone?

AWS and Google Cloud offer strong scalability for live streaming apps.

0 notes

Text

Cloud Security Market Developments: Industry Insights and Growth Forecast 2032

The Cloud Security Market was valued at USD 36.9 billion in 2023 and is expected to reach USD 112.4 billion by 2032, growing at a CAGR of 13.20% from 2024 to 2032.
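
The relationship between these three figures can be checked with the standard compound annual growth rate formula. The sketch below assumes nine compounding periods (2023 as the base year, 2032 as the end year); the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and period."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Figures from the report summary: USD 36.9 billion (2023), USD 112.4 billion (2032).
    print(f"Implied CAGR: {cagr(36.9, 112.4, 9):.2%}")
    print(f"Projected 2032 value at 13.2%: {project(36.9, 0.132, 9):.1f}")
```

Under that nine-period assumption the implied rate comes out close to the quoted 13.20%, so the stated start value, end value, and growth rate are consistent with one another.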

The cloud security market is growing rapidly as businesses worldwide move their operations to the cloud. With the rise in cyber threats and data breaches, organizations are prioritizing security solutions to protect sensitive information. The demand for advanced security frameworks and compliance-driven solutions is accelerating market expansion.

The market continues to evolve as enterprises adopt multi-cloud and hybrid cloud environments. Companies are investing in AI-driven threat detection, zero-trust security models, and encryption technologies to safeguard data. As cyber risks grow, cloud security remains a top priority for businesses, governments, and cloud service providers alike.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3796

Key Market Players:

Amazon Web Services (AWS) - AWS Shield

Microsoft - Microsoft Defender for Cloud

Google Cloud Platform - Google Cloud Armor

IBM - IBM Cloud Security

Palo Alto Networks - Prisma Cloud

Cisco - Cisco Cloudlock

Check Point Software Technologies - CloudGuard

Fortinet - FortiGate Cloud

McAfee - McAfee MVISION Cloud

NortonLifeLock - Norton Cloud Backup

Zscaler - Zscaler Internet Access

CrowdStrike - CrowdStrike Falcon

Cloudflare - Cloudflare Security Solutions

Splunk - Splunk Cloud

Proofpoint - Proofpoint Email Protection

Trend Micro - Trend Micro Cloud One

SonicWall - SonicWall Cloud App Security

CyberArk - CyberArk Cloud Entitlement Manager

Barracuda Networks - Barracuda Cloud Security Guardian

Qualys - Qualys Cloud Platform

Market Trends Driving Growth

1. Rise of Zero-Trust Security Models

Organizations are implementing zero-trust frameworks to ensure strict authentication and access control, reducing the risk of unauthorized access.

2. AI and Machine Learning in Threat Detection

Cloud security providers are integrating AI-driven analytics to detect, predict, and prevent cyber threats in real-time.

3. Compliance and Regulatory Requirements

Stringent data protection laws, such as GDPR and CCPA, are pushing businesses to adopt cloud security solutions for compliance.

4. Increasing Multi-Cloud Adoption

Companies are using multiple cloud providers, necessitating advanced security solutions to manage risks across different cloud environments.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3796

Market Segmentation:

By Component

Solution

Cloud Access Security Broker (CASB)

Cloud Detection and Response (CDR)

Cloud Security Posture Management (CSPM)

Cloud Infrastructure Entitlement Management (CIEM)

Cloud Workload Protection Platform (CWPP)

Services

Professional Services

Managed Services

By Deployment

Private

Hybrid

Public

By Organization Size

Large Enterprises

Small & Medium Enterprises

By End Use

BFSI

Retail & E-commerce

IT & Telecom

Healthcare

Manufacturing

Government

Aerospace & Defense

Energy & Utilities

Transportation & Logistics

Market Analysis and Current Landscape

Rising Cybersecurity Threats: Growing cyberattacks, ransomware incidents, and data breaches are driving the need for robust cloud security solutions.

Adoption of Cloud-Based Applications: As enterprises migrate to cloud platforms like AWS, Azure, and Google Cloud, demand for security services is increasing.

Expansion of IoT and Edge Computing: The rise in connected devices is creating new vulnerabilities, requiring enhanced cloud security measures.

Government Investments in Cybersecurity: Public sector organizations are strengthening their cloud security frameworks to protect critical infrastructure and citizen data.

Despite this rapid growth, challenges such as complex security architectures, lack of skilled professionals, and evolving attack strategies persist. However, innovations in AI-driven security solutions and automated threat management are helping businesses address these concerns.

Future Prospects: What Lies Ahead?

1. Growth in Security-as-a-Service (SECaaS)

Cloud-based security services will become the norm, offering scalable and cost-effective protection for businesses of all sizes.

2. Advanced Threat Intelligence Solutions

Organizations will increasingly rely on AI-powered threat intelligence to stay ahead of cybercriminals and mitigate risks proactively.

3. Expansion of Quantum-Safe Security

With the advancement of quantum computing, encryption technologies will evolve to ensure data remains secure against future cyber threats.

4. Integration of Cloud Security with DevSecOps

Security will be embedded into cloud application development, ensuring vulnerabilities are addressed at every stage of the software lifecycle.

Access Complete Report: https://www.snsinsider.com/reports/cloud-security-market-3796

Conclusion

The Cloud Security Market is poised for exponential growth as digital transformation accelerates. Companies must invest in cutting-edge security frameworks to protect their data, applications, and infrastructure. As cyber threats become more sophisticated, cloud security will continue to be a critical pillar of business resilience, driving innovation and shaping the future of cybersecurity.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Cloud Security Market#Cloud Security Market Scope#Cloud Security Market Growth#Cloud Security Market Trends

0 notes

Text

Cloudflare is luring web-scraping bots into an ‘AI Labyrinth’

Cloudflare, one of the biggest internet infrastructure companies in the world, has announced AI Labyrinth, a new tool to fight web-crawling bots that scrape sites for AI training data without permission. The company says in a blog post that when it detects “inappropriate bot behavior,” the free, opt-in tool lures crawlers down a path of links to AI-generated decoy pages that “slow down,…


0 notes

Text

How to Ensure 24/7 Uptime in Cryptocurrency Exchange Development

Cryptocurrency exchanges operate in a high-stakes environment where even a few minutes of downtime can result in significant financial losses, security vulnerabilities, and loss of customer trust. Ensuring 24/7 uptime in cryptocurrency exchange development requires a combination of advanced infrastructure, strategic planning, security measures, and continuous monitoring. This guide explores the best practices and technologies to achieve maximum uptime and ensure seamless operations.

1. Choosing the Right Infrastructure

The backbone of any high-availability exchange is its infrastructure. Consider the following:

1.1 Cloud-Based Solutions vs. On-Premises Hosting

Cloud-based solutions: Scalable, reliable, and backed by industry leaders such as AWS, Google Cloud, and Microsoft Azure.

On-premises hosting: Offers more control but requires extensive maintenance and security protocols.

1.2 High Availability Architecture

Load balancing: Distributes traffic across multiple servers to prevent overload.

Redundant servers: Ensures backup servers take over in case of failure.

Content Delivery Networks (CDNs): Improve response times by caching content globally.

2. Implementing Failover Mechanisms

2.1 Database Redundancy

Use a primary-replica architecture to keep near-real-time copies of the data on standby servers.

Implement automatic failover mechanisms for instant switching in case of database failure.

2.2 Active-Passive and Active-Active Systems

Active-Passive: One server remains on standby and takes over during failures.

Active-Active: Multiple servers actively handle traffic, so the loss of one server does not interrupt service.
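
The difference between the two modes can be sketched as two small routing functions. This is a simplified illustration, assuming a caller-supplied `is_healthy` check and a fixed server list; production failover also has to deal with state replication and split-brain, which are not modelled here.

```python
def pick_active_passive(servers, is_healthy):
    """Active-passive: use the first healthy server in priority order.

    Standby servers only receive traffic when every server ahead of them is down.
    Returns None if no server is healthy.
    """
    for server in servers:
        if is_healthy(server):
            return server
    return None


def active_active(servers, is_healthy):
    """Active-active: round-robin over all servers, skipping unhealthy ones.

    This is a generator; each next() yields the server for the next request,
    or None if every server is currently down.
    """
    position = 0
    count = len(servers)
    while True:
        for offset in range(count):
            candidate = servers[(position + offset) % count]
            if is_healthy(candidate):
                position = (position + offset + 1) % count
                yield candidate
                break
        else:
            yield None
```

In the active-passive case a failure shifts all traffic to the standby, while in the active-active case the remaining servers simply absorb the failed server's share.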

3. Ensuring Network Resilience

3.1 Distributed Denial-of-Service (DDoS) Protection

Implement DDoS mitigation services like Cloudflare or Akamai.

Use rate limiting and traffic filtering to prevent malicious attacks.
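
Rate limiting is often implemented with a token bucket, which allows short bursts while capping the sustained request rate. The sketch below is one common variant, not the method of any particular DDoS service; the class name and the injectable `clock` parameter are choices made for this example.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True and spend `cost` tokens if the request may proceed."""
        now = self.clock()
        # Refill in proportion to the time elapsed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In practice an exchange would keep one bucket per client key (for example per API key or IP address) and reject or delay requests for which `allow()` returns False.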

3.2 Multiple Data Centers

Distribute workload across geographically dispersed data centers.

Use automated geo-routing to shift traffic in case of regional outages.

4. Continuous Monitoring and Automated Alerts

4.1 Real-Time Monitoring Tools

Use Nagios, Zabbix, or Prometheus to monitor server health.

Implement AI-driven anomaly detection for proactive issue resolution.
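
Anomaly detection does not have to start with a machine learning model. As a much simpler stand-in for the AI-driven detection mentioned above, the sketch below flags a metric sample, such as request latency, that lies far from its recent average; the three-standard-deviation threshold is an assumed default.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold
```

A monitoring loop could call this on each new sample from a tool such as Prometheus and raise an alert when it returns True, with the history kept as a sliding window of recent values.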

4.2 Automated Incident Response

Develop automated scripts to resolve common issues.

Use chatbots and AI-powered alerts for instant notifications.

5. Regular Maintenance and Software Updates

5.1 Scheduled Maintenance Windows

Plan updates during non-peak hours.

Use rolling updates to avoid complete downtime.

5.2 Security Patching

Implement automated patch management to fix vulnerabilities without disrupting service.

6. Advanced Security Measures

6.1 Multi-Layer Authentication

Use 2FA (Two-Factor Authentication) for secure logins.

Implement hardware security modules (HSMs) for cryptographic security.

6.2 Cold and Hot Wallet Management

Use cold wallets for long-term storage and hot wallets for active trading.

Implement multi-signature authorization for withdrawals.

7. Scalability Planning

7.1 Vertical vs. Horizontal Scaling

Vertical Scaling: Upgrading individual server components (RAM, CPU).

Horizontal Scaling: Adding more servers to distribute load.

7.2 Microservices Architecture

Decouple services for independent scaling.

Use containerization (Docker, Kubernetes) for efficient resource management.

8. Compliance and Regulatory Requirements

8.1 Adherence to Global Standards

Ensure compliance with AML (Anti-Money Laundering) and KYC (Know Your Customer) policies.

Follow GDPR and PCI DSS standards for data protection.

8.2 Audit and Penetration Testing

Conduct regular security audits and penetration testing to identify vulnerabilities.

Implement bug bounty programs to involve ethical hackers in security improvements.

Conclusion

Achieving 24/7 uptime in cryptocurrency exchange development requires a comprehensive approach involving robust infrastructure, failover mechanisms, continuous monitoring, and security best practices. By integrating these strategies, exchanges can ensure reliability, security, and customer trust in a highly competitive and fast-evolving market.

0 notes