#cognos software solutions

Cognos Business Intelligence is a web-based, integrated business intelligence suite from IBM. The tool helps businesses make data-driven decisions and achieve better-performing outcomes through rich, user-friendly interfaces. IBM, a leading company in the technology field, delivers these capabilities through Cognos Analytics, which offers a full range of business intelligence capabilities. Pattem Digital is a top OPD company and an IBM business partner that provides intelligence solutions for all areas of a company's business.

Self-Service Analytics Market Share Analysis 2032: Size, Scope, Growth Trends & Forecast

The Self-Service Analytics Market was valued at USD 4.5 billion in 2023 and is expected to reach USD 19.75 billion by 2032, growing at a CAGR of 17.90% over the forecast period 2024-2032.
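The growth rate can be checked against the two market-size figures. The sketch below is a minimal illustration and assumes 2023 is the base year, which gives nine compounding periods to 2032; the function name `cagr` is chosen here for illustration and does not come from the report. Under that assumption the implied rate comes out close to the quoted 17.90%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 4.5 billion (2023) and USD 19.75 billion (2032).
# Assumption: 2023 is the base year, so growth compounds over nine periods.
rate = cagr(4.5, 19.75, years=9)
print(f"Implied CAGR: {rate:.2%}")
```

The small gap between the implied and quoted rates is probably down to rounding in the published figures.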

The self-service analytics market is witnessing accelerated growth as organizations across industries embrace data-driven decision-making. As the volume of data continues to rise, enterprises are increasingly shifting towards tools that empower non-technical users to analyze and interpret data without the need for specialized IT intervention. Self-service analytics enables business users to access, visualize, and explore data insights independently, which enhances productivity, speeds up decision-making, and fosters a culture of data democratization within organizations.

The report highlights the increasing demand for intuitive analytical platforms that integrate seamlessly with existing business applications. With businesses becoming more agile, there is a growing need for real-time analytics, customizable dashboards, and interactive reporting tools. Enterprises are investing in platforms that offer ease of use, scalability, and strong data governance features to ensure security and compliance. This shift is contributing to substantial market expansion and innovation within the analytics industry.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/5511

Key Market Players:

Tableau (Tableau Desktop, Tableau Server)

Microsoft (Power BI, Azure Synapse Analytics)

Qlik (Qlik Sense, QlikView)

Sisense (Sisense for Cloud Data Teams, Sisense for Cloud Analytics)

TIBCO Software (Spotfire, TIBCO Data Science)

SAP (SAP BusinessObjects, SAP Analytics Cloud)

Domo (Domo Business Cloud, Domo Analytics)

IBM (IBM Cognos Analytics, IBM Watson Analytics)

Google (Looker, Google Data Studio)

Oracle (Oracle Analytics Cloud, Oracle Business Intelligence)

Zoho (Zoho Analytics, Zoho Reports)

MicroStrategy (MicroStrategy Analytics, MicroStrategy Desktop)

Alteryx (Alteryx Designer, Alteryx Server)

Qlik (Qlik Sense, Qlik DataMarket)

ThoughtSpot (ThoughtSpot Analytics, ThoughtSpot Search)

TARGIT (TARGIT Decision Suite, TARGIT Analytics)

GoodData (GoodData Platform, GoodData Insights)

Yellowfin (Yellowfin Analytics, Yellowfin Signals)

Birst (Birst Analytics, Birst Cloud)

Market Trends

Several key trends are shaping the current and future landscape of the self-service analytics market:

Rise of Embedded Analytics: Organizations are integrating analytics directly into business applications, allowing users to access insights without switching platforms. Embedded analytics enhances user experience and increases adoption across departments.

Growth of Cloud-Based Analytics Solutions: The move towards cloud computing has significantly boosted the adoption of self-service analytics tools. Cloud-based platforms offer scalability, cost-efficiency, and remote accessibility, which align with the needs of modern, distributed workforces.

Natural Language Processing (NLP): NLP capabilities are transforming self-service analytics by allowing users to interact with data using conversational queries. This trend is reducing the entry barrier for users with limited technical expertise.

Focus on Data Governance and Security: As more employees gain access to analytics tools, organizations are prioritizing robust governance frameworks to manage data access, ensure quality, and comply with regulations like GDPR and HIPAA.

AI and ML Integration: Advanced self-service platforms are incorporating machine learning algorithms to uncover hidden trends, perform predictive analytics, and automate data analysis—empowering users to make smarter, faster decisions.

Enquiry of This Report: https://www.snsinsider.com/enquiry/5511

Market Segmentation:

By Software

Tableau

SAS

Logi Analytics

Qlik

Others

By Service

Business User

Consultants

Corporate IT Professionals

Others

By Enterprise Type

Large Size Enterprises

Small and Medium Sized Enterprises (SMEs)

By Industry Vertical

BFSI

Consumer Goods & Retail

Healthcare

IT and Telecom

Manufacturing & Automotive

Market Analysis

The market's growth is fueled by a surge in demand from sectors like retail, BFSI, healthcare, and manufacturing, where quick access to insights can drive operational efficiency and competitive advantage.

North America currently dominates the market due to early technology adoption, a mature IT infrastructure, and a high concentration of analytics providers. However, Asia-Pacific is expected to witness the fastest growth during the forecast period, driven by digital transformation initiatives, expanding internet penetration, and increasing investments in business intelligence solutions.

The market is highly competitive, with leading players such as Microsoft, Tableau (Salesforce), IBM, Qlik, and SAP constantly innovating to offer enhanced functionalities. Startups and niche players are also entering the market with specialized offerings tailored for small and medium-sized enterprises (SMEs), contributing to a diverse and dynamic vendor landscape.

Future Prospects

The future of self-service analytics is expected to be driven by a deeper integration of artificial intelligence, automation, and mobile-first technologies. As analytics becomes more embedded into everyday business operations, we can expect platforms to offer more real-time collaboration features, voice-enabled insights, and customizable solutions based on industry-specific requirements.

With increasing focus on upskilling and data literacy among employees, organizations are likely to invest in training programs and user-friendly platforms that support self-learning. The demand for industry-specific solutions tailored to roles and functions—such as marketing analytics, financial performance dashboards, and operational intelligence—will also rise.

In addition, interoperability between different data sources and tools will be a key focus area. Vendors that can offer seamless integration with enterprise ecosystems—including ERP, CRM, and HRMS platforms—will hold a competitive edge. The continued evolution of low-code/no-code platforms will also broaden the user base, allowing even non-technical users to build custom reports and dashboards.

Access Complete Report: https://www.snsinsider.com/reports/self-service-analytics-market-5511

Conclusion

The self-service analytics market is evolving rapidly, unlocking opportunities for businesses to make faster, smarter, and more inclusive decisions. As organizations prioritize agility, transparency, and data democratization, self-service tools are becoming indispensable across all sectors. The convergence of AI, cloud computing, and user-centric design is setting the stage for the next wave of innovation in analytics.

With growing investment in analytics infrastructure and an increasing emphasis on data-driven culture, the market is poised for robust and sustained growth through 2032. Organizations that embrace self-service analytics will be better equipped to respond to changing market dynamics, innovate faster, and achieve measurable business outcomes in an increasingly competitive digital landscape.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Choosing the Right Reporting Tool for Your Business

For any business striving for success, gathering data and generating reports are critical for assessing performance, understanding customer behavior, and making well-informed decisions. With an abundance of reporting tools available, finding the right one for your organization can be challenging.

This guide aims to simplify the process by helping you identify the best data reporting tool to meet your company’s specific needs. We’ll explore popular software options, highlighting their features, benefits, and potential limitations.

What Are Reporting Tools?

A reporting tool is a software solution designed to help organizations collect, analyze, and present data in a clear and organized format. These tools enable businesses to create various types of reports—such as financial, operational, sales, and marketing reports—which can inform strategic decisions and track progress.

Key features of reporting tools often include:

Data visualization capabilities

Customizable report templates

Automated report generation

Integration with diverse data sources

By leveraging these tools, businesses can uncover insights, monitor trends, and track key performance indicators (KPIs), ultimately driving growth and operational efficiency.

Types of Reporting Tools

Different reporting tools serve distinct purposes. Below are some common types of tools to consider:

1. Business Intelligence (BI) Tools

BI tools help analyze and visualize complex datasets, supporting better decision-making. Popular examples include Tableau, Microsoft Power BI, and QlikView. Many BI tools offer advanced capabilities such as predictive analytics, data mining, and machine learning.

2. Dashboard Reporting Tools

These tools specialize in creating interactive dashboards that display real-time KPIs and metrics. Examples include Sisense, Domo, and Klipfolio. They provide a visual overview of data, making it easier to monitor performance at a glance.

3. Spreadsheet-Based Tools

Spreadsheet tools like Microsoft Excel and Google Sheets are widely used for their simplicity and flexibility. Users can create charts, perform calculations, and organize data within a familiar interface.

4. Ad Hoc Reporting Tools

Designed for quick, custom report creation, these tools often feature drag-and-drop interfaces and pre-built templates. Examples include Zoho Analytics and Birst. They are ideal for users without technical expertise who need tailored reports.

5. Financial Reporting Tools

These tools focus on generating financial reports, such as balance sheets, income statements, and cash flow analyses. Examples include Oracle Hyperion, SAP Financial Statement Insights, and IBM Cognos. They are crucial for tracking financial health and ensuring compliance.

6. CRM Reporting Tools

Integrated into Customer Relationship Management systems, these tools provide insights into customer data, sales performance, and marketing campaigns. Examples include Salesforce, HubSpot, and Microsoft Dynamics 365.

7. Open-Source Reporting Tools

Open-source tools are free to use and highly customizable, making them a good option for businesses with tight budgets. Examples include BIRT (Business Intelligence and Reporting Tools), JasperReports, and Pentaho.

Top 5 Reporting Tools of 2023

Here are five top-rated reporting tools to consider for your business:

1. Tableau

Tableau is a leading BI and data visualization tool known for its intuitive interface, extensive customization options, and strong community support. It’s suitable for organizations of all sizes looking to create interactive dashboards and reports.

2. Domo

Domo is a cloud-based BI platform offering real-time data visualization, collaboration tools, and mobile access. Its user-friendly design and pre-built connectors make it ideal for businesses seeking to streamline reporting processes.

3. Looker

Looker provides advanced analytics and reporting capabilities, with a focus on data governance and integration with popular data warehouses. Google announced its acquisition of Looker in 2019, and the platform’s customizable data models make it a strong choice for businesses prioritizing data accuracy.

4. Microsoft Power BI

Power BI integrates seamlessly with Microsoft products like Office 365 and Azure. Its robust data analysis, visualization features, and competitive pricing make it appealing for organizations leveraging Microsoft’s ecosystem.

5. Sisense

Sisense is renowned for its ease of use and scalability. Its In-Chip™ technology enables faster data processing, while its capability to handle large datasets and embed analytics into other applications makes it an excellent choice for complex reporting needs.

Software Engineer, Cognos, AVP

Join us as a Software Engineer. In your new role, you’ll engineer and maintain innovative, customer-centric, high-performance, secure and robust solutions. We’ll look to you to design and engineer software focusing on the customer or user experience as the primary objective. It’s a chance to hone your existing technical skills and advance your career as you develop the discipline of software…

Anthony Deighton, CEO of Tamr – Interview Series

New Post has been published on https://thedigitalinsider.com/anthony-deighton-ceo-of-tamr-interview-series/

Anthony Deighton is CEO of Tamr. He has 20 years of experience building and scaling enterprise software companies. Most recently, he spent two years as Chief Marketing Officer at Celonis, establishing their leadership in the Process Mining software category and creating demand generation programs resulting in 130% ARR growth. Prior to that, he served for 10+ years at Qlik growing it from an unknown Swedish software company to a public company — in roles from product leadership, product marketing and finally as CTO. He began his career at Siebel Systems learning how to build enterprise software companies in a variety of product roles.

Can you share some key milestones from your journey in the enterprise software industry, particularly your time at Qlik and Celonis?

I began my career in enterprise software at Siebel Systems and learned a lot about building and scaling enterprise software companies from the leadership team there. I joined Qlik when it was a small, unknown, Swedish software company with 95% of the small 60-person team located in Lund, Sweden. I joke that since I wasn’t an engineer or a salesperson, I was put in charge of marketing. I built the marketing team there, but over time my interest and contributions gravitated towards product management, and eventually I became Chief Product Officer. We took Qlik public in 2010, and we continued as a successful public company. After that, we wanted to do some acquisitions, so I started an M&A team. After a long and reasonably successful run as a public company, we eventually sold Qlik to a private equity firm named Thoma Bravo. It was, as I like to say, the full life cycle of an enterprise software company. After leaving Qlik, I joined Celonis, a small German software company trying to gain success selling in the U.S. Again, I ran marketing as the CMO. We grew very quickly and built a very successful global marketing function.

Both Celonis and Qlik were focused on the front end of the data analytics challenge – how do I see and understand data? In Qlik’s case, that was dashboards; in Celonis’ case it was business processes. But a common challenge across both was the data behind these visualizations. Many customers complained that the data was wrong: duplicate records, incomplete records, missing silos of data. This is what attracted me to Tamr, where I felt that for the first time, we might be able to solve the challenge of messy enterprise data. The first 15 years of my enterprise software career were spent visualizing data; I hope that the next 15 can be spent cleaning that data up.

How did your early experiences shape your approach to building and scaling enterprise software companies?

One important lesson I learned in the shift from Siebel to Qlik was the power of simplicity. Siebel was very powerful software, but it was killed in the market by Salesforce.com, which made a CRM with many fewer features (“a toy” Siebel used to call it), but customers could get it up and running quickly because it was delivered as a SaaS solution. It seems obvious today, but at the time the wisdom was that customers bought features, but what we learned is that customers invest in solutions to solve their business problems. So, if your software solves their problem faster, you win. Qlik was a simple solution to the data analytics problem, but it was radically simpler. As a result, we could beat more feature-rich competitors such as Business Objects and Cognos.

The second important lesson I learned was in my career transition from marketing to product. We think of these domains as distinct. In my career I have found that I move fluidly between product and marketing. There is an intimate link between what product you build and how you describe it to potential customers. And there is an equally important link between what prospects demand and what product we should build. The ability to move between these conversations is a critical success factor for any enterprise software company. A common reason for a startup’s failure is believing “if you build it, they will come.” This is the common belief that if you just build cool software, people will line up to buy it. This never works, and the solution is a robust marketing process connected with your software development process.

The last idea I will share links my academic work with my professional work. I had the opportunity at business school to take a class about Clay Christensen’s theory of disruptive innovation. In my professional work, I have had the opportunity to experience both being the disruptor and being disrupted. The key lesson I’ve learned is that any disruptive innovation is a result of an exogenous platform shift that makes the impossible finally possible. In Qlik’s case it was the platform availability of large memory servers that allowed Qlik to disrupt traditional cube-based reporting. At Tamr, the platform availability of machine learning at scale allows us to disrupt manual rules-based MDM in favor of an AI-based approach. It’s important to always figure out what platform shift is driving your disruption.

What inspired the development of AI-native Master Data Management (MDM), and how does it differ from traditional MDM solutions?

The development of Tamr came out of academic work at MIT (Massachusetts Institute of Technology) around entity resolution. Under the academic leadership of Turing Award winner Michael Stonebraker, the question the team was investigating was “Can we link data records across hundreds of thousands of sources and millions of records?” On the face of it, this is an insurmountable challenge because the more records and sources there are, the more records each candidate has to be compared against. Computer scientists call this an “n-squared problem” because the number of comparisons grows with roughly the square of the number of records.
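To make the scale of the “n-squared problem” concrete: comparing every record with every other record takes n(n-1)/2 comparisons. The sketch below is a plain counting exercise, not anything from Tamr's software, and the function name `pair_count` is chosen here for illustration.

```python
from itertools import combinations


def pair_count(n: int) -> int:
    """Number of distinct record pairs among n records: n * (n - 1) / 2."""
    return n * (n - 1) // 2


# Sanity check against explicit enumeration for a small, made-up case.
records = ["r1", "r2", "r3", "r4", "r5"]
assert pair_count(len(records)) == len(list(combinations(records, 2)))

# A thousandfold increase in records means roughly a millionfold increase in pairs.
for n in (1_000, 1_000_000):
    print(f"{n:>9,} records -> {pair_count(n):,} candidate pairs")
```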

Traditional MDM systems try to solve this problem with rules and large amounts of manual data curation. Rules don’t scale because you can never write enough rules to cover every corner case and managing thousands of rules is a technical impossibility. Manual curation is extremely expensive because it relies on humans to try to work through millions of possible records and comparisons. Taken together, this explains the poor market adoption of traditional MDM (Master Data Management) solutions. Frankly put, no one likes traditional MDM.

Tamr’s simple idea was to train an AI to do the work of source ingestion, record matching, and value resolution. The great thing about AI is that it doesn’t eat, sleep, or take vacation; it is also highly parallelizable, so it can take on huge volumes of data and churn away at making it better. So, where MDM used to be impossible, it is finally possible to achieve clean, consolidated, up-to-date data.

What are the biggest challenges companies face with their data management, and how does Tamr address these issues?

The first, and arguably the most important challenge companies face in data management is that their business users don’t use the data they generate. Or said differently, if data teams don’t produce high-quality data that their organizations use to answer analytical questions or streamline business processes, then they’re wasting time and money. A primary output of Tamr is a 360 page for every entity record (think: customer, product, part, etc.) that combines all the underlying 1st and 3rd party data so business users can see and provide feedback on the data. Like a wiki for your entity data. This 360 page is also the input to a conversational interface that allows business users to ask and answer questions with the data. So, job one is to give the user the data.

Why is it so hard for companies to give users data they love? Because there are three primary hard problems underlying that goal: loading a new source, matching the new records into the existing data, and fixing the values/fields in data. Tamr makes it easy to load new sources of data because its AI automatically maps new fields into a defined entity schema. This means that regardless of what a new data source calls a particular field (example: cust_name) it gets mapped to the right central definition of that entity (example: “customer name”). The next challenge is to link records which are duplicates. Duplication in this context means that the records are, in fact, the same real-world entity. Tamr’s AI does this, and even uses external 3rd party sources as “ground truth” to resolve common entities such as companies and people. A good example of this would be linking all the records across many sources for an important customer such as “Dell Computer.” Lastly, for any given record there may be fields which are blank or incorrect. Tamr can impute the correct field values from internal and 3rd party sources.
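The three steps described here (mapping fields to a central schema, linking duplicate records, and filling in blank values) can be pictured with a deliberately simple sketch. It is not Tamr's method. A hand-written alias table and plain string similarity from Python's standard `difflib` stand in for the AI described in the interview. The field names, sample rows, and threshold are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical alias table standing in for a learned schema mapping.
FIELD_ALIASES = {"cust_name": "customer name", "client": "customer name", "hq_city": "city"}


def to_schema(record: dict) -> dict:
    """Rename source-specific fields to the central entity schema."""
    return {FIELD_ALIASES.get(key, key): value for key, value in record.items()}


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity between 0 and 1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def merge(primary: dict, other: dict) -> dict:
    """Fill blank or missing fields in primary from a matched record."""
    merged = dict(primary)
    for key, value in other.items():
        if not merged.get(key):
            merged[key] = value
    return merged


# Made-up rows from two imaginary source systems.
crm_row = to_schema({"cust_name": "Dell Computer", "hq_city": ""})
erp_row = to_schema({"client": "Dell Computer Corp.", "city": "Round Rock"})

MATCH_THRESHOLD = 0.75  # arbitrary cut-off chosen for this example
is_match = similarity(crm_row["customer name"], erp_row["customer name"]) >= MATCH_THRESHOLD
golden = merge(crm_row, erp_row) if is_match else None
print(golden)
```

A fixed alias table and a single threshold are exactly the kind of rules the interview says do not scale. The sketch only shows the shape of the three steps.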

Can you share a success story where Tamr significantly improved a company’s data management and business outcomes?

CHG Healthcare is a major player in the healthcare staffing industry, connecting skilled healthcare professionals with facilities in need. Whether it’s temporary doctors through Locums, nurses with RNnetwork, or broader solutions through CHG itself, they provide customized staffing solutions to help healthcare facilities run smoothly and deliver quality care to patients.

Their fundamental value proposition is connecting the right healthcare providers with the right facility at the right time. Their challenge was that they didn’t have an accurate, unified view of all the providers in their network. Given their scale (7.5M+ providers), it was impossible to keep their data accurate with legacy, rules-driven approaches without breaking the bank on human curators. They also couldn’t ignore the problem since their staffing decisions depended on it. Bad data for them could mean a provider gets more shifts than they can handle, leading to burnout.

Using Tamr’s advanced AI/ML capabilities, CHG Healthcare reduced duplicate physician records by 45% and almost completely eliminated the manual data preparation that was being done by scarce data & analytics resources. And most importantly, by having a trusted and accurate view of providers, CHG is able to optimize staffing, enabling them to deliver a better customer experience.

What are some common misconceptions about AI in data management, and how does Tamr help dispel these myths?

A common misconception is that AI has to be “perfect”, or that rules and human curation are perfect in contrast to AI. The reality is that rules fail all the time. And, more importantly, when rules fail, the only solution is more rules. So, you have an unmanageable mess of rules. And human curation is fallible as well. Humans might have good intentions (although not always), but they’re not always right. What’s worse, some human curators are better than others, or simply might make different decisions than others. AI, in contrast, is probabilistic by nature. We can validate through statistics how accurate any of these techniques are, and when we do we find that AI is less expensive and more accurate than any competing alternative.

Tamr combines AI with human refinement for data accuracy. Can you elaborate on how this combination works in practice?

Humans provide something exceptionally important to AI – they provide the training. AI is really about scaling human efforts. What Tamr looks to humans for is the small number of examples (“training labels”) that the machine can use to set the model parameters. In practice what this looks like is humans spend a small amount of time with the data, giving Tamr examples of errors and mistakes in the data, and the AI runs those lessons across the full data set(s). In addition, as new data is added, or data changes, the AI can surface instances where it is struggling to confidently make decisions (“low confidence matches”) and ask the human for input. This input, of course, goes to refine and update the models.
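One way to picture the “low confidence matches” loop is a triage step. Pairs the model scores confidently are handled automatically, and everything in between goes to a person whose answers become new training labels. The sketch below assumes the model outputs a match probability per pair. The thresholds, identifiers, and scores are hypothetical and not taken from Tamr.

```python
# Hypothetical thresholds; a real system would tune these from labeled data.
AUTO_ACCEPT = 0.90
AUTO_REJECT = 0.10


def triage(scored_pairs):
    """Split (pair, match_probability) items into accept / reject / review."""
    decisions = {"accept": [], "reject": [], "review": []}
    for pair, probability in scored_pairs:
        if probability >= AUTO_ACCEPT:
            decisions["accept"].append(pair)
        elif probability <= AUTO_REJECT:
            decisions["reject"].append(pair)
        else:
            decisions["review"].append(pair)  # low confidence: ask a human
    return decisions


# Made-up scores for illustration only.
scored = [(("A1", "B7"), 0.97), (("A2", "B3"), 0.55), (("A4", "B9"), 0.03)]
result = triage(scored)
print(result["review"])
```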

What role do large language models (LLMs) play in Tamr’s data quality and enrichment processes?

First, it’s important to be clear about what LLMs are good at. Fundamentally, LLMs are about language. They produce strings of text which mean something, and they can “understand” the meaning of text that’s handed to them. So, you could say that they are language machines. So for Tamr, where language is important, we use LLMs. One obvious example is our conversational interface, which sits on top of our entity data and which we affectionately call our virtual CDO (vCDO). When you speak to your real-life CDO they understand you and they respond using language you understand. This is exactly what we’d expect from an LLM, and that is exactly how we use it in that part of our software. What’s valuable about Tamr in this context is that we use the entity data as context for the conversation with our vCDO. It’s like your real-life CDO has ALL your BEST enterprise data at their fingertips when they respond to your questions – wouldn’t that be great!

In addition, there are instances in cleaning data values or imputing missing values where we want to use a language-based interpretation of input values to find or fix a missing value. For example, you might ask from the text “5mm ball bearing” what is the size of the part, and an LLM (or a person) would correctly answer “5mm.”

Lastly, underlying LLMs are embedding models which encode language meaning to tokens (think words). These can be very useful for calculating linguistic comparison. So, while “5” and “five” share no characters in common, they are very close in linguistic meaning. So, we can use this information to link records together.
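The contrast between character overlap and closeness in meaning can be shown with a toy example. Embedding models represent text as numeric vectors, and similarity is commonly measured with cosine similarity. The vectors below are hand-written for illustration and are not the output of any real embedding model. Only the mechanics of the comparison carry over.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# No characters in common, so character-level matching sees nothing shared.
shared_chars = set("5") & set("five")

# Hand-written toy vectors, NOT produced by a real embedding model.
toy_embeddings = {
    "5": [0.9, 0.1, 0.0],
    "five": [0.8, 0.2, 0.1],
    "bearing": [0.0, 0.3, 0.9],
}
close = cosine(toy_embeddings["5"], toy_embeddings["five"])
far = cosine(toy_embeddings["5"], toy_embeddings["bearing"])
print(shared_chars, round(close, 2), round(far, 2))
```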

How do you see the future of data management evolving, especially with advancements in AI and machine learning?

The “Big Data” era of the early 2000s should be remembered as the “Small Data” era. While a lot of data has been created over the past 20+ years, enabled by the commoditization of storage and compute, the majority of data that has had an impact in the enterprise is relatively small scale — basic sales & customer reports, marketing analytics, and other datasets that could easily be depicted in a dashboard. The result is that many of the tools and processes used in data management are optimized for ‘small data’, which is why rules-based logic, supplemented with human curation, is still so prominent in data management.

The way people want to use data is fundamentally changing with advancements in AI and machine learning. The idea of “AI agents” that can autonomously perform a significant portion of a person’s job only works if the agents have the data they need. If you’re expecting an AI agent to serve on the frontlines of customer support, but you have five representations of “Dell Computer” in your CRM and it’s not connected with product information in your ERP, how can you expect them to deliver high-quality service when someone from Dell reaches out?

The implication of this is that our data management tooling and processes will need to evolve to handle scale, which means embracing AI and machine learning to automate more data cleaning activities. Humans will still play a big role in overseeing the process, but fundamentally we need to ask the machines to do more so that it’s not just the data in a single dashboard that is accurate and complete, but it’s the majority of data in the enterprise.

What are the biggest opportunities for businesses today when it comes to leveraging their data more effectively?

Increasing the number of ways that people can consume data. There’s no question that improvements in data visualization tools have made data much more accessible throughout the enterprise. Now, data and analytics leaders need to look beyond the dashboard for ways to deliver value with data. Interfaces like internal 360 pages, knowledge graphs, and conversational assistants are being enabled by new technologies, and give potential data consumers more ways to use data in their day-to-day workflow. It’s particularly powerful when these are embedded in the systems that people already use, such as CRMs and ERPs. The fastest way to create more value from data is by bringing the data to the people who can use it.

Thank you for the great interview. Readers who wish to learn more should visit Tamr.

Comparative Analysis of BI Software Providers in the UAE Market

Business Intelligence (BI) software has become integral to the decision-making processes of organizations across the UAE. With a plethora of BI software providers offering diverse solutions, choosing the right one can be challenging. This blog aims to provide a comparative analysis of leading BI software providers in the UAE market, helping businesses make informed decisions tailored to their needs.

Understanding the BI Landscape in the UAE

The UAE's business landscape is characterized by diverse industries, including finance, real estate, healthcare, and government services. Each sector has unique requirements for data analytics and business insights, driving the demand for specialized BI solutions. As such, BI software providers in the UAE offer a range of features tailored to these specific industry needs.

Comparative Analysis of Leading BI Software Providers

Microsoft Power BI

Key Features: Integration with Microsoft ecosystem, intuitive data visualization, AI-powered analytics.

Strengths: User-friendly interface, scalability, robust security features.

Use Cases: Widely adopted across various industries for its seamless integration capabilities and powerful analytical tools.

Tableau

Key Features: Interactive dashboards, extensive data connectivity options, advanced analytics.

Strengths: Data visualization excellence, strong community support, easy-to-use interface for non-technical users.

Use Cases: Ideal for organizations needing powerful visual analytics and data storytelling capabilities.

Qlik Sense

Key Features: Associative data model, augmented intelligence, mobile-first design.

Strengths: Powerful data integration capabilities, real-time data analysis, scalability.

Use Cases: Suited for enterprises requiring complex data analysis and predictive modeling.

SAP BusinessObjects

Key Features: Enterprise-wide BI, ad-hoc reporting, predictive analytics.

Strengths: Integration with SAP ERP systems, comprehensive BI suite, governance and compliance features.

Use Cases: Preferred by large enterprises needing unified BI solutions integrated with their existing SAP infrastructure.

IBM Cognos Analytics

Key Features: AI-powered analytics, reporting, dashboarding.

Strengths: Scalability, enterprise-grade security, robust performance management capabilities.

Use Cases: Suitable for organizations looking for advanced analytics and AI-driven insights.

Factors to Consider When Choosing BI Software

Scalability and Flexibility: Ensure the BI software can grow with your business and adapt to future needs.

Ease of Use: Consider the learning curve for end-users and the availability of training and support.

Integration Capabilities: Assess how well the BI software integrates with existing IT infrastructure and data sources.

Cost and Licensing: Compare pricing models, including upfront costs, licensing fees, and ongoing maintenance expenses.

Conclusion

Choosing the right BI software in the UAE involves assessing specific business requirements, industry challenges, and long-term strategic goals. Each provider offers unique strengths and capabilities tailored to different organizational needs, from small businesses to large enterprises. By conducting a comparative analysis based on features, strengths, and use cases, businesses can make informed decisions that drive efficiency, innovation, and competitive advantage in the dynamic UAE market. Embracing BI software is not just about data analytics; it's about empowering organizations to make smarter decisions and achieve sustainable growth in a rapidly evolving business environment.

0 notes

Text

Budgeting Software Market to Witness Excellent Revenue Growth Owing to Rapid Increase in Demand

The latest research study on the Global Budgeting Software Market offers a detailed overview of the factors influencing the global business scope. The report presents the latest market insights, an analysis of the current situation with upcoming trends, and a breakdown of products and services. It provides key statistics on the market's status, size, share, and growth factors. The study also covers emerging players' data, including the competitive landscape, sales, revenue, and global market share of top manufacturers such as Deltek Vision (United States), TimeCamp (United States), Cognos (Canada), Idu-Concept (South Africa), Microsoft (United States), Hyperion (United States), Riskturn (United States), Merlin Project (India), Questica Budget (United States), and Freshbooks (Canada).

Free Sample Report + All Related Graphs & Charts @: https://www.advancemarketanalytics.com/sample-report/13711-global-budgeting-software-market?utm_source=Organic&utm_medium=Vinay

Budgeting software provides business solutions for planning, managing, budgeting, and forecasting. It is used for personal budgeting as well as business money management, and it provides financial solutions to organizations. You Need a Budget (YNAB), Mvelopes, and Quicken are some of the best budgeting software. The rising need to track and manage income will help boost the global budgeting software market.

Thanks for reading this article. You can also get individual chapter-wise sections or region-wise report versions, such as North America, Europe, or Southeast Asia.

0 notes

Photo

IBM Cognos Business Intelligence Solutions

Business intelligence has grown significantly over the past year. The IT industry has gone through fluctuating business and technology trends, resulting in a market of business intelligence software, cloud computing vendors, and big data and analytics platforms, among other offerings. One of the biggest cloud and cognitive-computing giants in the market today is IBM, a global technology company that provides cloud-based services, hardware, and software. Its Cognos Business Intelligence product provides organizations with a web-based BI suite of reporting, scorecarding, planning, budgeting, forecasting, and analysis tools. Visit the website for more info: https://www.chetu.com/solutions/bi-analytics/cognos.php

#Cognos Developer#Cognos Cloud Solution#IBM Cognos Bi Software#Cognos Implementation Services#Cognos Business Intelligence Software

0 notes

Text

Download Acronis Disk Director crack (keygen) latest version 2G6!

With Acronis Disk Director, you can manage hard drive partitions and use features such as resizing, adding, and deleting partitions. You can use this program to control the size of volumes and increase or decrease their number. All of these operations are done in a secure way to keep your data safe. Other features of this software include hard drive debugging, drive optimization, and data recovery. Acronis Disk Director Crack provides a more secure way to manage your data and hard drives. It provides powerful tools for optimizing disk usage and protecting your data. This program makes cloning disks very easy. You can easily transfer data, applications, and even your entire operating system from your old drive to your new drive. In addition, it helps you create and manage disk partitions to store various file systems. This application allows you to create, customize, and organize the structure of your hard drive.

You can use this app to recover lost or deleted data partitions. With Acronis Disk Director Business, you can merge, move, or resize partitions without losing data. There are powerful disk repair tools, and you can make low-level changes to the contents of the disk to recover missing data. Acronis Disk Director Crack provides a one-stop solution for backing up and restoring critical hard drive sectors. This program is suitable for viewing drives. In addition, it allows you to handle your data and drives the way you want. This application makes complex operations very easy and efficient. Its intuitive interface allows you to format the drive to the appropriate file system. In addition, you can split an existing volume into two parts. You can also install another operating system on another volume. You can use this program to instantly switch between basic and dynamic disks. The Acronis Disk Director license key provides direct byte access to the disk. It provides high power, flexibility, and ease of use for advanced disk applications. The current version now supports the Windows 10 preinstallation environment. Acronis Recovery Expert can help you recover lost or deleted disk partitions. The best thing about this application is that you can create and restore RAID-5 disks.

It allows you to add, remove, or break mirrored volumes. It also allows you to convert primary volumes to logical and vice versa. It lets you change a disk's status from online to offline and vice versa. This program allows you to copy or move a volume of one type as a volume of another type. It helps you create dynamic disks that are accessible to the system. You can clone your data, partitions, or the entire operating system. It helps you copy, resize, move, split, and merge volumes without data loss. The program is able to initialize newly added hard disks. It lets you format and label volumes and assign volume letters. With it, you can delete volumes or change file systems. The program has powerful tools for disk cleanup. Using it, you can hide or unhide data, partitions, or volumes. It allows you to explore volume data before performing operations. Above all, it lets you create WinPE or Linux bootable drives. And many more.

0 notes

Text

Education and Learning Analytics Market Size, Share, Scope, Analysis, Forecast, Growth and Industry Report 2032 – Policy and Reg

The Education And Learning Analytics Market Size was valued at USD 6.94 Billion in 2023 and is expected to reach USD 42.72 Billion by 2032, growing at a CAGR of 22.4% over the forecast period 2024-2032.
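The growth rate quoted above can be sanity-checked from the two endpoint values. The following is a minimal sketch, assuming the standard compound annual growth rate formula and nine compounding years between the 2023 base value and the 2032 target; the function name `cagr` is illustrative and does not come from the report.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Endpoint figures quoted in the post, in USD billion.
# Assumption: nine compounding years, from the 2023 base to 2032.
education_cagr = cagr(6.94, 42.72, 2032 - 2023)
print(f"Implied CAGR: {education_cagr:.1%}")
```

Under these assumptions the implied rate comes out close to the 22.4% stated in the post; a different choice of base year would shift the result.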

The Education And Learning Analytics Market is undergoing rapid transformation, driven by digital innovation and the demand for data-informed decisions. Educational institutions are embracing analytics to improve learning outcomes, retention, and curriculum effectiveness.

The market continues to evolve as schools, universities, and corporate training programs increasingly adopt data-driven tools to track student progress, personalize content, and improve institutional performance. With the rise of online learning and blended education models, analytics has become essential for real-time feedback and long-term planning.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/4674

Key Market Players:

IBM Corporation (Watson Education, IBM Cognos Analytics)

Microsoft Corporation (Azure Machine Learning, Microsoft Power BI)

SAP SE (SAP Analytics Cloud, SAP SuccessFactors)

Oracle Corporation (Oracle Learning Management, Oracle BI)

Google LLC (Google Classroom, Google Analytics for Education)

SAS Institute Inc. (SAS Visual Analytics, SAS Enterprise Guide)

Tableau Software (Tableau Desktop, Tableau Prep)

Blackboard Inc. (Blackboard Analytics for Learn, Blackboard Predict)

D2L Corporation (Brightspace Insights, Brightspace Performance+)

Cornerstone OnDemand, Inc. (Cornerstone Learning, Cornerstone Analytics)

Trends Driving Market Growth

Personalized Learning Paths: Learning analytics tools are enabling personalized education by identifying individual strengths, weaknesses, and preferences. Institutions are using this data to customize learning paths, ensuring better student engagement and performance.

AI and Predictive Analytics Integration: The use of artificial intelligence and predictive analytics is helping educators identify at-risk students, forecast outcomes, and intervene early. These tools provide actionable insights for improving student success rates.

Real-Time Dashboards for Decision-Making: Modern analytics platforms offer real-time dashboards and reporting tools that help educators and administrators monitor student behavior, attendance, and academic performance instantly.

Increased Adoption in Corporate Learning: Learning analytics is not limited to academic institutions. Corporations are leveraging it to optimize employee training programs, track skill development, and align learning with business outcomes.

Enquiry of This Report: https://www.snsinsider.com/enquiry/4674

Market Segmentation:

By Component

Software

Services

By Deployment

On-premises

Cloud

By End Use

Academics

K-12

Higher Education

Enterprises

By Type

Descriptive

Predictive

Prescriptive

By Application

Curriculum Development & Intervention Management

Performance Management

Student Engagement

Budget & Finance Management

Operations Management

People Acquisition & Retention

Market Analysis

High Demand for Digital Learning Solutions: The global shift to e-learning, especially post-pandemic, has increased demand for platforms with integrated analytics. Schools and enterprises alike are investing in smart learning systems.

Government Initiatives and Policy Support: Governments in many countries are promoting digital education infrastructure, with policies encouraging data-driven decision-making in schools and universities.

EdTech Startups Fueling Innovation: A surge in education technology startups is driving innovation in analytics platforms. These companies are introducing user-friendly, AI-powered tools to make data accessible to educators of all levels.

Privacy and Data Security Challenges: While growth is promising, concerns around student data privacy and compliance with regulations like GDPR and FERPA are critical challenges for market players.

Future Prospects

The Education And Learning Analytics Market is expected to grow at a substantial CAGR over the next five years, with expansion across K–12, higher education, and corporate training sectors. Future developments are likely to focus on the following:

Greater Use of Learning Experience Platforms (LXPs): These platforms will combine analytics, AI, and content to deliver hyper-personalized learning experiences at scale.

Integration with Augmented and Virtual Reality (AR/VR): Analytics will play a key role in measuring the effectiveness of immersive learning technologies and optimizing their use in classrooms and corporate training.

Global Expansion in Developing Regions: Emerging economies are rapidly investing in EdTech infrastructure, opening new markets for analytics providers.

Hybrid Learning Optimization: As hybrid learning becomes the norm, analytics will help institutions balance in-person and digital learning formats for maximum impact.

Access Complete Report: https://www.snsinsider.com/reports/education-and-learning-analytics-market-4674

Conclusion

The Education And Learning Analytics Market is set to play a pivotal role in the future of global education. By enabling smarter, data-informed decisions, analytics platforms are empowering educators, improving student outcomes, and transforming how institutions deliver knowledge. As technological advancements continue and adoption deepens, stakeholders must also focus on ethical use, data protection, and accessibility to ensure inclusive and impactful growth in the years to come.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

0 notes

Text

Global Supply Chain Analytics Software Market Is Predicted To Propel Owing To Increasing Adoption Of Cloud-Based Supply Chain Analytics: Ken Research

According to the report analysis, ‘Global Supply Chain Analytics Software Industry Research Report 2021 Segmented by Major Market Players, Types, Applications and Countries Forecast to 2027’, SAP, PeopleSoft, JDA, Manhattan, Ariba, SAS, IBM Cognos, Tableau, TARGIT, Kinaxis, Birst, Logility and many others are the leading companies currently operating in the global supply chain analytics software market. These players aim to maintain their dominant positions, gain a competitive edge, capture market share, and grow revenue by raising awareness of the applications and advantages of supply chain analytics software, improving customer satisfaction, hiring young and active personnel, expanding the benefits and features of their software, pursuing expansion and profit-making strategies, improving qualitative and quantitative measures, and establishing research and development programs.

On the basis of types, the global supply chain analytics software market is classified into cloud based and web based. On the basis of application, the global supply chain analytics software market is categorized into large enterprises and SMEs.

Improvements in industrial-grade digital technology, a growing inclination toward cloud-based supply chain management software, and rising demand for improved supply chain visibility contribute significantly to the growth of the supply chain management market. However, a shortage of IT infrastructure in underdeveloped nations and increasing security and privacy concerns limit the growth of the supply chain management software market.

Request For Sample Report: https://kenresearch.com/sample-report.php?Frmdetails=NDQ0NjY5

Moreover, a surge in demand for demand management solutions among enterprises and growing implementation of supply chain management software in healthcare and pharmaceutical organizations further boost the growth of the market. However, the high costs associated with implementing and maintaining supply chain management solutions and increasing security and privacy concerns among enterprises are projected to impede the growth of the supply chain management market. On the other hand, rising demand for transportation management systems (TMS) software and the incorporation of blockchain technology into supply chain management software are predicted to present major opportunities for market expansion in the near future.

The growing volume and velocity of data and the need to improve operational and supply chain efficiencies are propelling the growth of the Global Supply Chain Analytics Software Market. The advent of artificial intelligence (AI) and the increasing use of machine learning in supply chain management are also fuelling this growth.

However, data security issues and inaccuracies in data sets are predicted to limit market growth. Factors such as the growing adoption of cloud-based supply chain analytics and increasing awareness of supply chain analytics software among organizations are expected to create opportunities. Therefore, the market for supply chain analytics software is predicted to grow worldwide in the near future.

For more information, refer to the link below:

Global Supply Chain Analytics Software Market Research Report

Contact Us:-

Ken Research

Ankur Gupta, Head Marketing & Communications

+91-9015378249

0 notes

Text

Location Analytics Market Recent Study Segments, Future Growth, Business Prospects

Location analytics is used by the retail sector to understand historical spending habits across different geographical locations in order to increase sales and productivity. Governments also use it to detect and prevent disasters.

The use of spatial data, the increasing use of various analytics tools, and the growing need for customer satisfaction are the factors contributing to the growth of the overall market. However, security issues are hampering the growth of the market.

Various vendors are investing in solutions. For instance, Esri Maps for IBM Cognos delivers maps and geographic information to the IBM Cognos environment.

According to Market Research Future, the market has been segmented by component, software, deployment, end user, and region.

Get Free Sample Copy Report of Location Analytics Market @ https://www.marketresearchfuture.com/sample_request/2759

Among end users, the retail segment accounted for the largest market share, mainly due to the increasing number of internet users, growing consumer preference for smartphones, and high growth in the number of tech-savvy consumers.

Among the software segments, geocoding accounted for the largest market share, mainly because the increasing number of educational institutes, hotels, and similar establishments is relying on location analytics to make sound decisions.

The key players in the global location analytics market include TIBCO Software Inc. (U.S.), ESRI (U.S.), Pitney Bowes (U.S.), Oracle Corporation (U.S.), SAP SE (Germany), SAS Institute Inc. (U.S.), Galigeo (France), Cisco Systems, Inc. (U.S.), Alteryx, Inc. (U.S.), IBM Corporation (U.S.), Microsoft Corporation (U.S.) among others.

By region, MRFR analysis shows that North America accounted for the largest market share because of technological advancements, a growing number of application developers, and rapid digital transformation.

Access Complete Report @ https://www.marketresearchfuture.com/reports/location-analytics-market-2759

About Market Research Future:

At Market Research Future (MRFR), we enable our customers to unravel the complexity of various industries through our Cooked Research Report (CRR), Half-Cooked Research Reports (HCRR), Raw Research Reports (3R), Continuous-Feed Research (CFR), and Market Research & Consulting Services.

The MRFR team's primary objective is to provide the highest-quality market research and intelligence services to our clients. Our market research studies by products, services, technologies, applications, end users, and market players for global, regional, and country-level market segments enable our clients to see more, know more, and do more, which helps answer their most important questions.

In order to stay up to date with the technology and work processes of the industry, MRFR regularly arranges meetings with industry experts and industrial visits for its research analysts.

Contact

Akash Anand,

Market Research Future

Office No. 528, Amanora Chambers

Magarpatta Road, Hadapsar,

Pune – 411028

Maharashtra, India

+1 646 845 9312

0 notes

Text

Connected Device Analytics Market Evolution, Emerging Trends, and Forecast 2032

The Connected Device Analytics Market Size was valued at USD 26.94 Billion in 2023 and is expected to reach USD 173.96 Billion by 2032, growing at a CAGR of 23.0% over the forecast period 2024-2032.
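To see what a 23.0% annual growth rate implies year by year, the 2023 base value can be compounded forward. This is a rough sketch, assuming constant compounding over the nine years from 2023 to 2032; the helper name `project` is hypothetical, and the intermediate-year values it prints are only what the stated rate implies, not figures taken from the report.

```python
def project(base_value: float, annual_rate: float, years: int) -> list[float]:
    """Return the value after 0, 1, ..., `years` years of constant compounding."""
    return [base_value * (1 + annual_rate) ** n for n in range(years + 1)]


# Base value (USD billion) and growth rate quoted in the post.
# Assumption: the rate applies uniformly to each year from 2023 to 2032.
path = project(26.94, 0.23, 2032 - 2023)
for year, value in zip(range(2023, 2033), path):
    print(year, round(value, 2))
```

The final value lands within about one percent of the USD 173.96 billion quoted for 2032; the small gap is consistent with the growth rate having been rounded to one decimal place.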

The Connected Device Analytics Market is experiencing rapid expansion as businesses and industries increasingly rely on IoT-driven data insights. With billions of connected devices generating vast amounts of real-time data, organizations are leveraging analytics to enhance efficiency, improve decision-making, and drive innovation. Growing demand for predictive analytics, AI-driven insights, and real-time monitoring is propelling this market forward.

The Connected Device Analytics Market continues to evolve as industries such as healthcare, manufacturing, retail, and smart cities integrate IoT devices into their operations. The ability to process, analyze, and derive actionable insights from connected devices is revolutionizing business models. As digital transformation accelerates, the demand for sophisticated analytics solutions that provide enhanced security, automation, and data-driven intelligence is expected to surge.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3727

Key Market Players:

Microsoft (US) (Azure IoT, Power BI)

Oracle Corporation (US) (Oracle IoT Cloud, Oracle Analytics Cloud)

IBM Corporation (US) (IBM Watson IoT, IBM Cognos Analytics)

SAP SE (Germany) (SAP Leonardo IoT, SAP Analytics Cloud)

PTC (US) (ThingWorx, Kepware)

Cisco (US) (Cisco Kinetic, Cisco Jasper)

Google (US) (Google Cloud IoT Core, Google BigQuery)

SAS Institute (US) (SAS IoT Analytics, SAS Visual Analytics)

Adobe (US) (Adobe Analytics, Adobe Sensei)

Teradata (US) (Teradata Vantage, Teradata IoT Analytics)

AWS (US) (AWS IoT Analytics, Amazon QuickSight)

HPE (US) (HPE Edgeline, HPE Vertica)

Hitachi (Japan) (Hitachi Lumada, Pentaho)

Software AG (Germany) (Cumulocity IoT, TrendMiner)

GE (US) (GE Digital Predix, GE APM (Asset Performance Management))

Cloudera (US) (Cloudera DataFlow, Cloudera Machine Learning)

Guavus (US) (Guavus AI-based Analytics, Guavus Reflex)

Splunk (US) (Splunk Industrial IoT, Splunk Enterprise)

TIBCO Software (US) (TIBCO Spotfire, TIBCO Streaming)

Qlik (US) (Qlik Sense, Qlik Data Integration)

Salesforce (US) (Salesforce IoT Cloud, Tableau)

Infor (US) (Infor IoT, Infor Birst)

Mnubo (Canada) (Mnubo SmartObjects, Mnubo Data Science Studio)

Arundo Analytics (US) (Arundo Edge, Arundo Analytics Platform)

Key Trends Driving Market Growth

1. Rise of Edge Computing and AI-Driven Analytics

With the increasing number of IoT devices, edge computing has emerged as a crucial trend. Organizations are leveraging AI-driven analytics at the edge to process data closer to the source, reducing latency and enhancing real-time decision-making. This approach enables faster responses in critical applications such as healthcare, autonomous vehicles, and industrial automation.

2. Expansion of Predictive and Prescriptive Analytics

Businesses are shifting from traditional descriptive analytics to predictive and prescriptive analytics to anticipate trends and optimize operations. Connected devices equipped with advanced analytics capabilities can forecast equipment failures, monitor energy usage, and improve supply chain efficiency, significantly reducing operational costs.

3. Growing Adoption of 5G Connectivity

The rollout of 5G networks is significantly enhancing the capabilities of connected devices. With ultra-low latency and high-speed data transfer, 5G enables seamless real-time analytics, making applications like smart cities, autonomous vehicles, and remote healthcare monitoring more efficient and reliable.

4. Increasing Focus on Cybersecurity and Data Privacy

As connected devices collect vast amounts of sensitive data, cybersecurity and data privacy have become critical concerns. Organizations are investing in advanced encryption, AI-powered threat detection, and blockchain technology to ensure data integrity and compliance with global security regulations such as GDPR and CCPA.

5. Integration of IoT with Cloud and Hybrid Analytics

Many enterprises are adopting cloud-based and hybrid analytics models to handle massive datasets generated by connected devices. Cloud platforms enable scalability, while hybrid approaches offer a balance between security and efficiency, ensuring businesses can analyze IoT data in real-time while maintaining control over sensitive information.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3727

Market Segmentation:

By Component

Solution

Service

By Application

Sales and Customer Management

Security & Emergency Management

Remote Monitoring

Predictive Maintenance Asset Management

Inventory Management

Energy Management

Building Automation

Others

By Organization Size

Large Enterprise

Small and Medium Size Enterprise

By Deployment Mode

On-premises

Cloud

Market Analysis and Current Landscape

Surging IoT Adoption: The number of IoT-connected devices is projected to exceed 30 billion by 2030, generating massive amounts of analyzable data.

Rising Need for Operational Efficiency: Companies are leveraging analytics to optimize processes, reduce downtime, and enhance predictive maintenance.

Government and Industry Regulations: Compliance with data security standards and regulations is prompting businesses to adopt robust analytics solutions to manage and secure IoT-generated data.

Competitive Industry Landscape: Tech giants such as Microsoft, IBM, Google, AWS, and SAP are investing in advanced connected device analytics platforms, intensifying market competition.

Despite these promising trends, challenges such as data silos, interoperability issues, and the high cost of analytics implementation remain barriers to widespread adoption. However, as technology advances, businesses are finding scalable and cost-effective solutions to overcome these obstacles.

Future Prospects: What Lies Ahead?

1. Evolution of AI-Powered Autonomous Systems

The next phase of connected device analytics will witness the rise of AI-powered autonomous systems capable of making real-time decisions without human intervention. These systems will be widely used in smart factories, healthcare, transportation, and logistics, driving unprecedented efficiency.

2. Growth of Digital Twins Technology

Digital twins, virtual replicas of physical assets, are becoming mainstream in industries such as manufacturing, construction, and energy. These AI-driven models use connected device analytics to simulate scenarios, predict failures, and optimize asset performance in real time.

3. Blockchain for Secure Data Transactions

Blockchain technology will play a crucial role in securing IoT transactions by ensuring transparency, immutability, and authentication. This will be particularly beneficial for industries dealing with sensitive data, such as financial services, healthcare, and supply chain management.

4. Expansion into Smart Homes and Consumer Electronics

As IoT adoption grows in the consumer segment, smart home devices, wearables, and connected appliances will rely on analytics to improve user experiences. AI-powered assistants, personalized recommendations, and home automation solutions will redefine how consumers interact with their devices.

5. Industry-Specific Analytics Solutions

Companies are increasingly demanding industry-tailored analytics solutions that cater to their specific operational needs. Custom-built analytics platforms for automotive, energy, retail, and telecom sectors will drive deeper market penetration and growth.

Access Complete Report: https://www.snsinsider.com/reports/connected-device-analytics-market-3727

Conclusion

The Connected Device Analytics Market is set for significant expansion, driven by technological innovations, increasing IoT adoption, and the rising demand for real-time data insights. As industries embrace AI, edge computing, and predictive analytics, businesses that invest in advanced analytics solutions will gain a competitive edge. The future of this market will be shaped by the seamless integration of cloud, AI, and cybersecurity measures, ensuring connected devices operate efficiently and securely. With continued advancements, connected device analytics will not only enhance business operations but also transform how industries leverage data for smarter decision-making and automation.

#Connected Device Analytics Market#Connected Device Analytics Market Analysis#Connected Device Analytics Market Scope#Connected Device Analytics Market Growth#Connected Device Analytics Market Trends

0 notes

Text

Location Analytics Market Global Analysis with Focus on Opportunities, Development Strategy

Market Research Future (MRFR) Announces the Publication of its Half Cooked Research Report – Location Analytics Market 2016-2022

According to Market Research Future Analysis, Location Analytics Market has been valued at USD 25 billion by 2022 growing with 19% of CAGR during forecast period 2016 to 2022.

Location analytics is used by the retail sector to understand historical spending habits across different geographical locations in order to increase sales and productivity. It is also used by governments to detect as well as prevent disasters.

The use of spatial data, the increasing use of various analytics tools and the growing need for customer satisfaction are the factors contributing to the growth of the overall market. Issues relating to security, however, are hampering the growth of the market.

Various vendors are investing in solutions; for instance, Esri Maps for IBM Cognos delivers maps and geographic information to the Cognos environment.

According to Market Research Future, the market has been segmented by component, software, deployment, end-user and region.

Get Free Sample Copy Report of Location Analytics Market@ https://www.marketresearchfuture.com/sample_request/2759

Component: Software, Service

Deployment: On-premises, Hosted

Software: Geocoding, Reverse Geocoding, Thematic Mapping, Reporting, Data Integration, Spatial Analysis, Others

End-users: BFSI, IT & Telecommunications, Retail, Healthcare, Government, Transportation, Energy & Utilities, Others

Region: North America, Europe, Asia-Pacific, RoW

Among these end-users, the retail segment accounted for the largest market share, mainly due to the increasing number of internet users, growing consumer preference for smartphones and high growth in the number of tech-savvy consumers.

Among the software segments, geocoding accounted for the largest market share, mainly because a growing number of educational institutes, hotels and other organizations are using location analytics to make better decisions.

The key players in the global location analytics market include TIBCO Software Inc. (U.S.), ESRI (U.S.), Pitney Bowes (U.S.), Oracle Corporation (U.S.), SAP SE (Germany), SAS Institute Inc. (U.S.), Galigeo (France), Cisco Systems, Inc. (U.S.), Alteryx, Inc. (U.S.), IBM Corporation (U.S.), Microsoft Corporation (U.S.) among others.

By region, MRFR analysis shows that North America accounted for the largest market share because of technological advancements, a growing number of application developers and rapid digital transformation.

However, Asia-Pacific is expected to grow over the forecast period, 2016-2022. Countries such as China and Korea contribute largely to the overall growth of the market. The reasons cited are improved broadband infrastructure and the use of sensors.

Market Research Future (MRFR) publishes periodic market research reports on niche and emerging technologies. For more information on our market research, please visit:

Access Complete Report @ https://www.marketresearchfuture.com/reports/location-analytics-market-2759

About Market Research Future:

At Market Research Future (MRFR), we enable our customers to unravel the complexity of various industries through our Cooked Research Report (CRR), Half-Cooked Research Reports (HCRR), Raw Research Reports (3R), Continuous-Feed Research (CFR), and Market Research & Consulting Services.

The MRFR team's foremost objective is to provide the best-quality market research and intelligence services to our clients. Our market research studies, organized by products, services, technologies, applications, end users, and market players for global, regional, and country-level market segments, enable our clients to see more, know more, and do more, which helps answer their most important questions.

In order to stay up to date with the technology and work processes of the industry, MRFR regularly plans and conducts meetings with industry experts and industrial visits for its research analysts.

Contact

Akash Anand,

Market Research Future

Office No. 528, Amanora Chambers

Magarpatta Road, Hadapsar,

Pune – 411028

Maharashtra, India

+1 646 845 9312

0 notes

Link

How to get Accounting Jobs in Hong Kong

Are you looking for accounting jobs in Hong Kong? If so, it helps to know what can help you find the right accounting job here. Accounting is one of the most prominent work sectors in Hong Kong, and the constant need for capable candidates has made it one of the city's most popular fields. Often referred to as the language of business, because it helps in interpreting and analyzing financial statements and information, accountancy can be a key that opens the door to a multitude of professional opportunities.

Educational Qualification Required

To start a professional journey in Hong Kong as an accountant, one needs to understand the qualifications and professional norms expected in the city. To start a career in accounting here, one must hold a CPA (Certified Public Accountant) certification, which demonstrates your potential to recruiters.

For a local candidate to start a career in accounting, he or she needs to complete four modules of the accounting course:

Auditing and Information Management

Financial Reporting

Financial Management

Taxation

Each of these modules lasts 15 weeks. After every module, candidates must sit an examination; the authorities conduct a six-hour open-book examination in two sessions before finally issuing the passing certificate.

If you are a foreign professional, you must have a valid accounting degree from your home country and, if you have work experience, a valid relieving letter. A foreign national also has to undergo a short training and gain a CPA certification in order to get a job.

Skills Required for Accounting Jobs in Hong Kong:

Ability to work with advanced Excel.

Experience with enterprise resource planning (ERP) systems (e.g., SAP, Oracle).

Familiarity with big data analysis, advanced financial modelling techniques and SQL.

Understanding of business intelligence software (e.g., IBM Cognos).

Ability to work with Microsoft Visual Basic.

Familiarity with Hyperion (for analyst and financial reporting roles).

Tally.

Familiarity with financial statements.

Understanding of QuickBooks (for positions with small and midsize firms).

Database user interface and query software.

Knowledge of financial audit software such as Brentmark Estate Planning, Delphi, RSM McGladrey Auditor Assistant and Oracle E-Business Suite Financials.

Hands-on experience with taxation software such as BNA Income Tax Planning, ATX Total Tax Office, Intuit Lacerte and IntelliMax Classic.

Ability to work with accounting software such as Intuit, fund accounting software and Sage 50 Accounting.

Work Visa Requirement for Foreign Professionals to Take-Up Accounting Jobs in Hong Kong

To gain a work visa in Hong Kong, it is crucial to have a job offer in hand. Once you have one, your employer will apply for your work visa. The documents required for this are as follows:

Valid passport with at least 6 months validity.

Employment visa application form (fully filled).

Job offer letter.

Recent passport sized photographs (2 to 3).

Travel Insurance.

Health Insurance.

Once you have the work visa, you can legally enter the city and work until the visa expires. When the expiry date is near, your employer can file for renewal 6 months prior to the expiry date.

Salary One Can Expect as an Accounting Professional in Hong Kong:

As an entry-level accountant with less than 1 year of work experience, one can expect to earn an average total salary of HK$145,000.

At the early-career stage, with 1-4 years of work experience, an accountant can make an average total pay of HK$264,957.

At mid-career, with 5-9 years of work experience, an accountant can earn an average total pay of HK$291,695.

As an experienced accountant with 10-19 years of work experience, one can earn an average total salary of HK$297,349.

In their late career, with 20 or more years of work experience, employees earn an average total pay of HK$360,000.

So, if you want to take up an accounting job in Hong Kong, use this article to understand the requirements of the job and build a great career.

Author’s Bio

Nikhil Mishra is a marketing communications professional with Monster.com. Monster is a global online employment solution for people seeking jobs and the employers who need great people. Nikhil has had a diverse career as a journalist, copywriter and communications professional. His passion for the field of marketing and communications is a motivating force for his present career choice.

0 notes

Text

I did it my way. (With a Little Help from My Friends)

‘I planned each charted course / Each careful step along the byway / And more, much more than this / I did it my way’ - Sinatra

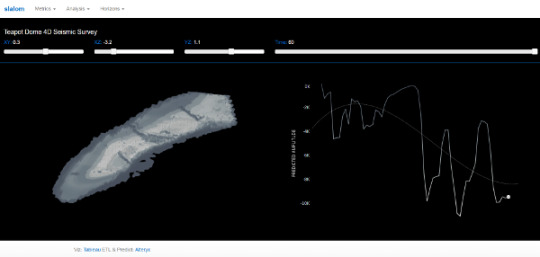

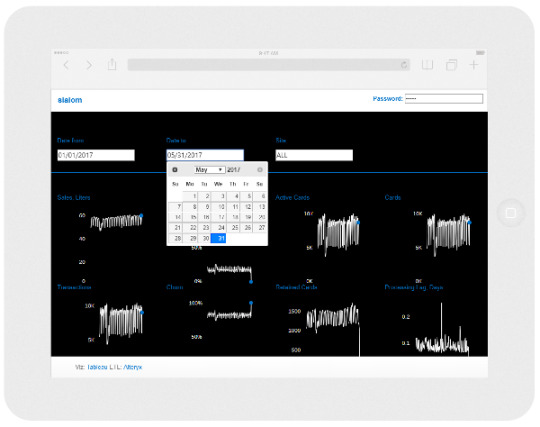

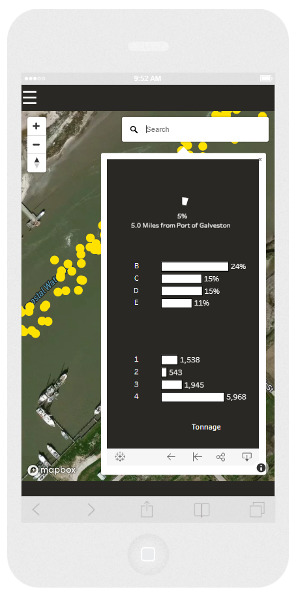

Over the last three years, I’ve built out some proof-of-concept data visualization applications for some large scale Enterprise clients, across a multitude of vertical markets.

These have included, in no particular order:

Semiconductor manufacturing

Wearable technology manufacturing

Pharmaceutical distribution

Financial

Oil & Gas

Retail

Consumer Hardware & Software

Mobile Communications

Energy Utility

Without exception, every Enterprise client presented similar challenges - namely, how to visually represent data at scale in an insightful and actionable format.

Here is my methodology.

I adopted a Data strategy:

Data as a service,

ETL as a service,

Data Science as a service, and

Data Visualization as a service.

Data as a Service (DaaS)

Data Acquisition

Technology is arguably making it easier and relatively cheaper to acquire data in a more automated manner, increasing the volume and velocity of data produced.

SCADA (supervisory control and data acquisition) devices, bank ATMs, merchant credit card swipe terminals, website forms, and sensors such as infra-red, radar and sonar, even composing a tweet... all are examples of data acquisition.

With more and more IoT (Internet of Things) devices becoming available, automation of data collection is becoming even more universal and ubiquitous.

Data Storage

If a record has a time-stamp, it can be recognized as an event, or a transaction; i.e. something happened at this time, on this day, in this month, in this year. These records are (normally) stored in a database.

That was my bread and butter, making sense of events that have happened - or, what was happening in (near) real-time. In recent engagements, it’s the latter that seemed to be more pervasive - sustaining ‘live’ data connections that are capable of very fast refresh rates - sometimes on a per second basis (but more often than not, updated daily).
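The idea of a time-stamped record as an event can be illustrated with a minimal Python sketch. The record layout, the `kind` field and the `events_per_day` helper are hypothetical, chosen only for illustration; a real engagement would read these rows from a database rather than from an in-memory list.

```python
from collections import Counter
from datetime import datetime


def events_per_day(records):
    """Count how many events happened on each calendar day."""
    counts = Counter()
    for record in records:
        # Parse the ISO 8601 time-stamp that marks when the event happened.
        happened_at = datetime.fromisoformat(record["timestamp"])
        counts[happened_at.date().isoformat()] += 1
    return dict(counts)


# Hypothetical sample events; field names and values are assumptions.
records = [
    {"timestamp": "2018-03-01T09:15:00", "kind": "card_swipe"},
    {"timestamp": "2018-03-01T17:42:10", "kind": "atm_withdrawal"},
    {"timestamp": "2018-03-02T08:00:05", "kind": "sensor_reading"},
]

daily = events_per_day(records)
print(daily)
```

Grouping by day mirrors the daily refresh described above; a near-real-time view would group by a finer unit, such as the second or the minute.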

Data as a Service at the Enterprise meant I’d be connecting to a “Data Lake” such as Hadoop/Hive, a Teradata warehouse on-premise database, or a cloud database like Redshift on the Amazon Web Services platform.

Alternatively (or sometimes additionally), I’d be connecting to ‘NoSQL’ databases like Mongo and Cassandra, while location data was held in GIS (Geographic Information System) databases like PostGIS or ESRI.

There were also databases that are designed to take advantage of in-memory technologies, and are suited to analytical applications, such as SAP HANA, MemSQL, and Snowflake.

My preferred solution for the foundation of a Data as a Service based architecture is Exasol, because it is proven to be capable of performing analytical tasks at scale, leveraging massively parallel processing and in-memory technologies, enabling rapid responses to intensive queries over massive data sets.

ETL (Extract, Transform, Load) as a Service

‘Extracting’ reads data from a source database (and potentially multiple other sources), ‘Transforming’ is the process of converting this data (joining, unions, performing calculations, cleansing and aggregating) and ‘Loading’ writes to the target database (or writing back to the source).
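The three steps can be sketched in a few lines of Python. This is only an illustration of the extract, transform, load pattern, not the Alteryx workflow described in this post: the standard-library `sqlite3` module stands in for the source and target databases, and the table names `raw_sales` and `sales_by_region` are hypothetical.

```python
import sqlite3


def extract(conn):
    """Extract: read raw rows from the source table."""
    return conn.execute("SELECT region, amount FROM raw_sales").fetchall()


def transform(rows):
    """Transform: cleanse the rows and aggregate a total per region."""
    totals = {}
    for region, amount in rows:
        if amount is None:
            continue  # cleansing: skip rows with a missing amount
        key = region.strip().upper()  # cleansing: normalise region names
        totals[key] = totals.get(key, 0.0) + float(amount)
    return sorted(totals.items())


def load(conn, rows):
    """Load: write the shaped rows to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region "
        "(region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", rows)
    conn.commit()


# Hypothetical source data, held in an in-memory database for the example.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE raw_sales (region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [(" emea ", 10.0), ("EMEA", 5.5), ("apac", None), ("APAC", 7.0)],
)

target = sqlite3.connect(":memory:")
load(target, transform(extract(source)))

result = target.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
print(result)
```

Keeping each step as its own function is what makes the process repeatable: the same `transform` can be re-run on a schedule against fresh extracts without touching the source or target code.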

Business Intelligence applications such as Tableau, Qlik, and MicroStrategy often require data to be ‘shaped’ or ‘structured’ in a certain way, usually in a columnar format.

This used to be an arduous task - involving writing batch scripts - but no longer. There are a plethora of enterprise ETL solutions available such as AWS Glue, Apache Kafka and Informatica.

My preferred solution for the basis of an ETL as a Service based architecture is Alteryx, because it is proven to be capable of extracting data from multiple sources - including Hadoop/Hive, Mongo, ESRI and Exasol.

Using an intuitive drag-and-drop GUI (Graphical User Interface), it is possible to develop a repeatable, re-usable data transformation as an automated process (also known as a workflow) that can be run on a scheduled basis.

Data Science as a Service

Traditionally, Enterprises would refer complex analytical and statistical tasks such as predicting, modelling, forecasting and so forth to highly skilled data scientists.