#computer audio software

Text

I am, like, really enthusiastic about floating-point numbers. Idk. I think it's just because they are needlessly hated on by some software developers who never learned the details of the IEEE standard and seem to think floats encounter rounding errors at random. I guess I just like to be counterculture like that. I saw that Audacity said my encoding was 32-bit float and I was like "hell yeah, 24 bits of precision (23 explicit, 1 implicit), 8-bit exponent, and 1 sign bit? You go, digital audio workstation!"
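If you want to see that layout for yourself, here's a minimal sketch that pulls a 32-bit float apart into its fields. It assumes the standard IEEE 754 single-precision layout (1 sign bit, 8 exponent bits, 23 stored fraction bits, with the 24th significand bit implied). The helper names `float32_fields` and `round_trip_float32` are made up for illustration; only Python's built-in `struct` module is used.

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, biased_exponent, fraction) for x stored as a 32-bit float."""
    # Pack as big-endian float32, then reinterpret the same 4 bytes as an unsigned int.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF       # 23 explicitly stored bits
    return sign, exponent, fraction


def round_trip_float32(x: float) -> float:
    """Round x to the nearest 32-bit float and return it as a Python float."""
    (y,) = struct.unpack(">f", struct.pack(">f", x))
    return y


print(float32_fields(1.0))    # sign 0, biased exponent 127, fraction 0
print(float32_fields(-0.5))   # sign 1, biased exponent 126, fraction 0

# 24 bits of precision means every integer up to 2**24 is exact,
# but 2**24 + 1 has no float32 of its own and rounds to a neighbour.
print(round_trip_float32(2.0**24 + 1))
```

The last line is the non-random part: the rounding happens exactly where the 24-bit significand runs out, every time.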

#float#floating point#32 bit#DAW#digital audio workstation#audio#number#numerical#digital audio#software#computing#technology#counterculture#IEEE

4 notes


Text

finally captured some recordings i'm happy with <3 rest of tonight is gonna be for decorating + scanning in the zine and linking downloads for the audio files

#btw transferring songs to computer is sooooo easy w this keyboard. my old casio would store songs in this weird proprietary audio format#that NOTHING could read. so you were just stuck. i had to find some reddit person who made software allowing you to convert the casio files#to midi#but w this current keyboard it saves shit as .wav and all you gotta do is hit 'copy' and it saves it to the flash drive in like 5 seconds#and i was able to play that shit on my computer immediately. so now all i gotta do is convert the wav to mp3

8 notes


Text

in other news i used a different computer to record a podfic and the quality is so much worse but i do Not want to do it again so. >:(

#BETRAYED!!!! BETRAYED BY MY OWN SOFTWARE!!!!!#i dont understand how this happened my settings are the same its the same mic its the same program#but the computer is different. so i guess its just gonna sound like shit now.#>:( >:( >:( I HAVE TO USE THIS COMPUTER TO EDIT FOR FUCKS SAKE BUT I CANT RECORD ON IT????#for context: headphones wont connect to my main laptop anymore. and bluetooth audio editing makes me want to leap of faith into a sidewalk.#so now i have to. 1) set up original laptop to record. 2) record. 3) get files to 2nd laptop. 4) set up 2nd laptop for editing. 5) export.#fuck me man i wouldnt even have this little bitch if my brother didnt ditch it but i guess its my responsibility now.#i hate this baby i dont even vibe w it :/

6 notes


Text

if i hear another person talk about a retro game, system, or computer having a specific "soundfont" i think i'm gonna lose all will to live and spontaneously drop dead in front of them

#juney.txt#not how it works besties#older 8 bit machines generally had bespoke synthesizer chips that could output some square waves and some noise.#maybe some sine and saw waves if you were lucky#the megadrive had a yamaha chip in it but sega the bastards never gave devs proper instructions on how to use it#so a lotttt of devs just used the inbuilt settings on the development software they had#so a lot of megadrive games sound similar even though the system was technically capable of a lot more </3#the snes was allllll samples all day#any sounds you wanted you had to hand to the chip in advance#no inbuilt synths for you#anyway in all of these cases music data was just stored as raw computer instructions.#literally just code telling the audio hardware what to do each frame#no midis involved and simply slapping a soundfont someone made on a midi file someone else made does not constitute an ''8-bit'' cover

12 notes


Text

singing is HARD, guys. especially when you’re trying to turn it into something 😭 garageband don’t fail me now

#silly nonsense#just rambling#no but seriously#anyone know of better audio editing software that’s free?#cause fuck#i’m not good at computer things lol

4 notes


Text

Me: yay hurray I’m done with college I’m graduating!

Adobe: you don’t get our products for free anymore

Me: OH SHIT

#browniefox speaks#anyway if anyone has audio recording recs#tell me#I also could use a video editing one#but I edit mostly on my sisters computer and she’s still a student#my laptop is a refurb and has something up with it’s like#visual processor or something?#idk I’m not a computer guy#I just know it hates video editing software

7 notes


Text

after literally spending hours today on it, I now have a highish-quality version of the complete first My Hero Academia stage play. I'm going to go insane

#first i couldn't figure out how to download the video i found on the internet and had to get a workaround#the download kept failing so every few minutes for literally like an hour and a half i had to keep telling it to retry#i get that download and it's way lower quality than the original video i downloaded#i figure out if i just screen record the video i get a higher quality recording#but this video is 2.5 hours so i have to figure out if i can turn the volume down on my computer and still record the audio#(I can thank god)#i leave that going for a little while#get back to it 2.5 hours later#realize the screen recordings have a max length of 2 hours#start recording from half an hour back#eventually i have 2 recordings that have the entirety of the screenplay#it's been like 10 hours since i started though to be fair i did take a 3 hour break to watch the queen's gambit with my mom#i open up the video in editing software#my computer decides i don't have enough storage so i have to clear a bunch of stuff#i realize that the video title has japanese characters in it is interfering with the editing software#i rename the files#i recreate the video#i restitch them together seamlessly and clear the excess at the beginning and end#at some point i try to separate the audio and video on the software bc that's something it can do#realize this is a huge mistake bc the audio quality goes WAY down#get distracted by izuku's actor's singing during bakugou and shouto's fight during the sports fest#bc hot DAMN he can SING#i have no idea what he's saying but i am having FEELINGS#that's my favorite song#anyway. now i have it. i have the video#it's exporting now

3 notes


Text

much like jesus himself, dan just wants to spread the Good Word

(that we should all be vers spinning)

unfortunately i do need you all to watch this 2 minute clip of dan talking about showering facing frontwards vs facing backwards which is also him talking about fucking which is also once again Daniel Howell Sharing Too Much About His Sex Life

Instagram Live - October 7, 2022

#one of his finest yaps I love him so much#also i need to confess#this was the first video I think I posted on here#and I didn’t know iPhones could screen record with audio#and I didn’t know how to screen record on my laptop either#so I just used the record feature of QuickTime in my macbook but that records the screen along with whatever audio the laptop mic picks up#which means the sound you hear is the audio that was coming out of the tinny computer speakers as well as my macbook fan working overtime#because that was literally what I thought was my best possible option#like i figured people who make edits and post clips must’ve had some special software#so sorry mortifying. the captions are great though! the hustle was there!#anyway fantastic iconic top tier dan clip#dan howell#dan and phil

644 notes


Text

Revolutionizing Sound: The Laptop Studio and the Future of Electronic Music Production

Key Insights

Research highlights that modern electronic music, powered by laptops and software, has revolutionized the creative landscape. Tools like DAWs, AI, and cloud platforms are driving greater experimentation and collaboration among producers. While technology reshapes possibilities, there’s still a debate on its effect on the authenticity of artistic expression.

The Laptop Studio…

#affordable music studio#beat making#bedroom producer#computer music production#creating music on a laptop#DAW#digital audio workstation#DIY music production#electronic music artists#electronic music creation#electronic music production#home studio#independent music production#laptop music studio setup#laptop studio#modern music production#music production equipment#music production essentials#music production for beginners#music production gear#music production gear for beginners#music production process#music production setup#music production software#music production techniques#music production tips#music production tools#music production tutorials#music production workflow#portable music production

0 notes

Text

What computer audio really means!

OK, so I realise that this may not be, for others, the revelation that I have had today. I’ve realised that I can quite easily and relatively cheaply create a ‘studio quality’ home audio system that will give me the functions of my current orthodox hi-fi system, but using a PC and upgradable/updatable software at the heart, as a virtual studio control centre, not merely as an additional file…

View On WordPress

0 notes

Text

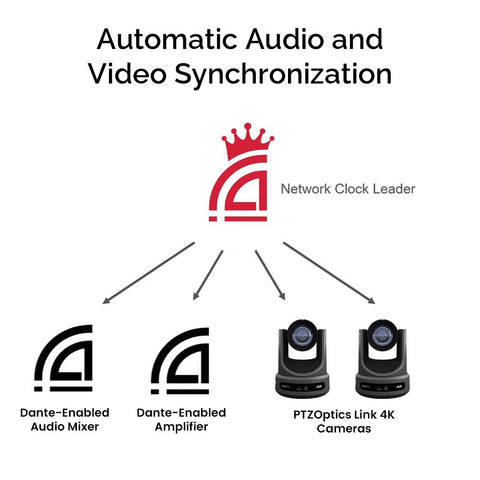

Exclusive Discount on DanteAV PTZ Cameras - Videoguys

New Post has been published on https://thedigitalinsider.com/exclusive-discount-on-danteav-ptz-cameras-videoguys/


Save $500 on PTZOptics Link 4K PTZ Cameras

Your Dante Workflow is About to Get Easier

Ready to add video to your Dante setup? With Dante AV-H™ and the latest in auto-tracking capabilities, the Link 4K fits easily into even the most complex Dante design or workflow. The Link 4K combines broadcast quality with Dante’s simple discovery, set up and management, simplifying and professionalizing any video production installation.

What is Dante AV-H?

Dante AV-H brings device interoperability and the power of Dante to H.26x endpoints. With Dante AV-H, all video and audio routing, configuration, and management of devices is done with one easy to use platform. The Link 4K will automatically sync up with your Dante system clock leader ensuring seamless audio and video synchronization, and is able to output Dante AV-H video on your network natively, along with audio sources connected to the 3.5mm audio input.

Designed with the Future in Mind

Every Link 4K camera features SDI, HDMI, USB, and IP output for unparalleled performance and versatility. The Link 4K features 4K at 60fps over HDMI, USB, and IP. Using technology by Dante, the Link 4K can also provide 4K at 60fps resolution over Dante AV-H. The Link 4K offers built-in auto-tracking capabilities, with no need to run software on another computer, freeing teams of the need for a camera operator.

PTZOptics also makes a line of PTZ Cameras with NDI|HX

Now with NDI|HX license included!

PTZ and auto-tracking features, now more accessible. Combining HDMI, SDI, USB, and IP outputs into one camera, with native NDI® support coming soon. Perfect for live streaming and video production, offering high-quality 1080p60fps resolution & excellent low-light performance thanks to SONY CMOS sensors.

starting at $999.00

Featuring auto-tracking for a more intelligent video production workflow. The Move 4K is capable of 4K at 60fps (1080p at 60fps over SDI), future-proofing your technology investment while still accommodating HD and Full HD video equipment. The Move 4K offers high performance in low-light, PoE+ capabilities, & a built-in tally light.

starting at $1,799.00

#4K#amp#audio#Cameras#computer#Design#devices#easy#endpoints#equipment#Features#Full#Future#hdmi#investment#Light#Link#management#network#One#performance#platform#power#Production#PTZOptics#resolution#sensors#setup#Software#sony

0 notes

Text

Recording Great Guitar Tones on a Budget

We show you some great free guitar software that you can use to get great tones.

In this Recording Great Guitar Tones on a Budget article, we will discuss some ways to get great recorded guitar tones without spending a fortune: huge guitar tones, with massive amounts of sustain and a sound that will make your guitar stand out from the pack.

Recording Great Guitar Tones on a Budget

For the first part of this series, I’m going to share some links to free…

View On WordPress

#4-Track tape recorder#Ableton Live Lite#amp#amplifier#analog#analogue#Apple#AU#Audacity#Audio Unit#budget#compression#computer#Cubase LE#DAW#Digital#effects#EQ#free software#Garageband#Guitar#Guitar Player 6#Guitar Tone#How to Record Great Rock Guitar Tones on a Budget#Les Paul#MAc#magnetic tape#metal#microphone#MPC Beats

1 note


Text

my toxic trait is that maybe i have the fuck it we ball compilation and therefore all related WIPS written down in notepad ++ bc it's very lightweight, has a solid autosave feature and tabs. but at least when i push my computer too far by having 5 tabs of 2 month's worth of tumblr archives while processing a video file and photoshop open bc i got distracted halfway through a meme on top of all the other non fob stuff that i refuse to close i lose no progress.

#small mercy to my computer: I dont compose music so the audio editing software only opens for memes and venetian snares cat pictures#one day i prommy i will just write a fanfic in jupyter notebook just to say i've done that

1 note


Text

Anthropic's stated "AI timelines" seem wildly aggressive to me.

As far as I can tell, they are now saying that by 2028 – and possibly even by 2027, or late 2026 – something they call "powerful AI" will exist.

And by "powerful AI," they mean... this (source, emphasis mine):

In terms of pure intelligence, it is smarter than a Nobel Prize winner across most relevant fields – biology, programming, math, engineering, writing, etc. This means it can prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc. In addition to just being a “smart thing you talk to”, it has all the “interfaces” available to a human working virtually, including text, audio, video, mouse and keyboard control, and internet access. It can engage in any actions, communications, or remote operations enabled by this interface, including taking actions on the internet, taking or giving directions to humans, ordering materials, directing experiments, watching videos, making videos, and so on. It does all of these tasks with, again, a skill exceeding that of the most capable humans in the world. It does not just passively answer questions; instead, it can be given tasks that take hours, days, or weeks to complete, and then goes off and does those tasks autonomously, in the way a smart employee would, asking for clarification as necessary. It does not have a physical embodiment (other than living on a computer screen), but it can control existing physical tools, robots, or laboratory equipment through a computer; in theory it could even design robots or equipment for itself to use. The resources used to train the model can be repurposed to run millions of instances of it (this matches projected cluster sizes by ~2027), and the model can absorb information and generate actions at roughly 10x-100x human speed. It may however be limited by the response time of the physical world or of software it interacts with. Each of these million copies can act independently on unrelated tasks, or if needed can all work together in the same way humans would collaborate, perhaps with different subpopulations fine-tuned to be especially good at particular tasks.

In the post I'm quoting, Amodei is coy about the timeline for this stuff, saying only that

I think it could come as early as 2026, though there are also ways it could take much longer. But for the purposes of this essay, I’d like to put these issues aside [...]

However, other official communications from Anthropic have been more specific. Most notable is their recent OSTP submission, which states (emphasis in original):

Based on current research trajectories, we anticipate that powerful AI systems could emerge as soon as late 2026 or 2027 [...] Powerful AI technology will be built during this Administration. [i.e. the current Trump administration -nost]

See also here, where Jack Clark says (my emphasis):

People underrate how significant and fast-moving AI progress is. We have this notion that in late 2026, or early 2027, powerful AI systems will be built that will have intellectual capabilities that match or exceed Nobel Prize winners. They’ll have the ability to navigate all of the interfaces… [Clark goes on, mentioning some of the other tenets of "powerful AI" as in other Anthropic communications -nost]

----

To be clear, extremely short timelines like these are not unique to Anthropic.

Miles Brundage (ex-OpenAI) says something similar, albeit less specific, in this post. And Daniel Kokotajlo (also ex-OpenAI) has held views like this for a long time now.

Even Sam Altman himself has said similar things (though in much, much vaguer terms, both on the content of the deliverable and the timeline).

Still, Anthropic's statements are unique in being

- official positions of the company

- extremely specific and ambitious about the details

- extremely aggressive about the timing, even by the standards of "short timelines" AI prognosticators in the same social cluster

Re: ambition, note that the definition of "powerful AI" seems almost the opposite of what you'd come up with if you were trying to make a confident forecast of something.

Often people will talk about "AI capable of transforming the world economy" or something more like that, leaving room for the AI in question to do that in one of several ways, or to do so while still failing at some important things.

But instead, Anthropic's definition is a big conjunctive list of "it'll be able to do this and that and this other thing and...", and each individual capability is defined in the most aggressive possible way, too! Not just "good enough at science to be extremely useful for scientists," but "smarter than a Nobel Prize winner," across "most relevant fields" (whatever that means). And not just good at science but also able to "write extremely good novels" (note that we have a long way to go on that front, and I get the feeling that people at AI labs don't appreciate the extent of the gap [cf]). Not only can it use a computer interface, it can use every computer interface; not only can it use them competently, but it can do so better than the best humans in the world. And all of that is in the first two paragraphs – there's four more paragraphs I haven't even touched in this little summary!

Re: timing, they have even shorter timelines than Kokotajlo these days, which is remarkable since he's historically been considered "the guy with the really short timelines." (See here where Kokotajlo states a median prediction of 2028 for "AGI," by which he means something less impressive than "powerful AI"; he expects something close to the "powerful AI" vision ["ASI"] ~1 year or so after "AGI" arrives.)

----

I, uh, really do not think this is going to happen in "late 2026 or 2027."

Or even by the end of this presidential administration, for that matter.

I can imagine it happening within my lifetime – which is wild and scary and marvelous. But in 1.5 years?!

The confusing thing is, I am very familiar with the kinds of arguments that "short timelines" people make, and I still find Anthropic's timelines hard to fathom.

Above, I mentioned that Anthropic has shorter timelines than Daniel Kokotajlo, who "merely" expects the same sort of thing in 2029 or so. This probably seems like hairsplitting – from the perspective of your average person not in these circles, both of these predictions look basically identical, "absurdly good godlike sci-fi AI coming absurdly soon." What difference does an extra year or two make, right?

But it's salient to me, because I've been reading Kokotajlo for years now, and I feel like I basically understand his case. And people, including me, tend to push back on him in the "no, that's too soon" direction. I've read many many blog posts and discussions over the years about this sort of thing, I feel like I should have a handle on what the short-timelines case is.

But even if you accept all the arguments evinced over the years by Daniel "Short Timelines" Kokotajlo, even if you grant all the premises he assumes and some people don't – that still doesn't get you all the way to the Anthropic timeline!

To give a very brief, very inadequate summary, the standard "short timelines argument" right now is like:

Over the next few years we will see a "growth spurt" in the amount of computing power ("compute") used for the largest LLM training runs. This factor of production has been largely stagnant since GPT-4 in 2023, for various reasons, but new clusters are getting built and the metaphorical car will get moving again soon. (See here)

By convention, each "GPT number" uses ~100x as much training compute as the last one. GPT-3 used ~100x as much as GPT-2, and GPT-4 used ~100x as much as GPT-3 (i.e. ~10,000x as much as GPT-2).

We are just now starting to see "~10x GPT-4 compute" models (like Grok 3 and GPT-4.5). In the next few years we will get to "~100x GPT-4 compute" models, and by 2030 we will reach ~10,000x GPT-4 compute.

If you think intuitively about "how much GPT-4 improved upon GPT-3 (100x less) or GPT-2 (10,000x less)," you can maybe convince yourself that these near-future models will be super-smart in ways that are difficult to precisely state/imagine from our vantage point. (GPT-4 was way smarter than GPT-2; it's hard to know what "projecting that forward" would mean, concretely, but it sure does sound like something pretty special)

Meanwhile, all kinds of (arguably) complementary research is going on, like allowing models to "think" for longer amounts of time, giving them GUI interfaces, etc.

All that being said, there's still a big intuitive gap between "ChatGPT, but it's much smarter under the hood" and anything like "powerful AI." But...

...the LLMs are getting good enough that they can write pretty good code, and they're getting better over time. And depending on how you interpret the evidence, you may be able to convince yourself that they're also swiftly getting better at other tasks involved in AI development, like "research engineering." So maybe you don't need to get all the way yourself, you just need to build an AI that's a good enough AI developer that it improves your AIs faster than you can, and then those AIs are even better developers, etc. etc. (People in this social cluster are really keen on the importance of exponential growth, which is generally a good trait to have but IMO it shades into "we need to kick off exponential growth and it'll somehow do the rest because it's all-powerful" in this case.)
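To make the "GPT number" arithmetic in the summary above concrete, here's a rough sketch. It assumes nothing beyond the informal convention stated there (each whole GPT number is ~100x the training compute of the previous one); the function name `gpt_number_equivalent` is hypothetical, and the result is a back-of-the-envelope label, not a measure of capability.

```python
import math


def gpt_number_equivalent(compute_multiple_of_gpt4: float) -> float:
    """Map training compute (as a multiple of GPT-4's) to an informal 'GPT number'.

    Assumes the convention that each whole GPT number corresponds to ~100x
    more training compute, so the number grows by log base 100 of the multiple.
    """
    # log base 100 of x is log10(x) / 2.
    return 4 + math.log10(compute_multiple_of_gpt4) / 2


for multiple in (1, 10, 100, 10_000):
    print(f"~{multiple:,}x GPT-4 compute -> 'GPT-{gpt_number_equivalent(multiple):g}'")
```

Under that convention the "~10x" models sit half a step past GPT-4, "~100x" is one full step, and "~10,000x" is two full steps, i.e. the same size of jump as GPT-2 to GPT-4.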

And like, I have various disagreements with this picture.

For one thing, the "10x" models we're getting now don't seem especially impressive – there has been a lot of debate over this of course, but reportedly these models were disappointing to their own developers, who expected scaling to work wonders (using the kind of intuitive reasoning mentioned above) and got less than they hoped for.

And (in light of that) I think it's double-counting to talk about the wonders of scaling and then talk about reasoning, computer GUI use, etc. as complementary accelerating factors – those things are just table stakes at this point, the models are already maxing out the tasks you had defined previously, you've gotta give them something new to do or else they'll just sit there wasting GPUs when a smaller model would have sufficed.

And I think we're already at a point where nuances of UX and "character writing" and so forth are more of a limiting factor than intelligence. It's not a lack of "intelligence" that gives us superficially dazzling but vapid "eyeball kick" prose, or voice assistants that are deeply uncomfortable to actually talk to, or (I claim) "AI agents" that get stuck in loops and confuse themselves, or any of that.

We are still stuck in the "Helpful, Harmless, Honest Assistant" chatbot paradigm – no one has seriously broken with it since Anthropic introduced it in a paper in 2021 – and now that paradigm is showing its limits. ("Reasoning" was strapped onto this paradigm in a simple and fairly awkward way, the new "reasoning" models are still chatbots like this, no one is actually doing anything else.) And instead of "okay, let's invent something better," the plan seems to be "let's just scale up these assistant chatbots and try to get them to self-improve, and they'll figure it out." I won't try to explain why in this post (IYI I kind of tried to here) but I really doubt these helpful/harmless guys can bootstrap their way into winning all the Nobel Prizes.

----

All that stuff I just said – that's where I differ from the usual "short timelines" people, from Kokotajlo and co.

But OK, let's say that for the sake of argument, I'm wrong and they're right. It still seems like a pretty tough squeeze to get to "powerful AI" on time, doesn't it?

In the OSTP submission, Anthropic presents their latest release as evidence of their authority to speak on the topic:

In February 2025, we released Claude 3.7 Sonnet, which is by many performance benchmarks the most powerful and capable commercially-available AI system in the world.

I've used Claude 3.7 Sonnet quite a bit. It is indeed really good, by the standards of these sorts of things!

But it is, of course, very very far from "powerful AI." So like, what is the fine-grained timeline even supposed to look like? When do the many, many milestones get crossed? If they're going to have "powerful AI" in early 2027, where exactly are they in mid-2026? At end-of-year 2025?

If I assume that absolutely everything goes splendidly well with no unexpected obstacles – and remember, we are talking about automating all human intellectual labor and all tasks done by humans on computers, but sure, whatever – then maybe we get the really impressive next-gen models later this year or early next year... and maybe they're suddenly good at all the stuff that has been tough for LLMs thus far (the "10x" models already released show little sign of this but sure, whatever)... and then we finally get into the self-improvement loop in earnest, and then... what?

They figure out how to squeeze even more performance out of the GPUs? They think of really smart experiments to run on the cluster? Where are they going to get all the missing information about how to do every single job on earth, the tacit knowledge, the stuff that's not in any web scrape anywhere but locked up in human minds and inaccessible private data stores? Is an experiment designed by a helpful-chatbot AI going to finally crack the problem of giving chatbots the taste to "write extremely good novels," when that taste is precisely what "helpful-chatbot AIs" lack?

I guess the boring answer is that this is all just hype – tech CEO acts like tech CEO, news at 11. (But I don't feel like that can be the full story here, somehow.)

And the scary answer is that there's some secret Anthropic private info that makes this all more plausible. (But I doubt that too – cf. Brundage's claim that there are no more secrets like that now, the short-timelines cards are all on the table.)

It just does not make sense to me. And (as you can probably tell) I find it very frustrating that these guys are out there talking about how human thought will basically be obsolete in a few years, and pontificating about how to find new sources of meaning in life and stuff, without actually laying out an argument that their vision – which would be the common concern of all of us, if it were indeed on the horizon – is actually likely to occur on the timescale they propose.

It would be less frustrating if I were being asked to simply take it on faith, or explicitly on the basis of corporate secret knowledge. But no, the claim is not that, it's something more like "now, now, I know this must sound far-fetched to the layman, but if you really understand 'scaling laws' and 'exponential growth,' and you appreciate the way that pretraining will be scaled up soon, then it's simply obvious that –"

No! Fuck that! I've read the papers you're talking about, I know all the arguments you're handwaving-in-the-direction-of! It still doesn't add up!

280 notes


Text

Ok no, I have to get it out of my system, Conrad is bad at podcasting. And just recording anything in general.

The sound padding is doing absolutely nothing. Sure, they’ve got it slapped on the walls, but it’s off to the side. Not behind them. Not in front of them. Just... chilling. Which is useless. You want that padding facing the source of the sound so it can catch the waves before they start bouncing all over the place.

Since both Ruby and Conrad are facing forward with the padding off to the side, her voice is bouncing off the wall behind him and hitting his mic again a split second later. That would be making the audio sound muddy and hollow. Having the foam off to the side like that is like putting a bandage next to your cut and wondering why you’re still bleeding.

Right now, the foam is just there for vibes. Bad vibes.

And who told this man to record in a glass box? Glass is terrible for audio. Everything bounces. Nothing gets absorbed. It turns your voice into a pinball machine. You could have a thousand-dollar mic and it would still sound like you’re talking inside a fishbowl. Plus, that room looks like it’s in the middle of an office space? Why, Conrad, you amoeba-brained sycophant, would you record anything there ever?? The background noise alone would be hell on Earth to try to edit out.

Pop filter and foam windscreen (mic cover)??? Both are designed to reduce plosive sounds—like "p" and "b"—by dispersing the air before it hits the microphone diaphragm. While it’s not wrong to use both, it’s redundant unless you're outdoors or in a particularly plosive-heavy environment. Stacking them can even dull the audio a bit.

Your mic doesn’t need two hats. Calm down.

Not an audio note but a soft box light in the shot?? No. Just no. They should be behind the camera, pointing at you. Or at least off to the side, not pointing directly down the middle. And what really gets me? There are windows. Real, working windows with actual sunlight. And what did Conrad do? He covered them with that useless sound padding. So now it’s badly lit and echoey.

He blocked out free, natural light to keep in the bad sound.

"But what if the sun’s there right when he’s trying to record?" some might say. That’s why curtains exist. And the soft box would still be in a bad spot.

Also, his camera audio is peaking like crazy. Even if he's not using the camera mic for the final cut, it’s still useless to record it like this. You know that Xbox early Halo/COD mic sound? That’s what this would sound like.

This happens when the input gain is too high, causing the audio to clip. Basically, the mic can’t handle it and the sound gets distorted. Ideally, you want your audio levels to peak in the yellow zone, around -12 dB to -6 dB. Not constantly slamming into the red at 0 dB. That’s reserved for 13-year-old prepubescents cursing you out for ruining their kill streak. And that’s it.
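For the numbers-minded, here's a small sketch of what "peaking at -12 to -6 dB" versus "slamming into 0 dB" means on a digital meter. It assumes samples are already normalized floats in the range -1.0 to 1.0, where full scale (1.0) reads as 0 dB on the meter; the function names are made up for illustration and aren't from any particular DAW or library.

```python
import math


def peak_db(samples: list[float]) -> float:
    """Peak level in dB relative to full scale (1.0 reads as 0 dB)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return float("-inf")  # pure digital silence
    return 20 * math.log10(peak)


def is_clipping(samples: list[float], full_scale: float = 1.0) -> bool:
    """True if any sample has hit (or passed) full scale."""
    return any(abs(s) >= full_scale for s in samples)


healthy = [0.1, -0.35, 0.5, -0.2]    # loudest sample is half of full scale
too_hot = [0.9, 1.0, -1.0, 1.0]      # gain too high, samples pinned at the limit

print(round(peak_db(healthy), 1), is_clipping(healthy))
print(round(peak_db(too_hot), 1), is_clipping(too_hot))
```

A peak of half of full scale lands at about -6 dB, which is right at the top of the comfortable zone; anything pinned at full scale has nowhere left to go, and that flattening is the distortion you hear.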

On top of that, both the left and right channels on the camera audio look identical, meaning the audio’s been merged into a single mono track. Which isn’t wrong for speech, but it kills any sense of space or direction. For dynamic audio, especially in a two-person setup, you don’t want everything crammed into one lane. (OR they’re both just peaking at the same time continuously, even when they’re not talking, which means it’s picking up background noise at a level so loud it’s pushing the mic into clipping.)
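If you had the actual file instead of a blurry screenshot of the meters, checking the "identical channels means mono" guess would look something like this sketch. It assumes the left and right channels have already been decoded into two lists of normalized float samples; `is_dual_mono` is a hypothetical helper name, and the tolerance parameter is my own addition to allow for tiny rounding differences.

```python
def is_dual_mono(left: list[float], right: list[float], tolerance: float = 0.0) -> bool:
    """True if both channels carry the same signal, sample for sample."""
    if len(left) != len(right):
        return False
    return all(abs(l - r) <= tolerance for l, r in zip(left, right))


same_signal = [0.2, -0.4, 0.1]
print(is_dual_mono(same_signal, list(same_signal)))    # identical channels
print(is_dual_mono([0.2, -0.4, 0.1], [0.1, -0.4, 0.3]))  # real stereo difference
```

Note that this only distinguishes the first explanation from true stereo. Two channels that are both pinned at the limit would also look identical on a meter, so the clipping possibility needs its own check.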

And to make things worse, the little "LIVE" tag in the bottom corner implies this is a livestream. But there doesn’t seem to be any livestream software open on his laptop, so I’m assuming there’s either a second offscreen computer handling the stream, or it’s hooked up to broadcast natively.

Either way, unless those mics are also connected to the camera or that other computer, that peaky, crunchy camera audio is what people are actually hearing.

Finally... it really helps if you hit the record button. He’s just playing back audio. I think that’s more of a “show” thing, but still.

(look I got a fancy degree in this stuff and I have to use it somehow)

#as a sound designer this scene gave me a headache#Ok now back to my usual schedule of silly memes and text posts#also It's blurry so it's hard to tell#but I'm pretty sure that bitch using GarageBand to record his propaganda nonsense.#Like you can shell out for multiple hyper realistic Hollywood quality costumes of a creature you saw once#but you can't afford Pro Tools? 🙄#Doctor Who#Doctor Who lucky day#lucky day#Doctor Who spoilers#15th doctor#fifteenth doctor#doctor who#doctor who spoilers#dw spoilers#spoilers#doctorwho#the doctor#dw s2 e4#sound design

317 notes
