#container network interface azure


The Most In-Demand Cybersecurity Skills Students Should Learn

The digital world is expanding at an unprecedented pace, and with it, the landscape of cyber threats is becoming increasingly complex and challenging. For students considering a career path with immense growth potential and global relevance, cybersecurity stands out. However, simply entering the field isn't enough; to truly succeed and stand out in 2025's competitive job market, mastering the right skills is crucial.

The demand for skilled cybersecurity professionals far outweighs the supply, creating a significant talent gap and offering bright prospects for those with the in-demand expertise. But what skills should students focus on learning right now to land those coveted entry-level positions and build a strong career foundation?

While foundational IT knowledge is always valuable, here are some of the most essential and sought-after cybersecurity skills students should prioritize in 2025:

Core Technical Foundations: The Bedrock

Before specializing, a solid understanding of fundamental technical concepts is non-negotiable.

Networking: Learn how networks function, including protocols (TCP/IP, HTTP, DNS), network architecture, and common networking devices (routers, switches, firewalls). Understanding how data flows is key to understanding how it can be attacked and defended.

Operating Systems: Gain proficiency in the major operating systems, especially Linux and Windows, along with a basic understanding of mobile OS security (Android, iOS), since threats target all of these environments. Familiarity with command-line interfaces is essential.

Programming and Scripting: While not every role requires deep programming knowledge, proficiency in languages like Python or PowerShell is highly valuable. These skills are crucial for automating tasks, analyzing malware, developing security tools, and writing scripts for security assessments.

Cloud Security: Securing the Digital Frontier

As businesses rapidly migrate to the cloud, securing cloud environments has become a top priority, making cloud security skills immensely in-demand.

Understanding Cloud Platforms: Learn the security models and services offered by major cloud providers like AWS, Azure, and Google Cloud Platform.

Cloud Security Concepts: Focus on concepts like Identity and Access Management (IAM) in the cloud, cloud security posture management (CSPM), data encryption in cloud storage, and securing cloud networks.

Threat Detection, Response, and Analysis: On the Front Lines

Organizations need professionals who can identify malicious activity, respond effectively, and understand the threat landscape.

Security Operations Center (SOC) Skills: Learn how to monitor security alerts, use Security Information and Event Management (SIEM) tools, and analyze logs to detect potential incidents.

Incident Response: Understand the phases of incident response – preparation, identification, containment, eradication, recovery, and lessons learned. Practical knowledge of how to act during a breach is critical.

Digital Forensics: Develop skills in collecting and analyzing digital evidence to understand how an attack occurred, crucial for incident investigation.

Threat Intelligence: Learn how to gather, analyze, and interpret threat intelligence to stay informed about the latest attack methods, threat actors, and vulnerabilities.
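The log-analysis side of SOC work can be illustrated with a small example. The minimal Python sketch below counts failed SSH logins per source IP and flags noisy sources. It assumes OpenSSH-style `Failed password ... from <ip> port <n>` lines. The function name `suspicious_ips` and the threshold are hypothetical, and a real SIEM applies much richer correlation rules than this.

```python
import re
from collections import Counter

# Matches OpenSSH-style failure lines such as:
# "Failed password for invalid user admin from 203.0.113.5 port 51122 ssh2"
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?\S+ from (\S+) port \d+")


def suspicious_ips(log_lines, threshold=3):
    """Return (ip, count) pairs with at least `threshold` failed logins, most frequent first."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return [(ip, n) for ip, n in counts.most_common() if n >= threshold]


if __name__ == "__main__":
    sample = [
        "sshd[101]: Failed password for root from 203.0.113.5 port 51122 ssh2",
        "sshd[102]: Failed password for invalid user admin from 203.0.113.5 port 51123 ssh2",
        "sshd[103]: Failed password for root from 203.0.113.5 port 51124 ssh2",
        "sshd[104]: Accepted password for alice from 198.51.100.7 port 40000 ssh2",
        "sshd[105]: Failed password for bob from 198.51.100.7 port 40001 ssh2",
    ]
    print(suspicious_ips(sample))  # [('203.0.113.5', 3)]
```

Successful logins are ignored on purpose. In practice you would also correlate failures with a later success from the same address, which often signals a guessed password.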

Offensive Security Fundamentals: Thinking Like an Attacker

Understanding how attackers operate is vital for building effective defenses.

Vulnerability Assessment: Learn how to identify weaknesses in systems, applications, and networks using various tools and techniques.

Introduction to Penetration Testing (Ethical Hacking): While entry-level roles may not be full-fledged penetration testers, understanding the methodology and mindset of ethical hacking is invaluable for identifying security gaps proactively.

Identity and Access Management (IAM): Controlling the Gates

Controlling who has access to what resources is fundamental to security.

IAM Principles: Understand concepts like authentication, authorization, single sign-on (SSO), and access controls.

Multi-Factor Authentication (MFA): Learn how MFA works and its importance in preventing unauthorized access.
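Many authenticator apps generate MFA codes with the TOTP algorithm (RFC 6238). TOTP runs the HMAC-based HOTP algorithm (RFC 4226) over the current 30-second time window. The sketch below uses only the Python standard library. The function names and the one-step clock-drift window are illustrative choices. Production systems should use a vetted library and store the shared secret securely.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks a 4-byte slice
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at=None, window: int = 1) -> bool:
    """Accept codes from adjacent 30 s steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + k * 30), submitted)
        for k in range(-window, window + 1)
    )


if __name__ == "__main__":
    # RFC 6238 test vector: SHA-1 secret, T = 59 s, 8 digits.
    print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

The server and the user's device share only the secret. Each side derives the same short-lived code from the clock, so a stolen password alone is not enough to log in.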

Data Security and Privacy: Protecting Sensitive Information

With increasing data breaches and evolving regulations, skills in data protection are highly sought after.

Data Encryption: Understand encryption techniques and how to apply them to protect data at rest and in transit.

Data Protection Regulations: Familiarize yourself with key data protection laws and frameworks, such as the EU's General Data Protection Regulation (GDPR), which affects organizations worldwide, as compliance is a major concern for businesses.

Automation and AI in Security: The Future is Now

Understanding how technology is used to enhance security operations is becoming increasingly important.

Security Automation: Learn how automation can be used to streamline repetitive security tasks, improve response times, and enhance efficiency.

Understanding AI's Impact: Be aware of how Artificial Intelligence (AI) and Machine Learning (ML) are being used in cybersecurity, both by defenders for threat detection and by attackers for more sophisticated attacks.

Soft Skills: The Underrated Essentials

Technical skills are only part of the equation. Strong soft skills are vital for success in any cybersecurity role.

Communication: Clearly articulate technical concepts and risks to both technical and non-technical audiences. Effective written and verbal communication is paramount.

Problem-Solving and Critical Thinking: Analyze complex situations, identify root causes, and develop creative solutions to security challenges.

Adaptability and Continuous Learning: The cybersecurity landscape changes constantly. A willingness and ability to learn new technologies, threats, and techniques are crucial for staying relevant.

How Students Can Acquire These Skills

Students have numerous avenues to develop these in-demand skills:

Formal Education: University degrees in cybersecurity or related fields provide a strong theoretical foundation.

Online Courses and Specializations: Online learning platforms offer specialized courses and certifications focused on specific cybersecurity domains and tools.

Industry Certifications: Entry-level certifications like CompTIA Security+ or vendor-specific cloud security certifications can validate your knowledge and demonstrate commitment to potential employers.

Hands-on Labs and Personal Projects: Practical experience is invaluable. Utilize virtual labs, build a home lab, participate in Capture The Flag (CTF) challenges, and work on personal security projects.

Internships: Gaining real-world experience through internships is an excellent way to apply your skills and build your professional network.

Conclusion

The cybersecurity field offers immense opportunities for students in 2025. By strategically focusing on acquiring these in-demand technical and soft skills, staying current with threat trends, and gaining practical experience, students can position themselves for a successful and rewarding career safeguarding the digital world. The demand is high, the impact is significant, and the time to start learning is now.


Intel MPI Library: Reliable & Scalable Cluster Communication

Flexible, efficient, and scalable cluster messaging from the Intel MPI Library.

Single Library for Multiple Fabrics

The Intel MPI Library is a multifabric message-passing library that implements the open-source MPICH specification. Use it to develop, maintain, and test complex applications that run faster on Intel-based and compatible HPC clusters.

Create applications that can run on multiple cluster interconnects chosen at runtime.

Quickly deliver maximum end-user performance without changing the software or operating environment.

Achieve the best latency, bandwidth, and scalability through automatic tuning.

Reduce time to market by linking to one library and deploying on the latest optimised fabrics.

Toolkit download

The Intel oneAPI HPC Toolkit includes the Intel MPI Library, along with tools to analyse, optimise, and scale applications.

Download the standalone version

Download the Intel MPI Library on its own, either from your preferred repository or as binaries from Intel.

Features

OpenFabrics Interface (OFI) Support

OFI is an optimised framework that exposes communication services to HPC applications. Its key components include APIs, provider libraries, kernel services, daemons, and test programs.

The Intel MPI Library uses OFI to handle all communications.

This enables a more streamlined path from application code to data communications.

It allows fabrics to be tuned at runtime through simple environment variables, including network-level features such as multirail for higher bandwidth.

It provides optimal performance on large-scale systems based on Cornelis Networks Omni-Path and Mellanox InfiniBand.

Results include higher throughput, decreased latency, simpler program architecture, and common communication infrastructure.

Scalability

The library implements the high-performance MPI 3.1 and MPI 4.0 standards on multiple fabrics. This lets you quickly optimise application performance without changing the operating system or the application, even when you switch interconnects.

Thread safety lets you trace hybrid multithreaded MPI applications for optimal performance on Intel multicore and manycore platforms.

The mpiexec.hydra process manager improves startup scalability and launches concurrent processes.

It natively supports SSH, RSH, PBS, Slurm, and SGE.

It integrates with Google Cloud Platform, Microsoft Azure, and Amazon Web Services.
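A launch on a Slurm cluster might be scripted along these lines. This Python sketch only assembles the command line and environment for `mpiexec.hydra`. `-n`, `-ppn`, and `-bootstrap` are Hydra options. `I_MPI_FABRICS=shm:ofi` and `I_MPI_OFI_PROVIDER` are Intel MPI environment variables as we understand them from the Developer Reference, so check them against your installed version. The helper names, the application path, and the provider name are hypothetical.

```python
import os
import shlex
import subprocess


def hydra_launch(app, ranks, ranks_per_node, bootstrap="ssh", provider=None, app_args=()):
    """Return (argv, env) for launching `app` with Intel MPI's Hydra process manager."""
    if ranks < 1 or ranks_per_node < 1:
        raise ValueError("ranks and ranks_per_node must be positive")
    env = dict(os.environ)
    # Shared memory inside a node, OFI (libfabric) between nodes.
    env["I_MPI_FABRICS"] = "shm:ofi"
    if provider is not None:
        # Pin one OFI provider, e.g. "tcp"; which providers exist depends on the system.
        env["I_MPI_OFI_PROVIDER"] = provider
    argv = ["mpiexec.hydra", "-bootstrap", bootstrap,
            "-n", str(ranks), "-ppn", str(ranks_per_node), app, *app_args]
    return argv, env


def run(argv, env):
    """Start the job for real (requires an Intel MPI installation on PATH)."""
    return subprocess.run(argv, env=env, check=True)


if __name__ == "__main__":
    argv, env = hydra_launch("./app", ranks=128, ranks_per_node=64, bootstrap="slurm")
    print(shlex.join(argv))  # mpiexec.hydra -bootstrap slurm -n 128 -ppn 64 ./app
```

The fabric is selected through the environment rather than in the application, so switching interconnects changes only `env`, not the MPI code.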

Performance and Tuning Tools

Two new enhancements optimise app performance.

Interconnect Independence

The library offers an accelerated, universal, multifabric layer for fast OFI interconnects with the following configurations:

TCP sockets

Shared memory

Ethernet and InfiniBand, based on RDMA

Connections are created dynamically, only when needed, which reduces the memory footprint. The library automatically chooses the fastest available transport.

Write your MPI code without regard to the fabric, knowing it will run on whatever network you specify at runtime.

Two-phase communication buffer enlargement allocates only as much memory as is needed.

Application Binary Interface Compatibility

Applications use application binary interfaces (ABIs) to communicate between software components. An ABI governs how functions are invoked and the size, layout, and alignment of data types. ABI-compatible applications follow the same runtime naming conventions.

The Intel MPI Library provides ABI compatibility with MPI-1.x and MPI-2.x programs. Even if you are not ready to move to MPI 3.1 and 4.0, you can use the library's runtimes without recompiling and still get its speed improvements.

The Intel MPI Benchmarks measure MPI performance for point-to-point and global communication across a range of message sizes. Run all of the supported benchmarks, or specify a single executable file on the command line to get results for a particular subset.

The benchmark data fully describes:

Throughput, network latency, and node performance of cluster systems

Efficiency of MPI implementation

The library's comprehensive default settings are designed for good performance, and you can leave them as is. If you want to tune for a specific cluster or application, use mpitune, and keep adjusting the parameters until you obtain the best results.

Intel MPI Library Developer Guide for Windows OS

MPI Tuning: the Intel MPI Library supports the autotuner.

Autotuner

Autotuning can improve the performance of applications that make heavy use of MPI collective operations. The autotuner is simple to use and has low overhead.

The autotuner utility tunes the algorithms used for MPI collective operations, which are otherwise selected through the I_MPI_ADJUST_ family of environment variables. Tuning is limited to the current cluster configuration (fabric, number of ranks, and number of ranks per node). Enabling the autotuner while the application runs can improve its performance. You can also generate a new tuning file containing the collective-operation settings suited to the application and supply it through the I_MPI_TUNING_BIN environment variable.
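The two-phase workflow described above (tune once, then reuse the tuning file) can be expressed as two environment configurations. In this sketch, `I_MPI_TUNING_BIN` comes from the text. `I_MPI_TUNING_MODE=auto` and `I_MPI_TUNING_BIN_DUMP` are the autotuner variables as we recall them from the Intel MPI documentation, so verify them for your release. The helper name and file path are hypothetical.

```python
TUNING_KEYS = ("I_MPI_TUNING_MODE", "I_MPI_TUNING_BIN_DUMP", "I_MPI_TUNING_BIN")


def tuning_env(phase, tuning_file, base=None):
    """Return environment settings for the 'tune' or 'run' phase of autotuning."""
    env = dict(base or {})
    # Clear any tuning variables first so the two phases never leak into each other.
    for key in TUNING_KEYS:
        env.pop(key, None)
    if phase == "tune":
        env["I_MPI_TUNING_MODE"] = "auto"           # assumed: enables the autotuner
        env["I_MPI_TUNING_BIN_DUMP"] = tuning_file  # assumed: where tuned settings are written
    elif phase == "run":
        env["I_MPI_TUNING_BIN"] = tuning_file       # load the previously tuned settings
    else:
        raise ValueError(f"unknown phase: {phase!r}")
    return env


if __name__ == "__main__":
    for phase in ("tune", "run"):
        settings = tuning_env(phase, "./tuning_results.dat")
        print(phase, " ".join(f"{k}={v}" for k, v in settings.items()))
```

Pass the result (merged with `os.environ`) as `env=` to `subprocess.run` when starting the tuning run and, later, the production runs.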


Cloud Computing Mastery: How It Works, Best Practices & Future Trends

Introduction

Cloud computing eliminates the need for expensive on-premises infrastructure and offers flexible, on-demand resources through the Internet. From small businesses to large enterprises, cloud computing enhances efficiency, reduces costs, and provides scalability. As organizations strive to keep up with technological advancements, understanding cloud computing’s key concepts, benefits, deployment models, service types, and future trends is essential.

What is Cloud Computing?

Cloud computing refers to the delivery of computing services—including storage, servers, networking, databases, analytics, and software—over the internet. Instead of maintaining physical data centers, users can rent computing power from cloud providers, allowing businesses to focus on their core operations without worrying about infrastructure management.

A key concept behind cloud computing is virtualization, where physical resources are abstracted and delivered as scalable services. Cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer various services tailored to different business needs.

How Cloud Computing Works

Cloud computing operates through a network of remote servers that process and store data, allowing users to access applications and services on demand. These servers are housed in data centers worldwide, which supports high availability and disaster recovery.

Some key components of cloud computing include:

Virtual Machines (VMs): Software-emulated computers that let multiple users and workloads share the resources of a single physical server.

Containers: Lightweight, portable environments for running applications efficiently.

APIs (Application Programming Interfaces): Enable applications to communicate with cloud services.

Key Benefits of Cloud Computing

Organizations are rapidly adopting cloud computing due to its numerous advantages. Here are some of the key benefits:

1. Cost Efficiency

Cloud computing eliminates the need for purchasing and maintaining expensive hardware and software. Businesses can pay for only the resources they use, following a pay-as-you-go or subscription-based pricing model. This reduces capital expenditures and allows companies to allocate budgets more effectively.

2. Scalability and Flexibility

With cloud computing, organizations can easily scale their resources up or down based on demand. This is particularly beneficial for businesses experiencing seasonal spikes in traffic or rapid growth, as they can adjust their computing power accordingly without investing in new infrastructure.
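Automatic scaling is often driven by a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric). The 0.1 tolerance band mirrors the HPA default and prevents flapping around the target. The function name, utilization figures, and instance bounds are illustrative assumptions.

```python
import math


def desired_instances(current, current_util, target_util,
                      min_instances=1, max_instances=20, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    if current < 1 or target_util <= 0:
        raise ValueError("need at least one instance and a positive target")
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current                    # close enough to target: leave it alone
    desired = math.ceil(current * ratio)  # scale in proportion to the load
    return max(min_instances, min(max_instances, desired))


if __name__ == "__main__":
    print(desired_instances(4, 90, 60))  # 6  (traffic spike: scale out)
    print(desired_instances(4, 20, 60))  # 2  (quiet period: scale in)
```

The upper bound matters for cost control. Without it, a runaway metric could scale resources far beyond what the budget allows.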

3. Accessibility and Mobility

Cloud-based applications enable users to access data from anywhere with an internet connection. This promotes remote work, collaboration, and real-time data sharing, which is crucial for businesses operating globally.

4. Security and Data Backup

Leading cloud providers implement advanced security measures, including encryption, multi-factor authentication, and AI-driven threat detection. Additionally, cloud computing offers built-in disaster recovery solutions, ensuring business continuity in the event of system failures or cyberattacks.

5. Automatic Updates and Maintenance

Cloud providers handle software updates, security patches, and system maintenance, reducing the burden on IT teams. This allows businesses to focus on innovation rather than infrastructure management.

Examples of cloud-based applications:

Google Workspace (Docs, Drive, Gmail) – Enables online collaboration.

Microsoft 365 (Word, Excel, Outlook) – Cloud-based office applications.

Dropbox – Cloud storage and file-sharing service.

Deployment Models of Cloud Computing

Cloud computing deployment models define how services are hosted and managed:

1. Public Cloud

Owned by third-party providers and shared among multiple users.

Cost-effective but offers limited customization.

Examples: AWS, Google Cloud, Microsoft Azure.

2. Private Cloud

Dedicated to a single organization, offering greater security and control.

Suitable for businesses handling sensitive data, such as banking and healthcare.

Examples: IBM Cloud Private, VMware Cloud.

3. Hybrid Cloud

A combination of public and private clouds, allowing businesses to balance performance, security, and cost-effectiveness.

Keeps sensitive data and operations in the private cloud while taking advantage of public cloud scalability.

Examples: AWS hybrid services (such as AWS Outposts), Google Anthos.

4. Multi-Cloud

Utilizes multiple cloud providers to avoid vendor lock-in and enhance reliability.

Ensures redundancy and disaster recovery.

Example: A company using both AWS and Azure for different workloads.

Future Trends in Cloud Computing

As cloud technology continues to evolve, several trends are shaping its future:

1. Edge Computing

Bringing computing closer to data sources to reduce latency and improve efficiency. This is crucial for IoT devices and real-time applications like autonomous vehicles.

2. Serverless Computing

Developers can run applications without managing underlying infrastructure, reducing operational complexity. AWS Lambda and Azure Functions are leading in this space.

3. AI and Machine Learning Integration

Cloud platforms are incorporating AI-driven analytics and automation, improving decision-making and enhancing productivity. Google AI and Azure AI are major players.

4. Quantum Computing

Emerging as a revolutionary field, quantum computing in the cloud aims to solve complex problems faster than traditional computing. IBM Quantum and Amazon Braket are pioneers.

5. Enhanced Cloud Security

With increasing cyber threats, cloud providers are implementing zero-trust security models, AI-powered threat detection, and advanced encryption techniques.



RHEL 8.8: A Powerful and Secure Enterprise Linux Solution

Red Hat Enterprise Linux (RHEL) 8.8 is an advanced and stable operating system designed for modern enterprise environments. It builds upon the strengths of its predecessors, offering improved security, performance, and flexibility for businesses that rely on Linux-based infrastructure. With seamless integration into cloud and hybrid computing environments, RHEL 8.8 provides enterprises with the reliability they need for mission-critical workloads.

One of the key enhancements in RHEL 8.8 is its optimized performance across different hardware architectures. The Linux kernel has been further refined to support the latest processors, storage technologies, and networking hardware. These RHEL 8.8 improvements result in reduced system latency, faster processing speeds, and better efficiency for demanding applications.

Security remains a top priority in RHEL 8.8. This release includes enhanced system-wide cryptographic policies and supports current security standards such as TLS 1.3, provided through OpenSSL 1.1.1. Additionally, SELinux (Security-Enhanced Linux) enforces mandatory access controls that prevent unauthorized modifications and help maintain system integrity. These security features make RHEL 8.8 a strong choice for organizations that prioritize data protection.
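The same preference for modern protocols can also be enforced inside an application. This Python sketch (Python 3.7 or later) uses the standard `ssl` module to build a client context that verifies certificates and refuses anything older than TLS 1.3. It illustrates the idea at the application level and does not replace RHEL's system-wide crypto policies. The function names are hypothetical.

```python
import socket
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS context: certificate and hostname verification, TLS 1.3 only."""
    ctx = ssl.create_default_context()  # loads system CA certificates, verification on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host and report the negotiated protocol (needs network access)."""
    ctx = strict_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()  # e.g. "TLSv1.3"
```

If a server only offers TLS 1.2 or older, the handshake in `negotiated_version` fails with an `ssl.SSLError` rather than silently downgrading.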

RHEL 8.8 continues to enhance package management with DNF (Dandified YUM), a more efficient and secure package manager that simplifies software installation, updates, and dependency management. Application Streams allow multiple versions of software packages to coexist on a single system, giving developers and administrators the flexibility to choose the best software versions for their needs.

The growing importance of containerization is reflected in RHEL 8.8’s strong support for containerized applications. Podman, Buildah, and Skopeo are included, allowing businesses to build, run, and manage containers securely without a long-running container daemon. Podman’s rootless container support further strengthens security by reducing the risks associated with privileged container execution.

Virtualization capabilities in RHEL 8.8 have also been refined. The integration of Kernel-based Virtual Machine (KVM) and QEMU ensures that enterprises can efficiently deploy and manage virtualized workloads. The Cockpit web interface provides an intuitive dashboard for administrators to monitor and control virtual machines, making virtualization management more accessible.

For businesses operating in cloud environments, RHEL 8.8 seamlessly integrates with leading cloud platforms, including AWS, Azure, and Google Cloud. Optimized RHEL images ensure smooth deployments, reducing compatibility issues and providing a consistent operating experience across hybrid and multi-cloud infrastructures.

Networking improvements in RHEL 8.8 further enhance system performance and reliability. The updated NetworkManager simplifies network configuration, while enhancements to IPv6 and high-speed networking interfaces ensure that businesses can handle increased data traffic with minimal latency.

Storage management in RHEL 8.8 is more robust, with support for Stratis (a Technology Preview in RHEL 8), a local storage management solution that simplifies creating and maintaining storage pools and file systems. Enterprises can take advantage of XFS, ext4, and LVM (Logical Volume Manager) for scalable and flexible storage. Improvements to disk encryption and snapshot management further protect sensitive business data.

Automation is a core focus of RHEL 8.8, with built-in support for Ansible, allowing IT teams to automate configurations, software deployments, and system updates. This reduces manual workload, minimizes errors, and improves system efficiency, making enterprise IT management more streamlined.

Monitoring and diagnostics tools in RHEL 8.8 are also improved. Performance Co-Pilot (PCP) gives administrators real-time insight into system performance, and Tuned applies performance profiles, helping administrators identify bottlenecks and optimize configurations for maximum efficiency.

Developers benefit from RHEL 8.8’s comprehensive development environment, which includes languages and runtimes such as Python 3, Node.js, Go, and Ruby. Newer GCC (GNU Compiler Collection) versions, available through GCC Toolset, support a wide range of applications and frameworks. Additionally, enhancements to the web console provide a more user-friendly administrative experience.

One of the standout features of RHEL 8.8 is its long-term support and enterprise-grade lifecycle management. Red Hat provides extended security updates, regular patches, and dedicated technical support, ensuring that businesses can maintain a stable and secure operating environment for years to come. Red Hat Insights, a predictive analytics tool, helps organizations proactively detect and resolve system issues before they cause disruptions.

In conclusion, RHEL 8.8 is a powerful, secure, and reliable Linux distribution tailored for enterprise needs. Its improvements in security, containerization, cloud integration, automation, and performance monitoring make it a top choice for businesses that require a stable and efficient operating system. Whether deployed on physical servers, virtual machines, or cloud environments, RHEL 8.8 delivers the performance, security, and flexibility that modern enterprises demand.


Why Red Hat OpenShift?

In today's fast-paced technology landscape, enterprises need a robust, scalable, and secure platform to manage containerized applications efficiently. Red Hat OpenShift has emerged as a leading Kubernetes-based platform designed to empower organizations with seamless application development, deployment, and management. But why should businesses choose Red Hat OpenShift over other alternatives? Let’s explore its key advantages.

1. Enterprise-Grade Kubernetes

While Kubernetes is an excellent open-source container orchestration platform, running and managing it in production environments can be complex. OpenShift simplifies Kubernetes operations by offering:

Automated installation and upgrades

Built-in security features

Enterprise support from Red Hat

2. Enhanced Developer Productivity

OpenShift provides a developer-friendly environment with integrated tools for continuous integration and continuous deployment (CI/CD). Features such as:

Source-to-Image (S2I) for automated container builds

Developer consoles with an intuitive interface

OpenShift Pipelines for streamlined CI/CD workflows

These features help teams accelerate software delivery without deep Kubernetes expertise.

3. Security and Compliance

Security is a top priority for enterprises, and OpenShift comes with built-in security measures, including:

Role-Based Access Control (RBAC) for fine-grained permissions

Image scanning and policy enforcement

Secure container runtimes with SELinux integration

This makes OpenShift a strong choice for businesses that must comply with industry regulations.
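RBAC rules in OpenShift are ordinary Kubernetes `Role` and `RoleBinding` objects. The Python sketch below generates a read-only role for one namespace and binds it to a single user. The role, namespace, and user names are hypothetical. The printed JSON could be saved to a file and applied with `oc apply -f`.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(name, namespace, resources=("pods", "services")):
    """A namespaced Role that can only read the listed core-API resources."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],                  # "" selects the core API group
            "resources": list(resources),
            "verbs": ["get", "list", "watch"],  # no create, update, or delete
        }],
    }


def bind_role_to_user(role_name, namespace, user):
    """A RoleBinding granting role_name to one user within namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [read_only_role("pod-reader", "team-a"),
                  bind_role_to_user("pod-reader", "team-a", "alice")],
    }
    print(json.dumps(manifest, indent=2))
```

Keeping the verbs to read-only operations is the fine-grained part. Write access would be granted through a separate role, so each permission can be reviewed on its own.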

4. Hybrid and Multi-Cloud Flexibility

OpenShift is designed to run seamlessly across on-premises data centers, private clouds, and public cloud providers like AWS, Azure, and Google Cloud. This flexibility allows businesses to:

Avoid vendor lock-in

Maintain a consistent deployment experience

Optimize workloads across different environments

5. Integrated DevOps and GitOps

With OpenShift, teams can adopt modern DevOps and GitOps practices effortlessly. OpenShift GitOps (based on Argo CD) enables organizations to manage infrastructure and applications declaratively using Git repositories, ensuring:

Version-controlled deployments

Automated rollbacks and updates

Enhanced collaboration between Dev and Ops teams
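In OpenShift GitOps, each deployment tracked from Git is described by an Argo CD `Application` resource. This sketch builds one as a Python dictionary. The repository URL, path, and target namespace are hypothetical, and `openshift-gitops` is assumed to be the namespace of the default Argo CD instance. With automated sync, prune, and self-heal enabled, a rollback is simply a revert commit in Git.

```python
import json


def argo_application(name, repo_url, path, dest_namespace, revision="HEAD",
                     argocd_namespace="openshift-gitops"):
    """Describe an Argo CD Application that keeps dest_namespace in sync with Git."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": argocd_namespace},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "path": path, "targetRevision": revision},
            "destination": {
                "server": "https://kubernetes.default.svc",  # the cluster Argo CD runs in
                "namespace": dest_namespace,
            },
            "syncPolicy": {
                # prune: delete resources removed from Git; selfHeal: undo manual drift
                "automated": {"prune": True, "selfHeal": True},
            },
        },
    }


if __name__ == "__main__":
    app = argo_application("storefront",
                           "https://git.example.com/acme/storefront-config.git",
                           "overlays/prod", "storefront")
    print(json.dumps(app, indent=2))
```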

6. Streamlined Application Modernization

Organizations looking to modernize legacy applications can leverage OpenShift’s built-in support for:

Microservices architectures

Serverless computing (Knative integration)

Service mesh (Istio) for advanced networking and observability

Conclusion

Red Hat OpenShift is not just another Kubernetes distribution; it’s a complete enterprise Kubernetes platform that simplifies deployment, enhances security, and fosters innovation. Whether you're a startup or a large enterprise, OpenShift provides the scalability, automation, and support needed to thrive in a cloud-native world.

Are you ready to leverage OpenShift for your business? Contact us at HawkStack Technologies to explore how we can help you with your OpenShift journey!

For more details www.hawkstack.com


Serverless Computing and Its Role in Building Future-Ready Applications

The digital era demands applications that are agile, scalable, and cost-effective to meet the rapidly changing needs of users. Serverless computing has emerged as a transformative approach, empowering developers to focus on innovation without worrying about infrastructure management.

In this blog, we’ll delve into serverless computing, the trends shaping its adoption, and how Cloudtopiaa is preparing to integrate this game-changing technology to help businesses build future-ready applications.

What is Serverless Computing?

Serverless computing doesn’t mean there are no servers involved — it means developers no longer have to manage them. With serverless architecture, cloud providers handle the backend operations, such as provisioning, scaling, and maintenance.

Key characteristics of serverless computing include:

On-Demand Scalability: Resources are allocated dynamically based on application requirements.

Pay-as-You-Go Pricing: Businesses are billed only for the exact resources consumed during runtime.

Developer-Centric Approach: Developers can focus on code and features, leaving infrastructure management to the cloud provider.

Popular services like AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions have paved the way for serverless adoption.
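A serverless function can be very little code. Here is a minimal Python handler in the `handler(event, context)` form that AWS Lambda uses. It assumes an API Gateway proxy-style event, where `queryStringParameters` may be missing or null. The greeting logic is purely illustrative. Provisioning, scaling, and per-invocation billing are handled by the platform, not by this code.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting for an API Gateway proxy-style request."""
    params = event.get("queryStringParameters") or {}  # may be absent or null
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-written event; context is unused here.
    response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
    print(response["body"])  # {"message": "Hello, Ada!"}
```

Because the handler is a plain function, it can be unit-tested locally like this before being deployed.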

Why Serverless is the Future of Application Development

1. Increased Agility for Developers

By removing the burden of infrastructure management, serverless computing enables developers to focus on building features and deploying updates faster.

2. Cost Efficiency

With traditional setups, businesses often pay for idle server time. Serverless ensures that costs align directly with usage, leading to significant savings.
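Usage-based billing for functions is typically computed from GB-seconds (allocated memory × execution time) plus a per-request charge. The sketch below makes that arithmetic explicit and compares it with always-on servers. All prices passed in are placeholders, not any provider's published rates, so substitute current figures before drawing conclusions.

```python
def function_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_gb_second, price_per_million_requests):
    """Estimate one function's monthly bill under GB-second pricing."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def always_on_monthly_cost(servers, price_per_server_hour, hours=730):
    """Servers bill every hour (730 h is an average month), busy or idle."""
    return servers * price_per_server_hour * hours


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    serverless = function_monthly_cost(2_000_000, 200, 512, 0.00002, 0.25)
    fixed = always_on_monthly_cost(2, 0.05)
    print(f"serverless ~ {serverless:.2f}, always-on ~ {fixed:.2f}")
```

For low or spiky traffic the per-use model usually wins. Under sustained heavy load the comparison can flip, so the inputs matter more than the general rule.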

3. Scalability Without Complexity

Applications running on serverless architectures can automatically scale up or down based on traffic, ensuring seamless performance during peak times.

4. Environmentally Friendly

Serverless architectures optimize resource usage, reducing energy consumption and contributing to sustainability goals.

5. Future-Proof Applications

As businesses grow, serverless computing adapts, providing the flexibility and scalability required for long-term success.

Emerging Trends in Serverless Computing

Multi-Cloud and Hybrid Deployments: Businesses are adopting serverless models across multiple cloud providers or integrating them with on-premises systems.

Event-Driven Architectures: Serverless is becoming the backbone for event-driven systems that process real-time data, such as IoT and analytics applications.

AI and Machine Learning Workflows: Developers are leveraging serverless functions to power AI/ML models with reduced costs and faster processing times.

Container Integration: Serverless is blending with container technologies like Kubernetes to provide even greater flexibility.

How Cloudtopiaa is Preparing for the Serverless Revolution

At Cloudtopiaa, we believe serverless computing is essential for building future-ready applications. Here’s how we’re integrating serverless into our ecosystem:

1. Simplified Serverless Deployments

We’re developing tools that make it seamless for businesses to deploy serverless applications without requiring extensive expertise.

2. Flexible Workflows

Cloudtopiaa’s serverless solutions will support a wide range of use cases, from APIs and microservices to real-time data processing.

3. Cost-Effective Pricing Models

Our pay-as-you-go serverless infrastructure ensures businesses only pay for the resources they consume, maximizing ROI.

4. Developer-Centric Tools

We’re focused on providing user-friendly interfaces, SDKs, and APIs that allow developers to launch serverless functions efficiently.

5. Integration with Cloudtopiaa’s Ecosystem

Serverless computing will be fully integrated with our existing services, including compute instances, storage, and networking, providing a unified platform for all your needs.

Use Cases for Serverless Computing with Cloudtopiaa

E-Commerce: Handle high-traffic events like flash sales by automatically scaling serverless functions.

IoT Applications: Process real-time sensor data efficiently without worrying about backend scaling.

Content Delivery: Optimize media streaming and image processing pipelines for better user experiences.

AI/ML Workflows: Run model inference tasks cost-effectively using serverless functions.

Why Businesses Should Embrace Serverless Now

Adopting serverless computing offers immediate and long-term benefits, such as:

Faster time-to-market for new applications.

Simplified scaling for unpredictable workloads.

Lower operational costs by eliminating server management.

Businesses partnering with Cloudtopiaa for serverless solutions gain access to cutting-edge tools and support, ensuring a smooth transition to this powerful model.

Final Thoughts

Serverless computing is more than a trend — it’s the future of application development. By enabling agility, scalability, and cost-efficiency, it empowers businesses to stay competitive in an ever-evolving landscape.

Cloudtopiaa is committed to helping businesses harness the potential of serverless computing. Stay tuned for our upcoming serverless offerings that will redefine how you build, deploy, and scale applications.

Contact us today to explore how Cloudtopiaa can support your journey into serverless architecture and beyond.

#ServerlessComputing #CloudComputing #FutureReadyApps #TechInnovation #ServerlessArchitecture #ScalableSolutions #DigitalTransformation #ModernDevelopment #CloudNativeApps #TechTrends


Exploring the Modern Data Center and SAN: A Technological Revolution

In today's fast-paced digital landscape, data is the new currency. As businesses continue to generate vast amounts of information, the need for robust, efficient, and scalable data storage solutions has never been more critical. Enter the modern data center and Storage Area Network (SAN) - two pillars that support our ever-growing demand for data management and accessibility. Designed to handle massive volumes of data seamlessly, these technologies have evolved remarkably over recent years, providing enhanced performance, security, and flexibility to organizations worldwide. Whether you're a seasoned IT professional or a tech enthusiast eager to understand these marvels of modern engineering, this blog will delve into the intricacies of contemporary data centers and SANs.


The transformation from traditional server rooms to sophisticated modern data centers is nothing short of revolutionary. At its core, a modern data center is an advanced facility designed to centralize an organization’s IT operations and equipment while ensuring optimal performance and security. Unlike their predecessors, which were tightly bound to physical hardware, today’s data centers make extensive use of virtualization. This shift lets businesses maximize resource utilization by running multiple virtual machines on a single physical server. In addition, advanced cooling systems have become integral to maintaining energy efficiency while reducing the environmental impact of operating large-scale IT infrastructure.

With cloud computing becoming mainstream, hybrid cloud environments have emerged as a pivotal aspect of modern data centers. By integrating private clouds with public cloud services like Amazon Web Services (AWS) or Microsoft Azure, organizations can enjoy seamless scalability alongside enhanced control over sensitive information. This hybrid approach not only improves operational flexibility but also optimizes costs by dynamically allocating resources based on real-time demands. Moreover, advancements in artificial intelligence (AI) are further augmenting this ecosystem through predictive analytics capabilities that enhance decision-making processes across various touchpoints within the infrastructure.

SAN technology has evolved in parallel with data centers. A SAN is a high-speed network dedicated entirely to storage devices. It gives multiple servers centralized access to storage without compromising the speed or reliability that mission-critical, latency-sensitive applications such as databases, banking transactions, and video streaming depend on. SANs have traditionally been built on Fibre Channel. More recently, iSCSI has emerged as an Ethernet-based alternative that offers similar functionality at lower cost. This has made SANs accessible even to small and medium enterprises that were previously deterred by the proprietary requirements of legacy systems.

No discussion of modernization is complete without acknowledging the role software-defined solutions play in transforming both landscapes. Software-defined data centers (SDDC) use abstraction layers to decouple services from hardware-specific dependencies. This lets administrators automate provisioning, deployment, and configuration through intuitive interfaces, which streamlines management and reduces downtime caused by human error, inconsistencies, and configuration drift. Meanwhile, software-defined storage (SDS) lets users build flexible, scalable architectures that go beyond the limits of conventional setups. It also eases integration with future-ready technologies such as hyper-converged infrastructure, containers, and edge computing.
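Configuration drift means that a system's actual state has diverged from its declared desired state. The Python sketch below shows how an automated check might report drift. It assumes configurations can be represented as flat dictionaries, and the setting names and values are hypothetical. It is an illustration of the idea, not how any particular SDDC product implements it.

```python
from typing import Any


def detect_drift(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {key: (desired_value, actual_value)} for every setting that differs.

    A key missing on either side is reported with None for the missing value.
    Keys are returned in sorted order so reports are stable between runs.
    """
    drift: dict[str, tuple[Any, Any]] = {}
    for key in sorted(desired.keys() | actual.keys()):
        want = desired.get(key)
        have = actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical host: someone changed the NTP server by hand and enabled a debug flag.
desired_state = {"ntp_server": "10.0.0.1", "mtu": 9000, "ssh_root_login": False}
actual_state = {"ntp_server": "10.0.0.99", "mtu": 9000, "ssh_root_login": False, "debug": True}

for setting, (want, have) in detect_drift(desired_state, actual_state).items():
    print(f"{setting}: desired={want!r} actual={have!r}")
```

A real tool would then either alert on this report or reapply the desired values. That reconciliation step is what the automation in an SDDC is meant to handle.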

Security remains a paramount concern as cyber threats increasingly target the valuable datasets housed in these facilities. Advanced encryption, multi-factor authentication, strict access-control policies, and related measures help protect the integrity, confidentiality, and availability of critical assets against unauthorized intrusions and breaches. Such incidents can have catastrophic consequences for business continuity, reputation, and stakeholder trust.

Conclusion:

As we move deeper into an era defined by digital transformation, where every byte matters, understanding modern data centers and SANs has become essential for enthusiasts and professionals alike. These technologies continue to redefine the foundations of global connectivity, and our reliance on them grows every day. Building the knowledge and expertise to manage, secure, and use them effectively will prove invaluable. By embracing innovation and adopting best practices, we can harness the opportunities these technologies open up.


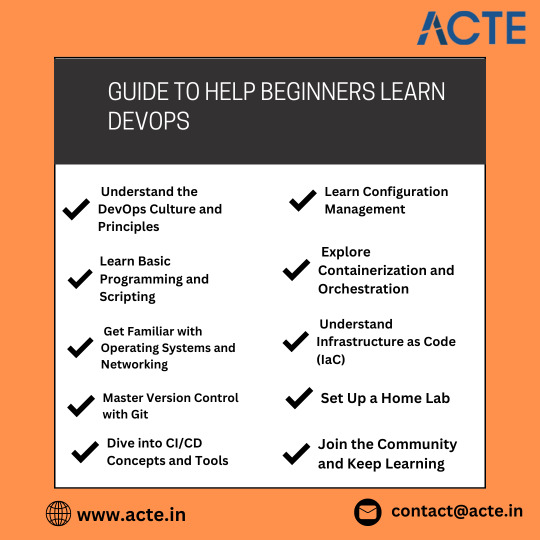


Embarking on a journey to learn DevOps can be both exciting and overwhelming for beginners. DevOps, which focuses on the integration and automation of processes between software development and IT operations, offers a dynamic and rewarding career. Here’s a comprehensive guide to help beginners navigate the path to becoming proficient in DevOps. For individuals who want to work in the sector, a respectable DevOps Training in Pune can give them the skills and information they need to succeed in this fast-paced atmosphere.

Understanding the Basics

Before diving into DevOps tools and practices, it’s crucial to understand the fundamental concepts:

1. DevOps Culture: DevOps emphasizes collaboration between development and operations teams to improve efficiency and deploy software faster. It’s not just about tools but also about fostering a culture of continuous improvement, automation, and teamwork.

2. Core Principles: Familiarize yourself with the core principles of DevOps, such as Continuous Integration (CI), Continuous Delivery (CD), Infrastructure as Code (IaC), and Monitoring and Logging. These principles are the foundation of DevOps practices.

Learning the Essentials

To build a strong foundation in DevOps, beginners should focus on acquiring knowledge in the following areas:

1. Version Control Systems: Learn how to use Git, a version control system that tracks changes in source code during software development. Platforms like GitHub and GitLab are also essential for managing repositories and collaborating with other developers.

2. Command Line Interface (CLI): Becoming comfortable with the CLI is crucial, as many DevOps tasks are performed using command-line tools. Start with basic Linux commands and gradually move on to more advanced scripting.

3. Programming and Scripting Languages: Knowledge of programming and scripting languages like Python, Ruby, and Shell scripting is valuable. These languages are often used for automation tasks and writing infrastructure code.

4. Networking and Security: Understanding basic networking concepts and security best practices is essential for managing infrastructure and ensuring the security of deployed applications.
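Here is a small example of the kind of automation task scripting is used for: a minimal Python sketch that summarizes log lines by severity. The log format (a severity word followed by a message) and the sample lines are assumptions made for illustration.

```python
from collections import Counter


def count_levels(lines: list[str]) -> Counter[str]:
    """Count log lines by their leading severity word (e.g. INFO, ERROR)."""
    levels: Counter[str] = Counter()
    for line in lines:
        parts = line.split(maxsplit=1)
        if parts:  # skip blank or whitespace-only lines
            levels[parts[0].upper()] += 1  # normalize "error" and "ERROR"
    return levels


# Hypothetical log lines in a "LEVEL message" format.
sample_log = [
    "INFO service started",
    "WARN disk usage at 85%",
    "ERROR connection to db timed out",
    "",
    "error retry failed",
]

summary = count_levels(sample_log)
print(dict(summary))  # {'INFO': 1, 'WARN': 1, 'ERROR': 2}
if summary["ERROR"] > 0:
    print(f"{summary['ERROR']} error(s) found")
```

In practice you would read the lines from a file or from a command's output. The counting logic stays the same.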

Hands-On Practice with Tools

Practical experience with DevOps tools is key to mastering DevOps practices. Here are some essential tools for beginners:

1. CI/CD Tools: Get hands-on experience with CI/CD tools like Jenkins, Travis CI, or CircleCI. These tools automate the building, testing, and deployment of applications.

2. Containerization: Learn about Docker, a platform that automates the deployment of applications in lightweight, portable containers. Understanding container orchestration tools like Kubernetes is also beneficial.

3. Configuration Management: Familiarize yourself with configuration management tools like Ansible, Chef, or Puppet. These tools automate the provisioning and management of infrastructure.

4. Cloud Platforms: Explore cloud platforms like AWS, Azure, or Google Cloud. These platforms offer various services and tools that are integral to DevOps practices. Enrolling in a DevOps online course can help individuals unlock DevOps' full potential and develop a deeper understanding of its complexities.
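Configuration management tools such as Ansible, Chef, and Puppet are built around idempotency: applying the same configuration twice changes nothing the second time. The minimal Python sketch below illustrates the idea for a single setting in a plain text file. It is not how any of those tools is implemented, and the file name and setting are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file at `path`.

    Returns True if the file was changed and False if it was already compliant,
    so running it repeatedly is safe (idempotent).
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # desired state already reached; do nothing
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


# Usage in a throwaway directory; "app.conf" is a hypothetical config file.
with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "app.conf"
    print(ensure_line(cfg, "max_connections=100"))  # True: line added
    print(ensure_line(cfg, "max_connections=100"))  # False: already present
```

Reporting "changed" versus "unchanged" mirrors how these tools summarize a run. It also lets a pipeline detect when a server had drifted from its expected configuration.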

Continuous Learning and Improvement

DevOps is a constantly evolving field, so continuous learning is essential:

1. Online Courses and Tutorials: Enroll in online courses and follow tutorials from platforms like Coursera, Udemy, and LinkedIn Learning. These resources offer structured learning paths and hands-on projects.

2. Community Involvement: Join DevOps communities, attend meetups, and participate in forums. Engaging with the community can provide valuable insights, networking opportunities, and support from experienced professionals.

3. Certification: Consider obtaining DevOps certifications, such as AWS Certified DevOps Engineer - Professional or Google Cloud Professional Cloud DevOps Engineer. Certifications can validate your skills and enhance your career prospects.

Conclusion

Learning DevOps as a beginner involves understanding its core principles, gaining hands-on experience with essential tools, and continuously improving your skills. By focusing on the basics, practicing with real-world tools, and staying engaged with the DevOps community, you can build a solid foundation and advance your career in this dynamic field. The journey may be challenging, but with persistence and dedication, you can achieve proficiency in DevOps and unlock exciting career opportunities.


Structure of a Blog

To build a modern, scalable website with a service (often called a website with a backend), you need to think about its structure in several layers: frontend, backend, database, and infrastructure. Here is an overview of the typical architecture of a website with a service:

1. Frontend (Client)

The frontend is the visible part of the site that users interact with directly. It can be built with various technologies:

HTML: Markup language for structuring web content.

CSS: Style sheets for styling HTML content.

JavaScript: Programming language for adding interactivity and dynamic behavior to the site.

Frameworks and Libraries: React, Angular, Vue.js for building dynamic, responsive user interfaces.

2. Backend (Server)

The backend is the part of the site that runs server-side logic, manages data, and responds to client requests. It can be built with various languages and frameworks:

Programming Languages: Python, JavaScript (Node.js), Java, Ruby, PHP, etc.

Frameworks: Django (Python), Flask (Python), Express (Node.js), Spring (Java), Ruby on Rails (Ruby), Laravel (PHP), etc.

APIs: Building APIs (RESTful or GraphQL) for communication between the frontend and the backend.
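The Python sketch below makes the frontend/backend API boundary concrete. It is a minimal, framework-free REST-style backend split into route, controller, and service layers. It uses an in-memory list instead of a real database, and every name in it (`PostService`, `ROUTES`, `handle`) is hypothetical. A real project would use a framework such as Flask, Django, or Express and a real HTTP server.

```python
import json


class PostService:
    """Service layer: business logic over an in-memory store (stand-in for a database)."""

    def __init__(self) -> None:
        self._posts: list[dict] = []

    def list_posts(self) -> list[dict]:
        return list(self._posts)

    def create_post(self, title: str) -> dict:
        post = {"id": len(self._posts) + 1, "title": title}
        self._posts.append(post)
        return post


service = PostService()


# Controller layer: turn request input into service calls and JSON responses.
def list_posts_controller(body: str) -> tuple[int, str]:
    return 200, json.dumps(service.list_posts())


def create_post_controller(body: str) -> tuple[int, str]:
    data = json.loads(body or "{}")
    if not isinstance(data, dict) or not data.get("title"):
        return 400, json.dumps({"error": "title is required"})
    return 201, json.dumps(service.create_post(data["title"]))


# Route table: (HTTP method, path) -> controller.
ROUTES = {
    ("GET", "/posts"): list_posts_controller,
    ("POST", "/posts"): create_post_controller,
}


def handle(method: str, path: str, body: str = "") -> tuple[int, str]:
    """Dispatch a request to its controller and return (status code, JSON body)."""
    controller = ROUTES.get((method, path))
    if controller is None:
        return 404, json.dumps({"error": "not found"})
    return controller(body)


print(handle("POST", "/posts", '{"title": "Hello"}'))  # (201, '{"id": 1, "title": "Hello"}')
print(handle("GET", "/posts"))                         # (200, '[{"id": 1, "title": "Hello"}]')
```

Keeping routing, request handling, and business logic in separate layers means the service can be tested without HTTP, and the storage can be swapped for a real database without touching the routes.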

3. Database

The database stores the site's data. The choice of database depends on the project's requirements:

Relational (SQL): MySQL, PostgreSQL, Oracle Database, Microsoft SQL Server.

Non-relational (NoSQL): MongoDB, Redis, DynamoDB, Cassandra.

4. Infrastructure

Infrastructure refers to the environment where the site is hosted and how it is managed and scaled:

Servers and Hosting: AWS, Google Cloud, Azure, DigitalOcean, Heroku, etc.

Containers and Orchestration: Docker for containerization and Kubernetes for container orchestration.

CI/CD (Continuous Integration/Continuous Delivery): Jenkins, Travis CI, CircleCI, GitHub Actions to automate the development and deployment pipeline.

5. Security

Security is critical at every layer of the site's architecture:

Authentication and Authorization: OAuth, JWT, Passport.js.

SSL/TLS Certificates: To encrypt communication between the client and the server.

Attack Protection: Protection against SQL injection, XSS, CSRF, etc.
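SQL injection happens when user input is concatenated directly into a query string. The standard defense is a parameterized query, where the input is bound as a value instead of being spliced into the SQL text. Below is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database; the table and its rows are hypothetical. The same principle applies to MySQL, PostgreSQL, and the other relational databases through their own drivers.

```python
import sqlite3

# In-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name: str) -> list[tuple]:
    # VULNERABLE: the input becomes part of the SQL text itself.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # SAFE: the ? placeholder binds the input as a value, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


malicious = "x' OR '1'='1"
print(find_user_unsafe(malicious))  # every row: the injected OR clause is always true
print(find_user_safe(malicious))    # [] because no user has that literal name
```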

6. Additional Services and Features

To add features and improve the user experience, you can integrate various additional services:

Caching Services: Redis, Memcached to improve site performance.

Messaging Services: RabbitMQ, Apache Kafka for asynchronous communication.

CDN (Content Delivery Network): Cloudflare, Akamai to distribute content and improve load times.
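Caches such as Redis and Memcached are often used in a cache-aside pattern: check the cache first, fall back to the slow data source on a miss, then store the result with an expiry time. The sketch below uses a plain in-process dictionary as a stand-in for Redis or Memcached, so it shows the pattern rather than either product's client API. `slow_lookup` is a hypothetical placeholder for a database query.

```python
import time
from typing import Callable


class TTLCache:
    """Tiny in-process cache with per-entry expiry (stand-in for Redis/Memcached)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable clock makes expiry easy to test
        self._data: dict[str, tuple[float, object]] = {}

    def get(self, key: str):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: object) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


calls = 0


def slow_lookup(key: str) -> str:
    """Hypothetical slow data source, e.g. a database query."""
    global calls
    calls += 1
    return f"value-for-{key}"


cache = TTLCache(ttl_seconds=60)


def get_with_cache(key: str) -> str:
    cached = cache.get(key)
    if cached is not None:
        return cached          # cache hit
    value = slow_lookup(key)   # cache miss: go to the source
    cache.set(key, value)
    return value


get_with_cache("user:1")
get_with_cache("user:1")
print(calls)  # 1: the second call was served from the cache
```

The TTL bounds how stale a cached value can get. Choosing it is a trade-off between load on the database and freshness of the data shown to users.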

Example Project Structure

Here is a simplified example of how files and directories might be organized in a typical project:

├── frontend/
│ ├── public/
│ │ └── index.html
│ ├── src/
│ │ ├── components/
│ │ ├── pages/
│ │ ├── App.js
│ │ └── index.js
│ ├── package.json
│ └── webpack.config.js
├── backend/
│ ├── src/
│ │ ├── controllers/
│ │ ├── models/
│ │ ├── routes/
│ │ ├── services/
│ │ └── app.js
│ ├── .env
│ ├── package.json
│ └── server.js
├── database/
│ └── schema.sql
├── docker-compose.yml
├── Dockerfile
├── README.md
└── .gitignore

Directory and File Descriptions

frontend/: Contains all of the frontend code.

public/: Public files such as index.html.

src/: Frontend source code (React/Vue/Angular components).

backend/: Contains all of the backend code.

controllers/: Handle request logic.

models/: Data model definitions.

routes/: API route definitions.

services/: Business logic and integration with external APIs.

app.js: Main application file.

database/: Database scripts.

schema.sql: Database schema definition.

docker-compose.yml: Docker Compose configuration file for orchestrating services.

Dockerfile: Instructions for building the project's Docker image.

README.md: Project documentation.

.gitignore: File listing which files and directories Git should ignore.

This structure and these technologies may vary depending on your project's specific needs, but they provide a good foundation for building a modern, scalable website with a backend service.


Hybrid Cloud Strategies for Modern Operations Explained

By combining private and public cloud models, organizations can enhance flexibility, scalability, and security while optimizing costs and performance. This article explores effective hybrid cloud strategies for modern operations and how they can benefit your organization.

Understanding Hybrid Cloud

What Is Hybrid Cloud? A hybrid cloud is an integrated cloud environment that combines private cloud (on-premises or hosted) and public cloud services. This model lets organizations manage workloads seamlessly across both environments, leveraging the benefits of each while addressing specific business needs and regulatory requirements.

Benefits of Hybrid Cloud

- Flexibility: Hybrid cloud lets organizations choose the optimal environment for each workload, enhancing operational flexibility.
- Scalability: By using public cloud resources, organizations can scale their infrastructure dynamically to meet changing demands.
- Cost Efficiency: Hybrid cloud lets organizations optimize costs by balancing on-premises investments against pay-as-you-go cloud services.
- Enhanced Security: Sensitive data can be kept in a private cloud while less critical workloads run in the public cloud, supporting compliance and security.

Key Hybrid Cloud Strategies

1. Workload Placement and Optimization

Assessing Workload Requirements: Evaluate the specific requirements of each workload, including performance, security, compliance, and cost. Determine which workloads are best suited to the private cloud and which can benefit from the scalability and flexibility of the public cloud.

Dynamic Workload Management: Move workloads between private and public clouds based on real-time needs. Tools like VMware Cloud on AWS, Azure Arc, or Google Anthos can help manage hybrid cloud environments efficiently.

2. Unified Management and Orchestration

Centralized Management Platforms: Use centralized management platforms to monitor and manage resources across both private and public clouds. Tools like Microsoft Azure Stack, Google Cloud Anthos, and Red Hat OpenShift provide a unified interface for managing hybrid environments and enforcing consistent policies and governance.

Automation and Orchestration: Automation and orchestration tools streamline operations by automating routine tasks and managing complex workflows. Use Kubernetes for container orchestration and Terraform for infrastructure as code (IaC) to automate deployment, scaling, and management across hybrid cloud environments.

3. Security and Compliance

Implementing Robust Security Measures: Security is paramount in hybrid cloud environments. Implement comprehensive measures, including multi-factor authentication (MFA), encryption, and regular security audits. Use tools like AWS Security Hub, Azure Security Center (now Microsoft Defender for Cloud), and Google Cloud Security Command Center to monitor and manage security across the hybrid cloud.

Ensuring Compliance: Compliance with industry regulations and standards is essential for maintaining data integrity and security. Ensure that your hybrid cloud strategy adheres to relevant regulations such as GDPR, HIPAA, and PCI DSS, and implement policies and procedures that protect sensitive data and maintain audit trails.

4. Networking and Connectivity

Hybrid Cloud Connectivity Solutions: Establish secure, reliable connectivity between private and public cloud environments. Solutions like AWS Direct Connect, Azure ExpressRoute, and Google Cloud Interconnect provide dedicated network connections that improve performance and security.

Network Segmentation and Security: Implement network segmentation to isolate and protect sensitive data and applications. Use virtual private networks (VPNs) and virtual LANs (VLANs) to segment networks and enforce security policies. Monitor network traffic regularly for anomalies and potential threats.

5. Disaster Recovery and Business Continuity

Implementing Hybrid Cloud Backup Solutions: Protect business continuity with hybrid cloud backups. Use tools like AWS Backup, Azure Backup, and Google Cloud Backup and DR to automate backups that store data across multiple locations, providing redundancy and protection against data loss.

Developing a Disaster Recovery Plan: A comprehensive disaster recovery plan outlines the steps to take in the event of a major disruption. Include procedures for data restoration, failover mechanisms, and communication protocols. Test the plan regularly to confirm it works, and adjust it as needed.

6. Cost Management and Optimization

Monitoring and Analyzing Cloud Costs: Use cost monitoring tools like AWS Cost Explorer, Azure Cost Management, and Google Cloud’s cost management tools to track and analyze your cloud spending. Identify areas where you can reduce costs, and apply optimization strategies such as rightsizing resources and eliminating unused ones.

Leveraging Cost-Saving Options: Take advantage of the cost-saving options cloud providers offer, such as reserved instances, spot instances, and committed use discounts. Evaluate your workload requirements and choose the most cost-effective pricing model for each.

Case Study: Hybrid Cloud Strategy in a Financial Services Company

Background: A financial services company needed to enhance its IT infrastructure to support growth and comply with stringent regulatory requirements. It adopted a hybrid cloud strategy to balance flexibility, scalability, and security.

Solution: The company assessed its workload requirements and placed critical financial applications and sensitive data in a private cloud to ensure compliance and security. Less critical workloads, such as development and testing environments, were moved to the public cloud to take advantage of its scalability and cost-efficiency. The company implemented centralized management and orchestration tools to manage resources across the hybrid environment. It also put robust security measures in place, including encryption, MFA, and regular audits, to protect data and ensure compliance. Finally, it established secure connectivity between its private and public clouds and developed a comprehensive disaster recovery plan.

Results: The hybrid cloud strategy gave the company greater flexibility, scalability, and cost-efficiency. It maintained compliance with regulatory requirements while optimizing performance and reducing operational costs.

Adopting hybrid cloud strategies can significantly enhance modern operations by providing flexibility, scalability, and security. By leveraging the strengths of both private and public cloud environments, organizations can optimize costs, improve performance, and ensure compliance. Implementing these strategies requires careful planning and the right tools, but the benefits are well worth the effort.
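The workload placement step can be sketched as a simple rule set. The Python sketch below assumes three hypothetical workload attributes (sensitive data, regulatory scope, business criticality). Its rules mirror the case study's outcome: sensitive, regulated, or critical workloads stay in the private cloud, and less critical ones such as dev/test environments go to the public cloud. It is an illustration only. Real placement decisions also weigh latency, data gravity, licensing, and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    sensitive_data: bool     # handles data such as customer financial records
    regulated: bool          # in scope for rules such as GDPR, HIPAA, or PCI DSS
    business_critical: bool  # an outage directly affects core operations


def place(w: Workload) -> str:
    """Return "private" or "public" using simple, illustrative rules."""
    if w.sensitive_data or w.regulated or w.business_critical:
        return "private"  # keep under direct control for compliance and security
    return "public"       # elastic, pay-as-you-go capacity for everything else


def placement_plan(workloads: list[Workload]) -> dict[str, list[str]]:
    """Group workload names by their target environment."""
    plan: dict[str, list[str]] = {"private": [], "public": []}
    for w in workloads:
        plan[place(w)].append(w.name)
    return plan


# Hypothetical workloads modeled on the case study above.
workloads = [
    Workload("core-banking", sensitive_data=True, regulated=True, business_critical=True),
    Workload("payments-api", sensitive_data=True, regulated=True, business_critical=True),
    Workload("dev-environment", sensitive_data=False, regulated=False, business_critical=False),
    Workload("load-testing", sensitive_data=False, regulated=False, business_critical=False),
]
print(placement_plan(workloads))
# {'private': ['core-banking', 'payments-api'], 'public': ['dev-environment', 'load-testing']}
```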


New Data Revealed: CI/CD Explained! Foster Agile Development with DVOPS! Over 20,000 Uses Monthly!

RoamNook - CI/CD Blog

What is CI/CD? Continuous Integration and Continuous Delivery Explained

Continuous integration (CI) and continuous delivery (CD), also known as CI/CD, embodies a culture and set of operating principles and practices that application development teams use to deliver code changes both more frequently and more reliably.

CI/CD Defined

Continuous integration is a coding philosophy and set of practices that drive development teams to frequently implement small code changes and check them in to a version control repository. Most modern applications require developing code using a variety of platforms and tools, so teams need a consistent mechanism to integrate and validate changes. Continuous integration establishes an automated way to build, package, and test their applications. Having a consistent integration process encourages developers to commit code changes more frequently, which leads to better collaboration and code quality.
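The automated build, package, and test sequence can be sketched as an ordered list of stages that stops at the first failure. This is roughly what a CI server does for each commit. The minimal Python sketch below uses hypothetical stage names, and its stage functions are placeholders for real build and test commands.

```python
from typing import Callable

# A stage is a (name, step) pair; the step returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failing stage (fail fast).

    Returns (success, names_of_stages_that_ran).
    """
    ran: list[str] = []
    for name, step in stages:
        ran.append(name)
        if not step():
            print(f"stage '{name}' failed; stopping pipeline")
            return False, ran
        print(f"stage '{name}' passed")
    return True, ran


# Hypothetical stages: replace these lambdas with real build/test commands.
stages: list[Stage] = [
    ("build", lambda: True),
    ("package", lambda: True),
    ("unit-tests", lambda: False),  # simulate a failing test run
    ("publish", lambda: True),      # never reached because the tests failed
]

ok, ran = run_pipeline(stages)
print(ok, ran)  # False ['build', 'package', 'unit-tests']
```

Failing fast gives developers quick feedback on a small change. This is the main benefit of committing often.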

Automating the CI/CD Pipeline

CI/CD tools help store the environment-specific parameters that must be packaged with each delivery. CI/CD automation then makes any necessary service calls to web servers, databases, and other services that need restarting. It can also execute other procedures following deployment.
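One common way to handle environment-specific parameters is to keep a shared base configuration plus small per-environment overrides, and merge them at deploy time. The minimal Python sketch below shows that merge. The environment names, keys, and values are hypothetical, and actual CI/CD tools store and inject such parameters in their own ways.

```python
# Settings shared by every environment (hypothetical values).
BASE_CONFIG = {"log_level": "INFO", "replicas": 1, "db_host": "localhost"}

# Per-environment overrides (hypothetical values).
OVERRIDES = {
    "staging": {"db_host": "staging-db.internal"},
    "production": {"replicas": 3, "log_level": "WARNING", "db_host": "prod-db.internal"},
}


def config_for(environment: str) -> dict:
    """Merge the base config with one environment's overrides (overrides win)."""
    if environment not in OVERRIDES:
        raise ValueError(f"unknown environment: {environment}")
    return {**BASE_CONFIG, **OVERRIDES[environment]}


print(config_for("production"))
# {'log_level': 'WARNING', 'replicas': 3, 'db_host': 'prod-db.internal'}
```

Rejecting unknown environment names keeps a typo in a pipeline definition from silently deploying with base settings.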

How Continuous Integration Improves Collaboration and Code Quality

Continuous integration is a development philosophy backed by process mechanics and automation. When practicing continuous integration, developers commit their code into the version control repository frequently; most teams have a standard of committing code at least daily. The rationale is that it’s easier to identify defects and other software quality issues on smaller code differentials than on larger ones developed over an extensive period. In addition, when developers work on shorter commit cycles, it is less likely that multiple developers will edit the same code and require a merge when committing.

Stages in the Continuous Delivery Pipeline

Continuous delivery is the automation that pushes applications to one or more delivery environments. Development teams typically have several environments to stage application changes for testing and review. A DevOps engineer uses a CI/CD tool such as Jenkins, CircleCI, AWS CodeBuild, Azure DevOps, Atlassian Bamboo, Argo CD, Buddy, Drone, or Travis CI to automate the steps and provide reporting.

CI/CD Tools and Plugins

CI/CD tools typically support a marketplace of plugins. For example, Jenkins lists more than 1,800 plugins that support integration with third-party platforms, user interface, administration, source code management, and build management.

CI/CD with Kubernetes and Serverless Architectures

Many teams operating CI/CD pipelines in cloud environments also use containers such as Docker and orchestration systems such as Kubernetes. Containers allow for packaging and shipping applications in a standard, portable way. Containers make it easy to scale up or tear down environments with variable workloads.

Next Generation CI/CD Applications

There are many advanced areas for CI/CD pipeline development and management. Some notable ones include MLOps, synthetic data generation, AIOps platforms, microservices, network configuration, embedded systems, database changes, IoT, and AR/VR.

Conclusion

Continuous integration and continuous delivery play a crucial role in modern software development. By automating code integration and delivery, CI/CD enables development teams to deliver code changes more frequently and with higher quality. It improves collaboration, code quality, and the overall software development process. Implementing CI/CD pipelines using the right tools and practices, such as Kubernetes and serverless architectures, can further enhance the efficiency and scalability of software delivery. As an innovative technology company, RoamNook is dedicated to providing IT consultation, custom software development, and digital marketing services to help businesses fuel their digital growth through the adoption of CI/CD practices. Contact us today to learn more about how RoamNook can support your organization's journey towards continuous integration and continuous delivery.

Sponsored by RoamNook

Source: https://www.infoworld.com/article/3271126/what-is-cicd-continuous-integration-and-continuous-delivery-explained.html

Advanced Network Observability: Hubble for AKS Clusters

Advanced Container Networking Services

Advanced Container Networking Services is a new offering from Microsoft's Azure Container Networking team, following the open sourcing of Retina: A Cloud-Native Container Networking Observability Platform. It is a suite of services, built on top of the existing networking solutions for Azure Kubernetes Service (AKS), that addresses difficult observability, security, and compliance problems. Advanced Network Observability, the first feature in this suite, is currently available in public preview.

Advanced Container Networking Services: What Is It?

Advanced Container Networking Services is a collection of services designed to significantly improve the operational capabilities of your Azure Kubernetes Service (AKS) clusters. The suite is broad and built to handle the complex, varied requirements of modern containerized applications, giving customers a new way to manage container networking with capabilities aimed specifically at security, compliance, and observability.

The primary goal of Advanced Container Networking Services is to provide a smooth, integrated experience that helps you maintain a strong security posture, ensure thorough compliance, and gain insight into your network traffic and application performance. With that in place, you can grow and manage your infrastructure confident that your containerized apps are secure and compliant and that they meet or exceed your performance and reliability targets.

Advanced Network Observability: What Is It?

Advanced Network Observability, the first component of the Advanced Container Networking Services suite, brings the power of the Hubble control plane to both Cilium and non-Cilium Linux data planes. It unlocks Hubble metrics, the Hubble user interface (UI), and the Hubble command line interface (CLI) on your AKS clusters, giving you deep insight into your containerized workloads. With Advanced Network Observability, users can accurately pinpoint the root cause of network-related problems within a Kubernetes cluster.

This feature uses extended Berkeley Packet Filter (eBPF) technology to collect data in real time from the Linux kernel, and it exposes network flow information at pod-level granularity as metrics or flow logs. Beyond network traffic flows, volumetric statistics, and dropped packets, it now also provides detailed request and response insights, along with Domain Name System (DNS) metrics and flow information.

eBPF-based observability driven by Retina or Cilium.

A Container Network Interface (CNI)-agnostic experience.

Track network traffic in real time with Hubble metrics to find bottlenecks and performance problems.

On-demand network flows from the Hubble command line interface (CLI) let you trace packets throughout your cluster, which helps you diagnose and understand intricate networking behaviours.

Use an unmanaged Hubble UI to visualise network dependencies and interactions between services, so you can verify that configuration and performance are optimal.

Generate detailed metrics and flow logs to strengthen your security posture and satisfy compliance requirements.

Image credit to Microsoft Azure

Container Network Interface (CNI)-agnostic Hubble

Advanced Network Observability extends the Hubble control plane beyond Cilium. In Cilium-based clusters, Hubble receives eBPF events from Cilium. In non-Cilium clusters, Microsoft Retina acts as the data plane that surfaces deep insights to Hubble, giving users the same smooth interactive experience.

Visualizing Hubble metrics with Grafana

Advanced Network Observability supports two integration options for visualizing Hubble metrics in Grafana:

Azure-managed Prometheus and Grafana

Bring your own (BYO) Prometheus and Grafana, for advanced users who can take on the extra administration overhead

With the Azure-managed option, Azure provides integrated services that streamline setting up and maintaining monitoring and visualization with Prometheus and Grafana. Azure Monitor offers a managed Prometheus instance that collects and stores metrics from several sources, including Hubble.
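As a hedged illustration of querying these metrics programmatically, the Python sketch below builds an instant-query URL for the Prometheus HTTP API (`GET /api/v1/query`) and parses the standard JSON response it returns. Assumptions: the endpoint URL is a placeholder (Azure Monitor managed Prometheus also requires authentication, which is omitted here), and the metric name `hubble_drop_total` with a `reason` label follows the open-source Hubble metrics. Check which metric names your cluster actually exposes.

```python
import json
from typing import Dict
from urllib.parse import urlencode

# Hypothetical endpoint: substitute your own Prometheus query endpoint.
PROMETHEUS_URL = "http://prometheus.example.internal:9090"

# Assumed metric: per-second drop rate over 5 minutes, grouped by drop reason.
DROPS_BY_REASON = "sum by (reason) (rate(hubble_drop_total[5m]))"


def build_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL (GET /api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(body: str, label: str) -> Dict[str, float]:
    """Map one label's value to the sample value in an instant-vector response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error')}")
    out: Dict[str, float] = {}
    for series in payload["data"]["result"]:
        # Each sample is [unix_timestamp, "value as a string"].
        out[series["metric"].get(label, "")] = float(series["value"][1])
    return out


if __name__ == "__main__":
    print(build_query_url(PROMETHEUS_URL, DROPS_BY_REASON))
```

Keeping URL construction and response parsing separate lets the parser be tested against a saved response without a live Prometheus server.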

Hubble CLI querying network flows

With Advanced Network Observability enabled, customers can use the Hubble command line interface (CLI) to query all network flows, or a filtered subset, across every node.

From a single pane of glass, users can see whether flows were dropped or forwarded on any node.
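To illustrate that single-pane view, the sketch below tallies flow verdicts (such as `FORWARDED` and `DROPPED`) per node from JSON-lines flow output. It assumes records shaped like those printed by `hubble observe -o json`, with a `flow` object carrying `verdict` and `node_name` fields. The exact schema can vary between Hubble versions, so treat these field names as assumptions to verify.

```python
import json
from collections import Counter, defaultdict
from typing import Dict, Iterable


def verdicts_by_node(lines: Iterable[str]) -> Dict[str, Counter]:
    """Count flow verdicts per node from JSON-lines flow records.

    Assumed record shape (verify against your Hubble CLI version):
    {"flow": {"verdict": "DROPPED", "node_name": "..."}, "node_name": "..."}
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        flow = record.get("flow", {})
        # Prefer the node recorded on the flow; fall back to the envelope.
        node = flow.get("node_name") or record.get("node_name", "unknown")
        counts[node][flow.get("verdict", "VERDICT_UNKNOWN")] += 1
    return counts
```

Because the function accepts any iterable of lines, an open file containing saved `hubble observe -o json` output can be passed to it directly.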

Hubble UI service dependency graph

To visualize service dependencies, customers can install Hubble UI on clusters that have Advanced Network Observability enabled. Hubble UI lets customers select a namespace and view network flows between pods in the cluster, offering an on-demand view of all flows across the cluster along with detailed information about each flow.
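The idea behind a service dependency graph can be sketched by folding flows into an adjacency map. This is a simplified illustration, not how Hubble UI is implemented. Each flow is assumed to be a dict whose `source` and `destination` endpoints carry `namespace` and `pod_name` keys (a reduced form of Hubble's flow records), and endpoints without a pod name are grouped under a hypothetical `external` label.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def endpoint_id(endpoint: dict) -> str:
    """Name an endpoint as namespace/pod, or "external" if it has no pod."""
    pod = endpoint.get("pod_name")
    return f"{endpoint.get('namespace', '')}/{pod}" if pod else "external"


def dependency_graph(flows: Iterable[dict], namespace: str) -> Dict[str, List[str]]:
    """Build a source -> destinations map for flows touching `namespace`."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for flow in flows:
        src = flow.get("source", {})
        dst = flow.get("destination", {})
        # Keep flows that start or end in the selected namespace.
        if namespace not in (src.get("namespace"), dst.get("namespace")):
            continue
        graph[endpoint_id(src)].add(endpoint_id(dst))
    # Sorted lists give a stable, printable result.
    return {node: sorted(targets) for node, targets in graph.items()}
```

Using sets while collecting edges means repeated flows between the same pair of pods collapse into a single dependency, which is what a graph view needs.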

Advantages

Increased network visibility

Advanced Network Observability provides detailed, pod-level insight into network activity. This lets administrators monitor traffic patterns, spot anomalies, and build a thorough understanding of network behavior inside their Azure Kubernetes Service (AKS) clusters. By collecting data from the Linux kernel with eBPF, it delivers real-time metrics and logs covering traffic volume, packet drops, and DNS activity. With this visibility, network administrators can quickly detect and resolve potential problems and keep the network secure and performing well.

Tracking of cross-node network flow

Advanced Network Observability lets customers monitor network flows across multiple nodes in their Kubernetes clusters. This makes it possible to trace packet flows precisely and to understand intricate networking behaviors and node-to-node interactions. The Hubble CLI can query network flows, so users can filter and examine specific traffic patterns. Because packets can be traced across nodes, and dropped and redirected packets identified, from a single pane of glass, cross-node tracking is a valuable tool for troubleshooting network problems.

Monitoring performance in real time

Advanced Network Observability lets customers monitor performance in real time. With Hubble metrics powered by Cilium or Retina, customers can track network traffic as it happens and spot performance problems and bottlenecks as they arise. This immediate feedback loop is essential for maintaining high performance and for detecting and fixing any degradation quickly. The continuous, detailed insight that Hubble metrics and flow logs provide into network operations enables proactive management and fast troubleshooting.
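Many Hubble metrics are counters, so real-time monitoring usually means turning counter samples into per-second rates. The Python sketch below shows that calculation and treats any decrease as a counter reset (for example, after an agent restart). It is a simplified approximation of what PromQL's `rate()` does, without its extrapolation to window boundaries, and the alert threshold is a hypothetical value you would tune for your own cluster.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (unix_seconds, counter_value)


def counter_rate(samples: List[Sample]) -> float:
    """Average per-second increase of a counter over time-ordered samples.

    A drop in value is treated as a counter reset, so the post-reset value
    counts as new increase. Unlike PromQL rate(), there is no extrapolation.
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        increase += (cur - prev) if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0


def exceeds(samples: List[Sample], threshold_per_sec: float) -> bool:
    """Flag a possible bottleneck when the rate crosses a hypothetical threshold."""
    return counter_rate(samples) > threshold_per_sec
```

For drop counts sampled as `[(0, 100), (10, 150), (20, 30), (30, 80)]`, the reset at t=20 is handled and the rate is 130 / 30, about 4.33 drops per second.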

Historical analysis across multiple clusters

When combined with Azure Managed Prometheus and Grafana, the benefits of Advanced Network Observability extend to multi-cluster environments. These include historical analysis, which is crucial for long-term network management and optimization. By storing and examining past data from several clusters, administrators can find trends, patterns, and recurring problems that may affect network performance and reliability in the future. This historical perspective is essential for capacity planning, performance benchmarking, and compliance reporting, and understanding how network performance has changed over time helps inform future decisions about network setup and design.
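A minimal sketch of this kind of historical comparison follows. It compares each cluster's average daily drop count before and after a chosen date and reports the relative change. The `(cluster, day, drop_count)` record format and the cluster names are hypothetical; in practice the data would come from long-term metric storage such as Azure Monitor managed Prometheus.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Optional, Tuple

Record = Tuple[str, date, int]  # (cluster_name, day, drop_count), hypothetical format


def growth_by_cluster(records: Iterable[Record], split: date) -> Dict[str, Optional[float]]:
    """Relative change in mean daily drops, before vs. on/after `split`, per cluster.

    Returns None for a cluster when either period has no data or the
    baseline mean is zero, since a relative change is undefined there.
    """
    before: Dict[str, List[int]] = defaultdict(list)
    after: Dict[str, List[int]] = defaultdict(list)
    for cluster, day, drops in records:
        (before if day < split else after)[cluster].append(drops)

    result: Dict[str, Optional[float]] = {}
    for cluster in set(before) | set(after):
        old, new = before.get(cluster, []), after.get(cluster, [])
        if not old or not new:
            result[cluster] = None
            continue
        baseline = sum(old) / len(old)
        recent = sum(new) / len(new)
        result[cluster] = None if baseline == 0 else (recent - baseline) / baseline
    return result
```

A value of 1.0 means a cluster's average daily drops have doubled since the split date, a trend worth investigating before it affects capacity or reliability.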

Read more on Govindhtech.com

The Power of Windows Server 2022: A Comprehensive Overview

Introduction:

Windows Server 2022, the latest iteration of Microsoft's renowned server operating system, has arrived with a host of new features, enhancements, and capabilities. Designed to meet the evolving needs of modern enterprises, this edition brings cutting-edge technologies, improved security measures, and enhanced performance. In this article, we'll explore the key aspects of Windows Server 2022 and how it can benefit organizations of all sizes.

1. Enhanced Security Features:

Security is a top priority in any IT infrastructure, and Windows Server 2022 has taken significant strides in this regard. The operating system introduces advancements in identity management, threat protection, and secure connectivity. Features like secured-core server and Azure Arc integration bolster the defense against evolving cyber threats, ensuring a robust security posture for your servers.

2. Containerization and Kubernetes Support:

Recognizing the growing trend towards containerization and microservices architecture, Windows Server 2022 comes with improved support for containers and Kubernetes. This enables organizations to modernize their applications, improve scalability, and enhance resource utilization. The integration of Windows Containers and Kubernetes allows for seamless orchestration and management of containerized workloads.

3. Azure Hybrid Integration:

Windows Server 2022 strengthens the bond between on-premises infrastructure and cloud services through enhanced Azure integration. The Azure Arc-enabled servers feature lets organizations manage servers running outside Azure, whether on-premises or in other clouds, centrally from the Azure portal. This provides a unified, consistent management experience across diverse environments.

4. Performance Improvements:

Performance is a critical factor in server environments, and Windows Server 2022 brings notable improvements in this aspect. Whether it's handling increased workloads, optimizing storage performance, or enhancing networking capabilities, the new edition is engineered to deliver a more responsive and efficient server infrastructure.

5. Storage Innovations:

The latest version builds on Storage Migration Service, first introduced in Windows Server 2019, which simplifies migrating servers and their data to newer storage infrastructure. Additionally, Storage Spaces Direct (S2D) enhancements provide improved resilience, performance, and flexibility in managing storage spaces, making it easier for organizations to scale their storage.

6. Improved Management Tools:

Windows Admin Center has been further refined to provide a more user-friendly and efficient management interface. It offers a centralized platform for managing servers, clusters, hyper-converged infrastructure, and more. The streamlined management experience helps IT administrators save time and effort in overseeing their server environments.

7. Support for Edge Computing:

Recognizing the growing importance of edge computing, Windows Server 2022 includes features that cater to the unique requirements of distributed environments. With improvements in Azure IoT Edge integration and support for ARM64 architecture, organizations can seamlessly extend their IT infrastructure to the edge for improved performance and reduced latency.

Conclusion:

Windows Server 2022 represents a significant leap forward in terms of security, performance, and integration capabilities. Whether you are a small business or a large enterprise, the features and enhancements in this edition are geared towards providing a robust, flexible, and secure foundation for your IT infrastructure. As organizations continue to navigate the complexities of modern technology landscapes, Windows Server 2022 stands out as a reliable and forward-looking choice for building and managing server environments.

AZ-104: Azure Administrator Course Outline