#copy-webpack-plugin


A Webpack plugin that copies files while preserving the directory hierarchy. It took me quite a while to find… See also this for reference…
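For reference, here is a minimal sketch of such a configuration with copy-webpack-plugin. It assumes version 6 or later of the plugin. The `./src/index.js` entry, the `src/static` folder and the `Foo` component name are placeholders. When `from` is a directory, the plugin copies it recursively with its subfolders intact. With a glob pattern, output paths stay relative to `context`.

```javascript
// webpack.config.js: a sketch that copies static files into the build output,
// keeping their folder structure. Assumes copy-webpack-plugin v6 or later;
// "./src/index.js" and "src/static" are placeholder paths.
const path = require("path");
const CopyWebpackPlugin = require("copy-webpack-plugin");

module.exports = {
  entry: "./src/index.js",
  output: { path: path.resolve(__dirname, "dist") },
  plugins: [
    new CopyWebpackPlugin({
      patterns: [
        // A directory as "from" is copied recursively:
        // src/static/img/logo.png -> dist/static/img/logo.png
        { from: "src/static", to: "static" },
        // With a glob, paths stay relative to "context":
        // src/Components/Foo/Foo.html -> dist/Foo/Foo.html
        { from: "**/*.html", context: "src/Components" },
      ],
    }),
  ],
};
```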


Website Loading Woes: Speed Optimization for Musicians

In today’s fast-paced digital world, your website is often the first impression you make on potential fans, collaborators, and industry professionals. A slow-loading site can turn visitors away before they even get a chance to hear your music or learn about your upcoming shows. Speed optimization for musicians isn’t just a technical concern; it’s a crucial part of building and maintaining an effective online presence. In this post, we’ll explore practical tips to optimize your website’s loading times and ensure a smooth, fast experience for your visitors.

1. **Choose the Right Hosting Provider**

Your website’s performance starts with your hosting provider. A reliable and fast web host is essential for quick loading times.

- **Shared vs. Dedicated Hosting:** While shared hosting is cheaper, it often results in slower load times due to the number of sites sharing the same server. If you can afford it, consider upgrading to a dedicated hosting plan or a Virtual Private Server (VPS) for better performance.

- **Content Delivery Network (CDN):** A CDN stores copies of your site’s content on servers around the world, delivering it to users from the nearest server. This reduces the distance data has to travel and speeds up loading times for your global audience.

2. **Optimize Your Images**

Images are often the largest files on a website, and unoptimized images can significantly slow down your site.

- **Use the Right File Format:** JPEGs are great for photographs, while PNGs are better for images that require transparency. Avoid using BMPs or TIFFs, as they are not web-friendly.

- **Compress Images:** Use image compression tools like TinyPNG, JPEGmini, or Photoshop’s “Save for Web” option to reduce file sizes with little or no visible loss of quality. This can drastically reduce load times.

- **Lazy Loading:** Implement lazy loading, a technique where images load only when they’re about to enter the user’s view. This reduces the initial load time and improves the user experience.

3. **Minimize HTTP Requests**

Every element on your web page—images, scripts, stylesheets—requires an HTTP request. The more requests, the slower your site.

- **Combine Files:** Combine multiple CSS files into one and do the same for JavaScript files. This reduces the number of requests and speeds up load times.

- **Use CSS Sprites:** CSS sprites allow you to combine multiple images into a single file. The browser then loads the single file and displays the correct image portion. This is especially useful for icons and buttons.

- **Reduce Plugins:** If you’re using a platform like WordPress, minimize the number of plugins. Many plugins load their own scripts and stylesheets, which adds HTTP requests, so only use the ones that are essential.
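As a minimal sketch of “combine files”, a small Node.js build step can concatenate several stylesheets into one file. The `combineFiles` name is hypothetical, and so are the file names in the usage example. Bundlers such as webpack do this job, and more, for you.

```javascript
// Sketch: concatenate several CSS files into one so the page needs a single
// stylesheet request instead of many.
const fs = require("fs");
const path = require("path");

function combineFiles(inputPaths, outputPath) {
  const parts = inputPaths.map((file) => {
    const body = fs.readFileSync(file, "utf8");
    // A comment marks where each source file starts, which helps when debugging
    return `/* ${path.basename(file)} */\n${body.trim()}\n`;
  });
  const combined = parts.join("\n");
  fs.writeFileSync(outputPath, combined, "utf8");
  return combined;
}
```

For example, `combineFiles(["reset.css", "layout.css", "player.css"], "site.css")` produces a single `site.css` that your pages can link to.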

4. **Enable Browser Caching**

Browser caching allows your site to store files on a visitor’s device, so they don’t have to be downloaded every time the user visits your site.

- **Set Expiry Dates:** By setting expiry dates on cached content, you can control how long files are stored on the user’s device. Use tools like YSlow or Google PageSpeed Insights to identify which files should be cached.

- **Leverage .htaccess:** If you have access to your site’s .htaccess file, you can manually enable caching and set expiry dates for different types of content.
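To illustrate setting expiry per content type, here is a sketch for a site served by Node.js. The function name and the max-age values are assumptions chosen for illustration, not recommendations from this post. On Apache, the equivalent setup goes in .htaccess using mod_expires (`ExpiresByType`).

```javascript
// Sketch: choose a Cache-Control header based on the file extension.
// The max-age values below are illustrative assumptions.
const path = require("path");

const ONE_DAY = 60 * 60 * 24; // seconds

const CACHE_RULES = {
  ".jpg": 30 * ONE_DAY,
  ".jpeg": 30 * ONE_DAY,
  ".png": 30 * ONE_DAY,
  ".mp3": 30 * ONE_DAY,
  ".css": 7 * ONE_DAY,
  ".js": 7 * ONE_DAY,
};

function cacheControlFor(filePath) {
  const ext = path.extname(filePath).toLowerCase();
  const maxAge = CACHE_RULES[ext];
  // HTML (and anything not listed) is revalidated on each visit so updates show up quickly
  if (maxAge === undefined) return "no-cache";
  return `public, max-age=${maxAge}`;
}
```

In a Node.js `http` handler, you would call `res.setHeader("Cache-Control", cacheControlFor(filePath))` with the requested file path, stripping any query string first, before sending the file.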

5. **Minify CSS, JavaScript, and HTML**

Minification is the process of removing unnecessary characters (like spaces and line breaks) from your code, making it smaller and faster to load.

- **Use Minification Tools:** Tools like UglifyJS or Terser for JavaScript, cssnano for CSS, and HTMLMinifier for HTML can minify your files.

- **Automate the Process:** If you’re using a build tool like Gulp or Webpack, you can automate minification during your site’s build process, ensuring your files are always optimized.
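With webpack, production mode turns on minification. Below is a minimal `webpack.config.js` sketch. The entry path is a placeholder. The content-hash filename is optional, but it pairs well with long browser-cache lifetimes.

```javascript
// webpack.config.js: a minimal sketch; "./src/index.js" is a placeholder entry.
const path = require("path");

module.exports = {
  // Production mode enables minification; webpack 5 uses Terser for JavaScript by default
  mode: "production",
  entry: "./src/index.js",
  output: {
    path: path.resolve(__dirname, "dist"),
    // A content hash in the name changes only when the file changes,
    // which works well with long browser-cache lifetimes
    filename: "[name].[contenthash].js",
  },
  optimization: {
    minimize: true, // already the default in production mode; shown for clarity
  },
};
```

To build, run the webpack CLI (for example `npx webpack`, with webpack and webpack-cli installed). It writes minified bundles to `dist/`.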

6. **Optimize Your Music Player**

If your site features a music player, it’s important to ensure it doesn’t slow down your site.

- **Use Streaming Services:** Instead of hosting large audio files on your server, embed music from streaming platforms like SoundCloud, Spotify, or Bandcamp. These platforms are optimized for fast loading and offer high-quality streaming.

- **Optimize Embedded Players:** If you’re embedding a music player, make sure it loads asynchronously, meaning it won’t hold up the rest of your site’s content from loading.

7. **Enable Gzip Compression**

Gzip compression reduces the size of your files before they are sent to the browser, which can significantly decrease loading times.

- **Activate Gzip:** Most web servers, including Apache and Nginx, support Gzip compression. You can enable it through your site’s .htaccess file or via your server’s configuration settings.

- **Check Compression:** Use online tools like GTmetrix or Google PageSpeed Insights to check whether Gzip compression is enabled on your site and see the difference in file sizes.
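To see what gzip does to a typical text asset, you can use Node.js's built-in `zlib` module to compress a string and compare sizes. The `gzipSavings` helper and the sample markup are illustrative only.

```javascript
// Sketch: measure how much gzip shrinks a text asset, using Node's built-in zlib.
const zlib = require("zlib");

function gzipSavings(text) {
  const original = Buffer.byteLength(text, "utf8");
  const compressed = zlib.gzipSync(text).length;
  return { original, compressed, saved: original - compressed };
}

// HTML, CSS and JavaScript are repetitive, so they usually compress well
const sampleHtml = '<li class="track">Song title</li>\n'.repeat(200);
console.log(gzipSavings(sampleHtml));
```

Formats that are already compressed, such as JPEG, PNG and MP3, gain little from gzip. For that reason, servers are usually configured to compress only text types such as HTML, CSS, JavaScript and SVG.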

8. **Use a Lightweight Theme**

If you’re using a content management system (CMS) like WordPress, the theme you choose can greatly impact your site’s speed.

- **Choose a Fast Theme:** Opt for themes that are built with performance in mind. Avoid overly complex themes with excessive animations, sliders, and widgets that can slow down your site.

- **Custom vs. Premade Themes:** If you have the budget, consider a custom-built theme that’s optimized for your specific needs. Otherwise, choose a well-coded, lightweight premade theme and customize it to suit your style.

9. **Monitor Your Website’s Performance**

Regularly monitoring your website’s performance helps you identify issues and make necessary adjustments.

- **Use Speed Testing Tools:** Tools like Google PageSpeed Insights, Pingdom, and GTmetrix provide detailed reports on your site’s speed and offer suggestions for improvement.

- **Analyze Traffic Spikes:** If you experience slowdowns during traffic spikes, consider using a service like Cloudflare to manage the increased load or upgrading your hosting plan to handle more visitors.

10. **Keep Your Site Updated**

Keeping your site’s software up-to-date is crucial for both security and performance.

- **Update CMS and Plugins:** Regularly update your CMS, plugins, and themes to ensure they’re optimized and free from vulnerabilities that could slow down your site.

- **Remove Unused Plugins and Themes:** Deactivate and delete any plugins or themes you’re not using. Inactive plugins don’t run, but they still clutter your installation and can become a security risk once they stop receiving updates, so keep your installation clean.

Conclusion

Optimizing your website’s loading times is essential for keeping your audience engaged and improving your overall online presence. With the right strategies, you can ensure that your site is fast, efficient, and provides a great user experience, even if you’re working with limited resources. By choosing the right hosting, optimizing your images, minimizing HTTP requests, and staying on top of updates, you can create a site that not only looks great but also performs at its best. Remember, in the digital age, speed is not just a luxury—it’s a necessity.

#MusicianTips #MusicMarketing #FastLoading #FanEngagement #WebsiteOptimization #SpeedMatters #DigitalPresence #WebPerformance #OnlineMusic #TechForMusicians


Lightweight, efficient JavaScript is essential for website performance. By reducing the size and complexity of your JavaScript, you can speed up page load times and improve the user experience. Here are the steps to make your JavaScript lighter:

1. Minify the Code

Minification removes all unnecessary characters from source code without changing its functionality. This includes whitespace, comments, and newlines. Tools such as UglifyJS, Terser, or terser-webpack-plugin can do this automatically.

Example:

```javascript
// Before minification
function add(a, b) {
  return a + b;
}

// After minification
function add(a,b){return a+b;}
```

2. Remove Unused Code

Go through your code to find and remove functions, variables, or other parts that are never used. Note that PurifyCSS and PurgeCSS remove unused CSS, not JavaScript. For JavaScript, a linter such as ESLint (`no-unused-vars`) flags unused variables. Bundlers such as webpack can also drop unused exports through tree shaking when you use ES module `import`/`export`.

Example:

```javascript
// Unused code
function unusedFunction() {
  console.log("This is not used");
}
// Remove functions that are never called
```

3. Use Modules and Code Splitting

Split your JavaScript into small modules that can be loaded separately. This reduces the initial load, because only the code needed at that moment is loaded. You can apply code splitting with tools such as Webpack.

Example:

```javascript
// Using a dynamic import
import(/* webpackChunkName: "moduleName" */ "./moduleName").then((module) => {
  // Use the module
});
```

4. Optimize Your Use of Libraries and Frameworks

If you use a library or framework, load only the parts you need. For example, with lodash you can import only the functions you need instead of the entire library. The main `lodash` package is CommonJS, so a named import from it usually still bundles the whole library. Import the per-method path or use `lodash-es` instead.

Example:

```javascript
// Importing all of lodash
import _ from "lodash";

// Importing only the function you need
import debounce from "lodash/debounce";
// or, with the ES module build: import { debounce } from "lodash-es";
```

5. Use Event Delegation

Instead of adding event listeners to many elements, use event delegation: attach a single listener to a parent element. This reduces the number of active event listeners in the DOM.

Example:

```javascript
// Without delegation
document.querySelectorAll(".item").forEach((item) => {
  item.addEventListener("click", function () {
    console.log("Item clicked");
  });
});

// With delegation
document.querySelector(".parent").addEventListener("click", function (e) {
  // closest() also handles clicks on elements nested inside an .item
  if (e.target.closest(".item")) {
    console.log("Item clicked");
  }
});
```

6. Optimize Algorithms and Logic

Review the algorithms and logic in your code. Use efficient algorithms and avoid unnecessary repetition.

Example:

```javascript
// Classic for loop
for (let i = 0; i < array.length; i++) {
  // Process
}

// forEach: more concise, but not inherently faster
array.forEach((item) => {
  // Process
});
```

Switching from `for` to `forEach` mainly improves readability. Real speedups come from doing less work, for example by avoiding nested loops and repeated calculations.

7. Cache Data and Results

If you perform heavy calculations or call an API repeatedly, store the results in a variable or use another caching technique so the work is not repeated.

Example:

```javascript
const cachedData = {};

function fetchData(id) {
  if (cachedData[id]) {
    return cachedData[id];
  }
  // Store the promise itself so repeated calls reuse the same request
  cachedData[id] = fetch(`api/data/${id}`).catch((error) => {
    delete cachedData[id]; // don't keep a failed request in the cache
    throw error;
  });
  return cachedData[id];
}
```

8. Use Asynchronous Loading

Use the `async` or `defer` attribute on `<script>` tags so that downloading scripts does not block HTML parsing.

By following the steps above, you can make your JavaScript lighter and more efficient. This not only improves…
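To make step 6 concrete, the sketch below compares two versions of the same filter. The helper names `filterSlow` and `filterFast` are hypothetical. The speedup comes from replacing a repeated linear search with a `Set` lookup, not from the loop syntax.

```javascript
// O(n * m): includes() scans the whole `blocked` array for every user
function filterSlow(users, blocked) {
  return users.filter((user) => !blocked.includes(user));
}

// O(n + m): build a Set once; each has() lookup is constant time on average
function filterFast(users, blocked) {
  const blockedSet = new Set(blocked);
  return users.filter((user) => !blockedSet.has(user));
}
```

For small arrays the difference is negligible. It starts to matter when both lists grow into the thousands.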


Understanding and Resolving the TypeError [ERR_UNKNOWN_FILE_EXTENSION]: Unknown File Extension “.javascript” Error

JavaScript, being one of the core technologies of the web, is widely used for creating interactive web pages and dynamic user interfaces. Node.js, a popular runtime for executing JavaScript outside the browser, has streamlined server-side scripting and brought JavaScript into the backend development sphere. However, developers occasionally encounter errors that can be perplexing, especially those related to file extensions. One such error is TypeError [ERR_UNKNOWN_FILE_EXTENSION]: Unknown file extension “.javascript” for c:\xxxx\xxxxx\xxxxx-xxxx\xxxxxxxxx.javascript. This article delves into the causes, implications, and resolutions for this specific error.

Understanding the Error

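The TypeError [ERR_UNKNOWN_FILE_EXTENSION]: Unknown file extension “.javascript” error indicates that Node.js does not recognize the .javascript file extension. Node.js expects JavaScript files to use the .js extension, or .mjs/.cjs to mark a file explicitly as an ES module or CommonJS. The error comes from Node.js's ES module loader. That loader is used, for example, when a file is loaded with import, or when the nearest package.json contains "type": "module". When it encounters a file with the .javascript extension, it throws this error, signaling that it does not know how to handle the file.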

Causes of the Error

Incorrect File Extension: The most direct cause is the use of the .javascript extension instead of the standard .js extension.

Configuration Issues: Sometimes, custom configurations or build tools might mistakenly generate or refer to files with the .javascript extension.

Typographical Errors: Developers may accidentally type .javascript instead of .js when saving or referencing files.

Implications of the Error

This error prevents Node.js from executing the JavaScript file. As a result, the intended functionality will not be performed, potentially causing the entire application to malfunction or crash.

Steps to Resolve the Error

1. Renaming the File Extension

The simplest solution is to rename the file extension from .javascript to .js. Here’s how you can do it:

Locate the file on your filesystem.

Right-click the file and select “Rename.”

Change the extension from .javascript to .js.

Alternatively, you can use the command line. In PowerShell, where mv is an alias for Move-Item:

```powershell
mv c:\xxxx\xxxxx\xxxxx-xxxx\xxxxxxxxx.javascript c:\xxxx\xxxxx\xxxxx-xxxx\xxxxxxxxx.js
```

2. Updating File References

If your code references the file with the incorrect extension, update these references to use the .js extension. For example:

```javascript
// Before
const script = require('./path/to/xxxxxxxxx.javascript');

// After
const script = require('./path/to/xxxxxxxxx.js');
```
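If many files reference the old extension, a small script can update them. The sketch below is a hypothetical `fixSpecifiers` helper. It rewrites relative `import`/`require` paths that end in `.javascript`. Because it relies on a regular expression, treat it as a rough tool rather than a parser-based codemod.

```javascript
// Matches a quoted relative path (./ or ../) that ends in ".javascript".
// Group 1 is the quote character, group 2 the path without the extension.
const OLD_EXTENSION_PATH = /(['"])(\.{1,2}\/[^'"]*)\.javascript\1/g;

function fixSpecifiers(source) {
  // Keep the original quote style and swap the extension for ".js"
  return source.replace(OLD_EXTENSION_PATH, "$1$2.js$1");
}
```

To apply it to a file, use `fs.writeFileSync(file, fixSpecifiers(fs.readFileSync(file, "utf8")))`, then review the diff before committing.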

3. Configuring Build Tools

If you are using a build tool like Webpack, Gulp, or Grunt, ensure that the configuration does not inadvertently generate files with the .javascript extension. Check the tool's configuration files (e.g., webpack.config.js) for any rules or plugins that might be causing this issue.

Example Scenario

Consider a Node.js project that uses ES modules (its package.json contains "type": "module") with the following structure:

```
project-root/
├── src/
│   ├── index.js
│   └── app.javascript
└── package.json
```

Attempting to import the app.javascript file in index.js would result in the error:

```javascript
import app from "./app.javascript"; // This line throws the error
```

In a CommonJS project, require() generally loads a file with an unrecognized extension as plain JavaScript, so this error typically comes from the ES module loader.

To resolve this, you should:

Rename app.javascript to app.js.

Update the reference in index.js:

```javascript
import app from "./app.js"; // Corrected line
```

Prevention Strategies

Consistent Naming Conventions: Establish and adhere to a consistent naming convention for file extensions within your team or project.

Code Reviews: Regular code reviews can help catch such issues before they become problematic.

Linting Tools: Use linting tools like ESLint to enforce file naming conventions. For example, a custom rule could flag import paths that use non-standard file extensions.

Automated Testing: Implement automated tests that include file loading and execution as part of your CI/CD pipeline. This ensures that any issues with file extensions are caught early in the development process.
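One way to automate the check is a CI step that scans the source tree for unexpected extensions. This is a sketch. The `findNonStandardFiles` name, the allow-list and the skipped folders are assumptions; adapt them to your project.

```javascript
// Sketch: recursively list files whose extension is not on an allow-list.
const fs = require("fs");
const path = require("path");

// Assumed allow-list and skip-list; adjust for your project
const ALLOWED_EXTENSIONS = new Set([".js", ".mjs", ".cjs", ".json"]);
const SKIPPED_DIRS = new Set(["node_modules", ".git"]);

function findNonStandardFiles(dir, allowed = ALLOWED_EXTENSIONS) {
  const offenders = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      // Recurse into subfolders, except dependency and version-control folders
      if (!SKIPPED_DIRS.has(entry.name)) {
        offenders.push(...findNonStandardFiles(fullPath, allowed));
      }
    } else if (entry.isFile() && !allowed.has(path.extname(entry.name))) {
      offenders.push(fullPath);
    }
  }
  return offenders;
}
```

In a CI script, you could call `findNonStandardFiles("src")` and exit with a non-zero code when the returned list is not empty.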

Conclusion

The TypeError [ERR_UNKNOWN_FILE_EXTENSION]: Unknown file extension “.javascript” error in Node.js is a common yet easily resolvable issue. By understanding its causes and following best practices for file naming and configuration, developers can prevent this error and ensure smooth execution of their JavaScript applications. Consistent conventions, diligent reviews, and the use of automated tools are key strategies in maintaining a robust and error-free codebase.


Create a custom work item control with Azure DevOps extension SDK

The Azure DevOps Web Extension SDK, or Azure DevOps Extension SDK, is a client SDK for developing extensions for Azure DevOps. In this example, I will show you how to make a custom work item control using this SDK.

Here is an example of a small project with a custom work item.

Prerequisites

We will need the following before we get started with building our extension:

NodeJS

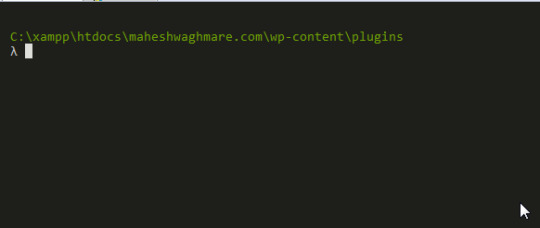

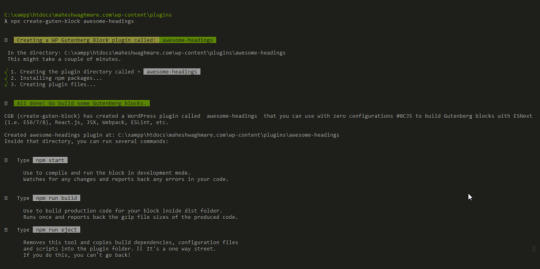

Setting up the project

We start setting up the project by running the following npm command in the project directory:

```bash
npm init
```

You can configure these settings as you wish; they are stored in package.json.

We need to install the following packages as dependencies:

```bash
npm i azure-devops-extension-api azure-devops-extension-sdk azure-devops-ui react react-dom es6-promise
```

as well as the following dev dependencies:

```bash
npm i @types/node @types/react @types/react-dom copy-webpack-plugin cross-env css-loader file-loader loader-utils node-sass rimraf sass sass-loader style-loader tfx-cli ts-loader typescript webpack webpack-cli --save-dev
```

Three of these packages are needed by files created later in this guide: es6-promise is imported by Common.tsx, file-loader is used in webpack.config.js, and @types/node is listed under types in tsconfig.json.

Now your package.json should look something like this (the package versions might be different):

```json
{
    "name": "testextension",
    "version": "1.0.0",
    "description": "",
    "main": "index.js",
    "scripts": {
        "test": "echo \"Error: no test specified\" && exit 1"
    },
    "author": "",
    "license": "ISC",
    "dependencies": {
        "azure-devops-extension-api": "^1.158.0",
        "azure-devops-extension-sdk": "^2.0.11",
        "azure-devops-ui": "^2.167.49",
        "react": "^16.14.0",
        "react-dom": "^16.14.0"
    },
    "devDependencies": {
        "@types/react": "^18.0.25",
        "@types/react-dom": "^18.0.8",
        "copy-webpack-plugin": "^11.0.0",
        "cross-env": "^7.0.3",
        "css-loader": "^6.7.1",
        "loader-utils": "^3.2.0",
        "node-sass": "^7.0.3",
        "rimraf": "^3.0.2",
        "sass": "^1.56.0",
        "sass-loader": "^13.1.0",
        "style-loader": "^3.3.1",
        "tfx-cli": "^0.12.0",
        "ts-loader": "^9.4.1",
        "typescript": "^4.8.4",
        "webpack": "^5.74.0",
        "webpack-cli": "^4.10.0"
    }
}
```

Create two directories inside your root directory: src and static. In this example we won’t add anything to the static folder; it is meant for files your project uses, such as images. For now, you can add a file named .gitkeep to it instead.

Next up is configuring TypeScript. Create tsconfig.json in your root folder and put the following inside it:

```json
{
    "compilerOptions": {
        "charset": "utf8",
        "experimentalDecorators": true,
        "module": "amd",
        "moduleResolution": "node",
        "noImplicitAny": true,
        "noImplicitThis": true,
        "strict": true,
        "target": "es5",
        "rootDir": "src/",
        "outDir": "dist/",
        "jsx": "react",
        "lib": ["es5", "es6", "dom", "es2015.promise", "es2019"],
        "types": ["react", "node"],
        "esModuleInterop": true
    }
}
```

Now we configure Webpack. Create webpack.config.js in your root folder and put the following inside it:

```javascript
const path = require("path");
const fs = require("fs");
const CopyWebpackPlugin = require("copy-webpack-plugin");

const entries = {};

// Every folder in src/Components becomes an entry named after the folder,
// pointing at src/Components/<Name>/<Name> (the .tsx extension is resolved by webpack)
const ComponentsDir = path.join(__dirname, "src/Components");
fs.readdirSync(ComponentsDir).forEach(dir => {
    if (fs.statSync(path.join(ComponentsDir, dir)).isDirectory()) {
        entries[dir] = "./" + path.relative(process.cwd(), path.join(ComponentsDir, dir, dir));
    }
});

module.exports = {
    entry: entries,
    output: {
        filename: "[name]/[name].js"
    },
    resolve: {
        extensions: [".ts", ".tsx", ".js"],
        alias: {
            "azure-devops-extension-sdk": path.resolve("node_modules/azure-devops-extension-sdk")
        },
    },
    stats: {
        warnings: false
    },
    module: {
        rules: [
            { test: /\.tsx?$/, loader: "ts-loader" },
            {
                test: /\.s[ac]ss?$/,
                use: ["style-loader", "css-loader", "azure-devops-ui/buildScripts/css-variables-loader", "sass-loader"]
            },
            { test: /\.css?$/, use: ["style-loader", "css-loader"] },
            { test: /\.woff?$/, type: "asset/inline" },
            { test: /\.html?$/, loader: "file-loader" }
        ]
    },
    plugins: [
        // Copies each component's .html file into dist/, keeping its folder structure
        new CopyWebpackPlugin({
            patterns: [
                { from: "**/*.html", context: "src/Components" }
            ]
        })
    ]
};
```
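The entry-building loop in the webpack configuration can also be written as a standalone function, which makes it easier to test. In this sketch, the `buildEntries` name is hypothetical. The extra check that `<Name>.tsx` exists is an assumption, not part of the original setup; it keeps an empty or unrelated folder from breaking the build.

```javascript
// Sketch: build webpack's entry map from src/Components/<Name>/<Name>.tsx folders.
const fs = require("fs");
const path = require("path");

function buildEntries(componentsDir, cwd = process.cwd()) {
  const entries = {};
  // forEach, not filter: the loop runs only for its side effect on `entries`
  fs.readdirSync(componentsDir).forEach((dir) => {
    const dirPath = path.join(componentsDir, dir);
    if (!fs.statSync(dirPath).isDirectory()) return;
    // Assumed extra check: skip folders without a matching <Name>.tsx entry file
    if (!fs.existsSync(path.join(dirPath, `${dir}.tsx`))) return;
    // Forward slashes keep the request path the same on Windows and Unix
    const relative = path.relative(cwd, path.join(dirPath, dir)).split(path.sep).join("/");
    entries[dir] = "./" + relative;
  });
  return entries;
}
```

In webpack.config.js, `entry: buildEntries(path.join(__dirname, "src/Components"))` would then replace the inline loop.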

The last configuration we need to make is specifically for Azure DevOps extensions. Again, in the root directory, create a new file, this time it’s called azure-devops-extension.json:

```json
{
    "manifestVersion": 1.0,
    "id": "textextension",
    "publisher": "your Visual Studio Marketplace publisher here",
    "version": "0.0.1",
    "public": false,
    "name": "testextension",
    "description": "custom control",
    "categories": ["Azure Boards"],
    "targets": [
        { "id": "Microsoft.VisualStudio.Services" }
    ],
    "icons": { "default": "logo.png" },
    "content": {
        "details": { "path": "README.md" }
    },
    "scopes": ["vso.work"],
    "files": [
        { "path": "static", "addressable": true },
        { "path": "dist", "addressable": true }
    ]
}
```

This manifest references two files, README.md and logo.png. Add both of them to your root folder.

Making the custom control

Inside the src directory, create a new file named Common.tsx:

```tsx
import "azure-devops-ui/Core/override.css"
import "es6-promise/auto"
import * as React from "react"
import * as ReactDOM from "react-dom"
import "./Common.scss"

// Renders the given element into the <div id="root"> of the component's HTML page
export function showRootComponent(component: React.ReactElement<any>) {
    ReactDOM.render(component, document.getElementById("root"))
}
```

It is important we import "azure-devops-ui/Core/override.css", so that we can use standardized UI styling.

Its showRootComponent helper acts as our root renderer: it renders our other components inside the HTML element with id “root”.

Also create a Common.scss file. All we’re going to add to this is:

```scss
body {
    margin: 0;
    padding: 0;
}
```

Inside the src folder, let’s make another directory named Components and inside that folder create another one named TestExtensionComponent.

```
src
│   Common.scss
│   Common.tsx
│
└───Components
    └───TestExtensionComponent
```

Inside the TestExtensionComponent folder, we’re going to add a few files. First is TestExtensionComponent.html, the HTML page that will contain the component(s) of your custom control:

```html
<!DOCTYPE html>
<html xmlns="http://www.w3.org/1999/xhtml">
<body>
    <div id="root"></div>
    <script type="text/javascript" src="TestExtensionComponent.js" charset="utf-8"></script>
</body>
</html>
```

Next is TestExtensionComponent.scss.

```scss
@import "node_modules/azure-devops-ui/Core/_platformCommon.scss";
```

This will import the Azure DevOps styling.

Now add TestExtensionComponent.json, our component configuration file. In it, we also define an input for the control, called SampleInput:

```json
{
    "contributions": [
        {
            "id": "TestExtensionComponent",
            "type": "ms.vss-work-web.work-item-form-control",
            "targets": ["ms.vss-work-web.work-item-form"],
            "properties": {
                "name": "custom control",
                "uri": "dist/TestExtensionComponent/TestExtensionComponent.html",
                "inputs": [
                    {
                        "id": "SampleInput",
                        "name": "sample input",
                        "description": "sample input",
                        "validation": {
                            "dataType": "String",
                            "isRequired": true
                        }
                    }
                ]
            }
        }
    ],
    "scopes": ["vso.work"]
}
```

Next is TestExtensionComponent.tsx:

```tsx
import React, { Component } from "react"
import * as SDK from "azure-devops-extension-sdk"
import { IWorkItemFormService, WorkItemTrackingServiceIds, WorkItemOptions } from "azure-devops-extension-api/WorkItemTracking"

import "./TestExtensionComponent.scss"

import { showRootComponent } from "../../Common"

class TestExtensionComponent extends Component<{}, {}> {

    constructor(props: {}) {
        super(props)
    }

    public componentDidMount() {
        // Initialize the SDK so the component can talk to Azure DevOps
        SDK.init({})
    }

    public render(): JSX.Element {
        return (
            <></>
        )
    }
}

export default TestExtensionComponent
showRootComponent(<TestExtensionComponent />)
```

The component doesn’t do much for now. The important part is the SDK.init() call inside componentDidMount(): it initializes the Azure DevOps Extension SDK so that our component can use it.

So, what if we want to get input data, for example the SampleInput we configured in the JSON? We can use the Azure DevOps Extension SDK for that.

To the constructor, add:

```tsx
this.state = {
    displayText: "default text",
}
```

and change the state type after the extends keyword:

```tsx
class TestExtensionComponent extends Component<{}, { displayText: string }>
```

SDK.init() returns a Promise, so inside componentDidMount() we can use then-chaining to set our state values.

```tsx
public componentDidMount() {
    SDK.init({}).then(() => {
        // witInputs holds the values configured for this control's inputs
        this.setState({
            displayText: SDK.getConfiguration().witInputs["SampleInput"]
        })
    })
}
```

Now, in render(), we can display the input data:

```tsx
public render(): JSX.Element {
    return (
        <>{this.state.displayText}</>
    )
}
```

You might also want the data of the current work item. We can get it with the IWorkItemFormService interface:

```tsx
// await is only valid inside an async function
const workItemFormService = await SDK.getService<IWorkItemFormService>(
    WorkItemTrackingServiceIds.WorkItemFormService
)
```

Then we can use this service to get specific fields:

```tsx
const fieldValues: Promise<{ [fieldName: string]: Object }> =
    workItemFormService.getFieldValues(fields, options)
```

Here, fields is an array of field reference names, for example System.Title, System.AssignedTo, or Custom.HasPineapple.

options is an object that implements the WorkItemOptions interface. A small class is an easy way to create one:

```tsx
class Options implements WorkItemOptions {
    returnOriginalValue: boolean = true
}
```

Now, the variable fieldValues is a Promise, so you can use then-chaining to get the data.

Say we want to display the title of the work item instead of the SampleInput. We could modify our code to look like this:

```tsx
public componentDidMount() {
    SDK.init({}).then(async () => {
        // The callback is async so that await can be used inside it
        const workItemFormService = await SDK.getService<IWorkItemFormService>(
            WorkItemTrackingServiceIds.WorkItemFormService
        )
        const fields = ["System.Title"]
        const options = new Options()
        const fieldValues: Promise<{ [fieldName: string]: Object }> =
            workItemFormService.getFieldValues(fields, options)

        fieldValues.then(data =>
            // Field values are typed as Object, so cast before storing them in string state
            this.setState({ displayText: data["System.Title"] as string })
        )
    })
}
```


Npm minify

Four ways to minify your code

Since web apps are sent over the internet, it’s good to keep them small so that the app loads quickly. For this reason, we have minifiers, which remove spaces, remove newlines and shorten variable names to a single character. Obfuscators are closely related: their goal is to make code harder to understand.

Unfortunately, the TypeScript compiler not only cannot produce minified output, it proactively wastes space. Even if your TypeScript code uses two spaces for indentation and has compact expressions like x+1, the TypeScript compiler produces output with four spaces and adds spaces between things like x + 1. As of 2018 there is no compiler option to avoid this. You can at least remove comments with "removeComments": true. If you are producing a d.ts file, however, this also removes the comments from the d.ts file. To avoid that, perform a separate comment-removing build that does not produce a d.ts file, e.g. tsc --declaration && tsc --removeComments.

1. If you’re using webpack to build your code, webpack -p reportedly minifies the application using “UglifyJSPlugin”. That flag belongs to older webpack versions; since webpack 4, production mode minifies with Terser by default. Parcel’s production mode, parcel build, also uses a minifier.

2. jsmin. This is a tiny (15KB), simple and old minifier, appropriate for simple programs/modules. It just removes spaces; it does not shorten variable names, so it does not provide the smallest possible size. Install it in a terminal with npm install --save-dev jsmin. Next, in the "scripts" section of package.json, add a minify script that uses jsmin. Replace name with the name of your JavaScript file, not your TypeScript file; multiple filenames are allowed. Then call that script from your build script:

```
"build": "tsc --declaration && npm run minify",
```

3. uglify-js. Uglify-js is 100 times larger than jsmin (1.5MB vs 15KB) but has many more features, is more popular, and is still small compared to webpack or the TypeScript compiler. Uglify can also produce a source map to your original TypeScript or ES6 code. Source maps let you debug the minified code as if it were the original: major web browsers run the minified code but show you the original code when you use their debugger. Confusingly, there are two packages, uglify-js and uglify-es.

Install it in a terminal with npm install --save-dev uglify-js. Note that npm tools installed with --save-dev are placed in node_modules/.bin. To run one directly from the command line, you need to write node_modules/.bin/uglifyjs instead of just uglifyjs. If you install globally with npm install --global uglify-js, you don’t have to do this. The advantage of --save-dev is that the dependency is listed in package.json. When your code is placed on a different machine, running npm install then installs all dependencies (except global ones). You can keep two copies, global and local, by running both install commands.

Minifying JavaScript with webpack

The point of minification is to convert the code into a smaller form. Safe transformations do this without losing any meaning by rewriting code. Good examples of this include renaming variables or even removing entire blocks of code based on the fact that they are unreachable (if (false)). Unsafe transformations can break code, as they can lose something implicit that the underlying code relies upon. For example, Angular 1 expects specific function parameter naming when using modules. Rewriting the parameters breaks code unless you take precautions against it in this case.

Since webpack 4, the production output gets minified using terser by default. Terser is an ES2015+ compatible JavaScript minifier. Compared to UglifyJS, the earlier standard for many projects, it’s a future-oriented option. Although webpack minifies the output by default, it’s good to understand how to customize the behavior should you want to adjust it further or replace the minifier.

Modifying JavaScript minification process

In webpack, the minification process is controlled through two configuration fields: the optimization.minimize flag to toggle it and the optimization.minimizer array to configure the process. To tune the defaults, we’ll attach terser-webpack-plugin to the project so that it’s possible to adjust it. To get started, include the plugin in the project with npm install terser-webpack-plugin --save-dev. To attach it to the configuration, define a part for it first:

```javascript
const TerserPlugin = require("terser-webpack-plugin");
```

If you build the project now (npm run build), you should notice that CSS has become smaller as it’s missing comments and has been concatenated:

```
⬡ webpack: Build Finished
asset vendor.js 126 KiB (name: vendor) (id hint: commons) 2 related assets
asset main.js 3.32 KiB (name: main) 2 related assets
asset 34.js 247 bytes 2 related assets
asset main.css 730 bytes (name: main)
webpack 5.5.0 compiled successfully in 6388 ms
```
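To illustrate the Angular 1 point above, the sketch below mimics, in simplified form, an injector that looks up dependencies by parameter name. `paramNames`, `inject` and the service values are hypothetical, and this is not Angular’s actual implementation. It only shows why mangling parameter names is an unsafe transformation.

```javascript
// Simplified: reads parameter names from a plain function's source text
// (no default values or destructuring)
function paramNames(fn) {
  const src = fn.toString();
  const list = src.slice(src.indexOf("(") + 1, src.indexOf(")"));
  return list.split(",").map((s) => s.trim()).filter(Boolean);
}

// Hypothetical injector: passes in services by the names of the function's parameters
function inject(fn, services) {
  return fn(...paramNames(fn).map((name) => services[name]));
}

const services = { $http: "http-service", $scope: "scope-object" };

function original($http, $scope) { return [$http, $scope]; }
// What a minifier might emit after mangling the parameter names
function minified(a, b) { return [a, b]; }

console.log(inject(original, services)); // [ 'http-service', 'scope-object' ]
console.log(inject(minified, services)); // [ undefined, undefined ]
```

Angular 1 offered explicit annotations to avoid this, such as the inline array form `['$http', function (a) { … }]`. It keeps the dependency names in strings, which minifiers leave untouched.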


Which Are The Best React Templates To Start App Development?

React Native templates are a great choice if you are going to develop mobile applications for Android or iOS. React Native is a flexible and powerful JavaScript framework that any developer can pick up.

Getting started with it may not feel easy, especially if you are new to building apps. Fortunately, React Native has a large community that has put together useful tools to help you get started.

These tools go by various names, such as starter kits, boilerplates, templates, or blueprints. They all share the same goal, but their features differ a little.

Templates provide themes, a set of useful components, and other resources that help you kickstart app development quickly. We have listed the top React templates below to help you start app development in the best possible way.

Material Kit React Native

It offers two hundred handcrafted elements, five customized plugins, and five example pages. It is inspired by Google’s Material Design.

The app template is fully coded and includes cards and components for mobile apps. Material Kit React Native is built on Expo, React Native, and Galio.io.

Galio Framework

It provides more than sixty elements and eleven screens. The pre-made templates of Galio Framework offer UI Kit blocks and adaptable base themes, with comprehensive documentation.

The Galio framework has two versions. One is for designers and another version is made for the use of developers.

Ignite CLI

Ignite CLI offers a variety of boilerplates and ships with a default one. It includes an API test screen and a modular plugin system.

It also provides a component library with usage examples, and its templates support Android and iOS.

Argon React Native

It offers two hundred handcrafted elements along with pre-built example screens. Five customized plugins and two hundred component variations make app development easier for users and developers alike.

The template is built on React Native, Expo, and Galio.io, and it includes cards and components for e-commerce mobile applications. Argon React Native also lets you move quickly from a design image to a real, working page.

NativeBase

NativeBase makes component styling easy. It supports Android and iOS and offers a wide range of components. You can also use third-party native libraries and import your own custom components.

NativeBase's documentation is comprehensive, which makes app development quicker and easier.

React Native Walkthrough Flow

It has a stunning design with a fast user interface. The template is compatible with Android and iOS and offers modularized strings, images, and colors.

The code in React Native Walkthrough Flow is extensible, and the template includes an implementation of a React Native walkthrough.

SB Admin React

It has a modular structure with a responsive navigation menu. The template uses webpack for build automation and supports nested routing, multi-level drop-down sidebars, and lazy loading. It is based on the SB Admin v2 Bootstrap theme.

React Native Starter

It is a mobile starter kit with sixteen pre-built components. React Native Starter supports Android and iOS and includes a chat application and a wide range of UI elements.

React Native Starter also offers multiple color schemes and social media sign-ins.

React Native Seed

It is a starter kit with customized boilerplates and three state management libraries to choose from. React Native Seed supports Android and iOS, and the kit is easy to download.

You can copy the code and work with Flow typing or TypeScript. React Native Seed supports both the plain React Native stack and the CRNA (Create React Native App) tool, which makes app development easier.

Baker

It provides a pre-configured toolset with various component sets and multiple code generators. Baker is based on Parse Server and has completely 'hackable' internals.

Baker offers three server script modes and uses Fastlane for app deployment.


Architecting a Progressive Web App using React Native: Step by Step Guidance!

A Progressive Web Application (PWA) is a disruptive innovation that combines the functionality of a native mobile app with the usability of a responsive website. Several brands have harnessed the benefits of PWAs to reach unprecedented heights of success.

Take a look at the success stories of the following brands after adopting PWAs, as published by Cloud Four.

Flipkart experienced a 40% increase in its re-engagement rate.

5miles was able to reduce its bounce rate by 50% and boost its conversion rate by 30%.

With its new PWA, Tinder cut load times from 11.91 sec to 4.69 sec, and engagement rose. The PWA is also 90% smaller than Tinder's native Android app.

Twitter saw a 65% increase in pages per session, a 75% increase in Tweets, and a 20% decrease in bounce rate.

Forbes saw a 43% increase in sessions per user, a 20% boost in ad viewability, and a 100% increase in engagement rate.

Thus, it is evident that progressive web app development is successfully fulfilling the demanding user expectations and challenging business requirements of modern times.

So, if you are planning to build a PWA too, the obvious question is: "Which framework is best suited for PWA development?" Many businesses and corporations prefer React Native for end-to-end PWA development and hire skilled React Native developers for it.

This blog guides you through building a PWA with the React Native framework. But before we begin, here are some crucial facts about PWAs and the reasons to choose React Native development.

Progressive Web App (PWA): Unique Strengths

Ordinary websites can be accessed from any device or browser, but they cannot take full advantage of the platform-specific hardware that ensures high performance. Native apps, on the other hand, can fully use platform-specific hardware and software to optimize performance, but they are available only on the platform they were built for. Progressive web apps combine the best of both worlds and deliver a native app-like experience in the browser. Their distinctive capabilities are as follows.

Delivers a native-like experience.

Loads instantly and responds promptly to user input.

Integrates push notifications for maximizing user engagement.

Offers a highly responsive UI and consistent UX across mobile phones, tablets, and PCs.

Integrates with users’ devices and utilizes the device capabilities to the fullest for delivering improved performance.

Employs cached data from earlier interactions to provide offline access to content and certain features.

Is easily discoverable and can be installed by simply clicking a pop-up, without visiting an app store.

Possesses cross-platform compatibility and involves a cost-efficient developmental cycle.

Is reliable and secure.

Takes up less storage space.

Why choose React Native Development for PWA Creation?

React Native is considered an apt progressive web app framework because it offers developers clear advantages. Let's look at the reasons:

It is a JavaScript library containing multiple in-built packages and ready-to-use plugins.

React's create-react-app package sets up the app with ready-to-use features. This speeds up development and makes it possible to create a PWA quickly.

The sw-precache-webpack-plugin generates a service worker that precaches the app's static assets, which makes it possible to build highly functional, feature-rich PWAs. Moreover, this plugin was integrated into create-react-app (later versions switched to Workbox), which makes things even easier.

Thus, if a PWA is built using React Native, making the end product progressive takes less effort.

Key Steps on Creating a PWA with React Native

Check out the key requirements for PWA creation.

Adoption of a Secure Network Connection

Serving your PWA over a secure network connection keeps user data safe and helps you gain users' trust. Some time ago, Google announced that Chrome would flag HTTP pages as "Not secure" and advised moving to the more secure HTTPS, so app development companies should serve their PWAs over HTTPS. HTTPS is also a prerequisite for other PWA features: service workers, which home-screen installation relies on, only run on pages served over HTTPS (localhost is allowed during development).

Implementing the “Add to Home Screen” Option

After you serve the app over HTTPS, do not forget to implement the "Add to Home Screen" option for your users. This improves the user experience and can boost your brand's conversion rate. To do this, you need to add a Web App Manifest (manifest.json) file to the PWA.

Employing a Web App Manifest

Serving a manifest.json file from the app's root lets users install the app on their smartphones effortlessly. It should contain details such as the name, icons, and description, plus the colors used for the app's splash screen. Your React Native developers can write manifest.json by hand or generate it with a tool. In a create-react-app project, this metadata file lives in the public folder and controls how the app appears on users' home screens; the page links to it with `<link rel="manifest" href="/manifest.json">`.

Given below are the key fields used in manifest.json. (Let's assume your app's name is Dizon.)

short_name: The app's name (Dizon) as displayed on the users' home screens.

name: The name the browser uses when users add the application to their home screen, for example in the prompt "Add Dizon to Home Screen."

icons: The app icons shown on the users' home screens.

start_url: The URL where the PWA starts when it is launched.

theme_color: Sets the color of the browser's toolbar.

background_color: The background color of the splash screen shown when the app is launched.

display: Controls how much browser UI is shown; for example, standalone runs the app in its own window and fullscreen uses the whole screen.
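To make these fields concrete, here is a small Node.js sketch that builds a manifest for the hypothetical Dizon app and prints it as JSON. The icon paths, colors, and start URL are placeholder assumptions, not values any tool requires; the printed output is what you would save as public/manifest.json.

```javascript
// Builds a Web App Manifest object for a hypothetical app named "Dizon".
// Icon paths and colors are placeholders; replace them with your own.
function buildManifest(appName, shortName) {
  return {
    short_name: shortName,       // shown under the home-screen icon
    name: appName,               // used in install prompts
    icons: [
      // 192px and 512px sizes are commonly recommended for installable PWAs
      { src: 'icons/icon-192.png', sizes: '192x192', type: 'image/png' },
      { src: 'icons/icon-512.png', sizes: '512x512', type: 'image/png' },
    ],
    start_url: '/',              // where the app opens when launched
    theme_color: '#1e88e5',      // browser toolbar color
    background_color: '#ffffff', // splash-screen background
    display: 'standalone',       // own window, no browser UI
  };
}

const manifest = buildManifest('Dizon', 'Dizon');
// Pretty-print so the output can be saved as public/manifest.json
console.log(JSON.stringify(manifest, null, 2));
```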

Implementing Custom Splash Screen

Whenever users launch a PWA on an Android device, a blank white screen is displayed until the app is ready for use. Because this screen can stay visible for a noticeable time, a custom splash screen is important for a better user experience. A custom splash screen lets you show an icon representing your brand on a custom background, giving the PWA a native look and feel. (On Android, Chrome generates this splash screen from the manifest's name, background_color, and icons.)

Using Pusher to Add Real-time Functionality

A React Native app development company can use Pusher to add real-time functionality to a PWA, because Pusher simplifies binding UI interactions to events triggered by the server or the client. The setup process involves:

Logging in to the dashboard and creating a new app.

Copying the app_id, key, secret, and cluster values and storing them for later use.

Setting up a Node.js server that triggers events using Pusher.

Creating a file called server.js in the project's root directory with the required content. Further details can be found in Pusher's documentation.

Integrating a Service Worker

A PWA development company needs to integrate a service worker: a script that runs in the background, separate from the web page, with no direct access to the DOM. It handles tasks such as installation, push notifications, and caching. Service workers play a vital role by intercepting network requests and caching responses, which makes offline use possible.
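The sketch below shows one common strategy, cache-first: precache a few files at install time, then answer each request from the cache and fall back to the network. The file name service-worker.js, the cache name, and the URL list are assumptions. The lookup logic is pulled into cacheFirst, with the cache storage and fetch function passed in, so it can be exercised outside a browser; the event wiring only runs inside a real service worker.

```javascript
// service-worker.js (hypothetical file name): a minimal cache-first sketch.
const CACHE_NAME = 'pwa-cache-v1';                            // assumed cache name
const PRECACHE_URLS = ['/', '/index.html', '/manifest.json']; // assumed asset list

// Return the cached response if there is one, otherwise go to the network.
async function cacheFirst(request, cacheStorage, fetchFn) {
  const cached = await cacheStorage.match(request);
  return cached || fetchFn(request);
}

// Only register handlers when running as an actual service worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('install', (event) => {
    // Precache the app shell so it is available offline.
    event.waitUntil(caches.open(CACHE_NAME).then((cache) => cache.addAll(PRECACHE_URLS)));
  });

  self.addEventListener('fetch', (event) => {
    // Intercept every request and serve it cache-first.
    event.respondWith(cacheFirst(event.request, caches, fetch));
  });
}
```

The page would then register the script with navigator.serviceWorker.register('/service-worker.js'), which only succeeds over HTTPS or on localhost.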

Auditing the Code with Lighthouse

Auditing the code with Lighthouse, Google's automated open-source tool, helps a web app development company monitor the quality of a web application. Lighthouse runs a series of audits covering performance, accessibility, best practices, and more, and generates a report. These reports are useful for fixing the weak aspects of the PWA, and Lighthouse also gives guidance on resolving each issue to improve performance. You can run it from Chrome DevTools, from the command line, or as a Node module.

Final Verdict:

React progressive web apps help businesses across diverse domains establish their digital footprint. In today's smartphone-dominated world, PWAs have become an absolute necessity for businesses that want loyal and happy customers. Therefore, if you are planning to develop a web app or a website, it is advisable to deploy it as a PWA, since this makes it more convenient for users and improves user engagement and experience.

I hope this blog was beneficial.

Do share your opinions and experiences on PWAs in the comments section.



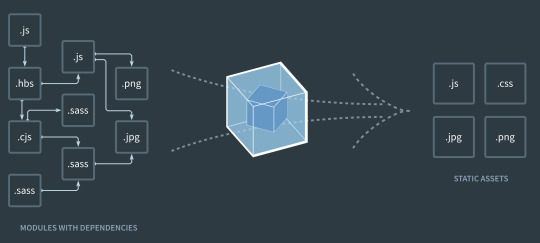

Setting up an ES6 Project Using Babel and webpack

In this article, we’re going to look at creating a build setup for handling modern JavaScript (running in web browsers) using Babel and Webpack.

This is needed to ensure that our modern JavaScript code works in a wider range of browsers than it otherwise would.

JavaScript, like most web-related technologies, is evolving all the time. In the good old days, we could drop a couple of <script> tags into a page, maybe include jQuery and a couple of plugins, then be good to go.

However, since the introduction of ES6, things have got progressively more complicated. Browser support for newer language features is often patchy, and as JavaScript apps become more ambitious, developers are starting to use modules to organize their code. In turn, this means that if you’re writing modern JavaScript today, you’ll need to introduce a build step into your process.

As you can see from the links beneath, converting down from ES6 to ES5 dramatically increases the number of browsers that we can support.

ES6 compatibility

ES5 compatibility

The purpose of a build system is to automate the workflow needed to get our code ready for browsers and production. This may include steps such as transpiling code to a differing standard, compiling Sass to CSS, bundling files, minifying and compressing code, and many others. To ensure these are consistently repeatable, a build system is needed to initiate the steps in a known sequence from a single command.

Prerequisites

In order to follow along, you’ll need to have both Node.js and npm installed (they come packaged together). I would recommend using a version manager such as nvm to manage your Node installation (here’s how), and if you’d like some help getting to grips with npm, then check out SitePoint’s beginner-friendly npm tutorial.

Set Up

Create a root folder somewhere on your computer and navigate into it from your terminal/command line. This will be your <ROOT> folder.

Create a package.json file with this:

```shell
npm init -y
```

Note: The -y flag creates the file with default settings, and means you don’t need to complete any of the usual details from the command line. They can be changed in your code editor later if you wish.

Within your <ROOT> folder, make the directories src, src/js, and public. The src/js folder will be where we’ll put our unprocessed source code, and the public folder will be where the transpiled code will end up.

Transpiling with Babel

To get ourselves going, we’re going to install babel-cli, which provides the ability to transpile ES6 into ES5, and babel-preset-env, which allows us to target specific browser versions with the transpiled code.

```shell
npm install babel-cli babel-preset-env --save-dev
```

You should now see the following in your package.json:

```json
"devDependencies": {
  "babel-cli": "^6.26.0",
  "babel-preset-env": "^1.6.1"
}
```

Whilst we're in the package.json file, let's change the scripts section to read like this:

```json
"scripts": {
  "build": "babel src -d public"
},
```

This gives us the ability to call Babel via a script, rather than directly from the terminal every time. If you’d like to find out more about npm scripts and what they can do, check out this SitePoint tutorial.

Lastly, before we can test out whether Babel is doing its thing, we need to create a .babelrc configuration file. This is what our babel-preset-env package will refer to for its transpile parameters.

Create a new file in your <ROOT> directory called .babelrc and paste the following into it:

```json
{
  "presets": [
    [
      "env",
      {
        "targets": {
          "browsers": ["last 2 versions", "safari >= 7"]
        }
      }
    ]
  ]
}
```

This will set up Babel to transpile for the last two versions of each browser, plus Safari at v7 or higher. Other options are available depending on which browsers you need to support.

With that saved, we can now test things out with a sample JavaScript file that uses ES6. For the purposes of this article, I've modified a copy of leftpad to use ES6 syntax in a number of places: template literals, arrow functions, const and let.

```javascript
"use strict";

const leftPad = (str, len, ch) => {
  // cache[n] is a string of n spaces, used for the common space-padding case
  const cache = [
    "",
    " ",
    "  ",
    "   ",
    "    ",
    "     ",
    "      ",
    "       ",
    "        ",
    "         "
  ];
  str = str + "";
  len = len - str.length;
  if (len <= 0) return str;
  if (!ch && ch !== 0) ch = " ";
  ch = ch + "";
  if (ch === " " && len < 10) return cache[len] + str;
  let pad = "";
  // Build the padding by doubling ch, appending it whenever the current bit of len is set
  while (true) {
    if (len & 1) pad += ch;
    len >>= 1;
    if (len) ch += ch;
    else break;
  }
  return `${pad}${str}`;
};
```

Save this as src/js/leftpad.js and from your terminal run the following:

```shell
npm run build
```

If all is as intended, in your public folder you should now find a new file called js/leftpad.js. If you open that up, you'll find it no longer contains any ES6 syntax and looks like this:

```javascript
"use strict";

var leftPad = function leftPad(str, len, ch) {
  var cache = ["", " ", "  ", "   ", "    ", "     ", "      ", "       ", "        ", "         "];
  str = str + "";
  len = len - str.length;
  if (len <= 0) return str;
  if (!ch && ch !== 0) ch = " ";
  ch = ch + "";
  if (ch === " " && len < 10) return cache[len] + str;
  var pad = "";
  while (true) {
    if (len & 1) pad += ch;
    len >>= 1;
    if (len) ch += ch;else break;
  }
  return "" + pad + str;
};
```

Continue reading "Setting up an ES6 Project Using Babel and webpack"

by Chris Perry via SitePoint https://ift.tt/2HA1AmE


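Setting Up an Environment for Building Chrome Extensions with TypeScript and React

Let's put together a comfortable environment for building Chrome extensions.

The final directory structure will look like this: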

```
env-extension
├── dist            // load this directory as the extension
├── public
│   ├── index.html
│   └── manifest.json
├── src
│   ├── background
│   │   └── background.ts
│   ├── content
│   │   ├── content.scss
│   │   └── content.tsx
│   └── popup
│       └── index.tsx
├── gulpfile.js
├── package-lock.json
├── package.json
├── tsconfig.json
└── webpack.config.js
```

Setting up npm

Create a directory and initialize npm.

```shell
$ mkdir env-extension && cd env-extension
$ npm init -y
```

Setting up React + TypeScript

Install the required packages.

```shell
$ npm install --save-dev webpack webpack-cli html-loader html-webpack-plugin file-loader ts-loader typescript @types/react @types/react-dom copy-webpack-plugin
$ npm install react react-dom
```

Here is a quick rundown of the packages.

webpack

A module bundler. Needed to make the JavaScript files run in the browser.

webpack-cli

The command set for webpack.

file-loader

Places files loaded with import or require into the output directory.

html-loader

Exports HTML as a string.

html-webpack-plugin

Simplifies creating HTML files.

copy-webpack-plugin

Copies existing files or directories into the build directory.

ts-loader

A TypeScript loader for webpack.

typescript

Needed for TypeScript support.

@types/react, @types/react-dom

Packages containing the type definitions for React.

Create tsconfig.json and webpack.config.js. We'll fill in the webpack config later!

```json
// tsconfig.json
{
  "compilerOptions": {
    "outDir": "./dist/",
    "allowSyntheticDefaultImports": true,
    "sourceMap": true,
    "noImplicitAny": true,
    "module": "esnext",
    "moduleResolution": "node",
    "target": "es5",
    "lib": ["es5", "es6", "dom"],
    "jsx": "react"
  },
  "include": ["./src/**/*"]
}
```

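Preparing the Chrome extension files

popup

The popup needs both an HTML file and a JS file, so we'll prepare both.

Create src/popup/index.tsx.

```tsx
// src/popup/index.tsx
import React from 'react';
import ReactDOM from 'react-dom';

const Popup = () => <h1>Hello world</h1>;

// Mount the Popup component into the #root element of index.html
ReactDOM.render(<Popup />, document.getElementById("root"));
```

```html
<!DOCTYPE html>
<!-- public/index.html -->
<html>
  <head>
    <meta charset="utf-8">
    <title>env test</title>
  </head>
  <body>
    <div id="root"></div>
  </body>
</html>
```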

background

For now, add a script just to check that it runs.

```ts
// src/background/background.ts
console.log("background test");
```

content

Place a button so we can check that the script works even without a stylesheet.

```tsx
// src/content/content.tsx
import React from 'react';
import ReactDOM from 'react-dom';

const Button = () => <button>Hello</button>;

// Create a container element and append it to the page
const app = document.createElement('div');
app.id = 'extension-button';
document.body.appendChild(app);

ReactDOM.render(<Button />, app);
```

manifest

Create the manifest. This time we keep it to the minimum needed for testing.

```json
// public/manifest.json
{
  "manifest_version": 2,
  "version": "0.0.1",
  "name": "env-sample",
  "description": "sample",
  "browser_action": {
    "default_popup": "index.html"
  },
  "background": {
    "scripts": ["background.js"]
  },
  "content_scripts": [
    {
      "matches": ["http://*/*", "https://*/*"],
      "js": ["content.js"]
    }
  ],
  "content_security_policy": "script-src 'self' 'unsafe-eval'; object-src 'self'"
}
```

Creating the build configuration

Configure webpack.

```javascript
// webpack.config.js
const webpack = require('webpack');
const HtmlWebPackPlugin = require('html-webpack-plugin');
const CopyPlugin = require('copy-webpack-plugin');

module.exports = {
  mode: "production",
  // One bundle per extension part; output names become popup.js, background.js, content.js
  entry: {
    popup: './src/popup/index.tsx',
    background: './src/background/background.ts',
    content: './src/content/content.tsx',
  },
  output: {
    path: __dirname + '/dist',
  },
  module: {
    rules: [
      {
        test: /\.tsx?$/,
        loader: 'ts-loader',
      },
      {
        test: /\.html$/,
        use: [
          {
            loader: 'html-loader',
            options: { minimize: true },
          },
        ],
      },
    ],
  },
  resolve: {
    extensions: ['.ts', '.js', '.tsx', '.jsx']
  },
  plugins: [
    new HtmlWebPackPlugin({
      template: './public/index.html',
      filename: './index.html',
      chunks: ['popup'] // put the entry name here
    }),
    new CopyPlugin({
      patterns: [
        { from: './public/manifest.json', to: 'manifest.json' }
      ]
    }),
  ],
}
```

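Automating the build

Add the following to package.json so that file changes are detected and the build runs automatically.

```
// package.json
// webpack's mode defaults to production, and webpack.config.js is read
// automatically when present, so build-watch omits both flags
{
  ...
  "scripts": {
    "build": "webpack --mode production --config webpack.config.js",
    "build-watch": "webpack --watch",
    ...
  },
  ...
}
```

Running $ npm run build-watch watches your files and rebuilds as soon as something changes.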

Bonus: linting and auto-fixing

While we're at it, let's add ESLint.

First, install the packages.

```shell
$ npm install --save-dev eslint-config-airbnb eslint-plugin-import eslint-plugin-react eslint-plugin-jsx-a11y eslint
$ npm install --save-dev gulp gulp-eslint
```

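eslint

Finds formatting inconsistencies and other problems in JavaScript code and reports them.

eslint-config-airbnb

Needed to apply Airbnb's configuration.

eslint-plugin-import

Adds import/export support to ESLint.

eslint-plugin-react

Adds React support to ESLint.

eslint-plugin-jsx-a11y

Adds static analysis of JSX for accessibility issues.

gulp

Needed to use gulp.

gulp-eslint

Needed to run ESLint from gulp.

Add the configuration file. Adjust the settings to suit your project.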

```javascript
// .eslintrc.js
module.exports = {
  "env": {
    "browser": true,
    "es6": true
  },
  "extends": "airbnb",
  "parserOptions": {
    "sourceType": "module"
  },
  "rules": {
    "indent": ["error", 2],
    "linebreak-style": ["error", "unix"],
    "quotes": ["error", "single"],
    "semi": ["error", "always"]
  }
};
```

Turn it into a gulp task to automate it.

```javascript
// gulpfile.js
const gulp = require("gulp");
const eslint = require("gulp-eslint");

const applyLintPaths = [
  "src/**/*.{js,jsx,ts,tsx}",
  "gulpfile.js"
];

/**
 * lint
 */
gulp.task("lint", function () {
  return (
    gulp.src(applyLintPaths)
      .pipe(eslint({ fix: true }))
      .pipe(eslint.format())
      .pipe(gulp.dest((file) => file.base)) // overwrite fixed file
      .pipe(eslint.failAfterError())
  );
});

gulp.task("lint-watch", function () {
  return (
    gulp.watch(applyLintPaths, gulp.task("lint"))
  );
});
```

Update package.json.

```
// package.json
{
  ...
  "scripts": {
    ...
    "lint": "gulp lint",
    "lint-watch": "gulp lint-watch",
    ...
  },
}
```

You can lint with $ npm run lint or $ npm run lint-watch.

Bonus: Sass support

Make it possible to use import '**.scss' in the source.

First, install the packages.

```shell
$ npm install --save-dev style-loader css-loader sass-loader sass
```

style-loader

Needed to inject CSS into the DOM.

css-loader

Needed to resolve CSS files loaded with import or require.

sass

A JavaScript implementation of Sass.

sass-loader

Needed to load Sass/SCSS files and compile them to CSS.

Update the build configuration.

```javascript
// webpack.config.js
module.exports = {
  module: {
    rules: [
      ...
      {
        test: /\.s[ac]ss$/i,
        use: [
          // Creates `style` nodes from JS strings
          "style-loader",
          // Translates CSS into CommonJS
          "css-loader",
          // Compiles Sass to CSS
          "sass-loader",
        ],
      },
    ],
  },
};
```

Update content and write some Sass.

```tsx
// src/content/content.tsx
...
import './content.scss';
...
```

```scss
// src/content/content.scss
$bgcolor: black;
$color: white;

button {
  background-color: $bgcolor;
  color: $color;
}
```

Setting up path aliases in TypeScript

Changes to tsconfig.json

```json
{
  "compilerOptions": {
    ...
    "baseUrl": "./",
    "paths": {
      "@/*": ["src/*"]
    }
  },
  ...
}
```

Changes to webpack.config.js

```javascript
const path = require('path');

module.exports = {
  ...
  resolve: {
    alias: {
      '@': path.resolve(__dirname, 'src/')
    },
    extensions: ['.ts', '.js', '.tsx', '.jsx']
  },
  ...
}
```
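To see what the '@' alias accomplishes, here is a toy resolver that rewrites an import specifier the way this alias configuration intends: '@/foo' becomes '<project>/src/foo'. It is an illustration only, not webpack's real resolver (which also handles extensions, index files, and node_modules); the helper name resolveAlias and the /project root are hypothetical.

```javascript
const path = require('path');

// Toy alias resolver (hypothetical helper, not webpack's real resolver).
// POSIX paths are used so the output is the same on every OS.
function resolveAlias(specifier, aliases) {
  for (const [key, target] of Object.entries(aliases)) {
    // Match '@' or '@/...', but not scoped packages such as '@types/react'.
    if (specifier === key || specifier.startsWith(key + '/')) {
      return path.posix.join(target, specifier.slice(key.length));
    }
  }
  return specifier; // not aliased: left to normal module resolution
}

const aliases = { '@': path.posix.resolve('/project', 'src') }; // '/project' is a placeholder root
console.log(resolveAlias('@/popup/index', aliases)); // /project/src/popup/index
console.log(resolveAlias('@types/react', aliases));  // @types/react
```

Requiring the "/" after the alias key is what keeps scoped npm packages from being rewritten.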

If you're using Vite, change vite.config.ts instead.

```typescript
import { defineConfig } from 'vite'
import react from '@vitejs/plugin-react'

// https://vitejs.dev/config/
export default defineConfig({
  ...
  plugins: [react()],
  resolve: {
    alias: {
      "@/": `${__dirname}/src/`,
    }
  }
})
```

Set up lint as well

```shell
$ npm install --save-dev eslint-import-resolver-webpack
```

Changes to .eslintrc.js

```javascript
// path is needed for path.join below
const path = require('path');

module.exports = {
  ...
  "settings": {
    "react": {
      "version": "detect"
    },
    "import/resolver": {
      "webpack": {
        "config": path.join(__dirname, "webpack.config.js")
      }
    }
  }
}
```

References

The webpack build settings needed to develop a Chrome Extension with React

Eslint --fix supresses errors but doesn't actually change file

sass-loader

npm

React: when setting up import aliases, you have to configure all three of webpack, TypeScript, and ESLint (Qiita)

[TypeScript] How to set up path aliases

(Updated 2022/02) Setting the alias path to ~ with Vite + TypeScript


Popular Front End Development Tools You Should Know

If you are just getting started with JavaScript, the number of tools and technologies you'll hear about may be overwhelming. And you might have a hard time deciding which tools you actually need.

Or maybe you're familiar with the tools, but you haven't given much thought to what problems they solve and how miserable your life would be without their help.

I believe it is important for Software Engineers and Developers to understand the purpose of the tools we use every day.

That's why, in this article, I look at NPM, Babel, Webpack, ESLint, and CircleCI and I try to clarify the problems they solve and how they solve them.

NPM

NPM (npm) is the default package manager for JavaScript development and comes bundled with Node.js. It helps you find and install packages (programs) that you can use in your programs.

You can add npm to a project simply by using the "npm init" command. When you run this command it creates a "package.json" file in the current directory. This is the file where your dependencies are listed, and npm views it as the ID card of the project.

You can add a dependency with the "npm install (package_name)" command.

When you run this command, npm goes to the remote registry and checks whether there is a package with this name. If it finds one, a new dependency entry is added to your package.json, and the package, along with its own dependencies, is downloaded from the registry.

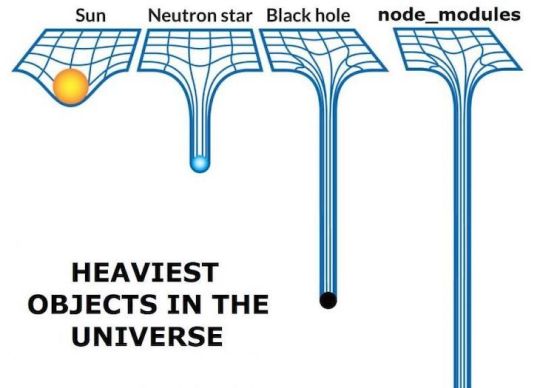

You can find downloaded packages or dependencies under the "node_modules" folder. Just keep in mind that it usually gets pretty big – so make sure to add it to .gitignore.

npm not only eases the process of finding and downloading packages but also makes it easier to work collaboratively on a project.

Without NPM, it would be hard to manage external dependencies. You would need to download the correct versions of every dependency by hand when you join an existing project. And that would be a real hassle.

With the help of npm, you can just run "npm install" and it will install all external dependencies for you. Then you can just run it again anytime someone on your team adds a new one.

Babel

Babel is a JavaScript compiler, or transpiler, that translates ECMAScript 2015+ code into code that older JavaScript engines can understand.

Babel is the most popular JavaScript compiler, and tools like create-react-app and Vue CLI use it by default. That said, the concepts we will talk about here are not specific to Babel and apply to any JavaScript compiler.

Why do you need a compiler?

"Why do we need a compiler, isn't JavaScript an interpreted language?" you may ask if you are familiar with the concepts of compiled and interpreted languages.

It's true that we usually call something a "compiler" if it translates our human-readable code to an executable binary that can be understood by the CPU. But that is not the case here.

The term transpiler may be more appropriate, since transpilers are a subset of compilers: they translate code from one programming language to another (in this example, from modern JS to an older version).

JavaScript is the language of browsers. But browsers have a problem: cross-compatibility. JavaScript tools and the language itself are evolving rapidly, and many browsers fail to keep pace, which results in compatibility issues.

You probably want to write code in the most recent versions of JavaScript so you can use its new features. But if the browser your code is running in has not implemented some of those features in its JavaScript engine, the code will not execute properly in that browser.

This is a complex problem because every browser implements the features at a different speed. And even if they do implement those new features, there will always be people who use an older version of their browser.

So what if you want to be able to use the recent features but also want your users to view those pages without any problems?

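Before Babel, we used polyfills to supply modern features in browsers that did not support them. Babel rewrites new syntax (such as arrow functions) into older equivalents on its own; for new built-in functions and objects (such as Promise), it can add polyfills from core-js behind the scenes once configured to, for example through @babel/preset-env's useBuiltIns option.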
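A polyfill is ordinary code that adds a missing built-in only when the browser lacks it. Below is a simplified sketch for Array.prototype.includes (an ES2016 method). It follows the method's SameValueZero comparison, so NaN is found, but it is not a complete, spec-exact implementation; real projects typically take polyfills from core-js instead. The helper name arrayIncludes is hypothetical.

```javascript
// Simplified includes(): SameValueZero comparison (NaN matches NaN).
function arrayIncludes(array, searchElement, fromIndex = 0) {
  const len = array.length;
  const n = Math.trunc(fromIndex) || 0;
  // A negative fromIndex counts back from the end, as in the built-in method.
  let i = n >= 0 ? n : Math.max(len + n, 0);
  for (; i < len; i++) {
    const item = array[i];
    // item !== item is only true for NaN
    if (item === searchElement || (item !== item && searchElement !== searchElement)) {
      return true;
    }
  }
  return false;
}

// Feature detection: install only if the browser has no native version.
if (!Array.prototype.includes) {
  Object.defineProperty(Array.prototype, 'includes', {
    value: function (searchElement, fromIndex) {
      return arrayIncludes(this, searchElement, fromIndex);
    },
    writable: true,
    configurable: true,
  });
}
```

Because of the feature check, modern browsers keep their native includes and only older engines get this fallback.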

How do transpilers/compilers work?

Babel works similarly to other compilers: it has parsing, transformation, and code generation stages.

We won't go in-depth here into how it works, since compilers are complicated things. But to understand the basics of how compilers work, you can check out the-super-tiny-compiler project, which Babel's official documentation also mentions as helpful for understanding how Babel works.

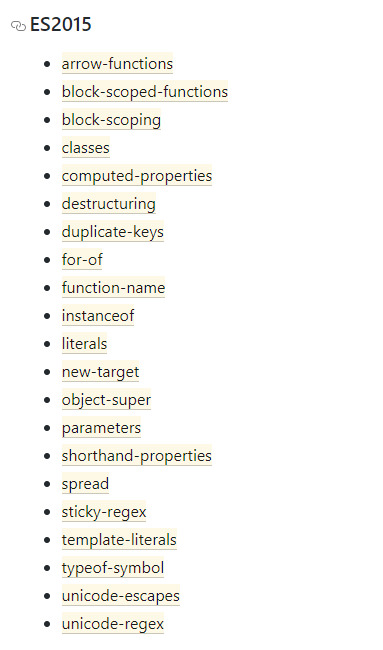

We can usually get away with knowing only about Babel plugins and presets. Plugins are the small transforms Babel uses behind the scenes to compile your code to older versions of JavaScript; you can think of each modern feature as a plugin. Babel's documentation has the full list of plugins.

List of plugins for ES5

Presets are collections of plugins. If you want to use Babel for a React project you can use the pre-made @babel/preset-react which contains the necessary plugins.

React Preset Plugins

You can add plugins by editing the Babel config file.

Do you need Babel for your React App?

For React, you need a compiler because React code generally uses JSX, which browsers cannot run until it is compiled to plain JavaScript. React code also typically relies on ES6 syntax.

Luckily, when you create a project with create-react-app, it comes with Babel already configured and you usually do not need to modify the config.

Examples of a compiler in action

Babel's website has an online compiler and it is really helpful to understand how it works. Just plug in some code and analyze the output.

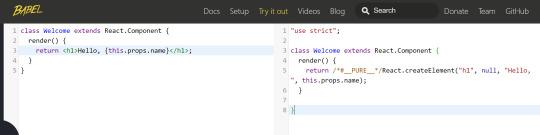

Webpack

Webpack is a static module bundler. When you create a new project, most JavaScript frameworks/libraries use it out of the box nowadays.

If the phrase "static module bundler" sounds confusing, keep reading because I have some great examples to help you understand.

Why do you need a bundler?

In web apps you're going to have a lot of files. This is especially true of Single Page Applications (React, Vue, Angular), where each file has its own dependencies.

What I mean by a dependency is an import statement – if file A needs to import file B to run properly, then we say A depends on B.

In small projects, you can handle the module dependencies with <script> tags. But when the project gets larger, the dependencies rapidly become hard to manage.

Perhaps more importantly, dividing the code into multiple files can make your website load more slowly. The browser has to send more requests than it would for one large file, and since every request carries its own HTTP headers, your website ends up consuming more bandwidth.

We, as developers, want our code to be modular. We divide it into multiple files because we do not want to work with one file with thousands of lines. Still, we also want our websites to be performant, to use less bandwidth, and to load fast.

So now, we'll see how Webpack solves this issue.

How Webpack works

When we were talking about Babel, we mentioned that JavaScript code needs to be transpiled before the deployment.

But compiling with Babel is not the only operation you need before deploying your project.

You usually need to uglify it, transpile it, compile the SASS or SCSS to CSS if you are using any preprocessors, compile the TypeScript if you are using it...and as you can see, this list can get long easily.

You do not want to deal with all those commands and operations before every deployment. It would be great if there was a tool that did all that for you in the correct order and correct way.

The good news – there is: Webpack.

Webpack also provides features like a local server with hot reload (they call it hot module replacement) to make your development experience better.

So what's hot reloading? It means that whenever you save your code, it gets compiled and deployed to the local HTTP server running on your machine. Whenever a file changes, the server also sends a message to your browser, which swaps in the updated code, so you do not even need to refresh the page.

If you have ever used "npm run serve", "npm start" or "npm run dev" in a project generated by a framework's CLI (such as Vue CLI or Create React App), those commands often start Webpack's dev server behind the scenes.
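Your own code can opt in to hot module replacement through webpack's `module.hot` API. The fragment below is a sketch assuming webpack 5 with webpack-dev-server (started with `npx webpack serve`); `./print.js` and `printMe` are hypothetical names standing in for one of your own modules.

```javascript
// src/index.js: a hypothetical entry file in a webpack 5 project.
import { printMe } from "./print.js";

printMe();

// `module.hot` only exists when HMR is enabled, so guard before using it.
if (module.hot) {
  // Runs after the dev server pushes a new version of ./print.js:
  // the updated module is swapped in without reloading the page.
  module.hot.accept("./print.js", () => {
    printMe();
  });
}
```

The `if (module.hot)` guard keeps the same file working in production builds, where HMR is disabled and `module.hot` is undefined.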

Webpack starts from the entry point of your project (typically an index file such as src/index.js) and generates an Abstract Syntax Tree (AST) for that file. You can think of this as parsing the code, the same operation compilers perform. Webpack then looks for import statements in the tree and follows them recursively to build a graph of dependencies.
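A toy version of this step might look like the sketch below. It is not how webpack is implemented: to stay self-contained, it keeps the files in an in-memory object, finds imports with a regular expression instead of a real AST, and uses import paths as-is without resolving them. The file names and contents are hypothetical.

```javascript
// Toy dependency-graph builder. Simplifications (not webpack's real behaviour):
// in-memory files, a regex instead of an AST, and no real path resolution.
const files = {
  "./index.js": 'import { greet } from "./greet.js";\nimport "./footer.js";\ngreet();',
  "./greet.js": 'import { name } from "./name.js";\nexport const greet = () => console.log("Hi " + name);',
  "./name.js": 'export const name = "Webpack";',
  "./footer.js": 'import { name } from "./name.js";\nexport const footer = "Built with " + name;',
};

// Matches `import ... from "x"` as well as bare `import "x"`; captures the path.
const IMPORT_RE = /import\s+(?:[^'"]*?\s+from\s+)?["']([^"']+)["']/g;

function findImports(source) {
  return [...source.matchAll(IMPORT_RE)].map((match) => match[1]);
}

// Depth-first walk from the entry point. `graph` maps each file to its direct
// dependencies; a file that is already in the graph is not visited again.
function buildGraph(entry, graph = {}) {
  if (entry in graph) return graph;
  if (!(entry in files)) throw new Error(`Cannot resolve ${entry}`);
  graph[entry] = findImports(files[entry]);
  for (const dep of graph[entry]) buildGraph(dep, graph);
  return graph;
}

console.log(buildGraph("./index.js"));
```

Because each file is added to `graph` before its imports are visited, a file imported twice (here `./name.js`) is processed only once, and circular imports do not cause infinite recursion.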

It then wraps each file's code in a function, much like an IIFE, to modularize it (remember, putting code inside a function restricts its scope). This way, the variables and functions declared in one file are not accessible to other files unless they are exported.

Without this step, bundling would be like copying and pasting the code of each imported file into one place, where all of it would share the same scope.

Webpack does many other advanced things behind the scenes, but this is enough to understand the basics.

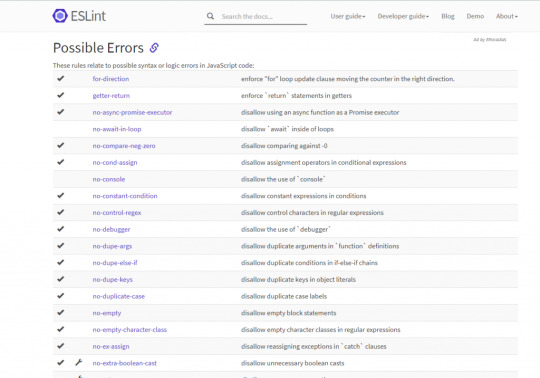

Bonus – ESLint

Code quality is important and helps keep your projects maintainable and easy to extend. While most of us developers recognize the significance of clean code, we sometimes ignore the long-term consequences under the pressure of deadlines.

Many companies decide on coding standards and encourage developers to follow them. But how can you make sure that your code meets the standards?

Well, you can use a tool like ESLint to enforce rules in the code. For example, you can configure a rule that requires or disallows semicolons in your JavaScript code. When ESLint runs as part of your build and a rule is set to report errors, breaking that rule makes the build fail, so you cannot ignore it unless you disable the rule.

Linters let you enforce your own standards by writing custom rules. But you can also use the pre-made ESLint configs published by big tech companies to help developers get into the habit of writing clean code.

You can take a look at Google's ESLint config (the eslint-config-google package); it is the one I prefer.
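As a sketch of how such rules are configured, here is a config in ESLint's legacy `.eslintrc.js` format that extends Google's config and turns on a couple of rules. It assumes the eslint-config-google package is installed; ESLint 9 defaults to the newer flat `eslint.config.js` format, where the same rules are declared differently.

```javascript
// .eslintrc.js: legacy "eslintrc" format (the default before ESLint 9).
// Assumes eslint and eslint-config-google are installed.
module.exports = {
  // Start from ESLint's recommended rules plus Google's style rules.
  extends: ["eslint:recommended", "google"],
  parserOptions: {
    ecmaVersion: 2020,
    sourceType: "module",
  },
  rules: {
    // Require semicolons; "error" severity makes violations fail the lint run.
    semi: ["error", "always"],
    // Flag variables that are declared but never used, a common source of bugs.
    "no-unused-vars": "error",
  },
};
```

Running `npx eslint src/` then reports every violation and exits with a non-zero code when any rule set to "error" is broken, which is what lets a build or CI step fail on lint errors.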

ESLint helps you get used to best practices, but that's not its only benefit. ESLint also warns you about possible bugs/errors in your code so you can avoid common mistakes.

Bonus – CI/CD (CircleCI)

Continuous Integration/Continuous Delivery (CI/CD) has gained a lot of popularity in recent years as many companies have adopted Agile principles.

Tools like Jenkins and CircleCI let you automate the testing and deployment of your software, so you can deploy more often and more reliably without running difficult, error-prone build processes by hand.

I mention CircleCI here because it has a free plan, is used frequently in JavaScript projects, and is quite easy to use.

Let's go over an example. Say you have a deployment/QA server and a Git repository, and you want to deploy your changes to that server. Here is an example process:

1. Push the changes to Git
2. Connect to the server
3. Create a Docker container and run it
4. Pull the changes to the server and download all the dependencies (npm install)
5. Run the tests to make sure nothing is broken
6. Use a tool like ESLint/Sonar to ensure code quality
7. Merge the code if everything is fine

With CircleCI, you can automate all of these operations. You can configure it to run the steps above whenever you push a change to Git. If anything goes wrong, for example a failing test, the build is marked as failed, so the broken change does not get merged or deployed.
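Conceptually, a CI service runs the steps listed above in order and stops at the first failure. The sketch below models only that behaviour; `runPipeline` and the step functions are hypothetical stand-ins, not CircleCI's API (real CircleCI pipelines are defined in a `.circleci/config.yml` file).

```javascript
// Hypothetical model of a CI pipeline: run steps in order, stop at the first failure.
function runPipeline(steps) {
  const log = [];
  for (const { name, run } of steps) {
    try {
      run(); // a step signals failure by throwing
      log.push(`${name}: passed`);
    } catch (err) {
      log.push(`${name}: failed (${err.message})`);
      return { ok: false, log }; // later steps, such as merging, never run
    }
  }
  return { ok: true, log };
}

// Stand-in steps; a real pipeline would run commands such as `npm install` or `npm test`.
const steps = [
  { name: "install dependencies", run: () => {} },
  { name: "run tests", run: () => { throw new Error("1 test failed"); } },
  { name: "lint", run: () => {} },
  { name: "merge", run: () => {} },
];

const result = runPipeline(steps);
console.log(result.ok); // false
result.log.forEach((line) => console.log(line));
```

Because the failing test step returns early, the "lint" and "merge" steps never run, which mirrors how a failed CI build keeps a broken change out of the main branch.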

I will not get into the details of how to configure CircleCI because this article is more about the "Why?" of each tool. But if you are interested in learning more and seeing it in action, you can check out this tutorial series.

Conclusion

The world of JavaScript is evolving rapidly and new tools are gaining popularity every year.

It's easy to react to this change by just learning how to use each new tool. We are often too busy to take the time to think about why that tool became popular or what problem it solves.

In this article, I picked the tools I think are most popular and shared my thoughts on their significance. I also wanted to make you think about the problems they solve rather than just the details of how to use them.

If you liked the article, you can check out and subscribe to my blog, where I try to write frequently. Also, let me know what you think in the comments so we can brainstorm, or tell me what other tools you love to use :)
