#create aws ec2 instance

Explore tagged Tumblr posts

Video

Create Instance and Security Group with Terraform on AWS

Learn how to automate AWS infrastructure using Terraform! In this step-by-step tutorial you’ll create an EC2 instance and configure security

Text

EC2 Instance Creation: Beginner's Ultimate Guide 2023

EC2: Amazon Web Services (AWS) has revolutionised the way businesses approach IT infrastructure. Gone are the days…

Video

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
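As an illustration of the least-privilege point above, here is a minimal sketch that builds an IAM policy document scoped to a single bucket and prefix. The bucket name `example-bucket`, the `logs/` prefix, and the function name are placeholders invented for this example; the video may use a different policy.

```python
import json


def s3_least_privilege_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy document limited to one bucket (and optional prefix).

    The bucket and prefix are hypothetical placeholders, not values from the video.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Upload, download, and delete are granted on object ARNs only.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_least_privilege_policy("example-bucket", "logs/"), indent=2))
```

Note that a policy this narrow does not allow listing every bucket in the account: a bare `aws s3 ls` needs `s3:ListAllMyBuckets`, so with this sketch you would list the specific bucket instead.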

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install AWS CLI on the EC2 instance if not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
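The file operations in Step 5 can be sketched as a small helper that assembles the `aws s3 cp` and `aws s3 rm` command lines without running them. The bucket name, object key, and local paths are hypothetical, and the helper names are invented for this example.

```python
import shlex


def s3_uri(bucket: str, key: str) -> str:
    """Return the s3:// URI for an object."""
    return f"s3://{bucket}/{key}"


def upload_cmd(local_path: str, bucket: str, key: str) -> list[str]:
    """Command that copies a local file from the instance to S3."""
    return ["aws", "s3", "cp", local_path, s3_uri(bucket, key)]


def download_cmd(bucket: str, key: str, local_path: str) -> list[str]:
    """Command that copies an S3 object down to the instance."""
    return ["aws", "s3", "cp", s3_uri(bucket, key), local_path]


def delete_cmd(bucket: str, key: str) -> list[str]:
    """Command that deletes a single S3 object."""
    return ["aws", "s3", "rm", s3_uri(bucket, key)]


if __name__ == "__main__":
    # Placeholder names; nothing is executed, the commands are only printed.
    for cmd in (
        upload_cmd("app.log", "example-bucket", "logs/app.log"),
        download_cmd("example-bucket", "logs/app.log", "app-copy.log"),
        delete_cmd("example-bucket", "logs/app.log"),
    ):
        print(shlex.join(cmd))
```

On an instance with the IAM role attached, each list could be passed to `subprocess.run(cmd, check=True)`; no access keys appear anywhere because the CLI picks up the role's temporary credentials.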

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Text

Amazon DCV 2024.0 Supports Ubuntu 24.04 LTS With Security

NICE DCV has a new name. With the 2024.0 release, which also brings improvements and bug fixes, NICE DCV is now known as Amazon DCV.

The new name is also used consistently for the DCV protocol that powers Amazon Web Services (AWS) managed services such as Amazon AppStream 2.0 and Amazon WorkSpaces.

What’s new with version 2024.0?

Amazon DCV 2024.0 includes a number of improvements for usability, security, and performance. The release now supports Ubuntu 24.04 LTS, the latest Ubuntu long-term support release, which brings the most recent security patches and extended support that eases system maintenance. The DCV client on Ubuntu 24.04 has built-in Wayland support, which improves application isolation and graphical rendering efficiency. DCV 2024.0 also enables the QUIC UDP protocol by default, giving clients optimal streaming performance. Finally, the release adds the option to blank the Linux host screen while a remote user is connected, preventing local access to and interaction with the remote session.

What is Amazon DCV?

Amazon DCV is a high-performance remote display protocol that lets customers securely deliver remote desktops and application streaming from any cloud or data center to any device, over varying network conditions. With Amazon DCV and Amazon EC2, customers can run graphics-intensive applications remotely on EC2 instances and stream the user interface to simpler client machines, eliminating the need for expensive dedicated workstations. Customers across a broad range of HPC workloads use Amazon DCV for their remote visualization needs. In addition, well-known services such as Amazon AppStream 2.0, AWS Nimble Studio, and AWS RoboMaker use the Amazon DCV streaming protocol.

Advantages

Elevated Efficiency

You don’t have to choose between responsiveness and visual quality when using Amazon DCV. Its bandwidth-adaptive streaming protocol delivers near real-time responsiveness for your applications without compromising image accuracy.

Reduced Costs

Thanks to its highly responsive streaming experience, customers can run graphics-intensive applications remotely and avoid spending heavily on dedicated workstations or transferring large volumes of data from the cloud to client machines. On Linux servers, it also allows multiple sessions to share a single GPU, further reducing server infrastructure costs.

Adaptable Implementations

Service providers have access to a reliable and adaptable protocol for streaming apps that supports both on-premises and cloud usage thanks to browser-based access and cross-OS interoperability.

Security

To protect customer data privacy, Amazon DCV sends pixels rather than geometry. It also uses the TLS protocol to secure both end-user inputs and pixels.

Features

Amazon DCV supports remote environments running both Windows and Linux, and provides native clients for Windows, Linux, and macOS as well as an HTML5 client for web browser access. The native clients support multiple displays, 4K resolution, USB devices, multi-channel audio, smart cards, stylus and touch input, and file redirection.

DCV Session Manager lets you create and manage the lifecycle of Amazon DCV sessions programmatically across a fleet of servers. Developers can build customized Amazon DCV web browser client applications with the Amazon DCV Web Client SDK.

How to Install DCV on Amazon EC2?

Deploy:

Sign up for an AWS account and activate it.

Open the AWS Management Console and log in.

Either download and install the relevant Amazon DCV server on your EC2 instance, or choose the proper Amazon DCV AMI from the Amazon Web Services Marketplace, then create an AMI using your application stack.

After confirming that traffic on port 8443 is permitted by your security group’s inbound rules, deploy EC2 instances with the Amazon DCV server installed.

Connect:

On your device, download and install the relevant Amazon DCV native client.

Use the web client or the native Amazon DCV client to connect to your remote machine at https://<server-address>:8443.
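Before opening a client, it can help to confirm that the server answers on port 8443. The following is a rough sketch using only the Python standard library; the host name `dcv.example.internal` is a placeholder, and the check covers TCP only, so it says nothing about whether QUIC over UDP is reachable.

```python
import socket


def dcv_url(host: str, port: int = 8443) -> str:
    """Build the HTTPS URL for a DCV server (host name or IPv4 address)."""
    return f"https://{host}:{port}"


def port_is_open(host: str, port: int = 8443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    host = "dcv.example.internal"  # hypothetical server address
    if port_is_open(host):
        print(f"Reachable, connect at {dcv_url(host)}")
    else:
        print("Port 8443 not reachable; check the security group inbound rules")
```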

Stream:

Use Amazon DCV to stream your graphics applications across several devices.

Use cases

Visualization of 3D Graphics

HPC workloads are becoming more complicated and consuming enormous volumes of data in a variety of industrial verticals, including Oil & Gas, Life Sciences, and Design & Engineering. The streaming protocol offered by Amazon DCV makes it unnecessary to send output files to client devices and offers a seamless, bandwidth-efficient remote streaming experience for HPC 3D graphics.

Application Access via a Browser

The Amazon DCV Web Client is compatible with all HTML5 browsers and offers a streaming experience that is portable across mobile devices. By removing the need to manage native clients without sacrificing streaming performance, the Web Client significantly reduces the operational burden on IT departments. With the Amazon DCV Web Client SDK, you can create your own DCV Web Client.

Personalized Remote Apps

Custom remote applications and managed services can benefit from how easily the Amazon DCV streaming protocol can be integrated. With native clients that support up to 4 monitors at 4K resolution each, Amazon DCV uses end-to-end AES-256 encryption to safeguard both pixels and end-user inputs.

Amazon DCV Pricing

On AWS:

Using Amazon DCV on AWS does not incur any additional fees. Customers pay only for the EC2 resources they actually use.

On-site and third-party cloud computing

For more information about licensing and pricing for Amazon DCV, please get in touch with DCV distributors or resellers in your area.

Read more on Govindhtech.com

Text

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz
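The tarball name in the publish command follows the pattern namespace-name-version.tar.gz. A small sketch, with helper names invented for this example, derives it from the same three values you put in galaxy.yml and assembles the publish command without running it.

```python
def collection_tarball(namespace: str, name: str, version: str) -> str:
    """Return the artifact file name for a built collection."""
    return f"{namespace}-{name}-{version}.tar.gz"


def publish_cmd(namespace: str, name: str, version: str) -> list[str]:
    """Assemble (but do not run) the publish command for that artifact."""
    return [
        "ansible-galaxy",
        "collection",
        "publish",
        collection_tarball(namespace, name, version),
    ]


if __name__ == "__main__":
    print(" ".join(publish_cmd("my_namespace", "my_collection", "1.0.0")))
```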

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml
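If a project pins several collections, the requirements file can be generated rather than hand-edited. This is only a sketch using plain string formatting (no YAML library); the function name and the second collection's version number are made up for illustration.

```python
def render_requirements(collections: dict[str, str]) -> str:
    """Render a requirements.yml body from {collection_name: version}.

    Versions are quoted so that values such as "1.0" stay strings in YAML.
    """
    lines = ["---", "collections:"]
    for name, version in collections.items():
        lines.append(f"  - name: {name}")
        lines.append(f'    version: "{version}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    pins = {
        "my_namespace.my_collection": "1.0.0",
        "ansible.posix": "1.5.4",  # hypothetical pin, chosen for illustration
    }
    print(render_requirements(pins), end="")
```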

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing users, groups, and file systems.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

Text

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

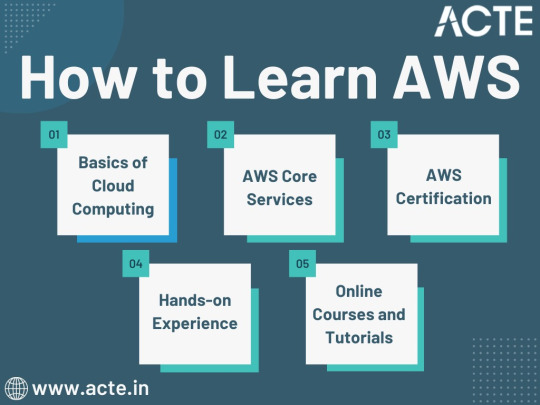

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services for 12 months. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.

Text

Navigating the Cloud: Unleashing the Potential of Amazon Web Services (AWS)

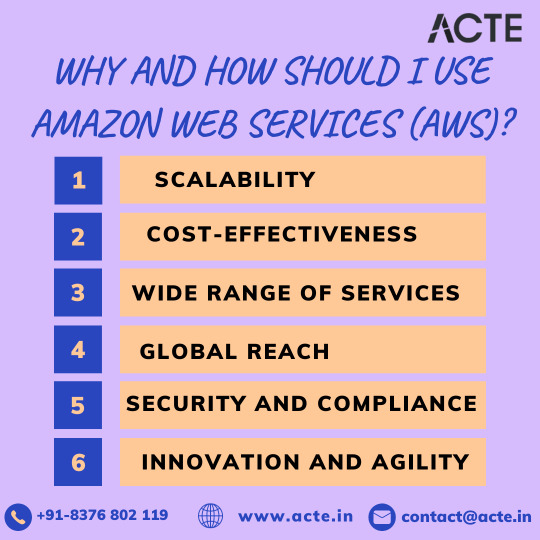

In the dynamic realm of technological progress, Amazon Web Services (AWS) stands as a beacon of innovation, offering unparalleled advantages for enterprises, startups, and individual developers. This article will delve into the compelling reasons behind the adoption of AWS and provide a strategic roadmap for harnessing its transformative capabilities.

Unveiling the Strengths of AWS:

1. Dynamic Scalability: AWS distinguishes itself with its dynamic scalability, empowering users to effortlessly adjust infrastructure based on demand. This adaptability ensures optimal performance without the burden of significant initial investments, making it an ideal solution for businesses with fluctuating workloads.

2. Cost-Efficient Flexibility: Operating on a pay-as-you-go model, AWS delivers cost-efficiency by eliminating the need for large upfront capital expenditures. This financial flexibility is a game-changer for startups and businesses navigating the challenges of variable workloads.

3. Comprehensive Service Portfolio: AWS offers a comprehensive suite of cloud services, spanning computing power, storage, databases, machine learning, and analytics. This expansive portfolio provides users with a versatile and integrated platform to address a myriad of application requirements.

4. Global Accessibility: With a distributed network of data centers, AWS ensures low-latency access on a global scale. This not only enhances user experience but also fortifies application reliability, positioning AWS as the preferred choice for businesses with an international footprint.

5. Security and Compliance Commitment: Security is at the forefront of AWS's priorities, offering robust features for identity and access management, encryption, and compliance with industry standards. This commitment instills confidence in users regarding the safeguarding of their critical data and applications.

6. Catalyst for Innovation and Agility: AWS empowers developers by providing services that allow a concentrated focus on application development rather than infrastructure management. This agility becomes a catalyst for innovation, enabling businesses to respond swiftly to evolving market dynamics.

7. Reliability and High Availability Assurance: The redundancy of data centers, automated backups, and failover capabilities contribute to the high reliability and availability of AWS services. This ensures uninterrupted access to applications even in the face of unforeseen challenges.

8. Ecosystem Synergy and Community Support: An extensive ecosystem with a diverse marketplace and an active community enhances the AWS experience. Third-party integrations, tools, and collaborative forums create a rich environment for users to explore and leverage.

Charting the Course with AWS:

1. Establish an AWS Account: Embark on the AWS journey by creating an account on the AWS website. This foundational step serves as the gateway to accessing and managing the expansive suite of AWS services.

2. Strategic Region Selection: Choose AWS region(s) strategically, factoring in considerations like latency, compliance requirements, and the geographical location of the target audience. This decision profoundly impacts the performance and accessibility of deployed resources.

3. Tailored Service Selection: Customize AWS services to align precisely with the unique requirements of your applications. Common choices include Amazon EC2 for computing, Amazon S3 for storage, and Amazon RDS for databases.

4. Fortify Security Measures: Implement robust security measures by configuring identity and access management (IAM), establishing firewalls, encrypting data, and leveraging additional security features. This comprehensive approach ensures the protection of critical resources.

5. Seamless Application Deployment: Leverage AWS services to deploy applications seamlessly. Tasks include setting up virtual servers (EC2 instances), configuring databases, implementing load balancers, and establishing connections with various AWS services.

6. Continuous Optimization and Monitoring: Maintain a continuous optimization strategy for cost and performance. AWS monitoring tools, such as CloudWatch, provide insights into the health and performance of resources, facilitating efficient resource management.

7. Dynamic Scaling in Action: Harness the power of AWS scalability by adjusting resources based on demand. This can be achieved manually or through the automated capabilities of AWS Auto Scaling, ensuring applications can handle varying workloads effortlessly.

8. Exploration of Advanced Services: As organizational needs evolve, delve into advanced AWS services tailored to specific functionalities. AWS Lambda for serverless computing, Amazon SageMaker for machine learning, and Amazon Redshift for data analytics offer specialized solutions to enhance application capabilities.

Closing Thoughts: Empowering Success in the Cloud

In conclusion, Amazon Web Services transcends the definition of a mere cloud computing platform; it represents a transformative force. Whether you are navigating the startup landscape, steering an enterprise, or charting an individual developer's course, AWS provides a flexible and potent solution.

Success with AWS lies in a profound understanding of its advantages, strategic deployment of services, and a commitment to continuous optimization. The journey into the cloud with AWS is not just a technological transition; it is a roadmap to innovation, agility, and limitless possibilities. By unlocking the full potential of AWS, businesses and developers can confidently navigate the intricacies of the digital landscape and achieve unprecedented success.

Text

Title: Amazon EC2: Unleash Your Superpowers in the Cloud!

Introduction:

Welcome to the extraordinary world of Amazon Elastic Compute Cloud (EC2), where you can harness the power of the cloud to achieve remarkable feats. In this short and simple blog post, we'll explore the key features and benefits of Amazon EC2, empowering you to become a cloud computing superhero!

Elasticity and Scalability: With EC2, you have the ability to scale your compute resources up or down effortlessly. No task is too big or small as you adapt to changing workloads with ease.

Versatile Instance Types: EC2 offers a wide range of instance types tailored to your specific needs. Choose the perfect fit, whether you require general-purpose instances or high-performance computing clusters.

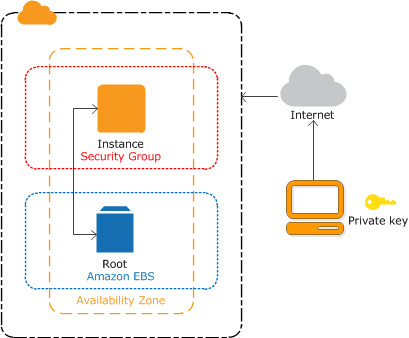

Security and Control: EC2 ensures the utmost security for your applications and data. You have complete control over your instances, including firewall settings and storage encryption, bolstering your defenses against threats.

Storage Options: EC2 provides flexible storage options, including persistent block-level storage (EBS) and scalable file storage (EFS). Leverage these options to securely store and access your data.

Seamless Integration: EC2 seamlessly integrates with other AWS services, allowing you to build comprehensive cloud architectures. Connect with databases, perform scalable data processing, and create a powerful ecosystem.

Conclusion:

With Amazon EC2, you possess the superpowers needed to conquer the cloud. Embrace the elasticity, select the right instance type, secure your applications, leverage storage options, and integrate seamlessly with other services. It's time to unleash your inner superhero and embark on an exciting cloud computing adventure with Amazon EC2!

Text

AWS creates EC2 instance types tailored for demanding on-prem workloads

http://securitytc.com/TKSXPx

Video

Learn to Deploy a Web Server on AWS using Terraform - Infrastructure as ...

In this step-by-step tutorial you'll discover how to automate AWS infrastructure provisioning using Terraform. We'll create an EC2 instance

Text

Mastering the Cloud: Why Every Modern Business Needs an AWS Solutions Architect

In an age dominated by the cloud, where scalability, flexibility, and digitization drive business success, the role of the AWS Solutions Architect has become one of the most important and sought-after in the technology sector. These architects are not just technical experts; they are business facilitators, system designers, and strategic thinkers who help businesses harness the potential of Amazon Web Services (AWS) and build elastic, secure, and future-proof cloud infrastructures. Whether for a start-up launching a new digital product or a multinational corporation moving its operations into the cloud, an AWS Solutions Architect designs the infrastructure that underpins the digital ecosystem. They have broad experience with AWS compute, storage, networking, and database services, and a strong grasp of how to combine and integrate these components to meet business needs reliably and cost-effectively.

The Part They Play: Architecture, Strategy, and Innovation in the Cloud

Ultimately, an AWS Solutions Architect is responsible for creating architectures that are not only technically viable but also aligned with the organization's long-term strategy. They review existing systems, identify where cloud solutions fit, and produce full-scale architectural designs that are secure, reliable, and economical. They can choose from an immense collection of AWS services and features, from EC2 instances for compute, to S3 for scalable storage, to serverless compute such as Lambda. What sets them apart is what they build with these services: highly available, fault-tolerant systems that keep cost and maintenance low. They act as a bridge between technical teams and business stakeholders, translating complex cloud technologies into simple, actionable plans that add value. They also continue to monitor and tune architectures after deployment to keep up with shifting requirements, industry trends, and the constantly changing AWS service landscape.

Skills, Certifications, and the Value They Bring to Your Business

An AWS Solutions Architect must not only understand cloud fundamentals but also have solid expertise in networking, security, DevOps practices, and disaster recovery. Many pursue a certification such as AWS Certified Solutions Architect – Associate or Professional, which validates their ability to design and implement secure AWS solutions. Industry leaders value these certifications because they demonstrate sound knowledge of cloud best practices. Having an AWS Solutions Architect on your team, whether hired or contracted, gives you an expert who can reduce operational risk, speed up product deployment cycles, and keep your cloud strategy cost-effective and technologically sound. With the cloud driving digital transformation initiatives, an architect with AWS skills is no longer a luxury; it is a competitive necessity.

Conclusion: Investing in the Cloud Starts with the Right Architect

In a digital-first economy where businesses are under constant pressure to innovate, grow, and stay ahead of the competition, investing in the right cloud infrastructure matters. Beyond that, investing in the right person to design and build that infrastructure is what pays off over the long term. An AWS Solutions Architect does more than deploy technology: they enable your business to succeed in the cloud, turning problems into possibilities and ideas into solutions you can iterate on. Whether you are starting a new cloud journey or optimizing a mature AWS infrastructure, employing an in-house AWS Solutions Architect might be the best move you ever make.

Text

Creating a Scalable Amazon EMR Cluster on AWS in Minutes

Minutes to Scalable EMR Cluster on AWS

AWS EMR cluster

Amazon EMR lets you quickly set up a cluster to process and analyse data with Spark. This page covers three stages: Plan and Configure, Manage, and Clean Up.

A detailed guide to cluster setup follows.

Amazon EMR Cluster Configuration

In this tutorial, you launch a sample cluster with Spark and run a PySpark script. You must complete the “Before you set up Amazon EMR” tasks before starting.

While it is running, the sample cluster incurs small per-second charges under Amazon EMR pricing, which varies by Region. To avoid further charges, complete the cleanup steps at the end of the tutorial.

The setup procedure has numerous steps:

Amazon EMR Cluster and Data Resources Configuration

This initial stage prepares your application and input data, creates your data storage location, and starts the cluster.

Setting Up Amazon EMR Storage:

Amazon EMR supports several file systems, but this article uses EMRFS to store data in an S3 bucket. EMRFS is an implementation of the Hadoop file system that reads and writes files directly to Amazon S3.

This tutorial needs a dedicated S3 bucket. Follow the Amazon Simple Storage Service Console User Guide to create one.

Create the bucket in the same AWS Region where you plan to launch your Amazon EMR cluster, for example US West (Oregon), us-west-2.

Buckets and folders used with Amazon EMR have naming restrictions. Names may contain lowercase letters, numerals, periods (.), and hyphens (-); they cannot end in a number, and a bucket name must be unique across all AWS accounts.

The bucket output folder must be empty.
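The naming rules above can be checked locally before you try to create a bucket. The following is a minimal sketch, not part of the original tutorial: the function name is_valid_emr_bucket_name is hypothetical, and it only covers the character and trailing-digit rules stated here (global uniqueness can only be confirmed by AWS).

```shell
# Hypothetical helper: returns 0 if the name satisfies the rules listed above.
# Letters and digits are spelled out so the check does not depend on locale.
is_valid_emr_bucket_name() {
  case "$1" in
    # Reject an empty name or any character outside a-z, 0-9, '.', '-'.
    ""|*[!abcdefghijklmnopqrstuvwxyz0123456789.-]*) return 1 ;;
    # Reject names that end in a digit.
    *[0123456789]) return 1 ;;
  esac
  return 0
}

# Example call (the bucket name is a placeholder):
# is_valid_emr_bucket_name "my-emr-tutorial-bucket" && echo "name looks valid"
```

A check like this only catches typos early; the S3 console or CLI remains the final authority on whether a name is accepted.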

Storing small files in Amazon S3 may incur minimal charges, though they may be free if you are within the AWS Free Tier usage limits.

Prepare an application with input data for Amazon EMR:

The most common way to prepare an application is to upload it and its input data to Amazon S3. When you submit work to the cluster, you specify those S3 locations.

The PySpark script examines 2006–2020 King County, Washington food establishment inspection data to identify the top ten establishments with the most “Red” violations. Sample rows of the dataset are presented.

To prepare the PySpark script, create a new file called health_violations.py and paste the tutorial's source code into it. Next, upload this file to your new S3 bucket. Uploading instructions are in Amazon Simple Storage Service’s Getting Started Guide.

To prepare the example input data, download and unzip the food_establishment_data.zip file, save the CSV file to your computer as food_establishment_data.csv, then upload it to the same S3 bucket. Again, see the Amazon Simple Storage Service Getting Started Guide for uploading instructions.
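If you prefer the command line to the console for the two uploads, they can be sketched with aws s3 cp. This is an illustrative sketch only: the function name upload_emr_inputs is hypothetical, the bucket name is a placeholder, and both files are assumed to be in the current directory.

```shell
# Hypothetical helper: copies the PySpark script and the CSV input
# into the root of the given bucket.
upload_emr_inputs() {
  local bucket="$1"
  aws s3 cp health_violations.py "s3://${bucket}/health_violations.py" || return 1
  aws s3 cp food_establishment_data.csv "s3://${bucket}/food_establishment_data.csv"
}

# Example call (replace the placeholder with your own bucket):
# upload_emr_inputs amzn-s3-demo-bucket
```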

“Prepare input data for processing with Amazon EMR” explains EMR data configuration.

Create an Amazon EMR Cluster:

After setting up storage and your application, you can launch the example cluster with Apache Spark on the latest Amazon EMR release. You can do this with the AWS Management Console or the AWS CLI.

Console Launch:

Sign in to the AWS Management Console and open the Amazon EMR console.

Under “EMR on EC2”, choose “Clusters”, then “Create cluster”. Note the default options for “Release,” “Instance type,” “Number of instances,” and “Permissions”.

Enter a unique “Cluster name” that does not contain <, >, $, |, or `. Under “Applications”, select “Spark” to install Spark. Note: applications must be chosen before you launch the cluster. Check “Cluster logs” to publish cluster-specific logs to Amazon S3. The default destination is s3://amzn-s3-demo-bucket/logs; replace it with your own S3 bucket. A new ‘logs’ subfolder is created for the log files.

Under “Security configuration and permissions”, choose your EC2 key pair. Choose “EMR_DefaultRole” as the service role and “EMR_EC2_DefaultRole” as the instance profile.

Choose “Create cluster”.

The cluster information page appears. As Amazon EMR provisions the cluster, its “Status” changes from “Starting” to “Running” to “Waiting”. You may need to refresh the console view. The status switches to “Waiting” when the cluster is ready to accept work.

CLI Launch:

Create the default IAM roles with the AWS CLI command aws emr create-default-roles.

Create a Spark cluster with aws emr create-cluster. Pass the cluster name with --name and your EC2 key pair with --ec2-attributes KeyName=<myKeyPairName>, and set --instance-type, --instance-count, and --use-default-roles. The sample command uses Linux line continuation characters (\), which may need to be removed or changed on Windows.

Output will include ClusterId and ClusterArn. Remember your ClusterId for later.
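The create-cluster call described above might look like the following sketch. The wrapper function create_spark_cluster is hypothetical, and the instance type (m5.xlarge) and instance count (3) are assumptions chosen for illustration; the release label is left as a parameter because the post does not name a specific release.

```shell
# Hypothetical wrapper around "aws emr create-cluster".
# Arguments: cluster name, EC2 key pair name, EMR release label.
create_spark_cluster() {
  local cluster_name="$1" key_name="$2" release_label="$3"
  aws emr create-cluster \
    --name "$cluster_name" \
    --release-label "$release_label" \
    --applications Name=Spark \
    --ec2-attributes KeyName="$key_name" \
    --instance-type m5.xlarge \
    --instance-count 3 \
    --use-default-roles
}

# Example call (all three values are placeholders):
# create_spark_cluster "My First EMR Cluster" myEMRKeyPairName "<release-label>"
```

Wrapping the call in a function keeps the long option list in one place and makes it harder to forget --use-default-roles, which relies on the roles created by aws emr create-default-roles.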

Check your cluster status using aws emr describe-cluster --cluster-id <myClusterId>.

The result shows the Status object with a State field. As EMR deploys the cluster, the State changes from STARTING to RUNNING to WAITING. Once the cluster is up and ready to accept work, its State is WAITING.
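Rather than re-running describe-cluster by hand, the state check can be polled in a loop. This is a minimal sketch under stated assumptions: the function names cluster_state and wait_for_cluster and the 30-second default interval are illustrative, and the --query expression relies on the Cluster.Status.State field described above.

```shell
# Hypothetical helper: prints only the State field of the cluster.
cluster_state() {
  aws emr describe-cluster --cluster-id "$1" \
    --query 'Cluster.Status.State' --output text
}

# Hypothetical helper: polls until the cluster reaches WAITING.
# Returns 1 if the cluster is terminating or terminated instead.
wait_for_cluster() {
  local cluster_id="$1" interval="${2:-30}" state
  while true; do
    state="$(cluster_state "$cluster_id")"
    echo "Cluster ${cluster_id} is ${state}"
    case "$state" in
      WAITING) return 0 ;;
      TERMINATING|TERMINATED|TERMINATED_WITH_ERRORS) return 1 ;;
    esac
    sleep "$interval"
  done
}

# Example call (the cluster ID is a placeholder):
# wait_for_cluster "<myClusterId>" 30
```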

Open SSH Connections

Before connecting to your running cluster via SSH, update the cluster security groups to allow inbound SSH connections. Amazon EC2 security groups act as virtual firewalls. At cluster startup, EMR created default security groups: ElasticMapReduce-slave for core and task nodes and ElasticMapReduce-master for the primary node.

Console-based SSH authorisation:

You need permission to manage the security groups of the VPC that the cluster runs in.

Sign in to the AWS Management Console and open the Amazon EMR console.

Under “Clusters”, choose the cluster you want to update, then select the “Properties” tab.

On the “Properties” tab, choose “Networking” and then “EC2 security groups (firewall)”. Select the security group link under “Primary node”.

The EC2 console opens. Choose “Inbound rules”, then “Edit inbound rules”.

Find and delete any inbound rule that allows public access (Type: SSH, Port: 22, Source: Custom 0.0.0.0/0). Warning: the ElasticMapReduce-master group may carry a pre-configured rule that allows public SSH access; remove it and limit traffic to trusted sources.

Scroll down and click “Add Rule”.

Choose “SSH” for “Type”; this sets Port Range to 22 and Protocol to TCP automatically.

For “Source”, choose “My IP”, or choose “Custom” and enter a range of trusted client IP addresses. Remember that dynamic IPs may need updating. Select “Save.”

When you return to the EMR console, choose “Core and task nodes” and repeat these steps to provide SSH access to those nodes.
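The same inbound-rule changes can be sketched with the AWS CLI. This is an illustration rather than the tutorial's own method: the function names are hypothetical, and the security group ID and CIDR range are placeholders you would look up for your own cluster.

```shell
# Hypothetical helper: removes a public SSH rule (0.0.0.0/0 on port 22).
revoke_public_ssh() {
  local group_id="$1"
  aws ec2 revoke-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port 22 --cidr 0.0.0.0/0
}

# Hypothetical helper: allows SSH only from the given CIDR range.
allow_ssh_from() {
  local group_id="$1" cidr="$2"
  aws ec2 authorize-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port 22 --cidr "$cidr"
}

# Example calls (group ID and address are placeholders):
# revoke_public_ssh "<primary-node-security-group-id>"
# allow_ssh_from "<primary-node-security-group-id>" 203.0.113.10/32
```

A /32 range admits a single address, which matches the “My IP” choice in the console steps.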

Connecting with AWS CLI:

You can open an SSH connection with the AWS CLI on any operating system.

Use the command: aws emr ssh --cluster-id <myClusterId> --key-pair-file <~/mykeypair.key>. Replace <myClusterId> with your ClusterId and <~/mykeypair.key> with the full path to your key pair file.

After connecting, go to /mnt/var/log/spark to examine the Spark logs on the primary node.

With the cluster set up and access configured, the next stage is submitting work to the cluster.


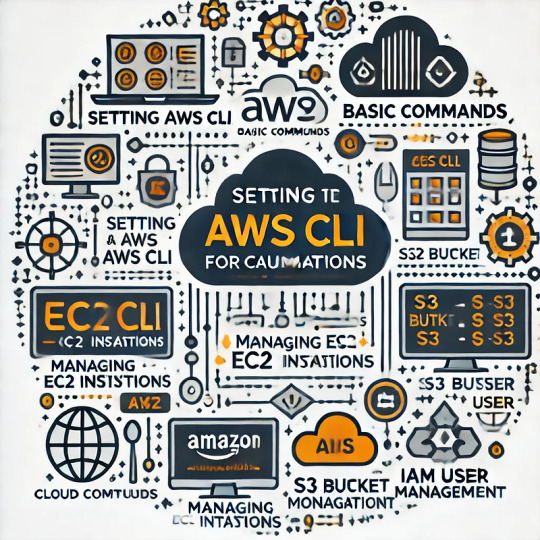

How to Use AWS CLI: Automate Cloud Management with Command Line

The AWS Command Line Interface (AWS CLI) is a powerful tool that allows developers and system administrators to interact with AWS services directly from the terminal. It provides automation capabilities, improves workflow efficiency, and enables seamless cloud resource management.

Why Use AWS CLI?

Automation: Automate repetitive tasks using scripts.

Efficiency: Manage AWS services without navigating the AWS Management Console.

Speed: Perform bulk operations faster than using the web interface.

Scripting & Integration: Combine AWS CLI commands with scripts for complex workflows.

1. Installing AWS CLI

Windows

Download the AWS CLI installer from the official AWS site.

Run the installer and follow the prompts.

Verify installation:

aws --version

macOS

Install using Homebrew:

brew install awscli

Verify installation:

aws --version

Linux

Install using the official installer (x86_64):

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install

Verify installation:

aws --version

2. Configuring AWS CLI

After installation, configure AWS CLI with your credentials:

aws configure

You’ll be prompted to enter:

AWS Access Key ID

AWS Secret Access Key

Default Region (e.g., us-east-1)

Default Output Format (json, text, or table)

Example:

AWS Access Key ID [****************ABCD]:
AWS Secret Access Key [****************XYZ]:
Default region name [us-east-1]:
Default output format [json]:

To verify credentials:

aws sts get-caller-identity

3. Common AWS CLI Commands

Managing EC2 Instances

List EC2 instances:

aws ec2 describe-instances

Start an instance:

aws ec2 start-instances --instance-ids i-1234567890abcdef0

Stop an instance:

aws ec2 stop-instances --instance-ids i-1234567890abcdef0

S3 Bucket Operations

List all S3 buckets:

aws s3 ls

Create a new S3 bucket:

aws s3 mb s3://my-new-bucket

Upload a file to a bucket:

aws s3 cp myfile.txt s3://my-new-bucket/

Download a file from a bucket:

aws s3 cp s3://my-new-bucket/myfile.txt .

IAM User Management

List IAM users:

aws iam list-users

Create a new IAM user:

aws iam create-user --user-name newuser

Attach a policy to a user:

aws iam attach-user-policy --user-name newuser --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

Lambda Function Management

List Lambda functions:

aws lambda list-functions

Invoke a Lambda function:

aws lambda invoke --function-name my-function output.json

CloudFormation Deployment

Deploy a stack:

aws cloudformation deploy --stack-name my-stack --template-file template.yaml

Delete a stack:

aws cloudformation delete-stack --stack-name my-stack

4. Automating Tasks with AWS CLI and Bash Scripts

AWS CLI allows users to automate workflows using scripts. Below is an example script, saved as manage_ec2.sh, that starts an EC2 instance, waits 60 seconds, and then stops it:

#!/bin/bash
INSTANCE_ID="i-1234567890abcdef0"

# Start instance
aws ec2 start-instances --instance-ids "$INSTANCE_ID"
echo "EC2 Instance $INSTANCE_ID started."

# Wait 60 seconds before stopping
sleep 60

# Stop instance
aws ec2 stop-instances --instance-ids "$INSTANCE_ID"
echo "EC2 Instance $INSTANCE_ID stopped."

Make the script executable:

chmod +x manage_ec2.sh

Run the script:

./manage_ec2.sh
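A start or stop request returns before the instance has actually changed state, so a fixed sleep only approximates the timing. One possible refinement, sketched below, uses the CLI's built-in waiters (aws ec2 wait instance-running and aws ec2 wait instance-stopped). The function name cycle_instance and its default run time are assumptions for illustration.

```shell
# Hypothetical helper: starts an instance, waits until it is running,
# keeps it up for a given number of seconds, then stops it and waits
# until it has fully stopped.
cycle_instance() {
  local instance_id="$1" run_seconds="${2:-60}"
  aws ec2 start-instances --instance-ids "$instance_id" >/dev/null || return 1
  aws ec2 wait instance-running --instance-ids "$instance_id" || return 1
  echo "EC2 instance ${instance_id} is running."
  sleep "$run_seconds"
  aws ec2 stop-instances --instance-ids "$instance_id" >/dev/null || return 1
  aws ec2 wait instance-stopped --instance-ids "$instance_id" || return 1
  echo "EC2 instance ${instance_id} is stopped."
}

# Example call (the instance ID is a placeholder):
# cycle_instance i-1234567890abcdef0 60
```

For genuinely scheduled runs, a function like this would still need to be triggered by something such as cron; the script itself does not schedule anything.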

5. Best Practices for AWS CLI Usage

Use IAM Roles: Avoid storing AWS credentials locally. Use IAM roles for security.

Enable MFA: Add Multi-Factor Authentication for additional security.

Rotate Access Keys Regularly: If using access keys, rotate them periodically.

Use Named Profiles: Manage multiple AWS accounts efficiently using profiles.

aws configure --profile my-profile

Log Command Outputs: Store logs for debugging and monitoring purposes.

aws s3 ls > s3_log.txt
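The last two practices can be combined: run a command under a named profile and append its output, with a timestamp, to a log file. The sketch below is one way to do that; the function name log_aws and the log line format are assumptions, while --profile is the CLI's standard option for selecting a named profile.

```shell
# Hypothetical helper: log_aws PROFILE LOGFILE <aws arguments...>
# Appends a UTC timestamp header and the command's output (stdout and
# stderr) to LOGFILE, running the command under the given profile.
log_aws() {
  local profile="$1" logfile="$2"
  shift 2
  {
    echo "== $(date -u +%Y-%m-%dT%H:%M:%SZ) aws $* (profile: ${profile})"
    aws "$@" --profile "$profile"
  } >> "$logfile" 2>&1
}

# Example call (profile and file names are placeholders):
# log_aws my-profile s3_log.txt s3 ls
```

Appending rather than overwriting keeps earlier runs in the file, which helps when tracing when a resource first appeared or disappeared.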

Final Thoughts

AWS CLI is a powerful tool that enhances productivity by automating cloud operations. Whether you’re managing EC2 instances, deploying Lambda functions, or securing IAM users, AWS CLI simplifies interactions with AWS services.

By following best practices and leveraging automation, you can optimize your cloud management and improve efficiency. Start experimenting with AWS CLI today and take full control of your AWS environment!

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/

0 notes

Text

Elevate Your Career With AWS: An In-Depth Guide to Becoming an AWS Expert

In the fast-paced and ever-evolving realm of modern technology, proficiency in Amazon Web Services (AWS) has emerged as an invaluable asset, a passport to the boundless opportunities of the digital age. AWS, the colossal titan of cloud computing, offers an extensive array of services that have revolutionized the way businesses operate, innovate, and scale in today's interconnected world. However, mastering AWS is not a mere task; it is a journey that calls for a structured approach, hands-on experience, and access to a treasure trove of reputable learning resources.

Welcome to the world of AWS mastery, where innovation knows no bounds, where your skills become the catalyst for transformative change. Your journey begins now, as we set sail into the horizon of AWS excellence, ready to explore the limitless possibilities that await in the cloud.

Step 1: Setting Sail - Sign Up for AWS

Your AWS voyage begins with a simple yet crucial step - signing up for an AWS account. Fortunately, AWS offers the Free Tier, a generous offering that grants limited free access to many AWS services for the first 12 months. This enables you to explore AWS, experiment with its services, and learn without incurring costs.

Step 2: Unveiling the Map - Official AWS Documentation

Before you embark on your AWS adventure, it's essential to understand the lay of the land. AWS provides extensive documentation for all its services. This documentation is a treasure of knowledge, offering insights into each service, its use cases, and comprehensive guides on how to configure and utilize them. It's a valuable resource that is regularly updated to keep you informed about the latest developments.

Step 3: Guided Tours - Online Courses and Tutorials

While solo exploration is commendable, guided tours can significantly enhance your learning experience. Enroll in online courses and tutorials offered by reputable platforms such as Coursera, Udemy, ACTE, or AWS Training and Certification. These courses often include video lectures, hands-on labs, and quizzes to reinforce your understanding. Consider specialized AWS training programs like those offered by ACTE Technologies, where expert-led courses can take your AWS skills to the next level.

Step 4: Raising the Flag - AWS Certification

Achieving AWS certification is akin to hoisting your flag of expertise in the AWS realm. AWS offers a range of certifications that validate your proficiency in specific AWS areas, including Solutions Architect, Developer, SysOps Administrator, and more. Preparing for these certifications provides in-depth knowledge, and there are study guides and practice exams available to aid your preparation.

Step 5: Hands-on Deck - Practical Experience

In the world of AWS, knowledge is best acquired through hands-on experience. Create AWS accounts designated for practice purposes, set up virtual machines (EC2 instances), configure storage (S3), and experiment with various AWS services. Building real projects is an effective way to solidify your understanding and showcase your skills.

Step 6: Navigating the AWS Console and CLI

As you progress, it's essential to be fluent in navigating AWS. Familiarize yourself with the AWS Management Console, a web-based interface for managing AWS resources. Additionally, learn to wield the AWS Command Line Interface (CLI), a powerful tool for scripting and automating tasks, giving you the agility to manage AWS resources efficiently.

Step 7: Joining the Crew - Community Engagement

Learning is often more enriching when you're part of a community. Join AWS-related forums and communities, such as the AWS subreddit and AWS Developer Forums. Engaging with others who are on their own AWS learning journeys can help you get answers to your questions, share experiences, and gain valuable insights.

Step 8: Gathering Wisdom - Blogs and YouTube Channels

Stay updated with the latest trends and insights in the AWS ecosystem by following AWS blogs and YouTube channels. These platforms provide tutorials, case studies, and deep dives into AWS services. Don't miss out on AWS re:Invent sessions, available on YouTube, which offer in-depth explorations of AWS services and solutions.

Step 9: Real-World Adventures - Projects

Application of your AWS knowledge to practical projects is where your skills truly shine. Whether it's setting up a website, creating a scalable application, or orchestrating a complex migration to AWS, hands-on experience is invaluable. Real-world projects not only demonstrate your capabilities but also prepare you for the challenges you might encounter in a professional setting.

Step 10: Staying on Course - Continuous Learning

The AWS landscape is ever-evolving, with new services and features being introduced regularly. Stay informed by following AWS news, subscribing to newsletters, and attending AWS events and webinars. Continuous learning is the compass that keeps you on course in the dynamic world of AWS.

Step 11: Guiding Lights - Mentorship

If possible, seek out a mentor with AWS experience. Mentorship provides valuable guidance and insights as you learn. Learning from someone who has navigated the AWS waters can accelerate your progress and help you avoid common pitfalls.

Mastering AWS is not a destination; it's a continuous journey. As you gain proficiency, you can delve into advanced topics and specialize in areas that align with your career goals. The key to mastering AWS lies in a combination of self-study, hands-on practice, and access to reliable learning resources.

In conclusion, ACTE Technologies emerges as a trusted provider of IT training and certification programs, including specialized AWS training. Their expert-led courses and comprehensive curriculum make them an excellent choice for those looking to enhance their AWS skills. Whether you aim to propel your career or embark on a thrilling journey into the world of cloud computing, ACTE Technologies can be your steadfast partner on the path to AWS expertise.

AWS isn't just a skill; it's a transformative force in the world of technology. It's the catalyst for innovation, scalability, and boundless possibilities. So, set sail on your AWS journey, armed with knowledge, practice, and the determination to conquer the cloud. The world of AWS awaits your exploration.


Navigating AWS: A Comprehensive Guide for Beginners

In the ever-evolving landscape of cloud computing, Amazon Web Services (AWS) has emerged as a powerhouse, providing a wide array of services to businesses and individuals globally. Whether you're a seasoned IT professional or just starting your journey into the cloud, understanding the key aspects of AWS is crucial. With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries. This blog will serve as your comprehensive guide, covering the essential concepts and knowledge needed to navigate AWS effectively.

1. The Foundation: Cloud Computing Basics

Before delving into AWS specifics, it's essential to grasp the fundamentals of cloud computing. Cloud computing is a paradigm that offers on-demand access to a variety of computing resources, including servers, storage, databases, networking, analytics, and more. AWS, as a leading cloud service provider, allows users to leverage these resources seamlessly.

2. Setting Up Your AWS Account

The first step on your AWS journey is to create an AWS account. Navigate to the AWS website, provide the necessary information, and set up your payment method. This account will serve as your gateway to the vast array of AWS services.

3. Navigating the AWS Management Console

Once your account is set up, familiarize yourself with the AWS Management Console. This web-based interface is where you'll configure, manage, and monitor your AWS resources. It's the control center for your cloud environment.

4. AWS Global Infrastructure: Regions and Availability Zones

AWS operates globally, and its infrastructure is distributed across Regions and Availability Zones. A Region is a separate geographic area; an Availability Zone is one or more isolated data centers within a Region, each with its own power and networking. Spreading resources across Availability Zones provides redundancy and high availability.
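To see how a Region breaks down into Availability Zones, you can list them with the AWS CLI. This is a small sketch; the function name list_zones is hypothetical and the Region shown in the example call is just a sample value.

```shell
# Hypothetical helper: prints the Availability Zone names in a Region.
list_zones() {
  local region="$1"
  aws ec2 describe-availability-zones --region "$region" \
    --query 'AvailabilityZones[].ZoneName' --output text
}

# Example call:
# list_zones us-west-2
```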

5. Identity and Access Management (IAM)

Security is paramount in the cloud. AWS Identity and Access Management (IAM) enables you to manage user access securely. Learn how to control who can access your AWS resources and what actions they can perform.

6. Key AWS Services Overview

Explore fundamental AWS services:

Amazon EC2 (Elastic Compute Cloud): Virtual servers in the cloud.

Amazon S3 (Simple Storage Service): Scalable object storage.

Amazon RDS (Relational Database Service): Managed relational databases.

7. Compute Services in AWS

Understand the various compute services:

EC2 Instances: Virtual servers for computing capacity.

AWS Lambda: Serverless computing for executing code without managing servers.

Elastic Beanstalk: Platform as a Service (PaaS) for deploying and managing applications.

8. Storage Options in AWS

Explore storage services:

Amazon S3: Object storage for scalable and durable data.

EBS (Elastic Block Store): Block storage for EC2 instances.

Amazon S3 Glacier (formerly Amazon Glacier): Low-cost storage for data archiving.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Top AWS Training Institute.

9. Database Services in AWS

Learn about managed database services:

Amazon RDS: Managed relational databases.

DynamoDB: NoSQL database for fast and predictable performance.

Amazon Redshift: Data warehousing for analytics.

10. Networking Concepts in AWS

Grasp networking concepts:

Virtual Private Cloud (VPC): Isolated cloud networks.

Route 53: Domain registration and DNS web service.

CloudFront: Content delivery network for faster and secure content delivery.

11. Security Best Practices in AWS

Implement security best practices:

Encryption: Ensure data security in transit and at rest.

IAM Policies: Control access to AWS resources.

Security Groups and Network ACLs: Manage traffic to and from instances.

12. Monitoring and Logging with AWS CloudWatch and CloudTrail

Set up monitoring and logging:

CloudWatch: Monitor AWS resources and applications.

CloudTrail: Log AWS API calls for audit and compliance.

13. Cost Management and Optimization

Understand AWS pricing models and manage costs effectively:

AWS Cost Explorer: Analyze and control spending.

14. Documentation and Continuous Learning

Refer to the extensive AWS documentation, tutorials, and online courses. Stay updated on new features and best practices through forums and communities.

15. Hands-On Practice

The best way to solidify your understanding is through hands-on practice. Create test environments, deploy sample applications, and experiment with different AWS services.

In conclusion, AWS is a dynamic and powerful ecosystem that continues to shape the future of cloud computing. By mastering the foundational concepts and key services outlined in this guide, you'll be well-equipped to navigate AWS confidently and leverage its capabilities for your projects and initiatives. As you embark on your AWS journey, remember that continuous learning and practical application are key to becoming proficient in this ever-evolving cloud environment.


Amazon EBS: Reliable Cloud Storage Made Simple

Introduction:

Amazon Elastic Block Store (EBS) is a dependable and flexible storage solution offered by Amazon Web Services (AWS). It provides persistent block-level storage volumes for your EC2 instances, ensuring data durability and accessibility. Let's explore the key features and benefits of Amazon EBS in a nutshell.

Key Features of Amazon EBS:

Durability and High Availability: EBS automatically replicates each volume within its Availability Zone, safeguarding it against hardware failures and ensuring high data durability.

Elasticity and Scalability: EBS allows you to easily resize storage volumes, providing flexibility to accommodate changing storage needs and optimizing costs by paying only for what you use.

Performance Options: With different volume types available, you can choose the optimal balance of cost and performance for your specific requirements, ranging from General Purpose SSD to Throughput Optimized HDD.

Snapshot and Replication: EBS supports creating snapshots of your volumes, allowing you to back up data and restore or create new volumes from these snapshots. Snapshots can also be copied to other Regions for enhanced data protection and availability.

Integration with AWS Services: EBS seamlessly integrates with other AWS services such as RDS, EMR, and EKS, making it suitable for a wide range of applications including databases, big data analytics, and containerized environments.
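The resize and snapshot features above map onto a few AWS CLI calls. The sketch below is illustrative only: the function names are hypothetical, all IDs and Regions are placeholders, and it assumes the common pattern of running copy-snapshot against the destination Region. Note that modify-volume can increase a volume's size but not shrink it.

```shell
# Hypothetical helper: creates a snapshot of a volume.
snapshot_volume() {
  aws ec2 create-snapshot --volume-id "$1" --description "$2"
}

# Hypothetical helper: copies a snapshot into another Region.
# The call is issued against the destination Region.
copy_snapshot_to_region() {
  local snapshot_id="$1" source_region="$2" dest_region="$3"
  aws ec2 copy-snapshot --region "$dest_region" \
    --source-region "$source_region" --source-snapshot-id "$snapshot_id"
}

# Hypothetical helper: grows a volume to the given size in GiB.
grow_volume() {
  aws ec2 modify-volume --volume-id "$1" --size "$2"
}

# Example calls (IDs and Regions are placeholders):
# snapshot_volume "<volume-id>" "nightly backup"
# copy_snapshot_to_region "<snapshot-id>" us-east-1 us-west-2
# grow_volume "<volume-id>" 200
```

After growing a volume, the file system on the instance usually still has to be extended before the extra space is usable.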

Use Cases for Amazon EBS:

Database Storage: EBS provides durable and scalable storage for various database workloads, ensuring reliable data persistence and efficient access.

Big Data and Analytics: With its high throughput and capacity, EBS supports big data platforms and enables processing and analysis of large datasets.

High-Performance Computing (HPC): EBS with Provisioned IOPS offers high I/O performance, making it ideal for demanding computational workloads such as simulations and financial modeling.

Disaster Recovery: Utilizing EBS snapshots and cross-Region snapshot copies, you can implement robust disaster recovery strategies for your data.

Conclusion:

Amazon EBS is a powerful storage solution within the AWS ecosystem. With its durability, scalability, performance options, and integration capabilities, EBS caters to diverse storage needs, from databases to big data analytics. By leveraging EBS, you can ensure the reliability, availability, and flexibility of your cloud storage infrastructure.
