#data.visualization



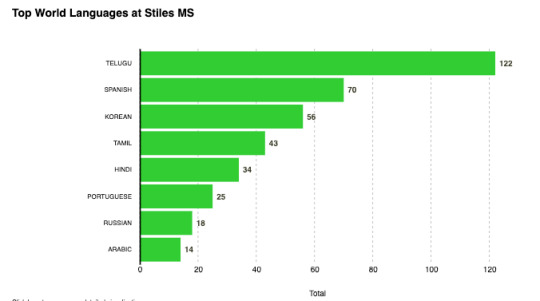

Simple start. Generated the same chart below with the help of ChatGPT. Took a few iterations to get the tooltips, font and colors to work.

https://szmtz.github.io/new-simple-bar-chart-languages/

______________________________________________________________


Brain-to-brain interfaces can change human existence forever.

Can the mind connect directly with artificial intelligence, robots and other minds through brain-computer interface (BCI) technologies to transcend our human limitations? For some, it is a necessity for our survival. Indeed, we would need to become cyborgs to stay relevant in an age of artificial intelligence.

A BCI is a computer-based system that acquires brain signals, analyzes them, and translates them into commands that are relayed to an output device to carry out a desired action. Thus, BCIs do not use the brain's normal output pathways of peripheral nerves and muscles. ... The user and the BCI work together. Some prototypes can translate brain activity into text or instructions for a computer, and in theory, as the technology improves, we'll see people using BCIs to write memos or reports at work. We could also imagine a work environment that adapts automatically to your stress level or thoughts. Read the part below written by Alexandre Gonfalonieri on Medium.

Definition

Brain-Computer Interface (BCI): a device that enables its user to interact with computers by means of brain activity only, this activity generally being measured by electroencephalography (EEG).

Electroencephalography (EEG): the physiological method of choice for recording the electrical activity generated by the brain via electrodes placed on the scalp surface.

Functional magnetic resonance imaging (fMRI): measures brain activity by detecting changes associated with blood flow.

Functional Near-Infrared Spectroscopy (fNIRS): the use of near-infrared spectroscopy (NIRS) for the purpose of functional neuroimaging. Using fNIRS, brain activity is measured through hemodynamic responses associated with neuron behaviour.

Convolutional Neural Network (CNN): a type of artificial neural network used in image recognition and processing that is specifically designed to process pixel data.

Visual Cortex: the part of the cerebral cortex that receives and processes sensory nerve impulses from the eyes.

History

Sarah Marsch, Guardian news reporter, said “Brain-computer interfaces (BCI) aren’t a new idea. Various forms of BCI are already available, from ones that sit on top of your head and measure brain signals to devices that are implanted into your brain tissue.” (source)

Most BCIs were initially developed for medical applications. According to Zaza Zuilhof, Lead Designer at Tellart, “Some 220,000 hearing impaired already benefit from cochlear implants, which translate audio signals into electrical pulses sent directly to their brains.” (source)

The article “The Brief History of Brain Computer Interfaces” gives us a lot of information about the history of BCI. It says: “In the 1970s, research on BCIs started at the University of California, which led to the emergence of the expression brain–computer interface. The focus of BCI research and development continues to be primarily on neuroprosthetics applications that can help restore damaged sight, hearing, and movement. The mid-1990s marked the appearance of the first neuroprosthetic devices for humans. BCI doesn’t read the mind accurately, but detects the smallest of changes in the energy radiated by the brain when you think in a certain way. A BCI recognizes specific energy/frequency patterns in the brain. June 2004 marked a significant development in the field when Matthew Nagle became the first human to be implanted with a BCI, Cyberkinetics’s BrainGate™. In December 2004, Jonathan Wolpaw and researchers at New York State Department of Health’s Wadsworth Center came up with a research report that demonstrated the ability to control a computer using a BCI. In the study, patients were asked to wear a cap that contained electrodes to capture EEG signals from the motor cortex, the part of the cerebrum governing movement. BCI has had a long history centered on control applications: cursors, paralyzed body parts, robotic arms, phone dialing, etc. Recently Elon Musk entered the industry, announcing a $27 million investment in Neuralink, a venture with the mission to develop a BCI that improves human communication in light of AI. And Regina Dugan presented Facebook’s plans for a game changing BCI technology that would allow for more efficient digital communication.”

According to John Thomas, Tomasz Maszczyk, Nishant Sinha, Tilmann Kluge, and Justin Dauwels, “A BCI system has four major components: signal acquisition, signal preprocessing, feature extraction, and classification.” (source)
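To make the four components named in that last quote more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the simulated signal, the function names, the RMS feature and the fixed threshold are not taken from any of the quoted articles, and a real BCI would use far richer signals and classifiers.

```python
import math
import random


def acquire(amplitude, n=500, fs=250, seed=0):
    """Signal acquisition (simulated): a 10 Hz sine plus a constant offset and noise."""
    rng = random.Random(seed)
    return [
        5.0 + amplitude * math.sin(2 * math.pi * 10 * i / fs) + rng.gauss(0, 0.2)
        for i in range(n)
    ]


def preprocess(samples):
    """Signal preprocessing: subtract the mean to remove the constant offset."""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


def extract_features(samples):
    """Feature extraction: root-mean-square amplitude of the cleaned signal."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def classify(rms, threshold=0.5):
    """Classification: turn the feature into a command using a fixed threshold."""
    return "move" if rms > threshold else "rest"


def run_pipeline(amplitude):
    """Chain the four stages: acquisition, preprocessing, features, classification."""
    return classify(extract_features(preprocess(acquire(amplitude))))


print(run_pipeline(0.0))  # noise only
print(run_pipeline(2.0))  # strong 10 Hz activity
```

With noise only, the RMS stays near the noise level and the sketch answers "rest"; with a strong oscillation it crosses the threshold and answers "move". The point is only the shape of the chain, where each stage hands a simpler representation to the next.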

Why does it matter?

According to Davide Valeriani, Post-doctoral Researcher in Brain-Computer Interfaces at the University of Essex, “The combination of humans and technology could be more powerful than artificial intelligence. For example, when we make decisions based on a combination of perception and reasoning, neurotechnologies could be used to improve our perception. This could help us in situations such as when seeing a very blurry image from a security camera and having to decide whether to intervene or not.” (source) Read the full article by Alexandre Gonfalonieri on Medium.

No longer science fiction: Brain-to-brain interfaces can transmit thoughts

Read the article written by Jordan Herrod on Genetic Literacy Project.

While brain-to-brain interfaces have existed in science fiction novels for decades, they have long been thought to be a technological innovation only feasible in our wildest dreams. Between our lack of understanding of the intricacies of the human brain, the ethical barriers to the types of experiments long believed to be necessary for such interfaces, and a lack of well-defined need for such technology, researchers have instead focused on developing brain-to-machine interfaces for prosthetics, rehabilitation, and mental health treatment.

In spite of that, some progress has been made towards brain-to-brain interfaces. Studies on brain-to-brain interfaces have been performed in rodent models and brain-machine interfaces in human studies for several years. In humans, these studies typically focused on treating epilepsy or paralysis, but were limited to a single person. The few brain-to-brain interfaces that have been studied in humans aimed to show that information can be transmitted from one person to another without audio or visual cues, but were not typically direct interfaces: someone had to push a button or transmit information in a way other than through the brain interface for the information to reach each participant.

More recently, researchers have been able to remove those intermediate steps and directly connect one brain to another. In fact, Massive covered one of those interfaces last fall. However, multi-person brain-to-brain interfaces, which would allow us to see whether the collective intelligence of a group can complete a task without any other form of communication, have eluded researchers until recently.

Scientists at the University of Washington and Carnegie Mellon University created a brain-to-brain interface system that allows one or more people, called “Senders,” to influence the decisions of an individual, called a “Receiver,” with the goal of helping the Receiver play a Tetris-like game that only the Senders can see. Although preliminary, with the influence of Senders used to help a Receiver win an unseen game of Tetris, this development makes it clear that a future in which our brains are part of an interconnected social system may be closer than we think.

“Player 2 get in here I need help!!” Image credit: Wikimedia

To do this, they drew on their past work with brain-to-brain interfaces. The Senders wore electroencephalography (EEG) caps, which allowed the researchers to measure brain activity via electrical signals, and watched a Tetris-like game with a falling shape that needed to be rotated to fit into a row at the bottom of the screen. In another room, the Receiver sat with a transcranial magnetic stimulation (TMS) apparatus positioned near the visual cortex. The Receiver could only see the falling shape, not the gap that it needed to fill, so their decision to rotate the block was not based on the gap that needed to be filled.

If a Sender thought the Receiver should rotate the shape, they would look at a light flashing at 17 hertz (Hz) for Yes. Otherwise, they would look at a light flashing at 15 Hz for No. Based on the frequency that was more apparent in the Senders’ EEG data, the Receiver’s TMS apparatus would stimulate their visual cortex above or below a threshold, signaling the Receiver to make the choice of whether to rotate. With this experiment, the Receiver was correct 81 percent of the time.

What makes this study particularly interesting is the incorporation of a situation where the Senders do not agree, that is, where one Sender wants to rotate the shape and the other does not. To test this, the researchers intentionally made one Sender less reliable than the other to see if the Receiver could figure out whether one Sender was more likely to be correct than the other. They quantified this by creating a “Mutual Information” score that measured how reliably information was conveyed from one of the Senders to the Receiver, and saw that the Receiver was statistically more likely to act on information from the more reliable Sender than from the less reliable one.
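The two ideas in this description, picking Yes or No from whichever flicker frequency is stronger in the EEG and scoring a Sender with mutual information, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the study's method: the EEG trace is simulated, the function names and sampling rate are hypothetical, and the score below is the textbook Shannon mutual information, which may differ from how the researchers computed theirs. Only the 17 Hz and 15 Hz values come from the article.

```python
import math
import random
from collections import Counter

YES_HZ, NO_HZ = 17.0, 15.0  # flicker frequencies given in the article


def power_at(samples, freq_hz, fs):
    """Signal power at a single frequency, computed as a one-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / fs) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / fs) for i, s in enumerate(samples))
    return (re * re + im * im) / len(samples) ** 2


def decode_sender(samples, fs=250):
    """Return True (rotate) when 17 Hz is stronger than 15 Hz in the trace."""
    return power_at(samples, YES_HZ, fs) > power_at(samples, NO_HZ, fs)


def simulate_eeg(looking_at_hz, fs=250, seconds=2.0, seed=0):
    """Hypothetical stand-in for a recording: a flicker-locked sine buried in noise."""
    rng = random.Random(seed)
    return [
        math.sin(2 * math.pi * looking_at_hz * i / fs) + rng.gauss(0, 1.0)
        for i in range(int(fs * seconds))
    ]


def mutual_information(xs, ys):
    """Shannon mutual information, in bits, between two equal-length sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )


print(decode_sender(simulate_eeg(YES_HZ)))  # Sender looks at the 17 Hz light
print(decode_sender(simulate_eeg(NO_HZ)))   # Sender looks at the 15 Hz light
```

For the scoring part, a Sender whose signals always match the Receiver's choices, for example `mutual_information([0, 1, 0, 1], [0, 1, 0, 1])`, carries a full bit per trial, while a Sender whose signals are unrelated to the choices scores zero. That is one way to read "how reliably information was conveyed".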

The future of video games. Image credit: Petter Kallioinen via Wikimedia Commons

Looking forward, this study may lay the groundwork for multi-person brain-to-brain interfaces that allow sharing of more complex thoughts or actions. Imagine a world where ideas and experiences could be shared directly with other people, allowing them to quite literally walk in your shoes or help you navigate unfamiliar and dangerous territory. From divers to soldiers to patients who cannot communicate verbally, brain-to-brain interfaces could allow groups to make decisions based on their collective knowledge without saying a word.

With machine learning becoming increasingly adept at understanding our brain signals, it is certainly possible. Such a breakthrough is still a ways off, though: scientists currently don’t have a deep enough understanding of the brain to relate EEG signals to the specific actions or thoughts needed to realize that goal.

There are also ethical ramifications to consider. After all, ideas and thoughts should only be transmitted between people if both parties agree. As this technology develops, the safety and security of the people who will eventually use it should be considered at every step.

Read the full article.


1st d3 bar chart

Created a first simple bar chart in D3.js. Thanks to the datavizdad for the guidance.

______________________________________________________________


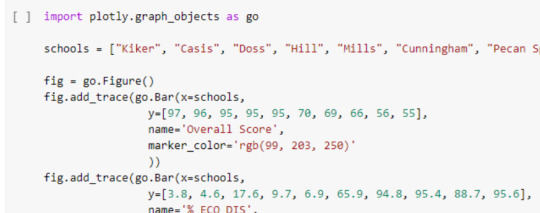

Final Project for 5709 Data Visualization and Communication utilizing Plotly Chart Studio, Plotly for Python and Google Colab.

______________________________________________________________
