#dgx

Text

Nvidia HGX vs DGX: Key Differences in AI Supercomputing Solutions

Nvidia HGX vs DGX: What are the differences?

Nvidia is comfortably riding the AI wave. And for at least the next few years, it will likely not be dethroned as the AI hardware market leader. With its extremely popular enterprise solutions powered by the H100 and H200 “Hopper” lineup of GPUs (and now B100 and B200 “Blackwell” GPUs), Nvidia is the go-to manufacturer of high-performance computing (HPC) hardware.

Nvidia DGX is an integrated AI HPC solution targeted toward enterprise customers needing immensely powerful workstation and server solutions for deep learning, generative AI, and data analytics. Nvidia HGX is based on the same underlying GPU technology. However, HGX is a customizable enterprise solution for businesses that want more control and flexibility over their AI HPC systems. But how do these two platforms differ from each other?

Nvidia DGX: The Original Supercomputing Platform

It should surprise no one that Nvidia’s primary focus isn’t on its GeForce lineup of gaming GPUs anymore. Sure, the company still accounts for the lion’s share of the best gaming GPUs, but its recent resounding success is driven by its enterprise, data center, and AI-focused workstation GPUs.

Overview of DGX

The Nvidia DGX platform integrates up to 8 Tensor Core GPUs with Nvidia’s AI software to power accelerated computing and next-gen AI applications. It’s essentially a rack-mount chassis containing 4 or 8 GPUs connected via NVLink, high-end x86 CPUs, and a bunch of Nvidia’s high-speed networking hardware. A single DGX B200 system is capable of 72 petaFLOPS of training and 144 petaFLOPS of inference performance.
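To put those headline figures in per-GPU terms, the short sketch below divides them evenly across the eight GPUs in a DGX B200. It assumes the system totals are simply eight identical per-GPU peaks. These are peak figures, so real workloads will land below them, and the names are illustrative rather than taken from any Nvidia tool.

```python
# Rough per-GPU breakdown of the DGX B200 headline figures quoted above.
# Assumption: each system total is the sum of 8 identical per-GPU peaks.

GPUS_PER_DGX_B200 = 8
SYSTEM_PFLOPS = {"training": 72, "inference": 144}


def per_gpu_pflops(system_pflops: float, gpus: int = GPUS_PER_DGX_B200) -> float:
    """Split a system-level peak figure evenly across its GPUs."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return system_pflops / gpus


for workload, total in SYSTEM_PFLOPS.items():
    print(f"{workload}: {total} PF total -> {per_gpu_pflops(total)} PF per GPU")
```

This works out to 9 petaFLOPS of training and 18 petaFLOPS of inference per GPU, under the even-split assumption.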

Key Features of DGX

AI Software Integration: DGX systems come pre-installed with Nvidia’s AI software stack, making them ready for immediate deployment.

High Performance: With up to 8 Tensor Core GPUs, DGX systems provide top-tier computational power for AI and HPC tasks.

Scalability: Solutions like the DGX SuperPOD integrate multiple DGX systems to form extensive data center configurations.

Current Offerings

The company currently offers both Hopper-based (DGX H100) and Blackwell-based (DGX B200) systems optimized for AI workloads. Customers can go a step further with the DGX SuperPOD built from DGX GB200 systems, each of which integrates 36 liquid-cooled Nvidia GB200 Grace Blackwell Superchips comprising 36 Nvidia Grace CPUs and 72 Blackwell GPUs. This monstrous setup spans multiple racks connected through Nvidia Quantum InfiniBand, allowing companies to scale to thousands of GB200 Superchips.
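Each GB200 Superchip pairs one Grace CPU with two Blackwell GPUs, which explains the 36-CPU / 72-GPU split. Below is a minimal sketch of that arithmetic. It assumes, as we read Nvidia's materials, that the 36-superchip figure applies to each DGX GB200 system. The eight-system count in the usage line is an arbitrary example, not a published SuperPOD size.

```python
from dataclasses import dataclass

# Composition of one GB200 Grace Blackwell Superchip, per the text above.
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2
# Assumption: 36 superchips per liquid-cooled DGX GB200 system.
SUPERCHIPS_PER_SYSTEM = 36


@dataclass(frozen=True)
class Totals:
    superchips: int
    grace_cpus: int
    blackwell_gpus: int


def totals_for(systems: int) -> Totals:
    """Count superchips, Grace CPUs and Blackwell GPUs across `systems` DGX GB200 systems."""
    chips = systems * SUPERCHIPS_PER_SYSTEM
    return Totals(chips, chips * CPUS_PER_SUPERCHIP, chips * GPUS_PER_SUPERCHIP)


print(totals_for(1))  # Totals(superchips=36, grace_cpus=36, blackwell_gpus=72)
print(totals_for(8))  # arbitrary example size
```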

Legacy and Evolution

Nvidia has been selling DGX systems for quite some time now, from the original DGX-1 in 2016 to modern DGX B200 systems. Across the Pascal, Volta, Ampere, Hopper, and Blackwell generations, Nvidia’s enterprise HPC business has pioneered numerous innovations and helped give birth to its customizable platform, Nvidia HGX.

Nvidia HGX: For Businesses That Need More

Build Your Own Supercomputer

For OEMs looking for custom supercomputing solutions, Nvidia HGX offers the same peak performance as the Hopper- and Blackwell-based DGX systems but lets OEMs tweak the rest of the system as needed. For instance, customers can modify the CPUs, RAM, storage, and networking configuration as they please. In fact, the HGX baseboard is the same one used inside Nvidia DGX systems, and it is built to Nvidia’s own standard.

Key Features of HGX

Customization: OEMs have the freedom to modify components such as CPUs, RAM, and storage to suit specific requirements.

Flexibility: HGX allows for a modular approach to building AI and HPC solutions, giving enterprises the ability to scale and adapt.

Performance: Nvidia offers HGX in 4-GPU and 8-GPU configurations, with the latest Blackwell-based baseboards available only in the 8-GPU configuration. An HGX B200 system can deliver up to 144 petaFLOPS of inference performance, the same as a DGX B200.
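One way to picture the split described above is to treat the GPU baseboard as a fixed, Nvidia-defined component and the host platform around it as the OEM's choice. The classes, part names, and sizes below are purely illustrative. They are hypothetical placeholders, not real SKUs or any Nvidia API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class GpuBaseboard:
    """Fixed by Nvidia: the OEM does not change what is on the HGX board."""
    name: str
    gpu_count: int


@dataclass
class HostPlatform:
    """Chosen by the OEM around the baseboard (hypothetical example values)."""
    cpu: str
    ram_gb: int
    storage_tb: int
    nics: list[str] = field(default_factory=list)


@dataclass
class Server:
    baseboard: GpuBaseboard
    host: HostPlatform


# Blackwell HGX baseboards come in the 8-GPU configuration only.
HGX_B200_8GPU = GpuBaseboard("HGX B200", gpu_count=8)


def build_oem_server(cpu: str, ram_gb: int, storage_tb: int, nics: list[str]) -> Server:
    """An HGX-style build: same GPU baseboard, OEM-selected everything else."""
    return Server(HGX_B200_8GPU, HostPlatform(cpu, ram_gb, storage_tb, nics))


a = build_oem_server("vendor-cpu-A", 2048, 30, ["nic-1", "nic-2"])
b = build_oem_server("vendor-cpu-B", 4096, 60, ["nic-1"])
print(a.baseboard == b.baseboard)  # True: the GPU side is identical
print(a.host == b.host)            # False: the host side differs
```

In this model, a DGX system corresponds to a single, Nvidia-chosen `HostPlatform` value rather than an OEM choice.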

Applications and Use Cases

HGX is designed for enterprises that need high-performance computing solutions but also want the flexibility to customize their systems. It’s ideal for businesses that require scalable AI infrastructure tailored to specific needs, from deep learning and data analytics to large-scale simulations.

Nvidia DGX vs. HGX: Summary

Simplicity vs. Flexibility

While Nvidia DGX represents Nvidia’s line of standardized, unified, and integrated supercomputing solutions, Nvidia HGX unlocks greater customization and flexibility for OEMs to offer more to enterprise customers.

Rapid Deployment vs. Custom Solutions

With Nvidia DGX, the company leans more into cluster solutions that integrate multiple DGX systems into huge and, in the case of the DGX SuperPOD, multi-million-dollar data center solutions. Nvidia HGX, on the other hand, is another way of selling HPC hardware to OEMs at a greater profit margin.

Unified vs. Modular

Nvidia DGX brings rapid deployment and a seamless, hassle-free setup for bigger enterprises. Nvidia HGX provides modular solutions and greater access to the wider industry.

FAQs

What is the primary difference between Nvidia DGX and HGX?

The primary difference lies in customization. DGX offers a standardized, integrated solution ready for deployment, while HGX provides a customizable platform that OEMs can adapt to specific needs.

Which platform is better for rapid deployment?

Nvidia DGX is better suited for rapid deployment as it comes pre-integrated with Nvidia’s AI software stack and requires minimal setup.

Can HGX be used for scalable AI infrastructure?

Yes, Nvidia HGX is designed for scalable AI infrastructure, offering flexibility to customize and expand as per business requirements.

Are DGX and HGX systems compatible with all AI software?

Both DGX and HGX systems are compatible with Nvidia’s AI software stack, which supports a wide range of AI applications and frameworks.

Final Thoughts

Choosing between Nvidia DGX and HGX ultimately depends on your enterprise’s needs. If you require a turnkey solution with rapid deployment, DGX is your go-to. However, if customization and scalability are your top priorities, HGX offers the flexibility to tailor your HPC system to your specific requirements.

Muhammad Hussnain



Text

Quest Diagnostics Inc defies expectations with a surge in earnings per share despite a decline in revenue https://csimarket.com/stocks/news.php?code=DGX&date=2024-02-23132508&utm_source=dlvr.it&utm_medium=tumblr


Text

Exploring the Key Differences: NVIDIA DGX vs NVIDIA HGX Systems

A frequent topic of inquiry we encounter involves understanding the distinctions between the NVIDIA DGX and NVIDIA HGX platforms. Despite the resemblance in their names, these platforms represent distinct approaches NVIDIA employs to market its 8x GPU systems featuring NVLink technology. The shift in NVIDIA’s business strategy was notably evident during the transition from the NVIDIA P100 “Pascal” to the V100 “Volta” generations. This period marked the significant rise in prominence of the HGX model, a trend that has continued through the A100 “Ampere” and H100 “Hopper” generations.

NVIDIA DGX versus NVIDIA HGX: What Is the Difference?

Focusing primarily on the 8x GPU configurations that utilize NVLink, NVIDIA’s product lineup includes the DGX and HGX lines. While there are other models like the 4x GPU Redstone and Redstone Next, the flagship DGX/HGX (Next) series predominantly features 8x GPU platforms with SXM architecture. To understand these systems better, let’s delve into the process of building an 8x GPU system based on the NVIDIA Tesla P100 with SXM2 configuration.

DeepLearning12 Initial Gear Load Out

In the P100 era, each server manufacturer designed and built its own baseboard to accommodate the GPUs. NVIDIA supplied the GPUs in the SXM form factor, and they were then installed into servers either by the server manufacturers themselves or by a third party like STH (ServeTheHome).

DeepLearning12 Half Heatsinks Installed

This task proved to be quite challenging. We encountered an issue with a prominent server manufacturer based in Texas, where they had applied an excessively thick layer of thermal paste on the heatsinks. This resulted in damage to several trays of GPUs, with many experiencing cracks. This experience led us to create one of our initial videos, aptly titled “The Challenges of SXM2 Installation.” The difficulty primarily arose from the stringent torque specifications required during the GPU installation process.

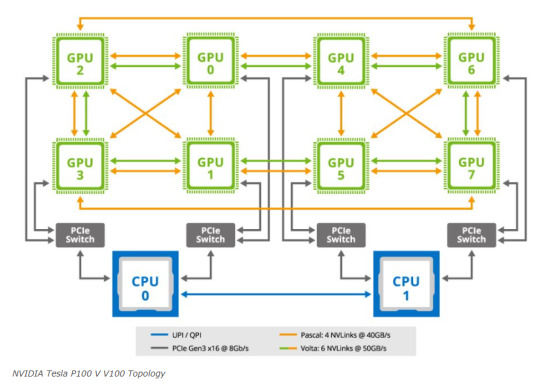

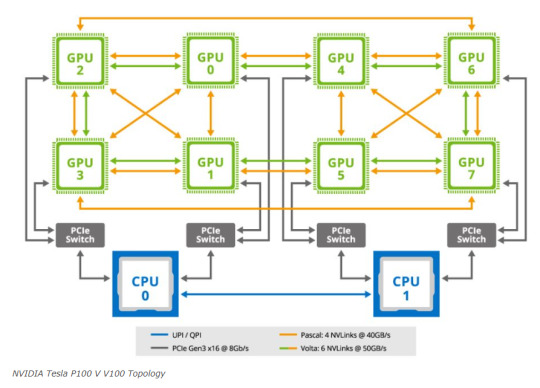

NVIDIA Tesla P100 vs V100 Topology

During this development, NVIDIA established a standard for the 8x SXM GPU platform. This standardization incorporated Broadcom PCIe switches, initially for host connectivity and later for InfiniBand connectivity as well.

Microsoft HGX 1 Topology

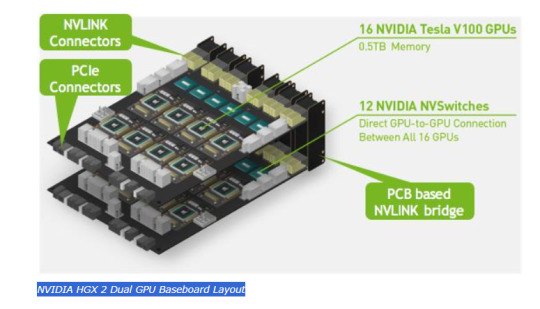

It also added NVSwitch. NVSwitch was a switch for the NVLink fabric that allowed higher performance communication between GPUs. Originally, NVIDIA had the idea that it could take two of these standardized boards and put them together with this larger switch fabric. The impact, though, was that now the NVIDIA GPU-to-GPU communication would occur on NVIDIA NVSwitch silicon and PCIe would have a standardized topology. HGX was born.
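On an NVSwitch-based HGX system, you would expect `nvidia-smi topo -m` to show every GPU pair connected over NVLink (entries such as `NV12`) rather than over PCIe or host paths (`PIX`, `PXB`, `PHB`, `NODE`, `SYS`). The sketch below checks that property on a captured matrix. The sample text is a made-up, trimmed 4-GPU example, not output from any system pictured here. Real output also carries extra columns and rows (NICs, CPU/NUMA affinity, a legend), and the parser skips those.

```python
# Hypothetical, trimmed `nvidia-smi topo -m` output for illustration only.
SAMPLE_TOPO = """\
        GPU0    GPU1    GPU2    GPU3    CPU Affinity
GPU0     X      NV12    NV12    NV12    0-63
GPU1    NV12     X      NV12    NV12    0-63
GPU2    NV12    NV12     X      NV12    64-127
GPU3    NV12    NV12    NV12     X      64-127
"""


def parse_gpu_links(topo: str) -> dict[tuple[str, str], str]:
    """Map each (row GPU, column GPU) pair to its link type, e.g. 'NV12'."""
    lines = [ln for ln in topo.splitlines() if ln.strip()]
    # GPU columns come first in the header; later columns are ignored.
    gpu_cols = [h for h in lines[0].split() if h.startswith("GPU")]
    links = {}
    for ln in lines[1:]:
        cells = ln.split()
        if not cells or not cells[0].startswith("GPU"):
            continue  # skip NIC rows, legend lines, etc.
        row = cells[0]
        for col, link in zip(gpu_cols, cells[1:1 + len(gpu_cols)]):
            links[(row, col)] = link
    return links


def all_pairs_nvlink(topo: str) -> bool:
    """True if every distinct GPU pair is connected over NVLink (NV#)."""
    links = parse_gpu_links(topo)
    return bool(links) and all(
        link.startswith("NV") for (r, c), link in links.items() if r != c
    )


print(all_pairs_nvlink(SAMPLE_TOPO))  # True
```

On a real machine, you could feed the parser `subprocess.run(["nvidia-smi", "topo", "-m"], capture_output=True, text=True).stdout` instead of the sample string.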

NVIDIA HGX 2 Dual GPU Baseboard Layout

Let’s delve into a comparison of the NVIDIA V100 setup in a server from 2020, renowned for its standout color scheme, particularly in the NVIDIA SXM coolers. When contrasting this with the earlier P100 version, an interesting detail emerges. In the Gigabyte server that housed the P100, one could notice that the SXM2 heatsinks were without branding. This marked a significant shift in NVIDIA’s approach. With the advent of the NVSwitch baseboard equipped with SXM3 sockets, NVIDIA upped its game by integrating not just the sockets but also the GPUs and their cooling systems directly. This move represented a notable advancement in their hardware design strategy.

Consequences

The consequences of this development were significant. Server manufacturers could now buy an 8-GPU module directly from NVIDIA instead of mounting the GPUs and applying thermal paste themselves. This change marked the inception of the NVIDIA HGX topology. It gave server vendors the flexibility to customize the surrounding hardware as they desired. They could choose their preferred RAM, CPUs, storage, and other components, while the GPU configuration stayed fixed by the NVIDIA HGX baseboard.

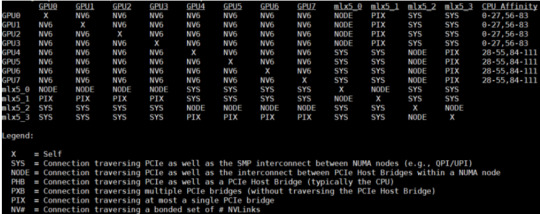

Inspur NF5488M5 Nvidia Smi Topology

This was very successful. In the next generation, the NVSwitch heatsinks got larger, the GPUs lost a great paint job, but we got the NVIDIA A100.

The codename for this baseboard is “Delta”.

Officially, this board was called the NVIDIA HGX.

Inspur NF5488A5 NVIDIA HGX A100 8 GPU Assembly 8x A100 And NVSwitch Heatsinks Side 2

NVIDIA, along with its OEM partners and clients, recognized that raising the power budget let the same number of GPUs do more work. The drawback was that higher power consumption meant more heat. This prompted the introduction of liquid-cooled NVIDIA HGX A100 “Delta” platforms to manage that heat efficiently.

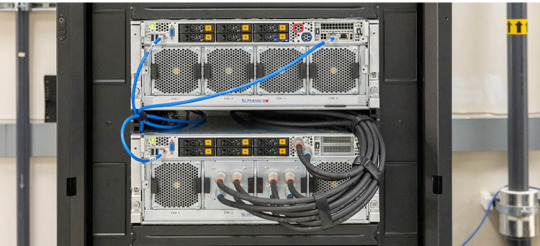

Supermicro Liquid Cooling

The HGX A100 assembly was initially introduced with NVIDIA’s own branded air-cooling heatsinks.

In the newest “Hopper” series, the cooling systems were upscaled to manage the increased demands of the more powerful GPUs and the enhanced NVSwitch architecture. This upgrade is exemplified in the NVIDIA HGX H100 platform, also known as “Delta Next”.

NVIDIA DGX H100

NVIDIA’s DGX and HGX platforms represent cutting-edge GPU technology, and each serves distinct needs in the industry. The DGX series, evolving since the P100 days, integrates HGX baseboards into complete server solutions. Notable examples include the DGX V100 and DGX A100. These systems are built for NVIDIA by OEM partners that have changed from generation to generation, and they offer fixed configurations that ensure consistent, high-quality performance.

While the DGX H100 sets a high standard, the HGX H100 platform caters to clients seeking customization. It allows OEMs to tailor systems to specific requirements, offering variations in CPU types (including AMD or ARM), Xeon SKU levels, memory, storage, and network interfaces. This flexibility makes HGX ideal for diverse, specialized applications in GPU computing.

Conclusion

NVIDIA’s HGX baseboards streamline the process of integrating 8 GPUs with advanced NVLink and PCIe switched fabric technologies. This innovation allows NVIDIA’s OEM partners to create tailored solutions, giving NVIDIA the flexibility to price HGX boards with higher margins. The HGX platform is primarily focused on providing a robust foundation for custom configurations.

In contrast, NVIDIA’s DGX approach targets the development of high-value AI clusters and their associated ecosystems. The DGX brand, distinct from the DGX Station, represents NVIDIA’s comprehensive systems solution.

Particularly noteworthy are the NVIDIA HGX A100 and HGX H100 platforms, which have garnered significant attention following their adoption by leading AI organizations such as OpenAI, the company behind ChatGPT. The 8x NVIDIA A100 configuration in particular shows what these platforms can do in powering advanced AI tools. For a deeper dive into the various HGX A100 configurations and their role in AI development, the hardware behind ChatGPT offers insight into the 8x NVIDIA A100's power and efficiency.

M.Hussnain


#nvidia#nvidia dgx h100#nvidia hgx#DGX#HGX#Nvidia HGX A100#Nvidia HGX H100#Nvidia H100#Nvidia A100#Nvidia DGX H100#viperatech


Text

Smart Trading: Expert Signals & Strategy - 5 January 2024

The stock market is a dynamic and complex environment that requires constant attention and adaptation. To succeed in this field, you need smart trading, expert signals, and a strategy. Smart trading is the use of sophisticated software and algorithms to monitor and predict market movements. Expert signals are the tips and advice from professional traders or experts who have a deep…


#ALB#AMC#ATAI#BIIB#BTI#C3 AI#CLX#CMCSA#CTVA#Dash#DGX#DVN#EPD#ETR#EVRG#HAL#HD#ICL#KR#LKQ#LVS#MAA#MCD#MDT#MGPI#MIRM#MKC#NI#NOW#PLTK


Photo

Laughing Water Capital is thriving. The city is a center of financial activity and the most important metropolis in the world. The future is bright and the city is prospering. That is, until the letter arrives. The letter is from the mayor and it is not good news. The city is in trouble, the finances are a mess and the future is in doubt. The only way to save the city is to sell off the Laughing Water Capital. The news is a shock and the city is in an uproar. The decision will devastate the city, but there is no choice. The sale goes through and the city is saved. But at what cost?


Text

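Nvidia plans “Taipei-1,” a supercomputer combining DGX H100 and OVX systems

Nvidia is planning to unveil a new supercomputer in Taiwan called “Taipei-1.”

The supercomputer will be equipped with 64 DGX H100 AI systems and 64 OVX systems. Each DGX has eight H100 GPUs and two Intel Xeon Platinum 8480C CPUs and targets demanding AI workloads. OVX pairs L40 GPUs with high-performance networking and is designed more for digital twin and simulation use cases.

The platform is meant as a way for companies and researchers to evaluate Nvidia products before, or instead of, purchasing them, in the form of Nvidia DGX…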
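For scale, the per-system counts above multiply out as follows. The article gives no per-system GPU count for the OVX machines, so the sketch totals only the DGX side.

```python
# Component totals for Taipei-1, using the per-system counts quoted above.
DGX_H100_SYSTEMS = 64
H100_PER_DGX = 8
XEON_8480C_PER_DGX = 2
OVX_SYSTEMS = 64  # L40 count per OVX system is not given, so it is not totaled

h100_total = DGX_H100_SYSTEMS * H100_PER_DGX        # 512
xeon_total = DGX_H100_SYSTEMS * XEON_8480C_PER_DGX  # 128

print(f"H100 GPUs: {h100_total}")
print(f"Xeon Platinum 8480C CPUs (DGX side): {xeon_total}")
print(f"OVX systems: {OVX_SYSTEMS}")
```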



Video


Nvidia DGX1 H100 - NVIDIA DGX with 8x H100 GPUs



Text

How Jensen Huang's Nvidia Is Powering the A.I. Revolution


Text

NVIDIA (NVDA) joins the Trillion Dollar Club

NVIDIA has joined Alphabet (GOOG), Apple (AAPL), and Microsoft (MSFT) in the trillion dollar club. NVIDIA (NVDA) achieved a $1 trillion market capitalization on 30 May 2023.

NVIDIA’s stock price needs to stay above $404.86 to keep the trillion-dollar valuation, CNBC reports. NVIDIA is therefore close to regaining $1 trillion status, since Mr. Market was paying $391.71 for its shares on 5 June…
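The two share prices are enough to work out what the CNBC threshold implies. Below is a minimal sketch. It assumes the $404.86 threshold is simply $1 trillion divided by the share count, so the implied share count is our own back-calculation, not a reported figure.

```python
TRILLION = 1_000_000_000_000
THRESHOLD_PRICE = 404.86  # price needed for a $1T market cap (per CNBC, as quoted)
PRICE_JUNE_5 = 391.71     # share price on 5 June 2023 (as quoted)

# Assumption: threshold = $1T / shares outstanding, so shares = $1T / threshold.
implied_shares = TRILLION / THRESHOLD_PRICE
cap_june_5 = implied_shares * PRICE_JUNE_5          # market cap at the 5 June price
gain_needed = THRESHOLD_PRICE / PRICE_JUNE_5 - 1    # fractional rise needed to regain $1T

print(f"Implied shares outstanding: {implied_shares / 1e9:.2f} billion")  # 2.47 billion
print(f"Market cap on 5 June: ${cap_june_5 / 1e9:.1f} billion")           # $967.5 billion
print(f"Rise needed to regain $1T: {gain_needed:.2%}")                    # 3.36%
```

In other words, a rise of roughly 3.4% from the 5 June price would have restored the $1 trillion valuation.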


#AI Fuels NVIDIA’s Explosive Growth#NVIDIA (NASDAQ: NVDA)#NVIDIA (NVDA)#NVIDIA (NVDA) joins the Trillion Dollar Club#NVIDIA DGX Cloud#The Trillion Dollar Cloud


Text

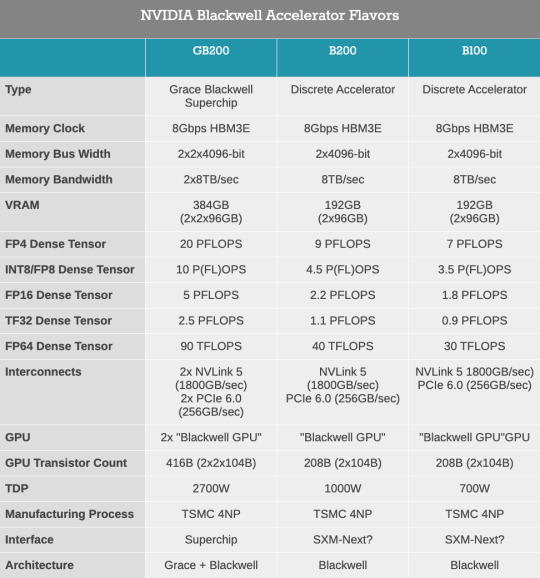

NVIDIA Steps Up Its Game: Unveiling the Blackwell Architecture and the Powerhouse B200/B100 Accelerators

NVIDIA, a titan in the world of generative AI accelerators, is not one to rest on its laurels. Despite already dominating the accelerator market, the tech giant is determined to push the envelope further. With the unveiling of its next-generation Blackwell architecture and the B200/B100 accelerators, NVIDIA is set to redefine what’s possible in AI computing yet again.

As we witnessed the return of the in-person GTC for the first time in five years, NVIDIA’s CEO, Jensen Huang, took center stage to introduce an array of new enterprise technologies. However, it was the announcement of the Blackwell architecture that stole the spotlight. This move marks a significant leap forward, building upon the success of NVIDIA’s H100/H200/GH200 series.

Named after the American statistical and mathematical pioneer Dr. David Harold Blackwell, this architecture embodies NVIDIA’s commitment to innovation. Blackwell aims to elevate the performance of NVIDIA’s datacenter and high-performance computing (HPC) accelerators by integrating more features, flexibility, and transistors. This approach is a testament to NVIDIA’s strategy of blending hardware advancements with software optimization to tackle the evolving needs of high-performance accelerators.

With an impressive 208 billion transistors across the complete accelerator, NVIDIA’s first multi-die chip represents a bold step in unified GPU performance. The Blackwell GPUs are designed to function as a single CUDA GPU, thanks to the NV-High Bandwidth Interface (NV-HBI) facilitating an unprecedented 10TB/second of bandwidth. This architectural marvel is complemented by up to 192GB of HBM3E memory, significantly enhancing both the memory capacity and bandwidth.

However, it’s not just about packing more power into the hardware. The Blackwell architecture is engineered to dramatically boost AI training and inference performance while achieving remarkable energy efficiency. This ambition is evident in NVIDIA’s projections of a 4x increase in training performance and a staggering 30x surge in inference performance at the cluster level.

Moreover, NVIDIA is pushing the boundaries of precision with its second-generation transformer engine, capable of handling computations down to FP4 precision. This advancement is crucial for optimizing inference workloads, offering a significant leap in throughput for AI models.
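The post doesn't spell out the bit layout, but FP4 here is commonly the E2M1 format (1 sign bit, 2 exponent bits, 1 mantissa bit) described in the OCP Microscaling (MX) specification. Assuming that layout, the sketch below enumerates the few values a 4-bit float can hold. It then quantizes a block of numbers using one shared scale factor, which shows why per-block scaling matters so much at this precision. The simple max-abs scaling is an illustration only, not Nvidia's Transformer Engine recipe.

```python
# Minimal FP4 (E2M1) sketch: 1 sign bit, 2 exponent bits (bias 1), 1 mantissa bit.
# Assumption: E2M1 as in the OCP Microscaling spec; not Nvidia's exact recipe.


def e2m1_decode(code: int) -> float:
    """Decode a 4-bit E2M1 code (0..15) to its real value."""
    sign = -1.0 if code & 0b1000 else 1.0
    exp = (code >> 1) & 0b11
    man = code & 0b1
    if exp == 0:
        magnitude = 0.5 * man  # subnormal: 0 or 0.5
    else:
        magnitude = 2.0 ** (exp - 1) * (1.0 + 0.5 * man)
    return sign * magnitude


# Every value a 4-bit E2M1 float can represent (+0 and -0 collapse to one entry).
FP4_GRID = sorted({e2m1_decode(c) for c in range(16)})


def quantize_block(xs: list[float]) -> tuple[list[float], float]:
    """Quantize a block with one shared scale so that its max |x| maps to 6.0."""
    amax = max((abs(x) for x in xs), default=0.0)
    scale = amax / 6.0 if amax > 0 else 1.0
    # Round each scaled value to the nearest representable FP4 value.
    snapped = [min(FP4_GRID, key=lambda g: abs(x / scale - g)) for x in xs]
    return [g * scale for g in snapped], scale


print([e2m1_decode(c) for c in range(8)])  # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
print(quantize_block([1.2, -3.1, 12.0]))   # ([1.0, -3.0, 12.0], 2.0)
```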

In summary, NVIDIA’s Blackwell architecture and the B200/B100 accelerators represent a formidable advancement in AI accelerator technology. By pushing the limits of architectural efficiency, memory capacity, and computational precision, NVIDIA is not just maintaining its leadership in the AI space but setting new benchmarks for performance and efficiency. As we await the rollout of these groundbreaking products, the tech world watches with anticipation, eager to see how NVIDIA’s latest innovations will shape the future of AI computing.

Muhammad Hussnain



Text

Yamaha DGX-660 Portable Digital Piano Review - Contemporary & Versatile


Introduction

Hello and welcome to our Yamaha DGX-660 review here at Merriam Pianos. In this article, we'll be looking at the Yamaha DGX-660 Portable Grand, one of the most popular Yamaha keyboards.

This is an 88-key digital piano that's been on the market for a few years now, but it brings a unique combination of features to a price point that really doesn't have very many other close comparable options, and has a ton of favorable customer reviews out there.

As such, we've wanted to review this instrument for a while. We'll take a look at the action, the sound engine, and explore all of the cool arranger-type features that the DGX-660 digital piano is outfitted with.

Thanks for joining us!

Yamaha's CFIIIS Concert Grand Piano Sound Sampling

Let's start right away by checking out the sound on the DGX-660.

The first thing that stands out, and it also happens to be the case with the Yamaha P125, is that the speakers are upward facing.

If you have a powerful set of speakers, it is beneficial to have them pointing down, as the sound reflecting off the floor creates a big sense of space and better low end. Given the size and strength of the speakers here (two main drivers and two tweeters, quite high quality, driven by 12 watts of power), upward-facing is the better choice, and that's what Yamaha has done here.

Getting into the sound engine, we've got Yamaha's Pure CF sound engine, which uses sampling technology to reproduce Yamaha's CFIIIS 9-foot concert grand piano. The Pure CF sound engine is a great piano sound engine, and you're given a lot of control over the sound courtesy of the Piano Room feature.

Piano Room offers you control over things like Reverb algorithms, as well as sound-related aspects like damper resonance, essentially offering your own personal piano environment. There's a variety of piano patches as well, all with their own distinct character, but the default grand piano sound is definitely the focus.

The Pure CF engine offers 192-note maximum polyphony. A high count like this is important with any arranger-oriented instrument, because features like multitracking can eat up polyphony quickly.

In fact, while 192 notes for solo acoustic settings is more than sufficient, it's actually almost a bare minimum considering the variety of interactive features that the DGX-660 has.
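To see why a sustained multitrack arrangement can exhaust a fixed voice pool, here is a generic sketch of a polyphony-limited allocator that steals the oldest sounding voice when all slots are busy. It is a textbook illustration, not Yamaha's actual voice-allocation scheme, which the review doesn't describe. The 192 limit is the only figure taken from the review; the track and note counts in the usage lines are made up.

```python
from collections import OrderedDict


class VoicePool:
    """Fixed-size polyphony pool that steals the oldest voice when full."""

    def __init__(self, max_voices: int = 192):
        self.max_voices = max_voices
        # Keys are (track, note), kept in the order the notes started.
        self.active = OrderedDict()
        self.stolen = 0

    def note_on(self, track: int, note: int) -> None:
        key = (track, note)
        if key in self.active:
            self.active.move_to_end(key)  # retrigger: treat as the newest voice
            return
        if len(self.active) >= self.max_voices:
            self.active.popitem(last=False)  # cut off the oldest sounding voice
            self.stolen += 1
        self.active[key] = None

    def note_off(self, track: int, note: int) -> None:
        self.active.pop((track, note), None)


# Usage: 4 tracks, each holding 60 sustained notes (e.g. damper pedal down).
pool = VoicePool(max_voices=192)
for track in range(4):
    for note in range(36, 96):
        pool.note_on(track, note)
print(len(pool.active), pool.stolen)  # 192 48
```

Four tracks of 60 held notes each need 240 voices, so 48 of the earliest notes get cut off even though no single part comes close to 192.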

If you press Voice Mode on the front panel, you get access to over 500 total sounds, including 388 XGlite sounds (XGlite being Yamaha's version of GM2), as well as 15 SFX kits.

After the acoustic piano tones we already mentioned, you start getting into e-piano sounds, including all of those classic Yamaha electric pianos from the '80s.

There are some organs, lush sounding pads, synths, and a whole lot more given the large selection of voices here. There's also a variety of digital effects and a ton of DSP effects for altering the sounds.

Another cool sound feature is the Intelligent Acoustic Control (IAC) function, which automatically EQs sounds in real time for optimization, though there is a Master EQ setting as well.

Yamaha DGX-660 Graded Hammer Action

When it comes to the key action, the number of keys is 88 and we're looking at a weighted action, so those important boxes are checked off.

The action here is Yamaha's Graded Hammer Standard action (GHS), which has been on the market for a number of years now and is the most basic hammer action that Yamaha makes. As such, it has both pros and cons.

On paper, it's definitely lagging behind Roland's PHA-4 action and Kawai's RHCII. That doesn't mean you won't have a satisfying playing experience with the GHS keyboard, though.

It uses a dual-sensor design, which means its touch response won't be as accurate or expressive as a triple-sensor action. That said, new players will certainly be able to learn finger technique and start developing their piano skills with this action.

There also isn't any texture to speak of on the white keys, which means your fingers will probably catch on the keys in more humid playing environments. The black keys have a nice textured matte finish, but we'd like to see the same on the white keys, as is the case with most digital piano actions, including the more advanced ones Yamaha makes.

On the other hand, something we really like about the GHS action is the weight: it's a slightly heavier touch that resembles that of an acoustic piano action.

There's no escapement sensation, but only Roland's PHA-4 action offers escapement at this price point.

Most beginner players aren't going to have any issues with this action, but with the lack of dynamic sensitivity, there will be a learning curve when jumping to an acoustic piano.

Features

Now we'll get into the arranger aspects of this instrument, such as the auto-accompaniment and built-in songs. After all, if you don't need features like this, it probably doesn't make sense to be looking at the DGX-660 anyway.

Things like a basic metronome are covered, as is a pitch bend wheel.

The auto-accompaniment feature offers over 200 accompaniment styles. You get an extra level of control over how it actually accompanies you through the Fingering (Single Finger, Multi Finger or AI) and Style control settings. For example, Smart Chord ensures the chords being generated are diatonic to the notes you're playing.

This is a deep, intuitive feature that would be great for solo piano performances where you would like some accompaniment, especially with the Style Recommender.
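The review doesn't document Smart Chord's internals, but the underlying idea of "diatonic" chords is easy to show. In a major key, each scale degree gets a triad built only from notes of that scale. Below is a minimal sketch that handles major keys only and spells every note with sharps. The function names are our own, not anything from Yamaha.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the major scale


def major_scale(tonic: str) -> list[int]:
    """Pitch classes (0-11) of the major scale starting on `tonic`."""
    root = NOTE_NAMES.index(tonic)
    return [(root + step) % 12 for step in MAJOR_STEPS]


def diatonic_triad(tonic: str, degree: int) -> list[str]:
    """Triad on scale degree 1..7, stacking every other note of the scale."""
    scale = major_scale(tonic)
    i = degree - 1
    return [NOTE_NAMES[scale[(i + k) % 7]] for k in (0, 2, 4)]


def triad_quality(notes: list[str]) -> str:
    """Classify a root-position triad by its third and fifth above the root."""
    root, third, fifth = (NOTE_NAMES.index(n) for n in notes)
    intervals = ((third - root) % 12, (fifth - root) % 12)
    return {(4, 7): "major", (3, 7): "minor", (3, 6): "diminished"}[intervals]


for degree in range(1, 8):
    chord = diatonic_triad("C", degree)
    print(degree, chord, triad_quality(chord))
```

In C major this yields the familiar pattern of major, minor, minor, major, major, minor, and diminished triads, all built from the white keys.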

Next, there's a 5-track recorder that acts as a multitrack recording function. You can record WAV and SMF files to a USB device. USB audio recording is handy because you don't need to rely on the piano's limited memory, and you can easily transfer recordings to a DAW for further development. A big plus for the USB audio recorder here.

There's also a cool lyric display and score display feature wherein the LCD screen will display the sheet music or lyrics on any MIDI song the DGX-660 happens to be playing.

For connectivity, there's a DC In for the power adapter, a 1/4" headphone jack, a sustain pedal port (a basic footswitch is included, but you can upgrade to a better damper pedal), a 3-pedal port (for the optional LP-7A pedal unit), a microphone input, an AUX in (to connect a music player), and both USB to Host and USB to Device ports.

It doesn't have dedicated line outputs, but you can use the 1/4" stereo headphone jack as an output.

It's also lacking built-in Bluetooth connectivity, which is a bummer. However, Yamaha does make adapters that let you stream audio and MIDI over Wi-Fi from an Android or iOS device to the DGX-660 without cables.

A really nice design feature of the 660 is that it works as a portable slab-style digital piano, but it also includes a designer keyboard stand in the box, and you can add the optional LP-7A triple pedal to that if you'd like. A music rest is also included.

Closing Thoughts

For the money, the Yamaha DGX-660 is one of the best blends of features you can find, both in terms of the number of sounds, and in terms of the range of functions.

It's going to be a great option for someone who wants a ton of built-in sounds, and a wide degree of functionality for a ton of music fun.

It's not going to be an ideal choice for a student looking to learn piano, or for an advanced pianist, as the inherent limitations of the action will be challenging to overcome.

But, if you're not overly concerned with a super authentic piano touch and are otherwise enticed by the total package you're seeing here, the DGX-660 could be a great fit.

Shop more digital pianos from Merriam Pianos.

The post Yamaha DGX-660 Portable Digital Piano Review — Contemporary & Versatile first appeared on Merriam Pianos

2359 Bristol Cir #200, Oakville, ON L6H 6P8

merriammusic.com

(905) 829–2020



Text

Smart Trading: Expert Signals & Strategy - 29 December 2023

Learn how to use the Smart Trading Expert Signals strategy to increase your profits from trading. Trade win rate is 48.18%.

#PWR #RKT #NEE #NI #SCHW #DUK #EL #SABR #TLRY #WAT #MCD #CPB #EPD #BURL #MDT #CMA #IPG #ATAI #ILMN #CLH #WRLD #HD #ALB #CPMS #UMC

The stock market is a dynamic and complex environment that requires constant attention and adaptation. To succeed in this field, you need smart trading, expert signals, and a strategy. Smart trading is the use of sophisticated software and algorithms to monitor and predict market movements. Expert signals are the tips and advice from professional traders or experts who have a deep…


#AEYE#ALB#ARM#ATAI#ATUS#BAER#BIIB#BMBL#BURL#C3 AI#CLX#CMCSA#CMPS#CTVA#Dash#DGX#DVN#EL#EMR#FVRR#GM#HAL#HD#ICL#ILMN#IPG#KR#LKQ#LL#LVS


Text

My tumblr is FIXED, BABY!!!

So... have some ikemen Persona 4 Arena to celebrate. Tbh I’d do anything for a game centering around this trio specifically

Source: Dgx on Pixiv

#persona 4#tohru adachi#persona#souji seta#yu narukami#adachi#p4#persona 4 adachi#persona 4 arena#persona 4 arena ultimax#sho minazuki#p4a#persona 4 protagonist
