#docker overlay networks


Docker and Kubernetes Training - Hyderabad

Docker Overlay Network Without Swarm

Introduction:

Docker has revolutionized the way applications are deployed and managed by enabling containerization. Among the networking options Docker provides is the overlay network. While it is commonly associated with Docker Swarm, an overlay network can also be used without Swarm in various deployment scenarios.

Understanding Docker Overlay Network:

Overlay networks in Docker enable communication between containers across multiple Docker hosts. This network type abstracts the underlying physical network infrastructure, allowing containers to communicate as if they were on the same host.

Deployment without Swarm:

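Contrary to popular belief, Docker overlay networks can be set up and used without deploying Swarm services. While Swarm provides orchestration capabilities for managing multiple Docker hosts, overlay networks can be created and managed with Docker's native networking commands. There is one prerequisite: on current Docker Engine releases, `docker network create -d overlay` is only accepted on a swarm manager node (for example after `docker swarm init`), and the network must be created with `--attachable` so that standalone containers can join it. Older engines could run overlay networks with no swarm at all by pointing every daemon at an external key-value store (the `--cluster-store` daemon option), a mode deprecated in Docker 20.10 and removed in 23.0.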

Here's a step-by-step guide to deploying a Docker overlay network without Swarm:

Network Creation: Start by creating an overlay network with the `docker network create` command, specifying `overlay` as the driver (`-d overlay`) to set the network type.

Connect Containers: Once the overlay network is created, containers can be connected to it using the `docker network connect` command. Specify the network name and the container you want to connect to the overlay network.

Container Communication: Containers connected to the same overlay network can communicate with each other using their container names or IP addresses. Docker handles the routing of network traffic between containers seamlessly, regardless of the underlying host infrastructure.

Network Configuration: Docker also offers advanced network configuration options such as custom subnets and gateways (`--subnet`, `--gateway`), control over ingress and egress traffic, and encryption of traffic between containers (`--opt encrypted`).
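The steps above can be sketched as a small dry-run script. It is an illustration added here rather than code from the post: it only assembles the `docker` command lines and runs them with `subprocess` when asked. It assumes a current Docker Engine on a swarm manager node (where `docker network create -d overlay` is accepted) and uses `--attachable` so that standalone containers can join; the network name `my-overlay`, the subnet `10.10.0.0/24`, and the containers `web` and `db` are hypothetical.

```python
import shlex
import subprocess


def overlay_commands(network, subnet, containers, encrypted=False):
    """Return argv lists: create an attachable overlay, then connect each container."""
    create = [
        "docker", "network", "create",
        "-d", "overlay",          # overlay driver: multi-host (VXLAN-based) network
        "--attachable",           # let standalone (non-service) containers join
        f"--subnet={subnet}",     # address block Docker assigns container IPs from
    ]
    if encrypted:
        create += ["--opt", "encrypted"]  # encrypt overlay traffic between hosts
    create.append(network)
    # `docker network connect NETWORK CONTAINER` attaches an existing container
    connects = [["docker", "network", "connect", network, name] for name in containers]
    return [create] + connects


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical names: network "my-overlay", containers "web" and "db".
    run(overlay_commands("my-overlay", "10.10.0.0/24", ["web", "db"], encrypted=True))
```

Calling `run(..., dry_run=False)` executes the commands in order and stops at the first failure (`check=True`). Once `web` and `db` are both attached, each can reach the other by container name through Docker's embedded DNS.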

Benefits of Using Overlay Networks without Swarm:

Deploying Docker overlay networks without Swarm offers several advantages:

Simplified Deployment: Setting up overlay networks without Swarm eliminates additional orchestration overhead, making it suitable for smaller deployments or development environments.

Flexibility: Without Swarm, developers have more control over the networking configuration and can tailor it to their specific requirements without relying on Swarm's default settings.

Compatibility: Docker overlay networks deployed without Swarm remain compatible with Docker Swarm environments, allowing for seamless integration or migration in the future.

Conclusion:

By understanding the fundamentals of overlay networks and leveraging Docker's native networking capabilities, developers can build scalable and resilient containerized applications without the need for additional orchestration layers.

Visualpath is the Leading and Best Institute for learning Docker and Kubernetes Online in Ameerpet, Hyderabad. Our Docker Online Training Course gives you the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit : https://www.visualpath.in/DevOps-docker-kubernetes-training.html

Blog : https://dockerandkubernetesonlinetraining.blogspot.com/


Exploring Docker Networking: Introduction to Network Drivers, Bridge Networks, Overlay Networks, and More

Docker networking plays a crucial role in connecting containers and enabling communication between them. Understanding the intricacies of Docker networking is essential for building scalable and distributed applications. In this in-depth guide, we will delve into various aspects of Docker networking, covering topics such as bridge networks, overlay networks, network drivers, IPAM drivers,…


Run Your Cyphernode ~ Francis Pouliot ~ Understanding Bitcoin


cyphernode: Modular Bitcoin full-node microservices API server architecture and utilities toolkit to build scalable, secure and featureful apps and services without trusted third parties.

An open-source self-hosted API which allows you to spawn and call your encrypted overlay network of dockerized Bitcoin and crypto software projects (virtual machines). You can use it to build Bitcoin services…


This guide will walk you through the installation of the CRI-O container runtime on Ubuntu 22.04|20.04|18.04. CRI-O is an OCI-based implementation of the Kubernetes Container Runtime Interface (CRI), designed to provide an integration path between OCI-conformant runtimes and the kubelet. CRI-O is created to provide the following core functionality:

Support for multiple image formats, including the existing Docker image format

Support for multiple means of downloading images, including trust and image verification

Container image management (managing image layers, overlay filesystems, etc.)

Container process lifecycle management

Monitoring and logging required to satisfy the CRI

Resource isolation as required by the CRI

The libraries used by CRI-O are:

Runtime: runc (or any OCI runtime-spec implementation) and oci runtime tools

Images: image management using containers/image

Storage: storage and management of image layers using containers/storage

Networking: networking support through the use of CNI

Install CRI-O Container Runtime on Ubuntu 22.04|20.04|18.04

We will use pre-built binary packages to install the CRI-O container runtime. Follow the steps below.

Step 1: Update System

Ensure your Ubuntu system is updated. If you're afraid this could break your system, you can skip this step.

`sudo apt update && sudo apt upgrade`

It is recommended to reboot so that the system runs on the updated packages:

`sudo systemctl reboot`

Step 2: Add CRI-O Kubic repository

Add the Kubic repository, which hosts binary packages for Debian-based systems. If you are using CRI-O with Kubernetes, install the CRI-O version matching the Kubernetes version you'll set up: for Kubernetes 1.23, install CRI-O 1.23.

Start by adding the APT repositories that contain the CRI-O packages.

Ubuntu 22.04/20.04:

`OS=xUbuntu_20.04`

`CRIO_VERSION=1.23`

`echo "deb https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/ /"|sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list`

`echo "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/$CRIO_VERSION/$OS/ /"|sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION.list`

Ubuntu 18.04: set `OS=xUbuntu_18.04` and `CRIO_VERSION=1.23`, then run the same two `echo` commands.

Once the repositories are added, import the GPG keys:

`curl -L https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION/$OS/Release.key | sudo apt-key add -`

`curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/Release.key | sudo apt-key add -`

Step 3: Install CRI-O on Ubuntu 22.04|20.04|18.04

With the repositories in place, update the apt cache and install CRI-O:

`sudo apt update`

`sudo apt install cri-o cri-o-runc`

Accept the installation prompt with the y key:

The following additional packages will be installed: conmon containers-common
Suggested packages: cri-o-runc | runc containernetworking-plugins
The following NEW packages will be installed: conmon containers-common cri-o
0 upgraded, 3 newly installed, 0 to remove and 94 not upgraded.
Need to get 20.2 MB of archives.
After this operation, 99.4 MB of additional disk space will be used.
Do you want to continue? [Y/n] y

Check the version of CRI-O installed on Ubuntu:

`apt show cri-o`

Package: cri-o
Version: 1.23.0~0
Priority: optional
Section: devel
Maintainer: Peter Hunt
Installed-Size: 98.3 MB
Depends: libgpgme11, libseccomp2, conmon, containers-common (>= 0.1.27), tzdata
Suggests: cri-o-runc | runc (>= 1.0.0), containernetworking-plugins
Replaces: cri-o-1.19, cri-o-1.20, cri-o-1.21
Homepage: https://github.com/cri-o/cri-o
Download-Size: 19.9 MB

Start and enable the crio service:

`sudo systemctl enable crio.service`

`sudo systemctl start crio.service`

The service status can be checked with:

`systemctl status crio`

● crio.service - Container Runtime Interface for OCI (CRI-O)
Loaded: loaded (/lib/systemd/system/crio.service; enabled; vendor preset: enabled)
Active: active (running) since Sun 2020-06-07 20:16:50 CEST; 37s ago
Docs: https://github.com/cri-o/cri-o
Main PID: 2461 (crio)
Tasks: 13
Memory: 7.7M
CGroup: /system.slice/crio.service
└─2461 /usr/bin/crio
Jun 07 20:16:50 ubuntu systemd[1]: Starting Container Runtime Interface for OCI (CRI-O)...
Jun 07 20:16:50 ubuntu systemd[1]: Started Container Runtime Interface for OCI (CRI-O).

Step 4: Using CRI-O on Ubuntu 22.04|20.04|18.04

The command-line tool crictl can be installed through the cri-tools package:

`sudo apt install cri-tools`

Check that the crictl command works:

`sudo crictl info`

`{"status": {"conditions": [{"type": "RuntimeReady", "status": true, "reason": "", "message": ""}, {"type": "NetworkReady", "status": false, "reason": "NetworkPluginNotReady", "message": "Network plugin returns error: Missing CNI default network"}]}}`

Pull test images:

`sudo crictl pull nginx`
Image is up to date for docker.io/library/nginx@sha256:c870bf53de0357813af37b9500cb1c2ff9fb4c00120d5fe1d75c21591293c34d

`sudo crictl pull hello-world`
Image is up to date for docker.io/library/hello-world@sha256:6a65f928fb91fcfbc963f7aa6d57c8eeb426ad9a20c7ee045538ef34847f44f1

`sudo crictl pull busybox`
Image is up to date for docker.io/library/busybox@sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209

List available images:

`sudo crictl images`

IMAGE TAG IMAGE ID SIZE
docker.io/library/alpine latest a24bb4013296f 5.85MB
docker.io/library/busybox latest 1c35c44120825 1.44MB
docker.io/library/hello-world latest bf756fb1ae65a 20kB
docker.io/library/nginx latest 4392e5dad77db 136MB

Create a pod sandbox config file: `cat >nginx.json`
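In the `crictl info` output from Step 4, `NetworkReady` stays false (reason `NetworkPluginNotReady`) until a CNI network configuration is present. A minimal sketch for checking that from a script, assuming the `{"status": {"conditions": [...]}}` shape shown in that output; the helper names `unready_conditions` and `crictl_info` are hypothetical:

```python
import json
import subprocess


def unready_conditions(info_json: str) -> list:
    """Return (type, reason, message) for each CRI condition whose status is false."""
    info = json.loads(info_json)
    conditions = info.get("status", {}).get("conditions", [])
    return [
        (c.get("type", ""), c.get("reason", ""), c.get("message", ""))
        for c in conditions
        if not c.get("status")  # status is a JSON boolean in crictl's output
    ]


def crictl_info() -> str:
    """Run `sudo crictl info`; needs CRI-O, cri-tools and sudo rights on the host."""
    return subprocess.run(
        ["sudo", "crictl", "info"], capture_output=True, text=True, check=True
    ).stdout
```

On the host from this guide, `unready_conditions(crictl_info())` would list the `NetworkReady` condition until a CNI configuration is installed, and an empty list once both conditions report true.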


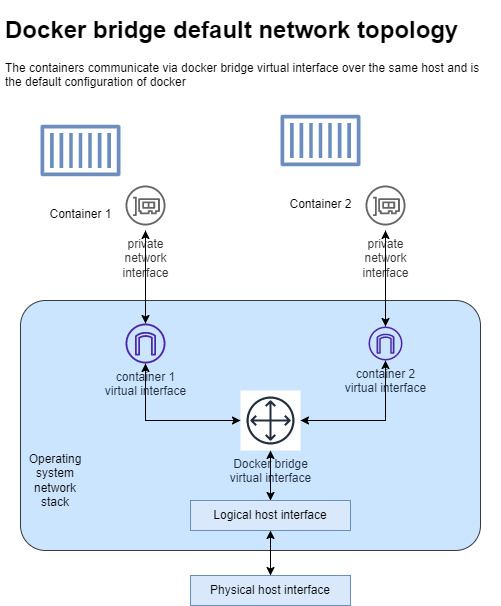

What is Docker Networking?-Visualpath

For Docker containers to communicate with each other and with the outside world through the host machine, there must be a layer of networking involved. Docker supports different types of networks, each suited to certain use cases. For example, an application that runs in a single Docker container will have a different network setup compared to a web application with a cluster of database, application, and load-balancer containers that span multiple containers and need to communicate with each other. Additionally, clients from the outside world will need to access the web application container.

Docker Default networking (docker0)

When Docker is installed, a default bridge network is created (listed as bridge by `docker network ls` and backed by the docker0 interface on the host). Each new Docker container is automatically attached to this network unless a custom network is specified. Besides the default bridge, Docker automatically creates two other networks: host (no isolation between the host and containers on this network; to the outside world they are on the same network) and none (attached containers run on a container-specific network stack).

Docker Network Types

Docker comes with network drivers geared towards different use cases. The most common network types are bridge, overlay, and macvlan.

Bridge Networks

Bridge networking is the most common network type. It is limited to containers within a single host running the Docker engine. Bridge networks are easy to create, manage, and troubleshoot. For containers on a bridge network to communicate with or be reachable from the outside world, port mapping needs to be configured. As an example, consider a Docker container running a web service on port 80. Because this container is attached to the bridge network on a private subnet, a port on the host system, such as 8000, needs to be mapped to port 80 on the container for outside traffic to reach the web service.
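The 8000-to-80 mapping in the bridge example is what the `-p 8000:80` option of `docker run` sets up (host port first, container port second). As an illustration, the sketch below parses a simplified subset of the publish syntax, `[host_ip:]host_port:container_port[/protocol]` or a bare container port; it is not Docker's own parser, and port ranges and IPv6 addresses are deliberately left out. `parse_publish` and `PortMapping` are hypothetical names.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: str               # "0.0.0.0" stands in for "all interfaces" (simplified)
    host_port: Optional[int]   # None: Docker picks a free ephemeral host port
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Parse a simplified `-p` spec: [host_ip:]host_port:container_port[/proto]."""
    ports, _, proto = spec.partition("/")
    proto = proto or "tcp"                     # TCP unless a protocol is given
    parts = ports.split(":")
    if len(parts) == 1:                        # "80": random host port
        return PortMapping("0.0.0.0", None, int(parts[0]), proto)
    if len(parts) == 2:                        # "8000:80"
        return PortMapping("0.0.0.0", int(parts[0]), int(parts[1]), proto)
    if len(parts) == 3:                        # "127.0.0.1:8000:80" or "127.0.0.1::80"
        host_port = int(parts[1]) if parts[1] else None
        return PortMapping(parts[0], host_port, int(parts[2]), proto)
    raise ValueError(f"unsupported publish spec: {spec!r}")


if __name__ == "__main__":
    # The bridge example: host port 8000 forwards to the web service on port 80.
    print(parse_publish("8000:80"))
```

For example, `parse_publish("8000:80")` returns `PortMapping(host_ip='0.0.0.0', host_port=8000, container_port=80, protocol='tcp')`.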
Overlay Networks

An overlay network uses software virtualization to create additional layers of network abstraction running on top of a physical network. In Docker, the overlay network driver is used for multi-host network communication. This driver uses Virtual Extensible LAN (VXLAN) technology, which provides portability between cloud, on-premises, and virtual environments. VXLAN solves common portability limitations by extending layer 2 subnets across layer 3 network boundaries, so containers can run on foreign IP subnets. To create an overlay network named my-overlay-net, you'll also need the --subnet parameter to specify the network block that Docker will use to assign IP addresses to the containers:

Macvlan Networks

The macvlan driver is used to connect Docker containers directly to the host network interfaces through layer 2 segmentation. No port mapping or network address translation (NAT) is needed, and containers can be assigned a public IP address that is accessible from the outside world. Latency in macvlan networks is low, since packets are routed directly from the Docker host network interface controller (NIC) to the containers. Note that macvlan has to be configured per host, and it supports physical NICs, sub-interfaces, network-bonded interfaces, and even teamed interfaces. Traffic is explicitly filtered by the host kernel modules for isolation and security. To create a macvlan network named macvlan-net, you'll need to provide a --gateway parameter to specify the IP address of the gateway for the subnet, and a -o parameter to set driver-specific options.

Contact number: +91-9989971070
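To make the VXLAN encapsulation behind the overlay driver concrete, here is a sketch of the 8-byte VXLAN header defined in RFC 7348: a flags byte whose I bit (0x08) marks the VNI as valid, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. The header travels inside UDP, normally on destination port 4789, and is followed by the original layer 2 frame. This illustrates the wire format only; it is not code from Docker's overlay driver, and the function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP destination port for VXLAN
FLAG_I = 0x08           # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", FLAG_I << 24, vni << 8)  # network byte order


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    word1, word2 = struct.unpack("!II", header[:8])
    if not (word1 >> 24) & FLAG_I:
        raise ValueError("I flag not set: VNI is not valid")
    return word2 >> 8


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """UDP payload of a VXLAN packet: header followed by the inner Ethernet frame."""
    return vxlan_header(vni) + inner_frame
```

Because the VNI is 24 bits wide, a single VXLAN fabric can keep about 16.7 million (2^24) layer 2 segments apart, which is what lets many overlay networks share the same physical underlay.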


Docker Cheat Sheet

Installation

Linux: `curl -sSL https://get.docker.com/ | sh`

Mac: download the dmg from this link: https://download.docker.com/mac/stable/Docker.dmg

Windows: use the MSI installer: https://download.docker.com/win/stable/InstallDocker.msi

Docker Registries and Repositories

Log in to a registry: `docker login`, `docker login localhost:8080`

Log out of a registry: `docker logout`, `docker logout localhost:8080`

Search for an image: `docker search nginx`, `docker search --filter stars=3 --no-trunc busybox`

Pull an image from a registry: `docker pull nginx`, `docker pull eon01/nginx`, `docker pull localhost:5000/myadmin/nginx`

Push an image to a registry: `docker push eon01/nginx`, `docker push localhost:5000/myadmin/nginx`

First Steps with Containers

Create a container: `docker create -t -i --name infinite eon01/infinite`

Run a container for the first time: `docker run -it --name infinite -d eon01/infinite`

Rename a container: `docker rename infinite infinity`

Remove a container: `docker rm infinite`

Update a container: `docker update --cpu-shares 512 -m 300M infinite`

Starting and Stopping Containers

Start a stopped container: `docker start nginx`

Stop: `docker stop nginx`

Restart: `docker restart nginx`

Pause (suspend all processes in the container): `docker pause nginx`

Unpause: `docker unpause nginx`

Block until the container stops: `docker wait nginx`

Send SIGKILL (the kill signal): `docker kill nginx`

Send another signal: `docker kill -s HUP nginx`

Attach to a running container: `docker attach nginx`

Getting Information about Containers

Running containers: `docker ps`; all containers, including stopped ones: `docker ps -a`

Container logs: `docker logs infinite`

Container details: `docker inspect infinite`, `docker inspect --format '{{ .NetworkSettings.IPAddress }}' $(docker ps -q)`

Container events: `docker events --filter container=infinite`

Public ports: `docker port infinite`

Running processes: `docker top infinite`

Resource usage: `docker stats infinite`

Changes to files or directories in the container's filesystem: `docker diff infinite`
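`.NetworkSettings.IPAddress` only reports the address on the default bridge network; for containers on user-defined bridge or overlay networks it is typically empty, and the addresses live under `.NetworkSettings.Networks`. The Go-template form `docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' infinite` covers that case. The sketch below does the same from the JSON that `docker inspect` prints, returning one address per network; `ips_by_network` and `docker_inspect` are hypothetical helper names.

```python
import json
import subprocess


def ips_by_network(inspect_json: str) -> dict:
    """Map container name -> {network name: IP address} from `docker inspect` JSON."""
    result = {}
    for container in json.loads(inspect_json):      # docker inspect prints a JSON array
        name = container["Name"].lstrip("/")        # names are stored as "/infinite"
        networks = container.get("NetworkSettings", {}).get("Networks") or {}
        result[name] = {net: cfg.get("IPAddress", "") for net, cfg in networks.items()}
    return result


def docker_inspect(*containers: str) -> str:
    """Run `docker inspect` on the given containers; needs a reachable Docker daemon."""
    return subprocess.run(["docker", "inspect", *containers], capture_output=True,
                          text=True, check=True).stdout
```

On a Docker host, `ips_by_network(docker_inspect("infinite"))` would show the container's address on each network it is attached to, including an overlay such as `MyOverlayNetwork`.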

Managing Images

List images: `docker images`

Build images: `docker build .`, `docker build github.com/creack/docker-firefox`, `docker build - < Dockerfile`, `docker build - < context.tar.gz`, `docker build -t eon/infinite .`, `docker build -f myOtherDockerfile .`, `curl example.com/remote/Dockerfile | docker build -f - .`

Remove an image: `docker rmi nginx`

Load a repository from a tar archive (file or standard input): `docker load < ubuntu.tar.gz`, `docker load --input ubuntu.tar`

Save an image to a tar archive: `docker save busybox > ubuntu.tar`

Show an image's history: `docker history nginx`

Create an image from a container: `docker commit nginx`

Tag an image: `docker tag nginx eon01/nginx`

Push an image to a registry: `docker push eon01/nginx`

Networking

Create a network: `docker network create -d overlay MyOverlayNetwork`, `docker network create -d bridge MyBridgeNetwork`, or with explicit addressing:

`docker network create -d overlay --subnet=192.168.0.0/16 --subnet=192.170.0.0/16 --gateway=192.168.0.100 --gateway=192.170.0.100 --ip-range=192.168.1.0/24 --aux-address="my-router=192.168.1.5" --aux-address="my-switch=192.168.1.6" --aux-address="my-printer=192.170.1.5" --aux-address="my-nas=192.170.1.6" MyOverlayNetwork`

Remove a network: `docker network rm MyOverlayNetwork`

List networks: `docker network ls`

Inspect a network: `docker network inspect MyOverlayNetwork`

Connect a running container to a network: `docker network connect MyOverlayNetwork nginx`

Connect a container to a network when starting it: `docker run -it -d --network=MyOverlayNetwork nginx`

Disconnect a container from a network: `docker network disconnect MyOverlayNetwork nginx`
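The `MyOverlayNetwork` example with explicit addressing combines several flags. A quick pre-flight check for them can be sketched with Python's `ipaddress` module; it mirrors only the basic rule that each `--gateway`, `--ip-range` and `--aux-address` must fall inside one of the `--subnet` blocks, and Docker still performs its own validation. The function name `check_network_plan` is hypothetical.

```python
import ipaddress


def check_network_plan(subnets, gateways=(), ip_ranges=(), aux_addresses=None):
    """Return a list of problems; an empty list means the plan looks consistent."""
    nets = [ipaddress.ip_network(s) for s in subnets]
    problems = []

    def inside_any(addr):
        # `in` is simply False when the IP versions differ
        return any(addr in net for net in nets)

    for gw in gateways:
        if not inside_any(ipaddress.ip_address(gw)):
            problems.append(f"gateway {gw} is outside every subnet")
    for rng in ip_ranges:
        block = ipaddress.ip_network(rng)
        # subnet_of() raises on mixed IPv4/IPv6, so compare versions first
        if not any(block.version == net.version and block.subnet_of(net) for net in nets):
            problems.append(f"ip-range {rng} is outside every subnet")
    for name, ip in (aux_addresses or {}).items():
        if not inside_any(ipaddress.ip_address(ip)):
            problems.append(f"aux-address {name}={ip} is outside every subnet")
    return problems


if __name__ == "__main__":
    # Values from the MyOverlayNetwork example.
    print(check_network_plan(
        subnets=["192.168.0.0/16", "192.170.0.0/16"],
        gateways=["192.168.0.100", "192.170.0.100"],
        ip_ranges=["192.168.1.0/24"],
        aux_addresses={"my-router": "192.168.1.5", "my-switch": "192.168.1.6",
                       "my-printer": "192.170.1.5", "my-nas": "192.170.1.6"},
    ))
```

For the example values it prints `[]`; a mismatch such as gateway `10.0.1.1` with `--subnet=10.0.0.0/24` would be reported.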

Cleaning Up Docker

Remove a running container: `docker rm -f nginx`

Remove a container and its volumes: `docker rm -v nginx`

Remove all containers with status exited: `docker rm $(docker ps -a -f status=exited -q)`

Remove all stopped containers: `docker container prune`, `docker rm $(docker ps -a -q)`

Remove stopped containers created more than 24 hours ago: `docker container prune --filter "until=24h"`

Remove an image: `docker rmi nginx`

Remove dangling images: `docker image prune`, `docker rmi $(docker images -f dangling=true -q)`

Remove all unused images, not just dangling ones: `docker image prune -a`

Remove all images: `docker rmi $(docker images -a -q)`

Remove all untagged images: `docker rmi -f $(docker images | grep "^<none>" | awk '{print $3}')`

Stop and remove all containers: `docker stop $(docker ps -a -q) && docker rm $(docker ps -a -q)`

Remove dangling volumes: `docker volume prune`, `docker volume rm $(docker volume ls -f dangling=true -q)`

Remove dangling volumes matching a filter: `docker volume prune --filter "label!=keep"`

Remove unused networks: `docker network prune`

Remove all unused objects: `docker system prune`

By default, Docker 17.06.1+ does not remove volumes here. To remove them as well: `docker system prune --volumes`
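The untagged-image one-liner relies on `awk '{print $3}'` picking the third column (IMAGE ID) of rows that start with `<none>`; inside double quotes the shell would expand `$3` before awk sees it, and awk would print whole lines instead. The same filter can be sketched in Python, assuming the default `docker images` table layout (REPOSITORY, TAG, IMAGE ID, CREATED, SIZE); the helper names are hypothetical, and for scripts `docker images -f dangling=true -q` is usually the simpler route.

```python
import subprocess


def untagged_image_ids(images_table: str) -> list:
    """IDs of rows whose REPOSITORY column is <none> in `docker images` output."""
    ids = []
    for line in images_table.splitlines():
        if line.startswith("<none>"):     # same test as grep "^<none>"
            ids.append(line.split()[2])   # awk's $3 is index 2 (0-based)
    return ids


def docker_images_table() -> str:
    """Run `docker images`; needs a reachable Docker daemon."""
    return subprocess.run(["docker", "images"], capture_output=True,
                          text=True, check=True).stdout
```

On a Docker host, the resulting IDs can be passed to `subprocess.run(["docker", "rmi", "-f", *ids], check=True)` when the list is not empty.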

Docker Swarm

Installing Docker Swarm: `curl -sSL https://get.docker.com | bash`

Translator's note: in Docker 1.12.0+ nothing needs to be installed separately, since Docker Swarm is built into Docker Engine as a special mode (Swarm mode).

Initialize a swarm: `docker swarm init --advertise-addr 192.168.10.1`

Print the command for joining a worker node to the swarm: `docker swarm join-token worker`

Print the command for joining a manager node to the swarm: `docker swarm join-token manager`

List services: `docker service ls`

List nodes: `docker node ls`

Create a service: `docker service create --name vote -p 8080:80 instavote/vote`

List a service's tasks: `docker service ps vote`

Scale a service: `docker service scale vote=3`

Update a service: `docker service update --image instavote/vote:movies vote`, `docker service update --force --update-parallelism 1 --update-delay 30s nginx`, `docker service update --update-parallelism 5 --update-delay 2s --image instavote/vote:indent vote`, `docker service update --limit-cpu 2 nginx`, `docker service update --replicas=5 nginx`

P.S.

Translator's note: the original (English) Docker Cheat Sheet is available and kept up to date in a Git repository, and its author welcomes corrections and additions from the community. Also read on our blog:

“Play with Docker: an online service for getting hands-on with Docker”.

“What is the Moby project about, and why did moby/moby suddenly become Docker's main repository?”

“Building Docker images for CI/CD quickly and conveniently with dapp (overview and video)”.


Cloud, Associate (JNCIA-Cloud) JN0-212 Practice Test Questions

The JN0-212 Cloud, Associate (JNCIA-Cloud) exam is the new exam replacing the JN0-211 exam. PassQuestion provides the latest Cloud, Associate (JNCIA-Cloud) JN0-212 Practice Test Questions to help you improve your preparation for the real exam. Make sure that you use all the JN0-212 Practice Test Questions to raise your preparation level before attempting the real exam. Once you have gone through all of them, you will be able to clear the Juniper JN0-212 exam on your first attempt. It is the right way to approach a real exam so you can achieve the best results.

Cloud, Associate (JNCIA-Cloud) Certification

The Cloud track enables you to demonstrate competence with cloud networking architectures such as multiclouds, software-defined networking, SD-WAN, and other cloud technologies. JNCIA-Cloud, the associate-level certification in this track, is designed for networking professionals with introductory-level knowledge of Juniper Networks cloud-based networking architectures, theory, and best practices. The written exam verifies your understanding of cloud-based networking principles and technologies.

JNCIA-Cloud Exam Information

Exam Code: JN0-212

Prerequisite Certification: None

Delivered by: Pearson VUE

Exam Length: 90 minutes

Exam Type: 65 multiple-choice questions

Software Versions: Contrail 21.4, OpenStack Wallaby, Kubernetes 1.21

JNCIA-Cloud Exam Topics

Cloud Fundamentals

Identify the concepts or functionality of various fundamental elements of cloud networking:

Deployment models (public, private, hybrid cloud)

Service models: Software as a Service (SaaS), Infrastructure as a Service (IaaS), Platform as a Service (PaaS)

Cloud native architectures

Cloud automation tools

Cloud Infrastructure: Network Functions Virtualization (NFV) and SDN

Identify the concepts, benefits, or functionality of network function virtualization:

NFV architecture

NFV orchestration

Virtualized network functions (VNFs)

Identify the concepts, benefits, or functionality of SDN:

SDN architecture

SDN controller

SDN solutions

Network Virtualization

Identify concepts, operation, or functionality of network virtualization:

Virtual network types

Underlay and overlay networks

Encapsulation and tunneling (MPLSoGRE, MPLSoUDP, VXLAN, EVPN with VXLAN)

Cloud Virtualization

Identify the concepts, operation, or functionality of Linux virtualization:

Linux architecture

Hypervisor type (type 1 and 2)

Hypervisor operations and concepts

Kernel-based Virtual Machine (KVM), Quick Emulator (QEMU) concepts and operations

Creation of virtual machines

Identify the concepts, operation, or functionality of Linux containers:

Container versus virtual machine

Container components

Creation of containers using Docker

Cloud Orchestration with OpenStack

Identify the concepts, operation, or functionality of OpenStack:

Creation and management of virtual machines in OpenStack

Automation using HEAT templates in Yet Another Markup Language (YAML)

OpenStack UIs usage

OpenStack networking plugins

OpenStack Security Groups

Cloud Orchestration with Kubernetes

Identify the concepts, operation, or functionality of Kubernetes:

Creation and management of containers in Kubernetes

Kubernetes API Objects (Pods, ReplicaSets, Deployments, Services)

Kubernetes namespaces and Container Network Interface (CNI) plugins

Contrail Networking

Identify concepts, operation, or functionality of Contrail Networking:

Architecture

Orchestration integration

Multitenancy

Service chaining

Automation or security

Configuration

View Online Cloud, Associate (JNCIA-Cloud) JN0-212 Free Questions

Which statement is true about the vSRX Series and VMware NSX integration?
A. The NSX Distributed Firewall provides a container-based layer of protection.
B. VMware NSX provides advanced Layer 4 through Layer 7 security services.
C. You can add the vSRX virtual firewall as a security element in the VMware NSX environment.
D. The NSX Distributed Firewall uses application identification.
Answer: C

Which two hypervisors does the vMX support? (Choose two.)
A. KVM
B. Xen
C. ESXi
D. Hyper-V
Answer: A, C

Which two products are required when deploying vSRX as a partner security service in VMware NSX? (Choose two.)
A. VMware vROps
B. VMware NSX Manager
C. Junos Space Security Director
D. IDP sensor
Answer: B, C

Which OpenStack component is responsible for user authentication and authorization?
A. Glance
B. Nova
C. Keystone
D. Neutron
Answer: C

What are two roles of sandboxing in Sky ATP? (Choose two.)
A. To test the operation of security rules
B. To analyze the behavior of potential security threats
C. To validate the operation of third-party components
D. To store infected files for further analysis
Answer: B, D


Docker — Overlay Network

In my last article, “Docker - Cross-Host Networking”, I introduced libnetwork, Docker's container networking library, and CNM (the Container Network Model), its core component. I also mentioned that Docker has two native cross-host configurations: overlay and macvlan. Let's explore the overlay network. (via Pocket: https://ift.tt/grLaGpB, March 06, 2022 at 04:13PM)


What are the resources and the ways to learn Docker and Kubernetes?

There are lots of resources out there about understanding and mastering Kubernetes. Unfortunately, not all of them are actually helpful, to the point, and worth your time. As with Docker, the Kubernetes ecosystem is very popular and moving extremely fast. There's a lot of noise, outdated material, company bias, and poorly informed advice. Here's a hand-picked selection of great resources for various aspects of getting started with Docker and Kubernetes!

1. There's a course offering an "Introduction to Kubernetes".

2. The "Kubernetes Fundamentals" program is quite good and will carry you pretty far toward the certification exam. Be sure to do the exercises :)

3. “The best way to learn Docker for free: Play-With-Docker (PWD)”

If you want to learn Docker and Kubernetes with real-time projects and clear explanations, contact Visualpath. They provide Docker and Kubernetes online and classroom training with real-time projects.

What Will They Cover in This Course:

Docker Engine:

Docker Overview

Docker Architecture

Images and layers

Underlying technology of Docker, like namespaces, cgroups, etc.

Docker CE vs Docker EE and supported platforms

Pulling images from a Docker registry

The Docker Hub

Docker Engine installation on Linux servers (CentOS/Ubuntu)

Docker commands: images, ps, pull, push, run, create, commit, attach, exec, cp, rm, rmi, login, export, import, pause, unpause, system, volumes, build, rename, save, tag, network, logs, port, search, history

Docker network

Container volume management

Creating a custom network (bridge)

Building custom images using a Dockerfile and through a container, and pushing them to Docker Hub

Creating containers with limited resources (CPU, memory, etc.)

Building Apache with MySQL database storage using a Dockerfile

Assigning/removing multiple networks to/from a running container

Selecting a storage driver for the Docker Engine

Setting limits on resources like CPU and memory for a running container

Setting up and configuring Universal Control Plane (UCP) and Docker Trusted Registry (DTR)

Container lifecycle

Understanding Docker Machine and Docker Swarm (cluster)

Setting up a swarm (configuring the manager)

Setting up nodes (adding nodes to the manager)

Managing applications in Swarm with services

Replication in Swarm

Demonstrating the use of templates with “docker service create”

Identifying the steps needed to troubleshoot a service that is not deploying

Describing how storage and volumes can be used across cluster nodes for persistent storage

Kubernetes Orchestration:

Difference between Docker Swarm and Kubernetes orchestration

Kubernetes overview

Kubernetes architecture

Understanding the underlying concepts of Kubernetes orchestration

Designing a Kubernetes cluster: hardware and underlying infrastructure

Services running on the master node and minions

Overview of pods, replication, deployments, services, and endpoints

Deploying the application through pods

Building multiple pods with high availability

Rolling updates of pods with a Deployment

Kubernetes underlying networking, such as an overlay network with flannel, etcd, etc.

Storage types in Kubernetes

Upgrading Kubernetes components

Troubleshooting in Kubernetes

Note: practical examples of the above scenarios, such as building MySQL and WordPress applications, etc.


Best Graphic Design Software Free (Updated 2021)

Are you planning to begin your graphic design career and confused about where to start? There is a lot of graphic design software out there, but most of it is costly and complex to begin with. If you are a professional graphic designer, you need professional software like Adobe Photoshop, Adobe Illustrator, and so on.

Beginning your graphic design career as an amateur does not require extensive design applications. You can begin with excellent free graphic design software and advance your skills. In this article, we will explore the top 10 best free graphic design programs that provide beautiful features.

Vectr

Vectr is one of the best free graphic design programs for producing various projects, whether simple graphics, infographics, or complex creations for websites. It is quick to learn and easy to use, as you do not need to climb a steep learning curve to get started.

Vectr works on vector graphics that are always crisp, clean, and robust. You can scale your designs to any size without losing quality. The software lets you produce blur-free logos, brochures, 2D graphics, presentations, and website mockups.

Important features of Vectr

You can use Vectr online on the web and also on your PC. The software automatically saves and synchronizes your project in real time and lets you access it across all platforms.

Users can share a Vectr document for real-time collaboration, and anyone with access can watch the designs being created live, both in the web app and in the desktop version.

Anyone can get started with Vectr immediately thanks to its user-friendly, simple interface.

Gravit Designer

Gravit Designer is another free vector graphic design application that is available on all platforms. It suits beginners and even professional designers. Users can express their creativity with precise vector tools for creating shapes, lines, and points that bring a project to life.

The software lets you create stunning graphics for websites, social media, presentations, UI designs, marketing materials, and much more. Gravit Designer runs on all platforms, including Windows, Linux, macOS, Chrome OS, browsers, and as a Progressive Web App, and it lets users easily move from one device to another.

Important features of Gravit Designer

Works across all platforms and provides real-time collaboration

Can be used online in a browser or offline on a PC

Users can easily share Gravit Cloud files with others for real-time collaboration

Provides flexible, non-destructive object styling

Canva

Canva is a popular online graphic design application that specializes in graphics for social media platforms and presentations. It is easy to use, offering drag-and-drop editing and professional layouts for creating stunning graphics.

You can build your designs from millions of images, including stock photographs, vectors, and illustrations, or use your own photos. Canva also provides attractive photo filters, free icons, shapes, and hundreds of fonts.

Important features of Canva

Provides a drag-and-drop editor

Easy and fast to use (the company claims it takes only 23 seconds to learn)

Creates visually compelling graphics for social media and presentations

Features thousands of beautiful layouts

Inkscape

Inkscape is one of the best free graphic design programs: open-source vector graphics software for Windows, macOS, and Linux that provides a rich set of tools for artistic and technical graphics such as logos, cartoons, clip art, diagrams, flowcharts, and typography. Because it works with vector graphics, it produces sharp printouts and renderings.

The application is simple to use, supports multiple languages, and can be customized with add-ons. It can also import and export popular standard file formats such as PNG, PDF, EPS, AI, SVG, and PS.

Important features of Inkscape

Supports important file formats like PDF, PNG, EPS, AI, PS, DXF, sk1

Allows quality printing at unlimited resolutions

Uses SVG file format (supported by other applications and web-browsers) as its main format

Vecteezy

Vecteezy is another popular vector graphics application that provides professional and creative resources to complete your projects faster without compromising quality. It provides millions of free resources including amazing photographs, videos, and alluring vector illustrations.

This application is trusted by the world’s top brands such as Amazon, BBC, Microsoft, Facebook, Walt Disney, Google, GAP, and DELL.

Important features of Vecteezy

Provides millions of free resources like stock photos, videos, vector illustrations

Vecteezy's network of contributors continuously adds fresh content

Krita

Krita is free, professional-grade painting software, developed by artists as an open-source program. It focuses on concept art, texture and matte painting, illustrations, and comics, and provides all the tools you need to grow as a successful artist.

Krita was named the best free painting software of 2019 by the website TechRadar.

Important features of Krita

Offers a clean, flexible interface with a customizable layout, over 30 dockers, and dark and light color themes

Provides all the tools required for an artist

Features over 100 professionally produced preloaded brushes

Allows simple and powerful 2D animation

Supports full color management

Daz 3D

Daz 3D is one of the best free graphic design tools, offering platforms such as Hexagon, Genesis 8, and Daz Studio. These work together to produce 3D designs, including human models. It is best suited to designers, illustrators, and animators.

Daz 3D is mostly used for 3D animation, figure creation, and rendering. Users can download Daz Studio, explore its large library of free 3D content, and use the smart content library to build custom scenes and characters within seconds.

Important features of Daz 3D

Offers a huge library of free 3D content such as hair, creatures, vehicles, characters, environments, and more

Allows creating custom scenes and characters in no time with the smart content library

Allows exporting photorealistic images and animations with studio-class processing

Offers file formats compatible with Maya, Blender, 3ds Max, Cinema 4D, Unreal, and Unity

Pixlr

Pixlr is an online photo editor that lets you edit photos and graphic designs in your browser for free. It features AI-powered tools for advanced photo editing and graphic design, and it supports almost all common file formats, including PSD, PNG, JPEG, WebP, and SVG.

Pixlr lets users begin a project with an empty canvas or start from professionally crafted templates.

Important features of Pixlr

Features smarter AI-powered tools

Faster editing with simpler steps

Easier content creation with a library of stickers, borders, icons, overlays, and decorative text

Easiest-to-use photo editor

RawTherapee

RawTherapee is free, cross-platform raw image processing software that provides a powerful set of tools for creating stunning photos and expressing your creativity. Its non-destructive editing, advanced color tools, and detail editing help you get the best possible images from your raw files.

Because it is cross-platform, you can run it on Microsoft Windows, macOS, or Linux. It is also available in more than 15 languages.

Important features of RawTherapee

Offers high image quality

Provides outstanding raw support using two demosaicing algorithms

Completely free and open source software

Blender

Blender is free and open-source 3D software that covers the whole 3D pipeline: modeling, rigging, animation, simulation, compositing, motion tracking, video editing, game creation, and rendering. It is suitable for individuals and small studios who want to grow in the animation industry.

Blender is cross-platform and runs well on Microsoft Windows, Linux, and macOS. It is licensed under the GNU GPL, so it is free and open source forever.

Important features of Blender

Features the Cycles render engine for stunning, ultra-realistic rendering

Provides comprehensive modeling tools

Supports VFX work

Provides high quality rigging and animation tools

Allows drawing directly in a 3D viewport

Read More at SKEDUCATES.COM


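Kubernetes failure stories

Someone has collected Kubernetes (usually abbreviated "K8S") failure stories (failed migrations, things falling apart, …) on GitHub: "Kubernetes Failure Stories". It includes both articles and recorded talks, and some companies appear more than once, describing their experience at different points in time.

Let me start with K8S itself. If I had to explain roughly what K8S is, I would say it is like standing up a set of AWS services, except that it is based on containers instead of VMs.

In AWS terms, it layers an Amazon VPC on top (corresponding to Kubernetes' overlay network and CNI), then provides AMI (via Docker images) and EC2 (since this is an analogy, AMI + EC2 are compared together here), plus basic ELB (the various NodePort, HostPort, and Ingress options) and service discovery.

What is more unusual is…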


300+ TOP KUBERNETES Interview Questions and Answers

KUBERNETES Interview Questions for Freshers and Experienced Candidates

1. What is Kubernetes?
Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

2. Describe the history of Kubernetes.
The name Kubernetes originates from Greek, meaning helmsman or pilot. Google designed it and open-sourced it in 2014, drawing on its experience of running production workloads at scale. It is now maintained by the Cloud Native Computing Foundation.

3. What are the major differences between Kubernetes and Docker Swarm?
Application definition: In Kubernetes, an application is set up using a combination of pods and deployments. In Docker Swarm, applications are defined as microservices.
Logging and monitoring: Kubernetes supports multiple logging and monitoring options. Docker Swarm supports only third-party tools for logging and monitoring.
Scalability: Kubernetes is highly scalable. Docker Swarm is described as more scalable than Kubernetes.
Networking: Kubernetes uses a flat networking model. In Docker Swarm, an overlay network is created when a node joins the cluster.
Availability: Kubernetes provides high availability across its nodes. Docker Swarm also provides high availability for the services deployed in the swarm.

4. Why is Kubernetes useful? Explain by going back in time.
Looking back, application deployment has gone through three important eras:
Traditional deployment
Virtualized deployment
Container deployment
Traditional deployment: In the early era, organizations ran applications on physical servers. This caused resource allocation issues, which could be solved only by running each application on a different server.
Virtualized deployment: Virtualization was introduced so that many virtual machines could run on a single physical server's CPU.
Container deployment: Containers have relaxed isolation properties that let applications share the operating system.

5. Why do we need Kubernetes and what can it do?
Containers are a good way to bundle and run your applications. In a production environment, you need to manage those containers effectively to keep applications running, and Kubernetes provides a framework for running distributed systems resiliently.

6. What are the features of Kubernetes?
The features of Kubernetes are:
Storage orchestration
Automated rollouts and rollbacks
Self-healing
Configuration management
Automatic bin packing
Service discovery and load balancing

7. List the components of Kubernetes.
Three groups of components are needed to deliver a functioning Kubernetes cluster:
Master components
Node components
Add-ons

8. How does Kubernetes relate to Docker?
Kubernetes is a container orchestrator that can run Docker containers. It is more comprehensive than Docker Swarm and is designed to coordinate clusters of nodes at scale in a well-defined manner. Docker, by contrast, is the platform and tool for building and running Docker containers.

9. Define kube-scheduler.
The kube-scheduler is a master-node component that watches for newly created pods that have no assigned node and selects a node for each of them to run on.

10. What are the benefits of Kubernetes?
The benefits of Kubernetes are:
It makes it easy to organize services with pods.
It works on any OS because it is an open-source, modular tool.
It has a huge community among container orchestration tools.
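To make question 9 more concrete, here is a minimal sketch of how a scheduler might pick a node for an unassigned pod. The real kube-scheduler runs configurable filtering and scoring plugins; this model is only similar in spirit and assumes that CPU and memory requests are the only constraints, using a simple "least allocated" style score. The names `Node`, `PodRequest`, `feasible_nodes`, `score`, and `schedule` are hypothetical and are not Kubernetes APIs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int   # millicores
    cpu_allocated: int  # millicores already requested by pods on this node
    mem_capacity: int   # MiB
    mem_allocated: int  # MiB already requested by pods on this node


@dataclass
class PodRequest:
    name: str
    cpu: int  # requested millicores
    mem: int  # requested MiB


def feasible_nodes(pod: PodRequest, nodes: List[Node]) -> List[Node]:
    """Filter phase: keep only nodes with enough unallocated CPU and memory."""
    return [
        n for n in nodes
        if n.cpu_capacity - n.cpu_allocated >= pod.cpu
        and n.mem_capacity - n.mem_allocated >= pod.mem
    ]


def score(pod: PodRequest, node: Node) -> float:
    """Score phase: a 'least allocated' style score in [0, 100].
    Higher means more CPU and memory would remain free after placing the pod."""
    cpu_free = (node.cpu_capacity - node.cpu_allocated - pod.cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_allocated - pod.mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2 * 100


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if the pod stays unscheduled."""
    candidates = feasible_nodes(pod, nodes)
    if not candidates:
        return None
    # Highest score wins; ties are broken by node name for a deterministic result.
    best = min(candidates, key=lambda n: (-score(pod, n), n.name))
    return best.name


if __name__ == "__main__":
    nodes = [
        Node("node-a", 4000, 3500, 8192, 4096),
        Node("node-b", 4000, 1000, 8192, 2048),
        Node("node-c", 2000, 0, 4096, 0),
    ]
    print(schedule(PodRequest("web", 500, 1024), nodes))   # node-c
    print(schedule(PodRequest("big", 8000, 1024), nodes))  # None
```

Splitting the work into a filter step and a score step keeps the hard constraints (does the pod fit at all?) separate from the soft preference (which fitting node is best?), which is the same division the real scheduler uses.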

11. Define Kubernetes namespace.
Namespaces are intended for environments with many users spread across multiple teams or projects. They provide a scope for names: a name must be unique within its namespace. Namespaces are also a way to divide cluster resources between multiple users.

12. Which namespaces does Kubernetes start with initially?
Kubernetes initially starts with three namespaces:
default: for objects that have no other namespace.
kube-system: for objects created by the Kubernetes system.
kube-public: created automatically and readable by all users; it is mostly reserved for cluster usage.
Example of the initial namespaces in Kubernetes:
kubectl get namespace
NAME          STATUS    AGE
default       Active    1d
kube-system   Active    1d
kube-public   Active    1d

13. What are Kubernetes pods?
A pod is a group of containers that are deployed together on the same host. Applications within a pod also have access to shared volumes.

14. Define kubelet.
The kubelet is the node agent that runs on each node. It works in terms of a PodSpec, a YAML or JSON object that describes a pod. The kubelet takes a set of PodSpecs provided through various mechanisms and ensures that the containers described in them are running and healthy.

15. What is kubectl and what is its syntax?
kubectl is a command-line interface (CLI) for running commands against Kubernetes clusters. The syntax for kubectl is: kubectl

16. How does a Kubernetes cluster work?
The master is responsible for managing the cluster. Kubernetes automates the scheduling and distribution of application containers across the cluster efficiently. Minikube can be used to create a cluster for learning purposes.
A Kubernetes cluster consists of two main types of resources:
The master, which coordinates the cluster
Nodes, the workers that run applications
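Question 14 says the kubelet "ensures" that the PodSpecs it receives are running. A common way to picture this is a reconciliation loop that compares the desired state with the actual state and computes corrective actions. The sketch below is a hypothetical model of that idea only, not how the kubelet is implemented: it assumes a container is identified by name and described only by its image, and the functions `reconcile` and `apply` are illustrative names.

```python
from typing import Dict, List, Tuple

Action = Tuple[str, str]  # (verb, container name)


def reconcile(desired: Dict[str, str], running: Dict[str, str]) -> List[Action]:
    """Compare desired containers (name -> image) with running ones and
    return the actions needed to make the actual state match the desired state."""
    actions: List[Action] = []
    for name, image in sorted(desired.items()):
        if name not in running:
            actions.append(("start", name))      # missing: start it
        elif running[name] != image:
            actions.append(("restart", name))    # wrong image: replace it
    for name in sorted(running):
        if name not in desired:
            actions.append(("stop", name))       # no longer wanted: stop it
    return actions


def apply(actions: List[Action], desired: Dict[str, str],
          running: Dict[str, str]) -> Dict[str, str]:
    """Simulate executing the actions and return the new running state."""
    new_state = dict(running)  # copy so the caller's dict is not mutated
    for verb, name in actions:
        if verb in ("start", "restart"):
            new_state[name] = desired[name]
        elif verb == "stop":
            del new_state[name]
    return new_state


if __name__ == "__main__":
    desired = {"web": "nginx:1.25", "sidecar": "busybox:1.36"}
    running = {"web": "nginx:1.24", "old-job": "busybox:1.36"}
    # One pass of the loop; a real agent would repeat this periodically.
    actions = reconcile(desired, running)
    print(actions)
    running = apply(actions, desired, running)
    print(reconcile(desired, running))  # [] once converged
```

Because each pass only looks at the difference between desired and actual state, running the loop again after convergence produces no actions, which is what makes this pattern safe to repeat forever.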

17. What do you understand by the term kube-proxy?
kube-proxy is a network proxy that runs on each node and reflects the services defined in the Kubernetes API on that node. It can perform simple stream forwarding, or round-robin forwarding, across a set of backends. An optional cluster DNS add-on provides DNS names for the service cluster IPs. The syntax to configure the proxy is: kube-proxy

18. Briefly describe how the master node works in Kubernetes.
The Kubernetes master controls the nodes, and the nodes run the containers. Containers are grouped into pods according to requirements and configuration, and every container we use lives inside a pod. Once a pod is set up, it can be deployed using the CLI (command-line interface). Pods are scheduled onto nodes according to their requirements. The node and master components communicate through the kube-apiserver.

19. What is the function of kube-apiserver?
The Kubernetes API server validates and configures data for API objects, including pods, services, replication controllers, and more. kube-apiserver serves REST operations and provides the frontend to the cluster's shared state, through which all other components interact. The syntax is: kube-apiserver

20. What is the role of kube-scheduler?
The kube-scheduler is a policy-rich, topology-aware, workload-specific function that significantly affects availability, performance, and capacity. The scheduler takes into account individual and collective resource requirements, data locality, hardware/software/policy constraints, inter-workload interference, and more. Workload-specific requirements are exposed through the API as necessary. The syntax is: kube-scheduler

21. Describe the Kubernetes controller manager.
kube-controller-manager is a daemon that embeds the core control loops shipped with Kubernetes. In robotics and automation, a control loop is a non-terminating loop that regulates the state of a system. In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the API server and makes changes to move the current state toward the desired state. Examples of controllers that ship with Kubernetes today include the namespace controller and the replication controller. The syntax is: kube-controller-manager

22. What do you mean by the term etcd?
Kubernetes uses etcd to store all of its cluster data. Because Kubernetes is a distributed system, it needs a distributed data store, and etcd is a reliable, distributed key-value store for the most critical data.

23. Define Minikube.
Minikube is a tool that makes it easy to learn Kubernetes locally. It runs a single-node Kubernetes cluster inside a virtual machine.

24. What is load balancing in Kubernetes?
Load balancing is one of the ways of exposing services. There are two types of load balancing in Kubernetes:
Internal load balancing: automatically balances load and allocates pods with the required configuration.
External load balancing: directs traffic from external load balancers to the backend pods.

25. Which components interact with the node interface of Kubernetes?
The following components interact with the node interface:
Node controller
Kubelet
kubectl

26. Name the process that runs on the Kubernetes master node.
The process that runs on the Kubernetes master node is the kube-apiserver process.

27. What are Kubernetes minions?
Nodes in Kubernetes were previously called minions. A node is a worker machine in Kubernetes, and each node contains the services needed to run pods.

28. What is Heapster?
Heapster is a metrics-collection and performance-monitoring system for Kubernetes. It collects metrics for workloads, pods, and more.

29. Explain Kubernetes architecture with a neat diagram.

[Diagram: Kubernetes architecture]

30. What is the future scope of Kubernetes?
Kubernetes is expected to become one of the most widely used operating systems (OS) for the cloud. The future of Kubernetes lies more in virtual machines (VMs) than in containers.

31. What do you mean by Kubernetes?
Kubernetes is an open-source technology developed by a Google research and development team. It lets Docker containers run multiple services at a time. Simply put, it is a container management tool designed to work across different types of platforms, and it is widely used to automate the deployment, scaling, monitoring, and management of container-based applications.

32. State the important features of Kubernetes.
The features of Kubernetes are:
It provides better infrastructure for container-based applications.
It provides continuous development, integration, and deployment services.
It schedules operations automatically.
It automatically restores a database or program to a previously defined state.
It scales operations automatically.
It corrects errors automatically.

33. What are the differences between Docker Swarm and Kubernetes?
Installation and configuration: In Docker Swarm, installing and configuring clusters is very easy, but the clusters are not as resilient. In Kubernetes, installing and configuring clusters is harder, but the clusters are resilient.
GUI: Docker Swarm has no GUI. Kubernetes provides one, the Kubernetes Dashboard.
Scaling speed: Docker Swarm scales well, up to five times faster than Kubernetes. Kubernetes scales well, but more slowly than Docker Swarm.
Auto-scaling: Docker Swarm does not scale automatically. Kubernetes scales automatically.
Load balancing: Docker Swarm automatically load-balances traffic between the containers in a cluster. Kubernetes does not, and requires manual configuration to balance traffic between containers and pods.

34. What are the components of the Kubernetes master machine?
The key components of the Kubernetes master machine are:
etcd: stores the configuration data of every node in the cluster. It is a key-value store that can be distributed across, and shared by, several nodes in the cluster. Because it may hold sensitive information, only the Kubernetes API server accesses etcd directly.
API server: Kubernetes controls and manages operations in the cluster through the API server, which provides an interface that various tools and libraries use to communicate with the cluster.
Scheduler: Scheduling is a major function of the master machine. The scheduler distributes the workload: it monitors how much workload is distributed to and used on the cluster nodes, and places new workloads on nodes that have resources available to receive them.
Controller manager: This component is responsible for regulating the current state of the cluster. It is a daemon that runs continuously in a non-terminating loop, collecting information and sending it to the API server, and it runs and manages various controllers.

35. Explain the node components of Kubernetes.
The following are the major components of a node that exchange information with Kubernetes:
Docker: Every node runs Docker so that its containers run smoothly and effectively; Docker is a basic component of every node in a cluster.
Kubernetes proxy service: The proxy service establishes communication with the host; every node communicates with the host through the proxy. It helps nodes forward data to the right containers on request and is also responsible for load balancing.
It is also responsible for managing the pods on the node, data volumes, secrets, the creation of new containers, and so on.
Kubelet service: The kubelet lets each node exchange information with the control plane and vice versa. It reads node configuration details and writes values to the etcd store, and it handles port forwarding, network protocols, and so on.

36. What do you mean by Kubernetes namespace?
Namespaces give users an identity that distinguishes them from other users, and a namespace assigned to a user must be unique. Through namespaces, cluster resources can be divided and shared within the assigned namespace.

37. State the functions of a Kubernetes namespace.
The primary functions of a Kubernetes namespace are:
Namespaces support pod-to-pod communication within the same namespace.
They act as virtual clusters on top of the same physical cluster.
They provide logical separation between teams and their environments.

38. How do you create a namespace?
To create a namespace, run:
kubectl create -f namespace.yml

39. Write the commands to control a namespace.
To control a namespace, first create it:
kubectl create -f namespace.yml
Then list the available namespaces:
kubectl get namespace
To get a specific namespace:
kubectl get namespace xyz
To describe a namespace:
kubectl describe namespace xyz
To delete a namespace:
kubectl delete namespace xyz
Note: xyz is only an example; you can use any namespace name.

40. Explain how you would set up Kubernetes.
A virtual data center is the basic setup needed before installing Kubernetes.
A virtual data center is a set of machines that can communicate with each other over a network. If you have no existing cloud infrastructure, you can set up a virtual data center with ProfitBricks. After that, set up and configure the master and the nodes. As an example, consider a setup on Ubuntu Linux; the same steps apply to other Linux distributions.
Installing Docker is a basic prerequisite for running Kubernetes, so start by installing Docker:
Log in as the root user with your credentials.
Update the apt package index and install the required packages if needed:
sudo apt-get update
sudo apt-get install apt-transport-https ca-certificates
Add a new GPG key for the Docker repository list:
sudo apt-key adv
Update the APT package index again:
sudo apt-get update
Check that you are running a suitable kernel version, then install Docker Engine.
After installing Docker Engine, install etcd.
Finally, install Kubernetes on the machines.

42. What do you know about kubelets?
The kubelet is a node agent that runs on every node in a cluster. It works from the specifications in pods (PodSpecs) and is responsible for checking that the PodSpecs are running correctly; for this purpose, it creates logs and monitors the PodSpecs.

43. What do you know about pods in Kubernetes?
A pod contains a group of containers that are installed and run on the same host. Containers live inside pods, so configuring pods according to the specifications is important. Pods are scheduled according to the requirements of the nodes in a cluster.

44. What are the types of Kubernetes pods, and how do you create them?
Kubernetes has two kinds of pods:
Single-container pod: Use the kubectl run command with an image from a Docker registry:
kubectl run abcd --image=xyz234
where abcd is the name of the pod and xyz234 is the name of the image in the registry.
Multi-container pod: Create a YAML file with the details of the containers. You define the complete specification of each container, such as its name, image, port details, image pull policy, database name, and so on.

45. What is the use of the API server in Kubernetes?
The API server provides the frontend to the cluster's shared state; the master and nodes communicate with each other through this interface. Its primary function is to validate and configure API objects, including pods, services, controllers, and so on.

46. What do you mean by Kubernetes images?
Kubernetes does not currently have its own image format; it supports Docker images. Docker images are the primary building blocks of container infrastructure, and every container in a pod runs a Docker image.

47. Describe the functions of Kubernetes Jobs.
A Kubernetes Job creates one or more pods and tracks how well they run. It records how many pods have completed successfully, and the Job is complete when the specified number of pods have run and completed successfully.

48. What do you know about labels in Kubernetes?
Labels are key-value pairs attached to objects such as pods, services, and replication controllers. Labels are usually added to an object when it is created and can be modified at run time.

49. What do you know about selectors, and what types of selectors does the Kubernetes API support?
Because multiple objects can carry the same labels, Kubernetes uses label selectors to identify a set of objects. The Kubernetes API currently supports two kinds of label selectors:
Set-based selectors: filter keys according to a set of values.
Equality-based selectors: filter by key and value. A matching object must satisfy all of the specified label constraints.

50. What do you know about minions? Explain.
A minion is a node, that is, a worker machine, in a Kubernetes cluster. A minion can be a virtual machine, a physical machine, or a cloud instance. Every node in a cluster must meet the configuration requirements for running a pod. Two prime services, the kubelet and the proxy service, together with Docker, are needed to interface and communicate with the nodes that run the Docker containers in the pods created on them. Minions are not actually created by Kubernetes; they are created by a cluster manager on virtual or physical machines, or by a cloud service provider.

51. What do you mean by node controller?
The node controller is a collection of services running in the Kubernetes master. It observes the activities of the nodes in a cluster, identifying each node by the metadata name assigned to it, and checks whether a node is valid. If the node is valid, a newly created pod can be assigned to it; if the node is invalid, the node controller waits until it becomes valid before a pod is assigned.

52. Tell me about Google Container Engine.
Google Container Engine is a Kubernetes-based engine for running clusters on Google's public cloud services. It serves as a platform for Docker containers and clusters.

53. What do you mean by Ingress network?
An Ingress network provides a set of rules for entering a Kubernetes cluster. It allows inbound connections, which can be configured to give services externally reachable URLs, load-balance traffic, or provide name-based virtual hosting. An Ingress can therefore be defined as an API object that manages external access, over HTTP, to the services in a cluster.

54. What do you know about a Kubernetes Service?
A Kubernetes Service is an abstraction that defines a logical set of pods. A Service gets a DNS name and a single IP address through which its pods can be accessed. Services are very useful for managing load balancing according to specific requirements, and they also make it easy to scale pods.

55. What are the types of Kubernetes Services?
The following are the types of Kubernetes Services:
NodePort: exposes the Service on a static port of each node. The ClusterIP service that NodePort routes to is created automatically. Users can reach a NodePort service from outside the cluster at NodeIP:NodePort.
ClusterIP: exposes the Service on an IP address inside the Kubernetes cluster, which keeps the Service reachable only from within the cluster.
LoadBalancer: an important Service type that automatically balances the load when traffic arrives.
The NodePort and ClusterIP services described above are created automatically, and the external load balancer routes traffic to them.

56. What are the functions of a replication controller?
The main functions of a replication controller are:
It controls and manages the lifecycle of pods.
It monitors and verifies that the specified number of pod replicas are running.
It helps users check the running status of pods.
It lets users alter the pods it manages, for example by scaling the number of replicas up or down.

57. What do you know about ReplicaSets?
A ReplicaSet is considered a replacement for the replication controller. Its main function is to ensure that the specified number of pod replicas are running. The Kubernetes API supports two types of label selectors: equality-based selectors and set-based selectors. The main difference between a replication controller and a ReplicaSet is that the replication controller supports only equality-based selectors, whereas a ReplicaSet supports both types.

58. How do you update, delete, and roll back a Deployment?
Update: You can update an existing Deployment at runtime, even before it completes. The update ends the ongoing rollout and creates a fresh one.
Delete: You can cancel or pause an ongoing Deployment by deleting it before it completes. Creating a similar Deployment resumes it.
Rollback: Restoring a database or program to a previously defined state is called a rollback. You can roll back an ongoing Deployment with this feature.

59. What do you mean by “Recreate” and “Rolling Update” in a Deployment strategy?
With deployment strategies, the user can replace an existing replication controller with a new one. Recreate kills all the running (existing) pods and then creates new ones. Recreate makes deployment faster, but it increases downtime, because the old pods are already down before the new pods have replaced them. Rolling update also replaces the existing replication controller with a newer one, but it does so gradually. Deployment is slower, but there is little or no downtime, because some old pods and some new pods are available to serve requests at any time.

60. Write a command to create and fetch the deployment:
To create: kubectl create -f Deployment.yaml --record
To fetch: kubectl get deployments

61. Write a command to check the status of a deployment and to update a deployment.
To check the status: kubectl rollout status deployment/Deployment
To update a deployment: kubectl set image deployment/Deployment tomcat=tomcat:6.0

62. What do you mean by volumes? What are the differences between Docker volumes and Kubernetes volumes?
A volume is a directory that is accessible to the containers in a pod. The differences between Kubernetes volumes and Docker volumes are:
Kubernetes volumes | Docker volumes
Not limited to any particular container | Limited to a particular container
Available to any or all of the containers deployed in a pod | Not available to all containers deployed in Docker
Support many types of storage on a pod, and multiple types at the same time | No such support

63. List the Kubernetes volume you are aware of.
The following are some widely used Kubernetes volume types:
NFS: lets an existing NFS (Network File System) share be mounted into your pod.
When the pod is removed from the node, the NFS volume is not erased; it is only unmounted.
Flocker: Flocker is an open-source manager for data volumes in clustered containers. With a Flocker volume, the user can mount a Flocker dataset into the pod. If no such dataset exists in Flocker yet, the user has to create it through the Flocker API.
EmptyDir: an emptyDir volume is created when a pod is assigned to a node and stays active as long as the pod is running on that node. It is empty initially, and the user can read and write files in it. The data in the volume is erased once the pod is removed from that node.
AWS Elastic Block Store: mounts an Amazon Web Services Elastic Block Store volume into your pod. The data in the volume remains even after the pod is removed from the node.
GCE Persistent Disk: mounts a Google Compute Engine Persistent Disk into your pod. As with AWS Elastic Block Store, the data in the volume remains after the pod is removed from the node.
Host path: mounts a file or directory from the host node's file system into your pod.
RBD: lets a Rados Block Device volume be mounted into your pod. As with AWS Elastic Block Store and GCE Persistent Disk volumes, the data in the volume remains after the pod is removed from the node.

64. What do you mean by Persistent Volume?
A Persistent Volume (PV) is a unit of network storage in the cluster that is provisioned and controlled by the administrator. Its lifecycle is independent of any individual pod that uses it.

65. What do you mean by Persistent Volume Claim?
A Persistent Volume Claim (PVC) is a request for storage. Once the request is satisfied, Kubernetes provides the storage to the pods that use the claim.
The user does not need to know how the storage is provisioned, but the claim must be created in the same namespace as the pod that uses it.

66. Define Secrets in Kubernetes.
As the name implies, secrets hold sensitive information. In this context, that means the login credentials of a user. Secrets are Kubernetes objects that store sensitive information such as user names and passwords. By default, the values are only base64-encoded, not encrypted, unless encryption at rest is configured for the cluster.

67. How do you create secrets in Kubernetes?
Secrets can be created in several ways in Kubernetes, for example:
From text (.txt) files
From YAML files
To create secrets from these files, the user creates the username and password with the kubectl command. The secret file has to be saved in the corresponding file format.

68. Explain the Network Policy in Kubernetes.
A network policy is a set of rules that defines how pods, including pods in the same namespace, may communicate with one another and with other network endpoints. The user has to enable network policy in the API server through its runtime configuration, and the cluster's network plugin must support network policy for it to be enforced. Network policy resources select pods using labels and define rules that permit traffic to the selected pods.

69. What will happen while adding new API to Kubernetes?
Adding a new API to Kubernetes provides extra features, so it improves what Kubernetes can do. However, it also increases the cost and maintenance burden of the entire system, so cost and complexity need to be kept under control. This can be achieved by defining a set of criteria for new APIs.

70. How do you make changes in the API?
Changes to the API server have to be made by Kubernetes team members. They are responsible for adding new APIs without affecting the functions of the existing system.

71. What are the API versions available? Explain.
Kubernetes supports several API versions in order to support multiple structures. Versioning is done at three levels: alpha, beta, and stable.
Alpha-level versions contain "alpha" in their name (for example, v1alpha1). They may be buggy, and support for a feature may be dropped at any time without notice. They are recommended only for short-lived testing.
Beta-level versions contain "beta" in their name (for example, v2beta3). The code is well tested, and support for the feature will not be dropped, though details may change. Beta versions are not recommended for business-critical applications.
Stable-level versions are named vX, where "v" stands for version and "X" is an integer. Stable features appear in released software for many subsequent versions.

72. Explain Kubectl command.
kubectl is the command-line interface for communicating with a Kubernetes cluster. It sends commands to the cluster's API server and is used to control and manage the pods and other Kubernetes objects in the cluster. To communicate with the cluster, the user runs kubectl commands locally.

73. What are the kubectl commands you are aware of?
kubectl apply
kubectl annotate
kubectl attach
kubectl api-versions
kubectl autoscale
kubectl config
kubectl cluster-info
kubectl cluster-info dump
kubectl config set-cluster
kubectl config get-clusters
kubectl config set-credentials

74. Using create command along with kubectl, what are the things possible?
The user can create several things using the create command with kubectl:
Namespace
Deployment
Secrets
Generic secret
Docker registry secret
Quota
Service account
NodePort service
LoadBalancer service
ClusterIP service

75. What is kubectl drain?
The kubectl drain command is used to drain a specific node before maintenance.
Once the command is given, the node is marked unschedulable and its pods are evicted, so that no new pods are assigned to it during maintenance. The node is made available again (with kubectl uncordon) once maintenance is complete.

76. How do you create an application in Kubernetes?
Creating an application in Kubernetes requires creating an application image in Docker, since Kubernetes runs the application in Docker containers. The user can get an image in either of two ways: download an existing one, or build one from a Dockerfile. Because Docker is open source, existing images can be downloaded from Docker Hub and stored in a local Docker registry. To create a new application, the user first writes a Dockerfile and builds an image from it. Once the image has been tested completely, it can be run in a container.

77. What do you mean by application deployment in Kubernetes?
Deployment is the process of transferring images to containers and assigning them to pods in the Kubernetes cluster. Application deployment automatically sets up the application cluster, creating the pods, replication controller, replica set, and service. The cluster is organized so that the pods can communicate properly, and a load balancer is set up to distribute traffic between pods. Pods exchange information with one another through Kubernetes objects.

78. Define Autoscaling in Kubernetes.
Autoscaling is one of the important features of Kubernetes. It means scaling the number of nodes according to the demand on the service: the cluster adds nodes as demand grows and removes nodes as demand falls. This feature is currently supported in Google Container Engine and Google Compute Engine, and AWS is expected to provide it soon.
79. How will you do monitoring in Kubernetes?
Monitoring is needed to manage larger clusters, and Kubernetes can be monitored with several tools. Prometheus is a well-known and widely used one. It not only monitors but also comes with an alerting system. Prometheus is open source and was developed at SoundCloud. Its data model handles multi-dimensional data (metrics identified by a name and key/value labels). Prometheus needs some additional components for monitoring:
Prometheus node exporter
Grafana
Ranch-eye
InfluxDB
Prometheus Rancher exporter

80. What is Kubernetes Log?
Kubernetes container logs are much like Docker container logs, but Kubernetes lets users view the logs of deployed (running) pods. The following information can be used to get more specific logs:
Kubernetes container name
Kubernetes pod name
Kubernetes namespace
Kubernetes UID and Docker image name
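Question 57 distinguishes equality-based from set-based label selectors. These are the same kind of selectors that Services, ReplicaSets, and network policies use to pick pods. The following Python sketch models the matching rules under stated assumptions: labels are plain dicts, and all requirements in a selector must hold (they are ANDed). The `Selector` class and `select` helper are hypothetical illustrations, not part of any Kubernetes client library.

```python
"""Hypothetical model of Kubernetes label-selector matching (not a client library API)."""
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Labels = Dict[str, str]


@dataclass
class Selector:
    # Equality-based requirements: (key, operator, value), operator in {"=", "==", "!="}.
    equality: List[Tuple[str, str, str]] = field(default_factory=list)
    # Set-based requirements: (key, operator, values), operator in
    # {"in", "notin", "exists", "doesnotexist"}; values is ignored for the last two.
    set_based: List[Tuple[str, str, Set[str]]] = field(default_factory=list)

    def matches(self, labels: Labels) -> bool:
        """Return True only if every requirement holds."""
        for key, op, value in self.equality:
            if op in ("=", "=="):
                if labels.get(key) != value:
                    return False
            elif op == "!=":
                # A pod without the key also satisfies "!=".
                if labels.get(key) == value:
                    return False
            else:
                raise ValueError(f"unknown equality operator: {op}")
        for key, op, values in self.set_based:
            if op == "in":
                # A missing key yields None, which is never in the value set.
                if labels.get(key) not in values:
                    return False
            elif op == "notin":
                # A pod without the key also satisfies "notin".
                if key in labels and labels[key] in values:
                    return False
            elif op == "exists":
                if key not in labels:
                    return False
            elif op == "doesnotexist":
                if key in labels:
                    return False
            else:
                raise ValueError(f"unknown set-based operator: {op}")
        return True


def select(pods: Dict[str, Labels], selector: Selector) -> List[str]:
    """Names of the pods whose labels satisfy the selector, sorted for stable output."""
    return sorted(name for name, labels in pods.items() if selector.matches(labels))


# Usage: a replication controller can only express an equality-based selector
# like rc_selector; a ReplicaSet can also add set-based requirements.
pods = {
    "web-1": {"app": "web", "env": "prod"},
    "web-2": {"app": "web", "env": "qa"},
    "web-3": {"app": "web"},
    "db-1": {"app": "db", "env": "prod"},
}
rc_selector = Selector(equality=[("app", "==", "web")])
rs_selector = Selector(equality=[("app", "==", "web")],
                       set_based=[("env", "in", {"prod", "qa"})])
print(select(pods, rc_selector))  # ['web-1', 'web-2', 'web-3']
print(select(pods, rs_selector))  # ['web-1', 'web-2']
```

The `notin` and `!=` branches deliberately accept pods that lack the key entirely. This mirrors the documented Kubernetes behaviour, and it is an easy detail to get wrong when reasoning about which pods a selector will pick up.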


Hello good people. If you’re on this page, you must be working on an interesting project! We’ll look at the steps for installing OpenContrail with Ansible on a CentOS 7 server. I’m doing this on a freshly installed CentOS 7 server for proof-of-concept (POC) and testing purposes.

Before we dive into the installation steps, let’s define what OpenContrail is. This will be helpful for those new to OpenContrail.

What’s OpenContrail?
The official OpenContrail website defines OpenContrail as “an Apache 2.0-licensed project that is built using standards-based protocols and provides all the necessary components for network virtualization–SDN controller, virtual router, analytics engine, and published northbound APIs. It has an extensive REST API to configure and gather operational and analytics data from the system”.

OpenContrail Key Features
Routing and Switching
Load Balancing
Network Services
Performance and Scale
Security and Policies
Gateway Services
Rich Analytics
HA and Upgrades
APIs and Orchestration

What we’re trying to achieve in our project with OpenContrail is:
Set up overlay networking to interconnect two data centers without relying on the underlying network links. This will help with VM mobility across the two zones.
Achieve better functionality for the VPCs (Virtual Private Clouds) of VMs in our OpenStack / CloudStack and VMware infrastructure.
Use OpenContrail as a controller for SD-WAN services.

Setup Prerequisites
For this setup, I’m running everything on a single server. Once we’re convinced to run it in production, we’ll have to do a multi-server installation with HA.
My dedicated server specs are:
Dell PowerEdge R610
Intel(R) Xeon(R) CPU L5630 @ 2.13GHz (2×8)
96GB RAM
OS: CentOS Linux release 7.4.1708 (Core)

The prerequisites are:
Python 2.7
Docker
python-pip
docker-compose
docker-py
ansible
Kubernetes: kubelet, kubeadm, kubectl, kubernetes-cni

Installing Prerequisites

Disable SELinux unless you’re an SELinux guru:
# setenforce 0
# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
# cat /etc/selinux/config

Also disable firewalld, since a good number of ports need to be opened. You can turn it back on later, once everything is running.
# systemctl disable firewalld && systemctl stop firewalld

Add the EPEL repository by running:
# yum -y install epel-release

Install Docker
For Docker installation, use our guide: How to install Docker CE on Ubuntu / Debian / Fedora

Install ansible, python-pip, docker-compose and the docker-py Python module
We’ll need these installed on our server for the next parts, so install them here.
# yum -y install ansible python-pip docker-compose
# pip install --upgrade pip
# pip install docker-py docker-compose

Install kubelet, kubeadm, kubectl and kubernetes-cni
You first have to add the official Kubernetes repository for CentOS 7.
cat
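The SELinux step edits /etc/selinux/config with sed. If you script the setup in Python (already a prerequisite here), the sketch below applies the same substitution. `disable_selinux` and `apply_to_file` are hypothetical helper names, and the default path is the one from the sed command. As with the sed version, the file change only takes effect at the next reboot. For the running system, `setenforce 0` switches SELinux to permissive mode.

```python
import re
from pathlib import Path

# Same pattern as: sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
# Only a line that starts with "SELINUX=enforcing" is rewritten; comment lines
# and the separate SELINUXTYPE= setting are left alone.
_PATTERN = re.compile(r"^SELINUX=enforcing", flags=re.MULTILINE)


def disable_selinux(config_text: str) -> str:
    """Return the config text with SELINUX=enforcing replaced by SELINUX=disabled."""
    return _PATTERN.sub("SELINUX=disabled", config_text)


def apply_to_file(path: str = "/etc/selinux/config") -> bool:
    """Rewrite the file in place (needs root); return True if it changed."""
    target = Path(path)
    original = target.read_text()
    updated = disable_selinux(original)
    if updated != original:
        target.write_text(updated)
    return updated != original


if __name__ == "__main__":
    sample = "# SELINUX= can take one of these three values\nSELINUX=enforcing\nSELINUXTYPE=targeted\n"
    print(disable_selinux(sample))
```

Keeping the text transformation separate from the file I/O makes it easy to preview the result on a copy of the config before touching the real file.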
