#execution plan in oracle sql developer


ChatGPT & Data Science: Your Essential AI Co-Pilot

The rise of ChatGPT and other large language models (LLMs) has sparked countless discussions across every industry. In data science, the conversation is particularly nuanced: Is it a threat? A gimmick? Or a revolutionary tool?

The clearest answer? ChatGPT isn't here to replace data scientists; it's here to empower them, acting as an incredibly versatile co-pilot for almost every stage of a data science project.

Think of it less as an all-knowing oracle and more as an exceptionally knowledgeable, tireless assistant that can brainstorm, explain, code, and even debug. Here's how ChatGPT (and similar LLMs) is transforming data science projects and how you can harness its power:

How ChatGPT Transforms Your Data Science Workflow

Problem Framing & Ideation: Struggling to articulate a business problem into a data science question? ChatGPT can help.

"Given customer churn data, what are 5 actionable data science questions we could ask to reduce churn?"

"Brainstorm hypotheses for why our e-commerce conversion rate dropped last quarter."

"Help me define the scope for a project predicting equipment failure in a manufacturing plant."

Data Exploration & Understanding (EDA): This often tedious phase can be streamlined.

"Write Python code using Pandas to load a CSV and display the first 5 rows, data types, and a summary statistics report."

"Explain what 'multicollinearity' means in the context of a regression model and how to check for it in Python."

"Suggest 3 different types of plots to visualize the relationship between 'age' and 'income' in a dataset, along with the Python code for each."

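As an illustration of what an answer to the multicollinearity prompt above might contain, here is a dependency-free sketch of the variance inflation factor (VIF) for the two-predictor case, where VIF = 1 / (1 - r²) and r is the Pearson correlation between the predictors. The function names and sample data are hypothetical. With more than two predictors you would regress each predictor on all the others (for example with statsmodels' `variance_inflation_factor`), and the common "VIF above 5 or 10" threshold is a rule of thumb, not a hard cutoff.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("a constant predictor has no defined correlation")
    return cov / (sx * sy)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors when a model has exactly two of them."""
    r_squared = pearson_r(x1, x2) ** 2
    if r_squared >= 1.0:
        return float("inf")  # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)


# Hypothetical data: years of employment rises almost in lockstep with age
age = [25, 32, 47, 51, 62]
years_employed = [3, 9, 22, 27, 38]
print(vif_two_predictors(age, years_employed))
```

A large value here signals that the two columns carry nearly the same information, so a regression cannot reliably separate their individual effects.
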
Feature Engineering & Selection: Creating new, impactful features is key, and ChatGPT can spark ideas.

"Given a transactional dataset with 'purchase_timestamp' and 'product_category', suggest 5 new features I could engineer for a customer segmentation model."

"What are common techniques for handling categorical variables with high cardinality in machine learning, and provide a Python example for one."

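For the high-cardinality prompt, one common answer is frequency encoding: replace each category with its share of the training rows. The sketch below, with hypothetical function names and data, fits the mapping on training data only (so no test-set information leaks into the features) and gives unseen categories a default of 0.0, which is one design choice among several.

```python
from collections import Counter


def fit_frequency_encoder(train_values):
    """Map each category to the fraction of training rows it appears in."""
    if not train_values:
        raise ValueError("cannot fit on an empty column")
    counts = Counter(train_values)
    total = len(train_values)
    return {category: n / total for category, n in counts.items()}


def apply_frequency_encoder(mapping, values, unseen=0.0):
    """Encode values with a fitted mapping; unseen categories get `unseen`."""
    return [mapping.get(v, unseen) for v in values]


train = ["books", "books", "toys", "garden"]
encoder = fit_frequency_encoder(train)
print(apply_frequency_encoder(encoder, ["toys", "electronics", "books"]))
```

Unlike one-hot encoding, this adds a single numeric column no matter how many distinct categories exist, at the cost of giving equally frequent categories the same value.
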
Model Selection & Algorithm Explanation: Navigating the vast world of algorithms becomes easier.

"I'm working on a classification problem with imbalanced data. What machine learning algorithms should I consider, and what are their pros and cons for this scenario?"

"Explain how a Random Forest algorithm works in simple terms, as if you're explaining it to a business stakeholder."

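A frequent suggestion for imbalanced classification is to weight classes inversely to their frequency. This sketch computes the "balanced" heuristic that scikit-learn documents for `class_weight="balanced"`, namely n_samples / (n_classes * count_of_class). The function name and the 90/10 label split are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


labels = [0] * 90 + [1] * 10  # hypothetical 90/10 imbalance
weights = balanced_class_weights(labels)
print(weights)
```

Here the minority class gets weight 100 / (2 * 10) = 5.0 and the majority class 100 / (2 * 90), roughly 0.56, so errors on rare examples cost the model more during training.
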
Code Generation & Debugging: This is where ChatGPT shines for many data scientists.

"Write a Python function to perform stratified K-Fold cross-validation for a scikit-learn model, ensuring reproducibility."

"I'm getting a 'ValueError: Input contains NaN, infinity or a value too large for dtype('float64')' in my scikit-learn model. What are common reasons for this error, and how can I fix it?"

"Generate boilerplate code for a FastAPI endpoint that takes a JSON payload and returns a prediction from a pre-trained scikit-learn model."

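For the cross-validation prompt, a typical answer wraps scikit-learn's `StratifiedKFold(n_splits=5, shuffle=True, random_state=42)`. To show what that class does, the sketch below reimplements the idea in plain Python: indices are grouped by label, shuffled with a seeded local random generator (so repeated runs produce identical folds), and dealt round-robin so that every fold keeps roughly the original class proportions. The function name is hypothetical, and this is not scikit-learn's exact algorithm.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=5, seed=42):
    """Yield (train_idx, test_idx) pairs whose test folds preserve class proportions."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rng = random.Random(seed)  # local RNG: reproducible without touching global state
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class, key=str):  # fixed class order for determinism
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal this class's indices round-robin across the folds
        for i, idx in enumerate(indices):
            folds[(offset + i) % k].append(idx)
        offset += len(indices)  # next class continues where this one stopped

    all_idx = set(range(len(labels)))
    for fold in folds:
        yield sorted(all_idx - set(fold)), sorted(fold)


labels = [0] * 8 + [1] * 4
for train_idx, test_idx in stratified_k_fold(labels, k=4, seed=0):
    print(len(train_idx), [labels[i] for i in test_idx])
```
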
Documentation & Communication: Translating complex technical work into understandable language is vital.

"Write a clear, concise docstring for this Python function that preprocesses text data."

"Draft an executive summary explaining the results of our customer churn prediction model, focusing on business impact rather than technical details."

"Explain the limitations of an XGBoost model in a way that a non-technical manager can understand."

Learning & Skill Development: It's like having a personal tutor at your fingertips.

"Explain the concept of 'bias-variance trade-off' in machine learning with a practical example."

"Give me 5 common data science interview questions about SQL, and provide example answers."

"Create a study plan for learning advanced topics in NLP, including key concepts and recommended libraries."

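The bias-variance prompt usually comes back to one identity. For squared-error loss, assuming y = f(x) + ε with E[ε] = 0, Var(ε) = σ², and the noise independent of the training data, the expected error of a fitted model f̂ at a fixed input x (averaging over training sets and noise) splits into three parts:

```latex
\mathbb{E}\big[(y - \hat f(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

A very flexible model (for example, an unpruned decision tree) tends to shrink the first term and inflate the second; a very simple model does the opposite, and no model can reduce σ².
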
Important Considerations and Best Practices

While incredibly powerful, remember that ChatGPT is a tool, not a human expert.

Always Verify: Generated code, insights, and especially factual information must always be verified. LLMs can "hallucinate" or provide subtly incorrect information.

Context is King: The quality of the output directly correlates with the quality and specificity of your prompt. Provide clear instructions, examples, and constraints.

Data Privacy is Paramount: NEVER feed sensitive, confidential, or proprietary data into public LLMs. Protecting personal data is not just an ethical imperative but a legal requirement in many jurisdictions (for example, under the EU's GDPR). Assume anything you enter into a public model may be used for future training or be accessible to the provider. For sensitive projects, explore secure on-premises or private-cloud LLM solutions.

Understand the Fundamentals: ChatGPT is an accelerant, not a substitute for foundational knowledge in statistics, machine learning, and programming. To use and debug its outputs effectively, you need to understand why a piece of code works or why an algorithm was chosen.

Iterate and Refine: Don't expect perfect results on the first try. Refine your prompts based on the output you receive.

ChatGPT and its peers are fundamentally changing the daily rhythm of data science. By embracing them as intelligent co-pilots, data scientists can boost their productivity, explore new avenues, and focus their invaluable human creativity and critical thinking on the most complex and impactful challenges. The future of data science is undoubtedly a story of powerful human-AI collaboration.


Seamless Cross Database Migration with RalanTech

In today's rapidly evolving digital landscape, businesses must ensure their data management systems are both efficient and adaptable. Cross database migration has become a critical strategy for organizations aiming to upgrade their infrastructure, enhance performance, and reduce costs. RalanTech stands out as a leader in this domain, offering affordable database migration services and expert consulting to facilitate smooth transitions.

Understanding Cross Database Migration

Cross database migration involves transferring data between different database management systems (DBMS), such as moving from Oracle to PostgreSQL or from Sybase to SQL Server. This process is essential for organizations seeking to modernize their systems, improve scalability, or integrate new technologies. However, it requires meticulous planning and execution to maintain data integrity and minimize downtime.

The Importance of Affordable Database Migration Services

Cost is a significant consideration in any migration project. Affordable database migration services ensure that businesses of all sizes can access the benefits of modern DBMS without prohibitive expenses. RalanTech offers cost-effective solutions tailored to meet specific business needs, ensuring a high return on investment.

RalanTech's Expertise in Database Migration Consulting

With a team of seasoned professionals, RalanTech provides comprehensive database migration consulting services. Their approach includes assessing current systems, planning strategic migrations, and executing transitions with minimal disruption. By leveraging their expertise, businesses can navigate the complexities of migration confidently.

Why Choose RalanTech for Your Migration Needs?

Proven Track Record

RalanTech has successfully completed over 295 projects, demonstrating their capability and reliability in handling complex migration tasks.

Customized Solutions

Understanding that each business has unique requirements, RalanTech offers tailored migration strategies that align with specific goals and operational needs.

Comprehensive Support

From initial assessment to post-migration support, RalanTech ensures continuous assistance, addressing any challenges that arise during the migration process.

The Migration Process: A Step-by-Step Overview

Assessment and Planning: Evaluating the existing database environment to identify potential risks and develop a strategic migration plan.

Data Mapping and Extraction: Ensuring data compatibility and accurately extracting data from the source system.

Data Transformation and Loading: Converting data to fit the target system's structure and loading it efficiently.

Testing and Validation: Conducting thorough tests to verify data integrity and system functionality.

Deployment and Optimization: Implementing the new system and optimizing performance for seamless operation.

Post-Migration Support: Providing ongoing assistance to address any post-migration issues and ensure system stability.
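
The "Testing and Validation" step often starts with a simple reconciliation: compare the row count and a checksum of each table on the source and target. The sketch below uses two in-memory SQLite databases as stand-ins for the real source and target systems; the table, column, and function names are hypothetical. A real cross-DBMS check would first normalize types (dates, decimals, character sets) so that equal values produce identical text on both sides.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 hex digest) of a table read in key order."""
    # `table` and `key` are trusted identifiers here; never interpolate user input into SQL.
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Build an in-memory stand-in database holding a customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


rows = [(1, "Ada", 120.5), (2, "Grace", 0.0), (3, "Linus", 42.0)]
source, target = make_db(rows), make_db(rows)
print(table_fingerprint(source, "customers", "id") == table_fingerprint(target, "customers", "id"))

target.execute("UPDATE customers SET balance = 43.0 WHERE id = 3")  # simulate drift
print(table_fingerprint(source, "customers", "id") == table_fingerprint(target, "customers", "id"))
```

Ordering by the primary key matters: without it, two identical tables could return rows in different orders and produce different digests.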

Ensuring Data Integrity and Security

Maintaining data integrity and security is paramount during migration. RalanTech employs robust protocols to protect sensitive information and ensure compliance with industry standards.

Minimizing Downtime and Disruption

Understanding the importance of business continuity, RalanTech designs migration strategies that minimize downtime and operational disruption, allowing businesses to maintain productivity throughout the transition.

Scalability and Future-Proofing Your Database

RalanTech's migration solutions are designed with scalability in mind, enabling businesses to accommodate future growth and technological advancements seamlessly.

Leveraging Cloud Technologies

Migrating databases to the cloud offers enhanced flexibility and cost savings. RalanTech specializes in cloud migrations, facilitating transitions to platforms like AWS, Azure, and Google Cloud.

Industry-Specific Migration Solutions

RalanTech tailors its migration services to meet the unique demands of various industries, including healthcare, finance, and manufacturing, ensuring compliance and optimized performance.

Training and Empowering Your Team

Beyond technical migration, RalanTech offers training to internal teams, empowering them to manage and optimize the new database systems effectively.

Measuring Success: Post-Migration Metrics

RalanTech emphasizes the importance of post-migration evaluation, utilizing key performance indicators to assess the success of the migration and identify areas for further optimization.

Continuous Improvement and Support

Committed to long-term client success, RalanTech provides ongoing support and continuous improvement strategies to adapt to evolving business needs and technological landscapes.

#DataMigration #DigitalTransformation #CloudMigration #CloudComputing #CloudServices #BusinessTransformation #DatabaseMigration #DataManagement #ITConsulting


Career Path and Growth Opportunities for Integration Specialists

The Growing Demand for Integration Specialists

Introduction

In today’s interconnected digital landscape, businesses rely on seamless data exchange and system connectivity to optimize operations and improve efficiency. Integration specialists play a crucial role in designing, implementing, and maintaining integrations between various software applications, ensuring smooth communication and workflow automation. With the rise of cloud computing, APIs, and enterprise applications, integration specialists are essential for driving digital transformation.

What is an Integration Specialist?

An Integration Specialist is a professional responsible for developing and managing software integrations between different systems, applications, and platforms. They design workflows, troubleshoot issues, and ensure data flows securely and efficiently across various environments. Integration specialists work with APIs, middleware, and cloud-based tools to connect disparate systems and improve business processes.

Types of Integration Solutions

Integration specialists work with different types of solutions to meet business needs:

API Integrations

Connects different applications via Application Programming Interfaces (APIs).

Enables real-time data sharing and automation.

Examples: RESTful APIs, SOAP APIs, GraphQL.

Cloud-Based Integrations

Connects cloud applications like SaaS platforms.

Uses integration platforms as a service (iPaaS).

Examples: Zapier, Workato, MuleSoft, Dell Boomi.

Enterprise System Integrations

Integrates large-scale enterprise applications.

Connects ERP (Enterprise Resource Planning), CRM (Customer Relationship Management), and HR systems.

Examples: Salesforce, SAP, Oracle, Microsoft Dynamics.

Database Integrations

Ensures seamless data flow between databases.

Uses ETL (Extract, Transform, Load) processes for data synchronization.

Examples: SQL Server Integration Services (SSIS), Talend, Informatica.
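
At its simplest, much integration work is field mapping: taking a record from one system's API and reshaping it for another. The sketch below is a hypothetical CRM-to-ERP mapping (all field names are invented for illustration, not taken from Salesforce, SAP, or any other real product) that fails loudly when a required field is missing instead of passing incomplete data downstream.

```python
import json

# Hypothetical mapping from CRM field names to ERP field names
FIELD_MAP = {
    "AccountId": "customer_number",
    "Name": "customer_name",
    "BillingCountry": "country_code",
}


def crm_to_erp(crm_record: dict) -> dict:
    """Reshape one CRM record into the ERP's expected structure."""
    missing = [field for field in FIELD_MAP if field not in crm_record]
    if missing:
        raise KeyError(f"CRM record is missing fields: {missing}")
    erp_record = {erp: crm_record[crm] for crm, erp in FIELD_MAP.items()}
    erp_record["country_code"] = erp_record["country_code"].upper()  # normalize codes
    return erp_record


incoming = json.loads(
    '{"AccountId": "A-1001", "Name": "Acme Ltd", "BillingCountry": "gb", "Phone": "n/a"}'
)
print(json.dumps(crm_to_erp(incoming)))
```

Fields the target does not need (here, `Phone`) are simply dropped; in production the mapping would usually live in configuration or an iPaaS tool rather than in code.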

Key Stages of System Integration

Requirement Analysis & Planning

Identify business needs and integration goals.

Analyze existing systems and data flow requirements.

Choose the right integration approach and tools.

Design & Architecture

Develop a blueprint for the integration solution.

Select API frameworks, middleware, or cloud services.

Ensure scalability, security, and compliance.

Development & Implementation

Build APIs, data connectors, and automation workflows.

Implement security measures (encryption, authentication).

Conduct performance optimization and data validation.

Testing & Quality Assurance

Perform functional, security, and performance testing.

Identify and resolve integration errors and data inconsistencies.

Conduct user acceptance testing (UAT).

Deployment & Monitoring

Deploy integration solutions in production environments.

Monitor system performance and error handling.

Ensure smooth data synchronization and process automation.

Maintenance & Continuous Improvement

Provide ongoing support and troubleshooting.

Optimize integration workflows based on feedback.

Stay updated with new technologies and best practices.

Best Practices for Integration Success

✔ Define clear integration objectives and business needs.
✔ Use secure and scalable API frameworks.
✔ Optimize data transformation processes for efficiency.
✔ Implement robust authentication and encryption.
✔ Conduct thorough testing before deployment.
✔ Monitor and update integrations regularly.
✔ Stay updated with emerging iPaaS and API technologies.

Conclusion

Integration specialists are at the forefront of modern digital ecosystems, ensuring seamless connectivity between applications and data sources. Whether working with cloud platforms, APIs, or enterprise systems, a well-executed integration strategy enhances efficiency, security, and scalability. Businesses that invest in robust integration solutions gain a competitive edge, improved automation, and streamlined operations.


Integration Specialist:

#SystemIntegration

#APIIntegration

#CloudIntegration

#DataAutomation

#EnterpriseSolutions


Firebird to Oracle Migration – Ask On Data

In this article, we explore the complexities and best practices involved in Firebird to Oracle Migration. As organizations grow and their data needs evolve, transitioning to a robust and scalable database system like Oracle becomes imperative. We will guide you through the entire migration process, highlighting potential challenges and offering practical solutions to ensure a seamless transition. From initial planning and data mapping to execution and post-migration optimization, this comprehensive guide aims to equip you with the knowledge and tools needed for a successful Firebird to Oracle migration.

What is Firebird

Firebird is an advanced open-source relational database management system renowned for its efficiency and reliability. It supports a wide range of platforms, including Windows, Linux, and macOS, offering seamless cross-platform capabilities. With features like multi-generational architecture, SQL compliance, and robust security, Firebird caters to both small applications and large enterprise solutions. Its lightweight footprint and minimal maintenance requirements make it an attractive option for developers and businesses seeking a cost-effective yet powerful database solution. Dive into the capabilities of Firebird and discover why it remains a popular choice in the database community.

What is Oracle

Oracle is a leading relational database management system known for its robust performance, scalability, and comprehensive feature set. It provides unparalleled reliability and security, making it a preferred choice for enterprises handling large volumes of data and complex transactions. With support for advanced SQL features, PL/SQL programming, and extensive data analytics, Oracle enables organizations to optimize their data management and gain actionable insights. Its high availability and disaster recovery solutions ensure business continuity, while its integration capabilities allow seamless connectivity with various applications and systems. Discover how Oracle can transform your data infrastructure and drive your business forward.

Advantages of Firebird to Oracle Migration

Enhanced Security: Oracle offers advanced data encryption, access controls, and auditing capabilities.

Superior Performance Optimization: Oracle's query optimization and performance tuning tools ensure efficient data processing.

High Availability and Disaster Recovery: Oracle provides robust solutions to ensure business continuity and minimize downtime.

Scalability: Oracle supports seamless scalability to handle growing data volumes and transaction loads.

Comprehensive Analytics: Oracle's analytics and reporting tools offer deep insights for informed decision-making.

Broad Ecosystem and Support: Oracle's extensive ecosystem and support services provide access to expert resources and third-party integrations.

Method 1: Migrating Data from Firebird to Oracle Using the Manual Method

Data Export: Export data from Firebird using tools like isql or custom scripts to extract data in a suitable format.

Data Transformation: Transform the exported data to match Oracle's schema and data types, ensuring compatibility.

Schema Creation: Manually create the corresponding tables, indexes, and constraints in Oracle to mirror the Firebird schema.

Data Import: Use Oracle tools like SQL*Loader or direct SQL inserts to import the transformed data into Oracle.

Validation: Validate the migrated data by comparing record counts and sample data between Firebird and Oracle.

Testing: Perform thorough testing to ensure that the data migration is successful and that applications function correctly with the new Oracle database.
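
The "Data Transformation" and "Schema Creation" steps above largely come down to choosing an Oracle type for each Firebird column. The sketch below is an illustrative subset of such a mapping, with hypothetical function names; confirm every choice against your Firebird dialect and Oracle version. Note that Firebird DATE (dialect 3) holds only a date while Oracle DATE also stores a time, that Oracle VARCHAR2 lengths use byte semantics by default, and that types without an obvious equivalent (such as Firebird TIME) are deliberately left for a person to decide.

```python
import re

# Illustrative subset only: confirm against your Firebird dialect and Oracle version.
BASE_TYPE_MAP = {
    "SMALLINT": "NUMBER(5)",
    "INTEGER": "NUMBER(10)",
    "BIGINT": "NUMBER(19)",
    "FLOAT": "BINARY_FLOAT",
    "DOUBLE PRECISION": "BINARY_DOUBLE",
    "DATE": "DATE",  # Oracle DATE also stores a time of day
    "TIMESTAMP": "TIMESTAMP",
    "BLOB SUB_TYPE TEXT": "CLOB",
    "BLOB": "BLOB",
    "BOOLEAN": "NUMBER(1)",  # Oracle 23ai adds BOOLEAN; older releases lack it in SQL
}
# Types whose length/precision arguments carry over unchanged
PARAMETERIZED = {"VARCHAR": "VARCHAR2", "CHAR": "CHAR", "NUMERIC": "NUMBER", "DECIMAL": "NUMBER"}
TYPE_PATTERN = re.compile(r"^([A-Z_ ]+?)\s*(\(\s*\d+\s*(?:,\s*\d+\s*)?\))?$")


def to_oracle_type(firebird_type):
    """Translate one Firebird column type into a candidate Oracle type."""
    match = TYPE_PATTERN.match(firebird_type.strip().upper())
    if not match:
        raise ValueError(f"Unrecognized type {firebird_type!r}")
    base, args = match.group(1).strip(), (match.group(2) or "").replace(" ", "")
    if base in PARAMETERIZED:
        return PARAMETERIZED[base] + args
    if base in BASE_TYPE_MAP and not args:
        return BASE_TYPE_MAP[base]
    # e.g. TIME has no direct Oracle equivalent: leave the decision to a person
    raise ValueError(f"No automatic mapping for {firebird_type!r}")


def create_table_ddl(table, columns):
    """Build an Oracle CREATE TABLE statement from (name, firebird_type) pairs."""
    body = ",\n".join(f"  {name} {to_oracle_type(fb_type)}" for name, fb_type in columns)
    return f"CREATE TABLE {table} (\n{body}\n)"


print(create_table_ddl("customers", [("id", "INTEGER"), ("name", "VARCHAR(100)"),
                                     ("balance", "NUMERIC(18, 2)")]))
```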

Disadvantages of Migrating Data from Firebird to Oracle Using the Manual Method

High risk of errors due to extensive manual effort

Data transformation is difficult to achieve

Dependence on technical resources

No Automation

Limited Scalability

This work has to be repeated for every table

No automated error handling or notifications

No automated rollback in case of failure

No automated logging or way of tracking the amount of data transferred

No automated support for incremental loads (Change Data Capture)
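
To make "incremental load" concrete, here is a minimal sketch of the simplest variant, a timestamp high-water mark: each run copies only rows whose `updated_at` is newer than the last value seen. This is a swapped-in technique, not true log-based Change Data Capture; it assumes every change reliably updates `updated_at` (stored here as ISO 8601 text, which sorts correctly as strings) and it does not detect deletes. Two in-memory SQLite databases stand in for Firebird and Oracle, and all names are hypothetical.

```python
import sqlite3

SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"


def incremental_load(src, dst, watermark):
    """Copy rows changed after `watermark` from src to dst; return the new watermark."""
    rows = src.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # SQLite upsert; on Oracle the equivalent step would typically be a MERGE statement
    dst.executemany("INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)", rows)
    dst.commit()
    return rows[-1][2] if rows else watermark


src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (src, dst):
    conn.execute(SCHEMA)
src.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                [(1, "Ada", "2024-01-01T10:00:00"), (2, "Grace", "2024-01-01T11:00:00")])

mark = incremental_load(src, dst, "")  # first run behaves like a full load
src.execute("UPDATE customers SET name = 'Ada L.', updated_at = '2024-01-02T09:00:00' WHERE id = 1")
src.execute("INSERT INTO customers VALUES (3, 'Linus', '2024-01-02T10:00:00')")
mark = incremental_load(src, dst, mark)  # picks up only the two changed rows
print(mark, dst.execute("SELECT COUNT(*) FROM customers").fetchone()[0])
```

In practice the watermark would be persisted between runs, and rows committed late with an older timestamp can be missed, which is one reason log-based CDC exists.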

Method 2: Migrating Data from Firebird to Oracle Using ETL Tools

There are various advantages to using ETL tools:

Data Extraction: ETL tools can automatically extract data from Firebird using connectors or custom queries, simplifying the extraction process.

Data Transformation: The ETL process allows for automated transformation of data, including converting data types, cleaning, and restructuring data to match Oracle's schema.

Data Loading: The ETL tools efficiently load transformed data into Oracle, ensuring compatibility with the target database's structure.

Error Handling: Most ETL tools include error handling features, which automatically detect and log issues during migration, reducing the risk of data corruption.

Automation and Scheduling: ETL tools offer the ability to automate and schedule migration tasks, saving time and reducing manual intervention during the process.

Scalability and Efficiency: ETL tools are designed to handle large volumes of data, making them more scalable and efficient than manual methods, especially for ongoing migrations or large datasets.
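
The "Error Handling" point above usually means one bad record should not abort a whole batch. The sketch below shows that pattern in plain Python: each raw row is cast and cleaned, and rows that fail are collected with their line number and reason so they can be fixed and replayed. The field names and functions are hypothetical and not taken from any particular ETL tool.

```python
from decimal import Decimal, InvalidOperation


def transform(raw):
    """Cast and clean one raw record; raise ValueError or KeyError on bad data."""
    try:
        amount = Decimal(raw["amount"])
    except InvalidOperation:
        raise ValueError(f"bad amount {raw['amount']!r}") from None
    return {"id": int(raw["id"]), "name": raw["name"].strip(), "amount": amount}


def run_etl(raw_rows):
    """Transform every row, collecting failures instead of aborting the batch."""
    loaded, rejected = [], []
    for line_no, raw in enumerate(raw_rows, start=1):
        try:
            loaded.append(transform(raw))
        except (KeyError, ValueError) as exc:
            rejected.append((line_no, repr(exc)))  # keep enough context to fix and replay
    return loaded, rejected


raw_rows = [
    {"id": "1", "name": " Ada ", "amount": "10.50"},
    {"id": "x", "name": "Grace", "amount": "5"},
    {"id": "3", "name": "Linus", "amount": "abc"},
    {"id": "4", "name": "Ken"},
]
loaded, rejected = run_etl(raw_rows)
print(len(loaded), "loaded;", len(rejected), "rejected:", rejected)
```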

Challenges of Using ETL Tools for Data Migration:

Complex setup and configuration for on-premises deployments

Steep learning curve

Dependence on highly technical resources (data engineers) to do this kind of work

Cost

Scalability Issues

Limited Customization

Maintenance Overhead

Why Ask On Data is the Best Tool for Migrating Data from Firebird to Oracle

User-Friendly Interface: Ask On Data offers an intuitive interface that simplifies the migration process, making it easy for users of all skill levels.

Seamless Integration: The tool connects smoothly with both Firebird and Oracle, ensuring a hassle-free data transfer without complicated setups.

Automated Data Transformation: It automatically transforms and cleans your data, reducing the risk of errors and saving you time during migration.

Real-Time Monitoring: Ask On Data provides real-time monitoring of the migration process, allowing you to track progress and quickly address any issues.

Cost-Effective Solution: With a flexible pricing model, Ask On Data helps you manage migration costs without sacrificing quality or performance.

Using Ask On Data: A Chat-Based, AI-Powered Data Engineering Tool

Ask On Data is the world's first chat-based, AI-powered data engineering tool. It is available as a free open-source version and as a paid version. The open-source version can be downloaded from GitHub and deployed on your own servers, whereas the enterprise version lets you use Ask On Data as a managed service.

Advantages of using Ask On Data

Built using advanced AI and LLMs, so there is no learning curve.

Simply type instructions to perform the required tasks, such as cleaning, wrangling, transforming, and loading data

No dependence on technical resources

Super fast to implement (at the speed of typing)

No technical knowledge required to use

Below are the steps for the data migration:

Step 1: Connect to Firebird (the source).

Step 2: Connect to Oracle (the target).

Step 3: Create a new job. Select your source (Firebird) and choose the tables you would like to migrate.

Step 4 (OPTIONAL): If you would like to perform other tasks, such as data type conversion, data cleaning, transformations, or calculations, you can describe them in plain English. No knowledge of SQL, Python, Spark, etc. is required.

Step 5: Orchestrate/schedule the job. When scheduling, you can run it as a one-time load, a change data capture (CDC) load, or a truncate-and-load, among other options.

For more advanced users, Ask On Data also provides options to write SQL, edit YAML, write PySpark code, and more.

Other features, such as error logging, notifications, and monitoring, provide details like the amount of data transferred, the job logs, error information if a job did not run, and other monitoring data.

Trying Ask On Data

You can reach out to us at [email protected] for a demo, POC, discussion, or pricing information. You can use our managed services, or you can download our community edition from GitHub and install it on your own servers.


Leveraging Oracle SQL Developer Tools for Streamlined Database Management

In the world of database management, efficiency and performance are paramount. Oracle SQL Developer tools have long been a staple for database administrators, developers, and IT professionals looking to optimize database environments. Whether you are managing a large-scale Oracle Database or integrating with Oracle E-Business Suite (EBS), having the right tools at your disposal can dramatically improve workflow efficiency and data management capabilities.

This blog will explore the powerful features of Oracle SQL Developer, focusing on its role in managing databases and supporting Oracle E-Business Suite environments. We’ll cover essential features, best practices, and how SQL Developer can help streamline database management tasks in your organization.

What is Oracle SQL Developer?

Oracle SQL Developer is an integrated development environment (IDE) provided by Oracle that allows database developers, administrators, and users to manage Oracle databases with ease. This free tool is designed to simplify database tasks like querying, reporting, and database management. SQL Developer supports both Oracle and non-Oracle databases, making it a versatile option for database administration.

The tool offers a graphical interface for interacting with databases, allowing users to run SQL queries, manage database objects, generate reports, and analyze execution plans. Because Oracle E-Business Suite runs on an Oracle Database, the same environment can also be used to work with EBS data, providing a cohesive way to manage enterprise-level applications.

Key Features of Oracle SQL Developer

SQL Querying and Development: SQL Developer’s query editor offers syntax highlighting, code completion, and auto-formatting, making it easier to write and execute SQL queries. For those working with Oracle E-Business Suite, it’s essential to be able to query databases efficiently for insights into financials, supply chain management, HR, and more.

Database Management: SQL Developer makes managing database objects a breeze. It provides a tree-like view of database structures, allowing users to easily navigate and modify tables, views, and stored procedures. It’s an invaluable tool for administrators managing complex Oracle Database environments, especially when supporting Oracle E-Business Suite.

Database Migration: SQL Developer supports moving data between Oracle databases and migrating data from non-Oracle systems into Oracle. For businesses using Oracle EBS, migrating data between versions or to cloud environments becomes more straightforward. This feature is particularly useful during cloud migrations, although a transition without data loss still depends on careful planning and validation.

Reports and Analytics: With built-in reporting tools, SQL Developer allows users to generate detailed reports from SQL queries. This is highly beneficial for administrators and managers needing insights from Oracle E-Business Suite data to make informed decisions in areas like finance, operations, and HR.

PL/SQL Debugging: Debugging PL/SQL code is essential for identifying and fixing issues in custom applications or reports developed for Oracle E-Business Suite. SQL Developer’s debugging tools allow you to step through code, inspect variables, and resolve issues efficiently.

Version Control Integration: For teams collaborating on database development and modification, SQL Developer supports version control integration with systems like Git. This ensures that changes to Oracle E-Business Suite customizations and configurations can be tracked, backed up, and reverted if necessary.

How Oracle SQL Developer Enhances Oracle E-Business Suite Management

Oracle E-Business Suite (EBS) consists of several interconnected applications for managing critical business functions such as finance, supply chain, HR, and procurement. EBS uses Oracle Databases to store vast amounts of transactional and operational data. To ensure optimal performance and reliability, it’s vital to manage and query the database effectively.

Oracle SQL Developer simplifies the administration of Oracle EBS databases in the following ways:

Efficient Querying of EBS Data: Oracle SQL Developer allows database administrators to easily query and analyze the vast amount of data stored in Oracle EBS. Whether it’s pulling financial reports, analyzing inventory levels, or reviewing HR data, SQL Developer makes it easy to access and manipulate the data stored in Oracle databases, ensuring that businesses can make informed decisions.

Customizing EBS Reports: Oracle EBS allows businesses to tailor reports to their specific needs, but this requires SQL and PL/SQL knowledge. SQL Developer’s rich editing and debugging tools make it easier to create, troubleshoot, and fine-tune these reports, enabling organizations to extract the exact data they need.

Database Performance Tuning: Performance optimization is crucial for ensuring Oracle EBS runs smoothly. SQL Developer helps administrators analyze query execution plans, identify slow-performing queries, and optimize database operations. This is particularly important when dealing with large Oracle EBS environments, as inefficient queries can impact performance across the entire system.

Data Migration for EBS Upgrades: When businesses need to upgrade Oracle EBS or migrate data to a new instance, SQL Developer offers tools to automate and streamline the data migration process. This ensures that critical EBS data is safely transferred without disruptions to business operations.

Integration with Oracle Cloud: As more businesses adopt Oracle Cloud Infrastructure (OCI), SQL Developer provides seamless integration with cloud environments, enabling EBS users to manage both on-premise and cloud-based Oracle databases. This integration is essential for businesses transitioning to hybrid cloud environments and needing to maintain compatibility between legacy on-premise systems and modern cloud applications.
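
The performance-tuning point above rests on reading execution plans. SQL Developer's Explain Plan feature displays the information that Oracle's `EXPLAIN PLAN` statement produces; in a worksheet you can also run `EXPLAIN PLAN FOR <query>` followed by `SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY)` to read the plan as text. To keep the sketch below runnable without an Oracle instance, it swaps in SQLite's `EXPLAIN QUERY PLAN` through Python's built-in `sqlite3` module. It demonstrates the same idea, not Oracle's output format: a filter on an unindexed column produces a full scan, and after an index is added the plan switches to an index search. The table, column, and index names are hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable steps of SQLite's EXPLAIN QUERY PLAN for `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the step description


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
query = "SELECT * FROM orders WHERE customer_id = ?"

before = query_plan(conn, query, (42,))  # no index on customer_id yet: full scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, query, (42,))  # the optimizer can now search the index

print("before:", before)
print("after: ", after)
```

The workflow carries over to Oracle: capture the plan, look for full scans on large tables, change the query or indexing, and capture the plan again to confirm the optimizer's choice changed.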

Best Practices for Using Oracle SQL Developer

To get the most out of Oracle SQL Developer, it’s important to adopt best practices for usage:

Use Version Control for EBS Customizations: When customizing Oracle EBS or creating custom reports, make sure to use version control systems to track changes. This practice helps prevent errors and enables the team to revert to previous versions if needed.

Regularly Monitor and Optimize Database Performance: Use SQL Developer’s tools to regularly monitor your Oracle EBS database performance. Identify long-running queries or inefficient processes and optimize them to ensure the system runs smoothly.

Automate Routine Tasks: SQL Developer supports automation through scripts. Automate repetitive tasks like data imports, backups, and report generation to improve efficiency and reduce manual intervention.

Ensure Consistent Backups: Always ensure that your Oracle EBS database is backed up regularly. SQL Developer can help automate backups and recovery processes, minimizing downtime in case of failures.

Leverage Community Resources: SQL Developer has a vibrant user community and extensive documentation. If you run into issues or need help with specific Oracle EBS queries, tap into the community for advice and troubleshooting tips.

Conclusion

Oracle SQL Developer is an indispensable tool for database administrators and developers working with Oracle E-Business Suite. By simplifying the management of Oracle databases, streamlining querying processes, and supporting data migration and integration with cloud environments, SQL Developer makes managing large-scale Oracle EBS systems easier than ever.

By adopting best practices and leveraging SQL Developer’s extensive features, organizations can optimize their Oracle E-Business Suite environments, improve performance, and ensure smooth operations. Whether you’re troubleshooting complex issues, customizing reports, or migrating data to the cloud, SQL Developer empowers teams to work more efficiently and effectively.


Presenting Spanner Graph: A Reimagining of Graph Databases

Spanner Graph

As the use of AI continues to grow, operational databases provide the foundation for building enterprise AI applications that are accurate, relevant, and grounded in enterprise truth. At Google Cloud, the team strives to provide the best databases for building and running AI applications. With that in mind, they are introducing Spanner Graph, vector search, and advanced full-text search, new features that make it simpler to build effective generative AI products.

With the release of Bigtable SQL and Bigtable distributed counters, the team is also revolutionising the developer experience by simplifying the process of building apps at scale. Lastly, they are announcing significant updates that help customers modernise their data estates by supporting traditional enterprise workloads such as SQL Server and Oracle. Now let's get started!

Using a graph database can be a valuable, albeit complex, approach for enterprises to gain insights from their connected data so they can create more intelligent applications. Google is excited to present Spanner Graph today, a ground-breaking solution that combines Spanner, Google’s constantly available, globally consistent database, with capabilities specifically designed for graph databases.

Graphs are a natural way to show relationships in data, which makes them useful for analysing data that is related, finding hidden patterns, and enabling applications that depend on connection knowledge. There are several applications for graphs, including route planning, customer 360, fraud detection, recommendation engines, network security, knowledge graphs, and data lineage tracing.

But implementing separate graph databases to handle these use cases frequently comes with the following drawbacks:

Data fragmentation and operational overhead: Separate graph database maintenance frequently results in data silos, added complexity, and inconsistent data copies, all of which make it more difficult to conduct effective analysis and decision-making.

Bottlenecks in scalability and availability: As data volumes and complexity grow, many standalone graph databases struggle to meet the scalability and availability demands of mission-critical applications, which can impede business growth.

Ecosystem friction and skill gaps: Organisations with significant investments in SQL infrastructure and expertise may find it harder to adopt an entirely new graph paradigm. Doing so requires additional resources and training, which can divert attention from other pressing business needs.

With virtually unlimited scalability, Spanner Graph reinvents graph data management by delivering a unified database that seamlessly merges relational, search, graph, and AI capabilities. Spanner Graph provides you with:

Native graph experience: Based on open standards, the ISO Graph Query Language (GQL) interface provides a simple and clear method for matching patterns and navigating relationships.

Unified relational and graph models: Complete GQL and SQL interoperability eliminates data silos and gives developers the freedom to select the best tool for each given query. Data from tables and graphs can be tightly integrated to reduce operational overhead and the requirement for expensive, time-consuming data transfers.

Built-in search features: Graph data may be efficiently retrieved using keywords and semantic meaning thanks to rich vector and full-text search features.

Industry-leading scalability, availability, and consistency: You can rely on Spanner's renowned scalability, availability, and consistency to deliver reliable data foundations.

AI-powered insights: By integrating deeply with Vertex AI, Spanner Graph gains direct access to a robust set of AI models, which speeds up AI workflows.

Let’s examine more closely what makes Spanner Graph special

Spanner Graph provides a familiar and flexible graph database interface. It supports ISO GQL, the latest international standard for graph databases, which offers a clear and simple way to match patterns, navigate relationships, and filter results in graph data, making it easier to uncover hidden links and insights.

Spanner Graph works well with both full-text and vector search. Combined, they let you use search to locate graph contents and GQL to navigate relationships within graph structures. More specifically, you can use full-text search to find nodes or edges that contain particular keywords, or vector search to find nodes or edges based on semantic meaning. From these starting points, GQL lets you easily explore the rest of the graph. By combining these complementary strategies, this unified capability helps you find hidden connections, patterns, and insights that would be hard to uncover with any single method.
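To make the search-then-traverse idea concrete, here is a minimal Python sketch of the general pattern, not of Spanner Graph itself: a naive keyword match stands in for full-text search and picks the starting nodes, and a breadth-first traversal stands in for a GQL path query. The node IDs, the `NODES`/`EDGES` structures, and the `keyword_seeds`/`expand` helpers are all hypothetical.

```python
from collections import deque

# Hypothetical in-memory property graph (NOT the Spanner Graph API):
# node id -> properties, plus directed adjacency lists for edges.
NODES = {
    "a1": {"label": "Account", "text": "Checking account opened in Berlin"},
    "a2": {"label": "Account", "text": "Savings account"},
    "p1": {"label": "Person", "text": "Alice, software engineer"},
    "p2": {"label": "Person", "text": "Bob, accountant in Berlin"},
}
EDGES = {"p1": ["a1"], "p2": ["a2", "p1"], "a1": [], "a2": []}


def keyword_seeds(keyword):
    """Search step: naive case-insensitive substring match on node text."""
    kw = keyword.lower()
    return sorted(n for n, props in NODES.items() if kw in props["text"].lower())


def expand(seeds, max_hops):
    """Traversal step: breadth-first search up to max_hops from the seed nodes."""
    seen = set(seeds)
    frontier = deque((s, 0) for s in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not walk past the hop limit
        for nxt in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return sorted(seen)


seeds = keyword_seeds("berlin")
print(seeds)                      # ['a1', 'p2']
print(expand(seeds, max_hops=1))  # ['a1', 'a2', 'p1', 'p2']
```

In Spanner Graph the traversal half would be written as a GQL `MATCH` pattern and the search half would use its built-in full-text or vector search; the sketch only shows why combining the two narrows where exploration starts.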

Spanner Graph provides industry-leading consistency and availability while scaling beyond trillions of edges. Because it inherits Spanner's virtually unlimited scalability, industry-leading availability, and global consistency, it is an excellent choice for even the most critical graph applications. Specifically, Spanner's transparent sharding lets it use massively parallel query processing and scale elastically to very large data sets without any involvement on your part.

Spanner Graph's tight integration with Vertex AI, Google Cloud's unified, fully managed AI development platform, speeds up your AI workflows. From the Spanner Graph schema and queries, you can directly access Vertex AI's large collection of generative and predictive models. For instance, you can use LLMs to generate text embeddings for graph nodes and edges to enrich your graph, and then use vector search to retrieve data from the graph in semantic space.
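The retrieval half of that workflow is nearest-neighbour search over embeddings. The sketch below shows the idea in plain Python with brute-force cosine similarity. The three-dimensional vectors and item names are made up for illustration; real embeddings typically have hundreds of dimensions, and a production vector search may use approximate indexes rather than a full scan.

```python
import math

# Hypothetical toy embeddings; a real system would obtain these from an
# embedding model (for example, one served through Vertex AI).
EMBEDDINGS = {
    "laptop": [0.9, 0.1, 0.0],
    "notebook computer": [0.85, 0.15, 0.05],
    "banana": [0.0, 0.2, 0.95],
}


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def top_k(query_vec, k):
    """Exact (brute-force) nearest-neighbour search by cosine similarity."""
    ranked = sorted(
        EMBEDDINGS.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


print(top_k([1.0, 0.0, 0.0], k=2))  # ['laptop', 'notebook computer']
```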

You may create more intelligent applications with Spanner Graph

With practically limitless scalability, Spanner Graph effortlessly combines graph, relational, search, and AI capabilities, creating a plethora of opportunities:

Product recommendations: Spanner Graph represents the intricate interactions between people, products, and preferences as a context-rich knowledge network. By combining full-text search with fast graph traversal, you can recommend products based on user searches, past purchases, preferences, and similarity to other users.

Financial fraud detection: When financial entities such as accounts, transactions, and people are represented naturally in Spanner Graph, suspicious connections are easier to spot, while vector search finds similar patterns and anomalies in the embedding space. By combining these technologies, financial institutions can identify potential threats promptly and accurately and minimise financial losses.

Social networks: Even in the largest social networks, Spanner Graph naturally models individuals, groups, interests, and interactions. It enables quick identification of patterns such as overlapping group memberships, mutual friends, or shared interests for personalised recommendations. With the integrated full-text search feature, users can quickly find people, groups, posts, or specific topics using natural-language searches.

Gaming: Game environments are naturally made up of player characters, objects, places, and the connections between them. Spanner Graph enables efficient link traversal, which is crucial for game features such as pathfinding, inventory management, and social interactions. Furthermore, even during peak usage, Spanner Graph's global consistency and scalability help ensure a smooth and fair experience for every player.

Network security: Recognising trends and anomalies requires understanding the relationships between individuals, devices, and events over time. Thanks to Spanner Graph's relational and graph interoperability, security experts can use graph capabilities to trace the origins of attacks, assess the impact of security breaches, and correlate these findings with temporal trends for proactive threat detection and mitigation.

GraphRAG: By utilising a knowledge graph to anchor foundation models, Spanner Graph elevates Retrieval Augmented Generation (RAG) to a new level. Furthermore, Spanner Graph’s integration of tabular and graph data enhances your AI applications by providing contextual information that neither format could provide on its own. It is capable of handling even the largest knowledge graphs due to its unparalleled scalability. Your GenAI workflows are streamlined with integrated Vertex AI and built-in vector search.
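Two of the use cases above, product recommendations and fraud detection, boil down to short graph walks. The plain-Python sketch below illustrates those general techniques rather than Spanner Graph or GQL: a two-hop "customers who bought this also bought" traversal, and grouping accounts that are connected through shared devices, a common fraud-ring signal. All data and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical sample data: user -> products bought, account -> devices used.
PURCHASES = {
    "u1": {"tent", "stove"},
    "u2": {"tent", "stove", "lantern"},
    "u3": {"tent", "lantern", "kayak"},
}
LOGINS = {
    "acct1": {"dev-A"},
    "acct2": {"dev-A", "dev-B"},
    "acct3": {"dev-B"},
    "acct4": {"dev-C"},
}


def recommend(user, k=2):
    """Two-hop walk: user -> shared products -> similar users -> their other products."""
    mine = PURCHASES[user]
    scores = Counter()
    for other, theirs in PURCHASES.items():
        if other == user:
            continue
        overlap = len(mine & theirs)  # number of shared purchases = similarity weight
        if overlap == 0:
            continue
        for product in theirs - mine:
            scores[product] += overlap
    # Highest score first; ties broken alphabetically for deterministic output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [product for product, _ in ranked[:k]]


def linked_accounts(logins):
    """Group accounts connected through any chain of shared devices."""
    accounts_by_device = defaultdict(set)
    for acct, devices in logins.items():
        for device in devices:
            accounts_by_device[device].add(acct)
    groups, assigned = [], set()
    for start in sorted(logins):
        if start in assigned:
            continue
        stack, group = [start], set()
        while stack:  # depth-first walk over account-device-account edges
            acct = stack.pop()
            if acct in group:
                continue
            group.add(acct)
            for device in logins[acct]:
                stack.extend(accounts_by_device[device] - group)
        assigned |= group
        groups.append(sorted(group))
    return groups


print(recommend("u1"))          # ['lantern', 'kayak']
print(linked_accounts(LOGINS))  # [['acct1', 'acct2', 'acct3'], ['acct4']]
```

Groups with more than one account are not proof of fraud; they are candidates for review, which is where the vector-based anomaly signals described above would add context.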

Read more on govindhtech.com


Newt Global’s Expertise in Oracle to GCP PostgreSQL Migration

Understanding the Need for Migration

Businesses often face challenges with high licensing costs and limited scalability when using Oracle databases. GCP PostgreSQL provides an open-source alternative that is cost-effective and scalable. It also integrates seamlessly with GCP's suite of services, enabling enhanced analytics and machine learning capabilities.

Essential Tools to Migrate Oracle to GCP PostgreSQL: Streamlining Data Transfer and Schema Conversion

Several tools facilitate the migration process from Oracle to GCP PostgreSQL. These tools streamline data transfer and schema conversion and help minimize downtime.

Google Database Migration Service (DMS)

Functionality: DMS provides a managed service for migrating databases to GCP with minimal downtime.

Advantages: Automated management of the migration process, high availability, and continuous data replication.

Ora2Pg

Functionality: An open-source tool that converts Oracle schemas to PostgreSQL-compatible schemas.

Advantages: Comprehensive schema conversion, support for PL/SQL to PL/pgSQL transformation, and data migration capabilities.

Schema Conversion Tool (SCT)

Functionality: A tool by AWS that can also be used for schema conversion to PostgreSQL.

Advantages: Detailed analysis and conversion of database schema, stored procedures, and functions.

Google Cloud SQL

Functionality: Managed database service that supports PostgreSQL.

Advantages: Simplifies database administration tasks, provides automated backups, and ensures high availability.

How Newt Global Facilitates Migration

Newt Global, a leading cloud transformation company, specializes in database migration services. Their expertise in Oracle to GCP PostgreSQL migration ensures a smooth transition with minimal disruption to business operations. Here’s how Newt Global can assist:

Expert Assessment and Planning

Customized Assessment: Newt Global conducts a thorough assessment of your Oracle databases, identifying dependencies and potential challenges.

Tailored Planning: They develop a detailed migration plan tailored to your business needs, ensuring a seamless transition.

Efficient Schema Conversion

Advanced Tools: Utilizing tools like Ora2Pg and custom scripts, Newt Global ensures accurate schema conversion.

Manual Optimization: Their experts manually fine-tune complex objects and stored procedures, ensuring optimal performance in PostgreSQL.

Data Migration with Minimal Downtime

Robust Data Transfer: Using Google DMS, Newt Global ensures secure and efficient data transfer from Oracle to PostgreSQL.

Continuous Replication: They set up continuous data replication to ensure the latest data is always available during the migration process.

Comprehensive Testing and Validation

Data Integrity Verification: Newt Global performs extensive data integrity checks to ensure data consistency.

Application and Performance Testing: They conduct thorough application and performance testing, ensuring your systems function correctly post-migration.

Post-Migration Optimization and Support

Performance Tuning: Newt Global provides ongoing performance tuning and optimization services.

24/7 Support: Their support team is available around the clock to address any issues and ensure smooth operations.

Migration Process

Assessment and Planning

Inventory Assessment: Identify the Oracle databases and their dependencies.

Compatibility Check: Evaluate the compatibility of Oracle features with PostgreSQL.

Planning: Develop a detailed migration plan, including timelines, resource allocation, and risk mitigation procedures.

Schema Conversion

Using Ora2Pg: Convert Oracle schema objects (tables, indexes, triggers) to PostgreSQL.

Manual Adjustments: Review and manually adjust any complex objects or stored procedures that require fine-tuning.

Data Migration

Initial Data Load: Use tools like DMS to perform the initial data load from Oracle to PostgreSQL.

Continuous Data Replication: Set up continuous replication to ensure that changes in the Oracle database are mirrored in PostgreSQL until the cutover.

Testing and Validation

Data Integrity: Validate data integrity by comparing data between Oracle and PostgreSQL.

Application Testing: Ensure that applications interacting with the database function correctly.

Performance Testing: Conduct performance testing to ensure that the PostgreSQL database meets the required performance benchmarks.
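One possible way to implement the data-integrity comparison is to compute a row count and an order-independent checksum for the same table in both databases and compare them. The sketch below assumes the rows have already been fetched from Oracle and PostgreSQL as lists of tuples (fetching is not shown), and `table_fingerprint`/`compare` are hypothetical helpers. Its plain `str()` normalisation is a simplification: numeric scale or timestamp formatting can differ between drivers, so a real check needs type-aware normalisation.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, order-independent checksum) for an iterable of row tuples."""
    count, checksum = 0, 0
    for row in rows:
        # Normalise values to text; None and "" both become "" because Oracle
        # stores empty strings as NULL. Length prefixes avoid separator collisions.
        parts = ["" if v is None else str(v) for v in row]
        encoded = "".join(f"{len(p)}:{p}" for p in parts).encode("utf-8")
        row_hash = int.from_bytes(hashlib.sha256(encoded).digest()[:8], "big")
        checksum = (checksum + row_hash) % 2**64  # summing ignores row order
        count += 1
    return count, checksum


def compare(source_rows, target_rows):
    """Compare row counts and checksums of the same table fetched from both databases."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    return {"count_match": src_count == tgt_count, "checksum_match": src_sum == tgt_sum}


oracle_rows = [(1, "Alice", None), (2, "Bob", "x")]
postgres_rows = [(2, "Bob", "x"), (1, "Alice", "")]
print(compare(oracle_rows, postgres_rows))  # {'count_match': True, 'checksum_match': True}
```

Summing per-row hashes (rather than XOR-ing them) keeps duplicate rows from cancelling each other out.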

Cutover and Optimization

Final Synchronization: Perform a final synchronization of data before the cutover.

Switch Over: Redirect applications to the new PostgreSQL database.

Optimization: Optimize the PostgreSQL database for performance, including indexing and query tuning.

Expertly Migrate Oracle to GCP PostgreSQL: Newt Global's Comprehensive Services

Migrating from Oracle to GCP PostgreSQL can unlock significant cost savings, scalability, and advanced analytics capabilities for your organization. Leveraging tools like Google DMS, Ora2Pg, and Cloud SQL, along with a structured migration process, ensures a seamless transition. The future of your database infrastructure on GCP PostgreSQL promises enhanced performance, integration with cutting-edge GCP services, and robust security and compliance measures.

Newt Global's expertise in database migration further ensures that your transition is smooth and efficient. Their tailored assessment and planning, advanced schema conversion, and comprehensive testing and validation processes help mitigate risks and minimize downtime. Post-migration, Newt Global offers performance tuning, ongoing support, and optimization services to ensure your PostgreSQL environment operates at peak efficiency.

By partnering with Newt Global, you gain access to a team of experts dedicated to making your migration journey as seamless as possible. This empowers you to focus on leveraging the modern capabilities of GCP PostgreSQL to drive business insights and development. Embrace the future of cloud-based database solutions with confidence, knowing that Newt Global is there to support every step of your migration journey. Thanks For Reading

For More Information, Visit Our Website: https://newtglobal.com/


Skills required in IT Sector

The IT sector is broad and diverse, so the skills required can vary depending on the specific role. However, here are some fundamental skills and competencies that are valuable across many IT positions:

**Technical Skills**:

- **Programming Languages**: Knowledge of languages such as Python, Java, C++, JavaScript, and SQL.

- **Database Management**: Understanding of database systems like MySQL, PostgreSQL, MongoDB, and Oracle.

- **Networking**: Familiarity with network protocols, configuration, and security (e.g., TCP/IP, DNS, VPNs).

- **Operating Systems**: Proficiency in Windows, Linux, and macOS.

- **Cloud Computing**: Experience with cloud platforms like AWS, Azure, and Google Cloud.

- **Cybersecurity**: Knowledge of security practices, threat assessment, and mitigation techniques.

**Analytical Skills**:

- **Problem-Solving**: Ability to troubleshoot and resolve technical issues efficiently.

- **Data Analysis**: Skills in analyzing and interpreting data to make informed decisions.

**Soft Skills**:

- **Communication**: Clear communication with team members, stakeholders, and clients, both written and verbal.

- **Teamwork**: Ability to work effectively in a team environment, often across different departments.

- **Project Management**: Organizational skills to manage projects, including planning, executing, and monitoring.

**Adaptability**:

- **Learning Agility**: Willingness and ability to quickly learn new technologies and adapt to changes in the IT landscape.

**Technical Support**:

- **Customer Service**: Skills to provide support and assistance to end-users, understanding their needs and resolving issues.

**Software Development**:

- **Development Frameworks**: Familiarity with frameworks and libraries relevant to the job, such as React for web development or TensorFlow for machine learning.

**System Administration**:

- **Server Management**: Knowledge of setting up, configuring, and maintaining servers and other IT infrastructure.

**Project Management Tools**:

- **Familiarity with Tools**: Experience with tools like Jira, Trello, or Asana for managing tasks and projects.

These skills can be developed through formal education, certifications, hands-on experience, and continuous learning.

TCCI Computer Classes provides the best training in all computer courses, online and offline, through various learning methods and media, at its centers in Bopal and on ISCON Ambli Road in Ahmedabad.

For More Information:

Call us @ +91 98256 18292

Visit us @ http://tccicomputercoaching.com/


Comprehensive Guide for Oracle to PostgreSQL Migration at Quadrant

Migrating from Oracle to PostgreSQL at Quadrant is a multi-faceted process involving meticulous planning, schema conversion, data migration, and thorough testing. This guide offers a detailed step-by-step approach to ensure a smooth and efficient transition.

Phase 1: Pre-Migration Assessment

Inventory of Database Objects:

Start by cataloging all objects in your Oracle database, including tables, views, indexes, triggers, sequences, procedures, functions, packages, and synonyms. This comprehensive inventory will help you scope the migration accurately.

Analysis of SQL and PL/SQL Code:

Review all SQL queries and PL/SQL code for Oracle-specific features and syntax. This step is crucial for planning necessary modifications and ensuring compatibility with PostgreSQL.

Phase 2: Schema Conversion

Data Type Mapping:

Oracle and PostgreSQL have different data types. Here are some common mappings:

| Oracle Data Type | PostgreSQL Data Type |
|---|---|
| NUMBER | NUMERIC |
| VARCHAR2, NVARCHAR2 | VARCHAR |
| DATE | TIMESTAMP |
| CLOB | TEXT |
| BLOB | BYTEA |
| RAW | BYTEA |
| TIMESTAMP WITH TIME ZONE | TIMESTAMPTZ |
| TIMESTAMP | TIMESTAMP |
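The mapping above can be applied mechanically to column definitions. The sketch below is a hypothetical helper, not part of ora2pg or any other tool: it handles only the types in the table, keeps length and precision for `VARCHAR` and `NUMERIC`, strips Oracle's `BYTE`/`CHAR` length semantics, and raises an error for anything else. Forms such as `TIMESTAMP(6) WITH TIME ZONE` are not parsed. Dedicated tools apply many more rules (for example, they may choose integer types for `NUMBER` columns without a decimal scale).

```python
import re

# Base-type mapping taken from the table above.
BASE_TYPE_MAP = {
    "NUMBER": "NUMERIC",
    "VARCHAR2": "VARCHAR",
    "NVARCHAR2": "VARCHAR",
    "DATE": "TIMESTAMP",
    "CLOB": "TEXT",
    "BLOB": "BYTEA",
    "RAW": "BYTEA",
    "TIMESTAMP WITH TIME ZONE": "TIMESTAMPTZ",
    "TIMESTAMP": "TIMESTAMP",
}

# Matches "NAME" or "NAME(args)", e.g. "VARCHAR2(50)" or "NUMBER(10, 2)".
TYPE_PATTERN = re.compile(r"^\s*([A-Za-z0-9 ]+?)\s*(?:\(([^)]*)\))?\s*$")


def map_type(oracle_type):
    """Translate one Oracle column type declaration to a PostgreSQL type."""
    match = TYPE_PATTERN.match(oracle_type)
    if not match:
        raise ValueError(f"cannot parse type: {oracle_type!r}")
    base = " ".join(match.group(1).upper().split())
    args = match.group(2)
    if base not in BASE_TYPE_MAP:
        raise ValueError(f"no mapping for Oracle type: {oracle_type!r}")
    pg_type = BASE_TYPE_MAP[base]
    # Keep precision/length only where the PostgreSQL type accepts it; BYTEA and
    # TEXT take no length, so RAW(16) simply becomes BYTEA.
    if args and pg_type in ("NUMERIC", "VARCHAR"):
        args = re.sub(r"\s*(?:BYTE|CHAR)\s*$", "", args, flags=re.IGNORECASE)
        return f"{pg_type}({args.replace(' ', '')})"
    return pg_type


for t in ["NUMBER(10, 2)", "VARCHAR2(100 CHAR)", "DATE", "RAW(16)"]:
    print(t, "->", map_type(t))
# NUMBER(10, 2) -> NUMERIC(10,2)
# VARCHAR2(100 CHAR) -> VARCHAR(100)
# DATE -> TIMESTAMP
# RAW(16) -> BYTEA
```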

Tools for Schema Conversion:

Utilize tools designed to facilitate schema conversion at Quadrant:

ora2pg: A robust open-source tool specifically for Oracle to PostgreSQL migration.

SQL Developer Migration Workbench: An Oracle tool to aid database migrations, designed primarily for migrating other databases into Oracle.

pgloader: Capable of both schema and data migration.

Update Connection Strings:

Modify your application’s database connection strings to point to the PostgreSQL database. This involves updating configuration files, environment variables, or code where connection strings are defined.
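If the application reads a single connection URL, one option is to assemble it from the standard libpq environment variables (`PGUSER`, `PGPASSWORD`, `PGHOST`, `PGPORT`, `PGDATABASE`). The sketch below assumes those variable names and the `localhost`/`5432` defaults, and `postgres_url` is a hypothetical helper. Drivers built on libpq can also read these variables directly, in which case no URL is needed. Avoid logging the resulting URL, since it contains the password.

```python
import os
from urllib.parse import quote


def postgres_url(env=None):
    """Build a postgresql:// URI from libpq-style environment variables."""
    env = os.environ if env is None else env
    # Percent-encode credentials so characters like '@' or ':' don't break the URI.
    user = quote(env["PGUSER"], safe="")
    password = quote(env["PGPASSWORD"], safe="")
    host = env.get("PGHOST", "localhost")
    port = env.get("PGPORT", "5432")
    dbname = quote(env["PGDATABASE"], safe="")
    return f"postgresql://{user}:{password}@{host}:{port}/{dbname}"


example_env = {"PGUSER": "app", "PGPASSWORD": "p@ss:word", "PGDATABASE": "sales"}
print(postgres_url(example_env))  # postgresql://app:p%40ss%3Aword@localhost:5432/sales
```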

Modify SQL Queries:

Review and adjust SQL queries to ensure compatibility with PostgreSQL. Replace Oracle-specific functions with PostgreSQL equivalents, handle case sensitivity, and rewrite joins and subqueries as needed.
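A few Oracle-specific constructs have direct PostgreSQL counterparts: `NVL` can usually become `COALESCE`, `SYSDATE` can often become `LOCALTIMESTAMP` (note that PostgreSQL returns the transaction start time, whereas `SYSDATE` reflects the time of statement execution), and `FROM DUAL` can be dropped because PostgreSQL allows `SELECT` without a `FROM` clause. The regex sketch below applies only these three rules. Because it is text-based, it would also rewrite matches inside string literals or comments, so treat it as a way to flag candidates for review rather than as a converter. The `RULES` list and `rewrite` function are hypothetical.

```python
import re

# Illustrative subset of Oracle -> PostgreSQL rewrites: (pattern, replacement).
RULES = [
    (re.compile(r"\bNVL\s*\(", re.IGNORECASE), "COALESCE("),
    (re.compile(r"\bSYSDATE\b", re.IGNORECASE), "LOCALTIMESTAMP"),
    (re.compile(r"\s+FROM\s+DUAL\b", re.IGNORECASE), ""),
]


def rewrite(sql):
    """Apply each rule in order to a single SQL statement."""
    for pattern, replacement in RULES:
        sql = pattern.sub(replacement, sql)
    return sql


print(rewrite("SELECT NVL(bonus, 0), SYSDATE FROM dual"))
# SELECT COALESCE(bonus, 0), LOCALTIMESTAMP
```

Word boundaries (`\b`) keep identifiers such as `sysdate_col` or `dual_table` from being rewritten.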

Rewrite PL/SQL Code:

Rewrite Oracle PL/SQL code (procedures, functions, packages) in PostgreSQL’s procedural language, PL/pgSQL. Adapt the code to accommodate syntax and functionality differences.

Phase 5: Testing

Functional Testing:

Conduct thorough functional testing to ensure that all application features work correctly with the PostgreSQL database. This includes testing all CRUD operations and business logic.

Performance Testing:

Compare the performance of your application on PostgreSQL against its performance on Oracle. Identify and optimize any slow queries or processes.

Data Integrity Testing:

Verify the accuracy of data post-migration by checking for data loss, corruption, and ensuring the integrity of relationships and constraints.

Phase 6: Cutover

Final Backup:

Take a final backup of the Oracle database before the cutover to ensure you have a fallback option in case of any issues.

Final Data Sync:

Perform a final incremental data sync to capture any changes made during the migration process.

Go Live:

Switch your application to use the PostgreSQL database. Ensure that all application components are pointing to the new database and that all services are operational.

Additional Resources

Official Documentation:

Refer to the official documentation of migration tools (ora2pg, pgloader, PostgreSQL) for detailed usage instructions and options.

Community and Support:

Engage with community forums, Q&A sites, and professional support for assistance during migration. The PostgreSQL community is active and can provide valuable help.

Conclusion

Migrating from Oracle to PostgreSQL requires careful planning, thorough testing, and methodical execution. By following this guide, you can systematically convert your Oracle schema, migrate your data, and update your application to work seamlessly with PostgreSQL. This transition will allow you to leverage PostgreSQL’s open-source benefits, advanced features, and robust community support.

For more detailed guidance and practical examples, explore our in-depth migration guide from Oracle to PostgreSQL. This resource provides valuable insights and tips to facilitate your migration journey.


Oracle/SQL Server Training in Mohali Unlocks Your Database Opportunities

In the digital age, data rules, driving innovation, efficiency, and competitive advantage across industries. As organizations harness the power of data to make informed decisions and streamline operations, demand for skilled professionals proficient in database management technologies such as Oracle and SQL Server continues to soar. In the vibrant city of Mohali, aspiring individuals and seasoned professionals alike are seizing the opportunity to build their expertise through specialized training programs tailored to the evolving needs of the industry.

Oracle/SQL Server training in Mohali offers a comprehensive curriculum designed to equip participants with the knowledge, skills, and hands-on experience needed to excel in database administration, development, and optimization. With a focus on practical, real-world applications, these training programs bridge the gap between theory and practice, enabling students to navigate complex databases with confidence and precision.

One of the key benefits of Oracle/SQL Server training in Mohali is access to expert instructors who bring a wealth of industry experience and insight to the classroom. These seasoned professionals draw on their expertise to guide participants through the complexities of database design, implementation, and management, offering valuable insights and best practices gathered from years of hands-on experience in the field.

Moreover, Oracle/SQL Server training in Mohali is distinguished by its modern facilities and state-of-the-art technology infrastructure. Participants have access to robust software platforms and tools commonly used in database management, giving them a realistic learning environment where they can apply theoretical concepts to practical scenarios. From data modeling and querying to performance tuning and disaster recovery, these training programs cover many topics essential for success in the dynamic world of data management.

Furthermore, Oracle/SQL Server training in Mohali caters to learners of all levels, from beginners looking to build a strong foundation in database fundamentals to experienced professionals seeking to expand their skill set and keep up with the latest industry trends. With flexible scheduling options and customized training modules, participants can tailor their learning experience to suit their individual goals, preferences, and availability.

The comprehensive nature of Oracle/SQL Server training in Mohali extends beyond technical proficiency to encompass essential soft skills such as communication, collaboration, and problem-solving. Through interactive lectures, hands-on exercises, and group projects, participants develop the critical thinking and teamwork skills essential for success in today's fast-paced, collaborative workplaces.

Oracle/SQL Server training in Mohali also offers valuable networking opportunities, allowing participants to connect with peers, industry experts, and potential employers. These networking events, workshops, and seminars provide a platform for knowledge sharing, professional development, and career advancement, empowering participants to build meaningful relationships and expand their professional network within the local and global database community.

Oracle/SQL Server training in Mohali serves as a catalyst for career growth and advancement in the field of database management. By providing comprehensive, hands-on training, expert mentorship, and valuable networking opportunities, these programs empower participants to master essential skills, enhance their expertise, and unlock new opportunities in the dynamic world of data. Whether you're a seasoned professional looking to stay ahead of the curve or an aspiring individual eager to break into the field, Oracle/SQL Server training in Mohali offers the knowledge, resources, and support you need to succeed in today's data-driven landscape.

For More Info:-

Animation & Multimedia training mohali


SAP Basis Software

SAP Basis: The Backbone of Your SAP Landscape

SAP systems are at the heart of many large enterprises around the world. These complex software suites power everything from accounting and finance to supply chain management and human resources. However, SAP applications and their underlying modules don’t run in a vacuum. They rely heavily on a core technological foundation known as SAP Basis.

What is SAP Basis?

SAP Basis is a collection of middleware programs and tools provided by SAP that form the technical platform for all SAP applications. Think of Basis as the operating system for your SAP environment. It performs several critical functions:

Platform Independence: Basis enables SAP applications to run on a variety of operating systems (Windows, Linux, Unix, etc.) and databases (Oracle, IBM DB2, SQL Server, etc.), ensuring portability and flexibility.

System Administration: Basis administrators manage the installation, configuration, and maintenance of the SAP landscape. This includes tasks like system updates, user management, and security setup.

Performance Monitoring: SAP Basis includes tools to monitor system health, analyze performance bottlenecks, and perform troubleshooting.

Communication and Integration: Basis handles communication between SAP modules, external systems, and different hardware components, facilitating seamless data flow.

Key Components of SAP Basis

SAP Basis comprises a comprehensive set of tools and services. Some of the most important include:

SAP NetWeaver Application Server (AS): The core runtime environment where SAP applications execute. It exists in both ABAP (SAP’s proprietary programming language) and Java versions.

Database Management: Basis provides tools for interacting with the underlying database systems, managing data storage, and ensuring database consistency.

Transport Management System (TMS): TMS facilitates the organized movement of system changes, configurations, and code between different SAP environments (development, testing, production).

SAP Solution Manager: A centralized platform for monitoring, managing, and optimizing the entire SAP landscape.

The Role of SAP Basis Administrators

SAP Basis administrators are the guardians of your SAP system’s health. Their responsibilities include:

Installation and Configuration: Setting up new SAP systems, applying patches, and configuring system settings.

Performance Tuning: Optimizing system parameters, database queries, and code to ensure the SAP system runs efficiently.

Troubleshooting: Identifying and resolving technical issues such as system crashes, errors, and performance problems.

Security: Implementing security measures, managing user authorizations, and patching vulnerabilities.

Backup and Recovery: Ensuring critical data is regularly backed up and developing disaster recovery plans.

Why is SAP Basis Important?

SAP Basis is integral to the success of any SAP implementation. It directly impacts:

System Stability: A well-maintained SAP Basis environment ensures that your SAP applications are always available and reliable.

Performance: SAP Basis optimization leads to faster transaction processing, quicker reports, and a better overall user experience.

Agility: Basis allows for efficient code deployments, upgrades, and system changes, enabling businesses to adapt quickly to changing needs.

Security: Robust Basis security protects sensitive business data and helps prevent cyberattacks.

Learning SAP Basis

If you’re interested in a career in SAP, becoming proficient in SAP Basis is incredibly valuable. There are many online courses, training institutes, and certifications available to help you gain the necessary skills.

In Conclusion

SAP Basis may operate behind the scenes, but its influence on the success of any SAP system cannot be overstated. As the technological foundation, it provides the bedrock for stability, performance, and security that modern enterprises need.


You can find more information about SAP BASIS in this SAP BASIS Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for SAP BASIS Training. Does anyone disagree? Please drop a comment.

You can check out our other latest blogs on SAP BASIS here – SAP BASIS Blogs

You can check out our Best In Class SAP BASIS Details here – SAP BASIS Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Mail us at: [email protected]

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeek


Understanding the World of SAP Basis Consultants: Roles and Responsibilities

1. Database Administrator

A SAP Database Administrator (DBA) in SAP Basis Consultancy is a specialized role focused on the management and optimization of databases used by SAP systems. Their primary responsibility is to ensure the reliability, performance, and integrity of the databases that store critical business data within the SAP landscape.

The role of a SAP Database Administrator in SAP Basis Consultancy typically includes the following responsibilities:

Database Installation and Configuration: Installing and configuring database management systems (DBMS) such as SAP HANA, Oracle, Microsoft SQL Server, or IBM Db2 to support SAP applications, ensuring adherence to SAP and DBMS vendor recommendations and best practices.

Database Monitoring and Performance Tuning: Monitoring database performance, resource utilization, and system health using monitoring tools and techniques, and optimizing database configurations, indexes, and queries to improve performance and scalability.

Backup and Recovery: Implementing backup and recovery strategies to protect data integrity and ensure business continuity in the event of system failures, data corruption, or disasters, including regular backups, data replication, and disaster recovery planning.

Database Security: Implementing database security measures such as access controls, encryption, and auditing to protect sensitive data stored within the database and ensure compliance with regulatory requirements and industry standards.

Database Upgrades and Patching: Planning and executing database upgrades, patches, and version migrations to apply bug fixes, security updates, and new features, while minimizing downtime and disruption to business operations.

Database Maintenance: Performing routine database maintenance tasks such as database reorganizations, data purging, and space management to optimize storage utilization and maintain database performance over time.

Database Troubleshooting and Problem Resolution: Identifying and resolving database-related issues, errors, and performance bottlenecks through troubleshooting, root cause analysis, and collaboration with other technical teams and vendors.

Database Documentation and Knowledge Transfer: Documenting database configurations, procedures, and best practices, and providing training and knowledge transfer to other team members and stakeholders to ensure effective database management and support.
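Backup and recovery comes down to "take a consistent copy, then prove you can restore from it." The sketch below illustrates that cycle using Python's built-in sqlite3 module and its online backup API (Connection.backup) as a stand-in. It is not how SAP databases are backed up: real landscapes use the backup tooling of their own DBMS (for example SAP HANA or Oracle), and the orders table here is purely hypothetical.

```python
import sqlite3


def take_backup(source: sqlite3.Connection) -> sqlite3.Connection:
    """Copy the whole source database into a fresh in-memory database.

    Connection.backup() uses SQLite's online backup API, so the copy is a
    consistent snapshot even though `source` stays open.
    """
    snapshot = sqlite3.connect(":memory:")
    source.backup(snapshot)
    return snapshot


def restore(snapshot: sqlite3.Connection, target: sqlite3.Connection) -> None:
    """Overwrite the contents of `target` with the contents of `snapshot`."""
    snapshot.backup(target)


def row_count(conn: sqlite3.Connection, table: str) -> int:
    # fetchall() runs the statement to completion so no read stays open.
    # The table name comes from trusted code here, never from user input.
    rows = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchall()
    return rows[0][0]


# Hypothetical business table standing in for application data.
live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
live.executemany("INSERT INTO orders (amount) VALUES (?)", [(10.0,), (25.5,), (99.9,)])
live.commit()

snapshot = take_backup(live)

# Simulate data loss, then recover from the snapshot.
live.execute("DELETE FROM orders")
live.commit()
lost = row_count(live, "orders")
restore(snapshot, live)
recovered = row_count(live, "orders")
print(lost, recovered)  # 0 3
```

The important habit it models is the last step: a backup only counts once a restore from it has been tested.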

Overall, the role of an SAP Database Administrator in SAP Basis Consultancy is critical to the stability, performance, and security of the databases behind SAP systems. By maintaining data integrity, availability, and compliance across the SAP landscape, they allow the business to rely on its SAP applications and contribute directly to the success of the organization.

2. Transport and Batch Job Administrator

A Transport and Batch Job Administrator in SAP Basis Consultancy is responsible for managing and controlling the transport of development objects and scheduling batch jobs within the SAP landscape. Their role involves ensuring the orderly and efficient movement of changes from development through testing and into production environments, as well as the scheduling and monitoring of batch jobs for automated processing of tasks within SAP systems.

The responsibilities of a Transport and Batch Job Administrator in SAP Basis Consultancy typically include:

Transport Management: Administering the transport landscape, including configuring transport routes, transport layers, and transport targets to facilitate the controlled movement of development objects (e.g., programs, configurations, reports) between SAP systems (e.g., development, quality assurance, production).

Transport Requests: Managing transport requests created by developers or functional consultants, reviewing and releasing requests for import into target systems, and resolving any transport-related issues or conflicts that may arise during the import process.

Transport Monitoring: Monitoring the status of transport requests, tracking their progress through the transport landscape, and ensuring that transports are completed successfully and in a timely manner to avoid disruptions to development or production environments.

Transport Security: Implementing security measures to protect transport requests and prevent unauthorized changes to system landscapes, including role-based access controls, transport group assignments, and change management procedures.

Batch Job Scheduling: Scheduling and managing batch jobs within SAP systems to automate repetitive tasks such as data processing, report generation, and system maintenance activities, using standard transactions such as SM36 (define and schedule jobs) and SM37 (job overview), or central scheduling tools such as SAP Solution Manager and SAP Central Process Scheduling.

Batch Job Monitoring: Monitoring the execution of batch jobs, verifying job completion status, and troubleshooting any job failures or errors that occur during processing, ensuring that critical business processes are executed accurately and on schedule.

Batch Job Optimization: Optimizing batch job scheduling and execution parameters to improve system performance, resource utilization, and throughput, while minimizing job runtime and processing delays.

Documentation and Reporting: Documenting transport configurations, transport logs, batch job schedules, and performance metrics, and generating reports on transport activities, batch job execution, and system utilization for analysis and auditing purposes.
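Batch job monitoring usually starts with a triage of the job list: which jobs were canceled and need their logs checked, and which finished but ran too long. The sketch below assumes the job list has already been exported (for example from the SM37 job overview) into simple records; the JobRecord fields, the job names, and the one-hour threshold are hypothetical, and only the status texts "Finished", "Canceled", and "Active" follow SM37.

```python
from dataclasses import dataclass

# Status texts as they appear in the SM37 job overview.
CANCELED = "Canceled"
FINISHED = "Finished"


@dataclass
class JobRecord:
    """One row of an exported job list; field names are hypothetical."""
    name: str
    status: str
    runtime_s: int  # duration of the last run, in seconds


def triage(jobs: list[JobRecord], max_runtime_s: int) -> dict[str, list[str]]:
    """Split jobs into the two lists an administrator usually acts on first."""
    return {
        # Canceled jobs need troubleshooting (start with the job log).
        "canceled": [j.name for j in jobs if j.status == CANCELED],
        # Finished-but-slow jobs are candidates for rescheduling or tuning.
        "slow": [
            j.name
            for j in jobs
            if j.status == FINISHED and j.runtime_s > max_runtime_s
        ],
    }


jobs = [
    JobRecord("Z_DAILY_SALES_REPORT", FINISHED, 420),
    JobRecord("Z_PURGE_OLD_LOGS", CANCELED, 15),
    JobRecord("Z_NIGHTLY_BILLING", FINISHED, 5400),
    JobRecord("Z_STOCK_SNAPSHOT", "Active", 300),
]
print(triage(jobs, max_runtime_s=3600))
# {'canceled': ['Z_PURGE_OLD_LOGS'], 'slow': ['Z_NIGHTLY_BILLING']}
```

Still-running jobs ("Active") are deliberately left out of both lists; a long-running active job is better caught by a separate alert.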

Overall, the role of a Transport and Batch Job Administrator in SAP Basis Consultancy is essential in ensuring the smooth and controlled movement of changes within the SAP landscape, as well as the efficient execution of batch processes to support business operations. They play a critical role in maintaining system integrity, stability, and reliability, thereby enabling organizations to leverage SAP technologies effectively to achieve their strategic objectives.

3. DDIC Manager

In SAP Basis Consultancy, a DDIC (Data Dictionary) Manager is responsible for overseeing and managing the Data Dictionary components within the SAP system landscape. The Data Dictionary is a central repository within SAP systems where data definitions, metadata, and database structures are stored and managed. The role of a DDIC Manager involves ensuring the integrity, consistency, and efficient use of data dictionary objects across the SAP landscape.

The responsibilities of a DDIC Manager in SAP Basis Consultancy typically include:

Data Dictionary Management: Managing the creation, modification, and deletion of data dictionary objects such as tables, views, domains, data elements, and structures within SAP systems, ensuring adherence to data modeling standards and best practices.

Data Modeling: Collaborating with functional consultants and business stakeholders to design and implement data models that accurately represent business entities, processes, and relationships, and support the requirements of SAP applications and custom developments.

Data Integrity and Consistency: Ensuring the integrity and consistency of data dictionary objects across different SAP systems and client environments, through proper transport management, version control, and synchronization mechanisms.

Data Governance: Establishing data governance policies, procedures, and standards to govern the creation, maintenance, and usage of data dictionary objects, and ensuring compliance with regulatory requirements and data management best practices.

Performance Optimization: Optimizing data dictionary objects and database structures for performance, scalability, and efficiency, by analyzing usage patterns, access methods, and indexing strategies, and implementing appropriate optimization techniques.

Security and Access Control: Implementing security measures and access controls to protect sensitive data stored in the data dictionary, and ensuring that only authorized users have the necessary permissions to create, modify, or access data dictionary objects.

Documentation and Training: Documenting data dictionary structures, definitions, and dependencies, and providing training and knowledge transfer to developers, administrators, and other stakeholders on data dictionary concepts, tools, and best practices.

Integration and Interoperability: Ensuring seamless integration and interoperability between data dictionary objects and other SAP components such as ABAP programs, SAP Fiori apps, and SAP BW/BI systems, to enable consistent data management and reporting across the SAP landscape.
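Data dictionary objects are layered: a domain defines technical attributes (data type and length), a data element adds semantic meaning such as field labels and refers to a domain, and table fields are typed by data elements. That is why changing one domain can ripple into many tables, and why a where-used check comes before any change. The toy model below illustrates that dependency chain in Python; it is not SAP's own where-used function (which lives in the ABAP Dictionary tools), and all Z* object names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    """Technical attributes: data type and length."""
    name: str
    data_type: str
    length: int


@dataclass(frozen=True)
class DataElement:
    """Semantic meaning (field label) layered on top of a domain."""
    name: str
    label: str
    domain: Domain


@dataclass(frozen=True)
class TableField:
    table: str
    field: str
    element: DataElement


def where_used(domain_name: str, fields: list[TableField]) -> list[str]:
    """List TABLE-FIELD pairs whose data element is based on the given domain."""
    return sorted(
        f"{f.table}-{f.field}" for f in fields if f.element.domain.name == domain_name
    )


# Hypothetical customer-namespace (Z*) objects.
cust_id = Domain("ZD_CUSTID", "CHAR", 10)
amount = Domain("ZD_AMOUNT", "DEC", 15)
customer = DataElement("ZE_CUSTOMER", "Customer", cust_id)
payer = DataElement("ZE_PAYER", "Payer", cust_id)
net_value = DataElement("ZE_NETVAL", "Net value", amount)

fields = [
    TableField("ZORDERS", "CUSTOMER", customer),
    TableField("ZORDERS", "PAYER", payer),
    TableField("ZORDERS", "NETVAL", net_value),
    TableField("ZINVOICES", "CUSTOMER", customer),
]
print(where_used("ZD_CUSTID", fields))
# ['ZINVOICES-CUSTOMER', 'ZORDERS-CUSTOMER', 'ZORDERS-PAYER']
```

Widening ZD_CUSTID from 10 to 12 characters would therefore touch three fields in two tables, even though only one domain was edited.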

Overall, the role of a DDIC Manager in SAP Basis Consultancy is crucial in maintaining the integrity, consistency, and usability of data dictionary objects within the SAP landscape, thereby supporting effective data management, application development, and business operations. They play a key role in enabling organizations to leverage SAP technologies effectively to drive innovation, efficiency, and growth.

For More Details Please Visit Our Website – Www.Rhsofttech.com


Integration Specialist: Bridging the Gap Between Systems and Efficiency

The Key to Scalable, Secure, and Future-Ready IT Solutions.

Introduction

In today’s interconnected digital landscape, businesses rely on seamless data exchange and system connectivity to optimize operations and improve efficiency. Integration specialists play a crucial role in designing, implementing, and maintaining integrations between various software applications, ensuring smooth communication and workflow automation. With the rise of cloud computing, APIs, and enterprise applications, integration specialists are essential for driving digital transformation.

What is an Integration Specialist?

An Integration Specialist is a professional responsible for developing and managing software integrations between different systems, applications, and platforms. They design workflows, troubleshoot issues, and ensure data flows securely and efficiently across various environments. Integration specialists work with APIs, middleware, and cloud-based tools to connect disparate systems and improve business processes.

Types of Integration Solutions

Integration specialists work with different types of solutions to meet business needs:

API Integrations

Connects different applications via Application Programming Interfaces (APIs).

Enables real-time data sharing and automation.

Examples: RESTful APIs, SOAP APIs, GraphQL.

Cloud-Based Integrations

Connects cloud applications like SaaS platforms.

Uses integration platforms as a service (iPaaS).

Examples: Zapier, Workato, MuleSoft, Boomi (formerly Dell Boomi).

Enterprise System Integrations

Integrates large-scale enterprise applications.

Connects ERP (Enterprise Resource Planning), CRM (Customer Relationship Management), and HR systems.

Examples: Salesforce, SAP, Oracle, Microsoft Dynamics.

Database Integrations

Ensures seamless data flow between databases.

Uses ETL (Extract, Transform, Load) processes for data synchronization.

Examples: SQL Server Integration Services (SSIS), Talend, Informatica.
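The ETL pattern behind database integrations can be sketched in a few functions. The example below is a minimal illustration, assuming two in-memory SQLite databases stand in for the source and target systems and that the cleaning rules (trim names, upper-case country codes, reject rows without a country) are hypothetical business requirements. Tools such as SSIS, Talend, or Informatica implement the same three stages at much larger scale.

```python
import sqlite3


def extract(source: sqlite3.Connection) -> list[tuple]:
    """Extract: read raw rows from the source system."""
    return source.execute("SELECT id, name, country FROM customers").fetchall()


def transform(rows: list[tuple]) -> list[tuple]:
    """Transform: trim names, normalize country codes, drop rows missing a country."""
    cleaned = []
    for cust_id, name, country in rows:
        if not country or not country.strip():
            continue  # reject rows the target cannot accept
        cleaned.append((cust_id, name.strip(), country.strip().upper()))
    return cleaned


def load(target: sqlite3.Connection, rows: list[tuple]) -> int:
    """Load: upsert into the target so re-running the job does not duplicate rows."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS dim_customer "
        "(id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    target.executemany("INSERT OR REPLACE INTO dim_customer VALUES (?, ?, ?)", rows)
    target.commit()
    return len(rows)


# Two in-memory databases stand in for the source and target systems.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "  Asha ", "in"), (2, "Ben", None), (3, "Chloe", " fr ")],
)
target = sqlite3.connect(":memory:")

loaded = load(target, transform(extract(source)))
print(loaded, target.execute("SELECT * FROM dim_customer ORDER BY id").fetchall())
# 2 [(1, 'Asha', 'IN'), (3, 'Chloe', 'FR')]
```

Using an upsert in the load step is a deliberate choice: synchronization jobs get re-run after failures, and an idempotent load keeps a retry from creating duplicates.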

Key Stages of System Integration

Requirement Analysis & Planning

Identify business needs and integration goals.

Analyze existing systems and data flow requirements.

Choose the right integration approach and tools.

Design & Architecture

Develop a blueprint for the integration solution.

Select API frameworks, middleware, or cloud services.

Ensure scalability, security, and compliance.

Development & Implementation

Build APIs, data connectors, and automation workflows.

Implement security measures (encryption, authentication).

Conduct performance optimization and data validation.

Testing & Quality Assurance

Perform functional, security, and performance testing.

Identify and resolve integration errors and data inconsistencies.

Conduct user acceptance testing (UAT).

Deployment & Monitoring

Deploy integration solutions in production environments.

Monitor system performance and error handling.

Ensure smooth data synchronization and process automation.

Maintenance & Continuous Improvement

Provide ongoing support and troubleshooting.

Optimize integration workflows based on feedback.

Stay updated with new technologies and best practices.

Best Practices for Integration Success

✔ Define clear integration objectives and business needs.

✔ Use secure and scalable API frameworks.

✔ Optimize data transformation processes for efficiency.

✔ Implement robust authentication and encryption.

✔ Conduct thorough testing before deployment.

✔ Monitor and update integrations regularly.

✔ Stay updated with emerging iPaaS and API technologies.
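For the authentication point, one widely used pattern in webhook-style integrations is message signing: the sender computes an HMAC of the request body with a shared secret, and the receiver recomputes it and compares the two in constant time. The sketch below uses Python's standard hmac and hashlib modules; the secret, the payload, and how the signature travels (typically an HTTP header whose name varies by vendor) are assumptions. Signing proves who sent a message and that it was not altered; it complements, rather than replaces, encryption in transit such as TLS.

```python
import hashlib
import hmac


def sign(secret: bytes, payload: bytes) -> str:
    """Sender side: hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify(secret: bytes, payload: bytes, received_signature: str) -> bool:
    """Receiver side: recompute the HMAC and compare in constant time."""
    expected = sign(secret, payload)
    return hmac.compare_digest(expected, received_signature)


SECRET = b"replace-with-a-secret-from-your-vault"  # hypothetical shared secret
body = b'{"order_id": 42, "status": "shipped"}'
signature = sign(SECRET, body)

print(verify(SECRET, body, signature))                        # True
print(verify(SECRET, body.replace(b"42", b"43"), signature))  # False
```

hmac.compare_digest is used instead of == so the comparison time does not leak how many characters of a forged signature were correct.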

Conclusion

Integration specialists are at the forefront of modern digital ecosystems, ensuring seamless connectivity between applications and data sources. Whether working with cloud platforms, APIs, or enterprise systems, a well-executed integration strategy enhances efficiency, security, and scalability. Businesses that invest in robust integration solutions gain a competitive edge, improved automation, and streamlined operations.


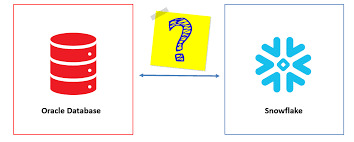

Seamless Data Migration: Oracle to Snowflake Integration

In the ever-evolving landscape of data management and analytics, organizations often need to migrate their data from one platform to another. Oracle, a widely used relational database system, and Snowflake, a cloud-based data warehousing platform, are two powerful tools that serve different purposes in the data ecosystem. This article explores why organizations migrate data from Oracle to Snowflake, the challenges involved, and the strategies for a successful transition.

Why Migrate from Oracle to Snowflake?

1. Cloud Transformation