#vectorsearch

Explore tagged Tumblr posts

Text

Valkey 7.2 On Memorystore: Open-Source Key-Value Service

The 100% open-source key-value service Memorystore for Valkey is launched by Google Cloud.

In order to give users a high-performance, genuinely open-source key-value service, the Memorystore team is happy to announce the preview launch of Valkey 7.2 support for Memorystore.

Memorystore for Valkey

A completely managed Valkey Cluster service for Google Cloud is called Memorystore for Valkey. By utilizing the highly scalable, reliable, and secure Valkey service, Google Cloud applications may achieve exceptional performance without having to worry about handling intricate Valkey deployments.

In order to guarantee high availability, Memorystore for Valkey distributes (or “shards”) your data among the primary nodes and duplicates it among the optional replica nodes. Because Valkey performance is greater on many smaller nodes rather than fewer bigger nodes, the horizontally scalable architecture outperforms the vertically scalable architecture in terms of performance.

Memorystore for Valkey is a game-changer for enterprises looking for high-performance data management solutions reliant on 100% open source software. It was added to the Memorystore portfolio in response to customer demand, along with Memorystore for Redis Cluster and Memorystore for Redis. From the console or gcloud, users can now quickly and simply construct a fully-managed Valkey Cluster, which they can then scale up or down to suit the demands of their workloads.

Thanks to its outstanding performance, scalability, and flexibility, Valkey has quickly gained popularity as an open-source key-value datastore. Valkey 7.2 provides Google Cloud users with a genuinely open source solution via the Linux Foundation. It is fully compatible with Redis 7.2 and the most widely used Redis clients, including Jedis, redis-py, node-redis, and go-redis.

Valkey is already being used by customers to replace their key-value software, and it is being used for common use cases such as caching, session management, real-time analytics, and many more.

Customers may enjoy a nearly comparable (and code-compatible) Valkey Cluster experience with Memorystore for Valkey, which launches with all the GA capabilities of Memorystore for Redis Cluster. Similar to Memorystore for Redis Cluster, Memorystore for Valkey provides RDB and AOF persistence, zero-downtime scaling in and out, single- or multi-zone clusters, instantaneous integrations with Google Cloud, extremely low and dependable performance, and much more. Instances up to 14.5 TB are also available.

Memorystore for Valkey, Memorystore for Redis Cluster, and Memorystore for Redis have an exciting roadmap of features and capabilities.

The momentum of Valkey

Just days after Redis Inc. withdrew the Redis open-source license, the open-source community launched Valkey in collaboration with the Linux Foundation in March 2024 (1, 2, 3). Since then, they have had the pleasure of working with developers and businesses worldwide to propel Valkey into the forefront of key-value data stores and establish it as a premier open source software (OSS) project. Google Cloud is excited to participate in this community launch with partners and industry experts like Snap, Ericsson, AWS, Verizon, Alibaba Cloud, Aiven, Chainguard, Heroku, Huawei, Oracle, Percona, Ampere, AlmaLinux OS Foundation, DigitalOcean, Broadcom, Memurai, Instaclustr from NetApp, and numerous others. They fervently support open source software.

The Valkey community has grown into a thriving group committed to developing Valkey the greatest open source key-value service available thanks to the support of thousands of enthusiastic developers and the former core OSS Redis maintainers who were not hired by Redis Inc.

With more than 100 million unique active users each month, Mercado Libre is the biggest finance, logistics, and e-commerce company in Latin America. Diego Delgado discusses Valkey with Mercado Libre as a Software Senior Expert:

At Mercado Libre, Google Cloud need to handle billions of requests per minute with minimal latency, which makes caching solutions essential. Google Cloud especially thrilled about the cutting-edge possibilities that Valkey offers. They have excited to investigate its fresh features and add to this open-source endeavor.”

The finest is still to come

By releasing Memorystore for Valkey 7.2, Memorystore offers more than only Redis Cluster, Redis, and Memcached. And Google Cloud is even more eager about Valkey 8.0’s revolutionary features. Major improvements in five important areas performance, reliability, replication, observability, and efficiency were introduced by the community in the first release candidate of Valkey 8.0. With a single click or command, users will be able to accept Valkey 7.2 and later upgrade to Valkey 8.0. Additionally, Valkey 8.0 is compatible with Redis 7.2, exactly like Valkey 7.2 was, guaranteeing a seamless transition for users.

The performance improvements in Valkey 8.0 are possibly the most intriguing ones. Asynchronous I/O threading allows commands to be processed in parallel, which can lead to multi-core nodes working at a rate that is more than twice as fast as Redis 7.2. From a reliability perspective, a number of improvements provided by Google, such as replicating slot migration states, guaranteeing automatic failover for empty shards, and ensuring slot state recovery is handled, significantly increase the dependability of Cluster scaling operations. The anticipation for Valkey 8.0 is already fueling the demand for Valkey 7.2 on Memorystore, with a plethora of further advancements across several dimensions (release notes).

Similar to how Redis previously expanded capability through modules with restricted licensing, the community is also speeding up the development of Valkey’s capabilities through open-source additions that complement and extend Valkey’s functionality. The capabilities covered by recently published RFCs (“Request for Comments”) include vector search for extremely high performance vector similarly search, JSON for native JSON support, and BloomFilters for high performance and space-efficient probabilistic filters.

Former vice president of Gartner and principal analyst of SanjMo Sanjeev Mohan offers his viewpoint:

The advancement of community-led initiatives to offer feature-rich, open-source database substitutes depends on Valkey. Another illustration of Google’s commitment to offering really open and accessible solutions for customers is the introduction of Valkey support in Memorystore. In addition to helping developers looking for flexibility, their contributions to Valkey also support the larger open-source ecosystem.

It seems obvious that Valkey is going to be a game-changer in the high-performance data management area with all of the innovation in Valkey 8.0, as well as the open-source improvements like vector search and JSON, and for client libraries.

Valkey is the secret to an OSS future

Take a look at Memorystore for Valkey right now, and use the UI console or a straightforward gcloud command to establish your first cluster. Benefit from OSS Redis compatibility to simply port over your apps and scale in or out without any downtime.

Read more on govindhtech.com

#Valkey72#Memorystore#OpenSourceKey#stillcome#GoogleCloud#MemorystoreforRedisCluster#opensource#Valkey80#vectorsearch#OSSfuture#momentum#technology#technews#news#govindhtech

2 notes

·

View notes

Photo

Qdrant Vector Database: Powering AI with Unstructured Data

#AI-powereddatabases#enterpriseAIsolutions#MachineLearning#Qdrantvectordatabase#Rust-baseddatabase#unstructureddatamanagement#vectorsearch#vectorsimilaritysearch

0 notes

Text

RAG implementation of LLMs by using Python, Haystack & React (Part - 1)

Today, I will share a new post in a part series about creating end-end LLMs that feed source data with RAG implementation. I'll also use OpenAI python-based SDK and Haystack embeddings in this case. #Python #OpenAI #RAG #haystack #LLMs

Today, I will share a new post in a part series about creating end-end LLMs that feed source data with RAG implementation. I’ll also use OpenAI python-based SDK and Haystack embeddings in this case. In this post, I’ve directly subscribed to OpenAI & I’m not using OpenAI from Azure. However, I’ll explore that in the future as well. Before I explain the process to invoke this new library, why not…

View On WordPress

0 notes

Link

The field of information retrieval has rapidly evolved due to the exponential growth of digital data. With the increasing volume of unstructured data, efficient methods for searching and retrieving relevant information have become more crucial than #AI #ML #Automation

0 notes

Text

Smart Adaptive Filtering Improves AlloyDB AI Vector Search

A detailed look at AlloyDB's vector search improvements

Intelligent Adaptive Filtering Improves Vector Search Performance in AlloyDB AI

Google Cloud Next 2025: Google Cloud announced new ScaNN index upgrades for AlloyDB AI to improve structured and unstructured data search quality and performance. The Google Cloud Next 2025 advancements meet the increased demand for developers to create generative AI apps and AI agents that explore many data kinds.

Modern relational databases like AlloyDB for PostgreSQL now manage unstructured data with vector search. Combining vector searches with SQL filters on structured data requires careful optimisation for high performance and quality.

Filtered Vector Search issues

Filtered vector search allows specified criteria to refine vector similarity searches. An online store managing a product catalogue with over 100,000 items in an AlloyDB table may need to search for certain items using structured information (like colour or size) and unstructured language descriptors (like “puffer jacket”). Standard queries look like this:

Selected items: * WHERE text_embedding <-> Color=maroon, text-embedding-005, puff jacket, google_ml.embedding LIMIT 100

In the second section, the vector-indexed text_embedding column is vector searched, while the B-tree-indexed colour column is treated to the structured filter color='maroon'.

This query's efficiency depends on the database's vector search and SQL filter sequence. The AlloyDB query planner optimises this ordering based on workload. The filter's selectivity heavily influences this decision. Selectivity measures how often a criterion appears in the dataset.

Optimising with Pre-, Post-, and Inline Filters

AlloyDB's query planner intelligently chooses techniques using filter selectivity:

High Selectivity: The planner often employs a pre-filter when a filter is exceedingly selective, such as 0.2% of items being "maroon." Only a small part of data meets the criterion. After applying the filter (e.g., WHERE color='maroon'), the computationally intensive vector search begins. Using a B-tree index, this shrinks the candidate set from 100,000 to 200 products. Only this smaller set is vector searched (also known as a K-Nearest Neighbours or KNN search), assuring 100% recall in the filtered results.

Low Selectivity: A pre-filter that doesn't narrow the search field (e.g., 90% of products are “blue”) is unsuccessful. Planners use post-filter methods in these cases. First, an Approximate Nearest Neighbours (ANN) vector search using indexes like ScaNN quickly identifies the top 100 candidates based on vector similarity. After retrieving candidates, the filter condition (e.g., WHERE color='blue') is applied. This strategy works effectively for filters with low selectivity because many initial candidates fit the criteria.

Medium Selectivity: AlloyDB provides inline filtering (in-filtering) for filters with medium selectivity (0.5–10%, like “purple”). This method uses vector search and filter criteria. A bitmap from a B-tree index helps AlloyDB find approximate neighbours and candidates that match the filter in one run. Pre-filtering narrows the search field, but post-filtering on a highly selective filter does not produce too few results.

Learn at query time with adaptive filtering

Complex real-world workloads and filter selectivities can change over time, causing the query planner to make inappropriate selectivity decisions based on outdated facts. Poor execution tactics and results may result.

AlloyDB ScaNN solves this using adaptive filtration. This latest update lets AlloyDB use real-time information to determine filter selectivity. This real-time data allows the database to change its execution schedule for better filter and vector search ranking. Adaptive filtering reduces planner miscalculations.

Get Started

These innovations, driven by an intelligent database engine, aim to provide outstanding search results as data evolves.

In preview, adaptive filtering is available. With AlloyDB's ScaNN index, vector search may begin immediately. New Google Cloud users get $300 in free credits and a 30-day AlloyDB trial.

#AdaptiveFiltering#AlloyDBAI#AlloyDBScaNNindex#vectorsearch#AlloyDBAIVectorSearch#AlloyDBqueryplanner#technology#technews#technologynews#news#govindhtech

0 notes

Text

Microsoft SQL Server 2025: A New Era Of Data Management

Microsoft SQL Server 2025: An enterprise database prepared for artificial intelligence from the ground up

The data estate and applications of Azure clients are facing new difficulties as a result of the growing use of AI technology. With privacy and security being more crucial than ever, the majority of enterprises anticipate deploying AI workloads across a hybrid mix of cloud, edge, and dedicated infrastructure.

In order to address these issues, Microsoft SQL Server 2025, which is now in preview, is an enterprise AI-ready database from ground to cloud that applies AI to consumer data. With the addition of new AI capabilities, this version builds on SQL Server’s thirty years of speed and security innovation. Customers may integrate their data with Microsoft Fabric to get the next generation of data analytics. The release leverages Microsoft Azure innovation for customers’ databases and supports hybrid setups across cloud, on-premises datacenters, and edge.

SQL Server is now much more than just a conventional relational database. With the most recent release of SQL Server, customers can create AI applications that are intimately integrated with the SQL engine. With its built-in filtering and vector search features, SQL Server 2025 is evolving into a vector database in and of itself. It performs exceptionally well and is simple for T-SQL developers to use.Image credit to Microsoft Azure

AI built-in

This new version leverages well-known T-SQL syntax and has AI integrated in, making it easier to construct AI applications and retrieval-augmented generation (RAG) patterns with safe, efficient, and user-friendly vector support. This new feature allows you to create a hybrid AI vector search by combining vectors with your SQL data.

Utilize your company database to create AI apps

Bringing enterprise AI to your data, SQL Server 2025 is a vector database that is enterprise-ready and has integrated security and compliance. DiskANN, a vector search technology that uses disk storage to effectively locate comparable data points in massive datasets, powers its own vector store and index. Accurate data retrieval through semantic searching is made possible by these databases’ effective chunking capability. With the most recent version of SQL Server, you can employ AI models from the ground up thanks to the engine’s flexible AI model administration through Representational State Transfer (REST) interfaces.

Furthermore, extensible, low-code tools provide versatile model interfaces within the SQL engine, backed via T-SQL and external REST APIs, regardless of whether clients are working on data preprocessing, model training, or RAG patterns. By seamlessly integrating with well-known AI frameworks like LangChain, Semantic Kernel, and Entity Framework Core, these tools improve developers’ capacity to design a variety of AI applications.

Increase the productivity of developers

To increase developers’ productivity, extensibility, frameworks, and data enrichment are crucial for creating data-intensive applications, such as AI apps. Including features like support for REST APIs, GraphQL integration via Data API Builder, and Regular Expression enablement ensures that SQL will give developers the greatest possible experience. Furthermore, native JSON support makes it easier for developers to handle hierarchical data and schema that changes regularly, allowing for the development of more dynamic apps. SQL development is generally being improved to make it more user-friendly, performant, and extensible. The SQL Server engine’s security underpins all of its features, making it an AI platform that is genuinely enterprise-ready.

Top-notch performance and security

In terms of database security and performance, SQL Server 2025 leads the industry. Enhancing credential management, lowering potential vulnerabilities, and offering compliance and auditing features are all made possible via support for Microsoft Entra controlled identities. Outbound authentication support for MSI (Managed Service Identity) for SQL Server supported by Azure Arc is introduced in SQL Server 2025.

Additionally, it is bringing to SQL Server performance and availability improvements that have been thoroughly tested on Microsoft Azure SQL. With improved query optimization and query performance execution in the latest version, you may increase workload performance and decrease troubleshooting. The purpose of Optional Parameter Plan Optimization (OPPO) is to greatly minimize problematic parameter sniffing issues that may arise in workloads and to allow SQL Server to select the best execution plan based on runtime parameter values supplied by the customer.

Secondary replicas with persistent statistics mitigate possible performance decrease by preventing statistics from being lost during a restart or failover. The enhancements to batch mode processing and columnstore indexing further solidify SQL Server’s position as a mission-critical database for analytical workloads in terms of query execution.

Through Transaction ID (TID) Locking and Lock After Qualification (LAQ), optimized locking minimizes blocking for concurrent transactions and lowers lock memory consumption. Customers can improve concurrency, scalability, and uptime for SQL Server applications with this functionality.

Change event streaming for SQL Server offers command query responsibility segregation, real-time intelligence, and real-time application integration with event-driven architectures. New database engine capabilities will be added, enabling near real-time capture and publication of small changes to data and schema to a specified destination, like Azure Event Hubs and Kafka.

Azure Arc and Microsoft Fabric are linked

Designing, overseeing, and administering intricate ETL (Extract, Transform, Load) procedures to move operational data from SQL Server is necessary for integrating all of your data in conventional data warehouse and data lake scenarios. The inability of these conventional techniques to provide real-time data transfer leads to latency, which hinders the development of real-time analytics. In order to satisfy the demands of contemporary analytical workloads, Microsoft Fabric provides comprehensive, integrated, and AI-enhanced data analytics services.

The fully controlled, robust Mirrored SQL Server Database in Fabric procedure makes it easy to replicate SQL Server data to Microsoft OneLake in almost real-time. In order to facilitate analytics and insights on the unified Fabric data platform, mirroring will allow customers to continuously replicate data from SQL Server databases running on Azure virtual machines or outside of Azure, serving online transaction processing (OLTP) or operational store workloads directly into OneLake.

Azure is still an essential part of SQL Server. To help clients better manage, safeguard, and control their SQL estate at scale across on-premises and cloud, SQL Server 2025 will continue to offer cloud capabilities with Azure Arc. Customers can further improve their business continuity and expedite everyday activities with features like monitoring, automatic patching, automatic backups, and Best Practices Assessment. Additionally, Azure Arc makes SQL Server licensing easier by providing a pay-as-you-go option, giving its clients flexibility and license insight.

SQL Server 2025 release date

Microsoft hasn’t set a SQL Server 2025 release date. Based on current data, we can make some confident guesses:

Private Preview: SQL Server 2025 is in private preview, so a small set of users can test and provide comments.

Microsoft may provide a public preview in 2025 to let more people sample the new features.

General Availability: SQL Server 2025’s final release date is unknown, but it will be in 2025.

Read more on govindhtech.com

#MicrosoftSQLServer2025#DataManagement#SQLServer#retrievalaugmentedgeneration#RAG#vectorsearch#GraphQL#Azurearc#MicrosoftAzure#MicrosoftFabric#OneLake#azure#microsoft#technology#technews#news#govindhtech

0 notes

Text

Boost AI Production With Data Agents And BigQuery Platform

Data accessibility can hinder AI adoption since so much data is unstructured and unmanaged. Data should be accessible, actionable, and revolutionary for businesses. A data cloud based on open standards, that connects data to AI in real-time, and conversational data agents that stretch the limits of conventional AI are available today to help you do this.

An open real-time data ecosystem

Google Cloud announced intentions to combine BigQuery into a single data and AI use case platform earlier this year, including all data formats, numerous engines, governance, ML, and business intelligence. It also announces a managed Apache Iceberg experience for open-format customers. It adds document, audio, image, and video data processing to simplify multimodal data preparation.

Volkswagen bases AI models on car owner’s manuals, customer FAQs, help center articles, and official Volkswagen YouTube videos using BigQuery.

New managed services for Flink and Kafka enable customers to ingest, set up, tune, scale, monitor, and upgrade real-time applications. Data engineers can construct and execute data pipelines manually, via API, or on a schedule using BigQuery workflow previews.

Customers may now activate insights in real time using BigQuery continuous queries, another major addition. In the past, “real-time” meant examining minutes or hours old data. However, data ingestion and analysis are changing rapidly. Data, consumer engagement, decision-making, and AI-driven automation have substantially lowered the acceptable latency for decision-making. The demand for insights to activation must be smooth and take seconds, not minutes or hours. It has added real-time data sharing to the Analytics Hub data marketplace in preview.

Google Cloud launches BigQuery pipe syntax to enable customers manage, analyze, and gain value from log data. Data teams can simplify data conversions with SQL intended for semi-structured log data.

Connect all data to AI

BigQuery clients may produce and search embeddings at scale for semantic nearest-neighbor search, entity resolution, semantic search, similarity detection, RAG, and recommendations. Vertex AI integration makes integrating text, photos, video, multimodal data, and structured data easy. BigQuery integration with LangChain simplifies data pre-processing, embedding creation and storage, and vector search, now generally available.

It previews ScaNN searches for large queries to improve vector search. Google Search and YouTube use this technology. The ScaNN index supports over one billion vectors and provides top-notch query performance, enabling high-scale workloads for every enterprise.

It is also simplifying Python API data processing with BigQuery DataFrames. Synthetic data can replace ML model training and system testing. It teams with Gretel AI to generate synthetic data in BigQuery to expedite AI experiments. This data will closely resemble your actual data but won’t contain critical information.

Finer governance and data integration

Tens of thousands of companies fuel their data clouds with BigQuery and AI. However, in the data-driven AI era, enterprises must manage more data kinds and more tasks.

BigQuery’s serverless design helps Box process hundreds of thousands of events per second and manage petabyte-scale storage for billions of files and millions of users. Finer access control in BigQuery helps them locate, classify, and secure sensitive data fields.

Data management and governance become important with greater data-access and AI use cases. It unveils BigQuery’s unified catalog, which automatically harvests, ingests, and indexes information from data sources, AI models, and BI assets to help you discover your data and AI assets. BigQuery catalog semantic search in preview lets you find and query all those data assets, regardless of kind or location. Users may now ask natural language questions and BigQuery understands their purpose to retrieve the most relevant results and make it easier to locate what they need.

It enables more third-party data sources for your use cases and workflows. Equifax recently expanded its cooperation with Google Cloud to securely offer anonymized, differentiated loan, credit, and commercial marketing data using BigQuery.

Equifax believes more data leads to smarter decisions. By providing distinctive data on Google Cloud, it enables its clients to make predictive and informed decisions faster and more agilely by meeting them on their preferred channel.

Its new BigQuery metastore makes data available to many execution engines. Multiple engines can execute on a single copy of data across structured and unstructured object tables next month in preview, offering a unified view for policy, performance, and workload orchestration.

Looker lets you use BigQuery’s new governance capabilities for BI. You can leverage catalog metadata from Looker instances to collect Looker dashboards, exploration, and dimensions without setting up, maintaining, or operating your own connector.

Finally, BigQuery has catastrophe recovery for business continuity. This provides failover and redundant compute resources with a SLA for business-critical workloads. Besides your data, it enables BigQuery analytics workload failover.

Gemini conversational data agents

Global organizations demand LLM-powered data agents to conduct internal and customer-facing tasks, drive data access, deliver unique insights, and motivate action. It is developing new conversational APIs to enable developers to create data agents for self-service data access and monetize their data to differentiate their offerings.

Conversational analytics

It used these APIs to create Looker’s Gemini conversational analytics experience. Combine with Looker’s enterprise-scale semantic layer business logic models. You can root AI with a single source of truth and uniform metrics across the enterprise. You may then use natural language to explore your data like Google Search.

LookML semantic data models let you build regulated metrics and semantic relationships between data models for your data agents. LookML models don’t only describe your data; you can query them to obtain it.

Data agents run on a dynamic data knowledge graph. BigQuery powers the dynamic knowledge graph, which connects data, actions, and relationships using usage patterns, metadata, historical trends, and more.

Last but not least, Gemini in BigQuery is now broadly accessible, assisting data teams with data migration, preparation, code assist, and insights. Your business and analyst teams can now talk with your data and get insights in seconds, fostering a data-driven culture. Ready-to-run queries and AI-assisted data preparation in BigQuery Studio allow natural language pipeline building and decrease guesswork.

Connect all your data to AI by migrating it to BigQuery with the data migration application. This product roadmap webcast covers BigQuery platform updates.

Read more on Govindhtech.com

#DataAgents#BigQuery#BigQuerypipesyntax#vectorsearch#BigQueryDataFrames#BigQueryanalytics#LookMLmodels#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Vector Search In Memorystore For Redis Cluster And Valkey

Memorystore for Redis Cluster became the perfect platform for Gen AI application cases like Retrieval Augmented Generation (RAG), recommendation systems, semantic search, and more with the arrival of vector search earlier this year. Why? due to its exceptionally low latency vector search. Vector search over tens of millions of vectors may be done with a single Memorystore for Redis instance at a latency of one digit millisecond. But what happens if you need to store more vectors than a single virtual machine can hold?

Google is presenting vector search on the new Memorystore for Redis Cluster and Memorystore for Valkey, which combine three exciting features:

1) Zero-downtime scalability (in or out);

2) Ultra-low latency in-memory vector search;

3) Robust high performance vector search over millions or billions of vectors.

Vector support for these Memorystore products, which is now in preview, allows you to scale up your cluster by up to 250 shards, allowing you to store billions of vectors in a single instance. Indeed, vector search on over a billion vectors with more than 99% recall can be carried out in single-digit millisecond latency on a single Memorystore for Redis Cluster instance! Demanding enterprise applications, such semantic search over a worldwide corpus of data, are made possible by this scale.

Modular in-memory vector exploration

Partitioning the vector index among the cluster’s nodes is essential for both performance and scalability. Because Memorystore employs a local index partitioning technique, every node has an index partition corresponding to the fraction of the keyspace that is kept locally. The OSS cluster protocol has already uniformly sharded the keyspace, so each index split is about similar in size.

This architecture leads to index build times for all vector indices being improved linearly with the addition of nodes. Furthermore, adding nodes enhances the performance of brute-force searches linearly and Hierarchical Navigable Small World (HNSW) searches logarithmically, provided that the number of vectors remains constant. All in all, a single cluster may support billions of vectors that are searchable and indexable, all the while preserving quick index creation times and low search latencies at high recall.

Hybrid queries

Google is announcing support for hybrid queries on Memorystore for Valkey and Memorystore for Redis Cluster, in addition to better scalability. You can combine vector searches with filters on tag and numeric data in hybrid queries. Memorystore combines tag, vector, and numeric search to provide complicated query answers.

Additionally, these filter expressions feature boolean logic, allowing for the fine-tuning of search results to only include relevant fields by combining numerous fields. Applications can tailor vector search queries to their requirements with this new functionality, leading to considerably richer results than previously.

OSS Valkey in the background

The Valkey key-value datastore has generated a lot of interest in the open-source community. It has coauthored a Request for Comments (RFC) and are collaborating with the open source community to contribute its vector search capabilities to Valkey as part of its dedication to making it fantastic. The community alignment process begins with an RFC, and it encourage comments on its proposal and execution. Its main objective is to make it possible for Valkey developers worldwide to use Valkey vector search to build incredible next-generation AI applications.

The quest for a scalable and quick vector search is finished

In addition to the features already available on Memorystore for Redis, Memorystore now offers ultra-low latency across all of its most widely used engines with the addition of fast and scalable vector search on Memorystore for Redis Cluster and Memorystore for Valkey . Therefore, Memorystore will be difficult to beat for developing generative AI applications that need reliable and consistent low-latency vector search. To experience the speed of in-memory search, get started right now by starting a Memorystore for Valkey or Memorystore for Redis Cluster instance.

Read more on Govindhtech.com

#VectorSearch#Memorystore#RedisCluster#Valkey#virtualmachine#Google#generativeAI#AI#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Spanner’s Standard Edition To Create Solid, intelligent apps

Spanner is the globally consistent, always-on database with almost infinite scalability that powers a plethora of contemporary applications in many industries. It is now the cornerstone of many forward-thinking companies’ data-driven transformations. Google is launching Spanner throughout graph today, along with new features like vector search, full-text search, and more. With the help of these advancements, businesses can create intelligent apps that seamlessly integrate several data models and condense their operational deployments onto a single, potent database.

From Spanner versions, you may choose the Standard Edition, Enterprise, and Enterprise Plus editions with the right set of capabilities to fit your needs and budget.

With Spanner Graph, you can easily and succinctly match patterns, navigate relationships, and filter results across interconnected data for popular graph use cases like fraud detection, community discovery, and personalised recommendations.

Building on Google’s many years of search experience, advanced full-text search offers stronger matching and relevance ranking than unstructured text.

Vector search builds on more than a decade of Google research and innovation in approximate closest neighbour algorithms to facilitate semantic information retrieval, the cornerstone of generative AI applications.

Furthermore, in response to Google’s enterprise customers’ increasingly complicated needs regarding latency, cost, and compliance, Google has improved Spanner’s basic infrastructure.

With geo-partitioning, you can store some of your data in particular places to provide quick local access while still deploying globally.

Data sovereignty needs are respected and multi-regional availability attributes are provided via dual-region arrangements.

By dynamically adjusting the size of your Spanner deployment, auto-scaling frees you from having to overprovision and enables you to respond swiftly to changes in traffic patterns.

Presenting the Spanner editions

Google is pleased to present Spanner editions, a new tiered pricing structure that enables you to benefit from these advancements while improving cost transparency and cost-saving options. You can select the Standard, Enterprise, and Enterprise Plus editions from Spanner editions to match your demands and budget with the appropriate collection of features.

The Standard edition is a feature-rich package that comes with additional features like scheduled backups and reverse ETL from BigQuery in addition to all of the current capabilities that are generally available (GA) in single-region settings. The Enterprise edition leverages multi-model features, such as vector search, enhanced full-text search, and the new Spanner Graph, to enhance the Standard edition.

Moreover, it offers improved data security and operational ease with incremental backups and automated autoscaling. The new geo-partitioning feature and multi-region setups with 99.999% availability are included in the Enterprise Plus version for workloads that demand the greatest levels of availability, performance, compliance, and governance.

Let’s examine each of the Spanner editions in more detail

Standard Edition

Everything you adore about Spanner is present in the standard edition, and then some. Access to Spanner’s single-region configurations, which include transparent failover, synchronous replication, and automatic sharding for almost infinite scalability and 99.99% availability, is provided by the Standard edition. All of the features available in the GA today, including Google’s enterprise-grade security and data protection measures, are included in the standard edition.

Furthermore, Google is adding new features to the Standard edition. Google is growing their offering with additional features like reverse ETL and external datasets with BigQuery, in addition to Spanner’s strong connection with BigQuery, which includes features like Data Boost and BigQuery federation. Google is also added scheduled backups to further its data protection capabilities. These improved features in the Standard edition are available to all Spanner customers.

Customers can gain from Standard edition in the following ways:

Migrate your relational workloads to Spanner to save operational toil, minimise costly scheduled and unplanned downtime, and cut the overall cost of ownership.

Shard MySQL or other complicated application-level sharding using Spanner’s automatic sharding instead.

Spanner’s nativePostgreSQL interface lets you modernise your apps without being locked into outdated features.

Elevate your NoSQL workloads with multirow transactions and stable secondary indexes thanks to support for adapters for Cassandra and DynamoDB that are compatible with APIs.

Avoid complex ETL pipelines and data silos by consolidating your data into a single source-of-truth database and running analytics on up-to-date data with Spanner Data Boost and BigQuery federation.

Utilise reverse ETL to service complicated analytics use-cases from Spanner and BigQuery to compute them at high QPS and low latency.

Enterprise Edition

Expanded data modalities are available to you with the Enterprise edition, enabling you to combine your operational deployments and provide your applications with true multi-model access. Spanner Graph, full-text search, and vector search capabilities are available for your data with the Enterprise edition, in addition to the relational and NoSQL functionality that Spanner currently offers.

The best part is that by combining all of this functionality, Spanner’s primary advantages of high availability, scalability, and consistency can be preserved in a genuine, completely interoperable multi-model database. Having all of your data in one location eliminates the need to transfer data between transactional and speciality databases, allowing you to minimise data silos and duplication, streamline data governance, cut down on operational complexity, and eventually minimise total cost of ownership.

Customers can gain from Enterprise edition in the following ways:

By fusing the advantages of SQL and GQL, you can supercharge your applications with Spanner Graph capabilities at nearly infinite scale. This allows analysts and developers to query linked and structured data in a single action.

Use full-text search to modernise your search apps and streamline your operational pipelines. You may leverage sophisticated search features without copying and indexing data into a separate search solution.

By basing fundamental models on contextual, domain-specific, and real-time data with native vector search capability in your operational data, you may enhance the AI-assisted user experience.

Launch robust multi-model applications, such a generative AI product that grounds LLMs using relational and knowledge graph RAG approaches, or a searchable product catalogue that mixes traditional and semantic information retrieval through the combination of full-text and vector search.

Enterprise Plus Edition

Spanner’s most feature-rich and widely available edition is called Enterprise Plus. Enterprise Plus includes new dual-region configurations in addition to our 99.999% multi-region options, which let you take advantage of Spanner’s unparalleled availability while also adhering to your data sovereignty needs. You may run a truly global deployment with Enterprise Plus and still retain low latency and data locality for parts of your data by utilising geo-partitioning.

Customers can gain from Enterprise Plus edition in the following ways:

By using Spanner’s multi-region settings for application deployment, you can achieve real 0-RTO and 0-RPO deployments along with 99.999% availability, eliminating the need to construct and manage complex disaster recovery solutions.

Use Spanner’s dual-region configurations in places like Australia, Germany, India, and Japan to meet data sovereignty needs without sacrificing availability.

Partition your data to make it always available where you need it, latency-free, and manage your worldwide deployment while preserving data locality.

Increased openness and cost efficiency

Google is also introducing a more straightforward pricing approach with the new Spanner editions, shifting to per-replica charging and separating the expenses of compute and data replication to improve efficiency and cost transparency. Each edition has various cost-saving features, and switching to a new edition is meant to be a smooth process.

Customers in a single region can purchase the standard edition for the same price. Instead than paying multiple prices for every function, customers can choose the Enterprise version, which has a single price point, if they want increased multi-model and search capabilities. They can also take advantage of the price-performance improvements like incremental backups and managed autoscaler.

Even more, the Enterprise Plus edition offers lower compute expenses, separates computation and replication, and does not charge for witness replica storage. Finally, since configurable read-only replicas will be priced the same as read-write replicas for each edition, customers employing these replicas will benefit from significant cost savings. Making the switch to Spanner editions is easy, and you can take advantage of all the improvements Spanner has to offer while cutting prices.

The availability of the Spanner versions is scheduled for September 24, 2024. See the overview page for Spanner versions for further details.

Read more on govindhtech.com

#SpannerStandardEdition#CreateSolid#intelligentapps#SpannerGraph#Vectorsearch#dualregionarrangements#BigQuery#datasecurity#data#dataprotection#ETLservice#datagovernance#graphRAG#TECHNEWS#EnterprisePlus#overviewpagefoRSpannerversions#technology#news#govindhtech

1 note

·

View note

Text

Presenting Graph Spanner: A Reimagining of Graph Databases

Spanner Graph

Operational databases offer the basis for creating enterprise AI applications that are precise, pertinent, and based on enterprise truth as the use of AI continues to grow. At Google Cloud, the team strive to provide the greatest databases for creating and executing AI applications. In light of this, they are thrilled to introduce Graph Spanner, vector search, and sophisticated full-text search a few new features that will make it simpler for you to create effective gen AI products.

With the release of Bigtable SQL and Bigtable distributed counters, the team also revolutionising the developer experience by simplifying the process of creating at-scale apps. Lastly, they are announcing significant updates to help customers modernise their data estates by supporting their traditional corporate workloads, such as SQL Server and Oracle. Now let’s get started!

Using a graph database can be a valuable, albeit complex, approach for enterprises to gain insights from their connected data so they can create more intelligent applications. Google is excited to present Spanner Graph today, a ground-breaking solution that combines Spanner, Google’s constantly available, globally consistent database, with capabilities specifically designed for graph databases.

Graphs are a natural way to show relationships in data, which makes them useful for analysing data that is related, finding hidden patterns, and enabling applications that depend on connection knowledge. There are several applications for graphs, including route planning, customer 360, fraud detection, recommendation engines, network security, knowledge graphs, and data lineage tracing.

But implementing separate graph databases to handle these use cases frequently comes with the following drawbacks:

Data fragmentation and operational overhead: Separate graph database maintenance frequently results in data silos, added complexity, and inconsistent data copies, all of which make it more difficult to conduct effective analysis and decision-making.

Bottlenecks in terms of scalability and availability: As data volumes and complexity increase and impede corporate growth, many standalone graph databases find it difficult to fulfil the expectations of mission-critical applications in terms of scalability and availability.

Ecosystem friction and skill gaps: Adopting a fully new graph paradigm may be more difficult for organisations because of their significant investments in infrastructure and SQL knowledge. They require more resources and training to accomplish this, which could take resources away from other pressing corporate requirements.

With almost infinite scalability, Graph Spanner reinvents graph data management by delivering a unified database that seamlessly merges relational, search, graph, and AI capabilities. Spanner Graph provides you with:

Native graph experience: Based on open standards, the ISO Graph Query Language (GQL) interface provides a simple and clear method for matching patterns and navigating relationships.

Unified relational and graph models: Complete GQL and SQL interoperability eliminates data silos and gives developers the freedom to select the best tool for each given query. Data from tables and graphs can be tightly integrated to reduce operational overhead and the requirement for expensive, time-consuming data transfers.

Built-in search features: Graph data may be efficiently retrieved using keywords and semantic meaning thanks to rich vector and full-text search features.

Scalability, availability, and consistency that lead the industry: You can rely on Spanner’s renowned scalability, availability, and consistency to deliver reliable data foundations.

AI-powered insights: By integrating deeply with Vertex AI, Spanner Graph gains direct access to a robust set of AI models, which speeds up AI workflows.

Let’s examine more closely what makes Spanner Graph special

Spanner Graph provides a recognisable and adaptable graph database interface. Supported by Graph Spanner is ISO GQL, the latest global standard for graph databases. It makes it simpler to find hidden links and insights by providing a clear and simple method for matching patterns, navigating relationships, and filtering results in graph data.

Spanner Graph functions well with both full-text and vector searches. With the combination, you may use GQL to navigate relationships within graph structures and search to locate graph contents. To be more precise, you can use full-text search to identify nodes or edges that include particular keywords or use vector search to find nodes or edges based on semantic meaning. GQL allows you to easily explore the remainder of the graph from these beginning locations. This unified capability enables you to find hidden connections, patterns, and insights that would be challenging to find using any one method by combining various complimentary strategies.

Spanner Graph provides industry-leading consistency and availability while scaling beyond trillions of edges. Since Graph Spanner inherits Spanner’s nearly limitless scalability, industry-best availability, and worldwide consistency, it’s an excellent choice for even the most crucial graph applications. Specifically, without requiring your involvement, Spanner’s transparent sharding may leverage massively parallel query processing and scale elastically to very huge data sets.

Spanner Graph’s tight integration with Vertex AI speeds up your AI workflows. Spanner Graph has a close integration with Vertex AI, the unified, fully managed AI development platform offered by Google Cloud. Using the Graph Spanner schema and query, you may immediately access Vertex AI’s vast collection of generative and predictive models, which can expedite your AI workflow. To enrich your graph, for instance, you can utilise LLMs to build text embeddings for graph nodes and edges. After that, you can use vector search to extract data from your graph in the semantic space.

You may create more intelligent applications with Spanner Graph

With practically limitless scalability, Spanner Graph effortlessly combines graph, relational, search, and AI capabilities, creating a plethora of opportunities:

Product recommendations: To create a knowledge network full of context, Spanner network represents the intricate interactions that exist between people, products, and preferences. By fusing full-text search with quick graph traversal, you may recommend products based on user searches, past purchases, preferences, and similarity to other users.

Financial fraud detection: It is simpler to spot questionable connections when financial entities like accounts, transactions, and people are represented naturally in Spanner Graphs. Similar patterns and anomalies in the embedding space are found by vector search. Financial institutions can minimise financial losses by promptly and correctly identifying possible dangers through the combination of these technologies.

Social networks: Even in the biggest social networks, the Graph Spanner model logically individuals, groups, interests, and interactions. For individualised recommendations, it facilitates the quick identification of trends like overlapping group memberships, mutual friends, or related interests. Users can quickly locate individuals, groups, posts, or particular subjects by using natural language searches with the integrated full-text search feature.

Gaming: Player characters, objects, places, and the connections between them are all natural representations of elements in game environments. Effective link traversal is made possible by the Spanner Graph, and this is crucial for game features like pathfinding, inventory control, and social interactions. Furthermore, even at times of high usage, Spanner Graph’s global consistency and scalability ensure a smooth and fair experience for every player.

Network security: Recognising trends and abnormalities requires an understanding of the relationships that exist between individuals, devices, and events over time. Security experts can employ graph capabilities to trace the origins of attacks, evaluate the effect of security breaches, and correlate these findings with temporal trends for proactive threat detection and mitigation thanks to Graph Spanner relational and graph interoperability.

GraphRAG: By utilising a knowledge graph to anchor foundation models, Spanner Graph elevates Retrieval Augmented Generation (RAG) to a new level. Furthermore, Spanner Graph’s integration of tabular and graph data enhances your AI applications by providing contextual information that neither format could provide on its own. It is capable of handling even the largest knowledge graphs due to its unparalleled scalability. Your GenAI workflows are streamlined with integrated Vertex AI and built-in vector search.

Read more on govindhtech.com

#PresentingGraphSpanner#GraphDatabases#SpannerGraph#VertexAI#AIapplications#GoogleCloud#AIproducts#vectorsearch#utiliseLLMs#game#ai#GraphSpanner#datasilos#technology#technews#news#govindhtech

0 notes

Text

Using Vector Index And Multilingual Embeddings in BigQuery

The Tower of Babel reborn? Using vector search and multilingual embeddings in BigQuery Finding and comprehending reviews in a customer’s favourite language across many languages can be difficult in today’s globalised marketplace. Large datasets, including reviews, may be managed and analysed with BigQuery.

In order to enable customers to search for products or company reviews in their preferred language and obtain results in that language, google cloud describe a solution in this blog post that makes use of BigQuery multilingual embeddings, vector index, and vector search. These technologies translate textual data into numerical vectors, enabling more sophisticated search functions than just matching keywords. This improves the relevancy and accuracy of search results.

Vector Index

A data structure called a Vector Index is intended to enable the vector index function to carry out a more effective vector search of embeddings. In order to enhance search performance when vector index is possible to employ a vector index, the function approximates nearest neighbour search method, which has the trade-off of decreasing recall and yielding more approximate results.

Authorizations and roles

You must have the bigquery tables createIndex IAM permission on the table where the vector index is to be created in order to create one. The bigquery tables deleteIndex permission is required in order to drop a vector index. The rights required to operate with vector indexes are included in each of the preset IAM roles listed below:

Establish a vector index

The build VECTOR INDEX data definition language (DDL) statement can be used to build a vector index.

Access the BigQuery webpage.

Run the subsequent SQL statement in the query editor

Swap out the following:

The vector index you’re creating’s name is vector index. The index and base table are always created in the same project and dataset, therefore these don’t need to be included in the name.

Dataset Name: The dataset name including the table.

Table Name: The column containing the embeddings data’s name in the table.

Column Name:The column name containing the embeddings data is called Column name. ARRAY is the required type for the column. No child fields may exist in the column. The array’s items must all be non null, and each column’s values must have the same array dimensions. Stored Column Name: the vector index’s storage of a top-level table column name. A column cannot have a range type. If a policy tag is present in a column or if the table has a row-level access policy, then stored columns are not used. See Store columns and pre-filter for instructions on turning on saved columns.

Index Type:The vector index building algorithm is denoted by Index type. There is only one supported value: IVF. By specifying IVF, the vector index is constructed as an inverted file index (IVF). An IVF splits the vector data according to the clusters it created using the k-means method. These partitions allow the vector search function to search the vector data more efficiently by limiting the amount of data it must read to provide a result.

Distance Type: When utilizing this index in a vector search, distance type designates the default distance type to be applied. COSINE and EUCLIDEAN are the supported values. The standard is EUCLIDEAN.

While the distance utilised in the vector search function may vary, the index building process always employs EUCLIDEAN distance for training.

The Diatance type value is not used if you supply a value for the distance type argument in the vector search function. Num Lists: an INT64 value that is equal to or less than 5,000 that controls the number of lists the IVF algorithm generates. The IVF method places data points that are closer to one another on the same list, dividing the entire data space into a number of lists equal to num lists. A smaller number for num lists results in fewer lists with more data points, whereas a bigger value produces more lists with fewer data points.

To generate an effective vector search, utilise num list in conjunction with the fraction lists to search argument in the vector list function. Provide a low fraction lists to search value to scan fewer lists in vector search and a high num lists value to generate an index with more lists if your data is dispersed among numerous small groups in the embedding space. When your data is dispersed in bigger, more manageable groups, use a fraction lists to search value that is higher than num lists. Building the vector index may take longer if you use a high num lists value.

In addition to adding another layer of refinement and streamlining the retrieval results for users, google cloud’s solution translates reviews from many languages into the user’s preferred language by utilising the Translation API, which is easily integrated into BigQuery. Users can read and comprehend evaluations in their preferred language, and organisations can readily evaluate and learn from reviews submitted in multiple languages. An illustration of this solution can be seen in the architecture diagram below.

Google cloud took business metadata (such address, category, and so on) and review data (like text, ratings, and other attributes) from Google Local for businesses in Texas up until September 2021. There are reviews in this dataset that are written in multiple languages. Google cloud’s approach allows consumers who would rather read reviews in their native tongue to ask inquiries in that language and obtain the evaluations that are most relevant to their query in that language even if the reviews were originally authored in a different language.

For example, in order to investigate bakeries in Texas, google cloud asked, “Where can I find Cantonese-style buns and authentic Egg Tarts in Houston?” It is difficult to find relevant reviews among thousands of business profiles for these two unique and frequently available bakery delicacies in Asia, but less popular in Houston.

Google cloud system allows users to ask questions in Chinese and get the most appropriate answers in Chinese, even if the reviews were written in other languages at first, such Japanese, English, and so on. This solution greatly improves the user’s ability to extract valuable insights from reviews authored by people speaking different languages by gathering the most pertinent information regardless of the language used in the reviews and translating them into the language requested by the user.

Consumers may browse and search for reviews in the language of their choice without encountering any language hurdles; you can then utilise Gemini to expand the solution by condensing or categorising the reviews that were sought for. By simply adding a search function, you may expand the application of this solution to any product, business reviews, or multilingual datasets, enabling customers to find the answers to their inquiries in the language of their choice. Try it out and think of additional useful data and AI tools you can create using BigQuery!

Read more on govindhtech.com

#Usingvectorindex#Multilingual#Embeddings#BigQuery#vectorsearch#Googlecloud#AItools#Swapout#Authorizations#technology#technews#news#govindhtech

0 notes

Text

Run MongoDB Atlas AWS for Scalable and Secure Applications

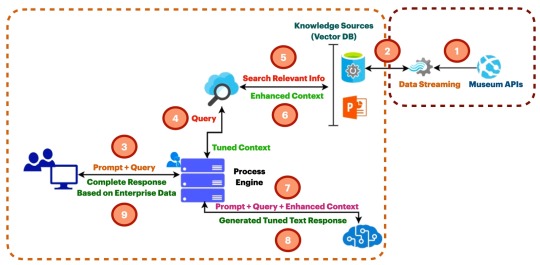

MongoDB Atlas AWS

Billions of parameters are used in the training of foundational models (FMs) on massive amounts of data. However, they must refer to a reliable knowledge base that is distinct from the model’s training data sources in order to respond to inquiries from clients on domain-specific private data. Retrieval Augmented Generation (RAG) is a technique that is widely used to accomplish this. Without requiring the model to be retrained, RAG expands the capabilities of FMs to certain domains by retrieving data from the company’s own or internal sources. It is an affordable method of enhancing model output to ensure that it is accurate, relevant, and helpful in a variety of situations.

Without needing to create unique connections for data sources and manage data flows, Knowledge Bases for Amazon Bedrock is a fully managed feature that aids in the implementation of the complete RAG workflow, from ingestion to retrieval and quick augmentation.

The availability of MongoDB Atlas as a vector store in Knowledge Bases for Amazon Bedrock has been announced by AWS. You may create RAG solutions to safely link FMs in Amazon Bedrock to your company’s private data sources with MongoDB Atlas vector store connection. With this integration, the vector engines for Amazon OpenSearch Serverless, Pinecone, Redis Enterprise Cloud, and Amazon Aurora PostgreSQL-Compatible Edition are now supported by Knowledge Bases for Amazon Bedrock.

MongoDB Atlas and Amazon Bedrock Knowledge Bases build RAG apps

The vectorSearch index type in MongoDB Atlas powers vector search. The vector type of the field containing the vector data must be specified in the index specification. You must first establish an index, ingest source data, produce vector embeddings, and store them in a MongoDB Atlas collection before you can use MongoDB Atlas vector search in your application. In order to execute searches, you must first convert the input text into a vector embedding. After that, you may execute vector search queries against fields that are indexed as the vector type in a vectorSearch type index using an aggregation pipeline stage.

The majority of the labor-intensive work is handled by the MongoDB Atlas integration with Knowledge Bases for Amazon Bedrock. You can integrate RAG into your apps once the knowledge base and vector search index are set up. Your input (prompt) will be transformed into embeddings by Amazon Bedrock, which will then query the knowledge base, add contextual information from the search results to the FM prompt, and return the resulting response.

What is MongoDB Atlas

The most sophisticated cloud database service available, featuring built in automation for workload and resource optimization, unparalleled data distribution and mobility across AWS, Azure, and Google Cloud, and much more.

With a cloud database at its core, MongoDB Atlas is an integrated set of data services that streamlines and expedites the process of building with data. With a developer data platform that assists in resolving your data difficulties, you can build more quickly and intelligently.

Use apps anywhere

Use Atlas to run somewhere in the world. With AWS, Azure, and Google Cloud, you can deploy a database in over 90 regions and grow it to be global, multi-regional, or multi-cloud as needed. For extreme low latency and stringent compliance, pin data to specific areas.

Scale operations with assurance

Construct with assurance. Best practices are pre installed in Atlas, and it cleverly automates necessary tasks to guarantee that your data is safe and your database functions as it should.

Decrease the intricacy of the architecture

Using a single query API, access and query your data for any use case. The whole AWS platform, including full-text search, analytics, and visualisations, can instantaneously access data stored in Atlas.

Pay attention to the shipping features

Whether traffic triples or new features are added, don’t stop your apps from operating. In order to ensure that you always have the database resources you need to keep creating, Atlas includes sophisticated speed optimization capabilities.

MongoDB Atlas on AWS

Utilise AWS with MongoDB to create intelligent, enterprise ready applications. To assist you in rapidly developing reliable new AI experiences, MongoDB Atlas interacts with essential AWS services and unifies operational data, metadata, and vector data into a single platform. Streamline your data management, spur large scale innovation, and provide precise user experiences supported by up to date company data.

By default, MongoDB Atlas is secure. It makes use of security elements that are already present across your deployment. Robust security safeguards safeguard your data in accordance with HIPAA, GDPR, ISO 27001, PCI DSS, and other requirements.

Complex RAG implementations are easier to construct when an operational database has native vector search capabilities. Regarding retrieval-augmented generation (RAG), a technique that produces responses that are more accurate by utilizing Large Language Models (LLM) supplemented with your own data. You don’t need a separate add-on vector database in order to store, index, and query vector embeddings of your data using MongoDB.

Use Atlas Device Sync to transform your mobile app development process. With the help of this fully managed device to cloud synchronization solution, your team will be able to create better mobile apps more quickly.

MongoDB Atlas pricing

Depending on your requirements, MongoDB Atlas offers several different pricing tiers:

Is MongoDB Atlas Free

Free Tier: Small production, testing, and development workloads are best suited for this tier. It has 50 writes, 100 million reads, and 512MB of storage each month.

MongoDB Atlas Cost

Shared Cluster: This plan, which costs $9 per month, is best suited for individual projects or applications with little traffic.

Serverless: This tier is a suitable choice for apps with irregular or fluctuating traffic because it costs $0.10 per million reads.

Dedicated Cluster: This tier, which begins at $57 per month (calculated based on $0.08 per hour), provides the greatest control and scalability. Production applications with heavy workloads are best suited for it.

Keep in mind that these are only estimates; the real cost will vary depending on your unique usage, including the amount of storage needed, network traffic, backup choices, and other features.

Currently accessible

Both the US West (Oregon) and US East (North Virginia) regions offer access to the MongoDB Atlas vector store in Knowledge Bases for Amazon Bedrock. For upcoming updates, make sure to view the entire Region list.

Read more on govindhtech.com

#MongoDB#AmazonBedrock#RAGSolutions#GoogleCloud#Azure#largelanguagemodels#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes