
Web Resize on-the-fly: up to one thousand images per second on Tesla V100 GPU

Fastvideo has been developing a GPU-based image processing SDK since 2011, and we have achieved outstanding software performance on NVIDIA GPUs (mobile, laptop, desktop, server). We implemented the first JPEG codec on CUDA, which is still the fastest solution on the market. Apart from JPEG, we have also released a JPEG2000 codec on GPU and an SDK with high-performance image processing algorithms on CUDA. Our SDK offers exceptional speed for many imaging applications, especially where CPU-based solutions cannot deliver sufficient performance or low enough latency. Now we would like to introduce our solution for resizing images on the fly.

JPEG Resize on-the-fly

Many imaging applications need image resize, and quite often the images are JPEGs. This makes the task more complicated: we can't resize directly, because the images are compressed. The solution is not difficult: decode the image, resize it, and encode it again to get the resized JPEG. Nevertheless, difficulties arise when we need to resize many millions of images every day, and then performance optimization becomes the main question. We need not only to get it right, but to do it very fast. The good news is that this can be done.

The standard set of demo applications in the Fastvideo SDK for NVIDIA GPUs includes a sample application for JPEG resize. It is supplied both as binaries and with source code, so users can easily integrate it into their software. This software solves the problem of fast resize (JPEG resize on-the-fly), which is essential for many high-performance applications, including high-load web services. The application resizes JPEGs very fast, and users can test the binary to check image quality and performance.

Taking a high-load web application as an example, we can formulate the task as follows: we have a big database of JPEG images and need to resize them quickly with minimum latency. This is also a problem for big sites with responsive design: how do we prepare a set of images at optimal resolutions to minimize traffic, and do it as fast as possible?

First we need to answer the question “Why JPEG?”. Modern internet services get most of their images from users, who take them with mobile phones or cameras. For such images JPEG is the standard and reasonable choice. Phones and cameras do support other formats, but they are not as widespread as JPEG. Many images are stored as WebP, but that format is still less popular than JPEG. Moreover, WebP encoding and decoding are much slower than JPEG, and this is also very important.

To get low-latency responses, such high-load web services often store multiple copies of the same image at different resolutions. This approach increases storage costs, especially for high-performance applications, web services and big image databases. The idea behind a better solution is simple: store just one JPEG image in the database instead of a series of copies and transform it to the desired resolution on the fly, that is, very fast and with minimum latency.

How to prepare image database

We store all images in the database in JPEG format, but it is not a good idea to use them “as is”. It is important to prepare all images in the database for fast decoding. That is why we pre-process all images offline to insert so-called “JPEG restart markers” into each image. The JPEG Standard allows such markers, and most JPEG decoders can process images with these markers without problems. Most smartphones and cameras don't produce JPEGs with restart markers, so we add them with our software. This is a lossless procedure: the image content doesn't change, though the file size becomes slightly larger.
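To check whether a stored JPEG already has restart markers, it is enough to look for the DRI (Define Restart Interval, marker 0xFFDD) segment in the file header, as defined by the JPEG Standard. Below is a minimal header walker, assuming a baseline JPEG where DRI appears before the first SOS (start of scan) marker; the function name `restart_interval` is our own and not part of Fastvideo SDK.

```python
def restart_interval(jpeg: bytes) -> int:
    """Return the restart interval (in MCUs) from the DRI segment, or 0 if absent."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker == 0xFF:          # optional fill byte before a marker
            pos += 1
            continue
        if marker == 0xDA:          # SOS: header is over, entropy-coded data follows
            break
        length = int.from_bytes(jpeg[pos + 2:pos + 4], "big")
        if marker == 0xDD:          # DRI: 2-byte length (=4), then 2-byte interval
            return int.from_bytes(jpeg[pos + 4:pos + 6], "big")
        pos += 2 + length           # skip the marker (2 bytes) plus its segment
    return 0
```

A result of 0 means the image has no restart markers and should go through the offline preparation step.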

To make the full solution efficient, we can use statistics on the most frequent user device resolutions. Users view pictures on phones, laptops and PCs, and these pictures often occupy only part of the screen, so image resolutions don't need to be very large. This lets us conclude that most images in our database could have resolutions of no more than 1K or 2K. We will consider both options to evaluate latency and performance. If a user device needs a bigger resolution, we can simply upscale. It is also possible to choose a bigger default image resolution for the database; the general solution stays the same.

For practical purposes we consider JPEG compression parameters that correspond to “visually lossless compression”: JPEG quality around 90% with 4:2:0 or 4:4:4 subsampling. To evaluate JPEG resize time, we test downscaling to 50% in both width and height. In real life various scaling coefficients could be used, but 50% can be considered a standard test case.

Algorithm description for JPEG Resize on-the-fly software

This is the full image processing pipeline for fast JPEG resize that we've implemented in our software:

Copy JPEG images from database to system memory

Parse JPEG and check EXIF sections (orientation, color profile, etc.)

If the JPEG image contains a color profile, read it from the file header and save it for later use

Copy JPEG image from CPU to GPU memory

JPEG decoding

Image resize with the Lanczos algorithm (50% downscaling as an example)

Sharpening

JPEG encoding

Copy new image from GPU to system memory

Add the previously saved color profile to the image header (EXIF)
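The resize step in the list above uses the Lanczos algorithm. As an illustration only (not Fastvideo's GPU implementation), here is a minimal pure-Python sketch of Lanczos-3 resampling for one row of pixel values; a 2D resize applies it to rows and then to columns. The function names and the half-pixel-center convention are our assumptions.

```python
import math

def lanczos(x: float, a: int = 3) -> float:
    """Lanczos-a kernel: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def resize_row(row: list[float], out_len: int, a: int = 3) -> list[float]:
    """Resample one row of samples to out_len samples with a Lanczos-a filter."""
    in_len = len(row)
    scale = in_len / out_len            # 2.0 for 50% downscaling
    stretch = max(scale, 1.0)           # widen the kernel when downscaling (anti-aliasing)
    support = a * stretch
    out = []
    for i in range(out_len):
        center = (i + 0.5) * scale - 0.5    # output pixel center in input coordinates
        acc = wsum = 0.0
        for j in range(math.ceil(center - support), math.floor(center + support) + 1):
            w = lanczos((j - center) / stretch, a)
            acc += w * row[min(max(j, 0), in_len - 1)]  # clamp: replicate edge pixels
            wsum += w
        out.append(acc / wsum)              # normalize so the weights sum to 1
    return out
```

For 50% downscaling `scale` is 2, so the stretched kernel covers 12 input pixels per output pixel, which suppresses aliasing.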

We could also implement the same solution with better precision. Before resizing, we could apply inverse gamma to all color components of each pixel in order to resize in linear space, and then apply the forward gamma to all pixels right after sharpening. The visual difference is not big, though it is noticeable, and the computational cost of this modification is low, so it can easily be done: we just need to add inverse and forward gamma to the image processing pipeline on GPU.

There is one more interesting approach to the same JPEG resize task. We can do JPEG decoding on a multicore CPU with libjpeg-turbo, decoding each image in a separate CPU thread, while the rest of the image processing is done on GPU. With a sufficient number of CPU cores, decoding throughput on CPU can be high, but latency will increase significantly. If latency is not a priority, this approach can be very fast as well, especially when the original image resolution is small.
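The effect of resizing in linear space is easiest to see on a black and white pixel pair. This sketch assumes a plain power-law gamma of 2.2 (the real sRGB curve is piecewise) and uses a simple 2:1 box average instead of Lanczos to keep it short; all names are hypothetical.

```python
GAMMA = 2.2  # assumption: simple power-law gamma; real sRGB uses a piecewise curve

def to_linear(v: float) -> float:
    """8-bit gamma-encoded value -> linear light in [0, 1]."""
    return (v / 255.0) ** GAMMA

def to_gamma(lin: float) -> float:
    """Linear light in [0, 1] -> 8-bit gamma-encoded value."""
    return 255.0 * lin ** (1.0 / GAMMA)

def downscale2(row: list[float], linear: bool = True) -> list[float]:
    """Halve a row by averaging pixel pairs, optionally in linear light."""
    out = []
    for a, b in zip(row[0::2], row[1::2]):
        if linear:
            out.append(to_gamma((to_linear(a) + to_linear(b)) / 2))
        else:
            out.append((a + b) / 2)
    return out
```

`downscale2([0, 255], linear=False)` gives 127.5, while the linear-light version gives about 186, which preserves the average light intensity of the original pair.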

General requirements for fast jpg resizer

The main idea is to avoid storing dozens of copies of the same image at different resolutions. We can create an image with the required resolution immediately after receiving an external request. This reduces storage size, because we need just one original image instead of a series of copies.

We have to accomplish the JPEG resize task very quickly: fast responses to client requests determine service quality.

The image quality of the resized version should be high.

To ensure precise color reproduction, we need to preserve the color profile from the EXIF of the original image.

Image file size should be as small as possible, and image resolution should match the window size on the client's device:

a) If the image size differs from the window size, the client's device (smartphone, tablet, laptop, PC) will apply hardware-based resize right after decoding the image. In OpenGL such a resize is always bilinear, which could create artifacts or moiré on images with high-frequency detail.

b) Screen resize consumes extra energy on the device.

c) With multiple image copies at different resolutions, in most cases we will not be able to match the image resolution exactly to the window size, so we will send more traffic than necessary.
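Point c) can be quantified with a little arithmetic. Assuming hypothetical stored copies 320, 640 and 1280 pixels wide and a client window 700 pixels wide, the server must send the 1280-pixel copy, roughly 3.3 times more pixels than needed. The helper below is illustrative only; pixel count is just a rough proxy for JPEG file size.

```python
def smallest_covering(stored_widths: list[int], requested: int) -> tuple[int, float]:
    """Pick the smallest stored width >= requested; return it and the pixel overhead.

    Overhead is (chosen / requested) ** 2, assuming the same aspect ratio.
    If no copy is wide enough, the widest one is returned (overhead < 1,
    and the client would have to upscale).
    """
    candidates = [w for w in sorted(stored_widths) if w >= requested]
    chosen = candidates[0] if candidates else max(stored_widths)
    overhead = (chosen / requested) ** 2
    return chosen, overhead
```

With resize on the fly the overhead is always 1.0, because the server produces exactly the requested width.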

Full pipeline for web resize, step by step

We collect images from users in any format and resolution

Offline, using ImageMagick, which supports various image formats, we convert the original images to standard 24-bit BMP/PPM format, apply high-quality downscaling to 1K or 2K, and then do JPEG encoding with restart markers embedded. The last step can be done either with the jpegtran utility on CPU or with the Fastvideo JPEG Codec on GPU; both can work with JPEG restart markers.

Finally, we create a database of such 1K or 2K images to work with.
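For the CPU path, jpegtran (from libjpeg / libjpeg-turbo) can insert restart markers losslessly with its `-restart N` switch, which emits a marker every N MCU rows; `-copy all` keeps EXIF and ICC data. The wrapper below only builds the argument list; the function name and the choice of one MCU row per interval are our assumptions, not a Fastvideo recommendation.

```python
def jpegtran_restart_cmd(src: str, dst: str, mcu_rows: int = 1) -> list[str]:
    """Build a jpegtran command that losslessly inserts restart markers.

    -restart N : emit a restart marker every N MCU rows
    -copy all  : keep all extra markers (EXIF, ICC color profile)
    -outfile   : write the result to dst instead of stdout
    """
    return ["jpegtran", "-restart", str(mcu_rows), "-copy", "all",
            "-outfile", dst, src]
```

On a machine with jpegtran installed, run it with `subprocess.run(jpegtran_restart_cmd("in.jpg", "out.jpg"), check=True)`.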

After receiving user’s request, we get full info about required image and its resolution.

Find the required image in the database, copy it to system memory and notify the resizing software that a new image is ready for processing.

On GPU we do the following: decoding, resizing, sharpening, encoding. After that the software copies the compressed image to system memory and adds the color profile to EXIF. Now the image is ready to be sent to the user.

We can run several threads or processes of the JPEG Resize application on each GPU to scale performance. This helps because GPU occupancy is not high when working with 1K and 2K images. Usually 2-4 threads/processes are sufficient to get maximum performance on a single GPU.
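Running 2-4 workers per GPU is a standard worker-pool pattern. Here is a minimal Python sketch with `concurrent.futures`, where `resize_one` is a hypothetical callable wrapping the decode, resize, sharpen and encode steps for one image. In Python this only overlaps real work if that callable releases the GIL while the GPU is busy, as native extensions typically do; separate processes avoid the issue entirely.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable

def run_pipeline_workers(jobs: Iterable[bytes],
                         resize_one: Callable[[bytes], bytes],
                         workers: int = 3) -> list[bytes]:
    """Process jobs with a fixed number of concurrent workers sharing one GPU.

    resize_one is a hypothetical per-image callable (decode -> resize ->
    sharpen -> encode); each worker would own its own GPU buffers.
    Results are returned in the same order as the input jobs.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(resize_one, jobs))
```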

The whole system should be built on professional GPUs like NVIDIA Tesla P40 or V100. This is vitally important, because NVIDIA GeForce GPUs are not intended for 24/7 operation at maximum performance over years. NVIDIA Quadro GPUs have multiple monitor outputs, which are not needed for fast JPEG resize. GPU memory requirements are very low, so we don't need GPUs with a large amount of memory.

As an additional optimization, we can also create a cache for the most frequently processed images to get faster access to them.
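Such a cache can be a simple LRU (least recently used) map keyed by everything that determines the output bytes. This sketch assumes a hypothetical key of (image_id, width, height, quality) and an in-process cache; a production service might use an external cache instead.

```python
from collections import OrderedDict
from typing import Hashable, Optional

class ResizeCache:
    """Tiny LRU cache for resized JPEGs keyed by (image_id, width, height, quality)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, bytes]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)         # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, jpeg: bytes) -> None:
        self._items[key] = jpeg
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
```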

Software parameters for JPEG Resize

Width and height of the resized image can be arbitrary and are defined with one-pixel precision. It's a good idea to preserve the original aspect ratio of the image, though the software can also work with any width and height.
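When only the output width is given (as with `-outputWidth 640` in the command line example in the performance section), the height that preserves the aspect ratio follows directly. This is a hypothetical helper using round-half-up integer arithmetic; how the sample application itself rounds is not stated.

```python
def output_size(in_w: int, in_h: int, out_w: int) -> tuple[int, int]:
    """Height for a requested width that keeps the source aspect ratio.

    Integer arithmetic with round-half-up, so 562.5 becomes 563.
    """
    out_h = max(1, (in_h * out_w + in_w // 2) // in_w)
    return out_w, out_h
```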

We utilize JPEG subsampling modes 4:2:0 and 4:4:4.

4:4:4 gives the maximum image quality, while 4:2:0 gives the minimum file size. Subsampling is possible because the human visual system is more sensitive to the luma component of an image than to chroma.
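The saving from 4:2:0 is easy to estimate: both chroma planes are halved in each direction, so the number of samples to encode drops from 3·W·H to 1.5·W·H. A small sketch (function name ours; MCU padding to 8- or 16-pixel boundaries is ignored):

```python
import math

def jpeg_sample_count(width: int, height: int, subsampling: str) -> int:
    """Number of Y + Cb + Cr samples before DCT for 4:4:4 or 4:2:0 subsampling."""
    luma = width * height
    if subsampling == "444":
        chroma = 2 * luma
    elif subsampling == "420":
        chroma = 2 * math.ceil(width / 2) * math.ceil(height / 2)
    else:
        raise ValueError("expected '444' or '420'")
    return luma + chroma
```

For a 1920×1080 image this gives 6,220,800 samples with 4:4:4 and 3,110,400 with 4:2:0.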

JPEG image quality and subsampling for all images in the database.

We do sharpening with 3×3 window and we can control sigma (radius).
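The article does not say exactly how the sharpening filter is built. One common formulation that matches "3×3 window with sigma" is an unsharp mask with a 3×3 Gaussian blur; the sketch below assumes that formulation and an `amount` of 1.0, both our own choices. Results are not clamped to the 8-bit range.

```python
import math

def gaussian3x3(sigma: float) -> list[list[float]]:
    """Normalized 3x3 Gaussian kernel for the given sigma."""
    k = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma)) for x in (-1, 0, 1)]
         for y in (-1, 0, 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def unsharp3x3(img: list[list[float]], sigma: float, amount: float = 1.0) -> list[list[float]]:
    """Sharpen: img + amount * (img - blur(img)), blur = 3x3 Gaussian, edges clamped."""
    h, w = len(img), len(img[0])
    k = gaussian3x3(sigma)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            blur = sum(k[dy + 1][dx + 1]
                       * img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                       for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            row.append(img[y][x] + amount * (img[y][x] - blur))
        out.append(row)
    return out
```

A flat area stays unchanged, because the blur equals the original there; only edges and fine detail are amplified.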

We need to specify JPEG quality and subsampling mode for the output image as well; they don't have to be the same as for the input image. JPEG quality 90% is usually considered visually lossless, which means that users can't see compression artifacts under standard viewing conditions. In general, one can try JPEG quality up to 93-95%, but then file sizes will be bigger for both input and output images.

Important limitations for Web Resizer

We can get very fast JPEG decoding on GPU only if all our images have built-in restart markers. Restart markers split the entropy-coded data into independent intervals (the DC predictors are reset at each marker), so these intervals can be decoded in parallel. Without restart markers, JPEG decoding cannot be parallelized and we will not be able to get high performance at the decoding stage. That's why we need to prepare the database with images that have a sufficient number of restart markers.
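Inside the entropy-coded data, restart markers are the byte pairs 0xFFD0 to 0xFFD7, while stuffed data bytes appear as 0xFF00, so the intervals can be located with a simple scan. This sketch splits a scan into independent intervals that separate threads could decode; the function name is ours, and a real decoder also needs the header tables and MCU counts.

```python
def split_at_restart_markers(scan: bytes) -> list[bytes]:
    """Split entropy-coded JPEG data into restart intervals.

    Byte pairs 0xFF 0xD0..0xD7 are RST0..RST7 markers and are dropped; stuffed
    0xFF 0x00 pairs belong to the data and are kept for the Huffman decoder.
    """
    segments = []
    start = 0
    i = 0
    while i < len(scan) - 1:
        if scan[i] == 0xFF and 0xD0 <= scan[i + 1] <= 0xD7:
            segments.append(scan[start:i])   # interval ends right before the marker
            i += 2
            start = i
        elif scan[i] == 0xFF and scan[i + 1] == 0x00:
            i += 2                           # stuffed byte: part of the data
        else:
            i += 1
    segments.append(scan[start:])            # last interval has no trailing marker
    return segments
```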

At the moment we believe that JPEG is the best choice for such a task, because JPEG codecs on GPU are much faster than codecs for competing formats: WebP, PNG, TIFF, JPEG2000, etc. This is not just a matter of format choice; it is a matter of which high-performance codecs are available for these formats.

The standard image resolution for the prepared database could be 1K, 2K, 4K or anything else. Our solution works with any image size, but total performance will differ.

Performance measurements for resize of 1K and 2K jpg images

We tested on NVIDIA Tesla V100 (Windows Server 2016, 64-bit, driver 24.21.13.9826) with 24-bit images 1k_wild.ppm and 2k_wild.ppm at 1K and 2K resolutions (1280×720 and 1920×1080). Tests were done with different numbers of threads running on the same GPU. To process 2K images we need around 110 MB of GPU memory per thread; four threads need up to 440 MB.

First we encoded the test images to JPEG with quality 90% and 4:2:0 or 4:4:4 subsampling. Then we ran the test application, which did decoding, resizing, sharpening and encoding with the same quality and subsampling. Input JPEG images resided in system memory, and we copied the processed image from GPU back to system memory as well. We measured the timing of this procedure.

Command line example to process 1K image: PhotoHostingSample.exe -i 1k_wild.90.444.jpg -o 1k_wild.640.jpg -outputWidth 640 -q 90 -s 444 -sharp_after 0.95 -repeat 200

Performance for 1K images

| N | Quality | Subsampling | Resize | Threads | FPS (4:4:4 / 4:2:0) |
|---|---|---|---|---|---|
| 1 | 90% | 4:4:4 / 4:2:0 | 2× | 1 | 868 / 682 |
| 2 | 90% | 4:4:4 / 4:2:0 | 2× | 2 | 1039 / 790 |
| 3 | 90% | 4:4:4 / 4:2:0 | 2× | 3 | 993 / 831 |
| 4 | 90% | 4:4:4 / 4:2:0 | 2× | 4 | 1003 / 740 |

Performance for 2K images

| N | Quality | Subsampling | Resize | Threads | FPS (4:4:4 / 4:2:0) |
|---|---|---|---|---|---|
| 1 | 90% | 4:4:4 / 4:2:0 | 2× | 1 | 732 / 643 |
| 2 | 90% | 4:4:4 / 4:2:0 | 2× | 2 | 913 / 762 |
| 3 | 90% | 4:4:4 / 4:2:0 | 2× | 3 | 891 / 742 |
| 4 | 90% | 4:4:4 / 4:2:0 | 2× | 4 | 923 / 763 |

Input JPEG images with 4:2:0 subsampling lead to slower performance, but both input and output images are smaller in that case. With 4:4:4 subsampling we get better performance, though the images are bigger. Total performance is mostly limited by the JPEG decoder, and this is the key algorithm to improve to get a faster solution in the future.

Summary

From the above tests we see that on just one NVIDIA Tesla V100 GPU, resize performance could reach 1000 fps for 1K images and 900 fps for 2K images at specified test parameters for JPEG Resize. To get maximum speed, we need to run 2-4 threads on the same GPU.

A latency of around one millisecond is a very good result: with a single thread, 868 fps for 1K images corresponds to roughly 1.2 ms per image. To the best of our knowledge, such latency can't be achieved on CPU for this task, and this is one more important argument for GPU-based resize of JPEG images in high-performance professional solutions.

To process one billion JPEG images at 1K or 2K resolution per day, we need up to 16 NVIDIA Tesla V100 GPUs for the JPEG Resize on-the-fly task. Some of our customers have already implemented this solution at their facilities; others are currently testing the software.
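The GPU count follows from simple arithmetic: one GPU at 1000 fps handles 86.4 million images per day. The helper below (name hypothetical) assumes a perfectly uniform load; we read the article's figure of up to 16 GPUs as leaving headroom for peak traffic and slower configurations, which is our interpretation.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def gpus_needed(images_per_day: int, fps_per_gpu: int) -> int:
    """GPUs needed to sustain a uniform daily load at a given per-GPU throughput."""
    per_gpu_per_day = fps_per_gpu * SECONDS_PER_DAY
    return -(-images_per_day // per_gpu_per_day)   # integer ceiling division
```

At 1000 fps (1K images) this gives 12 GPUs for one billion images per day, and at 900 fps (2K images) it gives 13.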

Please note that GPU-based resize could be useful not only for high-load web services. There are many more high-performance imaging applications where fast resize is important; for example, it could be used at the final stage of almost any image processing pipeline before output to a monitor. The software can work with any NVIDIA GPU: mobile, laptop, desktop, server.

Benefits of GPU-based JPEG Resizer

Reduced storage size

Less infrastructure costs on initial hardware and software purchasing

Better quality of service due to low latency response

High image quality for resized images

Minimal traffic

Less power consumption on client devices

Fast time-to-market software development on Linux and Windows

Outstanding reliability and speed of heavily-tested resize software

We don't need to store multiple image resolutions, so we don't have additional load to file system

Fully scalable solution which is applicable both to a big project and to a single device

Better ROI due to GPU usage and faster workflow

To whom it may concern

Fast resize of JPEG images is a key issue for high-load web services, big online stores, social networks, online photo management and sharing applications, e-commerce services and enterprise-level software. Fast resize delivers better results in less time and at lower cost.

Software developers could benefit from GPU-based library with latency in the range of several milliseconds to resize jpg images on GPU.

This solution could also be an alternative to the NVIDIA DALI project for fast JPEG loading at the training stage of Machine Learning or Deep Learning frameworks. We can offer very high JPEG decoding performance together with resize and other image augmentation features on GPU, which makes this solution useful for fast data loading during CNN training. Please contact us if you are interested.

Roadmap for jpg resize algorithm

Apart from the JPEG codec, resize and sharpening, we can also add crop, color correction, gamma, brightness, contrast and rotation by 90/180/270 degrees; these modules are ready.

Advanced file format support (JP2, TIFF, CR2, DNG, etc.)

Parameter optimizations for NVIDIA Tesla P40 or V100.

Further JPEG Decoder performance optimization.

Implementation of batch mode for image decoding on GPU.

Useful links

Full list of features from Fastvideo Image Processing SDK

Benchmarks for image processing algorithms from Fastvideo SDK

Update

The latest version of the software offers 1400 fps performance on Tesla V100 for 1K images at the same testing conditions.

Original article: https://www.fastcompression.com/blog/web-resize-on-the-fly-one-thousand-images-per-second-on-tesla-v100-gpu.htm


Fastvideo SDK vs NVIDIA NPP Library

Author: Fyodor Serzhenko

Why is Fastvideo SDK better than NPP for camera applications?

What is Fastvideo SDK?

Fastvideo SDK is a set of software components that together form a high-quality image processing pipeline for camera applications. It covers all image processing stages, from raw image acquisition from the camera to JPEG compression and storage to RAM or SSD. All image processing is done entirely on GPU, which delivers real-time or even faster performance for the full pipeline. We also offer a high-speed imaging SDK for non-camera applications on NVIDIA GPUs: offline raw processing, high-performance web, digital cinema, video walls, FFmpeg codecs and filters, 3D, AR/VR, AI, etc.

Who are Fastvideo SDK customers?

Fastvideo SDK is compatible with Windows/Linux/ARM and is mostly intended for camera manufacturers and system integrators developing end-user solutions containing video cameras as a part of their products.

The other type of Fastvideo SDK customers are developers of new hardware or software solutions in various fields: digital cinema, machine vision and industrial, transcoding, broadcasting, medical, geospatial, 3D, AR/VR, AI, etc.

All the above customers need faster image processing with higher quality and better latency. In most cases CPU-based solutions are unable to meet such requirements, especially for multicamera systems.

Customer pain points

In our experience, customers developing end-user solutions usually have to deal with the following obstacles.

Before starting to create a product, customers need to know the image processing performance, quality and latency for the final application.

Customers need reliable software which has already been tested and will not glitch when it is least expected.

Customers are looking for ways to create new solutions with higher performance and better image quality.

Customers need external expertise in image processing, GPU software development and camera applications.

Customers have limited (time/human) resources to develop end-user solutions bound by contract conditions.

They need a ready-made prototype as a part of the solution to demonstrate a proof of concept to the end user.

They want immediate support and answers to their questions regarding the fast image processing software's performance, image quality and other technical details, which can be delivered only by industry experts with many years of experience.

Fastvideo SDK business benefits

Fastvideo SDK as a part of complex solutions allows customers to gain competitive advantages.

Customers are able to design solutions which earlier may have seemed to be impossible to develop within required timeframes and budgets.

The product helps to decrease the time to market of end-user solutions.

At the same time, it increases overall end-user satisfaction with reliable software and prompt support.

As a technology solution, Fastvideo SDK improves image quality and processing performance.

Fastvideo serves customers as a technology advisor in the field of fast image processing: the team of experts provides end-to-end service to customers. That means that all customer questions regarding Fastvideo SDK, as well as any other technical questions about fast image processing are answered in a timely manner.

Fastvideo SDK vs NVIDIA NPP comparison

NVIDIA NPP can be described as a general-purpose solution: NVIDIA implemented a huge set of functions intended for applications in various industries, and NPP mainly focuses on generic image processing tasks. Moreover, NPP lacks consistency in feature delivery, as some specific image processing modules are not present in the library. This leads us to the conclusion that NPP is a good solution only for basic camera applications. It is just a set of functions which users can use to develop their own pipeline.

Fastvideo SDK, on the other hand, is designed to implement a full 16/32-bit image processing pipeline on GPU for camera applications (machine vision, scientific, digital cinema, etc). Our end-user applications are based on Fastvideo SDK, and we collect customer feedback to improve the SDK’s quality and performance. We are armed with profound knowledge of customer needs and offer an exceptionally reliable and heavily tested solution.

Fastvideo SDK uses an approach based on components, not on functions as in NPP. It is easier to build a pipeline from components, because their inputs and outputs are standardized. Every component executes a complete operation and can have a complex internal architecture, whereas NPP provides individual functions that users must combine themselves. As a result, developing an application with Fastvideo SDK is much less complex than creating a solution based on NVIDIA NPP.

The Fastvideo JPEG codec and many other SDK features have been heavily tested by our customers for many years, with a combined throughput of more than a million images per second. This is a question of software reliability, and we consider it one of our most important advantages.

Most Fastvideo SDK components (debayer and codecs) offer both higher performance and better image quality than the NPP alternatives. This is also true for embedded solutions on Jetson, where computing performance is quite limited. For example, NVIDIA NPP only has a bilinear debayer, which can be regarded as a low-quality solution best suited for software prototype development.

Summing up this section, Fastvideo SDK has the following technological advantages over NPP in terms of image processing modules for camera applications:
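The difference between a component-based and a function-based design can be sketched in a few lines. All class names here are hypothetical and unrelated to the actual Fastvideo SDK API: each component exposes the same `process(frame) -> frame` interface, so a pipeline is just an ordered list of components.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence

Frame = List[float]  # stand-in for an image buffer; a real SDK would use GPU memory

class Component(ABC):
    """A complete processing step with a standardized frame-in/frame-out interface."""

    @abstractmethod
    def process(self, frame: Frame) -> Frame: ...

class BlackLevel(Component):
    def __init__(self, black: float):
        self.black = black

    def process(self, frame: Frame) -> Frame:
        return [max(v - self.black, 0.0) for v in frame]

class Gain(Component):
    def __init__(self, gain: float):
        self.gain = gain

    def process(self, frame: Frame) -> Frame:
        return [v * self.gain for v in frame]

class Pipeline:
    def __init__(self, components: Sequence[Component]):
        self.components = list(components)

    def run(self, frame: Frame) -> Frame:
        for component in self.components:   # output of one step feeds the next
            frame = component.process(frame)
        return frame
```

Adding a new stage means writing one more `Component` subclass; the pipeline code itself does not change.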

High-performance codecs: JPEG, JPEG2000 (lossless and lossy)

High-performance 12-bit JPEG encoder

Raw Bayer Codec

Flat-Field Correction together with dark frame subtraction

Dynamic bad pixel suppression in Bayer images

Four high quality demosaicing algorithms

Wavelet-based denoiser on GPU for Bayer and RGB images

Filters and codecs on GPU for FFmpeg

Other modules like color space and format conversions

To summarize, Fastvideo SDK offers an image processing workflow which is standard for digital cinema applications, and could be very useful for other imaging applications as well.

Why should customers consider Fastvideo SDK instead of NVIDIA NPP?

Fastvideo SDK provides better image quality and processing performance for implementing key algorithms for camera applications. The real-time mode is an essential requirement for any camera application, especially for multi-camera systems.

Over the last few years, we've tested NPP intensely and encountered software bugs which weren't fixed. In the meantime, if customers come to us with any bug in Fastvideo SDK, we fix it within a couple of days, because Fastvideo possesses all the source code and the image processing modules are implemented by the Fastvideo development team. Support is our priority: that's why our customers can rely on our SDK.

We offer custom development to meet our customers' specific requirements. Our development team can build GPU-based image processing modules from scratch according to a customer's request, whereas NVIDIA offers nothing of the kind.

We are focused on high-performance camera applications and we have years of experience, and our solutions have been heavily tested in many projects. For example, our customer vk.com has been processing 400,000 JPG images per second for years without any issue, which means our software is extremely reliable.

Software downloads to evaluate the Fastvideo SDK

GPU Camera Sample application with source codes including SDKs for Windows/Linux/ARM - https://github.com/fastvideo/gpu-camera-sample

Fast CinemaDNG Processor software for Windows and Linux - https://www.fastcinemadng.com/download/download.html

Demo applications (JPEG and J2K codecs, Resize, MG demosaic, MXF player, etc.) from https://www.fastcompression.com/download/download.htm

Fast JPEG2000 Codec on GPU for FFmpeg

You can test your RAW/DNG/MLV images with the Fast CinemaDNG Processor software. To create your own camera application, download the source code from GitHub to get a ready solution quickly.

Useful links for projects with the Fastvideo SDK

1. Software from Fastvideo for GPU-based CinemaDNG processing is 30-40 times faster than Adobe Camera Raw:

http://ir-ltd.net/introducing-the-aeon-motion-scanning-system

2. Fastvideo SDK offers high-performance processing and real-time encoding of camera streams with very high data rates:

https://www.fastcompression.com/blog/gpixel-gmax3265-image-sensor-processing.htm

3. GPU-based solutions from Fastvideo for machine vision cameras:

https://www.fastcompression.com/blog/gpu-software-machine-vision-cameras.htm

4. How to work with scientific cameras with 16-bit frames at high rates in real-time:

https://www.fastcompression.com/blog/hamamatsu-orca-gpu-image-processing.htm

Original article: https://www.fastcompression.com/blog/fastvideo-sdk-vs-nvidia-npp.htm

Subscribe to our mail list: https://mailchi.mp/fb5491a63dff/fastcompression


Software for Hamamatsu ORCA Processing on GPU

Author: Fyodor Serzhenko

Scientific research demands modern cameras with low noise, high resolution, high frame rate and high bit depth. Such imaging solutions are indispensable in microscopy, cold atom gas experiments, astronomy, photonics, etc. Apart from outstanding hardware, high-performance software is needed to process the streams in real time with high precision.

Hamamatsu Photonics is a world leader in scientific cameras, light sources, photodiodes and advanced imaging applications. For high-performance scientific cameras and advanced imaging applications, Hamamatsu introduced ORCA cameras with outstanding features. ORCA cameras are high-precision instruments for scientific imaging thanks to on-board FPGA processing that enables intelligent data reduction, pixel-level calibrations, increased USB 3.0 frame rates, purposeful and innovative triggering capabilities, patented lightsheet readout modes and individual camera noise characterization.

ORCA-Flash4.0 cameras have always provided the advantage of low camera noise. In quantitative applications, like single molecule imaging and super resolution microscopy imaging, fully understanding camera noise is also important. Every ORCA-Flash4.0 V3 is carefully calibrated to deliver outstanding linearity, especially at low light, to offer improved photo response non-uniformity (PRNU) and dark signal non-uniformity (DSNU), to minimize pixel differences and to reduce fixed pattern noise (FPN).

The ORCA-Flash4.0 V3 includes patented Lightsheet Readout Mode, which takes advantage of sCMOS rolling shutter readout to enhance the quality of lightsheet images. When paired with W-VIEW GEMINI image splitting optics, a single ORCA-Flash4.0 V3 camera becomes a powerful dual wavelength imaging device. In "W-VIEW Mode" each half of the image sensor can be exposed independently, facilitating balanced dual color imaging with a single camera. And this feature can be combined with the new and patented "Dual Lightsheet Mode" to offer simultaneous dual wavelength lightsheet microscopy.

Applications for Hamamatsu ORCA cameras

Many scientific imaging tasks can be solved with Hamamatsu ORCA cameras:

Digital Microscopy

Light Sheet Fluorescence Microscopy

Live-Cell Microscopy and Live-Cell Imaging

Laser Scanning Confocal Microscopy

Biophysics and Biophotonics

Biological and Biomedical Sciences

Bioimaging and Biosensing

Neuroimaging

Hamamatsu ORCA-Flash4.0 V3 Digital CMOS camera (image from https://camera.hamamatsu.com/jp/en/product/search/C13440-20CU/index.html)

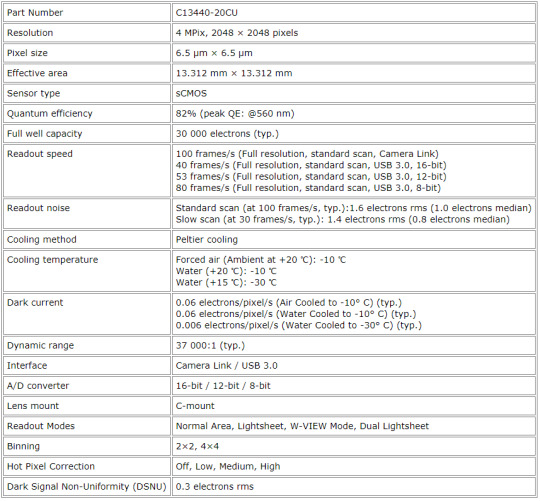

Hamamatsu ORCA-Flash4.0 V3 Digital CMOS camera: C13440-20CU

Image processing for Hamamatsu ORCA-Flash4.0 V3 Digital CMOS camera

This camera generates a high data rate. The maximum data rate of the Hamamatsu ORCA-Flash4.0 V3 can be estimated as 100 fps * 4 MPix * 2 Byte/Pix = 800 MByte/s. Since these are 16-bit monochrome frames, such a high data rate could become a bottleneck when saving the streams to SSD for long-term recording with a two-camera system, which is quite usual for microscopy applications.

If we consider a one-day recording duration, storage for such a stream could be a problem. A two-camera system generates 5.76 TB of data per hour, so it is a good idea to implement real-time compression to cut storage costs. To compress 16-bit frames, we can't use either JPEG or H.265 encoding, because they don't support more than 12-bit data. The best choice here is the JPEG2000 compression algorithm, which works natively with 16-bit images. On NVIDIA GeForce GTX 1080 we got around 240 fps for lossy JPEG2000 encoding with a compression ratio of around 20. This result can't be achieved on CPU, because the corresponding JPEG2000 implementations (OpenJPEG, JasPer, J2K, Kakadu) are much slower. Here you can see a JPEG2000 benchmark comparison of widespread J2K encoders.

The JPEG2000 lossless compression algorithm is also available, but it offers a much lower compression ratio, usually in the range of 2-2.5. Still, it's a useful option for storing the original data without any losses, which could be mandatory for a particular image processing workflow. In any case, lossless compression reduces the data rate, so it always helps with storage and performance.

The optimal compression ratio for lossy JPEG2000 encoding should be defined by checking different quality metrics and how well they correspond to the particular task. Still, there is no good alternative to fast JPEG2000 compression for 16-bit data, so JPEG2000 looks like the best fit. We would also recommend adding the following image processing modules to the full pipeline to get better image quality:
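The storage figures above are easy to reproduce. This sketch (function name ours) uses decimal units and the article's approximation of 4 MPix per frame.

```python
def storage_tb_per_hour(fps: float, megapixels: float, bytes_per_pixel: int,
                        cameras: int = 1, compression_ratio: float = 1.0) -> float:
    """Decimal terabytes written per hour for raw or compressed frame streams."""
    bytes_per_second = fps * megapixels * 1e6 * bytes_per_pixel * cameras
    return bytes_per_second * 3600 / compression_ratio / 1e12
```

Two cameras give 5.76 TB/hour uncompressed, about 0.29 TB/hour at a lossy compression ratio of 20, and about 2.3-2.9 TB/hour with lossless compression at a ratio of 2-2.5.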

Dynamic Bad Pixel Correction

Data linearization with 1D LUT

Dark Frame Subtraction

Flat Field Correction (vignette removal)

White/Black Points

Exposure Correction

Curves and Levels

Denoising

Crop, Flip/Flop, Rotate 90/180/270, Resize

Geometric transforms, Rotation to an arbitrary angle

Sharpening

Gamma Correction

Realtime Histogram and Parade

Mapping and monitor output

Output JPEG2000 encoding (lossless or lossy)
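As an illustration of what three of the modules above compute, here is a minimal pure-Python reference for dark frame subtraction, flat field correction and a 1D LUT (used here for gamma correction) on 16-bit data. It is a CPU sketch of the standard formulas, assuming flattened images stored as lists; the function names are hypothetical, and it is not Fastvideo's GPU implementation.

```python
from typing import List, Sequence

MAX16 = 65535  # 16-bit monochrome data


def subtract_dark(raw: Sequence[int], dark: Sequence[int]) -> List[int]:
    """Dark frame subtraction: remove the per-pixel offset, clamping at zero."""
    return [max(r - d, 0) for r, d in zip(raw, dark)]


def flat_field(img: Sequence[int], flat: Sequence[int], dark: Sequence[int]) -> List[int]:
    """Flat field correction: scale each pixel by mean(gain) / gain, where gain = flat - dark."""
    gain = [max(f - d, 1) for f, d in zip(flat, dark)]  # guard against division by zero
    mean_gain = sum(gain) / len(gain)
    return [min(round(p * mean_gain / g), MAX16) for p, g in zip(img, gain)]


def make_gamma_lut(gamma: float = 2.2) -> List[int]:
    """Precompute a 16-bit 1D LUT so the per-pixel work becomes a single table lookup."""
    return [round(MAX16 * (i / MAX16) ** (1.0 / gamma)) for i in range(MAX16 + 1)]


def apply_lut(img: Sequence[int], lut: Sequence[int]) -> List[int]:
    """Replace every pixel value with its LUT entry."""
    return [lut[p] for p in img]


# Tiny 2x2 example (flattened), hypothetical values
raw = [1100, 2100, 3100, 4100]
dark = [100, 100, 100, 100]
flat = [1100, 2100, 1100, 2100]  # pixels 0 and 2 receive half the light (vignetting)

corrected = flat_field(subtract_dark(raw, dark), flat, dark)
print(corrected)  # [1500, 1500, 4500, 3000]
```

On a GPU each of these steps maps naturally to one thread per pixel, which is why such a pipeline parallelizes well.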

The above image processing pipeline could be fully implemented on GPU to achieve realtime performance or even faster, using Fastvideo SDK and an NVIDIA GPU. The SDK is supplied with sample applications in source code, so users can create their own GPU-based applications very quickly. Fastvideo SDK is available for Windows, Linux and L4T.

There is also a gpu-camera-sample application based on Fastvideo SDK. You can download the source code and/or binaries for Windows from GitHub (gpu camera sample). The binaries can work with raw images in PGM format (8/12/16-bit), even without a camera. Users can add support for Hamamatsu cameras to process images in realtime on NVIDIA GPU.
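Since the sample can take PGM files instead of a live camera, it can be handy to inspect or generate test frames yourself. Below is a small, generic Python reader and writer for binary (P5) PGM, where samples with maxval above 255 are stored as big-endian 16-bit values. This follows the Netpbm format rather than gpu-camera-sample's own loader, and storing 12-bit data with maxval 4095 is an assumption, since some tools write 12-bit data into a 65535 range instead.

```python
import struct
from typing import List, Tuple


def write_pgm(width: int, height: int, maxval: int, pixels: List[int]) -> bytes:
    """Serialize a binary (P5) PGM; samples above 8 bits are stored as big-endian 16-bit."""
    header = f"P5\n{width} {height}\n{maxval}\n".encode("ascii")
    if maxval < 256:
        return header + bytes(pixels)
    return header + struct.pack(f">{len(pixels)}H", *pixels)


def read_pgm(data: bytes) -> Tuple[int, int, int, List[int]]:
    """Parse a binary (P5) PGM and return (width, height, maxval, pixels)."""
    tokens: List[bytes] = []
    pos, n = 0, len(data)
    # Header fields are separated by whitespace; '#' starts a comment running to end of line
    while len(tokens) < 4:
        while pos < n and data[pos:pos + 1].isspace():
            pos += 1
        if pos >= n:
            raise ValueError("truncated PGM header")
        if data[pos:pos + 1] == b"#":
            while pos < n and data[pos:pos + 1] not in (b"\n", b"\r"):
                pos += 1
            continue
        start = pos
        while pos < n and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])
    pos += 1  # a single whitespace byte separates maxval from the raster
    if tokens[0] != b"P5":
        raise ValueError("not a binary PGM (P5) file")
    width, height, maxval = (int(t) for t in tokens[1:])
    count = width * height
    if maxval < 256:
        pixels = list(data[pos:pos + count])
    else:
        pixels = list(struct.unpack(f">{count}H", data[pos:pos + 2 * count]))
    return width, height, maxval, pixels


# Round trip of a tiny 12-bit image
blob = write_pgm(2, 2, 4095, [0, 1, 2048, 4095])
print(read_pgm(blob))  # (2, 2, 4095, [0, 1, 2048, 4095])
```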

Fastvideo SDK for GPU processing of raw images from Hamamatsu ORCA sCMOS cameras

The performance of the JPEG2000 codec strongly depends on the GPU, image content, encoding parameters and the complexity of the full image processing pipeline. To scale performance, users can also utilize several GPUs for image processing at the same time; multi-GPU processing is part of Fastvideo SDK.

If you have any questions, please fill in the form below with your task description and send us your sample images for evaluation.

Links

Hamamatsu ORCA-Flash4.0 V3 Digital sCMOS camera

GPU Software for camera applications

JPEG2000 Codec on NVIDIA GPU

Image and Video Processing SDK for NVIDIA GPUs

GPU Software for machine vision and industrial cameras

Original article see at: https://www.fastcompression.com/blog/hamamatsu-orca-gpu-image-processing.htm

Subscribe to our mail list: https://mailchi.mp/fb5491a63dff/fastcompression

JPEG Optimizer Library on CPU and GPU

Fastvideo has implemented the fastest JPEG codec and Image Processing SDK for NVIDIA GPUs. That software can work at maximum performance across the full range of NVIDIA GPUs, from mobile Jetson to professional Quadro and Tesla server GPUs. Now we've extended these solutions to offer various optimizations of the standard JPEG algorithm. This is vital for getting better image compression while retaining the same perceived image quality within the existing JPEG Standard.

Our expertise in the JPEG Standard and GPU programming is demonstrated by the performance benchmarks of our JPEG codec. It is also the foundation of our custom software design for various time-critical tasks involving JPEG images and related services.

Our customers have been using that GPU-based software for fast JPEG encoding and decoding and for JPEG resize in high load web applications, and they asked us to implement more optimizations that are indispensable for web solutions. These are the most requested tasks:

JPEG recompression to decrease file size without losing perceived image quality

JPEG optimization to get a better user experience when loading JPEG images over a slow connection

JPEG processing on users' devices

JPEG resize on-demand:

to store just one source image (to cut storage costs)

to match the resolution of the user's device (to avoid JPEG resize on the user's device)

to minimize traffic

to ensure minimum server response time

to offer a better user experience

Implementations of JPEG Baseline, Extended, Progressive and Lossless parts of the Standard

Other tasks related to JPEG images

The idea of image optimization is very popular, and it really makes sense. Since JPEG is so widespread on the web, we need to optimize JPEG images for the web as well. By decreasing image size, we can save storage space, minimize traffic, improve latency, etc. There are many methods of JPEG optimization and recompression that can achieve a better compression ratio while preserving perceived image quality. In our products we strive to combine all of them with better performance on multicore CPUs and modern GPUs.
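One common recompression approach, shown here only as a generic example and not necessarily the method used in Fastvideo's optimizer, is to search for the lowest JPEG quality setting whose perceptual score against the original stays above a threshold. The sketch below does this with a binary search. The `score_at` callback is hypothetical: in practice it would re-encode the image at quality q, decode it and compute a metric such as SSIM. The search assumes the score never decreases as quality increases.

```python
from typing import Callable, Optional


def lowest_acceptable_quality(score_at: Callable[[int], float], threshold: float,
                              lo: int = 1, hi: int = 100) -> Optional[int]:
    """Binary search for the lowest quality q in [lo, hi] with score_at(q) >= threshold."""
    if score_at(hi) < threshold:
        return None  # even the highest quality does not meet the target
    while lo < hi:
        mid = (lo + hi) // 2
        if score_at(mid) >= threshold:
            hi = mid      # mid is acceptable, so try lower qualities
        else:
            lo = mid + 1  # mid is too lossy
    return lo


# Toy monotonic scorer standing in for a real encode/decode/compare step
toy_score = lambda q: min(100, 40 + q)
print(lowest_acceptable_quality(toy_score, 95))  # 55
```

Binary search needs about seven encode/score rounds for qualities 1-100, instead of up to one hundred for a linear scan, which matters when every round is a full JPEG encode and decode.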

There is a great variety of image processing tasks connected with JPEG handling. They can be solved either on CPU or on GPU. We are ready to offer custom software design to meet the special requirements our customers may have. Please fill in the form below and send us your task description.

JPEG Optimizer Library and other software from Fastvideo

JPEG Optimizer Library (SDK for GPU/CPU on Windows/Linux) to recompress and to resize JPEG images for corporate customers: high load web services, photo stock applications, neural network training, etc.

Standalone JPEG optimizer application - in progress

Projects under development

JPEG optimizer SDK on CPU and GPU

Mobile SDK on CPU for Android/iOS for image decoding and visualization on smartphones

JPEG recompression library that runs inside your web app and optimizes images before upload

JPEG optimizer API for web

Online service for JPEG optimization

Fastvideo publications on the subject

JPEG Optimization Algorithms Review

Web resize on-the-fly on GPU

JPEG resize on-demand: FPGA vs GPU. Which is the fastest?

Jpeg2Jpeg Acceleration with CUDA MPS on Linux

JPEG compress and decompress with CUDA MPS

Original article see at: https://www.fastcompression.com/products/jpeg-optimizer-library.htm

Benchmark comparison for Jetson Nano, TX2, Xavier NX and AGX

Author: Fyodor Serzhenko

NVIDIA has released a series of Jetson hardware modules for embedded applications. NVIDIA® Jetson is the world's leading embedded platform for image processing and DL/AI tasks. Its high-performance, low-power computing for deep learning and computer vision makes it the ideal platform for mobile compute-intensive projects.

We've developed an Image & Video Processing SDK for NVIDIA Jetson hardware. Here we present performance benchmarks for the available Jetson modules. As an image processing pipeline, we consider a basic camera application as a good example for benchmarking.

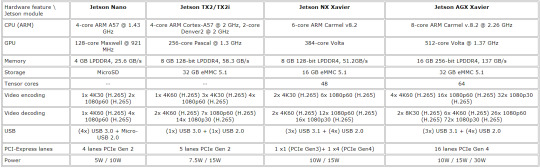

Hardware features for Jetson Nano, TX2, Xavier NX and AGX Xavier

Here we present a brief comparison of Jetson hardware features to show the progress and variety of NVIDIA's mobile solutions. These units are aimed at different markets and tasks.

Table 1. Hardware comparison for Jetson modules

In camera applications, we can usually hide Host-to-Device transfers by implementing GPU Zero Copy or by overlapping GPU copy/compute. Device-to-Host transfers can be hidden via copy/compute overlap.
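Why overlap hides transfers can be seen from a simple timing model. The sketch below compares processing frames strictly one after another with an idealized pipeline in which copy-in, compute and copy-out run concurrently on different engines (for example via CUDA streams), and with a zero-copy variant where the host-to-device stage disappears because the Jetson integrated GPU shares memory with the CPU. The stage times are hypothetical, and the model ignores memory contention and the possibly slower kernel access to zero-copy memory.

```python
from typing import Sequence


def sequential_ms(stage_ms: Sequence[float], frames: int) -> float:
    """No overlap: each frame finishes copy-in, compute and copy-out before the next starts."""
    return sum(stage_ms) * frames


def overlapped_ms(stage_ms: Sequence[float], frames: int) -> float:
    """Idealized overlap: each stage runs on its own engine, so once the pipeline is full
    one frame completes every max(stage_ms) milliseconds."""
    if frames <= 0:
        return 0.0
    return sum(stage_ms) + (frames - 1) * max(stage_ms)


# Hypothetical per-frame stage times in ms: host-to-device copy, kernels, device-to-host copy
h2d, compute, d2h = 2.0, 5.0, 1.0
frames = 10

print(sequential_ms([h2d, compute, d2h], frames))  # 80.0
print(overlapped_ms([h2d, compute, d2h], frames))  # 53.0
# Zero copy: no separate H2D stage, kernels read host memory directly
print(overlapped_ms([compute, d2h], frames))       # 51.0
```

In the overlapped cases the throughput is bounded by the slowest stage, here the kernels, which is why kernel times are the main figure of merit in the benchmarks below.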

Hardware and software for benchmarking

CPU/GPU NVIDIA Jetson Nano, TX2, Xavier NX and AGX Xavier

OS L4T (Ubuntu 18.04)

CUDA Toolkit 10.2 for Jetson Nano, TX2, Xavier NX and AGX Xavier

Fastvideo SDK 0.16.4

NVIDIA Jetson Comparison: Nano vs TX2 vs Xavier NX vs AGX Xavier

For these NVIDIA Jetson modules, we've benchmarked the following standard image processing tasks typical of camera applications: white balance, demosaic (debayer), color correction, resize, JPEG encoding, etc. That's not the full set of Fastvideo SDK features; it's just an example to show what kind of performance we can get from each Jetson. You can also choose a particular debayer algorithm and output compression (JPEG or JPEG2000) for your pipeline.
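To show what two of the benchmarked stages do per pixel, here is a minimal Python sketch of white balance (per-channel gains) followed by a 3x3 color correction matrix on 16-bit RGB values. The gains and matrix are hypothetical calibration values; demosaicing is omitted, and the functions only illustrate the math that such GPU kernels apply to every pixel, not the SDK's API.

```python
from typing import Sequence, Tuple

MAX16 = 65535  # 16 bits per channel, as in the benchmark

Pixel = Tuple[int, int, int]


def clamp16(v: float) -> int:
    """Round and clip a value to the 16-bit range."""
    return min(max(round(v), 0), MAX16)


def white_balance(rgb: Pixel, gains: Sequence[float]) -> Pixel:
    """Multiply each channel by its white balance gain."""
    r, g, b = (clamp16(c * k) for c, k in zip(rgb, gains))
    return r, g, b


def color_correct(rgb: Pixel, ccm: Sequence[Sequence[float]]) -> Pixel:
    """Apply a 3x3 color correction matrix; rows summing to 1 keep neutral grays unchanged."""
    r, g, b = (clamp16(sum(m * c for m, c in zip(row, rgb))) for row in ccm)
    return r, g, b


# Hypothetical gains and matrix, e.g. from a camera calibration
GAINS = (2.0, 1.0, 2.0)
CCM = ((1.5, -0.3, -0.2),
       (-0.2, 1.4, -0.2),
       (-0.1, -0.4, 1.5))

balanced = white_balance((1000, 2000, 1000), GAINS)  # (2000, 2000, 2000)
print(color_correct(balanced, CCM))                   # (2000, 2000, 2000)
```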

Table 2. GPU kernel times for 2K image processing (1920×1080, 16 bits per channel, milliseconds)

Total processing time is calculated for the values from the gray rows of the table. This is done to show the maximum performance benchmarks for a specified set of image processing modules which correspond to real-life camera applications.
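Turning a total kernel time into frame rate and pixel throughput is straightforward, as the sketch below shows for a 1920×1080 frame. The kernel times are placeholders chosen for round numbers, not values from Table 2, and the calculation assumes the kernels run back to back with transfers hidden as described earlier.

```python
from typing import Dict, Tuple

FRAME_PIXELS = 1920 * 1080  # 2K frame, as in Table 2


def pipeline_throughput(kernel_ms: Dict[str, float]) -> Tuple[float, float, float]:
    """Total time of the selected kernels, frames per second, and megapixels per second."""
    total_ms = sum(kernel_ms.values())
    fps = 1000.0 / total_ms
    mpix_per_s = FRAME_PIXELS * fps / 1e6
    return total_ms, fps, mpix_per_s


# Placeholder kernel times in ms, NOT values from Table 2
example = {"white balance": 0.25, "debayer": 1.25, "color correction": 0.5,
           "resize": 0.5, "jpeg encoding": 1.5}
total, fps, mpix = pipeline_throughput(example)
print(f"{total:.2f} ms/frame -> {fps:.0f} fps, {mpix:.1f} MPix/s")  # 4.00 ms/frame -> 250 fps, 518.4 MPix/s
```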

Each Jetson module was run in its maximum performance mode:

MAX-N mode for Jetson AGX Xavier

15W for Jetson Xavier NX and Jetson TX2

10W for Jetson Nano

Here we've compared just the basic set of image processing modules from Fastvideo SDK to let Jetson developers evaluate the expected performance before building their imaging applications. Image processing from RAW to RGB or from RAW to JPEG is a standard task, and now developers can get detailed info about the expected performance of the chosen pipeline from the table above. We haven't tested the Jetson H.264 and H.265 encoders and decoders in that pipeline. Since the H.264 and H.265 encoders are implemented in hardware, encoding can be done in parallel with CUDA code, so we should be able to get even better performance.

We've done the same kernel time measurements for NVIDIA GeForce and Quadro GPUs. Here you can get the document with the benchmarks.

Software for Jetson performance comparison

We've released the software for a GPU-based camera application on GitHub: both binaries and source code for our gpu camera sample project are available for download. It's implemented for Windows 7/10, Linux Ubuntu 18.04 and L4T. Apart from a full GPU image processing pipeline for still images from SSD and for live camera output, there are options for streaming and for glass-to-glass (G2G) measurements to evaluate the real latency of camera systems on Jetson. The software currently works with machine vision cameras from XIMEA, Basler, JAI, Matrix Vision, Daheng Imaging, etc.

To check the performance of Fastvideo SDK on a laptop/desktop/server GPU without any programming, you can download Fast CinemaDNG Processor software with GUI for Windows or Linux. That software has a Performance Benchmarks window, where you can see the timing for each stage of image processing. This is a more sophisticated method of performance testing, because the image processing pipeline in that software can be quite advanced, and you can test any module you need. You can also run tests on images with different resolutions to see how much the performance depends on image size, content and other parameters.

Other blog posts from Fastvideo about Jetson hardware and software

Jetson Image Processing

Jetson Zero Copy

Jetson Nano Benchmarks on Fastvideo SDK

Jetson AGX Xavier performance benchmarks

JPEG2000 performance benchmarks on Jetson TX2

Remotely operated walking excavator on Jetson

Low latency H.264 streaming on Jetson TX2

Performance speedup for Jetson TX2 vs AGX Xavier

Source codes for GPU-Camera-Sample software on GitHub to connect USB3 and other cameras to Jetson

Original article see at: https://www.fastcompression.com/blog/jetson-benchmark-comparison.htm
