#find iocs linux

How to search for IOCs on a Linux machine using Loki IOC and APT scanner

This is a quick guide to running Loki APT Scanner to check for IOCs on a Linux machine.

Debian/Ubuntu: run the following to install Loki, fetch its signature rules, and update Loki:

sudo su -
apt-get install libssl-dev python3-venv
git clone https://github.com/Neo23x0/Loki.git
cd Loki
python3 -m venv .
source bin/activate
pip install colorama yara-python psutil rfc5424-logging-handler netaddr
python3 loki-upgrader.py

To run Loki Scanner…
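Among its checks, Loki compares file hashes against a list of known-bad hashes. The Python sketch below illustrates only that idea; it is not Loki's code, and the IOC hashes and scan root are placeholders you would supply yourself.

```python
import hashlib
import os
from typing import Dict, Iterable, List, Tuple


def file_hashes(path: str, chunk_size: int = 65536) -> Dict[str, str]:
    """Return the MD5, SHA-1 and SHA-256 hex digests of one file."""
    hashers = {"md5": hashlib.md5(), "sha1": hashlib.sha1(), "sha256": hashlib.sha256()}
    with open(path, "rb") as fh:
        # Read in chunks so large files never have to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            for h in hashers.values():
                h.update(chunk)
    return {name: h.hexdigest() for name, h in hashers.items()}


def scan_for_hash_iocs(root: str, bad_hashes: Iterable[str]) -> List[Tuple[str, str]]:
    """Walk `root` and return (path, matching_hash) for files whose hash is a known IOC."""
    iocs = {h.lower() for h in bad_hashes}
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks and non-regular files (FIFOs would block on open).
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            try:
                digests = file_hashes(path)
            except OSError:
                continue  # unreadable file (permissions, file removed mid-scan)
            for digest in digests.values():
                if digest in iocs:
                    hits.append((path, digest))
                    break
    return hits
```

For example, scan_for_hash_iocs("/tmp", {"<known-bad SHA-256>"}) returns a list of (path, hash) pairs. Loki itself also checks file names and YARA rules, which this sketch does not attempt.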

APT41’s Actions Highlight the Need for Threat Monitoring

This blog post discusses the malware attack chain, delivery tactics, and other APT41 operations. We also explain the indicators of compromise (IOCs) that can help security professionals protect against similar attacks. To defeat this campaign, GTIG used customised detection signatures, disrupted attacker-controlled infrastructure, and strengthened Safe Browsing.

APT41

APT41, a Chinese cyber threat group, carries out both financially motivated cybercrime and state-sponsored espionage, and uses advanced espionage tooling for its own gain. Its strategic espionage against high-tech and healthcare targets advances China's economic interests, while its financially motivated activity profits from the video game industry. APT41 is skilled, persistent, and agile, relying on malware, supply chain compromises, and spear-phishing. The line between its cybercrime and its government work is complex, since APT41's criminal activity may have state ties.

Chinese Cyber Group APT41 Blends Personal Crime with State Espionage

According to a detailed FireEye Intelligence report published on the Google Cloud Blog, APT41, a well-known Chinese threat group, reportedly conducts both state-sponsored espionage and financially motivated operations. The group stands out among tracked China-based actors because it appears to use non-public malware, normally reserved for espionage, for personal gain. Evidence suggests APT41 has been engaged in both cybercrime and cyberespionage since 2014.

APT41's espionage targets match China's five-year economic development plans. The group has established strategic access to telecommunications, high-tech, and healthcare companies. Its targeting of call record data at telecom firms, and of news/media corporations, travel agencies, and higher education institutions, indicates that it tracks individuals and conducts surveillance. APT41 may also have targeted a hotel's reservation systems ahead of a visit by Chinese officials, suggesting reconnaissance of the site for security purposes.

APT41 has targeted the video game sector with ransomware and virtual currency manipulation. The group moves between Linux and Windows systems to reach game production environments, and from these environments it steals source code and the digital certificates it later uses to sign malware. Importantly, it has used this access to inject malicious code into legitimate files, which are then distributed to victim organisations through supply chain compromises.

Supply chain compromises also define APT41's most notorious espionage operations. In these multi-stage operations, APT41 restricts the deployment of follow-on malware by matching against unique system identifiers. Despite the broad reach of the initial compromise, this considerably obscures the intended targets and limits delivery to the chosen victims only.

The malware families and tools used by APT41 include publicly available utilities, malware shared with other Chinese espionage groups, and unique tools. Initial compromise often comes through spear-phishing emails with HTML attachments. Once inside, the group may deploy rootkits, credential stealers, keyloggers, and backdoors. APT41 uses rootkits and Master Boot Record (MBR) bootkits sparingly, to hide its malware and maintain persistence on high-value targets. Bootkits add stealth because their code runs before the operating system initialises.

The group is fast and relentless. It quickly identifies and compromises intermediary systems that give access to otherwise segmented parts of a network; in one case, it breached hundreds of systems across multiple segments and regions within two weeks. It is also persistent and quick to adapt: within hours of a victim organisation making changes, or of users downloading infected attachments, APT41 can compile new malware, register new infrastructure, and re-establish itself in compromised systems across numerous locations.

APT41 may be linked to the Chinese-language forum personas "Zhang Xuguang" and "Wolfzhi", which have advertised their skills and services as available for hire.

Comparing APT41's online gaming targets with its working hours points to "moonlighting", a pattern associated with the "Zhang Xuguang" persona. Persona information, programming proficiency, and the targeting of Chinese-market online games suggest these individuals are involved in APT41's espionage activity. Mapping of operational activity since 2012 indicates that APT41 conducts its financially motivated operations outside normal working hours.

APT41's originality, expertise, and resourcefulness show in its distinctive use of supply chain compromises, its regular use of stolen digital certificates to sign malware, and its use of bootkits, which is rare among Chinese APT groups. Since 2015, APT41, like other Chinese espionage organisations, has shifted from direct intellectual property theft towards strategic intelligence gathering and access management, while keeping its financial interest in the video game sector. As its targeting and capabilities grow, APT41 may carry out more supply chain compromises across a range of industries.

Because of its links to both state sponsorship and underground markets, APT41 may be shielded while conducting for-profit operations, or authorities may simply turn a blind eye. Alternatively, these operations may have gone unnoticed. Either way, APT41 exemplifies the blurred line between government and criminal activity, which threatens the wider ecosystem.

For more details visit govindhtech.com

#APT41#supplychain#cybercrime#Chinesecyberthreat#MasterBootRecord#indicatorsofcompromise#technology#technews#technologynews#news#govindhtech

Hiring Entry-Level Cyber Talent? Start with CSA Certification

In today's increasingly digital landscape, the threat of cyberattacks looms larger than ever. Businesses of all sizes are grappling with sophisticated threats, making robust cybersecurity defenses not just a luxury, but a necessity. At the forefront of this defense are Security Operations Centers (SOCs) and the unsung heroes within them: SOC analysts.

However, a critical challenge many organizations face is bridging the talent gap in cybersecurity. The demand for skilled professionals far outstrips the supply, particularly at the entry-level. This is where strategic hiring practices, coupled with valuable certifications like the Certified SOC Analyst (C|SA) certification, become paramount.

The Ever-Growing Need for SOC Analysts

The role of a SOC analyst is pivotal. They are the frontline defenders, tirelessly monitoring an organization's systems and networks for suspicious activity, detecting threats, and initiating rapid responses to mitigate potential damage. From analyzing logs and alerts to investigating incidents and implementing containment measures, their work is continuous and critical.

The job outlook for information security analysts, which includes SOC analysts, is incredibly strong. The U.S. Bureau of Labor Statistics projects a 33% growth from 2023 to 2033, a rate significantly faster than the average for all occupations. This translates to approximately 17,300 job openings each year, highlighting the immense demand for these professionals. As cyber threats evolve in sophistication and frequency, and as businesses increasingly embrace digital transformation and remote work, the need for skilled SOC analysts will only intensify.

What Does an Entry-Level SOC Analyst Do?

An entry-level SOC analyst, often referred to as a Tier 1 analyst, serves as the first line of defense within a Security Operations Center. Their primary responsibilities include:

Monitoring Security Alerts: Continuously observing security alerts generated by various systems such as SIEM (Security Information and Event Management), IDS/IPS (Intrusion Detection/Prevention Systems), and endpoint protection tools.

Initial Triage and Prioritization: Assessing the severity and legitimacy of alerts, distinguishing between false positives and genuine threats, and prioritizing them for further investigation.

Log Analysis: Examining logs from different sources (servers, workstations, network devices) to understand security events and identify indicators of compromise (IoCs).

Following Playbooks: Executing established procedures and playbooks for common security scenarios and incident response.

Documentation: Meticulously documenting findings, actions taken, and the progression of security incidents.

Escalation: Escalating confirmed or complex threats to higher-tier SOC analysts (Tier 2 or Tier 3) for deeper investigation and remediation.

While a bachelor's degree in computer science or a related field can be beneficial, it's not always a strict prerequisite for entry-level SOC roles. Many successful SOC analysts enter the field through dedicated cybersecurity courses, bootcamps, or relevant certifications.

Key Skills for Aspiring SOC Analysts

To excel as an entry-level SOC analyst, a combination of technical and soft skills is essential:

Technical Skills:

Network Fundamentals: A solid understanding of network protocols (TCP/IP, DNS, DHCP), network architecture, and common network devices (firewalls, routers).

Operating System Knowledge: Familiarity with various operating systems (Windows, Linux) and their security configurations.

Security Technologies: Practical knowledge of security tools like SIEM systems, intrusion detection/prevention systems (IDS/IPS), antivirus software, and vulnerability scanners.

Log Analysis: The ability to effectively analyze security logs from diverse sources to identify anomalies and malicious activities.

Incident Response Basics: Understanding the fundamental steps of incident response, including detection, containment, eradication, and recovery.

Threat Intelligence: An awareness of current cyber threats, attack methodologies, and indicators of compromise.

Basic Scripting (Optional but beneficial): Familiarity with scripting languages like Python can help automate tasks and analyze data more efficiently.
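As an illustration of the kind of scripting meant here, the following Python sketch counts failed SSH password attempts per source IP in OpenSSH-style log lines. The log line format, the default threshold of 5, and the example log path are assumptions; real formats vary by distribution and configuration.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Matches OpenSSH-style lines such as:
#   "... sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 4242 ssh2"
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def failed_logins_by_ip(lines: Iterable[str], threshold: int = 5) -> Dict[str, int]:
    """Return source IPs with at least `threshold` failed SSH password attempts."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1  # group 2 is the source IP
    return {ip: n for ip, n in counts.items() if n >= threshold}
```

On Debian/Ubuntu, for example, the lines could come from /var/log/auth.log: with open("/var/log/auth.log") as fh: print(failed_logins_by_ip(fh)).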

Soft Skills:

Analytical and Problem-Solving: The ability to think critically, analyze complex information, and identify root causes of security incidents.

Attention to Detail: Meticulousness in examining logs and alerts to avoid missing crucial details.

Communication: Clear and concise written and verbal communication skills to document incidents and collaborate with team members and other departments.

Adaptability: The cybersecurity landscape is constantly evolving, so the ability to learn new technologies and adapt to emerging threats is vital.

Teamwork: SOC operations are often a collaborative effort, requiring effective communication and coordination with colleagues.

The Value of SOC Certifications for Entry-Level Talent

For individuals looking to break into the cybersecurity field as a SOC analyst, and for employers seeking to identify qualified entry-level talent, SOC certifications play a crucial role. These certifications validate a candidate's foundational knowledge and practical skills, providing a standardized benchmark of competence.

While several certifications can aid an aspiring SOC analyst, the Certified SOC Analyst (C|SA) certification by EC-Council stands out as a strong starting point, particularly for those targeting Tier I and Tier II SOC roles.

Why CSA Certification is a Game-Changer for Entry-Level SOC Hiring

The Certified SOC Analyst (C|SA) certification is specifically engineered to equip current and aspiring SOC analysts with the proficiency needed to perform entry-level and intermediate-level operations. Here's why the C|SA certification is a significant asset for hiring entry-level cyber talent:

Tailored for SOC Operations: Unlike broader cybersecurity certifications, C|SA is designed with the explicit needs of a Security Operations Center in mind. Its curriculum covers the end-to-end SOC workflow, from initial alert monitoring to incident response and reporting.

Comprehensive Skill Development: The C|SA program delves into critical areas such as:

Security Operations and Management: Understanding the principles and practices of managing a SOC.

Understanding Cyber Threats, IoCs, and Attack Methodology: Gaining knowledge of common cyber threats, indicators of compromise, and attack techniques.

Incidents, Events, and Logging: Learning about log management, correlation, and the significance of various security events.

Incident Detection with SIEM: Mastering the use of Security Information and Event Management (SIEM) solutions for effective incident detection.

Enhanced Incident Detection with Threat Intelligence: Integrating threat intelligence feeds into SIEM for proactive threat identification.

Elaborate Understanding of SIEM Deployment: Gaining practical insights into deploying and configuring SIEM solutions.

Hands-On, Practical Learning: The C|SA program emphasizes practical skills through lab-intensive sessions. Candidates get hands-on experience with incident monitoring, detection, triaging, analysis, containment, eradication, recovery, and reporting. This practical exposure is invaluable for entry-level professionals who often lack real-world experience.

Real-time Environment Simulation: The labs in the C|SA program simulate real-time SOC environments, allowing candidates to practice identifying and validating intrusion attempts using SIEM solutions and threat intelligence, mirroring actual job scenarios.

Compliance with Industry Frameworks: The C|SA certification aligns 100% with the National Initiative for Cybersecurity Education (NICE) framework, specifically under the "Protect and Defend (PR)" category for the role of Cyber Defense Analysis (CDA). This alignment ensures that C|SA-certified individuals possess skills recognized and valued across the industry.

Global Recognition: Issued by EC-Council, a globally recognized authority in cybersecurity certifications, the C|SA credential enhances career prospects and demonstrates proficiency to potential employers worldwide.

Clear Career Pathway: For aspiring SOC analyst professionals, the C|SA serves as the foundational step, providing them with the necessary skills and knowledge to enter a SOC team at Tier I or Tier II level. This creates a clear and achievable career path.

Beyond Certification: What Else to Look For

While the C|SA certification is an excellent indicator of a candidate's readiness for an entry-level SOC analyst role, employers should also consider other factors during the hiring process:

Passion and Curiosity: Cybersecurity is a rapidly evolving field. Look for candidates who demonstrate genuine enthusiasm for continuous learning and a strong desire to stay updated on the latest threats and technologies.

Problem-Solving Aptitude: Assess their ability to think critically and approach challenges systematically.

Communication Skills: Strong communication is vital for collaborating within the SOC team and explaining technical issues to non-technical stakeholders.

Any Relevant Experience: Even internships, personal projects, or volunteer work in cybersecurity can demonstrate practical application of skills.

Cultural Fit: A candidate's ability to integrate into the team dynamics and contribute positively to the SOC environment.

Conclusion

As the cybersecurity landscape continues to grow in complexity and threat sophistication, the demand for skilled SOC analysts will only intensify. For organizations seeking to build a robust and responsive security team, investing in entry-level talent is crucial. The Certified SOC Analyst (C|SA) certification offers a highly relevant and practical pathway for aspiring professionals to gain the necessary skills, making them a valuable asset from day one. By prioritizing candidates with foundational certifications like C|SA, employers can confidently onboard individuals who are not just theoretically knowledgeable, but also practically equipped to contribute to their security operations and safeguard their digital assets.

Splunk & RTO

Some notes from an interesting challenge and course, covering Splunk and red teaming.

1. Hunting for indicators of compromise (IOCs) pertaining to APT29 attack techniques.

MITRE ATT&CK T1036.002, Right-to-Left Override (Defense Evasion): the RTLO character (U+202E) is a non-printing Unicode character that causes the text that follows it to be displayed in reverse.

It's a bit tricky to hunt for RTLO in Splunk because it's an invisible character. Unicode Explorer is helpful when you are looking for uncommon characters: https://unicode-explorer.com/c/202E

In this case, click Copy on that page, paste the character into the Splunk search, and the suspicious file name turns up. The quotes in the query still look empty, but the invisible character is indeed there.

Another option is to type the character manually, but unfortunately this didn't work over Remote Desktop.

Windows:

Hold Alt, press + on the numeric keypad, type 202E, then release Alt.

Linux:

Hold Ctrl+Shift, type U followed by 202E, then release Ctrl+Shift.
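Outside the Splunk search bar, the same hunt is easy to script, because the code point can be written as an escape instead of being typed or pasted. The following Python sketch flags names containing U+202E or related Unicode bidirectional control characters and makes them visible; the input list of file names is hypothetical (for example, values exported from a Splunk search).

```python
import unicodedata
from typing import Iterable, List

# Bidirectional control characters: U+202A..U+202E (U+202E is RIGHT-TO-LEFT OVERRIDE)
# and the isolates U+2066..U+2069.
BIDI_CONTROLS = {chr(cp) for cp in (*range(0x202A, 0x202F), *range(0x2066, 0x206A))}


def has_bidi_control(name: str) -> bool:
    """True if the string contains any bidirectional control character."""
    return any(ch in BIDI_CONTROLS for ch in name)


def describe(name: str) -> str:
    """Replace invisible control characters with their Unicode names in angle brackets."""
    return "".join(f"<{unicodedata.name(ch)}>" if ch in BIDI_CONTROLS else ch for ch in name)


def flag_suspicious(names: Iterable[str]) -> List[str]:
    """Return a readable form of every name that contains a bidi control character."""
    return [describe(n) for n in names if has_bidi_control(n)]
```

For example, the string "invoice\u202efdp.exe" really ends in ".exe", but is rendered as "invoiceexe.pdf"; flag_suspicious returns it as "invoice<RIGHT-TO-LEFT OVERRIDE>fdp.exe".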

2. Hunting network connections and process execution

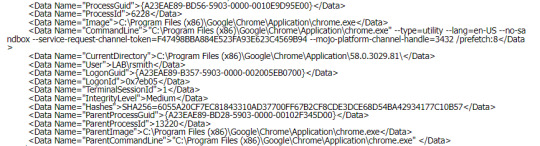

Two Sysmon events were used in the challenge:

Sysmon Event ID 1 - Process creation https://www.ultimatewindowssecurity.com/securitylog/encyclopedia/event.aspx?eventid=90001

This event has some useful fields for correlating processes across the domain, especially ProcessGuid and ParentProcessGuid, which link child and parent processes. LogonGuid is also helpful when you want to correlate with KDC events.

A classic phishing chain: Event ID 1 Process creation (Outlook) -> Event ID 11 FileCreate -> Event ID 1 Process creation (Word with macro) -> Event ID 11 FileCreate (malicious PowerShell script)
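The ProcessGuid/ParentProcessGuid linkage can also be walked outside Splunk. This Python sketch assumes Sysmon events have already been exported as dictionaries keyed by their Sysmon field names (EventID, ProcessGuid, ParentProcessGuid, Image, TargetFilename); for every Event ID 11 that writes a .ps1 file, it returns the file followed by the chain of creating processes, which is how an Outlook -> Word -> PowerShell-script drop would surface. The export format is an assumption.

```python
from typing import Dict, List


def build_process_index(events: List[dict]) -> Dict[str, dict]:
    """Index Sysmon Event ID 1 (process creation) records by ProcessGuid."""
    return {e["ProcessGuid"]: e for e in events if e.get("EventID") == 1}


def ancestry(guid: str, procs: Dict[str, dict], max_depth: int = 20) -> List[str]:
    """Return image paths from the given process up through its known parents."""
    chain: List[str] = []
    seen = set()
    while guid in procs and guid not in seen and len(chain) < max_depth:
        seen.add(guid)  # guard against loops in malformed data
        proc = procs[guid]
        chain.append(proc["Image"])
        guid = proc.get("ParentProcessGuid", "")
    return chain


def script_drops_with_lineage(events: List[dict]) -> List[List[str]]:
    """For each Event ID 11 creating a .ps1 file, return [file, creator, parent, ...]."""
    procs = build_process_index(events)
    results = []
    for e in events:
        if e.get("EventID") == 11 and e.get("TargetFilename", "").lower().endswith(".ps1"):
            results.append([e["TargetFilename"]] + ancestry(e["ProcessGuid"], procs))
    return results
```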

Sysmon Event ID 3 - Network connection https://www.ultimatewindowssecurity.com/securitylog/encyclopedia/event.aspx?eventid=90003

This event helps you identify which process made a network connection; fields such as IP address, port, and process image are extremely helpful during an investigation.
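Event ID 3 becomes more useful when joined with Event ID 1 on ProcessGuid, so that each connection carries the command line of the process that made it. A minimal Python sketch, assuming events exported as dictionaries keyed by Sysmon field names (EventID, ProcessGuid, Image, CommandLine, DestinationIp, DestinationPort); the export format is an assumption.

```python
from collections import Counter
from typing import Dict, List, Tuple

Row = Tuple[str, str, str, int]  # (Image, CommandLine, DestinationIp, DestinationPort)


def connections_with_cmdline(events: List[dict]) -> List[Row]:
    """Pair each Event ID 3 with the CommandLine from the matching Event ID 1.

    The command line is "<unknown>" when the process creation event is not in the data.
    """
    cmdlines: Dict[str, str] = {
        e["ProcessGuid"]: e.get("CommandLine", "") for e in events if e.get("EventID") == 1
    }
    rows: List[Row] = []
    for e in events:
        if e.get("EventID") == 3:
            rows.append((
                e["Image"],
                cmdlines.get(e["ProcessGuid"], "<unknown>"),
                e["DestinationIp"],
                int(e["DestinationPort"]),
            ))
    return rows


def top_destinations(rows: List[Row], n: int = 10):
    """Most frequent (Image, DestinationIp, DestinationPort) combinations with counts."""
    return Counter((img, ip, port) for img, _cmd, ip, port in rows).most_common(n)
```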

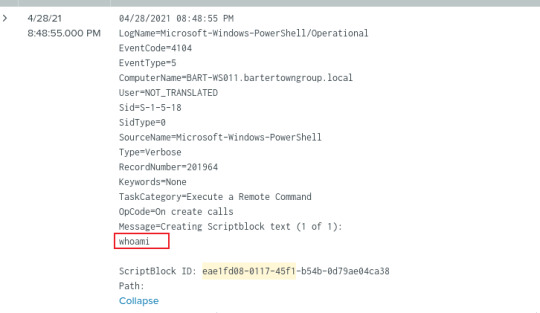

PowerShellCore/Operational log EventCode=4104

This event records every script block processed when ScriptBlockLogging is enabled, so you can see the execution of remote PowerShell commands.
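Long scripts are split across several 4104 events that share a ScriptBlockId and carry MessageNumber/MessageTotal counters, so they have to be stitched back together before reading. A Python sketch, assuming the events are dictionaries using those field names plus EventCode and ScriptBlockText (the exact names depend on how the logs are ingested):

```python
from collections import defaultdict
from typing import Dict, List


def reassemble_script_blocks(events: List[dict]) -> Dict[str, str]:
    """Join EventCode 4104 fragments into whole scripts, keyed by ScriptBlockId.

    Only script blocks whose fragments are all present are returned.
    """
    parts: Dict[str, Dict[int, str]] = defaultdict(dict)
    totals: Dict[str, int] = {}
    for e in events:
        if int(e.get("EventCode", 0)) != 4104:
            continue  # ignore 4103 and anything else
        block_id = e["ScriptBlockId"]
        parts[block_id][int(e["MessageNumber"])] = e["ScriptBlockText"]
        totals[block_id] = int(e["MessageTotal"])
    scripts = {}
    for block_id, fragments in parts.items():
        numbers = range(1, totals[block_id] + 1)
        if all(i in fragments for i in numbers):
            scripts[block_id] = "".join(fragments[i] for i in numbers)
    return scripts
```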

PowerShellCore/Operational log EventCode=4103

Module logging is a bit different: it records command invocations and portions of the script, so it may not capture the full details of the execution and its results. Example: a malicious module used to collect sensitive files.

3. RTO compliance

Before starting the engagement, double-check whether the client's services are hosted on providers such as Amazon, Azure, or Google, because these platforms have specific rules for security assessments performed against their infrastructure. For example, if the red team compromises a client server hosted on Google and Google detects suspicious C2 traffic, the provider may shut the service down for violating its security policy, causing unexpected business damage on the client side. The red team operator must confirm with the client that both sides understand this risk, so they can build a mature plan and remediate the impact if an unexpected service outage occurs.

Cloud Sniper- To Manage Virtual Security Operations

Cloud Sniper is a platform designed to manage Security Operations in cloud environments. It is an open platform that lets you respond to security incidents by accurately analyzing and correlating native cloud artifacts.

It is intended to be used as a Virtual Security Operations Center (vSOC) to detect and remediate security incidents, providing complete visibility of the company's cloud security posture.

With this platform, you get complete, comprehensive management of security incidents, reducing the cost of having a group of level-1 security analysts hunting for cloud-based Indicators of Compromise (IOCs). If these IOCs are not correlated, complex attacks are difficult to detect.

At the same time, Cloud Sniper enables advanced security analysts to integrate the platform with external forensics or incident response tools that provide security feeds to the platform.

The cloud-based platform is deployed automatically and provides complete and native integration with all the necessary information sources, avoiding the problem that many vendors have when deploying or collecting data.

Cloud Sniper releases

1. Automatic Incident and Response
   1. WAF filtering
   2. NACLs filtering
   3. IOCs knowledge database
   4. Tactics, Techniques and Procedures (TTPs) used by the attacker
2. Security playbooks
   1. NIST approach
3. Automatic security tagging
4. Cloud Sniper Analytics
   1. Beaconing detection with VPC Flow Logs (C2 detection analytics)

Cloud Sniper receives cloud-based and third-party feeds and automatically responds, protecting your infrastructure and building a knowledge database of the IOCs that are affecting your platform. This is the best way to gain visibility in environments where information can be limited by the Shared Responsibility Model enforced by cloud providers.

To detect advanced attack techniques that may easily be overlooked, the Cloud Sniper Analytics module correlates the events that generate IOCs. This gives visibility into complex artifacts for analysis, helping both to stop the attack and to analyze the attacker's TTPs.

Cloud Sniper is currently available for AWS, but it is planned to be extended to other cloud platforms.

Automatic infrastructure deployment (for AWS)

Cloud Sniper deployment

How it works

Cloud Sniper – AWS native version

Cloud Sniper receives cloud-based or third-party feeds to take remediation actions in the cloud. Currently, the AWS native version gets feeds from GuardDuty, a continuous security monitoring service that detects threats based on CloudTrail logs, VPC Flow Logs, and DNS logs.

The GuardDuty security analysis relies on the Shared Responsibility Model of cloud environments, in which the provider has access to information hidden from the security analyst (such as DNS logs). GuardDuty provides findings categorized with pseudo MITRE TTP tagging.

When GuardDuty detects an incident, Cloud Sniper automatically analyzes which actions are available to mitigate and remediate the threat. If layer 7/4/3 attacks are taking place, it blocks the corresponding sources both in the WAF and in the Network Access Control Lists (NACLs) of the subnets where the affected instances run.
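Cloud Sniper's own remediation code is not reproduced in this post. As an illustration of the NACL half of this response, here is a hedged Python sketch that pulls remote IPv4 addresses out of a GuardDuty finding and adds inbound deny rules through the boto3 EC2 client's create_network_acl_entry call. The finding layout (the camelCase service.action.networkConnectionAction / portProbeAction structure used in GuardDuty events), the NACL ID and the starting rule number are assumptions. NACL rules are evaluated in ascending rule-number order, so a deny only takes effect if its number is lower than any rule allowing the same traffic, and each number must not already be in use.

```python
from typing import List


def remote_ips(finding: dict) -> List[str]:
    """Extract remote IPv4 addresses from a GuardDuty finding (assumed camelCase layout)."""
    action = finding.get("service", {}).get("action", {})
    ips = []
    conn = action.get("networkConnectionAction")
    if conn:
        ips.append(conn["remoteIpDetails"]["ipAddressV4"])
    for probe in action.get("portProbeAction", {}).get("portProbeDetails", []):
        ips.append(probe["remoteIpDetails"]["ipAddressV4"])
    return list(dict.fromkeys(ips))  # de-duplicate, keeping order


def block_ips_in_nacl(ec2_client, nacl_id: str, ips: List[str], first_rule: int = 10) -> List[int]:
    """Add one inbound deny entry per IP; returns the rule numbers used.

    ec2_client is expected to be a boto3 EC2 client, e.g. boto3.client("ec2").
    The first_rule numbering scheme is a hypothetical choice for this sketch.
    """
    used = []
    for offset, ip in enumerate(ips):
        rule_number = first_rule + offset
        ec2_client.create_network_acl_entry(
            NetworkAclId=nacl_id,
            RuleNumber=rule_number,
            Protocol="-1",        # all protocols
            RuleAction="deny",
            Egress=False,         # inbound rule
            CidrBlock=f"{ip}/32",
        )
        used.append(rule_number)
    return used
```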

Cloud Sniper creates a knowledge database that stores the IOCs affecting the cloud environment and builds its own threat intelligence feeds for future use.

The Cloud Sniper Analytics module analyzes the flow logs of the entire network in which an affected instance is deployed and derives analytics on traffic behavior, looking for Command and Control (C2) activity.
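The post does not show Cloud Sniper's actual analytics. A common, generic way to look for beaconing in VPC Flow Logs is to group flows by source, destination and port and check how regular the intervals between them are; the Python sketch below does that for records in the default (version 2) flow log format. The thresholds (at least 6 flows, coefficient of variation at most 0.1) are arbitrary assumptions that would need tuning.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Field order of the default VPC Flow Logs (version 2) record format.
FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr", "srcport",
          "dstport", "protocol", "packets", "bytes", "start", "end", "action", "log_status"]


def parse(lines: Iterable[str]) -> List[dict]:
    """Parse space-separated flow log lines, keeping only records with flow data."""
    records = []
    for line in lines:
        values = line.split()
        if len(values) != len(FIELDS):
            continue
        rec = dict(zip(FIELDS, values))
        if rec["log_status"] != "OK" or rec["start"] == "-":
            continue  # NODATA / SKIPDATA records carry no flow details
        records.append(rec)
    return records


def beacon_candidates(records: List[dict], min_events: int = 6,
                      max_cv: float = 0.1) -> List[Tuple[Tuple[str, str, str], float]]:
    """Return ((src, dst, dstport), mean_interval) for suspiciously regular flows.

    max_cv is the largest allowed coefficient of variation (stdev / mean) of the
    gaps between consecutive flow start times; lower means more clock-like.
    """
    starts: Dict[Tuple[str, str, str], List[int]] = defaultdict(list)
    for r in records:
        starts[(r["srcaddr"], r["dstaddr"], r["dstport"])].append(int(r["start"]))
    results = []
    for key, times in starts.items():
        if len(times) < min_events:
            continue
        times.sort()
        gaps = [b - a for a, b in zip(times, times[1:])]
        mean = statistics.mean(gaps)
        if mean <= 0:
            continue
        if statistics.pstdev(gaps) / mean <= max_cv:
            results.append((key, mean))
    return results
```

Because flow records aggregate traffic over an aggregation interval, this heuristic can only see beacons slower than that interval; jittered C2 will evade it, and chatty legitimate services can trigger it.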

Installation (for AWS)

Cloud Sniper uses Terraform to automatically deploy the entire infrastructure in the cloud. The core is written in Python, so it can be extended according to the needs of each vSOC.

You must have:

1. AWS CLI installed
2. A programmatic access key
3. AWS local profile configured
4. Terraform client installed

To deploy Cloud Sniper you must run:

1. ~$ git clone https://ift.tt/32gDfxO
2. ~$ cd Cloud-Sniper
3. Set the environment variables corresponding to the account in the variables.tf file
4. Edit the main.tf file:

provider "aws" {
  region                  = "region"
  shared_credentials_file = "/your-home/.aws/credentials"
  profile                 = "your-profile"
}

5. ~/Cloud-Sniper$ terraform init
6. ~/Cloud-Sniper$ terraform plan
7. ~/Cloud-Sniper$ terraform apply [yes]

AWS artifacts integration:

The platform is integrated with the following cloud technologies:

1. GuardDuty findings
2. SQS
3. CloudWatch
4. WAF
5. EC2
6. VPC Flow Logs
7. DynamoDB
8. IAM
9. S3
10. Lambda
11. Kinesis Firehose

Upcoming Features and Integrations

1. Security playbooks for cloud-based environments
2. Centralized security incident management for multiple accounts; web management UI
3. WAF analytics
4. Case management (automatic case creation)
5. IOCs enrichment and threat intelligence feeds
6. Automatic security reports based on well-known security standards (NIST)
7. Integration with third-party security tools (DFIR)

The post Cloud Sniper- To Manage Virtual Security Operations appeared first on HackersOnlineClub.

300+ TOP LARAVEL Interview Questions and Answers

Laravel Interview Questions for Freshers and Experienced Developers

1. What is Laravel? An open source free "PHP framework" based on MVC Design Pattern. It is created by Taylor Otwell. Laravel provides expressive and elegant syntax that helps in creating a wonderful web application easily and quickly. 2. List some official packages provided by Laravel? Below are some official packages provided by Laravel Cashier: Laravel Cashier provides an expressive, fluent interface to Stripe's and Braintree's subscription billing services. It handles almost all of the boilerplate subscription billing code you are dreading writing. In addition to basic subscription management, Cashier can handle coupons, swapping subscription, subscription "quantities", cancellation grace periods, and even generate invoice PDFs.Read More Envoy: Laravel Envoy provides a clean, minimal syntax for defining common tasks you run on your remote servers. Using Blade style syntax, you can easily setup tasks for deployment, Artisan commands, and more. Currently, Envoy only supports the Mac and Linux operating systems. Read More Passport: Laravel makes API authentication a breeze using Laravel Passport, which provides a full OAuth2 server implementation for your Laravel application in a matter of minutes. Passport is built on top of the League OAuth2 server that is maintained by Alex Bilbie. Read More Scout: Laravel Scout provides a simple, driver based solution for adding full-text search to your Eloquent models. Using model observers, Scout will automatically keep your search indexes in sync with your Eloquent records.Read More Socialite: Laravel Socialite provides an expressive, fluent interface to OAuth authentication with Facebook, Twitter, Google, LinkedIn, GitHub and Bitbucket. It handles almost all of the boilerplate social authentication code you are dreading writing.Read More 3. What is the latest version of Laravel? Laravel 5.8.29 is the latest version of Laravel. Here are steps to install and configure Laravel 5.8.29 4. What is Lumen? 
Lumen is PHP micro framework that built on Laravel's top components. It is created by Taylor Otwell. It is the perfect option for building Laravel based micro-services and fast REST API's. It's one of the fastest micro-frameworks available. 5. List out some benefits of Laravel over other Php frameworks? Top benifits of laravel framework Setup and customization process is easy and fast as compared to others. Inbuilt Authentication System. Supports multiple file systems Pre-loaded packages like Laravel Socialite, Laravel cashier, Laravel elixir,Passport,Laravel Scout. Eloquent ORM (Object Relation Mapping) with PHP active record implementation. Built in command line tool "Artisan" for creating a code skeleton ,database structure and build their migration. 6. List out some latest features of Laravel Framework Inbuilt CRSF (cross-site request forgery ) Protection. Laravel provided an easy way to protect your website from cross-site request forgery (CSRF) attacks. Cross-site request forgeries are malicious attack that forces an end user to execute unwanted actions on a web application in which they're currently authenticated. Inbuilt paginations Laravel provides an easy approach to implement paginations in your application.Laravel's paginator is integrated with the query builder and Eloquent ORM and provides convenient, easy-to-use pagination of database. Reverse Routing In Laravel reverse routing is generating URL's based on route declarations.Reverse routing makes your application so much more flexible. Query builder: Laravel's database query builder provides a convenient, fluent interface to creating and running database queries. It can be used to perform most database operations in your application and works on all supported database systems. The Laravel query builder uses PDO parameter binding to protect your application against SQL injection attacks. There is no need to clean strings being passed as bindings. 
read more Route caching Database Migration IOC (Inverse of Control) Container Or service container. 7. How can you display HTML with Blade in Laravel? To display html in laravel you can use below synatax. {!! $your_var !!} 8. What is composer? Composer is PHP dependency manager used for installing dependencies of PHP applications.It allows you to declare the libraries your project depends on and it will manage (install/update) them for you. It provides us a nice way to reuse any kind of code. Rather than all of us reinventing the wheel over and over, we can instead download popular packages. 9. How to install Laravel via composer? To install Laravel with composer run below command on your terminal. composer create-project Laravel/Laravel your-project-name version 10. What is php artisan. List out some artisan commands? PHP artisan is the command line interface/tool included with Laravel. It provides a number of helpful commands that can help you while you build your application easily. Here are the list of some artisian command. php artisan list php artisan help php artisan tinker php artisan make php artisan –versian php artisan make model model_name php artisan make controller controller_name 11. How to check current installed version of Laravel? Use php artisan –version command to check current installed version of Laravel Framework Usage: php artisan --version 12. List some Aggregates methods provided by query builder in Laravel? Aggregate function is a function where the values of multiple rows are grouped together as input on certain criteria to form a single value of more significant meaning or measurements such as a set, a bag or a list. Below is list of some Aggregates methods provided by Laravel query builder. 
count() Usage:$products = DB::table(‘products’)->count(); max() Usage:$price = DB::table(‘orders’)->max(‘price’); min() Usage:$price = DB::table(‘orders’)->min(‘price’); avg() Usage:$price = DB::table(‘orders’)->avg(‘price’); sum() Usage: $price = DB::table(‘orders’)->sum(‘price’); 13. Explain Events in Laravel? Laravel events: An event is an incident or occurrence detected and handled by the program.Laravel event provides a simple observer implementation, that allow us to subscribe and listen for events in our application.An event is an incident or occurrence detected and handled by the program.Laravel event provides a simple observer implementation, that allows us to subscribe and listen for events in our application. Below are some events examples in Laravel:- A new user has registered A new comment is posted User login/logout New product is added. 14. How to turn off CRSF protection for a route in Laravel? To turn off or diasble CRSF protection for specific routes in Laravel open "app/Http/Middleware/VerifyCsrfToken.php" file and add following code in it //add this in your class private $exceptUrls = ; //modify this function public function handle($request, Closure $next) { //add this condition foreach($this->exceptUrls as $route) { if ($request->is($route)) { return $next($request); } } return parent::handle($request, $next);} 15. What happens when you type "php artisan" in the command line? When you type "PHP artisan" it lists of a few dozen different command options. 16. Which template engine Laravel use? Laravel uses Blade Templating Engine. Blade is the simple, yet powerful templating engine provided with Laravel. Unlike other popular PHP templating engines, Blade does not restrict you from using plain PHP code in your views. In fact, all Blade views are compiled into plain PHP code and cached until they are modified, meaning Blade adds essentially zero overhead to your application. 
Blade view files use the .blade.php file extension and are typically stored in the resources/views directory.

17. How can you change your default database type?
By default, Laravel is configured to use MySQL. To change your default database, edit config/database.php, search for 'default' => 'mysql' and change it to whatever you want (for example, 'default' => 'sqlite').

18. Explain migrations in Laravel. How can you generate a migration?
Laravel migrations are like version control for your database, allowing a team to easily modify and share the application's database schema. Migrations are typically paired with Laravel's schema builder to easily build your application's database schema.
Steps to generate migrations in Laravel: open the command prompt or terminal, depending on your operating system, and use the make:migration Artisan command. When you create a migration file, Laravel stores it in the /database/migrations directory. Each migration file name contains a timestamp, which allows Laravel to determine the order of the migrations.

19. What are service providers in Laravel?
Service providers are the central place of all Laravel application bootstrapping. Your own application, as well as all of Laravel's core services, are bootstrapped via service providers. A service provider basically registers event listeners, middleware and routes with Laravel's service container. All service providers need to be registered in the providers array of the config/app.php file.

20. How do you register a service provider?
To register a service provider, follow these steps:
Open config/app.php.
Find the 'providers' array of the various service providers.
Add 'Illuminate\Abc\ABCServiceProvider::class,' to the end of the array.

21. What are implicit controllers?
Implicit controllers allow you to define a single route to handle every action in the controller. You can define it in the routes.php file with the Route::controller method.
Usage: Route::controller('base URI','');

22. What does "composer dump-autoload" do?
Whenever we run "composer dump-autoload", Composer re-reads the composer.json file to rebuild the list of files to autoload.

23. Explain the Laravel service container.
One of the most powerful features of Laravel is its service container. It is a powerful tool for resolving class dependencies and performing dependency injection in Laravel. Dependency injection is a fancy phrase that essentially means class dependencies are "injected" into the class via the constructor or, in some cases, "setter" methods.

24. How can you get a user's IP address in Laravel?
You can use the Request class's ip() method to get the IP address of the user in Laravel.
Usage:
public function getUserIp(Request $request) {
    // Getting the IP address of the remote user
    return $request->ip();
}

25. What are Laravel contracts?
Laravel's contracts are a set of interfaces that define the core services provided by the Laravel framework.

26. How do you enable the query log in Laravel?
Use the enableQueryLog method:
DB::connection()->enableQueryLog();
You can get an array of the executed queries by using the getQueryLog method:
$queries = DB::getQueryLog();

27. What are Laravel facades?
Laravel facades provide a static-like interface to classes that are available in the application's service container. Laravel ships with many facades that provide access to almost all of Laravel's features. Laravel facades serve as "static proxies" to underlying classes in the service container, providing the benefit of a terse, expressive syntax while maintaining more testability and flexibility than traditional static methods. All of Laravel's facades are defined in the Illuminate\Support\Facades namespace. You can easily access a facade like so:
use Illuminate\Support\Facades\Cache;
Route::get('/cache', function () {
    return Cache::get('key');
});

28. How do you use a custom table in a Laravel model?
We can use a custom table in Laravel by overriding the protected $table property of Eloquent. Below is a sample usage:
class User extends Eloquent {
    protected $table = "my_custom_table";
}

29. How can you define fillable attributes in a Laravel model?
You can define fillable attributes by overriding the $fillable property of Laravel Eloquent. Here is a sample usage:
class User extends Eloquent {
    protected $fillable = array('id', 'first_name', 'last_name', 'age');
}

30. What is the purpose of the Eloquent cursor() method in Laravel?
The cursor method allows you to iterate through your database records using a cursor, which will only execute a single query. When processing large amounts of data, the cursor method may be used to greatly reduce your memory usage.
Example usage:
foreach (Product::where('name', 'bar')->cursor() as $product) {
    //do some stuff
}

31. What are closures in Laravel?
A closure is an anonymous function that can be assigned to a variable or passed to another function as an argument. A closure can access variables from outside the scope in which it was created (in PHP, these are imported with the use keyword).

32. What is kept in the vendor directory of Laravel?
Any packages pulled in by Composer are kept in the vendor directory of Laravel.

33. What does the PHP compact function do?
PHP's compact() function takes each variable name passed to it and tries to find a variable with that same name. If the variable is found, it adds it to an associative array, using the name as the key.

34. In which directory are controllers located in Laravel?
All controllers are kept in the app/Http/Controllers directory.

35. Define ORM.
Object-relational mapping (ORM) is a programming technique for converting data between incompatible type systems in object-oriented programming languages.

36. How do you create a record in Laravel using Eloquent?
To create a new record in the database using Laravel Eloquent, create a new model instance, set attributes on the model, then call the save method. Here is a sample usage:
public function saveProduct(Request $request) {
    $product = new Product;
    $product->name = $request->name;
    $product->description = $request->description;
    $product->save();
}

37. How do you get the logged-in user's info in Laravel?
The Auth::user() method is used to get the logged-in user's info in Laravel.
Usage:
if (Auth::check()) {
    $loggedIn_user = Auth::user();
    dd($loggedIn_user);
}

38. Does Laravel support caching?
Yes, Laravel supports popular caching backends like Memcached and Redis. By default, Laravel is configured to use the file cache driver, which stores the serialized, cached objects in the file system. For large projects, it is recommended to use Memcached or Redis.

39. What are named routes in Laravel?
Named routing is another amazing feature of the Laravel framework. Named routes allow you to refer to routes more conveniently when generating redirects or URLs. You can specify named routes by chaining the name method onto the route definition:
Route::get('user/profile', function () {
    //
})->name('profile');
You can specify route names for controller actions:
Route::get('user/profile', 'UserController@showProfile')->name('profile');
Once you have assigned a name to your routes, you may use the route's name when generating URLs or redirects via the global route function:
// Generating URLs...
$url = route('profile');
// Generating Redirects...
return redirect()->route('profile');

40. What are traits in Laravel?
Traits are simply a group of methods that you want to include within another class. A trait, like an abstract class, cannot be instantiated by itself. Traits were created to reduce the limitations of single inheritance in PHP by enabling a developer to reuse sets of methods freely in several independent classes living in different class hierarchies.
Laravel traits example:
trait Sharable {
    public function share($item) {
        return 'share this item';
    }
}
You could then include this trait within other classes like this:
class Post {
    use Sharable;
}
class Comment {
    use Sharable;
}
Now if you were to create new objects out of these classes, you would find that they both have the share() method available:
$post = new Post;
echo $post->share(''); // 'share this item'
$comment = new Comment;
echo $comment->share(''); // 'share this item'

41. How do you create a migration via Artisan?
Use the following command to create a migration via Artisan:
php artisan make:migration create_users_table

42. Explain validations in Laravel.
In programming, validations are a handy way to ensure that your data is always in a clean and expected format before it gets into your database. Laravel provides several different ways to validate your application's incoming data. By default, Laravel's base controller class uses a ValidatesRequests trait, which provides a convenient method to validate incoming HTTP requests from the client. You can also validate data in Laravel by creating a form request.

43. Explain Laravel Eloquent.
Laravel's Eloquent ORM is one of the most popular PHP ORMs (object-relational mapping). It provides a beautiful, simple ActiveRecord implementation for working with your database. In Eloquent, each database table has a corresponding model that is used to interact with the table and perform database operations on it.
Sample model class in Laravel:
namespace App;
use Illuminate\Database\Eloquent\Model;
class Users extends Model {
}

44. Can Laravel be hacked?
The short answer is no, with caveats. No application is 100% secure (it depends on what you mean by "secure" as well), but Laravel makes it hard to perform unwanted data changes without the user knowing: it has built-in CSRF protection, input validation, encrypted sessions/cookies, etc. Laravel also hashes passwords with a strong algorithm (bcrypt by default).
With every update, there is the possibility of new holes, but you can keep up to date with Symfony changes and security issues on their site.

45. Does Laravel support PHP 7?
Yes, Laravel supports PHP 7.

46. Define the Active Record implementation. How do you use it in Laravel?
Active Record is an architectural pattern found in software engineering that stores in-memory object data in relational databases. Active Record facilitates the creation and use of business objects whose data needs to be persisted in the database. Laravel implements Active Record through the Eloquent ORM. Below is a sample usage of the Active Record implementation in Laravel:
$product = new Product;
$product->title = 'Iphone 6s';
$product->save();
Active Record style ORMs map an object to a database row. In the above example, we would be mapping the Product object to a row in the products table of the database.

47. List the types of relationships supported by Laravel.
Laravel supports 7 types of table relationships:
One To One
One To Many
One To Many (Inverse)
Many To Many
Has Many Through
Polymorphic Relations
Many To Many Polymorphic Relations

48. Explain the Laravel query builder.
Laravel's database query builder provides a convenient, fluent interface for creating and running database queries. It can be used to perform most database operations in your application and works on all supported database systems. The Laravel query builder uses PDO parameter binding to protect your application against SQL injection attacks.

49. What is Laravel Elixir?
Laravel Elixir provides a clean, fluent API for defining basic Gulp tasks for your Laravel application. Elixir supports common CSS and JavaScript preprocessors like Sass and Webpack. Using method chaining, Elixir allows you to fluently define your asset pipeline.

50. How do you enable maintenance mode in Laravel 5?
You can enable maintenance mode in Laravel 5 simply by executing the following command:
//To enable maintenance mode
php artisan down
//To disable maintenance mode
php artisan up

51. List the databases Laravel supports.
Currently, Laravel supports four major databases:
MySQL
Postgres
SQLite
SQL Server

52. How do you get the current environment in Laravel 5?
You may access the current application environment via the environment method:
$environment = App::environment();
dd($environment);

53. What is the purpose of the dd() function in Laravel?
Laravel's dd() is a helper function that dumps a variable's contents to the browser and halts further script execution.

54. What is method spoofing in Laravel?
HTML forms do not support PUT, PATCH or DELETE requests. So, when defining PUT, PATCH or DELETE routes that are called from an HTML form, you will need to add a hidden _method field to the form. The value sent with the _method field will be used as the HTTP request method. To generate the hidden _method input field, you may use the method_field helper function. In a Blade template, you can write it as below:
{{ method_field('PUT') }}

55. How do you assign multiple middleware to a Laravel route?
You can assign multiple middleware to a Laravel route by using the middleware method.
Example:
// Assign multiple middleware to a specific route
Route::get('/', function () {
    //
})->middleware('firstMiddleware', 'secondMiddleware');
// Assign multiple middleware to a route group
Route::group(['middleware' => ['firstMiddleware', 'secondMiddleware']], function () {
    //
});


Text

Original Post from Security Affairs Author: Pierluigi Paganini

Two hacking groups associated with large-scale crypto mining campaigns, Pacha Group and Rocke Group, wage war to compromise as much as possible cloud-based infrastructure.

The first group, tracked as Pacha Group, has Chinese origins; it was first detected in September 2018 and is known to deliver the Linux.GreedyAntd miner.

The Pacha Group’s attack chain starts by compromising vulnerable servers, either by launching brute-force attacks against services like WordPress or phpMyAdmin or, in some cases, by leveraging a known exploit for an outdated version of similar services.

Researchers at Intezer Labs continued to monitor this cybercrime group and discovered that it is also targeting cloud-based environments and working to disrupt the operations of other crypto-mining groups, such as the Rocke Group.

“Despite sharing nearly 30% of code with previous variants, detection rates of the new Pacha Group variants are low” reads the analysis published by Intezer Labs.

“The main malware infrastructure appears to be identical to previous Pacha Group campaigns, although there is a distinguishable effort to detect and mitigate Rocke Group’s implants.”

The Rocke Group has also used, in campaigns dating back to April 2018, a cryptocurrency miner that attempts to kill any other cryptocurrency malware running on the infected machine.

To fight its rivals, Pacha Group added a list of hardcoded IP addresses to the blacklist implemented by Linux.GreedyAntd, aiming to block Rocke’s miners by routing their traffic back to the compromised machines.

“After analyzing the IP blacklist we discovered that some of these IPs, even though they may not necessarily be malicious, are known to have been used by Rocke Group in the past.” continues the report. “As an example, systemten[.]org is in this blacklist and it is known that Rocke Group has used this domain for their crypto-mining operations. The following are some domains that correspond to their hardcoded IPs in Linux.GreedyAntd’s blacklist that have Rocke Group correlations”
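The report does not publish GreedyAntd’s blocking code, so here is only a rough sketch of the defender-side counterpart: checking a list of observed remote addresses (for example, copied from `ss -tn` output) against a hardcoded IP blacklist. The blacklist entries below are placeholders from the RFC 5737 documentation ranges, not Rocke Group indicators; substitute the IOCs published by Intezer Labs.

```python
import ipaddress
from typing import Iterable

# Placeholder indicators (RFC 5737 documentation ranges), NOT real Rocke Group IOCs.
# Replace them with the addresses listed in the Intezer Labs report.
BLACKLIST = [
    ipaddress.ip_network("192.0.2.10/32"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def flag_connections(remote_addrs: Iterable[str]) -> list[str]:
    """Return the remote addresses that fall inside any blacklisted network."""
    hits = []
    for raw in remote_addrs:
        # Strip a trailing ":port" (this sketch only handles IPv4 "addr:port").
        host = raw.rsplit(":", 1)[0] if raw.count(":") == 1 else raw
        try:
            addr = ipaddress.ip_address(host)
        except ValueError:
            continue  # skip anything that is not a literal IP address
        if any(addr in net for net in BLACKLIST):
            hits.append(raw)
    return hits


if __name__ == "__main__":
    observed = ["192.0.2.10:3333", "203.0.113.5:443", "198.51.100.77:80", "not-an-ip"]
    print(flag_connections(observed))
```

Using `ip_network` objects rather than plain strings lets the same list hold single hosts (`/32`) and whole ranges.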

Both groups are actively targeting cloud infrastructure to run their cryptocurrency miners, and they have started fighting each other.

The miners used by both groups are able to search for and disable cloud security and monitoring products from various vendors, such as Alibaba Cloud. Both malware families also include a lightweight user-mode rootkit known as Libprocesshider and have abused the Atlassian vulnerability–

“We believe that these findings are relevant within the context of raising awareness about cloud-native threats, particularly on vulnerable Linux servers,” reads the report published by the experts. “While threat actor groups are competing with one another, this evidence may suggest that threats to cloud infrastructure are increasing.”

Further details, including the list of Indicators of Compromise (IOCs), are reported in the analysis published by Intezer Labs.



Pierluigi Paganini

(SecurityAffairs – Pacha Group, cryptocurrency miners)

The post Pacha Group declares war to rival crypto mining hacking groups appeared first on Security Affairs.




Text

Hacktivity 2017 Wrap-Up Day 1

My wrap-up crazy week continues… I’m now in Budapest to attend Hacktivity for the first time. During the opening ceremony some figures were given about this event: 14th edition(!), 900 attendees from 23 different countries and 36 speakers. Here is a nice introduction video. The venue is nice, with two tracks in parallel, workshops (called “Hello Workshops”), a hacker center, sponsors’ booths and… a wall-of-sheep! After so many years, you immediately realize that it is well organized and everything is under control.

As usual, the day started with a keynote. Costin Raiu from Kaspersky presented “Why some APT research is like palaeontology?”. Every day, Kaspersky collects 500K malware samples, and fewer than 50 are really interesting for his team. The idea to compare this job with palaeontology came from a picture of Nessie (the Loch Ness monster). We see something in the picture, but are we sure that it’s a monster? Costin gave the example of Regin: they discovered the first sample from 1999, one from 2003 and 43 from 2007. Based on this, how can you be certain that you found something interesting? Finding IOCs and C&Cs is like finding the bones of a dinosaur. In the end, you have a complete skeleton and are able to publish your findings (the report). In the next part of the keynote, Costin gave examples of interesting cases they found, with nice stories like the 0-day that was discovered thanks to a comment the developer left in his code. Costin’s advice is to learn YARA and to write good signatures to spot interesting stuff.

The first regular talk was presented by Zoltán Balázs: “How to hide your browser 0-days?”. It was a mix of crypto and exploitation. Zoltán’s research started after a discussion with a vendor that was sure it could catch all kinds of 0-day exploits against browsers. “Challenge accepted” for him. The problem with 0-day exploits is that they quickly become ex-0-day exploits once they are distributed by exploit kits. Why? Security researchers quickly find samples and analyze them, and patches soon become available. From an attacker’s point of view, this is very frustrating: you spend a lot of money and lose it quickly. The idea was to deliver the exploit over an encrypted channel between the browser and the dropper. The shellcode is encrypted and, once executed, downloads the malware (also via a secure channel if required). Zoltán explained how he implemented the encrypted channel using ECDH (that was the crypto part of the talk). This is better than SSL because, if you control the client, it is too easy to play MitM and inspect the traffic. That is not possible with the scheme Zoltán implemented, which also resists replay attacks. The proof of concept has been released.
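The talk did not say which curve or library Zoltán used. To illustrate just the ECDH key-agreement step, here is a minimal pure-Python X25519 sketch following RFC 7748 (the curve choice is an assumption): each side combines its own private key with the peer’s public key, and both arrive at the same shared secret, which a passive observer of the exchanged public keys cannot compute. It is for teaching only: it is not constant-time and derives no session key, so real code should use a vetted crypto library.

```python
import os

P = 2**255 - 19  # field prime of Curve25519
A24 = 121665     # (486662 - 2) / 4, the ladder constant from RFC 7748


def _decode_scalar(k: bytes) -> int:
    """Clamp a 32-byte private key as required by RFC 7748."""
    b = bytearray(k)
    b[0] &= 248   # clear the 3 low bits
    b[31] &= 127  # clear the top bit
    b[31] |= 64   # set the second-highest bit
    return int.from_bytes(b, "little")


def _decode_u(u: bytes) -> int:
    """Decode a u-coordinate, ignoring the unused top bit."""
    b = bytearray(u)
    b[31] &= 127
    return int.from_bytes(b, "little") % P


def x25519(k: bytes, u: bytes) -> bytes:
    """Scalar multiplication on Curve25519 via the Montgomery ladder (RFC 7748, section 5)."""
    k_int = _decode_scalar(k)
    x1 = _decode_u(u)
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        k_t = (k_int >> t) & 1
        swap ^= k_t
        if swap:  # conditional swap (NOT constant-time in this sketch)
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = k_t
        a = (x2 + z2) % P
        aa = a * a % P
        b = (x2 - z2) % P
        bb = b * b % P
        e = (aa - bb) % P
        c = (x3 + z3) % P
        d = (x3 - z3) % P
        da = d * a % P
        cb = c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    # Convert back from projective coordinates: x2 / z2 mod p.
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


BASE_POINT = (9).to_bytes(32, "little")  # standard Curve25519 base point u = 9

if __name__ == "__main__":
    # Each party picks a random private key and publishes private * base point.
    alice_priv, bob_priv = os.urandom(32), os.urandom(32)
    alice_pub = x25519(alice_priv, BASE_POINT)
    bob_pub = x25519(bob_priv, BASE_POINT)
    # Both sides compute the same shared secret from their key and the peer's public key.
    assert x25519(alice_priv, bob_pub) == x25519(bob_priv, alice_pub)
    print("shared secret:", x25519(alice_priv, bob_pub).hex())
```

In a scheme like the one described, the shared secret would then be fed into a key-derivation function to produce the symmetric key that encrypts the shellcode and payload.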

Then another Zoltán came on stage: Zoltán Wollner, with a presentation called “Behind the Rabbit and beyond the USB”. He started with a scene from the show Mr. Robot in which they use a Rubber Ducky to get access to a computer. Indeed, a classic USB stick might have hidden/evil features. The talk was in fact a presentation of the Bash Bunny tool from Hak5. This USB stick is… whatever you want! A keyboard, a flash drive, an Ethernet/serial adapter and more! He demonstrated some scenarios:

QuickCreds: stealing credentials from a locked computer

EternalBlue

This device targets low-hanging fruit but… it just works! Note that it requires physical access to the target computer.

After the lunch coffee break, Mateusz Olejarka presented “REST API, pentester’s perspective”. Mateusz is a pentester and, in his experience, he encounters more and more APIs when conducting penetration tests. The first time that an API was spotted in an attack was when @sehacure pwned a lot of Facebook accounts via the API and the password reset feature. On the regular website, he was rejected after a few attempts, but the anti-bruteforce protection was not enabled on the beta Facebook site! Today, REST APIs are everywhere and most applications and web tools have an API. An interesting number: by 2018, 50% of B2B exchanges will be performed via web APIs. The principle of an API is simple: a web service that offers methods and processes data in JSON (most of the time). Methods are GET/PUT/PATCH/DELETE/POST/… To test a REST API, we need some information: the endpoints, the documentation, access keys and sample calls. Mateusz explained how to find them. To find endpoints, we just try URIs like “/api”, “/v1”, “/v1.1”, “/api/v1” or “/ping”, “/status”, “/health”, … Sometimes the documentation is available online or returned by the API itself. To find keys, two reliable sources are:

Apps / mobile apps

Github!

Also, fuzzing can be useful to stress-test the API. This was one of my favourite talks, with plenty of useful information if you are working in the pentesting area.
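The endpoint-guessing step Mateusz described can be sketched as follows: build candidate URLs from the common paths he listed and report those that do not answer 404. The `fetch_status` parameter is a hypothetical hook added so the logic can be tested offline; the default uses `urllib`, and it should only be pointed at systems you are authorized to test.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable

# Common API paths mentioned in the talk.
COMMON_PATHS = ["/api", "/v1", "/v1.1", "/api/v1", "/ping", "/status", "/health"]


def candidate_urls(base: str, paths: Iterable[str] = COMMON_PATHS) -> list[str]:
    """Join each path onto the base URL without doubling the slash."""
    return [base.rstrip("/") + p for p in paths]


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, or 0 on network errors."""
    req = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx still tell us something
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def discover(base: str, fetch_status: Callable[[str], int] = http_status) -> dict[str, int]:
    """Map every candidate URL that neither failed nor returned 404 to its status."""
    found = {}
    for url in candidate_urls(base):
        status = fetch_status(url)
        if status and status != 404:
            found[url] = status
    return found
```

For example, with a fake responder `discover("https://api.example.com/", lambda u: {"https://api.example.com/v1": 200}.get(u, 404))` returns only the `/v1` entry. Statuses like 401 or 403 are kept on purpose: they usually mean the endpoint exists but needs a key.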

The next speaker was Leigh-Anne Galloway: “Money makes money: How to buy an ATM and what you can do with it”. She started with the history of ATMs. The first one was invented in 1967 (for Barclays in the UK). Today, there are 3.8M devices in the wild. The key players are Siemens Nixdorf, NSC and Fujitsu. She explained how difficult it was for her just to buy an ATM. Do you go the official way or the “underground” way? After many issues, she was finally able to have an ATM delivered to her home. Cool, but impossible to bring it into her apartment without causing damage. She decided to leave it in the parking lot and to perform the tests outside. In the second part, Leigh-Anne explained the different tests/attacks performed against the ATM: bruteforce, and attacks at the OS, hardware and software levels.

The event was split into two tracks, so I had to make a choice. The afternoon started with Julien Thomas and “Limitations of Android permission system: packages, processes and user privacy”. He explained in detail how access rights and permissions are defined and enforced by Android. Along with a deep review of the components, he demonstrated an app that has no access once installed but, due to weaknesses in the revocation process, ends up with more access than it initially had.

Then Csaba Fitzl talked about malware and the techniques it uses to protect itself against security researchers and analysts: “How to convince a malware to avoid us?”. Malware authors are afraid of:

Security researchers

Sandboxes

Virtual machines

Hardened machines

Malware hates being analysed and sometimes avoids infecting certain targets (ex: some samples check the keyboard mapping to detect the victim’s country). Csaba reviewed several examples of known malware and how they detect whether they are being monitored. The techniques are numerous and, as Csaba said, it could take weeks to review all of them. He also gave nice tips to harden your virtual machines/sandboxes so that they look like real computers used by humans. He then presented small utilities he wrote to protect potential victims. Example: mutex-grabber, which monitors malwr.com and automatically creates the discovered mutexes on the local OS (many samples exit when their mutex already exists, believing the host is already infected). The tools reviewed in the presentation are available here. Also a great talk with plenty of useful tips.
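Csaba’s own tools are linked from his talk and are not reproduced here. As a rough Linux-side illustration of the kind of check he described, the sketch below looks for two common virtualization tells that a sample might test, the DMI vendor/product strings and the NIC vendor prefix (OUI), so you can see what your own analysis VM exposes. The marker strings and OUIs listed are a small, non-exhaustive assumption set.

```python
from pathlib import Path

# Non-exhaustive hypervisor markers that commonly show up in DMI strings.
VM_DMI_MARKERS = ("virtualbox", "vmware", "qemu", "kvm", "xen")

# Well-known MAC address prefixes (OUIs) assigned to virtual NICs.
VM_MAC_PREFIXES = {
    "08:00:27": "VirtualBox",
    "00:05:69": "VMware",
    "00:0c:29": "VMware",
    "00:1c:14": "VMware",
    "00:50:56": "VMware",
    "52:54:00": "QEMU/KVM",
}


def dmi_findings(dmi_text: str) -> list[str]:
    """Return the hypervisor markers found in the DMI vendor/product text."""
    lowered = dmi_text.lower()
    return [m for m in VM_DMI_MARKERS if m in lowered]


def mac_findings(macs: list[str]) -> list[str]:
    """Return 'MAC -> vendor' for every MAC address with a known virtual OUI."""
    hits = []
    for mac in macs:
        vendor = VM_MAC_PREFIXES.get(mac.lower()[:8])
        if vendor:
            hits.append(f"{mac} -> {vendor}")
    return hits


def _read(path: Path) -> str:
    try:
        return path.read_text(errors="ignore").strip()
    except OSError:
        return ""


def read_local_artifacts() -> tuple[str, list[str]]:
    """Collect DMI strings and interface MACs from sysfs (Linux only)."""
    dmi_dir = Path("/sys/class/dmi/id")
    dmi = " ".join(_read(dmi_dir / name) for name in ("sys_vendor", "product_name"))
    macs = []
    net = Path("/sys/class/net")
    if net.is_dir():
        for iface in net.iterdir():
            addr = _read(iface / "address")
            if addr:
                macs.append(addr)
    return dmi, macs


if __name__ == "__main__":
    dmi, macs = read_local_artifacts()
    print("DMI markers:", dmi_findings(dmi))
    print("VM MAC prefixes:", mac_findings(macs))
```

Anything this reports is a tell a sample could also see; hardening means changing it (for example, assigning a non-hypervisor MAC to the analysis VM).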

After the last coffee break, Harman Singh presented “Active Directory Threats & Detection: Heartbeat that keeps you alive may also kill you!”. Active Directories remain a juicy target because they are implemented in almost all organizations worldwide! He reviewed all the components of an Active Directory, then explained some techniques like enumeration of accounts, how to collect data, how to achieve privilege escalation and how to access juicy data.

Finally, Ignat Korchagin closed the day with a presentation “Exploiting USB/IP in Linux”. When he asked who in the room knew or used USB/IP, nobody raised a hand. Nobody was aware of this technique, and neither was I! The principle is nice: USB/IP allows you to use a USB device connected to computer A from computer B. The USB traffic (URBs, USB Request Blocks) is sent over TCP/IP. More information is available here. This looks nice! But… the main problem is that the application-level protocol is implemented at kernel level! A packet consists of a header plus a payload, and the kernel gets the size of the data to process from the header. That size can be controlled by an attacker, and we are facing a nice buffer overflow! This vulnerability is referenced as CVE-2016-3955. Ignat also found a nice name for his vulnerability: “UBOAT” for “(U)SB/IP (B)uffer (O)verflow (AT)tack”. He’s still looking for a nice logo :). Fortunately, many requirements must be met for a system to be vulnerable:

The kernel must be unpatched

The victim must use USB/IP

The victim must be a client

The victim must import at least one device

The victim must be root

The attacker must own the server or play MitM.

Ignat completed his talk with a live demo that crashed the computer (DoS), but there is probably a way to exploit it further to achieve remote code execution.
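The bug class is easy to show in miniature. The sketch below is not the real USB/IP wire format (the kernel’s actual header has many more fields); it assumes a hypothetical packet made of a 4-byte big-endian length followed by the payload, and shows the two checks an attacker-controlled length field needs before it is trusted to size a copy.

```python
import struct

MAX_TRANSFER = 4096           # hypothetical size of the receiver's buffer
HEADER = struct.Struct(">I")  # hypothetical header: one big-endian uint32 length


class MalformedPacket(ValueError):
    """Raised when a packet's declared length cannot be trusted."""


def parse_packet(data: bytes, max_len: int = MAX_TRANSFER) -> bytes:
    """Return the payload, rejecting lengths the buffer or the packet cannot hold."""
    if len(data) < HEADER.size:
        raise MalformedPacket("truncated header")
    (declared,) = HEADER.unpack_from(data, 0)
    # The vulnerable pattern copies `declared` bytes into a fixed-size buffer
    # without these checks; both must pass before any copy happens.
    if declared > max_len:
        raise MalformedPacket(f"declared length {declared} exceeds buffer ({max_len})")
    payload = data[HEADER.size:]
    if declared > len(payload):
        raise MalformedPacket("declared length exceeds received data")
    return payload[:declared]
```

In Python an oversized slice is harmless, but in kernel C the same unchecked length drives a `memcpy` past the end of the buffer, which is exactly what turns a header field into an overflow.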

Enough for today, stay tuned for the second day!

[The post Hacktivity 2017 Wrap-Up Day 1 has been first published on /dev/random]

from Xavier


Text

DFIR - Analyze Windows Event Logs (evtx) from a Linux machine using sigma rules, chainsaw and evtx dump

At work, I had a task to perform a quick compromise assessment of a hacked Windows server, and I got a bunch of evtx files from the suspected host for analysis. I run Linux Mint + i3-gaps, and in my honest opinion it’s much easier and more productive to perform forensics from a Linux machine than from Windows. This post is meant for Linux users who want to perform Digital Forensics to find IOCs from Windows…
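The post is truncated here, so its exact workflow is not shown. As a sketch of one step, assuming the .evtx files were first converted to JSON lines with evtx_dump (for example `evtx_dump -o jsonl Security.evtx > security.jsonl`), the snippet below counts a few event IDs commonly reviewed in a compromise assessment. The record layout (`Event.System.EventID`, sometimes wrapped in an object with a `#text` key) is an assumption about evtx_dump’s output; adjust it if yours differs.

```python
import json
import sys
from collections import Counter
from typing import Iterable, Optional

# A few event IDs often reviewed during a Windows compromise assessment.
INTERESTING = {
    1102: "audit log cleared",
    4624: "successful logon",
    4625: "failed logon",
    4688: "process created",
    4720: "user account created",
    7045: "service installed",
}


def event_id(record: dict) -> Optional[int]:
    """Extract the EventID, handling both plain and attribute-wrapped forms."""
    raw = record.get("Event", {}).get("System", {}).get("EventID")
    if isinstance(raw, dict):  # e.g. {"#attributes": {...}, "#text": 7045}
        raw = raw.get("#text")
    try:
        return int(raw)
    except (TypeError, ValueError):
        return None


def count_interesting(lines: Iterable[str]) -> Counter:
    """Count records per interesting event ID in JSON-lines input."""
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        eid = event_id(json.loads(line))
        if eid in INTERESTING:
            counts[eid] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        for eid, n in sorted(count_interesting(fh).items()):
            print(f"{eid} ({INTERESTING[eid]}): {n}")
```

Counts are only a starting point: a burst of 4625 followed by a 4624, or any 1102, tells you which time window to read in full.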


Text

Original Post from Security Affairs Author: Pierluigi Paganini

Security experts at Bad Packets uncovered a DNS hijacking campaign that is targeting the users of popular online services, including Gmail, Netflix, and PayPal.

Experts at Bad Packets uncovered a DNS hijacking campaign that has been ongoing for the past three months, in which attackers target the users of popular online services, including Gmail, Netflix, and PayPal.

Hackers compromised consumer routers and modified the DNS settings to redirect users to fake websites designed to trick victims into providing their login credentials.

.@IXIAcom researchers have posted their findings on the DNS hijacking attacks here: https://t.co/OFLrYZKh3W. Targeted sites include Netflix, PayPal, Uber, Gmail, and more.

They’ve also detected additional rogue DNS servers: 195.128.124.150 and 195.128.124.181

— Bad Packets Report (@bad_packets) April 5, 2019

Bad Packets experts have identified four rogue DNS servers being used by attackers to hijack user traffic.

“Over the last three months, our honeypots have detected DNS hijacking attacks targeting various types of consumer routers.” reads the report published by Bad Packets. “All exploit attempts have originated from hosts on the network of Google Cloud Platform (AS15169). In this campaign, we’ve identified four distinct rogue DNS servers being used to redirect web traffic for malicious purposes.”

Experts pointed out that all exploit attempts have originated from hosts on the network of Google Cloud Platform (AS15169).

The first wave of DNS hijacking attacks targeted D-Link DSL modems, including D-Link DSL-2640B, DSL-2740R, DSL-2780B, and DSL-526B. The DNS server used in this attack was hosted by OVH Canada (66[.]70.173.48).

WARNING Unauthenticated Remote DNS Change Exploit Detected

Target: D-Link routers (https://t.co/TmYBAAR1T7) Source IP: 35.190.195.236 (AS15169) Rogue DNS server: 66.70.173.48 (AS16276) pic.twitter.com/fRnCoXQM3H

— Bad Packets Report (@bad_packets) December 30, 2018

The second wave of attacks targeted the same D-Link modems, but attackers used a different rogue DNS server (144[.]217.191.145) hosted by OVH Canada.

WARNING Unauthenticated Remote DNS Change Exploit Detected

Target: D-Link routers (https://t.co/TmYBAAR1T7) Source IP: 35.240.128.42 (AS15169) Rogue DNS server: 144.217.191.145 (AS16276) pic.twitter.com/B4uW4kYq1H

— Bad Packets Report (@bad_packets) February 6, 2019

“As Twitter user “parseword” noted, the majority of the DNS requests were being redirected to two IPs allocated to a crime-friendly hosting provider (AS206349) and another pointing to a service that monetizes parked domain names (AS395082).” continue the experts.

The third wave of attacks observed in March hit a larger number of router models, including ARG-W4 ADSL routers, DSLink 260E routers, Secutech routers, and TOTOLINK routers.

WARNING Multiple Remote DNS Change Exploits Detected https://t.co/Ku6Wv997Yc Target: Multiple (see attached list of routers) Source IP: Multiple @googlecloud hosts (AS15169) Recon Scan Type: Masscan Rogue DNS servers: 195.128.124.131 & 195.128.126.165 (AS47196) pic.twitter.com/IKXQDZBjv1

— Bad Packets Report (@bad_packets) March 30, 2019

The fourth wave of DNS hijacking attacks originated from three distinct Google Cloud Platform hosts and involved two rogue DNS servers hosted in Russia by Inoventica Services (195[.]128.126.165 and 195[.]128.124.131).
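A quick way to act on these indicators is to check whether a machine is pointing at one of the rogue resolvers. The sketch below refangs the defanged notation used in the article (`66[.]70.173.48`) and compares the `nameserver` entries of a resolv.conf-style text against the six rogue DNS servers named in this post. It only covers hosts whose resolver settings you can read; for the routers themselves, compare the DNS servers shown in the admin interface against the same list.

```python
# Rogue DNS servers named in this post (as written, partly defanged).
ROGUE_DNS_DEFANGED = [
    "66[.]70.173.48",
    "144[.]217.191.145",
    "195[.]128.124.131",
    "195[.]128.126.165",
    "195.128.124.150",
    "195.128.124.181",
]


def refang(indicator: str) -> str:
    """Turn the defanged '[.]' notation back into a usable address."""
    return indicator.replace("[.]", ".")


ROGUE_DNS = {refang(i) for i in ROGUE_DNS_DEFANGED}


def nameservers(resolv_conf: str) -> list[str]:
    """Extract nameserver addresses, ignoring '#' and ';' comments."""
    servers = []
    for line in resolv_conf.splitlines():
        for marker in ("#", ";"):
            line = line.split(marker, 1)[0]
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def rogue_resolvers(resolv_conf: str) -> list[str]:
    """Return the configured nameservers that match a known rogue DNS server."""
    return [s for s in nameservers(resolv_conf) if s in ROGUE_DNS]


if __name__ == "__main__":
    from pathlib import Path

    conf = Path("/etc/resolv.conf")
    if conf.exists():
        print("rogue resolvers:", rogue_resolvers(conf.read_text()) or "none found")
```

On hosts using a local stub resolver (for example `127.0.0.53` with systemd-resolved), the upstream servers handed out by the router are what matter, so check those too.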

In all the DNS hijacking attacks, the operators performed an initial recon scan using Masscan. Attackers checked for active hosts on port 81/TCP before launching the DNS hijacking exploits.
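Since the attackers’ recon step was a sweep for 81/TCP, one simple self-check is whether your own router answers on that port from the internet. Below is a minimal single-host sketch with Python’s `socket` module; it is a plain TCP connect test, not a Masscan equivalent, and should only be run against equipment you own, ideally from outside your network against your public IP.

```python
import socket


def port_open(host: str, port: int = 81, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `port_open("203.0.113.10")` (a placeholder address) returning `True` would mean the router’s web interface on 81/TCP is reachable by the same kind of scan.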

The campaigns targeted users of Gmail, PayPal, Netflix and Uber; the attackers also hit several Brazilian banks.

Experts found over 16,500 vulnerable routers potentially exposed to this DNS hijacking campaign.

“Establishing a definitive total of vulnerable devices would require us to employ the same tactics used by the threat actors in this campaign. Obviously this won’t be done, however we can catalog how many are exposing at least one service to the public internet via data provided by BinaryEdge” continues Bad Packets.

Experts explained that attackers abused Google’s Cloud platform for these attacks because it is easy for everyone with a Google account to access a “Google Cloud Shell.” This service offers users the equivalent of a Linux VPS with root privileges directly in a web browser.

Further technical details, including IoCs, are reported in the analysis published by Bad Packets:

https://badpackets.net/ongoing-dns-hijacking-campaign-targeting-consumer-routers/



Pierluigi Paganini

(SecurityAffairs – DNS hijacking, hacking)

The post DNS hijacking campaigns target Gmail, Netflix, and PayPal users appeared first on Security Affairs.



0 notes

Text

Original Post from Security Affairs Author: Pierluigi Paganini

The well-known expert unixfreaxjp analyzed a new Chinese ELF DDoS malware, tracked as “Linux/DDoSMan”, that evolved from the Elknot malware to deliver a new ELF bot.

Non-Technical Premise

“This report is meant for incident response or Linux forensics purpose, TO HELP admin & IR folks”: with this very first sentence begins the new analysis by one of the most talented reversers in the worldwide security community, Mr. unixfreaxjp, head of the MalwareMustDie team. The first thought that comes to mind is that while everybody is looking for “fame” and “glory”, here is someone working hard just “TO HELP”. Is there a security group greater than this?

But let’s get to the findings.

Mr. unixfreaxjp’s new analysis covers a new Chinese ELF DDoS malware, “Linux/DDoSMan”, which appears to be a new artifact that reuses the old Elknot code to deliver a new ELF bot: in fact, there are two new ELF bot binaries dropped by the Elknot Trojan (also known as ChickDDoS or Mayday).

On the attribution, Mr. unixfreaxjp commented on VirusTotal as follows: “This is the new bot client of the “DDOS manager” toolkit used by China(PRC) DoS attacker. (….) The code seems inspired from multiple source code of China basis DDoS client, like Elknot. Not xorDDoS or ChinaZ one, not a surprise since many of code shared openly.”

But what kind of malware is the Elknot Trojan? Its story is well documented, going back several years to when a MalwareMustDie project was actively monitoring the threat of China-origin ELF DDoS malware. The threat grew very rapidly at that time (September 2014): as described on the MMD blog, MMD detected 5 variants active across almost 15 panels scattered over Chinese networks.

On the MMD blog it is still possible to read: “I am quite active in supporting the team members of this project, so recently almost everyday I reverse ELF files between 5-10 binaries. They are not aiming servers with x32 or x64 architecture but the router devices that runs on Linux too.” One could call this a “Mirai” idea ante litteram, two years before Mirai.

On the Akamai blog we can also still find a reference to Elknot, in a post from April 4, 2016 about “BillGates”, another DDoS malware whose “attack vectors available within the toolkit include: ICMP flood, TCP flood, UDP flood, SYN flood, HTTP Flood (Layer7) and DNS reflection floods. This malware is an update and reuse from the Elknot’s malware source code. It’s been detected in the wild for a few years now.” The Akamai post explicitly mentions Elknot, links directly to the MalwareMustDie blog, and observes, in politically correct language, that for the “botnet activity, most of the organizations are located in the Asia region”.

Digging deeper into the MMD blog, the post “about the ARM version of Eknot with so many specific modification reversed and reported” offers a lot of interesting information about Chinese malware, including the Elknot scheme.

Figure 1: The ARM version of Elknot malware on MMD blog

The post also contains many observations about the behavior of the malware and of the threat actor, such as the encryption of the binary and of the communication: “This a sign of protection, someone want to hide something, in the end that person is hiding EVERYTHING which ending up to be very suspicious – So the binary could be packed or encrypted protection, we have many possibility.

Further details of this family of ELF malware we posted regularly in here:–>[link]”

Those further details are on kernelmode.info, as Mr. unixfreaxjp notes in his new analysis; the thread can be found here: https://www.kernelmode.info/forum/viewtopic.php?f=16&t=3483

The New Malware

Back to the new analysis: it describes a combination of “new” and “old” code that interacts across platforms. The ELF bot (the client) is delivered to a Linux platform, while the C&C (a Win32 PE) runs on a Windows platform in listening mode, waiting for callbacks from infected bots. The new ELF is the “downloader” and “installer”, while the old Elknot code manages the configuration, statistics, threading, DDoS attacks, etc., as shown in the next figure.

Figure 2: The C2 software for Linux DDoS

Going deeper into Mr. unixfreaxjp’s analysis, we read more about the new scheme adopted in the malware configuration:

“The C2 tool is having IP node scanner and attack function to compromise weak x86?32 server secured auth, DoS attack related commands to contrl the botnet nodes, and the payload management tools. Other supportive samples are also exists to help to distribute the Linux bot installer to be sent successfully to the compromised device, it works under control of the C2 tool. This C2 scheme is new, along with the installer / updater. The Elknot DoS ELF dropped is not new.”

But let’s see which binaries are executed and what an administrator will see, because this analysis IS meant to raise system administrators’ awareness:

Code execution:

execve("/tmp/upgrade"); // runs the dropped "upgrade" binary

execve("/bin/update-rc.d", ["update-rc.d", "python3.O", "defaults"]); // registers the python3.O init script in the default runlevels (Debian-style persistence)

execve("/usr/bin/chkconfig", ["chkconfig", "--add", "python3.O"]); // registers the python3.O service (Red Hat-style persistence)
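The three execve() calls above leave traces on disk that an administrator can look for. Below is a minimal Python sketch, assuming a classic SysV-init layout in which update-rc.d and chkconfig create S/K symlinks in rcN.d directories pointing at an init script named python3.O; the function name and the exact glob patterns are our own illustration, not part of the original analysis. Because the sample deletes itself after running, an empty result does not prove a host is clean.

```python
import glob
import os

# Init script name registered by the sample, as reported in the analysis.
SERVICE_NAME = "python3.O"


def find_persistence_artifacts(root: str = "/") -> list[str]:
    """Return paths under `root` that match the reported persistence traces.

    Assumes standard SysV-init layouts: update-rc.d (Debian) and chkconfig
    (Red Hat) link the init script into rcN.d directories as S<NN><name>
    or K<NN><name>.
    """
    patterns = [
        "tmp/upgrade",                                       # dropped binary
        f"etc/init.d/{SERVICE_NAME}",                        # init script
        f"etc/rc.d/init.d/{SERVICE_NAME}",                   # Red Hat location
        f"etc/rc[0-6S].d/[SK][0-9][0-9]{SERVICE_NAME}",      # Debian runlevel links
        f"etc/rc.d/rc[0-6].d/[SK][0-9][0-9]{SERVICE_NAME}",  # Red Hat runlevel links
    ]
    base = glob.escape(root)  # treat the root path literally, not as a pattern
    hits = []
    for pattern in patterns:
        hits.extend(glob.glob(os.path.join(base, pattern)))
    return sorted(set(hits))
```

Calling `find_persistence_artifacts()` scans the live filesystem; passing a mounted disk image as `root` lets the same check run during offline forensics.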

What an administrator will see:

An (unknown) process whose image is executed from /proc/{PID}/cmdline, forked from an “evil” crond process (the malware that was dropped, executed and then deleted).
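The “dropped, executed and deleted” pattern can be hunted for directly: on Linux, when a running program’s file has been unlinked, the target of its /proc/{PID}/exe link ends in “ (deleted)”. Here is a minimal Python sketch (the function name is hypothetical) that lists such processes; run it as root to see every process. Package upgrades that replace a running daemon’s binary produce the same marker, so treat a hit, particularly one whose comm is crond, as something to investigate rather than a verdict.

```python
import os


def scan_deleted_executables(proc_root: str = "/proc") -> list[tuple[int, str, str]]:
    """Return (pid, comm, exe) for processes whose executable was deleted.

    The kernel appends " (deleted)" to the /proc/<pid>/exe link target when
    the file backing a running process has been removed from disk.
    """
    findings = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-PID entries such as "self" or "net"
        pid_dir = os.path.join(proc_root, entry)
        try:
            exe = os.readlink(os.path.join(pid_dir, "exe"))
            with open(os.path.join(pid_dir, "comm")) as fh:
                comm = fh.read().strip()
        except OSError:
            continue  # process exited, kernel thread, or insufficient privileges
        if exe.endswith(" (deleted)"):
            findings.append((int(entry), comm, exe))
    return sorted(findings)
```

On a live system, `scan_deleted_executables()` reads the real /proc; the `proc_root` parameter exists so the logic can be exercised against a copy or a test fixture.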

The Client Side

Looking at the client bot, once the malware has infected a remote host, the installer ELF reads the server information by calling open("/proc/{PID}/cmdline"), builds a specific information header from it, and sends that header to the C2, using an encoding function to encrypt and decrypt the requests exchanged with the C2.

But what machine information is collected, and how?

Figure 3: Header of the ELF communication

This data is sent via the malware’s “fabricated” headers as follows:

Content-Type: application/x-www-form-urlencoded\r\n
Content-Length: {SIZE OF SENT DATA}\r\n
Host: 193[.]201[.]224[.]238:8852\r\n
User-Agent: LuaSocket 3.0-rc1\r\n
TE: trailers\r\n
Connection: close, TE\r\n\r\n

and is then processed further by the threat actor’s C2 tool.
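The fabricated header block is distinctive enough to serve as a network indicator. A minimal Python sketch, assuming you already have the raw request bytes (for example, extracted from a packet capture) and that the headers follow a normal HTTP/1.x request line, which the analysis does not show; the function names are hypothetical. As far as we know, “LuaSocket 3.0-rc1” is also LuaSocket’s default User-Agent, so legitimate Lua clients may send it; the check therefore also requires the C2 Host value and the unusual TE/Connection pair.

```python
# Indicators from the analysis (IP re-fanged from 193[.]201[.]224[.]238).
C2_HOST = "193.201.224.238:8852"
C2_USER_AGENT = "LuaSocket 3.0-rc1"


def parse_headers(raw: bytes) -> dict[str, str]:
    """Parse the header block of a raw HTTP/1.x request into a lowercase-keyed dict.

    Assumes the first line is the request line (e.g. "POST / HTTP/1.1").
    """
    head = raw.split(b"\r\n\r\n", 1)[0].decode("latin-1")
    headers = {}
    for line in head.split("\r\n")[1:]:  # skip the request line
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().lower()] = value.strip()
    return headers


def looks_like_ddosman_checkin(raw: bytes) -> bool:
    """True if the request carries the header fingerprint reported for the bot."""
    h = parse_headers(raw)
    return (
        h.get("host") == C2_HOST
        and h.get("user-agent") == C2_USER_AGENT
        and h.get("te") == "trailers"
        and h.get("connection", "").replace(" ", "").lower() == "close,te"
    )
```

Splitting only on the first blank line keeps the encoded body out of the header parser, so the body’s content never affects the match.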

This is also new: Elknot, by contrast, always sends its data to the C2 in encoded form, with a lot of 00 padding in between.

After the initial communication, a dropped downloader is saved and executed on the infected node. In the analysis, Mr. unixfreaxjp says: “The dropped & executed downloader embedded ELF is actually the one that responsible for the persistence setup operation too. This part haven’t been seen in Elknot. And this is not even in the main sample file too. In THIS dropped ELF you can see well the downloader and the persistence installer in the same file.”

See the next figure for the explanation:

Figure 4: Snapshot of the Installer/downloader

In short, the same connection is reused, and the initial code that opens the connection to the C2 is also responsible for managing malware updates on the infected node.

“So only one dropped binary is the Elknot.” says Mr. unixfreaxjp, “Obviously, there is no DDoS functionality in the main sample ELF file or the Downloader ELF file too, the Elknot has it, and the adversary tend to use that function from the C2 tool.”

The Server Side (C2 Tool)

The C2 tool is a Win32 PE built on the Elknot-based C2 form, along with many additional forms, as shown in Figure 2. It includes a scanner tool that issues commands to inject code and open a shell once an attack has succeeded. With it, the threat actor can use any compromised Windows machine to run the C2 for its attacks.

To carry out the attack, the adversary needs the ELF file to send, the script to be sent to the hacked PC, and the ELF file to be installed after infection, along with its execution toolset.

To give an idea of “how the adversary work in making this toolset”, MMD produced a very interesting video, published on YouTube, describing the techniques adopted by the Chinese threat actors.

Figure 5: MMD video on YouTube describing the Chinese threat actors’ techniques to deliver malware, recorded live from a compromised server.

Even for a reader who cannot translate the text, the reversed C2 tool clearly smells of China.

Figure 6: MMD reverse of the C2 Tool

The adversary’s network is as follows (domain, IP and port):

cctybt.com. 3600 IN A 103.119.28.12 tcp/8080

193.201.224.238 tcp/8852

Located in these networks:

AS136782 | 103.119.28.0/24 | PINGTAN-AS | AP Kirin Networks, CN

AS25092 | 193.201.224.0/22 | OPATELECOM, | UA
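To check a live host against the network indicators above, one option is to compare its current TCP connections with the listed C2 endpoints and prefixes. This is a minimal Python sketch that reads IPv4 entries in the /proc/net/tcp format (IPv6 sockets in /proc/net/tcp6 are not handled); the function names are hypothetical, and the prefix match is deliberately broad, so a “c2-network” hit is a lead to investigate rather than proof of infection. The domain cctybt.com is better searched for in DNS or proxy logs.

```python
import ipaddress
import sys

# IOCs from the analysis: C2 endpoints and the networks hosting them.
C2_ENDPOINTS = {("103.119.28.12", 8080), ("193.201.224.238", 8852)}
C2_NETWORKS = [ipaddress.ip_network("103.119.28.0/24"),
               ipaddress.ip_network("193.201.224.0/22")]


def decode_addr(field: str, byteorder: str = sys.byteorder) -> tuple[str, int]:
    """Decode an IPv4 'HEXIP:HEXPORT' field from /proc/net/tcp.

    The kernel prints the raw 32-bit address as a host-order integer, so its
    bytes are recovered using the host's endianness; the port is printed
    already converted to host order.
    """
    ip_hex, port_hex = field.split(":")
    ip_bytes = int(ip_hex, 16).to_bytes(4, byteorder)
    return str(ipaddress.IPv4Address(ip_bytes)), int(port_hex, 16)


def match_c2(proc_net_tcp_text: str,
             byteorder: str = sys.byteorder) -> list[tuple[str, int, str]]:
    """Return (ip, port, kind) for remote peers matching the IOCs."""
    hits = []
    for line in proc_net_tcp_text.splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) < 3:
            continue
        ip, port = decode_addr(cols[2], byteorder)  # rem_address column
        if (ip, port) in C2_ENDPOINTS:
            hits.append((ip, port, "c2-endpoint"))
        elif any(ipaddress.ip_address(ip) in net for net in C2_NETWORKS):
            hits.append((ip, port, "c2-network"))
    return hits
```

Usage: `match_c2(open("/proc/net/tcp").read())`. Because the bot only calls back periodically, running the check repeatedly (or against saved snapshots) is more useful than a single pass.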

For the full IOCs and other details of the malware please refer to the Mr. unixfreaxjp research at: https://imgur.com/a/57uOiTu

About the Author:

Odisseus – Independent Security Researcher involved in Italy and worldwide in topics related to hacking, penetration testing and development.

unixfreaxjp – Team leader of the MalwareMustDie team.


Pierluigi Paganini

(SecurityAffairs – Elknot malware, DDoS)

The post New Linux/DDosMan threat emerged from an evolution of the older Elknot appeared first on Security Affairs.


TROOPERS 2017 Day #2 Wrap-Up

This is my wrap-up for the 2nd day of “NGI” at TROOPERS. My first choice for today was “Authenticate like a boss” by Pete Herzog. This talk was less technical than expected but interesting. It focussed on a complex problem: identification. It’s relevant not only for users but for anything (a file, an IP address, an application, …). Pete started by providing a definition: authentication is based on identification and authorisation. But identification can be easy to fake. A classic example is the hijacking of a domain name by sending a fax with a fake ID to the registrar – yes, some of them are still using fax machines! Identification is used whenever we need to establish somebody’s identity before giving them access to something, and it’s not only based on credentials or a certificate.

Identification is extremely important: you have to distinguish good from bad at all times, not only for people but also for files, IOCs, threat intelligence actors, etc. For files, metadata can help with identification. Another example reported by Pete is the attribution of an attack: we cannot be 100% confident about the person or the group behind it. The next-generation Internet needs more and more identification, especially with all the IoT devices deployed everywhere; we don’t even know what those devices are doing. Often, the identification process fails. How many times have you said “hello” on the street or while driving to somebody who turned out to be the wrong person? Why? Because we (as well as objects) change: we get older, start wearing glasses, etc. Every interaction you have in a process increases your attack surface by the same amount as one vulnerability. What is more secure: letting users choose their own password, or generating a strong one for them? They won’t remember it and will write it down somewhere. In the same way, which is better: a password or a certificate? An important concept explained by Pete is “intent”. The problem is getting a good idea of the intent (from 0 – none – to 100% – certain).

Examples: if an attacker is filling your firewall state table, is it a DoS attack? If somebody performs a traceroute to your IP addresses, is it footprinting? Can a port scan automatically be categorized as hunting? Will a vulnerability scan be immediately followed by an exploitation attempt? Not always… It’s difficult to predict a specific action. To conclude, Pete mentioned machine learning as a tool that may help build indicators of intent.

After an expected coffee break, I switched to the second track to follow “Introduction to Automotive ECU Research” by Dieter Spaar. ECU stands for “Electronic Control Unit”: a kind of brain present in modern cars that helps control the car’s behaviour and all its options. The idea for the research came from the problem BMW faced with the unlocking of its cars. Dieter’s motivations were multiple: engine tuning, speedometer manipulation, ECU repair, information privacy (what data is stored by a car?), the “VW scandal” and eCall (emergency calls). Sometimes, some features are just a question of ECU configuration: they are present but not activated. Also, from a privacy point of view, what do infotainment systems collect from your paired phone? How much data is kept by your GPS? ECUs depend on the car model and options. In the picture below, yellow blocks are activated ECUs, while the others (grey) are optional (this picture is taken from an Audi A3 schema):