#intrusion detection model in cloud computing environment example

DESIGN AN ENHANCED INTRUSION DETECTION MODEL IN A CLOUD COMPUTING ENVIRONMENT.

ABSTRACT: Cloud computing is a new type of service that provides large-scale computing resources to each customer. Cloud computing systems are easily threatened by cyberattacks because most of them serve large numbers of users whose trustworthiness has not been established. Therefore, a cloud…

The Impact of Cloud Computing on Mobile App Development Companies

The rapid advancements in technology have dramatically transformed the landscape of mobile app development. One of the most significant game-changers in recent years is cloud computing.

This innovative technology has revolutionized the way mobile app development companies operate, enabling them to create more robust, scalable, and efficient applications.

Let’s explore how cloud computing has impacted mobile app development companies and reshaped the industry.

1. Enhanced Scalability and Flexibility

Cloud computing offers unparalleled scalability and flexibility, which are crucial for mobile app development. Companies can scale their infrastructure up or down based on the app’s demand without investing in physical hardware.

For instance, during peak usage periods, apps can leverage cloud resources to ensure seamless performance. This scalability not only improves user experience but also helps companies manage costs effectively.

Moreover, cloud-based platforms allow developers to work from anywhere, enabling seamless collaboration across geographies. This flexibility has been particularly beneficial in an era where remote work is becoming the norm. Teams can access project files, share updates, and manage tasks in real-time, enhancing productivity and efficiency.

2. Cost Efficiency

Developing and maintaining traditional on-premises infrastructure can be expensive. It requires significant investment in hardware, software, and ongoing maintenance.

Cloud computing reduces these upfront costs by offering a pay-as-you-go model, where companies pay only for the resources they use. This cost-efficiency is especially advantageous for startups and small-scale mobile app development companies with limited budgets.

Additionally, cloud service providers often include advanced security features, regular updates, and technical support as part of their services, reducing the need for dedicated IT teams. This allows developers to focus on creating innovative apps rather than managing backend infrastructure.

3. Faster Development Cycles

Cloud computing has significantly shortened the app development lifecycle. With cloud-based tools and platforms, developers can build, test, and deploy applications much faster.

For example, Platform-as-a-Service (PaaS) offerings provide pre-configured environments that eliminate the need to set up servers or configure software manually.

Continuous Integration and Continuous Deployment (CI/CD) pipelines hosted on the cloud enable developers to automate testing and deployment processes.

This not only speeds up development but also helps catch bugs and errors before apps are released. The faster time-to-market gives companies a competitive edge in the dynamic mobile app market.

4. Enhanced Collaboration and Productivity

Collaboration is a critical aspect of mobile app development, especially when teams are distributed across different locations.

Cloud computing facilitates real-time collaboration through tools like shared workspaces, version control systems, and integrated communication platforms.

Developers, designers, and project managers can work together seamlessly, reducing delays and improving overall productivity.

Moreover, cloud-based Integrated Development Environments (IDEs) allow multiple team members to code, debug, and review applications simultaneously. This fosters innovation and ensures that the final product meets high-quality standards.

5. Improved Security

Data security is a top concern for mobile app development companies, particularly with the increasing prevalence of cyber threats.

Cloud computing providers invest heavily in advanced security measures, including encryption, firewalls, and intrusion detection systems. Many also support compliance with regulations and standards such as GDPR, HIPAA, and ISO, which helps apps meet regulatory requirements.

For app developers, this means reduced risk of data breaches and greater confidence in the security of their applications. Additionally, cloud-based backup and disaster recovery solutions ensure that critical data is protected and can be restored quickly in case of any disruptions.

6. Support for Innovation

Cloud computing provides a fertile ground for innovation in mobile app development. With access to cutting-edge technologies like Artificial Intelligence (AI), Machine Learning (ML), and the Internet of Things (IoT) through cloud platforms, developers can create more sophisticated and intelligent applications.

For instance, AI-powered chatbots, real-time analytics, and personalized recommendations are now becoming standard features in mobile apps.

Furthermore, cloud computing enables the integration of multiple APIs and third-party services, expanding the functionality of mobile applications. This allows developers to focus on delivering unique and engaging user experiences rather than reinventing the wheel.

7. Global Reach and Performance Optimization

Mobile apps often cater to a global audience, and ensuring optimal performance across different regions can be challenging.

Cloud computing addresses this issue through Content Delivery Networks (CDNs) that distribute app resources across multiple data centers worldwide. This minimizes latency and ensures a consistent user experience regardless of the user’s location.

Additionally, cloud platforms provide advanced monitoring and analytics tools to track app performance in real-time. Developers can identify bottlenecks, optimize resource usage, and implement fixes promptly, ensuring that the app runs smoothly at all times.

8. Simplified Maintenance and Updates

Cloud computing simplifies the maintenance and updating process for mobile apps. Developers can push updates to cloud-hosted components directly, so users get the latest server-side functionality without needing to download a new version of the app manually.

This is particularly useful for apps that require frequent updates or have large user bases.

Moreover, cloud-based analytics tools provide insights into user behavior and app performance, enabling developers to make data-driven decisions. This helps in refining the app’s features and functionality over time, leading to better user satisfaction and retention.

Conclusion

The impact of cloud computing on mobile app development companies cannot be overstated. From enhancing scalability and reducing costs to fostering innovation and improving collaboration, cloud technology has transformed the way apps are developed, deployed, and maintained.

As the mobile app market continues to grow, companies that embrace cloud computing will be better positioned to meet user demands, stay competitive, and drive innovation in the digital age.

In a world where efficiency, speed, and adaptability are paramount, cloud computing has proven to be an indispensable tool for mobile app development companies. Its influence will only continue to expand, shaping the future of app development and empowering companies to deliver exceptional experiences to their users.

Top Technology and AI blogs to read before 2025

Fulfillment Center

A fulfillment center (or fulfillment house), often run by a third-party logistics provider (3PL), is a service hub that handles all the logistics processes needed to get a product from the seller to the customer.

Private cloud

The private cloud model offers extended, virtualized computing resources through physical components that are stored on-premise or at provider’s data

Gradient Clipping

Gradient clipping handles exploding gradients, one of the most difficult challenges of backpropagation when training neural networks, by capping the size of the gradients.

Content-based filtering

Content-based filtering uses item features to recommend other items similar to what user likes, based on their previous actions or explicit feedback.

Speech synthesis

Speech synthesis is the artificial, computer-generated production of human speech. It is pretty much the counterpart of speech or voice recognition.

Evolutionary computation

Evolutionary computation techniques are used to handle problems that have far more variables than what traditional algorithms can handle.

On-Time Resolution

When a question is resolved within the required time frame given in the Service Level Agreement, it is considered as an on-time resolution.

Ebert Test

The Ebert Test is a test for synthesized voices, proposed by film critic Roger Ebert in his 2011 TED talk.

Cognitive computing

It is the technique of simulating human thought processes in complicated scenarios where the answers might be vague. It does so by using computerized models

Dropshipping

Dropshipping is an online retail model that involves shipping products directly from your manufacturers or suppliers to your customers on demand.

Similarity measure

A similarity measure in data science is a metric that is used for the purpose of measuring how data samples are related or close to each other.

Biometric Recognition

Biometric recognition refers to the automated recognition of people by their behavioral and biological traits. For example — face, retina, voice, etc.

Tiered support

Tiered support involves organizing your support center into levels to deal with incoming support queries in the most efficient and effective manner.

Intrusion detection

An Intrusion Detection System (IDS) is a network security technology built and intended to detect vulnerability exploits against a target application

Deep Learning

Deep Learning is an Artificial Intelligence function that imitates the workings of the human brain in processing data and creating patterns for decision making.

Virtual Reality

VR refers to an artificial, immersive environment created with the help of computer technology through sensory stimuli (such as sight and audio).

Enterprise resource planning

Enterprise resource planning (ERP) is a type of enterprise software that organizations use to manage day-to-day tasks and business activities.

Swarm intelligence

Swarm Intelligence systems consist typically of a population of simple agents interacting locally with one another and with their environment.

Inbound Call Centre

An inbound call center handles service functions; its primary responsibility is to answer customer queries and provide a good experience.

Customer Experience Transformation

Customer experience transformation is the process and design that businesses take to enhance customer experience for optimal customer satisfaction.

Concept drift

In machine learning, predictive modeling, and data mining, concept drift is the gradual change in the relationships between input data and output data

Average Handling Time

AHT is a metric commonly used in BPOs as a performance KPI. It shows the average time a representative spends on a call with a customer.

The Benefits of Real-Time Analytics for Enterprises

Real-time analytics is a new technology that redefines how IT companies capture actionable business information, identify cybersecurity threats, and assess the performance of critical software and services deployed on the network or cloud.

Real-time analytics is a software solution capable of analyzing large amounts of incoming data as the IT infrastructure generates or stores it. Enterprise IT security tools such as Security Event Management (SEM) or Security Information and Event Management (SIEM) technologies also feature real-time monitoring capabilities for large data sets.

Today, companies are deploying more software than ever before in the cloud. Every application or server produces records of all its activities, known as event logs. With millions of fresh event logs generated every day, organizations rely on real-time analytics to comb that data for specific trends and insights that drive responsive IT and business decision-making.

Understanding Real-Time Analytics

Real-time data processing means that an operation is performed on the data only milliseconds after it becomes accessible. In security monitoring, a real-time response is required to detect threats and trigger rapid quarantine responses before attackers can damage systems or steal data.

In today's cybersecurity climate, reviewing incident logs after the fact to decide whether an attack has occurred is no longer sufficient. Real-time analytics helps organizations mitigate attacks as they occur by analyzing event logs milliseconds after they are created.
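To make the idea of analyzing events as they arrive more concrete, here is a minimal sketch of a streaming check for repeated failed logins. The class name `FailedLoginMonitor`, the event layout (timestamp in seconds, user, success flag), and the thresholds of 3 failures within 60 seconds are all assumptions chosen for illustration; they do not come from any specific SIEM product.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Hypothetical detector: alert when a user fails too often in a time window."""

    def __init__(self, max_failures=3, window_seconds=60):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of recent failures

    def observe(self, timestamp, user, success):
        """Process one log event; return True if it should raise an alert."""
        if success:
            return False
        recent = self._failures[user]
        recent.append(timestamp)
        # Forget failures that fell out of the sliding time window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures


# Assumed event format: (timestamp_in_seconds, user, login_succeeded)
events = [
    (0, "alice", False),
    (10, "alice", False),
    (15, "bob", False),
    (20, "alice", False),
    (200, "alice", False),
]
monitor = FailedLoginMonitor()
alerts = [monitor.observe(*event) for event in events]
```

Each event is handled the moment it is seen, so the alert decision does not wait for a batch review of the logs.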

Analytics is a software capability that takes data input from different sources, scans it for patterns, interprets those patterns, and ultimately communicates the results in a human-readable format. Using mathematics, statistics, probabilities, and predictive models, analytics software discovers hidden correlations in data sets that are too complex and varied to be analyzed manually.

In order to achieve a defined objective, the best analytics tools today combine advanced technology such as Artificial Intelligence (AI) and machine learning with other software features. Analytics tools are used in IT organizations to review event logs and compare events from across applications to detect Indicators of Compromise (IoCs) and respond to security incidents.

Benefits of Real-Time Analytics

Real-time analytics helps organizations of all sizes collect valuable information by leveraging insights from large data volumes more rapidly than ever before. IT companies most frequently implement this technology in industries that create or collect vast volumes of data within a short time - transportation, finance, or IT, for example. Here are three ways that real-time analytics can support organizations.

Empowering IT Operations: Rapid Monitoring and Troubleshooting

IT operations teams carry out the routine operational and maintenance activities required to keep the IT infrastructure working. The IT department is directly responsible for monitoring the infrastructure with a given set of tools (SEM, SIM, or SIEM tools, etc.), for backing up databases to avoid data loss, and for restoring systems after failures. Big data analytics can be used in real time to analyze event logs from across the network, so that problems affecting customers are detected and remedied quickly.

Enhancing IT Security: Rapid Incident Response Capabilities

IT security analysts work in the Security Operations Center (SOC) and are responsible for maintaining the enterprise's security posture and protecting against cyber-attacks.

In today's IT security environment, these analysts rely on real-time data and analytics to sift through millions of aggregated log files from across the network and identify signs of network intrusion. They use analytics software to collect threat information, automate threat detection and response, and perform forensic investigations after a cyber-attack occurs.

Collection and Management of Performance Data

The effect of real-time analytics goes beyond tracking the IT infrastructure and protecting it. This technology can also be used to collect information about application use and analyze the efficiency of cloud services deployed. Companies should analyze the application performance data in order to drive product development decisions that increase customer loyalty by prioritizing the right features and changes at the right time.

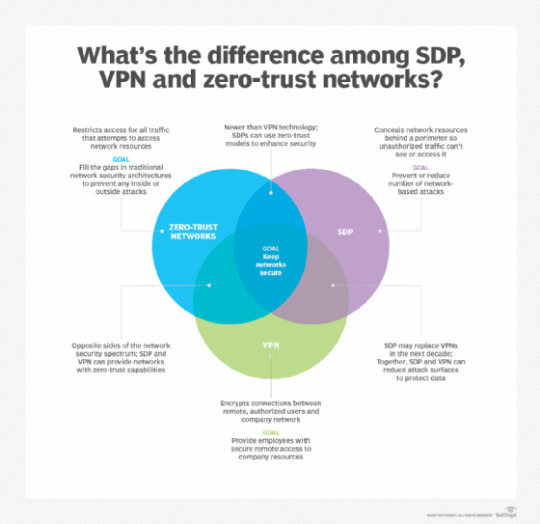

Pre-pandemic, many experts touted VPN's demise. During the pandemic, VPNs became lifelines for remote workers to do their jobs. Here's what the future may hold for VPNs.

The importance of VPNs changed significantly in early 2020, as the coronavirus pandemic caused massive digital transformation for many businesses and office workers. VPN trends that started prior to the pandemic were accelerated within days.

What does the future of VPNs look like from the middle of the pandemic?

The past and future of VPN connectivity

The migration of office workers to a work-from-home environment created a new dilemma: How should organizations support workers who may use computers and mobile devices from home to access corporate resources?

The traditional VPN uses a fat client model to build a secure tunnel from the client device to the corporate network. All network communications use this tunnel. However, this model comes at a cost: Access to public cloud resources must transit the VPN tunnel to the corporate site, which then forwards access back out to the internet-based cloud provider. This is known as hairpinning.

For the future of VPNs, end systems' increasing power will facilitate the migration of more software-based VPN technology into endpoints. VPN technologies will evolve to take advantage of local process capabilities, which make VPNs easier for users and network administrators alike. Network admins will control VPN administration through central systems.

Some predictions for the future of VPNs suggest hardware isn't necessary in a software world. Yet, as something must make the physical connections, hardware will still be necessary. More likely, x86 compute systems that perform functions previously done in hardware will replace some dedicated hardware devices -- particularly at the network edge, where distributed computational resources are readily available. The network core will continue to require speeds only dedicated hardware can provide for the foreseeable future.

VPNs enable authorized remote users to securely connect to their organization's network.

VPNs may also begin to function like software-defined WAN products, where connectivity is independent of the underlying physical network -- wired, wireless or cellular -- and its addressing. These VPN systems should use multiple paths and transparently switch between them.

The past and future of VPN security

Corporate VPNs provide the following two major functions:

encrypt data streams and secure communications; and

protect the endpoint from unauthorized access as if it were within the corporate boundary.

The straightforward use of encryption technology is to secure communications. Encryption technology is relatively old and is built into modern browsers, which makes it easy to use. Secure Sockets Layer or Transport Layer Security VPNs can provide this functionality.

Modern VPN systems protect endpoints from unauthorized access, as these systems require all network communications to flow over a VPN between endpoints and a corporate VPN concentrator. Other corporate resources, like firewalls, intrusion detection systems and intrusion prevention systems, protect endpoints with content filtering, malware detection and safeguards from known bad actors.

In the future, IT professionals should expect to see more examples of AI and machine learning applied to these security functions to increase their effectiveness without corresponding increases in network or security administrator support.

Innovative new technologies, such as software-defined perimeter and zero-trust models, will greatly influence the future of VPNs.

VPN paths become less efficient when an endpoint communicates with internet-based resources, like SaaS systems. The endpoint must first send data to the VPN concentrator, which then forwards the data to the cloud-based SaaS application and, therefore, adds to network latency. In addition, network overhead increases within the VPN because the SaaS application also employs its own encryption.

Split tunneling is a potential solution to this inefficiency, but IT teams must select VPN termination points carefully to avoid a security hole. Integration with smart DNS servers, like Cisco Umbrella, enables split tunneling to specific sites under the control of network or security administrators.
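As a rough sketch of the routing decision behind split tunneling, the snippet below sends traffic for corporate address ranges through the tunnel and everything else directly to the internet. It decides by IP prefix rather than by DNS name, which is a simplification of the DNS-based control mentioned above, and the networks and the `route_for` helper are hypothetical.

```python
from ipaddress import ip_address, ip_network

# Hypothetical corporate prefixes; a real deployment would receive these
# from the central VPN administration system.
CORPORATE_NETWORKS = [ip_network("10.0.0.0/8"), ip_network("192.168.50.0/24")]


def route_for(destination):
    """Return which path a packet to `destination` should take."""
    addr = ip_address(destination)
    if any(addr in net for net in CORPORATE_NETWORKS):
        return "vpn-tunnel"  # corporate resource: keep it inside the tunnel
    return "direct"          # internet or SaaS resource: skip the hairpin


internal = route_for("10.1.2.3")
external = route_for("203.0.113.10")
```

Any destination that is routed directly also bypasses the corporate firewall and intrusion detection systems, which is why the list of exceptions has to be chosen carefully.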

An even better security stance relies on a zero-trust model, which assumes endpoints are compromised, regardless of their location. Forrester Research introduced zero trust in 2010, and it has become the new standard to which networks should conform. Zero-trust security components include allowlisting and microsegmentation. The future of VPNs includes automated methods to create and maintain these security functions.

IT professionals can expect the future of VPN technology to provide an increase in security while reducing the effort needed to implement and maintain that security.

Anomaly Detection — Another Challenge for Artificial Intelligence

It is true that the Industrial Internet of Things will change the world someday. So far, it is the abundance of data that makes the world spin faster. Piled in sometimes unmanageable datasets, big data turned from the Holy Grail into a problem pushing businesses and organizations to make faster decisions in real-time. One way to process data faster and more efficiently is to detect abnormal events, changes or shifts in datasets. Thus, anomaly detection, a technology that relies on Artificial Intelligence to identify abnormal behavior within the pool of collected data, has become one of the main objectives of the Industrial IoT.

Anomaly detection refers to the identification of items or events that do not conform to an expected pattern or to other items in a dataset, and that a human expert would usually fail to detect. Such anomalies usually translate into problems such as structural defects, errors, or fraud.

Examples of potential anomalies:

A leaking connection pipe that leads to the shutdown of the entire production line;

Multiple failed login attempts indicating possible suspicious cyber activity;

Fraudulent financial transactions.

Why is it important?

Modern businesses are beginning to understand the importance of interconnected operations for getting the full picture of their business. They also need to respond promptly to fast-moving changes in data, especially in the case of cybersecurity threats. Anomaly detection can be key to handling such intrusions, because perturbations of normal behavior indicate the presence of intended or unintended attacks, defects, faults, and similar problems.

Unfortunately, there is no effective way to handle and analyze constantly growing datasets manually. With the dynamic systems having numerous components in perpetual motion where the “normal” behavior is constantly redefined, a new proactive approach to identify anomalous behavior is needed.

Statistical Process Control

Statistical Process Control, or SPC, is a gold-standard methodology for measuring and controlling quality in the course of manufacturing. Quality data in the form of product or process measurements are obtained in real-time during the manufacturing process and plotted on a graph with predetermined control limits that reflect the capability of the process. Data that falls within the control limits indicates that everything is operating as expected. Any variation within the control limits is likely due to a common cause — the natural variation that is expected as part of the process. If data falls outside of the control limits, this indicates that an assignable cause might be the source of the product variation, and something within the process needs to be addressed and changed to fix the issue before defects occur. In this way, SPC is an effective method to drive continuous improvement. By monitoring and controlling a process, we can assure that it operates at its fullest potential and detect anomalies at early stages.
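The control-limit check described above can be sketched in a few lines. This is a simplified illustration, not a full control chart: it assumes limits of three standard deviations around the mean of an in-control baseline (a common convention that the text does not specify), and the measurements are made-up numbers.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, center, upper) limits estimated from in-control data."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center, center + sigmas * spread


def out_of_control(measurements, limits):
    """Return (index, value) pairs for points outside the control limits."""
    lower, _, upper = limits
    return [(i, x) for i, x in enumerate(measurements) if x < lower or x > upper]


# Made-up process measurements: a stable baseline, then new readings to check.
baseline = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]
limits = control_limits(baseline)
new_points = [10.0, 10.3, 11.2, 9.9]
flagged = out_of_control(new_points, limits)
```

Here only the third new reading falls outside the limits, so it is the one that would prompt a search for an assignable cause.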

Introduced in 1924, the method is likely to remain at the heart of industrial quality assurance. Integrating it with Artificial Intelligence techniques, however, can make it more accurate and give more insight into the manufacturing process and the nature of anomalies.

Tasks for Artificial Intelligence

When human resources are not enough to handle the elastic environment of cloud infrastructure, microservices and containers, Artificial Intelligence comes in, offering help in many aspects:

Automation: AI-driven anomaly detection algorithms can automatically analyze datasets, dynamically fine-tune the parameters of normal behavior and identify breaches in the patterns.

Real-time analysis: AI solutions can interpret data activity in real time. The moment a pattern isn’t recognized by the system, it sends a signal.

Scrupulousness: Anomaly detection platforms provide end-to-end, gap-free monitoring that goes through the minutiae of the data and identifies the smallest anomalies, which would go unnoticed by humans.

Accuracy: AI enhances the accuracy of anomaly detection, avoiding the nuisance alerts and false positives or negatives triggered by static thresholds.

Self-learning: AI-driven algorithms constitute the core of self-learning systems that are able to learn from data patterns and deliver predictions or answers as required.

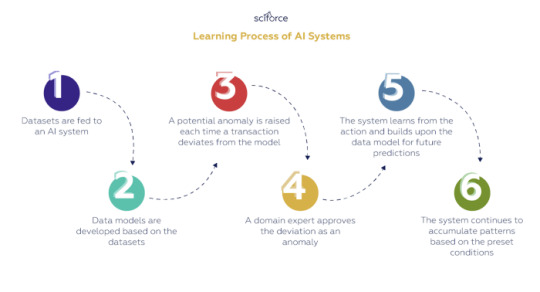

Learning Process of AI Systems

One of the best things about AI systems and ML-based solutions is that they can learn on the go and deliver better and more precise results with every iteration. The pipeline of the learning process is pretty much the same for every system and comprises the following automatic and human-assisted stages:

Datasets are fed to an AI system

Data models are developed based on the datasets

A potential anomaly is raised each time a transaction deviates from the model

A domain expert approves the deviation as an anomaly

The system learns from the action and builds upon the data model for future predictions

The system continues to accumulate patterns based on the preset conditions

As elsewhere in AI-powered solutions, the algorithms to detect anomalies are built on supervised or unsupervised machine learning techniques.

Supervised Machine Learning for Anomaly Detection

The supervised method requires a labeled training set with normal and anomalous samples for constructing a predictive model. The most common supervised methods include supervised neural networks, support vector machine, k-nearest neighbors, Bayesian networks and decision trees.

Probably the most popular nonparametric technique is k-nearest neighbors (k-NN), which calculates the distances between an unlabeled point and the labeled points in the training set and assigns the unlabeled point to the class most common among its k nearest neighbors. Another effective model is the Bayesian network, which encodes probabilistic relationships among variables of interest.

Supervised models are believed to provide a better detection rate than unsupervised methods due to their capability of encoding interdependencies between variables, along with their ability to incorporate both prior knowledge and data and to return a confidence score with the model output.
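The k-NN rule from this section can be illustrated with a small self-contained sketch. The two-dimensional feature vectors, the labels, and the choice of k = 3 with Euclidean distance are assumptions made for the example; a real system would use its own features and a tuned k.

```python
from collections import Counter
from math import dist


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest labeled samples.

    `train` is a list of (feature_vector, label) pairs.
    """
    nearest = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up labeled training set with normal and anomalous samples.
train = [
    ((1.0, 1.0), "normal"),
    ((1.2, 0.9), "normal"),
    ((0.8, 1.1), "normal"),
    ((5.0, 5.0), "anomalous"),
    ((5.2, 4.8), "anomalous"),
]
near_normal = knn_predict(train, (1.1, 1.0))
near_anomalous = knn_predict(train, (5.1, 5.0))
```

Because the method stores the labeled samples and compares against them directly, it needs a training set that already contains examples of both classes.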

Unsupervised Machine Learning for Anomaly Detection

Unsupervised techniques do not require manually labeled training data. They presume that most network connections are normal traffic and only a small percentage is abnormal, and they expect malicious traffic to be statistically different from normal traffic. Based on these two assumptions, groups of frequent, similar instances are assumed to be normal, and infrequent groups are categorized as malicious.

The most popular unsupervised algorithms include k-means, autoencoders, Gaussian mixture models (GMMs), principal component analysis (PCA), and hypothesis-test-based analysis.
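As an illustration of the two assumptions behind unsupervised detection, the sketch below groups unlabeled points with a plain k-means loop and flags every point that lands in an infrequent cluster. The data, the fixed starting centroids, and the 25% share cutoff are assumptions chosen to keep the example deterministic; production code would normally rely on a library implementation with proper initialization.

```python
from math import dist


def kmeans(points, initial_centroids, iterations=10):
    """Plain k-means; returns the cluster index assigned to each point."""
    centroids = list(initial_centroids)
    assignment = []
    for _ in range(iterations):
        # Assign every point to its closest centroid.
        assignment = [
            min(range(len(centroids)), key=lambda c: dist(p, centroids[c]))
            for p in points
        ]
        # Move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return assignment


def flag_rare_clusters(points, initial_centroids, max_share=0.25):
    """Return the points that belong to clusters holding a small share of the data."""
    assignment = kmeans(points, initial_centroids)
    sizes = {c: assignment.count(c) for c in set(assignment)}
    rare = {c for c, n in sizes.items() if n / len(points) <= max_share}
    return [p for p, a in zip(points, assignment) if a in rare]


# Made-up unlabeled data: eight similar points and two that sit far away.
points = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.2), (1.2, 1.1), (1.0, 0.8),
          (0.8, 1.0), (1.1, 1.1), (0.9, 0.9), (8.0, 8.5), (8.2, 8.1)]
suspicious = flag_rare_clusters(points, [points[0], points[-1]])
```

No labels are involved at any step: the large group is treated as normal only because it is frequent, which is exactly the assumption that fails if attacks make up most of the traffic.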

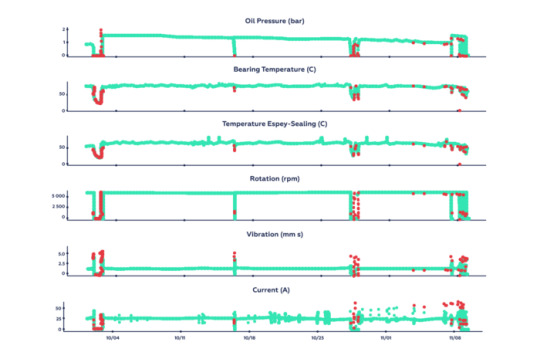

SciForce’s Chase for Anomalies

Like probably any company specializing in Artificial Intelligence and IoT solutions, we found ourselves hunting for anomalies for a client in the manufacturing industry. Using generative models for likelihood estimation, we detected algorithm defects, sped up regular processing algorithms, increased system stability, and created a customized processing routine that takes care of anomalies.

For anomaly detection to be used commercially, it needs to encompass two parts: anomaly detection itself and prediction of future anomalies.

Anomaly detection part

For the anomaly detection part, we relied on autoencoders — models that map input data into a hidden representation and then attempt to restore the original input from this internal representation. For regular pieces of data, such reconstruction will be accurate, while in case of anomalies, the decoding result will differ noticeably from the input.

Results of our anomaly detection model. Potential anomalies are marked in red.

In addition to the autoencoder model, we needed a quantitative assessment of the similarity between the reconstruction and the original input. For this, we first computed sliding-window averages for the sensor inputs, i.e. the average value for each sensor over a 1-minute interval, taken every 30 seconds, and fed the data to the autoencoder model. Afterwards, we calculated the distances between the input data and the reconstruction on a set of data and computed quantiles of the distance distribution. These quantiles allowed us to translate an abstract distance number into a meaningful measure and to mark samples that exceeded a preset threshold (97%) as anomalies.
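The windowing and thresholding steps can be sketched as follows. The autoencoder itself is left out: the reconstruction distances are placeholder numbers, and the sampling rate of one reading per second is an assumption, since the text does not state it. The function names are hypothetical.

```python
from statistics import mean, quantiles


def sliding_averages(readings, window=60, step=30):
    """Average readings over `window` samples, advancing `step` samples each time."""
    return [
        mean(readings[start:start + window])
        for start in range(0, len(readings) - window + 1, step)
    ]


def quantile_threshold(distances, percent=97):
    """Distance value below which roughly `percent`% of reference distances fall."""
    return quantiles(distances, n=100, method="inclusive")[percent - 1]


def flag_anomalies(distances, threshold):
    """Indices of samples whose reconstruction distance exceeds the threshold."""
    return [i for i, d in enumerate(distances) if d > threshold]


# Two minutes of made-up readings at an assumed rate of one per second.
readings = [float(v) for v in range(120)]
averages = sliding_averages(readings)

# Placeholder reconstruction distances standing in for autoencoder output.
reference_distances = [float(v) for v in range(1, 101)]
threshold = quantile_threshold(reference_distances)
flagged = flag_anomalies([10.0, 97.0, 98.5, 50.0], threshold)
```

Expressing the cutoff as a quantile means the threshold adapts to whatever scale the distances happen to have, instead of requiring a hand-picked absolute value.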

Sensor readings prediction

With enough training data, quantiles can serve as an input for prediction models based on recurrent neural networks (RNNs). The goal of our prediction model was to estimate future sensor readings.

Though we used each sensor to predict other sensors’ behavior, we trained a separate model for each sensor. Since the trends in the data samples were clear enough, we used linear autoregressive models that take previous readings to predict future values.

As in the anomaly detection part, we computed the average of each sensor’s values over a 1-minute interval every 30 seconds. Then we built a 30-minute context (the number of previous timesteps) by stacking 30 consecutive windows. The resulting data was fed into the prediction model for each sensor, and the predictions were saved as estimates of the sensor readings for the following 1-minute window. To extend the forecast further in time, we gradually substituted the older windows with predicted values.
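The idea of predicting one window ahead and then feeding predictions back into the context can be sketched with a much smaller model than the one described here. The example below fits a first-order linear autoregressive model to a single sensor by least squares; the order of 1, the single sensor, and the toy data are simplifications assumed for illustration, not the configuration used in the project.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = a + b * x[t-1]; returns (a, b)."""
    xs, ys = series[:-1], series[1:]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return a, b


def forecast(series, steps, a, b):
    """Predict `steps` values ahead, replacing old context with predictions."""
    context = list(series)
    predictions = []
    for _ in range(steps):
        nxt = a + b * context[-1]
        predictions.append(nxt)
        # Drop the oldest window and append the prediction in its place.
        context = context[1:] + [nxt]
    return predictions


# Made-up window averages with a clear linear trend.
history = [1.0, 2.0, 3.0, 4.0, 5.0]
a, b = fit_ar1(history)
predictions = forecast(history, steps=3, a=a, b=b)
```

Because every later step is computed from earlier predictions rather than from measurements, errors accumulate as the horizon grows, which is one reason forecasts of this kind are only reliable a limited distance ahead.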

Results of prediction models outputs with historical data marked in blue and predictions in green.

It turned out that the context is crucial for predicting the next time step. With the scarce data available and relatively small context windows we could make accurate predictions for up to 10 minutes ahead.

Conclusion

Anomaly detection, alone or coupled with prediction functionality, can be an effective means to catch fraud and discover strange activity in large and complex datasets. It may be crucial for banking security, medicine, marketing, natural sciences, and manufacturing industries, which depend on smooth and secure operations. With Artificial Intelligence, businesses can increase the effectiveness and safety of their digital operations, preferably with our help.

0 notes

Photo

Tips for Finding the Right Hybrid Cloud Provider

So, your organization has decided to adopt a hybrid cloud architecture? That’s great news! Soon you’ll have a flexible model in place, with the ability to strategically run workloads where they make the most sense: in the public cloud, in a private cloud, on-premise on dedicated servers, on virtual or cloud servers, or in some combination of these. Not only that, with this hybrid cloud approach your enterprise will be more adept at managing security and compliance requirements and will be able to scale as requirements change.

That’s why leading enterprises are moving to the hybrid cloud model in droves, particularly when it comes to Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) solutions. According to Forbes, IaaS/PaaS markets could rise from some $38 billion in 2016 to $173 billion in 2026. Not only that, Synergy Research Group found that private and hybrid cloud infrastructures have the second highest growth rate (after public IaaS/PaaS services) with 45% growth in 2015 (Source: Forbes).

The only big decision left is selecting the right hybrid cloud provider. Finding a provider with the most experience and support options related to an organization’s current and future needs is priority one. If you’re considering a hybrid cloud service provider, here are some additional tips to consider:

Outline business objectives - Before speaking with various providers, it’s important to take the time upfront to outline the immediate and long-term business objectives of cloud adoption. A hybrid cloud environment provides a secure, unified environment in which to run diverse applications and pay only for the resources consumed. That means that each application and workload selected for the hybrid environment can run on the optimal infrastructure type, including databases, web servers, application servers, storage, firewalls, etc. Often the largest benefit of the hybrid cloud environment is integrating two applications running on private and public clouds, such as integrating SalesForce.com or a business analytics tool with a legacy system. In this example, it’s important to look for a hybrid cloud service provider that has not only the capabilities required but also a big-picture grasp of the benefit of integrating across the infrastructure and mixing private cloud elements in a single workload.

To leverage the full value of hybrid cloud, look for providers that offer interoperability across the infrastructure, application portability, and data governance across infrastructures. This way, regardless of where compute, application, networking, and storage resources are running, they work together and are accessible through a unified management platform. Ask cloud providers what platforms are available to streamline the management of cloud apps and deployments and the creation of hybrid cloud instances.

Evaluate migration paths - Because hybrid cloud environments allow organizations to shift to the cloud methodically and purposefully, it’s the perfect model for provisioning applications and scaling on the fly. It provides the ability to add new applications quickly without being throttled by red tape. However, as mentioned earlier, that doesn’t mean enterprises shouldn’t have business objectives set and a plan in place for migrating to the cloud. When shopping for cloud providers, ask if they provide classifications for defining workloads, to prioritize which applications are best suited for a cloud environment. This classification system may help rank which applications and workloads should be migrated first and which should follow. For instance, if an application development and testing environment is needed prior to production, it may make sense to spin up a virtual cloud instance to run these workloads before moving CRM applications. It’s also important to assess the experience and availability of internal IT resources during a cloud migration and to evaluate how cloud service providers can complement this skill set.

Consider compliance and security concerns - The hybrid cloud is all about determining which workloads can efficiently and cost-effectively be transitioned in a very dynamic enterprise environment. It’s important to evaluate functional requirements for Quality of Service (QoS), pricing, security and latency, and of course, requirements related to regulatory and privacy needs. While there is no one-size-fits-all approach to meeting and maintaining compliance, there are best practices for running effective compliance management initiatives. It is imperative that organizations look for hybrid providers that have the right people AND technology in place. This could include technologies like firewalls, Intrusion Detection Systems (IDS) and log management appliances. But providers should also employ skilled resources who are knowledgeable about PCI-DSS rules. For instance, an experienced team would recognize that data must be kept locally, with a secure connection to the public cloud for processing.

When evaluating hybrid cloud providers, look for partners that specialize in the product knowledge most applicable to your enterprise. Find a partner that can support current business objectives and can also support business and infrastructure changes down the road. This will help prevent slowdowns during the migration effort and provide a solid foundation for future growth and hybrid adoption.

0 notes

Text

New study provides evidence for decades-old theory to explain the odd behaviors of water

Water, so ordinary and so essential to life, acts in ways that are quite puzzling to scientists. For example, why is ice less dense than water, floating rather than sinking the way other liquids do when they freeze?

Now a new study provides strong evidence for a controversial theory that at very cold temperatures water can exist in two distinct liquid forms, one being less dense and more structured than the other.

Researchers at Princeton University and Sapienza University of Rome conducted computer simulations of water molecules to discover the critical point at which one liquid phase transforms into the other. The study was published this week in the journal Science.

“The presence of the critical point provides a very simple explanation for water’s oddities,” said Princeton’s Dean for Research Pablo Debenedetti, the Class of 1950 Professor in Engineering and Applied Science, and professor of chemical and biological engineering. “The finding of the critical point is equivalent to finding a good, simple explanation for the many things that make water odd, especially at low temperatures.”

Water’s oddities include that as water cools, it expands rather than contracting, which is why frozen water is less dense than liquid water. Water also becomes more squeezable — or compressible — at lower temperatures. There are also at least 17 ways in which its molecules can arrange when frozen.

A critical point is a unique value of temperature and pressure at which two phases of matter become indistinguishable, and it occurs just prior to matter transforming from one phase into the other.

Water’s oddities are easily explained by the presence of a critical point, Debenedetti said. The presence of a critical point is felt on the properties of the substance quite far away from the critical point itself. At the critical point, the compressibility and other thermodynamic measures of how the molecules behave, such as the heat capacity, are infinite.
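
For reference, the compressibility and heat capacity mentioned above have standard thermodynamic definitions. These are textbook relations rather than formulas quoted from the study; here V is volume, P pressure, T temperature, S entropy, and (T_c, P_c) denotes the critical point:

```latex
\kappa_T = -\frac{1}{V}\left(\frac{\partial V}{\partial P}\right)_T ,
\qquad
C_P = T\left(\frac{\partial S}{\partial T}\right)_P ,
\qquad
\kappa_T,\; C_P \to \infty \quad \text{as } (T, P) \to (T_c, P_c).
```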

Using two different computational methods and two highly realistic computer models of water, the team identified the liquid-liquid critical point as lying in a range of about 190 to 170 degrees Kelvin (about -117 degrees to -153 degrees Fahrenheit) at about 2,000 times the atmospheric pressure at sea level.

The detection of the critical point is a satisfying step for researchers involved in the decades-old quest to determine the underlying physical explanation for water’s unusual properties. Several decades ago, physicists theorized that cooling water to temperatures below its freezing point while maintaining it as a liquid — a “supercooled” state that occurs in high-altitude clouds — would expose water’s two unique liquid forms at sufficiently high pressures.

Fig A (left): Using two distinct computer simulations of water (top and bottom panels), researchers detected swings in density characteristic of supercooled water oscillating between two liquid phases that differ by density. Fig B (right): The simulations revealed a critical point between the two liquid phases, whose different densities originate microscopically in the intrusion of an extra water molecule in the local environment of a generic central molecule in the high-density liquid.

Reprinted with permission from PG Debenedetti et al, Science Vol 369 Issue 6501, DOI:10.1126/science.abb9796

To test the theory, researchers turned to computer simulations. Experiments with real-life water molecules have not so far provided unambiguous evidence of a critical point, in part due to the tendency for supercooled water to rapidly freeze into ice.

Francesco Sciortino, a professor of physics at the Sapienza University of Rome, conducted one of the first such modeling studies while a postdoctoral researcher in 1992. That study, published in the journal Nature, was the first to suggest the existence of a critical point between the two liquid forms.

The new finding is extremely satisfying for Sciortino, who is also a co-author of the new study in Science. The new study used today’s much faster and more powerful research computers and newer and more accurate models of water. Even with today’s powerful research computers, the simulations took roughly 1.5 years of computation time.

“You can imagine the joy when we started to see the critical fluctuations exactly behaving the way they were supposed to,” Sciortino said. “Now I can sleep well, because after 25 years, my original idea has been confirmed.”

In the case of the two liquid forms of water, the two phases coexist in uneasy equilibrium at temperatures below freezing and at sufficiently high pressures. As the temperature dips, the two liquid phases engage in a tug of war until one wins out and the entire liquid becomes low-density.

In the simulations performed by postdoctoral researcher Gül Zerze at Princeton and Sciortino in Rome, as they brought down the temperature well below freezing into the supercooled range, the density of water fluctuated wildly just as predicted.

Some of the odd behaviors of water are likely to be behind water’s life-giving properties, Zerze said. “The fluid of life is water, but we still don’t know exactly why water is not replaceable by another liquid. We think the reason has to do with the abnormal behavior of water. Other liquids don’t show those behaviors, so this must be linked to water as the liquid of life.”

The two phases of water occur because the water molecule’s shape can lead to two ways of packing together. In the lower density liquid, four molecules cluster around a central fifth molecule in a geometric shape called a tetrahedron. In the higher density liquid, a sixth molecule squeezes in, which has the effect of increasing the local density.

The team detected the critical point in two different computer models of water. For each model, the researchers subjected the water molecules to two different computational approaches to looking for the critical point. Both approaches yielded the finding of a critical point.

Peter Poole, a professor of physics at St. Francis Xavier University in Canada, and a graduate student when he collaborated with Sciortino and coauthored the 1992 paper in Nature, said the result was satisfying. “It’s very comforting to have this new result,” he said. “It’s been a long and sometimes lonely wait since 1992 to see another unambiguous case of a liquid-liquid phase transition in a realistic water model.”

C. Austen Angell, Regents Professor at Arizona State University, is one of the pioneers of experiments in the 1970s on the nature of supercooled water. “No doubt that this is a heroic effort in the simulation of water physics with a very interesting, and welcome, conclusion,” said Angell, who was not involved in the present study, in an email. “As an experimentalist with access to equilibrium (long-term) physical measurements on real water, I had always felt ‘safe’ from preemption by computer simulators. But the data presented in the new paper shows that this is no longer true.”

The simulations were performed at Princeton Research Computing, a consortium of groups including the Princeton Institute for Computational Science and Engineering (PICSciE) and the Office of Information Technology’s High Performance Computing Center and Visualization Laboratory at Princeton University, and on computational resources managed and supported by the physics department of Sapienza University Rome. Support for the study was provided by the National Science Foundation (grant CHE-1856704).

The study, “Second critical point in two realistic models of water,” by Pablo G. Debenedetti, Francesco Sciortino, and Gül Zerze, was published in the July 17 issue of the journal Science. DOI: 10.1126/science.abb9796.

source https://scienceblog.com/517397/new-study-provides-evidence-for-decades-old-theory-to-explain-the-odd-behaviors-of-water/

0 notes

Text

Cisco Application Centric Infrastructure Security

Cisco® Application Centric Infrastructure (ACI) is an innovative architecture that radically simplifies, optimizes, and accelerates the entire application deployment lifecycle. Cisco ACI uses a holistic systems-based approach, with tight integration between physical and virtual elements, an open ecosystem model, and innovation spanning application-specific integrated circuits (ASICs), hardware, and software. This unique approach uses a common policy-based operating model across network and security elements that support Cisco ACI (computing; storage in the future), overcoming IT silos and drastically reducing costs and complexity.

Security Problems Addressed by Cisco ACI

Cisco ACI addresses the security and compliance challenges of next-generation data center and cloud environments.

With organizations transitioning to next-generation data center and cloud environments, automation of security policies is needed to support on-demand provisioning and dynamic scaling of applications. The manual device-centric approach to security management is both error prone and insecure. As application workloads are being added, modified, and moved in an agile data center environment, the security policies need to be carried with the application endpoints. Dynamic policy creation and deletion is needed to secure east-west traffic and handle application mobility. Visibility into the traffic is important to identify and mitigate new advanced targeted attacks and secure the tenants.

Cisco ACI Security for Next-Generation Data Center and Cloud

The Cisco ACI Security Solution uses a holistic, systems-based approach to address security needs for next-generation data center and cloud environments (Figure 1). Unlike alternative overlay-based virtualized network security solutions, which offer limited visibility and scale and require separate management of underlay and overlay network devices and security policies, the Cisco ACI Security Solution uniquely addresses the security needs of the next-generation data center by using an application-centric approach and a common policy-based operations model while helping ensure compliance and reducing the risk of security breaches.

The Cisco ACI Security Solution enables unified security policy lifecycle management with the capability to enforce policies anywhere in the data center across physical and virtual workloads. It offers complete automation of Layer 4 through 7 security policies and supports a defense-in-depth strategy with broad ecosystem support while enabling deep visibility, automated policy compliance, and accelerated threat detection and mitigation. Cisco ACI is the only approach that focuses on the application by delivering segmentation that is dynamic and application centered.

Main Benefits of Cisco ACI Security

Main features and benefits of the Cisco ACI Security Solution include:

Application-centric policy model: Cisco ACI provides a higher-level abstraction using endpoint groups (EPGs) and contracts to more easily define policies using the language of applications rather than network topology. The Cisco ACI whitelist-based policy approach supports a zero-trust model by denying traffic between EPGs unless a policy explicitly allows traffic between the EPGs.

Unified Layer 4 through 7 security policy management: Cisco ACI automates and centrally manages Layer 4 through 7 security policies in the context of an application using a unified application–centric policy model that works across physical and virtual boundaries as well as third-party devices. This approach reduces operational complexity and increases IT agility without compromising security.

Policy-based segmentation: Cisco ACI enables detailed and flexible segmentation of both physical and virtual endpoints based on group policies, thereby reducing the scope of compliance and mitigating security risks.

Automated compliance: Cisco ACI helps ensure that the configuration in the fabric always matches the security policy. Cisco APIs can be used to pull the policy and audit logs from the Cisco Application Policy Infrastructure Controller (APIC) and create compliance reports (for example, a PCI compliance report). This feature enables real-time IT risk assessment and reduces the risk of noncompliance for organizations.

Integrated Layer 4 security for east-west traffic: The Cisco ACI fabric includes a built-in distributed Layer 4 stateless firewall to secure east-west traffic between application components and across tenants in the data center.

Open security framework: Cisco ACI offers an open security framework (including APIs and OpFlex protocol) to support advanced service insertion for critical Layer 4 through 7 security services such as intrusion detection systems (IDS) and intrusion prevention systems (IPS), and next-generation firewall services (such as the Cisco Adaptive Security Virtual Appliance (ASAv), the Cisco ASA 5585-X Adaptive Security Appliance, and third-party security devices) in the application flow regardless of their location in the data center. This feature enables a defense-in-depth security strategy and investment protection.

Deep visibility and accelerated attack detection: Cisco ACI gathers time-stamped network traffic data and supports atomic counters to offer real-time network intelligence and deep visibility across physical and virtual network boundaries. This feature enables accelerated attack detection early in the attack cycle.

Automated incident response: Cisco ACI supports automated response to threats identified in the network by enabling integration with security platforms using northbound APIs.
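
The whitelist-based model described in the first item of this list can be illustrated with a small sketch. This is not the Cisco APIC API or data model: the `Contract` and `WhitelistPolicy` names are hypothetical, and allowing traffic inside a single EPG is an assumption of the sketch, since the text above only covers traffic between EPGs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Hypothetical rule allowing one EPG to reach another on a protocol and port."""
    consumer_epg: str
    provider_epg: str
    protocol: str
    port: int


class WhitelistPolicy:
    """Deny traffic between EPGs unless a contract explicitly allows it."""

    def __init__(self, contracts=()):
        self._contracts = set(contracts)
        self._membership = {}  # endpoint name -> EPG name

    def assign(self, endpoint, epg):
        # Policy follows the endpoint's group, not its network location.
        self._membership[endpoint] = epg

    def is_allowed(self, src, dst, protocol, port):
        src_epg = self._membership.get(src)
        dst_epg = self._membership.get(dst)
        if src_epg is None or dst_epg is None:
            return False  # unknown endpoints are never trusted
        if src_epg == dst_epg:
            return True  # assumption of this sketch: intra-EPG traffic is open
        return Contract(src_epg, dst_epg, protocol, port) in self._contracts


policy = WhitelistPolicy([Contract("web", "app", "tcp", 8080)])
policy.assign("web-1", "web")
policy.assign("app-1", "app")
policy.assign("db-1", "db")
print(policy.is_allowed("web-1", "app-1", "tcp", 8080))
print(policy.is_allowed("web-1", "db-1", "tcp", 5432))
```

Because the decision is keyed on group membership, moving or re-creating an endpoint only requires re-assigning it to its EPG; the contracts themselves stay unchanged.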

Why Cisco?

The Cisco ACI architectural approach provides a continuous and pervasive way to weave security into the fabric of today’s dynamic, application-oriented data centers. The Cisco ACI Security Solution delivers visibility across the entire application and services-oriented environment and along the entire attack continuum. It enables organizations to deploy security measures more quickly and effectively where and when they are needed. The solution protects the company before, during, and after an attack without compromising network performance, agility, or functions.

More Details Visit http://bit.ly/2YGmhGZ

0 notes

Text

Original Post from SC Magazine Author: Doug Olenick

Traditional security solutions were designed to identify threats at the perimeter of the enterprise, which was primarily defined by the network. Whether called firewall, intrusion detection system, or intrusion prevention system, these tools delivered “network-centric” solutions.

Innovation was slow because activity was dictated, in large part, by the capabilities, and limitations, of available technology resources. Much like a sentry guarding the castle, they emphasized identification and were not meant to investigate activity that might have gotten past their surveillance.

Originally, firewalls performed the task of preventing unwanted, and potentially dangerous, traffic. Then security vendors started pitching “next generation firewalls”, which were based on a model that targeted applications, users and content. It was a shift that provided visibility and context into the data and assets that organizations were trying to protect.

Modern environments require a new approach

Now, with modern architectures, threats that target public clouds (PaaS or IaaS platforms) demand a new level of insight and action. Public clouds operate differently than traditional datacenters: applications come and go instantaneously, network addresses and ports are recycled seemingly at random, and even the fundamental way traffic flows has changed. To operate successfully in modern IT infrastructures, you have to reset how you think about security in the cloud.

Surprisingly, many organizations continue to use network-based security and rely on available network traffic data as their security approach. It’s important for decision makers to understand the limitations inherent in this kind of approach so they don’t operate on a false sense of security. A purpose-built cloud solution is the only thing that will provide the type of visibility and protection required.

The limits of “next generation firewall”

Security teams in modern environments must first realize that in the cloud, most traffic is encrypted; that means the network has no ability to inspect it. Even if you could perform a “Man in the Middle” attack to decrypt the data, the scale and elasticity of the cloud would make the current Next-Generation Firewalls useless.

In an IaaS environment, applications are custom-written, which means there are no known signatures that can identify the app. The application is instead identified by its security profile, and that can change depending on how it’s used. For example, the security profile and behavior of a database app will show different communication patterns in an HR use case than in a Finance one. From a launch perspective, however, they are the same application, and a next generation firewall cannot distinguish between them to understand the application behavior or the required policy. Similarly, the same user working on the same application will have a different security profile in a production environment than in a development environment.

As environments increasingly make use of containers and orchestration systems like Kubernetes, as well as serverless computing, they present even more challenges for outdated security tools. These new types of tools are built with microservices, an innovation that befuddles next generation firewalls, which are blind to how microservices work.

An approach built for the cloud

One of the greatest cloud security challenges comes from the fact that the cloud delivers its infrastructure components, things like gateways, servers, storage, compute, and all the resources and assets that make up the cloud platform environment, as virtual services. There is no traditional network or infrastructure architecture in the cloud.

Deploying workloads into the cloud can quickly involve complex sets of microservices and serverless instances that function in fluid architectures that change every few minutes or seconds, creating a constantly changing security environment. Here are some of the common security challenges presented by the cloud:

Microservices

Infrastructure as code

Machine based alerts do not make sense and machines cannot be used to understand apps

The combined effect of all this innovation? Exponential growth in a cloud environment’s attack surface. A busy cloud environment can generate as many as hundreds of millions of connections per hour, which makes threat detection a much more challenging proposition. Of course, attackers are well aware of these vulnerabilities and are working frantically to exploit them.

The only way to secure a continuously changing cloud environment is through continuous approaches to security. These security functions need to include the following capabilities:

Continuous anomaly detection and behavioral analysis that is capable of monitoring all event activity in your cloud environment, correlating activity among containers, applications, and users, and logging that activity for analysis after containers and other ephemeral workloads have been recycled. This monitoring and analysis must be able to trigger automatic alerts. Behavioral analytics makes it possible to perform non-rules-based event detection and analysis in an environment that is adapting to serve continuously changing operational demands.

Continuous, real-time configuration and compliance auditing across cloud storage and compute instances.

Continuous, real-time monitoring of access and account activity across APIs as well as developer and user accounts.

Continuous, real-time workload and deep container activity monitoring, abstracted from the network. A public cloud environment provides limited visibility into network activity, so this requires having agents on containers or hosts that monitor orchestration tools, file integrity, and access control.
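
As a rough illustration of the non-rules-based detection described in the first capability above, the sketch below learns which (workload, user, action) combinations are normal and alerts on anything not seen before. It is a deliberately simple stand-in, not any vendor’s method; the class name, event shape, and sample values are all hypothetical.

```python
from collections import Counter


class BehaviorBaseline:
    """Learn observed behavior and flag events that were never seen before."""

    def __init__(self):
        self._seen = Counter()  # event -> number of times observed
        self.alerts = []        # kept so events can be reviewed after workloads are gone

    def learn(self, events):
        """Record events from a period assumed to be normal."""
        for event in events:
            self._seen[event] += 1

    def observe(self, event):
        """Record a live event; return True and log an alert if it is new."""
        is_new = event not in self._seen
        self._seen[event] += 1
        if is_new:
            self.alerts.append(event)
        return is_new


baseline = BehaviorBaseline()
baseline.learn([("api", "svc-a", "connect db:5432")])
print(baseline.observe(("api", "svc-a", "connect db:5432")))
print(baseline.observe(("api", "svc-a", "connect unknown-host:443")))
```

No rule says which destinations are forbidden; the alert comes purely from deviation from learned behavior. In this sketch an event is added to the baseline once it has been reported, so it alerts only once, which is a design choice rather than a requirement.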

Moving beyond – WAY beyond – next generation firewall

New security tools designed to deeply monitor cloud infrastructure and analyze workload and account activity in real time make it possible to deploy and scale without compromising security. When operating in the cloud, businesses need to know that their infrastructure remains secure as it scales. They need assurance that they can deploy services that are not compromising compliance or introducing new risk. This can only happen with new tools designed specifically for highly dynamic cloud environments, tools that provide continuous, real-time monitoring, analysis, and alerting.

About the author:

Sanjay Kalra is co-founder and CPO at Lacework, leading the company’s product strategy, drawing on more than 20 years of success and innovation in the cloud, networking, analytics, and security industries. Prior to Lacework, Sanjay was GM of the Application Services Group at Guavus, where he guided the company to market leadership and a successful exit. Sanjay also served as Senior Director of Security Product Management for Juniper Networks, and spearheaded continued innovations in the company’s various security markets. Sanjay has also held senior positions at Cisco and ACC. He holds 12 patents in networking and security.

The post Leaving behind old school security tendencies appeared first on SC Media.

0 notes

Photo

Tips for Finding the Right Hybrid Cloud Provider

So, your organization has decided to adopt a hybrid cloud architecture? That’s great news! Soon you’ll have a flexible model in place, with the ability to strategically run workloads of data where it makes the most sense. Either in the public cloud, private cloud, on-premise on dedicated servers, virtual

or cloud servers, or some combination of these. Not only that, with this hybrid cloud approach your enterprise will be more adept at managing security and compliance requirements, and having the ability to scale as requirements change.

That’s why leading enterprises are moving to the hybrid cloud model in droves, particularly when it comes to Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) solutions. According to Forbes, IaaS/PaaS markets could rise from some $38 billion in 2016 to $173 billion in 2026. Not only that, Synergy Research Group found that private and hybrid cloud infrastructures have the second highest growth rate (after public IaaS/PaaS services) with 45% growth in 2015 (Source: Forbes).

The only big decision left is selecting the right hybrid cloud provider. Finding a provider with the most experience and support options related to an organization’s current and future needs is priority one. If you’re considering a hybrid cloud service provider, here are some additional tips to consider:

Outline business objectives- Before speaking with various providers, it’s important to take the time upfront to outline the immediate and long-term business objectives of cloud adoption. A hybrid cloud environment provides a secure, unified environment to run diverse applications, and only pay for the resources consumed. That means that each application and workload selected for the hybrid environment makes up the optimal infrastructure type, including databases, web servers, application servers, storage, firewalls, etc. Often the largest benefit of the hybrid cloud environment includes integrating two applications running on private and public clouds, such as integrating SalesForce.com or a business analytics tool with a legacy system. In this example, it’s important to look for a hybrid cloud service provider that has not only the capabilities required but also the big-picture grasp of the benefit of integration across the infrastructure and mixing private cloud elements in a single workload.

To leverage the full value of hybrid cloud, look for providers that offer interoperability across the infrastructure, application portability and data governance across infrastructures. This way, regardless of where compute, application, networking and storage resources are running they are working together and they are accessible through a unified management platform. Ask cloud providers what platforms are available to streamline the management of cloud apps and deployments and the creation of hybrid cloud instances.

Evaluate migration paths - Because hybrid cloud environments allow organizations to methodically and purposefully make a shift to the cloud, it’s the perfect model for provisioning applications and scaling on-the-fly. It provides the ability to add new applications quickly without being throttled by red tape. However, as mentioned earlier, that doesn’t mean enterprises shouldn’t have business objectives set and a plan in place for migrating to the cloud. When shopping cloud providers, ask if they provide classifications for defining workloads to prioritize which applications are best suited for a cloud environment. This classification system may help rank which applications and workloads should be migrated first, and which should follow. For instance, if an application development and testing environment are needed prior to production, it may make sense to spin up a virtual cloud instance to run these workloads before moving CRM applications.

It’s also important to assess the experience and resource availability of internal IT resources during a cloud migration and to evaluate how a cloud service providers can complement this skill set.

Consider compliance and security concerns- The hybrid cloud is all about determining which workloads can efficiently and cost-effectively be transitioned in a very dynamic enterprise environment. It’s important to evaluate functional requirements for Quality of Service (QoS), pricing, security and latency, and of course, requirements related to regulatory and privacy needs. While there is no one-size-fits-all approach to meeting and maintaining compliance, there are best practices to running effective compliance management initiatives. It is imperative organizations look for hybrid providers that have the right people AND technology in place. This could include technologies like firewalls, Intrusion Detection Systems (IDS) and log management appliances. But providers should also employ skilled resources who are knowledgeable about PCI-DSS rules. For instance, an experienced team would recognize that data must be kept locally, with a secure connection to the public cloud for processing.

When evaluating hybrid cloud providers, look for partners whose product knowledge is most applicable to your enterprise. Find a partner that can support current business objectives as well as business and infrastructure changes down the road. This will help prevent slowdowns during the migration effort and provide a solid foundation for future growth and hybrid adoption.


Data Center Security Market — Explore latest facts on networking 2025

Global Data Center Security Market: Snapshot

Data center security refers to a set of practices that safeguards a data center from different kinds of threats and attacks. Data centers are integral for companies as they contain sensitive business data, which necessitates dedicated security for them.

Typically, data centers are central IT facilities served by high-tech networking equipment and resources. They receive business data from several places and store it for later use. Data centers with complex configurations may employ IT tools called middleware to monitor the flow of data out of the data center or within the system. This makes the data less accessible to hackers and to anyone who is not authorized to access it.

Request Sample Copy of the Report @

https://www.tmrresearch.com/sample/sample?flag=B&rep_id=14

Data center security can be of several types. Physical security for data centers involves setbacks, thick walls, landscaping, and other building features that create a physical obstacle around the data center. Network security is of paramount importance for data centers. Because data centers are connected to networks, these networks need to be adequately protected to prevent loss of information. This requires security engineers to install antivirus programs, firewalls, or other software that prevents data breaches and security threats.

Data center security also depends on the type of data center under consideration. For example, classifying a data center by tier configuration indicates its fault tolerance and the type of security that would be needed. Generally, experts recommend redundant utilities such as multiple power sources and several environmental controls for data centers. The security needed for a data center also depends on the degree of network virtualization and other characteristics of complex IT systems.
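To illustrate why redundant utilities improve fault tolerance, the following sketch computes the combined availability of several identical units, such as power sources, working in parallel. It assumes the units fail independently, which real facilities only approximate, and the 99% single-unit availability is a made-up figure chosen for the example.

```python
HOURS_PER_YEAR = 24 * 365


def parallel_availability(unit_availability: float, units: int) -> float:
    """Availability of `units` redundant components in parallel.

    Assumes independent failures: the system is down only when every
    unit is down at the same time.
    """
    if not 0.0 <= unit_availability <= 1.0:
        raise ValueError("unit_availability must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    return 1.0 - (1.0 - unit_availability) ** units


def annual_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per (non-leap) year."""
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical power source that is available 99% of the time.
single = parallel_availability(0.99, units=1)
dual = parallel_availability(0.99, units=2)

print(f"single source: {annual_downtime_hours(single):.2f} h/year")
print(f"dual source:   {annual_downtime_hours(dual):.2f} h/year")
```

Under these assumptions, adding a second independent source cuts expected downtime by a factor of one hundred. Shared failure causes, such as a common utility feed, would reduce that benefit in practice.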

Request TOC of the Report @

https://www.tmrresearch.com/sample/sample?flag=T&rep_id=14

Data Center Security Market: Comprehensive Overview and Trends

The data center security market is constantly evolving, and the technologies involved have undergone several developments. One of the most recent is the replacement of single firewall and antivirus programs with network security infrastructure and clustered software. Data center servers and other devices are susceptible to physical attacks and theft, and companies and industries are turning to data center security to safeguard their critical data and network infrastructure from such threats.

The global market for data center security is fueled by rising physical attacks and insider threats, the growing incidence of cyber threats and attacks, and virtualization in data centers. On the downside, the absence of a common platform offering integrated physical and virtual security solutions, together with high procurement costs, threatens to curb the growth of the data center security market. Players, however, are presented with a great opportunity thanks to a global rise in spending on data security, a surge in mega data centers across the globe, and an increase in cloud computing and virtualization adoption.

Data Center Security Market: Segmentation Analysis

The data center security market has been evaluated on the basis of logical security solutions and services, physical security solutions and services, end users and environments, and geography.

By way of logical security solutions and services, the market for data center security can be categorized into access control and compliance, threat and application security solutions, professional services, and data protection solutions. The segment of threat and application security solutions consists of virtualization security solutions, intrusion detection and prevention systems (IDS/IPS), firewall, domain name system (DNS), distributed denial of service (DDoS) protection, antivirus and unified threat management (UTM), and servers/secure sockets layer (SSL).

Access control and compliance can be sub-segmented into security information and event management (SIEM), identity access management (IAM), and web filtering solutions. Data protection solutions include information lifecycle management (ILM) solutions, data leakage protection (DLP), and disaster recovery solutions. Professional services comprise security consulting services and managed security services (MSS).

By way of physical security solutions and services, the data center security market can be classified into analysis and modeling (physical identity and access management (PIAM) and physical security information management (PSIM)), video surveillance (IP cameras and HDCCTV), and access control (biometrics and card readers). On the basis of end users and environment, the market for data center security solutions can be segmented into colocation environments, cloud provider environments, and enterprise data center environments such as government, banking, financial services and insurance (BFSI), public sector and utilities, healthcare and life sciences, and telecommunication and IT. By region, the global data center security market covers North America, Europe, Asia Pacific, and the Rest of the World (RoW).
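The solution segmentation described above can also be written down as a small nested data structure, which makes the hierarchy easier to scan and query. This is just an illustrative sketch: the segment and item names are taken from the text, while the `SEGMENTS` layout and the `find_segment` helper are hypothetical.

```python
# Category -> segment -> items, as listed in the segmentation analysis.
SEGMENTS = {
    "logical security solutions and services": {
        "access control and compliance": ["SIEM", "IAM", "web filtering"],
        "threat and application security": [
            "virtualization security", "IDS/IPS", "firewall", "DNS",
            "DDoS protection", "antivirus and UTM", "servers/SSL",
        ],
        "data protection": ["ILM", "DLP", "disaster recovery"],
        "professional services": ["security consulting", "MSS"],
    },
    "physical security solutions and services": {
        "analysis and modeling": ["PIAM", "PSIM"],
        "video surveillance": ["IP cameras", "HDCCTV"],
        "access control": ["biometrics", "card readers"],
    },
}


def find_segment(item: str):
    """Return every (category, segment) pair whose item list contains `item`."""
    return [
        (category, segment)
        for category, segments in SEGMENTS.items()
        for segment, items in segments.items()
        if item in items
    ]


print(find_segment("SIEM"))
print(find_segment("biometrics"))
```

Note that "access control" appears in both the logical and the physical category, so a lookup needs the category as well as the segment name to be unambiguous.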

Data Center Security Market: Vendor Landscape

There are a number of players operating in the data center security market. Some of the top companies are Akamai Technologies, Inc., Check Point Software Technologies, Citrix Systems, Inc., Dell, Inc., EMC Corporation, Cisco Systems, Inc., Fortinet Inc., Genetec, Honeywell International Inc., Hewlett-Packard Development Company, L.P., Juniper Networks, Inc., McAfee, Inc. (Subsidiary of Intel Corporation), Siemens AG, Trend Micro, Inc., and VMware, Inc.

The study presents reliable qualitative and quantitative insights into:

Market segments and sub-segments

Market trends and dynamics

Supply and demand chain of the market

Market valuation (revenue and/or volume)

Key trends/opportunities/challenges

Forces defining present and estimated future state of the competitive landscape

Technological developments

Value chain and stakeholder analysis

The regional analysis covers:

North America

Latin America

Europe

Asia Pacific

Middle East and Africa

About TMR Research

TMR Research is a premier provider of customized market research and consulting services to business entities keen on succeeding in today’s supercharged economic climate. Armed with an experienced, dedicated, and dynamic team of analysts, we are redefining the way our clients conduct business by providing them with authoritative and trusted research studies in tune with the latest methodologies and market trends.

Contact:

TMR Research,

3739 Balboa St # 1097,

San Francisco, CA 94121

United States

Tel: +1-415-520-1050



Data Center Security Market: Growth Opportunities & Forecast 2017-2025

Global Data Center Security Market: Snapshot

Data center security refers to a set of practices that safeguards a data center from different kinds of threats and attacks. Data centers are integral for companies as they contain sensitive business data, which necessitates dedicated security for them.

Typically, data centers are central IT facilities served by high-tech networking equipment and resources. They receive business data from several places and store it for later use. Data centers with complex configurations may employ IT tools called middleware to monitor the flow of data out of the data center or within the system. This makes the data less accessible to hackers and to anyone who is not authorized to access it.

Data center security can be of several types. Physical security for data centers involves setbacks, thick walls, landscaping, and other building features that create a physical obstacle around the data center. Network security is of paramount importance for data centers. Because data centers are connected to networks, these networks need to be adequately protected to prevent loss of information. This requires security engineers to install antivirus programs, firewalls, or other software that prevents data breaches and security threats.

Request Sample Copy of the Report @

https://www.tmrresearch.com/sample/sample?flag=B&rep_id=14

Data center security also depends on the type of data center under consideration. For example, classifying a data center by tier configuration indicates its fault tolerance and the type of security that would be needed. Generally, experts recommend redundant utilities such as multiple power sources and several environmental controls for data centers. The security needed for a data center also depends on the degree of network virtualization and other characteristics of complex IT systems.

Data Center Security Market: Comprehensive Overview and Trends

The data center security market is constantly evolving, and the technologies involved have undergone several developments. One of the most recent is the replacement of single firewall and antivirus programs with network security infrastructure and clustered software. Data center servers and other devices are susceptible to physical attacks and theft, and companies and industries are turning to data center security to safeguard their critical data and network infrastructure from such threats.

The global market for data center security is fueled by rising physical attacks and insider threats, the growing incidence of cyber threats and attacks, and virtualization in data centers. On the downside, the absence of a common platform offering integrated physical and virtual security solutions, together with high procurement costs, threatens to curb the growth of the data center security market. Players, however, are presented with a great opportunity thanks to a global rise in spending on data security, a surge in mega data centers across the globe, and an increase in cloud computing and virtualization adoption.

Request TOC of the Report @

https://www.tmrresearch.com/sample/sample?flag=T&rep_id=14

Data Center Security Market: Segmentation Analysis

The data center security market has been evaluated on the basis of logical security solutions and services, physical security solutions and services, end users and environments, and geography.

By way of logical security solutions and services, the market for data center security can be categorized into access control and compliance, threat and application security solutions, professional services, and data protection solutions. The segment of threat and application security solutions consists of virtualization security solutions, intrusion detection and prevention systems (IDS/IPS), firewall, domain name system (DNS), distributed denial of service (DDoS) protection, antivirus and unified threat management (UTM), and servers/secure sockets layer (SSL).

Access control and compliance can be sub-segmented into security information and event management (SIEM), identity access management (IAM), and web filtering solutions. Data protection solutions include information lifecycle management (ILM) solutions, data leakage protection (DLP), and disaster recovery solutions. Professional services comprise security consulting services and managed security services (MSS).

Enquiry For Discount @

https://www.tmrresearch.com/sample/sample?flag=D&rep_id=14

By way of physical security solutions and services, the data center security market can be classified into analysis and modeling (physical identity and access management (PIAM) and physical security information management (PSIM)), video surveillance (IP cameras and HDCCTV), and access control (biometrics and card readers). On the basis of end users and environment, the market for data center security solutions can be segmented into colocation environments, cloud provider environments, and enterprise data center environments such as government, banking, financial services and insurance (BFSI), public sector and utilities, healthcare and life sciences, and telecommunication and IT. By region, the global data center security market covers North America, Europe, Asia Pacific, and the Rest of the World (RoW).

Data Center Security Market: Vendor Landscape

There are a number of players operating in the data center security market. Some of the top companies are Akamai Technologies, Inc., Check Point Software Technologies, Citrix Systems, Inc., Dell, Inc., EMC Corporation, Cisco Systems, Inc., Fortinet Inc., Genetec, Honeywell International Inc., Hewlett-Packard Development Company, L.P., Juniper Networks, Inc., McAfee, Inc. (Subsidiary of Intel Corporation), Siemens AG, Trend Micro, Inc., and VMware, Inc.