#kenneth liberman

Text

Brown and Laurier (2005) cite a story written about a small Hungarian detachment of troops in the Alps that had become lost in a snowstorm. They had considered themselves lost "and waited for the end. And then one of us found a map in his pocket. That calmed us down." When the unit arrived back in camp three days later their worried lieutenant examined their map and discovered that "It was not a map of the Alps but of the Pyrenees."


Text

Baidu Research brings in former University of Mississippi chancellor to join its advisory board


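It is understood that Jeffrey Vitter studied under Donald Knuth, winner of the 1974 Turing Award, and has produced a substantial body of work in data science, particularly on algorithms for processing, compressing, and communicating large amounts of information. He has been elected a fellow of research bodies including the Guggenheim Foundation, the National Academy of Inventors, the American Association for the Advancement of Science, the Association for Computing Machinery, and the Institute of Electrical and Electronics Engineers, and he has also been a National Science Foundation Presidential Young Investigator, a Fulbright Scholar, and a recipient of an IBM Faculty Development Award.

Beyond his research achievements, Jeffrey Vitter is also an accomplished academic administrator, with 39 years of teaching and research experience. He served as the 17th chancellor of the University of Mississippi from January 2016 to January 2019, and has held teaching and leadership positions at well-known American universities including Brown University, Duke University, Purdue University, and the University of Kansas.

The Baidu Research Advisory Board was established in 2018 and has been called the "brain trust" of Baidu Research. Its current members include David Belanger, former vice president and chief scientist of AT&T and Bell Labs; David Forsyth, tenured professor at the University of Illinois at Urbana-Champaign and a leading scientist in computer vision; Mark Liberman, the noted computational linguist; Vipin Kumar, tenured professor at the University of Minnesota and winner of the ACM SIGKDD Innovation Award, the highest technical honor in knowledge discovery and data mining (KDD); and Artur Konrad Ekert, one of the co-inventors of quantum cryptography, tenured professor at the University of Oxford, and director of the Centre for Quantum Technologies at the National University of Singapore, among other internationally known scientists.

Baidu Research is headed by Baidu's chief technology officer, Dr. Wang Haifeng, and comprises seven labs: the Big Data Lab (BDL), the Business Intelligence Lab (BIL), the Cognitive Computing Lab (CCL), the Deep Learning Lab (IDL), the Quantum Computing Institute (IQC), the Robotics and Autonomous Driving Lab (RAL), and the Silicon Valley AI Lab (SVAIL). It brings together world-class AI scientists including Kenneth Ward Church, Duan Runyao, Guo Guodong, Huang Liang, Li Ping, Xiong Hui, and Yang Ruigang.

Baidu Research's work spans nearly the full range of AI, from low-level foundations to perception and cognition technologies, including machine learning, data mining, computer vision, speech, natural language processing, business intelligence, and quantum computing.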


from Baidu Research brings in former University of Mississippi chancellor to join its advisory board

via KKNEWS


Text

Andre Emmerich was an exceptional art dealer. Robert Motherwell introduced Emmerich to “the small group of eccentric painters we now know as the New York Abstract Expressionist School”. During the second half of the 20th century the Emmerich Gallery was located in New York City: from 1959 in the Fuller Building at 41 East 57th Street, and in the 1970s also at 420 West Broadway in Manhattan and in Zürich, Switzerland.

The gallery displayed leading artists working in a wide variety of styles including Abstract Expressionism, Op Art, Color field painting, Hard-edge painting, Lyrical Abstraction, Minimal Art, Pop Art and Realism, among other movements. He organized important exhibitions of pre-Columbian art and wrote two acclaimed books, “Art Before Columbus” (1963) and “Sweat of the Sun and Tears of the Moon: Gold and Silver in Pre-Columbian Art” (1965), on the subject.

In addition to David Hockney and John D. Graham, the gallery represented many internationally known artists and estates, including Hans Hofmann, Morris Louis, Helen Frankenthaler, Kenneth Noland, Sam Francis, Sir Anthony Caro, Jules Olitski, Jack Bush, John Hoyland, Alexander Liberman, Al Held, Anne Ryan, Miriam Schapiro, Paul Brach, Herbert Ferber, Esteban Vicente, Friedel Dzubas, Neil Williams, Theodoros Stamos, Anne Truitt, Karel Appel, Pierre Alechinsky, Larry Poons, Larry Zox, Dan Christensen, Ronnie Landfield, Stanley Boxer, Pat Lipsky, Robert Natkin, Judy Pfaff, John Harrison Levee, William H. Bailey, Dorothea Rockburne, Nancy Graves, John McLaughlin, Ed Moses, Beverly Pepper, and Piero Dorazio, among others.

From 1982 to 1996, Emmerich ran a 150-acre sculpture park called Top Gallant in Pawling, New York, on his country estate that once was a Quaker farm. There he displayed large-scale works by, among others, Alexander Calder, Beverly Pepper, Bernar Venet, Tony Rosenthal, Isaac Witkin, Mark di Suvero and George Rickey, as well as the work of younger artists like Keith Haring. Many of the above-mentioned artists are available with different publications at www.ftn-books.com, but FTN books also has some specific Emmerich publications available.

In 1996, Sotheby’s bought the Andre Emmerich Gallery, with the aim of handling artists’ estates. One year later the Josef and Anni Albers Foundation, the main beneficiary of the Albers’ estates, did not renew its three-year contract. The gallery was eventually closed by Sotheby’s in 1998.

André Emmerich (1924-2007)


Text

Generalized compatibilism

When we speak to the “complexity” of phenomena, we perhaps give some token nod to “emergence,” or the relation between parts. But we do not so often discuss the real causes and implications of our operating inside high-entropy systems which refuse simplistic explanation.

These systems are multivariate and indeed almost infinitely variate, with a kind of “approaching limit” of null effect from the least influential factors. They are not just situated in a complex world (there is no such thing as “autonomy”); they are also constantly in flux and ambiguously bounded.

It seems obviously a mistake then to appeal to reductionism: reductionism not in the naturalist, material sense, but in the causal and dynamic sense of a system in progress. Very few systems we care about can be explained by one or two variables while also providing majority coverage of the phenomena. And yet this reductionism is constantly what we see; it may not be explicitly stated, but it is evidenced in the implicit non-compatibilism of the scholarly outlook, an outlook no doubt motivated in large part by the natural incentives of the Bourdieusian field: scarcities of recognition, scarcities of attention, scarcities of funding.

Similarly, in the public domain, we see an absolutism which refuses to cede any legitimacy to the positions of the other side: the moral standing of the fetus as human being must be flatly denied rather than simply subordinated to the rights of the bearing woman. Acknowledgment is avoided because it would oblige not just integration of the perspective but legitimation of the “other side,” in other words, requiring both bounding (compromise) and a ceding of right-to-speak—neither of which is an acceptable outcome for an interested partisan.

In other words, we not only wish to distinguish ourselves in the sense of achievement; we wish for exclusivity, because exclusivity is distinction. The achievement is merely what sets apart, or excludes. It is the vehicle, not the destination.

And yet a generalized compatibilism appears to be the best way we know to apprehend or process complexity: through recourse to the multidimensionality of phenomena. We see this in our contemporary treatment of literary “interpretations.” Each reveals another dimension implicit in the work, and while any one interpretive dimension can be challenged, there is never an expectation of mutual exclusivity.

Georgia Warnke, 1999, Legitimate Differences:

We assume that these new dimensions of the work can appear because different interpreters approach it with different experiences and concerns, view it from within different contexts, and come at it from the vantage point of different interpretive traditions. We assume that we can learn from these interpretations and, indeed, that we can learn in a distinctive sense: not in the sense that we approach the one true or real meaning of the text or work of art but rather in the sense simply that we come to understand new dimensions of its meaning and thus to understand it in an expanded way.

I emphasize “contemporary treatment” because this attitude is not natural. It is the product of a century of discourse in literary theory, much of which began with warring over the “correct” interpretation of a text, then moved on to warring over the “proper” interpretive frame/s, and has finally settled on a pluralistic indeterminacy. In other words, it is anticompatibilism and exclusivity of explanation which apparently constitute our natural positions on matters. (And here I wish I could strike through the term natural in the way of Derrida, to show the necessity and hopeless misfit of the word.)

Of much relevance here are Ken Liberman’s observations of coffee-tasting, which build on the work of Merleau-Ponty on “chiasmic intertwining.”

When you drink the coffee, descriptors give you access to dimensions of the flavor and you can communicate those dimensions to other tasters... [you can] direct someone to find what can possibly be located with the use of that descriptor... [it] is opening up the taste of the coffee but at the same time it's closing it down, because once you categorize this basket of fruit... then how do you taste, you know, vanilla that's going on at the same time... Nobody's even attuning to it. You might not even sense it.

Our descriptions both unveil and veil simultaneously. In obscuring, we illuminate. To Merleau-Ponty, Heidegger, and many others, this is a central paradox of phenomenology.

Generalized compatibilism is a view of verbal, moral, and theoretical disputes alike. (See “Linguistic Conquests” for an explanation as to the reductionism of narrow-and-conquer methods in philosophy, which is mirrored in literary theory’s disputes over proper factoring of the handle “meaning.”) I take it as an extension of what is now typically perceived as the most sophisticated understanding of the free will and determinism debate, known as compatibilism. I believe that the characteristics of that philosophical debate are shared by many ongoing debates across the humanities and inexact sciences: an appearance of irreconcilable difference which, when reconciled, presents us with greater clarity than either position on its own.

There are deep motivations for refusing to acknowledge an oppositional interpretation. But the most incisive (if not the most recognized) thinkers historically are those who manage to integrate their opposition into their own frame, who “yes and” or qualify, who can “maintain coverage” or “preserve ground” rather than reduce multidimensional reality into one-dimensional explanations.

John Shook on John Dewey’s fin-de-siècle philosophical practice:

There were Hegelian rationalisms flourishing, other kinds of idealisms, various kinds of radical naive empiricisms, all kinds of anti-Darwinian alternatives. And Dewey [...] incorporated what they were trying to say into a naturalistic framework so thoroughly that nowadays the field looks very cleaned up from our perspective.

#roy wagner#maurice merleau-ponty#john shook#kenneth liberman#georgia warnke#jacques derrida#martin heidegger


Text

Return-maximizing criticism

One thing predictive processing stresses is that our perception, at all times, is constituted by the conjunction of our environment, our sensory organs, and, crucially, our cognitive “interpretive” schema, which processes and encodes sensory input in a hierarchical fashion, higher levels predicting, top-down, the flow of sense data, bottom-up. In part, the schema contributes to the constitution of experienced reality by narrowing and stabilizing as “dominant” a few aspects of reality from many possible options, in other words, fabrication through subtraction, isolating deemed-relevant signal from deemed-irrelevant noise. But it also constitutes this reality more directly, something closer to hallucination: in the case of minor conflicts between bottom-up sense data and top-down prediction, sense data can be overwritten by the predisposed prediction. We may leave out a doubled, redundant word in a sentence (e.g. an extra “and”) or interpret a friend’s utterance in a way concordant with our broad impression of them (attribution error). This hallucination is always constrained by reality, and will frequently be corrected, as later details contradict and retroactively “rewrite” interpretation. But it adds a subjective fuzziness to the world that is always present.

This is also a core insight of the phenomenology tradition (which influenced structuralism and poststructuralism; the contention that our reality is “culturally constituted” becomes more clear, since these interpretive schemas are of course largely the products of our environments). To Husserl, and many after him such as Heidegger and Merleau-Ponty, there are the “sensual” and then the “intentive” components of sensory experience, the latter making sense of (i.e. making intelligible) the former. The intentive component both opens up (draws attention to) and closes down (filters out) reality as experience, and indeed can even “hallucinate” it.

ii.

Since it is the interaction between schema and world, narrative and reality, which determines our experience, the schema gains most influence in situations where reality is vague or hard to discern, and the same is true vice versa. Coffee tasting, as Ken Liberman describes it in More Studies in Ethnomethodology, is a high-stakes and socially constituted craft where tasters go back and forth (oscillate dialectically) between frame and experience, reconciling labels with sensory inputs (or else reconciling their own discovered descriptors with those of other tasters), testing one against the other and finding an individuated “truth” through the correspondence between the levels. One taster, for instance, might suggest a hint of cinnamon; another will then taste his cup, and either nod in agreement or contradict the first, saying that, in fact, the flavor seems closer to chocolate, in which case the first taster now returns to his cup, testing the contradiction against his (newly re-schematized) experience and remarking that, yes, it is somewhere in that range. The utterance or discovery of a label (sign) enables, by “focusing” the experience, the taster to “pick out” and experience the signified flavor from a varied, changing, and vague sensory experience: it stabilizes the instability of being, while at the same time even creating a reality, as the hierarchical prediction system will “round up” its inputs to the top-down descriptor (for instance, a taster might experience a coffee that is somewhere approximately between cinnamon and chocolate as either flavor, depending on his initial anchor). At last, as the coffee beans are rolled out to market, these descriptors will help consumers further “pick out” and simultaneously “create” an experience of chocolate.

The relevance of phenomenology and predictive processing to experiences of art should be clear. Like the taste of coffee, a literary or artistic experience is a high-dimensional, complex, vague, and polysemous occurrence. There are impossibly many things to be attended to in a 90-minute movie, and given the size and scale of discourse now, there are many traditions and conversations which the artistic choices of any given scene might be related to, and yet upon reading a critic, or dredging in one’s own long-assembled frame of previous experience made intelligible, one is able to pick out what are arguably the most salient details from the vast spread of possibles. Many people talk of reading reviews after seeing a performance, or reading a book, or watching a film, because this process helps clarify for them their own thoughts, but it is equally the case that ex-ante information (the reputation of the work among one’s social group, or the marketing campaign leading up to its release, or even the reputation of its author or genre) becomes an anchoring point-of-departure for future interpretation, even at unconscious levels. Let’s walk quickly through the process of schematic “projection” which Liberman discusses (it is more in the continental tradition than CogSci’s) and then we can return to the implications of this “priming”:

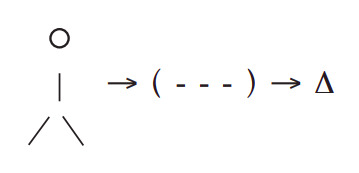

The notion of projection can be conveyed schematically by means of this diagram, which depicts a person actively projecting “→” his or her structure of understanding “(- - -)” upon an object “∆”. The structure of understanding is the lens through which she or he comes to know the object, and according to the phenomenological idea of projection this structure of understanding actively participates in organizing the object’s intelligibility:

Since the tasting is inevitably infected by what is being projected, one might consider such tasting to be unfairly prejudiced, except that no tasting exists that does not involve this structure of thinking—i.e., the projection of some sense—and so this situation might as well be considered to be ultimate. What is extraordinarily interesting is that most persons quickly forget the responsibility they have had in producing what they know. It is as if their model was something more like this, which is a very different matter:

Everything that is true of coffee tasting is equally or more true of experience in the visual arts. It is already well-known in the VizArts, where creative work is perhaps (along with poetry) at its least accessible, its most opaque and conceptual, that the role of curator “completes” the work. I would add that, in many cases, great critical treatments have similarly “completed” most historic works, providing defenses through framing which allowed audiences to suddenly see the work as glorious rather than insipid, stupid, or febrile. It is more or less uncontroversial in the field that one’s interpretive frame has an enormous role in determining the understood meaning (and subjective experience) of the work.

iii.

The pr(e)(o)mise of what I’ll term a “return-maximizing”[1] mindset of art criticism is improving outcomes, in enriching (in a biased way) rather than coldly “evaluating” (in a pretend-neutral way), and we might as well call the quality of improving outcomes “value.” Value is not experiential pleasure. Experiential pleasure (the prioritization of hedonistic consumption) is one dimension of value but far from all of it.

In chemistry, there’s a process called reagent testing. One adds a reagent (Chemical A) to a reactant (Chemical B) and watches to see if a reaction takes place. If B is an unknown chemical, and we are trying to figure out whether it’s chlorine, we must add the correct reagent. If it is, in fact, chlorine, Chemical B will turn (let’s say) bright green. If it isn’t chlorine, it might turn a host of different colors, or no color at all. If one adds the incorrect reagent (Chemical A) to the reactant chlorine, Chemical B might be perfectly good and high quality, pure chlorine, but it still won’t turn the desired bright green. Similarly, if one applies the improper values hierarchy when judging a text, it will not come back with a proper reading. The assessment will be flawed; it will yield either bad results, misleading/opposite results, or no results at all. This is a large reason why works which broke into new genres, or are now seen as highly original (“setting their own terms for evaluation”), are historically panned by critics. They did not properly “fit” into the genres or interpretive schemas which contemporaneous reviewers wished them to. Rather, the proper interpretive schema needed to be discovered to “unlock” the work.

Return-maximizing criticism would seek to make itself a reagent that transforms the original work into the prettiest turquoise blue. Much of historical debate in hermeneutics has been over what interpretive frames are “proper” or “supreme”; there is obviously, firstly, no answer to this question unless a teleological end is specified, but secondly, it is equally silly to suppose that there is “one frame to rule them all,” that all texts yield equally to the same frame, etc. Rather it seems clear that the best frame for a work is dependent on the work; it would be self-defeating to judge a detective novel on the values hierarchy of so-called literary fiction, and vice-versa. So there are many meanings, and many potential interpretive frames, and if none can claim supremacy in an inherent sense, we can make recourse to either best “fit,” or best consequence, and I would argue that these two more or less are the same thing in the case of great works: that the frame(s) which unlock them most appropriately are also those of best outcome, are “return maximizing.”

But not all works are great, indeed most aren’t. David Cooper Moore’s “The Scary, Misunderstood Power of a ‘Teen Mom’ Star’s Album” discusses Farrah Abraham’s infamous (infamously bad) pop record My Teenage Dream Ended:

It’s tempting to consider My Teenage Dream Ended alongside other reality TV star vanity albums, like Paris Hilton’s excellent (and unfairly derided) dance-pop album Paris from 2006 or projects by Heidi Montag, Brooke Hogan, and Kim Kardashian that range from uneven to inept.

But the album also begs comparisons to a different set of niche celebrities— “outsider” artists.

On the I Love Music message board, music obsessives imagined the album as outsider art in the mold of cult favorite Jandek or indie press darling Ariel Pink. Other curious listeners noted similarities to briefly trendy “witch house” music, a self-consciously lo-fi subgenre of electronic dance music. In the Village Voice, music editor Maura Johnston compared Abraham to witch-house group Salem:

“If [‘Rock Bottom’] had been serviced to certain music outlets under a different artist name and by a particularly influential publicist, you’d probably be reading bland praise of its ‘electro influences’ right now.”

Phil Freeman wrote about the album as a “brilliantly baffling and alienating” experimental work in his io9 review. Freeman hedged his references to Peaches, Laurie Anderson, and Le Tigre with a disclaimer that his loftiest claim was sarcastic: “Abraham has taken a form (the therapeutic/confessional pop song) seemingly inextricably bound by cliché and, through the imaginative use of technology, broken it free and dragged it into the future.”

Freeman and Johnston’s bits cut to the heart of it. It is the reagent applied to the reactant which determines the chemical outcome. The two work in conjunction; a work’s success is determined by what it is setting out to do, and what it is setting out to do is determined by its self-definition and self-positioning within the signification landscape.

Some in the rationalist community, like Julia Galef and Gwern, have mentioned patching plot holes as satisfying “apologetics” (aka “fanwanking”), a para-artistic practice that increases the sophistication of unsophisticated media and which more critics ought to strive toward. This isn’t necessarily wrong, but I’m wary of rationalist utilitizing of art: prior attempts have prioritized ease over complexity or rigor, have acquiesced to low and middlebrow sensibilities, have understood the maximization of good as the maximization of pleasure, and used this understanding as a defense of sloppy artistry, short-term thinking, or else an attack on the avant-garde.

iv.

In the world of literary theory, return maximization has no room, time, or patience for questions like, “What is the meaning of the text?” “What did the author intend?” or “Is it a good work?” Such an “absolute” or “eternal” grounding may be instrumentally necessary but only in a noble-lie-of-convenience kind of way. Interpretation and its angles (formal, experimental, empirical, reader-response, traditional) are means to ends in the return-maximizing framework, ways of getting value out of a literary work, ways of making a text do work. There are no truths, only instruments, echoing Harold Bloom’s “what is it good for, what can I do with it, what can it do for me, what can I make it mean?”

Caring about returns means caring about consequence. Caring about consequence requires a shunning of deontology. We move past hedgehog schools into foxy frames, and slam stereotypes against each other, hashing out the correspondences and breakages between each and the object of inquiry, approximating a circle with many tilted squares. Cartographic veracity (distinguished from something “feeling true” or “resonating” at a gut level) is important in fiction only so far as deception and falsehood can distort the world in desirable ways.

Distortive interpretation is, as an occasional practice and presence, already part of the critical landscape. Critics will ascribe more credibility and intentionality to authors than is probably “true” or “the case,” and over-patternicity results. We are as gods and might as well get good at it, etc.

Miller’s Law for Aesthetics: First accept a work is good, then figure out how and why that might be the case. In other words, generosity is a prerequisite of appreciation. Gabe Duquette can believe return maximization is the equivalent of Stockholm Syndrome all he wants: Stockholm Syndrome is the functional equivalent of love.

*

[1] I decided on return-maximizing over utilitarian because of the obvious argument that critiquing (in the sense of evaluating) bad art likely has positive effects towards a culture producing more good art in the long run. Arguments over whether a sober or bright-side approach is better for a culture in sum (or where on the spectrum between approaches is a so-called sweet spot) make for an interesting conversation but one outside the scope of this specific critical mode.

#gabe duquette#kenneth liberman#maurice merleau-ponty#martin heidegger#karl friston#andy clark#edmund husserl


Text

Mutual Modeling of Futures

I think a lot of the work that human beings are up to is basically about self-representing future states. Schelling talks about what strategy is in The Strategy of Conflict: basically, it’s mutual anticipation. Because your actions (and your future actions) are going to change the environment in which my own decisions and actions exist. That means that if I want to optimize my own future, then I need to anticipate yours.

He calls tacit bargaining a situation where two sides with some conflicting and some mutual interests are playing a variable-sum game and act on the basis of what they think the other party is going to do, which is in turn influenced by what the other party does, and so on recursively. A classic example is Rock-Paper-Scissors:

I played rock last move, so they know I know I’m not going to play rock again, because I lost last turn. So they know not to use paper. They’re going to play either rock or scissors. And then, obviously, if that’s a common pattern, I’m going to think about how you might be thinking that’s the strategy I’ll take, etc.

This is commonplace in everyday strategy games; it’s basically just predictive processing meets theory of mind.

Say Person A and Person B are walking down the sidewalk in opposite directions, approaching each other on a narrow path. They’re definitely going to run into each other soon if they keep walking forward. One strategy is to “perform obliviousness”, as Kenneth Liberman calls it. (We might also think of it as "performing dominance" or expressing a strong preference for non-adjustment.) You basically act as if you don’t know or care I’m there; you’re not changing course. If Person A is performing obliviousness, then Person B can say “You know what, I know what your path is. Let me just slip to the side and then we’re both fine, we won’t run into each other.” But as soon as you get into the more recursive modeling, e.g. Person A looks at Person B while they’re still thinking and thinks: “Wait, is Person B also performing obliviousness?” and decides to be more reactive, then you end up in this weird side-to-side shuffle. For instance, you’re ten yards apart, you’re both going back and forth horizontally, getting in each other’s way. It’s in both your interests to get out of each other’s way, but it’s in each of your interests to not move or to move the least. There are lots of reasons that might be: laziness, energy expenditure minimization, or even status games.

What this comes down to is that Person A, who’s performing obliviousness, is broadcasting a self-representation of a future state. Doing this allows other agents to organize around them, to coordinate more effectively.

A similar example is playing “Chicken”. Let’s say you’re driving towards another car, betting on who’s going to turn away first. If you throw your steering wheel out the window or spray paint over your windshield in advance, there is no way to steer or react in time, respectively. You’ve self-bound to a course of action. Your opponent no longer has a choice: if they don’t turn away, the two cars will crash. You know that your opponent is a rational agent, or at least rational enough to want to live. So you’ve won. Your opponent has to get out of the way, because they know for a fact that you won’t. It’s better for them to lose face than run into you and die. You’ve established a single Schelling point, a single outcome the system will coordinate to if no further communication or changes can take place.
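The commitment logic can be sketched as a tiny payoff table. The numbers below are illustrative assumptions, not taken from Schelling; only their ordering matters (crashing is far worse than losing face). The sketch shows that, uncommitted, the game has two stable outcomes, and that visibly binding yourself to going straight leaves your opponent exactly one sensible reply.

```python
from itertools import product

SWERVE, STRAIGHT = "swerve", "straight"
MOVES = (SWERVE, STRAIGHT)

# Hypothetical payoffs as (you, opponent); chosen only to respect the ordering
# win > both swerve > lose face > crash.
PAYOFFS = {
    (SWERVE, SWERVE): (0, 0),
    (SWERVE, STRAIGHT): (-1, 1),
    (STRAIGHT, SWERVE): (1, -1),
    (STRAIGHT, STRAIGHT): (-100, -100),
}

def pure_equilibria():
    """Outcomes where neither driver gains by changing only their own move."""
    stable = []
    for you, opp in product(MOVES, MOVES):
        you_ok = all(PAYOFFS[(you, opp)][0] >= PAYOFFS[(alt, opp)][0] for alt in MOVES)
        opp_ok = all(PAYOFFS[(you, opp)][1] >= PAYOFFS[(you, alt)][1] for alt in MOVES)
        if you_ok and opp_ok:
            stable.append((you, opp))
    return stable

def opponent_best_reply(your_committed_move):
    """The opponent's payoff-maximizing move once your move is fixed and visible."""
    return max(MOVES, key=lambda m: PAYOFFS[(your_committed_move, m)][1])

print(pure_equilibria())              # two stable outcomes without commitment
print(opponent_best_reply(STRAIGHT))  # after the steering wheel goes out the window
```

Without commitment, either driver might end up the one who swerves; throwing out the steering wheel is a way of selecting which of the two stable outcomes the system settles on.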

I create this self-representation, I broadcast it, and then we optimize around a shared future reality that I’ve created. Problems emerge because humans can represent one way and act another way. There’s nothing inherently binding about language. I think that’s really interesting: it’s what optikratics and self-deception are all about.

When you throw your steering wheel out the window, you’ve self-bound. There’s a physical limitation on what you can do now. If the other person driving towards you can see that self-binding of futures then they know you just can’t make another decision, even if it would be rational, even if it would be better for you to get out of the way at the very last second. You can’t make the decision anymore. You’ve said: I win or bust.

You’re essentially making a tradeoff: I’m going to sacrifice some still good, but not quite optimal futures, like veering away first in the game of Chicken. I’m going to throw my steering wheel out the window and sacrifice these not-bad futures, for the most optimal future: the one where I win and don’t die. You can put all your eggs in one basket, put all your resources into achieving the optimal outcome. But problems emerge: you can represent things one way, but act in another. Consider the theatrical effect of throwing out a dummy steering wheel and making sure the real one wasn’t visible through a heavily tinted windshield.

Schelling talks about how different it would be if you had a society where you could linguistically invoke an oath, e.g. to God. Imagine a society full of perfectly rational, hell-fearing individuals. Invoking an oath to God is perfectly binding because nothing is worse than an eternity of damnation. In such a society there’d be no such thing as bargaining. I’d say “I swear to God, I won’t pay more than $16k for this car.” Now the salesman can take it or leave it. Normally, there’s a whole range. Let’s say the car costs the salesman $12k wholesale. Let’s say it’s worth $20k to me. So any sale between $12k and $20k is going to be mutually beneficial, kind of like getting out of the way in Chicken. It’s a positive-sum game, so it’s better to negotiate than stalemate. A stalemate here would mean the seller doesn’t make any money and I don’t get a car.
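The arithmetic of that range can be made explicit with a minimal sketch. The function names are hypothetical; the dollar figures are the ones from the example ($12k wholesale cost, $20k value to the buyer, a sworn cap of $16k).

```python
def bargaining_zone(seller_cost, buyer_value):
    """Return the (low, high) price range where both sides gain, or None if there is none."""
    if buyer_value <= seller_cost:
        return None
    return (seller_cost, buyer_value)

def surpluses(price, seller_cost, buyer_value):
    """How much each side gains from a sale at this price, relative to no deal."""
    return {"seller": price - seller_cost, "buyer": buyer_value - price}

zone = bargaining_zone(12_000, 20_000)
print(zone)                               # (12000, 20000)
print(surpluses(16_000, 12_000, 20_000))  # {'seller': 4000, 'buyer': 4000}
print(surpluses(19_000, 12_000, 20_000))  # {'seller': 7000, 'buyer': 1000}
```

Every price inside the zone splits the same $8k of total surplus; the price only decides who gets how much of it, which is why a credible cap is worth so much to whoever can establish one.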

Schelling talks about how conflict is not just about conflicting interests: the parties have a whole base of mutual interests and then some conflicting interests. Other than wars of total annihilation, there’s no such thing as pure conflict. In this case it’s clear: the seller wants money and the buyer (me) wants a car. For anything between $12k and $20k there’s mutual interest, but the settling point of the negotiation has a lot to do with bargaining power. If one party considers the other rational, then they have to somehow convince the other party that they won’t go lower or higher. If the seller thinks they’re dealing with a rational buyer, whose interest it is to buy at $12k, then the seller has to somehow convince the buyer that he won’t go that low. The buyer has to show that he won’t buy at $20k even though it’s still in his interests, in order to get the best deal possible. In essence, you’re approximating the swear-on-God technology that would allow you to demonstrate you won’t buy at a given price.

This makes me think about blockchain, because blockchain smart contracts are a form of self-binding. Without blockchain, though, I can still go to a third party and say “Hey, if I pay more than $16k for the car, then I’m going to owe you $10k,” and sign a formal legal document to this effect. I bring a copy of my little legal agreement to the car seller, with the proper documentation to confirm its legal authority. Now the seller has to basically take $16k or leave it. (Note that we’re assuming there aren’t any other buyers in this ideal situation.) Basically, the seller is going to take the $16k unless he’s got more than $16k worth of spite, because he has perfect evidence that I’m not going to go higher than that. (If he’s got more than $16k worth of spite he’ll tell me to fuck off with my fancy third-party leveraging strategies.)
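A minimal sketch of why the side contract binds, using the figures from the example (a car worth $20k to the buyer, a $16k cap, a $10k penalty) and assuming the buyer simply compares each price against walking away with nothing. The function names are hypothetical.

```python
def buyer_surplus(price, buyer_value=20_000, cap=16_000, penalty=10_000):
    """Buyer's net gain at a given price once the penalty contract is signed."""
    surplus = buyer_value - price
    if price > cap:
        surplus -= penalty  # the third party collects if the cap is exceeded
    return surplus

def buyer_accepts(price, **contract):
    """A rational buyer accepts only if the deal beats walking away (surplus of 0)."""
    return buyer_surplus(price, **contract) >= 0

print(buyer_surplus(16_000))             # 4000
print(buyer_surplus(17_000))             # -7000
print(buyer_accepts(17_000))             # False
print(buyer_accepts(17_000, penalty=0))  # True: without the contract, $17k is still a good deal
```

The contract works by destroying the buyer's own otherwise-acceptable options above $16k, and it only has force if the seller can verify that it exists.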

I think a lot of human behavior comes down to this self-legibilizing process. The way you dress, the way you behave, the patterns of conversation you engage in all assert this. We think of manipulation as distorting someone’s priors so they’ll act a certain way. You could also distort their values, but values are harder to budge than priors in general. Values often exist independent of facts and are usually ingrained. It’s much easier to say “You thought these guys were the good guys, but they screwed you over, and I’m going to exploit your already-existing belief in Justice.” The general background notion of justice we often lay claim to is likely just biological priors that were selected for certain kinds of cooperation.

A lot of manipulation happens by distorting someone’s impression of you. An impression is just a sense of how someone is going to behave. If you have a sense of how someone else is going to behave, then you can say, “You know what, I can behave this way, which has consequence X for the other party, because I know they’re not going to get too mad at me. Or they’re not going to burn the bridge. Or they’re going to be fair about it if I present them with this problem.” For example, if you know how mad a landlord will get with you for breaking a certain rule, that influences whether (or how often) you might choose to break it.

We constantly want to do things that rely on predicting the behaviors of others. I want to know that you’re going to wire me back this money, because you really are an exotic prince who just happens to be in a really bad situation. That’s a dramatic example, but the world is full of examples where manipulation matters: our sidewalk example from earlier, negotiating salaries, or even micro-behaviors like doing the dishes. You do the dishes because you think it’ll strain the relationship between you and your roommate if you don’t. If your roommate gets upset regularly then their actions create a model in your head of how they’re going to act that can be strategically deployed. Knowing that in the future your roommate is going to get mad if you don’t do the dishes means it’s now in your interest to do the dishes, or that you can use them as payback if revenge is what you’re after.

Human beings communicate & coordinate by self-legibilizing. The self-representation of properties that are predictive of the future is key to stabilizing this dynamic (and often recursive) mutual-modeling process, which is the essence of social dynamics.
