#lightweight kubernetes distributions

Microk8s vs k3s: Lightweight Kubernetes distribution showdown

If you run Kubernetes in your home lab, you will likely want a lightweight Kubernetes distribution. Two that stand out are MicroK8s and k3s. Let's compare MicroK8s vs k3s and look at the main differences between them, focusing on memory usage, high availability, and compatibility.

Best Backend Frameworks for Web Development 2025: The Future of Scalable and Secure Web Applications

The backend is the backbone of any web application: it handles data processing, authentication, server-side logic, and integrations. As demand for high-performance applications grows, choosing the right backend framework becomes critical for developers and businesses alike. The best backend frameworks for web development in 2025 focus on scalability, security, and efficiency.

To build powerful and efficient backend systems, developers also rely on various backend development tools and technologies that streamline development workflows, improve database management, and enhance API integrations.

This article explores the top backend frameworks in 2025, their advantages, and the essential tools that power modern backend development.

1. Why Choosing the Right Backend Framework Matters

A backend framework provides the foundation for server-side functionality, including:

Database Management – Handling data storage and retrieval efficiently.

Security Features – Implementing authentication, authorization, and encryption.

Scalability – Ensuring the system can handle growing user demands.

API Integrations – Connecting frontend applications and external services.

With so many options available, the framework you select can determine how efficiently an application performs. Let's explore the backend frameworks that dominate the industry in 2025.

2. Best Backend Frameworks for Web Development 2025

a) Node.js (Express.js & NestJS) – The JavaScript Powerhouse

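Node.js is a JavaScript runtime rather than a framework, but paired with frameworks such as Express.js and NestJS it remains one of the most popular backend choices thanks to its non-blocking, event-driven architecture. It enables fast, scalable web applications and is especially well suited to real-time apps.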

Why Choose Node.js in 2025?

Asynchronous Processing: A single-threaded event loop handles many requests concurrently, so one request waiting on I/O does not block the others.

Rich Ecosystem: A vast library of npm packages for rapid development.

Microservices Support: Works well with microservice and serverless architectures.
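
To make "non-blocking" concrete, the sketch below reproduces the event-loop idea with Python's asyncio rather than Node.js itself (Python is used here only so all examples share one language). handle_request and the 0.1-second delay are hypothetical stand-ins for real I/O such as a database call.

```python
import asyncio
import time
from typing import List

async def handle_request(request_id: int, io_delay: float) -> str:
    # Simulated non-blocking I/O (e.g. a database call). While this coroutine
    # awaits, the event loop is free to run other handlers.
    await asyncio.sleep(io_delay)
    return f"response-{request_id}"

async def serve_all(n: int, io_delay: float) -> List[str]:
    # Start every handler at once and wait for all of them together.
    return await asyncio.gather(*(handle_request(i, io_delay) for i in range(n)))

if __name__ == "__main__":
    start = time.perf_counter()
    responses = asyncio.run(serve_all(10, 0.1))
    elapsed = time.perf_counter() - start
    # The ten 0.1 s waits overlap, so elapsed is close to 0.1 s, not 1 s.
    print(len(responses), f"{elapsed:.2f}s")
```

Because the waits overlap, total time stays close to a single wait. Node.js gets the same effect from its own event loop with callbacks or promises.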

Best Use Cases

Real-time applications (Chat apps, Streaming platforms).

RESTful and GraphQL APIs.

Single Page Applications (SPAs).

Two popular Node.js frameworks:

Express.js – Minimalist and lightweight, perfect for API development.

NestJS – A modular and scalable framework built on TypeScript for enterprise applications.

b) Django – The Secure Python Framework

Django, a high-level Python framework, remains a top choice for developers focusing on security and rapid development. It follows the "batteries-included" philosophy, providing built-in features for authentication, security, and database management.

Why Choose Django in 2025?

Strong Security Features: Built-in protection against SQL injection and XSS attacks.

Fast Development: Auto-generated admin panels and ORM make development quicker.

Scalability: Optimized for handling high-traffic applications.
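
Django's ORM guards against SQL injection by sending user input as query parameters instead of splicing it into the SQL text. The sketch below shows that principle with Python's built-in sqlite3 module, not with Django itself. The users table and its rows are hypothetical.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    # Hypothetical in-memory table used only for this demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Vulnerable: the input becomes part of the SQL statement itself.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str):
    # Safe: the driver binds `name` as a value, so it is never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

if __name__ == "__main__":
    conn = make_db()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # the injected OR clause matches every row
    print(find_user_safe(conn, attack))    # treated as a literal name: no match
```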

Best Use Cases

E-commerce websites.

Data-driven applications.

Machine learning and AI-powered platforms.

c) Spring Boot – The Java Enterprise Solution

Spring Boot continues to be a dominant framework for enterprise-level applications, offering a robust, feature-rich environment with seamless database connectivity and cloud integrations.

Why Choose Spring Boot in 2025?

Microservices Support: Ideal for distributed systems and large-scale applications.

High Performance: Optimized for cloud-native development.

Security & Reliability: Integrates with Spring Security for authentication, authorization, and encryption.

Best Use Cases

Enterprise applications and banking software.

Large-scale microservices architecture.

Cloud-based applications with Kubernetes and Docker.

d) Laravel – The PHP Framework That Keeps Evolving

Laravel remains one of the most widely used PHP frameworks in 2025. Its expressive syntax, security features, and ecosystem make it a good fit for web applications of all sizes.

Why Choose Laravel in 2025?

Eloquent ORM: Simplifies database interactions.

Blade Templating Engine: Enhances frontend-backend integration.

Robust Security: Protects against common web threats.

Best Use Cases

CMS platforms and e-commerce websites.

SaaS applications.

Backend for mobile applications.

e) FastAPI – The Rising Star for High-Performance APIs

FastAPI is a modern, high-performance Python framework designed for building APIs. It has gained massive popularity due to its speed and ease of use.

Why Choose FastAPI in 2025?

Asynchronous Support: Native async/await lets it serve many concurrent I/O-bound requests efficiently.

Data Validation: Request validation driven by Python type hints, powered by Pydantic.

Automatic Documentation: Generates an OpenAPI schema and serves interactive documentation through Swagger UI.
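
FastAPI delegates validation to Pydantic models. As a rough, dependency-free illustration of how type hints can drive validation, the sketch below checks a JSON-like dict against a dataclass. CreateItem and validate are hypothetical names, and the logic is far simpler than Pydantic's.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict, List, get_type_hints

@dataclass
class CreateItem:
    # Hypothetical request body; each annotation doubles as a validation rule.
    name: str
    price: float
    quantity: int

def validate(model: type, payload: Dict[str, Any]):
    """Build `model` from a JSON-like dict, collecting every type error."""
    hints = get_type_hints(model)
    errors: List[str] = []
    values: Dict[str, Any] = {}
    for f in fields(model):
        if f.name not in payload:
            errors.append(f"{f.name}: field required")
            continue
        value, expected = payload[f.name], hints[f.name]
        if isinstance(value, bool) and expected is not bool:
            # bool is a subclass of int, so reject it explicitly.
            errors.append(f"{f.name}: expected {expected.__name__}")
            continue
        if expected is float and isinstance(value, int):
            value = float(value)  # JSON has one number type, so accept 2 for 2.0
        if isinstance(value, expected):
            values[f.name] = value
        else:
            errors.append(f"{f.name}: expected {expected.__name__}")
    if errors:
        raise ValueError(errors)
    return model(**values)
```

A bad payload raises ValueError listing every problem at once, loosely similar to how FastAPI reports all validation errors in a single response.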

Best Use Cases

Machine learning and AI-driven applications.

Data-intensive backend services.

Microservices and serverless APIs.

3. Essential Backend Development Tools and Technologies

To build scalable and efficient backend systems, developers rely on various backend development tools and technologies. Here are some must-have tools:

a) Database Management Tools

PostgreSQL – A powerful relational database system for complex queries.

MongoDB – A NoSQL database ideal for handling large volumes of unstructured data.

Redis – A high-speed in-memory database for caching.
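
Redis is often used with the cache-aside pattern: read from the cache, fall back to the database on a miss, then store the result with an expiry. In the sketch below a plain dictionary stands in for Redis so the example stays self-contained. get_product and load_from_db are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

class TTLCache:
    """In-process stand-in for Redis: each value expires `ttl` seconds after it is set."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # lazily evict expired entries on read
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

def get_product(cache: TTLCache, product_id: str, load_from_db: Callable[[str], Any]) -> Any:
    # Cache-aside: try the cache, fall back to the database on a miss,
    # then populate the cache so the next read is served from memory.
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(product_id, value)
    return value
```

With a real Redis client, the `set` step would typically attach the expiry on the server side (the `EX` option of Redis's `SET` command) instead of tracking it in Python.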

b) API Development and Testing Tools

Postman – Simplifies API development and testing.

Swagger/OpenAPI – Generates interactive API documentation.

c) Containerization and DevOps Tools

Docker – Enables containerized applications for easy deployment.

Kubernetes – Automates deployment and scaling of backend services.

Jenkins – A CI/CD tool for continuous integration and automation.

d) Authentication and Security Tools

OAuth 2.0 / JWT – Delegated authorization (OAuth 2.0) and signed, self-contained tokens (JWT) for securing APIs.

Keycloak – Identity and access management.

OWASP ZAP – Security testing tool for identifying vulnerabilities.
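
To show what a signed, self-contained token looks like in practice, here is a minimal HS256 JWT signer and verifier built only from the Python standard library. It works under simplifying assumptions (a shared secret, with only the `exp` claim checked), and the secret used in testing is hypothetical. A real deployment would rely on a maintained JWT library and an identity provider such as Keycloak.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

def _b64url(data: bytes) -> str:
    # JWTs use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))

def sign_hs256(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode()) for part in (header, claims)
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(sig)}"

def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never trust the header's alg blindly
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```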

e) Performance Monitoring and Logging Tools

Prometheus & Grafana – Real-time monitoring and alerting.

Elasticsearch, Logstash & Kibana (ELK Stack) – Centralized logging, search, and analytics.

These tools and technologies help developers streamline backend processes, enhance security, and optimize performance.

4. Future Trends in Backend Development

Backend development continues to evolve. Here are some key trends for 2025:

Serverless Computing – Services such as AWS Lambda, Google Cloud Functions, and Azure Functions let developers build scalable, cost-efficient backends without managing servers.

AI-Powered Backend Optimization – AI-driven database queries and performance monitoring are enhancing efficiency.

GraphQL Adoption – More applications are shifting from REST APIs to GraphQL for flexible data fetching.

Edge Computing – Backend processing is moving closer to the user, reducing latency and improving speed.

Selecting the right backend framework is crucial for building modern, scalable, and secure web applications. The leading options for 2025, including Node.js, Django, Spring Boot, Laravel, and FastAPI, each offer distinct advantages suited to different project needs.

Pairing these frameworks with cutting-edge backend development tools and technologies ensures optimized performance, security, and seamless API interactions. As web applications continue to evolve, backend development will play a vital role in delivering fast, secure, and efficient digital experiences.

Service Mesh with Istio and Linkerd: A Practical Overview

As microservices architectures continue to dominate modern application development, managing service-to-service communication has become increasingly complex. Service meshes have emerged as a solution to address these complexities — offering enhanced security, observability, and traffic management between services.

Two of the most popular service mesh solutions today are Istio and Linkerd. In this blog post, we'll explore what a service mesh is, why it's important, and how Istio and Linkerd compare in real-world use cases.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer that controls communication between services in a distributed application. Instead of hardcoding service-to-service communication logic (like retries, failovers, and security policies) into your application code, a service mesh handles these concerns externally.

Key features typically provided by a service mesh include:

Traffic management: Fine-grained control over service traffic (routing, load balancing, fault injection)

Observability: Metrics, logs, and traces that give insights into service behavior

Security: Encryption, authentication, and authorization between services (often using mutual TLS)

Reliability: Retries, timeouts, and circuit breaking to improve service resilience
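
A service mesh configures these reliability policies in its proxies rather than in application code, but the underlying logic is easiest to see on its own. The sketch below is a generic circuit breaker, not Istio's or Linkerd's implementation. max_failures and reset_after are hypothetical parameter names.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected without contacting the failing service."""

class CircuitBreaker:
    """Opens after `max_failures` consecutive errors, rejects calls for
    `reset_after` seconds, then lets a single trial call through."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Half-open: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Failing fast while the circuit is open keeps callers from piling up timeouts against a service that is already down.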

Why Do You Need a Service Mesh?

As applications grow more complex, maintaining reliable and secure communication between services becomes critical. A service mesh abstracts this complexity, allowing teams to:

Deploy features faster without worrying about cross-service communication challenges

Increase application reliability and uptime

Gain full visibility into service behavior without modifying application code

Enforce security policies consistently across the environment

Introducing Istio

Istio is one of the most feature-rich service meshes available today. Originally developed by Google, IBM, and Lyft, Istio offers deep integration with Kubernetes but can also support hybrid cloud environments.

Key Features of Istio:

Advanced traffic management: Canary deployments, A/B testing, traffic shifting

Comprehensive security: Mutual TLS, policy enforcement, and RBAC (Role-Based Access Control)

Extensive observability: Integrates with Prometheus, Grafana, Jaeger, and Kiali for metrics and tracing

Extensibility: Supports custom plugins through WebAssembly (Wasm)

Ingress/Egress gateways: Manage inbound and outbound traffic effectively
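
Istio defines canary releases and traffic shifting declaratively, as weighted routes in its configuration. The Python below only illustrates the routing decision behind a 90/10 split. The version names are hypothetical. Hashing the user ID so each user stays on one version is an assumption suited to A/B tests, not a description of how Istio's proxies choose.

```python
import hashlib
from typing import Dict

def pick_version(user_id: str, routes: Dict[str, int]) -> str:
    """Choose a backend version for `user_id` from integer percentage weights."""
    if sum(routes.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Deterministic bucket 0-99: the same user always lands in the same bucket.
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    cumulative = 0
    for version, weight in routes.items():
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable when weights sum to 100")
```

Shifting traffic then means editing only the weights, for example from {"v1": 90, "v2": 10} to {"v1": 50, "v2": 50}, without touching either service.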

Pros of Istio:

Rich feature set suitable for complex enterprise use cases

Strong integration with Kubernetes and cloud-native ecosystems

Active community and broad industry adoption

Cons of Istio:

Can be resource-heavy and complex to set up and manage

Steeper learning curve compared to lighter service meshes

Introducing Linkerd

Linkerd is often considered the original service mesh and is known for its simplicity, performance, and focus on the core essentials.

Key Features of Linkerd:

Lightweight and fast: Designed to be resource-efficient

Simple setup: Easy to install, configure, and operate

Security-first: Automatic mutual TLS between services

Observability out of the box: Includes metrics, tap (live traffic inspection), and dashboards

Kubernetes-native: Deeply integrated with Kubernetes

Pros of Linkerd:

Minimal operational complexity

Lower resource usage

Easier learning curve for teams starting with service mesh

High performance and low latency

Cons of Linkerd:

Fewer advanced traffic management features compared to Istio

Less customizable for complex use cases

Choosing the Right Service Mesh

Choosing between Istio and Linkerd largely depends on your needs:

Choose Istio if you require advanced traffic management, complex security policies, and extensive customization — typically in larger, enterprise-grade environments.

Choose Linkerd if you value simplicity, low overhead, and rapid deployment — especially in smaller teams or organizations where ease of use is critical.

Ultimately, both Istio and Linkerd are excellent choices — it’s about finding the best fit for your application landscape and operational capabilities.

Final Thoughts

Service meshes are no longer just "nice to have" for microservices — they are increasingly a necessity for ensuring resilience, security, and observability at scale. Whether you pick Istio for its powerful feature set or Linkerd for its lightweight design, implementing a service mesh can greatly enhance your service architecture.

Stay tuned — in upcoming posts, we'll dive deeper into setting up Istio and Linkerd with hands-on labs and real-world use cases!

For more details www.hawkstack.com

Cloud Microservice Market Growth Driven by Demand for Scalable and Agile Application Development Platforms

The Cloud Microservice Market: Accelerating Innovation in a Modular World

The global push toward digital transformation has redefined how businesses design, build, and deploy applications. One of the most impactful recent trends is the rapid adoption of cloud microservices, a modular approach to application development that offers speed, scalability, and resilience. As enterprises strive to meet growing demand for agility and performance, the cloud microservice market is gaining significant momentum and reshaping the software development landscape.

What Are Cloud Microservices?

At its core, a microservice architecture breaks down a monolithic application into smaller, loosely coupled, independently deployable services. Each microservice addresses a specific business capability, such as user authentication, payment processing, or inventory management. By leveraging the cloud, these services can scale independently, be deployed across multiple geographic regions, and integrate seamlessly with various platforms.

Cloud microservices differ from traditional service-oriented architectures (SOA) by emphasizing decentralization, lightweight communication (typically via REST or gRPC), and DevOps-driven automation.

Market Growth and Dynamics

The cloud microservice market is witnessing robust growth. According to recent research, the global market size was valued at over USD 1 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2030. This surge is driven by several interlocking trends:

Cloud-First Strategies: As more organizations migrate workloads to public, private, and hybrid cloud environments, microservices provide a flexible architecture that aligns with distributed infrastructure.

DevOps and CI/CD Adoption: The increasing use of continuous integration and continuous deployment pipelines has made microservices more attractive. They fit naturally into agile development cycles and allow for faster iteration and delivery.

Containerization and Orchestration Tools: Technologies like Docker and Kubernetes have become instrumental in managing and scaling microservices in the cloud. These tools offer consistency across environments and automate deployment, networking, and scaling of services.

Edge Computing and IoT Integration: As edge devices proliferate, there is a growing need for lightweight, scalable services that can run closer to the user. Microservices can be deployed to edge nodes and communicate with centralized cloud services, enhancing performance and reliability.

Key Industry Players

Several technology giants and cloud providers are investing heavily in microservice architectures:

Amazon Web Services (AWS) offers a suite of tools like AWS Lambda, ECS, and App Mesh that support serverless and container-based microservices.

Microsoft Azure provides Azure Kubernetes Service (AKS) and Azure Functions for scalable and event-driven applications.

Google Cloud Platform (GCP) leverages Anthos and Cloud Run to help developers manage hybrid and multicloud microservice deployments.

Beyond the big three, companies like Red Hat, IBM, and VMware are also influencing the microservice ecosystem through open-source platforms and enterprise-grade orchestration tools.

Challenges and Considerations

While the benefits of cloud microservices are significant, the architecture is not without challenges:

Complexity in Management: Managing hundreds or even thousands of microservices requires robust monitoring, logging, and service discovery mechanisms.

Security Concerns: Each service represents a potential attack vector, requiring strong identity, access control, and encryption practices.

Data Consistency: Maintaining consistency and integrity across distributed systems is a persistent concern, particularly in real-time applications.

Organizations must weigh these complexities against their business needs and invest in the right tools and expertise to successfully navigate the microservice journey.

The Road Ahead

As digital experiences become more demanding and users expect seamless, responsive applications, microservices will continue to play a pivotal role in enabling scalable, fault-tolerant systems. Emerging trends such as AI-driven observability, service mesh architecture, and no-code/low-code microservice platforms are poised to further simplify and enhance the development and management process.

In conclusion, the cloud microservice market is not just a technological shift; it's a foundational change in how software is conceptualized and delivered. For businesses aiming to stay competitive, embracing microservices in the cloud is no longer optional: it's a strategic imperative.

Microservices Programming

Microservices architecture is revolutionizing the way modern software is built. Instead of a single monolithic application, microservices break down functionality into small, independent services that communicate over a network. This approach brings flexibility, scalability, and easier maintenance. In this post, we’ll explore the core concepts of microservices and how to start programming with them.

What Are Microservices?

Microservices are a software development technique where an application is composed of loosely coupled, independently deployable services. Each service focuses on a specific business capability and communicates with others through lightweight APIs, usually over HTTP or messaging queues.

Why Use Microservices?

Scalability: Scale services independently based on load.

Flexibility: Use different languages or technologies for different services.

Faster Development: Small teams can build, test, and deploy services independently.

Resilience: A failure in one service need not crash the entire system.

Better Maintainability: Easier to manage, update, and test smaller codebases.

Key Components of Microservices Architecture

Services: Individual, self-contained units with specific functionality.

API Gateway: Central access point that routes requests to appropriate services.

Service Discovery: Automatically locates services within the system (e.g., Eureka, Consul).

Load Balancing: Distributes incoming traffic across instances (e.g., Nginx, HAProxy).

Containerization: Deploy services in isolated environments (e.g., Docker, Kubernetes).

Messaging Systems: Allow asynchronous communication (e.g., RabbitMQ, Apache Kafka).
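
The API gateway, service discovery, and load-balancing components listed above fit together roughly as in the toy in-memory sketch below. It is not how Eureka, Consul, Nginx, or HAProxy are implemented, and the service names and addresses are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List

class ServiceRegistry:
    """Toy service discovery: maps service names to registered instance addresses."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cursors: Dict[str, Iterator[str]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)
        # Rebuild the round-robin cursor whenever membership changes.
        self._cursors[service] = itertools.cycle(list(self._instances[service]))

    def next_instance(self, service: str) -> str:
        if service not in self._cursors:
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cursors[service])  # round-robin load balancing

class ApiGateway:
    """Single entry point: routes a path to a service by longest matching prefix."""

    def __init__(self, registry: ServiceRegistry, routes: Dict[str, str]) -> None:
        self.registry = registry
        self.routes = routes  # e.g. {"/users": "user-service"}

    def resolve(self, path: str) -> str:
        matches = [p for p in self.routes if path == p or path.startswith(p + "/")]
        if not matches:
            raise LookupError(f"no route for {path}")
        service = self.routes[max(matches, key=len)]
        return self.registry.next_instance(service) + path
```

Successive requests to /users/7 alternate between the two user-service instances, while clients only ever see the gateway.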

Popular Tools and Frameworks

Spring Boot + Spring Cloud (Java): Full-stack support for microservices.

Express.js (Node.js): Lightweight framework for building RESTful services.

FastAPI (Python): High-performance framework ideal for microservices.

Docker: Container platform for packaging and running services.

Kubernetes: Orchestrates and manages containerized microservices.

Example: A Simple Microservices Architecture

User Service: Manages user registration and authentication.

Product Service: Handles product listings and inventory.

Order Service: Manages order placement and status.

Each service runs on its own server or container, communicates through REST APIs, and has its own database to avoid tight coupling.

Best Practices for Microservices Programming

Keep services small and focused on a single responsibility.

Use versioned APIs to ensure backward compatibility.

Centralize logging and monitoring using tools like ELK Stack or Prometheus + Grafana.

Secure your APIs using tokens (JWT, OAuth2).

Automate deployments and CI/CD pipelines with tools like Jenkins, GitHub Actions, or GitLab CI.

Avoid shared databases between services — use event-driven architecture for coordination.
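
The in-process sketch below shows coordination through events instead of a shared database. The order service owns its order records and publishes an OrderPlaced event, and the product service updates its own stock in response. EventBus is a hypothetical stand-in for a broker such as RabbitMQ or Kafka. Unlike a real broker, it delivers events synchronously.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List

@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    product_id: str
    quantity: int

class EventBus:
    """Hypothetical in-process broker: delivers each event to its subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[type, List[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event) -> None:
        for handler in self._subscribers[type(event)]:
            handler(event)

class OrderService:
    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.orders: Dict[str, str] = {}  # owned only by the order service

    def place_order(self, order_id: str, product_id: str, quantity: int) -> None:
        self.orders[order_id] = "PLACED"
        self.bus.publish(OrderPlaced(order_id, product_id, quantity))

class ProductService:
    def __init__(self, bus: EventBus, stock: Dict[str, int]) -> None:
        self.stock = stock  # owned only by the product service
        bus.subscribe(OrderPlaced, self.on_order_placed)

    def on_order_placed(self, event: OrderPlaced) -> None:
        self.stock[event.product_id] -= event.quantity
```

Neither service reads the other's data. The event is the only contract between them.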

Challenges in Microservices

Managing communication and data consistency across services.

Increased complexity in deployment and monitoring.

Ensuring security between service endpoints.

Conclusion

Microservices programming is a powerful approach to building modern, scalable applications. While it introduces architectural complexity, the benefits in flexibility, deployment, and team autonomy make it an ideal choice for many large-scale projects. With the right tools and design patterns, you can unlock the full potential of microservices for your applications.

Understanding Microservices - A Comprehensive Guide for Beginners

In recent years, microservices have become a popular way to build software, changing how applications are built and managed. The core idea is to split a software program into smaller, self-contained pieces, each focused on a specific business function. This modularity sets microservices apart from conventional monolithic designs, which tightly couple all components. Because each service works autonomously, microservices offer a more flexible and scalable way to build applications that evolve over time.

One of the primary benefits of microservices is the ability to scale individual services based on demand. Instead of scaling the entire application, as necessary in a monolithic system, you can scale specific microservices experiencing high traffic. This selective scalability leads to more efficient resource utilization, ensuring that only the required components consume additional computational power. As a result, you achieve better performance and cost savings in operational environments, particularly in cloud-based systems.

Another hallmark of microservices is their support for technological diversity. Unlike monolithic architectures that often impose a uniform set of tools and languages, microservices empower developers to choose the most appropriate technologies for each service. For instance, developers might write one service in Python while using Java for another, depending on which language best suits the tasks. This approach not only boosts the efficiency of each service but also encourages innovation, as teams are free to experiment with new frameworks and tools tailored to their needs.

The independence of microservices also enhances fault tolerance within applications. Since each service operates in isolation, a failure in one part of the system does not necessarily cascade to others. For example, if a payment processing service goes offline, other application parts, such as user authentication or browsing, can continue functioning. This isolation minimizes downtime and simplifies identifying and resolving issues, contributing to system reliability.

Microservices naturally align with modern agile development practices. In a microservices architecture, development teams can work simultaneously on different services without interfering with one another. This concurrent workflow accelerates the development lifecycle, enabling faster iteration and deployment cycles. Moreover, microservices support continuous integration and delivery (CI/CD), further streamlining updates and allowing teams to respond more quickly to user feedback or market changes.

However, microservices are not without challenges. Communication between services often becomes complex, because each service must interact with others to complete end-to-end business processes. This calls for robust communication mechanisms, typically APIs or message queues. Ensuring data consistency is another critical concern, particularly when each service maintains its own database. Strategies such as eventual consistency and distributed transactions are sometimes employed, but they add complexity to the system design.
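
One widely used way to reach eventual consistency without a distributed transaction is the saga pattern. Each service commits a local step, and if a later step fails, the earlier steps are undone with compensating actions. The sketch below is a minimal orchestrated version. The step names are hypothetical, and it does not handle a compensation that itself fails.

```python
from typing import Callable, List, Tuple

# (name, action, compensation): each action is one service's local transaction.
Step = Tuple[str, Callable[[], None], Callable[[], None]]

def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    log: List[str] = []
    completed: List[Step] = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception as exc:
            log.append(f"failed {name}: {exc}")
            for done_name, _, undo in reversed(completed):
                undo()
                log.append(f"compensated {done_name}")
            return log
        completed.append((name, action, compensate))
        log.append(f"done {name}")
    return log
```

For example, if reserving stock succeeds but charging the card fails, the reservation is released, so the system ends up consistent again even though it was briefly inconsistent.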

The advent of containerization technologies, such as Docker and Kubernetes, has made implementing microservices more accessible. Containers provide a lightweight and consistent environment for deploying individual services, regardless of the underlying infrastructure. With cloud platforms like AWS, Azure, or Google Cloud, organizations can leverage these tools to build scalable and resilient applications. This synergy between microservices and modern infrastructure tools has driven their adoption across industries.

Despite their advantages, microservices may not always be the optimal choice. For smaller applications with limited complexity, the overhead of managing multiple independent services can become burdensome. Monolithic architectures may serve as a more straightforward and more cost-effective solution in such cases. Evaluating your application's specific needs and long-term goals is essential before committing to a microservices-based approach.

DevOps for Edge Computing: Challenges and Opportunities

Edge computing is rapidly reshaping how organizations process and analyze data by bringing computation closer to the data source. This paradigm shift enhances speed, reduces latency, and supports applications requiring real-time decision-making, such as autonomous vehicles and IoT devices. However, the distributed nature of edge computing introduces unique challenges for DevOps practices, making it critical to adopt strategies to ensure efficiency, scalability, and reliability.

The Unique Landscape of Edge Computing

Edge computing deploys workloads across distributed locations with limited resources, enabling faster processing and reduced bandwidth usage. However, this decentralized setup adds complexity to deployment and management. Unlike centralized cloud servers, edge environments require tailored configurations for each node, emphasizing the need for robust automation to streamline processes and ensure efficiency.

Key Challenges in Implementing DevOps for Edge Computing

Fragmented Infrastructure: Edge computing environments often involve a diverse array of hardware and software platforms. Ensuring compatibility and standardization across these disparate systems is a significant challenge for DevOps teams.

Limited Resources: Unlike cloud servers, edge nodes have restricted computational power, storage, and bandwidth. DevOps practices must account for these limitations, optimizing deployments to ensure smooth operation without overloading resources.

Security Risks: With a larger attack surface due to the distributed nature of edge environments, securing deployments becomes critical. Vulnerabilities in one node can compromise the entire network, necessitating advanced security protocols and continuous monitoring.

Monitoring and Observability: Real-time insights into edge nodes are essential for maintaining performance and detecting issues. However, collecting and analyzing telemetry data from multiple locations without centralized access poses a unique challenge.

Opportunities to Leverage DevOps in Edge Computing

Despite these challenges, the integration of DevOps into edge computing presents exciting opportunities to drive innovation and efficiency:

Automation at Scale: DevOps tools like Ansible and Terraform can automate configuration management and deployment, streamlining processes across multiple edge nodes.

Containerization and Orchestration: Lightweight containers, such as those managed by Kubernetes, are ideal for edge environments. They allow applications to run consistently across diverse hardware while enabling efficient resource usage. Kubernetes distributions like K3s are tailored for edge computing, further simplifying orchestration.

Improved Resilience: Continuous integration and continuous delivery (CI/CD) pipelines in edge computing can ensure frequent updates without service interruptions. Canary deployments and blue-green strategies help validate changes in smaller environments before system-wide implementation.

Enhanced Data Processing: By integrating AI-driven DevOps tools, edge computing environments can benefit from predictive analytics and automated incident resolution. These capabilities enhance system reliability and reduce downtime.
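
A staged rollout across edge nodes can be sketched as deploying in growing waves and halting at the first wave that contains an unhealthy node. The deploy and healthy callbacks are hypothetical placeholders for whatever tooling (Ansible, a K3s cluster, and so on) actually pushes and probes the update. Rolling back the failed wave is left out.

```python
from typing import Callable, Dict, List

def staged_rollout(nodes: List[str], waves: List[int],
                   deploy: Callable[[str], None],
                   healthy: Callable[[str], bool]) -> Dict[str, List[str]]:
    """Deploy to nodes in waves of the given sizes (e.g. [1, 4, 16]), then to
    all remaining nodes; stop at the first wave with an unhealthy node."""
    updated: List[str] = []
    remaining = list(nodes)
    for size in waves + [len(nodes)]:  # the final wave covers whatever is left
        wave, remaining = remaining[:size], remaining[size:]
        for node in wave:
            deploy(node)
        updated.extend(wave)
        failed = [n for n in wave if not healthy(n)]
        if failed:
            # Halt: nodes in later waves keep running the previous version.
            return {"updated": updated, "failed": failed, "skipped": remaining}
        if not remaining:
            break
    return {"updated": updated, "failed": [], "skipped": remaining}
```

Small early waves limit the blast radius: a bad build reaches a handful of nodes instead of the whole fleet.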

Future Trends in DevOps for Edge Computing

The convergence of AI and edge computing is driving DevOps innovation. AI-powered tools optimize resources, predict failures, and enhance security, while 5G accelerates data transfers and responsiveness. As edge computing grows in industries like healthcare and manufacturing, flexible DevOps strategies will position organizations to capitalize on these advancements effectively.

Optimizing Edge Systems for Maximum Impact

DevOps for edge computing bridges innovation and efficiency by addressing challenges like fragmented infrastructure, resource limits, and security risks. DevOps training equips professionals to unlock edge computing's potential with seamless deployments and strong performance. Ascendient Learning offers expert training to help teams excel in this evolving landscape.

For more information visit: https://www.ascendientlearning.com/it-training/agile-devops/devops-fundamentals-66737-detail.html

k0s vs k3s - Battle of the Tiny Kubernetes distros

Kubernetes has redefined the management of containerized applications. The rich ecosystem of Kubernetes distributions testifies to its widespread adoption and versatility. Today, we compare k0s vs k3s, two unique Kubernetes distributions designed to seamlessly run Kubernetes across varied infrastructures, from cloud instances to bare metal and edge computing settings. Those with home labs will…

Optimizing Applications with Cloud Native Deployment

Cloud-native deployment has revolutionized the way applications are built, deployed, and managed. By leveraging cloud-native technologies such as containerization, microservices, and DevOps automation, businesses can enhance application performance, scalability, and reliability. This article explores key strategies for optimizing applications through cloud-native deployment.

1. Adopting a Microservices Architecture

Traditional monolithic applications can become complex and difficult to scale. By adopting a microservices architecture, applications are broken down into smaller, independent services that can be deployed, updated, and scaled separately.

Key Benefits

Improved scalability and fault tolerance

Faster development cycles and deployments

Better resource utilization by scaling specific services as needed

Best Practices

Design microservices with clear boundaries using domain-driven design

Use lightweight communication protocols such as REST or gRPC

Implement service discovery and load balancing for better efficiency
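To make the service discovery and load balancing practice above more concrete, here is a minimal Go sketch built on a hypothetical in-memory registry. `Registry`, `Register`, and `Pick` are illustrative names, not a real library API. In production this role is usually played by Kubernetes Services and DNS, Consul, or Eureka.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Registry is a hypothetical in-memory service registry mapping a service
// name to the addresses of its instances.
type Registry struct {
	mu        sync.Mutex
	instances map[string][]string
	next      map[string]int // round-robin cursor per service
}

func NewRegistry() *Registry {
	return &Registry{instances: map[string][]string{}, next: map[string]int{}}
}

// Register adds an instance address for a service (service discovery).
func (r *Registry) Register(service, addr string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.instances[service] = append(r.instances[service], addr)
}

// Pick returns the next instance of a service in round-robin order (load balancing).
func (r *Registry) Pick(service string) (string, error) {
	r.mu.Lock()
	defer r.mu.Unlock()
	addrs := r.instances[service]
	if len(addrs) == 0 {
		return "", errors.New("no instances registered for " + service)
	}
	i := r.next[service] % len(addrs)
	r.next[service] = i + 1
	return addrs[i], nil
}

func main() {
	reg := NewRegistry()
	reg.Register("orders", "10.0.0.1:8080")
	reg.Register("orders", "10.0.0.2:8080")
	for i := 0; i < 3; i++ {
		addr, _ := reg.Pick("orders")
		fmt.Println(addr) // 10.0.0.1:8080, then 10.0.0.2:8080, then 10.0.0.1:8080
	}
}
```

Round-robin is the simplest policy; real load balancers also remove instances that fail health checks.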

2. Leveraging Containerization for Portability

Containers provide a consistent runtime environment across different cloud platforms, making deployment faster and more efficient. Using container orchestration tools like Kubernetes ensures seamless management of containerized applications.

Key Benefits

Portability across multiple cloud environments

Faster deployment and rollback capabilities

Efficient resource allocation and utilization

Best Practices

Use lightweight base images to improve security and performance

Automate container builds using CI/CD pipelines

Implement resource limits and quotas to prevent resource exhaustion

3. Automating Deployment with CI/CD Pipelines

Continuous Integration and Continuous Deployment (CI/CD) streamline application delivery by automating testing, building, and deployment processes. This ensures faster and more reliable releases.

Key Benefits

Reduces manual errors and deployment time

Enables faster feature rollouts

Improves overall software quality through automated testing

Best Practices

Use tools like Jenkins, GitHub Actions, or GitLab CI/CD

Implement blue-green deployments or canary releases for smooth rollouts

Automate rollback mechanisms to handle failed deployments
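A canary release can be sketched as a weighted traffic split. The Go example below is an illustration under assumptions: `CanaryRouter` and its `CanaryPercent` knob are hypothetical, and hashing the user ID with FNV-1a is just one way to keep each user pinned to the same version. Rolling back amounts to setting the canary share to zero.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// CanaryRouter sends a fixed percentage of users to the canary release.
// Hashing the user ID keeps each user on the same version across requests.
type CanaryRouter struct {
	CanaryPercent uint32 // 0..100; hypothetical knob
}

// Route returns "canary" or "stable" for a user ID.
func (c CanaryRouter) Route(userID string) string {
	h := fnv.New32a()
	h.Write([]byte(userID)) // writes to a hash.Hash never return an error
	if h.Sum32()%100 < c.CanaryPercent {
		return "canary"
	}
	return "stable"
}

// Rollback sends all traffic back to the stable version, e.g. when the
// canary's error rate crosses a threshold.
func (c *CanaryRouter) Rollback() { c.CanaryPercent = 0 }

func main() {
	r := CanaryRouter{CanaryPercent: 10}
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		counts[r.Route(fmt.Sprintf("user-%d", i))]++
	}
	fmt.Println(counts)
	r.Rollback()
	fmt.Println(r.Route("user-1")) // stable
}
```

Blue-green deployments are the limiting case of the same idea: the split flips from 0 to 100 percent in one step.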

4. Ensuring High Availability with Load Balancing and Auto-scaling

To maintain application performance under varying workloads, implementing load balancing and auto-scaling is essential. Cloud providers offer built-in services for distributing traffic and adjusting resources dynamically.

Key Benefits

Ensures application availability during high traffic loads

Optimizes resource utilization and reduces costs

Minimizes downtime and improves fault tolerance

Best Practices

Use managed load balancers such as AWS Elastic Load Balancing (ELB) or Azure Load Balancer, or a self-hosted option such as NGINX

Implement Horizontal Pod Autoscaler (HPA) in Kubernetes for dynamic scaling

Distribute applications across multiple availability zones for resilience
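The Horizontal Pod Autoscaler's core calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Go function below is a simplified sketch of that rule with min/max clamping and a tolerance band (the controller's default tolerance is 0.1). The real controller also handles stabilization windows, missing metrics, and pod readiness, which are omitted here.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the HPA scaling rule:
//   desired = ceil(current * currentMetric / targetMetric)
// clamped to [minReplicas, maxReplicas]. If the ratio is within tolerance
// of 1.0, the replica count is left unchanged.
func desiredReplicas(current int, currentMetric, targetMetric, tolerance float64, minReplicas, maxReplicas int) int {
	ratio := currentMetric / targetMetric
	desired := current
	if math.Abs(ratio-1.0) > tolerance {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6.
	fmt.Println(desiredReplicas(4, 90, 60, 0.1, 2, 10)) // 6
	// 4 pods at 63% CPU: ratio 1.05 is within tolerance, so stay at 4.
	fmt.Println(desiredReplicas(4, 63, 60, 0.1, 2, 10)) // 4
}
```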

5. Implementing Observability for Proactive Monitoring

Monitoring cloud-native applications is crucial for identifying performance bottlenecks and ensuring smooth operations. Observability tools provide real-time insights into application behavior.

Key Benefits

Early detection of issues before they impact users

Better decision-making through real-time performance metrics

Enhanced security and compliance monitoring

Best Practices

Use Prometheus and Grafana for monitoring and visualization

Implement centralized logging with Elasticsearch, Fluentd, and Kibana (EFK Stack)

Enable distributed tracing with OpenTelemetry to track requests across services
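As a rough illustration of the metrics and logging side of observability, the Go sketch below wraps an HTTP handler to count responses by status code and emit one structured log line per request with the standard library's `log/slog` (Go 1.21+). `Metrics`, `demoHandler`, and `serve` are hypothetical helpers; in practice you would export these counters through a Prometheus client library and add tracing with OpenTelemetry.

```go
package main

import (
	"log/slog"
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

// statusRecorder captures the status code a handler writes.
type statusRecorder struct {
	http.ResponseWriter
	status int
}

func (r *statusRecorder) WriteHeader(code int) {
	r.status = code
	r.ResponseWriter.WriteHeader(code)
}

// Metrics is a hypothetical stand-in for a Prometheus counter: requests by status.
type Metrics struct {
	mu       sync.Mutex
	byStatus map[int]int
}

func NewMetrics() *Metrics { return &Metrics{byStatus: map[int]int{}} }

// Count returns how many responses had the given status code.
func (m *Metrics) Count(status int) int {
	m.mu.Lock()
	defer m.mu.Unlock()
	return m.byStatus[status]
}

// Instrument wraps next, counting responses by status and logging latency.
func (m *Metrics) Instrument(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, req *http.Request) {
		start := time.Now()
		rec := &statusRecorder{ResponseWriter: w, status: http.StatusOK}
		next.ServeHTTP(rec, req)
		m.mu.Lock()
		m.byStatus[rec.status]++
		m.mu.Unlock()
		slog.Info("request", "method", req.Method, "path", req.URL.Path,
			"status", rec.status, "duration", time.Since(start))
	})
}

// demoHandler is a hypothetical service: 404 for /missing, 200 otherwise.
func demoHandler() http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path == "/missing" {
			http.NotFound(w, r)
			return
		}
		w.Write([]byte("ok"))
	})
}

// serve sends in-process GET requests to h, one per path.
func serve(h http.Handler, paths ...string) {
	for _, p := range paths {
		h.ServeHTTP(httptest.NewRecorder(), httptest.NewRequest(http.MethodGet, p, nil))
	}
}

func main() {
	m := NewMetrics()
	serve(m.Instrument(demoHandler()), "/", "/missing", "/")
	slog.Info("totals", "ok", m.Count(http.StatusOK), "not_found", m.Count(http.StatusNotFound))
}
```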

6. Strengthening Security in Cloud-Native Environments

Security must be integrated at every stage of the application lifecycle. By following DevSecOps practices, organizations can embed security into development and deployment processes.

Key Benefits

Prevents vulnerabilities and security breaches

Ensures compliance with industry regulations

Enhances application integrity and data protection

Best Practices

Scan container images for vulnerabilities before deployment

Enforce Role-Based Access Control (RBAC) to limit permissions

Encrypt sensitive data in transit and at rest
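Role-Based Access Control boils down to a deny-by-default lookup from user to roles to permissions. The minimal Go sketch below uses hypothetical roles, verbs, and resources; in Kubernetes the same idea is expressed declaratively with Role/ClusterRole and RoleBinding objects rather than in application code.

```go
package main

import "fmt"

// Permission pairs an action with a resource, e.g. {get, orders}.
type Permission struct {
	Verb     string
	Resource string
}

// RBAC holds hypothetical role grants and user-to-role bindings.
type RBAC struct {
	rolePerms map[string]map[Permission]bool
	userRoles map[string][]string
}

func NewRBAC() *RBAC {
	return &RBAC{rolePerms: map[string]map[Permission]bool{}, userRoles: map[string][]string{}}
}

// Grant gives a role a single permission.
func (r *RBAC) Grant(role string, p Permission) {
	if r.rolePerms[role] == nil {
		r.rolePerms[role] = map[Permission]bool{}
	}
	r.rolePerms[role][p] = true
}

// Bind assigns a role to a user.
func (r *RBAC) Bind(user, role string) {
	r.userRoles[user] = append(r.userRoles[user], role)
}

// Allowed denies by default: access is granted only if some bound role
// carries the exact permission (least privilege).
func (r *RBAC) Allowed(user string, p Permission) bool {
	for _, role := range r.userRoles[user] {
		if r.rolePerms[role][p] {
			return true
		}
	}
	return false
}

func main() {
	rbac := NewRBAC()
	rbac.Grant("viewer", Permission{Verb: "get", Resource: "orders"})
	rbac.Grant("admin", Permission{Verb: "delete", Resource: "orders"})
	rbac.Bind("alice", "viewer")
	fmt.Println(rbac.Allowed("alice", Permission{Verb: "get", Resource: "orders"}))    // true
	fmt.Println(rbac.Allowed("alice", Permission{Verb: "delete", Resource: "orders"})) // false
}
```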

7. Optimizing Costs with Cloud-Native Strategies

Efficient cost management is essential for cloud-native applications. By optimizing resource usage and adopting cost-effective deployment models, organizations can reduce expenses without compromising performance.

Key Benefits

Lower infrastructure costs through auto-scaling

Improved cost transparency and budgeting

Better efficiency in cloud resource allocation

Best Practices

Use serverless computing for event-driven applications

Implement spot instances and reserved instances to save costs

Monitor cloud spending with FinOps practices and tools

Conclusion

Cloud-native deployment enables businesses to optimize applications for performance, scalability, and cost efficiency. By adopting microservices, leveraging containerization, automating deployments, and implementing robust monitoring and security measures, organizations can fully harness the benefits of cloud-native computing.

By following these best practices, businesses can accelerate innovation, improve application reliability, and stay competitive in a fast-evolving digital landscape. Now is the time to embrace cloud-native deployment and take your applications to the next level.


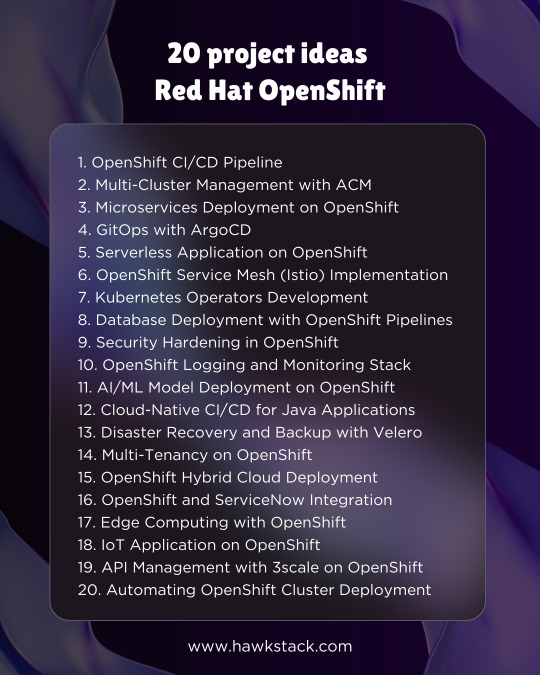

20 project ideas for Red Hat OpenShift

1. OpenShift CI/CD Pipeline

Set up a Jenkins or Tekton pipeline on OpenShift to automate the build, test, and deployment process.

2. Multi-Cluster Management with ACM

Use Red Hat Advanced Cluster Management (ACM) to manage multiple OpenShift clusters across cloud and on-premise environments.

3. Microservices Deployment on OpenShift

Deploy a microservices-based application (e.g., e-commerce or banking) using OpenShift, Istio, and distributed tracing.

4. GitOps with ArgoCD

Implement a GitOps workflow for OpenShift applications using ArgoCD, ensuring declarative infrastructure management.

5. Serverless Application on OpenShift

Develop a serverless function using OpenShift Serverless (Knative) for event-driven architecture.

6. OpenShift Service Mesh (Istio) Implementation

Deploy Istio-based service mesh to manage inter-service communication, security, and observability.

7. Kubernetes Operators Development

Build and deploy a custom Kubernetes Operator using the Operator SDK for automating complex application deployments.

8. Database Deployment with OpenShift Pipelines

Automate the deployment of databases (PostgreSQL, MySQL, MongoDB) with OpenShift Pipelines and Helm charts.

9. Security Hardening in OpenShift

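Implement OpenShift compliance and security best practices, including Security Context Constraints (SCCs) and Pod Security Admission (which replace the Pod Security Policies removed in Kubernetes 1.25), RBAC, and image scanning.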

10. OpenShift Logging and Monitoring Stack

Set up EFK (Elasticsearch, Fluentd, Kibana) or Loki for centralized logging and use Prometheus-Grafana for monitoring.

11. AI/ML Model Deployment on OpenShift

Deploy an AI/ML model using Red Hat OpenShift AI (built on the upstream Open Data Hub project) for real-time inference with TensorFlow or PyTorch.

12. Cloud-Native CI/CD for Java Applications

Deploy a Spring Boot or Quarkus application on OpenShift with automated CI/CD using Tekton or Jenkins.

13. Disaster Recovery and Backup with Velero

Implement backup and restore strategies using Velero for OpenShift applications running on different cloud providers.

14. Multi-Tenancy on OpenShift

Configure OpenShift multi-tenancy with RBAC, namespaces, and resource quotas for multiple teams.

15. OpenShift Hybrid Cloud Deployment

Deploy an application across on-prem OpenShift and cloud-based OpenShift (AWS, Azure, GCP) using OpenShift Virtualization.

16. OpenShift and ServiceNow Integration

Automate IT operations by integrating OpenShift with ServiceNow for incident management and self-service automation.

17. Edge Computing with OpenShift

Deploy OpenShift at the edge to run lightweight workloads at remote locations using Single Node OpenShift (SNO).

18. IoT Application on OpenShift

Build an IoT platform using Kafka on OpenShift for real-time data ingestion and processing.

19. API Management with 3scale on OpenShift

Deploy Red Hat 3scale API Management to control, secure, and analyze APIs on OpenShift.

20. Automating OpenShift Cluster Deployment

Use Ansible and Terraform to automate the deployment of OpenShift clusters and configure infrastructure as code (IaC).

For more details www.hawkstack.com

#OpenShift #Kubernetes #DevOps #CloudNative #RedHat #GitOps #Microservices #CICD #Containers #HybridCloud #Automation


Golang Developer

In the evolving world of software development, Go (or Golang) has emerged as a powerful programming language known for its simplicity, efficiency, and scalability. Developed by Google, Golang is designed to make developers’ lives easier by offering a clean syntax, robust standard libraries, and excellent concurrency support. Whether you're starting as a new developer or transitioning from another language, this guide will help you navigate the journey of becoming a proficient Golang developer.

Why Choose Golang?

Golang’s popularity has grown steadily, and for good reasons:

Simplicity: Go's syntax is straightforward, making it accessible for beginners and efficient for experienced developers.

Concurrency Support: With goroutines and channels, Go simplifies writing concurrent programs, making it ideal for systems requiring parallel processing.

Performance: Go is compiled to machine code, which means it executes programs efficiently without requiring a virtual machine.

Scalability: The language’s design promotes building scalable and maintainable systems.

Community and Ecosystem: With a thriving developer community, extensive documentation, and numerous open-source libraries, Go offers robust support for its users.
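Goroutines and channels are easiest to appreciate in a small worker pool. The sketch below, built around a hypothetical `squareAll` function, fans work out to a fixed number of goroutines and uses `sync.WaitGroup` to know when it is safe to close the results channel.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll squares each number using a pool of worker goroutines.
// Results carry their input index, so output order matches input order
// even though workers finish in any order.
func squareAll(nums []int, workers int) []int {
	if workers < 1 {
		workers = 1
	}
	type job struct{ idx, val int }

	jobs := make(chan job)
	results := make(chan job)
	var wg sync.WaitGroup

	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := range jobs {
				results <- job{j.idx, j.val * j.val}
			}
		}()
	}

	// Feed jobs, then close the channel so the workers' range loops end.
	go func() {
		for i, n := range nums {
			jobs <- job{i, n}
		}
		close(jobs)
	}()

	// Close results once every worker has returned.
	go func() {
		wg.Wait()
		close(results)
	}()

	out := make([]int, len(nums))
	for r := range results {
		out[r.idx] = r.val
	}
	return out
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3, 4, 5}, 3)) // [1 4 9 16 25]
}
```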

Key Skills for a Golang Developer

To excel as a Golang developer, consider mastering the following:

1. Understanding Go Basics

Variables and constants

Functions and methods

Control structures (if, for, switch)

Arrays, slices, and maps

2. Deep Dive into Concurrency

Working with goroutines for lightweight threading

Understanding channels for communication

Managing synchronization with the sync package

3. Mastering Go’s Standard Library

net/http for building web servers

database/sql for database interactions

os and io for system-level operations

4. Writing Clean and Idiomatic Code

Using Go’s formatting tools like gofmt

Following Go idioms and conventions

Writing efficient error handling code

5. Version Control and Collaboration

Proficiency with Git

Knowledge of tools like GitHub, GitLab, or Bitbucket

6. Testing and Debugging

Writing unit tests using Go’s testing package

Utilizing debuggers like dlv (Delve)

7. Familiarity with Cloud and DevOps

Deploying applications using Docker and Kubernetes

Working with cloud platforms like AWS, GCP, or Azure

Monitoring and logging tools like Prometheus and Grafana

8. Knowledge of Frameworks and Tools

Popular web frameworks like Gin or Echo

ORM tools like GORM

API development with gRPC or REST

Building a Portfolio as a Golang Developer

To showcase your skills and stand out in the job market, work on real-world projects. Here are some ideas:

Web Applications: Build scalable web applications using frameworks like Gin or Fiber.

Microservices: Develop microservices architecture to demonstrate your understanding of distributed systems.

Command-Line Tools: Create tools or utilities to simplify repetitive tasks.

Open Source Contributions: Contribute to Golang open-source projects on platforms like GitHub.

Career Opportunities

Golang developers are in high demand across various industries, including fintech, cloud computing, and IoT. Popular roles include:

Backend Developer

Cloud Engineer

DevOps Engineer

Full Stack Developer

Conclusion

Becoming a proficient Golang developer requires dedication, continuous learning, and practical experience. By mastering the language’s features, leveraging its ecosystem, and building real-world projects, you can establish a successful career in this growing field. Start today and join the vibrant Go community to accelerate your journey.


Master Microservices: From Learner to Lead Architect

Microservices architecture has become a cornerstone of modern software development, revolutionizing how developers build and scale applications. If you're aspiring to become a lead architect or want to master the intricacies of microservices, this guide will help you navigate your journey. From learning the basics to becoming a pro, let’s explore how to master microservices effectively.

What Are Microservices?

Microservices are a software development technique where applications are built as a collection of small, independent, and loosely coupled services. Each service represents a specific business functionality, making it easier to scale, maintain, and deploy applications.

Why Microservices Matter in Modern Development

Monolithic architecture, the predecessor to microservices, often led to challenges in scaling and maintaining applications. Microservices address these issues by enabling:

Scalability: Easily scale individual services as needed.

Flexibility: Developers can work on different services simultaneously.

Faster Time-to-Market: Continuous delivery becomes easier.

Core Principles of Microservices Architecture

To effectively master microservices, you need to understand the foundational principles that guide their design and implementation:

Decentralization: Split functionalities across services.

Independent Deployment: Deploy services independently.

Fault Isolation: Isolate failures to prevent cascading issues.

API-Driven Communication: Use lightweight protocols like REST or gRPC.

Skills You Need to Master Microservices

1. Programming Languages

Microservices can be developed using multiple programming languages such as:

Java

Python

Go

Node.js

2. Containers and Orchestration

Docker: For creating, deploying, and running microservices in containers.

Kubernetes: To orchestrate containerized applications for scalability.

3. DevOps Tools

CI/CD Pipelines: Tools like Jenkins, CircleCI, or GitHub Actions ensure seamless integration and deployment.

Monitoring Tools: Prometheus and Grafana help monitor service health.

Steps to Master Microservices

1. Understand the Basics

Begin with understanding key microservices concepts, such as service decomposition, data decentralization, and communication protocols.

2. Learn API Design

APIs act as the backbone of microservices. Learn how to design and document RESTful APIs using tools like Swagger or Postman.

3. Get Hands-On with Frameworks

Use frameworks and libraries to simplify microservices development:

Spring Boot (Java)

Flask (Python)

Express.js (Node.js)

4. Implement Microservices Security

Focus on securing inter-service communication using OAuth, JWT, and API gateways like Kong or AWS API Gateway.

5. Build Scalable Architecture

Adopt cloud platforms such as AWS, Azure, or Google Cloud for deploying scalable microservices.

Key Tools and Technologies for Microservices

1. Containerization and Virtualization

Docker packages services into lightweight containers, and Kubernetes orchestrates those containers across a cluster for seamless deployment.

2. API Gateways

API gateways such as Kong and NGINX streamline routing, authentication, and throttling.

3. Event-Driven Architecture

Leverage message brokers like Kafka or RabbitMQ for asynchronous service communication.
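Event-driven communication decouples publishers from consumers. The Go sketch below is an in-memory stand-in for a Kafka or RabbitMQ topic (the `Broker` type is hypothetical); it shows the fan-out idea only and has none of a real broker's persistence, acknowledgements, or replay.

```go
package main

import (
	"fmt"
	"sync"
)

// Broker fans messages out to per-subscriber buffered channels.
type Broker struct {
	mu   sync.RWMutex
	subs map[string][]chan string
}

func NewBroker() *Broker { return &Broker{subs: map[string][]chan string{}} }

// Subscribe returns a channel that receives every message on topic.
func (b *Broker) Subscribe(topic string, buffer int) <-chan string {
	ch := make(chan string, buffer)
	b.mu.Lock()
	b.subs[topic] = append(b.subs[topic], ch)
	b.mu.Unlock()
	return ch
}

// Publish delivers msg to all current subscribers of topic. It blocks
// while a subscriber's buffer is full (simple back-pressure).
func (b *Broker) Publish(topic, msg string) {
	b.mu.RLock()
	defer b.mu.RUnlock()
	for _, ch := range b.subs[topic] {
		ch <- msg
	}
}

// Close closes every subscriber channel so consumers' range loops end.
func (b *Broker) Close() {
	b.mu.Lock()
	defer b.mu.Unlock()
	for _, chans := range b.subs {
		for _, ch := range chans {
			close(ch)
		}
	}
	b.subs = map[string][]chan string{}
}

func main() {
	b := NewBroker()
	inventory := b.Subscribe("order.created", 10)
	email := b.Subscribe("order.created", 10)
	b.Publish("order.created", "order-1001")
	b.Close()
	for m := range inventory {
		fmt.Println("inventory reserves stock for", m)
	}
	for m := range email {
		fmt.Println("email service sends confirmation for", m)
	}
}
```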

Benefits of Mastering Microservices

Career Advancement: Expertise in microservices can make you a strong candidate for lead architect roles.

High Demand: Organizations transitioning to modern architectures are actively hiring microservices experts.

Flexibility and Versatility: Knowledge of microservices enables you to work across industries, from e-commerce to finance.

Challenges in Microservices Implementation

1. Complexity

Managing multiple services can lead to operational overhead.

2. Debugging Issues

Tracing bugs in distributed systems is challenging but manageable with tools like Jaeger and Zipkin.

3. Security Concerns

Each service requires secure communication and authorization mechanisms.

Building a Microservices Portfolio

To master microservices, build a portfolio of projects demonstrating your skills. Some ideas include:

E-commerce Applications: Separate services for inventory, payment, and user authentication.

Social Media Platforms: Modularized services for messaging, user profiles, and notifications.

Certifications to Enhance Your Microservices Journey

Obtaining certifications can validate your expertise and boost your resume:

Certified Kubernetes Administrator (CKA)

AWS Certified Solutions Architect

Google Cloud Professional Cloud Architect

Real-World Use Cases of Microservices

1. Netflix

Netflix leverages microservices to handle millions of user requests daily, ensuring high availability and seamless streaming.

2. Amazon

Amazon's e-commerce platform uses microservices to manage inventory, payments, and shipping.

3. Spotify

Spotify utilizes microservices for features like playlists, user recommendations, and search.

Becoming a Lead Architect in Microservices

To transition from a learner to a lead architect, focus on:

Design Patterns: Understand patterns like Service Mesh and Domain-Driven Design (DDD).

Leadership Skills: Lead cross-functional teams and mentor junior developers.

Continuous Learning: Stay updated on emerging trends and tools in microservices.

Conclusion

Mastering microservices is a transformative journey that can elevate your career as a software developer or architect. By understanding the core concepts, learning relevant tools, and building real-world projects, you can position yourself as a microservices expert. This architecture is not just a trend but a critical skill in the future of software development.

FAQs

1. What are microservices?
Microservices are small, independent services within an application, designed to perform specific business functions and communicate via APIs.

2. Why should I learn microservices?
Microservices are essential for scalable and flexible application development, making them a highly sought-after skill in the software industry.

3. Which programming language is best for microservices?
Languages like Java, Python, Go, and Node.js are commonly used for building microservices.

4. How can I start my journey with microservices?
Begin with learning the basics, explore frameworks like Spring Boot, and practice building modular applications.

5. Are microservices suitable for all applications?
No. They are best suited for applications requiring scalability, flexibility, and modularity, and are not ideal for small or simple projects.


Why Java Spring Boot is Ideal for Building Microservices

In modern software development, microservices have become the go-to architecture for creating scalable, flexible, and maintainable applications. Java Spring Boot is one of the most popular frameworks used for building microservices, thanks to its simplicity, powerful features, and seamless integration with other technologies. In this blog, we will explore why Java Spring Boot is an ideal choice for building microservices.

What are Microservices?

Microservices architecture is a design pattern where an application is broken down into smaller, independent services that can be developed, deployed, and scaled individually. Each microservice typically focuses on a specific business functionality, and communicates with other services via APIs (often RESTful). Microservices offer several advantages over traditional monolithic applications, including improved scalability, flexibility, and maintainability.

Why Spring Boot for Microservices?

Spring Boot, a lightweight, open-source Java framework, simplifies the development of stand-alone, production-grade applications. It comes with several features that make it an excellent choice for building microservices. Here are some key reasons why:

1. Rapid Development with Minimal Configuration

Spring Boot is known for its "convention over configuration" approach, which makes it incredibly developer-friendly. It removes the need for complex XML configurations, allowing developers to focus on the business logic rather than boilerplate code. For microservices, this means you can quickly spin up new services with minimal setup, saving time and increasing productivity.

Spring Boot comes with embedded servers (like Tomcat, Jetty, and Undertow), so you don’t need to worry about setting up and managing separate application servers. This makes deployment and scaling easier in microservices environments.

2. Microservice-Friendly Components

Spring Boot is tightly integrated with the Spring Cloud ecosystem, which provides tools specifically designed for building microservices. Some of these key components include:

Spring Cloud Config: Centralizes configuration management for multiple services in a microservices architecture, allowing you to manage configuration properties in a version-controlled repository.

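Spring Cloud Netflix: Provides Eureka for service discovery. Its older Hystrix (fault tolerance) and Ribbon (client-side load balancing) modules entered maintenance mode and were removed in the Spring Cloud 2020.0 release train; Spring Cloud Circuit Breaker (with Resilience4j) and Spring Cloud LoadBalancer now fill those roles for building resilient and scalable microservices.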

Spring Cloud Gateway: Provides a simple, effective way to route requests to different microservices, offering features like load balancing, security, and more.

Spring Cloud Stream: A framework for building event-driven microservices, making it easier to work with messaging middleware (e.g., RabbitMQ, Kafka).

These tools help you quickly build and manage your microservices in a distributed architecture.

3. Scalability and Flexibility

One of the main reasons organizations adopt microservices is the ability to scale individual components independently. Spring Boot’s lightweight nature makes it an ideal choice for microservices because it enables easy scaling both vertically (scaling up resources for a single service) and horizontally (scaling across multiple instances of a service).

With Spring Boot, you can run multiple instances of microservices in containers (e.g., Docker) and orchestrate them using platforms like Kubernetes. This makes it easier to handle high traffic, optimize resource usage, and maintain high availability.

4. Fault Tolerance and Resilience

In a microservices architecture, failures in one service can affect others. The Spring Cloud ecosystem around Spring Boot provides mechanisms for fault tolerance and resilience, which are critical for maintaining the integrity and uptime of your application. With Spring Cloud Circuit Breaker (the successor to the now-retired Netflix Hystrix integration), you can implement circuit breakers that prevent cascading failures, resulting in a more robust and fault-tolerant system.

By using tools like Resilience4j, Spring Boot makes it easier to implement strategies like retries, timeouts, and fallbacks to ensure your services remain resilient even when some of them fail.

5. Easy Integration with Databases and Messaging Systems

Microservices often require interaction with various data stores and messaging systems. Spring Boot makes this integration straightforward by providing support for relational databases (like MySQL, PostgreSQL), NoSQL databases (like MongoDB, Cassandra), and message brokers (like RabbitMQ, Kafka).

With Spring Data, you can easily interact with databases using a simplified repository model, without having to write much boilerplate code. This enables microservices to manage their own data stores, promoting the independence of each service.

6. Security Features

Security is critical in microservices, as services often need to communicate with each other over the network. Spring Security provides a comprehensive security framework that integrates well with Spring Boot. With Spring Security, you can secure your microservices with features like:

Authentication and Authorization: Implementing OAuth2, JWT tokens, or traditional session-based authentication to ensure that only authorized users or services can access certain endpoints.

Secure Communication: Enabling HTTPS, encrypting data in transit, and ensuring that communications between services are secure.

Role-Based Access Control (RBAC): Ensuring that each microservice has the appropriate permissions to access certain resources.

These security features help ensure that your microservices are protected from unauthorized access and malicious attacks.

7. Monitoring and Logging

Monitoring and logging are essential for maintaining microservices in a production environment. With Spring Boot, you can easily implement tools like Spring Boot Actuator to expose useful operational information about your microservices, such as metrics, health checks, and system properties.

In addition, Spring Cloud Sleuth (superseded by Micrometer Tracing in Spring Boot 3) provides distributed tracing capabilities, allowing you to trace requests as they flow through multiple services. This helps you track and diagnose issues more efficiently in a microservices architecture.

Conclusion

Java Spring Boot provides a solid foundation for building microservices, making it an excellent choice for developers looking to implement a modern, scalable, and resilient application architecture. The framework’s ease of use, integration with Spring Cloud components, scalability, and security features are just a few of the reasons why Spring Boot is an ideal platform for microservices.

As a Java full-stack developer, understanding how to build microservices with Spring Boot will not only enhance your skill set but also open doors to working on more complex and modern systems. If you’re looking to develop scalable, flexible, and fault-tolerant applications, Java Spring Boot is the right tool for the job.


In today’s software development world, container orchestration has become an essential practice. Imagine containers as tiny, self-contained boxes holding your application and everything it needs to run: lightweight, portable, and ready to go on any system. However, managing a swarm of these containers can quickly turn into chaos. That's where container orchestration comes in. In this article, let’s explore the world of container orchestration.

What Is Container Orchestration?

Container orchestration refers to the automated management of containerized applications. It involves deploying, managing, scaling, and networking containers so that applications run smoothly and efficiently across various environments. As organizations adopt microservices architecture and move toward cloud-native applications, container orchestration becomes crucial for handling the complexity of deploying and maintaining numerous container instances.

Key Functions of Container Orchestration

Deployment: Automating the deployment of containers across multiple hosts.

Scaling: Adjusting the number of running containers based on current load and demand.

Load balancing: Distributing traffic across containers to ensure optimal performance.

Networking: Managing network configuration so containers can communicate with each other.

Health monitoring: Continuously checking the status of containers and replacing or restarting failed ones.

Configuration management: Keeping container configurations consistent across different environments.

Why Is Container Orchestration Important?

Efficiency and Resource Optimization

Container orchestration takes the guesswork out of resource allocation. By automating deployment and scaling, it helps your containers get what they need, no more and no less. As a result, it keeps your hardware working efficiently and saves you money on wasted resources.

Consistency and Reliability

Orchestration tools ensure that containers are consistently configured and deployed, reducing the risk of errors and improving the reliability of applications.

Simplified Management

Managing a large number of containers manually is impractical. Orchestration tools simplify this process by providing a unified interface to control, monitor, and manage the entire lifecycle of containers.

Leading Container Orchestration Tools

Kubernetes

Kubernetes is the most widely used container orchestration platform. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes offers a comprehensive set of features for deploying, scaling, and managing containerized applications.

Docker Swarm

Docker Swarm is Docker's native clustering and orchestration tool. It integrates seamlessly with Docker and is known for its simplicity and ease of use.

Apache Mesos

Apache Mesos is a distributed systems kernel that can manage resources across a cluster of machines. It supports various frameworks for container orchestration, such as Marathon.

OpenShift

OpenShift is an enterprise-grade Kubernetes distribution by Red Hat. It offers additional features that help developers and IT operations teams manage the application lifecycle.

Best Practices for Container Orchestration

Design for Scalability

Design your applications to scale effortlessly. Adding more containers should be as easy as stacking building blocks, which means keeping your app components independent and relying on external storage for shared data.

Implement Robust Monitoring and Logging

Keep a close eye on your containerized applications' health. Tools like Prometheus, Grafana, and the ELK Stack act like high-tech flashlights, illuminating performance and helping you identify any issues before they become monsters under the bed.

Automate Deployment Pipelines

Integrate continuous integration and continuous deployment (CI/CD) pipelines with your orchestration platform. This ensures rapid and consistent deployment of code changes, freeing you up to focus on more strategic battles.

Secure Your Containers

Security is vital in container orchestration. Implement best practices such as using minimal base images, regularly updating images, running containers with the least privileges, and employing runtime security tools.

Manage Configuration and Secrets Securely

Use your orchestration tool's built-in features for managing configuration and secrets. For example, Kubernetes ConfigMaps and Secrets let you decouple configuration artifacts from image content, keeping your containerized applications portable.

Regularly Update and Patch Your Orchestration Tools

Stay current with updates and patches for your orchestration tools to benefit from the latest features and security fixes. Regular maintenance reduces the risk of vulnerabilities and improves system stability.
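The desired-state idea behind container orchestration (compare what should be running with what is running, then act) can be sketched as a single reconciliation step. This Go example is a simplification with a hypothetical `Pod` type and `reconcile` function; real orchestrators such as Kubernetes run many such control loops continuously against the cluster's API.

```go
package main

import "fmt"

// Pod is a hypothetical, heavily simplified view of a running container.
type Pod struct {
	Name    string
	Healthy bool
}

// reconcile compares the desired replica count with observed pods and
// returns the pods to keep, how many new pods to start, and which to stop.
func reconcile(desired int, observed []Pod) (keep []Pod, toStart int, toStop []string) {
	for _, p := range observed {
		if !p.Healthy {
			toStop = append(toStop, p.Name) // replace failed containers
			continue
		}
		if len(keep) < desired {
			keep = append(keep, p)
		} else {
			toStop = append(toStop, p.Name) // scale down surplus replicas
		}
	}
	toStart = desired - len(keep)
	return keep, toStart, toStop
}

func main() {
	observed := []Pod{{"web-1", true}, {"web-2", false}, {"web-3", true}}
	keep, start, stop := reconcile(3, observed)
	fmt.Println(len(keep), start, stop) // 2 1 [web-2]
}
```

Running this step repeatedly, rather than once, is what lets an orchestrator heal failures and follow scaling changes without manual intervention.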


Linux for Developers: Essential Tools and Environments for Coding

For developers, Linux is not just an operating system—it's a versatile platform that offers a powerful array of tools and environments tailored to coding and development tasks. With its open-source nature and robust performance, Linux is a preferred choice for many developers. If you're looking to get the most out of your Linux development environment, leveraging resources like Linux Commands Practice Online, Linux Practice Labs, and Linux Online Practice can significantly enhance your skills and productivity.

The Linux Advantage for Developers

Linux provides a rich environment for development, featuring a wide range of tools that cater to various programming needs. From command-line utilities to integrated development environments (IDEs), Linux supports an extensive ecosystem that can streamline coding tasks, improve efficiency, and foster a deeper understanding of system operations.

Essential Linux Tools for Developers

Text Editors and IDEs: A good text editor is crucial for any developer. Linux offers a variety of text editors, from lightweight options like Vim and Nano to more feature-rich IDEs like Visual Studio Code and Eclipse. These tools enhance productivity by providing syntax highlighting, code completion, and debugging features.

Version Control Systems: Git is an indispensable tool for version control, and its integration with Linux is seamless. Using Git on Linux allows for efficient version management, collaboration, and code tracking. Tools like GitHub and GitLab further streamline the development process by offering platforms for code sharing and project management.

Package Managers: Linux distributions come with powerful package managers such as apt (Debian/Ubuntu), yum (older CentOS/RHEL releases), and dnf (Fedora and newer RHEL-based releases). These tools facilitate the installation and management of software packages, enabling developers to quickly set up their development environment and access a wide range of libraries and dependencies.
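
If you write setup scripts that must run on several distributions, a common approach is to read `/etc/os-release` and choose the package manager from its `ID` and `ID_LIKE` fields. The Python sketch below is a simplified, hypothetical helper: the distribution-to-command mapping is an assumption covering only the distributions named above, and it maps RHEL-family systems to dnf because newer releases ship it by default.

```python
from typing import Dict

# Assumed mapping from os-release IDs to a package manager command.
PACKAGE_MANAGERS = {
    "debian": "apt",
    "ubuntu": "apt",
    "fedora": "dnf",
    "rhel": "dnf",
    "centos": "dnf",
}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from os-release text, dropping optional quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        fields[key] = value.strip("\"'")
    return fields


def detect_package_manager(os_release_text: str) -> str:
    """Check ID first, then each entry in ID_LIKE, for a known distribution."""
    fields = parse_os_release(os_release_text)
    candidates = [fields.get("ID", "")] + fields.get("ID_LIKE", "").split()
    for distro in candidates:
        if distro in PACKAGE_MANAGERS:
            return PACKAGE_MANAGERS[distro]
    return "unknown"


# Sample text in the style of a RHEL derivative's /etc/os-release.
sample = 'NAME="Rocky Linux"\nID="rocky"\nID_LIKE="rhel centos fedora"\n'
print(detect_package_manager(sample))  # dnf
```

On a real machine you would pass `open("/etc/os-release").read()` instead of the sample string.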

Command-Line Tools: Mastery of Linux commands is vital for efficient development. Commands like grep, awk, and sed can manipulate text and data effectively, while find and locate assist in file management. Practicing these commands through Linux Commands Practice Online resources helps sharpen your command-line skills.
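
To see what these commands actually do, it can help to write out a classic pipeline by hand. The Python sketch below roughly mirrors `grep ERROR app.log | awk '{print $1}'`: keep the matching lines, then print the first whitespace-separated field. The log lines are made-up sample data, and Python's `re` syntax only approximates grep's regular expressions.

```python
import re
from typing import Iterable, List


def grep(lines: Iterable[str], pattern: str) -> List[str]:
    """Keep lines that match a regular expression (roughly `grep -E pattern`)."""
    regex = re.compile(pattern)
    return [line for line in lines if regex.search(line)]


def awk_field(lines: Iterable[str], n: int) -> List[str]:
    """Return field n of each line, like awk '{print $n}' with default splitting."""
    if n < 0:
        raise ValueError("field numbers start at 0 ($0 is the whole line)")
    result = []
    for line in lines:
        if n == 0:
            result.append(line)  # $0 is the whole record
            continue
        fields = line.split()  # awk splits on runs of blanks by default
        result.append(fields[n - 1] if n <= len(fields) else "")  # missing field is empty
    return result


# Hypothetical sample log lines.
log = [
    "2024-05-01 INFO service started",
    "2024-05-01 ERROR disk full",
    "2024-05-02 ERROR timeout",
]

# Rough equivalent of: grep ERROR app.log | awk '{print $1}'
for date in awk_field(grep(log, "ERROR"), 1):
    print(date)  # prints 2024-05-01, then 2024-05-02
```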

Containers and Virtualization: Docker and Kubernetes are pivotal in modern development workflows. They allow developers to create, deploy, and manage applications in isolated environments, which simplifies testing and scaling. Because containers are built on Linux kernel features such as namespaces and cgroups, Linux runs these technologies natively, making it an ideal platform for container-based development.

Enhancing Skills with Practice Resources

To get the most out of Linux, practical experience is essential. Here’s how you can use Linux Practice Labs and Linux Online Practice to enhance your skills:

Linux Practice Labs: These labs offer hands-on experience with real Linux environments, providing a safe space to experiment with commands, configurations, and development tools. Engaging in Linux Practice Labs helps reinforce learning by applying concepts in a controlled setting.

Linux Commands Practice Online: Interactive platforms for practicing Linux commands online are invaluable. They offer scenarios and exercises that simulate real-world tasks, allowing you to practice commands and workflows without the need for a local Linux setup. These exercises are beneficial for mastering command-line utilities and scripting.

Linux Online Practice Platforms: Platforms such as Labex provide structured learning paths and practice environments tailored for developers. They offer a variety of exercises and projects that cover different aspects of Linux, from basic commands to advanced system administration tasks.

Conclusion

Linux offers a powerful and flexible environment for developers, equipped with a wealth of tools and resources that cater to various programming needs. By leveraging Linux Commands Practice Online, engaging in Linux Practice Labs, and utilizing Linux Online Practice platforms, you can enhance your development skills, streamline your workflow, and gain a deeper understanding of the Linux operating system. Embrace these resources to make the most of your Linux development environment and stay ahead in the ever-evolving tech landscape.

Scaling Cloud Infrastructure: Challenges and Solutions for CTOs

As technology evolves at an unprecedented pace, businesses increasingly rely on the scalability and flexibility offered by cloud infrastructure. Cloud computing has revolutionized organizations' operations, providing on-demand access to computing power, storage, and applications. However, as more businesses migrate their operations to the cloud, CTOs face unique challenges in scaling their cloud infrastructure to meet growing demands. This blog post will explore the common challenges CTOs face when scaling their cloud infrastructure and discuss potential solutions to address these issues.

At Flentas, an AWS consulting partner, we understand the complexities of scaling cloud infrastructure. We offer comprehensive managed services to help businesses optimize their cloud environments and ensure seamless scalability. Our expertise in cloud architecture and infrastructure management enables us to provide tailored solutions to CTOs seeking to scale their cloud infrastructure effectively.

The Challenges of Scaling Cloud Infrastructure for CTOs:

Performance Bottlenecks: As businesses expand their operations and user base, they often encounter performance bottlenecks in their cloud infrastructure. This can lead to slower response times, decreased reliability, and poor user experience. Identifying the root cause of these bottlenecks and optimizing performance becomes crucial for CTOs aiming to scale their cloud infrastructure successfully.
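
One practical first step in finding a bottleneck is to compare tail latency per endpoint rather than averages, since a slow minority of requests can hide behind a healthy mean. The Python sketch below ranks endpoints by their 95th-percentile latency using the nearest-rank method; the endpoint names and sample values are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def slowest_endpoints(latencies_ms: Dict[str, List[float]], p: float = 95) -> List[Tuple[str, float]]:
    """Rank endpoints by their p-th percentile latency, slowest first."""
    scores = [(name, percentile(values, p)) for name, values in latencies_ms.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical latency samples in milliseconds.
samples = {
    "/search": [120, 140, 135, 980, 150],
    "/login": [40, 45, 42, 50, 48],
}
print(slowest_endpoints(samples))  # [('/search', 980), ('/login', 50)]
```

The endpoint at the top of the list is where profiling or tracing effort is likely to pay off first.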

Cost Optimization: Scaling infrastructure often comes with increased costs. CTOs need to balance providing enough resources to handle the workload efficiently against minimizing unnecessary expenses. Without proper cost optimization measures, businesses may overspend on idle cloud resources, while under-provisioning to save money can degrade performance.

Security and Compliance: Scaling cloud infrastructure brings additional challenges in maintaining security and compliance standards. With a larger infrastructure footprint, CTOs must put robust security measures in place, such as access controls, data encryption, and monitoring. As the infrastructure grows, complying with industry regulations and data privacy laws also becomes more complicated.

Resource Allocation and Monitoring: Scaling cloud infrastructure requires a thorough understanding of resource allocation and utilization patterns. CTOs must monitor and manage their cloud resources effectively to optimize performance and cost. This involves identifying underutilized resources, automating resource provisioning, and implementing efficient monitoring and alerting systems.
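
Identifying underutilized resources can start with a simple rule over utilization metrics you already collect. This Python sketch flags instances whose average CPU stays under 20% and whose peak stays under 50%; both thresholds, the `Instance` type, and the sample data are assumptions for illustration, and a real review would also weigh memory, network, and business context before downsizing anything.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class Instance:
    """Hypothetical record of one instance's CPU utilization samples (percent)."""
    name: str
    cpu_samples: List[float] = field(default_factory=list)


def underutilized(instances: List[Instance], avg_threshold: float = 20.0,
                  peak_threshold: float = 50.0) -> List[str]:
    """Return names of instances that are quiet on average and never busy."""
    flagged = []
    for inst in instances:
        if not inst.cpu_samples:
            continue  # no data yet: do not guess
        if mean(inst.cpu_samples) < avg_threshold and max(inst.cpu_samples) < peak_threshold:
            flagged.append(inst.name)
    return flagged


fleet = [
    Instance("web-1", [5, 8, 12]),            # consistently idle
    Instance("batch-1", [2, 3, 4, 5, 6, 70]),  # low average, but spiky
    Instance("db-1", [60, 70, 80]),           # busy
    Instance("new-1"),                        # no metrics yet
]
print(underutilized(fleet))  # ['web-1']
```

The peak check keeps spiky workloads such as `batch-1` off the list even though their average looks low.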

Solutions for Scaling Cloud Infrastructure for CTOs:

Horizontal Scaling: One of the most common approaches to scaling cloud infrastructure is horizontal scaling. By adding more instances or nodes to distribute the workload, businesses can achieve better performance and handle increased traffic. This approach often requires load balancing and auto-scaling mechanisms that allocate resources dynamically based on demand.
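
To make "auto-scaling based on demand" concrete: the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and it skips changes when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a simplified sketch of that rule with min/max bounds; it deliberately ignores stabilization windows, pod readiness, and multi-metric handling that the real controller applies, and the default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style calculation of how many replicas to run."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change, avoids flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds, as an autoscaler's min/max settings would.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 50% target -> scale out.
print(desired_replicas(4, 90, 50))  # 8
```

Rounding up with `ceil` errs on the side of capacity, which is usually the safer failure mode for user-facing traffic.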

Utilize Containerization and Orchestration: Containerization technologies like Docker and container orchestration platforms like Kubernetes can simplify scaling. Containers provide lightweight, isolated environments for applications, making them easier to deploy and scale. Orchestration tools let CTOs manage containers at scale by automating deployment, scaling, and management processes.

Implement Serverless Architecture: Serverless computing abstracts the infrastructure layer, allowing CTOs to focus on writing and deploying code without managing underlying servers. Serverless architectures, like AWS Lambda, scale automatically based on the incoming workload, minimizing operational complexities. This approach can significantly simplify scaling infrastructure for certain types of workloads.
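
For a sense of how little code a serverless function needs, here is a minimal sketch of a Python AWS Lambda handler: Lambda calls a function taking `(event, context)` once per event and scales the number of concurrent invocations for you. The function name is whatever you configure as the handler, and the event shape here assumes an API Gateway proxy-style request; treat the field usage as an assumption to check against your trigger.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda invokes per event; `context` carries runtime metadata."""
    # Assumes an API Gateway proxy-style event; queryStringParameters may be null.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test: Lambda passes a real context object; None suffices here.
print(lambda_handler({"queryStringParameters": {"name": "dev"}}, None))
```

Nothing in the handler mentions servers or instance counts, which is the point: capacity is the platform's concern.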

DevOps and Automation: Implementing DevOps practices and leveraging automation tools can streamline scaling. Automation helps ensure consistency, reduces human error and accelerates the scaling process. Continuous integration and delivery (CI/CD) pipelines enable faster and more reliable deployments, while infrastructure-as-code (IaC) tools like AWS CloudFormation or Terraform provide a declarative approach to provisioning and managing infrastructure resources.
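
The "declarative approach" of IaC tools boils down to comparing the state you declare with the state that exists and computing the changes needed. The Python sketch below is a toy model of that diff step, loosely in the spirit of a `terraform plan`; real tools also handle dependency ordering, replace-versus-update decisions, and state storage, and the resource names and specs here are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical resource specs keyed by resource name.
Spec = Dict[str, object]


def plan(desired: Dict[str, Spec], actual: Dict[str, Spec]) -> List[Tuple[str, str]]:
    """Diff declared state against current state and list the required actions."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))  # declared but missing
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))  # exists but has drifted
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))  # exists but no longer declared
    return actions


desired = {"web-sg": {"port": 443}, "cache": {"size": "small"}}
actual = {"web-sg": {"port": 80}, "old-queue": {"retention_days": 4}}
for action, name in plan(desired, actual):
    print(action, name)  # create cache, update web-sg, delete old-queue
```

Once the changes are applied, planning again returns an empty list; that idempotence is what makes declarative provisioning safe to rerun in a CI/CD pipeline.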

Scaling cloud infrastructure is critical for CTOs as businesses grow and adapt to evolving market demands. By addressing performance bottlenecks, optimizing costs, strengthening security measures, and implementing effective resource allocation and monitoring strategies, CTOs can scale their cloud infrastructure successfully. Flentas, as an AWS consulting partner, offers AWS managed services and expertise to support CTOs in overcoming the challenges associated with scaling cloud infrastructure.

As businesses continue to leverage the benefits of cloud computing, CTOs need to stay updated with the latest advancements in scaling techniques and technologies. By partnering with Flentas, CTOs can ensure that their cloud infrastructure scales seamlessly, enabling their organizations to thrive in the ever-evolving digital landscape.

For more details about our services, please visit our website – Flentas Services
