#Kubernetes distributions

k0s vs k3s - Battle of the Tiny Kubernetes distros


Kubernetes has redefined the management of containerized applications. The rich ecosystem of Kubernetes distributions testifies to its widespread adoption and versatility. Today, we compare k0s vs k3s, two unique Kubernetes distributions designed to seamlessly run Kubernetes across varied infrastructures, from cloud instances to bare metal and edge computing settings. Those with home labs will…


#k0s vs k3s#Kubernetes Cluster Efficiency#Kubernetes distributions#Kubernetes for Production Workloads#Kubernetes in Bare Metal#Kubernetes in Cloud Instances#Kubernetes in Edge Computing#Kubernetes on Virtual Machines#Lightweight Kubernetes#Running Kubernetes


Install Canonical Kubernetes on Linux | Snap Store

Fast, secure & automated application deployment, everywhere. Canonical Kubernetes is the fastest, easiest way to deploy a fully-conformant Kubernetes cluster. Harnessing pure upstream Kubernetes, this distribution adds the missing pieces (e.g. ingress, dns, networking) for a zero-ops experience. Get started in just two commands: sudo snap install k8s --classic and sudo k8s bootstrap. Read on…


Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
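The flow above can be sketched in a few lines of plain Python. This is only an in-memory illustration of the ingest, process, and store steps, not Azure SDK code: `process_batch`, `InMemoryStore`, and the event fields `device_id` and `temperature` are hypothetical names standing in for the function handler, the Cosmos DB container, and the IoT payload.

```python
from collections import defaultdict
from statistics import mean


def process_batch(events):
    """Stand-in for the processing step: average the readings per device."""
    readings = defaultdict(list)
    for event in events:
        readings[event["device_id"]].append(event["temperature"])
    return [
        {"id": device_id, "avg_temperature": mean(values), "count": len(values)}
        for device_id, values in sorted(readings.items())
    ]


class InMemoryStore:
    """Stand-in for the storage step: keeps one document per id."""

    def __init__(self):
        self.items = {}

    def upsert(self, document):
        self.items[document["id"]] = document


def run_pipeline(events, store):
    """Process one batch of ingested events and persist the results."""
    for document in process_batch(events):
        store.upsert(document)
    return store


if __name__ == "__main__":
    batch = [
        {"device_id": "sensor-a", "temperature": 20.0},
        {"device_id": "sensor-a", "temperature": 22.0},
        {"device_id": "sensor-b", "temperature": 18.0},
    ]
    print(run_pipeline(batch, InMemoryStore()).items)
```

Keeping the processing step a pure function of its input batch is what makes it easy to move into an event-triggered handler later; only the store would need to be swapped for a real database client.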

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai


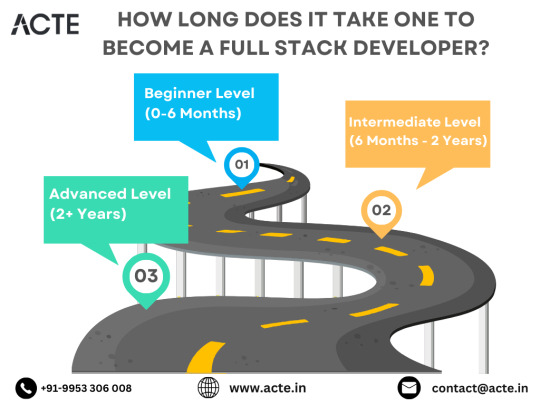

The Roadmap to Full Stack Developer Proficiency: A Comprehensive Guide

Embarking on the journey to becoming a full stack developer is an exhilarating endeavor filled with growth and challenges. Whether you're taking your first steps or seeking to elevate your skills, understanding the path ahead is crucial. In this detailed roadmap, we'll outline the stages of mastering full stack development, exploring essential milestones, competencies, and strategies to guide you through this enriching career journey.

Beginning the Journey: Novice Phase (0-6 Months)

As a novice, you're entering the realm of programming with a fresh perspective and eagerness to learn. This initial phase sets the groundwork for your progression as a full stack developer.

Grasping Programming Fundamentals:

Your journey commences with grasping the foundational elements of programming languages like HTML, CSS, and JavaScript. These are the cornerstone of web development and are essential for crafting dynamic and interactive web applications.

Familiarizing with Basic Data Structures and Algorithms:

To develop proficiency in programming, understanding fundamental data structures such as arrays, objects, and linked lists, along with algorithms like sorting and searching, is imperative. These concepts form the backbone of problem-solving in software development.

Exploring Essential Web Development Concepts:

During this phase, you'll delve into crucial web development concepts like client-server architecture, HTTP protocol, and the Document Object Model (DOM). Acquiring insights into the underlying mechanisms of web applications lays a strong foundation for tackling more intricate projects.

Advancing Forward: Intermediate Stage (6 Months - 2 Years)

As you progress beyond the basics, you'll transition into the intermediate stage, where you'll deepen your understanding and skills across various facets of full stack development.

Venturing into Backend Development:

In the intermediate stage, you'll venture into backend development, honing your proficiency in server-side languages like Node.js, Python, or Java. Here, you'll learn to construct robust server-side applications, manage data storage and retrieval, and implement authentication and authorization mechanisms.

Mastering Database Management:

A pivotal aspect of backend development is comprehending databases. You'll delve into relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. Proficiency in database management systems and design principles enables the creation of scalable and efficient applications.

Exploring Frontend Frameworks and Libraries:

In addition to backend development, you'll deepen your expertise in frontend technologies. You'll explore prominent frameworks and libraries such as React, Angular, or Vue.js, streamlining the creation of interactive and responsive user interfaces.

Learning Version Control with Git:

Version control is indispensable for collaborative software development. During this phase, you'll familiarize yourself with Git, a distributed version control system, to manage your codebase, track changes, and collaborate effectively with fellow developers.

Achieving Mastery: Advanced Phase (2+ Years)

As you ascend in your journey, you'll enter the advanced phase of full stack development, where you'll refine your skills, tackle intricate challenges, and delve into specialized domains of interest.

Designing Scalable Systems:

In the advanced stage, focus shifts to designing scalable systems capable of managing substantial volumes of traffic and data. You'll explore design patterns, scalability methodologies, and cloud computing platforms like AWS, Azure, or Google Cloud.

Embracing DevOps Practices:

DevOps practices play a pivotal role in contemporary software development. You'll delve into continuous integration and continuous deployment (CI/CD) pipelines, infrastructure as code (IaC), and containerization technologies such as Docker and Kubernetes.

Specializing in Niche Areas:

With experience, you may opt to specialize in specific domains of full stack development, whether it's frontend or backend development, mobile app development, or DevOps. Specialization enables you to deepen your expertise and pursue career avenues aligned with your passions and strengths.

Conclusion:

Becoming a proficient full stack developer is a transformative journey that demands dedication, resilience, and perpetual learning. By following the roadmap outlined in this guide and maintaining a curious and adaptable mindset, you'll navigate the complexities and opportunities inherent in the realm of full stack development. Remember, mastery isn't merely about acquiring technical skills but also about fostering collaboration, embracing innovation, and contributing meaningfully to the ever-evolving landscape of technology.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology


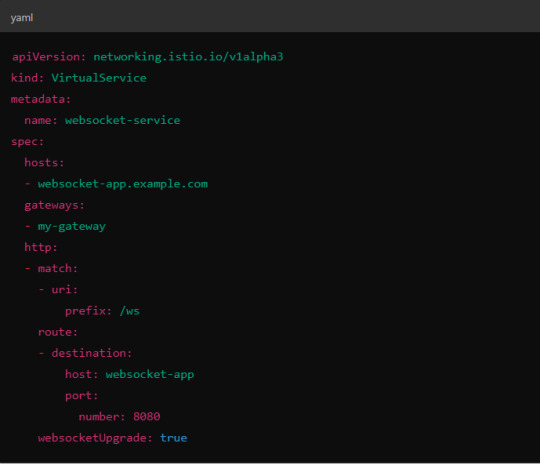

Load Balancing Web Sockets with K8s/Istio

When load balancing WebSockets in a Kubernetes (K8s) environment with Istio, there are several considerations to ensure persistent, low-latency connections. WebSockets require special handling because they are long-lived, bidirectional connections, which are different from standard HTTP request-response communication. Here’s a guide to implementing load balancing for WebSockets using Istio.

1. Enable WebSocket Support in Istio

By default, Istio supports WebSocket connections, but certain configurations may need tweaking. You should ensure that:

Destination rules and VirtualServices are configured appropriately to allow WebSocket traffic.

Example VirtualService Configuration.

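Here, websocketUpgrade: true explicitly allows WebSocket traffic on the route. Note that this field belongs to early Istio releases; it was later removed because current releases proxy WebSocket upgrades by default, so on a recent installation a plain HTTP route is enough.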

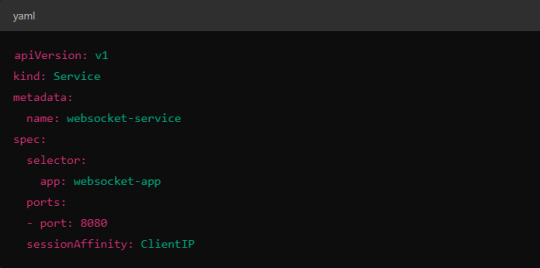
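As a rough sketch of what such a VirtualService could look like on a current Istio release, the Python below builds the manifest as a dictionary and prints it as JSON, which kubectl accepts in place of YAML. The resource name, host names, gateway name, port, and the /ws prefix are all hypothetical, and the sketch omits websocketUpgrade on the assumption that the mesh proxies upgrades by default.

```python
import json


def websocket_virtual_service(name, host, gateway, backend, port, prefix="/ws"):
    """Build a VirtualService that routes one URI prefix to a backend service."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {
            "hosts": [host],
            "gateways": [gateway],
            "http": [
                {
                    # WebSocket handshakes arrive as ordinary HTTP requests,
                    # so a normal HTTP route matches them.
                    "match": [{"uri": {"prefix": prefix}}],
                    "route": [
                        {"destination": {"host": backend, "port": {"number": port}}}
                    ],
                }
            ],
        },
    }


if __name__ == "__main__":
    # All names below are placeholders for illustration only.
    manifest = websocket_virtual_service(
        "chat", "chat.example.com", "chat-gateway", "chat-backend", 8080
    )
    print(json.dumps(manifest, indent=2))
```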

2. Session Affinity (Sticky Sessions)

In WebSocket applications, sticky sessions (session affinity) are often necessary to keep a client tied to the same backend pod. An established WebSocket connection stays on the pod that accepted it, but without session affinity a reconnect, or any handshake or fallback request that precedes the upgrade, can be routed to a different pod that holds none of the client's session state.

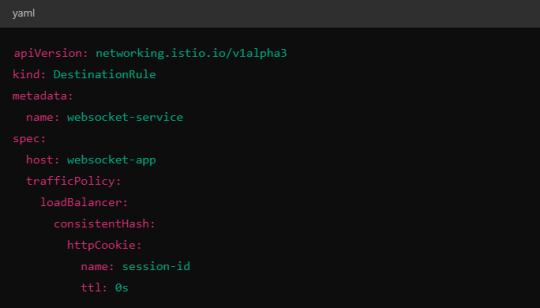

Implementing Session Affinity in Istio.

Session affinity is typically achieved by setting the sessionAffinity field to ClientIP at the Kubernetes service level.

In Istio, you might also control affinity using headers. For example, Istio can route traffic based on headers by configuring a VirtualService to ensure connections stay on the same backend.

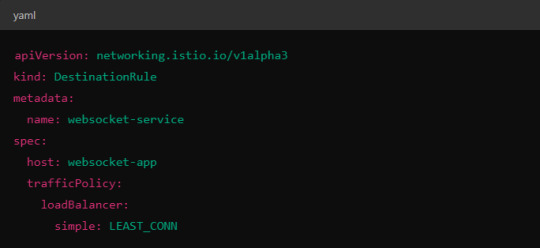
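A minimal sketch of the Service-level setting follows, again as a Python dictionary rendered to JSON. The service name, the app label, and the port are hypothetical; sessionAffinity and sessionAffinityConfig are standard Service fields, and 10800 seconds is the Kubernetes default affinity timeout.

```python
import json


def sticky_service(name, app_label, port, affinity_timeout_s=10800):
    """Build a Service that pins each client IP to one backend pod."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": app_label},
            "ports": [{"name": "http", "port": port, "targetPort": port}],
            # Route repeat connections from the same client IP to the same pod.
            "sessionAffinity": "ClientIP",
            "sessionAffinityConfig": {
                "clientIP": {"timeoutSeconds": affinity_timeout_s}
            },
        },
    }


if __name__ == "__main__":
    # "chat-backend" and "chat" are placeholder names.
    print(json.dumps(sticky_service("chat-backend", "chat", 8080), indent=2))
```

Client-IP affinity is coarse: clients behind a shared NAT address all land on the same pod, which is one reason header-based or cookie-based hashing is sometimes preferred.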

3. Load Balancing Strategy

Since WebSocket connections are long-lived, round-robin or random load balancing strategies can lead to unbalanced workloads across pods. To address this, you may consider using least connection or consistent hashing algorithms to ensure that existing connections are efficiently distributed.

Load Balancer Configuration in Istio.

Istio allows you to specify different load balancing strategies in the DestinationRule for your services. For WebSockets, the LEAST_REQUEST strategy (which replaces the deprecated LEAST_CONN setting) may be more appropriate.

Alternatively, you could use consistent hashing for a more sticky routing based on connection properties like the user session ID.

This configuration ensures that connections with the same session ID go to the same pod.
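The two options can be sketched as a single helper that emits a DestinationRule with either policy. The rule name, host, and the x-session-id header are hypothetical; the sketch assumes the client sends its session ID in a request header, and it uses LEAST_REQUEST, the current name for least-connection-style balancing in Istio.

```python
import json


def websocket_destination_rule(name, host, session_header=None):
    """Build a DestinationRule using consistent hashing or least-request balancing."""
    if session_header:
        # Hash on a header so requests with the same session ID reach the same pod.
        load_balancer = {"consistentHash": {"httpHeaderName": session_header}}
    else:
        # Prefer the pod with the fewest outstanding requests.
        load_balancer = {"simple": "LEAST_REQUEST"}
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": name},
        "spec": {"host": host, "trafficPolicy": {"loadBalancer": load_balancer}},
    }


if __name__ == "__main__":
    # Placeholder names; "x-session-id" is an assumed header, not an Istio default.
    print(json.dumps(websocket_destination_rule("chat", "chat-backend"), indent=2))
    print(json.dumps(
        websocket_destination_rule("chat", "chat-backend", "x-session-id"), indent=2
    ))
```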

4. Scaling Considerations

WebSocket applications can handle a large number of concurrent connections, so you’ll need to ensure that your Kubernetes cluster can scale appropriately.

Horizontal Pod Autoscaler (HPA): Use an HPA to automatically scale your pods based on metrics like CPU, memory, or custom metrics such as open WebSocket connections.

Istio Autoscaler: You may also need to scale Istio itself, in particular the ingress gateway pods that carry the connections, as well as the control plane, as WebSocket connections increase.
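As an illustration of scaling on open connections, the sketch below builds an autoscaling/v2 HorizontalPodAutoscaler that targets an average number of connections per pod. The metric name websocket_connections is hypothetical, and the sketch assumes a custom metrics adapter already exposes it per pod; without such an adapter the HPA has nothing to read.

```python
import json


def websocket_hpa(deployment, min_replicas, max_replicas, connections_per_pod,
                  metric_name="websocket_connections"):
    """Build an HPA that scales a Deployment on a per-pod custom metric."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Pods",
                    "pods": {
                        "metric": {"name": metric_name},
                        # Add pods when the average per pod exceeds this value.
                        "target": {
                            "type": "AverageValue",
                            "averageValue": str(connections_per_pod),
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # "chat-backend" and the thresholds are placeholder values.
    print(json.dumps(websocket_hpa("chat-backend", 2, 10, 1000), indent=2))
```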

5. Connection Timeouts and Keep-Alive

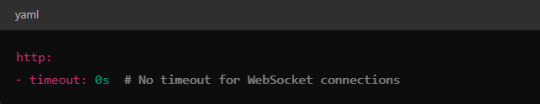

Ensure that both your WebSocket clients and the Istio proxy (Envoy) are configured for long-lived connections. Some settings that need attention:

Timeouts: In VirtualService, make sure there are no aggressive timeout settings that would prematurely close WebSocket connections.

Keep-Alive Settings: You can also adjust the keep-alive settings at the Envoy level if necessary. Envoy, the proxy used by Istio, supports long-lived WebSocket connections out-of-the-box, but custom keep-alive policies can be configured.

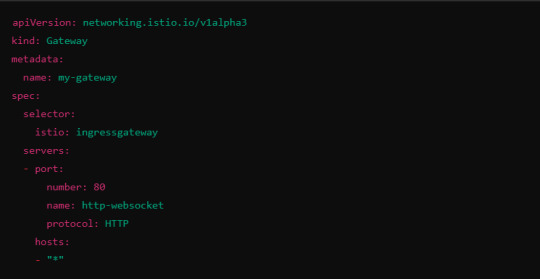
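One way to express a keep-alive policy is through the TCP connection pool settings of a DestinationRule, sketched below. The rule name, host, and the timing values are hypothetical and would need tuning against whatever idle timeouts sit between client and pod.

```python
import json


def keepalive_destination_rule(name, host, idle_before_probe="600s",
                               probe_interval="60s", probes=3):
    """Build a DestinationRule that enables TCP keep-alive towards a backend."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": name},
        "spec": {
            "host": host,
            "trafficPolicy": {
                "connectionPool": {
                    "tcp": {
                        "tcpKeepalive": {
                            # Idle time before the first probe is sent.
                            "time": idle_before_probe,
                            # Gap between successive probes.
                            "interval": probe_interval,
                            # Unanswered probes before the connection is dropped.
                            "probes": probes,
                        }
                    }
                }
            },
        },
    }


if __name__ == "__main__":
    # Placeholder names and values for illustration only.
    print(json.dumps(keepalive_destination_rule("chat-keepalive", "chat-backend"), indent=2))
```

TCP keep-alive only keeps the transport from looking idle to intermediate devices; application-level ping/pong frames are still useful for detecting a peer that has stopped responding.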

6. Ingress Gateway Configuration

If you're using an Istio Ingress Gateway, ensure that it is configured to handle WebSocket traffic. The gateway should allow for WebSocket connections on the relevant port.

This configuration ensures that the Ingress Gateway can handle WebSocket upgrades and correctly route them to the backend service.
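A Gateway along these lines might look like the sketch below. The gateway name and host are hypothetical, and the selector assumes the default ingress gateway deployment carrying the label istio: ingressgateway; a customized installation may use a different label.

```python
import json


def websocket_gateway(name, hosts, port=80):
    """Build a Gateway that accepts HTTP traffic, including WebSocket upgrades."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": name},
        "spec": {
            # Assumed label of the default ingress gateway pods.
            "selector": {"istio": "ingressgateway"},
            "servers": [
                {
                    "port": {"number": port, "name": "http", "protocol": "HTTP"},
                    "hosts": list(hosts),
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder gateway name and host.
    print(json.dumps(websocket_gateway("chat-gateway", ["chat.example.com"]), indent=2))
```

A VirtualService then binds to this Gateway by listing its name under gateways.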

Summary of Key Steps

Enable WebSocket support in Istio’s VirtualService.

Use session affinity to tie WebSocket connections to the same backend pod.

Choose an appropriate load balancing strategy, such as least connection or consistent hashing.

Set timeouts and keep-alive policies to ensure long-lived WebSocket connections.

Configure the Ingress Gateway to handle WebSocket traffic.

By properly configuring Istio, Kubernetes, and your WebSocket service, you can efficiently load balance WebSocket connections in a microservices architecture.

#kubernetes#websockets#Load Balancing#devops#linux#coding#programming#Istio#virtualservices#Load Balancer#Kubernetes cluster#gateway#python#devlog#github#ansible


Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

    my_namespace/
    └── my_collection/
        ├── docs/
        ├── plugins/
        │   ├── modules/
        │   ├── inventory/
        │   └── ...
        ├── roles/
        ├── playbooks/
        ├── README.md
        └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz
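Before building, it can help to confirm that the metadata in galaxy.yml is complete, since the tarball name is derived from it. The sketch below is a hypothetical pre-build check, not part of ansible-galaxy: it works on an already-parsed dictionary, and the list of required keys reflects the commonly documented galaxy.yml fields and should be checked against your Ansible version.

```python
# Keys assumed to be required in galaxy.yml (verify against your Ansible version).
REQUIRED_KEYS = ("namespace", "name", "version", "readme", "authors")


def missing_galaxy_keys(metadata):
    """Return the required galaxy.yml keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not metadata.get(key)]


def artifact_name(metadata):
    """Return the tarball name in namespace-name-version.tar.gz form."""
    return "{namespace}-{name}-{version}.tar.gz".format(**metadata)


if __name__ == "__main__":
    # Example metadata as it might look after parsing galaxy.yml.
    metadata = {
        "namespace": "my_namespace",
        "name": "my_collection",
        "version": "1.0.0",
        "readme": "README.md",
        "authors": ["Example Author"],
    }
    print(missing_galaxy_keys(metadata))
    print(artifact_name(metadata))
```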

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

    collections:
      - name: my_namespace.my_collection
        version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

    ---
    - name: Example Playbook
      hosts: localhost
      tasks:
        - name: Use a module from the collection
          my_namespace.my_collection.my_module:
            param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing mounts, sysctl settings, file ACLs, and SSH authorized keys.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws


Journey to DevOps

The concept of “DevOps” has been gaining traction in the IT sector for several years. It promotes teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. The approach has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article, we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

To pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with a programming language such as Python, Java, Ruby, or PHP is essential, as is knowledge of operating systems, databases, and networking.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is used extensively by DevOps teams for code repository management. It tracks code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that tracks the health and performance of IT infrastructure. It helps identify and resolve issues in real time and supports the high availability and reliability of IT systems.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It automates the provisioning and configuration of IT resources and ensures consistency between development and production environments.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

With these steps, you can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are well suited to building serverless applications that respond to events in real time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.


Top 5 Proven Strategies for Building Scalable Software Products in 2025

Building scalable software products is essential in today's dynamic digital environment, where user demands and data volumes can surge unexpectedly. Scalability ensures that your software can handle increased loads without compromising performance, providing a seamless experience for users. This blog delves into best practices for building scalable software, drawing insights from industry experts and resources like XillenTech's guide on the subject.

Understanding Software Scalability

Software scalability refers to the system's ability to handle growing amounts of work or its potential to accommodate growth. This growth can manifest as an increase in user traffic, data volume, or transaction complexity. Scalability is typically categorized into two types:

Vertical Scaling: Enhancing the capacity of existing hardware or software by adding resources like CPU, RAM, or storage.

Horizontal Scaling: Expanding the system by adding more machines or nodes, distributing the load across multiple servers.

Both scaling methods are crucial, and the choice between them depends on the specific needs and architecture of the software product.
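
To make horizontal scaling concrete, here is a minimal sketch of a round-robin dispatcher that spreads requests across a pool of nodes. The class name `RoundRobinBalancer` and the node names are hypothetical, chosen only for illustration; a production load balancer would also track node health and capacity.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Distributes requests across a fixed pool of nodes in turn."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one node is required")
        self._nodes = list(nodes)
        self._next_node = cycle(self._nodes)

    def route(self, request_id):
        # Pick the next node in rotation; the request itself is not inspected.
        return next(self._next_node), request_id


# Hypothetical node names; adding a node to this list is the "scale out" step.
balancer = RoundRobinBalancer(["node-a", "node-b", "node-c"])
assignments = [balancer.route(i)[0] for i in range(6)]
print(assignments)
```

With three nodes and six requests, each node receives two requests in rotation. Vertical scaling, by contrast, would leave this list at one node and give that node more CPU or RAM.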

Best Practices for Building Scalable Software

1. Adopt Microservices Architecture

Microservices architecture involves breaking down an application into smaller, independent services that can be developed, deployed, and scaled separately. This approach offers several advantages:

Independent Scaling: Each service can be scaled based on its specific demand, optimizing resource utilization.

Enhanced Flexibility: Developers can use different technologies for different services, choosing the best tools for each task.

Improved Fault Isolation: Failures in one service are less likely to impact the entire system.

Implementing microservices requires careful planning, especially in managing inter-service communication and data consistency.

2. Embrace Modular Design

Modular design complements microservices by structuring the application into distinct modules with specific responsibilities.

Ease of Maintenance: Modules can be updated or replaced without affecting the entire system.

Parallel Development: Different teams can work on separate modules simultaneously, accelerating development.

Scalability: Modules experiencing higher demand can be scaled independently.

This design principle is particularly beneficial in MVP development, where speed and adaptability are crucial.

3. Leverage Cloud Computing

Cloud platforms like AWS, Azure, and Google Cloud offer scalable infrastructure that can adjust to varying workloads.

Elasticity: Resources can be scaled up or down automatically based on demand.

Cost Efficiency: Pay-as-you-go models ensure you only pay for the resources you use.

Global Reach: Deploy applications closer to users worldwide, reducing latency.

Cloud-native development, incorporating containers and orchestration tools like Kubernetes, further enhances scalability and deployment flexibility.
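
As one illustration of elasticity, the Kubernetes Horizontal Pod Autoscaler documentation describes a proportional rule: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies only that rule. The function name and the floor of one replica are assumptions made here, and the real controller also applies a tolerance band, min/max bounds, and stabilization windows that are omitted.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Replica count suggested by the proportional scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Assumption: never scale below one replica.
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))
```

The first call suggests 6 replicas and the second suggests 2, which is the "scale up or down based on demand" behavior described above.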

4. Implement Caching Strategies

Caching involves storing frequently accessed data in a temporary storage area to reduce retrieval times. Effective caching strategies:

Reduce Latency: Serve data faster by avoiding repeated database queries.

Lower Server Load: Decrease the number of requests hitting the backend systems.

Enhance User Experience: Provide quicker responses, improving overall satisfaction.

Tools like Redis and Memcached are commonly used for implementing caching mechanisms.
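
The caching idea can be sketched with a small in-memory cache that uses the cache-aside pattern and a time-to-live. This is a stand-in for a tool like Redis, not its API; `TTLCache`, `get_or_load`, and `load_user_from_db` are hypothetical names used only for illustration.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        entry = self._store.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the slow lookup
        value = loader(key)  # cache miss or expired entry: go to the source
        self._store[key] = (now + self._ttl, value)
        return value


db_calls = []


def load_user_from_db(user_id):
    # Hypothetical stand-in for a database query.
    db_calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_load(42, load_user_from_db)
second = cache.get_or_load(42, load_user_from_db)
print(len(db_calls))  # the second lookup is served from the cache
```

The TTL is the main design choice: a longer value lowers backend load further but increases the chance of serving stale data.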

5. Prioritize Continuous Monitoring and Performance Testing

Regular monitoring and testing are vital to ensure the software performs optimally as it scales.

Load Testing: Assess how the system behaves under expected and peak loads.

Stress Testing: Determine the system's breaking point and how it recovers from failures.

Real-Time Monitoring: Use tools like New Relic or Datadog to track performance metrics continuously.

These practices help in identifying bottlenecks and ensuring the system can handle growth effectively.
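
A basic load test can be sketched with the standard library alone: fire concurrent requests and summarize the latencies. Here `handle_request` is a hypothetical stand-in for the system under test, and the request count and concurrency are arbitrary; dedicated tools offer far more realistic traffic shaping.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(n):
    """Hypothetical stand-in for the system under test."""
    time.sleep(0.001)
    return n * 2


def timed_call(n):
    start = time.perf_counter()
    handle_request(n)
    return time.perf_counter() - start


def run_load_test(total_requests, concurrency):
    # Run the calls on a thread pool and collect per-request latencies.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        "p95_s": latencies[int(0.95 * (len(latencies) - 1))],
        "max_s": latencies[-1],
    }


report = run_load_test(total_requests=50, concurrency=10)
print(report)
```

Raising `concurrency` until the 95th-percentile latency degrades sharply gives a rough view of where the system starts to struggle, which is the goal of stress testing.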

Common Pitfalls in Scalable Software Development

While aiming for scalability, it's essential to avoid certain pitfalls:

Overengineering: Adding unnecessary complexity can hinder development and maintenance.

Neglecting Security: Scaling should not compromise the application's security protocols.

Inadequate Testing: Failing to test under various scenarios can lead to unforeseen issues in production.

Balancing scalability with simplicity and robustness is key to successful software development.

Conclusion

Building scalable software products involves strategic planning, adopting the right architectural patterns, and leveraging modern technologies. By implementing microservices, embracing modular design, utilizing cloud computing, and maintaining rigorous testing and monitoring practices, businesses can ensure their software scales effectively with growing demands. Avoiding common pitfalls and focusing on continuous improvement will further enhance scalability and performance.


K3s vs K8s: The Best Kubernetes Home Lab Distribution


Kubernetes, a project under the Cloud Native Computing Foundation, is a popular container orchestration platform for managing distributed systems. Many who are running home labs or want to get into running Kubernetes in their home lab to get experience with modern applications may wonder which Kubernetes distribution is best to use. Today, we will compare the certified Kubernetes distribution…


What Are the Challenges of Scaling a Fintech Application?

Scaling a fintech application is no small feat. What starts as a minimum viable product (MVP) quickly becomes a complex digital ecosystem that must support thousands, or even millions, of users. As financial technology continues to disrupt traditional banking and investment models, the pressure to scale efficiently, securely, and reliably has never been greater.

Whether you're offering digital wallets, lending platforms, or investment tools, successful fintech software development requires more than just technical expertise. It demands a deep understanding of regulatory compliance, user behavior, security, and system architecture. As Fintech Services expand, so too do the challenges of maintaining performance and trust at scale.

Below are the key challenges companies face when scaling a fintech application.

1. Regulatory Compliance Across Regions

One of the biggest hurdles in scaling a fintech product is adapting to varying financial regulations across different markets. While your application may be fully compliant in your home country, entering a new region might introduce requirements like additional identity verification, data localization laws, or different transaction monitoring protocols.

Scaling means compliance must be baked into your infrastructure. Your tech stack must allow for modular integration of different compliance protocols based on regional needs. Failing to do so not only risks penalties but also erodes user trust—something that's critical for any company offering Fintech Services.

2. Maintaining Data Security at Scale

Security becomes exponentially more complex as the number of users grows. More users mean more data, more access points, and more potential vulnerabilities. While encryption and multi-factor authentication are baseline requirements, scaling applications must go further with role-based access control (RBAC), real-time threat detection, and zero-trust architecture.

In fintech software development, a single breach can be catastrophic, affecting not only financial data but also brand reputation. Ensuring that your security architecture scales alongside user growth is essential.
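
Role-based access control can be illustrated with a small permission check. The roles and permission names below are hypothetical examples, not a recommended policy; the point of the sketch is the deny-by-default lookup.

```python
# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS = {
    "customer": {"view_own_balance", "create_transfer"},
    "support_agent": {"view_customer_profile"},
    "compliance_officer": {
        "view_customer_profile",
        "view_transactions",
        "freeze_account",
    },
}


def is_allowed(roles, permission):
    """True if any of the user's roles grants the permission."""
    # Unknown roles map to an empty set, so access is denied by default.
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["support_agent"], "freeze_account"))
print(is_allowed(["support_agent", "compliance_officer"], "freeze_account"))
print(is_allowed(["unknown_role"], "view_transactions"))
```

Only the second check passes. Keeping permissions attached to roles rather than to individual users is what lets the scheme stay manageable as the user base grows.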

3. System Performance and Reliability

As usage grows, so do demands on your servers, databases, and APIs. Fintech users expect fast, seamless transactions—delays or downtimes can directly affect business operations and customer satisfaction.

Building a highly available and resilient system often involves using microservices, container orchestration tools like Kubernetes, and distributed databases. Load balancing, horizontal scaling, and real-time monitoring must be in place to ensure consistent performance under pressure.

4. Complexity of Integrations

Fintech applications typically rely on multiple third-party integrations—payment processors, banking APIs, KYC/AML services, and more. As the application scales, managing these integrations becomes increasingly complex. Each new integration adds potential points of failure and requires monitoring, updates, and compliance reviews.

Furthermore, integrating with legacy banking systems, which may not support modern protocols or cloud infrastructure, adds another layer of difficulty.

5. User Experience and Onboarding

Scaling is not just about infrastructure—it’s about people. A growing user base means more diverse needs, devices, and technical competencies. Ensuring that onboarding remains simple, intuitive, and compliant can be a challenge.

If users face friction during onboarding—like lengthy KYC processes or difficult navigation—they may abandon the application altogether. As your audience expands globally, you may also need to support multiple languages, currencies, and localization preferences, all of which add complexity to your frontend development.

6. Data Management and Analytics

Fintech applications generate vast amounts of transactional, behavioral, and compliance data. Scaling means implementing robust data infrastructure that can collect, store, and analyze this data in real time. You’ll need to ensure that your analytics pipeline can handle increasing data volumes without added latency or errors.

Moreover, actionable insights from data become critical for fraud detection, user engagement strategies, and personalization. Your data stack should evolve from basic reporting to real-time analytics, machine learning, and predictive modeling.

7. Team and Process Scalability

As your application scales, so must your team and internal processes. Engineering teams must adopt agile methodologies and DevOps practices to keep pace with rapid iteration. Communication overhead increases, and maintaining product quality while shipping faster becomes a balancing act.

Documentation, version control, and automated testing become non-negotiable components of scalable development. Without them, technical debt grows quickly and future scalability is compromised.

8. Cost Management

Finally, scaling often leads to ballooning infrastructure and operational costs. Cloud services, third-party integrations, and security tools can become increasingly expensive. Without proper monitoring, you might find yourself with an unsustainable burn rate.

Cost-efficient scaling requires regular performance audits, architecture optimization, and intelligent use of auto-scaling and serverless technologies.

Real-World Perspective

A practical example of tackling these challenges can be seen in the approach used by Xettle Technologies, which emphasizes modular architecture, automated compliance workflows, and real-time analytics to support scaling without sacrificing security or performance. Their strategy demonstrates how thoughtful planning and the right tools can ease the complexities of scale in fintech ecosystems.

Conclusion

Scaling a fintech application is a multifaceted challenge that touches every part of your business—from backend systems to regulatory frameworks. It's not just about growing bigger, but about growing smarter. The demands of fintech software development extend far beyond coding; they encompass strategic planning, regulatory foresight, and deep customer empathy.

Companies that invest early in scalable architecture, robust security, and user-centric design are more likely to thrive in the competitive world of Fintech Services. By anticipating these challenges and addressing them proactively, you set a foundation for sustainable growth and long-term success.


ARM Embedded Controllers ARMxy and Datadog for Machine Monitoring and Data Analytics

Case Details

ARM Embedded Controllers

ARM-based embedded controllers are low-power, high-performance microcontrollers or processors widely used in industrial automation, IoT, smart devices, and edge computing. Key features include:

High Efficiency: ARM architecture excels in energy efficiency, ideal for real-time data processing and complex computations.

Real-Time Performance: Supports Real-Time Operating Systems (RTOS) for low-latency industrial control.

Low Power Consumption: Optimized for continuous operation in sensors and monitoring nodes.

Flexibility: Compatible with industrial protocols (CAN, Modbus, MTConnect).

Scalability: Ranges from the Cortex-M series for basic tasks to the Cortex-A series for advanced edge computing.

Datadog

Datadog is a leading cloud-native monitoring and analytics platform for infrastructure, application performance, and log management. Core capabilities:

Data Aggregation: Collects metrics, logs, and traces from servers, cloud services, and IoT devices.

Custom Dashboards: Real-time visualization of trends and anomalies.

Smart Alerts: ML-driven anomaly detection and threshold-based notifications.

Integration Ecosystem: 600+ pre-built integrations (AWS, Kubernetes, etc.).

Predictive Analytics: Identifies patterns to forecast failures or bottlenecks.

Benefits of Combining ARM Controllers with Datadog

1. End-to-End Machine Monitoring Solution

Edge Data Collection: ARM controllers act as edge nodes, interfacing directly with sensors (e.g., temperature, vibration, current sensors).

Cloud-Based Intelligence: Data sent via MQTT/HTTP to Datadog for AI/ML-driven analysis (e.g., detecting abnormal vibration frequencies).

Use Case: Predictive maintenance for factory CNC machines by correlating sensor data with operational logs.

2. Low Latency and Real-Time Response

Edge Preprocessing: ARM controllers perform local computations (e.g., FFT analysis), reducing bandwidth usage by uploading only critical data.

Instant Alerts: Datadog triggers alerts via Slack/email for threshold breaches (e.g., overheating), minimizing downtime.
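
The edge preprocessing step can be sketched as follows: compute the dominant vibration frequency locally and upload only a small summary instead of raw samples. This uses a naive DFT from the standard library in place of an optimized FFT, and the function names, sample rate, and alert threshold are all assumptions for illustration. Sending the summary to a monitoring backend is left out.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate_hz):
    """Return (frequency_hz, magnitude) of the strongest non-DC component."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        # Naive DFT for bin k; fine for small windows, O(n^2) overall.
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples)
        )
        mag = abs(coeff) * 2 / n
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return best_bin * sample_rate_hz / n, best_mag


def summarize_window(samples, sample_rate_hz, alert_hz):
    """Build the small payload to upload instead of the raw samples."""
    freq, mag = dominant_frequency(samples, sample_rate_hz)
    return {"dominant_hz": freq, "amplitude": round(mag, 3), "alert": freq >= alert_hz}


# Synthetic vibration signal: a 50 Hz sine sampled at 400 Hz for 64 samples.
RATE = 400
signal = [math.sin(2 * math.pi * 50 * i / RATE) for i in range(64)]
summary = summarize_window(signal, RATE, alert_hz=40)
print(summary)
```

For this synthetic signal the summary reports a 50 Hz peak and raises the alert flag, so three fields are uploaded in place of 64 raw samples.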

3. Remote Monitoring and Centralized Management

Global Device Oversight: Monitor distributed ARM devices worldwide via Datadog’s unified dashboard.

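OTA Updates: Firmware updates are typically deployed through a separate over-the-air update mechanism, while Datadog can track rollout status and device health afterward, reducing on-site maintenance.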

4. Cost and Energy Efficiency

Bandwidth Optimization: Edge computing reduces cloud storage and transmission costs.

Power-Saving Design: ARM’s low power consumption aligns with Datadog’s pay-as-you-go model for scalable deployments.

5. Scalability and Ecosystem Compatibility

Industrial Protocol Support: ARM controllers integrate with Modbus, OPC UA; Datadog ingests data via plugins or custom APIs.

Elastic Scalability: Datadog handles data from single devices to thousands of nodes without architectural overhauls.

6. Data-Driven Predictive Maintenance

Historical Insights: Datadog stores long-term data to train models predicting equipment lifespan (e.g., bearing wear trends).

Root Cause Analysis: Combine ARM controller logs with metrics to diagnose issues (e.g., power fluctuations causing downtime).

Typical Applications

Industry 4.0 Production Lines: Monitor CNC machine health with Datadog optimizing production schedules.

Wind Turbine Monitoring: ARM nodes collect gearbox vibration data; Datadog predicts failures to schedule maintenance.

Smart Buildings: ARM-based sensor networks track HVAC performance, with Datadog adjusting energy usage for sustainability.

Conclusion

The integration of ARM embedded controllers and Datadog delivers a robust machine monitoring framework, combining edge reliability with cloud-powered intelligence. The ARMxy BL410 series is equipped with a 1 TOPS NPU and low-power data acquisition, while Datadog enables predictive analytics and global scalability. This synergy is ideal for industrial automation, energy management, and smart manufacturing, driving efficiency and reducing operational risks.


Big Data Course in Kochi: Transforming Careers in the Age of Information

In today’s hyper-connected world, data is being generated at an unprecedented rate. Every click on a website, every transaction, every social media interaction — all of it contributes to the vast oceans of information known as Big Data. Organizations across industries now recognize the strategic value of this data and are eager to hire professionals who can analyze and extract meaningful insights from it.

This growing demand has turned the big data course in Kochi into one of the most sought-after educational programs for tech enthusiasts, IT professionals, and graduates looking to enter the data-driven future of work.

Understanding Big Data and Its Relevance

Big Data refers to datasets that are too large or complex for traditional data processing applications. It’s commonly defined by the 5 V’s:

Volume – Massive amounts of data generated every second

Velocity – The speed at which data is created and processed

Variety – Data comes in various forms, from structured to unstructured

Veracity – Quality and reliability of the data

Value – The insights and business benefits extracted from data

These characteristics make Big Data a crucial resource for industries ranging from healthcare and finance to retail and logistics. Trained professionals are needed to collect, clean, store, and analyze this data using modern tools and platforms.

Why Enroll in a Big Data Course?

Pursuing a big data course in Kochi can open up diverse opportunities in data analytics, data engineering, business intelligence, and beyond. Here's why it's a smart move:

1. High Demand for Big Data Professionals

There’s a huge gap between the demand for big data professionals and the current supply. Companies are actively seeking individuals who can handle tools like Hadoop, Spark, and NoSQL databases, as well as data visualization platforms.

2. Lucrative Career Opportunities

Big data engineers, analysts, and architects earn some of the highest salaries in the tech sector. Even entry-level roles can offer impressive compensation packages, especially with relevant certifications.

3. Cross-Industry Application

Skills learned in a big data course in Kochi are transferable across sectors such as healthcare, e-commerce, telecommunications, banking, and more.

4. Enhanced Decision-Making Skills

With big data, companies make smarter business decisions based on predictive analytics, customer behavior modeling, and real-time reporting. Learning how to influence those decisions makes you a valuable asset.

What You’ll Learn in a Big Data Course

A top-tier big data course in Kochi covers both the foundational concepts and the technical skills required to thrive in this field.

1. Core Concepts of Big Data

Understanding what makes data “big,” how it's collected, and why it matters is crucial before diving into tools and platforms.

2. Data Storage and Processing

You'll gain hands-on experience with distributed systems such as:

Hadoop Ecosystem: HDFS, MapReduce, Hive, Pig, HBase

Apache Spark: Real-time processing and machine learning capabilities

NoSQL Databases: MongoDB, Cassandra for unstructured data handling
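
The MapReduce model behind Hadoop can be sketched in a few lines of single-process Python. This is a toy word count that mirrors the map, shuffle, and reduce phases. It does not use Hadoop's API, and the function names and input splits are assumptions for illustration.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}


# Each string stands in for a split that a separate mapper would process.
splits = ["big data big insights", "data pipelines move data"]
emitted = [pair for split in splits for pair in map_phase(split)]
word_counts = reduce_phase(shuffle(emitted))
print(word_counts)
```

In a real cluster the map calls run in parallel on different machines and the shuffle moves data over the network, but the data flow is the same.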

3. Data Integration and ETL

Learn how to extract, transform, and load (ETL) data from multiple sources into big data platforms.
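
A minimal ETL sketch, assuming a small CSV source and an in-memory SQLite database as the target. The sample data, table name, and cleaning rules are hypothetical; real pipelines load into distributed stores and handle rejected rows more carefully than simply dropping them.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with messy whitespace, casing, and one bad amount.
RAW_CSV = """order_id,customer,amount,currency
1001, alice ,19.99,usd
1002,BOB,5.00,usd
1003,carol,not-a-number,usd
"""


def extract(text):
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        customer = row["customer"].strip().lower()
        clean.append((int(row["order_id"]), customer, amount, row["currency"].upper()))
    return clean


def load(conn, rows):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Two of the three rows survive the transform step; the row with an unparseable amount is filtered out before loading.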

4. Data Analysis and Visualization

Training includes tools for querying large datasets and visualizing insights using:

Tableau

Power BI

Python/R libraries for data visualization

5. Programming Skills

Big data professionals often need to be proficient in:

Java

Python

Scala

SQL

6. Cloud and DevOps Integration

Modern data platforms often operate on cloud infrastructure. You’ll gain familiarity with AWS, Azure, and GCP, along with containerization (Docker) and orchestration (Kubernetes).

7. Project Work

A well-rounded course includes capstone projects simulating real business problems—such as customer segmentation, fraud detection, or recommendation systems.

Kochi: A Thriving Destination for Big Data Learning

Kochi has evolved into a leading IT and educational hub in South India, making it an ideal place to pursue a big data course in Kochi.

1. IT Infrastructure

Home to major IT parks like Infopark and SmartCity, Kochi hosts numerous startups and global IT firms that actively recruit big data professionals.

2. Cost-Effective Learning

Compared to metros like Bangalore or Hyderabad, Kochi offers high-quality education and living at a lower cost.

3. Talent Ecosystem

With a strong base of engineering colleges and tech institutes, Kochi provides a rich talent pool and a thriving tech community for networking.

4. Career Opportunities

Kochi’s booming IT industry provides immediate placement potential after course completion, especially for well-trained candidates.

What to Look for in a Big Data Course?

When choosing a big data course in Kochi, consider the following:

Expert Instructors: Trainers with industry experience in data engineering or analytics

Comprehensive Curriculum: Courses should include Hadoop, Spark, data lakes, ETL pipelines, cloud deployment, and visualization tools

Hands-On Projects: Theoretical knowledge is incomplete without practical implementation

Career Support: Resume building, interview preparation, and placement assistance

Flexible Learning Options: Online, weekend, or hybrid courses for working professionals

Zoople Technologies: Leading the Way in Big Data Training

If you’re searching for a reliable and career-oriented big data course in Kochi, look no further than Zoople Technologies—a name synonymous with quality tech education and industry-driven training.

Why Choose Zoople Technologies?

Industry-Relevant Curriculum: Zoople offers a comprehensive, updated big data syllabus designed in collaboration with real-world professionals.

Experienced Trainers: Learn from data scientists and engineers with years of experience in multinational companies.

Hands-On Training: Their learning model emphasizes practical exposure, with real-time projects and live data scenarios.

Placement Assistance: Zoople has a dedicated team to help students with job readiness—mock interviews, resume support, and direct placement opportunities.

Modern Learning Infrastructure: With smart classrooms, cloud labs, and flexible learning modes, students can learn in a professional, tech-enabled environment.

Strong Alumni Network: Zoople’s graduates are placed in top firms across India and abroad, and often return as guest mentors or recruiters.

Zoople Technologies has cemented its position as a go-to institute for aspiring data professionals. By enrolling in their big data course in Kochi, you’re not just learning technology—you’re building a future-proof career.

Final Thoughts

Big data is more than a trend—it's a transformative force shaping the future of business and technology. As organizations continue to invest in data-driven strategies, the demand for skilled professionals will only grow.

By choosing a comprehensive big data course in Kochi, you position yourself at the forefront of this evolution. And with a trusted partner like Zoople Technologies, you can rest assured that your training will be rigorous, relevant, and career-ready.

Whether you're a student, a working professional, or someone looking to switch careers, now is the perfect time to step into the world of big data—and Kochi is the ideal place to begin.


Service Mesh with Istio and Linkerd: A Practical Overview

As microservices architectures continue to dominate modern application development, managing service-to-service communication has become increasingly complex. Service meshes have emerged as a solution to address these complexities — offering enhanced security, observability, and traffic management between services.

Two of the most popular service mesh solutions today are Istio and Linkerd. In this blog post, we'll explore what a service mesh is, why it's important, and how Istio and Linkerd compare in real-world use cases.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer that controls communication between services in a distributed application. Instead of hardcoding service-to-service communication logic (like retries, failovers, and security policies) into your application code, a service mesh handles these concerns externally.

Key features typically provided by a service mesh include:

Traffic management: Fine-grained control over service traffic (routing, load balancing, fault injection)

Observability: Metrics, logs, and traces that give insights into service behavior

Security: Encryption, authentication, and authorization between services (often using mutual TLS)

Reliability: Retries, timeouts, and circuit breaking to improve service resilience
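
To show what circuit breaking means in practice, here is a minimal in-process sketch. A service mesh applies this logic in the proxy layer rather than in application code, so this is an illustration of the pattern, not of how Istio or Linkerd implement it. The class, its parameters, and `flaky_service` are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures, reset_after_s, clock=time.monotonic):
        self._max_failures = max_failures
        self._reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after_s:
                raise CircuitOpenError("circuit open; failing fast")
            self._opened_at = None  # cool-down elapsed: allow a trial call
        try:
            result = func(*args)
        except Exception:
            self._failures += 1
            if self._failures >= self._max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit again
        return result


def flaky_service():
    # Hypothetical upstream that is currently down.
    raise ConnectionError("upstream unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after_s=30)
outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky_service)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")
print(outcomes)
```

The first two calls reach the failing service; the third is rejected immediately, which protects the struggling upstream from further load until the cool-down period has passed.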

Why Do You Need a Service Mesh?

As applications grow more complex, maintaining reliable and secure communication between services becomes critical. A service mesh abstracts this complexity, allowing teams to:

Deploy features faster without worrying about cross-service communication challenges

Increase application reliability and uptime

Gain full visibility into service behavior without modifying application code

Enforce security policies consistently across the environment

Introducing Istio

Istio is one of the most feature-rich service meshes available today. Originally developed by Google, IBM, and Lyft, Istio offers deep integration with Kubernetes but can also support hybrid cloud environments.

Key Features of Istio:

Advanced traffic management: Canary deployments, A/B testing, traffic shifting

Comprehensive security: Mutual TLS, policy enforcement, and RBAC (Role-Based Access Control)

Extensive observability: Integrates with Prometheus, Grafana, Jaeger, and Kiali for metrics and tracing

Extensibility: Supports custom plugins through WebAssembly (Wasm)

Ingress/Egress gateways: Manage inbound and outbound traffic effectively
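
The idea behind canary deployments and traffic shifting can be sketched as a weighted split between two versions. This example uses a hash of a request key so that a given user consistently lands on the same version. That is a simplification chosen here, not a description of how Istio distributes weighted traffic, and the function name and key format are hypothetical.

```python
import hashlib


def pick_version(request_key, canary_percent):
    """Route a stable share of keys to the canary, the rest to stable."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Send roughly 10% of a hypothetical user population to the canary.
keys = [f"user-{i}" for i in range(1000)]
canary_share = sum(pick_version(k, 10) == "canary" for k in keys) / len(keys)
print(canary_share)
```

Raising `canary_percent` in steps, while watching error rates and latency, is the essence of a gradual rollout; setting it to 0 rolls everything back to the stable version.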

Pros of Istio:

Rich feature set suitable for complex enterprise use cases

Strong integration with Kubernetes and cloud-native ecosystems

Active community and broad industry adoption

Cons of Istio:

Can be resource-heavy and complex to set up and manage

Steeper learning curve compared to lighter service meshes

Introducing Linkerd

Linkerd is often considered the original service mesh and is known for its simplicity, performance, and focus on the core essentials.

Key Features of Linkerd:

Lightweight and fast: Designed to be resource-efficient

Simple setup: Easy to install, configure, and operate

Security-first: Automatic mutual TLS between services

Observability out of the box: Includes metrics, tap (live traffic inspection), and dashboards

Kubernetes-native: Deeply integrated with Kubernetes

Pros of Linkerd:

Minimal operational complexity

Lower resource usage

Easier learning curve for teams starting with service mesh

High performance and low latency

Cons of Linkerd:

Fewer advanced traffic management features compared to Istio

Less customizable for complex use cases

Choosing the Right Service Mesh

Choosing between Istio and Linkerd largely depends on your needs:

Choose Istio if you require advanced traffic management, complex security policies, and extensive customization — typically in larger, enterprise-grade environments.

Choose Linkerd if you value simplicity, low overhead, and rapid deployment — especially in smaller teams or organizations where ease of use is critical.

Ultimately, both Istio and Linkerd are excellent choices — it’s about finding the best fit for your application landscape and operational capabilities.

Final Thoughts

Service meshes are no longer just "nice to have" for microservices — they are increasingly a necessity for ensuring resilience, security, and observability at scale. Whether you pick Istio for its powerful feature set or Linkerd for its lightweight design, implementing a service mesh can greatly enhance your service architecture.

Stay tuned — in upcoming posts, we'll dive deeper into setting up Istio and Linkerd with hands-on labs and real-world use cases!


For more details, visit www.hawkstack.com.


Cloud Microservice Market Growth Driven by Demand for Scalable and Agile Application Development Platforms

The Cloud Microservice Market: Accelerating Innovation in a Modular World

The global push toward digital transformation has redefined how businesses design, build, and deploy applications. Among the most impactful trends in recent years is the rapid adoption of cloud microservices, a modular approach to application development that offers speed, scalability, and resilience. As enterprises strive to meet the growing demand for agility and performance, the cloud microservice market is experiencing significant momentum, reshaping the software development landscape.

What Are Cloud Microservices?

At its core, a microservice architecture breaks down a monolithic application into smaller, loosely coupled, independently deployable services. Each microservice addresses a specific business capability, such as user authentication, payment processing, or inventory management. By leveraging the cloud, these services can scale independently, be deployed across multiple geographic regions, and integrate seamlessly with various platforms.

Cloud microservices differ from traditional service-oriented architectures (SOA) by emphasizing decentralization, lightweight communication (typically via REST or gRPC), and DevOps-driven automation.

Market Growth and Dynamics

The cloud microservice market is witnessing robust growth. According to recent research, the global market size was valued at over USD 1 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2030. This surge is driven by several interlocking trends:

Cloud-First Strategies: As more organizations migrate workloads to public, private, and hybrid cloud environments, microservices provide a flexible architecture that aligns with distributed infrastructure.

DevOps and CI/CD Adoption: The increasing use of continuous integration and continuous deployment pipelines has made microservices more attractive. They fit naturally into agile development cycles and allow for faster iteration and delivery.

Containerization and Orchestration Tools: Technologies like Docker and Kubernetes have become instrumental in managing and scaling microservices in the cloud. These tools offer consistency across environments and automate deployment, networking, and scaling of services.

Edge Computing and IoT Integration: As edge devices proliferate, there is a growing need for lightweight, scalable services that can run closer to the user. Microservices can be deployed to edge nodes and communicate with centralized cloud services, enhancing performance and reliability.
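To put the growth figures quoted above in perspective, the short sketch below compounds a starting value at a fixed annual rate. The inputs are assumptions for illustration only: the text says "over USD 1 billion" and "exceeding 20%", so exactly 1.0 and exactly 0.20 are treated as floor values, and the results are not reported market data.

```python
def project_market_size(base_value, cagr, years):
    """Compound a starting value at a fixed annual growth rate."""
    return base_value * (1 + cagr) ** years


# Illustrative floor values, not figures from any report.
base_2023 = 1.0  # USD billions
cagr = 0.20

for year in range(2023, 2031):
    size = project_market_size(base_2023, cagr, year - 2023)
    print(f"{year}: USD {size:.2f} billion")
```

Under these assumptions, seven years of compounding at 20% multiplies the 2023 base by roughly 3.6, which is why a seemingly modest annual rate implies a much larger market by 2030.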

Key Industry Players

Several technology giants and cloud providers are investing heavily in microservice architectures:

Amazon Web Services (AWS) offers a suite of tools like AWS Lambda, ECS, and App Mesh that support serverless and container-based microservices.

Microsoft Azure provides Azure Kubernetes Service (AKS) and Azure Functions for scalable and event-driven applications.

Google Cloud Platform (GCP) leverages Anthos and Cloud Run to help developers manage hybrid and multicloud microservice deployments.

Beyond the big three, companies like Red Hat, IBM, and VMware are also influencing the microservice ecosystem through open-source platforms and enterprise-grade orchestration tools.

Challenges and Considerations

While the benefits of cloud microservices are significant, the architecture is not without challenges:

Complexity in Management: Managing hundreds or even thousands of microservices requires robust monitoring, logging, and service discovery mechanisms.

Security Concerns: Each service represents a potential attack vector, requiring strong identity, access control, and encryption practices.

Data Consistency: Maintaining consistency and integrity across distributed systems is a persistent concern, particularly in real-time applications.

Organizations must weigh these complexities against their business needs and invest in the right tools and expertise to successfully navigate the microservice journey.
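As one example of the service discovery mechanisms mentioned above, the sketch below shows a tiny in-memory registry in which instances stay visible only while they keep sending heartbeats. The class name, the TTL value, and the addresses are hypothetical; real deployments typically rely on the discovery built into their orchestrator or a dedicated registry rather than hand-rolled code like this.

```python
import time


class ServiceRegistry:
    """Tracks service instances and hides those that stop sending heartbeats."""

    def __init__(self, ttl_seconds=30.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so expiry can be tested without sleeping
        self._instances = {}   # (service, address) -> time of last heartbeat

    def heartbeat(self, service, address):
        """Register an instance, or refresh it if it is already known."""
        self._instances[(service, address)] = self._clock()

    def deregister(self, service, address):
        """Remove an instance explicitly, for example on graceful shutdown."""
        self._instances.pop((service, address), None)

    def lookup(self, service):
        """Return sorted addresses whose last heartbeat is within the TTL."""
        now = self._clock()
        return sorted(
            addr
            for (name, addr), seen in self._instances.items()
            if name == service and now - seen <= self._ttl
        )


if __name__ == "__main__":
    registry = ServiceRegistry(ttl_seconds=30)
    registry.heartbeat("payments", "10.0.0.5:8080")
    registry.heartbeat("payments", "10.0.0.6:8080")
    print(registry.lookup("payments"))
```

Expiring entries by heartbeat age means a crashed instance drops out of lookups on its own, without anyone having to deregister it.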

The Road Ahead

As digital experiences become more demanding and users expect seamless, responsive applications, microservices will continue to play a pivotal role in enabling scalable, fault-tolerant systems. Emerging trends such as AI-driven observability, service mesh architecture, and no-code/low-code microservice platforms are poised to further simplify and enhance the development and management process.

In conclusion, the cloud microservice market is not just a technological shift; it's a foundational change in how software is conceptualized and delivered. For businesses aiming to stay competitive, embracing microservices in the cloud is no longer optional; it’s a strategic imperative.

0 notes

Text

Why Chennai Is a Thriving Tech Hub

Chennai has rapidly emerged as one of India’s foremost technology hubs, offering a dynamic ecosystem for businesses to thrive. From startups to multinational corporations, organizations seeking scalable and cost‑effective solutions turn to the best software development company in Chennai. With a robust talent pool, advanced infrastructure, and a supportive business environment, Chennai consistently delivers top‑tier software services that cater to global standards.

Understanding a Software Development Company in Chennai

A software development company in Chennai brings together expertise in multiple domains: custom application development, enterprise software, mobile apps, and emerging technologies like AI, IoT, and blockchain. These firms typically follow agile methodologies, ensuring timely delivery and iterative improvements. Here’s what you can expect from a leading Chennai‑based software partner:

Full‑Stack Development: End‑to‑end solutions covering front‑end frameworks (React, Angular, Vue) and back‑end technologies (Node.js, .NET, Java, Python).

Mobile App Engineering: Native (Swift, Kotlin) and cross‑platform (Flutter, React Native) mobile development for iOS and Android.

Cloud & DevOps: AWS, Azure, and Google Cloud deployments, automated CI/CD pipelines, containerization with Docker and Kubernetes.

Quality Assurance & Testing: Manual and automated testing services to ensure reliability, performance, and security.

UI/UX Design: User‑centric interfaces that prioritize accessibility, responsiveness, and engagement.

What Sets the Best Software Development Company in Chennai Apart

Talent and Expertise: Chennai’s educational institutions and coding bootcamps produce a steady stream of skilled engineers. The best software development companies in Chennai invest heavily in continuous training, ensuring teams stay up‑to‑date with the latest frameworks, security protocols, and best practices.

Cost‑Effectiveness Without Compromise: By leveraging competitive operational costs and local talent, Chennai firms offer attractive pricing models (fixed‑bid, time‑and‑materials, or dedicated teams) without sacrificing quality. Many global clients report savings of 30–40% compared to Western markets, while still benefiting from seasoned professionals.

Strong Communication and Transparency: English proficiency is high among Chennai’s tech workforce, facilitating clear requirements gathering and regular progress updates. Top companies implement robust project‑management tools (Jira, Trello, Asana) and schedule daily stand‑ups, sprint reviews, and monthly road‑map sessions to keep you in the loop.

Cutting‑Edge Infrastructure: Chennai’s IT parks and technology corridors, such as TIDEL Park and OMR’s “IT Corridor,” are equipped with world‑class facilities, including high‑speed internet, reliable power backup, and on‑site data centers. This infrastructure underpins uninterrupted development and deep collaboration between distributed teams.

Commitment to Security and Compliance: Whether handling GDPR‑sensitive data, implementing PCI‑DSS standards for e‑commerce, or conducting regular penetration testing, the best software development companies in Chennai prioritize security. ISO‑certified processes and ISMS frameworks ensure your project adheres to global compliance requirements.

How to Choose the Right Partner

Portfolio and Case Studies: Review a prospective partner’s past work, including industry verticals, technology stacks, scalability achievements, and client testimonials. Look for success stories in your domain to validate domain‑specific expertise.

Engagement Model: Decide whether you need a project‑based model, a dedicated offshore team, or staff augmentation. The best software development company in Chennai will offer flexible engagement options aligned with your budget and timelines.

Technical Interviews and Audits: Conduct technical screenings or request code audits to assess coding standards, architecture decisions, and test coverage. An open‑book approach to code review often signals confidence in quality.

Cultural Fit and Long‑Term Vision: Beyond technical prowess, ensure cultural alignment in communication styles, work ethics, and shared goals. A partner who understands your long‑term roadmap becomes a strategic extension of your in‑house team.

Conclusion

Choosing the best software development company in Chennai means tapping into a vibrant tech ecosystem fueled by innovation, cost‑efficiency, and a commitment to excellence. Whether you’re launching a new digital product or modernizing legacy systems, a software development company in Chennai can deliver tailor‑made solutions that drive growth and empower your business for the digital age. Reach out today to explore how Chennai’s top tech talent can transform your vision into reality.

0 notes