#Cloud-native deployment

Optimizing Applications with Cloud Native Deployment

Cloud-native deployment has revolutionized the way applications are built, deployed, and managed. By leveraging cloud-native technologies such as containerization, microservices, and DevOps automation, businesses can enhance application performance, scalability, and reliability. This article explores key strategies for optimizing applications through cloud-native deployment.

1. Adopting a Microservices Architecture

Traditional monolithic applications can become complex and difficult to scale. By adopting a microservices architecture, applications are broken down into smaller, independent services that can be deployed, updated, and scaled separately.

Key Benefits

Improved scalability and fault tolerance

Faster development cycles and deployments

Better resource utilization by scaling specific services as needed

Best Practices

Design microservices with clear boundaries using domain-driven design

Use lightweight communication protocols such as REST or gRPC

Implement service discovery and load balancing for better efficiency
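As a rough illustration of the service discovery and load balancing practice above, here is a minimal in-memory sketch. The `ServiceRegistry` class and the addresses are hypothetical; a real deployment would more likely rely on Kubernetes Services, a service mesh, or a dedicated registry.

```python
from collections import defaultdict
from itertools import count


class ServiceRegistry:
    """In-memory registry mapping a service name to its instance addresses."""

    def __init__(self):
        self._instances = defaultdict(list)
        self._counters = defaultdict(count)  # one round-robin counter per service

    def register(self, service, address):
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def deregister(self, service, address):
        if address in self._instances[service]:
            self._instances[service].remove(address)

    def resolve(self, service):
        """Return the next registered instance in round-robin order."""
        instances = self._instances[service]
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        index = next(self._counters[service]) % len(instances)
        return instances[index]


registry = ServiceRegistry()
registry.register("billing", "10.0.0.1:8080")  # hypothetical addresses
registry.register("billing", "10.0.0.2:8080")
picks = [registry.resolve("billing") for _ in range(3)]
# picks alternates between the two registered instances
```

Round-robin is only one possible policy; least-connections or latency-aware selection may suit uneven workloads better.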

2. Leveraging Containerization for Portability

Containers provide a consistent runtime environment across different cloud platforms, making deployment faster and more efficient. Using container orchestration tools like Kubernetes ensures seamless management of containerized applications.

Key Benefits

Portability across multiple cloud environments

Faster deployment and rollback capabilities

Efficient resource allocation and utilization

Best Practices

Use lightweight base images to improve security and performance

Automate container builds using CI/CD pipelines

Implement resource limits and quotas to prevent resource exhaustion
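To make the resource limits and quotas practice concrete, the sketch below checks whether a set of container CPU limits fits inside a quota. It assumes Kubernetes-style CPU quantities, where "500m" means half a core; the function names are hypothetical and memory is left out for brevity.

```python
def cpu_to_millicores(quantity):
    """Convert a Kubernetes-style CPU quantity ("500m" or "2") to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def fits_quota(container_limits, quota):
    """Return True if the summed CPU limits stay within the quota."""
    total = sum(cpu_to_millicores(limit) for limit in container_limits)
    return total <= cpu_to_millicores(quota)


# Three containers asking for 1750 millicores in total against a 2-core quota.
within_quota = fits_quota(["500m", "250m", "1"], "2")
```

In a real cluster this check is performed by the platform when a quota is defined; the sketch only shows the arithmetic behind it.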

3. Automating Deployment with CI/CD Pipelines

Continuous Integration and Continuous Deployment (CI/CD) streamline application delivery by automating testing, building, and deployment processes. This ensures faster and more reliable releases.

Key Benefits

Reduces manual errors and deployment time

Enables faster feature rollouts

Improves overall software quality through automated testing

Best Practices

Use tools like Jenkins, GitHub Actions, or GitLab CI/CD

Implement blue-green deployments or canary releases for smooth rollouts

Automate rollback mechanisms to handle failed deployments
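The canary release and automated rollback practices above can be sketched as a simple decision function. This is an illustration under stated assumptions: the thresholds (`max_ratio`, `min_requests`, and the 0.1% error floor) are hypothetical defaults, not values taken from any particular tool.

```python
def canary_decision(baseline_errors, baseline_requests,
                    canary_errors, canary_requests,
                    max_ratio=1.5, min_requests=100):
    """Return "wait", "promote", or "rollback" for a canary release."""
    if canary_requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    canary_rate = canary_errors / canary_requests
    # A small absolute floor keeps a zero-error baseline from forcing
    # a rollback on a single canary error.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return "promote" if canary_rate <= allowed else "rollback"
```

A pipeline step could call this function after each observation window and trigger the rollback job whenever it returns "rollback".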

4. Ensuring High Availability with Load Balancing and Auto-scaling

To maintain application performance under varying workloads, implementing load balancing and auto-scaling is essential. Cloud providers offer built-in services for distributing traffic and adjusting resources dynamically.

Key Benefits

Ensures application availability during high traffic loads

Optimizes resource utilization and reduces costs

Minimizes downtime and improves fault tolerance

Best Practices

Use managed load balancers such as AWS ELB or Azure Load Balancer, or a self-managed option such as Nginx

Implement Horizontal Pod Autoscaler (HPA) in Kubernetes for dynamic scaling

Distribute applications across multiple availability zones for resilience
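For the Horizontal Pod Autoscaler mentioned above, the Kubernetes documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces only that formula plus min/max clamping and a tolerance band; it leaves out stabilization windows, pod readiness handling, and multiple metrics, and the function name and default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA scaling formula for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods averaging 90% CPU against a 60% target scale out to 6 pods.
scaled = desired_replicas(4, 90, 60)
```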

5. Implementing Observability for Proactive Monitoring

Monitoring cloud-native applications is crucial for identifying performance bottlenecks and ensuring smooth operations. Observability tools provide real-time insights into application behavior.

Key Benefits

Early detection of issues before they impact users

Better decision-making through real-time performance metrics

Enhanced security and compliance monitoring

Best Practices

Use Prometheus and Grafana for monitoring and visualization

Implement centralized logging with Elasticsearch, Fluentd, and Kibana (EFK Stack)

Enable distributed tracing with OpenTelemetry to track requests across services
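To show the idea behind tracking a request across services, here is a small sketch that builds and forwards a W3C Trace Context `traceparent` header, a format OpenTelemetry can propagate. It is not the OpenTelemetry SDK; the helper names are hypothetical and the sketch always sets the sampled flag.

```python
import secrets


def new_traceparent():
    """Start a new trace: version 00, random trace and span IDs, sampled flag."""
    trace_id = secrets.token_hex(16)  # 32 hex characters
    span_id = secrets.token_hex(8)    # 16 hex characters
    return f"00-{trace_id}-{span_id}-01"


def child_traceparent(parent_header):
    """Keep the caller's trace ID but mint a new span ID for this hop."""
    version, trace_id, _parent_span, flags = parent_header.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


incoming = new_traceparent()            # created by the first service
outgoing = child_traceparent(incoming)  # sent to the next service
```

Because every hop shares the same trace ID, a tracing backend can stitch the individual spans into one end-to-end view of the request.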

6. Strengthening Security in Cloud-Native Environments

Security must be integrated at every stage of the application lifecycle. By following DevSecOps practices, organizations can embed security into development and deployment processes.

Key Benefits

Prevents vulnerabilities and security breaches

Ensures compliance with industry regulations

Enhances application integrity and data protection

Best Practices

Scan container images for vulnerabilities before deployment

Enforce Role-Based Access Control (RBAC) to limit permissions

Encrypt sensitive data in transit and at rest
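The RBAC practice above boils down to a deny-by-default lookup from roles to permitted actions. The sketch below illustrates that idea only; the role names and the `(resource, verb)` pairs are hypothetical and do not mirror any specific platform's API.

```python
# Hypothetical roles and the (resource, verb) pairs each one grants.
ROLE_PERMISSIONS = {
    "viewer": {("deployments", "get"), ("deployments", "list")},
    "deployer": {("deployments", "get"), ("deployments", "list"),
                 ("deployments", "update")},
}


def is_allowed(user_roles, resource, verb):
    """Deny by default; allow only if some role grants (resource, verb)."""
    return any((resource, verb) in ROLE_PERMISSIONS.get(role, set())
               for role in user_roles)


can_update = is_allowed(["viewer"], "deployments", "update")  # False
```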

7. Optimizing Costs with Cloud-Native Strategies

Efficient cost management is essential for cloud-native applications. By optimizing resource usage and adopting cost-effective deployment models, organizations can reduce expenses without compromising performance.

Key Benefits

Lower infrastructure costs through auto-scaling

Improved cost transparency and budgeting

Better efficiency in cloud resource allocation

Best Practices

Use serverless computing for event-driven applications

Implement spot instances and reserved instances to save costs

Monitor cloud spending with FinOps practices and tools
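As a back-of-the-envelope aid for the spot-instance practice above, the following sketch blends on-demand and spot pricing for a workload. All rates and discounts are hypothetical inputs supplied by the caller; real spot prices vary over time and by provider.

```python
def monthly_cost(hours, on_demand_rate, spot_discount=0.0, spot_fraction=0.0):
    """Blend on-demand and spot pricing for a workload.

    spot_fraction: share of hours run on spot capacity (0.0 to 1.0).
    spot_discount: fractional discount of spot versus on-demand (0.0 to 1.0).
    """
    spot_hours = hours * spot_fraction
    on_demand_hours = hours - spot_hours
    spot_rate = on_demand_rate * (1.0 - spot_discount)
    return on_demand_hours * on_demand_rate + spot_hours * spot_rate


# Hypothetical figures: 720 hours at 0.10 per hour, half of them on spot
# capacity at a 60% discount.
all_on_demand = monthly_cost(720, 0.10)
blended = monthly_cost(720, 0.10, spot_discount=0.6, spot_fraction=0.5)
```

Comparing the two values gives a first estimate of potential savings before weighing the interruption risk that comes with spot capacity.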

Conclusion

Cloud-native deployment enables businesses to optimize applications for performance, scalability, and cost efficiency. By adopting microservices, leveraging containerization, automating deployments, and implementing robust monitoring and security measures, organizations can fully harness the benefits of cloud-native computing.

By following these best practices, businesses can accelerate innovation, improve application reliability, and stay competitive in a fast-evolving digital landscape. Now is the time to embrace cloud-native deployment and take your applications to the next level.

The concerted effort of maintaining application resilience

New Post has been published on https://thedigitalinsider.com/the-concerted-effort-of-maintaining-application-resilience/

Back when most business applications were monolithic, ensuring their resilience was by no means easy. But given the way apps run in 2025 and what’s expected of them, maintaining monolithic apps was arguably simpler.

Back then, IT staff had a finite set of criteria on which to improve an application’s resilience, and the rate of change to the application and its infrastructure was a great deal slower. Today, the demands we place on apps are different, more numerous, and subject to a faster rate of change.

There are also just more applications. According to IDC, there are likely to be a billion more in production by 2028, and many of these will be running on cloud-native code and mixed infrastructure. With greater technological complexity and higher expectations of responsiveness and quality, ensuring resilience has become a far more complex task.

Multi-dimensional elements determine app resilience, dimensions that fall into different areas of responsibility in the modern enterprise: Code quality falls to development teams; infrastructure might be down to systems administrators or DevOps; compliance and data governance officers have their own needs and stipulations, as do cybersecurity professionals, storage engineers, database administrators, and a dozen more besides.

With multiple tools designed to ensure the resilience of an app – with definitions of what constitutes resilience depending on who’s asking – it’s small wonder that there are typically dozens of tools that work to improve and maintain resilience in play at any one time in the modern enterprise.

Determining resilience across the whole enterprise’s portfolio, therefore, is near-impossible. Monitoring software is siloed, and there’s no single point of reference.

IBM’s Concert Resilience Posture simplifies the complexities of multiple dashboards, normalizes the different quality judgments, breaks down data from different silos, and unifies the disparate purposes of monitoring and remediation tools in play.

Speaking ahead of TechEx North America (4-5 June, Santa Clara Convention Center), Jennifer Fitzgerald, Product Management Director, Observability, at IBM, took us through the Concert Resilience Posture solution, its aims, and its ethos. On the latter, she differentiates it from other tools:

“Everything we’re doing is grounded in applications – the health and performance of the applications and reducing risk factors for the application.”

The app-centric approach means the bringing together of the different metrics in the context of desired business outcomes, answering questions that matter to an organization’s stakeholders, like:

Will every application scale?

What effects have code changes had?

Are we over- or under-resourcing any element of any application?

Is infrastructure supporting or hindering application deployment?

Are we safe and in line with data governance policies?

What experience are we giving our customers?

Jennifer says IBM Concert Resilience Posture is “a new way to think about resilience – to move it from a manual stitching [of other tools] or a ton of different dashboards.” Although the definition of resilience can be elusive, varying with which criteria are in play, Jennifer says it comprises, at its core, eight non-functional requirements (NFRs):

Observability

Availability

Maintainability

Recoverability

Scalability

Usability

Integrity

Security

NFRs are important everywhere in the organization, and there are perhaps only two or three that are the sole remit of one department – security falls to the CISO, for example. But ensuring the best quality of resilience in all of the above is critically important right across the enterprise. It’s a shared responsibility for maintaining excellence in performance, potential, and safety.

What IBM Concert Resilience Posture gives organizations, different from what’s offered by a collection of disparate tools and beyond the single-pane-of-glass paradigm, is proactivity. Proactive resilience comes from its ability to give a resilience score, based on multiple metrics, with a score determined by the many dozens of data points in each NFR. Companies can see their overall or per-app scores drift as changes are made – to the infrastructure, to code, to the portfolio of applications in production, and so on.
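To illustrate what a multi-metric resilience score and its drift could look like, here is a deliberately simple sketch: a weighted mean over the eight NFRs listed earlier. This is not IBM's scoring method, which the article does not describe in detail; the 0-100 scale, the equal default weights, and the function names are all assumptions.

```python
NFRS = ["observability", "availability", "maintainability", "recoverability",
        "scalability", "usability", "integrity", "security"]


def resilience_score(nfr_scores, weights=None):
    """Weighted mean of per-NFR scores, each expected in the range 0-100."""
    weights = weights or {name: 1.0 for name in NFRS}
    total_weight = sum(weights[name] for name in NFRS)
    return sum(nfr_scores[name] * weights[name] for name in NFRS) / total_weight


def drift(before, after):
    """Per-NFR change between two assessments; negative values are regressions."""
    return {name: after[name] - before[name] for name in NFRS}
```

Recomputing the score after each code or infrastructure change, and inspecting the per-NFR drift, shows which requirement caused an overall drop.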

“The thought around resilience is that we as humans aren’t perfect. We’re going to make mistakes. But how do you come back? You want your applications to be fully, highly performant, always optimal, with the required uptime. But issues are going to happen. A code change is introduced that breaks something, or there’s more demand on a certain area that slows down performance. And so the application resilience we’re looking at is all around the ability of systems to withstand and recover quickly from disruptions, failures, spikes in demand, [and] unexpected events,” she says.

IBM’s acquisition history points to some of the complementary elements of the Concert Resilience Posture solution: Instana for full-stack observability and Turbonomic for resource optimization, for example. But the whole is greater than the sum of the parts. There’s an AI-powered continuous assessment of all elements that make up an organization’s resilience, so there’s one place where decision-makers and IT teams can assess, manage, and configure the full stack’s resilience profile.

The IBM portfolio of resilience-focused solutions helps teams see when and why loads change and therefore where resources are wasted. It’s possible to ensure that necessary resources are allocated only when needed, and systems automatically scale back when they’re not. That sort of business- and cost-centric capability is at the heart of app-centric resilience, and means that a company is always optimizing its resources.

Overarching all aspects of app performance and resilience is the element of cost. Throwing extra resources at an under-performing application (or its supporting infrastructure) isn’t a viable solution in most organizations. With IBM, organizations get the ability to scale and grow, to add or iterate apps safely, without necessarily having to invest in new provisioning, either in the cloud or on-premises. Plus, they can see how any changes impact resilience. It’s making best use of what’s available, and winning back capacity, all while getting the best performance, responsiveness, reliability, and uptime across the enterprise’s application portfolio.

Jennifer says, “There’s a lot of different things that can impact resilience and that’s why it’s been so difficult to measure. An application has so many different layers underneath, even in just its resources and how it’s built. But then there’s the spider web of downstream impacts. A code change could impact multiple apps, or it could impact one piece of an app. What is the downstream impact of something going wrong? And that’s a big piece of what our tools are helping organizations with.”

You can read more about IBM’s work to make today’s and tomorrow’s applications resilient.

Skyrocket Your Efficiency: Dive into Azure Cloud-Native solutions

Join our blog series on Azure Container Apps and unlock unstoppable innovation! Discover foundational concepts, advanced deployment strategies, microservices, serverless computing, best practices, and real-world examples. Transform your operations!!

Powering the Next Wave of Digital Transformation

In an era defined by rapid technological disruption and ever-evolving customer expectations, innovation is not just a strategy—it’s a necessity. At Frandzzo, we’ve embraced this mindset wholeheartedly, scaling our innovation across every layer of our SaaS ecosystem with next-gen AI-powered insights and cloud-native architecture. But how exactly did we make it happen?

Building the Foundation of Innovation

Frandzzo was born from a bold vision: to empower businesses to digitally transform with intelligence, agility, and speed. Our approach from day one has been to integrate AI, automation, and cloud technology into our SaaS solutions, making them not only scalable but also deeply insightful.

By embedding machine learning and predictive analytics into our platforms, we help organizations move from reactive decision-making to proactive, data-driven strategies. Whether it’s optimizing operations, enhancing customer experiences, or identifying untapped revenue streams, our tools provide real-time, actionable insights that fuel business growth.

A Cloud-Native, AI-First Ecosystem

Our SaaS ecosystem is powered by a cloud-native core, enabling seamless deployment, continuous delivery, and effortless scalability. This flexible infrastructure allows us to rapidly adapt to changing market needs while ensuring our clients receive cutting-edge features with zero downtime.

We doubled down on AI by integrating next-gen technologies that can learn, adapt, and evolve alongside our users. From intelligent process automation to advanced behavior analytics, AI is the engine behind every Frandzzo innovation.

Driving Digital Agility for Customers

Innovation at Frandzzo is not just about building smart tech—it’s about delivering real-world value. Our solutions are designed to help organizations become more agile, make smarter decisions, and unlock new growth opportunities faster than ever before.

We partner closely with our clients to understand their pain points and opportunities. This collaboration fuels our product roadmap and ensures we’re always solving the right problems at the right time.

A Culture of Relentless Innovation

At the heart of Frandzzo’s success is a culture deeply rooted in curiosity, experimentation, and improvement. Our teams are empowered to think big, challenge assumptions, and continuously explore new ways to solve complex business problems. Innovation isn’t a department—it’s embedded in our DNA.

We invest heavily in R&D, conduct regular innovation sprints, and stay ahead of tech trends to ensure our customers benefit from the latest advancements. This mindset has allowed us to scale innovation quickly and sustainably.

Staying Ahead in a Fast-Paced Digital World

The digital landscape is changing faster than ever, and businesses need partners that help them not just keep up, but lead. Frandzzo’s persistent pursuit of innovation ensures our customers stay ahead, ready to seize new opportunities and thrive in any environment. We’re not just building products; we’re engineering the future of business.

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.
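The flow of the hypothetical solution above can be sketched with in-memory stand-ins. Nothing here calls an Azure SDK: the deque plays the role of Azure Event Hubs, the dictionary plays the role of a Cosmos DB container, and the function names and the 75.0 threshold are assumptions made for illustration.

```python
from collections import deque

event_hub = deque()   # stand-in for Azure Event Hubs
cosmos_store = {}     # stand-in for an Azure Cosmos DB container


def ingest(device_id, temperature_c):
    """Data ingestion: a device publishes a reading."""
    event_hub.append({"device_id": device_id, "temperature_c": temperature_c})


def process_pending():
    """Processing and storage: drain the queue, keep the latest reading per device."""
    processed = 0
    while event_hub:
        event = event_hub.popleft()
        event["overheated"] = event["temperature_c"] > 75.0  # hypothetical threshold
        cosmos_store[event["device_id"]] = event
        processed += 1
    return processed


def get_device(device_id):
    """Application logic: the read API a microservice on AKS might expose."""
    return cosmos_store.get(device_id)
```

In the real stack, `process_pending` would correspond to an Azure Function triggered by new events rather than a loop called by hand, and the deployment step would be handled by the CI/CD pipeline.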

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and unchangeable systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity


Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware


Text

Innovative Solutions Ensuring Cybersecurity in Cloud-Native Deployments

http://securitytc.com/T1VYFp


Text

NDI Showcases HDCP over NDI, NDI 6.2 and Launches AVIXA-Certified “NDI Basics” Classroom Session at InfoComm 2025

New Post has been published on https://thedigitalinsider.com/ndi-showcases-hdcp-over-ndi-ndi-6-2-and-launches-avixa-certified-ndi-basics-classroom-session-at-infocomm-2025/

AV professionals can demo HDCP over NDI courtesy of ProITAV, explore products updated with NDI 6.2, and earn RU credits in the in-person classroom.

LISBON, Portugal (May 29, 2025) – NDI, the global standard for plug-and-play IP video connectivity, today announced a technology-driven lineup for InfoComm 2025, including live showcases of NDI 6.2, the industry’s first-ever HDCP over NDI demonstration, and the launch of its AVIXA-certified NDI Basics Classroom Session. These developments mark a major step forward in simplifying and securing IP video for corporate, education, and live production workflows.

In collaboration with ProITAV, NDI will present the first-ever demonstration of HDCP-compliant content transmission over NDI at the NDI booth – 3780 on level 2 of the Exhibit Hall. Using a new metadata setting, this innovation enables the secure transport of HDCP-protected content, addressing a longstanding challenge in enterprise and education AV environments.

Attendees will also get an exclusive look at NDI 6.2, a new update set to be released in June 2025 and publicly demonstrated for the first time at InfoComm 2025. The technology update introduces enhanced device discoverability, new monitoring tools and a fully redesigned NDI Discovery Tool, enabling more scalable, efficient deployment across diverse AV-over-IP systems.

NDI will also debut its AVIXA-certified “NDI Basics” Classroom Session on Wednesday, June 11 at 11 a.m. in Room W307CD. Led by Technical Director Roberto Musso, the 60-minute training is designed for AV engineers, IT professionals and system integrators transitioning to IP-based video, offering RU credits and practical, real-world insights. The session is included in AVIXA’s Manufacturers’ Training Pass and costs $99 for members, $149 for non-members.

“As the AV industry rapidly shifts toward IP-based solutions, mastering NDI is no longer optional. It’s essential,” said Roberto Musso, Technical Director at NDI. “This session goes beyond theory. It gives professionals the tools, context, and confidence to simplify their workflows, make smarter infrastructure decisions, and lead successful IP deployments in live production, corporate AV, or education.”

On the show floor, NDI will demonstrate the full power of its ecosystem at its booth through live demos and partner pods featuring BirdDog, Bolin, NZBGear, NETGEAR, Ross, Vizrt, and Yamaha. Several partners, including audio manufacturer K-array, will unveil and demo brand-new NDI-enabled products at InfoComm. Two feature walls will also highlight products from BirdDog, Bolin, Canon, CND Live, Lumens, Magewell, Mevo, and Obsbot.

NDI will also showcase its growing collaboration with AWS. At the booth, attendees can explore how AWS MediaConnect now natively outputs NDI High Bandwidth streams directly to a VPC, as well as the new cloud-native version of Discovery Server available in AWS Marketplace.

For more information, visit https://ndi.video/events/infocomm-2025/. To register for NDI’s in-person course, visit https://www.infocommshow.org/infocomm-2025/ndi-basics-classroom-session.

# # #

ABOUT NDI

NDI, a fast-growing tech company, is removing the limits to video and audio connectivity. NDI – Network Device Interface – is used by millions of customers worldwide and has been adopted by more media organizations than any other IP standard, creating the industry’s largest IP ecosystem of products.

NDI allows multiple video systems to identify and communicate with one another over IP; it can encode, transmit and receive many streams of high-quality, low-latency, frame-accurate video and audio in real-time. The growth of NDI is backed by a growing community of installers, developers, AV professionals, and users who are deeply engaged with the company through community events and initiatives. NDI is a part of Vizrt. For more information: https://ndi.video/

#2025#audio#AWS#Canon#challenge#classroom#Cloud#Cloud-Native#Collaboration#Community#connectivity#content#course#deployment#developers#Developments#discovery#education#engineers#enterprise#Events#Full#Global#growth#how#Industry#Infrastructure#Innovation#insights#IP


Text

Going Over the Cloud: An Investigation into the Architecture of Cloud Solutions

Because the cloud offers unprecedented levels of scale, flexibility, and accessibility, it has fundamentally altered the way we approach technology in the present digital era. As more and more businesses shift their infrastructure to the cloud, it is imperative that they understand the architecture of cloud solutions. Join me as we examine the core concepts, industry best practices, and transformative impacts on modern enterprises.

The Basics of Cloud Solution Architecture

A well-designed architecture that balances dependability, performance, and cost-effectiveness is the foundation of any successful cloud deployment. Cloud solutions' architecture is made up of many different components, including networking, computing, storage, security, and scalability. By creating solutions that are tailored to the requirements of each workload, organizations can optimize return on investment and fully utilize the cloud.

Flexibility and Resilience in Design

The ability of cloud computing to scale resources on demand to meet varying workloads and maintain consistent performance is one of its distinguishing characteristics. Cloud solution architects create resilient systems that can endure failures and sustain uptime by utilizing fault-tolerant design principles, load balancing, and auto-scaling. Workloads can be distributed over several availability zones and regions to help enterprises increase fault tolerance and lessen the effect of outages.
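To make the auto-scaling idea above concrete, here is a minimal sketch of a proportional scaling rule, similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the load metric, and the minimum and maximum bounds are assumptions chosen for illustration, not something the post specifies.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count by the ratio of observed load to target load.

    All parameters are illustrative: "load" could be average CPU percent,
    requests per second, or any other per-replica metric.
    """
    if current_replicas < 1 or target_load <= 0:
        raise ValueError("need at least one replica and a positive target load")
    # Proportional rule: more load than the target means more replicas.
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas at 90% average load with a 60% target.
    print(desired_replicas(4, 90, 60))
```

A real autoscaler would also add cooldown periods and tolerance bands so that small metric fluctuations do not cause constant resizing.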

Protection of Data in the Cloud and Security by Design

As data breaches become more common, security becomes a top priority in cloud solution architecture. Architects incorporate identity management, access controls, encryption, and monitoring into their designs using a multi-layered security strategy. By adhering to industry standards and best practices, such as the shared responsibility model and compliance frameworks, organizations can safeguard confidential information and maintain regulatory compliance in the cloud.

Using Professional Services to Increase Productivity

Cloud service providers offer a variety of managed services that streamline operations and reduce the burden of maintaining infrastructure. These services, which include serverless computing, machine learning, databases, and analytics, allow firms to focus on innovation instead of infrastructure maintenance. With cloud-native applications, architects can reduce costs, shorten time-to-market, and optimize performance by selecting the right mix of managed services.

Cost control and ongoing optimization

Cost optimization is essential since inefficient resource use can quickly drive up costs. Architects monitor resource utilization, analyze cost trends, and identify opportunities for optimization with the aid of tools and techniques. Businesses can cut waste and optimize their cloud spending by using spot instances, reserved instances, and cost allocation tags.
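As a rough illustration of how pricing models and cost allocation tags combine, the sketch below totals monthly spend per tag value. Every number here is hypothetical: the discount percentages, hourly rates, and the `team` tag key are assumptions made for the example, and real discounts vary by provider, region, instance type, and commitment term.

```python
from collections import defaultdict

# Hypothetical discounts relative to on-demand pricing (assumed values).
DISCOUNTS = {"on_demand": 0.0, "reserved": 0.40, "spot": 0.70}


def monthly_cost(hourly_rate, hours, pricing="on_demand"):
    """Cost of one resource for the month under the given pricing model."""
    return hourly_rate * hours * (1.0 - DISCOUNTS[pricing])


def cost_by_tag(resources, tag_key):
    """Sum monthly cost per value of a cost allocation tag.

    Resources missing the tag are grouped under "untagged" so that
    unattributed spend stays visible.
    """
    totals = defaultdict(float)
    for res in resources:
        owner = res["tags"].get(tag_key, "untagged")
        totals[owner] += monthly_cost(res["hourly_rate"], res["hours"], res["pricing"])
    return {owner: round(total, 2) for owner, total in totals.items()}


# Illustrative inventory; none of these figures come from a real bill.
resources = [
    {"tags": {"team": "web"}, "hourly_rate": 0.10, "hours": 730, "pricing": "on_demand"},
    {"tags": {"team": "web"}, "hourly_rate": 0.10, "hours": 730, "pricing": "reserved"},
    {"tags": {"team": "batch"}, "hourly_rate": 0.20, "hours": 200, "pricing": "spot"},
    {"tags": {}, "hourly_rate": 0.05, "hours": 100, "pricing": "on_demand"},
]

report = cost_by_tag(resources, "team")

if __name__ == "__main__":
    for owner, total in sorted(report.items()):
        print(f"{owner}: {total:.2f}")
```

The "untagged" bucket is a deliberate choice: it surfaces spend that nobody has claimed, which is often where waste hides.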

Embracing Automation and DevOps

Automation and DevOps concepts are important elements of cloud solution design, enabling companies to develop software more rapidly, reliably, and efficiently. Architects create pipelines for continuous integration, delivery, and deployment, which expedite the software development process and allow for rapid iterations. By provisioning and managing infrastructure programmatically with Infrastructure as Code (IaC) and configuration management systems, teams can minimize manual effort and maintain consistency across environments.
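The core idea behind declarative Infrastructure as Code is comparing a desired state with the actual state and deriving the changes needed. The sketch below shows that comparison in a simplified form. It is not how any particular IaC tool is implemented, and the resource names and attributes are made up for illustration.

```python
def plan(desired, actual):
    """Compare desired and actual resource definitions and list the changes.

    Both arguments map a resource name to a dict of attributes. The result
    names which resources would be created, updated, or deleted to make
    the actual state match the desired one.
    """
    to_create = sorted(name for name in desired if name not in actual)
    to_delete = sorted(name for name in actual if name not in desired)
    to_update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": to_create, "update": to_update, "delete": to_delete}


# Hypothetical environment definitions.
desired_state = {
    "web": {"size": "small", "count": 3},
    "db": {"size": "large", "count": 1},
}
actual_state = {
    "web": {"size": "small", "count": 2},
    "cache": {"size": "small", "count": 1},
}

changes = plan(desired_state, actual_state)

if __name__ == "__main__":
    print(changes)
```

Because the desired state lives in version control, the same definition can be applied to every environment, which is where the consistency benefit comes from.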

Multi-cloud and hybrid strategies

In an increasingly interconnected world, many firms employ hybrid and multi-cloud strategies to leverage the benefits of several cloud providers in addition to on-premises infrastructure. Cloud solution architects have to design systems that seamlessly integrate several environments while ensuring interoperability, data consistency, and regulatory compliance. By implementing hybrid connection options like VPNs, AWS Direct Connect, or Azure ExpressRoute, organizations can build hybrid cloud deployments that combine the best aspects of public cloud and on-premises data centers.

Analytics and Data Management

Modern organizations depend on data because it fosters innovation and informed decision-making. With the data management and analytics solutions designed by cloud solution architects, organizations can gather, store, process, and analyze large volumes of data. By leveraging cloud-native data services like data warehouses, data lakes, and real-time analytics platforms, organizations can extract valuable insights and gain a competitive advantage in their respective industries. Architects implement data governance frameworks and privacy-enhancing technologies to ensure adherence to data protection rules and safeguard sensitive information.

Computing Without a Server

Serverless computing, a significant shift in cloud architecture, frees organizations to focus on creating applications rather than maintaining infrastructure or managing servers. Cloud solution architects develop serverless applications using event-driven architectures and Function-as-a-Service (FaaS) platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. By abstracting away the underlying infrastructure, serverless architectures offer unparalleled scalability, cost-efficiency, and agility, empowering companies to innovate swiftly and change course without incurring additional infrastructure costs.
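For a sense of what a FaaS function looks like, here is a minimal sketch in the style of an AWS Lambda Python handler, which receives an event and a context object. The event shape (a `name` field) and the response layout are assumptions for illustration; real events depend on whichever service triggers the function.

```python
import json


def handler(event, context):
    """Handle one invocation and return an HTTP-style response.

    The platform calls this function once per event; there is no server
    process for the application code to start or manage.
    """
    # Assumed event shape: an optional "name" field.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a sample event; the context is unused here.
    print(handler({"name": "Ada"}, None))
```

The function holds no state between calls, which is what lets the platform run as many copies in parallel as incoming events require.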

Conclusion

As we come to the close of our investigation into cloud solution architecture, it is evident that the cloud is more than just a platform for technology; it is a force for innovation and transformation. By embracing the ideas of scalability, resilience, security, and efficiency, organizations can take advantage of new opportunities, drive business expansion, and preserve their competitive edge in today's rapidly evolving digital market. Thus, to ensure success, remember to leverage cloud solution architecture when developing a new cloud-native application or initiating a cloud migration.


Text

April 22nd, 2055 - Henad 2 Post-Mission Cumulative Report (SOME INFO NOT FOR PUBLIC RELEASE)

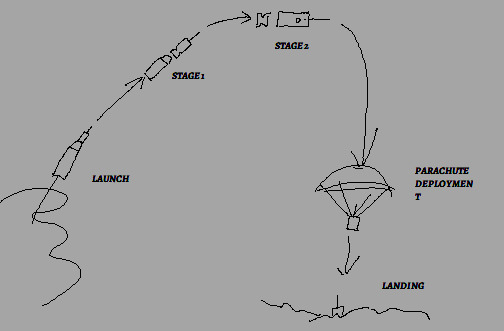

The GSRG's first ever crewed suborbital flight, Henad 2, took place earlier this evening, launching at 08:02:51 Putiyana local time and splashing down only 18 minutes and 49 seconds later. The astronaut piloting the vessel, Grisgia Striseo, enjoyed a comfortable flight onboard the newly developed Salt 3 rocket.

The Salt 3 rocket, designed by our partner laboratories, is the first step in our plan to develop an internationally linked space effort. As of now, the reach of the GSRG is small, but negotiations with multiple governments for cooperation on future missions have already begun.

Henad 2 followed the Henad 1's unmanned test flight as the first crewed flight of the GSRG to escape Ulina's atmosphere. Grisgia Yaoi Striseo was the lucky astronaut who took the first step into space for our organization. Striseo has flown over 2,000 hours in the Royal Vau'senaan Air Force and is one of the first 3 astronauts we have recruited into our crewed programs. They are a 39-year-old non-binary Caticani native, who excelled over our 2 other astronauts in training to be chosen as this mission's pilot.

Henad 2 postcard souvenir Pulari variant that depicts Striseo

Launch day in Pulunadu was clear with few clouds, relieving worries from the day prior of storms. As the Salt 3 rocket blasted through the atmosphere, flight controllers at our facilities on the ground prepared for the next portion of staging.

During stage 1 separation, the Service and Command modules detach from the Salt 3 launch vehicle once it has depleted all of its fuel, halfway through its journey out of the atmosphere. For this mission, the Service module held only small fuel cells and oxygen/RCS fuel reserves; in an orbital flight, it would perform the final burn to establish an orbit. So, the module was detached shortly after reaching its highest altitude, and the command module began using its own life support system reserves.

Following this, the command module's atmospheric reentry began, its flat end reinforced with heat-resistant materials to take the brunt of the extreme heat forces. Then, once it had reached a suitable point in altitude, the drogue parachutes deployed, quickly slowing the capsule down before main parachute deployment.

Due to pre-flight calculation errors, the craft performed an unplanned water landing; it was originally meant to land in Rilhan. However, recovery was unencumbered. Striseo left the capsule in good spirits and in nominal condition.

Part of a report on the mission in a local newspaper:

Grisgia Striseo was all smiles for the cameras that awaited their safe recovery aboard the large trawler that acts as the GSRG's apparent recovery boat. Seeming chipper as ever, a quick interview with (a suspiciously-soaked) Striseo in the aftermath of their feat revealed that they are "Interested, but not certain" on the possibilities of returning as pilot on future GSRG missions. Head of Operations at GSRG, Maksyi Kozymazhets, expressed jovial congratulations to Striseo and extensive optimism for the organization's future. "Everyone on our team is so proud of what we have accomplished here today, and even more so for Grisgia's resolve and stability throughout the challenging mission we have just completed. This, surely, is the sign that more success is inevitable, and that amazing feats are due for our subsequent programs! The team that- We have built- is one I trust to drive the WHOLE of Ulina towards an incredible realization of our potential in space and the industry around it."

An unofficial statement from a recovery team member about the water landing:

'It was from my point of view that I saw the command module, as soon as it touched down in the Strait of Celany, tipping over on its side. Which is, if you can't tell, really not supposed to happen. This meant water pretty much filled the capsule instantly as it opened up so Striseo could get out. The capsule was unrecoverable. I'd say it probably needs a redesign.'

Flight Path

Flight Stages


Text

A Success Blueprint: Mastering SAP Integration Migration with NeuVays

In today’s fast-paced digital landscape, enterprise integration is no longer a backend function—it’s a business-critical enabler. As companies increasingly adopt cloud-native applications and operate in hybrid environments, the demand for seamless, scalable, and future-ready integration is rising sharply. That’s why SAP Integration Migration has become a key priority for forward-thinking organizations.

To help enterprises confidently transition from legacy SAP integration platforms to the next-generation SAP Integration Suite, NeuVays is hosting a knowledge-packed webinar titled “A Success Blueprint – PI/PO Migration to SAP Integration Suite.” This live session is designed to offer valuable insights, technical guidance, and strategic approaches to making your integration migration successful, efficient, and aligned with future enterprise needs.

What is SAP Integration Migration?

SAP Integration Migration refers to the structured process of moving from older SAP middleware platforms like SAP PI (Process Integration) or PO (Process Orchestration) to the modern, cloud-native SAP Integration Suite. This shift not only supports emerging integration patterns—such as API-based and event-driven architecture—but also aligns organizations with SAP’s long-term innovation roadmap.

In simpler terms, this migration enables businesses to retire outdated systems and move toward a flexible, agile, and intelligent integration environment that connects SAP and non-SAP systems, cloud applications, and third-party solutions in real-time.

Why SAP PI/PO Migration is Urgent

Many enterprises still rely on SAP PI/PO to integrate applications and orchestrate processes. While these platforms have served businesses well for over a decade, they are increasingly becoming bottlenecks in the cloud era. SAP has already communicated that PI/PO will see reduced investment and innovation moving forward, signaling the need for migration.

Here’s why SAP PI/PO Migration is gaining urgency:

Limited cloud capabilities and scalability in PI/PO

Increasing maintenance costs and technical debt

SAP’s shift to cloud-first innovation and support

Inability of PI/PO to handle modern integration demands (APIs, events, hybrid apps)

To stay ahead, companies must start their SAP PI/PO Migration to Integration Suite today—before end-of-support issues and compatibility limitations hinder operations.

Introducing the SAP Integration Suite

At the core of your SAP Integration Migration strategy is the SAP Integration Suite—SAP’s robust, enterprise-grade integration platform-as-a-service (iPaaS). Built for the intelligent enterprise, the Integration Suite is designed to support a wide range of integration scenarios, including application-to-application (A2A), business-to-business (B2B), and business-to-government (B2G) communication.

Key capabilities of SAP Integration Suite include:

Pre-built integration content from SAP API Business Hub

API management and security tools

Cloud Integration for seamless data exchange across environments

Event-driven architecture support

Centralized monitoring and operations dashboard

AI-based integration advisor for faster design and deployment

It is not only a replacement for PI/PO but a leap forward into intelligent, adaptable enterprise integration.

What You’ll Learn in the NeuVays Webinar

NeuVays’ “A Success Blueprint – PI/PO Migration to SAP Integration Suite” webinar is built around helping enterprises adopt a structured, efficient, and risk-mitigated approach to SAP Integration Migration. The session will focus on real-world insights and proven best practices that can make your migration project a success.

Key Takeaways from the Webinar:

1. Strategic SAP Migration Planning

Discover how to build a comprehensive SAP Migration Strategy that balances technical feasibility with business priorities. From integration landscape assessments to resource planning and phased rollouts, you’ll get a complete migration roadmap.

2. Technical Deep Dive: From PI/PO to Integration Suite

Learn the architectural and functional differences between PI/PO and Integration Suite. Understand how message mapping, adapters, and integration patterns translate in the new environment.

3. Risk Management and Change Control

Uncover methods to minimize downtime, protect data integrity, and manage user acceptance during your SAP PI/PO Migration. Get expert advice on parallel deployment and rollback plans.

4. Real-World Case Studies

See how enterprises in industries such as retail, manufacturing, and fashion have completed successful SAP PI/PO Migration to Integration Suite projects—achieving lower TCO, higher agility, and faster integration cycles.

The Phased Approach to SAP Integration Migration

To execute a smooth SAP Integration Migration, NeuVays recommends a phased approach tailored to your organizational goals and IT landscape.

Phase 1: Assessment

Audit existing PI/PO interfaces and business-critical integrations

Document technical dependencies and custom code

Identify reusability potential for adapters and mappings

Phase 2: Planning

Design the new integration landscape using SAP Integration Suite

Define roles, project scope, and timeline

Choose a pilot process for testing

Phase 3: Execution

Configure and test integration flows in Integration Suite

Rebuild or refactor where necessary

Use migration tools or manual methods depending on complexity

Phase 4: Go-Live and Optimization

Deploy in phases to reduce risk

Monitor integration performance and resolve issues

Train teams and establish ongoing support
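One way to picture the assessment and pilot-selection steps in the phases above is to tag each interface in the inventory and sort it into migration waves. The sketch below is purely illustrative: the inventory fields, interface names, and the wave rule are assumptions, not NeuVays' or SAP's method.

```python
from dataclasses import dataclass


@dataclass
class Interface:
    """One entry in a hypothetical PI/PO interface inventory."""
    name: str
    business_critical: bool
    custom_code: bool


def assign_wave(iface):
    """Assumed rule: simple, non-critical interfaces migrate first as pilots."""
    if not iface.business_critical and not iface.custom_code:
        return 1
    if iface.business_critical and iface.custom_code:
        return 3
    return 2


def plan_waves(inventory):
    """Group interface names by migration wave, preserving inventory order."""
    waves = {1: [], 2: [], 3: []}
    for iface in inventory:
        waves[assign_wave(iface)].append(iface.name)
    return waves


# Made-up inventory for illustration only.
inventory = [
    Interface("order-to-erp", business_critical=True, custom_code=True),
    Interface("hr-report-export", business_critical=False, custom_code=False),
    Interface("invoice-feed", business_critical=True, custom_code=False),
]

waves = plan_waves(inventory)

if __name__ == "__main__":
    for number, names in waves.items():
        print(f"wave {number}: {names}")
```

Starting with low-risk interfaces lets the team validate tooling and monitoring before business-critical flows are touched.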

Benefits of Migrating to SAP Integration Suite

By transitioning to SAP Integration Suite, organizations unlock a host of benefits:

Cloud readiness: Integrate with SaaS, cloud-native, and on-premise apps effortlessly

Operational efficiency: Reduce the time and cost of maintaining complex middleware systems

Future scalability: Easily scale integrations as your ecosystem grows

Innovation enablement: Leverage AI, ML, and analytics in integration processes

Compliance and security: Centralized governance and enhanced security protocols

This migration isn't just about staying up to date—it's about preparing your business for the future.

Who Should Attend This Webinar?

This webinar is tailored for enterprise professionals responsible for integration architecture, cloud transformation, and SAP platform management:

CIOs, CTOs, and IT Directors

SAP Integration Architects

Technical Consultants and Project Managers

Digital Transformation Teams

Whether your organization is just beginning to explore migration or is already in the planning stages, this webinar will help you avoid costly mistakes and fast-track your success.

Final Thoughts

The path to modernization starts with bold decisions and trusted expertise. SAP Integration Migration isn’t just a technical project—it’s a strategic transformation. NeuVays’ upcoming webinar “A Success Blueprint – PI/PO Migration to SAP Integration Suite” offers you the expert-led guidance needed to navigate this complex journey with confidence.

If you’re ready to improve integration performance, align with SAP’s innovation roadmap, and build a truly intelligent enterprise—this webinar is your starting point.

🔗 Secure Your Spot Today

Seats are limited for this exclusive session. 👉 Register Now 👉 Connect with NeuVays SAP Integration Experts

#sap integration suite#sap integration#sap pi/po#pi/po migration#sap migration#sap pi/po support ends


Text

12-Step Scalable Web App Deployment on Cloud – 2025 Guide

Are you a developer, DevOps engineer, or tech founder looking to scale your web app infrastructure in 2025?

We've created a step-by-step visual guide that walks you through the entire cloud deployment pipeline — from infrastructure planning and Kubernetes setup to CI/CD, database scaling, and blue-green deployments.

Check it out here: 12-Step Scalable Web App Deployment on Cloud (SlideShare)

What’s Inside:

Infrastructure planning (regions, zones, services)

Docker & Kubernetes setup

CI/CD with GitOps

Load balancing, auto-scaling

Vault-based secret management

CDN, rollbacks & uptime alerts

Whether you're part of a web app development company or exploring DevOps software development, this guide offers practical, real-world steps to build high-performance cloud-native apps.
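The blue-green deployments mentioned above can be sketched as two environments with a router pointing at whichever one is live. This is a simplified model under stated assumptions: the class, the health check callback, and the version strings are hypothetical, and a real setup would switch a load balancer or DNS record instead of an attribute.

```python
class BlueGreenRouter:
    """Track which of two environments ("blue" or "green") serves traffic."""

    def __init__(self, live="blue"):
        self.live = live
        self.versions = {"blue": None, "green": None}

    @property
    def idle(self):
        """The environment that is not currently serving traffic."""
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version, health_check):
        """Install a version on the idle environment and switch if healthy.

        Returns True when traffic was switched. On a failed health check
        the idle environment is restored and the live one stays untouched.
        """
        target = self.idle
        previous = self.versions[target]
        self.versions[target] = version
        if not health_check(version):
            self.versions[target] = previous
            return False
        self.live = target
        return True

    def rollback(self):
        """Point traffic back at the other environment."""
        self.live = self.idle


if __name__ == "__main__":
    router = BlueGreenRouter()
    router.versions["blue"] = "v1"
    router.deploy("v2", health_check=lambda v: True)
    print(router.live, router.versions)
```

Because the previous version keeps running on the other environment, a rollback is a traffic switch and does not require a redeploy.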

Exploring the Top Tools for Cloud-Based Network Monitoring in 2025

As businesses increasingly adopt cloud-first strategies, network visibility has never been more important. Conventional monitoring tools are no longer sufficient for tracking the performance, latency, and security of modern, distributed infrastructure.

That is where cloud-based network monitoring comes in. It gives IT teams running hybrid and cloud environments real-time visibility, remote access, and better scalability.

In 2025, several tools stand out for their features, ease of use, and in-depth analytics. The list below covers the leading options that help companies get ahead of network problems before they affect operations.

1. Datadog

Datadog is popular with DevOps and IT teams for its cloud-native architecture and wide availability. It provides full-stack visibility, bringing metrics, traces, and logs together on a single dashboard.

Its Network Performance Monitoring (NPM) helps identify bottlenecks, trace traffic, and track cloud services such as AWS, Azure, and Google Cloud. Real-time alerts and customizable dashboards give teams the insights they need to act quickly.

2. SolarWinds Hybrid Cloud Observability

SolarWinds has traditionally been associated with on-premises monitoring, but its shift toward hybrid cloud observability makes it highly relevant in 2025. The platform has evolved to combine conventional network monitoring with cloud insights.

It uses AI to provide anomaly detection, visual mapping, and deep packet inspection. This helps IT teams troubleshoot complex environments without switching between tools.

3. ThousandEyes by Cisco

ThousandEyes specializes in digital experience monitoring and is especially well suited to large, distributed networks. It delivers end-to-end visibility from user to application, including across third-party infrastructure.

Its cloud agents and internet outage tracking let businesses quickly determine whether a performance problem is internal or external. Cisco's backing adds to the accuracy and reach of its network data.

4. LogicMonitor

LogicMonitor is an agentless platform that is simple to deploy and scale. It is a strong fit for organizations that want automation with minimal configuration.

The tool measures bandwidth, uptime, latency, and cloud performance across multiple providers. Its predictive analytics identify trends and notify teams before minor problems become major ones.

5. ManageEngine OpManager Plus

OpManager Plus is a powerful option for teams that need to monitor a mix of traditional and cloud-based infrastructure. It supports hybrid networks and reports statistics such as device health, traffic, and application performance.

It stands out for its clean, self-explanatory, and customizable UI. It is especially suitable for mid-sized IT departments that need a clear view of both physical and virtual systems.

6. PRTG Network Monitor (Cloud Hosted)

The hosted version of PRTG offers the same functions as its widely used desktop version, delivered from the cloud. Its sensors track everything from server availability to network capacity and usage, as well as cloud services.

It is a good fit for companies that want convenient licensing and pay-as-you-go pricing. Its simplicity also makes it a sound choice for smaller IT teams or for organizations at the beginning of a cloud migration.

What to Look for in a Monitoring Tool

When choosing a cloud network monitoring solution, it's important to focus on a few key aspects:

Ease of deployment and scalability

Multi-cloud and hybrid support

Custom alerting and reporting

Integration with your existing stack

User-friendly dashboards and automation

Every business has its own requirements, and there is no single best tool, only the tool that suits your infrastructure, your team size, and your response requirements.
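To make the idea of custom, predictive alerting a little more concrete, here is a minimal Python sketch that fits a straight line to recent latency samples and flags a metric whose projected value would cross a threshold. It is an illustration only and does not describe how any of the tools above work internally; the function names, sample values, and thresholds are all hypothetical.

```python
from statistics import mean


def linear_trend(samples):
    """Least-squares slope and intercept for evenly spaced samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(samples)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    slope = numerator / denominator
    return slope, y_bar - slope * x_bar


def will_breach(samples, threshold, horizon):
    """True if the value projected `horizon` intervals ahead reaches the threshold."""
    slope, intercept = linear_trend(samples)
    projected = slope * (len(samples) - 1 + horizon) + intercept
    return projected >= threshold


if __name__ == "__main__":
    # Hypothetical latency readings in milliseconds, one per polling interval.
    latency_ms = [20, 22, 24, 26, 28]
    if will_breach(latency_ms, threshold=45, horizon=10):
        print("warning: latency is trending toward the alert threshold")
```

A straight-line fit is the simplest possible forecast. It is enough to show why trend-based alerts can fire before a fixed threshold is actually crossed, but it ignores seasonality and noise.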

Conclusion

As infrastructure evolves, it is important to have the right tools in place to monitor performance and availability. In 2025, cloud-based network monitoring tools are more competitive, intelligent, and responsive than ever.

Whether you are a large enterprise or a small IT start-up, investing in one of these platforms gives you the visibility to stay secure, flexible, and consistent in a cloud-driven world.

Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As enterprises modernize their infrastructure, migrating traditional virtual machines (VMs) to container-native platforms is no longer just a trend — it’s a necessity. One of the most powerful solutions for this evolution is Red Hat OpenShift Virtualization, which allows organizations to run VMs side-by-side with containers on a unified Kubernetes platform. When combined with Red Hat Ansible Automation Platform, this migration can be automated, repeatable, and efficient.

In this blog, we’ll explore how enterprises can leverage Ansible to seamlessly migrate workloads from legacy virtualization platforms (like VMware or KVM) to OpenShift Virtualization.

🔍 Why OpenShift Virtualization?

OpenShift Virtualization extends OpenShift’s capabilities to include traditional VMs, enabling:

Unified management of containers and VMs

Native integration with Kubernetes networking and storage

Simplified CI/CD pipelines that include VM-based workloads

Reduction of operational overhead and licensing costs

🛠️ The Role of Ansible Automation Platform

Red Hat Ansible Automation Platform is the glue that binds infrastructure automation, offering:

Agentless automation using SSH or APIs

Pre-built collections for platforms like VMware, OpenShift, KubeVirt, and more

Scalable execution environments for large-scale VM migration

Role-based access and governance through automation controller (formerly Tower)

🧭 Migration Workflow Overview

A typical migration flow using Ansible and OpenShift Virtualization involves:

1. Discovery Phase

Inventory the source VMs using Ansible VMware/KVM modules.

Collect VM configuration, network settings, and storage details.

2. Template Creation

Convert the discovered VM configurations into KubeVirt VirtualMachine manifests.

Define OpenShift-native templates to match the workload requirements.

3. Image Conversion and Upload

Use tools like virt-v2v or Ansible roles to export VM disk images (VMDK/QCOW2).

Upload to OpenShift using Containerized Data Importer (CDI) or PVCs.

4. VM Deployment

Deploy converted VMs as KubeVirt VirtualMachines via Ansible Playbooks.

Integrate with OpenShift Networking and Storage (Multus, OCS, etc.)

5. Validation & Post-Migration

Run automated smoke tests or app-specific validation.

Integrate monitoring and alerting via Prometheus/Grafana.
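Step 2 above (template creation) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the tooling used in any particular migration: the `DiscoveredVM` record, its field names, the `vm-migration` namespace, and the sample VM are hypothetical stand-ins for whatever the discovery phase actually collects. The manifest layout follows the KubeVirt `VirtualMachine` API (`kubevirt.io/v1`).

```python
import json
from dataclasses import dataclass


@dataclass
class DiscoveredVM:
    """Hypothetical record of what the discovery phase collected for one VM."""
    name: str
    cpu_cores: int
    memory_mib: int
    disk_pvc: str                    # PVC that holds the imported disk image
    namespace: str = "vm-migration"  # assumed target namespace


def to_kubevirt_manifest(vm):
    """Map a discovered VM onto a minimal KubeVirt VirtualMachine manifest."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": vm.name, "namespace": vm.namespace},
        "spec": {
            # Keep the VM stopped until post-migration validation is ready.
            "runStrategy": "Halted",
            "template": {
                "metadata": {"labels": {"kubevirt.io/vm": vm.name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": vm.cpu_cores},
                        "resources": {
                            "requests": {"memory": f"{vm.memory_mib}Mi"}
                        },
                        "devices": {
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                    },
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "persistentVolumeClaim": {"claimName": vm.disk_pvc},
                        }
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    vm = DiscoveredVM(
        name="legacy-app-01",
        cpu_cores=2,
        memory_mib=4096,
        disk_pvc="legacy-app-01-disk",
    )
    print(json.dumps(to_kubevirt_manifest(vm), indent=2))
```

Because JSON is valid YAML, the printed output could be saved to a file such as vm-definition.yaml and applied by a playbook task. A real migration would also need to carry over network interfaces, firmware settings, and other details omitted here.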

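- name: Deploy VM on OpenShift Virtualization
  hosts: localhost
  tasks:
    # Create the PersistentVolumeClaim that backs the VM disk
    - name: Create PVC for VM disk
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-pvc.yaml') | from_yaml }}"

    # Create the KubeVirt VirtualMachine object
    - name: Deploy VirtualMachine
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-definition.yaml') | from_yaml }}"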
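For step 5 (validation), an automated smoke test can be as simple as checking that each migrated VM answers on the ports its application needs. The sketch below uses only the Python standard library; the target names and addresses are hypothetical placeholders, and a real validation suite would add application-level checks on top.

```python
from __future__ import annotations

import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def smoke_test(
    targets: dict[str, tuple[str, int]], timeout: float = 3.0
) -> dict[str, bool]:
    """Check each named (host, port) target and report whether it is reachable."""
    return {
        name: port_is_open(host, port, timeout)
        for name, (host, port) in targets.items()
    }


if __name__ == "__main__":
    # Hypothetical endpoints; replace with the migrated VMs' real addresses.
    results = smoke_test(
        {
            "legacy-app-01 ssh": ("192.0.2.21", 22),
            "legacy-app-01 https": ("192.0.2.21", 443),
        },
        timeout=1.0,
    )
    for name, ok in results.items():
        print(f"{name}: {'OK' if ok else 'FAILED'}")
```

A check like this can run as the final stage of the migration workflow so that a VM which fails to come up is flagged before traffic is cut over.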

🔐 Benefits of This Approach

✅ Consistency – Every VM migration follows the same process.

✅ Auditability – Track every step of the migration with Ansible logs.

✅ Security – Ansible integrates with enterprise IAM and RBAC policies.

✅ Scalability – Migrate tens or hundreds of VMs using automation workflows.

🌐 Real-World Use Case

At HawkStack Technologies, we’ve successfully helped enterprises migrate large-scale critical workloads from VMware vSphere to OpenShift Virtualization using Ansible. Our structured playbooks, coupled with Red Hat-supported tools, ensured zero data loss and minimal downtime.

🔚 Conclusion

As cloud-native adoption grows, merging the worlds of VMs and containers is no longer optional. With Red Hat OpenShift Virtualization and Ansible Automation Platform, organizations get the best of both worlds — a powerful, policy-driven, scalable infrastructure that supports modern and legacy workloads alike.

If you're planning a VM migration journey or modernizing your data center, reach out to HawkStack Technologies (Red Hat Certified Partners) to accelerate your transformation. For more details, visit www.hawkstack.com.
