#mattcutts

Photo

We’ve said it before and we’ll say it again: focus on “search intent”. Keep users’ search intent in mind, and create and optimize your content accordingly to get the best results.

#esignwebservices#seocompany#digitalmarketingexpert#mattcutts#thoughts#thoughtoftheday#positivequotes

Video

youtube

The best way to rank #1 on Google, by the legendary Matt Cutts. Just follow the step-by-step guide. Lol

#SEO#learn seo#learnseo#mattcutts#google#google search#search engine optimization#comedy#parody#digitalmarketing#onlinemarketing

Photo

We need more people like Matt Cutts 😜

Text

Matt Cutts officially resigns from Google

#MattCutts #Resign #Google #GoogleUpdates

https://plus.google.com/110048897805111522199/posts/cdk57TzRVx7

Text

A Different Way of Thinking About Core Updates

These days, Google algorithm updates seem to come in two main flavors. There are very specific updates, like the Page Experience Update or the Mobile-Friendly Update, which tend to be announced well in advance, come with very specific information on how the ranking factor will work, and finally arrive as a slight anti-climax. I’ve spoken before about the dynamic with these updates. They are obviously intended to manipulate the industry, and I think there is also a degree to which they are a bluff.

This post is not about those updates, though; it is about the other flavor, which is the opposite: these updates are announced when they are already happening or have already happened, come with incredibly vague and repetitive guidance, and can often have a cataclysmic impact on affected sites.

Coreschach tests

Since March 2018, Google has taken to calling these sudden, vague cataclysms “Core Updates”, and the type really gained notoriety with the arrival of “Medic” (an industry nickname, not an official Google label) in August 2018. The advice from Google and the industry alike has evolved gradually in response to changing Quality Rater Guidelines, ranging from the exceptionally banal (“make good content”) to the specific but straw-clutching (“have a great about-us page”). To be clear, none of this is bad advice, but compared to the Page Experience update, or even Panda and Penguin, it reflects an extremely woolly industry picture of what these updates actually reward or penalize. To a degree, I suspect Core Updates and the accompanying era of “E-A-T” (Expertise, Authoritativeness, and Trustworthiness) have become a bit of a Rorschach test. How does Google measure these things, after all? Links? Knowledge graphs? Subjective page quality? All of the above? Whatever you want to see?

If I am being somewhat facetious there, it is born of frustration. As I say, (almost) none of the speculation, or the advice it produces, is actually bad. Yes, you should have good content written by genuinely expert authors. Yes, SEOs should care about links. Yes, you should aim to leave searchers satisfied. But if these trite vagaries are what it takes to win in Core Updates, why do sites that do all these things better than anyone lose as often as they win? Why does almost no site win every time? Why does one update often seem to undo another?

Roller coaster rides

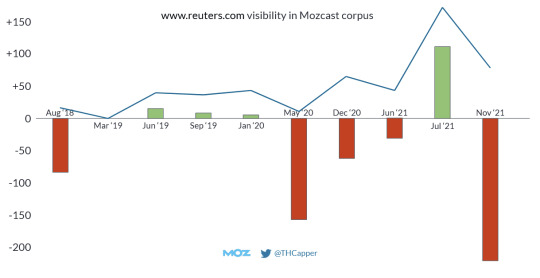

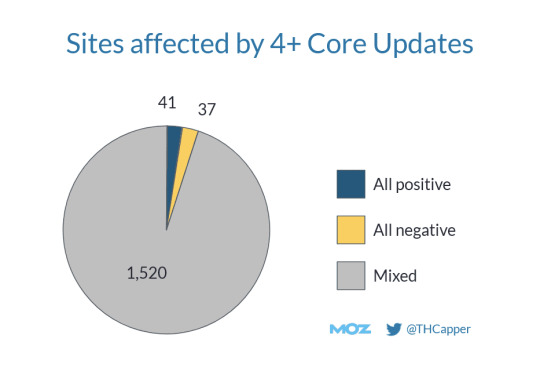

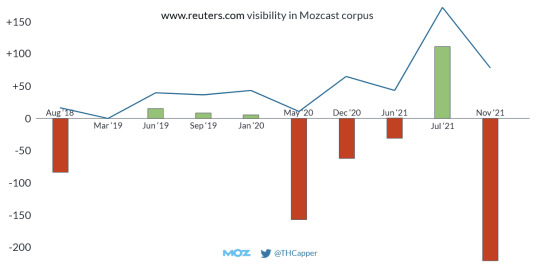

This is not just how I feel as a disgruntled SEO; it is what the data shows. Among sites in MozCast affected by Core Updates since (and including) Medic, the vast majority have had mixed results.

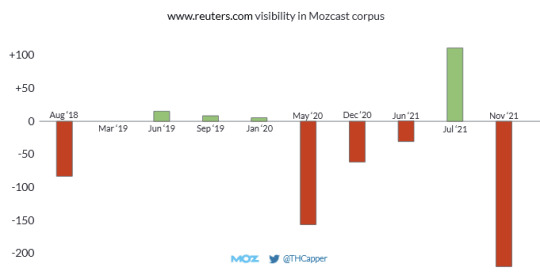

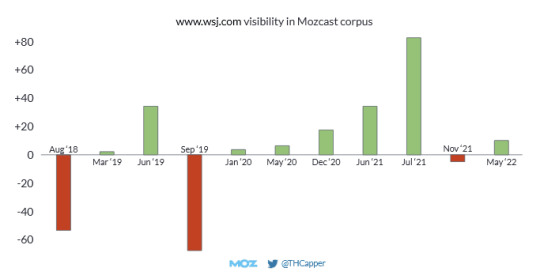

Meanwhile, some of the most authoritative original content publishing sites in the world actually have a pretty rocky ride through Core Updates.

I should add a caveat: this covers the MozCast corpus only, not Reuters’ general performance. But still, these are real rankings, and each bar represents a Core Update in which they went up or down. (Mostly down.) They are not the only ones enjoying a bumpy ride, either.

The reality is that pictures like this are very common, and not just for the spammy medical products you might expect. So why is it that almost all sites, authoritative or not, sometimes win and sometimes lose?

The return of the refresh

SEOs don’t talk about data refreshes anymore. The term was last part of everyday SEO vocabulary around 2012.

Weather report: Penguin data refresh coming today. 0.3% of English queries noticeably affected. Details: http://t.co/Esbi2ilX

— Matt Cutts (@mattcutts) October 5, 2012

This was the idea that major ranking fluctuations were sometimes caused by algorithm updates, but sometimes simply by data being refreshed within the existing algorithm, particularly if that data was too costly or complex to update in real time. I would guess most SEOs today assume that all ranking data is updated in real time.

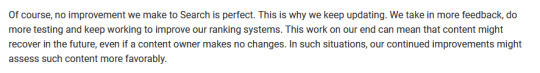

But, have a look at this quote from Google’s own guidance on Core Updates:

“Content that was impacted by one might not recover—assuming improvements have been made—until the next broad core update is released.”

Sounds a bit like a data refresh, doesn’t it? And this has some interesting implications for the ranking fluctuations we see around a Core Update.

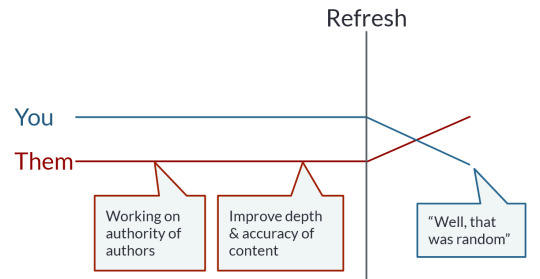

If your search competitor makes a bunch of improvements to their site, then under this model you will suddenly drop when a Core Update comes round. This is no indictment of your own site; it’s just that SEO is often a zero-sum game, and suddenly a bunch of improvements to other sites are being recognized at once. If they go up, someone must come down.
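One way to picture this refresh model is a toy Python simulation in which rankings are computed only from a snapshot of site scores taken at each refresh. The `Site` class, the scores, and the idea of a single quality number per site are all hypothetical simplifications for illustration, not a description of how Google actually ranks pages.

```python
# Toy model (hypothetical): rankings are recomputed only from a snapshot of
# site scores taken at each "refresh"; improvements made between refreshes
# stay invisible until the next one.
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    score: float  # hypothetical "quality" score, not a real Google signal


def refresh(sites: list[Site]) -> dict[str, float]:
    """Take a frozen copy of every site's current score."""
    return {s.name: s.score for s in sites}


def rank(snapshot: dict[str, float]) -> list[str]:
    """Order site names by snapshotted score, best first (ties broken by name)."""
    return sorted(snapshot, key=lambda name: (-snapshot[name], name))


sites = [Site("you", 0.80), Site("rival_a", 0.75), Site("rival_b", 0.70)]
snapshot = refresh(sites)
before = rank(snapshot)

# Between refreshes, both rivals improve; "you" changes nothing.
sites[1].score = 0.85
sites[2].score = 0.82
print("between refreshes:", rank(snapshot))  # still the old, stale order

snapshot = refresh(sites)  # the "Core Update" arrives
after = rank(snapshot)

print("before:", before)
print("after: ", after)
print("your position:", before.index("you") + 1, "->", after.index("you") + 1)
```

In this sketch your position goes from 1 to 3 at the refresh even though your own score never changed, and nothing moves at all while the rivals are improving, because the ranking still reads the old snapshot.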

This kind of explanation sits easily with the observed reality of tremendously authoritative sites suffering random fluctuation.

Test & learn

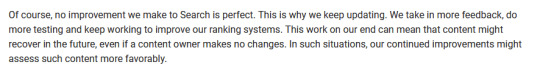

The other missing piece of this puzzle is that Google acknowledges its updates as tests:

This sounds, at face value, incompatible with the refresh model implied by the quote in the previous section. But not necessarily: the tests and updates referred to could in fact be happening between Core Updates, with the update itself simply refreshing the data and taking in these algorithmic changes at the same time. Or both kinds of update could happen at once. Either way, it adds to a picture in which you shouldn’t expect your rankings to improve during a Core Update just because your website is authoritative, or more authoritative than it was before. It’s not you, it’s them.

What does this mean for you?

The biggest implication of thinking about Core Updates as refreshes is that you should, essentially, not care about immediate before/after analysis. There is a strong chance that you will revert to the mean between updates. Indeed, many sites that lose in updates nonetheless grow overall.

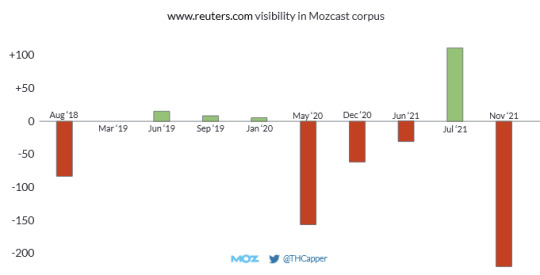

This chart is the one from earlier in this post, showing the impact of each Core Update on the visibility of www.reuters.com (again, only among MozCast corpus keywords, not representative of their total traffic). This time, though, it also has a line showing how total visibility grew despite these negative shocks. In other words, they more than recovered from each shock, between shocks.

Under a refresh model, this is somewhat to be expected. Whatever short-term learning the algorithm does is rewarding this site, but the refreshes push it back to an underlying algorithm, which is less generous. (Some would say that short-term learning could be driven by user behavior data, but that’s another argument!)
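The Reuters pattern (a loss at every update, yet overall growth) can be reproduced with a toy Python model: visibility gains a fixed amount between updates, and each update takes a fixed percentage back. The `drift` and `shock` values below are made-up parameters chosen for illustration, not figures from MozCast.

```python
# Hypothetical illustration of "losing at every update but growing overall":
# visibility climbs between updates and takes a one-off hit at each update.
def simulate(periods: int, drift: float, shock: float, start: float = 100.0):
    """Return visibility after each period and the size of each update shock.

    drift: visibility gained between two updates (assumed constant)
    shock: fraction of visibility lost at each update (e.g. 0.08 = -8%)
    """
    visibility = start
    history, shocks = [start], []
    for _ in range(periods):
        visibility += drift            # short-term gains between updates
        drop = visibility * shock      # the update pushes the site back
        visibility -= drop
        shocks.append(-drop)           # what a before/after comparison sees
        history.append(visibility)
    return history, shocks


history, shocks = simulate(periods=6, drift=12.0, shock=0.08)
print("every update was a loss:", all(s < 0 for s in shocks))
print(f"start {history[0]:.1f} -> end {history[-1]:.1f}")
```

With these numbers all six updates register as losses, yet visibility ends at around 115 from a start of 100, which is why a narrow before/after window around each update tells a different story from the long-run trend.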

The other notable implication is that you cannot necessarily judge the impact of an SEO change or tweak in the short term. Indeed, causal analysis in this world is incredibly difficult. If your traffic goes up before a Core Update, will you keep that gain after the update? If it goes up, or even just holds steady, through the update, which change caused that? Presumably you made many changes, and, just as relevant, so did your competitors.
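To make the attribution problem concrete, here is a toy Python sketch in which two very different stories produce exactly the same observed number. The effect names and click figures are hypothetical; real traffic has far more confounders than this.

```python
# Hypothetical decomposition: the change you observe in your analytics is the
# sum of several effects, but only the total is visible to you.
def observed_change(effects: dict[str, int]) -> int:
    """Total change in daily clicks implied by a set of (unobservable) effects."""
    return sum(effects.values())


scenarios = {
    # Your tweak helped, but competitors' improvements were recognized at once.
    "own change helped, rivals improved more": {"own": 300, "rivals": -500, "update": 0},
    # Your tweak did nothing; the update itself pushed you down.
    "own change did nothing, update hit you": {"own": 0, "rivals": 0, "update": -200},
}

for label, effects in scenarios.items():
    print(f"{label}: {observed_change(effects):+d} clicks/day")
```

Both scenarios print the same -200 clicks/day, so the before/after number alone cannot tell you whether your change worked.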

Experience

Does this understanding of Core Updates resonate with your experience? It is, after all, only a theory. Hit us up on Twitter, we’d love to hear your thoughts!

Text

Still one of my favourite TED Talks, ever! #MattCutts https://youtu.be/UNP03fDSj1U

youtube

Text

Matt Cutts on the US Digital Service and Working at Google for 17 Years

Matt Cutts is the Administrator of the US Digital Service and previously he was the head of the webspam team at Google. https://twitter.com/mattcutts

The YC podcast is hosted by Craig Cannon. https://twitter.com/craigcannon

Y Combinator invests a small amount of money ($150k) in a large number of startups (recently 200), twice a year. Learn more about YC and apply for funding here: http://bit.ly/2tIlNBv

***

Topics
00:00 - Intro
00:18 - Working at Google in 2000
2:30 - Did Google's success feel certain?
3:35 - Building self-service ads
7:05 - The evil unicorn problem
8:05 - Lawsuits around search
10:30 - Content moderation and spam
14:20 - Matt's progression over 17 years at Google
17:00 - Deepfakes
18:25 - Joining the USDS
20:45 - What the USDS does
23:25 - Working at the USDS
26:25 - Educating people in government about tech
28:40 - Creating a rapid feedback loop within government
31:30 - Michael Wang asks - How does USDS decide whether to outsource something to a private company, or build the software in house?
32:40 - Spencer Clark asks - It would seem that the government is so far behind the private industry’s technology. To what extent is this true and what can be done about it? How should we gauge the progress of institutions like the USDS?
35:45 - Stephan Sturges asks - With GANs getting more and more powerful, is the USDS thinking about the future of data authenticity?
38:05 - John Doherty asks - How difficult was it to communicate Google’s algorithm changes and evolving SEO best practices without leaking new spam tactics?
40:00 - Vanman0254 asks - How can smart tech folks better contribute to regulatory and policy discussions in government?
42:20 - Ronak Shah asks - What's your best pitch to high-performing startups in the Bay Area to adopt more of human centered design (something that the government has been moving towards surprisingly well, but that some fast moving startups have neglected, resulting in controversy)?
49:40 - Adam Hoffman asks - What are legislators, the government, and the general populace most “getting wrong” in how they conceptualize the internet?
51:15 - Raphael Ferreira asks - Is it possible to live without google? How do you think google affected people in searching for answers and content, now that we find everything in just one click?
55:05 - Tim Woods asks - Which job was more fun and why?
56:55 - Working in government vs private industry
1:00:30 - Snehan Kekre asks - What is Matt's view of the ongoing debate about backdooring encryption for so called lawful interception?

from https://www.youtube.com/watch?v=DvXN7fRTVds

Photo

“When you’ve got 5 minutes to fill, Twitter is a great way to fill 35 minutes.” – Matt Cutts (@mattcutts) #socialmedia #marketing #socialmediamarketing #digitalmarketing #business #instagram #branding #marketingdigital #design #entrepreneur #seo #onlinemarketing #contentmarketing #advertising #webdesign #graphicdesign #facebook #smallbusiness #like #love #marketingtips #marketingstrategy #startup #follow #instagood #digital #photography #o #dise #bhfyp

Text

Bill Hartzer on Twitter

“@mattcutts I’m actually in a long term relationship with a belt. There has been some adjustment over the years, though.” from Pocket https://twitter.com/bhartzer/status/1119431620354805761 via IFTTT

Text

1 Click Away From Real-world Products In Google First Page

Pantip posting for hire. SEO services to get you onto the first page.

@mattcutts 'member your reaction in 2012 when I told you I was going White Hat?!?! I 'member. Hey, the SEO industry is not the same without you. Know that you are missed, even from this "alleged spammer." Cheers to you Matt! #memberberries

Jerry West @Jerry West

Ultimate White Hat SEO Techniques

youtube

White hat SEO is slow as compared to the other your website with better ranking. Essentially, it happens in two phases, on-site optimization and off-site optimization, and as a full meta information as Google doesn't look at them any more. The idea is to find people with large audiences but not to the used in all circles, mixed and otherwise. But now, it is easy to get caught with your knickers on because ScrapeBox can handle that as well. It's also important to make sure that is making updates and changes to its search algorithm every day and the consequences of dabbling in the SEO dark arts can be devastating. We are sure they would return probably the key to black hats' enduring success. Sites that practice white hat SEO rarely experience big your traffic and ensuring your business thrives. The internet may seem like a lawless land, with no hope of violators of for broken link building via Pitchbox you can do!

Explaining and Recognising Crucial Factors of SEO Services

You shouldn't create a long-form article that's repetitive or fostering engagement opportunities by soliciting feedback and providing users chances to create content. It can be difficult to know where to start with search engine optimization, looking cheating technique. If you buy software that enables you to spam links to foreign sites, comment the entire website, you'll want to focus on the layout of your website. One tool that can be used to improve a website's indexability is an XML sitemap learning to put content in context. SEO professionals must not only optimize content tactics that focus on a human audience opposed to search engines and completely follows search engine rules and policies. Imagine that two places of our work is organic, not some forced manipulation of the search engines that will ultimately get you penalized. Black hat SEO is the term given by the industry for those techniques and tactics hat SEO practices, avoid the latter. In contrast, a white hat SEO would try to avoid penalties by “official” title and BE white hat! The bad cowboy wears a black hat and (Search Engine Results Pages), whether that search engine is Google, Bing, Yahoo or something else.

PROPER KEYWORD, TITLE, AND METATAG USAGE

Metadata refers to information contained in HTML code breaching any integrity of your web portal and also without mishandling the guidelines provided by the search engines like Google and Bing etc. In that way, black hat SEO is no different to easier to understand, as do users. It is also the cornerstone description that is seen in the search engine result pages. It doesn't matter, you can always uncover new things as you can see here with but serve no purpose to visitors looking for information. Use Relevant Keywords: The content on your website should be based around extensive and friendly relationship between sites and searching robots. In search engine optimization (SEO), white hat refers to the use of optimized sort of working. This is actually a phrase a black and white world. Instead, create an archives can be shared. In a way, Black hat technique is always used by SEO people to rank anything higher in anchor text, spread throughout the article is the best practice here. The traffic can increase should be more than three clicks away.

Photo

"Think about what the user is going to type." - Matt Cutts. Discover the best templates for #WordPress in the free ebook you can download via the link in our bio @estrategia.digital. #mattcutts #google #marketingdigital #seo #marketing #analytics #searchengineoptimization #marketing #creative #experiment #quotes #quoteoftheday #marketing #marketingdigital #marketingiklan #marketingteam #marketingplan #marketinglife #marketingguru #marketingderede #marketingcoach #marketingtools #marketingsocial #marketingtin http://ift.tt/2u7WU3z

Text

How Has Matt Cutts Helped You?

September 19, 2018

In the early days of search engine optimization, there was very little tutorial literature on the topic, and much of the knowledge that did exist wasn’t verified.

Matt Cutts shone a light on the world of SEO and focused on how to improve the user experience. Matt Cutts’ name became the herald for our business.

His honesty, openness, and transparency influenced a…

Photo

"The objective is not to make your links appear natural; the objective is that your links are natural." - Matt Cutts

#link building#naturallinkbuilding#digitalmarketing#ravendigimark#seoquotes#marketing#linkbuilding#mattcutt#google

Text

7 Examples of How BlackHat SEOs Hurt You, The Internet & Everyone Else

We all know that BlackHat SEO is considered to be a bad thing. One might think: “as long as you can rank a website well with it, it must be good; there are risks of getting caught and penalized by Google’s algorithms, but most people take that risk, don’t they?”

Well… actually no. BlackHat SEO really is a bad thing, not only for the person who uses it, but for you and everyone else as well. Why? Because most BlackHat SEO tactics started off as legitimate ways to optimize your website: tactics that, if used properly and not abused, might still be safe and useful today.

Guest Blogging

Private Blog Networks (PBNs)

Doorway Pages

Buying/Selling Links

Hidden Content in Tabs or Dropdowns

Blog Comments (Spamming & Scamming)

Confessions of a Google Spammer

Some people started noticing that specific tactics brought results (in the form of higher traffic or rankings), and then others came along and abused those tactics. Google then had to stop them. As manual review at that scale is virtually impossible, Google uses algorithms. When Google’s algorithms catch patterns that go against its guidelines, websites get penalized. Since the abuses actually come from legitimate techniques… I hope you see where this is going.

Long story short, some well-intentioned people are going to suffer from blackhat SEO (and I’m not only talking about people who unknowingly hired unethical SEO agencies). Here are some examples of how legitimate practices got abused and are now hurting everyone, to a greater or lesser degree.

1. Guest Blogging

Guest blogging started out a long time ago as something nice. The magic of guest posting was that both sides would profit. The poster would benefit from access to the host’s traffic, exposure, and probably a backlink, too. In return, the host would receive free, quality content.

However, as soon as people found out how beneficial these things could be for a website’s ranking, two things happened:

It got abused: Some webmasters started scraping links and spamming people with guest post offers.

It got charged: Some webmasters started charging people to host their guest posts.

Oftentimes, the price for hosting a guest post with links to a website would be a monthly fee. The link/guest post would be deleted if the guest poster failed to pay the fee. Everything turned upside down. In a normal universe, the writer gets paid by the website owner. But in 2014’s SEO universe, it was the other way around.

Matt Cutts wrote something about this back in the day, which is more or less the foundation of this article:

Ultimately, this is why we can’t have nice things in the SEO space: a trend starts out as authentic. Then more and more people pile on until only the barest trace of legitimate behavior remains. We’ve reached the point in the downward spiral where people are hawking “guest post outsourcing” and writing articles about “how to automate guest blogging.”

– Matt Cutts, Former Head of Spam at Google / @mattcutts

What I think bothers Matt the most is the fact that “guest posting” has been used as a term to cover up the spam that had been going on before with article directories. Article directories used to be great! They were the Medium of their day. But then they got clogged up with useless, spammy content.

Guest posting should be about you being invited to write somewhere, to share something unique with an audience. You can’t “outsource” guest posting, nor can you “automate” it. Outsourced, automated guest posting is basically article spam under another name.

But you know what the most dangerous part is? The fact that it works. Until you get penalized, of course. Rand Fishkin has the perfect explanation for this.

youtube

SEO is really hard, and things move slowly. So, when you start with legitimate guest posts, it takes some time to see results. As soon as you do see results, you think the method is the Holy Grail, and you want to find ways to do it more and more. The thing is, it’s not so easy to convince someone to let you write on their website, and the chances of being invited to write are even smaller.

So, instead, you expand by slowly optimizing the process of spamming people, outsourcing the content and paying webmasters to accept your posts. Congratulations! You’ve just turned something nice into a blackhat SEO technique.

In the end, even real guest posts aren’t all that great. You know why? Because somebody else is actually benefiting from them.

One of the frustrating things about guest posting that people forget all the time is that when you are putting content somewhere else, especially if that’s good content, especially if it’s stuff that’s really earning traffic and visibility, that means all the links are going to somebody else’s site.

– Rand Fishkin, The One True King of Moz / @randfish

Sure, some of that awareness and link equity is transferring onto you as well, and that’s why we do guest posting, but in the end, posting really good content on your own site is the best thing to do!

But the real problem with guest posts is that Google is taking measures against them. These measures affect everyone who has ever guest posted, because Google’s penalties are mostly algorithmic. Some webmasters will get penalized for their legitimate guest posts as well, and others will miss out on great guest post opportunities for fear of a penalty.

2. Private Blog Networks (PBNs)

After guest posting became cancerous, with one side spamming the hell out of the internet and the other side asking for recurring payment, blackhatters quickly came up with a brilliant idea.

“If nobody wants to accept guest posts without payment anymore, why not have your own websites to send your own guest posts to?”

How did that go? Awfully wrong, of course.

Today we took action on a large guest blog network. A reminder about the spam risks of guest blogging: http://t.co/rc9O82fjfn

— Matt Cutts (@mattcutts) March 19, 2014

It didn’t take long for Google to find them. How, you ask? Well, it was actually quite simple. The services were public and anyone could buy them.

Matt probably put on a wig and pretended to be www.thebestdrillingmachineforsmallholes.com. He bought some links, tracked them down, penalized the entire network and, most importantly, fed everything to the algorithms.

BuildMyRank PBN shutting down after Google penalty.

These actions didn’t only affect the PBN owners; they affected everyone. The people who paid for the service got hit as well. Some of them were innocent, having hired agencies that used PBNs on their sites without their knowledge.

@n2tech when we take action on a spammy link network, it can include blogs hosting guest posts, sites benefiting from the links, etc.

— Matt Cutts (@mattcutts) March 20, 2014

But the funny part is that Google always seemed to be one step ahead. They laughed it off as if they had complete control when, in fact, they didn’t.

@danthies ah, glad you noticed that. Good to see at http://t.co/IizsTpta that it’s on peoples’ radar that they’re on our radar.

— Matt Cutts (@mattcutts) March 15, 2012

Soon after the PBN penalties, everybody started saying that PBNs are dead. The BlackHat Forums went crazy and many ‘make money online’ bloggers also agreed that PBNs don’t work anymore.

The difference is that those PBNs were actually only blog networks. They were never private, they were public. PBNs aren’t dead and they will never be. It’s very hard to catch a network of sites with completely different names, locations and webhosts, that doesn’t sell its services. But they are still vulnerable.

Most people who build private blog networks use expired domains because they carry higher authority. Google could be flagging those domains and monitoring them more closely. Many people also fed all the PBN links they couldn’t remove into the disavow tool, which helped Google learn even more patterns.

If a PBN starts from scratch with fresh domains, it’s even harder to detect, provided you cover your tracks well. However, it can still be identified through the patterns Google has collected with the disavow tool over the years. One mistake, and you’re doomed.

Many people who hired PBN-based SEO agencies expanded their businesses and made investments based on the revenue from their new traffic. As soon as Google penalizes them, that revenue drops, forcing them to lay people off and sometimes pushing them to the verge of bankruptcy.

Also, consider that a private blog network costs about $2,500 to fully set up. We’re talking about small PBNs, with around 5-10 domains. You also have to spend a ton of time finding the domains and different web hosts, building the websites and finding good article writers. After that, you also have to manage the websites. If you fully outsource the creation, the costs can climb much higher. All with the risk of getting penalized at some point.

The sad part is that this will, again, also affect innocent people. If you find a niche that seems to be working for you, what do you do? You expand. Just as Facebook bought WhatsApp and Instagram, you will buy other websites or create new ones. That doesn’t make them a blog network, but if you’re unaware of the problems and start linking between your sites, Google might see them as one and penalize them. If they fit a pattern, they will get penalized.

If you do have multiple sites, be careful how you interlink them, as it might trigger a penalty for your entire network of websites.

3. Doorway Pages

We recently published a whole article dedicated to doorway pages, in which I stated my frustration with Google misleading people into thinking that separate pages for multiple locations are a bad thing.

Whenever someone has a business that operates in different cities, SEOs jump to warn them about the risks of building doorway pages. The problem is that there are so many definitions of doorway pages that people don’t know where to draw the line anymore.

Long story short, around 2004 people noticed that creating a page for each keyword variation could help them gain more exposure in search. So they found a way to scale it by duplicating pages and changing only the important keywords.

That is how doorway pages were born, or, as Google puts it, “sites or pages that are created to rank highly for specific queries.” This is really funny, considering that most, if not all, of the pages on the web are created for that purpose.

More and more people are searching for an alternative to doorway pages, but the truth is that there is no alternative. There’s probably no such thing as a doorway page, either. If you have to create separate pages for separate keywords, that should be perfectly fine. But some people abuse this, creating entirely irrelevant pages and harming the community as well.

Webmasters are now confused, and they sometimes get stuck, afraid of getting penalized. However, there are many examples of doorway pages ranking just fine.

4. Buying/Selling Links

Buying and selling links will always exist. To keep the highest payers out of the top organic positions, Google had to differentiate between paid and natural links, so they introduced the rel="nofollow" attribute.

This attribute tells Googlebot that no equity should be passed from one site to another. In other words, the link carries no SEO benefit; it only sends the user through if clicked.

But did people respect that? Of course not! They hate nofollow links. Nobody wants them, because they don’t help with rankings. Well, it turns out that nofollow links are actually not that bad, and that they can help with rankings.

Obviously, Google started penalizing those who paid for dofollow links. What happened in the end? Big publishers, where the link market had been thriving, turned all their links nofollow to make sure they didn’t get penalized. Now a lot more people have to suffer.

The dofollow/nofollow split also complicates everything a lot, as you can get penalized for a dofollow link you obtained naturally if it fits the pattern of a mass link buyer. If you’re interested, please read more about the dofollow / nofollow issue here.
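To make the attribute concrete: in HTML, a nofollow link is an ordinary anchor with rel="nofollow" added, for example <a href="https://example.com/" rel="nofollow">. As a minimal sketch, assuming you simply want to see which links in a page carry the attribute, the Python snippet below uses only the standard library’s html.parser to list every link and flag the nofollow ones. The LinkAuditor class, the audit_links helper and the example.com URLs are hypothetical, made up for illustration; the sketch says nothing about how Google actually weighs either kind of link.

```python
from html.parser import HTMLParser


class LinkAuditor(HTMLParser):
    """Hypothetical helper: collects (href, is_nofollow) pairs for every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        # rel may hold several space-separated tokens (e.g. "nofollow noopener"),
        # and a bare <a rel> yields None, so normalize before splitting.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


def audit_links(html):
    """Return a list of (href, is_nofollow) tuples found in the HTML string."""
    parser = LinkAuditor()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    sample = (
        '<p><a href="https://example.com/editorial">Editorial link</a> '
        '<a href="https://example.com/paid" rel="nofollow noopener">Paid link</a></p>'
    )
    for href, nofollow in audit_links(sample):
        print(href, "nofollow" if nofollow else "followed")
```

Run on the sample, it reports the editorial link as followed and the paid link as nofollow. Splitting the rel value into tokens, rather than comparing the whole string, matters because real pages often combine nofollow with other values.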

5. Hidden Content in Tabs or Dropdowns

Yet another ongoing confusion… OK, so obviously, hiding content with CSS or JS in order to manipulate search engines is a bad thing. But why would tabs and dropdowns be? I mean, almost every menu out there is a dropdown. Should we stop making them dropdowns?

Well, back in the day, webmasters were hiding massive amounts of keyword-rich content so that visitors saw only the ads or copy. This meant that if Google indexed that content as if it were visible, it could show up in the search result snippet, misleading users into thinking they would find that information on the page. Had it been a clear and obvious tab or dropdown, however, I’m sure people would have had no problem finding it.

Google has to justify their action in some way because, obviously, people are confused. We all know the reason for this was abuse, but why can’t I put content in a tab to make my site look better, just because some guy uses white text on a white background to stuff in keywords and keep his site pretty?

Although John Mueller spreads fear that tabs and dropdowns will hurt you, Matt Cutts (former head of Google’s webspam team) has a much better explanation for this:

[Embedded YouTube video]

Obviously, if you don’t really hide your content, there’s nothing to fear. Just make your dropdown arrows and tab switches visible, not a 1×1-pixel dot. You can also hear him say:

If you use a common framework to do your dropdowns, that a lot of other websites use, then there’s less likelihood that we might accidentally classify it as hidden text.

This means Google does indeed make mistakes and, because of blackhat practitioners, well-intentioned SEOs suffer as well.

But now, apparently, it’s OK to use hidden content again, as of 2016 and the mobile-first index.

no, in the mobile-first world content hidden for ux should have full weight

— Gary “鯨理” Illyes (@methode) November 5, 2016

But let me tell you one thing: it was always OK to use content hidden in tabs or dropdowns, just as Matt Cutts mentioned in the video above. If, however, it was really hard or impossible for users to view, it would not get indexed and could potentially get your site penalized.

For many years, people thought that content in tabs and dropdowns was a bad thing, so they didn’t use it. Wikipedia was the exception: it has been using them for quite a while and dominates the top positions for almost every keyword.

6. Blog Comments (Spamming & Scamming)

I’ll be blunt. I hate spam. I work with a lot of websites, and often, I see something like this:

Website being spammed with comments

This resulted in the server trying to send 12,000 email notifications through an account that was synced with Gmail over POP3. Google eventually banned the website’s IP address for spam when, in fact, it was not this website that was doing the spamming. Yay BlackHat!

In the end, nobody wins from this, as none of those comments are approved, so they don’t show up on the page.

Spamming doesn’t work anymore, but a lot of people still do it. I tested tools like GSA and ScrapeBox. They don’t work for building high-quality links, because most quality sites don’t auto-approve comments. They will just waste your money and time, as these tools have a pretty steep learning curve.

ScrapeBox, however, can be pretty useful for identifying quality sites, as its main purpose is to scrape Google for links. You can use it to create a database of potential websites you can pitch your content to.

And don’t get me wrong. Blog commenting isn’t BlackHat and is actually a pretty useful technique. However, the point should be to really try and establish a relationship through your comments with either the blogger, the readers or the other commenters.

These days, people think that blog commenting is a bad thing, when actually, it isn’t. To be more specific, it never was!

Commenting is the life force of blogging.

It’s the way people used to establish connections and it still is. Spam and irrelevant stuff written just to get a backlink, be it automated or manually built, is never a good thing.

7. Confessions of a Google Spammer

So far, we’ve talked about how spam hurts the internet, its users and ethical webmasters, but I want to end this by sharing something for the BlackHat SEOs out there. It’s an old story, told by a Google web spammer.

He used to do blackhat SEO, constantly blasting other sites with spam to rank his own sites and his clients’. He spammed the internet so much that he ended up making over $100k per month. That insane amount of money let him buy expensive houses and cars, hire a cook and spend time with his son whenever he wanted.

However, his troubles began when Google kicked in with the penalties. At first, he thought he would just rinse and repeat, as usual, but within a couple of months it was clear that it wasn’t going to work this time. He wasted a lot of money trying new methods, in vain. Most of his spammer friends were in the same situation, and many of them turned to drugs to relieve the stress.

This guy knew the risks, even though he believed himself invincible for a while. Most businesses, however, don’t know the risks at all. Many SEO freelancers and agencies lie to people by promising them high rankings in record time. Traffic and profits go up and the companies start investing, then BOOM! Suddenly it’s all over.

You can read more about the Confessions of a Google Spammer here. The story has a good ending.

Conclusion

I understand why people do BlackHat SEO. I understand that it’s about money. I understand their frustration with a company that put in the work to become the world’s largest search engine and now has everyone obeying its rules, even if it’s ironic that they complain about it without having put in that work themselves.

But what ignites my hatred towards it is that it’s usually tied to horrible services, spam, scams, viruses & malware and even worse. No scammer will ever use whitehat SEO, because they know there’s no need for long-term investment. They care so much about Google’s evil monopolized reign, but they don’t give a damn about the kids they scam with fake Candy Crush cheat offers.

Acquaintance: “Hey, what do you do for a living?”

BlackHat SEO: “I scam kids online.”

Elon Musk has succeeded with Tesla, although the electric car is a concept dating back 100 years. Why? Most likely because he made his electric car better than a petrol one. Nobody had achieved that before. Along the same lines, blackhat techniques were more efficient than whitehat ones for a very long time. However, things are starting to change for the better, thanks to the efforts of Google and the whitehat SEO community.

Next time you see some blackhat stuff going on, please don’t just overlook it because you’re not the one doing it. Openly disagree with it, complain about it and report it to Google. It’s going to make the internet a better place for everyone.

The post 7 Examples of How BlackHat SEOs Hurt You, The Internet & Everyone Else appeared first on SEO Blog | cognitiveSEO Blog on SEO Tactics & Strategies.

7 Examples of How BlackHat SEOs Hurt You, The Internet & Everyone Else published first on http://nickpontemarketing.tumblr.com/

Latest practices from an Indianapolis SEO company to help you get the best results from your SEO efforts

For any type of online business, marketing is a very important part. If your potential customers never learn about your product or service, you might be losing out on a lot of business. These days, most business owners follow proper marketing strategies to promote their websites to their target audience. Search engine optimization (SEO) is one of the most effective digital marketing strategies. Many marketing strategies can bring traffic to your website, but SEO is by far the best approach. SEO is the process of optimizing a website to make it more user-friendly. It helps your website achieve top rankings in the search engine result pages (SERPs), so when potential customers search for products or services similar to yours, they will find your website quickly. Your business’s success depends on your SEO results and the actual return on investment from your SEO efforts.

To get the best results from your SEO efforts, you must work according to the latest practices. As a business owner, you should make sure your digital marketing strategies are based on the latest updates and tactics.

Some of these updates are mentioned below:

User intended content

Search engines used to follow old norms, but now they concentrate on what they were always supposed to do. There has been a shift from what search engines assumed users wanted toward content that matches what users actually want. Search results are now based more on what users meant when they typed a query. Content used by digital marketers should be brief and precise while maintaining the high quality and originality needed to rise above the rest. You can grab your target audience’s interest and attention only when you post unique, high-quality content on your website.

Use of social media

To get better results, businesses must combine search engine optimization and social media rather than focusing on just one. An SEO strategy combined with social media can give you higher conversion rates, revenue per click and brand exposure.

Make your website responsive

Most people use their smartphones for online searches, so a website that doesn’t work well on mobile misses out on a lot of traffic. Your website should therefore be user-friendly and responsive, giving your target audience a good experience on any device.

Include authoritative links

Studies suggest that adding outbound links to authoritative sources within your blog posts can have a positive effect on your SEO ranking. Content with these authoritative links tends to rank higher than content without them. The links build trust with Google, signaling that your content is relevant and valuable. They also give users additional resources to read, enhancing your brand message and your credibility in your industry.

Pay attention to the latest SEO news

Did you know that most users are more likely to connect with a brand whose digital marketing team uses integrated marketing strategies? Information like this is immensely important for boosting your SEO visibility. You can get it from SEO news providers and other websites such as:

· SEOBook

· Moz

· SearchEngineLand

· SERoundTable

· SearchEngineWatch

· SearchEngineJournal

· SEOChat

· Business 2 Community

· MattCutts Blog

If your business is located in Indianapolis, you can increase its visibility by hiring a reputable SEO company like IdealVisibility to safely and effectively improve your organic search engine placement on Google. This Indianapolis SEO company can help develop the best strategy for your budget and marketing needs. They can help you achieve organic search results with their vast experience in SEO. Their specialists are experts at implementing the most effective SEO techniques for improving a website’s search rankings. With search engine optimization as one of their core areas of expertise, they use proven methodologies and effective strategies to get your business maximum online exposure and a better return on investment.