#multi-label classification


#multi-label classification#machine learning#classification techniques#AI#ML algorithms#quick insights#data science


Python Libraries to Learn Before Tackling Data Analysis

To tackle data analysis effectively in Python, it's crucial to become familiar with several libraries that streamline the process of data manipulation, exploration, and visualization. Here's a breakdown of the essential libraries:

1. NumPy

- Purpose: Numerical computing.

- Why Learn It: NumPy provides support for large multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently.

- Key Features:

- Fast array processing.

- Mathematical operations on arrays (e.g., sum, mean, standard deviation).

- Linear algebra operations.
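A short NumPy sketch of these features. The array values are illustrative, not from any real dataset:

```python
import numpy as np

# A 2x3 array of sample measurements (illustrative values)
data = np.array([[1.0, 2.0, 3.0],
                 [4.0, 5.0, 6.0]])

# Vectorized aggregations: no explicit Python loops needed
total = data.sum()             # sum of all elements
col_means = data.mean(axis=0)  # mean of each column
std_all = data.std()           # population standard deviation (ddof=0 by default)

# Linear algebra: solve the system A @ x = b
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)

print(total, col_means, std_all, x)
```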

2. Pandas

- Purpose: Data manipulation and analysis.

- Why Learn It: Pandas offers data structures like DataFrames, making it easier to handle and analyze structured data.

- Key Features:

- Reading/writing data from CSV, Excel, SQL databases, and more.

- Handling missing data.

- Powerful group-by operations.

- Data filtering and transformation.
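A minimal Pandas sketch covering reading, missing data, filtering, transformation, and group-by. The inline CSV and its column names are made up for illustration; `pd.read_csv` accepts a file path just as well:

```python
import io

import pandas as pd

# Inline CSV stands in for a real file (note the missing 'units' value for North/B)
csv_text = """region,product,units,price
North,A,10,2.5
North,B,,4.0
South,A,7,2.5
South,B,3,4.0
"""
df = pd.read_csv(io.StringIO(csv_text))

# Handle missing data: treat the blank 'units' value as 0
df["units"] = df["units"].fillna(0)

# Transformation: derive a revenue column
df["revenue"] = df["units"] * df["price"]

# Filtering: keep only rows that sold at least one unit
sold = df[df["units"] > 0]

# Group-by: total revenue per region
revenue_by_region = sold.groupby("region")["revenue"].sum()
print(revenue_by_region)
```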

3. Matplotlib

- Purpose: Data visualization.

- Why Learn It: Matplotlib is one of the most widely used plotting libraries in Python, allowing for a wide range of static, animated, and interactive plots.

- Key Features:

- Line plots, bar charts, histograms, scatter plots.

- Customizable charts (labels, colors, legends).

- Integration with Pandas for quick plotting.
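A small Matplotlib sketch showing a customized line plot next to a bar chart drawn through Pandas' `DataFrame.plot`. The sales figures are invented, and the figure is saved to an in-memory PNG buffer so the script runs without a display:

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: no window needed
import matplotlib.pyplot as plt
import pandas as pd

months = ["Jan", "Feb", "Mar", "Apr"]
sales = [120, 135, 150, 160]  # illustrative values

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 3))

# Line plot with customized color, marker, labels, and legend
ax1.plot(months, sales, color="tab:blue", marker="o", label="Sales")
ax1.set_title("Monthly sales")
ax1.set_xlabel("Month")
ax1.set_ylabel("Units")
ax1.legend()

# Pandas integration: DataFrame.plot draws onto an existing Matplotlib Axes
df = pd.DataFrame({"sales": sales}, index=months)
df.plot(kind="bar", ax=ax2, legend=False, title="Same data as bars")

fig.tight_layout()
buf = io.BytesIO()
fig.savefig(buf, format="png")  # use a filename such as "sales.png" to write to disk
plt.close(fig)
```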

4. Seaborn

- Purpose: Statistical data visualization.

- Why Learn It: Built on top of Matplotlib, Seaborn simplifies the creation of attractive and informative statistical graphics.

- Key Features:

- High-level interface for drawing attractive statistical graphics.

- Easier to use for complex visualizations like heatmaps, pair plots, etc.

- Visualizations based on categorical data.

5. SciPy

- Purpose: Scientific and technical computing.

- Why Learn It: SciPy builds on NumPy and provides additional functionality for complex mathematical operations and scientific computing.

- Key Features:

- Optimized algorithms for numerical integration, optimization, and more.

- Statistics, signal processing, and linear algebra modules.
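A brief SciPy sketch touching integration, optimization, and statistics. The function and the two samples are illustrative:

```python
import numpy as np
from scipy import integrate, optimize, stats

# Numerical integration: the integral of sin(x) from 0 to pi is exactly 2
area, abs_err = integrate.quad(np.sin, 0, np.pi)

# Optimization: minimize the quadratic (x - 3)^2 + 1, whose minimum is at x = 3
result = optimize.minimize_scalar(lambda x: (x - 3) ** 2 + 1)

# Statistics: two-sample t-test on two small samples
group_a = [5.1, 4.9, 5.3, 5.0, 5.2]
group_b = [5.8, 6.0, 5.9, 6.1, 5.7]
t_stat, p_value = stats.ttest_ind(group_a, group_b)

print(area, result.x, t_stat, p_value)
```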

6. Scikit-learn

- Purpose: Machine learning and statistical modeling.

- Why Learn It: Scikit-learn provides simple and efficient tools for data mining, analysis, and machine learning.

- Key Features:

- Classification, regression, and clustering algorithms.

- Dimensionality reduction, model selection, and preprocessing utilities.
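A minimal scikit-learn sketch combining preprocessing, a classifier, and a train/test split on the bundled Iris dataset. Logistic regression is just one choice; any estimator with `fit`/`predict` would slot into the same pipeline:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Bundled toy dataset: 150 iris flowers, 4 features, 3 classes
X, y = load_iris(return_X_y=True)

# Model selection utility: hold out 25% of rows for testing
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Preprocessing (feature scaling) and classification chained into one estimator
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.2f}")
```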

7. Statsmodels

- Purpose: Statistical analysis.

- Why Learn It: Statsmodels allows users to explore data, estimate statistical models, and perform tests.

- Key Features:

- Linear regression, logistic regression, time series analysis.

- Statistical tests and models for descriptive statistics.

8. Plotly

- Purpose: Interactive data visualization.

- Why Learn It: Plotly allows for the creation of interactive and web-based visualizations, making it ideal for dashboards and presentations.

- Key Features:

- Interactive plots like scatter, line, bar, and 3D plots.

- Easy integration with web frameworks.

- Dashboards and web applications with Dash.

9. TensorFlow/PyTorch (Optional)

- Purpose: Machine learning and deep learning.

- Why Learn It: If your data analysis involves machine learning, these libraries will help in building, training, and deploying deep learning models.

- Key Features:

- Tensor processing and automatic differentiation.

- Building neural networks.

10. Dask (Optional)

- Purpose: Parallel computing for data analysis.

- Why Learn It: Dask scales data manipulation by parallelizing NumPy- and Pandas-style operations across cores or machines, making it well suited to datasets too large to fit in memory.

- Key Features:

- Works with NumPy, Pandas, and Scikit-learn.

- Handles large data and parallel computations easily.

Focusing on NumPy, Pandas, Matplotlib, and Seaborn will set a strong foundation for basic data analysis.


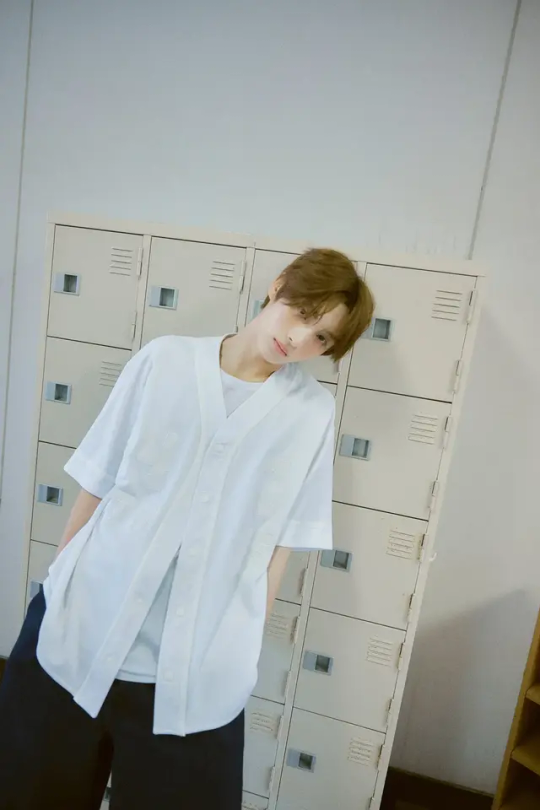

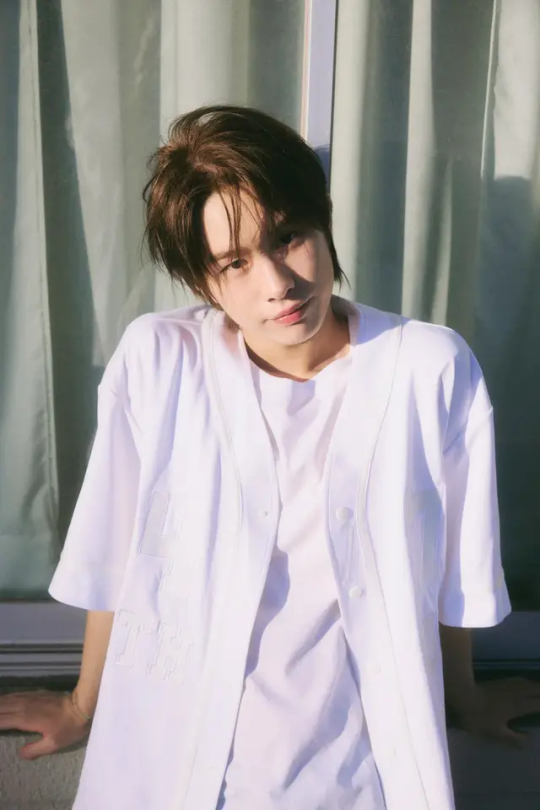

A guide to TWS.

TWS is a multinational six-member group under Pledis Entertainment that debuted on 22nd January 2024. The members are Shinyu, Dohoon, Youngjae, Hanjin, Jihoon, and Kyungmin, from eldest to youngest. The group name TWS stands for "TWENTY FOUR SEVEN WITH US".

Their debut mini-album, Sparkling Blue, captures the excitement and tension of the start of a new semester, when everything begins afresh. The songs feature lyrics about everyday topics and a refreshing concept that younger generations can relate to. TWS can also be pronounced "Two Us".

On 10th November 2022, Pledis Entertainment, a sub-label of HYBE, announced plans to debut a new boy group around 2023. During SEVENTEEN's fan meeting, Carat Land, on 12th March 2023, Hoshi of SEVENTEEN introduced five trainees as members of the label's upcoming group, though their identities remained a mystery. Pledis officially confirmed on 7th November that the group would debut in the first quarter of 2024.

On 21st December 2023, the group launched their official social media accounts and released their first debut teaser. The following day, Pledis Entertainment announced that the group would consist of six members and would debut in January 2024.

On 27th December 2023, it was announced that the group would release their debut mini-album, Sparkling Blue, on 22nd January. The pre-release single, titled "Oh Mymy: 7s", was released on 2nd January.

The six members were introduced through a group profile video released on 3rd January and individual videos, which followed the next day, on 4th January.

Brief profile of members (from oldest to youngest)

1. Shinyu

Shinyu, whose birth name is Shin Junghwan, is the oldest member and leader of TWS. He was born on 7th November 2003 and claims to be "the father" of the group. Shinyu graduated from Lila Art High School in 2023, a year late, having previously dropped out of Yesan High School. He has two elder siblings, born in 1997 and 2002. Based on MBTI classification, his personality type is INFP. He represents the visual of the group, with a mature, trendy, flower-boy appearance, a small face, and broad shoulders.

Before joining Pledis Entertainment, Shinyu was a trainee at both SM Entertainment and Big Hit Music. He began training in his freshman year of high school. Shinyu is known to be friends with actress Lee Chaeyoon, who once participated in Girls Planet 999; they even shot a school activity video together at Lila Art High School. Shinyu has a calm and mature personality, but he also has a random side that helps him get along well with the other members.

2. Dohoon

Dohoon, whose birth name is Kim Dohoon, is a member of TWS, born on 30th January 2005. He has an elder brother and attended Lila Art High School before transferring to Hanlim Multi Art High School. Dohoon began his trainee journey in 2017 and has trained the longest of all the TWS members, which has made him a versatile member.

Based on the MBTI personality test, Dohoon's personality type is ISTP. His face is often compared to Nam Joohyuk and EVNNE's Lee Jeonghyeon, and he is known for representing the "strong and fierce" visual in the group. Together with Shinyu, Dohoon is responsible for the rap line in TWS.

During dance performances, Dohoon's movements are clean and precise, and he controls their dynamics well. He is often chosen as the center during the highlights of a song. Additionally, his vocal ability is exceptional, with a wide range and good pitch. Dohoon tends to sing high-pitched tones with a soft voice, and he also features in rap parts.

Despite the group's challenging pre-debut period, Dohoon is known as the member who did not cry when everyone else was teary-eyed two days before their debut. He was also very popular among his seniors and juniors in middle school. Dohoon is a talkative and random member who adds life to the group's chemistry.

3. Youngjae

Youngjae, whose birth name is Choi Youngjae, was born on 31st May 2005 and is a member of TWS. He has a younger sister and completed his schooling at Jamshin High School. Based on the MBTI personality test, Youngjae's personality type is ISFJ. Compared to the other members, Youngjae is relatively calm, but alongside Shinyu, he often speaks more.

Youngjae's position in the group is main vocalist. He has the loudest voice among the members, and it is clear and gentle, well suited to band vocals. He is particularly responsible for delivering high notes and the song's highlight parts. During dance performances, Youngjae shows flexible dance lines with big, proportional body movements.

Youngjae's soft and gentle look comes from his double eyelids, round eyes, and slim face. According to his fellow members, he has an organized personality with strong vitality. Additionally, he was discovered by Pledis Entertainment through Instagram DM. He occasionally uses a Gyeongnam accent while speaking.

4. Hanjin

Hanjin, whose birth name is Han Zhen, is a member of TWS. He was born on 5th January 2006 and is originally from Xinxiang, Henan, China. Hanjin completed his schooling at Hanlim Multi Art High School and has the personality type of INFJ based on the MBTI classification.

Hanjin is the only foreign member of the group; he joined Pledis Entertainment through an audition in China in 2022 and trained for approximately a year before debuting. His position in the group is sub-vocalist due to his limited Korean. However, in the b-side song "Unplugged Boy", Hanjin showcased his stable and warm vocals.

Hanjin's dance skills are excellent even though his training period was relatively short. He is also the visual member, who is a handsome Chinese man with large, beautiful eyes, dark double eyelids, and a sharp nose. His small face is filled with attractive features that exude luxury.

Despite his limited Korean, Hanjin is actually a talkative and noisy member. His favorite season is autumn because it is neither too hot nor too cold. He also takes selcas (selfies) often and loves photography.

5. Jihoon

Jihoon, whose birth name is Han Jihoon, is a member of TWS. He was born on 28th March 2006 and has an elder sister born in 2002. Jihoon is skilled in guitar playing and dancing, and he is also INFJ based on the MBTI personality test. He attended Hanlim Multi Art High School.

Jihoon became a trainee after attending Move Dance Studio in Suwon. He began training at YG Entertainment in 2017 and subsequently moved to JYP Entertainment. He then joined Big Hit Entertainment (now Big Hit Music) in 2020. Jihoon was part of Trainee A, which was set to debut next, but the group's debut was canceled. He finally joined Pledis Entertainment in 2023, having trained for about seven years in total before his debut.

Jihoon's position in the group is main dancer. He is passionate about dancing, and his movements are precise and controlled. He memorizes choreography very well and can create choreography as well. Jihoon likes hip-hop music, and his clear, transparent tone shows remarkable vocal ability and is comfortable to listen to. He has plump lips, lovely skin, and soft cheeks, giving him a cute image when he smiles with a round, open mouth that reveals his gumline.

Jihoon is an excellent dancer, but he is said to have practiced so much that he developed inflammation in his knee joint. Monica, who appeared in Street Woman Fighter, is his dance mentor; he attended OFD dance studio, a dance school run by Monica.

Jihoon is fluent in three languages: Korean, Japanese, and English. Like Shinyu and Dohoon, he is reportedly more popular than the other members, with even wider recognition thanks to his exposure as part of Trainee A. Jihoon and Kyungmin are known as the loudest and most talkative members of the group.

6. Kyungmin

Kyungmin, whose birth name is Lee Kyungmin, is the youngest member of TWS, born on 2nd October 2007. He is the only member with two younger brothers and attended Hanlim Multi Art High School. Based on the MBTI personality test, Kyungmin's personality type is ISFP.

Kyungmin received training from Mu Doctor Academy, an audition academy, before passing an in-house audition held by Pledis Entertainment and joining the label. He is the main vocalist, with no remarkable contrast between his natural voice and singing voice. Kyungmin's voice is refreshing but somewhat childlike, with a beautiful and unique tone that captivates the ears, particularly at higher notes.

Kyungmin's dance technique is clear and loud. His slightly droopy eyes and plump lips give him a cute look, often compared to a puppy, especially when he smiles with a raised mouth corner and a dimple that appears, making those who look at him smile along.

Kyungmin and Jihoon are known as the loudest and most playful members of the group. Kyungmin is also known for his outstanding academic record and excels in mathematics, ranking sixth in his class. He is the first HYBE idol born in 2007 to debut: although Wonhee of ILLIT was the first to have her debut confirmed, through the audition program R U Next?, Kyungmin made his debut earlier than Wonhee.

TWS's first mini album: SPARKLING BLUE, released January 22, 2024.

Go streaming!!!! :


Albums of the Month: Sun Without the Heat by Leyla McCalla

Album: Sun Without the Heat

Artist: Leyla McCalla

Release Date: April 12, 2024

Label: ANTI-

Favorite Tracks: Open the Road, Sun Without the Heat, Tower, Love We Had, I Want to Believe

Thoughts: A theme of recent album releases is their ability to defy genre classification. This holds true for this solo outing from Leyla McCalla, a vocalist and multi-instrumentalist who plays cello, banjo, and guitar,…


How Printed Jewellery Tags Help Manage Jewellery Inventory?

When it comes to the colourful and constantly changing world of jewellery, accurate inventory management is both a must and a challenge. A piece of jewellery can be small or big, expensive or cheap, old or modern, printed or plain, and made from an endless range of materials. Jewellery inventory management is not just a count of your products; it means accurate classification, real-time tracking, and professional presentation. One of the most practical ways to simplify jewellery inventory management is to use printed jewellery tags.

Printed jewellery tags are more than just price tags. They are essential identifiers that enable tracking of each piece, deter theft, simplify billing, and help with organization. In this blog, we will look at the role of printed jewellery tags in inventory management and why choosing the right print manufacturer (with Jewellery Labels Manufacturers as leaders in the industry) can improve the inventory side of your business.

Why Inventory Management is Important in the Jewellery Industry

Inventory management is essential for any jewellery company, whether a small boutique or a large chain. With thousands of small, high-value items, tracking movement by hand is nearly impossible, and mistakes will inevitably happen. Losing just one item can hurt your finances or your customer experience.

Therefore, companies are turning to digital inventory systems paired with printed tags to support their business processes. A tag includes price information and product details, and it can also be incorporated into a barcode or RFID system to support accurate, real-time tracking.

Printed Jewellery Tags: A New Solution

Printed jewellery tags can provide all the necessary details for a product, including SKU, metal, gemstone, weight, price, and barcode. Used to their full potential, printed jewellery tags can:

Increase product traceability

Improve the display of products

Speed up accurate billing

Enhance audits and stocktakes

Reduce theft and loss

Printed Jewellery Tags Manufacturers choose materials and adhesives that keep tags durable and legible while remaining suitable for delicate products.

Importance of Selecting the Right Material

Labels serve a range of purposes in the jewellery industry. Some of the most popular are made from either polyester or non-tearable material.

Polyester Labels: Strength and Clarity

Polyester labels offer excellent longevity and resist wear and tear. They are also water-resistant and, to some extent, chemical-resistant, and they support very high-quality printing. Quality Polyester Labels Manufacturers supply polyester tags that keep their appearance and functionality while holding up to constant handling in a retail environment.

Polyester Tags may be the right product for:

High-end jewellery pieces

Frequently handled items

Items that stay in inventory for a long time

Non-Tearable Labels: Security and Longevity

Non-tearable labels are normally made from synthetic materials that withstand wear and tear. They are best used for more expensive pieces because they are hard to remove or replace without leaving visible damage.

Non-tearable labels from specialist manufacturers are used in many different types of stores, including:

Shops that experience theft

High traffic retail showrooms

Items that customers handle continuously

The flexibility of piggyback labels

A piggyback label works as two labels in one, thanks to its multi-layer adhesive construction. In jewellery, piggyback labels are used for inventory control, billing, and after-sale services. Piggyback Labels Manufacturers produce this multi-use product to help improve operational flexibility.

Advantages of piggyback labels:

Part of the label can be peeled off and kept for accounting.

Used to tag returned or repaired goods

Simplifies warranty and authentication processes for jewellery

Bill and Track

With a barcode or QR code printed on each jewellery tag, items can be scanned easily, allowing fast and efficient billing that minimizes customer wait times and pricing errors. As a back-end benefit, the same barcode or QR code can be used for stock counts.

When tags are integrated with your point-of-sale (POS) or ERP system, each item is tracked automatically the moment it is sold.

Benefits of printed tags integrated with POS and ERP systems:

Quick and smooth transaction experience for customers

Accurate updates and tracking for inventory.

Enhanced trend analysis with data.

Jewellery labels for better inventory control

Quality jewellery labels enable a more organized inventory by metal type, gemstone, collection, or design. This way, it is easy to find, trace, and replenish product inventory.

Reliable Jewellery Labels Manufacturers also offer customization options such as:

The size and shape of tags

Printed logos and product information

Colour-coding by category

An organized tagging system is both efficient for the back end and looks great in the front end!

Branding and Customer Experience

Printed jewellery tags are a powerful yet subtle marketing vehicle! Personalized labels with your brand name, logo, and tagline build brand awareness and trust. Customers remember: "That looked professional!"

Whether you want a luxurious designer label or a minimalist boutique tag, high-quality Printed Jewellery Tags Manufacturers can create memorable tagging solutions that help elevate your brand.

Preventing Theft and Ensuring Accountability

Jewellery theft is a concern in retail environments. Consider tamper-proof identity tags or non-removable inventory labels for added protection. Each inventory label can carry a serial number and other tracking information that helps retailers carry out more effective inventory audits and security checks.

Non-tearable labels and highly secure adhesives engineered by reputable manufacturers help retailers keep their valuable inventory safe.

Making Returns and Replacements Easy

When a product needs to be returned or replaced, the process is much easier and quicker if a label with all the relevant information is attached. This allows for:

Proof of purchase

Verification of product information

Faster service for the customer

Piggyback and polyester labels do this very well, as they retain their information over time.

Environmental and Regulatory Considerations

Today's consumers are concerned about the environment. As a result, jewellery businesses are opting for eco-friendly, recyclable, or biodegradable tags, and leading Jewellery Labels Manufacturers now offer sustainable label choices without sacrificing quality.

Tags can also hold legal information, hallmarking, and any other regulatory information, ensuring everything is above board and transparent.

Conclusion

Managing jewellery stock without an efficient tagging system is like sailing a ship without a compass. Printed jewellery tags are not a trivial add-on to the product; they are key components of your inventory scheme! Printed jewellery labels and tags improve the visibility of your stock, enhance your client experience, reduce loss, and offer so much more!

Whether you want polyester, tamper-proof, or piggyback tags, finding the right supplier among Printed Jewellery Tags Manufacturers will make all the difference. You'll encounter suppliers like Jewellery Labels Manufacturers, Polyester Labels Manufacturers, Non Tearable Labels Manufacturers, Piggyback Labels Manufacturers, and others that will design custom solutions for every business need.

Make the smart choice. Start improving your inventory management today with professional printed jewellery tags built for accuracy, security, and beauty.

Contact Information:

Gamma Sales Corporation

📞 +91 80 100 111 88

📧 [email protected]

📍 Opp. Panchayat Ghar, Nangli Sakrawati, Najafgarh, New Delhi - 110043, India

#Jewellery Labels Manufacturers#Printed Jewellery Tags Manufacturers#Polyester Labels Manufacturers#Non Tearable Labels Manufacturers#Piggyback Labels Manufacturers


CHARACTER STUDY, TRAITS & CHARACTERISTICS!

bold applies to your muse; italics conditional.

EYES: blue | green | brown | hazel | gray | gray-blue | other

HAIR: blond | sandy | brown | black | auburn | ginger | multi-color | other

She can kind of shapeshift to whatever hair she wants, but she's naturally a brunette

BODY TYPE: skinny | slender | slim | built | curvy | average | muscular | pudgy | overweight | athletic

She's a stripper, so she's thin and muscular. Idfk what half of the skinnier classifications really mean. Also, this is assuming no shapeshifting

SKIN: pale | light | fair | freckled | tan | medium | dark | other

Once again, idk the difference between the light skinned ones. She's white, but not super pale. Burns, then tans.

GENDER: male | female | trans | cis | agender | demigender | genderfluid | other

Mina's a cis woman. One of her alters is a man, one is a girl child, and one is an adult fae woman.

SEXUALITY: heterosexual | homosexual | bisexual | pansexual | asexual | demisexual | other | unsure | doesn't care for labels

Other aka monster fucker

ORIENTATION: homoromantic | heteroromantic | biromantic | unsure | doesn't care for labels | demiromantic

EDUCATION: high school | college | university | master's degree(s) | PhD | other

Faenapped before high school. Technically has a fake high school diploma

I'VE BEEN: in love | hurt | ill | mentally abused | bullied | physically abused | tortured | brainwashed | shot | stabbed

POSITIVE TRAITS: affectionate | adventurous | athletic | brave | careful | charming | confident | creative | cunning | determined | forgiving | generous | honest | humorous | intelligent | loyal | modest | patient | selfless | polite | down-to-earth | diligent | romantic | moral | fun-loving | charismatic | calm

NEGATIVE TRAITS: aggressive | bossy | cynical | envious | shy | fearful | greedy | gullible | jealous | impatient | impulsive | cocky | reckless | insecure | irresponsible | mistrustful | paranoid | possessive | sarcastic | self-conscious | selfish | swears (????) | unstable | clumsy | rebellious | emotional | vengeful | anxious | self-sabotaging | moody | peevish | angry | pessimistic | slacker | thin skinned | overly dramatic | argumentative | scheming

LIVING SITUATION: lives alone | lives with parent(s) / guardian | lives with significant other | lives with a friend | drifter | homeless | lives with children/family | other

Moira is her roommate and kind of friend

PARENTS/GUARDIANS: mother | father | adoptive | foster | grandmother | grandfather | other

Divorced mom, Dad got sole custody of kids

SIBLING(S): sister(s) | brother(s) | other | none

One older brother who is six years her senior. One of her alters presents as an older brother between Mina and her actual Real Brother in age.

RELATIONSHIP: single | crushing | dating | engaged | married | separated | it's complicated | verse dependent

LOL

In a polygamous marriage with a true fae. He's allowed multiple wives, she's allowed to date as long as she "doesn't put anyone before him." This devotion is defined by her husband, not by Mina.

Also whatever the fuck is going on with Dust

I HAVE A(N): learning disorder | personality disorder | mental/mood disorder | anxiety disorder | sleep disorder | eating disorder | behavioral disorder (not sure what you mean) | substance-related disorder | PTSD | mental disability | physical disability

Mina has DID, which also means she has a bunch of secondary trauma-based disorders bc. That's trauma, babe. She's been inpatient before and has been diagnosed with Stuff, but even if a psych knew fae shit, she'd definitely get hit with (c)ptsd and/or bpd. Same with some sort of mood issue and/or anxiety

Sleep disorder is the chronic nightmares and purposefully keeping herself awake to prevent them.

Absolutely smokes too much weed, but god is that even the biggest issue rn?

She definitely absolutely does bulimic behavior in the background but. I'm not rping that bye.

THINGS I'VE DONE: had alcohol | smoked/hookah | stolen | done drugs | self-harmed | starved | had a threesome | had a one-night stand | gotten into a physical fight | gone to a hospital | gone to jail | used a fake ID | played hooky | gone to a rave | killed someone | had someone try to kill them

Got detained while the cops decided what they were going to do re 5150ing her when she first escaped the Hedge in Cleveland, considering when they grabbed her, she reached for their weapons. White girl privilege saved her lol

Tagged by: @knife-edged-dreams :3

Anyone can do this. Go nuts, show nuts


How AI Is Revolutionizing Contact Centers in 2025

As contact centers evolve from reactive customer service hubs to proactive experience engines, artificial intelligence (AI) has emerged as the cornerstone of this transformation. In 2025, modern contact center architectures are being redefined through AI-based technologies that streamline operations, enhance customer satisfaction, and drive measurable business outcomes.

This article takes a technical deep dive into the AI-powered components transforming contact centers—from natural language models and intelligent routing to real-time analytics and automation frameworks.

1. AI Architecture in Modern Contact Centers

At the core of today’s AI-based contact centers is a modular, cloud-native architecture. This typically consists of:

NLP and ASR engines (e.g., Google Dialogflow, Amazon Lex, OpenAI Whisper)

Real-time data pipelines for event streaming (e.g., Apache Kafka, Amazon Kinesis)

Machine Learning Models for intent classification, sentiment analysis, and next-best-action

RPA (Robotic Process Automation) for back-office task automation

CDP/CRM Integration to access customer profiles and journey data

Omnichannel orchestration layer that ensures consistent CX across chat, voice, email, and social

These components are typically containerized, orchestrated with Kubernetes, and deployed via CI/CD pipelines, enabling rapid iteration and scalability.

2. Conversational AI and Natural Language Understanding

The most visible face of AI in contact centers is the conversational interface—delivered via AI-powered voice bots and chatbots.

Key Technologies:

Automatic Speech Recognition (ASR): Converts spoken input to text in real time. Example: OpenAI Whisper, Deepgram, Google Cloud Speech-to-Text.

Natural Language Understanding (NLU): Determines intent and entities from user input. Fine-tuned transformer models such as BERT or LLaMA typically power this layer.

Dialog Management: Manages context-aware conversations using finite state machines or transformer-based dialog engines.

Natural Language Generation (NLG): Generates dynamic responses based on context. GPT-based models (e.g., GPT-4) are increasingly embedded for open-ended interactions.
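To make the finite-state-machine approach to dialog management concrete, here is a minimal Python sketch. The states, intents, and prompts are hypothetical, and intents are assumed to arrive already classified by an upstream NLU model:

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical order-status conversation: each (current_state, intent) pair maps to the next state
TRANSITIONS = {
    ("start", "check_order"): "ask_order_id",
    ("ask_order_id", "provide_order_id"): "report_status",
    ("report_status", "goodbye"): "end",
}

PROMPTS = {
    "ask_order_id": "Sure, what is your order number?",
    "report_status": "Order {order_id} has shipped.",
    "end": "Thanks for contacting us!",
}


@dataclass
class DialogManager:
    state: str = "start"
    slots: dict = field(default_factory=dict)  # entities collected so far (the context)

    def step(self, intent: str, entities: Optional[dict] = None) -> str:
        """Advance the conversation given the NLU output for one user turn."""
        self.slots.update(entities or {})
        next_state = TRANSITIONS.get((self.state, intent))
        if next_state is None:
            # Intent not valid in this state: keep the state and re-prompt
            return "Sorry, I didn't catch that."
        self.state = next_state
        return PROMPTS[next_state].format(**self.slots)


dm = DialogManager()
print(dm.step("check_order"))                             # asks for the order number
print(dm.step("provide_order_id", {"order_id": "A123"}))  # reports the order status
```

An FSM keeps every reachable state explicit and auditable; transformer-based dialog engines trade that predictability for flexibility with open-ended input.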

Architecture Snapshot:

```plaintext
Customer Input (Voice/Text)
        ↓
ASR Engine (if voice)
        ↓
NLU Engine → Intent Classification + Entity Recognition
        ↓
Dialog Manager → Context State
        ↓
NLG Engine → Response Generation
        ↓
Omnichannel Delivery Layer
```

These AI systems are often deployed on low-latency, edge-compute infrastructure to minimize delay and improve UX.
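The flow in the architecture snapshot can be sketched as a chain of functions. This is a toy under loud assumptions: `asr`, `nlu`, `dialog_manager`, `nlg`, and `deliver` are hypothetical stand-ins (keyword rules and templates rather than real ASR, NLU, or LLM services), kept trivial so the stage boundaries stay visible:

```python
# Every stage is a hypothetical stand-in: a real deployment would call an ASR
# service, a trained NLU model, and an LLM or template engine instead.

def asr(audio: bytes) -> str:
    # Placeholder transcription: pretends the audio payload is UTF-8 text
    return audio.decode("utf-8")


def nlu(text: str) -> dict:
    # Toy keyword rules standing in for intent classification + entity recognition
    lowered = text.lower()
    if "bill" in lowered:
        intent = "billing"
    elif "internet" in lowered:
        intent = "tech_support"
    else:
        intent = "other"
    account = next((w for w in text.split() if w.startswith("ACC-")), None)
    return {"intent": intent, "entities": {"account": account} if account else {}}


def dialog_manager(parsed: dict, context: dict) -> dict:
    # Carry entities across turns and remember the latest intent (context state)
    context.update(parsed["entities"])
    context["last_intent"] = parsed["intent"]
    return context


def nlg(context: dict) -> str:
    templates = {
        "billing": "I can help with your bill{acct}.",
        "tech_support": "Let's troubleshoot your connection{acct}.",
        "other": "Could you tell me a bit more?",
    }
    acct = f" for account {context['account']}" if "account" in context else ""
    return templates[context["last_intent"]].format(acct=acct)


def deliver(message: str, channel: str) -> dict:
    # Omnichannel layer: same response, wrapped for the target channel
    return {"channel": channel, "body": message}


def handle_turn(payload, channel: str, context: dict, is_voice: bool = False) -> dict:
    text = asr(payload) if is_voice else payload  # ASR runs only for voice input
    parsed = nlu(text)
    context = dialog_manager(parsed, context)
    return deliver(nlg(context), channel)


ctx = {}
print(handle_turn(b"My bill is wrong, account ACC-42", "voice", ctx, is_voice=True))
print(handle_turn("Also my internet is down", "chat", ctx))  # account carried over
```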

3. AI-Augmented Agent Assist

AI doesn’t only serve customers—it empowers human agents as well.

Features:

Real-Time Transcription: Streaming speech-to-text (STT) pipelines provide transcripts as the customer speaks.

Sentiment Analysis: Transformers and CNNs trained on customer service data flag negative sentiment or stress cues.

Contextual Suggestions: Based on historical data, ML models suggest actions or FAQ snippets.

Auto-Summarization: Post-call summaries are generated using abstractive summarization models (e.g., PEGASUS, BART).

Technical Workflow:

Voice input transcribed → parsed by NLP engine

Real-time context is compared with knowledge base (vector similarity via FAISS or Pinecone)

Agent UI receives predictive suggestions via API push
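The knowledge-base comparison step boils down to nearest-neighbour search over embeddings. The sketch below does a brute-force cosine-similarity top-k with NumPy; the article titles and 4-dimensional vectors are hypothetical, and FAISS or Pinecone would replace the brute-force scan with an index built to scale to millions of vectors:

```python
import numpy as np


def top_k_cosine(query_vec, kb_matrix, k=2):
    """Return indices and scores of the k knowledge-base rows most similar to the query."""
    q = query_vec / np.linalg.norm(query_vec)
    kb = kb_matrix / np.linalg.norm(kb_matrix, axis=1, keepdims=True)
    scores = kb @ q                     # cosine similarity of each row with the query
    idx = np.argsort(scores)[::-1][:k]  # highest scores first
    return idx, scores[idx]


# Hypothetical embeddings; real systems use vectors produced by an embedding model
kb_articles = ["Reset password", "Refund policy", "Shipping times"]
kb_vectors = np.array([
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.8, 0.6, 0.0],
    [0.0, 0.1, 0.2, 0.9],
])
query = np.array([0.1, 0.7, 0.5, 0.0])  # e.g., an embedding of "I want my money back"

idx, scores = top_k_cosine(query, kb_vectors, k=2)
suggestions = [kb_articles[i] for i in idx]  # pushed to the agent UI
print(suggestions, scores)
```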

4. Intelligent Call Routing and Queuing

AI-based routing uses predictive analytics and reinforcement learning (RL) to dynamically assign incoming interactions.

Routing Criteria:

Customer intent + sentiment

Agent skill level and availability

Predicted handle time (via regression models)

Customer lifetime value (CLV)
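As an illustration of how these criteria might be combined, here is a rule-based scoring sketch. It is not the RL approach listed under the model stack: the priority rule, the weights (such as `0.03` per predicted minute), and the `Agent` fields are all hypothetical, with sentiment, CLV, and predicted handle time assumed to come from upstream models:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Agent:
    name: str
    skills: set
    available: bool
    skill_level: float           # 0..1, hypothetical proficiency score
    predicted_handle_min: float  # assumed output of a handle-time regression model


def priority(sentiment: float, clv: float) -> float:
    # Hypothetical rule: upset (negative-sentiment) and high-CLV customers get priority in 0..1
    return min(1.0, (0.5 if sentiment < 0 else 0.0) + clv / 10_000)


def score(agent: Agent, prio: float) -> float:
    # Higher priority shifts weight toward skill and away from speed
    return (1 + prio) * agent.skill_level - 0.03 * agent.predicted_handle_min


def route(intent: str, sentiment: float, clv: float, agents: List[Agent]) -> Optional[str]:
    eligible = [a for a in agents if a.available and intent in a.skills]
    if not eligible:
        return None  # no matching agent: fall back to a general queue
    prio = priority(sentiment, clv)
    return max(eligible, key=lambda a: score(a, prio)).name


agents = [
    Agent("Ana", {"billing", "tech"}, True, 0.9, 12.0),
    Agent("Ben", {"billing"}, True, 0.7, 4.0),
    Agent("Cai", {"billing"}, False, 1.0, 3.0),  # unavailable, so never chosen
]
print(route("billing", 0.2, 0, agents))      # routine call: the faster agent wins
print(route("billing", -0.6, 8000, agents))  # upset, high-value caller: the most skilled agent wins
```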

Model Stack:

Intent Detection: Multi-label classifiers (e.g., fine-tuned RoBERTa)

Queue Prediction: Time-series forecasting (e.g., Prophet, LSTM)

RL-based Routing: Models trained via Q-learning or Proximal Policy Optimization (PPO) to optimize wait time vs. resolution rate
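Multi-label intent detection lets one utterance carry several intents at once (for example, a cancellation request plus a billing complaint). The sketch below uses scikit-learn TF-IDF features with one logistic-regression classifier per intent, a lightweight stand-in for a fine-tuned RoBERTa; the utterances and intent labels are hypothetical:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MultiLabelBinarizer

# Hypothetical labeled utterances; an utterance can carry several intents
texts = [
    "I was charged twice on my bill",
    "my bill is too high",
    "refund the extra charge on my bill",
    "I want to cancel my subscription",
    "please cancel my account",
    "my internet keeps dropping",
    "the router is not working",
    "cancel my plan because the internet is not working",
    "cancel my account, the bill is wrong",
    "internet down and I was charged twice",
]
labels = [
    ["billing"], ["billing"], ["billing"], ["cancel"], ["cancel"],
    ["technical"], ["technical"], ["cancel", "technical"],
    ["billing", "cancel"], ["billing", "technical"],
]

mlb = MultiLabelBinarizer()
Y = mlb.fit_transform(labels)  # shape (n_samples, n_labels): one 0/1 column per intent

# One binary classifier per intent over shared TF-IDF features
clf = make_pipeline(
    TfidfVectorizer(),
    OneVsRestClassifier(LogisticRegression(C=10.0, max_iter=1000)),
)
clf.fit(texts, Y)


def predict_intents(utterances):
    # Each intent is decided independently (0.5 probability threshold),
    # so zero, one, or several intents can come back per utterance
    return mlb.inverse_transform(clf.predict(utterances))


print(predict_intents(["cancel my account because the bill is wrong"]))
```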

5. Knowledge Mining and Retrieval-Augmented Generation (RAG)

Large contact centers manage thousands of documents, SOPs, and product manuals. AI facilitates rapid knowledge access through:

Vector Embedding of documents (e.g., using OpenAI, Cohere, or Hugging Face models)

Retrieval-Augmented Generation (RAG): Combines dense retrieval with LLMs for grounded responses

Semantic Search: Replaces keyword-based search with intent-aware queries

This enables agents and bots to answer complex questions with dynamic, accurate information.
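A minimal retrieve-then-prompt sketch of RAG. To stay self-contained, it swaps dense embeddings for scikit-learn's sparse TF-IDF vectors, uses hypothetical documents, and deliberately leaves out the final LLM call; the point is that the model is asked to answer only from retrieved context:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import linear_kernel

# Hypothetical knowledge-base snippets (SOPs, manuals)
docs = [
    "Refunds are issued to the original payment method within 5 business days.",
    "To reset a router, hold the reset button for 10 seconds.",
    "Premium plans include 24/7 phone support.",
]

vectorizer = TfidfVectorizer()
doc_matrix = vectorizer.fit_transform(docs)


def retrieve(question, k=1):
    # TF-IDF rows are L2-normalized by default, so the linear kernel equals cosine similarity
    scores = linear_kernel(vectorizer.transform([question]), doc_matrix).ravel()
    top = scores.argsort()[::-1][:k]
    return [docs[i] for i in top]


def build_prompt(question, k=1):
    # Ground the generation step in the retrieved snippets
    context = "\n".join(f"- {d}" for d in retrieve(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt("How long do refunds take?")
# A real system would now send `prompt` to an LLM; that call is omitted here.
print(prompt)
```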

6. Customer Journey Analytics and Predictive Modeling

AI enables real-time customer journey mapping and predictive support.

Key ML Models:

Churn Prediction: Gradient Boosted Trees (XGBoost, LightGBM)

Propensity Modeling: Logistic regression and deep neural networks to predict upsell potential

Anomaly Detection: Autoencoders flag unusual user behavior or possible fraud
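A sketch of churn prediction with gradient-boosted trees. It uses scikit-learn's `GradientBoostingClassifier` in place of XGBoost or LightGBM (same model family, fewer dependencies) and synthetic data from `make_classification` in place of real customer features:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for customer features (tenure, call volume, complaints, ...);
# weights=[0.8, 0.2] makes churners the minority class
X, y = make_classification(
    n_samples=2000, n_features=8, n_informative=4, weights=[0.8, 0.2], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

model = GradientBoostingClassifier(
    n_estimators=200, learning_rate=0.05, max_depth=3, random_state=0
)
model.fit(X_train, y_train)

# Churn probability per customer, used to rank customers for proactive outreach
churn_prob = model.predict_proba(X_test)[:, 1]
auc = roc_auc_score(y_test, churn_prob)
top_risk = churn_prob.argsort()[::-1][:10]  # indices of the 10 highest-risk customers
print("ROC AUC:", round(auc, 3))
```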

Streaming Frameworks:

Apache Kafka / Flink / Spark Streaming for ingesting and processing customer signals (page views, clicks, call events) in real time

These insights are visualized through BI dashboards or fed back into orchestration engines to trigger proactive interventions.

7. Automation & RPA Integration

Routine post-call processes like updating CRMs, issuing refunds, or sending emails are handled via AI + RPA integration.

Tools:

UiPath, Automation Anywhere, Microsoft Power Automate

Workflows triggered via APIs or event listeners (e.g., on call disposition)

AI models can determine intent, then trigger the appropriate bot to complete the action in backend systems (ERP, CRM, databases)
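The intent-to-bot hand-off can be sketched as a dispatch table triggered on call disposition. The intents, handler functions, and confidence threshold are hypothetical placeholders. In practice, each handler would call an RPA platform (UiPath, Automation Anywhere, Power Automate) rather than return a string.

```python
from typing import Callable, Dict

# Hypothetical handlers standing in for RPA bots that act on backend systems.
def update_crm(payload: dict) -> str:
    return f"CRM updated for customer {payload['customer_id']}"

def issue_refund(payload: dict) -> str:
    return f"Refund of {payload['amount']:.2f} queued for customer {payload['customer_id']}"

def send_email(payload: dict) -> str:
    return f"Follow-up email sent to customer {payload['customer_id']}"

BOT_REGISTRY: Dict[str, Callable[[dict], str]] = {
    "update_details": update_crm,
    "refund_request": issue_refund,
    "follow_up": send_email,
}

def on_call_disposition(intent: str, confidence: float, payload: dict,
                        min_conf: float = 0.8) -> str:
    """Event listener: run the matching bot only when the intent model is confident."""
    handler = BOT_REGISTRY.get(intent)
    if handler is None or confidence < min_conf:
        return "Routed to human review"  # unknown intent or low confidence
    return handler(payload)

print(on_call_disposition("refund_request", 0.93, {"customer_id": "C-1001", "amount": 25.0}))
print(on_call_disposition("refund_request", 0.55, {"customer_id": "C-1001", "amount": 25.0}))
```

The confidence gate is a deliberate design choice. An automated refund is hard to undo, so uncertain predictions fall back to a person.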

8. Security, Compliance, and Ethical AI

As AI handles more sensitive data, contact centers embed security at multiple levels:

Voice biometrics for authentication (e.g., Nuance, Pindrop)

PII Redaction via entity recognition models

Audit Trails of AI decisions for compliance (especially in finance/healthcare)

Bias Monitoring Pipelines to detect model drift or demographic skew

Enterprise AI deployments are commonly shaped by security and privacy requirements such as ISO/IEC 27001 certification, SOC 2 audits, and the EU's GDPR.
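Entity-recognition models catch names and free-form details that fixed patterns miss. The redaction step itself, though, can be shown with a simpler technique swapped in for illustration: regular expressions for a few structured PII types. The patterns below are simplified assumptions, not production-grade validators.

```python
import re

# Simplified patterns (assumptions); real deployments pair these with an NER model.
# CARD runs before PHONE so long digit runs are not partially tagged as phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\+?\d{1,3}[ -]?\(?\d{3}\)?[ -]?\d{3}[ -]?\d{4}\b"),
}

def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Email jane.doe@example.com or call +1 555 123 4567. My card is 4111 1111 1111 1111."))
```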

Final Thoughts

AI in 2025 has moved far beyond simple automation. It now orchestrates entire contact center ecosystems—powering conversational agents, augmenting human reps, automating back-office workflows, and delivering predictive intelligence in real time.

The technical stack is increasingly cloud-native, model-driven, and infused with real-time analytics. For engineering teams, the focus is now on building scalable, secure, and ethical AI infrastructures that deliver measurable impact across customer satisfaction, cost savings, and employee productivity.

As AI models continue to advance, contact centers will evolve into fully adaptive systems, capable of learning, optimizing, and personalizing in real time. The revolution is already here—and it's deeply technical.

#AI-based contact center#conversational AI in contact centers#natural language processing (NLP)#virtual agents for customer service#real-time sentiment analysis#AI agent assist tools#speech-to-text AI#AI-powered chatbots#contact center automation#AI in customer support#omnichannel AI solutions#AI for customer experience#predictive analytics contact center#retrieval-augmented generation (RAG)#voice biometrics security#AI-powered knowledge base#machine learning contact center#robotic process automation (RPA)#AI customer journey analytics


Text

Deep Learning and Its Programming Applications

Deep learning is a transformative technology in the field of artificial intelligence. It mimics the human brain's neural networks to process data and make intelligent decisions. From voice assistants and facial recognition to autonomous vehicles and medical diagnostics, deep learning is powering the future.

What is Deep Learning?

Deep learning is a subset of machine learning that uses multi-layered artificial neural networks to model complex patterns and relationships in data. Unlike traditional algorithms, deep learning systems can automatically learn features from raw data without manual feature engineering.

How Does It Work?

Deep learning models are built using layers of neurons, including:

Input Layer: Receives raw data

Hidden Layers: Perform computations and extract features

Output Layer: Produces predictions or classifications

These models are trained using backpropagation and optimization algorithms like gradient descent.
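Here is a minimal NumPy sketch of that training loop. It assumes a toy XOR problem, one hidden layer of sigmoid units, and mean-squared-error loss. The forward pass computes predictions layer by layer. Backpropagation then applies the chain rule to get each weight's gradient, and gradient descent steps against those gradients. Libraries such as TensorFlow and PyTorch compute the backward pass automatically.

```python
import numpy as np

rng = np.random.default_rng(0)

# XOR is not linearly separable, so the hidden layer is required.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Input layer (2) -> hidden layer (8) -> output layer (1)
W1, b1 = rng.normal(size=(2, 8)), np.zeros((1, 8))
W2, b2 = rng.normal(size=(8, 1)), np.zeros((1, 1))
lr = 1.0
losses = []

for _ in range(5000):
    # Forward pass
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    losses.append(float(np.mean((out - y) ** 2)))

    # Backward pass: chain rule through the MSE loss and both sigmoid layers
    d_out = 2 * (out - y) / len(X) * out * (1 - out)
    d_h = (d_out @ W2.T) * h * (1 - h)

    # Gradient-descent updates
    W2 -= lr * h.T @ d_out
    b2 -= lr * d_out.sum(axis=0, keepdims=True)
    W1 -= lr * X.T @ d_h
    b1 -= lr * d_h.sum(axis=0, keepdims=True)

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print(np.round(out.ravel(), 2))
```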

Popular Deep Learning Libraries

TensorFlow: Developed by Google, it's powerful and widely used.

Keras: A high-level API for building and training neural networks easily.

PyTorch: Preferred for research and flexibility, developed by Facebook.

MXNet, CNTK, and Theano: Earlier frameworks that are no longer actively developed; you will mostly encounter them in older codebases.

Common Applications of Deep Learning

Computer Vision: Image classification, object detection, facial recognition

Natural Language Processing (NLP): Chatbots, translation, sentiment analysis

Speech Recognition: Voice assistants like Siri, Alexa

Autonomous Vehicles: Environment understanding, path prediction

Healthcare: Disease detection, drug discovery

Sample Python Code Using Keras

Here’s how you can build a simple neural network to classify digits using the MNIST dataset:

```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Dense, Flatten
from tensorflow.keras.utils import to_categorical

# Load data: 60,000 training and 10,000 test images of 28x28 grayscale digits
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize pixel values from 0-255 to 0-1
x_train, x_test = x_train / 255.0, x_test / 255.0

# Convert integer labels (0-9) to one-hot vectors for categorical cross-entropy
y_train = to_categorical(y_train, 10)
y_test = to_categorical(y_test, 10)

# Build model: flatten each image, one hidden layer, softmax over 10 classes
model = Sequential([
    Input(shape=(28, 28)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(10, activation='softmax'),
])

# Compile and train, holding out 10% of the training data for validation
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(x_train, y_train, epochs=5, validation_split=0.1)

# Evaluate once on the untouched test set
test_loss, test_acc = model.evaluate(x_test, y_test)
print(f"Test accuracy: {test_acc:.4f}")
```

Key Concepts to Learn

Neural network architectures (CNN, RNN, GAN, etc.)

Activation functions (ReLU, Sigmoid, Softmax)

Loss functions and optimizers

Regularization (Dropout, L2)

Hyperparameter tuning
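The three activation functions listed above are short enough to write out directly. Keras and PyTorch ship their own implementations; this NumPy sketch only shows what they compute. Subtracting the maximum inside softmax is a standard numerical-stability trick and does not change the result.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtract the max so exp() cannot overflow; outputs sum to 1 along the last axis.
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))       # [0. 0. 3.]
print(sigmoid(0.0))  # 0.5
print(softmax(z))
```

ReLU is the usual choice for hidden layers. Sigmoid maps a single score to a probability. Softmax turns a vector of scores into a probability distribution over classes, which is why it appears in the output layer of the MNIST example.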

Challenges in Deep Learning

Requires large datasets and high computational power

Training time can be long

Models can be difficult to interpret (black-box)

Overfitting on small datasets

Conclusion

Deep learning is a rapidly evolving field that opens doors to intelligent and automated systems. With powerful tools and accessible libraries, developers can build state-of-the-art models to solve real-world problems. Whether you’re a beginner or an expert, deep learning has something incredible to offer you!


Text

Homework 7 DSCI 552

1. Multi-class and Multi-Label Classification Using Support Vector Machines

(a) Download the Anuran Calls (MFCCs) Data Set from: https://archive.ics.uci.edu/ml/datasets/Anuran+Calls+%28MFCCs%29. Choose 70% of the data randomly as the training set.

(b) Each instance has three labels: Families, Genus, and Species. Each of the labels has multiple classes. We wish to solve a multi-class and…


Text

How AI and Machine Vision Are Revolutionizing Bottle Inspection Machines

Introduction

The demand for high-quality bottled products in industries such as beverages, pharmaceuticals, cosmetics, and chemicals continues to rise. To meet strict quality control standards, manufacturers rely on bottle inspection machines to detect defects, ensure proper labeling, and maintain product integrity. Traditional inspection methods, while effective, often struggle to keep up with high-speed production lines and complex defect detection requirements.

With the advent of Artificial Intelligence (AI) and Machine Vision, bottle inspection technology has undergone a revolutionary transformation. These advancements enhance accuracy, speed, and adaptability, reducing human intervention and improving overall production efficiency.

1. AI-Driven Defect Detection and Classification

Traditional bottle inspection machines rely on predefined rules and static algorithms, which can be limited in detecting complex or subtle defects. AI-powered systems leverage deep learning and neural networks to:

Identify a wide range of defects, including cracks, scratches, deformities, and contaminants.

Learn and improve over time, adapting to new defect patterns without requiring extensive reprogramming.

Minimize false positives and false negatives, ensuring more precise inspection results.

2. Machine Vision for High-Precision Inspection

Machine vision technology uses high-resolution cameras, sensors, and advanced imaging algorithms to inspect bottles at ultra-fast speeds. Key benefits include:

360-degree bottle scanning, ensuring complete coverage of surface defects and labels.

Real-time analysis enables immediate rejection of defective products without disrupting the production flow.

Multi-spectral imaging can detect imperfections invisible to the human eye, such as internal cracks or microscopic contaminants.

3. Automated Cap and Seal Integrity Verification

Proper capping and sealing are crucial in preventing leakage, contamination, and product spoilage. AI-powered inspection machines can:

Analyze cap alignment and torque to ensure proper sealing.

Detect missing or misapplied tamper-proof bands.

Assess pressure seals using non-invasive techniques to maintain product integrity.

4. Fill Level and Volume Detection with AI Precision

Accurate fill levels ensure compliance with industry regulations and consumer expectations. AI-based inspection systems use:

Advanced image processing algorithms to measure fill levels with high precision.

Infrared and X-ray technology to assess liquid volume in opaque bottles.

Predictive analytics helps manufacturers adjust filling processes in real time to prevent waste and inconsistencies.
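One simple image-processing approach to fill-level measurement is assumed here for illustration; it is not taken from any specific machine. In a backlit grayscale image, the liquid appears darker than the empty headspace. The fill line is therefore the row where average brightness drops most sharply. The synthetic image, target row, and tolerance are hypothetical.

```python
import numpy as np

def detect_fill_row(gray):
    """Return the row index where mean brightness drops most sharply (top to bottom)."""
    profile = gray.mean(axis=1)          # average brightness of each row
    gradient = np.diff(profile)          # change from row i to row i + 1
    return int(np.argmin(gradient)) + 1  # first row of the darker (liquid) region

def check_fill(gray, target_row, tolerance_rows=3):
    """Compare the detected fill line with the expected one, within a tolerance."""
    row = detect_fill_row(gray)
    return row, abs(row - target_row) <= tolerance_rows

# Synthetic 100x40 backlit image: bright headspace above row 30, darker liquid below.
rng = np.random.default_rng(1)
image = np.full((100, 40), 200.0)
image[30:, :] = 80.0
image += rng.normal(0, 5, size=image.shape)  # simulated sensor noise

row, ok = check_fill(image, target_row=32)
print(row, ok)
```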

5. Smart Label and Barcode Verification

Accurate labeling is essential for brand consistency and regulatory compliance. AI and machine vision enhance label inspection by:

Reading and verifying text, barcodes, and QR codes with optical character recognition (OCR).

Detecting misaligned, wrinkled, or missing labels.

Comparing label colors and designs to ensure consistency across production batches.
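Barcode reading is usually paired with a check-digit test so that misreads get rejected. For EAN-13 barcodes, the rule comes from the GS1 standard. The first 12 digits are weighted alternately 1 and 3, and the check digit brings the weighted sum up to a multiple of 10. This sketch assumes the decoder has already produced the digit string.

```python
def ean13_is_valid(code: str) -> bool:
    """Validate a 13-digit EAN string using its check digit."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # Odd positions (index 0, 2, ...) weigh 1; even positions (index 1, 3, ...) weigh 3.
    weighted = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    check = (10 - weighted % 10) % 10
    return check == digits[12]

print(ean13_is_valid("4006381333931"))  # True
print(ean13_is_valid("4006381333932"))  # False
```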

6. AI-Powered Contaminant Detection

Contamination in bottled products poses serious health risks and can lead to product recalls. AI-enhanced bottle inspection systems use:

X-ray and infrared imaging to detect foreign particles inside bottles.

AI-driven anomaly detection to differentiate between harmless variations and actual contaminants.

Real-time contamination alerts allow manufacturers to take immediate corrective actions.

7. Integration with IoT and Smart Manufacturing

AI-driven bottle inspection machines are increasingly integrated with Industry 4.0 technologies, enabling:

Cloud-based data storage and remote monitoring provide real-time insights into production quality.

Predictive maintenance, where AI identifies potential machine failures before they occur, reduces downtime.

Seamless communication with robotic automation systems, optimizing sorting and rejection processes.

8. Enhanced Speed and Scalability

Traditional inspection methods often struggle to keep pace with high-speed production lines. AI-powered machines can:

Process thousands of bottles per minute with minimal error rates.

Adapt to different bottle shapes and sizes without requiring extensive reconfiguration.

Scale with growing production demands, ensuring long-term investment value.

9. Reduction in Manual Labor and Costs

AI and machine vision drastically reduce the need for human inspectors, leading to:

Lower operational costs by minimizing labor-intensive quality control processes.

Increased consistency and reliability, as AI-driven machines do not suffer from fatigue or lapses in attention the way human inspectors do.

Faster decision-making, as AI can analyze and classify defects in real time.

10. Regulatory Compliance and Quality Assurance

Manufacturers in the food, beverage, and pharmaceutical industries must comply with stringent safety and quality regulations. AI-based bottle inspection systems ensure compliance by:

Automatically generating compliance reports and audit trails.

Identifying deviations from regulatory standards in real time.

Providing actionable insights for continuous quality improvement.

Conclusion

The integration of AI and machine vision into bottle inspection machines has revolutionized quality control in manufacturing. These technologies enable faster, more accurate, and adaptive defect detection, ensuring that only the highest-quality products reach consumers. By leveraging AI-driven automation, manufacturers can enhance efficiency, reduce waste, and meet stringent industry standards—ultimately securing a competitive edge in an increasingly demanding market.


Text

AI Solutions for Hospitality Booking Request Management - Centelli

Personalised service is the key to creating exceptional guest experiences. Managing and analysing customer booking requests efficiently has become crucial for hotels and service providers aiming to stay ahead in a competitive market. Cutting-edge Artificial Intelligence (AI) solutions are at the heart of this transformation, designed to extract critical information from booking requests seamlessly and accurately.

Introducing an Advanced AI Solution for Booking Requests

Our AI-driven model is a game-changer, engineered to effortlessly extract vital details from customer booking requests. By utilising advanced natural language processing (NLP) techniques, this AI solution is capable of identifying and categorising complex booking information, including:

Payment Methods

Meal Preferences

Parking Requests

Service Summaries

Behind the Scenes: How AI Solutions Work

The AI-powered system integrates an end-to-end pipeline leveraging state-of-the-art NLP techniques, including:

Multi-label Classification: Each booking request is analysed to assign multiple relevant categories, enabling a comprehensive understanding of guest needs.

Text Summarisation: The system distils unstructured booking data into concise, actionable summaries, saving hotel staff time and effort.
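Multi-label classification differs from ordinary multi-class classification in one key way. Each category gets its own independent probability (one sigmoid per label rather than a single softmax across labels), and every label above a threshold is kept. This sketch assumes a model has already produced one raw score (logit) per category. The scores and the 0.5 threshold are hypothetical.

```python
import math

LABELS = ["Payment Methods", "Meal Preferences", "Parking Requests"]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def predict_labels(logits, threshold=0.5):
    """Return every label whose independent sigmoid probability clears the threshold."""
    probs = [sigmoid(z) for z in logits]
    return [label for label, p in zip(LABELS, probs) if p >= threshold]

# Hypothetical logits for "I'll pay by card, need a vegan breakfast and a parking spot."
print(predict_labels([2.1, 1.4, 0.9]))
# Hypothetical logits for a request that only mentions parking.
print(predict_labels([-1.8, -2.5, 1.7]))
```

Because the probabilities are independent, one request can receive all three categories, or none.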

The Future of Hospitality Technology

As AI continues to evolve, its applications in the hospitality sector are set to expand. The potential is limitless, from automating routine tasks to delivering hyper-personalised experiences. Integrating smart hotel booking systems, AI solutions, and machine learning in hospitality is poised to revolutionise how hotels interact with their guests. Our AI-powered solution for booking requests is just the beginning of a broader movement towards smarter, more efficient hospitality operations.

By integrating AI solutions into the booking process, hotels and service providers can transform how they manage guest requests, ensuring accuracy, efficiency, and superior customer service. With proven results and cutting-edge technology, our AI solutions empower businesses to stay competitive and exceed guest expectations.

Ready to elevate your hospitality operations with AI solutions? Contact us today to learn how our AI solutions can revolutionise your booking management process!


#Automation Solutions#Intelligent Automation#Hospitality Automation#Business Automation#Dubai#centelli#AI Solutions


Text

How to Clean and Label Your Image Classification Dataset for Better Accuracy

Introduction

In the realm of machine learning, the caliber of your dataset is pivotal to the performance of your model. In image classification specifically, a well-organized and accurately labeled dataset is essential for attaining high accuracy. Disorganized or incorrectly labeled data can mislead your model, resulting in erroneous predictions and diminished overall effectiveness. This blog will guide you through the critical steps necessary to effectively clean and label your image classification dataset, ensuring that your model is trained on high-quality data to achieve optimal results.

The Importance of Cleaning and Labeling

Before we delve into the steps, it is important to recognize the significance of cleaning and labeling:

Enhanced Model Accuracy – Clean and precise data enables the model to identify the correct patterns, thereby improving classification accuracy.

Minimized Overfitting – Eliminating noise and irrelevant data helps prevent the model from memorizing patterns that do not generalize well to unseen data.

Accelerated Training Speed – A well-structured dataset facilitates quicker learning for the model, leading to lower computational expenses.

Increased Interpretability – When the input data for the model is clear and consistent, it becomes easier to debug and enhance.

Step 1: Gather High-Quality Images

The initial step in constructing an effective dataset is to ensure that the images you gather are of high quality and pertinent to the classification task.

Suggestions for Improved Image Collection:

Ensure uniformity in image format (e.g., JPEG or PNG).

Steer clear of low-resolution images or those that have undergone excessive compression.

Ensure the dataset encompasses a variety of angles, lighting conditions, and backgrounds to enhance generalization.

Step 2: Refine Your Dataset

After collecting the images, the subsequent step is to refine them by eliminating duplicates, blurry images, and any irrelevant content.

Cleaning Techniques:

Eliminate Duplicates:

Employ hashing or similarity-based algorithms to detect and remove duplicate images.
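Here is a minimal sketch of similarity-based de-duplication using an average hash (aHash). Each image is shrunk to an 8x8 grid, and each cell is marked by whether it is brighter than the grid's mean. Images whose 64-bit hashes differ in only a few bits are treated as near-duplicates. The sketch works on NumPy arrays to stay self-contained. The block-averaging resize and the 5-bit threshold are simplifying assumptions. Exact byte-for-byte duplicates can also be caught by hashing the raw files (for example, with Python's hashlib).

```python
import numpy as np

def average_hash(gray, size=8):
    """Downsample by block-averaging to size x size, then threshold at the mean."""
    h, w = gray.shape
    gray = gray[: h - h % size, : w - w % size]  # crop so blocks divide evenly
    blocks = gray.reshape(size, gray.shape[0] // size, size, gray.shape[1] // size)
    small = blocks.mean(axis=(1, 3))
    return (small > small.mean()).flatten()

def hamming(a, b):
    return int(np.count_nonzero(a != b))

def find_near_duplicates(images, max_distance=5):
    """Return index pairs whose hashes differ in at most `max_distance` bits."""
    hashes = [average_hash(img) for img in images]
    return [(i, j)
            for i in range(len(hashes))
            for j in range(i + 1, len(hashes))
            if hamming(hashes[i], hashes[j]) <= max_distance]

rng = np.random.default_rng(7)
original = rng.uniform(0, 255, size=(64, 64))
slightly_darker = original * 0.9 + 10       # brightness-adjusted copy (near-duplicate)
unrelated = rng.uniform(0, 255, size=(64, 64))
print(find_near_duplicates([original, slightly_darker, unrelated]))
```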

Remove Low-Quality or Corrupted Images:

Identify corrupted files or images with incomplete data. Utilize automated tools such as OpenCV to discard images that are blurry or have low contrast.
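A common blur check is the variance of the Laplacian. Sharp images have strong edges and therefore a high-variance Laplacian response, while blurry images score low. In OpenCV this is usually written as `cv2.Laplacian(gray, cv2.CV_64F).var()`. The NumPy version below avoids the dependency. The threshold of 100 is an assumption that needs tuning per dataset, and the test images are synthetic.

```python
import numpy as np

def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the valid (unpadded) region."""
    g = gray.astype(float)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4 * g[1:-1, 1:-1]
    return float(lap.var())

def box_blur(gray, k=5):
    """Simple k x k mean filter, used here only to simulate an out-of-focus photo."""
    g = gray.astype(float)
    out = np.zeros((g.shape[0] - k + 1, g.shape[1] - k + 1))
    for dy in range(k):
        for dx in range(k):
            out += g[dy:dy + out.shape[0], dx:dx + out.shape[1]]
    return out / (k * k)

def is_blurry(gray, threshold=100.0):
    return laplacian_variance(gray) < threshold

rng = np.random.default_rng(3)
sharp = np.kron(rng.integers(0, 2, size=(16, 16)) * 255, np.ones((8, 8)))  # crisp blocks
blurry = box_blur(sharp, k=21)
print(is_blurry(sharp), is_blurry(blurry))
```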

Resize and Standardize:

Adjust all images to a uniform size (e.g., 224x224 pixels) to maintain consistency during training. Normalize pixel values to a standard range (e.g., 0–1 or -1 to 1).

Data Augmentation:

Enhance variability by rotating, flipping, and cropping images to strengthen the model's resilience to different variations.
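Flips, rotations, and crops can be sketched with plain NumPy array operations. This assumes images are stored as height x width x channels arrays. The 50% flip probability and the 90% crop size are arbitrary illustrative choices.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped, rotated, and cropped copy of an H x W x C image."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                    # horizontal flip
    out = np.rot90(out, k=rng.integers(0, 4))   # rotate by 0, 90, 180, or 270 degrees
    h, w = out.shape[:2]
    ch, cw = int(h * 0.9), int(w * 0.9)         # keep 90% of each side
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    return out[top:top + ch, left:left + cw]

rng = np.random.default_rng(0)
image = np.zeros((224, 224, 3), dtype=np.uint8)
sample = augment(image, rng)
print(sample.shape)  # (201, 201, 3)
```

Augmentation is normally applied only to the training set. Skip any transform that changes what a label means, such as rotating a handwritten 6 into a 9.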

Step 3: Accurately Label Your Dataset

Precise labeling is essential for the success of any image classification model. Incorrect labels can mislead the model and lead to diminished accuracy.

Best Practices for Labeling:

Implement Consistent Labeling Guidelines:

Establish a fixed set of categories and adhere to them consistently. Avoid vague labels—be specific.

Automate When Feasible:

Utilize AI-assisted labeling tools to expedite the process. Annotation tools such as LabelImg (manual) and CVAT (which also supports model-assisted, semi-automatic annotation) help keep labels consistent.

Incorporate Human Oversight:

Combine AI labeling with human verification to achieve greater accuracy. Engage domain experts to review and validate labels, thereby minimizing errors.

Employ Multi-Class and Multi-Label Approaches:

For images that may belong to multiple classes, utilize multi-label classification. Hierarchical labeling can assist in organizing complex datasets.
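For multi-label classification, each image's labels are typically stored as a multi-hot vector. There is one position per class, set to 1 for every class present. Here is a minimal sketch with a hypothetical four-class label set. Raising an error on unknown names is one way to enforce the fixed category list recommended above.

```python
import numpy as np

CLASSES = ["beach", "dog", "person", "sunset"]  # hypothetical label set

def multi_hot(labels, classes=CLASSES):
    """Encode a list of label names as a 0/1 vector, one position per class."""
    index = {name: i for i, name in enumerate(classes)}
    vec = np.zeros(len(classes), dtype=np.float32)
    for name in labels:
        vec[index[name]] = 1.0  # raises KeyError for labels outside the agreed set
    return vec

print(multi_hot(["dog", "beach"]))  # [1. 1. 0. 0.]
```

A model trained on such targets usually ends in one sigmoid unit per class with binary cross-entropy loss, rather than a softmax. In Keras, that means `Dense(len(CLASSES), activation="sigmoid")` with `loss="binary_crossentropy"`.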

Step 4: Strategically Split Your Dataset

After cleaning and labeling the data, the next step is to divide the dataset for training and evaluation. A widely accepted strategy is:

70% Training Set – Utilized for model training.

15% Validation Set – Used for tuning hyperparameters and preventing overfitting.

15% Test Set – Reserved for the final evaluation of the model.

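Shuffle the data before splitting so each subset is representative. Also make sure no image, or near-duplicate of one, appears in more than one subset; that would leak information and inflate evaluation scores.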
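A 70/15/15 split can be sketched by shuffling indices once and slicing them. The seed is arbitrary. For imbalanced classes, a stratified split (shuffling within each class) keeps class proportions equal across the subsets; this sketch does not do that.

```python
import numpy as np

def split_indices(n, train=0.70, val=0.15, seed=42):
    """Shuffle indices 0..n-1 once, then cut them into train/val/test slices."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_train = round(n * train)
    n_val = round(n * val)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 150 150
```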

Step 5: Monitor and Enhance

Your dataset is dynamic and will require regular updates and refinements.

Tips for Continuous Improvement:

Regularly incorporate new images to ensure the dataset remains current.

Assess model performance and pinpoint any misclassified instances.

Modify labeling or enhance data if you observe recurring misclassification trends.

Concluding Remarks

The process of cleaning and labeling your image classification dataset is not a one-off task; it demands ongoing attention and strategic revisions. A meticulously organized dataset contributes to improved model performance, expedited training, and enhanced accuracy in predictions. If you require assistance with your image classification project, explore the image classification services from Globose Technology Solutions to see how we can support you in developing high-performance AI models!


Text

Data Science vs. Machine Learning vs. Artificial Intelligence: What Is the Difference?

Technology advancement has popularized many buzzwords, and Data Science, Machine Learning (ML), and Artificial Intelligence (AI) are three of the most commonly confused terms. Although these domains overlap considerably, they play distinct roles in technology and business. Understanding the distinctions is essential for professionals, businesses, and students pursuing a career in data-driven decision-making.

What is Data Science?

Definition

Data Science is a multidisciplinary field that integrates statistics, mathematics, programming, and domain expertise to extract useful information from data. It centers on data gathering, cleaning, analysis, visualization, and interpretation to support decision-making.

Key Components of Data Science

1. Data Collection – Extracting raw data from multiple sources, including databases, APIs, and web scraping.

2. Data Cleaning – Handling inconsistencies, missing values, and outliers to prepare data for analysis.

3. Exploratory Data Analysis (EDA) – Understanding data trends, distributions, and patterns using visualizations.

4. Statistical Analysis – Using probability and statistical techniques to draw conclusions from data.

5. Machine Learning Models – Using algorithms to make predictions and automate insights.

6. Data Visualization – Creating graphs and charts to present findings clearly.

Real-World Applications of Data Science

Business Analytics – Firms use data science to understand customer behavior and optimize marketing efforts.

Healthcare – Data science supports disease prediction and drug development.

Finance – Banks use data science for fraud detection and risk evaluation.

E-commerce – Businesses analyze customer ratings and product interest to improve recommendations.

What is Machine Learning?

Definition

Machine Learning (ML) is a branch of artificial intelligence that deals with creating algorithms that enable computers to learn from data and improve over time without being explicitly programmed. It makes it possible for systems to make predictions, classifications, and decisions based on patterns in data.

Key Elements of Machine Learning

1. Supervised Learning – The model is trained on labeled data (e.g., spam filtering, credit scoring).

2. Unsupervised Learning – The model learns patterns from unlabeled data (e.g., customer segmentation, anomaly detection).

3. Reinforcement Learning – The model learns through rewards and penalties to maximize performance (e.g., self-driving cars, robotics).

4. Feature Engineering – Selecting and transforming variables to improve model accuracy.

5. Model Evaluation – Measuring model performance with metrics such as accuracy, precision, and recall.
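The evaluation metrics named in item 5 follow directly from counting true positives, false positives, and false negatives. Here is a plain-Python sketch on made-up spam-filter predictions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary task, from confusion counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged items, how many were spam
    recall = tp / (tp + fn) if tp + fn else 0.0     # of spam items, how many were flagged
    return accuracy, precision, recall

# Hypothetical spam-filter results: 1 = spam, 0 = not spam.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print(classification_metrics(y_true, y_pred))  # (0.625, 0.6, 0.75)
```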

Real-World Applications of Machine Learning

Chatbots & Virtual Assistants – Siri, Alexa, and Google Assistant learn user preferences to enhance responses.

Fraud Detection – Banks utilize ML models to identify suspicious transactions.

Recommendation Systems – Netflix, YouTube, and Spotify utilize ML to recommend content based on user behavior.

Medical Diagnosis – Machine learning assists in identifying diseases from X-rays and MRIs.

What is Artificial Intelligence?

Definition

Artificial Intelligence (AI) is a broader term that focuses on building machines with the ability to emulate human intelligence. It encompasses rule-based systems, robots, and machine learning algorithms that accomplish tasks usually requiring human intelligence.

Types of Artificial Intelligence

1. Narrow AI (Weak AI) – Built for particular functions (e.g., facial recognition, search engines).

2. General AI (Strong AI) – Aims to perform any intellectual task that a human can (not yet fully achieved).

3. Super AI – A hypothetical stage in which machines are more intelligent than humans.

Important Elements of AI

Natural Language Processing (NLP) – AI's capacity to process and generate human language (e.g., chatbots, voice assistants).

Computer Vision – The capability of machines to interpret and process images and videos (e.g., facial recognition).

Expert Systems – Rule-based software that mimics the decision-making of human experts.

Neural Networks – A family of machine learning models loosely inspired by the human brain; they are the foundation of deep learning.

Real-World Applications of AI

Self-Driving Cars – AI enables autonomous navigation and decision-making.

Smart Assistants – Google Assistant, Alexa, and Siri process voice commands using AI.

Healthcare Innovations – AI helps in robotic surgeries and personalized medicine.

Cybersecurity – AI detects cyber threats and prevents cyberattacks.

Data Science vs. Machine Learning vs. AI: Main Differences

How These Disciplines Interact

AI is the broadest discipline, and machine learning is one part of it.

Machine learning is an approach applied in AI to create self-improving models.

Data science uses machine learning for business intelligence and predictive analytics.

For instance, in a Netflix recommendation engine:

Data Science gathers and examines user activity.

Machine Learning forecasts what content a user will enjoy based on past choices.

Artificial Intelligence personalizes the recommendations through deep learning and NLP.

Conclusion

Data Science, Machine Learning, and Artificial Intelligence are related but have different applications and purposes. Data science is all about data analysis and visualization to derive insights, machine learning is about constructing models that can learn from data, and AI is concerned with developing machines that can emulate human intelligence.

Knowing these distinctions will help professionals choose the right career path and help companies apply these technologies to their advantage.

Whether you aspire to a career in data analytics, want to build AI-based applications, or plan to work on machine learning models, each of these fields offers promising opportunities in today's data-centric age.


Text

AI Agent Development: A Comprehensive Guide to Building Intelligent Autonomous Systems

Artificial Intelligence (AI) has revolutionized the way businesses and individuals interact with technology. One of the most exciting advancements in AI is the development of AI agents—intelligent, autonomous systems capable of making decisions, learning from data, and executing tasks with minimal human intervention.

Whether you're a developer, entrepreneur, or AI enthusiast, understanding how to build AI agents is a valuable skill. This guide will cover the fundamental concepts, key technologies, and step-by-step processes involved in AI agent development.

What is an AI Agent?

An AI agent is a software entity that perceives its environment, processes information, and takes actions to achieve specific goals. These agents range from simple rule-based bots to advanced deep learning-driven systems capable of complex reasoning.

Types of AI Agents

Reactive Agents – Respond to stimuli without memory (e.g., chatbots with predefined responses).

Model-Based Agents – Maintain an internal model of the world, updated from past percepts, so they can act well when the environment is only partly observable.

Goal-Based Agents – Plan actions to achieve long-term objectives.

Utility-Based Agents – Optimize decisions based on utility functions.

Learning Agents – Continuously improve through machine learning.
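The gap between the simplest and a more deliberate agent type can be shown in a few lines. The thermostat rules, route options, outcome probabilities, and utility values are all hypothetical. A reactive agent maps the current percept straight to an action. A utility-based agent instead weighs possible outcomes and picks the action with the highest expected utility.

```python
# Reactive agent: percept -> action, with no memory or lookahead.
def reactive_thermostat(temperature_c: float) -> str:
    if temperature_c < 19.0:
        return "heat"
    if temperature_c > 24.0:
        return "cool"
    return "idle"

# Utility-based agent: choose the action with the highest expected utility.
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)

def choose_action(action_outcomes):
    return max(action_outcomes, key=lambda a: expected_utility(action_outcomes[a]))

# Hypothetical delivery-route choice: (probability, utility) of each outcome.
routes = {
    "highway": [(0.7, 10.0), (0.3, -5.0)],  # usually fast, but jams are costly
    "side_streets": [(1.0, 5.0)],           # slower but predictable
}
print(reactive_thermostat(17.5))  # heat
print(choose_action(routes))
```

Here the highway wins with an expected utility of 5.5 against 5.0. If jams became more likely, the same agent would switch routes without any rule being rewritten.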

Key Technologies in AI Agent Development

To build intelligent AI agents, developers use a combination of machine learning, natural language processing (NLP), reinforcement learning, and neural networks. Here are some essential technologies:

Machine Learning (ML): Enables AI agents to learn from data and improve decision-making.

Natural Language Processing (NLP): Helps AI agents understand and generate human language.

Computer Vision: Allows AI to interpret and process visual information.

Reinforcement Learning (RL): Helps agents learn optimal actions through rewards and penalties.

Multi-Agent Systems (MAS): Enables collaboration between multiple AI agents.

Popular AI frameworks and tools include TensorFlow, PyTorch, OpenAI Gym (now maintained as Gymnasium), LangChain, and Rasa.

How to Build an AI Agent: Step-by-Step Guide

1. Define the Purpose of Your AI Agent

What problem will the agent solve?

Who are the end users?

What type of AI agent is needed (reactive, goal-based, etc.)?

2. Collect and Prepare Data

Gather structured and unstructured data relevant to your AI agent's tasks.

Preprocess data (cleaning, labeling, feature extraction).

3. Choose the Right AI Model

Supervised Learning (classification, regression).

Unsupervised Learning (clustering, anomaly detection).

Reinforcement Learning (decision-making in dynamic environments).

4. Train and Optimize the AI Model

Use ML frameworks (TensorFlow, PyTorch) to train models.

Optimize hyperparameters for better accuracy and efficiency.

5. Integrate the AI Agent with a System

Deploy the trained model into an application, chatbot, or automation tool.

Use APIs to connect the AI agent with external systems.

6. Test and Improve

Perform rigorous testing to ensure accuracy, efficiency, and reliability.

Collect user feedback to refine the AI agent.

7. Deploy and Monitor Performance

Deploy the AI agent in a real-world setting.

Continuously monitor and update the model to adapt to new data.

Challenges in AI Agent Development

Developing AI agents comes with its set of challenges:

Data Quality Issues: Poor data can lead to biased or inaccurate models.

Computational Power: Training deep learning models requires significant resources.

Ethical Concerns: AI bias, transparency, and accountability need careful consideration.

Security Risks: AI systems can be vulnerable to adversarial attacks.

Future of AI Agent Development

AI agents are becoming increasingly sophisticated, paving the way for:

Fully autonomous AI assistants capable of complex problem-solving.

AI-driven customer service agents that handle entire workflows.

AI-powered robots in industries like healthcare, logistics, and manufacturing.

Decentralized AI agents working in blockchain and Web3 environments.

As AI technology advances, AI agents will become more intelligent, adaptable, and human-like, driving innovation across industries.

Conclusion

AI agent development is at the forefront of the AI revolution, enabling businesses to create autonomous, intelligent systems that enhance productivity and decision-making. By leveraging the right technologies, frameworks, and best practices, developers can build AI agents that transform industries and improve user experiences.


Photo

Logistic Regression is one of the fundamental classification algorithms in machine learning. Despite its name, it is used for classification rather than regression tasks. It predicts the probability of an instance belonging to a particular category, making it widely used in various applications such as spam detection, disease diagnosis, and credit risk assessment.

Logistic Regression is a powerful classification algorithm used for binary and multi-class problems.

It works by modeling the probability of a class label using the sigmoid function.

Widely used in real-world applications like spam detection, disease diagnosis, and fraud detection.

While simple and efficient, it learns a linear decision boundary, so non-linear relationships require engineered features (e.g., polynomial or interaction terms) or a different model.

(via Logistic Regression in Machine Learning)
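Here is a minimal NumPy sketch of binary logistic regression, trained by batch gradient descent on the log loss. The data, learning rate, and epoch count are illustrative assumptions; libraries such as scikit-learn provide tuned implementations. The sketch shows the two ideas above. A linear score is passed through the sigmoid to get a probability, and a 0.5 threshold turns that probability into a class.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    return np.hstack([np.ones((len(X), 1)), X])  # prepend a column of ones for the intercept

def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the mean binary cross-entropy (log loss)."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = sigmoid(Xb @ w)                # predicted P(y = 1 | x)
        grad = Xb.T @ (p - y) / len(y)     # gradient of the mean log loss
        w -= lr * grad
    return w

def predict_proba(w, X):
    return sigmoid(add_bias(X) @ w)

# Synthetic, roughly linearly separable data (hypothetical).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, size=(50, 2)), rng.normal(2, 1, size=(50, 2))])
y = np.concatenate([np.zeros(50), np.ones(50)])

w = fit_logistic_regression(X, y)
accuracy = np.mean((predict_proba(w, X) >= 0.5) == y)
print(f"training accuracy: {accuracy:.2f}")
```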


Text

Data Visualization Techniques for Research Papers: A PhD Student’s Guide to Making Data Speak 📊📡

So, you’ve got mountains of data—numbers, statistics, relationships, and trends—but now comes the real challenge: how do you make your research understandable, compelling, and impactful?

Choosing the wrong visualization can distort findings, mislead readers, or, worse, get your paper rejected! So let's dive into the best data visualization techniques for research papers and how you, as a PhD student, can use them effectively.

1. Why Data Visualization Matters in Research 📢

A well-designed visualization can:

✔ Simplify complex information – Because nobody wants to decipher raw numbers in a table.

✔ Enhance reader engagement – A compelling graph draws attention instantly.

✔ Highlight patterns and relationships – Trends and outliers pop out visually.

✔ Improve clarity for reviewers and audiences – Clear figures = stronger impact = better chances of acceptance!

🚀 Pro Tip: Journals love high-quality, well-labeled figures. If your visualizations are messy, unclear, or misleading, expect reviewer pushback.

2. Choosing the Right Chart for Your Data 📊

Different types of data require different types of visualizations. Here’s a quick guide to choosing the right chart based on your research data type.

A. Comparing Data? Use Bar or Column Charts 📊

If your research compares multiple categories (e.g., experimental vs. control groups or survey responses), bar charts work best.

✔ Vertical Bar Charts: Great for categorical data (e.g., “Number of Published Papers per Year”).

✔ Horizontal Bar Charts: Ideal when comparing long category names (e.g., “Funding Received by Research Institutions”).

🚀 Tool Tip: Use Seaborn, Matplotlib (Python), ggplot2 (R), or Excel to create polished bar charts.
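As a sketch of the horizontal bar chart in Matplotlib, the helper below puts long category names on the y-axis, labels the axes, and drops frame lines that carry no data. The function name `plot_horizontal_bars`, the colour, and the 300 dpi setting are assumptions for illustration. Check your journal's figure requirements.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen so the script runs without a display
import matplotlib.pyplot as plt

def plot_horizontal_bars(categories, values, xlabel, title, outfile=None):
    """Horizontal bar chart: long category names sit on the y-axis instead of overlapping."""
    fig, ax = plt.subplots(figsize=(7, 3.5))
    ax.barh(categories, values, color="#4c72b0")
    ax.invert_yaxis()  # keep the first category at the top, in reading order
    ax.set_xlabel(xlabel)  # labelled axes make the figure self-explanatory
    ax.set_title(title)
    for side in ("top", "right"):
        ax.spines[side].set_visible(False)  # remove frame lines that carry no data
    fig.tight_layout()
    if outfile:
        fig.savefig(outfile, dpi=300)  # 300 dpi is a common print setting (assumption)
    return fig, ax

if __name__ == "__main__":
    # Placeholder names and values, for illustration only.
    names = ["Hypothetical Institute of Advanced Study",
             "Example University Research Centre",
             "Sample National Laboratory"]
    funding = [12.5, 8.0, 4.2]
    plot_horizontal_bars(names, funding, "Funding received (million USD)",
                         "Funding Received by Research Institutions")
```

Pass `outfile="figure.png"` to write the figure to disk; for a vertical chart, `ax.bar` takes the same arguments.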

B. Showing Trends Over Time? Use Line Charts 📈

For datasets where trends evolve over time (e.g., "Temperature Change Over Decades" or "Citation Growth of AI Research"), line charts provide a clear visual progression.

✔ Single-line charts: Track changes in one dataset.

✔ Multi-line charts: Compare trends across different variables.

🚀 Tool Tip: Matplotlib (Python) and ggplot2 (R) offer excellent support for customizable time-series visualizations.
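A multi-line time-series chart in Matplotlib can be sketched as follows, assuming your data arrive as one list of years plus a mapping from series name to values. The helper name `plot_trends` and its parameters are hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering
import matplotlib.pyplot as plt

def plot_trends(x, series, xlabel, ylabel, title, outfile=None):
    """Multi-line chart: one labelled line per variable over a shared time axis.

    series: mapping of line label -> list of y values (same length as x).
    """
    fig, ax = plt.subplots(figsize=(7, 4))
    for label, values in series.items():
        ax.plot(x, values, marker="o", label=label)  # markers show the actual data points
    ax.set_xlabel(xlabel)
    ax.set_ylabel(ylabel)
    ax.set_title(title)
    ax.legend(frameon=False)  # legend identifies each line without an extra box
    fig.tight_layout()
    if outfile:
        fig.savefig(outfile, dpi=300)
    return fig, ax
```

With a single entry in `series` the same function produces a single-line chart, so one helper covers both cases listed above.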

C. Representing Parts of a Whole? Use Pie Charts (But Carefully) 🥧

Pie charts show proportions but should be used sparingly. If your data has more than 4-5 categories, use a bar chart instead—it’s much easier to read!

✔ Best for: Showing percentages in a dataset (e.g., "Distribution of Research Funding Sources").

✔ Avoid: Using pie charts when categories are too similar in size—they become hard to interpret.

🚀 Tool Tip: If you must use pie charts, D3.js (JavaScript) offers interactive, dynamic versions that work great for online research papers.
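The two pie-chart rules of thumb above can be written down as a small check. This is a hypothetical helper: the 5-slice limit comes from the text, while the 5% "too similar" gap is an assumed threshold you should tune.

```python
def suggest_part_of_whole_chart(values, max_slices=5, min_gap=0.05):
    """Suggest "pie" or "bar" for part-of-whole data (positive values assumed).

    More than `max_slices` categories -> bar chart.
    Two shares closer than `min_gap` (fraction of the total, assumed threshold)
    are hard to compare as slices -> bar chart.
    """
    if len(values) < 2 or len(values) > max_slices:
        return "bar"
    total = sum(values)
    shares = sorted(v / total for v in values)
    closest = min(b - a for a, b in zip(shares, shares[1:]))  # smallest difference between shares
    return "bar" if closest < min_gap else "pie"
```

For example, funding split 60/25/15 passes both checks, while three sources at 34/33/33 would be flagged for a bar chart.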

D. Finding Relationships in Data? Use Scatter Plots or Bubble Charts 🔄

Scatter plots are your best friend when showing correlations and relationships between two variables (e.g., “Impact of Sleep on Research Productivity”).

✔ Scatter Plots: Show correlations between two numeric variables.

✔ Bubble Charts: Add a third dimension by scaling the dots based on another variable (e.g., “GDP vs. Life Expectancy vs. Population Size”).

🚀 Tool Tip: Seaborn's regplot() and lmplot() (Python) draw scatter plots with a fitted regression line and a confidence band.

E. Visualizing Large-Scale Networks? Use Graphs & Network Diagrams 🌐

For research in social sciences, computer networks, genomics, or AI, network graphs provide insights into complex relationships.

✔ Nodes & Edges Graphs: Perfect for citation networks, neural networks, or gene interactions.

✔ Force-directed Graphs: Ideal for revealing clusters, since tightly connected nodes are pulled close together.

🚀 Tool Tip: Gephi, Cytoscape, and NetworkX (Python) are great tools for generating network graphs.
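Before drawing a citation network, it often helps to compute a couple of basic statistics. The plain-Python sketch below assumes the network is given as hypothetical `(citing, cited)` pairs. It counts how often each paper is cited (its in-degree) and finds groups of papers linked by any citation path, ignoring edge direction. In NetworkX the same ideas are available as `G.in_degree` and `nx.weakly_connected_components`, and `nx.spring_layout` gives force-directed positions.

```python
from collections import defaultdict, deque

def citation_stats(edges):
    """edges: iterable of (citing, cited) pairs.

    Returns (in_degree, groups): citation count per paper, and weakly connected
    groups of papers (edge direction ignored), each group sorted.
    """
    in_degree = defaultdict(int)
    neighbours = defaultdict(set)
    for citing, cited in edges:
        in_degree[cited] += 1
        in_degree.setdefault(citing, 0)  # papers that are never cited still appear
        neighbours[citing].add(cited)
        neighbours[cited].add(citing)
    seen, groups = set(), []
    for start in neighbours:
        if start in seen:
            continue
        group, queue = [], deque([start])  # breadth-first search from an unvisited paper
        seen.add(start)
        while queue:
            node = queue.popleft()
            group.append(node)
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        groups.append(sorted(group))
    return dict(in_degree), groups
```

Highly cited papers (large in-degree) are natural candidates for larger node sizes, and each group can get its own colour in the final diagram.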

F. Displaying Hierarchical Data? Use Tree Maps or Sankey Diagrams 🌳

If your research involves nested structures (e.g., "Classification of Machine Learning Algorithms" or "Breakdown of Research Funding"), tree maps or Sankey diagrams offer a clear representation of hierarchical relationships.

✔ Tree Maps: Great for showing proportions within categories.

✔ Sankey Diagrams: Ideal for visualizing flow data (e.g., "Energy Transfer Between Ecosystems").

🚀 Tool Tip: Try D3.js (JavaScript) or Tableau for interactive tree maps and Sankey visualizations.
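To see how a tree map turns nested data into areas, here is a sketch of slice-and-dice, the simplest treemap layout. The squarified layouts many tools use produce less elongated rectangles. Each node is an assumed `(label, value, children)` tuple, and the split direction alternates at each level of the hierarchy.

```python
def slice_and_dice(items, x=0.0, y=0.0, width=1.0, height=1.0, vertical=True):
    """Lay out (label, value, children) nodes as rectangles with area proportional to value.

    Returns a list of (label, x, y, w, h) tuples for the leaf rectangles.
    """
    total = sum(value for _, value, _ in items)
    rects = []
    offset = 0.0  # fraction of the parent rectangle already used
    for label, value, children in items:
        frac = value / total
        if vertical:  # split the parent left-to-right
            rx, ry, rw, rh = x + offset * width, y, width * frac, height
        else:  # split the parent bottom-to-top
            rx, ry, rw, rh = x, y + offset * height, width, height * frac
        offset += frac
        if children:
            rects += slice_and_dice(children, rx, ry, rw, rh, not vertical)
        else:
            rects.append((label, rx, ry, rw, rh))
    return rects
```

For example, `slice_and_dice([("Grants", 60, [("Agency A", 40, []), ("Agency B", 20, [])]), ("Industry", 40, [])])` (made-up numbers) gives the "Agency A" leaf 40% of the unit square, nested inside the 60% "Grants" strip.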

3. Best Practices for Data Visualization in Research Papers 📑

Now that you know which charts to use, let’s talk about how to format them for academic papers.

✅ 1. Label Everything Clearly

Your axes, titles, and legends should be self-explanatory—don't make readers guess what they’re looking at.

✅ 2. Use Color Intelligently

🚫 Bad: Neon rainbow colors that make your graph look like a unicorn exploded.

✅ Good: Use a consistent color scheme with high contrast for clarity.

🚀 Tool Tip: Use color palettes like ColorBrewer for research-friendly color schemes.
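Matplotlib bundles the ColorBrewer qualitative palettes (e.g. "Set2", "Dark2", "Paired"). One way to keep colours consistent across every figure in a paper is to pick a palette once and set it as the axes colour cycle. The helper names below are hypothetical, and `matplotlib.colormaps` assumes Matplotlib 3.5 or later.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering
import matplotlib.pyplot as plt
from matplotlib.colors import to_hex

def colorbrewer_palette(name="Set2", n=3):
    """Return the first n colours (as hex strings) of a ColorBrewer map bundled with Matplotlib."""
    cmap = matplotlib.colormaps[name]
    return [to_hex(c) for c in cmap.colors[:n]]

def styled_axes(palette):
    """Create axes whose lines and bars cycle through `palette` in a fixed order."""
    fig, ax = plt.subplots()
    ax.set_prop_cycle(color=palette)
    return fig, ax
```

Reusing the same palette in the same order means "Group A" gets the same colour in every figure, which readers notice.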

✅ 3. Keep It Simple & Avoid Chart Junk

Less is more. Avoid excessive gridlines, 3D effects, or unnecessary labels that clutter the visualization.

✅ 4. Use Statistical Annotations Where Needed

If you’re presenting significant findings, annotate your charts with p-values, regression lines, or confidence intervals for clarity.
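As a small example of a statistical annotation, the sketch below computes a mean with a 95% confidence interval and formats it as a label you could place on a chart (for instance with Matplotlib's `ax.annotate` or `ax.errorbar`). It assumes a normal approximation (z ≈ 1.96) using only Python's `statistics` module. For small samples, a t-based interval (e.g. from SciPy) is the more appropriate choice. The function names are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_ci(sample, confidence=0.95):
    """Mean and a normal-approximation confidence interval for the mean."""
    m = mean(sample)
    se = stdev(sample) / math.sqrt(len(sample))  # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return m, m - z * se, m + z * se

def ci_label(sample, confidence=0.95, digits=2):
    """Format the interval as an annotation string."""
    m, lo, hi = mean_ci(sample, confidence)
    return f"mean = {m:.{digits}f} ({confidence:.0%} CI {lo:.{digits}f} to {hi:.{digits}f})"

if __name__ == "__main__":
    print(ci_label([10, 12, 14, 16, 18]))  # prints: mean = 14.00 (95% CI 11.23 to 16.77)
```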

🚀 Pro Tip: If you're new to coding, Tableau or Excel let you create polished graphs quickly without programming.

Final Thoughts: Make Your Research Stand Out With Data Visualization 🚀

Strong data visualization doesn’t just make your research look pretty—it makes your findings more impactful. Choosing the right chart, formatting it correctly, and using the best tools can turn complex data into clear insights.

📌 Choose the right visualization for your data.

📌 Label and format your charts correctly.

📌 Use colors and statistical annotations wisely.

📌 Avoid unnecessary clutter—keep it simple!

🚀 Need expert help formatting your research visuals? Our Market Insight Solutions team can assist with professional data visualization, statistical analysis, and thesis formatting to ensure your research stands out.

💡 We will make your research visually compelling and publication-ready! 💡
