#node js web application


Node.js Development Company in India - Dahooks

Node.js has emerged as one of the most popular and widely used platforms for web application development. With its efficient and scalable features, Node.js has become the go-to choice for businesses across the globe. In India, one company stands out for its expertise and excellence in Node.js development - Dahooks.

Dahooks, a leading Node.js development company based in India, has been instrumental in delivering cutting-edge solutions to businesses of all sizes. With their team's deep understanding of the latest industry trends and technologies, Dahooks has established itself as a trusted name in the field of Node.js development.

One of the key advantages of choosing Dahooks as your Node.js development partner is their extensive experience in handling complex projects. The team at Dahooks has successfully delivered numerous projects across diverse sectors such as e-commerce, healthcare, finance, media, and more. Their expertise in working with Node.js allows them to build high-performance, scalable, and robust applications that meet clients' specific requirements.

Dahooks ensures that each project undergoes a well-defined and strategic development process. They start by thoroughly analyzing the client's business goals, target audience, and existing infrastructure. This allows them to design a customized Node.js development plan that aligns with the client's objectives. The team at Dahooks strongly believes in the agile development approach, which keeps the client involved throughout the project lifecycle. This promotes transparency and collaboration and enables faster delivery of high-quality solutions.

When it comes to technical expertise, Dahooks boasts a highly skilled and talented team of Node.js developers. These professionals possess in-depth knowledge of Node.js and its related technologies, enabling them to build feature-rich and scalable applications. They are proficient in frameworks and libraries such as Express.js, Socket.io, and Nest.js for developing robust backends, real-time applications, and APIs. With their expertise in front-end technologies such as React, Angular, and Vue.js, Dahooks can provide seamless integration between the frontend and backend, delivering a superior user experience.

Client satisfaction is one of the core values at Dahooks. They strive to understand their clients' specific needs and deliver solutions that exceed expectations. The company has earned a reputation for its timely project delivery, cost-effective services, and round-the-clock customer support. Dahooks' dedicated professionals ensure that each client receives personalized attention and is kept updated on the progress of their project.

In addition to their expertise in Node.js development, Dahooks also offers a wide range of complementary services. These include mobile app development, web design, cloud deployment, DevOps consulting, and more. This comprehensive suite of services makes Dahooks a one-stop destination for businesses looking for end-to-end solutions.

Dahooks' success stories and satisfied client testimonials speak volumes about the quality of their services. Their portfolio showcases a diverse range of innovative and successful projects across various industries. The company's strong commitment to excellence has earned them recognition as a top Node.js development company in India.

If you are looking for a reliable and experienced partner for your Node.js development needs in India, Dahooks is undoubtedly the company to consider. With their proven track record, technical expertise, and customer-centric approach, Dahooks is well-equipped to handle even the most complex projects. Trust Dahooks to bring your ideas to life and unlock the true potential of Node.js for your business.

Contact Us: Let's Talk Business!

Visit: https://dahooks.com/web-dev

Mail At: [email protected]

A-83 Sector 63, Noida (UP) 201301, INDIA, +91–7827150289

Suite D800, 25420 Kuykendahl Rd, Tomball, TX 77375, United States, +1 302–219–0001


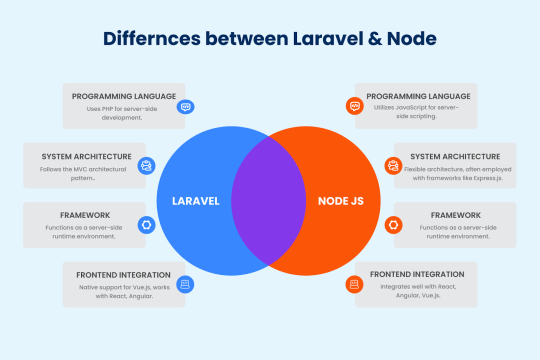

Node.js Vs Laravel: Choosing the Right Web Framework


Differences Between Laravel & Node

Language

Node.js: Utilizes JavaScript, a versatile, high-level language that can be used for both client-side and server-side development, so a team can work in a single language across the whole stack. Laravel: Uses PHP, a server-side scripting language specifically designed for web development. PHP has a rich history and is widely used in traditional web applications.

Architecture

Node.js: Does not enforce a specific architecture, which allows flexibility; a middleware architecture (as in Express.js) is the common choice. Laravel: Adheres to the MVC (Model-View-Controller) architecture, which promotes a clear separation of concerns and thereby enhances maintainability and scalability.
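To illustrate what "middleware architecture" means, here is a minimal sketch in plain JavaScript, loosely modelled on Express's `(req, res, next)` convention but not Express's actual implementation. `compose`, `logger`, `auth`, and `handler` are hypothetical names: each middleware does one job and either calls `next()` to pass control on or stops the chain.

```javascript
// Chain middlewares so each one can run and then hand off via next().
// `compose` is a hypothetical helper, not part of any library.
function compose(middlewares) {
  return function run(req, res) {
    let index = -1;
    function dispatch(i) {
      if (i <= index) throw new Error("next() called multiple times");
      index = i;
      const fn = middlewares[i];
      if (!fn) return; // end of the chain
      fn(req, res, () => dispatch(i + 1));
    }
    dispatch(0);
  };
}

// Logs every request, then passes control on.
const logger = (req, res, next) => {
  res.log.push(`${req.method} ${req.url}`);
  next();
};

// Stops the chain (does not call next) when no credentials are present.
const auth = (req, res, next) => {
  if (!req.headers.authorization) {
    res.status = 401;
    return;
  }
  next();
};

// Final handler: produces the response.
const handler = (req, res) => {
  res.status = 200;
  res.body = "Hello";
};

const app = compose([logger, auth, handler]);

const res = { log: [] };
app({ method: "GET", url: "/", headers: { authorization: "token" } }, res);
console.log(res.status, res.body);
```

The design choice is that no middleware knows about the others; ordering in the array decides the flow, which is what gives Node.js apps their flexibility compared with a fixed MVC layout.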

Framework

Node.js: Acts as a runtime environment, enabling JavaScript to be executed on the server-side. It is commonly used with frameworks like Express.js. Laravel: A full-featured server-side framework that provides a robust structure and built-in tools for web development, including routing, authentication, and ORM (Object-Relational Mapping).

Strengths

Node.js: Node.js is lightweight and high-performance. Its event-driven, non-blocking I/O model lets it handle many tasks concurrently, making it ideal for real-time apps and high user concurrency. Additionally, it allows developers to use JavaScript for both frontend and backend, streamlining the development process. Laravel: Laravel provides comprehensive built-in features, including Eloquent ORM, Blade templating, and powerful CLI tools to simplify tasks. It also emphasizes elegant syntax, making the codebase easy to read and maintain.
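A rough sketch of why the non-blocking model helps: three simulated I/O calls are started together, so the total wait is close to the longest single call rather than the sum. The `setTimeout`-based `fakeIo` function is an assumption standing in for real database or network calls.

```javascript
// Simulated I/O: setTimeout stands in for a database or network call.
function fakeIo(label, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(label), ms));
}

async function handleConcurrently() {
  const start = Date.now();
  // All three "requests" start at once; while one waits, the event loop
  // is free to make progress on the others.
  const results = await Promise.all([
    fakeIo("users", 100),
    fakeIo("orders", 100),
    fakeIo("stock", 100),
  ]);
  return { results, elapsed: Date.now() - start };
}

handleConcurrently().then(({ results, elapsed }) => {
  // Expect roughly 100 ms in total, not 300 ms, because the waits overlap.
  console.log(results, `${elapsed} ms`);
});
```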


The primary purpose of using modules in Node.js is to help organize code into smaller, more manageable pieces.


Explore the Node.js vs Python comparison to make the right choice for your web app development. Learn about their strengths and weaknesses, and find the best technology for your project in this comprehensive guide.


https://www.appinessworld.com/blogs/web-scraping-using-node-js/


Employee Management System

A web-based employee management application that helps managers and supervisors schedule staff based on operational demand.

Industry: Customer Services

Tech stack: DHTMLX, JavaScript, Node.js, Webix


React JS

Component-Based Architecture:

React applications are built using components, which are reusable, self-contained pieces of the UI. Components can be nested, managed, and handled independently, leading to better maintainability and scalability.

JSX (JavaScript XML):

React uses JSX, a syntax extension that lets you write HTML-like markup directly within JavaScript. This makes component code more readable and easier to write.

Virtual DOM:

React maintains a virtual DOM, an in-memory representation of the actual DOM. When a component's state changes, React updates the virtual DOM and efficiently determines the minimal set of changes needed to update the real DOM, which improves performance.
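To make the idea concrete, here is a heavily simplified sketch of diffing two virtual trees. React's real reconciliation is more involved (it uses keys and component types, among other things), so `h` and `diff` are hypothetical helpers that only show the core idea: compare old and new trees and collect just the changes.

```javascript
// A virtual node is a plain object; text nodes are plain strings.
function h(type, props, ...children) {
  return { type, props: props || {}, children };
}

// Compare two virtual trees and collect the changes needed.
function diff(oldNode, newNode, path = "root", patches = []) {
  if (oldNode === undefined) {
    patches.push({ op: "create", path, node: newNode });
  } else if (newNode === undefined) {
    patches.push({ op: "remove", path });
  } else if (typeof oldNode === "string" || typeof newNode === "string") {
    if (oldNode !== newNode) patches.push({ op: "replace", path, node: newNode });
  } else if (oldNode.type !== newNode.type) {
    patches.push({ op: "replace", path, node: newNode });
  } else {
    // Same element type: compare props, then recurse into children.
    const keys = new Set([...Object.keys(oldNode.props), ...Object.keys(newNode.props)]);
    for (const key of keys) {
      if (oldNode.props[key] !== newNode.props[key]) {
        patches.push({ op: "setProp", path, key, value: newNode.props[key] });
      }
    }
    const len = Math.max(oldNode.children.length, newNode.children.length);
    for (let i = 0; i < len; i++) {
      diff(oldNode.children[i], newNode.children[i], `${path}/${i}`, patches);
    }
  }
  return patches;
}

const oldTree = h("ul", { class: "list" }, h("li", null, "Apples"), h("li", null, "Pears"));
const newTree = h("ul", { class: "list" }, h("li", null, "Apples"), h("li", null, "Plums"));
// Logs one patch: replace the text at root/1/0 with "Plums".
console.log(diff(oldTree, newTree));
```

Only the changed text node is reported, so only that one spot in the real DOM would need updating.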

One-Way Data Binding:

Data flows in one direction, from parent to child components, which makes the data flow and logic easier to understand and debug.

State Management:

React components can maintain internal state, making it easy to build dynamic and interactive UIs. For more complex state management, you can use a library such as Redux or React's built-in Context API.
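Libraries like Redux are built around a small idea: state lives in one store and changes only through a pure reducer function. A minimal sketch of that pattern in plain JavaScript; `createStore` here is a hypothetical helper loosely modelled on the shape of a Redux store (`getState`, `dispatch`, `subscribe`), not Redux's own code.

```javascript
// A pure reducer: given the current state and an action, return new state.
function counterReducer(state = { count: 0 }, action) {
  switch (action.type) {
    case "increment":
      return { ...state, count: state.count + 1 };
    case "decrement":
      return { ...state, count: state.count - 1 };
    default:
      return state; // unknown actions leave state unchanged
  }
}

// A tiny store: holds state, applies actions, and notifies subscribers.
function createStore(reducer) {
  let state = reducer(undefined, { type: "@@init" });
  const listeners = new Set();
  return {
    getState: () => state,
    dispatch(action) {
      state = reducer(state, action);
      listeners.forEach((listener) => listener(state));
    },
    subscribe(listener) {
      listeners.add(listener);
      return () => listeners.delete(listener); // call to unsubscribe
    },
  };
}

const store = createStore(counterReducer);
store.subscribe((s) => console.log("count is now", s.count));
store.dispatch({ type: "increment" });
store.dispatch({ type: "increment" });
store.dispatch({ type: "decrement" });
```

In a React app, components subscribe to the store and re-render when the state they use changes; because the reducer returns new objects instead of mutating, a change can be detected with a cheap reference comparison.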

Advantages of Using React

Performance:

Due to the virtual DOM, React minimizes direct manipulation of the DOM, resulting in better performance for dynamic applications.

Reusable Components:

Components can be reused across different parts of an application, reducing the amount of code and enhancing consistency.

Strong Community and Ecosystem:

A large community and a rich ecosystem of tools and libraries support React, making it easier to find solutions, get support, and integrate with other technologies.

SEO Friendly:

React can be rendered on the server using Node.js, making web pages more SEO-friendly compared to traditional client-side rendering.

Getting Started with React

To start building applications with React, you need to have Node.js and npm (Node Package Manager) installed. Here’s a basic setup to create a new React application:

Install Node.js and npm:

Download and install Node.js from the official Node.js website; npm is included with it.

Create a New React Application:

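You can use Create React App, which for years was the officially supported way to create single-page React applications with no configuration required (the React team deprecated it in 2025 and now recommends a framework or a build tool such as Vite for new projects):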

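```shell
npx create-react-app my-app
cd my-app
npm start
```

A simple function component looks like this:

```jsx
import React from 'react';

// Renders a greeting using the `name` prop passed in by a parent component.
function Welcome(props) {
  return <h1>Hello, {props.name}</h1>;
}

export default Welcome;
```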


Idiosys USA is a leading Minnesota-based web development agency providing high-quality web development, app development, digital marketing, and software development consulting services in Minnesota and across the United States. We have a team of 50+ skilled IT professionals who provide world-class IT support to businesses of all sizes in different domains. As a leading Minnesota web design company, we build healthcare-based e-commerce platforms, business websites for finance organisations, news agency websites and mobile applications, travel and tourism solutions, transport and logistics management systems, and e-commerce applications. Our team is skilled in the latest technologies like React, Node.js, Angular, and Next.js, and we have also worked with open-source PHP frameworks like Laravel, Yii2, and others. At Idiosys USA, you will get complete web development solutions. We offer some ready-made solutions for different businesses, but our core expertise is custom website development tailored to each client's requirements. We believe we are among the best in the city.


DigitIndus Technologies Private Limited

DigitIndus Technologies Private Limited is one of the best emerging digital marketing and IT companies in the Tricity area (Mohali, Chandigarh, and Panchkula). We provide cost-effective solutions to grow your business. DigitIndus Technologies provides Digital Marketing, Web Designing, Web Development, Mobile Development, Training, and Internships.

Digital Marketing, Mobile Development, Web Development, website development, software development, Internship, internship with stipend, Six Months Industrial Training, Three Weeks industrial Training, HR Internship, CRM, ERP, PHP Training, SEO Training, Graphics Designing, Machine Learning, Data Science Training, Web Development, data science with python, machine learning with python, MERN Stack training, MEAN Stack training, logo designing, android development, android training, IT consultancy, Business Consultancy, Full Stack training, IOT training, Java Training, NODE JS training, React Native, HR Internship, Salesforce, DevOps, certificates for training, certification courses, Best six months training in chandigarh,Best six months training in mohali, training institute

Certification of Recognition by StartupIndia-Government of India

DigitIndus Technologies Private Limited, incorporated as a Private Limited Company on 10-01-2024, is recognized as a startup by the Department for Promotion of Industry and Internal Trade (DPIIT). The startup works in the 'IT Services' industry and the 'Web Development' sector. DPIIT No: DIPP156716

Services Offered

Mobile Application Development

Software development

Digital Marketing

Internet Branding

Web Development

Website development

Graphics Designing

Salesforce development

Six months Internships with job opportunities

Six Months Industrial Training

Six weeks Industrial Training

ERP development

IT consultancy

Business consultancy

Logo designing

Full stack development

IOT

Certification courses

Technical Training


JavaScript Frameworks

Step 1) Polyfill

Most JS frameworks started from a need to create polyfills. A polyfill is a JS script that adds features to JavaScript that you expect to be standard across all web browsers but that some browsers lack. Before the modern era, browsers lacked standardization for many different features across HTML, JS, and CSS (and still do a bit if you're on the bleeding edge of the W3C standards).

Polyfill was how you ensured certain functions were available AND worked the same between browsers.
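A minimal sketch of the classic polyfill pattern: detect whether a feature exists, and define it only if it is missing. `Array.prototype.includes` is used purely as an example (modern browsers and Node.js already ship it, so the fallback is skipped there), and `includesPolyfill` is a simplified, hypothetical version rather than a spec-complete one.

```javascript
// Simplified fallback for Array.prototype.includes.
function includesPolyfill(searchElement, fromIndex) {
  const list = Object(this);
  const len = list.length >>> 0;
  if (len === 0) return false;
  const n = fromIndex | 0;
  // A negative fromIndex counts back from the end of the array.
  let k = n >= 0 ? n : Math.max(len + n, 0);
  while (k < len) {
    const el = list[k];
    // SameValueZero: like ===, except that NaN matches NaN.
    if (el === searchElement || (el !== el && searchElement !== searchElement)) {
      return true;
    }
    k++;
  }
  return false;
}

// Feature-detect first: only install the fallback when it is missing.
if (!Array.prototype.includes) {
  Object.defineProperty(Array.prototype, "includes", {
    value: includesPolyfill,
    enumerable: false, // like the native method, hidden from for...in
    configurable: true,
    writable: true,
  });
}
```

The feature check is the important part: the native, faster implementation always wins when it exists, and the polyfill only fills the gap in older environments.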

jQuery is an early polyfill-style tool with a lot of extra features that make JS quicker and easier to type, and it is still used on a large share of websites today. It is still something of a core standard among frameworks, but many developers are unhappy with it for performance reasons AND because plain JS has since incorporated many features that were once unique to jQuery.

jQuery still edges out plain JS in one respect: a jQuery app takes very little typing compared with plain JS, which saves time and bandwidth for small-scale applications.

Many other frameworks even use jQuery as a base library.

Step 2) Encapsulated DOM

Storing data on an element node starts becoming an issue when you're dealing with multiple elements simultaneously and need to store data as close as possible to the DOM node you just grabbed from your HTML, since you probably don't want to have to search for it again.

Encapsulation allows you to store your data in an object right next to your element so they're not so far apart.

HTML added the data-* attributes feature, but that's more of a "loading off the hard drive instead of memory" situation: it's convenient, but slow if you need to do it many times, because the values live in the DOM as strings and have to be read back and converted each time.

Encapsulation also allows for promise-style coding and functional coding. I forgot the exact terminology, but it's where your scripting is designed around calling many different functions back-to-back (method chaining, as in jQuery's `$(el).addClass("x").show()`) instead of manipulating variables and doing loops manually.
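A rough sketch of both ideas, assuming a plain object stands in for a DOM element so the code runs outside a browser: a `WeakMap` keeps data right next to each element (in memory, without `data-*` string round-trips), and a small wrapper returns `this` from each method so calls can be chained. `wrap` is a hypothetical helper, not any framework's API.

```javascript
// Per-element data store. A WeakMap lets an entry be garbage-collected
// together with the element it belongs to.
const elementData = new WeakMap();

function wrap(el) {
  return {
    // With one argument, read a value; with two, store it and keep chaining.
    data(key, value) {
      const store = elementData.get(el) || {};
      if (value === undefined) return store[key];
      store[key] = value;
      elementData.set(el, store);
      return this; // returning the wrapper enables chaining
    },
    addClass(name) {
      el.className = (el.className + " " + name).trim();
      return this;
    },
    text(content) {
      el.textContent = content;
      return this;
    },
  };
}

const node = { className: "", textContent: "" }; // stand-in for a DOM element
wrap(node).addClass("card").text("Hello").data("clicks", 0);
console.log(wrap(node).data("clicks")); // 0
```

`className` and `textContent` exist on real DOM elements too, so the same wrapper would work in a browser; the data never touches the markup, which avoids the string conversion that `data-*` attributes need.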

Step 3) Optimization

Many frameworks do a lot of heavy lifting: caching frequently used DOM calls and other data, handling DOM traversal, and standardizing commonly used programming patterns so that you don't have to learn a new one every time you join a new project. (You will still have to learn a new one if you join a new project.)

These optimizations aim to reduce reflowing/redrawing of the page and to cut down on plain JS calls that hurt performance. I suspect, however, that a lot of these optimizations should just be built into the core JS engine.
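One common technique behind the reflow point above is batching: run all DOM reads (which can force a layout) before any writes, instead of interleaving them. Libraries such as fastdom apply this idea in the browser. The sketch below is a simplified, hypothetical scheduler that only shows the ordering; it uses an explicit `flush()` where a browser version would typically schedule the flush with `requestAnimationFrame`.

```javascript
// Queue reads and writes separately, then run all reads before all writes,
// so layout is computed once instead of after every write.
function createBatcher() {
  const reads = [];
  const writes = [];
  return {
    read(fn) {
      reads.push(fn);
    },
    write(fn) {
      writes.push(fn);
    },
    flush() {
      // splice(0) empties each queue; tasks queued during the flush
      // wait for the next flush.
      reads.splice(0).forEach((fn) => fn());
      writes.splice(0).forEach((fn) => fn());
    },
  };
}

const log = [];
const batch = createBatcher();
batch.write(() => log.push("write A"));
batch.read(() => log.push("read A"));
batch.write(() => log.push("write B"));
batch.read(() => log.push("read B"));
batch.flush();
console.log(log); // reads first, then writes
```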

(Yes, I know it's called vanilla JS. I don't know why plain is synonymous with vanilla, but it feels weird to say vanilla instead of plain.)

Step 4) Custom Element and component development

This was a way to put XML tags or custom HTML tags on a page that used specific rules to create controls that weren't part of the HTML standard. It also helped link multiple inputs and other data components together so that the data is centrally located and easy to send from page to page or from page to server.

Step 5) Back-end development

This actually started with server-side technologies like PHP, ASP, and JSP, and eventually led to Node.js. These were ways to dynamically generate a webpage on the server in order to serve it to the user. (I have not seen a truly dynamic webpage to this day, however, and I suspect a lot of the optimization work is actually being lost simply because programmers are over-reliant on frameworks doing the work for them. I have made this mistake. That's how I know.)

The backend then became disjointed from front-end development because of the multitude of different languages, hence Node.js, which provides a way to do server-side scripting in the same JavaScript that front-end developers were already familiar with.
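For comparison, a minimal sketch of server-side page generation with Node's built-in `http` module and no framework. `renderPage` and `escapeHtml` are hypothetical helpers; the server is created but not started here, so call `server.listen(3000)` yourself to try it.

```javascript
const http = require("node:http");

// Escape user-supplied text before inserting it into HTML,
// so input like "<script>" is shown as text instead of being executed.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build the page on the server for each request.
function renderPage(name, now) {
  return `<!doctype html>
<html>
  <body>
    <h1>Hello, ${escapeHtml(name)}</h1>
    <p>Generated at ${now.toISOString()}</p>
  </body>
</html>`;
}

const server = http.createServer((req, res) => {
  const url = new URL(req.url, "http://localhost");
  const name = url.searchParams.get("name") || "visitor";
  res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
  res.end(renderPage(name, new Date()));
});

// To try it: server.listen(3000), then open http://localhost:3000/?name=Ada
```

Each request produces fresh HTML on the server, which is the same job PHP, ASP, and JSP pages do, just written in the JavaScript the front end already uses.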

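React.js and Angular (2 and later) are primarily front-end frameworks for building dynamic web pages in the browser; both can also render pages on the server, but sensitive operations such as secure transactions should still be handled by back-end code rather than trusted to the user's environment.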

Step 6) Use "Framework" as a catch-all while meaning none of these:

Polyfills aren't really needed as much anymore unless your target demographic is an impoverished nation using hack-ware and Windows 95 PCs. (And even then, they could possibly install Linux, which can run modern lightweight browsers...)

Encapsulation is still needed, as are libraries that perform commonly used calculations and tasks; I would argue that libraries aren't going anywhere. I would also argue that some frameworks are just bloatware.

One framework I was researching (I won't name names here) was simply a remapping of commands from a Canvas context to an encapsulated element, and nothing more. There were literally more comments than code. And by more comments, I mean several pages of documentation per 3 lines of code.

Custom Components go hand in hand with encapsulation, but I suspect that there's a bit more than is necessary with these pieces of frameworks, especially on the front end. Tho... If it saves a lot of repetition, who am I to complain?

Back-end development is where things get hairy: everything communicates through HTTP, and on the front end through the AJAX interface. On the back end? There are two ways data is given: either through a web call that returns non-HTML data, *or* through functions that already do a lot of heavy lifting for you.

Which obfuscates how the data is used.

But I haven't really found a bad use of either method. But again; I suspect many things about performance impacts that I can't prove. Specifically because the tools in use are already widely accepted and used.

But since I'm a lightweight reductionist when it comes to coding (except when I'm not, because use cases exist), I can't help but think that most every framework, both front-end and back-end, suffers from a lot of bloat.

And that bloat makes it hard to select which framework would be the right match for the project you're working on. Because of that, you could find yourself at the tail end of a development cycle realizing that you're going to have to maintain this as is, in exactly the wrong solution, one that does not fit the scope of the project in any way.

Well. That's what junior developers are for anyway...


Node js Full Stack

Node js Full Stack course at Croma Campus offers hands-on training in front-end and back-end development. Learn HTML, CSS, JavaScript, Node.js, Express, MongoDB, and more. This course prepares you for real-world projects and job-ready skills. Ideal for aspiring full-stack developers looking to build dynamic web applications.


Hire node js developer

If you're building a fast, scalable web application, it's a smart move to hire a Node.js developer. With their expertise in server-side JavaScript, they can create efficient, real-time applications that handle large volumes of data with ease. Whether it's for APIs, backend systems, or full-stack development, hiring a Node.js developer ensures your project runs smoothly and performs at its best.


Modern Custom Web App Development Services

Today's digital era requires tailored solutions for companies to remain agile, competitive, and human-centric. Packaged software sometimes doesn't fit a company's particular operational requirements or the user experience it wants to deliver. This is where custom web app development comes in.

Intelligent, Scalable, High-Performance Web Apps

At Appnox Technologies, we create intelligent, scalable, and high-performing web applications tailored to help you meet your goals. Whether you're just entering the market or are a seasoned enterprise looking to streamline internal processes, our bespoke solutions let you break new ground, improve productivity, and enhance the digital experience for you and your users.

What Is Custom Web Application Development?

A custom web application is a unique, custom-built software application designed to cater to the specific needs, processes, and challenges of a particular business or organization.

Goals of a Custom Web Application:

Offer an incredibly customized and efficient digital product

Improve business systems and processes

Encourage innovation in the enterprise

Provide a competitive edge in the marketplace

Top Benefits of Custom Web Application Development

Customized for Your Company

Your custom app is designed based on your unique requirements. If you need to connect to a particular CRM, automate your supply chain, or comply with user-specific workflows, you get exactly the solution you require.

High Scalability

Our products are designed for scalability. Your web app can scale with your user base or feature set without the need to sacrifice performance.

Seamless Integrations

Want to connect to a third-party tool such as a payment gateway, an analytics platform, or an API? With a custom solution, you decide which integrations to add and exactly how they work.

Enhanced Security

Tailor-made apps are designed with strong security measures suited to your unique business structure. You sidestep many of the typical exploits that target off-the-shelf software and gain peace of mind that your privacy is upheld.

Our Custom Web Application Development Services

Appnox Technologies is a professional web application development company that provides end-to-end web development services, covering full-cycle back-end and front-end application development. Our services include:

Custom Web App Design & Development: A powerful backend combined with user-oriented design to build responsive, interactive applications.

Enterprise Web Solutions: Scalable enterprise applications that automate and optimize intricate business processes.

SaaS Application Development: Strong, subscription-based web platforms for both startups and established software businesses.

Progressive Web Apps (PWAs): On-demand, app-like experiences on the web with offline support and strong performance.

API Development & Integration: Building custom APIs or integrating third-party services to enhance functionality and connectivity.

Ongoing Maintenance & Support: Keeping your web application fast, secure, and up to date over the long term.

Appnox’s Custom Web App Development Process

Our apps are built to scale with your business. As your needs grow, we can easily enhance existing features or add new ones to support your expansion.

Requirement Analysis: We collect and evaluate business objectives, technical requirements, and end-user requirements.

UI/UX Design: Our designers create user-friendly, mobile-friendly interfaces to improve interaction.

Custom Development: We use modern frameworks such as React, Node.js, Vue.js, and Laravel to turn your vision into a long-lasting success.

Testing & QA: We test performance, security, and usability to deliver a stable product with as few bugs as possible.

Deployment & Scaling: We deploy to your cloud of choice (AWS, Azure, or GCP) with full CI/CD pipelines for a smooth go-live.

Ongoing Support: After launch, we continue to maintain and monitor your app and add features to it.

Industries We Serve

Our customized web app development solutions are used by:

Healthcare – Patient Portal, Appointment Scheduling System, Health Dashboard

Fintech – Transaction processing platforms, investment tracking, risk analytics tools, etc.

eCommerce – Custom shopping carts, product management systems, and vendor marketplaces

Travel & Logistics – Booking engines, routing and Real-time Tracking Solutions

Education – LMS, online exam software and e-learning platforms

Real Estate – Listing apps, Property Management CRM tools, virtual tour integrations etc.

Why Choose Appnox Technologies?

Selecting the best vendor for your custom web application is one of the key decisions you will make. Here is why Appnox Technologies is unique:

✔ Deep Technical Expertise

Our team is proficient in cutting-edge web technologies such as React, Angular, Laravel, Node.js, and more. We develop solid, secure, scalable applications following best-practice methodologies.

✔ Full-Cycle Development

We do everything from strategy and design to development, deployment, and support. You end up with a one-stop shop service provider you can trust.

✔ Industry-Specific Solutions

We know the challenges of the industry and build apps that solve real problems—faster and more effectively.

✔ Agile & Transparent Process

We keep you informed at every turn. With weekly check-ins, demos, and feedback sessions, you're always in the loop.

Frequently Asked Questions (FAQs)

Q1: How long does it take to develop a custom web application?

Development time depends on the features, integrations, and scope of work you need. A simple custom app might take 2–3 months; complex enterprise solutions can take 4–6 months or more.

Q2: How much does custom web application development cost?

Pricing depends on functionality, tech stack, and integrations. At Appnox, we offer affordable pricing models to suit your budget and needs. Contact us for a free estimate.

Q3: Will my custom web application work on mobile devices?

Yes. All of our software is built with responsive design, so it functions the same on any desktop, tablet, or mobile device.

Q4: Can I upgrade or scale the app later?

Absolutely. Our apps are engineered for scale. As your business grows, we can enhance existing features or add new ones.


Web Scraping Using Node Js

Web scraping using Node.js is an automated technique for gathering large amounts of data from websites. Most of this data is unstructured HTML; scraping transforms it into structured data, such as JSON, a spreadsheet, or a database, so that it can be used in a variety of applications.

Web scraping is a method for gathering data from web pages in a variety of ways. These include using online tools, certain APIs, or even creating your own web scraping programmes from scratch. You can use APIs to access the structured data on numerous sizable websites, including Google, Twitter, Facebook, StackOverflow, etc.

The scraper and the crawler are the two tools needed for web scraping.

The crawler (also called a spider) is a program that browses the web by following links to find the pages that contain the required data.

A scraper is a particular tool created to extract data from a website. Depending on the scale and difficulty of the project, the scraper's architecture may change dramatically to extract data precisely and effectively.
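The crawler side can be sketched as a breadth-first walk over links, with a depth limit and a visited set so no page is fetched twice. `getLinks` is an assumption, injected so the example runs offline against a fake link graph (`fakeSite`, also hypothetical); in a real project it would download each page and extract its `href` values, and the resulting list of URLs would be handed to the scraper.

```javascript
// Breadth-first crawl starting from `startUrl`.
// getLinks(url) must return a Promise of the URLs linked from that page.
async function crawl(startUrl, getLinks, maxDepth = 2) {
  const visited = new Set([startUrl]);
  let frontier = [startUrl];
  for (let depth = 0; depth < maxDepth && frontier.length > 0; depth++) {
    const next = [];
    for (const url of frontier) {
      for (const link of await getLinks(url)) {
        if (!visited.has(link)) {
          visited.add(link); // never queue the same page twice
          next.push(link);
        }
      }
    }
    frontier = next;
  }
  return [...visited]; // every page the scraper should now process
}

// Offline stand-in for a small website's link structure.
const fakeSite = {
  "/": ["/products", "/about"],
  "/products": ["/products/1", "/products/2", "/"],
  "/about": [],
  "/products/1": ["/products/2"],
  "/products/2": [],
};
const getLinks = async (url) => fakeSite[url] || [];

crawl("/", getLinks).then((pages) => console.log(pages));
```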

Different types of web scrapers

There are several types of web scrapers, each with its own approach to extracting data from websites. Here are some of the most common types:

Self-built web scrapers: Self-built web scrapers are customized tools created by developers using programming languages such as Python or JavaScript to extract specific data from websites. They can handle complex web scraping tasks and save data in a structured format. They are used for applications like market research, data mining, lead generation, and price monitoring.

Browser extensions web scrapers: These are web scrapers that are installed as browser extensions and can extract data from websites directly from within the browser.

Cloud web scrapers: Cloud web scrapers are web scraping tools that are hosted on cloud servers, allowing users to access and run them from anywhere. They can handle large-scale web scraping tasks and provide scalable computing resources for data processing. Cloud web scrapers can be configured to run automatically and continuously, making them ideal for real-time data monitoring and analysis.

Local web scrapers: Local web scrapers are web scraping tools that are installed and run on a user's local machine. They are ideal for smaller-scale web scraping tasks and provide greater control over the scraping process. Local web scrapers can be programmed to handle more complex scraping tasks and can be customized to suit the user's specific needs.

Why are scrapers mainly used?

Scrapers are mainly used for automated data collection and extraction from websites or other online sources. Common uses include:

Price monitoring: Price monitoring is the practice of regularly tracking and analyzing the prices of products or services offered by competitors or in the market, with the aim of making informed pricing decisions. It involves collecting data on pricing trends and patterns, as well as identifying opportunities for optimization and price adjustments. Price monitoring can help businesses stay competitive, increase sales, and improve profitability.

Market research: Market research is the process of gathering and analyzing data on consumers, competitors, and market trends to inform business decisions. It involves collecting and interpreting data on customer preferences, behavior, and buying patterns, as well as assessing the market size, growth potential, and trends. Market research can help businesses identify opportunities, make informed decisions, and stay competitive.

News Monitoring: News monitoring is the process of tracking news sources for relevant and timely information. It involves collecting, analyzing, and disseminating news and media content to provide insights for decision-making, risk management, and strategic planning. News monitoring can be done manually or with the help of technology and software tools.

Email marketing: Email marketing is a digital marketing strategy that involves sending promotional messages to a group of people via email. Its goal is to build brand awareness, increase sales, and maintain customer loyalty. It can be an effective way to communicate with customers and build relationships with them.

Sentiment analysis: Sentiment analysis is the process of using natural language processing and machine learning techniques to identify and extract subjective information from text. It aims to determine the overall emotional tone of a piece of text, whether positive, negative, or neutral. It is commonly used in social media monitoring, customer service, and market research.

How to scrape the web

Web scraping is the process of extracting data from websites automatically using software tools. The process involves sending a web request to the website and then parsing the HTML response to extract the data.

There are several ways to scrape the web, but here are some general steps to follow:

Identify the target website.

Gather the URLs of the pages from which you wish to pull data.

Send a request to each of these URLs to obtain the page's HTML.

Use locators (such as CSS selectors or XPath expressions) to find the data in the HTML.

Save the data in a structured format, such as a JSON or CSV file.
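The steps above can be sketched in Node.js 18 or later, which ships a global `fetch`. To stay dependency-free, this example uses a regular expression as its "locator" to pull out each page's `<h1>` text; that is a simplification, and a real scraper would normally use an HTML parser such as Cheerio. The URLs and the `results.csv` file name are placeholders.

```javascript
const fs = require("fs");

// Identify the target site and gather the page URLs (placeholders here).
const urls = ["https://example.com/", "https://example.org/"];

// Locate the data: a simple regex "locator" for the first <h1> element's text.
// Regexes are fragile on real-world HTML; an HTML parser is more robust.
function extractHeading(html) {
  const match = html.match(/<h1[^>]*>([\s\S]*?)<\/h1>/i);
  return match ? match[1].trim() : null;
}

// Save in a structured format: CSV with every field quoted and embedded quotes doubled.
function toCsv(rows) {
  if (rows.length === 0) return "";
  const header = Object.keys(rows[0]);
  const escape = (v) => `"${String(v ?? "").replace(/"/g, '""')}"`;
  return [header, ...rows.map((row) => header.map((key) => row[key]))]
    .map((cols) => cols.map(escape).join(","))
    .join("\n");
}

// Send a request to each URL, extract the data, and write results.csv.
async function scrape() {
  const rows = [];
  for (const url of urls) {
    const res = await fetch(url); // global fetch: Node.js 18+
    const html = await res.text();
    rows.push({ url, heading: extractHeading(html) });
  }
  fs.writeFileSync("results.csv", toCsv(rows));
  return rows;
}

// Usage: scrape().then(console.log);
```

Swapping `toCsv` for `JSON.stringify(rows, null, 2)` would produce the JSON variant mentioned in the last step.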

Examples:

SEO marketers are the group most likely to be interested in Google searches. They scrape Google search results to compile keyword lists and gather TDK information for their SEO strategies. (TDK is short for Title, Description, and Keywords: the metadata of a web page. The title and description appear in the search result list and strongly influence the click-through rate.)
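To make TDK concrete: the title lives in the page's `<title>` element, and the description and keywords live in `<meta name="description">` and `<meta name="keywords">` tags. The sketch below pulls them out of an HTML string with regular expressions. It assumes the `name` attribute comes before `content` in each meta tag, so treat it as an illustration rather than a robust parser.

```javascript
// Extract TDK (title, description, keywords) metadata from raw HTML.
// Simplifying assumption: meta tags list the name attribute before content.
function extractTdk(html) {
  const title = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const meta = (name) => {
    // content=(["'])(.*?)\1 matches up to the same kind of quote that opened the value.
    const re = new RegExp(`<meta[^>]*name=["']${name}["'][^>]*content=(["'])(.*?)\\1`, "i");
    const m = html.match(re);
    return m ? m[2].trim() : null;
  };
  return {
    title: title ? title[1].trim() : null,
    description: meta("description"),
    keywords: meta("keywords"),
  };
}

const sample = `<head>
  <title>Handmade Leather Wallets</title>
  <meta name="description" content="Slim wallets stitched by hand.">
  <meta name="keywords" content="wallet, leather, handmade">
</head>`;
console.log(extractTdk(sample));
```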

Another example: an eBay seller diligently scrapes data from eBay and other e-commerce marketplaces on a regular basis, building up his own database over time for in-depth market research.

It is no surprise that Amazon is among the most scraped websites. Given its dominant position in the e-commerce industry, Amazon's data is highly representative for market research, and it has one of the largest product databases.

Two of the best tools for e-commerce scraping without coding

Octoparse: Octoparse is a web scraping tool that lets users extract data from websites through a user-friendly graphical interface, without the need for coding or programming skills.

ParseHub: ParseHub is a web scraping tool that lets users extract data from websites through a user-friendly interface and provides features such as scheduling and integration with other tools. It also offers advanced features such as JavaScript rendering and pagination handling.

Here are some web scraping best practices you should be aware of:

1. Continuously parse & verify extracted data

Data conversion, also known as data parsing, is the process of converting data from one format to another, such as from HTML to JSON, CSV, or any other format required. Data extraction from web sources must be followed by parsing. This makes it simpler for developers and data scientists to process and use the gathered data.

To make sure the crawler and parser are operating properly, manually check parsed data at regular intervals.
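Automated checks complement the manual spot checks recommended above. A minimal sketch, assuming each parsed record should have a non-empty `name`, a positive numeric `price`, and an absolute `url` (hypothetical field names); records that fail are reported, so a broken selector or a changed page layout gets noticed quickly.

```javascript
// Validate parsed records and collect problems instead of silently storing bad data.
// The expected fields (name, price, url) are hypothetical; adapt them to your parser's output.
function validateRecords(records) {
  const errors = [];
  records.forEach((rec, i) => {
    if (typeof rec.name !== "string" || rec.name.trim() === "") {
      errors.push(`record ${i}: missing name`);
    }
    if (typeof rec.price !== "number" || !Number.isFinite(rec.price) || rec.price <= 0) {
      errors.push(`record ${i}: invalid price ${rec.price}`);
    }
    try {
      new URL(rec.url); // throws for relative or malformed URLs
    } catch {
      errors.push(`record ${i}: invalid url ${rec.url}`);
    }
  });
  return errors;
}

const parsed = [
  { name: "Desk lamp", price: 24.99, url: "https://example.com/lamp" },
  { name: "", price: NaN, url: "/relative/path" },
];
console.log(validateRecords(parsed));
```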

2. Choose the right tool for your web scraping project

Building your own web scraper

Select the website from which you wish to get data.

Inspect the webpage's source code to find the page elements that contain the data you want to extract.

Write the program.

Run the code to send a request to the target website.

Store the extracted data in the format you need for further analysis.
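When the code runs, requests to the target website can time out or fail intermittently. One common approach (an assumption here, not something the steps above prescribe) is to give each request a timeout and retry transient failures with an increasing delay. The sketch uses the global `fetch` and `AbortSignal.timeout` available in Node.js 18+; the `fetchImpl` option is a hypothetical hook that only exists so the function can be exercised without a network.

```javascript
// Fetch a page's HTML with a per-request timeout and exponential-backoff retries.
// Retry count, delays, and timeout are illustrative defaults.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function fetchHtml(
  url,
  { retries = 3, baseDelayMs = 500, timeoutMs = 10000, fetchImpl = fetch } = {}
) {
  for (let attempt = 0; ; attempt++) {
    try {
      // AbortSignal.timeout aborts the request if it takes longer than timeoutMs.
      const res = await fetchImpl(url, { signal: AbortSignal.timeout(timeoutMs) });
      if (res.ok) return await res.text();
      const error = new Error(`HTTP ${res.status} for ${url}`);
      // Client errors (except 429 Too Many Requests) won't fix themselves, so don't retry them.
      error.retryable = res.status >= 500 || res.status === 429;
      throw error;
    } catch (err) {
      if (err.retryable === false || attempt >= retries) throw err;
      await sleep(baseDelayMs * 2 ** attempt); // 500 ms, 1 s, 2 s, ...
    }
  }
}

// Usage: fetchHtml("https://example.com/").then((html) => console.log(html.length));
```

Network errors and timeouts carry no `retryable` flag, so they are retried; a 404 fails immediately.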

Using a pre-built web scraper

There are many open-source and low/no-code pre-built web scrapers available.

3. Check whether the website offers an API

To check if a website supports an API, you can follow these steps:

Look for a section on the website labeled "API" or "Developers". This section may be located in the footer or header of the website.

If you cannot find a dedicated section for the API, try searching for keywords such as "API documentation" or "API integration" in the website's search bar.

If you still cannot find information about the API, you can contact the website's support team or customer service to inquire about API availability.

If the website offers an API, look for information on how to access it, such as authentication requirements, API endpoints, and data formats.

Review any API terms of use or documentation to ensure that your intended use of the API complies with their policies and guidelines.
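If the site does offer an API, calling it is usually simpler and more reliable than parsing HTML, because the data arrives already structured. A minimal sketch, assuming a hypothetical JSON endpoint (`https://api.example.com/v1/products`) that takes a `q` query parameter and a bearer token; the real endpoint, authentication scheme, and response shape must come from the site's API documentation. The optional `fetchImpl` parameter is likewise a hypothetical hook for testing.

```javascript
// Query a (hypothetical) JSON API instead of scraping HTML.
// The endpoint URL, query parameter, and bearer-token auth are assumptions for illustration.
async function fetchProducts(query, apiKey, fetchImpl = fetch) {
  const url = new URL("https://api.example.com/v1/products");
  url.searchParams.set("q", query);
  const res = await fetchImpl(url, {
    headers: { Authorization: `Bearer ${apiKey}`, Accept: "application/json" },
  });
  if (!res.ok) throw new Error(`API request failed with HTTP ${res.status}`);
  return res.json(); // already structured data: no HTML parsing needed
}

// Usage: fetchProducts("red shoes", process.env.API_KEY).then(console.log);
```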

4. Use a headless browser

For example, Puppeteer.

Web crawling (closely related to web scraping and screen scraping) is widely used in many fields today. Before web scraping tools became publicly available, however, crawling was out of reach for people with no programming skills.

Its high technical barrier kept many people from working with big data. A web scraping tool automates the crawling process and bridges the gap between everyday users and that data.

It eliminates repetitive tasks such as copying and pasting.

It organizes the retrieved data into well-structured formats, such as Excel, HTML, and CSV, among others.

It saves you time and money because you don’t have to hire a professional data analyst.

It is a solution for many people who lack technical skills, including marketers, dealers, journalists, YouTubers, academics, and many more.

Puppeteer

Puppeteer is a Node.js library that provides a high-level API for controlling Chrome/Chromium over the DevTools Protocol.

Puppeteer runs in headless mode by default, but it can be configured to run full (non-headless) Chrome/Chromium.

Note: Headless means a browser without a user interface, or “head.” When the browser is headless, the GUI is hidden, but pages are still loaded and the program still runs in the background.
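A minimal sketch of launching Puppeteer, loading a page, and reading content after the page's own JavaScript has run. It assumes `puppeteer` has been installed with `npm install puppeteer` (which also downloads a compatible browser); the URL and the default `h2` selector are placeholders. Passing `headless: false` opens a visible browser window instead, which is handy for debugging.

```javascript
// Launch a browser, load a page, and extract text after client-side rendering.
async function scrapeRendered(url, selector = "h2", { headless = true } = {}) {
  const puppeteer = require("puppeteer"); // loaded on first call; requires `npm install puppeteer`
  const browser = await puppeteer.launch({ headless }); // headless: false shows the browser UI
  try {
    const page = await browser.newPage();
    // Wait until network activity settles so JavaScript-rendered content is present.
    await page.goto(url, { waitUntil: "networkidle2" });
    // Run a function inside the page to collect the text of every matching element.
    return await page.$$eval(selector, (els) => els.map((el) => el.textContent.trim()));
  } finally {
    await browser.close(); // always release the browser process
  }
}

// Usage (placeholder URL): scrapeRendered("https://example.com").then(console.log);
```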

Puppeteer is a Node.js package that lets you automate a wide variety of browser operations, including opening pages, navigating across websites, evaluating JavaScript, and much more.

Most tasks that you can perform manually in the browser can be automated with Puppeteer!

Here are a few examples to get you started:

Create PDFs and screenshots of the pages.

Crawl a SPA (Single-Page Application) and generate pre-rendered content (i.e., server-side rendering, or "SSR").

Automate form submission, UI testing, keyboard input, etc.

Develop an automated testing environment utilizing the most recent JavaScript and browser capabilities.

Capture a timeline trace of your website to help diagnose performance issues.

Test Chrome Extensions.
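Several items on this list map directly onto Puppeteer page methods. The sketch below takes a full-page screenshot, saves a PDF, and fills in and submits a search form. It assumes `puppeteer` is installed; the URL, the selectors (`#search`, `button[type=submit]`), and the output file names are hypothetical placeholders that would need to match the real page.

```javascript
// Screenshot, PDF, and form automation with Puppeteer.
async function captureAndSearch(url, searchTerm) {
  const puppeteer = require("puppeteer"); // requires `npm install puppeteer`
  const browser = await puppeteer.launch(); // headless by default; page.pdf() needs headless mode
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: "networkidle2" });

    // Screenshots and PDFs of the page.
    await page.screenshot({ path: "page.png", fullPage: true });
    await page.pdf({ path: "page.pdf", format: "A4" });

    // Form submission and keyboard input: type into a (hypothetical) search box and submit.
    await page.type("#search", searchTerm);
    await Promise.all([
      page.waitForNavigation({ waitUntil: "networkidle2" }), // start waiting before clicking
      page.click("button[type=submit]"),
    ]);
    return page.url(); // URL of the results page
  } finally {
    await browser.close();
  }
}

// Usage (placeholder URL): captureAndSearch("https://example.com", "laptops").then(console.log);
```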

Cheerio

Cheerio is a widely used Node.js package for parsing HTML and XML.

It is a fast, flexible, and lean implementation of core jQuery designed specifically for the server.

Cheerio is considerably faster than Puppeteer because it only parses markup and does not run a browser.
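A minimal Cheerio sketch, assuming `cheerio` has been installed with `npm install cheerio`; the HTML snippet and its `.product`, `.name`, and `.price` classes are made up for illustration. `cheerio.load()` only parses the markup it is given, which is why it is fast, and also why content that client-side JavaScript would insert never appears.

```javascript
// Parse an HTML string with Cheerio and read data using jQuery-style selectors.
function parseProducts(html) {
  const cheerio = require("cheerio"); // requires `npm install cheerio`
  const $ = cheerio.load(html);
  return $(".product")
    .map((_, el) => ({
      name: $(el).find(".name").text().trim(),
      price: parseFloat($(el).find(".price").text().replace(/[^0-9.]/g, "")),
      link: $(el).find("a").attr("href"),
    }))
    .get(); // convert the Cheerio selection into a plain array
}

const html = `
  <div class="product"><a href="/p/1"><span class="name">Mug</span></a><span class="price">$8.50</span></div>
  <div class="product"><a href="/p/2"><span class="name">Teapot</span></a><span class="price">$21.00</span></div>`;
// parseProducts(html) returns [{ name: "Mug", price: 8.5, link: "/p/1" }, { name: "Teapot", price: 21, link: "/p/2" }]
```

The same selectors could be used with Puppeteer's `page.$$eval`, but only Puppeteer would see content rendered by the page's scripts.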

Difference between Cheerio and Puppeteer

Cheerio is merely a DOM parser that helps you explore raw HTML and XML data. It does not execute any JavaScript on the page.

Puppeteer runs a complete browser, executes all JavaScript, and handles all XHR requests.

Note: XHR (XMLHttpRequest) lets a page send network requests from the browser to a server without reloading the page.

Conclusion

In conclusion, Node.js lets web developers build robust web scrapers for efficient data extraction, and libraries such as Puppeteer and Cheerio streamline the process. However, it is essential to prioritize legal and ethical considerations when using Node.js for web scraping, to ensure responsible data extraction practices.


TECHSOFT WEB SOLUTIONS stands out as a top web design firm in Cochin (Kochi), Kerala, India. The company builds websites of international caliber for businesses. Main goal? Quality, productivity, and making customers happy.

The TECHSOFT team brings together young, talented IT experts, including marketing advisors, web designers, and coders who deliver high-quality website design projects.

TECHSOFT specializes in building websites with Laravel, React, Node.js, the Yii framework, and PHP. Its services include web design and development, e-commerce web development, mobile applications, digital marketing, and search engine optimisation.
