#numpy ones array


Text

This is not quite how coding works. Once you find out the proper way to do something in coding, there's an additional step where you feel like a huge idiot because in retrospect it is blindingly obvious that your way is too much work. Like

Wait, why don't you flicker when you're flying? What do you mean I can fly by just telekinetically pushing against the ground? No I've been consecutively teleporting myself successively higher off the ground a hundred times a minute. Yeah no it did take a huge amount of power but I just figured flying was hard. No I know bumblebees do it I just uh.

i love pitting classically trained magic users against self-taught magic users in sci-fi/fantasy but it shouldn’t be snobbish disdain for them it should be terror

#I am taking this math class and there was a little side note in one of the labs about how numpy can reshape arrays#(and like of course it can why wouldn't that be a thing it can do)#but I spent a stupid amount of time writing a pair of functions to do that by like iterating across the arrays and then carefully testing#but sure yeah np.reshape() good to know
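For reference, here is a minimal sketch of the difference the post describes. `manual_reshape` is a hypothetical stand-in for a hand-written loop (not the poster's actual code); `ndarray.reshape` gives the same result in one call, filling rows in C (row-major) order by default.

```python
import numpy as np


def manual_reshape(flat, rows, cols):
    """Hypothetical hand-written reshape: fill a rows x cols grid row by row."""
    flat = np.asarray(flat)
    if flat.size != rows * cols:
        raise ValueError("cannot reshape: sizes do not match")
    out = np.empty((rows, cols), dtype=flat.dtype)
    for i in range(rows):
        for j in range(cols):
            out[i, j] = flat[i * cols + j]
    return out


a = np.arange(6)                 # [0 1 2 3 4 5]
by_hand = manual_reshape(a, 2, 3)
built_in = a.reshape(2, 3)       # same as np.reshape(a, (2, 3))
print(built_in)
# [[0 1 2]
#  [3 4 5]]
print(np.array_equal(by_hand, built_in))  # True
print(a.reshape(3, -1).shape)             # (3, 2): -1 lets NumPy infer that dimension
```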

183K notes


Text

im really just THAT stupid huh

#tütensuppe#at least i found the error before this went to permanent storage but. holy fuck#its not numpy its me.....#yk im calculating timestamps for array values where one value has a timestamp#and then the next 499 do NOT#so i forcibly set the endpoint to the last value of the original timestamps#COMPLETELY DISREGARDING THAT 499 MORE VALUES COME AFTER THIS!!!#this only tripped me when i tried to go microseconds -> nanoseconds to avoid saving floats (int is smaller)#bc while using linspace i kept getting weird step sizes and with arange the array kept turning out too short#specifically. 499 values too short. fucking hell
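A minimal sketch of the fix, assuming evenly spaced samples and one timestamp per block of 500 values (the stamped value plus the 499 that follow it). The input timestamps are made up. The key point is that the true end time is the last stamp plus 499 steps, not the last stamp itself: with the wrong endpoint, `np.arange` comes up exactly 499 values short, and `np.linspace` has to squeeze 1500 points into the shorter span, which gives a non-integer step (10,000,000 / 1499 ns in this example).

```python
import numpy as np

BLOCK = 500  # one stamped value followed by 499 unstamped ones

# Made-up block timestamps in microseconds, converted to integer nanoseconds
block_starts_us = np.array([0, 5_000, 10_000], dtype=np.int64)
block_starts_ns = block_starts_us * 1_000

# Assumption: samples are evenly spaced, so step = spacing between stamps / BLOCK
spacing_ns = np.diff(block_starts_ns)
if np.any(spacing_ns % BLOCK):
    raise ValueError("step would not be a whole number of nanoseconds")
step_ns = int(spacing_ns[0] // BLOCK)  # 10_000 ns here

n_values = BLOCK * len(block_starts_ns)  # 1500 values in total

# Buggy: stop at the last *original* timestamp, ignoring the 499 values after it
buggy = np.arange(block_starts_ns[0], block_starts_ns[-1] + step_ns, step_ns)
print(len(buggy), n_values - len(buggy))  # 1001 499

# Fixed: the real end is the last stamp plus (BLOCK - 1) further steps
end_ns = block_starts_ns[-1] + (BLOCK - 1) * step_ns
fixed = np.arange(block_starts_ns[0], end_ns + step_ns, step_ns, dtype=np.int64)
print(len(fixed) == n_values, fixed[-1])  # True 14990000

# Alternative: anchor each block to its own stamp (useful if the stamps drift slightly)
offsets = np.arange(BLOCK, dtype=np.int64) * step_ns
per_block = (block_starts_ns[:, None] + offsets).ravel()
print(np.array_equal(per_block, fixed))  # True
```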

1 note


Text

downsides of doing an assignment worth half a course at the last possible minute and submitting with <6 hours to go before the (extended) deadline: this one's probably taken a good three months off my life

upsides of doing an assignment worth half a course at the last possible minute and submitting with <6 hours to go before the (extended) deadline: it's possible that no human being has ever gone so quickly from knowing so little to knowing so much about numpy arrays. this is like seeing the charred remains of a man you knowingly sent out to his death and knowing that you have to send out four-five more into barely, fractionally safer circumstances before the month is out to meet the regime's demands because you know that shit is in your brain now for good, that shit's never leaving. this doesn't sound like an upside actually

9 notes


Text

Python Libraries to Learn Before Tackling Data Analysis

To tackle data analysis effectively in Python, it's crucial to become familiar with several libraries that streamline the process of data manipulation, exploration, and visualization. Here's a breakdown of the essential libraries:

1. NumPy

- Purpose: Numerical computing.

- Why Learn It: NumPy provides support for large multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently.

- Key Features:

- Fast array processing.

- Mathematical operations on arrays (e.g., sum, mean, standard deviation).

- Linear algebra operations.
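A short sketch of the features listed above: vectorized aggregations and a linear solve. The arrays are arbitrary example values.

```python
import numpy as np

data = np.array([[1.0, 2.0, 3.0],
                 [4.0, 5.0, 6.0]])

# Vectorized aggregations, no Python loops; axis=0 aggregates down each column
total = data.sum()              # 21.0
col_means = data.mean(axis=0)   # [2.5 3.5 4.5]
spread = data.std()             # population standard deviation (ddof=0 by default)

# Linear algebra: solve the system A @ x = b
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)       # approximately [2. 3.]
print(total, col_means, np.allclose(A @ x, b))
```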

2. Pandas

- Purpose: Data manipulation and analysis.

- Why Learn It: Pandas offers data structures like DataFrames, making it easier to handle and analyze structured data.

- Key Features:

- Reading/writing data from CSV, Excel, SQL databases, and more.

- Handling missing data.

- Powerful group-by operations.

- Data filtering and transformation.
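A minimal sketch touching each feature above. The CSV content is made up and read from a string via `io.StringIO`; with a real file you would pass its path to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Made-up CSV content; pd.read_csv also accepts a file path or URL
csv_text = """region,product,sales
North,A,100
North,B,
South,A,80
South,B,120
"""
df = pd.read_csv(io.StringIO(csv_text))

# Missing data: the empty cell is read as NaN; here we fill it with 0
print(df["sales"].isna().sum())  # 1
df["sales"] = df["sales"].fillna(0)

# Group-by: total sales per region
totals = df.groupby("region")["sales"].sum()
print(totals.to_dict())  # {'North': 100.0, 'South': 200.0}

# Filtering and transformation: keep large sales, add a derived column
big = df[df["sales"] >= 100].copy()
big["sales_thousands"] = big["sales"] / 1000

# Writing: to_csv returns a string when no path is given
out = df.to_csv(index=False)
```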

3. Matplotlib

- Purpose: Data visualization.

- Why Learn It: Matplotlib is one of the most widely used plotting libraries in Python, allowing for a wide range of static, animated, and interactive plots.

- Key Features:

- Line plots, bar charts, histograms, scatter plots.

- Customizable charts (labels, colors, legends).

- Integration with Pandas for quick plotting.
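A minimal sketch, assuming a headless environment (hence the `Agg` backend) and made-up monthly figures. The left panel uses Matplotlib directly with labels, a color, and a legend; the right one uses the Pandas `DataFrame.plot` wrapper, which draws onto a Matplotlib `Axes`.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: renders without a display
import matplotlib.pyplot as plt
import pandas as pd

# Made-up data for illustration
df = pd.DataFrame({"month": [1, 2, 3, 4], "revenue": [10, 12, 9, 15]})

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 3))

# Plain Matplotlib line plot with labels, a color, and a legend
ax1.plot(df["month"], df["revenue"], color="tab:blue", marker="o", label="Revenue")
ax1.set_xlabel("Month")
ax1.set_ylabel("Revenue")
ax1.set_title("Line plot")
ax1.legend()

# Pandas integration: DataFrame.plot draws onto the given Matplotlib Axes
df.plot(x="month", y="revenue", kind="bar", ax=ax2, color="tab:orange", legend=False)
ax2.set_title("Bar chart via pandas")

fig.tight_layout()
buf = io.BytesIO()
fig.savefig(buf, format="png")  # or fig.savefig("revenue.png") to write a file
plt.close(fig)
```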

4. Seaborn

- Purpose: Statistical data visualization.

- Why Learn It: Built on top of Matplotlib, Seaborn simplifies the creation of attractive and informative statistical graphics.

- Key Features:

- High-level interface for drawing attractive statistical graphics.

- Easier to use for complex visualizations like heatmaps, pair plots, etc.

- Visualizations based on categorical data.

5. SciPy

- Purpose: Scientific and technical computing.

- Why Learn It: SciPy builds on NumPy and provides additional functionality for complex mathematical operations and scientific computing.

- Key Features:

- Optimized algorithms for numerical integration, optimization, and more.

- Statistics, signal processing, and linear algebra modules.
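A small sketch of the three areas above: `integrate.quad` for numerical integration, `optimize.minimize_scalar` for one-dimensional optimization, and `stats.ttest_ind` for a two-sample t-test. The samples are invented for illustration.

```python
import numpy as np
from scipy import integrate, optimize, stats

# Numerical integration: the integral of sin(x) from 0 to pi is exactly 2
area, abs_err = integrate.quad(np.sin, 0, np.pi)

# Optimization: the minimum of (x - 3)^2 + 1 is at x = 3
res = optimize.minimize_scalar(lambda x: (x - 3) ** 2 + 1)

# Statistics: two-sample t-test on small invented samples
a = np.array([5.1, 4.9, 5.3, 5.0, 5.2])
b = np.array([5.8, 6.1, 5.9, 6.0, 5.7])
t_stat, p_value = stats.ttest_ind(a, b)

print(f"area={area:.6f}  argmin={res.x:.4f}  p={p_value:.2e}")
```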

6. Scikit-learn

- Purpose: Machine learning and statistical modeling.

- Why Learn It: Scikit-learn provides simple and efficient tools for data mining, analysis, and machine learning.

- Key Features:

- Classification, regression, and clustering algorithms.

- Dimensionality reduction, model selection, and preprocessing utilities.
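A minimal sketch combining preprocessing, dimensionality reduction, and model selection in one pipeline. It uses the Iris dataset that ships with scikit-learn; the choice of two PCA components and logistic regression is arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)  # small dataset bundled with scikit-learn

# Preprocessing -> dimensionality reduction -> classifier, chained as one estimator
model = make_pipeline(
    StandardScaler(),
    PCA(n_components=2),
    LogisticRegression(max_iter=1000),
)

# Model selection: 5-fold cross-validated accuracy
scores = cross_val_score(model, X, y, cv=5)
print(f"mean accuracy: {scores.mean():.3f}")
```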

7. Statsmodels

- Purpose: Statistical analysis.

- Why Learn It: Statsmodels allows users to explore data, estimate statistical models, and perform tests.

- Key Features:

- Linear regression, logistic regression, time series analysis.

- Statistical tests and models for descriptive statistics.

8. Plotly

- Purpose: Interactive data visualization.

- Why Learn It: Plotly allows for the creation of interactive and web-based visualizations, making it ideal for dashboards and presentations.

- Key Features:

- Interactive plots like scatter, line, bar, and 3D plots.

- Easy integration with web frameworks.

- Dashboards and web applications with Dash.

9. TensorFlow/PyTorch (Optional)

- Purpose: Machine learning and deep learning.

- Why Learn It: If your data analysis involves machine learning, these libraries will help in building, training, and deploying deep learning models.

- Key Features:

- Tensor processing and automatic differentiation.

- Building neural networks.

10. Dask (Optional)

- Purpose: Parallel computing for data analysis.

- Why Learn It: Dask scales data manipulation by parallelizing NumPy- and Pandas-style operations across cores or machines, making it well suited to datasets too large to fit in memory.

- Key Features:

- Works with NumPy, Pandas, and Scikit-learn.

- Handles large data and parallel computations easily.

Focusing on NumPy, Pandas, Matplotlib, and Seaborn will set a strong foundation for basic data analysis.

8 notes


Text

How much Python should one learn before beginning machine learning?

Before diving into machine learning, a solid understanding of Python is essential. Here is what that covers:

Basic Python Knowledge:

Syntax and Data Types:

Understand Python syntax, basic data types (strings, integers, floats), and operations.

Control Structures:

Learn how to use conditionals (if statements), loops (for and while), and list comprehensions.
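A quick sketch of the control structures above (the values are arbitrary):

```python
def classify(n):
    """Conditionals: return a label depending on the sign of n."""
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    else:
        return "positive"


# for loop over a list
labels = []
for n in [-2, 0, 5]:
    labels.append(classify(n))

# while loop: halve x until it drops below 1, counting the steps
x, steps = 10.0, 0
while x >= 1:
    x /= 2
    steps += 1

# List comprehension: build a list in one expression, with an optional filter
squares_of_evens = [n * n for n in range(10) if n % 2 == 0]

print(labels)            # ['negative', 'zero', 'positive']
print(steps)             # 4
print(squares_of_evens)  # [0, 4, 16, 36, 64]
```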

Data Handling Libraries:

Pandas:

Familiarize yourself with Pandas for data manipulation and analysis. Learn how to work with DataFrames and Series, and how to perform data cleaning and transformations.

NumPy:

Understand NumPy for numerical operations, working with arrays, and performing mathematical computations.

Data Visualization:

Matplotlib and Seaborn:

Learn basic plotting with Matplotlib and Seaborn for visualizing data and understanding trends and distributions.

Basic Programming Concepts:

Functions:

Know how to define and use functions to create reusable code.

File Handling:

Learn how to read from and write to files, which is important for handling datasets.
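A small sketch of functions and file handling together, using only the standard library `csv` module. The helper names and the file name are hypothetical, and the file is written to a temporary directory.

```python
import csv
import os
import tempfile


def write_rows(path, rows):
    """Write a list of dicts to a CSV file, using the first row's keys as the header."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


def read_rows(path):
    """Read a CSV file back as a list of dicts; every value comes back as a string."""
    with open(path, newline="") as f:
        return [dict(row) for row in csv.DictReader(f)]


# Hypothetical file name inside a fresh temporary directory
path = os.path.join(tempfile.mkdtemp(), "scores.csv")
write_rows(path, [{"name": "a", "score": 90}, {"name": "b", "score": 75}])
rows = read_rows(path)
print(rows)  # [{'name': 'a', 'score': '90'}, {'name': 'b', 'score': '75'}]
```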

Basic Statistics:

Descriptive Statistics:

Understand mean, median, mode, standard deviation, and other basic statistical concepts.

Probability:

Basic knowledge of probability is useful for understanding concepts like distributions and statistical tests.
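A sketch using Python's built-in `statistics` module for the descriptive measures, plus a simple simulation for probability. The data list is arbitrary; the dice example estimates a probability whose exact value is 6/36.

```python
import random
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

print(statistics.mean(data))    # 5
print(statistics.median(data))  # 4.5
print(statistics.mode(data))    # 4
print(statistics.pstdev(data))  # 2.0 (population standard deviation)
print(statistics.stdev(data))   # sample standard deviation (n - 1 denominator)

# Probability: estimate P(sum of two dice == 7) by simulation
random.seed(0)
trials = 100_000
hits = sum(random.randint(1, 6) + random.randint(1, 6) == 7 for _ in range(trials))
estimate = hits / trials  # should land close to 6/36, about 0.1667
print(estimate)
```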

Libraries for Machine Learning:

Scikit-learn:

Get familiar with Scikit-learn for basic machine learning tasks like classification, regression, and clustering. Understand how to use it for training models, evaluating performance, and making predictions.
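A minimal train/predict/evaluate loop, assuming the bundled Iris dataset and a decision tree as the model (any scikit-learn classifier follows the same `fit`/`predict` pattern).

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Hold out 25% of the rows to evaluate on data the model has not seen
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

clf = DecisionTreeClassifier(random_state=42)
clf.fit(X_train, y_train)             # training
y_pred = clf.predict(X_test)          # making predictions
acc = accuracy_score(y_test, y_pred)  # evaluating performance
print(f"test accuracy: {acc:.2f}")
```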

Hands-on Practice:

Projects:

Work on small projects or Kaggle competitions to apply your Python skills in practical scenarios. This helps in understanding how to preprocess data, train models, and interpret results.

In summary, a good grasp of Python basics, data handling, and basic statistics will prepare you well for starting with machine learning. Hands-on practice with machine learning libraries and projects will further solidify your skills.

To learn more, drop me a message!

2 notes


Text

Working on a Python project for weeks and I'm adamant we use Numpy. I'm new to numpy, and my partner knows no Python. I'm convinced this'll be super efficient because Numpy is good.

We can't get Numpy to work, we spend weeks getting everything working and nothing is working.

We end up getting rid of all of it and using standard Python arrays.

Make more progress in one night than we did in weeks.

I was a gigantic asshat for insisting we do something a certain way. It was legitimately detrimental to doing what we needed to do. Now the professor and my partner are working on it, because this went from a class assignment to a potential research paper (worth noting that we couldn't have succeeded without the professor's help, so he's cool). At the end of the day, everything I contributed has been removed, except the weeks of sleepless nights I wasted, and that is specifically and unequivocally on me.

I feel stupid and asshattish and as if I owe someone something. I'm acting defensive and I shouldn't because they're right when they say that this should be done in the easy straightforward way.

I feel like lashing out and I can't do that. I'm aware I'm in the wrong. I was wrong and I'm angry that it feels like I'm a prick

3 notes


Text

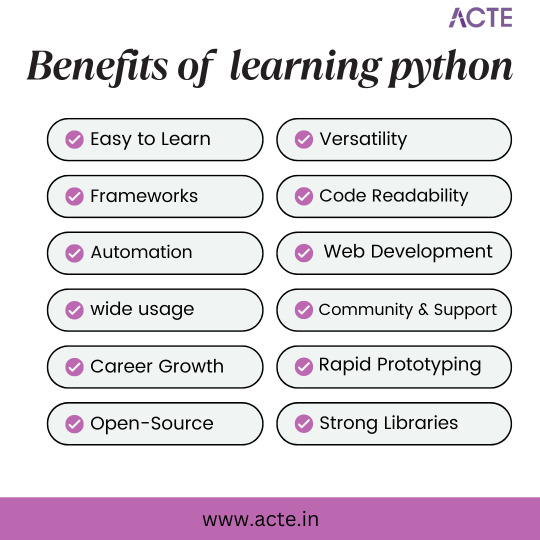

Exploring Python and Its Incredible Benefits:

Python, a versatile programming language known for its simplicity and adaptability, holds a prominent position in the technological landscape. Originating in the late 1980s, Python has garnered substantial attention due to its user-friendly syntax, making it an accessible choice for individuals at all levels of programming expertise. Notably, Python's design principles prioritize code clarity, empowering developers to articulate their ideas effectively and devise elegant solutions.

Python's applicability spans a multitude of domains, encompassing web development, data analysis, artificial intelligence, and scientific computing, among others. Its rich array of libraries and frameworks enhances efficiency in diverse tasks, including crafting dynamic websites, automating routine processes, processing and interpreting data, and constructing intricate applications.

The confluence of Python's flexibility and robust community support has driven its widespread adoption across varied industries. Whether one is a newcomer or an accomplished programmer, Python constitutes a potent toolset for software development and systematic problem-solving.

The following list highlights the benefits of learning Python:

Accessible Learning: Python's straightforward syntax shortens the learning curve, letting you focus on logical problem-solving rather than on language intricacies.

Versatility in Application: Python's versatility finds expression in applications spanning web development, data analysis, AI, and more, cultivating diverse avenues for career exploration.

Data Insight and Analysis: Python's specialized libraries, such as NumPy and Pandas, empower adept data analysis and visualization, enhancing data-driven decision-making.

AI and Machine Learning Proficiency: Python's repository of libraries, including Scikit-Learn, empowers the creation of sophisticated algorithms and AI models.

Web Development Prowess: Python's frameworks, notably Django, facilitate the swift development of dynamic, secure web applications, underscoring its relevance in modern web environments.

Efficient Prototyping: Python's agile development capabilities facilitate the rapid creation of prototypes and experimental models, fostering innovation.

Community Collaboration: The dynamic Python community serves as a wellspring of resources and support, nurturing an environment of continuous learning and problem resolution.

Varied Career Prospects: Proficiency in Python translates to an array of roles across diverse sectors, reflecting the expanding demand for skilled practitioners.

Cross-Disciplinary Impact: Python's adaptability transcends industries, permeating sectors such as finance, healthcare, e-commerce, and scientific research.

Open-Source Advantage: Python's open-source nature encourages collaboration, fostering ongoing refinement and communal contribution.

Robust Toolset: Python's toolkit simplifies complex tasks and accelerates development, enhancing productivity.

Code Elegance: Python's elegant syntax fosters code legibility, promoting teamwork and fostering shared comprehension.

Professional Advancement: Proficiency in Python translates into promising career advancement opportunities and the potential for competitive compensation.

Future-Proofed Skills: Python's enduring prevalence and versatile utility ensure that acquired skills remain pertinent within evolving technological landscapes.

In summation, Python's stature as a versatile, user-friendly programming language stands as a testament to its enduring relevance. Its impact is palpable across industries, driving innovation and technological progress.

If you want to learn more about Python, feel free to contact ACTE Institution because they offer certifications and job opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

10 notes


Text

How you can use python for data wrangling and analysis

Python is a powerful and versatile programming language that can be used for various purposes, such as web development, data science, machine learning, automation, and more. One of the most popular applications of Python is data analysis, which involves processing, cleaning, manipulating, and visualizing data to gain insights and make decisions.

In this article, we will introduce some of the basic concepts and techniques of data analysis using Python, focusing on the data wrangling and analysis process. Data wrangling is the process of transforming raw data into a more suitable format for analysis, while data analysis is the process of applying statistical methods and tools to explore, summarize, and interpret data.

To perform data wrangling and analysis with Python, we will use two of the most widely used libraries: Pandas and NumPy. Pandas is a library that provides high-performance data structures and operations for manipulating tabular data, such as Series and DataFrame. NumPy is a library that provides fast and efficient numerical computations on multidimensional arrays, such as ndarray.

We will also use some other libraries that are useful for data analysis, such as Matplotlib and Seaborn for data visualization, SciPy for scientific computing, and Scikit-learn for machine learning.

To follow along with this article, you will need to have Python 3.6 or higher installed on your computer, as well as the libraries mentioned above. You can install them using pip or conda commands. You will also need a code editor or an interactive environment, such as Jupyter Notebook or Google Colab.

Let’s get started with some examples of data wrangling and analysis with Python.

Example 1: Analyzing COVID-19 Data

In this example, we will use Python to analyze the COVID-19 data from the World Health Organization (WHO). The data contains the daily situation reports of confirmed cases and deaths by country from January 21, 2020 to October 23, 2023. You can download the data from the WHO website.

First, we need to import the libraries that we will use:

    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns

Next, we need to load the data into a Pandas DataFrame:

    df = pd.read_csv('WHO-COVID-19-global-data.csv')

We can use the head() method to see the first five rows of the DataFrame:

    df.head()

Output:

    Date_reported  Country_code  Country      WHO_region  New_cases  Cumulative_cases  New_deaths  Cumulative_deaths
    2020-01-21     AF            Afghanistan  EMRO        0          0                 0           0
    2020-01-22     AF            Afghanistan  EMRO        0          0                 0           0
    2020-01-23     AF            Afghanistan  EMRO        0          0                 0           0
    2020-01-24     AF            Afghanistan  EMRO        0          0                 0           0
    2020-01-25     AF            Afghanistan  EMRO        0          0                 0           0

We can use the info() method to see some basic information about the DataFrame, such as the number of rows and columns, the data types of each column, and the memory usage:

    df.info()

Output:

    <class 'pandas.core.frame.DataFrame'>
    RangeIndex: 163800 entries, 0 to 163799
    Data columns (total 8 columns):
     #   Column             Non-Null Count   Dtype
    ---  ------             --------------   -----
     0   Date_reported      163800 non-null  object
     1   Country_code       162900 non-null  object
     2   Country            163800 non-null  object
     3   WHO_region         163800 non-null  object
     4   New_cases          163800 non-null  int64
     5   Cumulative_cases   163800 non-null  int64
     6   New_deaths         163800 non-null  int64
     7   Cumulative_deaths  163800 non-null  int64
    dtypes: int64(4), object(4)
    memory usage: 10.0+ MB

We can see that there are some missing values in the Country_code column. We can use the isnull() method to check which rows have missing values:

    df[df.Country_code.isnull()]

Output:

    Date_reported  Country_code  Country                                      WHO_region  New_cases  Cumulative_cases  New_deaths  Cumulative_deaths
    2020-01-21     NaN           International conveyance (Diamond Princess)  WPRO        0          0                 0           0
    2020-01-22     NaN           International conveyance (Diamond Princess)  WPRO        0          0                 0           0
    ...            ...           ...                                          ...         ...        ...               ...         ...
    2023-10-22     NaN           International conveyance (Diamond Princess)  WPRO        0          712               0           13
    2023-10-23     NaN           International conveyance (Diamond Princess)  WPRO        0          712               0           13

We can see that the missing values are from the rows that correspond to the International conveyance (Diamond Princess), which is a cruise ship that had a COVID-19 outbreak in early 2020. Since this is not a country, we can either drop these rows or assign them a unique code, such as ‘IC’. For simplicity, we will drop these rows using the dropna() method:

    df = df.dropna()
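One caveat worth checking about those missing values: by default `pd.read_csv` treats the literal string `NA` as a missing value, and `NA` is also the ISO 3166-1 alpha-2 code for Namibia. Whether that affects this particular file is an assumption to verify against the raw CSV; the two rows below are made up to show the behavior and the `keep_default_na` / `na_values` options that control it.

```python
import io

import pandas as pd

# Made-up rows for illustration only
csv_text = "Country_code,Country\nNA,Namibia\nAF,Afghanistan\n"

# Default parsing: the string "NA" is treated as missing
default = pd.read_csv(io.StringIO(csv_text))
print(default["Country_code"].isna().tolist())  # [True, False]

# Only treat truly empty cells as missing
strict = pd.read_csv(io.StringIO(csv_text), keep_default_na=False, na_values=[""])
print(strict["Country_code"].tolist())  # ['NA', 'AF']
```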

We can also check the data types of each column using the dtypes attribute:

    df.dtypes

Output:

    Date_reported        object
    Country_code         object
    Country              object
    WHO_region           object
    New_cases             int64
    Cumulative_cases      int64
    New_deaths            int64
    Cumulative_deaths     int64
    dtype: object

We can see that the Date_reported column is of type object, which means it is stored as a string. However, we want to work with dates as a datetime type, which allows us to perform date-related operations and calculations. We can use the to_datetime() function to convert the column to a datetime type:

    df['Date_reported'] = pd.to_datetime(df['Date_reported'])

We can also use the describe() method to get some summary statistics of the numerical columns, such as the mean, standard deviation, minimum, maximum, and quartiles:

    df.describe()

Output:

                New_cases  Cumulative_cases     New_deaths  Cumulative_deaths
    count   162900.000000     162900.000000  162900.000000      162900.000000
    mean      1138.300062     116955.140160      23.486789        2647.346237
    std       6631.825489     665728.383017     137.256012       15435.833525
    min     -32952.000000     -32952.000000   -1918.000000       -1918.000000
    25%         -1.000000         -1.000000      -1.000000          -1.000000
    50%         -1.000000         -1.000000      -1.000000          -1.000000
    75%         -1.000000         -1.000000      -1.000000          -1.000000
    max         -1.000000         -1.000000      -1.000000          -1.000000

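We can see that there are some negative values in the New_cases, Cumulative_cases, New_deaths, and Cumulative_deaths columns, which are likely due to data errors or corrections. We can set all of these negative values to zero by clipping the numeric columns at a lower bound of 0 (df.replace(-1, 0) would only catch values that are exactly -1, while the minimum here is -32952):

    count_cols = ['New_cases', 'Cumulative_cases', 'New_deaths', 'Cumulative_deaths']
    df[count_cols] = df[count_cols].clip(lower=0)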

Now that we have cleaned and prepared the data, we can start to analyze it and answer some questions, such as:

Which countries have the highest number of cumulative cases and deaths?

How has the pandemic evolved over time in different regions and countries?

What is the current situation of the pandemic in India?

To answer these questions, we will use some of the methods and attributes of Pandas DataFrame, such as:

groupby(): This method allows us to group the data by one or more columns and apply aggregation functions, such as sum, mean, and count, to each group.

sort_values(): This method allows us to sort the data by one or more columns, in ascending or descending order.

loc[]: This attribute allows us to select a subset of the data by labels or conditions.

plot(): This method allows us to create various types of plots from the data, such as line, bar, pie, and scatter plots.
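A sketch of how those four methods might answer the questions above. The frame below is a tiny made-up stand-in with the same column names as the WHO file, not real data, and the `Agg` backend is assumed so the plot renders without a display.

```python
import matplotlib
matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt
import pandas as pd

# Tiny made-up frame with the WHO file's column names (values are NOT real data)
df = pd.DataFrame({
    "Date_reported": pd.to_datetime(["2023-10-22", "2023-10-23"] * 3),
    "Country": ["India", "India", "Brazil", "Brazil", "Japan", "Japan"],
    "WHO_region": ["SEARO", "SEARO", "AMRO", "AMRO", "WPRO", "WPRO"],
    "New_cases": [10, 12, 30, 25, 5, 7],
    "Cumulative_cases": [1000, 1012, 3000, 3025, 500, 507],
    "New_deaths": [0, 1, 2, 1, 0, 0],
    "Cumulative_deaths": [50, 51, 90, 91, 10, 10],
})

# Highest cumulative totals: each country's latest row, sorted descending
latest = df.sort_values("Date_reported").groupby("Country").tail(1)
top = latest.sort_values("Cumulative_cases", ascending=False)[
    ["Country", "Cumulative_cases", "Cumulative_deaths"]
]
print(top)

# Evolution over time by region: new cases summed per region per day
by_region = df.groupby(["Date_reported", "WHO_region"])["New_cases"].sum().unstack()
ax = by_region.plot(kind="line", title="New cases by WHO region")
plt.close("all")

# Current situation in one country, selected with loc[] and a boolean condition
india = df.loc[df["Country"] == "India", ["Date_reported", "New_cases", "Cumulative_cases"]]
print(india.tail(1))
```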

If you want to learn Python from scratch, check out e-Tuitions to learn Python online. They teach Python and other coding languages, they have some of the best teachers for their students, and, most importantly, you can book a free demo for any class.

#python#coding#programming#programming languages#python tips#python learning#python programming#python development

2 notes


Text

Financial Modeling in the Age of AI: Skills Every Investment Banker Needs in 2025

In 2025, the landscape of financial modeling is undergoing a profound transformation. What was once a painstaking, spreadsheet-heavy process is now being reshaped by Artificial Intelligence (AI) and machine learning tools that automate calculations, generate predictive insights, and even draft investment memos.

But here's the truth: AI isn't replacing investment bankers—it's reshaping what they do.

To stay ahead in this rapidly evolving environment, professionals must go beyond traditional Excel skills and learn how to collaborate with AI. Whether you're a finance student, an aspiring analyst, or a working professional looking to upskill, mastering AI-augmented financial modeling is essential. And one of the best ways to do that is by enrolling in a hands-on, industry-relevant investment banking course in Chennai.

What is Financial Modeling, and Why Does It Matter?

Financial modeling is the art and science of creating representations of a company's financial performance. These models are crucial for:

Valuing companies (e.g., through DCF or comparable company analysis)

Making investment decisions

Forecasting growth and profitability

Evaluating mergers, acquisitions, or IPOs

Traditionally built in Excel, models used to take hours—or days—to build and test. Today, AI-powered assistants can build basic frameworks in minutes.

How AI Is Revolutionizing Financial Modeling

The impact of AI on financial modeling is nothing short of revolutionary:

1. Automated Data Gathering and Cleaning

AI tools can automatically extract financial data from balance sheets, income statements, or even PDFs—eliminating hours of manual entry.

2. AI-Powered Forecasting

Machine learning algorithms can analyze historical trends and produce data-driven forecasts far more quickly than manually updated static models, although their accuracy depends on how well past patterns carry forward.

3. Instant Model Generation

AI assistants like ChatGPT with code interpreters, or Excel’s new Copilot feature, can now generate model templates (e.g., LBO, DCF) instantly, letting analysts focus on insights rather than formulas.

4. Scenario Analysis and Sensitivity Testing

With AI, you can generate multiple scenarios—best case, worst case, expected case—in seconds. These tools can even flag risks and assumptions automatically.

However, the human role isn't disappearing. Investment bankers are still needed to define model logic, interpret results, evaluate market sentiment, and craft the narrative behind the numbers.

What AI Can’t Do (Yet): The Human Advantage

Despite all the hype, AI still lacks:

Business intuition

Ethical judgment

Client understanding

Strategic communication skills

This means future investment bankers need a hybrid skill set: equally comfortable with financial principles and modern tools.

Essential Financial Modeling Skills for 2025 and Beyond

Here are the most in-demand skills every investment banker needs today:

1. Excel + AI Tool Proficiency

Excel isn’t going anywhere, but it’s getting smarter. Learn to use AI-enhanced functions, dynamic arrays, macros, and Copilot features for rapid modeling.

2. Python and SQL

Python libraries like Pandas, NumPy, and Scikit-learn are used for custom forecasting and data analysis. SQL is crucial for pulling financial data from large databases.
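A minimal sketch of the SQL-then-Pandas workflow, using an in-memory SQLite database from the standard library. The `prices` table, tickers, and values are hypothetical.

```python
import sqlite3

import pandas as pd

# In-memory SQLite database standing in for a real financial data store
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE prices (ticker TEXT, date TEXT, close REAL)")
conn.executemany(
    "INSERT INTO prices VALUES (?, ?, ?)",
    [("AAA", "2025-01-02", 100.0), ("AAA", "2025-01-03", 102.0),
     ("BBB", "2025-01-02", 50.0), ("BBB", "2025-01-03", 49.0)],
)

# SQL does the filtering and ordering; pandas takes over for analysis
query = "SELECT date, close FROM prices WHERE ticker = ? ORDER BY date"
aaa = pd.read_sql_query(query, conn, params=("AAA",))
aaa["return"] = aaa["close"].pct_change()  # simple daily return
print(aaa)
conn.close()
```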

3. Data Visualization

Tools like Power BI, Tableau, and Excel dashboards help communicate results effectively.

4. Valuation Techniques

DCF, LBO, M&A models, and comparable company analysis remain core to investment banking.

5. AI Integration and Prompt Engineering

Knowing how to interact with AI (e.g., writing effective prompts for ChatGPT to generate model logic) is a power skill in 2025.

Why Enroll in an Investment Banking Course in Chennai?

As AI transforms finance, the demand for skilled professionals who can use technology without losing touch with core finance principles is soaring.

If you're based in South India, enrolling in an investment banking course in Chennai can set you on the path to success. Here's why:

✅ Hands-on Training

Courses now include live financial modeling projects, AI-assisted model-building, and exposure to industry-standard tools.

✅ Expert Mentors

Learn from professionals who’ve worked in top global banks, PE firms, and consultancies.

✅ Placement Support

With Chennai growing as a finance and tech hub, top employers are hiring from local programs offering real-world skills.

✅ Industry Relevance

The best courses in Chennai combine finance, analytics, and AI—helping you become job-ready in the modern investment banking world.

Whether you're a student, working professional, or career switcher, investing in the right course today can prepare you for the next decade of finance.

Case Study: Using AI in a DCF Model

Imagine you're evaluating a tech startup for acquisition. Traditionally, you’d:

Download financials

Project revenue growth

Build a 5-year forecast

Calculate terminal value

Discount cash flows
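The traditional steps above can be sketched as a small DCF function. This is a simplified illustration under stated assumptions, not a production model: free cash flow grows at a constant rate over the forecast, the terminal value uses the Gordon growth formula, and cash flows are discounted at year end. `dcf_value` and all inputs are hypothetical; the loop at the bottom shows the kind of worst/expected/best scenario run described earlier.

```python
def dcf_value(fcf0, growth, years, discount_rate, terminal_growth):
    """Hypothetical helper: enterprise value from a simple DCF with year-end discounting."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    value = 0.0
    fcf = fcf0
    for t in range(1, years + 1):
        fcf *= 1 + growth                         # project next year's free cash flow
        value += fcf / (1 + discount_rate) ** t   # discount it back to today
    # Gordon growth terminal value at the end of the forecast, then discounted
    terminal = fcf * (1 + terminal_growth) / (discount_rate - terminal_growth)
    value += terminal / (1 + discount_rate) ** years
    return value


# Scenario analysis: same model, different growth assumptions (all inputs made up)
for name, g in [("worst", 0.02), ("expected", 0.08), ("best", 0.15)]:
    v = dcf_value(fcf0=10.0, growth=g, years=5, discount_rate=0.10, terminal_growth=0.02)
    print(name, round(v, 1))
```

With zero growth and a 10% discount rate, the value collapses to a perpetuity of 10 / 0.10 = 100, which is a handy sanity check.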

With AI tools:

Financials are extracted via OCR and organized automatically.

Forecast assumptions are suggested based on industry data.

Scenario-based DCF models are generated in minutes.

You spend your time refining assumptions and crafting the investment story.

This is what the future of financial modeling looks like—and why upskilling is critical.

Final Thoughts: Evolve or Be Left Behind

AI isn’t the end of financial modeling—it’s the beginning of a new era. In this future, the best investment bankers are not just Excel wizards—they’re strategic thinkers, storytellers, and tech-powered analysts.

By embracing this change and mastering modern modeling skills, you can future-proof your finance career.

And if you're serious about making that leap, enrolling in an investment banking course in Chennai can provide the training, exposure, and credibility to help you rise in the AI age.

0 notes

Text

How Python Can Be Used in Finance: Applications, Benefits & Real-World Examples

In the rapidly evolving world of finance, staying ahead of the curve is essential. One of the most powerful tools at the intersection of technology and finance today is Python. Known for its simplicity and versatility, Python has become a go-to programming language for financial professionals, data scientists, and fintech companies alike.

This blog explores how Python is used in finance, the benefits it offers, and real-world examples of its applications in the industry.

Why Python in Finance?

Python stands out in the finance world because of its:

Ease of use: Simple syntax makes it accessible to professionals from non-programming backgrounds.

Rich libraries: Packages like Pandas, NumPy, Matplotlib, Scikit-learn, and PyAlgoTrade support a wide array of financial tasks.

Community support: A vast, active user base means better resources, tutorials, and troubleshooting help.

Integration: Easily interfaces with databases, Excel, web APIs, and other tools used in finance.

Key Applications of Python in Finance

1. Data Analysis & Visualization

Financial analysis relies heavily on large datasets. Python’s libraries like Pandas and NumPy are ideal for:

Time-series analysis

Portfolio analysis

Risk assessment

Cleaning and processing financial data

Visualization tools like Matplotlib, Seaborn, and Plotly allow users to create interactive charts and dashboards.
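A minimal sketch of time-series and portfolio analysis with Pandas and NumPy. Prices and weights are made up; the rolling standard deviation serves as a simple risk measure.

```python
import numpy as np
import pandas as pd

# Made-up daily closing prices for two assets on business days
dates = pd.date_range("2025-01-01", periods=6, freq="B")
prices = pd.DataFrame(
    {"AAA": [100, 101, 99, 102, 104, 103],
     "BBB": [50, 50.5, 51, 50, 49.5, 50.5]},
    index=dates,
)

returns = prices.pct_change().dropna()      # daily simple returns (time-series analysis)
weights = np.array([0.6, 0.4])              # hypothetical portfolio weights
portfolio = returns @ weights               # daily portfolio returns
rolling_vol = portfolio.rolling(3).std()    # 3-day rolling volatility (risk)
cumulative = (1 + portfolio).cumprod() - 1  # compounded portfolio return over the period
print(cumulative.iloc[-1])
```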

2. Algorithmic Trading

Python is a favorite among algo traders due to its speed of development and ease of prototyping.

Backtesting strategies using libraries like Backtrader and Zipline

Live trading integration with brokers via APIs (e.g., Alpaca, Interactive Brokers)

Strategy optimization using historical data
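A bare-bones moving-average crossover backtest in plain Pandas, to show the idea. This is not the Backtrader or Zipline API, it ignores transaction costs and slippage, and the price series is a synthetic random walk rather than market data.

```python
import numpy as np
import pandas as pd

# Synthetic price path (geometric random walk); not real market data
rng = np.random.default_rng(0)
prices = pd.Series(100 * np.exp(np.cumsum(rng.normal(0, 0.01, 250))))

fast = prices.rolling(10).mean()
slow = prices.rolling(50).mean()

# Long (1) when the fast average is above the slow one, flat (0) otherwise.
# shift(1) trades on yesterday's signal, which avoids look-ahead bias.
position = (fast > slow).astype(int).shift(1).fillna(0)
daily_ret = prices.pct_change().fillna(0)
strategy_ret = position * daily_ret

print("buy & hold:", (1 + daily_ret).prod() - 1)
print("crossover: ", (1 + strategy_ret).prod() - 1)
```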

3. Risk Management & Analytics

With Python, financial institutions can simulate market scenarios and model risk using:

Monte Carlo simulations

Value at Risk (VaR) models

Stress testing

These help firms manage exposure and regulatory compliance.
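A minimal Monte Carlo VaR sketch. It assumes normally distributed daily returns with made-up parameters, which is a simplification (real returns tend to have fatter tails), plus a single hypothetical stress scenario.

```python
import numpy as np

rng = np.random.default_rng(42)

portfolio_value = 1_000_000  # hypothetical portfolio size
mu, sigma = 0.0005, 0.02     # assumed daily mean return and volatility
n_sims = 100_000

# Monte Carlo: simulate one-day returns and convert them to losses
simulated_returns = rng.normal(mu, sigma, n_sims)
losses = -portfolio_value * simulated_returns

# 99% one-day VaR: the loss exceeded in only 1% of simulated scenarios
var_99 = np.percentile(losses, 99)
print(f"99% 1-day VaR: {var_99:,.0f}")

# Stress test: loss from a hypothetical sudden 8% drop
stress_loss = portfolio_value * 0.08
print(f"stress loss: {stress_loss:,.0f}")
```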

4. Financial Modeling & Forecasting

Python can be used to build predictive models for:

Stock price forecasting

Credit scoring

Loan default prediction

Scikit-learn, TensorFlow, and XGBoost are popular libraries for machine learning applications in finance.

5. Web Scraping & Sentiment Analysis

Real-time data from financial news, social media, and websites can be scraped using BeautifulSoup and Scrapy. Python’s NLP tools (like NLTK, spaCy, and TextBlob) can be used for sentiment analysis to gauge market sentiment and inform trading strategies.

Benefits of Using Python in Finance

✅ Fast Development

Python allows for quick development and iteration of ideas, which is crucial in a dynamic industry like finance.

✅ Cost-Effective

As an open-source language, Python reduces licensing and development costs.

✅ Customization

Python empowers teams to build tailored solutions that fit specific financial workflows or trading strategies.

✅ Scalability

From small analytics scripts to large-scale trading platforms, Python can handle applications of various complexities.

Real-World Examples

💡 JPMorgan Chase

Developed a proprietary Python-based platform called Athena to manage risk, pricing, and trading across its investment banking operations.

💡 Quantopian (acquired by Robinhood)

Used Python for developing and backtesting trading algorithms. Users could write Python code to create and test strategies on historical market data.

💡 BlackRock

Utilizes Python for data analytics and risk management to support investment decisions across its portfolio.

💡 Robinhood

Leverages Python for backend services, data pipelines, and fraud detection algorithms.

Getting Started with Python in Finance

Want to get your hands dirty? Here are a few resources:

Books:

Python for Finance by Yves Hilpisch

Machine Learning for Asset Managers by Marcos López de Prado

Online Courses:

Coursera: Python and Statistics for Financial Analysis

Udemy: Python for Financial Analysis and Algorithmic Trading

Practice Platforms:

QuantConnect

Alpaca

Interactive Brokers API

Final Thoughts

Python is transforming the financial industry by providing powerful tools to analyze data, build models, and automate trading. Whether you're a finance student, a data analyst, or a hedge fund quant, learning Python opens up a world of possibilities.

As finance becomes increasingly data-driven, Python will continue to be a key differentiator in gaining insights and making informed decisions.

Do you work in finance or aspire to? Want help building your first Python financial model? Let me know, and I’d be happy to help!


Python for Data Science: The Only Guide You Need to Get Started in 2025

Data is the lifeblood of modern business, powering decisions in healthcare, finance, marketing, sports, and more. And at the core of it all lies a powerful and beginner-friendly programming language — Python.

Whether you’re an aspiring data scientist, analyst, or tech enthusiast, learning Python for data science is one of the smartest career moves you can make in 2025.

In this guide, you’ll learn:

Why Python is the preferred language for data science

The libraries and tools you must master

A beginner-friendly roadmap

How to get started with a free full course on YouTube

Why Python is the #1 Language for Data Science

Python has earned its reputation as the go-to language for data science, and here's why:

1. Easy to Learn, Easy to Use

Python’s syntax is clean, simple, and intuitive. You can focus on solving problems rather than struggling with the language itself.

2. Rich Ecosystem of Libraries

Python offers thousands of specialized libraries for data analysis, machine learning, and visualization.

3. Community and Resources

With a vibrant global community, you’ll never run out of tutorials, forums, or project ideas to help you grow.

4. Integration with Tools & Platforms

From Jupyter notebooks to cloud platforms like AWS and hosted notebooks like Google Colab, Python runs almost everywhere data work happens.

What You Can Do with Python in Data Science

Let’s look at real tasks you can perform using Python, and the tools for each:

Data cleaning & manipulation: Pandas, NumPy

Data visualization: Matplotlib, Seaborn, Plotly

Machine learning: Scikit-learn, XGBoost

Deep learning: TensorFlow, PyTorch

Statistical analysis: Statsmodels, SciPy

Big data integration: PySpark, Dask

Python lets you go from raw data to actionable insight — all within a single ecosystem.

A Beginner's Roadmap to Learn Python for Data Science

If you're starting from scratch, follow this step-by-step learning path:

✅ Step 1: Learn Python Basics

Variables, data types, loops, conditionals

Functions, file handling, error handling

✅ Step 2: Explore NumPy

Arrays, broadcasting, numerical computations

✅ Step 3: Master Pandas

DataFrames, filtering, grouping, merging datasets

✅ Step 4: Visualize with Matplotlib & Seaborn

Create charts, plots, and visual dashboards

✅ Step 5: Intro to Machine Learning

Use Scikit-learn for classification, regression, clustering

✅ Step 6: Work on Real Projects

Apply your knowledge to real-world datasets (Kaggle, UCI, etc.)
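Step 2 mentions broadcasting, NumPy's rule for combining arrays of different shapes without writing loops. Here is a minimal sketch with made-up numbers:

```python
import numpy as np

# Rows are observations, columns are features (made-up values).
data = np.array([[1.0, 10.0],
                 [2.0, 20.0],
                 [3.0, 30.0]])

col_means = data.mean(axis=0)   # shape (2,): one mean per column
centered = data - col_means     # (3, 2) - (2,) is applied to every row

# A (3, 1) column vector broadcasts across columns instead.
row_scale = np.array([[1.0], [0.5], [0.1]])
scaled = data * row_scale       # (3, 2) * (3, 1) -> (3, 2)

print(col_means)
print(centered)
print(scaled)
```

NumPy compares shapes from the right. A dimension of size 1, or a missing one, is stretched to match, and incompatible shapes raise a `ValueError`.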

Who Should Learn Python for Data Science?

Python is incredibly beginner-friendly and widely used, making it ideal for:

Students looking to future-proof their careers

Working professionals planning a transition to data

Analysts who want to automate and scale insights

Researchers working with data-driven models

Developers diving into AI, ML, or automation

How Long Does It Take to Learn?

You can grasp Python fundamentals in 2–3 weeks with consistent daily practice. To become proficient in data science using Python, expect to spend 3–6 months, depending on your pace and project experience.

The good news? You don’t need to do it alone.

🎓 Learn Python for Data Science – Full Free Course on YouTube

We’ve put together a FREE, beginner-friendly YouTube course that covers everything you need to start your data science journey using Python.

📘 What You’ll Learn:

Python programming basics

NumPy and Pandas for data handling

Matplotlib for visualization

Scikit-learn for machine learning

Real-life datasets and projects

Step-by-step explanations

📺 Watch the full course now → 👉 Python for Data Science Full Course

You’ll walk away with job-ready skills and project experience — at zero cost.

🧭 Final Thoughts

Python isn’t just a programming language — it’s your gateway to the future.

By learning Python for data science, you unlock opportunities across industries, roles, and technologies. The demand is high, the tools are ready, and the learning path is clearer than ever.

Don’t let analysis paralysis hold you back.

Click here to start learning now → https://youtu.be/6rYVt_2q_BM


Step-by-Step Python NumPy Tutorial with Real-Life Examples

If you are starting with Python and want to explore data science or numerical computing, then this Python NumPy Tutorial is perfect for you. NumPy stands for “Numerical Python” and is one of the most important libraries used in data analysis, scientific computing, and machine learning.

NumPy makes it easy to work with large sets of numbers. Its core data structure, the array (`ndarray`), stores values of a single type in one block of memory, which makes it faster and more memory-efficient than a regular Python list for numerical work. With NumPy arrays, you can apply operations like addition, subtraction, and multiplication to every element at once, without writing a loop.
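Here is a quick sketch of that list-versus-array difference. It uses made-up prices and quantities and only core NumPy operations:

```python
import numpy as np

prices = [10.0, 12.5, 8.0]
quantities = [3, 2, 5]

# Plain Python lists: element-wise math needs a loop or comprehension.
totals_list = [p * q for p, q in zip(prices, quantities)]

# NumPy arrays: the same operation applies to every element at once.
p = np.array(prices)
q = np.array(quantities)
totals = p * q            # element-wise multiplication
with_tax = totals * 1.08  # a scalar is applied to every element
print(totals, totals.sum())

# Careful: "+" concatenates lists but adds arrays element-wise.
print(prices + prices)    # 6-element list
print(p + p)              # 3-element array
```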

This Python NumPy Tutorial helps you understand the basics of arrays, data types, and array operations. It also introduces important features like indexing, slicing, and reshaping arrays, which let you select and rearrange data with a single expression instead of a hand-written loop.
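This is what indexing, slicing, and reshaping look like in practice. It is a small illustrative example and is not taken from the full tutorial:

```python
import numpy as np

a = np.arange(12)        # the values 0, 1, ..., 11
print(a[3])              # single element
print(a[2:8:2])          # slice with a step: elements 2, 4, 6
print(a[a % 2 == 0])     # boolean mask: only the even values

grid = a.reshape(3, 4)   # same 12 values as 3 rows x 4 columns
print(grid[1])           # second row
print(grid[:, 2])        # third column
print(grid[1:, :2])      # bottom two rows, first two columns

flat = grid.reshape(-1)  # -1 lets NumPy infer the length (12)
```

`reshape` only works when the total number of elements stays the same. For example, `a.reshape(5, 3)` raises a `ValueError` because 5 x 3 is not 12.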

NumPy also supports working with multi-dimensional data. This means you can handle tables, matrices, and higher-dimensional data easily. Whether you’re working with simple numbers or complex datasets, NumPy gives you the tools to analyze and manipulate them effectively.
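For multi-dimensional data, the `axis` argument controls which dimensions an aggregation collapses. The "sales" numbers here are placeholders generated with `np.arange`:

```python
import numpy as np

# Hypothetical sales cube: 2 stores x 3 products x 4 quarters.
sales = np.arange(24).reshape(2, 3, 4)

print(sales.ndim, sales.shape)        # 3 (2, 3, 4)
per_store = sales.sum(axis=(1, 2))    # collapse products and quarters -> shape (2,)
per_quarter = sales.sum(axis=(0, 1))  # collapse stores and products -> shape (4,)
store0_table = sales[0]               # a 2-D (3, 4) matrix for store 0
print(per_store, per_quarter)
```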

In short, this tutorial is a great starting point for beginners. It breaks down complex concepts into easy steps, making it simple to understand and follow. If you're planning to learn data science or work with big data in Python, learning NumPy is a must.

To read the full tutorial, visit Python NumPy Tutorial.


Python for Data Science: Libraries You Must Know

Python has become the go-to programming language for data science professionals due to its readability, extensive community support, and a rich ecosystem of libraries. Whether you're analyzing data, building machine learning models, or creating stunning visualizations, Python has the right tools to get the job done. If you're looking to start a career in this field, enrolling in the best Python training in Hyderabad can give you a competitive edge and help you master these crucial libraries.

1. NumPy – The Foundation of Numerical Computing

NumPy is the backbone of scientific computing with Python. It offers efficient storage and manipulation of large numerical arrays, which makes it indispensable for high-performance data analysis. NumPy arrays are faster and more compact than traditional Python lists and serve as the foundation for other data science libraries.
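To illustrate the "more compact" claim, this sketch compares the buffer size of an `int64` array with a rough estimate of a list's memory use. The `sys.getsizeof` figures are CPython-specific and vary by platform and version, so treat them as indicative only:

```python
import sys
import numpy as np

n = 1_000
arr = np.arange(n, dtype=np.int64)  # one contiguous buffer of 8-byte integers
lst = list(range(n))                # a list of references to separate int objects

print(arr.dtype, arr.itemsize, arr.nbytes)  # int64 8 8000

# getsizeof(lst) covers the list object and its reference array only,
# so the int objects themselves are added separately (rough estimate).
list_bytes = sys.getsizeof(lst) + sum(sys.getsizeof(x) for x in lst)
print(list_bytes)  # platform-dependent; noticeably larger than arr.nbytes
```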

2. Pandas – Data Wrangling Made Simple

Pandas is essential for handling structured data. Data structures such as Series and DataFrame make it easy to clean, transform, and explore data. With Pandas, tasks like filtering rows, merging datasets, and grouping values become effortless, saving time and effort in data preprocessing.
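Here is a minimal sketch of filtering, merging, and grouping with Pandas. The `orders` and `customers` tables are made up for illustration:

```python
import pandas as pd

# Made-up example tables.
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer_id": [10, 11, 10, 12],
    "amount": [250.0, 80.0, 120.0, 300.0],
})
customers = pd.DataFrame({
    "customer_id": [10, 11, 12],
    "region": ["North", "South", "North"],
})

big_orders = orders[orders["amount"] > 100]                      # filter rows
merged = orders.merge(customers, on="customer_id", how="left")   # join tables
by_region = merged.groupby("region")["amount"].sum()             # group + aggregate
print(big_orders)
print(by_region)
```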

3. Matplotlib and Seaborn – Data Visualization Powerhouses

Matplotlib is the most widely used Python library for creating basic to advanced data visualizations. From bar graphs to histograms and line charts, Matplotlib covers it all. For more visually appealing and statistically rich plots, Seaborn, which is built on top of Matplotlib, is an excellent choice. It simplifies the process of creating complex plots and applies more polished default styling.

4. Scikit-learn – Machine Learning Made Easy

Scikit-learn is one of the most widely used Python libraries for implementing machine learning algorithms. It provides easy-to-use tools for classification, regression, clustering, and model evaluation, making it ideal for both beginners and experts.

5. TensorFlow and PyTorch – Deep Learning Frameworks

For those diving into artificial intelligence and deep learning, TensorFlow and PyTorch are essential. These frameworks allow developers to create, train, and deploy neural networks for applications such as image recognition, speech processing, and natural language understanding.

Begin Your Data Science Journey with Expert Training

Mastering these libraries opens the door to countless opportunities in the data science field. To gain hands-on experience and real-world skills, enroll in SSSIT Computer Education, where our expert trainers provide industry-relevant, practical Python training tailored for aspiring data scientists in Hyderabad.


Using Python for Data Science: Essential Libraries and Tools

If you’re looking to start your journey in data science, enrolling at the Best Python Training Institute in Hyderabad can give you a head start. Python has become the most widely used language in data science due to its simplicity, readability, and powerful ecosystem of libraries and tools. Here’s a breakdown of the essential ones every aspiring data scientist should know.

1. NumPy and Pandas – For Data Handling

NumPy provides support for large, multi-dimensional arrays and mathematical operations, while Pandas is essential for data manipulation and analysis. Together, they make cleaning and processing datasets efficient and intuitive.
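A typical cleaning pass combines a few Pandas methods. This sketch uses a small, made-up table with a missing value, a duplicate row, and inconsistent text. Which steps you need, and whether mean imputation is appropriate, depends on your data:

```python
import numpy as np
import pandas as pd

# Made-up messy data: missing values, a duplicate row, inconsistent text.
raw = pd.DataFrame({
    "city": ["Pune", "pune ", "Delhi", "Delhi", None],
    "temp_c": [31.0, np.nan, 28.5, 28.5, 30.0],
})

clean = raw.dropna(subset=["city"]).copy()             # drop rows with no city
clean["city"] = clean["city"].str.strip().str.title()  # normalize whitespace/case
clean = clean.drop_duplicates()                        # remove exact duplicate rows
clean["temp_c"] = clean["temp_c"].fillna(clean["temp_c"].mean())  # impute the mean
print(clean)
```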

2. Matplotlib and Seaborn – For Data Visualization

Visualizing data is a critical part of any data science workflow. Matplotlib allows you to create basic graphs and charts, while Seaborn builds on it by offering more advanced statistical plots with beautiful styling.

3. Scikit-Learn – For Machine Learning

This library offers simple and efficient tools for predictive data analysis. Whether you're working on classification, regression, or clustering, Scikit-Learn makes it easy to implement machine learning algorithms.

4. Jupyter Notebooks – For Interactive Coding

Jupyter Notebooks provide a user-friendly interface for writing and sharing Python code. They are especially useful in data science because they combine live code, equations, and visualizations in one document.

Conclusion

Mastering these tools and libraries will prepare you for real-world data science challenges. If you're ready to gain practical knowledge through hands-on training, consider joining SSS IT Computer Education, where expert guidance meets industry-relevant learning.


Unlock Your Coding Superpower: Mastering Python, Pandas, Numpy for Absolute Beginners

If you've ever thought programming looked like a superpower — something only a chosen few could wield — it's time to change that narrative. Learning to code is no longer a mystery, and Python is your easiest gateway into this world. But what if you're a complete beginner? No background, no experience, no idea where to start?

Good news: Python, Pandas, and NumPy were practically made for you.

In this blog, we’ll walk you through why these tools are ideal for anyone just starting out. And if you want a structured, guided path, we highly recommend diving into this complete beginner-friendly course: 👉 Mastering Python, Pandas, Numpy for Absolute Beginners 👈

Let’s start unlocking your coding potential — one simple step at a time.

Why Start With Python?

Let’s keep it real. Python is one of the most beginner-friendly programming languages out there. Its syntax is clear, clean, and intuitive — almost like writing English. This makes it the perfect entry point for new coders.

Here’s what makes Python shine for absolute beginners:

Easy to Read and Write: You don’t need to memorize complex symbols or deal with cryptic syntax.

Huge Community Support: Got stuck? The internet is full of answers — from Stack Overflow to YouTube tutorials.

Used Everywhere: From web development to data analysis, Python is behind some of the world’s most powerful applications.

So whether you want to analyze data, automate tasks, or build apps, Python is your go-to language.

Where Do Pandas and NumPy Fit In?

Great question.

While Python is the language, Pandas and NumPy are the power tools that make data handling and analysis easy and efficient.

🧠 What Is NumPy?

NumPy (short for Numerical Python) is a library designed for high-performance numerical computing. In simple terms, it helps you do math with arrays — fast and efficiently.

Think of NumPy as your calculator, but one that works on entire arrays of numbers at once. It's perfect for:

Performing mathematical operations on large datasets

Creating multi-dimensional arrays

Working with matrices and linear algebra
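Solving a small system of linear equations shows NumPy's linear algebra support. The system below is an arbitrary example:

```python
import numpy as np

# Solve the system:  2x + y = 5  and  x + 3y = 10  (solution: x = 1, y = 3)
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([5.0, 10.0])

x = np.linalg.solve(A, b)  # generally preferred over np.linalg.inv(A) @ b
print(x)

# "@" is matrix multiplication; check that A @ x reproduces b.
print(np.allclose(A @ x, b))
```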

🧠 What Is Pandas?

If NumPy is your calculator, Pandas is your Excel on steroids.

Pandas is a Python library that lets you manipulate, analyze, and clean data in tabular form (just like spreadsheets). It’s ideal for:

Importing CSV or Excel files

Cleaning messy data

Analyzing large datasets quickly

In short: Pandas + NumPy + Python = Data Analysis Superpowers.

Real Talk: Why You Should Learn This Trio Now

The demand for Python programmers, especially those who can work with data, has skyrocketed. From tech companies to banks, from hospitals to online retailers — data is the currency, and Python is the language of that currency.

Still unsure? Let’s break down the benefits:

1. No Prior Experience Needed

You don't need to have written a single line of code to start learning this trio. Beginner material is designed for learners who are starting from ground zero.

2. Fast Career Opportunities

Roles like Data Analyst, Python Developer, or even Automation Tester are open to beginners with these skills.

3. Used by Top Companies

Google, Netflix, NASA — they all use Python with Pandas and NumPy in various ways.

4. Perfect for Freelancers and Entrepreneurs

Want to automate your invoices, sort data, or build small tools for clients? This skillset is gold.

What You’ll Learn in the Course (and Why It Works)

The course Mastering Python, Pandas, Numpy for Absolute Beginners is not just a crash course — it’s a well-paced, thoughtfully designed bootcamp that makes learning fun, easy, and practical.

Here's what makes it a winner:

✅ Step-by-Step Python Foundation

Install Python and set up your workspace

Learn variables, loops, functions, and conditionals

Build confidence with coding exercises

✅ Hands-On NumPy Training

Create arrays and matrices

Use NumPy’s built-in functions for quick calculations

Apply real-life examples to understand concepts better

✅ Practical Pandas Projects

Import and clean data from files

Slice, filter, and aggregate data

Create powerful visualizations and summaries

✅ Real-World Applications

From data cleaning to basic automation, this course helps you build practical projects that show up on portfolios and get noticed by recruiters.

✅ Learn at Your Own Pace

No pressure. You can go slow or fast, revisit lessons, and even practice with downloadable resources.

From Absolute Beginner to Confident Coder — Your Journey Starts Here

Let’s paint a picture.

You’re sitting at your laptop, coffee in hand. You type a few lines of code. You see the output — data neatly cleaned, or graphs beautifully rendered. It clicks. You feel empowered. You’re not just learning code anymore — you’re using it.

That’s the journey this course promises. It doesn’t throw complex concepts at you. It holds your hand and builds your confidence until you feel like you can take on real-world problems.

And the best part? You’ll be surprised how quickly things start making sense.

👉 Ready to experience that feeling? Enroll in Mastering Python, Pandas, Numpy for Absolute Beginners

Common Myths (And Why They’re Wrong)

Before we wrap up, let’s bust a few myths that might be holding you back.

❌ “I need a math or computer science background.”

Nope. This course is designed for non-tech people. It’s friendly, guided, and explained in simple language.

❌ “It’ll take years to learn.”

Wrong again. You’ll be surprised how much you can learn in just a few weeks if you stay consistent.

❌ “It’s only useful for data scientists.”

Python, Pandas, and NumPy are used in marketing, HR, finance, healthcare, e-commerce — the list goes on.

What Past Learners Are Saying

“I was terrified to even open Python. Now I’m analyzing datasets like a pro. This course literally changed my life!” – Priya K., Student

“I tried learning on YouTube but kept getting confused. This course explained things step-by-step. I finally get it.” – James M., Freelancer

“As a small business owner, I used Python to automate my reports. Saved me hours every week.” – Aamir T., Entrepreneur

Your First Step Starts Today

You don’t need to be a genius to learn Python. You just need a guide, a plan, and a little bit of curiosity.

Python, Pandas, and NumPy are your starting tools — powerful enough to transform how you work, think, and problem-solve. And once you begin, you'll wonder why you didn’t start sooner.

So why wait?

🚀 Click here to start your learning journey today: 👉 Mastering Python, Pandas, Numpy for Absolute Beginners


Top 10 Free Coding Tutorials on Coding BrushUp You Shouldn’t Miss

If you're passionate about learning to code or just starting your programming journey, Coding BrushUp is your go-to platform. With a wide range of beginner-friendly and intermediate tutorials, it’s built to help you brush up your skills in languages like Java, Python, and web development technologies. Best of all? Many of the tutorials are absolutely free.

In this blog, we’ll highlight the top 10 free coding tutorials on Coding BrushUp that you simply shouldn’t miss. Whether you're aiming to master the basics or explore real-world projects, these tutorials will give you the knowledge boost you need.

1. Introduction to Python Programming – Coding BrushUp Python Tutorial

Python is one of the most beginner-friendly languages, and the Coding BrushUp Python Tutorial series starts you off with the fundamentals. This course covers:

● Setting up Python on your machine

● Variables, data types, and basic syntax

● Loops, functions, and conditionals

● A mini project to apply your skills

Whether you're a student or an aspiring data analyst, this free tutorial is perfect for building a strong foundation.

📌 Try it here: Coding BrushUp Python Tutorial

2. Java for Absolute Beginners – Coding BrushUp Java Tutorial

Java is widely used in Android development and enterprise software. The Coding BrushUp Java Tutorial is designed for complete beginners, offering a step-by-step guide that includes:

● Setting up Java and IntelliJ IDEA or Eclipse

● Understanding object-oriented programming (OOP)

● Working with classes, objects, and inheritance

● Creating a simple console-based application

This tutorial is one of the highest-rated courses on the site and is a great entry point into serious backend development.

📌 Explore it here: Coding BrushUp Java Tutorial

3. Build a Personal Portfolio Website with HTML & CSS

Learning to create your own website is an essential skill. This hands-on tutorial walks you through building a personal portfolio using just HTML and CSS. You'll learn:

● Basic structure of HTML5

● Styling with modern CSS3

● Responsive layout techniques

● Hosting your portfolio online

Perfect for freelancers and job seekers looking to showcase their skills.

4. JavaScript Basics: From Zero to DOM Manipulation

JavaScript powers the interactivity on the web, and this tutorial gives you a solid introduction. Key topics include:

● JavaScript syntax and variables

● Functions and events

● DOM selection and manipulation

● Simple dynamic web page project

By the end, you'll know how to create interactive web elements without relying on frameworks.

5. Version Control with Git and GitHub – Beginner’s Guide

Knowing how to use Git is essential for collaboration and managing code changes. This free tutorial covers:

● Installing Git

● Basic Git commands: clone, commit, push, pull

● Branching and merging

● Using GitHub to host and share your code

Even if you're a solo developer, mastering Git early will save you time and headaches later.

6. Simple CRUD App with Java (Console-Based)

In this tutorial, Coding BrushUp teaches you how to create a simple CRUD (Create, Read, Update, Delete) application in Java. It's a natural next step after the Coding BrushUp Java Tutorial. You'll learn:

● Working with Java arrays or ArrayList

● Creating menu-driven applications

● Handling user input with Scanner

● Structuring reusable methods

This project-based learning reinforces core programming concepts and logic building.

7. Python for Data Analysis: A Crash Course

If you're interested in data science or analytics, this Coding BrushUp Python Tutorial focuses on:

● Using libraries like Pandas and NumPy

● Reading and analyzing CSV files

● Data visualization with Matplotlib

● Performing basic statistical operations
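The sketch below shows one way to read a CSV and compute basic statistics with Pandas. The prices are made up, and `io.StringIO` stands in for a file so the example runs on its own. Plotting with Matplotlib is left out:

```python
import io
import pandas as pd

# Stand-in for a CSV file on disk; with a real file use pd.read_csv("path.csv").
csv_text = """date,close
2024-01-02,100.0
2024-01-03,102.0
2024-01-04,99.0
2024-01-05,103.0
"""

df = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"])
df["daily_return"] = df["close"].pct_change()  # first row is NaN (no previous day)

print(df["close"].describe())  # count, mean, std, min, quartiles, max
print(df["daily_return"].mean())  # NaN is skipped by default
```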

It’s a fast-track intro to one of the hottest career paths in tech.

8. Responsive Web Design with Flexbox and Grid

This tutorial dives into two powerful layout modules in CSS:

● Flexbox: for one-dimensional layouts

● Grid: for two-dimensional layouts

You’ll build multiple responsive sections and gain experience with media queries, making your websites look great on all screen sizes.

9. Java Object-Oriented Concepts – Intermediate Java Tutorial

For those who’ve already completed the Coding BrushUp Java Tutorial, this intermediate course is the next logical step. It explores:

● Inheritance and polymorphism

● Interfaces and abstract classes

● Encapsulation and access modifiers

● Real-world Java class design examples

You’ll write cleaner, modular code and get comfortable with real-world Java applications.

10. Build a Mini Calculator with Python (GUI Version)

This hands-on Coding BrushUp Python Tutorial teaches you how to build a desktop calculator using Tkinter, a built-in Python GUI library. You’ll learn:

● GUI design principles

● Button, entry, and event handling

● Function mapping and error checking

● Packaging a desktop application

A fun and visual way to practice Python programming!

Why Choose Coding BrushUp?

Coding BrushUp is more than just a collection of tutorials. Here’s what sets it apart:

✅ Clear Explanations – All lessons are written in plain English, ideal for beginners.

✅ Hands-On Projects – Practical coding exercises to reinforce learning.

✅ Progressive Learning Paths – Start from basics and grow into advanced topics.

✅ Free Content – Many tutorials require no signup or payment.

✅ Community Support – Comment sections and occasional Q&A features allow learner interaction.

Final Thoughts

Whether you’re learning to code for career advancement, school, or personal development, the free tutorials at Coding BrushUp offer valuable, structured, and practical knowledge. From mastering the basics of Python and Java to building your first website or desktop app, these resources will help you move from beginner to confident coder.

👉 Start learning today at Codingbrushup.com and check out the full Coding BrushUp Java Tutorial and Python series to supercharge your programming journey.
