#on prem to cloud migration aws

On-premises to AWS cloud migration: Step-by-step guide

Businesses are rapidly migrating corporate data to the cloud. According to a Thales report, the share of corporate data stored in the cloud doubled from 30% in 2015 to 60% in 2022. The figure keeps rising as businesses recognize the benefits on offer. Moving your data to the cloud can help you with:

Increased agility and scalability

Improved performance and reliability

Easy implementation of technologies

Reduced costs

Despite the known benefits of cloud migration, concerns about security and hidden maintenance costs prevent businesses from fully embracing it. One way to address these concerns is to consolidate all your cloud requirements under one roof, using a platform such as AWS.

Migrating existing infrastructure software and business applications from on-premises to the AWS cloud requires involvement from stakeholders across all levels of an organization. The process therefore calls for careful planning and a step-by-step approach.

Each migration is unique because every organization has different business goals. Before starting, take time to define the goals you intend to achieve through the migration. Identify the key business drivers and align them with your migration strategy.

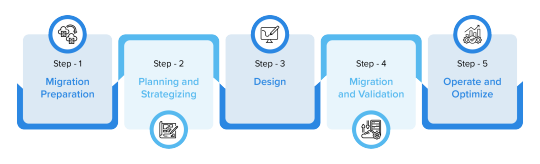

Step 1: Migration preparation

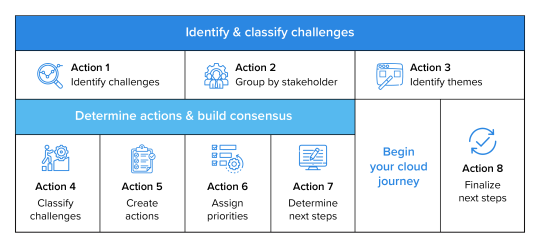

The primary objective of the AWS Cloud Adoption Framework is to bring stakeholders together and formulate an action plan that takes the team from cloud goals to actual cloud implementation. The preparation phase settles the following points, which together shape the right on-premises-to-cloud migration strategy.

(i) To ensure project success, identify the key stakeholders involved. This includes the participating teams and a designated project manager to lead the effort.

(ii) Connect with each department and have an open discussion about their app usage, pain points, and desired functionalities.

(iii) To ensure compliance and mitigate risk, clearly state the regulatory requirements and security considerations associated with each workload, along with any other relevant requirements.

(iv) Perform dependency mapping to gain better visibility into interdependencies of business services and apps.

Step 2: Planning and strategizing

Pinpointing the first domino in a complex migration is the true test. When data is scattered across silos, choosing the right starting point can be difficult. Having the right data and the right expertise on hand helps.

Once the prerequisites are in place, the first step is to determine which applications to include in the first phase of the AWS cloud migration. The best approach is to start with the applications that have the fewest dependencies. Another approach is to start with the workloads that have the most overprovisioned or idle resources.
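The selection rule above can be sketched in a few lines of Python. This is only an illustration: the `App` record, the application names, and the utilization figures are hypothetical, and a real inventory would come from your dependency-mapping tool.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    name: str
    depends_on: set[str] = field(default_factory=set)
    avg_cpu_percent: float = 0.0  # hypothetical utilization figure


def first_wave(apps: list[App], size: int) -> list[str]:
    """Rank apps by fewest dependencies, breaking ties by lowest CPU use."""
    ranked = sorted(apps, key=lambda a: (len(a.depends_on), a.avg_cpu_percent))
    return [a.name for a in ranked[:size]]


# Made-up inventory for illustration only.
inventory = [
    App("billing", {"crm", "ledger-db", "auth"}, 55.0),
    App("wiki", set(), 4.0),
    App("crm", {"auth"}, 30.0),
    App("reports", {"ledger-db"}, 8.0),
]

print(first_wave(inventory, 2))
```

Sorting on a tuple keeps both criteria from the text in one pass: dependency count first, idle capacity second.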

Industry research suggests that as many as 30% of on-premises servers, both physical and virtual, are zombies: they show no sign of useful compute activity for 6 months or more. If a business rightsizes its deployment on AWS, these workloads can see a marked difference in price or performance once migrated.

The next step is to decide on the migration strategy, which can be any one of the following options:

a. Lift and shift: Quickly move your application to AWS with minimal changes, utilizing migration tools for assistance.

b. Partial refactor: Retain certain aspects of your application while rebuilding other parts for proper functioning on AWS. Alternatively, build additional supporting services alongside the existing application.

c. Full refactor: Completely rebuild your application for optimal use of AWS Cloud benefits, considering opportunities like transitioning to microservices or adopting a container-based architecture.

d. SaaS or PaaS transition: Integrate SaaS or PaaS solutions for commodity applications or components, such as email or CRM, to accelerate migration and reduce management overhead.

Deciding on the right team to architect, build, migrate, and manage your cloud can be overwhelming. Hence, many companies opt for AWS migration services to go through the first phases of migration alongside their team.

Step 3: Design

This phase of migration is strategic as it determines the architecture of the AWS cloud infrastructure. While designing the infrastructure, business needs are analyzed, and the best-suited instance type is recommended to maximize cloud investment.

The objective is to deliver a targeted plan approved by all the stakeholders identified in the first step. The process usually involves the steps below:

a. Examine the performance data across CPU, memory, network, and disk for servers, and across throughput, capacity, and IO for storage.

b. Decide the desired “headroom” (usually 25%) for each asset and assess actual minimum, maximum, and average usage across metrics. Identify the most suitable AWS instance type based on the analysis.

A virtual machine is considered undersized if its CPU demand exceeds 70% for more than 1% of any given hour and oversized if it stays below 30% for more than 1% of a 7-day period.

c. Develop an AWS design, including an architecture diagram and a service list (Amazon EC2 instances, EBS volumes, VPCs, etc.), along with associated costs.

d. Early engagement with security and compliance teams is crucial. Proactive collaboration helps prevent delays and ensures project deadlines are met.
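Step b can be made concrete with a small sizing calculation. The sketch below assumes that "25% headroom" means provisioning 25% more than the observed peak. The size catalogue is hypothetical, and only CPU is considered, whereas a real assessment would also weigh memory, network, and disk.

```python
import math

HEADROOM = 0.25  # the 25% figure mentioned in step b

# Hypothetical catalogue: size name -> vCPU count (not real AWS data).
CATALOGUE = {"small": 2, "medium": 4, "large": 8, "xlarge": 16}


def required_vcpus(current_vcpus: int, peak_cpu_percent: float) -> int:
    """vCPUs needed to serve the observed peak plus headroom."""
    used = current_vcpus * peak_cpu_percent / 100.0
    return max(1, math.ceil(used * (1 + HEADROOM)))


def pick_size(current_vcpus: int, peak_cpu_percent: float) -> str:
    """Return the smallest catalogue entry that covers the requirement."""
    need = required_vcpus(current_vcpus, peak_cpu_percent)
    for name, vcpus in sorted(CATALOGUE.items(), key=lambda kv: kv[1]):
        if vcpus >= need:
            return name
    raise ValueError(f"no catalogue entry offers {need} vCPUs")


# A 16-vCPU server that peaks at 25% CPU needs far less once rightsized.
print(pick_size(16, 25))
```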

Step 4: Migration and validation

This is the execution stage. Meticulously follow the approved AWS architecture plan and carefully migrate your application and data to the chosen configuration.

Have cloud engineers build the approved architecture using AWS CloudFormation.

Further configure each application using an Amazon Machine Image (AMI).

Store the AWS CloudFormation templates and Puppet/Chef scripts in a versioned code repository (Git).

Migrate servers, databases, and data to AWS through internet transfer or AWS migration services for faster transitions.

Conduct initial testing with cloud engineers by repeatedly tearing down/rebuilding infrastructure with AWS CloudFormation.

Other teams test and validate application performance, security, compliance, etc.
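To give a sense of what goes into the versioned repository, here is a minimal sketch that builds a CloudFormation template as a Python dictionary and serializes it to JSON. The resource names (`AppVpc`, `AppSubnet`, `AppServer`), the CIDR ranges, and the `ami-EXAMPLE` ID are placeholders chosen for illustration. A real template would follow your approved design.

```python
import json


def build_template(vpc_cidr: str, subnet_cidr: str, ami_id: str, instance_type: str) -> dict:
    """Return a minimal CloudFormation template as a plain dictionary."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative migration target: one VPC, one subnet, one instance",
        "Resources": {
            "AppVpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {"CidrBlock": vpc_cidr},
            },
            "AppSubnet": {
                "Type": "AWS::EC2::Subnet",
                "Properties": {"VpcId": {"Ref": "AppVpc"}, "CidrBlock": subnet_cidr},
            },
            "AppServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": ami_id,
                    "InstanceType": instance_type,
                    "SubnetId": {"Ref": "AppSubnet"},
                },
            },
        },
    }


# Placeholder values; substitute the AMI and sizes from your approved design.
template = build_template("10.0.0.0/16", "10.0.1.0/24", "ami-EXAMPLE", "t3.micro")

# Serialized form that would be committed to Git next to the Puppet/Chef scripts.
print(json.dumps(template, indent=2))
```

Because the template is plain data, the tear-down and rebuild cycle described above always starts from the same reviewed definition.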

Important: While you move data and applications to the AWS Cloud, both your old system and the new AWS one will be running at the same time, which raises costs for that period. Make sure this additional cost is factored into your AWS Cloud migration strategy.

Step 5: Operate and optimize

The secret to long-term success on the AWS platform is to keep optimizing. Once migration is completed, there is ample time to address any issues that did not surface during the testing phase. This allows for ongoing improvements to the infrastructure, making it progressively better.

24/7 support

Before going live, clearly lay down the roles and responsibilities, and list the procedures for common tasks such as instance rebooting, access management, etc. Relying on external AWS migration service providers like Softweb Solutions can help with reducing this additional burden of management.

Cost tracking and analysis

Leverage AWS for real-time billing updates and spending alerts. Optimize cost management by using a comprehensive analysis and governance platform. Your dashboard should offer a unified view of both on-premises and cloud environments, easily accessible by your cloud engineering team for budget oversight. Schedule regular reviews with your team or Managed Services Provider to stay updated on environment costs, especially with AWS introducing new products and services that may impact expenses.

Partnering with an AWS service provider brings expert guidance, streamlined processes, and ongoing support. They’ll navigate the complexities, choose the optimal path, and ensure a smooth, fast, and cost-effective journey to the cloud.

Approach cloud migration strategically

Based on the “Guidebook Understanding the Value of Migrating from On-premises to AWS for Application Security and Performance, Nucleus Research, June 2020” migrating to AWS delivers:

Working with a cloud provider like AWS allows you to access and benefit from powerful hardware, software and services. AWS, or Amazon Web Services, stands as the most extensive and widely embraced cloud platform worldwide, presenting a range of over 200 fully featured services across global data centers. This ensures high availability as well as fast and efficient storage.

Partner with Softweb Solutions for expert implementation of top-tier migration best practices. Transform your workloads and applications on AWS seamlessly, unlocking rapid cloud benefits and modernizing your operations efficiently.

Originally published at softwebsolutions.com on February 21, 2024.

Exploring the Top Tools for Cloud Based Network Monitoring in 2025

With businesses increasingly adopting cloud-first strategies, network visibility has never been more important. Conventional monitoring tools are no longer sufficient to track the performance, latency, and security of modern, distributed infrastructure.

That is where cloud-based network monitoring comes in. It gives IT teams running hybrid and cloud environments real-time visibility, remote access, and better scalability.

In 2025, several tools stand out for their features, ease of use, and in-depth analytics. The list below covers the leading options that help companies get ahead of network problems before they affect operations.

1. Datadog

DevOps and IT teams favor Datadog for its cloud-native architecture and wide availability. It provides full-stack visibility, bringing metrics, traces, and logs together on a single dashboard.

Its Network Performance Monitoring (NPM) helps identify bottlenecks, trace traffic, and track cloud services such as AWS, Azure, and Google Cloud. Real-time alerts and customizable dashboards give teams the insight they need to act quickly.

2. SolarWinds Hybrid Cloud Observability

SolarWinds is traditionally associated with on-prem monitoring, but its shift toward hybrid cloud observability keeps it highly relevant in 2025. The platform has evolved to combine conventional network monitoring with cloud insights.

It uses AI to provide anomaly detection, visual mapping, and deep packet inspection. This helps IT teams troubleshoot complex environments without switching between tools.

3. ThousandEyes by Cisco

ThousandEyes specializes in digital experience monitoring and is especially suited to large, distributed networks. It delivers end-to-end visibility from user to application, including across third-party infrastructure.

Its cloud agents and internet outage tracking let businesses quickly determine whether a performance problem is internal or external. Cisco's backing adds to the accuracy and reach of its network data.

4. LogicMonitor

LogicMonitor is an agentless platform that is simple to deploy and scale. It suits organizations that want automation with little configuration.

The tool measures bandwidth, uptime, latency, and cloud performance across providers. Its predictive analytics identify trends and notify teams before minor problems become major ones.

5. ManageEngine OpManager Plus

OpManager Plus is a strong option for teams that need to monitor a mix of traditional and cloud-based infrastructure. It supports hybrid networks and reports on device health, traffic, and application performance.

Its UI stands out for being clean, self-explanatory, and customizable. It is especially well suited to mid-sized IT departments that need a clear view of both physical and virtual systems.

6. PRTG Network Monitor (Cloud Hosted)

The hosted version of PRTG offers the same functions as its widely used desktop version, delivered from the cloud. Its sensors track everything from server availability to network capacity and usage, as well as cloud services.

It is a good fit for companies that want simple licensing and pay-as-you-go pricing. Its simplicity also makes it a reasonable choice for smaller IT teams or for projects at the start of a cloud migration.

What to Look for in a Monitoring Tool

When choosing a cloud network monitoring solution, it's important to focus on a few key aspects:

Ease of deployment and scalability

Multi-cloud and hybrid support

Custom alerting and reporting

Integration with your existing stack

User-friendly dashboards and automation

Every business has its own requirements. There is no single best tool, only the tool that suits your infrastructure, your team size, and your response requirements.

Conclusion

As infrastructure evolves, it is important to have the right tools in place to monitor performance and availability. In 2025, cloud-based network monitoring tools are more competitive, intelligent, and responsive than ever.

Whether you run a large enterprise or a small IT start-up, investing in one of these platforms helps you stay secure, flexible, and consistent in a cloud-driven world.

5 Signs You’re Overpaying for Cloud — and How to Stop It

In today’s digital-first business environment, cloud computing has become a necessity. But as more organizations migrate to the cloud, many are waking up to a tough reality: they’re significantly overpaying for cloud services.

If you’re relying on AWS, Azure, GCP, or other providers to run your infrastructure, chances are you’re spending more than you need to. The complexity of cloud billing, unused resources, and lack of visibility can quickly balloon your monthly invoices.

1. You’re Running Unused or Idle Resources

One of the most common (and silent) culprits of cloud overspending is idle or underutilized resources.

The Problem:

You spin up instances or containers for testing, dev environments, or special projects — and then forget about them. These resources continue running 24/7, racking up costs, even though no one is using them.

The Fix:

Implement auto-scheduling tools to shut down non-production environments during off-hours. Regularly audit your infrastructure for zombie instances, unattached volumes, and dormant databases.
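A regular audit of this kind can start from a very simple rule. The sketch below is an illustration under stated assumptions: the `Resource` record, the 5% CPU threshold, and the sample IDs are all hypothetical, and real utilization data would come from your provider's monitoring service.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    resource_id: str
    environment: str        # e.g. "prod", "dev", "test"
    avg_cpu_percent: float  # average over the review window
    attached: bool = True   # False for volumes not attached to anything


def find_waste(resources, cpu_threshold=5.0):
    """Return IDs of unattached resources and of non-production ones idling below the threshold."""
    flagged = []
    for r in resources:
        idle = r.environment != "prod" and r.avg_cpu_percent < cpu_threshold
        if idle or not r.attached:
            flagged.append(r.resource_id)
    return flagged


# Made-up fleet for illustration only.
fleet = [
    Resource("i-dev-01", "dev", 1.2),
    Resource("i-prod-01", "prod", 3.0),
    Resource("vol-old", "test", 0.0, attached=False),
    Resource("i-test-02", "test", 42.0),
]

print(find_waste(fleet))
```

Production resources are deliberately excluded from the idle check here, since low average CPU alone is a weak signal for a live service.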

Tools like Cloudtopiaa’s automated resource analyzer can help identify and eliminate these wasted assets in real-time.

2. Your Cloud Bill Is Increasing Without Business Growth

Have you noticed your cloud costs increasing month-over-month, but your user base, traffic, or application load hasn’t significantly changed?

The Problem:

This is a classic sign of inefficient scaling, poor resource planning, or architectural issues like inefficient queries or chatty services.

The Fix:

Conduct a detailed cloud cost audit to understand which services or workloads are spiking. Use cost allocation tags to track spend by department, team, or service. Monitor usage trends, and align them with business performance indicators.
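As a rough illustration of what cost allocation tags make possible, the sketch below groups billing line items by a tag. The line-item layout and the `team` tag key are assumptions made for this example, not the export format of any particular provider.

```python
from collections import defaultdict


def spend_by_tag(line_items, tag_key):
    """Sum cost per tag value; items missing the tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing export for one month.
line_items = [
    {"service": "EC2", "cost": 120.0, "tags": {"team": "payments"}},
    {"service": "S3", "cost": 30.0, "tags": {"team": "payments"}},
    {"service": "RDS", "cost": 80.0, "tags": {"team": "search"}},
    {"service": "EC2", "cost": 45.5, "tags": {}},
]

print(spend_by_tag(line_items, "team"))
```

The `untagged` bucket is worth watching in its own right: spend that nobody owns is usually the first place to look during an audit.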

🔍 Cloudtopiaa provides intelligent cloud billing breakdowns and predictive analytics to help businesses map their spend against real ROI.

3. You’re Using the Wrong Instance Types or Pricing Models

Most cloud providers offer a range of pricing models: on-demand, reserved instances, spot pricing, and more. Using the wrong type can mean paying 2–3x more than necessary.

The Problem:

If you’re only using on-demand instances — even for long-term workloads — you’re missing out on serious savings. Similarly, choosing oversized instances for minimal workloads leads to resource waste.

The Fix:

Use rightsizing tools to analyze your compute needs and adjust instance types accordingly.

Shift stable workloads to Reserved Instances (RI) or Savings Plans.

For temporary or flexible jobs, consider Spot Instances at a fraction of the cost.
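Whether a commitment pays off depends mostly on how many hours a workload actually runs. The sketch below compares the two models under a simplifying assumption: the committed rate is billed for every hour of the month whether or not the instance runs. Both hourly rates are made-up numbers, not published prices.

```python
HOURS_PER_MONTH = 730  # common approximation of the hours in a month


def cheaper_model(on_demand_rate: float, committed_rate: float, hours_used: float) -> str:
    """Compare paying per hour used against paying a committed rate for every hour."""
    on_demand_cost = on_demand_rate * hours_used
    committed_cost = committed_rate * HOURS_PER_MONTH
    return "committed" if committed_cost < on_demand_cost else "on-demand"


def break_even_hours(on_demand_rate: float, committed_rate: float) -> float:
    """Hours per month above which the commitment becomes cheaper."""
    return committed_rate * HOURS_PER_MONTH / on_demand_rate


# Hypothetical rates: 0.10 per hour on demand, 0.06 per hour committed.
print(cheaper_model(0.10, 0.06, 730))  # always-on workload
print(cheaper_model(0.10, 0.06, 200))  # workload that runs part of the month
print(round(break_even_hours(0.10, 0.06)))
```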

Cloudtopiaa’s smart instance advisor recommends optimal combinations of pricing models tailored to your workload patterns.

4. Lack of Visibility and Accountability in Cloud Spending

Cloud sprawl is real. Without proper governance, it’s easy to lose track of who’s spinning up what — and why.

The Problem:

No centralized visibility leads to fragmented billing, making it hard to know which teams, projects, or environments are responsible for overspending.

The Fix:

Implement cloud cost governance policies, and enforce tagging across all cloud assets. Establish a FinOps strategy that aligns IT, finance, and engineering teams around budgeting, forecasting, and reporting.

📊 With Cloudtopiaa’s dashboards, you get a 360° view of your cloud costs — by team, project, environment, or region.

5. You’re Not Optimizing for Multi-Cloud or Hybrid Environments

If you’re using more than one cloud provider — or a mix of on-prem and cloud — chances are you’re not optimizing across platforms.

The Problem:

Vendor lock-in, duplicated services, and lack of unified visibility lead to inefficiencies and overspending.

The Fix:

Evaluate cross-cloud redundancy and streamline services.

Use a multi-cloud cost management platform to monitor usage and optimize spending across providers.

Consolidate billing and centralize decision-making.

Cloudtopiaa supports multi-cloud monitoring and provides a unified interface to manage and optimize spend across AWS, Azure, and GCP.

How Cloudtopiaa Helps You Stop Overpaying for Cloud

At Cloudtopiaa, we specialize in helping companies reduce cloud spending without sacrificing performance or innovation.

Features that Save You Money:

Automated Cost Analysis: Identify inefficiencies in real time.

Rightsizing Recommendations: Resize VMs and services based on actual usage.

Billing Intelligence: Break down your cloud bills into actionable insights.

Smart Tagging & Governance: Gain control over who’s spending what.

Multi-Cloud Optimization: Get the best value from AWS, Azure, and GCP.

Practical Steps to Start Saving Today

Audit Your Cloud Resources: Identify unused or underused services.

Set Budgets & Alerts: Monitor spend proactively.

Optimize Workloads: Use automation tools to scale resources.

Leverage Discounted Pricing Models: Match pricing strategies with workload behavior.

Use a Cloud Optimization Partner: Tools like Cloudtopiaa do the heavy lifting for you.

Final Thoughts

Cloud isn’t cheap — but cloud mismanagement is far more expensive.

By recognizing the signs of overspending and taking proactive steps to optimize your cloud usage, you can save thousands — if not millions — each year.

Don’t let complex billing and unmanaged infrastructure drain your resources. Let Cloudtopiaa help you regain control, reduce waste, and spend smarter on the cloud.

📌 Visit Cloudtopiaa.com today to schedule your cloud cost optimization assessment.

Understanding the key steps in AWS Onboarding

Migrating to AWS is smart, but getting started can be overwhelming. CONNACT’s AWS Onboarding service is designed to help businesses of all sizes launch confidently into the cloud with a structured, secure, and fully supported onboarding experience.

Expert-Led Cloud Setup & Strategy

Our onboarding process is led by certified AWS professionals who bring deep technical expertise and real-world business insight. Whether you're a startup launching your first cloud environment or an enterprise migrating from on-prem infrastructure, we create a customized roadmap to align your AWS setup with your goals.

What's Included in CONNACT’s AWS Onboarding?

We handle all the essentials to ensure a smooth and efficient transition to AWS. Our onboarding includes:

Account setup and security best practices

Identity and access management (IAM) configuration

Networking (VPC, subnets, routing) setup

Cost optimization guidance

Monitoring and logging configuration

Compliance and backup strategies

Hands-on knowledge transfer and documentation

You’ll walk away with a secure, scalable, and well-architected AWS foundation—ready to support your applications, workloads, and growth.

Accelerated Launch. Long-Term Value.

At CONNACT, we set you up for success. Our goal is to help your team understand the AWS ecosystem so you can confidently manage, scale, and optimize your cloud infrastructure. We focus on reducing time-to-deployment while ensuring cost control and performance.

Ideal for Startups, SMBs, and IT Teams

Our AWS onboarding service is perfect for:

Tech startups launching in the cloud

Growing businesses migrating from legacy systems

IT teams that need a trusted partner for setup

Companies preparing for app deployment or product launches

Start Strong in the Cloud with CONNACT

Make your move to AWS faster, safer, and smarter with AWS Onboarding. From planning to implementation, our team ensures you have the tools, knowledge, and support needed to thrive in the cloud. Learn more!

Kubernetes vs. Traditional Infrastructure: Why Clusters and Pods Win

In today’s fast-paced digital landscape, agility, scalability, and reliability are not just nice-to-haves—they’re necessities. Traditional infrastructure, once the backbone of enterprise computing, is increasingly being replaced by cloud-native solutions. At the forefront of this transformation is Kubernetes, an open-source container orchestration platform that has become the gold standard for managing containerized applications.

But what makes Kubernetes a superior choice compared to traditional infrastructure? In this article, we’ll dive deep into the core differences, and explain why clusters and pods are redefining modern application deployment and operations.

Understanding the Fundamentals

Before drawing comparisons, it’s important to clarify what we mean by each term:

Traditional Infrastructure

This refers to monolithic, VM-based environments typically managed through manual or semi-automated processes. Applications are deployed on fixed servers or VMs, often with tight coupling between hardware and software layers.

Kubernetes

Kubernetes abstracts away infrastructure by using clusters (groups of nodes) to run pods (the smallest deployable units of computing). It automates deployment, scaling, and operations of application containers across clusters of machines.

Key Comparisons: Kubernetes vs Traditional Infrastructure

| Feature | Traditional Infrastructure | Kubernetes |
| --- | --- | --- |
| Scalability | Manual scaling of VMs; slow and error-prone | Auto-scaling of pods and nodes based on load |
| Resource Utilization | Inefficient due to over-provisioning | Efficient bin-packing of containers |
| Deployment Speed | Slow and manual (e.g., SSH into servers) | Declarative deployments via YAML and CI/CD |
| Fault Tolerance | Rigid failover; high risk of downtime | Self-healing, with automatic pod restarts and rescheduling |
| Infrastructure Abstraction | Tightly coupled; app knows about the environment | Decoupled; Kubernetes abstracts compute, network, and storage |
| Operational Overhead | High; requires manual configuration, patching | Low; centralized, automated management |
| Portability | Limited; hard to migrate across environments | High; deploy to any Kubernetes cluster (cloud, on-prem, hybrid) |

Why Clusters and Pods Win

1. Decoupled Architecture

Traditional infrastructure often binds application logic tightly to specific servers or environments. Kubernetes promotes microservices and containers, isolating app components into pods. These can run anywhere without knowing the underlying system details.

2. Dynamic Scaling and Scheduling

In a Kubernetes cluster, pods can scale automatically based on real-time demand. The Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler help dynamically adjust resources—unthinkable in most traditional setups.
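The core of the Horizontal Pod Autoscaler is a simple ratio, documented by Kubernetes as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces only that formula plus min/max clamping. The real controller also applies a tolerance band and stabilization windows, which are omitted here, and the default bounds are arbitrary.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the HPA scaling ratio, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target scale out.
print(desired_replicas(4, 90, 60))
# The same 4 pods at 30% CPU scale in.
print(desired_replicas(4, 30, 60))
```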

3. Resilience and Self-Healing

Kubernetes watches your workloads continuously. If a pod crashes or a node fails, the system automatically reschedules the workload on healthy nodes. This built-in self-healing drastically reduces operational overhead and downtime.

4. Faster, Safer Deployments

With declarative configurations and GitOps workflows, teams can deploy with speed and confidence. Rollbacks, canary deployments, and blue/green strategies are natively supported—streamlining what’s often a risky manual process in traditional environments.

5. Unified Management Across Environments

Whether you're deploying to AWS, Azure, GCP, or on-premises, Kubernetes provides a consistent API and toolchain. No more re-engineering apps for each environment—write once, run anywhere.

Addressing Common Concerns

“Kubernetes is too complex.”

Yes, Kubernetes has a learning curve. But its complexity replaces operational chaos with standardized automation. Tools like Helm, ArgoCD, and managed services (e.g., GKE, EKS, AKS) help simplify the onboarding process.

“Traditional infra is more secure.”

Security in traditional environments often depends on network perimeter controls. Kubernetes promotes zero trust principles, pod-level isolation, and RBAC, and integrates with service meshes like Istio for granular security policies.

Real-World Impact

Companies like Spotify, Shopify, and Airbnb have migrated from legacy infrastructure to Kubernetes to:

Reduce infrastructure costs through efficient resource utilization

Accelerate development cycles with DevOps and CI/CD

Enhance reliability through self-healing workloads

Enable multi-cloud strategies and avoid vendor lock-in

Final Thoughts

Kubernetes is more than a trend—it’s a foundational shift in how software is built, deployed, and operated. While traditional infrastructure served its purpose in a pre-cloud world, it can’t match the agility and scalability that Kubernetes offers today.

Clusters and pods don’t just win—they change the game.

Exploring the Role of Azure Data Factory in Hybrid Cloud Data Integration

Introduction

In today’s digital landscape, organizations increasingly rely on hybrid cloud environments to manage their data. A hybrid cloud setup combines on-premises data sources, private clouds, and public cloud platforms like Azure, AWS, or Google Cloud. Managing and integrating data across these diverse environments can be complex.

This is where Azure Data Factory (ADF) plays a crucial role. ADF is a cloud-based data integration service that enables seamless movement, transformation, and orchestration of data across hybrid cloud environments.

In this blog, we’ll explore how Azure Data Factory simplifies hybrid cloud data integration, key use cases, and best practices for implementation.

1. What is Hybrid Cloud Data Integration?

Hybrid cloud data integration is the process of connecting, transforming, and synchronizing data between:

✅ On-premises data sources (e.g., SQL Server, Oracle, SAP)

✅ Cloud storage (e.g., Azure Blob Storage, Amazon S3)

✅ Databases and data warehouses (e.g., Azure SQL Database, Snowflake, BigQuery)

✅ Software-as-a-Service (SaaS) applications (e.g., Salesforce, Dynamics 365)

The goal is to create a unified data pipeline that enables real-time analytics, reporting, and AI-driven insights while ensuring data security and compliance.

2. Why Use Azure Data Factory for Hybrid Cloud Integration?

Azure Data Factory (ADF) provides a scalable, serverless solution for integrating data across hybrid environments. Some key benefits include:

✅ 1. Seamless Hybrid Connectivity

ADF supports more than 90 data connectors, including on-prem, cloud, and SaaS sources.

It enables secure data movement using Self-Hosted Integration Runtime to access on-premises data sources.

✅ 2. ETL & ELT Capabilities

ADF allows you to design Extract, Transform, and Load (ETL) or Extract, Load, and Transform (ELT) pipelines.

Supports Azure Data Lake, Synapse Analytics, and Power BI for analytics.

✅ 3. Scalability & Performance

Being serverless, ADF automatically scales resources based on data workload.

It supports parallel data processing for better performance.

✅ 4. Low-Code & Code-Based Options

ADF provides a visual pipeline designer for easy drag-and-drop development.

It also supports custom transformations using Azure Functions, Databricks, and SQL scripts.

✅ 5. Security & Compliance

Uses Azure Key Vault for secure credential management.

Supports private endpoints, network security, and role-based access control (RBAC).

Complies with GDPR, HIPAA, and ISO security standards.

3. Key Components of Azure Data Factory for Hybrid Cloud Integration

1️⃣ Linked Services

Acts as a connection between ADF and data sources (e.g., SQL Server, Blob Storage, SFTP).

2️⃣ Integration Runtimes (IR)

Azure-Hosted IR: For cloud data movement.

Self-Hosted IR: For on-premises to cloud integration.

SSIS-IR: To run SQL Server Integration Services (SSIS) packages in ADF.

3️⃣ Data Flows

Mapping Data Flow: No-code transformation engine.

Wrangling Data Flow: Excel-like Power Query transformation.

4️⃣ Pipelines

Orchestrate complex workflows using different activities like copy, transformation, and execution.

5️⃣ Triggers

Automate pipeline execution using schedule-based, event-based, or tumbling window triggers.
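A tumbling window trigger fires over fixed-size, contiguous, non-overlapping time intervals. The sketch below illustrates only that windowing concept in plain Python. It does not use the ADF SDK, and the function name and the dates are chosen purely for illustration.

```python
from datetime import datetime, timedelta


def tumbling_windows(start: datetime, end: datetime, size: timedelta):
    """Yield (window_start, window_end) pairs covering [start, end) without overlap."""
    cursor = start
    while cursor + size <= end:
        yield cursor, cursor + size
        cursor += size


# Three hourly windows; each pipeline run would process exactly one slice.
for window_start, window_end in tumbling_windows(
    datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 3), timedelta(hours=1)
):
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because each window ends exactly where the next begins, a run can be retried or backfilled for one slice without touching its neighbors.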

4. Common Use Cases of Azure Data Factory in Hybrid Cloud

🔹 1. Migrating On-Premises Data to Azure

Extracts data from SQL Server, Oracle, SAP, and moves it to Azure SQL, Synapse Analytics.

🔹 2. Real-Time Data Synchronization

Syncs on-prem ERP, CRM, or legacy databases with cloud applications.

🔹 3. ETL for Cloud Data Warehousing

Moves structured and unstructured data to Azure Synapse, Snowflake for analytics.

🔹 4. IoT and Big Data Integration

Collects IoT sensor data, processes it in Azure Data Lake, and visualizes it in Power BI.

🔹 5. Multi-Cloud Data Movement

Transfers data between AWS S3, Google BigQuery, and Azure Blob Storage.

5. Best Practices for Hybrid Cloud Integration Using ADF

✅ Use Self-Hosted IR for Secure On-Premises Data Access

✅ Optimize Pipeline Performance using partitioning and parallel execution

✅ Monitor Pipelines using Azure Monitor and Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

✅ Automate Data Workflows with Triggers & Parameterized Pipelines

6. Conclusion

Azure Data Factory plays a critical role in hybrid cloud data integration by providing secure, scalable, and automated data pipelines. Whether you are migrating on-premises data, synchronizing real-time data, or integrating multi-cloud environments, ADF simplifies complex ETL processes with low-code and serverless capabilities.

By leveraging ADF’s integration runtimes, automation, and security features, organizations can build a resilient, high-performance hybrid cloud data ecosystem.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Mastering Multicluster Management with Red Hat OpenShift Platform Plus (DO480)

Introduction

In today’s cloud-native world, enterprises are scaling their Kubernetes workloads across multiple clusters, spanning on-premise, hybrid, and multi-cloud environments. Managing these clusters efficiently while ensuring security, compliance, and workload consistency is a critical challenge.

With Red Hat OpenShift Platform Plus, the subject of the DO480 course, organizations gain a comprehensive solution for multicluster management, enabling streamlined operations, automated policy enforcement, and advanced security across Kubernetes environments. In this blog, we’ll explore how the tooling covered in DO480 helps you manage multicluster deployments effectively.

What is Red Hat OpenShift Platform Plus?

Red Hat OpenShift Platform Plus extends OpenShift’s capabilities by integrating essential tools for security, automation, and governance. It includes:

✔ Red Hat Advanced Cluster Management (ACM): Centralized multicluster management and policy enforcement.

✔ Red Hat Advanced Cluster Security (ACS): Enhanced security and compliance monitoring.

✔ OpenShift Data Foundation (ODF): Integrated storage management for stateful applications.

✔ Quay Container Registry: A secure and scalable container image repository.

With these features, organizations can provision, govern, and secure multiple OpenShift clusters efficiently.

Key Features of DO480: Multicluster Management

🔹 Centralized Cluster Lifecycle Management

DO480 allows you to deploy, update, and decommission OpenShift clusters across hybrid and multi-cloud environments using Red Hat ACM. With GitOps-driven automation, you can standardize cluster configurations and ensure consistency.

🔹 Policy-Driven Governance & Compliance

Managing security policies across multiple Kubernetes clusters can be challenging. DO480 enables:

✅ Automated policy enforcement (RBAC, network policies, compliance rules).

✅ Custom policy creation using Open Policy Agent (OPA).

✅ Visibility into cluster health & policy violations with real-time dashboards.
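Conceptually, policy-driven governance compares each cluster's reported state against a set of required settings and flags the differences. The Python sketch below illustrates only that idea. It does not use the ACM or OPA APIs, and the setting names (`rbac_enabled`, `network_policy`) and the `violations` and `audit` helpers are assumptions made for the example.

```python
# Hypothetical required settings that every cluster in the fleet must satisfy.
REQUIRED = {"rbac_enabled": True, "network_policy": "default-deny"}


def violations(cluster_state: dict, required: dict = REQUIRED) -> dict:
    """Return {setting: (actual, expected)} for every setting that deviates."""
    return {
        key: (cluster_state.get(key), expected)
        for key, expected in required.items()
        if cluster_state.get(key) != expected
    }


def audit(fleet: dict) -> dict:
    """Return only the non-compliant clusters with their violations."""
    report = {}
    for name, state in fleet.items():
        found = violations(state)
        if found:
            report[name] = found
    return report
```

A dashboard of policy violations is essentially this report, refreshed continuously and aggregated across every managed cluster.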

🔹 Enhanced Security with ACS

Security is a top priority in multicluster environments. Red Hat Advanced Cluster Security (ACS) provides:

🔐 Real-time vulnerability scanning for container images.

🔐 Runtime threat detection and policy-based risk mitigation.

🔐 Security compliance monitoring for Kubernetes clusters.

🔹 Workload Portability & Disaster Recovery

With OpenShift Data Foundation (ODF), organizations can manage persistent storage across multiple clusters, enabling:

📌 Data replication and failover mechanisms for high availability.

📌 Cross-cluster workload migration to ensure business continuity.

📌 Backup and restore capabilities for disaster recovery.

Why Choose DO480 for Multicluster Management?

✔ Scalability: Manage hundreds of OpenShift clusters across cloud and on-prem.

✔ Security-first Approach: Integrated security with ACS ensures compliance and risk mitigation.

✔ Automation & Efficiency: ACM’s GitOps-driven workflows reduce manual operations.

✔ Hybrid & Multi-Cloud Ready: Seamlessly manage clusters across AWS, Azure, GCP, and private data centers.

Getting Started with DO480

Red Hat’s DO480 training equips IT professionals with hands-on experience in managing OpenShift clusters efficiently. The course covers:

📌 Cluster provisioning and lifecycle management.

📌 Policy-based governance and security best practices.

📌 Advanced workload management strategies.

💡 Enroll today and take your OpenShift expertise to the next level! 🚀

For more details, visit www.hawkstack.com


Learn step-by-step how to migrate from on-premises to AWS cloud seamlessly. Increase agility, reduce costs, and optimize performance with expert strategies and best practices. Partner with Softweb Solutions for efficient implementation.

#AWS cloud migration#on premise to aws cloud migration step by step#on prem to cloud migration aws#aws cloud migration benefits


The Ultimate Guide to Trellix SaaS Migration

Trellix ePO – SaaS is an enterprise software as a service (SaaS) rendition of Trellix ePO – On-prem, designed for multi-tenancy. Hosted on the reliable AWS cloud infrastructure, it offers accessibility through standard web browsers. Transitioning from your existing Trellix ePO – On-prem setup to the cloud is seamless with the ePO – SaaS Migration extension. This streamlined process empowers you…


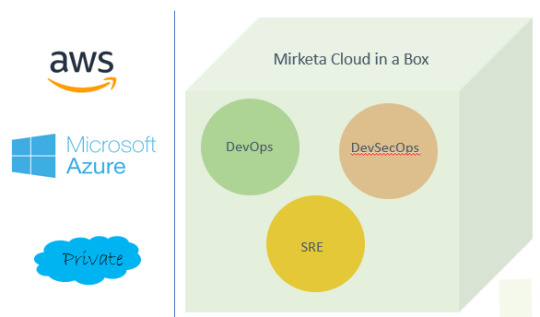

AWS

Cloud in a box

Everything you need to operate your business from Amazon, Microsoft Azure and Private Clouds.

Cloud in the box offering

A holistic approach to your infrastructure needs that combines services from the following disciplines to give you a seamless experience:

DevOps: Your infrastructure is automated and built out right the first time.

SRE: Your infrastructure is configured for fault tolerance and resilience, monitored 24×7, and sends out alerts on critical failures.

DevSecOps: Your infrastructure is built to comply with your security posture.

CI/CD for cloud

Being first to market with new features without sacrificing quality differentiates a company from its competitors. Mirketa will help you define CI pipelines, built on industry-leading open-source CI tools, that give your developers quick feedback so they can write better code.

Build CI/CD pipeline: Our CI/CD pipelines integrate code quality and test coverage tools to ensure quality is maintained as teams move faster, and include change management integrations to support compliance. Our expertise includes Jenkins, Kubernetes, Docker, and other open-source tools.

Branching strategies: Branching strategies are impacted by team structure, release processes, and even team skills and willingness to change. We recommend branching model blueprints that best fit your needs for efficient code management to increase developer productivity and reduce build and deploy time.

Git migration: Our Git migration services move your source code from legacy SCM tools like SVN and Perforce to Git.

CI/CD training: We provide classroom training on Continuous Integration and Continuous Deployment concepts and tools with hands-on exercises.

With Mirketa’s CI/CD methodology, you will see a significant reduction in cost per story point as the holding cost will drastically reduce without losing quality and sacrificing compliance.

Ready to discuss your cloud needs? Contact us for a free consultation.

Modernization

Infrastructure modernization

Legacy infrastructure not only costs businesses productivity but also limits their growth potential. We at Mirketa take a cloud-native approach and build infrastructure with open-source DevOps automation tools to provide an error-free and repeatable process.

We have expertise in Docker, Kubernetes, AWS, GCP, Azure, and serverless architecture.

Infrastructure modernization includes:

– Assess current state and needs for Cloud Infrastructure

– Select the platform and architect to-be state

– Migrate from one platform to another

– Upgrade monitoring/ security tools

Whether it is an application migration journey from on-prem data centers to the cloud or a brand-new infrastructure built in the cloud, you can trust Mirketa to make the journey smooth.


Migrating SQL Server On-Prem to the Cloud: A Guide to AWS, Azure, and Google Cloud

Taking your on-premises SQL Server databases to the cloud opens a world of benefits such as scalability, flexibility, and often, reduced costs. However, the journey requires meticulous planning and execution. We will delve into the migration process to three of the most sought-after cloud platforms: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), providing you with…


Hybrid multicloud strategy and its techniques

Successful multicloud strategy

Enterprises are using multicloud services to maximize performance, reduce costs, and avoid vendor lock-in. Gartner predicts that global end-user spending on public cloud services will rise 20.4% to $678.8 billion in 2024, up from $563.6 billion in 2023. Multicloud architecture lets organizations pick the best cloud products and services for their requirements and accelerates innovation by enabling game-changing technologies like generative AI and machine learning.

Multicloud environments get increasingly complicated as enterprises add cloud services. To succeed with multicloud, enterprises require a thorough multicloud management plan.

What is multicloud architecture?

Multicloud computing uses multiple cloud services from major cloud service providers (CSPs) such as Amazon Web Services (AWS), Google Cloud Platform, IBM Cloud, and Microsoft Azure in the same IT architecture.

In a basic multicloud scenario, SaaS applications such as Webex or Slack, delivered over the public internet, may be provided by two different cloud providers.

In complex enterprises, a multicloud solution usually extends beyond SaaS delivery from several CSPs. A company may use Microsoft Azure for data storage, AWS for app development and testing, and Google Cloud for backup and disaster recovery.

Along with SaaS, many modern enterprises rely on cloud service providers for the following cloud-based computing models:

Platform-as-a-service (PaaS) offers hardware, software, and infrastructure for developing, running, and managing applications. PaaS reduces the cost, complexity, and inflexibility of building and maintaining that platform on-premises.

Infrastructure-as-a-service (IaaS) provides computing, network, and storage capabilities on-demand, via the internet, and for a fee. IaaS lets organizations increase and downsize workload resources without the enormous capital expenses of ramping up conventional IT infrastructure.

The multicloud hybrid environment

Today, multicloud environments are sometimes integrated with hybrid cloud, which combines public cloud, private cloud, and on-prem infrastructure. One flexible IT architecture facilitates workload interoperability and portability across many clouds in a hybrid cloud system. Hybrid multicloud architectures allow enterprises to migrate, construct, and optimize applications across various clouds using the best of both cloud computing worlds.

Modern hybrid multicloud ecosystems use open-source container orchestration platforms like Kubernetes and Docker Swarm to automate app deployment across on-premises data centers, public cloud, private cloud, and edge settings for cloud-native application development. Microservices accelerate software development and deployment for DevOps.

An IBM Institute for Business Value research found that a comprehensive hybrid multicloud platform technology and operating model at scale provides two-and-a-half times the value of a single-platform, single-cloud vendor strategy.

Multicloud challenges

Multicloud environments are vital to business digital transformation, yet operating numerous clouds and services from various CSPs is complicated:

Cloud sprawl: A major issue with multicloud is cloud sprawl, the uncontrolled expansion of an organization’s cloud services. Cloud sprawl may increase costs and lead to overprovisioning. Overprovisioning enlarges the multicloud attack surface, leaving it more exposed to data breaches and cyberattacks, and means paying for unneeded or neglected workloads.

Silos of data: Organizations risk data silos when data spans many clouds and platforms. Data silos hinder data analytics by preventing teams from sharing a comprehensive view of aggregated data to collaborate and make business decisions.

Security risks: Enterprise cloud usage requires solid security. Complex multicloud environments with data flowing between private and public clouds pose concerns. When using one cloud provider, a business may employ a single set of security measures. However, combining an organization’s internal security tools with the native security controls of platforms from different cloud service providers might fragment security capabilities and increase the risk of human error or misconfiguration.

Uncontrolled costs: Adding cloud services increases cloud expenses. The pay-per-use model for cloud services helps keep spending in check, but difficulty managing CSP pricing structures, forgotten data egress fees, and similar issues may lead to unanticipated charges.
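To illustrate how a forgotten egress fee can change a bill, here is a small Python sketch of a monthly cost estimate. The rates and the free-egress allowance are made-up inputs for the example, not any provider's actual pricing, and `monthly_cost` is a hypothetical helper.

```python
def monthly_cost(
    compute_hours: float,
    rate_per_hour: float,
    egress_gb: float,
    egress_rate_per_gb: float,
    free_egress_gb: float = 0.0,
) -> dict:
    """Estimate a monthly bill as compute plus billable data egress."""
    compute = compute_hours * rate_per_hour
    billable_gb = max(0.0, egress_gb - free_egress_gb)  # allowance is not charged
    egress = billable_gb * egress_rate_per_gb
    return {"compute": compute, "egress": egress, "total": compute + egress}
```

With these illustrative inputs, moving a terabyte out of a cloud each month can cost more than the compute that produced it, which is why egress belongs in any multicloud budget.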

8 techniques for multicloud strategy success

Multiple cloud environments and providers complicate technical and administrative tasks. Although each journey is different, here are eight essential steps for a successful multicloud approach:

1. Set objectives

Starting a multicloud journey requires aligning corporate objectives with a strategy. Review your company’s infrastructure and apps, then determine the workloads and objectives for each business use case.

Hybrid multicloud architectures provide integrated data communication, aiming for low latency, minimal downtime, and easy data transfer. A healthcare firm, for example, may want a multicloud environment so teams in various locations can exchange data in real time to improve patient care.

2. Choose the finest cloud providers

Most CSPs provide similar core functions, but each has distinct features and services. A multicloud strategy helps you select the finest cloud services for your organization, whether it’s high-performance computing or sophisticated data analytics.

Check service contracts, as some cloud providers offer flexible contracts and lower upfront fees. Make sure IT teams and other stakeholders weigh in on CSP selection.

3. Create a single pane of glass

In a multicloud context, the differing APIs of each cloud platform can hinder visibility. A central dashboard or platform that provides enterprise-wide visibility is needed to fully benefit from a multicloud architecture. A dynamic, secure, centralized cloud management platform (CMP) lets IT teams develop, administer, monitor, and control multicloud environments.

4. Take advantage of automation

Automating IT infrastructure and processes is key to enterprise multicloud models. Automation tools reduce the manual work required of IT staff. Cloud automation solutions provide a software layer on top of public or private cloud VMs.

Selecting the right automation solutions for your company’s cloud management platform helps minimize compute resources and cloud computing costs. Infrastructure-as-code (IaC) supports multicloud automation alongside containers and orchestration technologies. IaC automates IT infrastructure provisioning using a high-level descriptive coding language. It streamlines infrastructure administration, improves consistency, and reduces manual configuration.
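The core idea behind declarative IaC is comparing a desired state with the actual state and deriving the changes needed. The Python sketch below shows that comparison in miniature. It is not the behavior of any particular IaC tool, and the resource names and the `plan` helper are hypothetical.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Diff desired vs. actual resources into create/update/delete lists."""
    create = sorted(desired.keys() - actual.keys())
    delete = sorted(actual.keys() - desired.keys())
    update = sorted(
        name for name in desired.keys() & actual.keys()
        if desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


# Hypothetical resource definitions, keyed by resource name.
DESIRED = {"vm-web": {"size": "small"}, "vm-db": {"size": "large"}}
ACTUAL = {"vm-web": {"size": "medium"}, "vm-old": {"size": "small"}}
```

Because the plan is computed from the declared state rather than from a script of steps, running it again after the changes are applied yields an empty plan, which is what makes the process repeatable.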

5. Implement zero-trust security.

A recent IBM IBV survey found that the typical firm uses eight to nine cloud infrastructure environments, raising security risks and putting sensitive data at risk.

Managing numerous clouds requires zero-trust security, which assumes that a complex network is always vulnerable to both external and internal threats. Zero trust demands a range of security capabilities, including single sign-on (SSO), multifactor authentication, and policies that govern access for all user and privileged accounts. Multicloud security solutions from major CSPs and other cloud service suppliers help control risks and maintain reliability.
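A zero-trust access check can be sketched as "deny by default, verify every request". The Python example below is a simplified illustration under stated assumptions: the roles, resources, and the `ALLOWED` table are invented for the example, and a real deployment would delegate these checks to an identity provider and policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    role: str
    mfa_verified: bool
    resource: str
    action: str


# Hypothetical allow-list of (role, resource, action); anything else is denied.
ALLOWED = {
    ("analyst", "reports", "read"),
    ("admin", "reports", "read"),
    ("admin", "reports", "write"),
}


def authorize(req: Request) -> bool:
    """Deny by default: require MFA and an explicit allow rule on every request."""
    if not req.mfa_verified:
        return False
    return (req.role, req.resource, req.action) in ALLOWED
```

Note that the check runs on every request and never trusts network location, which is the main difference from a perimeter-based model.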

6. Include regulatory and compliance requirements

Enterprises, particularly multinational ones, must comply with regulatory requirements in several countries (e.g., the EU’s General Data Protection Regulation, the US’s AI Bill of Rights). Companies in healthcare, energy, finance, and other sectors must also follow industry-specific regulations.

Industry standards violations may compromise sensitive data and cause legal, financial, and reputational harm. Integrating compliance norms and regulations into the multicloud development and deployment lifecycle reduces these risks and builds customer confidence. CSP compliance technologies that automate compliance updates may be integrated into cloud management platforms to help enterprises meet industry-specific regulatory norms.

7. Optimize costs via FinOps

Multicloud cost optimization plans use methods, tools, and best practices to limit expenses. FinOps, a cloud financial management discipline and cultural practice, helps firms maximize business value in hybrid multicloud settings. Along with FinOps, AI-powered cost-management tools may boost application performance and optimize cloud expenses.

8. Keep improving your multicloud approach

A successful multicloud implementation never ends. Instead, it adapts to business demands and uses cutting-edge technology. Your company may innovate, remain nimble, and stay ahead by reviewing business objectives and cloud service portfolios.

Multicloud benefits

A single platform cannot deliver the variety of services and capabilities of a multicloud. With multicloud, businesses can:

Choose “best-of-breed” cloud computing services to avoid vendor lock-in and its costs.

Gain flexibility by picking the best cloud services for cost, performance, security, and compliance.

Prevent outages and improve resilience with backups and redundancy for data, processes, and systems.

Control shadow IT with multicloud visibility.

IBM and multicloud

Companies will increasingly use hybrid multicloud solutions for infrastructure, platforms, and apps. According to IDC, global spending on public cloud provider services will reach $1.35 trillion in 2027.

IBM helps firms establish effective hybrid multicloud management strategies as a worldwide leader in hybrid cloud, AI, and consultancy. IBM collaborates with AWS, Microsoft Azure, and Google Cloud Platform to provide your company with the finest cloud-based services to compete in today’s fast-paced digital economy.

Read more on Govindhtech.com

#Hybridmulticloud#generativeAI#machinelearning#cloudservices#multicloudarchitecture#MicrosoftAzure#GoogleCloud#technews#technology


What is Cloud Migration? Strategies, Checklist, & FAQs

Cloud migration is the key to successful cloud adoption. However, there is a lot you need to understand before you think about migrating to the cloud. This cloud migration guide covers everything you need to know before planning your migration journey to the cloud for a smooth transition.

Virtual management of an organization’s infrastructure is essential for ensuring anytime, anywhere access. Also, the evolving remote work culture means that organizations need to move to the cloud to ensure business continuity, which, in turn, emphasizes the need for planning, strategizing, and executing cloud migration.

Types of Cloud Migration Tools

Organizations must prepare for this significant shift, and that is where on-prem to cloud migration tools come to the rescue. These tools keep the migration fast and effective without affecting the organization’s routine operations.

Here are the types of cloud migration tools:

1. SaaS tools

SaaS (software-as-a-service) tools act as a bridge between on-premises applications and cloud storage destinations, transferring the data safely. The good thing about these SaaS tools is that they are automated and user-friendly.

Here are a few examples of SaaS tools:

CloudEndure: CloudEndure is a SaaS-based cloud migration tool that lets organizations simplify, expedite, and automate migrating their applications and data to the cloud.

Azure Migrate: Azure Migrate is a Microsoft service that offers a centralized hub to assess and migrate on-premises data, applications, and infrastructure to Azure.

2. Open-source tools

These tools are free of cost, and anyone can use them. However, there’s a catch! Your technical team should be able to customize these tools to cater to your business and functional requirements.

Here are a few examples of open-source tools for cloud migration:

CloudEndure: CloudEndure is listed here as well, although it is a proprietary AWS-owned service rather than open-source software. It offers live migration and disaster recovery for physical, virtual, and cloud-based workloads.

Apache Kafka: Apache Kafka is an open-source messaging system that you can use for real-time data processing, streaming, and migration. You can move data between different systems, including cloud platforms.

3. Batch processing

Batch processing tools transfer large volumes of data. They are automated to run at scheduled intervals, which helps avoid network congestion.
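The batching idea can be sketched in a few lines of Python: split the data into fixed-size chunks and pause between transfers. This is a generic illustration, not the API of any tool named in this post. `batches` and `transfer_in_batches` are hypothetical helpers, and `send` stands in for whatever upload call a real tool would make.

```python
import time
from itertools import islice
from typing import Callable, Iterable, Iterator


def batches(records: Iterable, size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` records."""
    if size < 1:
        raise ValueError("size must be >= 1")
    iterator = iter(records)
    while chunk := list(islice(iterator, size)):
        yield chunk


def transfer_in_batches(
    records: Iterable,
    size: int,
    send: Callable[[list], None],
    pause_seconds: float = 0.0,
) -> int:
    """Send records batch by batch, pausing between batches; return batch count."""
    count = 0
    for chunk in batches(records, size):
        send(chunk)
        count += 1
        time.sleep(pause_seconds)  # spacing transfers out eases network load
    return count
```

Tuning the batch size and the pause is a trade-off between total migration time and the bandwidth left for day-to-day operations.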

Here are a few popular batch-processing tools for cloud migration:

AWS Batch: AWS Batch is a fully managed batch processing service running batch computing workloads on the AWS Cloud. You can use it to process large volumes of data in parallel, making it an ideal tool for cloud migration.

Apache NiFi: Apache NiFi is an open-source data integration, processing, and routing tool. It can simplify migration by offering a visual interface for designing data flows and processing pipelines.

Apache Spark: Apache Spark is an open-source distributed computing system for large-scale data processing. You can use it to process large volumes of data in parallel, making it an ideal tool for cloud migration.

Azure Batch: Azure Batch is a fully managed batch processing service for running batch computing workloads on Azure. It suits cloud migration because it can process large volumes of data in parallel.

Google Cloud Dataflow: Google Cloud Dataflow is a fully managed batch and stream data processing service. Visit Net Solutions for the full insights.


How are organizations winning with Snowflake?

Cloud has evolved considerably over the last decade, giving confidence to organizations still relying on legacy systems for their analytics. There is an abundance of choices for organizations with immediate or specific data management requirements.

This blog is for any organization looking for a data warehouse in the cloud. It introduces Snowflake, a cloud data platform, and shows how it fits if you are thinking of migrating to a new cloud data warehouse.

The cloud data warehouse market is a very competitive space, but it is also characterized by the specialized offerings of different players. Amazon Redshift, Azure SQL Data Warehouse, and Google BigQuery are among the many alternatives available in a rapidly advancing data warehousing market valued at over 18 billion USD.

To help get you there, let's look at some of the key ways to establish a sustainable and adaptive enterprise data warehouse with Snowflake solutions.

#1 Rebuilding

Many customers moving from on-prem to cloud ask, "Can I leverage my present infrastructure standards and best practices, such as database and user management, DevOps, and security?" This raises a valid concern about rebuilding policies from scratch, but it's essential to adapt to new technological advancements and new business opportunities. And that may, in fact, require some rebuilding. If you took an engine from a 1985 Ford and installed it in a 2019 Ferrari, would you expect the same performance?

It's essential to make choices not because "that's how we've always done it," but because those choices will help you adopt new technology and bring agility to business processes and applications. Major areas to review include policies, user management, sandbox setups, data loading practices, ETL frameworks, tools, and codebase.

#2 Right Data Modelling

Snowflake serves multiple purposes: data mart, data lake, data warehouse, database, and ODS. It also supports numerous modeling techniques, such as Snowflake, Star, BEAM, and Data Vault.

Snowflake can also support "schema on write" and "schema on read." This flexibility sometimes creates confusion about how to position Snowflake properly.

The solution is to let your usage patterns drive your data model. Think about how you foresee your business applications and data consumers leveraging data assets in Snowflake. This will help you organize your data and resources to get the best results from Snowflake.

Here's an example. In complex use cases, it's usually a good practice to develop composite solutions involving:

Layer1 as Data Lake to ingest all the raw structured and semi-structured data.

Layer2 as ODS to store staged and validated data.

Layer3 as Data Warehouse for storing cleansed, categorized, normalized and transformed data.

Layer4 as Data Mart to deliver targeted data assets to end consumers and applications.
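The four layers above can be pictured as successive transformations of the same records. The Python sketch below walks a few made-up rows through them. The field names, validation rules, and function names are assumptions for illustration only, and in practice each layer would be a set of Snowflake tables or views rather than Python lists.

```python
# Layer 1 (data lake): raw records kept exactly as received.
RAW_EVENTS = [
    {"id": "1", "amount": "10.5", "region": "EU"},
    {"id": "2", "amount": "oops", "region": "US"},  # malformed amount
    {"id": "3", "amount": "4.5", "region": "eu "},
]


def is_valid(row: dict) -> bool:
    """A row is valid if id and amount parse and region is non-empty."""
    try:
        int(row["id"])
        float(row["amount"])
    except (KeyError, ValueError):
        return False
    return bool(row.get("region", "").strip())


def to_ods(raw_rows: list) -> list:
    """Layer 2 (ODS): keep only staged rows that pass validation."""
    return [row for row in raw_rows if is_valid(row)]


def to_warehouse(staged_rows: list) -> list:
    """Layer 3 (warehouse): cast types and normalize values."""
    return [
        {
            "id": int(row["id"]),
            "amount": float(row["amount"]),
            "region": row["region"].strip().upper(),
        }
        for row in staged_rows
    ]


def to_mart(rows: list) -> dict:
    """Layer 4 (data mart): aggregate into a consumer-facing summary."""
    totals: dict = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals
```

Keeping the raw layer untouched means a bug in a later layer can be fixed and the downstream data rebuilt without re-ingesting from the source systems.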

#3 Ingestion and integration

Snowflake adapts seamlessly to various data integration patterns, including batch (e.g., fixed schedule), near real-time (e.g., event-based), and real-time (e.g., streaming). To find the best pattern, collate your data loading use cases. Organizations may end up combining all the patterns: data received on a fixed schedule goes through a static batch process, while data that is readily available uses dynamic patterns. Assess your data sourcing needs and delivery SLAs to map them to a proper ingestion pattern.

Also, account for your future use cases. For instance, "data X" is received by 11am daily, so it's good to schedule a batch workflow running at 11am, right? But what if it were ingested by an event-based workflow instead? Wouldn't that deliver data faster, improve your SLA, replace a static dependency with an automated mechanism, and avoid extra effort when delays happen? Try to think through as many scenarios as you can.
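The 11am example can be worked through with simple arithmetic. The Python sketch below compares when data becomes available under a once-daily batch run versus an event-based trigger. Times are minutes after midnight, the five-minute processing time is an assumed figure, and both function names are hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def batch_ready_at(arrival: int, scheduled: int, processing: int) -> int:
    """Minute (counted from midnight of day 0) when a once-daily batch delivers.

    Data that arrives after today's scheduled run waits for tomorrow's run.
    """
    run_start = scheduled if arrival <= scheduled else scheduled + MINUTES_PER_DAY
    return run_start + processing


def event_ready_at(arrival: int, processing: int) -> int:
    """An event-based workflow starts as soon as the data arrives."""
    return arrival + processing
```

Under these assumptions, data arriving at 10:40 is ready at 11:05 with the 11am batch but at 10:45 with an event trigger. If the file slips to 11:20, the batch run misses it until the next day, while the event-based flow still delivers five minutes after arrival.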

Once integration patterns are known, ETL tooling comes next. Snowflake works with many integration partners and tools, such as Informatica, Talend, Matillion, Polestar solutions, SnapLogic, and more. Many of them have also built native connectors for Snowflake. Snowflake also supports tool-free integration using open-source languages such as Python.

To choose the right integration platform, evaluate these tools against your processing requirements, data volume, and usage. Also, examine whether a tool can process in memory and perform SQL pushdown (leveraging the Snowflake warehouse for processing). Pushdown is a great help in Big Data use cases, as it removes the bottleneck of the tool's own memory.
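The difference between in-memory processing and SQL pushdown can be shown with a small Python sketch. It uses the standard-library `sqlite3` module as a stand-in for the warehouse, purely so the example runs anywhere. The `sales` table and function names are invented for illustration, and a real integration would issue the same kind of SQL through a Snowflake connection.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database standing in for the warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("EU", 10.0), ("EU", 5.0), ("US", 7.0)],
    )
    return conn


def totals_in_tool_memory(conn: sqlite3.Connection) -> dict:
    """Pull every row into the tool, then aggregate there."""
    totals: dict = {}
    for region, amount in conn.execute("SELECT region, amount FROM sales"):
        totals[region] = totals.get(region, 0.0) + amount
    return totals


def totals_pushed_down(conn: sqlite3.Connection) -> dict:
    """Let the database aggregate; only one row per region crosses the wire."""
    query = "SELECT region, SUM(amount) FROM sales GROUP BY region"
    return dict(conn.execute(query))
```

Both functions return the same totals, but the pushed-down version transfers one row per region instead of every source row, so the tool's memory stops being the limit as data volume grows.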

#4 Managing Snowflake

Here are a few things to know after Snowflake is up and running:

Security practices. Establish strong security practices for your organization: leverage Snowflake role-based access control (RBAC) over discretionary access control (DAC). Snowflake also supports SSO and federated authentication, integrating with third-party services such as Active Directory and Okta.

Access management. Identify user groups, needed roles, and privileges to define a hierarchical structure for your applications and users.

Resource monitors. Snowflake offers virtually unlimited compute and scalable storage. The tradeoff is that you must establish monitoring and control protocols to keep your operating budget under control. The two primary controls here are:

Snowflake warehouse configuration. It's typically best to create separate Snowflake warehouses for each user, group, business area, or application. This helps manage billing and chargeback when required. For further governance, assign roles specific to warehouse actions (monitor, access, update, create) so that only designated users can alter or create warehouses.

Billing alerts. These help with monitoring and taking the right actions at the right time. Define Resource Monitors to help monitor your cost and avoid billing overage. You can customize these alerts and actions based on different threshold scenarios. Actions range from simple email warnings to suspending a warehouse.
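The threshold logic behind such alerts can be sketched in Python. This is a simulation of the idea only, not Snowflake's implementation: the credit quota, the percentage thresholds, and the action names are example values, and `triggered_actions` is a hypothetical helper.

```python
def triggered_actions(credits_used: float, credit_quota: float, triggers: list) -> list:
    """Return the actions whose percentage threshold has been reached.

    `triggers` is a list of (percent_threshold, action) pairs.
    """
    if credit_quota <= 0:
        raise ValueError("credit_quota must be positive")
    percent_used = 100.0 * credits_used / credit_quota
    return [action for threshold, action in sorted(triggers) if percent_used >= threshold]


# Example policy: warn at 75% of the quota, suspend the warehouse at 100%.
EXAMPLE_TRIGGERS = [(75, "notify"), (100, "suspend")]
```

Separating a warning threshold from a suspend threshold gives teams time to react before workloads are actually stopped.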

Final Thoughts

If you have an IoT solutions database or a diverse data ecosystem, you will need a cloud-based data warehouse that offers scalability, ease of use, and room to grow. And you will need a data integration solution that is optimized for cloud operation. Using Stitch to extract and load data makes migration simple, and users can run transformations on data stored within Snowflake.

As a Snowflake Partner, we help organizations assess their data management requirements and quantify their storage needs. If you have an on-premise DW, our data and cloud experts help you migrate without any downtime or loss of data or logic. Further, our Snowflake solutions enable data analysis and visualization for quick decision-making to maximize the returns on your investment.


Unlock the Power of Cloud with Goognu’s Azure Consulting Services

In today’s fast-paced digital world, businesses must leverage the cloud to stay competitive and drive efficiency. Goognu’s Azure Consulting Services help organizations seamlessly migrate, optimize, and manage their cloud infrastructure on Microsoft Azure. Our team of certified Azure experts ensures businesses maximize their cloud investment while maintaining security, scalability, and cost-efficiency.

Why Choose Microsoft Azure?

Microsoft Azure is a powerful and flexible cloud platform offering a vast array of services to help businesses scale and innovate. Key benefits include:

· Scalability & Flexibility: Easily scale resources based on demand.

· Enterprise-Grade Security: Built-in compliance and threat protection.

· AI & Analytics Integration: Gain real-time business insights with AI-driven tools.

· Hybrid Cloud Capabilities: Seamless integration with on-premises and multi-cloud environments.

· Cost Optimization: Pay-as-you-go model ensures cost efficiency.

Why Choose Goognu for Azure Consulting Services?

At Goognu, we specialize in Azure Consulting Services, ensuring businesses get tailored cloud solutions for their unique needs. Our expertise covers:

· Certified Azure Professionals: Extensive experience in Azure architecture and cloud optimization.

· End-to-End Cloud Consulting: Strategy, migration, security, and optimization services.

· Customized Solutions: Industry-specific cloud strategies.

· Multi-Cloud & Hybrid Cloud Expertise: Integration across AWS, GCP, and on-prem systems.

· 24/7 Support & Monitoring: Proactive issue resolution and cloud management.

Our Comprehensive Azure Consulting Services

1. Azure Cloud Strategy & Assessment

We help businesses evaluate their cloud readiness and develop a tailored Azure roadmap, including:

· Cloud readiness assessment.

· Strategic planning for Azure adoption.

· Security and compliance recommendations.

2. Azure Cloud Migration Services

Seamlessly transition your applications, data, and workloads to Azure with minimal downtime. Our services include:

· Lift-and-shift migrations.

· Application modernization for Azure-native solutions.

· Secure data migration with compliance adherence.

3. Azure Infrastructure Optimization

Enhance performance and cost efficiency with optimized Azure infrastructure. We provide:

· Automated scaling for dynamic workloads.

· AI-driven analytics for cloud cost optimization.

· Performance monitoring and predictive insights.

4. Azure DevOps & Automation

Accelerate development with Azure Consulting Services and DevOps Consulting Services. Our consulting solutions include:

· Implementation of CI/CD pipelines.

· Infrastructure as Code (IaC) automation.

· Integration of DevOps tools and automation workflows.

5. Azure Security & Compliance

Protect your cloud infrastructure with advanced security solutions. Our services include:

· Identity and Access Management (IAM) implementation.

· Threat detection and encryption protocols.

· Compliance with GDPR, HIPAA, and ISO standards.

6. Azure Cost Management & Optimization

Maximize your cloud investment with cost-saving strategies. We help businesses:

· Identify underutilized resources and optimize costs.

· Implement governance policies for budget control.

· Monitor spending trends with real-time analytics.

Key Benefits of Goognu’s Azure Consulting Services

Faster Cloud Adoption

Accelerate your Azure transformation with a structured migration approach.

Enhanced Business Agility

Scale your applications effortlessly to meet evolving business needs.

Strengthened Security & Compliance

Leverage Azure’s robust security framework to protect business data.

Cost-Efficient Cloud Operations

Optimize cloud spending while maintaining peak performance.

AI & Automation-Driven Efficiency

Enhance productivity with AI-powered insights and automation.

Success Stories

Case Study 1: Azure Cloud Transformation for a Financial Firm

A global financial institution partnered with Goognu to migrate its workloads to Azure. Results:

· 30% reduction in cloud costs through optimization.

· Zero downtime migration, ensuring business continuity.

· Enhanced security compliance with Azure’s security framework.

Case Study 2: Scaling Retail Operations with Azure

A leading e-commerce company utilized Azure Consulting Services for cloud scalability. Key achievements:

· 50% faster page load times due to optimized cloud performance.

· Automated CI/CD pipelines, reducing deployment time by 60%.

· Enhanced customer experience through improved cloud availability.

Why Is Azure the Future of Cloud Computing?

With the rise of digital transformation, Azure Consulting Services and DevOps Consulting Services are crucial for businesses looking to:

· Leverage AI & Big Data: Gain insights from data analytics and AI tools.

· Ensure High Availability: Use Azure’s global infrastructure for reliability.

· Maintain Compliance: Meet industry-specific regulatory requirements.

· Adopt Multi-Cloud Strategies: Seamlessly integrate Azure with other cloud platforms.

Take the Next Step in Your Azure Journey

Are you ready to unlock the full potential of Azure Consulting Services? Partner with Goognu to accelerate cloud transformation, optimize costs, and enhance security. Whether you’re migrating to Azure, modernizing applications, or securing cloud infrastructure, we provide end-to-end consulting solutions tailored to your business.

Contact us today for a free consultation and discover how Goognu’s Azure Consulting Services can transform your business.
