#postgres database docker

Explore tagged Tumblr posts

Text

youtube

#youtube#video#codeonedigest#microservices#microservice#docker#nodejs module#nodejs express#node js#nodejs#node js training#node js express#node js development company#node js development services#node js developers#node js application#postgres database#postgres tutorial#postgresql#postgres#docker image#docker container#docker tutorial#dockerfile#docker course

0 notes

Text

chat I'm testing a few systems & need a simple application with postgres database & ideally docker compose ready to go. any recommendations?

8 notes

Text

if my goal with this project was just "make a website" I would just slap together some html, css, and maybe a little bit of javascript for flair and call it a day. I'd probably be done in 2-3 days tops. but instead I have to practice and make myself "employable" and that means smashing together as many languages and frameworks and technologies as possible to show employers that I'm capable of everything they want and more. so I'm developing apis in java that fetch data from a postgres database using spring boot with authentication from spring security, while coding the front end in typescript via an angular project served by nginx with https support and cloudflare protection, with all of these microservices running in their own docker containers.

basically what that means is I get to spend very little time actually programming and a whole lot of time figuring out how the hell to make all these things play nice together - and let me tell you, they do NOT fucking want to.

but on the bright side, I do actually feel like I'm learning a lot by doing this, and hopefully by the time I'm done, I'll have something really cool that I can show off

8 notes

Text

When you attempt to validate that a data pipeline is loading data into a postgres database, but you are unable to find the configuration tables that you stuffed into the same database out of expediency, let alone the data that was supposed to be loaded, don't be surprised if you find out after hours of troubleshooting that your local postgres server was running.

Further, don't be surprised if that local server was running, and despite the pgadmin connection string being correctly pointed to localhost:5432 (docker can use the same binding), your pgadmin decides to connect you to the local server with the same database name, database user name, and database user password.

Lessons learned:

try to use unique database names with distinct users and passwords across all users involved in order to avoid this tomfoolery in the future, EVEN IN TEST, ESPECIALLY IN TEST (i don't really have a 'prod' environment, homelab and all that, but holy fuck)

do not slam dunk everything into a database named 'toilet' while playing around with database schemas in order to solidify your transformation logic, and then leave your local instance running.

do not, in your docker-compose.yml file, also name the database you are storing data into, 'toilet', on the same port, and then get confused why the docker container database is showing new entries from the DAG load functionality, but you cannot validate through pgadmin.
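A minimal sketch of a pre-flight check for the situation above, assuming the default port 5432 from the post: before starting the compose stack, ask whether anything on the host already accepts connections on that port. The function name `port_in_use` is made up for illustration, and the check only says that something is listening, not which server it is.

```python
import socket


def port_in_use(host: str = "localhost", port: int = 5432, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # A successful connect means a server (local postgres, a container, ...) is listening.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused or timed out: nothing reachable on that port.
        return False


if __name__ == "__main__":
    if port_in_use():
        print("Something is already listening on localhost:5432; is a local postgres running?")
    else:
        print("Port 5432 looks free.")
```

Running this before `docker-compose up` would have flagged the forgotten local instance; publishing the container on a different host port is another way to keep the two servers apart.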

3 notes

Text

Using Docker in Software Development

Docker has become a vital tool in modern software development. It allows developers to package applications with all their dependencies into lightweight, portable containers. Whether you're building web applications, APIs, or microservices, Docker can simplify development, testing, and deployment.

What is Docker?

Docker is an open-source platform that enables you to build, ship, and run applications inside containers. Containers are isolated environments that contain everything your app needs—code, libraries, configuration files, and more—ensuring consistent behavior across development and production.

Why Use Docker?

Consistency: Run your app the same way in every environment.

Isolation: Avoid dependency conflicts between projects.

Portability: Docker containers work on any system that supports Docker.

Scalability: Easily scale containerized apps using orchestration tools like Kubernetes.

Faster Development: Spin up and tear down environments quickly.

Basic Docker Concepts

Image: A read-only template from which containers are created. Think of it like a blueprint.

Container: A running instance of an image.

Dockerfile: A text file with instructions to build an image.

Volume: A persistent data storage system for containers.

Docker Hub: A cloud-based registry for storing and sharing Docker images.

Example: Dockerizing a Simple Python App

Let’s say you have a Python app called app.py:

    # app.py
    print("Hello from Docker!")

Create a Dockerfile:

    # Dockerfile
    FROM python:3.10-slim
    COPY app.py .
    CMD ["python", "app.py"]

Then build and run your Docker container:

    docker build -t hello-docker .
    docker run hello-docker

This will print Hello from Docker! in your terminal.

Popular Use Cases

Running databases (MySQL, PostgreSQL, MongoDB)

Hosting development environments

CI/CD pipelines

Deploying microservices

Local testing for APIs and apps

Essential Docker Commands

docker build -t <name> . — Build an image from a Dockerfile

docker run <image> — Run a container from an image

docker ps — List running containers

docker stop <container_id> — Stop a running container

docker exec -it <container_id> bash — Access the container shell

Docker Compose

Docker Compose allows you to run multi-container apps easily. Define all your services in a single docker-compose.yml file and launch them with one command:

    version: '3'
    services:
      web:
        build: .
        ports:
          - "5000:5000"
      db:
        image: postgres
        environment:
          # The official postgres image will not start without a password; replace this placeholder
          POSTGRES_PASSWORD: example

Start everything with:

    docker-compose up

Best Practices

Use lightweight base images (e.g., Alpine)

Keep your Dockerfiles clean and minimal

Ignore unnecessary files with .dockerignore

Use multi-stage builds for smaller images

Regularly clean up unused images and containers

Conclusion

Docker empowers developers to work smarter, not harder. It eliminates "it works on my machine" problems and simplifies the development lifecycle. Once you start using Docker, you'll wonder how you ever lived without it!

0 notes

Text

Using Docker for Full Stack Development and Deployment

1. Introduction to Docker

What is Docker? Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. A container packages your application and its dependencies, ensuring it runs consistently across different computing environments.

Containers vs Virtual Machines (VMs)

Containers are lightweight and use fewer resources than VMs because they share the host operating system’s kernel, while VMs simulate an entire operating system. Containers are more efficient and easier to deploy.

Docker containers provide faster startup times, less overhead, and portability across development, staging, and production environments.

Benefits of Docker in Full Stack Development

Portability: Docker ensures that your application runs the same way regardless of the environment (dev, test, or production).

Consistency: Developers can share Dockerfiles to create identical environments for different developers.

Scalability: Docker containers can be quickly replicated, allowing your application to scale horizontally without a lot of overhead.

Isolation: Docker containers provide isolated environments for each part of your application, ensuring that dependencies don’t conflict.

2. Setting Up Docker for Full Stack Applications

Installing Docker and Docker Compose

Docker can be installed on Windows, macOS, and Linux. Docker Compose, which simplifies multi-container management, is bundled with Docker Desktop and available as a plugin for Docker Engine on Linux.

Commands:

docker --version to check the installed Docker version.

docker-compose --version to check the Docker Compose version.

Setting Up Project Structure

Organize your project into different directories (e.g., /frontend, /backend, /db).

Each service will have its own Dockerfile and configuration file for Docker Compose.

3. Creating Dockerfiles for Frontend and Backend

Dockerfile for the Frontend:

For a React/Angular app:

Dockerfile

    FROM node:14
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["npm", "start"]

This Dockerfile installs Node.js dependencies, copies the application, exposes the appropriate port, and starts the server.

Dockerfile for the Backend:

For a Python Flask app

Dockerfile

    FROM python:3.9
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .
    EXPOSE 5000
    CMD ["python", "app.py"]

For a Java Spring Boot app:

Dockerfile

    FROM openjdk:11
    WORKDIR /app
    COPY target/my-app.jar my-app.jar
    EXPOSE 8080
    CMD ["java", "-jar", "my-app.jar"]

This Dockerfile copies the pre-built JAR into the image, exposes port 8080, and runs the app. The Flask Dockerfile above it installs the Python dependencies, copies the code, and exposes port 5000.

4. Docker Compose for Multi-Container Applications

What is Docker Compose? Docker Compose is a tool for defining and running multi-container Docker applications. With a docker-compose.yml file, you can configure services, networks, and volumes.

docker-compose.yml Example:

yaml

    version: "3"
    services:
      frontend:
        build:
          context: ./frontend
        ports:
          - "3000:3000"
      backend:
        build:
          context: ./backend
        ports:
          - "5000:5000"
        depends_on:
          - db
      db:
        image: postgres
        environment:
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
          POSTGRES_DB: mydb

This YAML file defines three services: frontend, backend, and a PostgreSQL database. It also sets up networking and environment variables.

5. Building and Running Docker Containers

Building Docker Images:

Use docker build -t <image_name> <path> to build images.

For example:

bash

    docker build -t frontend ./frontend
    docker build -t backend ./backend

Running Containers:

You can run individual containers using docker run or use Docker Compose to start all services:

bash

docker-compose up

Use docker ps to list running containers, and docker logs <container_id> to check logs.

Stopping and Removing Containers:

Use docker stop <container_id> and docker rm <container_id> to stop and remove containers.

With Docker Compose: docker-compose down to stop and remove all services.

6. Dockerizing Databases

Running Databases in Docker:

You can easily run databases like PostgreSQL, MySQL, or MongoDB as Docker containers.

Example for PostgreSQL in docker-compose.yml:

yaml

    db:
      image: postgres
      environment:
        POSTGRES_USER: user
        POSTGRES_PASSWORD: password
        POSTGRES_DB: mydb

Persistent Storage with Docker Volumes:

Use Docker volumes to persist database data even when containers are stopped or removed:

yaml

    volumes:
      - db_data:/var/lib/postgresql/data

Define the volume at the bottom of the file:

yaml

    volumes:
      db_data:

Connecting Backend to Databases:

Your backend services can access databases via Docker networking. In the backend service, refer to the database by its service name (e.g., db).
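A minimal sketch of what this looks like from the backend's side, assuming the Compose example from section 4 (service name `db`, database `mydb`). The `DB_HOST` and `DB_PORT` variable names and the `database_url` helper are hypothetical and only illustrate the idea. The host defaults to the service name rather than `localhost`, because inside the backend container `localhost` refers to the backend container itself.

```python
import os
from urllib.parse import quote


def database_url(env=os.environ) -> str:
    """Build a PostgreSQL connection URL that targets the Compose service name."""
    host = env.get("DB_HOST", "db")          # hypothetical variable; "db" is the service name
    port = env.get("DB_PORT", "5432")        # hypothetical variable; 5432 is the postgres default
    user = quote(env.get("POSTGRES_USER", "user"), safe="")
    # Percent-encode the password so characters like "@" or "/" do not break the URL.
    password = quote(env.get("POSTGRES_PASSWORD", "password"), safe="")
    name = env.get("POSTGRES_DB", "mydb")
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"


if __name__ == "__main__":
    print(database_url())
```

The resulting string can be handed to whichever database driver or ORM the backend uses.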

7. Continuous Integration and Deployment (CI/CD) with Docker

Setting Up a CI/CD Pipeline:

Use Docker in CI/CD pipelines to ensure consistency across environments.

Example: GitHub Actions or Jenkins pipeline using Docker to build and push images.

Example .github/workflows/docker.yml:

yaml

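    name: CI/CD Pipeline
    on: [push]
    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Code
            uses: actions/checkout@v4
          - name: Build Docker Image
            run: docker build -t myapp .
          # Pushing only succeeds after a registry login step and with a
          # registry-qualified image tag; both are omitted in this example.
          - name: Push Docker Image
            run: docker push myapp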

Automating Deployment:

Once images are built and pushed to a Docker registry (e.g., Docker Hub, Amazon ECR), they can be pulled into your production or staging environment.

8. Scaling Applications with Docker

Docker Swarm for Orchestration:

Docker Swarm is a native clustering and orchestration tool for Docker. You can scale your services by specifying the number of replicas.

Example:

bash

docker service scale myapp=5

Kubernetes for Advanced Orchestration:

Kubernetes (K8s) is more complex but offers greater scalability and fault tolerance. It can manage Docker containers at scale.

Load Balancing and Service Discovery:

Use Docker Swarm or Kubernetes to automatically load balance traffic to different container replicas.

9. Best Practices

Optimizing Docker Images:

Use smaller base images (e.g., alpine images) to reduce image size.

Use multi-stage builds to avoid unnecessary dependencies in the final image.

Environment Variables and Secrets Management:

Store sensitive data like API keys or database credentials in Docker secrets or environment variables rather than hardcoding them.
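As a rough sketch of that advice, the helper below first looks for a secret file and then falls back to an environment variable. It assumes secrets are mounted as files under `/run/secrets/<name>`, which is where Docker places them for Swarm services and Compose secrets; the `read_secret` name and the upper-cased variable fallback are illustrative choices, not part of any Docker API.

```python
import os
from pathlib import Path
from typing import Optional


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> Optional[str]:
    """Return the secret from a mounted file, else from an environment variable."""
    path = Path(secrets_dir) / name
    if path.is_file():
        # Secret files often end with a trailing newline; strip it.
        return path.read_text().strip()
    # Fallback for local development: db_password -> DB_PASSWORD
    return os.environ.get(name.upper())


if __name__ == "__main__":
    password = read_secret("db_password")
    print("secret found" if password else "secret missing")
```

Either way the value stays out of the image and out of source control, which is the point of the recommendation.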

Logging and Monitoring:

Use tools like Docker’s built-in logging drivers, or integrate with ELK stack (Elasticsearch, Logstash, Kibana) for advanced logging.

For monitoring, tools like Prometheus and Grafana can be used to track Docker container metrics.

10. Conclusion

Why Use Docker in Full Stack Development? Docker simplifies the management of complex full-stack applications by ensuring consistent environments across all stages of development. It also offers significant performance benefits and scalability options.

Recommendations:

Integrate Docker with CI/CD pipelines for automated builds and deployment.

Use Docker for microservices architectures, where it makes individual services easier to scale and manage.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/

0 notes

Text

Updating a Tiny Tiny RSS install behind a reverse proxy

Screenshot of my Tiny Tiny RSS install on May 7th 2024 after a long struggle with 502 errors.

I had a hard time when trying to update my Tiny Tiny RSS instance running as a Docker container behind Nginx as reverse proxy. I experienced a lot of nasty 502 errors because the container did not return proper data to Nginx. I fixed it in the following manner:

First I deleted all the containers and images. I did it with

    docker rm -vf $(docker ps -aq)
    docker rmi -f $(docker images -aq)
    docker system prune -af

Attention! This deletes all Docker images! Even those not related to Tiny Tiny RSS. No problem in my case. It only keeps the persistent volumes. If you want to keep other images you have to remove the Tiny Tiny RSS ones separately.

The second issue is simple and not really one for me. The Tiny Tiny RSS docs still call Docker Compose with a hyphen: $ docker-compose version. This is not valid for up-to-date installs where the hyphen has to be omitted: $ docker compose version.

The third and biggest issue is that the Git Tiny Tiny RSS repository for Docker Compose does not exist anymore. The files have to be pulled from the master branch of the main repository https://git.tt-rss.org/fox/tt-rss.git/. The docker-compose.yml has to be changed afterwards since the one in the repository is for development purposes only.

The PostgreSQL database is located in a persistent volume. It is not possible to install a newer PostgreSQL version over it. Therefore you have to edit the docker-compose.yml and change the database image image: postgres:15-alpine to image: postgres:12-alpine.

And then the data in the PostgreSQL volume were owned by a user named 70. Change it to root.

Now my Tiny Tiny RSS runs again as expected.

0 notes

Text

2024 New Year Resolution

To keep my hopes high for this year, I will be focusing more on Backend. So I'm setting some goals that I want to reach this year.

Checklist

Q1

Data Structure Algorithm

Java Spring Boot

Q2

AWS Cloud Practitioner Certificate

Docker

My Current Stack

Frontend: HTML / CSS / JS (Vue | Angular | React | Svelte), TypeScript / Dart (Flutter)

Backend: Python (Flask), PHP (Laravel), Node.js (Express, Nest and Koa)

Database: MongoDB, Postgres, SQLite, Redis

Short Summary

I started learning programming back in 2008. So it's been a long journey. I initially learned Python to write games via PyGame, and ActionScript 2.0 to modify the source code of Flash games.

1 note

Text

Let's do Fly and Bun🚀

0. Sample Bun App

1. Install flyctl

$ brew install flyctl

    $ fly version
    fly v0.1.56 darwin/amd64 Commit: 7981f99ff550f66def5bbd9374db3d413310954f-dirty BuildDate: 2023-07-12T20:27:19Z

    $ fly help
    Deploying apps and machines:
      apps        Manage apps
      machine     Commands that manage machines
      launch      Create and configure a new app from source code or a Docker image.
      deploy      Deploy Fly applications
      destroy     Permanently destroys an app
      open        Open browser to current deployed application

    Scaling and configuring:
      scale       Scale app resources
      regions     V1 APPS ONLY: Manage regions
      secrets     Manage application secrets with the set and unset commands.

    Provisioning storage:
      volumes     Volume management commands
      mysql       Provision and manage PlanetScale MySQL databases
      postgres    Manage Postgres clusters.
      redis       Launch and manage Redis databases managed by Upstash.com
      consul      Enable and manage Consul clusters

    Networking configuration:
      ips         Manage IP addresses for apps
      wireguard   Commands that manage WireGuard peer connections
      proxy       Proxies connections to a fly VM
      certs       Manage certificates

    Monitoring and managing things:
      logs        View app logs
      status      Show app status
      dashboard   Open web browser on Fly Web UI for this app
      dig         Make DNS requests against Fly.io's internal DNS server
      ping        Test connectivity with ICMP ping messages
      ssh         Use SSH to login to or run commands on VMs
      sftp        Get or put files from a remote VM.

    Platform overview:
      platform    Fly platform information

    Access control:
      orgs        Commands for managing Fly organizations
      auth        Manage authentication
      move        Move an app to another organization

    More help:
      docs        View Fly documentation
      doctor      The DOCTOR command allows you to debug your Fly environment
      help commands   A complete list of commands (there are a bunch more)

2. Sign up

$ fly auth signup

or

$ fly auth login

3. Launch App

    Creating app in /Users/yanagiharas/works/bun/bun-getting-started/quickstart
    Scanning source code
    Detected a Bun app
    ? Choose an app name (leave blank to generate one): hello-bun

4. Dashboard

0 notes

Text

TeamTNT's Silentbob Botnet Infecting 196 Hosts in Cloud Attack Campaign

The Hacker News : As many as 196 hosts have been infected as part of an aggressive cloud campaign mounted by the TeamTNT group called Silentbob. "The botnet run by TeamTNT has set its sights on Docker and Kubernetes environments, Redis servers, Postgres databases, Hadoop clusters, Tomcat and Nginx servers, Weave Scope, SSH, and Jupyter applications," Aqua security researchers Ofek Itach and Assaf Morag said in a http://dlvr.it/Ss7lDV Posted by : Mohit Kumar ( Hacker )

0 notes

Text

Senior Software Engineer - Remote

Company: Garner Health

Garner's mission is to transform the healthcare economy, delivering high quality and affordable care for all. By helping employers restructure their healthcare benefit to provide clear incentives and data-driven insights, we direct employees to higher quality and lower cost healthcare providers. The result is that patients get better health outcomes while doctors are rewarded for practicing well, not performing more procedures. We are backed by top-tier venture capital firms, are growing rapidly and looking to expand our team.

We are looking for a Senior Software Engineer to join our team and help us revolutionize how people find top doctors in their area and get their medical claims reimbursed promptly. You will work closely with a talented product and design team, stay up-to-date with the latest technologies, and contribute to a fast-paced, innovative environment. The ideal candidate for this role should be able to deliver across the tech stack and is eager to learn and apply new techniques and technologies. This position is fully remote.

Main Responsibilities:
- Design, build and launch new features and improve the overall quality of the Garner app
- Collaborate across disciplines to understand our users and iterate on new ideas
- Protect our users’ privacy and security through secure coding practices
- Support the Garner app in production

Our software kit: TypeScript, React, Emotion, NodeJS, Kubernetes, Istio, Postgres, ElasticSearch, NATS, AWS, Terraform

Ideal Qualifications:
- 6+ years hands-on work delivering full-stack software
- Expertise in TypeScript (preferred) or modern JavaScript
- Familiarity with React, NodeJS, Docker, and a major cloud provider
- Experience with one or more database systems, especially PostgreSQL or ElasticSearch
- Experience working in a team using agile methodologies and fluency with the agile toolkit

Why You Should Join Our Team:
- You are mission-driven and want to work at a company that can change the healthcare system
- You want to be on a small, fast-paced team that nimbly moves to meet new challenges
- You love ideating on new features and working with data to find new insights
- You’re excited about researching and working with the latest tools and technologies

The salary range for this position is $125,000 - $185,000 annually. Compensation for this role will depend on a variety of factors including qualifications, skills and applicable laws. In addition to base compensation, this role is eligible to participate in our equity incentive and competitive benefits plans.

If you are hired, we may require proof of full vaccination against COVID-19. Reasonable accommodations will be considered on a case-by-case basis for exemptions to this requirement, in accordance with applicable law.

APPLY ON THE COMPANY WEBSITE

To get free remote job alerts, please join our telegram channel “Global Job Alerts” or follow us on Twitter for latest job updates.

Disclaimer:
- This job opening is available on the respective company website as of 5th July 2023. The job openings may get expired by the time you check the post.
- Candidates are requested to study and verify all the job details before applying and contact the respective company representative in case they have any queries.
- The owner of this site has provided all the available information regarding the location of the job i.e. work from anywhere, work from home, fully remote, remote, etc. However, if you would like to have any clarification regarding the location of the job or have any further queries or doubts; please contact the respective company representative. Viewers are advised to do full requisite enquiries regarding job location before applying for each job.
- Authentic companies never ask for payments for any job-related processes. Please carry out financial transactions (if any) at your own risk.
- All the information and logos are taken from the respective company website.

0 notes

Text

youtube

#nodejs express#node js express tutorial#node js training#nodejs tutorial#nodejs projects#nodejs module#node js express#node js development company#codeonedigest#node js developers#docker container#docker microservices#docker tutorial#docker image#docker course#docker#postgres tutorial#postgresql#postgres database#postgres#install postgres#Youtube

0 notes

Text

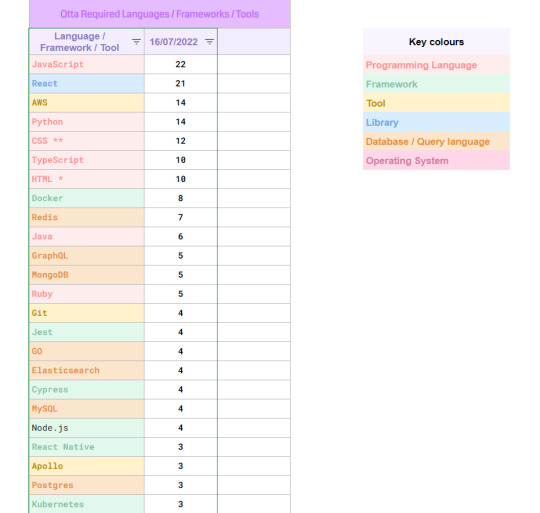

Tech jobs requirements prt 1 | Resource ✨

I decided to do my own mini research on what languages/frameworks/tools and more, are required when looking into getting a job in tech! Read below for more information! 🤗🎀

The website I have used and will be using for this research is Otta (link) which is an employment search service based in London, England that mostly does tech jobs. What they take into consideration are:

ꕤ Where would you like to work? (Remote or in office in UK, Europe, USA and, Toronto + Vancouver in Canada)

ꕤ If remote, where you are based?

ꕤ If you need a visa to work elsewhere

ꕤ What language you can work in? (English, French, Spanish, etc)

ꕤ What type of roles you would like to see in your searches (Software Engineering, Data, Design, Marketing, etc)

ꕤ What level of the roles you would like? (Entry, Junior, Mid-Level, etc)

ꕤ Size of the company you would like to work in (10 employees to 1001+ employees)

ꕤ Favourite industries you would like to work for (Banking, HR, Healthcare, etc) and if you want to exclude any from your searches

ꕤ Favourite technologies (Scala, Git, React, Java, etc) and if you want to exclude any

ꕤ Minimum expected salary (in £, USD $, CAD $, €)

With this information, I created a dummy profile who is REMOTE based IN THE UK with ENTRY/JUNIOR level and speaks ENGLISH. I clicked all for technologies and industries. Clicked all the options for the size of the company and set the minimum salary at £20k (the lower the number the more job posts will appear) I altered my search requirements to fit these job roles because these are my preferred roles:

ꕤ Software Developer + Engineer

ꕤ Full-Stack

ꕤ Backend

ꕤ Frontend

ꕤ Data

I searched 40 job postings so far but might increase it to 100 to be nice and even. I will keep updating every week for a month to see what is truly the most in-demand in the technology world! I might be doing this research wrong but it's all for fun! ❀

🔥 Week 1 🔥

ꕤ Top programming languages ꕤ

1. JavaScript

2. Python

3. CSS (styling sheet)

4. TypeScript , HTML (markup language)

5. Java

ꕤ Top frameworks ꕤ

1. Docker

2. Jest, Cypress, Node.js

3. React Native

4. Kubernetes

ꕤ Top developer tools ꕤ

1. AWS

2. Git

3. Apollo

4. Metabase

5. Clojure

ꕤ Top libraries ꕤ

1. React

2. Redux

3. NumPy

4. Pandas

ꕤ Top Database + tools + query language ꕤ

1. Redis

2. GraphQL, MongoDB

3. GO , Elasticsearch, MySQL

4. Postgres

5. SQL

#resources#resource#programming#computer science#computing#computer engineering#coding#python#comp sci#100 days of code#studying#studyblr#dataanalytics#deeplearning

171 notes

Text

Mainframe Community / Mattermost

So, last night I ‘launched’ a MatterMost instance on https://mainframe.community. To summarize MatterMost (via wikipedia) it is: an open-source, self-hostable online chat service with file sharing, search, and integrations. It is designed as an internal chat for organisations and companies, and mostly markets itself as an open-source alternative to Slack and Microsoft Teams. In this post I wanted to quickly explain how and why I did this. Let’s start with the why first.

But Why?

Last week, while working for one of my clients, I discovered they are starting to implement MatterMost as a cross-team collaboration tool. And I discovered it has integrations, webhooks and bots. Being the Mainframe nerd I am, I quickly whipped up some lines of REXX to call CURL so I could ‘post’ to a MatterMost channel straight from The Mainframe. It was also quite easy in the wsadmin scripts to have jython execute an os.system to call curl and do the post...

Now I wanted to take it a step further and create a “load module” that did the same, but could be called from a regular batch-job to, I donno, post messages when jobs failed, or required other forms of attention. Seeing as I was going to develop that on my own ZPDT/ZD&T I needed a sandbox environment. Running MatterMost locally from docker was a breeze, yet not running as “https” (something I wanted to test to work from the still to be made load-module). So, seeing as I already had the “mainframe.community”-domain, I thought, why not host it there, and use that as a sandbox....turned out that was easier done than imagined.

But How?

The instructions provided at https://docs.mattermost.com/install/install-ubuntu-1804.html were easy enough to follow and should get you up and running yourself in under an hour.

Seeing as there is already ‘some stuff’ running at the local datacenter here, I already had an nginx-environment up and running. I started with creating a new VM in my ProxMox environment (running Ubuntu 18.04) and made sure this machine got a static IP. From there on I did the following:

    sudo apt update
    sudo apt upgrade
    sudo apt install postgresql postgresql-contrib

That then made sure the VM had a local database for all the MatterMost things. Initializing the DB environment was as easy as;

    sudo --login --user postgres
    psql
    postgres=# CREATE DATABASE mattermost;
    postgres=# CREATE USER mmuser WITH PASSWORD 'x';
    postgres=# GRANT ALL PRIVILEGES ON DATABASE mattermost to mmuser;
    postgres=# \q
    exit

Of course the password is not ‘x’ but something a bit more secure...

Then, make a change to the postgres config (vi /etc/postgresql/10/main/pg_hba.conf) changing the line

local all all peer

to

local all all trust

Then installing mattermost was basically these next commands:

    systemctl reload postgresql
    wget https://releases.mattermost.com/5.23.1/mattermost-5.23.1-linux-amd64.tar.gz
    # unpack the archive so there is a mattermost directory to move
    tar -xzf mattermost-5.23.1-linux-amd64.tar.gz
    mv mattermost /opt
    mkdir /opt/mattermost/data
    useradd --system --user-group mattermost
    chown -R mattermost:mattermost /opt/mattermost
    chmod -R g+w /opt/mattermost
    vi /opt/mattermost/config/config.json
    cd /opt/mattermost/
    sudo -u mattermost ./bin/mattermost
    vi /opt/mattermost/config/config.json
    sudo -u mattermost ./bin/mattermost
    vi /lib/systemd/system/mattermost.service
    systemctl daemon-reload
    systemctl status mattermost.service
    systemctl start mattermost.service
    curl http://localhost:8065
    systemctl enable mattermost.service
    systemctl restart mattermost

Some post configuration needed to be done via the MatterMost webinterface (that was running like a charm) and then just a little nginx-config like specified at the MatterMost docs webpages and it was all up and running. Thanks to the peeps at LetsEncrypt it’s running TLS too :) Curious to see how ‘busy’ it will get on the mainframe.community. I’ve set up the VM with enough hardware resources to host at least 2000 users. So head on over to https://mainframe.community and make me ‘upgrade’ the VM due to the user growth :)
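For readers who want to try the 'post to a channel' idea from the start of this post without REXX or a load module, here is a rough Python equivalent of the curl call. It is a sketch under assumptions: it relies on MatterMost incoming webhooks accepting a JSON body with a `text` field, the webhook URL in the usage comment is a placeholder rather than a real hook on mainframe.community, and the function names are made up for illustration.

```python
import json
import urllib.request


def build_webhook_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Prepare the JSON POST that an incoming webhook expects."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_message(webhook_url: str, text: str) -> int:
    """Send the message and return the HTTP status code."""
    request = build_webhook_request(webhook_url, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Usage (placeholder URL, replace with the hook generated in the MatterMost UI):
# post_message("https://mainframe.community/hooks/your-hook-id", "Job PAYROLL1 failed")
```

Building the request separately from sending it makes the payload easy to inspect before pointing it at a live server.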

1 note

Text

Exploring the Exciting Features of Spring Boot 3.1

Spring Boot is a popular Java framework that is used to build robust and scalable applications. With each new release, Spring Boot introduces new features and enhancements to improve the developer experience and make it easier to build production-ready applications. The latest release, Spring Boot 3.1, is no exception to this trend.

In this blog post, we will dive into the exciting new features offered in Spring Boot 3.1, as documented in the official Spring Boot 3.1 Release Notes. These new features and enhancements are designed to help developers build better applications with Spring Boot. By taking advantage of these new features, developers can build applications that are more robust, scalable, and efficient.

So, if you’re a developer looking to build applications with Spring Boot, keep reading to learn more about the exciting new features offered in Spring Boot 3.1!

Feature List:

1. Dependency Management for Apache HttpClient 4:

Spring Boot 3.0 includes dependency management for both HttpClient 4 and 5.

Spring Boot 3.1 removes dependency management for HttpClient 4 to encourage users to move to HttpClient 5.

2. Servlet and Filter Registrations:

The ServletRegistrationBean and FilterRegistrationBean classes will now throw an IllegalStateException if registration fails instead of logging a warning.

To retain the old behaviour, you can call setIgnoreRegistrationFailure(true) on your registration bean.

3. Git Commit ID Maven Plugin Version Property:

The property used to override the version of io.github.git-commit-id:git-commit-id-maven-plugin has been updated.

Replace git-commit-id-plugin.version with git-commit-id-maven-plugin.version in your pom.xml.

4. Dependency Management for Testcontainers:

Spring Boot’s dependency management now includes Testcontainers.

You can override the version managed by Spring Boot using the testcontainers.version property.

5. Hibernate 6.2:

Spring Boot 3.1 upgrades to Hibernate 6.2.

Refer to the Hibernate 6.2 migration guide to understand how it may affect your application.

6. Jackson 2.15:

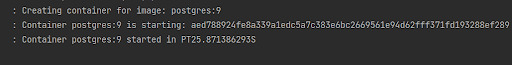

TestContainers

The Testcontainers library is a tool that helps manage services running inside Docker containers. It works with testing frameworks such as JUnit and Spock, allowing you to write a test class that starts up a container before any of the tests run. Testcontainers are particularly useful for writing integration tests that interact with a real backend service such as MySQL, MongoDB, Cassandra, and others.

Integration tests with Testcontainers go a step further: the tests run against the actual versions of the databases and other dependencies the application needs, exercising the real code paths instead of relying on mocked objects that cut corners on functionality.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-testcontainers</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>junit-jupiter</artifactId>
        <scope>test</scope>
    </dependency>

Add these dependencies, annotate the SpringTestApplicationTests class with @Testcontainers, and run the test case:

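    import org.junit.jupiter.api.Test;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.testcontainers.containers.GenericContainer;
    import org.testcontainers.junit.jupiter.Container;
    import org.testcontainers.junit.jupiter.Testcontainers;

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        // Non-static field: the extension starts a fresh container for each test method
        @Container
        GenericContainer<?> container = new GenericContainer<>("postgres:9");

        @Test
        void myTest() {
            // Print the container's ID and name to confirm it is running
            System.out.println(container.getContainerId() + " " + container.getContainerName());
            assert (1 == 1);
        }
    }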

This starts a Docker container from the postgres:9 image.

We can define the connection details for containers using “@ServiceConnection” or “@DynamicPropertySource”.

a. Service Connections

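    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.boot.testcontainers.service.connection.ServiceConnection;
    import org.testcontainers.containers.MongoDBContainer;
    import org.testcontainers.junit.jupiter.Container;
    import org.testcontainers.junit.jupiter.Testcontainers;

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        // Static field: one container is shared by all tests in the class
        @Container
        @ServiceConnection
        static MongoDBContainer container = new MongoDBContainer("mongo:4.4");
    }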

Thanks to @ServiceConnection, the above configuration allows Mongo-related beans in the application to communicate with Mongo running inside the Testcontainers-managed Docker container. This is done by automatically defining a MongoConnectionDetails bean which is then used by the Mongo auto-configuration, overriding any connection-related configuration properties.

b. Dynamic Properties

A slightly more verbose but also more flexible alternative to service connections is @DynamicPropertySource. A static @DynamicPropertySource method allows adding dynamic property values to the Spring Environment.

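    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.test.context.DynamicPropertyRegistry;
    import org.springframework.test.context.DynamicPropertySource;
    import org.testcontainers.containers.MongoDBContainer;
    import org.testcontainers.junit.jupiter.Container;
    import org.testcontainers.junit.jupiter.Testcontainers;

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        // No @ServiceConnection here: the connection URI is registered manually below
        @Container
        static MongoDBContainer container = new MongoDBContainer("mongo:4.4");

        @DynamicPropertySource
        static void registerMongoProperties(DynamicPropertyRegistry registry) {
            // The supplier is evaluated lazily, once the container is running
            registry.add("spring.data.mongodb.uri", () -> container.getConnectionString() + "/test");
        }
    }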
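The registry takes a Supplier rather than a plain value, which matters because a container's mapped port is only known once it has started. The plain-Java model below illustrates that idea without any Spring or Testcontainers dependency. FakeContainer, Registry and the fixed port 32768 are hypothetical stand-ins invented for this sketch; they are not the real classes or their implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

class LazyPropertyDemo {

    /** Hypothetical stand-in for a container whose port is only known after start(). */
    static class FakeContainer {
        private Integer mappedPort; // null until the container has started

        void start() {
            mappedPort = 32768; // fixed for the demo; a real container gets a random port
        }

        String getConnectionString() {
            if (mappedPort == null) {
                throw new IllegalStateException("container not started");
            }
            return "mongodb://localhost:" + mappedPort;
        }
    }

    /** Simplified registry (an assumption, not Spring's code): stores suppliers, calls them on lookup. */
    static class Registry {
        private final Map<String, Supplier<Object>> suppliers = new LinkedHashMap<>();

        void add(String name, Supplier<Object> valueSupplier) {
            suppliers.put(name, valueSupplier);
        }

        Object resolve(String name) {
            return suppliers.get(name).get();
        }
    }

    static String demo() {
        FakeContainer container = new FakeContainer();
        Registry registry = new Registry();
        // Registered before start(): the lambda is not evaluated yet, so nothing throws.
        registry.add("spring.data.mongodb.uri", () -> container.getConnectionString() + "/test");
        container.start();
        // Resolved after start(): the supplier now sees the mapped port.
        return (String) registry.resolve("spring.data.mongodb.uri");
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Had the registry stored the string itself instead of a supplier, the call to getConnectionString() would have run before start() and failed.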

c. Using Testcontainers at Development Time

To test the application at development time, we first need a running Mongo database; otherwise our app won’t be able to connect to it. If we use Docker, we first need to execute the docker run command that runs MongoDB and exposes it on a local port.

Fortunately, with Spring Boot 3.1 we can simplify that process. We don’t have to start Mongo before starting the app. All we need to do is enable development mode with Testcontainers.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-testcontainers</artifactId>
        <scope>test</scope>
    </dependency>

Then we need to prepare the @TestConfiguration class with the definition of containers we want to start together with the app. For me, it is just a single MongoDB container as shown below:

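    import org.springframework.boot.test.context.TestConfiguration;
    import org.springframework.boot.testcontainers.service.connection.ServiceConnection;
    import org.springframework.context.annotation.Bean;
    import org.testcontainers.containers.MongoDBContainer;

    @TestConfiguration
    public class MongoDBContainerDevMode {

        // Started together with the app; @ServiceConnection wires the Mongo connection details
        @Bean
        @ServiceConnection
        MongoDBContainer mongoDBContainer() {
            return new MongoDBContainer("mongo:5.0");
        }
    }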

2. Docker Compose

If you’re using Docker to containerize your application, you may have heard of Docker Compose, a tool for defining and running multi-container Docker applications. Docker Compose is a popular choice for developers as it enables them to define a set of containers and their dependencies in a single file, making it easy to manage and deploy the application.

Fortunately, Spring Boot 3.1 provides a new module called spring-boot-docker-compose that provides seamless integration with Docker Compose. This integration makes it even easier to deploy your Java Spring Boot application with Docker Compose. Maven dependency for this is given below:
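The dependency snippet itself did not survive in this copy of the post. Going by the module name above, a minimal sketch would be the following; marking it optional is an assumption rather than something stated here, so check the Spring Boot reference documentation for the recommended declaration.

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-docker-compose</artifactId>
    <!-- Assumption: optional, so the module is not passed on to projects depending on this one -->
    <optional>true</optional>
</dependency>
```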

The spring-boot-docker-compose module automatically looks for a Docker Compose configuration file in the current working directory during startup. By default, the module looks for four file names: compose.yaml, compose.yml, docker-compose.yaml, and docker-compose.yml. However, if your file has a non-standard name, you can set the spring.docker.compose.file property to specify which configuration file to use.
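The lookup described above can be modelled in a few lines of plain Java. This is a sketch of the behaviour as the text describes it, not Spring Boot's own code: the class ComposeFileLookup and its methods are hypothetical, and treating the order in which the names are listed as their priority is an assumption.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collection;
import java.util.List;
import java.util.Optional;

class ComposeFileLookup {

    // The four default names from the text; using list order as priority is an assumption.
    static final List<String> DEFAULT_NAMES = List.of(
            "compose.yaml", "compose.yml", "docker-compose.yaml", "docker-compose.yml");

    /** Returns the first default name that appears in the given file names, if any. */
    static Optional<String> pick(Collection<String> fileNames) {
        return DEFAULT_NAMES.stream().filter(fileNames::contains).findFirst();
    }

    /** An explicit override (like spring.docker.compose.file) wins; otherwise scan the directory. */
    static Optional<Path> find(Path workingDir, String overrideOrNull) {
        if (overrideOrNull != null) {
            return Optional.of(workingDir.resolve(overrideOrNull));
        }
        return DEFAULT_NAMES.stream()
                .map(workingDir::resolve)
                .filter(Files::isRegularFile)
                .findFirst();
    }

    public static void main(String[] args) {
        Path cwd = Path.of("").toAbsolutePath();
        System.out.println(find(cwd, null)
                .map(Path::toString)
                .orElse("no Compose file found in " + cwd));
    }
}
```

Running main from a project directory prints the path of the first matching file, or a message saying none was found.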

When your application starts up, the services you’ve declared in your Docker Compose configuration file will be automatically started up using the docker compose up command. This means that you don’t have to worry about manually starting and stopping each service. Additionally, connection details beans for those services will be added to the application context so that the services can be used without any further configuration.

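When the application stops, the services are then shut down. By default this uses the docker compose stop command; setting the spring.docker.compose.stop.command property to down uses docker compose down instead.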

This module also supports custom images. You can use any custom image as long as it behaves in the same way as the standard image. Specifically, any environment variables that the standard image supports must also be supported by your custom image.

Overall, the spring-boot-docker-compose module is a powerful and user-friendly tool that simplifies the process of deploying your Spring Boot application with Docker Compose. With this module, you can focus on writing code and building your application, while the module takes care of the deployment process for you.

Conclusion

Overall, Spring Boot 3.1 brings several valuable features and improvements, making it easier for developers to build production-ready applications. Consider exploring these new features and enhancements to take advantage of the latest capabilities offered by Spring Boot.

Originally published by: Exploring the Exciting Features of Spring Boot 3.1

#Features of Spring Boot#Application with Spring boot#Spring Boot Development Company#Spring boot Application development#Spring Boot Framework#New Features of Spring Boot


Supabase: a complete back end for web and mobile applications, based entirely on free, open source software. The biggest challenge when building an app is not writing code but rather architecting a complete system that works at scale. Products like Firebase and Amplify have addressed this barrier, but there's one big problem: they lock you into proprietary technology on a specific cloud platform. Supabase was created in 2019 specifically as an open source Firebase alternative.

At a high level, it provides two things. On the back end, we have infrastructure like a database, file storage, and edge functions that run in the cloud. On the front end, we have client-side SDKs that can easily connect this infrastructure to your favorite front-end JavaScript framework, React Native, Flutter, and many other platforms. As a developer, you can manage your Postgres database with an easy-to-understand UI, which automatically generates REST and GraphQL APIs to use in your code. The database integrates directly with user authentication, making it almost trivial to implement row-level security, and like Firebase, it can listen to data changes in real time while scaling to virtually any workload.

To get started, you can self-host with Docker or sign up for a fully managed account that starts with a free tier. On the dashboard, you can create tables in your Postgres database with a click of a button, insert columns to build out your schema, then add new rows to populate it with data. By default, every project has an authentication schema to manage users within the application. This opens the door to row-level security, where you write policies to control who has access to your data. In addition, the database supports triggers to react to changes in your data and Postgres functions to run stored procedures directly on the database server. It's a nice interface, but it also automatically generates custom API documentation for you. From here, we can copy queries tailored to our database and use them in a JavaScript project.

Install the Supabase SDK with npm, then connect to your project and sign a user in with a single line of code. And now we can listen to any changes to the authentication state in real time with onAuthStateChange. When it comes to the database, we don't need to write raw SQL code. Instead, we can paste in that JavaScript code from the API docs or use the REST and GraphQL APIs directly. And that's all it takes to build an authenticated full-stack application. However, you may still want to run your own custom server-side code, in which case serverless edge functions can be developed with Deno and TypeScript, then easily distributed around the globe.

This has been Supabase in 100 seconds. If you want to build something awesome on this platform, we just released a brand new Supabase course on fireship.io. It's free to get started, so check it out to learn more. Thanks for watching, and I will see you in the next one.

#Supabase #Seconds

For more interesting articles, visit: https://mycyberbase.com/
