#postgres in docker container


Text

#spring boot tutorial#postgres db#docker tutorial#postgres in docker container#run postgres in docker container

0 notes

Text

if my goal with this project was just "make a website" I would just slap together some html, css, and maybe a little bit of javascript for flair and call it a day. I'd probably be done in 2-3 days tops. but instead I have to practice and make myself "employable" and that means smashing together as many languages and frameworks and technologies as possible to show employers that I'm capable of everything they want and more. so I'm developing apis in java that fetch data from a postgres database using spring boot with authentication from spring security, while coding the front end in typescript via an angular project served by nginx with https support and cloudflare protection, with all of these microservices running in their own docker containers.

basically what that means is I get to spend very little time actually programming and a whole lot of time figuring out how the hell to make all these things play nice together - and let me tell you, they do NOT fucking want to.

but on the bright side, I do actually feel like I'm learning a lot by doing this, and hopefully by the time I'm done, I'll have something really cool that I can show off


Text

When you attempt to validate that a data pipeline is loading data into a postgres database, but you are unable to find the configuration tables that you stuffed into the same database out of expediency, let alone the data that was supposed to be loaded, don't be surprised if you find out after hours of troubleshooting that your local postgres server was running.

Further, don't be surprised if that local server was running, and despite the pgadmin connection string being correctly pointed to localhost:5432 (docker can use the same binding), your pgadmin decides to connect you to the local server with the same database name, database user name, and database user password.

Lessons learned:

try to use unique database names with distinct users and passwords across all users involved in order to avoid this tomfoolery in the future, EVEN IN TEST, ESPECIALLY IN TEST (I don't really have a 'prod' environment, homelab and all that, but holy fuck)

do not slam dunk everything into a database named 'toilet' while playing around with database schemas in order to solidify your transformation logic, and then leave your local instance running.

do not, in your docker-compose.yml file, also name the database you are storing data into, 'toilet', on the same port, and then get confused why the docker container database is showing new entries from the DAG load functionality, but you cannot validate through pgadmin.
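A cheap pre-flight check would have caught this: before starting the container, see whether anything is already listening on the port. The sketch below is only an illustration in Python; the function name `port_in_use` is made up for this example, and it assumes the local server listens on 127.0.0.1:5432.

```python
import socket


def port_in_use(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    if port_in_use("127.0.0.1", 5432):
        print("Something already listens on 5432: check for a local postgres first.")
    else:
        print("Port 5432 is free.")
```

It does not tell you which server answered, only that one did, which is usually enough of a hint to go stop the local instance.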


Text

Using Docker in Software Development

Docker has become a vital tool in modern software development. It allows developers to package applications with all their dependencies into lightweight, portable containers. Whether you're building web applications, APIs, or microservices, Docker can simplify development, testing, and deployment.

What is Docker?

Docker is an open-source platform that enables you to build, ship, and run applications inside containers. Containers are isolated environments that contain everything your app needs—code, libraries, configuration files, and more—ensuring consistent behavior across development and production.

Why Use Docker?

Consistency: Run your app the same way in every environment.

Isolation: Avoid dependency conflicts between projects.

Portability: Docker containers work on any system that supports Docker.

Scalability: Easily scale containerized apps using orchestration tools like Kubernetes.

Faster Development: Spin up and tear down environments quickly.

Basic Docker Concepts

Image: A read-only template from which containers are created. Think of it like a blueprint.

Container: A running instance of an image.

Dockerfile: A text file with instructions to build an image.

Volume: A persistent data storage system for containers.

Docker Hub: A cloud-based registry for storing and sharing Docker images.

Example: Dockerizing a Simple Python App

Let’s say you have a Python app called app.py:

    # app.py
    print("Hello from Docker!")

Create a Dockerfile:

    # Dockerfile
    FROM python:3.10-slim
    COPY app.py .
    CMD ["python", "app.py"]

Then build and run your Docker container:

    docker build -t hello-docker .
    docker run hello-docker

This will print Hello from Docker! in your terminal.

Popular Use Cases

Running databases (MySQL, PostgreSQL, MongoDB)

Hosting development environments

CI/CD pipelines

Deploying microservices

Local testing for APIs and apps

Essential Docker Commands

docker build -t <name> . — Build an image from a Dockerfile

docker run <image> — Run a container from an image

docker ps — List running containers

docker stop <container_id> — Stop a running container

docker exec -it <container_id> bash — Access the container shell

Docker Compose

Docker Compose allows you to run multi-container apps easily. Define all your services in a single docker-compose.yml file and launch them with one command:

    version: '3'
    services:
      web:
        build: .
        ports:
          - "5000:5000"
      db:
        image: postgres

Start everything with: docker-compose up

Best Practices

Use lightweight base images (e.g., Alpine)

Keep your Dockerfiles clean and minimal

Ignore unnecessary files with .dockerignore

Use multi-stage builds for smaller images

Regularly clean up unused images and containers

Conclusion

Docker empowers developers to work smarter, not harder. It eliminates "it works on my machine" problems and simplifies the development lifecycle. Once you start using Docker, you'll wonder how you ever lived without it!

0 notes

Text

Using Docker for Full Stack Development and Deployment

1. Introduction to Docker

What is Docker? Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. A container packages your application and its dependencies, ensuring it runs consistently across different computing environments.

Containers vs Virtual Machines (VMs)

Containers are lightweight and use fewer resources than VMs because they share the host operating system’s kernel, while VMs simulate an entire operating system. Containers are more efficient and easier to deploy.

Docker containers provide faster startup times, less overhead, and portability across development, staging, and production environments.

Benefits of Docker in Full Stack Development

Portability: Docker ensures that your application runs the same way regardless of the environment (dev, test, or production).

Consistency: Developers can share Dockerfiles to create identical environments for different developers.

Scalability: Docker containers can be quickly replicated, allowing your application to scale horizontally without a lot of overhead.

Isolation: Docker containers provide isolated environments for each part of your application, ensuring that dependencies don’t conflict.

2. Setting Up Docker for Full Stack Applications

Installing Docker and Docker Compose

Docker can be installed on Windows, macOS, and Linux. Docker Compose, which simplifies multi-container management, ships with Docker Desktop and is available as a plugin for Docker Engine on Linux.

Commands:

docker --version to check the installed Docker version.

docker-compose --version to check the Docker Compose version.

Setting Up Project Structure

Organize your project into different directories (e.g., /frontend, /backend, /db).

Each service will have its own Dockerfile and configuration file for Docker Compose.

3. Creating Dockerfiles for Frontend and Backend

Dockerfile for the Frontend:

For a React/Angular app:

Dockerfile

    FROM node:14
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["npm", "start"]

This Dockerfile installs Node.js dependencies, copies the application, exposes the appropriate port, and starts the server.

Dockerfile for the Backend:

For a Python Flask app

Dockerfile

    FROM python:3.9
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .
    EXPOSE 5000
    CMD ["python", "app.py"]

For a Java Spring Boot app:

Dockerfile

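    FROM openjdk:11
    WORKDIR /app
    COPY target/my-app.jar my-app.jar
    EXPOSE 8080
    CMD ["java", "-jar", "my-app.jar"]

Each backend Dockerfile copies the application into the image, exposes its port, and starts it. The Flask one also installs the Python dependencies, while the Spring Boot one expects the JAR to have been built beforehand at target/my-app.jar.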

4. Docker Compose for Multi-Container Applications

What is Docker Compose? Docker Compose is a tool for defining and running multi-container Docker applications. With a docker-compose.yml file, you can configure services, networks, and volumes.

docker-compose.yml Example:

yaml

    version: "3"
    services:
      frontend:
        build:
          context: ./frontend
        ports:
          - "3000:3000"
      backend:
        build:
          context: ./backend
        ports:
          - "5000:5000"
        depends_on:
          - db
      db:
        image: postgres
        environment:
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
          POSTGRES_DB: mydb

This YAML file defines three services: frontend, backend, and a PostgreSQL database. It also sets up networking and environment variables.

5. Building and Running Docker Containers

Building Docker Images:

Use docker build -t <image_name> <path> to build images.

For example:

bash

    docker build -t frontend ./frontend
    docker build -t backend ./backend

Running Containers:

You can run individual containers using docker run or use Docker Compose to start all services:

bash

docker-compose up

Use docker ps to list running containers, and docker logs <container_id> to check logs.

Stopping and Removing Containers:

Use docker stop <container_id> and docker rm <container_id> to stop and remove containers.

With Docker Compose: docker-compose down to stop and remove all services.
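When several containers are running, the output of docker ps can also be read by a script. The sketch below assumes the output of `docker ps --format '{{json .}}'` (one JSON object per line) and the `Names` and `Ports` keys that recent Docker versions emit; the helper name `containers_publishing` and the sample data are invented for illustration.

```python
import json


def containers_publishing(ps_output: str, port: int) -> list[str]:
    """Return names of containers whose published ports include the given host port."""
    names = []
    for line in ps_output.splitlines():
        line = line.strip()
        if not line:
            continue
        container = json.loads(line)
        # Ports looks like "0.0.0.0:5432->5432/tcp"; match the host side of the mapping
        if f":{port}->" in container.get("Ports", ""):
            names.append(container.get("Names", ""))
    return names


# Hypothetical sample standing in for real `docker ps` output
SAMPLE = "\n".join([
    json.dumps({"ID": "a1b2c3", "Names": "myapp-db-1", "Image": "postgres",
                "Ports": "0.0.0.0:5432->5432/tcp"}),
    json.dumps({"ID": "d4e5f6", "Names": "myapp-frontend-1", "Image": "frontend",
                "Ports": "0.0.0.0:3000->3000/tcp"}),
])

print(containers_publishing(SAMPLE, 5432))
```

In practice the string would come from running the docker command, for example through `subprocess.run(..., capture_output=True, text=True)`.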

6. Dockerizing Databases

Running Databases in Docker:

You can easily run databases like PostgreSQL, MySQL, or MongoDB as Docker containers.

Example for PostgreSQL in docker-compose.yml:

yaml

    db:
      image: postgres
      environment:
        POSTGRES_USER: user
        POSTGRES_PASSWORD: password
        POSTGRES_DB: mydb

Persistent Storage with Docker Volumes:

Use Docker volumes to persist database data even when containers are stopped or removed:

yaml

    volumes:
      - db_data:/var/lib/postgresql/data

Define the volume at the bottom of the file:

yaml

    volumes:
      db_data:

Connecting Backend to Databases:

Your backend services can access databases via Docker networking. In the backend service, refer to the database by its service name (e.g., db).
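As a rough illustration of the service-name idea, a Python backend might assemble its connection URL like this. The `POSTGRES_*` variables mirror the Compose example above; `DB_HOST` and `DB_PORT` are hypothetical variables introduced only for this sketch, with the host defaulting to the Compose service name `db`.

```python
import os
from urllib.parse import quote


def database_url(env=os.environ) -> str:
    """Build a PostgreSQL URL whose host defaults to the Compose service name."""
    user = env.get("POSTGRES_USER", "user")
    password = env.get("POSTGRES_PASSWORD", "password")
    name = env.get("POSTGRES_DB", "mydb")
    host = env.get("DB_HOST", "db")      # hypothetical variable; "db" is the service name
    port = env.get("DB_PORT", "5432")    # hypothetical variable
    # Percent-encode credentials so characters like "@" do not break the URL
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{name}"
    )


print(database_url({}))
```

Inside the Compose network the default host `db` resolves to the database container; when running the backend directly on the host, setting `DB_HOST=localhost` would point it at a published port instead.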

7. Continuous Integration and Deployment (CI/CD) with Docker

Setting Up a CI/CD Pipeline:

Use Docker in CI/CD pipelines to ensure consistency across environments.

Example: GitHub Actions or Jenkins pipeline using Docker to build and push images.

Example .github/workflows/docker.yml:

yaml

    name: CI/CD Pipeline
    on: [push]
    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Code
            uses: actions/checkout@v2
          - name: Build Docker Image
            run: docker build -t myapp .
          - name: Push Docker Image
            # Pushing needs a prior registry login and a registry-qualified tag; neither is shown here.
            run: docker push myapp

Automating Deployment:

Once images are built and pushed to a Docker registry (e.g., Docker Hub, Amazon ECR), they can be pulled into your production or staging environment.

8. Scaling Applications with Docker

Docker Swarm for Orchestration:

Docker Swarm is a native clustering and orchestration tool for Docker. You can scale your services by specifying the number of replicas.

Example:

bash

docker service scale myapp=5

Kubernetes for Advanced Orchestration:

Kubernetes (K8s) is more complex but offers greater scalability and fault tolerance. It can manage Docker containers at scale.

Load Balancing and Service Discovery:

Use Docker Swarm or Kubernetes to automatically load balance traffic to different container replicas.

9. Best Practices

Optimizing Docker Images:

Use smaller base images (e.g., alpine images) to reduce image size.

Use multi-stage builds to avoid unnecessary dependencies in the final image.

Environment Variables and Secrets Management:

Store sensitive data like API keys or database credentials in Docker secrets or environment variables rather than hardcoding them.
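On the application side, reading such a value could look like the sketch below. It relies on Docker mounting secrets as files under /run/secrets/<name>; the helper name `read_secret` and the fallback to an upper-cased environment variable are assumptions made for this example, not a Docker convention.

```python
import os
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets", env=os.environ) -> str:
    """Return a secret from a mounted secret file, else from an environment variable."""
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        # Secret files often end with a newline; strip surrounding whitespace
        return secret_file.read_text(encoding="utf-8").strip()
    value = env.get(name.upper())
    if value is None:
        raise KeyError(f"secret {name!r} not found in {secrets_dir} or the environment")
    return value
```

A call such as `read_secret("db_password")` would then work unchanged whether the value arrives as a secret file or as a `DB_PASSWORD` variable during local development.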

Logging and Monitoring:

Use tools like Docker’s built-in logging drivers, or integrate with ELK stack (Elasticsearch, Logstash, Kibana) for advanced logging.

For monitoring, tools like Prometheus and Grafana can be used to track Docker container metrics.

10. Conclusion

Why Use Docker in Full Stack Development? Docker simplifies the management of complex full-stack applications by ensuring consistent environments across all stages of development. It also offers significant performance benefits and scalability options.

Recommendations:

Integrate Docker with your CI/CD pipelines to automate builds and deployment.

Consider Docker for microservices architectures, where each service can be scaled and managed individually.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/

0 notes

Text

Podman: Exporting/Importing Volumes

Note that what I explain here is for Podman (I suppose Docker has something similar, but since it is now paid, I no longer spend time on it or use it).

To move container data that is not in a volume, you export the container's filesystem with podman export. But the data that matters (especially if the container is a database) usually lives in an external volume, and that is what this post covers. First, find out which volume the container uses:

    podman container inspect nonprod

    "Type": "volume",
    "Name": "96ba830cfdc14f4758df5c7a06de5b716f4a415fecf1abdde3a27ebd989bd640",
    "Source": "/home/user/.local/share/containers/storage/volumes/2d062d3174a4a694427da5c102edf1731c5ca9f20e8ee7b229e04d4cb4a5bc69/_data",
    "Destination": "/var/lib/postgresql/data",

OK, the volume is called "96ba830cfdc14f4758df5c7a06de5b716f4a415fecf1abdde3a27ebd989bd640".
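Picking the name out of the inspect output by eye gets tedious with long hashes. As a small sketch, the lookup can be scripted in Python, assuming the usual inspect layout: a JSON array of containers, each with a `Mounts` list. The function name `volume_for` and the abbreviated sample document are made up for illustration.

```python
import json


def volume_for(inspect_output: str, destination: str) -> str:
    """Return the name of the volume mounted at `destination` in inspect JSON."""
    for container in json.loads(inspect_output):
        for mount in container.get("Mounts", []):
            if mount.get("Type") == "volume" and mount.get("Destination") == destination:
                return mount["Name"]
    raise LookupError(f"no volume mounted at {destination}")


# Abbreviated, hypothetical stand-in for `podman container inspect nonprod`
SAMPLE = json.dumps([{
    "Name": "nonprod",
    "Mounts": [{
        "Type": "volume",
        "Name": "96ba830cfdc14f4758df5c7a06de5b716f4a415fecf1abdde3a27ebd989bd640",
        "Destination": "/var/lib/postgresql/data",
    }],
}])

print(volume_for(SAMPLE, "/var/lib/postgresql/data"))
```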

Log in to the Podman machine over ssh:

    podman machine ssh --username user
    Connecting to vm podman-machine-default. To close connection, use ~. or exit
    Last login: Fri Jan 31 13:45:48 2025 from ::1
    [user@MYCOMPUTER ~]$

Export the contents of the volume to a tar file:

podman volume export 96ba830cfdc14f4758df5c7a06de5b716f4a415fecf1abdde3a27ebd989bd640 -o volume.tar

Since I am doing this locally and there are no speed or space constraints, I leave it uncompressed, which saves steps. If you want to share it over the network with another computer or a colleague, run it through gzip or bzip2.
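Before carrying the archive anywhere, it can be worth a quick sanity check that it really holds a PostgreSQL data directory. The sketch below assumes the exported tar has the volume contents at its root, so a PostgreSQL volume would contain a `PG_VERSION` entry; the function name `looks_like_postgres_volume` is invented for this example.

```python
import os
import tarfile


def looks_like_postgres_volume(archive_path: str) -> bool:
    """Return True if the tar archive has a PG_VERSION file at its root."""
    with tarfile.open(archive_path) as tar:
        # normpath turns "./PG_VERSION" into "PG_VERSION"
        names = {os.path.normpath(member.name) for member in tar.getmembers()}
    return "PG_VERSION" in names


if __name__ == "__main__" and os.path.exists("volume.tar"):
    print(looks_like_postgres_volume("volume.tar"))
```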

Leave the machine:

exit

Now get the Podman machine's connection details so the file can be copied out with SCP:

    podman system connection list
    Name                         URI                                                                   Identity                                                         Default  ReadWrite
    podman-machine-default       ssh://[email protected]:56086/run/user/1000/podman/podman.sock      C:\Users\xmanoel.local\share\containers\podman\machine\machine  true     true
    podman-machine-default-root  ssh://[email protected]:56086/run/podman/podman.sock                C:\Users\xmanoel.local\share\containers\podman\machine\machine  false    true

The important parts here are the identity file and the port, which SCP needs.

scp -i C:\Users\xmanoel.local\share\containers\podman\machine\machine -P 56086 user@localhost:~/volume.tar .

That's it, the file is now on the local machine. From here you can share it with another computer, send it over the network, or whatever you like. Next, create another empty container to receive this volume's data:

    podman run -d --name nonprod -p 5432:5432 postgres
    podman stop nonprod

As you can see, I create it and immediately stop it. I don't think it is a good idea to have the container running while its data is being overwritten.

Check which volume this container created:

    podman container inspect nonprod

    "Type": "volume",
    "Name": "2d062d3174a4a694427da5c102edf1731c5ca9f20e8ee7b229e04d4cb4a5bc69",
    "Source": "/home/user/.local/share/containers/storage/volumes/2d062d3174a4a694427da5c102edf1731c5ca9f20e8ee7b229e04d4cb4a5bc69/_data",
    "Destination": "/var/lib/postgresql/data",

In this case the volume is called "2d062d3174a4a694427da5c102edf1731c5ca9f20e8ee7b229e04d4cb4a5bc69". Now do everything in reverse. Once again, look up the port and the identity file for Podman:

    podman system connection list
    Name                         URI                                                                   Identity                                                         Default  ReadWrite
    podman-machine-default       ssh://[email protected]:56086/run/user/1000/podman/podman.sock      C:\Users\xmanoel.local\share\containers\podman\machine\machine  true     true
    podman-machine-default-root  ssh://[email protected]:56086/run/podman/podman.sock                C:\Users\xmanoel.local\share\containers\podman\machine\machine  false    true

Here this step was not really necessary because, as you can see, I am copying back onto my own machine. I am only doing it as an example. In your case you would do this on the other machine and get different values. Copy the tar into the machine, which is the reverse of the earlier copy:

scp -i C:\Users\xmanoel.local\share\containers\podman\machine\machine -P 56086 volume.tar user@localhost:~/

And log in again with ssh:

    podman machine ssh --username user
    Connecting to vm podman-machine-default. To close connection, use ~. or exit
    Last login: Fri Jan 31 16:21:41 2025 from ::1
    [user@HEREWEARE ~]$

Now simply import the contents of the tar into the volume. Be careful here, because this wipes out whatever was in the volume before. That is why we created a new container a moment ago, so as not to damage anything that already exists. If you deliberately want to reuse an existing volume, that is up to you.

podman volume import 2d062d3174a4a694427da5c102edf1731c5ca9f20e8ee7b229e04d4cb4a5bc69 volume.tar

Now we can leave the machine:

exit

And start the container we created. It will now read the volume we imported, so the data from the original container will be in it.

podman start nonprod

That's all, I hope you find it useful.

0 notes

Text

Using Docker Compose to Configure NetBox with Nginx as a Reverse Proxy

Docker Compose is a powerful tool for deploying multi-container applications easily and efficiently. In this article we look at how to configure NetBox together with Nginx as a reverse proxy using Docker Compose. The deployment consists of several containers (NetBox, PostgreSQL, Redis, and Nginx) working together. We go through each step in detail, along with the customizations needed for a successful install on a Docker host.

1. The Docker Compose architecture I built

This architecture shows how a NetBox application is structured when deployed on Docker: several containers (NetBox, PostgreSQL, Redis, Nginx) and an internal network work together to provide a fully functional network management application.

Docker Host:

The physical or virtual machine where Docker is installed and running. All containers run inside this host.

Netbox_net (Network):

The internal Docker network that connects the containers. The containers communicate with each other over this network.

NetBox container:

The main container, which holds the NetBox application (a network management tool). NetBox relies on services from the other containers, such as PostgreSQL and Redis.

PostgreSQL container:

The container holding the PostgreSQL database, where NetBox stores its data.

Redis container:

Redis is an in-memory cache. NetBox uses the Redis container to improve performance and to hold temporary data.

Nginx container:

Nginx is a web server that handles user requests to NetBox. This container listens for HTTP/HTTPS requests and forwards them to the appropriate NetBox components.

Exposed ports:

Ports 80 and 443 of the Nginx container are exposed to the external network, so users can reach NetBox from a web browser over HTTP or HTTPS.

ens160 is the Docker host's network interface, through which the containers reach the external network.

2. How to use the Docker Compose setup

First, download this repo. Note that you must work from the /opt directory, otherwise the active script may fail.

cd /opt/

git clone https://github.com/thanhquang99/Docker

Next, run the Docker Compose file:

cd /opt/Docker/netbox/

docker compose up

You can edit the variables in the Docker Compose file to change the user and password for NetBox or Postgres:

vi /opt/Docker/netbox/docker-compose.yml

Wait about 5 minutes for docker compose to finish starting, then open a new terminal (Ctrl+Shift+U) and run the activation script, which creates the superuser and configures Nginx as a reverse proxy.

cd /opt/Docker/netbox/

chmod +x active.sh

. active.sh

Now enter the requested information at the prompts (following the suggested syntax): the NetBox domain name, the Gmail address, and the NetBox user and password.

Then just wait for the process to finish. If you forget the details afterwards, you can still look them up.

root@Quang-docker:~# cat thongtin.txt

Edit the hosts file to: 172.16.66.41 quang.netbox.com

NetBox access link: https://quang.netbox.com

Netbox User: admin

Netbox password: fdjhuixtyy5dpasfn

netbox mail: [email protected]

Edit the hosts file to: 172.16.66.41 quang.netbox.com

NetBox access link: https://quang.netbox.com

Netbox User: fdjhuixtyy5dpasfn

Netbox password: fdjhuixtyy5dpasfn

netbox mail: [email protected]

Summary

That was my guide to deploying NetBox on Docker together with Nginx quickly, using only a few commands that anyone can run. The previous article showed how to build it the developers' way without Nginx; you can review it here. In this article I added Nginx and SSL. SSL encrypts the data in transit, and encryption is the first security step in defending against network attacks.

Source: https://suncloud.vn/su-dung-docker-compose-cau-hinh-netbox-nginx-lam-reverse-proxy

0 notes

Text

Managing Containerized Applications Using Ansible: A Guide for College Students and Working Professionals

As containerization becomes a cornerstone of modern application deployment, managing containerized applications effectively is crucial. Ansible, a powerful automation tool, provides robust capabilities for managing these containerized environments. This blog post will guide you through the process of managing containerized applications using Ansible, tailored for both college students and working professionals.

What is Ansible?

Ansible is an open-source automation tool that simplifies configuration management, application deployment, and task automation. It's known for its agentless architecture, ease of use, and powerful features, making it ideal for managing containerized applications.

Why Use Ansible for Container Management?

Consistency: Ensure that container configurations are consistent across different environments.

Automation: Automate repetitive tasks such as container deployment, scaling, and monitoring.

Scalability: Manage containers at scale, across multiple hosts and environments.

Integration: Seamlessly integrate with CI/CD pipelines, monitoring tools, and other infrastructure components.

Prerequisites

Before you start, ensure you have the following:

Ansible installed on your local machine.

Docker installed on the target hosts.

Basic knowledge of YAML and Docker.

Setting Up Ansible

Install Ansible on your local machine:

pip install ansible

Basic Concepts

Inventory

An inventory file lists the hosts and groups of hosts that Ansible manages. Here's a simple example:

    [containers]
    host1.example.com
    host2.example.com

Playbooks

Playbooks define the tasks to be executed on the managed hosts. Below is an example of a playbook to manage Docker containers.

Example Playbook: Deploying a Docker Container

Let's start with a simple example of deploying an NGINX container using Ansible.

Step 1: Create the Inventory File

Create a file named inventory:

    [containers]
    localhost ansible_connection=local

Step 2: Create the Playbook

Create a file named deploy_nginx.yml:

    - name: Deploy NGINX container
      hosts: containers
      become: yes
      tasks:
        - name: Install Docker
          apt:
            name: docker.io
            state: present
          when: ansible_os_family == "Debian"

        - name: Ensure Docker is running
          service:
            name: docker
            state: started
            enabled: yes

        - name: Pull NGINX image
          docker_image:
            name: nginx
            source: pull

        - name: Run NGINX container
          docker_container:
            name: nginx
            image: nginx
            state: started
            ports:
              - "80:80"

Step 3: Run the Playbook

Execute the playbook using the following command:

ansible-playbook -i inventory deploy_nginx.yml

Advanced Topics

Managing Multi-Container Applications

For more complex applications, such as those defined by Docker Compose, you can manage multi-container setups with Ansible.

Example: Deploying a Docker Compose Application

Create a Docker Compose file docker-compose.yml:

    version: '3'
    services:
      web:
        image: nginx
        ports:
          - "80:80"
      db:
        image: postgres
        environment:
          POSTGRES_PASSWORD: example

Create an Ansible playbook deploy_compose.yml:

    - name: Deploy Docker Compose application
      hosts: containers
      become: yes
      tasks:
        - name: Install Docker
          apt:
            name: docker.io
            state: present
          when: ansible_os_family == "Debian"

        - name: Install Docker Compose
          get_url:
            # get_url does not run shell substitutions such as uname, so Ansible facts fill in the OS and architecture
            url: "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-{{ ansible_system }}-{{ ansible_architecture }}"
            dest: /usr/local/bin/docker-compose
            mode: '0755'

        - name: Create Docker Compose file
          copy:
            dest: /opt/docker-compose.yml
            content: |
              version: '3'
              services:
                web:
                  image: nginx
                  ports:
                    - "80:80"
                db:
                  image: postgres
                  environment:
                    POSTGRES_PASSWORD: example

        - name: Run Docker Compose
          command: docker-compose -f /opt/docker-compose.yml up -d

Run the playbook:

ansible-playbook -i inventory deploy_compose.yml

Integrating Ansible with CI/CD

Ansible can be integrated into CI/CD pipelines for continuous deployment of containerized applications. Tools like Jenkins, GitLab CI, and GitHub Actions can trigger Ansible playbooks to deploy containers whenever new code is pushed.

Example: Using GitHub Actions

Create a GitHub Actions workflow file .github/workflows/deploy.yml:

    name: Deploy with Ansible

    on:
      push:
        branches:
          - main

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v2
          - name: Set up Ansible
            run: sudo apt update && sudo apt install -y ansible
          - name: Run Ansible playbook
            run: ansible-playbook -i inventory deploy_compose.yml

Conclusion

Managing containerized applications with Ansible streamlines the deployment and maintenance processes, ensuring consistency and reliability. Whether you're a college student diving into DevOps or a working professional seeking to enhance your automation skills, Ansible provides the tools you need to efficiently manage your containerized environments.

For more details click www.qcsdclabs.com

#redhatcourses#docker#linux#information technology#containerorchestration#kubernetes#container#containersecurity#dockerswarm#aws

0 notes

Text

Updating a Tiny Tiny RSS install behind a reverse proxy

Screenshot of my Tiny Tiny RSS install on May 7th 2024 after a long struggle with 502 errors.

I had a hard time when trying to update my Tiny Tiny RSS instance running as a Docker container behind Nginx as reverse proxy. I experienced a lot of nasty 502 errors because the container did not return proper data to Nginx. I fixed it in the following manner.

First I deleted all the containers and images. I did it with:

    docker rm -vf $(docker ps -aq)
    docker rmi -f $(docker images -aq)
    docker system prune -af

Attention! This deletes all Docker images, even those not related to Tiny Tiny RSS. No problem in my case. It only keeps the persistent volumes. If you want to keep other images, you have to remove the Tiny Tiny RSS ones separately.

The second issue is simple and not really one for me. The Tiny Tiny RSS docs still call Docker Compose with a hyphen: $ docker-compose version. This is not valid for up-to-date installs, where the hyphen has to be omitted: $ docker compose version.

The third and biggest issue is that the Git Tiny Tiny RSS repository for Docker Compose does not exist anymore. The files have to be pulled from the master branch of the main repository https://git.tt-rss.org/fox/tt-rss.git/. The docker-compose.yml has to be changed afterwards, since the one in the repository is for development purposes only.

The PostgreSQL database is located in a persistent volume. It is not possible to install a newer PostgreSQL version over it. Therefore you have to edit the docker-compose.yml and change the database image from image: postgres:15-alpine to image: postgres:12-alpine.

And then the data in the PostgreSQL volume were owned by a user named 70. Change it to root.

Now my Tiny Tiny RSS runs again as expected.
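The version mismatch can be spotted before editing the compose file, because a PostgreSQL data directory records the major version it was created with in a file named PG_VERSION. The following Python sketch turns that into an image tag; the function name `matching_image` is hypothetical, and the path to the volume's data directory is something you would have to look up on your own host.

```python
from pathlib import Path


def matching_image(data_dir: str, flavor: str = "alpine") -> str:
    """Return the postgres image tag matching the version stored in a data directory."""
    # PG_VERSION holds the major version the cluster was initialized with, e.g. "12"
    major = (Path(data_dir) / "PG_VERSION").read_text(encoding="utf-8").strip()
    return f"postgres:{major}-{flavor}"
```

For a volume created by PostgreSQL 12 this yields `postgres:12-alpine`, the tag the compose file had to be changed to in my case.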

0 notes

Text

Sr DevOps Engineer - Remote

Company: Big Time

A BIT ABOUT US

We are a bold new game studio with a mission to build cutting edge AAA entertainment for the 21st century. Our founders are veterans in the online games, social games, and crypto fields. We are fully funded and building a dream team of developers who want to work with the best of the best and take their careers to the next level. See press coverage: Bloomberg, VentureBeat, CoinDesk.

THE MISSION

As a DevOps Engineer, you will join a small, fully remote team to work on backend infrastructure for an exciting Multiplayer Action RPG title as well as our marketplace infrastructure. In this role, you will work on game-supporting services and features as well as web facing products interacting with users directly. Our stack includes Docker, AWS Elastic Container Service, Terraform, Node.js, Typescript, MongoDB, React, PostgreSQL, and C++ among others. You will be working with the game and backend engineering teams to architect and maintain stable, reliable, and scalable backend infrastructure.

RESPONSIBILITIES

- Work closely with stakeholders company wide to provide services that enhance user experience for the development team, as well as our end-users.
- Design and build operational infrastructure to support games and marketplace products for Big Time Studios.
- Spearhead company wide security culture and architecture to keep our platform secure.
- Own delivery, scalability, and reliability of our backend infrastructure.
- Advise and collaborate with the rest of the engineering team to ensure we are building safe, secure, and reliable products.

REQUIREMENTS

- Ability to design and implement highly available and reliable systems.
- Proven experience with Linux, Docker, and cloud technologies such as AWS, GCP, and Azure.
- Extensive experience setting up and maintaining database infrastructure, including Postgres, Terraform, NoSQL, MongoDB, and DocumentDB.
- Ability to be on-call during evenings and weekends when required.
- Strong documentation skills.
- Excellent communication and time management skills.

DESIRABLE

- Experience with Game Development.
- Proficiency with C++.
- Proficiency with Typescript/Node.js.
- Experience with DevOps tools such as Terraform, Ansible, Kubernetes, Redis, Jenkins, etc.
- Experience with game server hosting.

WHAT WE OFFER

- Fully remote work, with a yearly company offsite.
- Experience working with gaming veterans of game titles with a gross aggregate revenue well over $10B USD.
- Flexible PTO.
- Experience creating a new IP with franchise potential.

APPLY ON THE COMPANY WEBSITE

Disclaimer:

- This job opening is available on the respective company website as of 2nd July 2023. The job opening may have expired by the time you check the post.
- Candidates are requested to study and verify all the job details before applying and to contact the respective company representative in case they have any queries.
- The owner of this site has provided all the available information regarding the location of the job, i.e. work from anywhere, work from home, fully remote, remote, etc. If you would like clarification regarding the location of the job or have any further queries, please contact the respective company representative. Viewers are advised to make full enquiries regarding job location before applying for each job.
- Authentic companies never ask for payments for any job-related processes. Please carry out financial transactions (if any) at your own risk.
- All the information and logos are taken from the respective company website.

0 notes

Text

youtube

#nodejs express#node js express tutorial#node js training#nodejs tutorial#nodejs projects#nodejs module#node js express#node js development company#codeonedigest#node js developers#docker container#docker microservices#docker tutorial#docker image#docker course#docker#postgres tutorial#postgresql#postgres database#postgres#install postgres#Youtube

0 notes

Text

Build Systems

Here's an unpopular - but I suspect secretly popular - opinion: Telling your users to build/run the software inside a container in the readme/user guide is a code smell. Docker is not a "bad" piece of software, but it's suspicious.

You'll have a hard time wrapping your head around Docker and Kubernetes if you think it's just like virtualisation/security sandboxing. Containers are mostly used as a band-aid for configuration and build problems. Many desktop applications use containers to run build scripts inside a controlled environment, to set up Postgres the right way, or to sidestep weird systemd interactions by running your own init system in a controlled environment.

Most of these problems could be fixed or at least mitigated by better support for these use cases in compilers, build systems, and distro packaging formats. Some of these problems could be mitigated by better integrating Postgres with distro packaging. Some are just caused by hardcoded paths to configuration files or IPC sockets.

Docker is a way to mix multiple build systems, install the correct versions of the required compilers, and not worry about paths and environment variables because everything is installed into /usr/local. Docker is a way to run integration tests in a "real" environment without polluting your /etc with configuration files for MariaDB, Memcached, and Redis or RabbitMQ.

A lot of the time, Docker is not a tool to deploy applications, just to compile and test them.

5 notes


Text

Exploring the Exciting Features of Spring Boot 3.1

Spring Boot is a popular Java framework that is used to build robust and scalable applications. With each new release, Spring Boot introduces new features and enhancements to improve the developer experience and make it easier to build production-ready applications. The latest release, Spring Boot 3.1, is no exception to this trend.

In this blog post, we will dive into the exciting new features offered in Spring Boot 3.1, as documented in the official Spring Boot 3.1 Release Notes. These new features and enhancements are designed to help developers build better applications with Spring Boot. By taking advantage of these new features, developers can build applications that are more robust, scalable, and efficient.

So, if you’re a developer looking to build applications with Spring Boot, keep reading to learn more about the exciting new features offered in Spring Boot 3.1!

Feature List:

1. Dependency Management for Apache HttpClient 4:

Spring Boot 3.0 includes dependency management for both HttpClient 4 and 5.

Spring Boot 3.1 removes dependency management for HttpClient 4 to encourage users to move to HttpClient 5.

2. Servlet and Filter Registrations:

The ServletRegistrationBean and FilterRegistrationBean classes will now throw an IllegalStateException if registration fails instead of logging a warning.

To retain the old behaviour, you can call setIgnoreRegistrationFailure(true) on your registration bean.

3. Git Commit ID Maven Plugin Version Property:

The property used to override the version of io.github.git-commit-id:git-commit-id-maven-plugin has been updated.

Replace git-commit-id-plugin.version with git-commit-id-maven-plugin.version in your pom.xml.

4. Dependency Management for Testcontainers:

Spring Boot’s dependency management now includes Testcontainers.

You can override the version managed by Spring Boot using the testcontainers.version property.

5. Hibernate 6.2:

Spring Boot 3.1 upgrades to Hibernate 6.2.

Refer to the Hibernate 6.2 migration guide to understand how it may affect your application.

6. Jackson 2.15:

Spring Boot 3.1 upgrades to Jackson 2.15.

1. Testcontainers

The Testcontainers library is a tool that helps manage services running inside Docker containers. It works with testing frameworks such as JUnit and Spock, allowing you to write a test class that starts up a container before any of the tests run. Testcontainers is particularly useful for writing integration tests that interact with a real backend service such as MySQL, MongoDB, Cassandra, and others.

Integration tests with Testcontainers run against the actual versions of the databases and other dependencies the application works with, so they exercise the real code paths instead of relying on mocked objects that cut corners on functionality.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-testcontainers</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>junit-jupiter</artifactId>
        <scope>test</scope>
    </dependency>

Add these dependencies, annotate the SpringTestApplicationTests class with @Testcontainers, and run the test case:

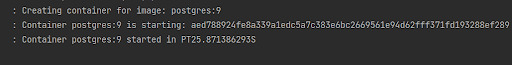

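    import org.junit.jupiter.api.Test;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.testcontainers.containers.GenericContainer;
    import org.testcontainers.junit.jupiter.Container;
    import org.testcontainers.junit.jupiter.Testcontainers;

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        // Started by the Testcontainers JUnit extension before the test runs
        @Container
        GenericContainer<?> container = new GenericContainer<>("postgres:9");

        @Test
        void myTest() {
            System.out.println(container.getContainerId() + " " + container.getContainerName());
            assert (1 == 1);
        }
    }

This starts a Docker container from the postgres:9 image before the test runs.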

We can define connection details to containers using “@ServiceConnection” and “@DynamicPropertySource”.

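a. Service Connections

    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.boot.testcontainers.service.connection.ServiceConnection;
    import org.testcontainers.containers.MongoDBContainer;
    import org.testcontainers.junit.jupiter.Container;
    import org.testcontainers.junit.jupiter.Testcontainers;

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        @Container
        @ServiceConnection
        static MongoDBContainer container = new MongoDBContainer("mongo:4.4");
    }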

Thanks to @ServiceConnection, the above configuration allows Mongo-related beans in the application to communicate with Mongo running inside the Testcontainers-managed Docker container. This is done by automatically defining a MongoConnectionDetails bean which is then used by the Mongo auto-configuration, overriding any connection-related configuration properties.

b. Dynamic Properties

A slightly more verbose but also more flexible alternative to service connections is @DynamicPropertySource. A static @DynamicPropertySource method allows adding dynamic property values to the Spring Environment.

    @SpringBootTest
    @Testcontainers
    class SpringTestApplicationTests {

        // No @ServiceConnection here: the connection is wired through the property below
        @Container
        static MongoDBContainer container = new MongoDBContainer("mongo:4.4");

        @DynamicPropertySource
        static void registerMongoProperties(DynamicPropertyRegistry registry) {
            // The supplier is evaluated when the property is read, after the container has started
            registry.add("spring.data.mongodb.uri", () -> container.getConnectionString() + "/test");
        }
    }
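To see why the registry takes a supplier rather than a plain value, here is a minimal plain-Java sketch of the idea. It is not Spring's DynamicPropertyRegistry; the class LazyPropertyRegistry and the port number are hypothetical stand-ins, used only to show that a value registered early can still reflect state that becomes known later (such as a container's mapped port).

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical stand-in for a property registry; not part of Spring.
public class LazyPropertyRegistry {

    // Property name -> supplier that computes the value on demand
    private final Map<String, Supplier<Object>> suppliers = new LinkedHashMap<>();

    public void add(String name, Supplier<Object> valueSupplier) {
        suppliers.put(name, valueSupplier);
    }

    // The supplier runs only here, at lookup time
    public Object get(String name) {
        Supplier<Object> supplier = suppliers.get(name);
        return supplier == null ? null : supplier.get();
    }

    public static void main(String[] args) {
        // Stand-in for a container whose mapped port is unknown until it starts
        int[] mappedPort = {-1};

        LazyPropertyRegistry registry = new LazyPropertyRegistry();
        registry.add("spring.data.mongodb.uri",
                () -> "mongodb://localhost:" + mappedPort[0] + "/test");

        // The "container" starts after the property was registered
        mappedPort[0] = 49153;

        // prints mongodb://localhost:49153/test
        System.out.println(registry.get("spring.data.mongodb.uri"));
    }
}
```

Because the lambda is only invoked on lookup, the registration can happen before the final value is known.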

c. Using Testcontainers at Development Time

To run the application at development time, we would normally have to start the Mongo database first; otherwise our app won’t be able to connect to it. If we use Docker, we first need to execute the docker run command that runs MongoDB and exposes it on a local port.

Fortunately, with Spring Boot 3.1 we can simplify that process. We don’t have to start Mongo before starting the app. All we need to do is enable development mode with Testcontainers.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-testcontainers</artifactId>
        <scope>test</scope>
    </dependency>

Then we need to prepare the @TestConfiguration class with the definition of containers we want to start together with the app. For me, it is just a single MongoDB container as shown below:

    import org.springframework.boot.test.context.TestConfiguration;
    import org.springframework.boot.testcontainers.service.connection.ServiceConnection;
    import org.springframework.context.annotation.Bean;
    import org.testcontainers.containers.MongoDBContainer;

    @TestConfiguration
    public class MongoDBContainerDevMode {

        // Exposed as a bean so Spring Boot manages the container's lifecycle
        @Bean
        @ServiceConnection
        MongoDBContainer mongoDBContainer() {
            return new MongoDBContainer("mongo:5.0");
        }
    }

2. Docker Compose

If you’re using Docker to containerize your application, you may have heard of Docker Compose, a tool for defining and running multi-container Docker applications. Docker Compose is a popular choice for developers as it enables them to define a set of containers and their dependencies in a single file, making it easy to manage and deploy the application.

Fortunately, Spring Boot 3.1 provides a new module called spring-boot-docker-compose that integrates with Docker Compose. This makes it easier to run your Spring Boot application together with the services it depends on. To use it, add the org.springframework.boot:spring-boot-docker-compose dependency to your Maven build.

The spring-boot-docker-compose module automatically looks for a Docker Compose configuration file in the current working directory during startup. By default, the module supports four file names: compose.yaml, compose.yml, docker-compose.yaml, and docker-compose.yml. If your file has a non-standard name, you can set the spring.docker.compose.file property to specify which configuration file to use.
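The lookup described above can be sketched in plain Java. This is only an illustration of the rule, not Spring Boot's actual implementation: the class ComposeFileLocator, the method choose, and the assumption that the four names are tried in the order listed are all mine.

```java
import java.io.File;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.Optional;

// Hypothetical helper that mimics the file-selection rule; not Spring Boot code.
public class ComposeFileLocator {

    // The four default names from the text; the search order is an assumption
    public static final List<String> DEFAULT_NAMES = List.of(
            "compose.yaml", "compose.yml", "docker-compose.yaml", "docker-compose.yml");

    // An explicitly configured file wins; otherwise pick the first default name present
    public static Optional<String> choose(Collection<String> filesInDir, String configuredFile) {
        if (configuredFile != null && !configuredFile.isBlank()) {
            return Optional.of(configuredFile);
        }
        return DEFAULT_NAMES.stream().filter(filesInDir::contains).findFirst();
    }

    public static void main(String[] args) {
        // List the current working directory, as the module does at startup
        String[] names = new File(".").list();
        List<String> present = names == null ? List.of() : Arrays.asList(names);

        // An optional first argument plays the role of spring.docker.compose.file
        String configured = args.length > 0 ? args[0] : null;
        System.out.println(choose(present, configured));
    }
}
```

Run without arguments, it reports which default file (if any) would be picked up from the current directory; passing a file name simulates the property override.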

When your application starts up, the services you’ve declared in your Docker Compose configuration file will be automatically started up using the docker compose up command. This means that you don’t have to worry about manually starting and stopping each service. Additionally, connection details beans for those services will be added to the application context so that the services can be used without any further configuration.

When the application stops, the services will then be shut down using the docker compose down command.

This module also supports custom images. You can use any custom image as long as it behaves in the same way as the standard image. Specifically, any environment variables that the standard image supports must also be used in your custom image.

Overall, the spring-boot-docker-compose module is a user-friendly tool that simplifies running your Spring Boot application with Docker Compose. With this module, you can focus on writing code and building your application, while the module takes care of starting and stopping the services for you.

Conclusion

Overall, Spring Boot 3.1 brings several valuable features and improvements, making it easier for developers to build production-ready applications. Consider exploring these new features and enhancements to take advantage of the latest capabilities offered by Spring Boot.

Originally published by: Exploring the Exciting Features of Spring Boot 3.1

#Features of Spring Boot#Application with Spring boot#Spring Boot Development Company#Spring boot Application development#Spring Boot Framework#New Features of Spring Boot

0 notes

Text

Egybell is hiring Java developer for multinational company

Skills and Qualifications

· 2+ years of software development experience with strong java/jee/springboot/vertx/quarkus development frameworks.
· BSc in Computer Science, Engineering or relevant field
· Experience as a DevOps or Software Engineer or similar software engineering role
· Proficient with git and git workflows
· Good knowledge of linux, java, JSF or angular or other front-end technology
· Demonstrated implementation of microservices, container and cloud-native application development.
· Hands-on experience with Docker, Kubernetes or Openshift and related container platform ecosystems.
· Experience with two or more database technologies such as Oracle, MySQL or Postgres, MongoDB.

If you are interested apply with your CV.

0 notes

Text

Postgresql create database

Consider using the simpler az postgres up Azure CLI command.

Use the Bash environment in Azure Cloud Shell. For more information, see Azure Cloud Shell Quickstart - Bash.

If you prefer to run CLI reference commands locally, install the Azure CLI. If you're running on Windows or macOS, consider running Azure CLI in a Docker container. For more information, see How to run the Azure CLI in a Docker container.

If you're using a local installation, sign in to the Azure CLI by using the az login command. To finish the authentication process, follow the steps displayed in your terminal. For other sign-in options, see Sign in with the Azure CLI.

When you're prompted, install the Azure CLI extension on first use. For more information about extensions, see Use extensions with the Azure CLI.

Run az version to find the version and dependent libraries that are installed. To upgrade to the latest version, run az upgrade.

The Azure Cloud Shell is a free interactive shell that you can use to run the steps in this article. It has common Azure tools preinstalled and configured to use with your account.

To open the Cloud Shell, just select Try it from the upper right corner of a code block. You can also launch Cloud Shell in a separate browser tab. When Cloud Shell opens, verify that Bash is selected for your environment. Subsequent sessions will use Azure CLI in a Bash environment. Select Copy to copy the blocks of code, paste them into the Cloud Shell, and press Enter to run them.

Sign in to Azure

Cloud Shell is automatically authenticated under the initial account signed in with. Use the following script to sign in using a different subscription, replacing the placeholder with your Azure Subscription ID. If you don't have an Azure subscription, create an Azure free account before you begin.

    subscription="" # add subscription here
    az account set -s $subscription # ...or use 'az login'

For more information, see set active subscription or log in interactively.

Set parameter values

The following values are used in subsequent commands to create the database and required resources. Server names need to be globally unique across all of Azure, so the $RANDOM function is used to create the server name.

Change the location as appropriate for your environment. Replace 0.0.0.0 with the IP address range to match your specific environment. Use the public IP address of the computer you're using to restrict access to the server to only your IP address.

    resourceGroup="msdocs-postgresql-rg-$randomIdentifier"
    tag="create-postgresql-server-and-firewall-rule"
    server="msdocs-postgresql-server-$randomIdentifier"
    # Specify appropriate IP address values for your environment
    # to limit / allow access to the PostgreSQL server
    echo "Using resource group $resourceGroup with login: $login, password: $password."

Create a resource group with the az group create command. An Azure resource group is a logical container into which Azure resources are deployed and managed.

0 notes

Text

If this is not one of the most robust, free, rich and informative eras ever, then I cannot think of any other time in history adorned with the wealth of technology as this one. If you wish to accomplish anything, this era wields the most virile grounds to nourish, nurture and aid the sprouting, the growth and the maturity of your dreams. You can literally learn to be what you wish to be in this age. That being said, this disquisition takes on a quest to get you into setting up something similar to Heroku on your own environment. We shall get to know what Heroku is, then get off the dock and sail off towards our goal of having such an environment.

The proliferation of cloud technologies brought with it many opportunities in terms of service offerings. First and foremost, users gained the ability to get as much infrastructure as they could afford. Users can spawn servers, storage and network resources ad libitum, which is popularly known as Infrastructure as a Service. Then comes the second layer that sits on the infrastructure. It could be anything: a cloud identity service, a cloud monitoring server, et cetera. This layer provides ready-made solutions to people who might need them. This is known as Software as a Service. I hope we are flowing together this far.

In addition to that, there is another incredible layer that is the focus of this guide. It is a layer that targets developers, making their lives easier on the cloud. In this layer, developers only concentrate on writing code, and when they are ready to deploy, they only need to commit their project to a source control platform like GitHub/GitLab and the rest is done for them automatically. This layer feels serverless to developers, since they do not have to touch the messy server-side stuff. This layer, as you might already have guessed, is known as Platform as a Service (PaaS). Heroku is one of the solutions that sits on this layer.

In this guide, we are going to set up a platform that is similar to Heroku on your own infrastructure. As you know, you cannot download and install Heroku on your server. It is an online cloud service that you subscribe to. We will use Caprover to set up our own private Platform as a Service (PaaS). CapRover is an extremely easy to use app/database deployment & web server manager for your NodeJS, Python, PHP, ASP.NET, Ruby, MySQL, MongoDB, Postgres, WordPress and even more applications.

Features of Caprover

- CLI for automation and scripting
- Web GUI for ease of access and convenience
- No lock-in! Remove CapRover and your apps keep working!
- Docker Swarm under the hood for containerization and clustering
- Nginx (fully customizable template) under the hood for load-balancing
- Let’s Encrypt under the hood for free SSL (HTTPS)
- One-Click Apps: Deploying one-click apps is a matter of seconds! MongoDB, Parse, MySQL, WordPress, Postgres and many more.
- Fully Customizable: Optionally fully customizable nginx config allowing you to enable HTTP2, specific caching logic, custom SSL certs, etc.
- Cluster Ready: Attach more nodes and create a cluster in seconds! CapRover automatically configures nginx to load balance.
- Increase Productivity: Focus on your apps! Not the bells and whistles just to run your apps!
- Easy Deploy: Many ways to deploy. You can upload your source from the dashboard, use the command line caprover deploy, use webhooks and build upon git push

Caprover Pre-requisites

Caprover runs as a container on your server, which can be any server that supports containerization. Depending on your preferences, you can use Podman or Docker to pull and run the Caprover image. For this example, we are going to use Docker. In case you do not have Docker installed, the guides listed below will help you set it up as fast as possible.

- Install Docker and Docker Compose on Debian
- Setup Docker CE & Docker Compose on CentOS 8 | RHEL 8
- How To Install Docker on RHEL 7 / CentOS 7
- How To Install Docker CE on Ubuntu

Once Docker Engine has been installed, add your user account to the docker group:

    sudo usermod -aG docker $USER
    newgrp docker

Another pre-requisite is a wildcard domain name pointed to the IP of the server where Caprover will be running.

Setup your Heroku PaaS using CapRover

Once the pre-requisites are out of the way, the only task remaining is to set up Caprover and poke around its rooms to see what it has to offer. The following steps will be invaluable as you try to get it up and running.

Step 1: Prepare your server

Once Docker is installed, you can install the other applications you will need during your stay on the server, such as an editor.

    ##On CentOS
    sudo yum update
    sudo yum install vim git curl

    ##On Ubuntu
    sudo apt update
    sudo apt install vim git curl

That was straightforward. Next, let us pull the Caprover image to get the stone rolling.

Step 2: Pull and execute Caprover Image

We are going to cover the installation of Caprover depending on where your server sits.

Scenario 1: Installation on a local server without Public IP

Install dnsmasq

As mentioned in the pre-requisites section, we need a small DNS server to resolve domain names, since Caprover is particular about them. In case you have a local DNS server that supports wildcard domains, you are good to go and can skip the DNS setup part. In case you do not have one, install the lightweight dnsmasq as follows:

    sudo yum -y install dnsmasq

After dnsmasq is successfully installed, start and enable the service.

    sudo systemctl start dnsmasq
    sudo systemctl enable dnsmasq

Add Wildcard DNS Record

Once dnsmasq is running as expected, we can go ahead and add the configs and wildcard domain name as shown below:

    $ sudo vim /etc/dnsmasq.conf
    listen-address=::1,127.0.0.1,172.20.192.38
    domain=example.com
    server=8.8.8.8
    address=/caprover.example.com/172.20.192.38

Replace the IPs therein with yours accordingly. Then restart dnsmasq:

    sudo systemctl restart dnsmasq

Test if it works

We shall use the dig utility to test if our configuration works:

    $ dig @127.0.0.1 test.caprover.example.com
    ; DiG 9.11.20-RedHat-9.11.20-5.el8 @127.0.0.1 test.caprover.example.com
    ; (1 server found)
    ;; global options: +cmd
    ;; Got answer:
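The reason the dig test queries test.caprover.example.com rather than the bare domain is that a dnsmasq address=/domain/ip rule answers for the domain and for every name beneath it. A small plain-Java sketch of that matching rule follows; the class WildcardAddressRule and its method are hypothetical names for illustration and are not part of dnsmasq.

```java
import java.util.Locale;

// Hypothetical illustration of how an address=/domain/ip rule selects names.
public class WildcardAddressRule {

    // True when queryName is the rule's domain itself or any subdomain of it
    public static boolean matches(String queryName, String ruleDomain) {
        String q = normalize(queryName);
        String d = normalize(ruleDomain);
        return q.equals(d) || q.endsWith("." + d);
    }

    // DNS names are case-insensitive and may carry a trailing root dot
    private static String normalize(String name) {
        String lower = name.toLowerCase(Locale.ROOT);
        return lower.endsWith(".") ? lower.substring(0, lower.length() - 1) : lower;
    }

    public static void main(String[] args) {
        String rule = "caprover.example.com";
        String[] names = {
            "test.caprover.example.com",   // subdomain: covered by the rule
            "caprover.example.com",        // the domain itself: covered
            "example.com",                 // parent domain: not covered
            "notcaprover.example.com"      // sibling with a similar suffix: not covered
        };
        for (String name : names) {
            System.out.println(name + " -> " + matches(name, rule));
        }
    }
}
```

Any app CapRover later exposes under the wildcard domain, such as a hypothetical myapp.caprover.example.com, would therefore resolve to the same server IP without further DNS changes.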

0 notes