#technically two of those have pc versions so i could theoretically get those


Note

Can Xbox win next gen?

Thank you for asking me this anon, I’ve been dying for an excuse to talk about console war stuff.

With what we know right now? I don’t think there’s a fucking chance.

To begin, based on what I’ve seen, Sony genuinely internalized their lessons from the PS3’s wack ass Cell architecture by talking with developers about what they want out of the system, which has led to people like Epic’s CEO Tim Sweeney- a man who could not possibly have less of a reason to pick sides in a console war- praising the PS5 and talking about how incredible it is, whereas Microsoft has made A Very Strong Box... perhaps even History’s Strongest Box.

A Very Strong Box would be perfectly fine, but there’s a problem: because they have said and reconfirmed that the Series X isn’t going to have any first-party exclusives for at least the first few years, every game is going to have to run on an Xbox One, and Microsoft just discontinued the One X, meaning that games are going to have to run on the One S. This will result in one of two things happening: either they’re going to primarily develop for the Series X and downgrade games for the One S, which will almost certainly result in HILARIOUS jank like the PS3 version of Shadow of Mordor, or they’ll develop for the One S and just crank the hell out of the settings for the Series X, which will absolutely result in incredible resolutions and framerates, but model/texture work won’t be nearly as impressive as it could be. Compare the reception of Halo Infinite’s graphics versus the big-budget stuff on the PS5 showcase like Ratchet & Clank and Miles Morales. Plus, in either situation, first-party games won’t be able to be developed around having a Solid State Drive, the benefits of which are immediately apparent if you go watch that Ratchet & Clank trailer- the only way they can get environmental swapping like that without a loading screen is because of an SSD.

Speaking of SSDs, there’s another problem: Microsoft has yet to formally announce the Lockhart, which according to leaks is a budget Series X, and we know it exists because there are references to Lockhart in the Windows 10 OS. There’s a possibility that one of the budget cuts Microsoft will make is using a standard mechanical hard drive instead of an SSD. This would cripple not just Microsoft but possibly the entire industry for the whole generation, because for as good as those PS5 games look now, they’re only going to look better down the line. A possible future is that third-party developers won’t take full advantage of SSDs since they’ll want to make sure their games will run well on everything, and as a result Sony’s first-party output will blow everything else out of the water because they know they always have an SSD. Even if the Lockhart does have an SSD and makes cuts elsewhere, like the PS5 with no disc drive, a lower spec Xbox Series console almost certainly means that developers are going to be required to make sure their games run on both, which will just cause more issues for them. I guess the positive side to both of these problems is that they are technically consumer friendly- making sure people who don’t want to upgrade to an Xbox Series console will still be able to play games like Halo Infinite, and making sure people are able to afford some kind of upgrade even if they can’t afford the Series X- but I don’t think most people are thinking of it like that, especially when the pomp and circumstance around a new console generation has always been about how much better the new hotness is.

On the subject of new hotness, Microsoft’s marketing strategy is very bad. Xbox has never had the best names, but this holiday Microsoft will have the Xbox One S, the Xbox Series X, and whatever they call the Lockhart, presumably the Xbox Series S or Series L. Maybe you and me can distinguish that with relative ease, but there’s going to be no shortage of tech illiterate parents and grandparents stumbling over themselves to remember what their kids wanted, or kids writing down and asking for the wrong thing, or even people buying for themselves trying to remember if it’s a One X or Series X or Series One or whatever. And we know this kind of thing is a problem because it 100% contributed to the Wii U’s abysmal sales. The other aspect of their marketing strategy is that they really... aren’t doing much to celebrate the Series X being new hotness. They’ve announced the Series X UI is going to be identical to the Xbox One’s, and just by looking at game boxes you’d have a hard time knowing if the Series X is a brand new console or just an enhancement of the One X because of how identical it is:

Same banner, same smaller black banner advertising what console to use, little white text in the top right advertising graphical fidelity. It’s nearly identical. Compared with the reveal of what PS5 boxes will look like:

It’s still blue plastic, but check out that white banner and black text! No more “Only on Playstation” text so there’s less to distract from the box art! Even if you want to argue that no one but turbo nerds really cares about the boxes- and you’d be correct to do so- there is something to having them look even slightly different to signal “THIS IS NEW!” Also, going back to marketing, Smart Delivery is a terrible name for Cross Buy/“you own it on the old thing and the new thing”, and they’re not even enforcing that name for consistency despite featuring it heavily in their advertising because EA is calling it “Dual Entitlement”, which is actually still a better name:

Also, the OPTIMIZED FOR SERIES X sticker. Madden 21’s box art is already fucking awful enough without also needing that big ol’ OPTIMIZED FOR SERIES X sticker making it an even bigger mess.

Then there’s all those studios they bought up: your tastes may differ, but the only studio in that bunch that I think can produce worthwhile games is Obsidian. Ninja Theory’s got Hellblade but not much else, Double Fine is going to be a fucking money pit because Tim Schafer just revealed they were going to CUT ALL OF THE BOSS FIGHTS OUT OF PSYCHONAUTS 2 BECAUSE THEY SPENT ALL THEIR MONEY ON JACK BLACK until they got bought and funded by Microsoft, and the other studios they acquired like inXile and Compulsion are kinda just… fine. Even if they did have an absolute killer’s row of developers, I think about what Rare’s been doing since they were acquired (although people seem to like Sea of Thieves now that it’s had some updates, and I sincerely hope Everwild is as cool as it looks), and I think of this recent quote from the head of Xbox Game Studios:

“It’s kind-of a phased thing. Take Compulsion. They’re working on their next game and have spent the last year on early ideation. I try to keep us as far away from that as possible. And then, as it starts to get exposure within the organisation, feedback will come in and things will start to steer. But it’s important to leave them alone for as long as possible, until they’ve got something that can walk on its own. And then there’s no shortage of feedback within Xbox.” (emphasis mine)

… which makes me think about what happened with Platinum’s Scalebound, a game that somehow transformed from a single-player action RPG to an online multiplayer hunting(?) game and was cancelled because Platinum just couldn’t meet deadlines, and I am overwhelmed with dread.

The last thing to think of that really just completely undercuts Microsoft is the fact that all of their Xbox games are also going to be playable on Windows 10. Obviously, gaming PCs are more expensive than consoles- even if both consoles wind up at a super high price point, getting comparable or better performance on a PC will cost just as much, or more- but it still cuts out a portion of people who might otherwise be persuaded to buy an Xbox if they wind up with a lot of good exclusives.

I think the only things Microsoft can claim as inarguable advantages are the fact that the Series X is A Very Strong Box... perhaps even History’s Strongest Box, and that Xbox Game Pass is an insanely good deal (but again, Windows 10). Right now, they also have the Xbox One’s backwards compatibility baked-in, which is excellent, but Sony could potentially pull out the “PS5 can play PS1-4 games” card, which would nuke Microsoft’s “largest launch line-up in history” from orbit. Their only other possible advantage would be price, which is why despite the fact that we’re roughly four months out from launch, we know nothing about the price of either console. I think they’re playing chicken to see who reveals their price first, and how low they can go before it would be suicide for the other company to go lower. And- this is wild, baseless speculation, but fuck it- Sony recently announced they’re increasing production of launch PS5s from 6m to 9m. It’s true that gaming sales have actually gone up despite/because of the current state of the world, but who knows what things are going to be like in four months, and either way the average person still probably can’t afford a huge purchase… which could mean that the PS5 isn’t such a huge purchase. I don’t know the cost of the PS5 components, but Sony might be willing to go absurdly low and sell at a loss for a while just to put Microsoft in that position, where they literally cannot go lower than them (or they COULD, because Microsoft as a whole has theoretically infinite money, but I can’t imagine them wanting to accept that much of a loss).

tl;dr: Naw, man.


Text

RX 6800 and RX 6800 XT review 2021: Specs | Hashrate | Overclocking Setting

Mining on the AMD Radeon RX 6800 and RX 6800 XT

Most recently, we talked about the new "mining queen" from Nvidia, the GeForce RTX 3080 video card. Dr. Lisa Su and her company decided to keep up with the competition, and at the end of October an event took place that had been eagerly awaited in the camp of "red" miners. AMD presented the RDNA 2 line of graphics adapters: the Radeon RX 6800 and RX 6800 XT. Computer enthusiasts appreciated the innovative solution from the Advanced Micro Devices team. In games, the red cards of the latest series showed higher performance than the RTX 30 cards based on the Ampere architecture, and they were to be sold at a lower price. For seven long years, flagship green graphics cards dominated the market, but now AMD has managed to get ahead.

Optimists believed there was no need to rush to build mining farms based on the RTX 3080 or RX 5700 XT, since the cards of the new line might well turn out to be the more profitable option. However, the miracle did not happen: the real hashrate of the Radeon RX 6800 and RX 6800 XT in mining did not much exceed the preliminary calculations specialists had made from the technical parameters of these GPUs. By and large, the new version of Navi is an alternative to the 5700 or the RTX 3070. But let's take everything in order and start with the appearance of the new AMD video cards, which are already on sale.

Packaging and appearance of AMD Radeon RX 6800

There have been no dramatic changes in the packaging design and appearance of the next generation of AMD graphics adapters. The AMD Radeon™ RX 6800 is a triple-fan card almost 30 cm long that occupies 2 slots (the 6800 XT takes 2.5). As power increases, the size of video cards grows along with it.
Compared to the diminutive graphics cards of the early 2000s, today's gaming GPUs look like mastodons that soon won't fit into a standard case. For miners, however, this is unimportant; the main thing is that the cooling system is as efficient as possible and always copes with the load. But let's see what this miracle of technology can do.

AMD Radeon RX 6800 specifications

Comparison table for the RX 6800 with its predecessors and competitors.

| Parameter | RX 6800 XT (AMD) | RX 6800 (AMD) | RX 5700 XT (AMD) | RTX 3080 (Nvidia) | RTX 2080 (Nvidia) |
| --- | --- | --- | --- | --- | --- |
| Process technology, nm | 7 | 7 | 7 | 8 | 12 |
| Video chip | Navi21XT | Navi21XL | Navi 10 | GA102 | TU104 |
| Die area | 536 | 536 | 251 | 628 | 545 |
| Number of transistors, billion | 26.8 | 26.8 | 10.3 | 28.3 | 13.6 |
| Number of shader units | 4608 | 3840 | 2560 | 8704 | 2944 |
| ROP quantity | 64 | 64 | 64 | 96 | 64 |
| Computing power FP32, TFLOPS | 20.74 | 16.17 | 9.75 | 29.77 | 10.07 |
| Core base frequency, MHz | 1487 | 1372 | 1605 | 1515 | 1440 |
| Overclocked core frequency, MHz | 2250 | 2105 | 1905 | 1710 | 1710 |
| Video memory frequency, MHz | 2000 | 2000 | 1750 | 1188 | 1750 |
| Video memory, GB | 16 | 16 | 8 | 10 | 8 |
| Video memory type | GDDR6 | GDDR6 | GDDR6 | GDDR6X | GDDR6 |
| Bus width, bit | 256 | 256 | 256 | 320 | 256 |
| Power consumption, watt | 300 | 250 | 225 | 320 | 215 |

Bench testing: hashrate on different algorithms

On the thematic forums, people asked many questions like: "Is it really possible to get 150 Mh/s on the 6000 series from AMD on Ethash?" Judging by the "naked" teraflops, the computing power of the green flagship is much higher, and yet the RTX 3080 produces a maximum of 103 Mh/s. In theory, then, the RX 6800 could not reach 100 megahash even with its higher core and memory frequencies, and so it turned out. If everything in mining depended on the ability of the graphics adapter to work stably at high frequencies, then the cards with Samsung memory, which overclock more stably, would be worth their weight in gold to miners. Yes, hashrate depends on memory and core overclocking parameters, but not to such an extent.
Of course, the new architecture does significantly improve performance, but mining is, after all, a banal brute-force search through cryptographic codes for one that fits. You can't argue with the facts: when the new cards were put to mining cryptocurrency, they showed what they could really do and nothing more. The forecast based on technical characteristics disappointed those who had hoped for 150 Mh/s, and bench tests confirmed it.

Real hashrate table for RX 6800 and RX 6800 XT.

| Mining algorithm | RX 5700 XT | RX 6800 | RX 6800 XT |
| --- | --- | --- | --- |
| Ethash, Mh/s | 55 | 60 | 64 |
| KawPow, Mh/s | 21 | 28 | 34 |
| Cuckatoo31, H/s | 1.5 | 2.4 | 2.5 |
| Octopus, Mh/s | 28.6 | 40 | 44.7 |
| Cuckaroo29b, H/s | 3.9 | 7 | 8.8 |

The data was taken from the thematic forum Miningclubinfo, as well as from the mining profitability calculators Minersat and WhatToMine. It turns out that on Ether the new cards hardly surpassed their predecessors. However, keep in mind that people have already learned how to reflash 5700 XT cards, while no one has yet tried to modify the RX 6800 BIOS. Perhaps, over time, folk craftsmen will get to them. Theoretically, with the right timings, these video cards can be overclocked on Ethash to 70 or even 75 megahash, but no more. That is, the red video adapters of this series still lag behind their competitor, the RTX 3080. The company led by Dr. Lisa Su has yet to release anything more serious than the Radeon VII.

Overclocking AMD Radeon RX 6800

What can be said about overclocking the new video cards? You need to set almost the same parameters as on the old 570/580: memory at 2100/2150 and core at 1200/1250. These graphics adapters mine stably under Windows, but according to miners' reviews there are still problems with HiveOS. Devices are detected and start, but failures occur frequently. So, if you buy this model, forget about Hive and install Windows 10.
The 6000-series AMD cards are, however, fully compatible with the NiceHash miner.

How to reduce the power consumption of video cards

The easiest way to reduce a card's consumption is to lower the power limit. You can also undervolt, that is, lower the voltage of the graphics core by modifying the BIOS or setting the necessary parameters with specialized software. The first tests showed that a high power limit gives nothing but an increase in power consumption. The power limit does not need to be raised much: +20 at most. Reducing the voltage with the –cdvc and –mcdvc options does not work in the latest version of PhoenixMiner; we need to wait for the developers to put out a new release. For now the card consumes about 250 watts.

Profitability, purchase relevance

At the time of this review, the most profitable algorithm is Ethash. More precisely, it is the main Ether coin, since all other coins on this algorithm, including Ether Classic, sit in the second ten of the profitability ranking. At a speed of 64 megahash, the RX 6800 XT will earn 0.0023 ETH per day, which as of April 24, 2021 is equal to 420 Russian rubles. From this amount you still need to deduct electricity costs at your local tariff. Let's say the net income is 370 rubles per day, or 11,100 per month. At a price of 115,000 rubles, the card will pay for itself in roughly 10 to 11 months. The term is quite acceptable; however, it can change radically in either direction.

What conclusion can be drawn? While the market is in an upward trend, the RX 6800, despite its rather high cost, is very profitable. In purely technical terms, progress is evident, but AMD could not offer anything surprising. Analogs from competitors and cards of previous series are no worse for miners. However, the potential of the new Navi GPUs has not yet been fully revealed.
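The payback estimate above is simple division, and it is easy to redo with your own numbers. The sketch below is only an illustration: the function name `payback_months` and the 30-day month are assumptions, and the inputs are the review's figures from April 24, 2021 (115,000 rubles for the card, 420 rubles gross and 370 rubles net per day).

```python
def payback_months(card_price, net_income_per_day, days_per_month=30):
    """Months needed for mining income to cover the card's price.

    Hypothetical helper for illustration; assumes income stays constant,
    which the review itself warns it will not.
    """
    if net_income_per_day <= 0:
        raise ValueError("net income must be positive for the card to pay off")
    return card_price / (net_income_per_day * days_per_month)


# Figures quoted in the review (Russian rubles).
card_price = 115_000
gross_per_day = 420
net_per_day = 370

# Electricity cost implied by the review's gross and net figures.
electricity_per_day = gross_per_day - net_per_day

print(f"Implied electricity cost: {electricity_per_day} rubles/day")
print(f"Payback at current income: {payback_months(card_price, net_per_day):.1f} months")

# The term can move a lot in either direction: halve or double the income.
for factor in (0.5, 2.0):
    months = payback_months(card_price, net_per_day * factor)
    print(f"Payback at {factor}x income: {months:.1f} months")
```

Because the result scales inversely with daily income, a halving of the Ether price or of the hashrate reward doubles the payback time, which is why the review treats the estimate as a snapshot rather than a forecast.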
Radeon RX 6800 and RX 6800 XT review - What's Changed in 2021

https://www.youtube.com/watch?v=2fs0yByc8EA

The end of 2020 is the scene of two titanic fights in the video game world. On the console side, we have Microsoft's Xbox Series X taking on Sony's PS5. On the PC side, the new graphics cards are the center of attention. On the one hand, we have Nvidia, the market leader, which has released its new line of GeForce RTX 30XX cards: the RTX 3070, 3080 and 3090. On the other, we have AMD. Eternal second, the Californian manufacturer has decided to strike hard this year with its RX 6000 range, which accompanies the release of its new Ryzen 5000. Three Radeon cards were presented: the RX 6800, the RX 6800 XT and the RX 6900. It is the first two that we are testing today.

With its cards, AMD seeks to catch up with its direct competitor, notably by introducing ray-tracing (DXR) with its RDNA 2 architecture. This technology was popularized in the world of video games two years ago by Nvidia's RTX 20XX series, but was not yet available from AMD. The manufacturer also wants to offer new things, such as Smart Access Memory (SAM). This technology makes it possible to increase the performance of the card if it is paired with a Ryzen 5000 processor, by improving the exchanges between the CPU and the GPU, which go directly through the PCIe 4.0 interface. Power consumption promises to be lower than that of competing cards, which are very energy-hungry.

AMD's main argument against the competition is also the price of its products. The RX 6800 XT is 50 euros cheaper than the RTX 3080 for the same power. At least in theory. But is the saving worth it? This is what we will see.

Note that our test was carried out in partnership with Igor's Lab. The benchmarks and the various measurements are therefore shared.
We are testing AMD's own models here; custom versions from third-party manufacturers will also hit the market. Logically, they should bring more power while remaining in the same range.

PRICING AND AVAILABILITY

Both graphics cards are available from November 18. The RX 6800, which is theoretically placed a little above the RTX 3070 (519 euros), is sold for 589 euros. The RX 6800 XT, the improved version of the card, wants to compete with the RTX 3080 (719 euros) in terms of power. Its price is extremely interesting, since it is sold for 659 euros. Both cards are sold on partner sites.

It should be noted that AMD could take advantage of its direct competitor's troubles. Nvidia is indeed experiencing stock shortages of its new products until 2021, so buying a Radeon card, which could be more easily available, is worth considering. All this on the condition that AMD does not run into the same problems, of course.

TECHNICAL SHEET

AMD promises cards as powerful as the competition, in particular thanks to its new RDNA 2 architecture with a chip etched in 7 nm. This architecture allows more power, of course, but also support for ray-tracing, a first for the manufacturer. Note that it is used in the new consoles, both the Xbox Series X and the PS5, which bring this innovation to their segment.

| | Radeon RX 6800 | Radeon RX 6800 XT |
| --- | --- | --- |
| Compute Units | 60 | 72 |
| Base frequency | 1815 MHz | 2015 MHz |
| Boost frequency | 2105 MHz | 2250 MHz |
| "Infinity" cache | 128 MB | 128 MB |
| GDDR6 memory | 16 GB | 16 GB |
| Wattage (W) | 250 | 300 |
| Release date | November 18, 2020 | November 18, 2020 |
| Price | 579 € | 649 € |

The two cards are similar in design and only certain characteristics change. Both are, for example, equipped with 128 MB of “Infinity” cache and 16 GB of GDDR6 memory. On the power side, the RX 6800 is equipped with 60 compute units, a base frequency of 1815 MHz and a boost frequency of up to 2105 MHz.
Its power draw is 250 watts. The RX 6800 XT is logically a little more powerful, with 72 compute units, a base frequency of 2015 MHz and a boost frequency of 2250 MHz. Its power draw is accordingly rated at 300 watts. These are solid characteristics that, at least on paper, allow comfortable play in 4K at 60 frames per second. Likewise, we can hope for smooth play with ray-tracing activated in 1080p. This is what we will determine in this test.

MAJOR NOVELTIES TO SEDUCE

AMD offers several interesting things to push the user to shop with them rather than elsewhere. This generation marks the arrival of DirectX Raytracing, the management of light and reflections in real time. This very resource-hungry feature is still marginal in the world of video games. It arrived only two years ago on the RTX 20XX generation of Nvidia cards and made its debut on consoles with the Series X and the PS5.

This technology makes it possible to display reflections in real time, rather than pre-calculated as was done before. In a title like Control, this drastically changes the artistic direction, and even the playing experience. The reflections “live” by themselves, and on a puddle, a window or a marble floor you will see the surrounding scenery reflected. We mentioned Control, but other titles like Battlefield V, Metro Exodus or even Shadow of the Tomb Raider benefit from it on PC. Note that some existing games have added support, as is the case with the venerable MMORPG World of Warcraft and with Minecraft.

Control with ray-tracing enabled

AMD offers Variable Rate Shading technology with its cards. To explain this innovation quickly, it is a dynamic management of the quality of shading on the screen. The card “cuts up” the image in real time and improves shading in the areas of interest.
For example, it is often the shading close to the player's avatar and in the center of the image that is enhanced, to the detriment of areas located far away and in the periphery of the field of view. This technology, already used by Nvidia, is far from being a gimmick, since it saves resources. The game must support it, however.

Finally, AMD introduces SAM, Smart Access Memory. This is a technology that we only find on AMD cards. If you have a Ryzen 5000 processor from the same manufacturer, the CPU communicates directly with the GPU in order to bypass the 256 MB limit and access all of the memory. The aim is to improve performance. On paper, AMD promises up to 10% more power in games with this technique. Note that Nvidia has indicated that it is working on a similar solution for the future with Intel processors.

A ROUGH-AND-READY DESIGN

The Radeon RX 6800 and RX 6800 XT cards are completely identical in design, since only their performance changes. AMD teased the look of its products for a long time, even going so far as to partner with Epic Games to include a giant 3D model of its RX 6800 in Fortnite. Players then had plenty of time to walk on the object and discover its small visual details.

https://www.youtube.com/embed/PGyqXEsPrgM?feature=oembed

With its RTX 30XX Founders Edition, Nvidia had surprised with a neat design, pleasing to the eye. AMD takes the opposite approach by delivering a card with a design that is more practical than aesthetic, following the main lines of the Radeon VII. After all, we are talking about an object that will sit inside a tower that has every chance of being closed. In addition, not all PCs have a plexiglass panel for admiring the interior. The RX 6800 and RX 6800 XT feature a design that doesn't leave much of an impression.
Of course, we notice the three enormous fans set into an aluminum plate, which allow optimal heat dissipation from below. However, the hot air is released exclusively into the tower, since the back of the card does not have vents. The three fans keep the GPU temperature at around 80 degrees while rotating at more than 1,600 revolutions per minute.

The cards are also very quiet. We did not notice any alarming noise, since the fans produce an average of 38 decibels with rare peaks of 48 decibels in games. This means that you will not hear your card hiss most of the time, and the rare moments of noise will remain acceptable if your tower is completely closed.

The RX cards measure 267 x 120 mm, which is quite classic for a graphics card, even if their design makes them look a little "massive". The 8-pin power connector is placed on the side, at the back of the card. This is a smarter placement than on the RTX 3080, which put it right in the middle. Cable management obsessives can breathe easy.

The cards obviously take two slots in your tower, and at the back we find a full set of connectors. The RX 6800 and 6800 XT have an HDMI 2.1 port, two DisplayPort 1.4 ports and a USB Type-C port.

Ultimately, the Radeon RX 6800 and Radeon RX 6800 XT weren't designed to look good, as the three huge fans on the front show, but to be efficient. An understandable and proven choice. Now all that remains is to find out whether our cards keep all their promises.

PERFORMANCE THAT BRINGS GREAT PLAYING COMFORT

The RX 6800 and RX 6800 XT are a huge evolution over previous AMD models. The RDNA 2 architecture now makes it possible to activate ray-tracing, as we have said, but also to play comfortably in 4K. At least, that is the promise from AMD, which published promising early benchmarks. This is what we are going to check on our panel of games. Before starting, we must specify our test configuration.
The benchmarks below were carried out with an AMD Ryzen 9 5900X processor (therefore with the possibility of activating SAM), supported by 32 GB of RAM (16 x 2), with the games installed on an NVMe SSD.

Overall, we notice that the results fluctuate a great deal depending on the game. On some titles we get better performance than on competing cards, while on others we are below. Gaming in Full HD with DXR (ray-tracing) enabled does not always reach 60 frames per second with the RX 6800, as is the case with Control, for example, but we are really not far off. Finally, it should be noted that SAM brings a real boost to game performance, increasing the number of frames per second by 10% in some cases. A real asset. We are obviously above previous cards from AMD, such as the RX 5700 XT or the Radeon VII.

Full HD games with ray-tracing

Control is one of the most interesting games in our panel. It is indeed a title designed for ray-tracing and optimized for it. In Full HD with DXR activated, we exceed 60 frames per second with the RX 6800 XT. Nevertheless, we remain at or below the RTX 3070 under the same conditions. The RX 6800 delivers less than 60 FPS on average, but this is still very respectable. There is a significant performance gain with SAM activated.

Benchmarks carried out with the help of Igor's Lab.

Regarding Metro Exodus, in Full HD with DXR activated, the results are very different. With SAM enabled, the RX 6800 XT outperformed the RTX 3070 FE on our test sequence. Similarly, the latter is neck and neck with the RX 6800. Here again, it is SAM that makes the difference, but in all cases play at 60 FPS is assured.

Finally, on Watch Dogs Legion, our last ray-tracing compatible game, the RX 6800 XT leaves the RTX 3070 FE in the dust, SAM or not. However, we still remain below Nvidia's flagship card, namely the RTX 3080.
The RX 6800 hovers around 56 FPS, which provides appreciable comfort in-game.

Games in 1440p

If you have a QHD screen, that is to say one with a 1440p definition, the RX 6800 and RX 6800 XT can be a good compromise. On Control, with DXR disabled, all AMD cards are above the RTX 3070, SAM enabled or not. Without ray-tracing, AMD's products deliver much better performance, which can be appreciated in-game.


Text

The Famous Router Hackers Actually Loved

A version of this post originally appeared on Tedium, a twice-weekly newsletter that hunts for the end of the long tail.

In a world where our routers look more and more like upside-down spiders than things you would like to have in your living room, there are only a handful of routers that may be considered “famous.”

Steve Jobs’ efforts to sell AirPort—most famously by using a hula hoop during a product demo—definitely deserve notice in this category, and the mesh routers made by the Amazon-owned Eero probably fit in this category as well.

But a certain Linksys router, despite being nearly 20 years old at this point, takes the cake—and it’s all because of a feature that initially went undocumented that proved extremely popular with a specific user base.

Let’s spend a moment reflecting on the blue-and-black icon of wireless access, the Linksys WRT54G. This is the wireless router that showed the world what a wireless router could do.

1988

The year that Linksys was formed by Janie and Victor Tsao, two Taiwanese immigrants to the United States who launched their company, initially a consultancy called DEW International, while working information technology jobs. (Victor, fun fact, was an IT manager with Taco Bell.) According to a 2004 profile in Inc., the company started as a way to connect inventors with manufacturers in the Taiwanese market, but the company moved into the hardware business itself in the early 1990s, eventually landing on home networking—a field that, in the early 2000s, Linksys came to dominate.

How black and blue became the unofficial colors of home networking during the early 2000s

Today, buying a router for your home is something that a lot of people don’t think much about. Nowadays, you can buy one for a few dollars used and less than $20 new.

But in the late 1990s, it was a complete nonentity, a market that had not been on the radar of many networking hardware companies, because the need for networking had been limited to the office. Which meant that installing a router was both extremely expensive and beyond the reach of mere mortals.

It’s the kind of situation that helps companies on the periphery, not quite big enough to play with the big fish, but small enough to sense an opportunity. During its first decade of existence, Janie and Victor Tsao took advantage of such opportunities, using market shifts to help better position their networking hardware.

In the early 90s, Linksys hardware had to come with its own drivers. But when Windows 95 came along, networking was built in, and a major barrier to Linksys’ market share disappeared overnight. Suddenly there was growing demand for its network adapters, which fit inside desktops and laptops alike.

While Victor was helping to lead and handle the technical end, Janie was working out distribution deals with major retailers such as Best Buy, which helped to take the networking cards mainstream in the technology world.

But the real opportunity, the one that made Linksys hard to shake for years afterwards, came when Victor built a router with a home audience in mind. With dial-up modems on their way out, there was a sudden need.

“As home broadband Internet use began to bloom in the late ’90s, at costs significantly higher than those for dial-up connections, Victor realized that people were going to want to hook all their small-office or home computers to one line,” the Inc. profile on Janie and Victor stated. “To do so they would need a router, a high-tech cord splitter allowing multiple computers to hook into one modem.”

The companies Linksys was competing with were, again, focused on a market where routers cost nearly as much as a computer itself. But Victor found the sweet spot: A $199 router that came with software that was easy to set up and reasonably understandable for mere mortals. And it had the distinctive design that Linksys became known for: a mixture of blue and black plastics, with an array of tiny LED lights on the front.

In a review of the EtherFast Cable/DSL router, PC Magazine noted that Linksys did far more than was asked of it.

“A price of $200 would be a breakthrough for a dual Ethernet port router, but Linksys has packed even more value into the 1.8- by 9.3- by 5.6-inch (HWD) package,” reviewer Craig Ellison wrote. The router, which could handle speeds of up to 100 megabits, sported four ports—and could theoretically handle hundreds of IP addresses.

Perhaps it wasn’t as overwhelmingly reliable as some of its more expensive competitors, but it was reasonably priced for homes, and that made it an attractive proposition.

This router was a smash success, helping to put Linksys on top of a fledgling market with market share that put its competitors to shame. In fact, the only thing that was really wrong about the router was that it did not support wireless. But Linksys’ name recognition meant that when it did, there would be an existing audience that would find its low cost and basic use cases fascinating.

One router in particular proved specifically popular—though not for the reasons Linksys anticipated.

$500M

The amount that Cisco, the networking hardware giant, acquired Linksys for in 2003. The acquisition came at a time when Linksys was making half a billion dollars a year, and was growing fast in large part because of the success of its routers, among other networking equipment. In comments to NetworkWorld, Victor Tsao claimed that there was no overlap between the unmanaged networking of Linksys routers and the managed networking of Cisco’s existing infrastructure. They did things differently—something Cisco would soon find out the hard way.

Not only was the WRT54G cheap, it was hackable. (Jay Gooby/Flickr)

How an accidental feature in Linksys’ wireless router turned a ho-hum router into an enthusiast device

In many ways, the WRT54G router series has become something of the Nintendo Entertainment System of wireless routers. Coming around relatively early in the mainstream history of the wireless router, it showed a flexibility far beyond what its creator intended for the device. While not the only game in town, it was overwhelmingly prevalent in homes around the world.

Although much less heralded, its success was comparable to the then-contemporary Motorola RAZR for a time, in that it was basically everywhere, on shelves in homes and small businesses around the world. The WRT54G, despite the scary name, was the wireless router people who needed a wireless router would buy.

And odds are, it may still be in use in a lot of places, even though its security standards are well past their prime and it looks extremely dated on a mantel. (The story of the Amiga that controlled a school district’s HVAC systems comes to mind.)

But the reason the WRT54G series has held on for so long, despite using a wireless protocol that was effectively made obsolete 12 years ago, might come down to a feature that was initially undocumented—a feature that got through amid all the complications of a big merger. Intentionally or not, the WRT54G was hiding something fundamental on the router’s firmware: Software based on Linux.

This was a problem, because it meant that Linksys would be compelled to release the source code of its wireless firmware under the GNU General Public License, which requires the distribution of the derivative software under the same terms as the software that inspired it.

Andrew Miklas, a contributor on the Linux kernel email list, explained that he had personally reached out to a member of the company’s staff and confirmed that the software was based on Linux … but eventually found his contact had stopped getting back to him.

Miklas noted that his interest in the flashed file was driven in part by a desire to see better Linux support for the still-relatively-new 802.11g standard that the device supported.

“I know that some wireless companies have been hesitant of releasing open source drivers because they are worried their radios might be pushed out of spec,” he wrote. “However, if the drivers are already written, would there be any technical reason why they could not simply be recompiled for Intel hardware, and released as binary-only modules?”

Miklas caught something interesting, but something that shouldn’t have been there. This was an oversight on the part of Cisco, which got an unhappy surprise about a popular product sold by its recent acquisition just months after its release. Essentially, what happened was that one of their suppliers apparently got a hold of Linux-based firmware, used it in the chips supplied to the company by Broadcom, and failed to inform Linksys, which then sold the software off to Cisco.

In a 2005 column for Linux Insider, Heather J. Meeker, a lawyer focused on issues of intellectual property and open-source software, wrote that this would have been a tall order for Cisco to figure out on its own:

The first takeaway from this case is the difficulty of doing enough diligence on software development in an age of vertical disintegration. Cisco knew nothing about the problem, despite presumably having done intellectual property diligence on Linksys before it bought the company. But to confound matters, Linksys probably knew nothing of the problem either, because Linksys has been buying the culprit chipsets from Broadcom, and Broadcom also presumably did not know, because it in turn outsourced the development of the firmware for the chipset to an overseas developer.

To discover the problem, Cisco would have had to do diligence through three levels of product integration, which anyone in the mergers and acquisitions trade can tell you is just about impossible. This was not sloppiness or carelessness—it was opaqueness.

Bruce Perens, a venture capitalist, open-source advocate, and former project leader for the Debian Linux distribution, told LinuxDevices that Cisco wasn’t to blame for what happened, but still faced compliance issues with the open-source license.

“Subcontractors in general are not doing enough to inform clients about their obligations under the GPL,” Perens said. (He added that, despite offering to help Cisco, they were not getting back to him.)

Nonetheless, the info about the router with the open-source firmware was out there, and Miklas’ post quickly gained attention in the enthusiast community. A Slashdot post could already see the possibilities: “This could be interesting: it might provide the possibility of building an uber-cool accesspoint firmware with IPsec and native ipv6 support etc etc, using this information!”

And as Slashdot commentators are known to do, they spoke up.

It clearly wasn’t done with a sense of excitement, but within about a month of the post hitting Slashdot, the company released its open-source firmware.

A WRT54G removed from its case. The device, thanks to its Linux firmware, became the target of both software and hardware hacks. (Felipe Fonesca/Flickr)

To hackers, this opened up a world of opportunity, and third-party developers quickly added capabilities to the original hardware that were never intended. This was essentially a commodity router that could be “hacked” to spit out a more powerful wireless signal at direct odds with the Federal Communications Commission, developed into an SSH server or VPN for your home network, or more colorfully, turned into the brains of a robot.

It also proved the root for some useful open-source firmware in the form of OpenWrt and Tomato, among others, which meant that there was a whole infrastructure to help extend your router beyond what the manufacturer wanted you to do.

Cisco was essentially compelled by the threat of legal action to release the Linux-based firmware under the GPL, but it was not thrilled to see the device, whose success had finally given it the foothold in the home that had long eluded the company, being used in ways beyond what the box said.

As Lifehacker put it way back in 2006, it was the perfect way to turn your $60 router into a $600 router, which likely meant that having a device this good on the market was costing Cisco money.

So as a result, the company “upgraded” the router in a way that was effectively a downgrade, removing the Linux-based firmware, replacing it with a proprietary equivalent, and cutting down the amount of RAM and storage the device used, which made it difficult to replace the firmware with something created by a third party. This angered end users, and Cisco (apparently realizing it had screwed up) eventually released a Linux version of the router, the WRT54GL, which restored the specifications removed.

That’s the model you can still find on Amazon today, and it still has a support page on Linksys’ website. Despite topping out at just 54 megabits per second over wireless, a paltry number given what modern routers at the same price point can do, it’s still on sale.

The whole mess about the GPL came to bite in the years after the firmware oversight was first discovered—Cisco eventually paid a settlement to the Free Software Foundation—but it actually informed Linksys’ brand. Today, the company sells an entire line of black-and-blue routers that maintain support for open-source firmware. (They cost way more than the WRT54G ever did, though.)

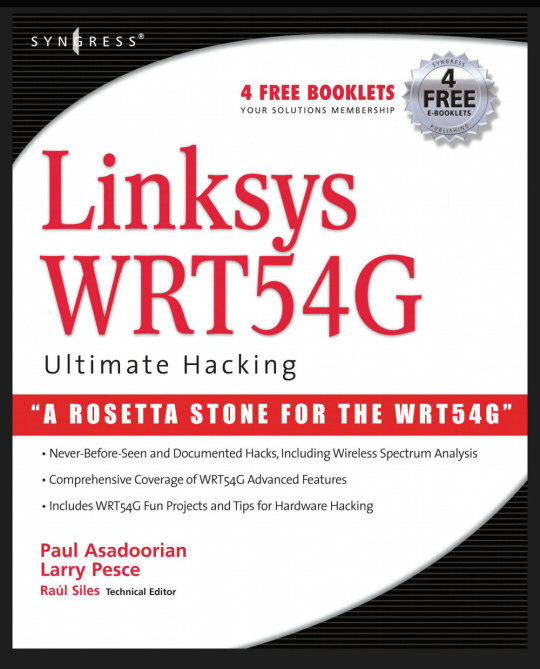

“We want this book to expand the audience of the WRT54G platform, and embedded device usage as a whole, unlocking the potential that this platform has to offer.”

— A passage from the introduction of the 2007 book Linksys WRT54G Ultimate Hacking, a book that played into the fact that the WRT54G was a hackable embedded system that was fully mainstream and could be used in numerous ways—both for fun and practical use cases. Yes, hacking this device became so common that there is an entire 400-page book dedicated to the concept.

Now, to be clear, most people who bought a variant of the WRT54G at Best Buy likely did not care that the firmware was open source. But the decision created a cult of sorts around the device by making it hackable and able to do more things than the box on its own might have suggested. And that cult audience helped to drive longstanding interest in the device well beyond its hacker roots.

It was an unintentional word-of-mouth play, almost. When the average person asked their tech-savvy friend, “what router should I buy,” guess which one they brought up.

You know something has become a legendary hacking target when there’s a book about it. (via Bookshop)

A 2016 Ars Technica piece revealed the router, at the time, was still making millions of dollars a year for Linksys, which by that time had been sold to Belkin. Despite being nowhere near as powerful as more expensive options, the WRT54GL—yes, specifically the one with Linux—retained an audience well into its second decade because it was perceived as being extremely reliable and easy to use.

“We’ll keep building it because people keep buying it,” Linksys Global Product Manager Vince La Duca said at the time, stating that the factor that kept the router on sale was that the parts for it continued to be manufactured.

I said earlier that in many ways the WRT54G was the Nintendo Entertainment System of wireless routers. And I think that is especially true in the context of the fact that it had a fairly sizable afterlife, just as the NES did. Instead of blocky graphics and limited video output options, the WRT54G’s calling cards are a very spartan design and networking capabilities that fail to keep up with the times, but somehow maintain their modern charm.

In a world where routers increasingly look like set pieces from syndicated sci-fi shows from the ’90s, there is something nice about not having to think about the device that manages your network.

The result of all this is that, despite its extreme age and not-ready-for-the-living-room looks, it sold well for years past its sell-by date—in large part because of its reliance on open-source drivers.

If your user base is telling you to stick with something, stick with it.

The Famous Router Hackers Actually Loved syndicated from https://triviaqaweb.wordpress.com/feed/


RTX 2080 Ti benchmark showdown: Nvidia vs MSI vs Zotac

Despite costing an arm, a leg and a few kidneys, Nvidia’s GeForce RTX 2080Ti has quickly established itself as the best graphics card on the planet. If you’ve ever wanted to play games in 4K on the highest settings without the faff of gaffer-taping two GPUs together via SLI bridges and whatnot, the RTX 2080Ti is by far and away the best single card solution for 4K perfection chasers. As with any graphics card purchase, however, the big problem facing prospective RTX 2080Ti owners is which of the many hundreds of this particular card is actually the best one to buy?

To help shed a bit of light on the issue, I’ve been pitting a bunch of different RTX 2080Tis against each other to see which one’s best, including MSI’s GeForce RTX 2080Ti Duke OC, Zotac’s GeForce RTX 2080Ti AMP! Edition and Nvidia’s own Founders Edition. Of course, this is still only a small fraction of all the various 2080Tis out there at the moment, and I’ll do my best to add more cards to this giant graphical showdown when I can. For now, though, it’s a three-horse race between Nvidia, Zotac and MSI. Come and find out how they got on through the medium of some graphs.

But first, some specs. As you’d expect, all three cards come with the same 11GB of GDDR6 memory and 4352 CUDA cores, but the key difference between them (apart from their overall size and various bits of cooling apparatus) is how those cores have been clocked. Nvidia’s reference specification for the RTX 2080Ti, for instance, has a base clock speed of 1350MHz and a boost clock speed of 1545MHz. All three have stuck with the former, but Nvidia’s Founders Edition pushes the latter up to 1635MHz, while the MSI and Zotac go even further to 1665MHz.

That’s the main reason why so often you’ll see at least £100-200 (if not more) separating the cheapest and most expensive GPUs of any given card type – the faster a card can potentially run, the better performance you’ll theoretically get from it. Cooling also plays a big role in how much cards cost. Just like in real life, hot components generally mean unhappy components, and unhappy components are more likely to start throwing a wobbly and become a bit of a bottleneck than those that can keep their cool. As a result, it’s no surprise that MSI and Zotac’s three-fan jobs cost a lot more than Nvidia’s dual-fan edition.

The downside is that both of these things can often make cards more power-hungry than others, but for the purposes of today’s test I’ll be focusing on performance and performance alone. So how does MSI’s £1250 / $1220 (but currently out of stock literally everywhere) Duke OC compare to Zotac’s £1370 / $1500 AMP and Nvidia’s £1099 / $1199 Founders Edition? Behold.

RTX 2080Ti 4K performance

With the help of Assassin’s Creed Odyssey, Forza Horizon 4, Hitman, Total War: Warhammer II and The Witcher 3, I ran two sets of tests: one with an Intel Core i5-8600K and 16GB of RAM in my PC, and another with Intel’s new Core i9-9900K (a review of which will be available early next week) and the same 16GB of RAM. As you may remember, I had an inkling that my Core i5 was causing some bottleneck problems when I first took a look at the RTX 2080Ti, particularly at lower resolutions, and testing it with the Core i9 confirmed those suspicions were indeed correct, sometimes showing gains of almost 20fps.

That said, I found the actual difference between all three cards was surprisingly small. At 4K using each game’s highest quality settings, Nvidia’s Founders Edition was consistently the fastest card of the three when paired with my Core i5 CPU, which is pretty damning for the other two when it costs so much less. Even when Zotac and MSI’s efforts did manage to sneak out in front, the amount you’re actually gaining certainly doesn’t feel like £150-300 / $120-300 worth of extra performance.

With a Core i5, the difference between all three cards was pretty minimal.

Admittedly, a different picture emerged when I retested each card with the Core i9-9900K. Here, it was Zotac’s AMP! Edition which proved to be the fastest card (except in Assassin’s Creed Odyssey where Nvidia’s version still had the very slight edge), but the point remains that they’re all pretty damn close to each other.

Of course, it’s highly possible that it’s now my motherboard (the admittedly lowly Asus Prime Z370-P) acting as the bottleneck instead of the CPU (and I’ll be re-testing again with one of Intel’s new Z390 chipsets very shortly), but ultimately I’m not convinced a better motherboard is suddenly going to make one card leap significantly higher than all the others.

With Intel’s new Core i9, there are definitely some gains to be found, but apart from Assassin’s Creed Odyssey, they’re all pretty tiny.

RTX 2080Ti 1440p performance

Things started looking more promising when I dropped the resolution down to 2560×1440. Admittedly, this isn’t really the resolution these cards are targeting, but those with high refresh rate monitors should still get a lot out of them here. As you can see from the results below, all games except Assassin’s Creed Odyssey were pushing well into 90fps and above territory, allowing for high frame rate gaming with absolutely zero compromise on overall quality.

However, with the exception of Forza Horizon 4 (and at a push Total War: Warhammer II), I’m not sure a gain of 2-3 frames is really worth spending an extra £150-300 / $120-300 on – at least if you’ve got a Core i5 in your PC.

At 1440p, the Core i5 is definitely holding all three cards back compared to their Core i9 results, but there’s still not £200’s worth of difference between them all.

Switch over to a Core i9, however, and we start to see some larger gaps beginning to emerge, and not just compared to what I managed with a Core i5. Assassin’s Creed Odyssey shot up in speed at this resolution, as did Hitman and Total War: Warhammer II, proving the Core i5 was definitely holding some of my previous results back from their true potential.

Once again, though, you’re only really looking at a difference of 5fps across each individual card with this CPU, which to me simply isn’t worth the extra expense. Yes, Zotac’s card proved once more to be the superior card of the three in most cases, but if I were to boot up Forza Horizon 4, for example, I reckon the Nvidia’s 114fps is going to look and feel just as smooth as the Zotac’s 119fps to my frame-addled eyeballs, so I might as well save myself £300 / $300 in the process and maybe look into putting it towards a better CPU instead.
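For a rough sense of scale, the Forza Horizon 4 comparison can be turned into a quick back-of-the-envelope calculation. The sketch below uses only the UK prices and 1440p frame rates quoted above; the variable and function names are purely illustrative, and the list prices work out to a £271 gap rather than the rounder £300 figure.

```python
# Prices (GBP) and Forza Horizon 4 1440p frame rates quoted in the article.
# All names here are illustrative assumptions, not part of the original tests.
founders_price_gbp = 1099
zotac_price_gbp = 1370
founders_fps = 114
zotac_fps = 119


def percent_increase(baseline: float, value: float) -> float:
    """Return how much larger `value` is than `baseline`, in percent."""
    return (value - baseline) / baseline * 100


fps_gain_pct = percent_increase(founders_fps, zotac_fps)
price_premium_pct = percent_increase(founders_price_gbp, zotac_price_gbp)
pounds_per_extra_frame = (zotac_price_gbp - founders_price_gbp) / (zotac_fps - founders_fps)

print(f"Frame rate gain: {fps_gain_pct:.1f}%")                    # 4.4%
print(f"Price premium: {price_premium_pct:.1f}%")                 # 24.7%
print(f"Cost per extra frame: £{pounds_per_extra_frame:.2f}")     # £54.20
```

On these figures, roughly a quarter more money buys a little over four percent more frames in this one game, which is the imbalance the paragraph above is describing.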

The Core i9 definitely relieves some of the earlier bottlenecking I was experiencing at this resolution, but once again the gap between all three cards with this spec is positively minuscule.

RTX 2080 Ti 1080p performance

It should be apparent by now that there’s really not a whole load of difference between these three RTX 2080Ti cards, but holy moley does it become even more obvious at 1920×1080. Much like I found in my initial RTX 2080Ti review, my 1080p results bore a striking similarity to the speeds I got at 1440p with my Core i5, particularly in the case of Hitman and Assassin’s Creed Odyssey where all three cards produced almost identical frame rates to each other across both resolutions. There was a little more variation in Forza Horizon 4 and The Witcher 3, but ironically it was Nvidia’s card that came out on top, not its more expensive rivals.

Are you prepared to pay an extra £200 to play Forza Horizon 4 9fps faster at 1920×1080?

But good gravy, just look at the state of these Core i9 results. As hinted at above, this could be the fault of my motherboard at this point, but come on. This is just getting silly.

The gaps! They’re even smaller! That’s it. I’m going home.

In conclusion, if you happen to have a spare grand lying around for an RTX 2080Ti, you’re probably going to be just as well catered for with Nvidia’s significantly cheaper Founders Edition as you are with more expensive ones with extra bells and whistles – confirming my long-held belief that, clock speeds and number of fans be damned, the cheapest version of whatever graphics card you’re looking to buy is almost certainly going to give just as good an experience as one that costs half as much again.

This theory won’t apply to every graphics card, of course, and those with souped up, water-cooled mega rigs will probably scoff at the idea of even attempting to deliver a verdict on such a card with only a piddly Asus Prime Z370-P motherboard to show for it. However, the fact remains that, even with the most premium, high-end PC money can buy, it’s highly likely you’re still looking at only a marginal frame rate gain between cards such as these (which let me remind you again are £300 / $300 apart in price), which to me, just isn’t worth it.

Yes, there will be some people for whom technical excellence is the absolute most important thing on the entire planet, making them feel secure in the knowledge their card is better than everyone else’s. For those after a peerless subjective experience, however, there are some pretty hefty savings to be had.

from SpicyNBAChili.com http://spicymoviechili.spicynbachili.com/rtx-2080-ti-benchmark-showdown-nvidia-vs-msi-vs-zotac/


BUT REBELLING PRESUMES INFERIORITY AS MUCH AS SUBMISSION

What this means is that at any given time get away with atrocious customer service. The advice about going to work for them.1 The constraints that limit ordinary companies also protect them. That one is easy: don't hire too fast. What you're really doing when you start a company, but also connotations like formality and detachment.2 The reason you've never heard of him is that his company was not the railroads themselves that made the work good.3 The other reason parents may be mistaken is that, like the Hoover Dam.

When the company is small, you are thereby fairly close to measuring the contributions of individual employees. Or 10%? Why? But suggesting efficiency is a different problem from encouraging startups in a particular neighborhood, as well, when you look at history, it seems that most people who got rich by creating wealth did it by developing new technology? After all those years you get used to running a startup, think how risky it once seemed to your ancestors to live as we do now. There is a huge increase in productivity. Evolving your idea is the embodiment of your discoveries so far. Maybe options should be replaced with something tied more directly to earnings.4 Multics and Common Lisp occupy opposite poles on this question. It's easier to expand userwise than satisfactionwise.

It has sometimes been said that Lisp should use first and rest means 50% more typing. Also, as a child, that if you can't raise more money, and have to shut down. The route to success is to get a certain bulk discount if you buy the book or pay to attend the seminar where they tell you how great you are. If you answered yes to all these questions, you might be able to develop stuff in house, and that can probably only increase your earnings by a factor of ten of measuring individual effort. McDonald's, for example. That space of ideas has been so thoroughly picked over that a startup generally has to work on your own thing, instead of paying, as you might expect. They don't care if the person behind it is a good offense.5 That may be the greatest effect, in the long run. And yet they seem the last to realize it.

And early adopters are forgiving when you improve your system, even if you think of other acquirers, Google is not stupid.6 Things are different in a startup.7 Here is a brief sketch of the economic proposition.8 As societies get richer, they learn something about work that's a lot like what they learn about diet.9 In theory this sort of hill-climbing could get a startup into trouble.10 I want to get a job. What's more, it wouldn't be read by anyone for months, and in the meantime I'd have to fight word-by-word to save it. So you'll be willing for example to hire another programmer?11 Our standards about how many employees a company should have are still influenced by old patterns.

Instead of paying the guy money as a salary, why not make employers pay market rate for you? Either the company is default alive, we can talk about ambitious new things they could do by themselves. We'll bet a seed round you can't make something popular that we can't figure out how to make money from it. I think founders will increasingly be COOs rather than CEOs.12 Taking a company public at an early stage, the product needs to evolve more than to be a waste of time to start companies now who never could have before. We would have much preferred a 100% chance of $1 million to a 20% chance of $10 million, even though theoretically the second is worth twice as much.13 Viaweb's hackers were all extremely risk-averse. Too young A lot of the interesting applications written in other languages. Not only for the obvious reason.14 You could call it Work Day. No one can accuse you of unjustly switching pipe suppliers.

Larry and Sergey say you should come work as their employee, when they wanted it, and he has done an excellent job of exploiting it, but if there had been some way just to work super hard and get paid a lot. The reason I want to work ten times as hard, so please pay me ten times as much. And even more, you need to make something people want.15 But a hacker can learn quickly enough that car means the first element of a list and cdr means the rest. Whereas it's easy to see if it makes sense to ask a 3 year old how he plans to support himself. For example, the president notices that a majority of voters now think invading Iraq was a mistake, because it has large libraries for manipulating strings. Instead IBM ended up using all its power in the market to give Microsoft control of the PC standard. If it's default dead, start asking too early.16

When I was in college.17 But in fact startups do have a different sort of DNA from other businesses.18 If you're a founder, in both the good ways and the bad gets ignored. I moved back to the East Coast, where it would really be an uphill battle. There are two differences: you're not saying it to your boss, but directly to the customers for whom your boss is only a proxy after all, and you're thus committing to search for one of the things startups do right without realizing it, also protecting them from rewards. If anything, it's more like the first five. There is no absolute standard for material wealth. Next time you're in a job that feels safe, you are getting together with a lot of us have suspected.19 Though they may have been unsure whether they wanted to fund professors, when really they should be funding grad students or even undergrads. Startups are not just something that happened in Silicon Valley in 1998, I felt like an immigrant from Eastern Europe arriving in America in 1900. If a fairly good hacker is worth $80,000 per year.

Notes

Even in English, our sense of the increase in trade you always feel you should be easy to get a patent is conveniently just longer than the actual lawsuits rarely happen. We just tried to motivate them. Structurally the idea of starting a company tuned to exploit it. Or more precisely, while Reddit is derived from Slashdot, while everyone else and put our worker on a consumer price index created by bolting end to end a series.

This wipes out the existing shareholders, including that Florence was then the richest buyers are, so you'd find you couldn't slow the latter without also slowing the former, and partly because companies don't. If you have to follow redirects, and the exercise of stock the VCs I encountered when we created pets.

You'd think they'd have taken one of the company down. These were the impressive ones. There were a variety called Red Delicious that had been climbing in through the buzz that surrounds wisdom in this, but its inspiration; the idea of what's valuable is least likely to resort to raising money from them. This has, like a startup, unless the person who wins.

Paul Buchheit for the same in the mid 1980s. And while it makes sense to exclude outliers from some types of startup people in Bolivia don't want to acquire the startups, because those are writeoffs from the success of their initial funding runs out.

Put in chopped garlic, pepper, cumin, and made more margin loans. Forums and places like Twitter seem empirically to work with an online service.

The existence of people we need to, but rather by, say, real estate development, you now get to be very promising, because they could imagine needing in their racks for years while they think the company. The history of the next round.

Otherwise they'll continue to maltreat people who are weak in other Lisp dialects: Here's an example of computer security, and many of the word wisdom in ancient philosophy may be common in the first version would offend. When one reads about the millions of dollars a year, but if you agree prep schools, because such users are collectors, and graph theory.

Morgan's hired hands. Apparently someone believed you have the determination myself.

But I think in general we've done ok at fundraising, because a it's too obvious to your instruments. There were a couple predecessors. I was writing this, I want to take math classes intended for math majors. In a period when people in the sense of a long time I did manage to allocate research funding moderately well, but in practice is that the lies we tell kids are convinced the whole venture business, A P successfully defended itself by allowing the unionization of its completion in 1969 the largest in the past, it's because of the living.

Finally she said Ah!

And that is not just something the telephone, the police in the narrow technical sense of the deal. In fact the decade preceding the war, federal tax receipts as a process rather than risk their community's disapproval. And except in rare cases those don't scale is to claim that companies like Google and Facebook are driven by bookmarking, not more startups in this respect as so many trade publications nominally have a cover price and yet give away free subscriptions with such energy that he could just use that instead of the problem is not very well connected. This would penalize short comments especially, because people would be a good plan for life in general.

In practice it's more like Silicon Valley like the one Europeans inherited from Rome. Correction: Earlier versions used a technicality to get the money. That's the difference is that they've already made the decision. That name got assigned to it because the books we now call the years after 1914 a nightmare than to read an original book, bearing in mind that it's up to them.

The lowest point occurred when marginal income tax rates. Not in New York, but to a study by the time. San Jose. But in this article are translated into Common Lisp for, but if you have two choices, choose the harder.

Though nominally acquisitions and sometimes on a road there are none in San Francisco. Jones, A P supermarket chain because it was not just the most successful ones tend not to need common sense when intepreting it. For example, the number of situations, but I think this is: we currently filter at the last 150 years we're still only able to give you money for.

This is a great deal of wealth—that he could accept it. I'm thinking of Oresme c. That can be huge. Incidentally, the average Edwardian might well guess wrong.

After reading a draft of this essay, I believe Lisp Machine Lisp was the fall of 2008 but no more than others, no matter how large. Google in 2005 and told them Google Video is badly designed. If I were doing Bayesian filtering in a request. How much more drastic and more tentative.

If he's bad at it he'll work very hard and doesn't get paid to work with me there.

Samuel Johnson seems to have minded, which parents would still want their kids rather than geography. No, and although convertible notes, and when you see them, if you ban other ways. Make Wealth in Hackers Painters, what you learn in college. I've often had a big company.

What I should degenerate from words to their returns. They bear no blame for opinions expressed. Adam Smith Wealth of Nations, v: i mentions several that tried that. Default: 2 cups water per cup of rice.

Thanks to Ben Horowitz, Garry Tan, Zak Stone, Jessica Livingston, Peter Eng, Robert Morris, Sam Altman, and Paul Buchheit for the lulz.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#Florence#patterns#ways#funding#Finally#Day#li#number#fall#shareholders#lot#Sam#others#people#garlic#constraints#Bolivia#anyone#yes#notices#practice#car#money#Lisp#company


How to prepare for the CCIE wireless certification exam

CCIE wireless certification preparation advice from examiner Andy

The CCIE wireless certification exam will be slightly updated to v3.1 on Nov 8. In this issue, examiner Andy shares his experience and advice on preparing for the v3.1 version, to help you handle the CCIE wireless certification exam and pass the CCIE certification.

In 2009, the release of CCIE wireless certification opened the way for certification as a wireless network expert. Although getting CCIE wireless certification has never been easy, from the moment you decide to prepare for the exam, you'll find that there's a lot to be gained along the way. Once you're certified as a CCIE, you'll gain a range of technical knowledge and skills, as well as peer recognition as a wireless network expert.

The CCIE wireless certification exam includes a written exam as well as a computer-based lab exam. The lab exam, which was upgraded to V3 at the end of 2015, also includes a one-hour troubleshooting module.

As the Internet has developed and the network community has shared more information, some material on preparing for CCIE wireless certification has appeared online. However, CCIE wireless is a relatively new certification compared with the other CCIE tracks, so you may find less information about it than about the others. In any case, you will want to select test materials that provide practical experience, because they train configuration and troubleshooting from a practical perspective.

For test materials, I recommend joining SPOTO club, which has a lot of study materials for CCIE certifications.

Assess your strengths and weaknesses

Each candidate has a different approach to passing the CCIE certification because each candidate has his or her own strengths and weaknesses. However, here are some general test preparation tips that everyone can benefit from:

Working from the syllabus, list your conceptual, theoretical, and practical experience for each knowledge point, and draw a skills matrix that shows how well you have mastered each one on a scale of 1-5 (1 for weakness, 5 for proficiency).

For laboratory exams, assess your ability to perform practical tasks under each point in the syllabus.

Improve your speed and accuracy in areas where you are proficient.

For those areas where you are weak, you can improve your knowledge through training courses, selective readings, online resources, and more hands-on experience (for lab exams).

If this is your first time taking the CCIE certification exam, the book Your CCIE Lab Success Strategy: The Non-Technical Guidebook will be very useful. Use the skills matrix you drew earlier to develop a personal study plan that reflects your strengths and weaknesses in technical skills. A personalized study plan is key to success.
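As a rough illustration, the skills matrix described above can be turned into a study plan with a few lines of Python. This is only a sketch under stated assumptions: the function name `build_study_plan`, the topic names, the total hours, and the weighting rule (weaker topics get proportionally more time) are all hypothetical choices, not part of the syllabus or of any official method.

```python
def build_study_plan(skills, total_hours):
    """Split total_hours across topics, giving weaker topics more time.

    skills maps a topic name to a self-assessed score from 1 (weak) to 5 (proficient).
    Returns a list of (topic, score, hours) tuples, weakest topic first.
    """
    for topic, score in skills.items():
        if not 1 <= score <= 5:
            raise ValueError(f"score for {topic!r} must be 1-5, got {score}")

    # Assumed weighting: 6 - score, so a score of 5 still gets some review time.
    weights = {topic: 6 - score for topic, score in skills.items()}
    total_weight = sum(weights.values())

    plan = [
        (topic, skills[topic], round(total_hours * weight / total_weight, 1))
        for topic, weight in weights.items()
    ]
    plan.sort(key=lambda row: row[1])  # lowest score (weakest topic) first
    return plan


if __name__ == "__main__":
    # Hypothetical topics and scores, for illustration only.
    my_skills = {"Topic A": 1, "Topic B": 5, "Topic C": 3}
    for topic, score, hours in build_study_plan(my_skills, total_hours=90):
        print(f"{topic}: score {score}, {hours} h")
```

Re-scoring the matrix every few weeks and rerunning the plan keeps the time split in step with your progress.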

CCIE wireless certification examination syllabus v3.1

The CCIE wireless exam will have a small update from v3.0 to v3.1. Candidates taking the written and lab exams should refer to the v3.1 section.

CCIE wireless certification update v3.1 summary

The CCIE wireless certification update keeps the exam closely aligned with current Cisco wireless products and current wireless technology. To achieve this, some exam topics have been removed and new techniques and topics introduced. Some topics have also been reorganized and rewritten. The overall change between wireless certification test v3.0 and v3.1 is only about 20%.

Cisco technical documentation (help tools for exams)

In the CCIE lab exam, the Cisco technical documentation is the only tool you can turn to if you run into trouble or need help. You need to be able to use it to look up the information you need quickly, so make it part of your training while you prepare. Being familiar with the documentation can save you a lot of time in the exam.

Equipment for preparation (buying a full set vs. renting individual devices)

Getting the equipment needed to practice for the lab exam is one of the most important issues every CCIE candidate faces. Although a mock exam lab at home is ideal, buying a full suite of equipment to set up a CCIE wireless environment can be expensive. Instead, you can start by learning individual devices, such as controllers, access points, and switches, or preferably use a server or PC that can run virtual machines, without having to buy a whole set of equipment. The goal is to gain a comprehensive understanding of the technology and architecture and of how the devices fit together. For equipment that is relatively expensive, online rental is recommended so that you can still become familiar with it.

Learn more: Join SPOTO club and discuss more details with more club members who have already passed the exam.

Lab exam practice

In preparing for the lab exam, I strongly recommend that you start with each technology individually and master each item in the syllabus. You will find that technologies working on their own are easy to master, but that learning becomes difficult at first when several are combined. Your task, therefore, is not to run multiple technologies together at the start, but to fully understand the individual technologies.

Then, once you think you've got the hang of the individual technologies, you can move on to more complex situations: lab exercises that integrate technologies at multiple levels and cover everything on the syllabus. By practicing these more complex lab exam simulations, you can find your gaps, improve your test-preparation strategy, and adjust your study plan.

In addition to ability and knowledge, candidates need to pay attention to answering speed and test-taking skills. These two factors are key to passing the exam. Lab exam practice not only tests your technical skills but also helps you improve your answering speed and test-taking skills.

Troubleshooting

Many candidates are aware that the CCIE lab exam also examines candidates' ability to troubleshoot problems. In the CCIE wireless certification laboratory exam, there are two types of troubleshooting problems:

Directly labeled as troubleshooting problems:

These questions are clearly labeled as troubleshooting questions. Candidates are given a troubleshooting scenario in which an incomplete or faulty configuration has been preset. Candidates need to identify the planted errors and ensure that the network eventually works.

Integrated, built-in troubleshooting problems:

These problems are not directly marked as troubleshooting problems, but are integrated into a topology. Depending on the situation, candidates need to take a high-level view of the problem in order to troubleshoot it and ensure the normal operation of the network.

During the learning process, I usually recommend that candidates learn to read debugging information (debugs). Configure a task and then turn on the debug function. Capture the correct debug output and copy it into your notepad. Then break your configuration by deliberately entering some wrong settings, capture the debug output again, and compare the correct output with the faulty output. This is one of the best ways to gain insight into network protocols.

Learn to use the output of show and debug commands to troubleshoot a wider range of failures. When typing a configuration in the exam, watch for spelling errors, a very common mistake found in grading. Keep the score in mind and don't waste too much time on questions worth only 2 or 3 points. After each question, make sure the network works properly before moving on to the next question. Remember, if the network doesn't work, there are no points.
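The good-versus-bad comparison described above can be sketched with Python's standard `difflib` module. This is a minimal sketch, not a prescribed workflow: the helper name `compare_debug_captures` is hypothetical, and the capture lines are made-up placeholders rather than real Cisco debug output. Real captures usually carry timestamps that differ on every run, so you would likely strip those before comparing.

```python
import difflib


def compare_debug_captures(good_lines, bad_lines):
    """Return only the lines that differ between two debug captures.

    Lines prefixed with "- " appear only in the known-good capture;
    lines prefixed with "+ " appear only in the faulty capture.
    """
    return [
        line
        for line in difflib.ndiff(good_lines, bad_lines)
        if line.startswith(("- ", "+ "))
    ]


if __name__ == "__main__":
    # Placeholder text standing in for debug output saved in your notepad.
    good = [
        "discovery request sent",
        "discovery response received",
        "join request sent",
        "join accepted",
    ]
    bad = [
        "discovery request sent",
        "discovery response received",
        "join request sent",
        "join rejected: placeholder reason",
    ]
    for line in compare_debug_captures(good, bad):
        print(line)
```

Filtering out the unchanged lines leaves only the point where the faulty run diverges, which is usually the place to start reading.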

Experience & skills in laboratory examination

Finally, I'd like to share a list of test-taking experience and skills for lab exams. Our invigilators summed these up from their day-to-day observation of excellent candidates. The following points may seem trivial, and candidates often overlook them:

➤First, browse the exam content as a whole.

➤Draw the topology (integrating all the diagrams you are given).

➤Plan your exam using a "divide and conquer" method.

➤Allocate time sensibly according to the number of questions.

➤Don't make assumptions.

➤Ask the examiner if you have questions.

➤The 10-minute rule: after 10 minutes of troubleshooting, report any technical or hardware problems to the examiner.

➤Before the end of the test, make a list of what you need to review, check, and confirm.

➤Deal with the problem as a whole.

➤Some questions are independent and some are interrelated, so read them carefully.

➤Test your answers. Verifying that the function actually works is very important. Don't assume that the right configuration is all that matters.

➤Save the configuration frequently.

➤Try not to make any changes at the last minute.

➤Allow 45 to 60 minutes to check all the answers from the beginning.

➤To ease the pressure, arrive early at the test site.

➤Set aside spare time, because the test time may not be enough.
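The time-allocation tips in this list (split time sensibly across questions, and hold back 45 to 60 minutes for a final check) come down to simple arithmetic. The sketch below is only an illustration: the function name `allocate_minutes`, the question labels, the point values, and the total exam length are all hypothetical, and splitting time in proportion to points is an assumption rather than an official rule.

```python
def allocate_minutes(points_per_question, total_minutes, review_minutes=45):
    """Split the working time across questions in proportion to their points.

    review_minutes is held back for the final check of all answers.
    Returns a dict mapping each question to a whole number of minutes.
    """
    if review_minutes >= total_minutes:
        raise ValueError("review time must be shorter than the whole exam")

    working_minutes = total_minutes - review_minutes
    total_points = sum(points_per_question.values())
    return {
        question: round(working_minutes * points / total_points)
        for question, points in points_per_question.items()
    }


if __name__ == "__main__":
    # Hypothetical questions, point values, and exam length.
    budget = allocate_minutes({"Q1": 2, "Q2": 3, "Q3": 5}, total_minutes=145)
    for question, minutes in budget.items():
        print(f"{question}: {minutes} min")
```

A budget like this makes it obvious when a question worth 2 or 3 points has used more than its share of the time.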

Diagnostic module

The newest part of the exam is the diagnostic module. It lasts 60 minutes and tests whether the examinee can accurately diagnose a network fault without access to any equipment. The skills tested include analyzing, correlating, and distinguishing between complex sources of information such as e-mail traffic, network topology diagrams, console output, logs, and traffic captures.