#tensorflow lite

Explore tagged Tumblr posts

Text

TensorFlow Lite is a lightweight version of TensorFlow, designed to bring machine learning (ML) models to mobile, embedded, and edge devices. It enables fast, efficient, and offline AI experiences—without depending on cloud servers.

0 notes

Text

Lesson 2

Adding audio classification to your mobile app: simple tracking of sound waves by window size and, at the end, adding the TensorFlow Lite Task Library.

*NB: the screen capture still shows a dog being picked up by the microphone tool.

#tensorflow lite#Audio text collaboration#pre-trained model#adding task library#google#tracking wawes#by size windows#Youtube

1 note

Text

Fluent Bit and AI: Unlocking Machine Learning Potential

These days, everywhere you look, there are references to Generative AI, so you may well ask: what have Fluent Bit and GenAI got to do with each other? GenAI has the potential to help with observability, but it also needs observation to measure its performance, whether it is being abused, etc. You may recall a few years back that Microsoft was trialing new AI features for Bing, and after only having…

#AI#Cloud#Data Drift#development#Fluent Bit#GenAI#Machine Learning#ML#observability#Security#Tensor Lite#TensorFlow

0 notes

Text

Tensorflow Lite for Microcontrollers | Bermondsey Electronics Limited

Explore the future of embedded AI with Bermondsey Electronics Limited. Harness the power of TensorFlow Lite for Microcontrollers to bring intelligence to your devices. Elevate your products with advanced machine learning capabilities.

0 notes

Text

🚀 Deploying Image Classification on Android Devices – Easy to Understand & Convenient 📲

Are you interested in AI technology 🤖 and want to learn how to classify images right on your Android phone? 🌐 Wait no longer, this article is for you! Let's explore how to deploy an image classification model in easy-to-follow steps, suitable for beginners and experts alike.

Why choose image classification on Android? 📸

Fast 🚀: Classifying images directly on the device processes them almost instantly, with no network connection required.

Convenient 👍: Easy to integrate into real-world applications such as product recognition, plant and animal classification, and many others.

Secure 🔒: Image data does not need to be uploaded to a server, which protects user privacy.

Deployment steps 🛠️

Prepare the AI model: Find a suitable image classification model or train your own with a framework such as TensorFlow or PyTorch.

Integrate into Android: Use the TensorFlow Lite library to convert and optimize the model for mobile devices 📱.

Test and deploy: Make sure the app runs smoothly, check its accuracy, and tune it for the best performance 🔧.
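As a rough illustration of the classification step, the sketch below turns a model's raw output scores into a ranked list of labels. It is plain Python rather than TensorFlow Lite or Android code, and the labels and scores are made-up values for illustration only.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(scores, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical labels and scores, for illustration only.
LABELS = ["cat", "dog", "flower", "pizza"]
raw_scores = [1.2, 3.4, 0.3, 2.1]
predictions = top_k(raw_scores, LABELS, k=2)
```

On a device, the scores would come from the model's output tensor, and the app would typically show only predictions above some confidence threshold.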

Deploying image classification on Android devices

Discover more valuable articles at aicandy.vn

3 notes

Text

🚀 Discover How to Deploy Image Classification on Android Devices! 📱🤖

Have you ever wondered how your phone recognizes faces, tells different flowers apart, or even identifies dishes? 🍕🌸📸 Today we will explore a fascinating topic in Artificial Intelligence and Machine Learning: image classification on Android devices! 📲💡

🔍 Why does image classification matter? Image classification has become an indispensable part of today's mobile apps. From smart cameras to apps like Google Photos and Instagram, all of them use image classification to deliver the best user experience. 🌐✨

💡 What's in the article In the article on our website, you will find:

✅ Step-by-step guidance: from setting up the environment to building a basic model

✅ The necessary tools, such as TensorFlow Lite and Android Studio

✅ Tips for optimizing performance and reducing app size

✅ Illustrated examples and sample source code to help you get started

🚀 Real-world applications of image classification Image classification not only improves the user experience but also opens up many opportunities for new products, from disease detection for crops 🍅 to product quality inspection in industry. 💼

📌 Don't miss this article if you are a developer who wants to learn more about AI and ML on mobile! 👉 Deploying image classification on Android devices

Discover more valuable articles at aicandy.vn

3 notes

Text

📱 Deploying Image Classification on Android Devices: Putting AI Within Your Reach! 📱

Did you know that, thanks to advances in technology, we can now use AI right on our Android phones? 🚀 From simple image classification to complex object detection, integrating AI into mobile apps has made features smarter and more convenient than ever! Imagine an Android app that can classify images in real time 🌟 – an ideal tool for personal and business projects alike.

💡 Deploying image classification on Android comes with challenges such as keeping the model lightweight, ensuring speed, and saving battery 🔋. From choosing a model to optimization tools such as TensorFlow Lite and PyTorch, the article guides you step by step to bring your app closer to cutting-edge AI while keeping performance smooth.

👉 Read the article now to learn how to turn your mobile AI ideas into reality and deliver a great user experience! 🔗 Deploying image classification on Android devices

Discover more valuable articles at aicandy.vn

2 notes

Text

Anton R Gordon’s Blueprint for Real-Time Streaming AI: Kinesis, Flink, and On-Device Deployment at Scale

In the era of intelligent automation, real-time AI is no longer a luxury—it’s a necessity. From fraud detection to supply chain optimization, organizations rely on high-throughput, low-latency systems to power decisions as data arrives. Anton R Gordon, an expert in scalable AI infrastructure and streaming architecture, has pioneered a blueprint that fuses Amazon Kinesis, Apache Flink, and on-device machine learning to deliver real-time AI performance with reliability, scalability, and security.

This article explores Gordon’s technical strategy for enabling AI-powered event processing pipelines in production, drawing on cloud-native technologies and edge deployments to meet enterprise-grade demands.

The Case for Streaming AI at Scale

Traditional batch data pipelines can’t support dynamic workloads such as fraud detection, anomaly monitoring, or recommendation engines in real-time. Anton R Gordon's architecture addresses this gap by combining:

Kinesis Data Streams for scalable, durable ingestion.

Apache Flink for complex event processing (CEP) and model inference.

Edge inference runtimes for latency-sensitive deployments (e.g., manufacturing or retail IoT).

This trio enables businesses to execute real-time AI pipelines that ingest, process, and act on data instantly, even in disconnected or bandwidth-constrained environments.

Real-Time Data Ingestion with Amazon Kinesis

At the ingestion layer, Gordon uses Amazon Kinesis Data Streams to collect data from sensors, applications, and APIs. Kinesis is chosen for:

High availability across multiple AZs.

Native integration with AWS Lambda, Firehose, and Flink.

Support for shard-based scaling—enabling millions of records per second.

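Kinesis buffers the raw data durably for downstream consumption; any normalization is done by the producers or by the stream processors that read from it. Anton emphasizes the use of data partitioning and sequencing strategies to ensure downstream applications maintain order and performance. Kinesis preserves record order only within a shard, so records that must stay in order should share a partition key.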
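To make the partitioning point concrete, here is a minimal sketch of how a partition key ends up on a shard. Kinesis Data Streams maps partition keys to 128-bit integers with MD5 and assigns each record to the shard whose hash key range contains that value. The even split of the hash space, the `shard_for` helper, and the device IDs are assumptions for illustration; this is not the AWS SDK.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 yields a 128-bit integer

def hash_key(partition_key: str) -> int:
    """Map a partition key to a 128-bit integer via MD5."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, byteorder="big")

def shard_for(partition_key: str, shard_count: int) -> int:
    """Pick the shard whose hash range contains the key, assuming the
    hash space is split evenly across shards (an assumption: real
    streams can have uneven ranges after splits and merges)."""
    return hash_key(partition_key) * shard_count // HASH_SPACE

# Hypothetical device IDs used as partition keys.
events = [("sensor-1", 20.5), ("sensor-2", 19.8), ("sensor-1", 20.7)]
assignments = [(key, shard_for(key, shard_count=4)) for key, _ in events]
```

Because the mapping is deterministic, both `sensor-1` records land on the same shard and therefore keep their relative order.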

Complex Stream Processing with Apache Flink

Apache Flink is the workhorse of Gordon’s streaming stack. Deployed via Amazon Kinesis Data Analytics (KDA, since renamed Amazon Managed Service for Apache Flink) or self-managed ECS/EKS clusters, Flink allows for:

Stateful stream processing using keyed aggregations.

Windowed analytics (sliding, tumbling, session windows).

ML model inference embedded in UDFs or side-output streams.

Anton R Gordon’s implementation involves deploying TensorFlow Lite or ONNX models within Flink jobs or calling SageMaker endpoints for real-time predictions. He also uses savepoints and checkpoints for fault tolerance and performance tuning.
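The combination of keyed state and tumbling windows mentioned above can be illustrated without Flink. The sketch below is plain Python, not the Flink API: it groups events by key and by fixed, non-overlapping time window, then sums the values. The transaction data is hypothetical.

```python
from collections import defaultdict

def tumbling_window_sums(events, window_seconds):
    """Sum values per (key, window), where windows are fixed-size and
    non-overlapping. Each event is a (timestamp_seconds, key, value) tuple."""
    sums = defaultdict(float)
    for timestamp, key, value in events:
        # Every timestamp belongs to exactly one window, identified by its start.
        window_start = (timestamp // window_seconds) * window_seconds
        sums[(key, window_start)] += value
    return dict(sums)

# Hypothetical card transactions: (timestamp, card_id, amount).
transactions = [
    (0, "card-A", 10.0),
    (30, "card-A", 5.0),
    (61, "card-A", 7.5),
    (62, "card-B", 100.0),
]
per_minute = tumbling_window_sums(transactions, window_seconds=60)
```

A real streaming engine does the same grouping incrementally and also has to deal with late and out-of-order events, which this batch-style sketch ignores.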

On-Device Deployment for Edge AI

Not all use cases can wait for round trips to the cloud. For industrial automation, retail, and automotive, Gordon extends the pipeline with on-device inference using NVIDIA Jetson, AWS IoT Greengrass, or Coral Edge TPU. These edge deployments:

Consume model updates via MQTT or AWS IoT.

Perform low-latency inference directly on sensor input.

Reconnect to central pipelines for data aggregation and model retraining.

Anton stresses the importance of model quantization, pruning, and conversion (e.g., TFLite or TensorRT) to deploy compact, power-efficient models on constrained devices.
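To show what quantization means in practice, the sketch below maps floating-point weights onto 8-bit integers with a scale and zero point, then maps them back. It is a simplified illustration of affine quantization in plain Python, not the TFLite or TensorRT converter, and the weights are made-up values.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Compute scale and zero point so that [min, max] maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # include 0 so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point

def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping to the int8 range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]

def dequantize(qvalues, scale, zero_point):
    """Map integers back to approximate floats."""
    return [(q - zero_point) * scale for q in qvalues]

# Hypothetical weights, for illustration only.
weights = [-0.8, -0.1, 0.0, 0.4, 1.2]
scale, zero_point = quantization_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
```

Each restored value differs from the original by at most half a quantization step, which is the accuracy traded for storing one byte per weight instead of four.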

Monitoring, Security & Scalability

To manage the entire lifecycle, Gordon integrates:

AWS CloudWatch and Prometheus/Grafana for observability.

IAM and KMS for secure role-based access and encryption.

Flink Autoscaling and Kinesis shard expansion to handle traffic surges.

Conclusion

Anton R Gordon’s real-time streaming AI architecture is a production-ready, scalable framework for ingesting, analyzing, and acting on data in milliseconds. By combining Kinesis, Flink, and edge deployments, he enables AI applications that are not only fast—but smart, secure, and cost-efficient. This blueprint is ideal for businesses looking to modernize their data workflows and unlock the true potential of real-time intelligence.

0 notes

Text

AI Model Integration for Apps: A Complete Developer’s Guide to Smarter Applications

In today’s digital-first world, applications are becoming smarter, faster, and more personalized thanks to the integration of Artificial Intelligence (AI). Whether you're a solo developer or part of a product team, embedding AI into your app can dramatically enhance its performance, usability, and value. From predictive analytics to voice recognition and recommendation systems, AI Model Integration for Apps is now a key strategy in modern app development.

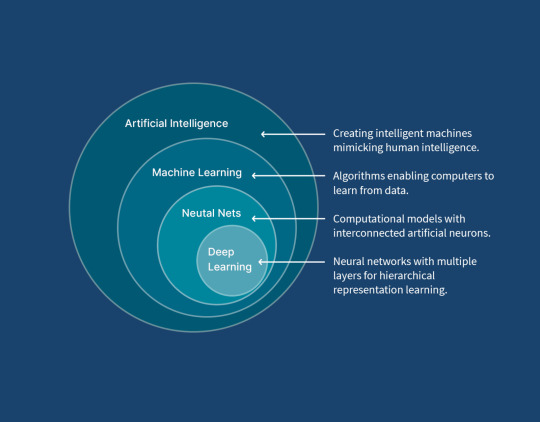

What Is AI Model Integration?

AI model integration refers to the process of incorporating machine learning models into software applications so they can make intelligent decisions based on data. These models are trained to perform tasks such as identifying images, predicting trends, understanding natural language, or automating responses, without the need for explicit programming for every possible scenario. When properly implemented, AI transforms static apps into dynamic, adaptive tools that continue to learn and improve over time.

Benefits of AI Integration in App Development

Personalized User Experiences: AI helps tailor content, notifications, and interactions based on user behavior, preferences, and usage patterns.

Smarter Automation: Repetitive tasks like sorting emails, flagging spam, or generating responses can be automated, saving time and effort.

Faster Decision-Making: Real-time analytics powered by AI models offer quick insights that improve user satisfaction and engagement.

Reduced Human Error: In fields like finance, healthcare, and logistics, AI models help catch inconsistencies or anomalies that might go unnoticed.

Enhanced Accessibility: Features such as speech-to-text, voice commands, and intelligent assistants make apps more inclusive and user-friendly.

Practical Use Cases of AI in Apps

E-commerce Apps: Recommending products based on user activity, managing inventory, and detecting fraudulent transactions.

Health & Fitness Apps: Predicting health trends, monitoring vital stats, and suggesting routines.

Travel Apps: Suggesting personalized travel itineraries or predicting flight delays.

Chat Applications: Implementing AI chatbots for 24/7 customer support.

Finance Apps: Detecting unusual activity, automating spending reports, and offering budget advice.

Steps for AI Model Integration

The process of integrating AI models typically follows these steps:

Define the Problem: Decide what you want the AI model to do—recommend products, interpret voice commands, detect faces, etc.

Collect and Prepare Data: The model’s performance depends on high-quality data. Clean, labeled datasets are crucial.

Choose or Build a Model: You can either use pre-trained models from platforms like TensorFlow, PyTorch, or OpenAI, or build your own using custom datasets.

Train the Model: If you're not using a pre-trained model, train your model using machine learning algorithms relevant to your problem.

Deploy the Model: This can be done through APIs (such as REST APIs) or mobile SDKs, depending on your app’s environment.

Integrate with the App: Embed the model in your codebase and create endpoints or interfaces for your app to interact with it.

Test and Monitor: Evaluate the model’s accuracy, adjust for edge cases, and continuously monitor its performance in the real world.

For a complete breakdown with code snippets, platform options, and common pitfalls to avoid, visit the full guide on AI Model Integration for Apps.
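As one possible shape for the deploy and integrate steps, the sketch below puts a model behind a small request handler that validates JSON input and returns a JSON prediction. The `predict` rule is a stand-in for a trained model, and the function names and the `amount` field are hypothetical; a real app would wire such a handler into its web framework or mobile SDK of choice.

```python
import json

def predict(features):
    """Stand-in model: a hypothetical rule that flags large transactions.
    A real app would call a trained model here."""
    score = min(features["amount"] / 1000.0, 1.0)
    return {"label": "suspicious" if score >= 0.5 else "normal", "score": score}

def handle_predict_request(body: str):
    """Validate a JSON request body and return (HTTP status, JSON response)."""
    try:
        payload = json.loads(body)
        amount = float(payload["amount"])
    except (ValueError, KeyError, TypeError):
        error = 'expected a JSON object with a numeric "amount" field'
        return 400, json.dumps({"error": error})
    if amount < 0:
        return 400, json.dumps({"error": "amount must be non-negative"})
    return 200, json.dumps(predict({"amount": amount}))

status, response = handle_predict_request('{"amount": 750}')
```

Keeping validation separate from the model call makes it possible to swap the stand-in for a real model without touching the interface the app depends on.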

Popular Tools and Libraries for AI Integration

TensorFlow Lite: Designed for deploying machine learning models on mobile and embedded devices.

PyTorch Mobile: Offers a flexible and dynamic framework ideal for rapid prototyping and model deployment.

ML Kit by Google: A set of mobile SDKs that bring on-device machine learning to Android and iOS.

OpenAI API: Provides access to advanced language models like GPT, perfect for chatbots and text generation.

Amazon SageMaker: Helps build, train, and deploy machine learning models at scale with built-in support for APIs.

Best Practices for AI Model Integration

Start Small: Focus on integrating a single AI-powered feature before scaling.

Use Lightweight Models: Especially for mobile apps, use compressed or distilled models to maintain performance.

Prioritize User Privacy: Be mindful of how you collect and process user data and comply with data laws like GDPR and CCPA.

Maintain Transparency: Ensure users understand how AI is being used, especially when decisions impact their experience.

Test for Bias and Accuracy: Audit models regularly to prevent biased or inaccurate outcomes.

Future Trends in AI App Integration

Expect more apps offering real-time sentiment analysis, emotion detection, personalized coaching, and integration with AR/VR. Cross-platform intelligence will also rise, where an app learns from your entire digital ecosystem.

Final Thoughts

The power of AI lies in its ability to adapt, learn, and improve, qualities that, when integrated into apps, drive significant value for users. From increasing efficiency to delivering personalized experiences, AI model integration helps apps stand out in a crowded market.

1 note

Text

Mobile App Development Secrets for 2025

In our hyper-connected world, mobile apps drive everything—from grabbing your morning coffee to handling your finances. Behind every amazing app lies a technology stack that not only powers great performance but also sets the stage for future growth. Whether you're starting with a minimum viable product (MVP) or mapping out a full-scale digital strategy, choosing the right development tools is critical.

What Are Mobile App Development Technologies?

Mobile app technologies include all the programming languages, frameworks, platforms, and tools used to build apps for your smartphone or tablet. Think of it like crafting your favorite recipe: you need the right ingredients to create something truly special. The choices you make here influence the development speed, cost, performance, and overall user experience of your app.

Native vs. Cross-Platform Development: Finding Your Fit

One of the biggest early choices is whether to go native or cross-platform. Let’s break it down:

Native Mobile App Development

Native apps are developed specifically for one platform, either iOS or Android, using platform-specific languages (Swift for iOS or Kotlin for Android). This approach offers best-in-class performance, smoother integration with device hardware, and top-tier user experiences.

Pros:

Best performance

Full access to device features (think camera, GPS, etc.)

Seamless updates with the operating system

Cons:

Requires two separate codebases if you need both iOS and Android

Typically more time-consuming and expensive

Ideal for high-demand sectors such as banking, gaming, healthcare, or AR/VR applications.

Cross-Platform Mobile App Development

Frameworks like Flutter and React Native let you craft a single codebase that deploys across both iOS and Android. This approach is great for faster development, reduced costs, and consistent design across platforms—but might trade off a bit on native performance.

Pros:

Quicker turnaround and lower development costs

Consistent UI experience across devices

Cons:

May have limited access to some native device features

Slight performance differences compared to native solutions

Perfect for startups, MVPs, and businesses with tighter budgets.

The Leading Frameworks in 2025

Let’s look at some of the coolest frameworks shaking up mobile app development this year:

Flutter

Language: Dart

By: Google

Flutter is renowned for its beautifully customizable UIs and high performance across different platforms using just one codebase. It’s a favorite if you need a dynamic, pixel-perfect design without the extra overhead.

React Native

Language: JavaScript

By: Meta (Facebook)

If you’re from a web development background, React Native feels like home. It uses reusable components to speed up prototyping and quickly bring your app to life.

Kotlin Multiplatform

Language: Kotlin

By: JetBrains and Google

A newer, exciting option, Kotlin Multiplatform allows for sharing core business logic across platforms while giving you the flexibility to build native user interfaces for each.

Swift and SwiftUI

Language: Swift

By: Apple

For purely iOS-focused projects, Swift combined with SwiftUI offers robust performance and a seamless integration with the Apple ecosystem, perfect for apps needing advanced animations and responsiveness.

Hybrid Approaches

Using web technologies like HTML, CSS, and JavaScript wrapped in native containers, hybrid apps (via frameworks like Ionic or Apache Cordova) let you quickly convert an existing website into a mobile app. They’re great for rapid development but might not always match the performance of native apps.

Trends Shaping Mobile App Development

Looking ahead to 2025, several trends are redefining how we build mobile apps:

Next-Level AI and Machine Learning: Integrating on-device AI (using tools like Core ML or TensorFlow Lite) can help personalize experiences and power innovative features like voice assistants.

Wearable Integration: With the rise of smartwatches and fitness trackers, native development for wearables is booming.

5G and Real-Time Experiences: As 5G networks expand, expect more apps to offer real-time features and smoother interactions, especially in areas like gaming and AR.

Low-Code/No-Code Platforms: For rapid prototyping or internal tools, platforms that simplify app creation without deep coding knowledge are becoming increasingly popular.

Choosing the Right Tech for Your App

Before you jump into development, ask yourself:

What’s Your Budget?

Limited funds? Cross-platform options like Flutter or React Native might be perfect.

Budget is less of an issue? Native development can deliver that extra performance boost.

How Fast Do You Need to Launch?

If time-to-market is key, cross-platform frameworks speed up the process.

For a more robust, long-term solution, native apps may be worth the wait.

Which Features Are Essential?

Need deep hardware integration? Native is the way to go.

For straightforward functionality, a hybrid approach can work wonders.

What’s Your Team’s Expertise?

A team comfortable with web technologies might excel with React Native.

If your developers are seasoned in iOS or Android, diving into Swift or Kotlin may yield the best results.

Final Thoughts

There isn’t a magic bullet in mobile app development—the best choice always depends on your unique objectives, budget, timeline, and team. If you’re looking for high performance and don’t mind investing extra time, native development is ideal. However, if speed and cost-efficiency are your top priorities, cross-platform frameworks like Flutter or React Native offer tremendous value.

At Ahex Technologies, we’ve partnered with start-ups, small businesses, and enterprises to build tailored mobile solutions that fit their exact needs. Whether you’re at the ideation stage or ready to scale up, our team is here to help you navigate the tech maze and set your app up for long-term success.

Questions or Thoughts?

I’m curious, what trends in mobile app development are you most excited about for 2025? Feel free to share your insights, ask questions, or start a discussion right here. Let’s keep the conversation going!

#ahex technologies#android mobile app development company#android application development company#mobile application development#mobile app development

0 notes

Text

Edge-Native Custom Apps: Why Centralized Cloud Isn’t Enough Anymore

The cloud has transformed how we build, deploy, and scale software. For over a decade, centralized cloud platforms have powered digital transformation by offering scalable infrastructure, on-demand services, and cost efficiency. But as digital ecosystems grow more complex and data-hungry, especially at the edge, cracks are starting to show. Enter edge-native custom applications—a paradigm shift addressing the limitations of centralized cloud computing in real-time, bandwidth-sensitive, and decentralized environments.

The Problem with Centralized Cloud

Centralized cloud infrastructures still have their strengths, especially for storage, analytics, and orchestration. However, they're increasingly unsuited for scenarios that demand:

Ultra-low latency

High availability at remote locations

Reduced bandwidth usage

Compliance with local data regulations

Real-time data processing

Industries like manufacturing, healthcare, logistics, autonomous vehicles, and smart cities generate massive data volumes at the edge. Sending all of it back to a centralized data center for processing leads to lag, inefficiency, and potential regulatory risks.

What Are Edge-Native Applications?

Edge-native applications are custom-built software solutions that run directly on edge devices or edge servers, closer to where data is generated. Unlike traditional apps that rely heavily on a central cloud server, edge-native apps are designed to function autonomously, often in constrained or intermittent network environments.

These applications are built with edge-computing principles in mind—lightweight, fast, resilient, and capable of processing data locally. They can be deployed across a variety of hardware—from IoT sensors and gateways to edge servers and micro data centers.

Why Build Custom Edge-Native Apps?

Every organization’s edge environment is unique—different devices, network topologies, workloads, and compliance demands. Off-the-shelf solutions rarely offer the granularity or adaptability required at the edge.

Custom edge-native apps are purpose-built for specific environments and use cases. Here’s why they’re gaining momentum:

1. Real-Time Performance

Edge-native apps minimize latency by processing data on-site. In mission-critical scenarios—like monitoring patient vitals or operating autonomous drones—milliseconds matter.

2. Offline Functionality

When connectivity is spotty or non-existent, edge apps keep working. For remote field operations or rural infrastructure, uninterrupted functionality is crucial.

3. Data Sovereignty & Privacy

By keeping sensitive data local, edge-native apps help organizations comply with GDPR, HIPAA, and similar regulations without compromising on performance.

4. Reduced Bandwidth Costs

Not all data needs to be sent to the cloud. Edge-native apps filter and process data locally, transmitting only relevant summaries or alerts, significantly reducing bandwidth usage.

5. Tailored for Hardware Constraints

Edge-native custom apps are optimized for low-power, resource-constrained environments—whether it's a rugged industrial sensor or a mobile edge node.
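The bandwidth argument above (process locally, transmit only summaries or alerts) can be sketched in a few lines. The function name, threshold, and readings below are hypothetical; the point is only that one small message replaces a whole batch of raw readings.

```python
from statistics import mean

def summarize_batch(readings, alert_threshold):
    """Reduce a batch of local sensor readings to one small message:
    summary statistics plus only the readings that exceed the threshold."""
    alerts = [r for r in readings if r > alert_threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }

# Hypothetical temperature readings (in °C) collected on the device.
batch = [70.1, 70.4, 69.9, 92.3, 70.2, 70.0]
message = summarize_batch(batch, alert_threshold=85.0)
```

Only `message` would be sent upstream; the raw batch stays on the device or is discarded, depending on retention requirements.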

Key Technologies Powering Edge-Native Development

Developing edge-native apps requires a different stack and mindset. Some enabling technologies include:

Containerization (e.g., Docker, Podman) for packaging lightweight services.

Edge orchestration tools like K3s or Azure IoT Edge for deployment and scaling.

Machine Learning on the Edge (TinyML, TensorFlow Lite) for intelligent local decision-making.

Event-driven architecture to trigger real-time responses.

Zero-trust security frameworks to secure distributed endpoints.

Use Cases in Action

Smart Manufacturing: Real-time anomaly detection and predictive maintenance using edge AI to prevent machine failures.

Healthcare: Medical devices that monitor and respond to patient data locally, without relying on external networks.

Retail: Edge-based checkout and inventory management systems to deliver fast, reliable customer experiences even during network outages.

Smart Cities: Traffic and environmental sensors that process data on the spot to adjust signals or issue alerts in real time.

Future Outlook

The rise of 5G, AI, and IoT is only accelerating the demand for edge-native computing. As computing moves outward from the core to the periphery, businesses that embrace edge-native custom apps will gain a significant competitive edge—pun intended.

We're witnessing the dawn of a new software era. It’s no longer just about the cloud—it’s about what happens beyond it.

Need help building your edge-native solution? At Winklix, we specialize in custom app development designed for today’s distributed digital landscape—from cloud to edge. Let’s talk: www.winklix.com

#custom software development company in melbourne#software development company in melbourne#custom software development companies in melbourne#top software development company in melbourne#best software development company in melbourne#custom software development company in seattle#software development company in seattle#custom software development companies in seattle#top software development company in seattle#best software development company in seattle

0 notes

Text

How is TensorFlow used in neural networks?

TensorFlow is a powerful open-source library developed by Google, primarily used for building and training deep learning and neural network models. It provides a comprehensive ecosystem of tools, libraries, and community resources that make it easier to develop scalable machine learning applications.

In the context of neural networks, TensorFlow enables developers to define and train models using a flexible architecture. At its core, TensorFlow represents computation as data flow graphs, where nodes represent mathematical operations and edges represent the multidimensional data arrays (tensors) communicated between them. In TensorFlow 2, code runs eagerly by default and can be compiled into such graphs with `tf.function`. This structure makes it well suited to deep learning tasks that involve complex computations and large-scale data processing.

TensorFlow’s Keras API, integrated directly into the library, simplifies the process of creating and managing neural networks. Using Keras, developers can easily stack layers to build feedforward neural networks, convolutional neural networks (CNNs), or recurrent neural networks (RNNs). Each layer, such as Dense, Conv2D, or LSTM, can be customized with activation functions, initializers, regularizers, and more.

Moreover, TensorFlow supports automatic differentiation, allowing for efficient backpropagation during training. Its optimizer classes like Adam, SGD, and RMSprop help adjust weights to minimize loss functions such as categorical_crossentropy or mean_squared_error.
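To show what an optimizer does with gradients, the sketch below fits a one-variable linear model by gradient descent on the mean squared error. It deliberately avoids TensorFlow: the gradients are derived by hand, whereas TensorFlow would compute them through automatic differentiation. The data and learning rate are illustrative choices.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the linear model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient_step(w, b, xs, ys, learning_rate):
    """One full-batch gradient descent step using the analytic MSE gradients."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - learning_rate * grad_w, b - learning_rate * grad_b

# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
initial_loss = mse(w, b, xs, ys)
for _ in range(2000):
    w, b = gradient_step(w, b, xs, ys, learning_rate=0.05)
final_loss = mse(w, b, xs, ys)
```

After training, `w` and `b` approach the values used to generate the data. Optimizers such as Adam or RMSprop follow the same loop but adapt the step size per parameter.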

TensorFlow also supports GPU acceleration, which drastically reduces the training time for large neural networks. Additionally, it provides utilities for model saving, checkpointing, and deployment across platforms, including mobile and web via TensorFlow Lite and TensorFlow.js.

TensorFlow’s ability to handle data pipelines, preprocessing, and visualization (via TensorBoard) makes it an end-to-end solution for neural network development from experimentation to production deployment.

For those looking to harness TensorFlow’s full potential in AI development, enrolling in a data science machine learning course can provide structured and hands-on learning.

0 notes

Text

Complete Design of the CM5 HyperModule Board: Sovereign Architecture for High-Performance Modular Computing

(Based on technical documentation, academic research, and industry standards)

🔍 1. Strategic Objective

Develop an open-source modular motherboard for the Compute Module 5 (CM5) that outperforms commercial devices such as the MacBook Air M2 in:

Computational performance (26 TOPS with Hailo-8)

Thermal resilience (operating from -20°C to 70°C without throttling)

Technological sovereignty (100% repairable with 3D-printed parts)

⚙️ 2. Board Technical Specifications

Compute Core

| Component | Specification | Purpose |
| --- | --- | --- |
| Main SoC | Broadcom BCM2712 (4x Cortex-A76) | Central processing |
| AI coprocessor | Hailo-8 M.2 (26 TOPS) | Offline neural inference |
| Memory | LPDDR5 8GB + FRAM/MRAM for failover | State preservation during failures |

Critical Subsystems

| Module | Technology | Innovation |
| --- | --- | --- |
| Thermal | Graphene heat pipe + magnetic PCM | Passive dissipation up to 15 W TDP |
| Power | LiFePO4 batteries + Maxwell supercapacitors | Hot-swap in <5 s without data loss |
| Security | TPM 2.0 + STM32H7 secure enclave | Physical self-destruction of the firmware |

🛠️ 3. Advanced PCB Design

Key Parameters

| Characteristic | Specification | Validation tool |
| --- | --- | --- |
| Substrate | Rogers 4350B (4 layers) | Ansys HFSS simulation |
| Impedance | 85 Ω ±5% (PCIe Gen4) | Teledyne LeCroy TDR |
| Heat dissipation | Integrated capillary microchannels | Tests in a Weiss WK11-340 chamber |
| Connectors | Magnetic locking mechanism | MIL-STD-810H certification |

Power Topology

    graph LR
        USB_C[USB-C PD 100W] --> MPPT[GaN converter 20A]
        MPPT --> BMS[Smart BMS]
        BMS --> Supercaps[Supercap buffer]
        Supercaps --> SoC[BCM2712 + Hailo-8]

🔒 4. Security and Failover Protocols

Zero-Trust Architecture

    // Secure boot pseudo-code (helpers and constants are assumed to come from the platform firmware)
    void secure_boot(void) {
        if (tpm_verify(EFI_SIGNATURE) == VALID) {
            load_os();
        } else {
            stm32h7_fallback();  // Secure enclave
            if (physical_tamper_detected()) {
                destroy_firmware(GPIO_PULSE_12V);  // Self-destruct
            }
        }
    }

Power Failover

Transition time: < 3 ms (via FRAM/MRAM)

Mechanism:

    def power_failover(voltage):
        # save_state_to_fram() and switch_to_supercaps() are assumed to be defined elsewhere
        if voltage < 3.3:  # volts
            save_state_to_fram()
            switch_to_supercaps()

🧪 5. Development Methodology

Critical Phases

| Phase | Key actions | Deliverables |
| --- | --- | --- |
| F1 | Schematic design (KiCad) + SI/PI simulation | Layout optimized for PCIe Gen4 |
| F2 | Rapid prototyping (JLCPCB) + assembly | 3 functional boards |
| F3 | Thermal validation (-20°C to 70°C) | FLIR report + throttling data |
| F4 | Field tests (Amazon, with Suzano Foundation) | Autonomy/resilience metrics |
| F5 | Certifications (IEC 62368-1, MIL-STD-810H) | Documentation for volume production |

📦 6. Complete Technical Repository

    /cm5-hypermodule/
    ├── hardware/
    │   ├── kicad/               # Complete PCB design
    │   ├── 3d_models/           # Chassis and heatsinks
    │   └── bom.csv              # Bill of materials
    ├── firmware/
    │   ├── secure_boot/         # ARM UEFI + TPM code
    │   ├── thermal_control/     # LSTM algorithm for thermal management
    │   └── biosensors/          # OpenBio SDK
    └── docs/
        ├── MIL-STD-810H_tests/  # Ruggedness test results
        └── sustainability.pdf   # Life-cycle analysis

🌐 7. Strategic Impact

Disruptive Innovations

Technological sovereignty:

100% designed with open-source tools (KiCad, TensorFlow Lite)

Decentralized production via fab lab networks

Radical sustainability:

Chassis in bioplastic reinforced with hemp fiber

Blockchain for material tracking

Real Use Case

    # Environmental monitoring in the Amazon
    # (hypermodule and edge_ai are the project's own modules; lora and gps are assumed to be in scope)
    from hypermodule.biosensors import AquaticBioimpedance
    from edge_ai import HailoInference

    sensor = AquaticBioimpedance(calibration="river_water")
    detector = HailoInference("pollution_detector_v2")
    if detector(sensor.read()):
        lora.send_alert(gps.get_coords(), encryption="AES-256")

🔬 8. Validation and Certification

| Test | Standard Applied | Result Obtained |
| --- | --- | --- |
| PCIe Gen4 integrity | IEC 61000-4-21 | 0 errors in 72 h @ 60°C |
| Autonomy | Mixed workload | 14 h @ 4 W (50 Wh) |
| Mechanical resistance | MIL-STD-810H Method 514 | No failures @ 20 G vibration |
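For readers who want to relate the autonomy figures to each other, runtime is simply capacity divided by average power. The helper names below are hypothetical and the sketch ignores converter losses and battery derating.

```python
def runtime_hours(capacity_wh: float, avg_power_w: float) -> float:
    """Ideal runtime for a given energy capacity and average power draw."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return capacity_wh / avg_power_w


def implied_power_w(capacity_wh: float, hours: float) -> float:
    """Average power that would drain the given capacity in the given time."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return capacity_wh / hours


if __name__ == "__main__":
    print(runtime_hours(50, 4))                # 12.5
    print(round(implied_power_w(50, 14), 2))   # 3.57
```

With a constant 4 W draw, 50 Wh lasts 12.5 h. The reported 14 h would correspond to an average draw of about 3.57 W over the mixed workload.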


Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

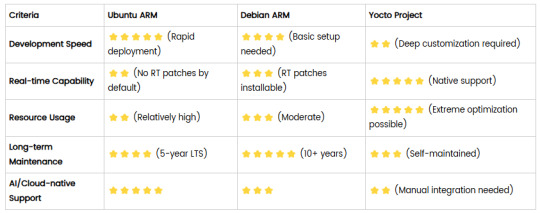

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Built-in tools like MicroK8s, Docker, and Kubernetes, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models (e.g., ROS 2 + Ubuntu) on Jetson devices.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: About 5 years of security updates per release via Debian LTS, extendable to roughly 10 years through commercial Extended LTS (ELTS).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): Compliance with IEC 62304/DO-178C certifications.
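The Modbus-to-MQTT gateway mentioned above mostly comes down to decoding register values and republishing them as structured messages. A minimal sketch of that translation step follows, using only the standard library and no network I/O. The topic layout (`plant/<device>/<name>`), the JSON payload shape, and the big-endian word order are assumptions. Real devices differ, so check the device's register map.

```python
import json
import struct


def registers_to_float(high: int, low: int) -> float:
    """Combine two 16-bit registers (high word first) into an IEEE 754 float32."""
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]


def to_mqtt_message(device_id: str, name: str, high: int, low: int, unit: str):
    """Build a (topic, payload) pair for one decoded measurement."""
    topic = f"plant/{device_id}/{name}"  # hypothetical topic scheme
    value = round(registers_to_float(high, low), 3)
    payload = json.dumps({"value": value, "unit": unit})
    return topic, payload


if __name__ == "__main__":
    # 0x41CC0000 is the float32 encoding of 25.5
    print(to_mqtt_message("plc1", "temperature", 0x41CC, 0x0000, "C"))
```

In a real gateway the resulting pair would be handed to an MQTT client library, and the register values would come from a Modbus client polling the device.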

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Built-in industrial-grade features like SELinux and dm-verity.

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.
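The recommendations above can be condensed into a small decision helper. This is a rough sketch rather than a definitive rule set: the function name and parameters are hypothetical, and the RAM cutoffs reuse the approximate figures quoted earlier in this comparison (about 512MB for Ubuntu, 128MB for a minimal Debian install).

```python
def recommend_os(*, custom_hardware: bool = False, hard_realtime: bool = False,
                 mission_critical: bool = False, ram_mb: int = 1024) -> str:
    """Pick a starting-point OS from the criteria discussed in this comparison."""
    # Custom boards, strict real-time needs, or very small memory point to Yocto.
    if custom_hardware or hard_realtime or ram_mb < 128:
        return "Yocto Project"
    # Stability-first equipment, or devices below Ubuntu's footprint, point to Debian.
    if mission_critical or ram_mb < 512:
        return "Debian ARM"
    # Otherwise favor fast deployment and cloud integration.
    return "Ubuntu ARM"


if __name__ == "__main__":
    print(recommend_os())
    print(recommend_os(mission_critical=True))
    print(recommend_os(hard_realtime=True))
```

The order of the checks matters: hardware and real-time constraints are treated as hard requirements, so they are evaluated before stability or convenience.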

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: Real-time Linux image built with Yocto, for example with Preempt-RT (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.


Tech Toolkit: Tools and Platforms That Power Innovation at Hack4Purpose

Hackathons are fast-paced environments where ideas become working solutions in just 24 to 48 hours. But no team can build impact-driven innovations without the right set of tools. At Hack4Purpose, participants come from diverse backgrounds, bringing ideas that span across domains like health, education, sustainability, fintech, and more.

To succeed, teams often leverage a combination of development frameworks, design tools, project management platforms, and data resources. This blog breaks down some of the most commonly used technologies and essential tools that have powered past Hack4Purpose winners.

1. Tech Stacks That Deliver Under Pressure

At Hack4Purpose, most participants prefer lightweight, fast-to-deploy stacks. Here are some popular choices:

Front-End:

React.js – For rapid UI development with reusable components

Vue.js – Lightweight alternative preferred for simplicity

Bootstrap / Tailwind CSS – For quick, responsive styling

Back-End:

Node.js + Express.js – Fast setup for APIs and scalable backend

Flask (Python) – Popular for data-heavy or ML-integrated apps

Firebase – Excellent for authentication, real-time database, and hosting

Databases:

MongoDB – Great for quick setup and flexibility with JSON-like documents

PostgreSQL – Preferred for structured, scalable applications

Teams often choose stacks based on familiarity and ease of integration. Time is tight, so tools that require minimal configuration and have strong community support are the go-to choices.

2. Design and Prototyping Tools

User experience plays a major role in judging at Hack4Purpose. To create intuitive, impactful interfaces, teams rely on:

Figma – For UI/UX design, wireframing, and team collaboration in real time

Canva – Ideal for pitch deck visuals and quick graphics

Balsamiq – Used for low-fidelity wireframes to validate ideas early on

Even non-designers can contribute to the visual workflow thanks to these user-friendly tools.

3. Project Collaboration and Task Management

Efficient teamwork is critical when time is limited. Here are some platforms used for coordination and project management:

Trello – Simple Kanban boards to track tasks and deadlines

Notion – All-in-one workspace for notes, docs, and task lists

GitHub – For code collaboration, version control, and deployment pipelines

Slack / Discord – For real-time communication with mentors and teammates

Some teams even use Google Workspace (Docs, Sheets, Slides) for pitches and research collaboration.

4. AI & Machine Learning APIs

Several winning projects at Hack4Purpose integrate AI and ML to solve social problems, often using:

Google Cloud Vision / NLP APIs – For image and text processing

OpenAI (GPT or Whisper APIs) – For content generation, summarization, and voice-to-text apps

Scikit-learn / TensorFlow Lite – For training custom models (Scikit-learn) and running models on-device (TensorFlow Lite)

Teams usually import pre-trained models or use open-source datasets to save time.

5. Open Data Sources & APIs

Hack4Purpose encourages building data-driven solutions. Teams frequently use open data platforms to ground their ideas in real-world insights:

data.gov.in – Government data on agriculture, health, education, etc.

UN Data / WHO APIs – For global health and development metrics

Kaggle Datasets – Ready-to-use CSV files for quick prototyping

By combining real data with impactful ideas, projects often resonate more with judges and stakeholders.
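As a quick illustration of prototyping with a CSV dataset, the sketch below groups rows and averages a column using only the Python standard library. The sample data is made up for the example, and the column names are hypothetical. A real project would load a file downloaded from one of the portals above.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Made-up sample rows standing in for a downloaded open-data CSV.
SAMPLE_CSV = """district,year,literacy_rate
North,2021,71.5
North,2022,73.5
South,2021,80.0
South,2022,82.0
"""


def average_by(rows, key: str, value: str) -> dict:
    """Average the numeric `value` column for each distinct `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(float(row[value]))
    return {k: mean(v) for k, v in groups.items()}


if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
    print(average_by(rows, "district", "literacy_rate"))
```

For larger datasets a dataframe library is usually more convenient, but this is often enough to validate an idea in the first hours of a hackathon.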

6. Pitch and Demo Tools

Presentation is everything at the end of the hackathon. To deliver compelling demos, teams often turn to:

Loom – For screen-recorded demo videos

OBS Studio – For streaming or recording live app walkthroughs

Google Slides / PowerPoint – To deliver clean, impactful pitches

Many teams rehearse their final pitch using Zoom or Google Meet to refine delivery and timing.

Final Thoughts: Prepare to Build with Purpose

At Hack4Purpose, technology isn’t just used for the sake of innovation—it’s used to solve problems that matter. Whether you’re developing a chatbot for mental health, a dashboard for climate data, or an e-learning platform for rural students, having the right tools is half the battle.

So before the next edition kicks off, explore these tools, form your dream team, and start experimenting early. With the right tech stack and a clear sense of purpose, your idea could be the next big thing to come out of Hack4Purpose.


Mobile App Development Trends in 2025: Tools, Tech, and Tactics

The mobile app development landscape is rapidly evolving, and 2025 is poised to redefine how businesses, developers, and users engage with technology. With billions of smartphone users worldwide and a surge in mobile-first strategies, staying ahead of trends isn't optional—it’s essential.

This blog explores the top mobile app development trends, tools, technologies, and tactics that will shape success in 2025.

1. AI and Machine Learning Integration

Artificial intelligence (AI) and machine learning (ML) are no longer optional extras—they’re integral to smarter, more personalized mobile experiences. In 2025, expect:

AI-powered chatbots for real-time customer support

Intelligent recommendation engines in eCommerce and entertainment apps

Predictive analytics for user behavior tracking

On-device AI processing for better speed and privacy

Developers are leveraging tools like TensorFlow Lite, Core ML, and Dialogflow to integrate AI features into native and hybrid apps.

2. Cross-Platform Development Tools Dominate

The demand for faster, cost-effective development is pushing cross-platform tools into the spotlight. Leading frameworks like:

Flutter (by Google)

React Native (by Meta)

Xamarin (by Microsoft; succeeded by .NET MAUI after Xamarin support ended in May 2024)

...are helping developers create apps for iOS and Android from a single codebase. In 2025, expect Flutter’s adoption to soar due to its superior UI rendering and native-like performance.

3. 5G’s Impact on Mobile Experiences

With 5G coverage continuing to expand in 2025, mobile apps are tapping into its high speed and low latency to:

Stream HD/AR/VR content seamlessly

Enable real-time multiplayer mobile gaming

Power smart city apps and IoT experiences

Improve video calling and live streaming quality

App developers must now optimize for 5G networks to ensure speed and performance match user expectations.

4. Progressive Web Apps (PWAs) Gain Ground

PWAs offer the functionality of native apps with the accessibility of websites. With improvements in browser support, 2025 will see:

Increased adoption of PWAs by eCommerce and service platforms

Enhanced offline functionality

Lower development and maintenance costs

Tools like Lighthouse and Workbox are essential for optimizing PWA performance and reach.
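Offline support in a PWA usually rests on a caching strategy running in a service worker. The sketch below shows the cache-first idea in Python purely as a language-neutral illustration. A real PWA would implement it in JavaScript, for instance with Workbox's strategies. The `CacheFirst` class and the injected `fetch` callable are hypothetical names for this example.

```python
from typing import Callable, Dict


class CacheFirst:
    """Serve from the cache when possible; hit the network only on a miss."""

    def __init__(self, fetch: Callable[[str], str]):
        self._fetch = fetch              # network call, injected for testability
        self._cache: Dict[str, str] = {}

    def get(self, url: str) -> str:
        if url in self._cache:
            # Cache hit: works even when the device is offline.
            return self._cache[url]
        response = self._fetch(url)      # may raise if the network is down
        self._cache[url] = response
        return response


if __name__ == "__main__":
    calls = []

    def fake_fetch(url: str) -> str:
        calls.append(url)
        return "body:" + url

    strategy = CacheFirst(fake_fetch)
    strategy.get("/index.html")
    strategy.get("/index.html")
    print(calls)  # the network was contacted only once
```

Cache-first suits static assets. Content that changes often is typically better served with a network-first or stale-while-revalidate strategy.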

5. Voice Interfaces and VUI Design

Voice technology continues to grow thanks to voice assistants like Siri, Alexa, and Google Assistant. In 2025, mobile apps will increasingly include:

Voice-driven navigation

Natural Language Processing (NLP) integrations

VUI (Voice User Interface) design as a new UX standard

APIs like Amazon Lex and Google Cloud Speech-to-Text are driving this innovation.

6. Increased Focus on App Security and Privacy

With rising concerns about data protection and compliance (think GDPR, CCPA), 2025 will demand:

End-to-end encryption

Biometric authentication (fingerprint, face recognition)

App Transport Security (ATS) on iOS, which requires HTTPS connections by default

Enhanced user permission management

Security-first development will become a competitive differentiator.

7. Cloud-Integrated Mobile Apps

Cloud technology allows seamless sync and performance across devices. Apps in 2025 will leverage cloud platforms for:

Real-time data storage and access

Cloud-hosted backend (using Firebase, AWS Amplify, or Azure Mobile Apps)

Improved scalability for user growth

Reduced device dependency and better user experience

Expect mobile and cloud integration to deepen further across industries.

8. Low-Code/No-Code Platforms Rise

Platforms like OutSystems, Adalo, and Bubble are empowering non-developers to build functional apps. In 2025:

Startups will use no-code to rapidly prototype MVPs

Enterprises will deploy internal tools faster

Developers will integrate low-code tools to reduce redundant coding

This trend accelerates digital transformation across sectors.

Final Thoughts

2025 is not about isolated innovation—it’s about convergence. The blend of AI, 5G, cloud computing, cross-platform frameworks, and security-first development is redefining how apps are built and scaled.

For businesses and developers like ApperStudios, adapting to these trends isn’t just about staying current—it’s about building smarter, faster, and more secure apps that users love.

Ready to build your future-proof app? Let’s talk about how emerging tools and tactics can power your next big idea.
