#tensorflowlite


Link

https://lnkd.in/gM2tjzD Hi guys, I'm very excited to share a new video on my YouTube channel. Please subscribe to my channel, where I discuss the latest tech topics in a way that should be helpful for both students and working professionals. "Please Do Subscribe and Share" so that it can help more people. #internshalastudentpartner #internshala #internship #internshipopportunity #glassdoor #amazonjobs #angelinvesting #datascience #technologyrecruitment #jobapplications #jobsalert #deeplearning #keras #neuralnetwork #tableaupublic #datavisualizations #datavizualization #learntocode #teslamodelx #machinelearningtraining #datamining #code #mlearning #bigdataanalytics #neuralnetworks #javascript #datanalytics #programmer #businessanalytics #onlinecourse #developercommunity #programmers #artificialintelligenceai #artificialintelligence #developers #developer #freecourse #typescript #advancedanalytics #internship2020 #internshipopportunity #intern2learn #internship #internshipalert #internships #internshipopportunities #teslamotors #pytorch #python #pytorchlightning #tensorflow #tensorflowjs #tensorflowlite #deeplearning #softwaredevelopment #programming #gan #teslaroadster #selfdrivingcars #selfdrivingvehicles #selfcarethreads #teslas


Photo

Android 8.1 preview unlocks your Pixel 2 camera’s AI potential

Remember how Google said the Pixel 2 and Pixel 2 XL both have a custom imaging chip…

#Android #android8.1 #androidgo #androidoreo #developer #developerpreview #gear #google #mobile #neuralnetwork #photography #pixel2 #pixel2xl #pixelvisualcore #smartphone #tensorflow #tensorflowlite


Text

Fruit identification using Arduino and TensorFlow

By Dominic Pajak and Sandeep Mistry

Arduino is on a mission to make machine learning easy enough for anyone to use. The other week we announced the availability of TensorFlow Lite Micro in the Arduino Library Manager. This comes with some cool ready-made ML examples, such as speech recognition, simple machine vision, and even an end-to-end gesture recognition training tutorial. For a comprehensive background, we recommend you take a look at that article.

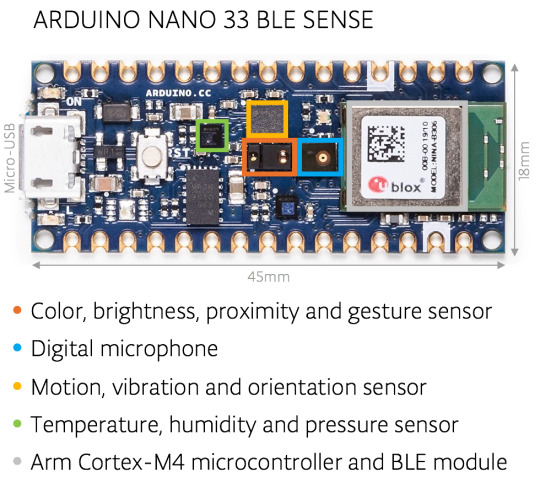

In this article we are going to walk through an even simpler end-to-end tutorial that uses the TensorFlow Lite Micro library and the Arduino Nano 33 BLE Sense’s colorimeter and proximity sensor to classify objects. To do this, we will run a small neural network on the board itself.

Arduino Nano 33 BLE Sense running TensorFlow Lite Micro

The philosophy of TinyML is doing more on the device with fewer resources: in smaller form factors, with less energy, and on lower-cost silicon. Running inference on the same board as the sensors has benefits for privacy and battery life, and it means inference can be done independently of a network connection.

The fact that we have the proximity sensor on the board means we get an instant depth reading of an object in front of the board – instead of using a camera and having to determine if an object is of interest through machine vision.

In this tutorial, when the object is close enough, we sample the color. The onboard RGB sensor can be viewed as a 1-pixel color camera. While this method has limitations, it gives us a quick way of classifying objects using only a small amount of resources. Note that you could indeed run a complete CNN-based vision model on-device. Because this particular Arduino board includes an onboard colorimeter, we thought it would be fun and instructive to start by demonstrating this approach.
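The sampling rule described above can be sketched in Python. This is only an illustration of the idea, not the board's actual sketch, which runs as C++ on the Arduino. It makes three assumptions: a proximity reading where smaller means closer, and two thresholds, `PROXIMITY_MAX` and `MIN_BRIGHTNESS`, which are hypothetical values chosen for illustration. A reading is kept only when an object is close and well lit. The RGB values are then normalized into ratios, so that overall brightness matters less than the color balance.

```python
from typing import Optional, Tuple

# Hypothetical thresholds for illustration; tune them for your sensor and lighting.
PROXIMITY_MAX = 0      # assumed scale: 0 = object very close, larger = farther away
MIN_BRIGHTNESS = 10    # assumed minimum R+G+B sum for a usable reading


def sample_color(proximity: int, r: int, g: int, b: int) -> Optional[Tuple[float, float, float]]:
    """Return normalized (r, g, b) ratios, or None if the reading should be skipped."""
    if proximity > PROXIMITY_MAX:
        return None  # nothing close enough to the sensor
    total = r + g + b
    if total < MIN_BRIGHTNESS:
        return None  # too dark to trust the color
    # Dividing by the sum removes overall brightness, leaving the color balance.
    return (r / total, g / total, b / total)


if __name__ == "__main__":
    print(sample_color(0, 120, 40, 40))    # close and bright -> (0.6, 0.2, 0.2)
    print(sample_color(200, 120, 40, 40))  # too far -> None
```

Normalizing to ratios is one reasonable design choice for a 1-pixel "camera". An apple under a dim lamp and the same apple in daylight then land close together in feature space.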

We’ll show that a simple but complete end-to-end TinyML application can be built quickly and without a deep background in ML or embedded systems. What we cover here is data capture, training, and classifier deployment. This is intended to be a demo, but there is scope to improve and build on it should you decide to connect an external camera down the road. We want to give you an idea of what is possible and a starting point with the tools available.


What you’ll need

Arduino Nano 33 BLE Sense

A micro USB cable

A desktop/laptop machine with a web browser

Some objects of different colors

About the Arduino board

The Arduino Nano 33 BLE Sense board we’re using here has an Arm Cortex-M4 microcontroller running mbedOS and a ton of onboard sensors – digital microphone, accelerometer, gyroscope, temperature, humidity, pressure, light, color and proximity.

While tiny by cloud or mobile standards, the microcontroller is powerful enough to run TensorFlow Lite Micro models and classify data from the onboard sensors.

Setting up the Arduino Create Web Editor

In this tutorial we’ll be using the Arduino Create Web Editor, a cloud-based tool for programming Arduino boards. To use it, you have to sign up for a free account and install a plugin that allows the browser to communicate with your Arduino board over a USB cable.

You can get set up quickly by following the getting started instructions which will guide you through the following:

Download and install the plugin

Sign in or sign up for a free account

(NOTE: If you prefer, you can also use the Arduino IDE desktop application, whose setup is described in the previous tutorial.)

Capturing training data

Now we will capture the data we'll use to train our model in TensorFlow. First, choose a few objects of different colors. We’ll use fruit, but you can use whatever you prefer.

Setting up the Arduino for data capture

Next we’ll use Arduino Create to program the Arduino board with an application object_color_capture.ino that samples color data from objects you place near it. The board sends the color data as a CSV log to your desktop machine over the USB cable.

To load the object_color_capture.ino application onto your Arduino board:

Connect your board to your laptop or PC with a USB cable

The Arduino board takes a male micro USB plug

Open object_color_capture.ino in Arduino Create by clicking this link

Your browser will open the Arduino Create web application (see GIF above).

Press OPEN IN WEB EDITOR

For existing users this button will be labeled ADD TO MY SKETCHBOOK

Press Upload & Save

This will take a minute

You will see the yellow light on the board flash as it is programmed

Open the Serial Monitor

This opens the Monitor panel on the left-hand side of the web application

You will now see color data in CSV format here when objects are near the top of the board

Capturing data in CSV files for each object

For each object we want to classify we will capture some color data. By doing a quick capture with only one example per class we will not train a generalized model, but we can still get a quick proof of concept working with the objects you have to hand!

Say, for example, we are sampling an apple:

Reset the board using the small white button on top.

Keep your finger away from the sensor, unless you want to sample it!

The Monitor in Arduino Create will say ‘Serial Port Unavailable’ for a minute

You should then see Red,Green,Blue appear at the top of the serial monitor

Hold the front of the board against the apple.

The board will only sample when it detects an object is close to the sensor and is sufficiently illuminated (turn the lights on or be near a window)

Move the board around the surface of the object to capture color variations

You will see the RGB color values appear in the serial monitor as comma separated data.

Capture a few seconds of samples from the object

Copy and paste this log data from the Monitor to a text editor

Tip: untick the AUTOSCROLL checkbox at the bottom to stop the text moving

Save your file as apple.csv

Reset the board using the small white button on top.

Do this a few more times, capturing other objects (e.g. banana.csv, orange.csv).

NOTE: The first line of each of the .csv files should read:

Red,Green,Blue

If you don’t see it at the top, you can just copy and paste in the line above.
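Before training, each capture log has to be parsed into labeled samples. The Colab notebook does this for you. The Python below is only a minimal standard-library sketch of that parsing step. It assumes each file starts with the Red,Green,Blue header described above, holds one sample per line, and is named after its class (apple.csv becomes the label "apple"). The function names `read_color_csv` and `load_dataset` are hypothetical, not taken from the notebook.

```python
import csv
import io
from pathlib import Path
from typing import Iterable, List, TextIO, Tuple

EXPECTED_HEADER = ["Red", "Green", "Blue"]


def read_color_csv(stream: TextIO) -> List[Tuple[float, float, float]]:
    """Parse one capture log: a Red,Green,Blue header followed by numeric rows."""
    reader = csv.reader(stream)
    header = next(reader, None)
    if header is None or [cell.strip() for cell in header] != EXPECTED_HEADER:
        raise ValueError("first line must be Red,Green,Blue")
    samples = []
    for row in reader:
        if not any(cell.strip() for cell in row):
            continue  # skip blank lines left over from copy and paste
        r, g, b = (float(cell) for cell in row)
        samples.append((r, g, b))
    return samples


def load_dataset(paths: Iterable[Path]):
    """Return (samples, labels, class_names); each file's name becomes its class label."""
    samples, labels, class_names = [], [], []
    for index, path in enumerate(sorted(paths)):
        class_names.append(path.stem)  # apple.csv -> "apple"
        with path.open(newline="") as f:
            for rgb in read_color_csv(f):
                samples.append(rgb)
                labels.append(index)
    return samples, labels, class_names


if __name__ == "__main__":
    demo = io.StringIO("Red,Green,Blue\n0.52,0.30,0.18\n\n0.50,0.31,0.19\n")
    print(read_color_csv(demo))
```

With your files in the current directory, `load_dataset(Path(".").glob("*.csv"))` would return every sample along with an integer label per file. Files are processed in sorted order, so the label numbering stays stable between runs.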

Training the model

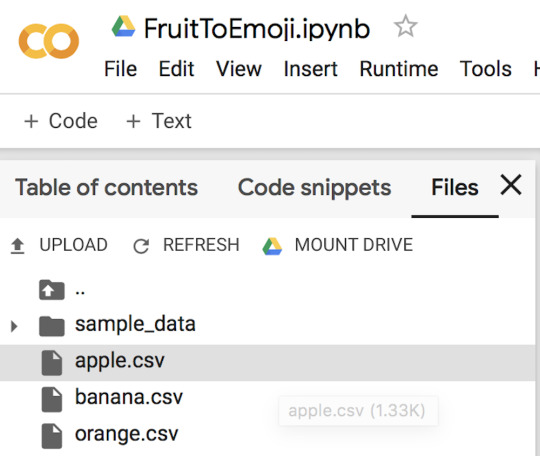

We will now use Colab to train an ML model using the data you just captured in the previous section.

First, open the FruitToEmoji Jupyter Notebook in Colab

Follow the instructions in the Colab

You will be uploading your *.csv files

Parsing and preparing the data

Training a model using Keras

Outputting a TensorFlow Lite Micro model

Downloading this to run the classifier on the Arduino
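The notebook trains a small Keras network for this step. Below is a dependency-free stand-in: a minimal softmax (multinomial logistic) regression trained with full-batch gradient descent. This is a swapped-in technique, not the notebook's model. It assumes samples are normalized (r, g, b) tuples with integer class labels. The function names and hyperparameters (`epochs`, `lr`) are illustrative.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_softmax_regression(samples, labels, num_classes, epochs=500, lr=0.5, seed=0):
    """Fit weights W[c][j] and biases b[c] by full-batch gradient descent on cross-entropy."""
    rng = random.Random(seed)
    num_features = len(samples[0])
    W = [[rng.uniform(-0.01, 0.01) for _ in range(num_features)] for _ in range(num_classes)]
    b = [0.0] * num_classes
    n = len(samples)
    for _ in range(epochs):
        grad_W = [[0.0] * num_features for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(samples, labels):
            logits = [sum(w * xi for w, xi in zip(W[c], x)) + b[c] for c in range(num_classes)]
            probs = softmax(logits)
            for c in range(num_classes):
                # Gradient of cross-entropy w.r.t. logit c is p_c minus 1 if c is the true class.
                err = probs[c] - (1.0 if c == y else 0.0)
                grad_b[c] += err
                for j in range(num_features):
                    grad_W[c][j] += err * x[j]
        # Average the gradients over the batch and take one descent step.
        for c in range(num_classes):
            b[c] -= lr * grad_b[c] / n
            for j in range(num_features):
                W[c][j] -= lr * grad_W[c][j] / n
    return W, b


def predict(W, b, x) -> int:
    """Return the index of the class with the highest logit."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)]
    return max(range(len(logits)), key=logits.__getitem__)
```

A linear model like this is often enough for a handful of well-separated colors. The Keras network in the notebook adds hidden layers, and it can then be converted to TensorFlow Lite for the board.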

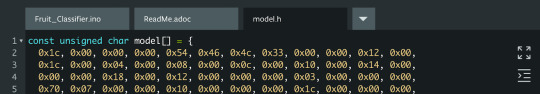

With that done you will have downloaded model.h to run on your Arduino board to classify objects!
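model.h is the trained .tflite flatbuffer embedded as a C byte array, so the sketch can compile the model straight into the firmware. The notebook generates this file for you. The Python sketch below only illustrates the idea, producing output similar in spirit to `xxd -i`. The names `model` and `model_len` are assumptions; match whatever names the Arduino sketch actually expects.

```python
def tflite_to_c_header(model_bytes: bytes, var_name: str = "model", bytes_per_line: int = 12) -> str:
    """Render raw model bytes as a C array definition plus a length constant."""
    lines = [f"const unsigned char {var_name}[] = {{"]
    for start in range(0, len(model_bytes), bytes_per_line):
        chunk = model_bytes[start:start + bytes_per_line]
        # Each byte becomes a two-digit hex literal; a trailing comma is valid C.
        lines.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(model_bytes)};")
    return "\n".join(lines) + "\n"
```

To use it, something like `Path("model.h").write_text(tflite_to_c_header(Path("model.tflite").read_bytes()))` would do. The file names there are hypothetical, and `Path` comes from `pathlib`.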

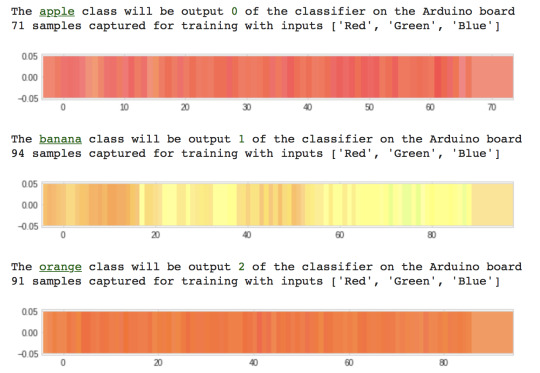

The Colab will guide you to drop your .csv files into the file window; the result is shown above

Normalized color samples captured by the Arduino board are graphed in Colab

Program TensorFlow Lite Micro model to the Arduino board

Finally, we will take the model we trained in the previous stage, then compile it and upload it to our Arduino board using Arduino Create.

Open Classify_Object_Color.ino

Your browser will open the Arduino Create web application:

Press the OPEN IN WEB EDITOR button

Import the model.h you downloaded from Colab using Import File to Sketch:

Import the model.h you downloaded from Colab

The model.h tab should now look like this

Compile and upload the application to your Arduino board

This will take a minute

When it’s done you’ll see this message in the Monitor:

Put your Arduino’s RGB sensor near the objects you trained it with

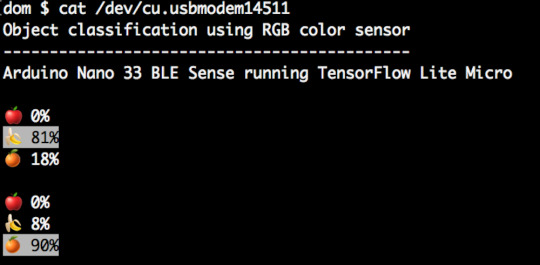

You will see the classification output in the Monitor:

Classifier output in the Arduino Create Monitor

You can also edit the object_color_classifier.ino sketch to output emojis instead (we’ve left the Unicode in the comments in the code!). You can view them in a Mac OS X or Linux terminal by closing the browser tab running Arduino Create, resetting your board, and typing cat /dev/cu.usbmodem[n] (on Linux the port is typically /dev/ttyACM[n]).

Output from Arduino serial to Linux terminal using ANSI highlighting and unicode emojis
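The emoji output described above amounts to picking the highest-scoring class and printing its symbol, optionally highlighted with ANSI escape codes. The Python sketch below shows that mapping on a list of scores. It assumes the classes are the example apple, banana, and orange. The emoji table and the `format_prediction` helper are hypothetical; the real sketch does this in C++ on the board.

```python
from typing import Dict, Sequence

# Hypothetical class names; use whatever names you trained with (the CSV file stems).
EMOJI: Dict[str, str] = {
    "apple": "\U0001F34E",   # RED APPLE
    "banana": "\U0001F34C",  # BANANA
    "orange": "\U0001F34A",  # TANGERINE
}

BOLD = "\033[1m"   # ANSI: start bold text
RESET = "\033[0m"  # ANSI: reset formatting


def format_prediction(scores: Sequence[float], class_names: Sequence[str]) -> str:
    """Pick the highest-scoring class and render it with its emoji in ANSI bold."""
    if len(scores) != len(class_names):
        raise ValueError("need one score per class")
    best = max(range(len(scores)), key=lambda i: scores[i])
    name = class_names[best]
    symbol = EMOJI.get(name, "?")  # fall back to "?" for classes without an emoji
    return f"{BOLD}{symbol} {name}{RESET} ({scores[best]:.2f})"
```

For example, `print(format_prediction([0.1, 0.8, 0.1], ["apple", "banana", "orange"]))` shows a bold banana in a terminal that understands ANSI codes.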

Learning more

The resources around TinyML are still emerging but there’s a great opportunity to get a head start and meet experts coming up 2-3 December 2019 in Mountain View, California at the Arm IoT Dev Summit. This includes workshops from Sandeep Mistry, Arduino technical lead for on-device ML and from Google’s Pete Warden and Daniel Situnayake who literally wrote the book on TinyML. You’ll be able to hang out with these experts and more at the TinyML community sessions there too. We hope to see you there!

Conclusion

We’ve seen a quick end-to-end demo of machine learning running on Arduino. The same framework can be used to sample different sensors and train more complex models. For our object-by-color classification, we could do more by sampling more examples in more conditions to help the model generalize. In future work, we may also explore how to run an on-device CNN. In the meantime, we hope this will be a fun and exciting project for you. Have fun!

Fruit identification using Arduino and TensorFlow was originally published on PlanetArduino


Text

Google Has Figured Out a Way to Detect When Strangers Are Sneaking a Peek at Your Phone

With all the data that’s constantly sent and received by our phones, there’s been an ever-increasing focus on combating viruses, malware, and other online attacks, to the point that we sometimes forget what’s going on in the real world. But good physical security is still one of the most important things when it comes to protecting your devices, and thanks to a couple members of Google’s research team, your phone might soon be able to tell when others are peeping at your screen from over your shoulder.

During an upcoming presentation at the Neural Information Processing Systems conference next week, Hee Jung Ryu and Florian Schroff are scheduled to discuss their electronic screen protector project, which uses the selfie camera on a Google Pixel and artificial intelligence to detect whether multiple people are looking at the screen.

According to Ryu and Schroff, the program can recognize a second face in just two milliseconds and works across a range of angles, poses, and lighting conditions. While more details will be announced at the presentation, a demo of the software in action can already be seen in the unlisted but public video above.

To achieve such fast recognition, the team’s program appears to use TensorFlow Lite, Google’s latest venture into AI and machine learning. TensorFlow Lite uses the processor in your phone to perform complex visual analysis rather than needing to ping beefier servers in the cloud.

So the next time you are looking at some sensitive info, whether it’s a document from work, a text from a friend, or even the PIN for your phone, this is exactly the kind of program that could help prevent prying eyes from peering at your info. Now the question is: How long would it take for this functionality to make its way into the greater Android ecosystem—and will it ever?

[Quartz]


Text

Google TensorFlow Team: How to Use AI to Train Dogs?

Dog training usually requires a human trainer. But without a trainer, how can a dog be trained? A company called Companion Labs invented the first dog training device driven by artificial intelligence (AI). Computer vision is key to how this machine works: it detects the dog's behavior in real time and adjusts how it delivers rewards to reinforce the desired behavior. For example, it uses computer vision to detect when a dog does what is asked and then dispenses a reward.

This trainer, called CompanionPro, looks like a Soviet-era space heater with an image sensor, Google Edge TPU AI processor, wireless connection, lights, speakers, and a proprietary "dog food transmitter."

In this article, the TensorFlow team will share how to develop a system that understands a dog's behavior and influences it through training, as well as our process of miniaturizing the computer to fit a B2B product.

Improve the lives of our pets through technology

Today's technology has the potential to improve the lives of our pets. As a personal example, I am the pet parent of Adele, a 4-year-old rescue cat. Like many other pet parents, I work full time and have to leave my cat at home alone for up to 8 hours a day. When I am at work or away from home, I worry about her, because I never want her to feel lonely or bored, go without water or food, or get sick while I'm not there.

Fortunately, when I am away, the available technology lets me check on her and improve her welfare. I have an automatic pet feeder that dispenses 2 meals to Adele every day, so she always eats the right amount on time. A self-cleaning litter box stays clean throughout the day. I also use a Whistle GPS tracker to help ensure she never gets lost.

Although these devices help me look after my pet's health, I have always been curious about how technology could do more for animal welfare. What if we could use technology to communicate with animals? How can we keep them happy when we are not around? Every day, millions of dogs stay home alone for several hours, and millions more live in shelters where they rarely receive individual attention. Could we use this time to improve the quality of life of both people and pets? These are the problems our team at Companion is interested in solving.

For the past two years, our team of small animal behavior experts and engineers has been working on a new device that can interact with dogs and train them automatically when people can’t be with them. Our first product, CompanionPro, interacts with dogs through lights, sounds, and a treat dispenser to teach basic obedience behaviors such as sit, down, stay, and recall.

Our customers are dog shelters and businesses that want to improve their dog training services. There is evidence that dogs that respond to basic obedience cues are more likely to be adopted into permanent homes. However, shelters are often under-resourced and unable to provide training for every dog. Dog daycares tell us it is difficult to find enough trainers to meet the demand for training services at their facilities. Organizations that specialize in dog training tell us they want their trainers to focus on teaching dogs advanced tasks. Instead, the trainers spend a lot of time repeating the same exercises to make sure each dog responds to basic obedience cues. Fortunately, machines are good at performing repetitive tasks with perfect consistency and unlimited patience, which put us on the path to creating an autonomous training device.

Building this product posed many challenges. We first had to run experiments to prove that we could train dogs without a human trainer. We then had to build a model that understands the dog's behavior and decides how to respond to it. Finally, we had to miniaturize our technology so that it was suitable for productization and could be sold to businesses.

At Companion, we have developed a device that uses Google's TensorFlow Lite (and the Edge TPU) to automatically train dogs to respond to multiple cues, including sitting, lying down, stopping, and more. We believe that, by reducing costs, this will give every dog access to more enrichment and training. That should have a very positive impact on adoption and retention rates at under-resourced shelters throughout the United States. The Google Edge TPU allows us to understand the dog's behavior as it interacts with the device. This understanding lets the CompanionPro device apply precise positive reinforcement, giving food rewards when the dog exhibits the desired behavior. Ultimately, it can help us understand and improve the lives of pets.
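The reward step described above (give a treat when the vision model sees the desired behavior) can be sketched as a small state machine. This is a hypothetical illustration, not Companion's actual logic. It assumes a per-frame classifier that reports a behavior label and a confidence score. It rewards only after the behavior holds for several consecutive frames, then pauses for a cooldown so one sit is not rewarded repeatedly. All names and thresholds are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class RewardPolicy:
    """Hypothetical rule: reward a stable detection, then pause before rewarding again."""
    target: str                   # behavior being trained, e.g. "sit"
    min_confidence: float = 0.8   # per-frame score that counts as a detection
    frames_required: int = 5      # consecutive detections needed before a reward
    cooldown_frames: int = 30     # frames to ignore after each reward
    _streak: int = field(default=0, init=False, repr=False)
    _cooldown: int = field(default=0, init=False, repr=False)

    def update(self, label: str, confidence: float) -> bool:
        """Feed one frame's top prediction; return True when a treat should be dispensed."""
        if self._cooldown > 0:
            self._cooldown -= 1
            return False
        if label == self.target and confidence >= self.min_confidence:
            self._streak += 1
        else:
            self._streak = 0  # any miss restarts the count
        if self._streak >= self.frames_required:
            self._streak = 0
            self._cooldown = self.cooldown_frames
            return True
        return False
```

Requiring a streak of confident frames trades a little latency for fewer false rewards. This matters because positive reinforcement only works if the treat reliably follows the right behavior.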

More real-world examples of Google Edge TPU and TensorFlow

The Model Play and Tiorb AIX products launched by Gravitylink also support the Edge TPU. AIX is AI hardware that integrates two core functions: computer vision and intelligent voice interaction. Model Play is an AI model resource platform for developers worldwide, with a variety of built-in AI models. Combined with Tiorb AIX, it builds on Google's open-source neural network architectures and algorithms to provide transfer learning without writing code. You can train an AI model simply by selecting pictures and defining the model and category names.


Video


We use an easy method to retrain a pre-trained model for object classification. Transfer learning is an easy and quick method to retrain a model for good classification of objects. Git repository: https://ift.tt/2ZJp5UG Please follow me on twitter https://twitter.com/kalaspuffar Or visit my blog at: https://ift.tt/3bF6D4l Outro music: Sanaas Scylla #ObjectClassification #MachineLearning #TensorflowLite


Video


I tried adding Raspberry Pi camera support to @PINTO03091's TensorflowLite-bin script. I think it helped speed things up by about 0.01 seconds, lol https://www.instagram.com/p/B--6eYkJyLG/?igshid=vbqln2ph45cc


Text

.@Google Launches #TensorFlowLite 1.0 For @Mobile and Embedded Devices https://t.co/V5xpFm3m92 https://t.co/sHwieEpYkv

— Macronimous.com (@macronimous) October 6, 2019

from Twitter https://twitter.com/macronimous October 06, 2019 at 07:45PM via IFTTT


Text

Tweeted

Hi friends 👋I'm curious how you're using MLKit in your apps. Doing anything cool to share? #TalkResearch #AndroidDev #MLKit #AutoMLVisionKit #TensorFlowLite

— Victoria Gonda (@TTGonda) October 3, 2019


Link

via Adafruit Industries – Makers, hackers, artists, designers and engineers! https://ift.tt/2Kk7tZk


Text

Machine-Learning Inference Standards

*People want to put machine-learning into devices that are on “the Edge” instead of in “the Cloud,” but that means that IoT standards and consortia battles are starting all over again, except they’re about machine-learning gizmos instead of IoT gizmos.

https://gigaom.com/ai-at-the-edge-a-gigaom-research-byte/

(...)

Compatibility and Middleware

In the introduction to this report, I mentioned two oft-overlooked factors driving AI and ML:

• The decrease in cost and increase in power of chips capable of performing AI inferencing “at the edge.”

• The development of middleware allowing a broader range of applications to run seamlessly on a wider variety of chips.

The future will be a world with countless chipsets, produced by dozens or hundreds of manufacturers. Each may be used in different devices and in different combinations. (((Aieee! Imagine the size of that attack surface.)))

There will be dozens, or hundreds, of development environments for creating applications to run on those chips, in those devices.

In fact, it is not so much the world of tomorrow as the world of today. It is already like the wild west in terms of edge ML. First, there are a number of neural net development frameworks in the cloud, including Caffe, Caffe2, MXNet, ONNX, PyTorch, and TensorFlowLite.

And there are multiple intermediate formats, such as FlatBuffers, NNEF, ONNX, and Protobuf.

In addition, there are a variety of inference engine interfaces such as Android NNAPI, MACE, and PaddlePaddle.

These examples only touch the tip of the AI foundation iceberg. (((Nodding resignedly))) And the situation will become vastly more complex. (((Of course.))) ML applications are so specialized that a single, uber toolkit that is all things to all people will almost certainly not emerge. So, how can we prevent this from descending into a chaos of technical incompatibility and inoperability?

The answer is in standards....


Photo

Congrats to @Qualcomm on winning track one of the Low-Power Image Recognition Challenge (LPIRC), with #TensorFlowLite! Read more here ↓ https://t.co/l4bDogc1y8 (via Twitter http://twitter.com/TensorFlow/status/1043202452374339587)

Text

Google's new machine learning framework is going to put more AI on your phone

At the moment, artificial intelligence lives in the cloud, but Google - and other big tech companies - want it to work directly on your devices, too. At Google I/O today, the search giant announced a new initiative to help its AI make this leap down to earth: a mobile-optimized version of its machine learning framework named TensorFlow Lite.


Text

RT @AndroidDev: The developer preview of #TensorFlowLite -- #TensorFlow's lightweight solution for mobile and embedded devices -- is now available. Head on over to the blogpost to find out more, or straight to the documentation at https://t.co/nRImGlNFpu https://t.co/AFuBNgLC2R

— Android Developers (@AndroidDev) November 14, 2017

from Twitter https://twitter.com/mobileo2o November 19, 2017 at 11:08AM via IFTTT
