#virtual machine failover


NAKIVO Backup and Replication v10.10 Beta: Real-time Replication for VMware vSphere


NAKIVO has just released NAKIVO Backup and Replication v10.10 Beta with a notable new feature for protecting business-critical data: real-time replication in VMware vSphere environments.

Table of contents: What is Virtual Machine Replication? | Features of VM replication | What is NAKIVO Backup and Replication? | Core Offerings of NAKIVO Backup &…


#business continuity#disaster recovery#Disaster Recovery Automation#Instant VM Recovery and P2V#NAKIVO Backup & Replication Overview v10.10 Beta#ransomware protection#real-time replication#User-friendly Data Backup Administration#virtual machine failover#VMware vSphere VM Monitoring


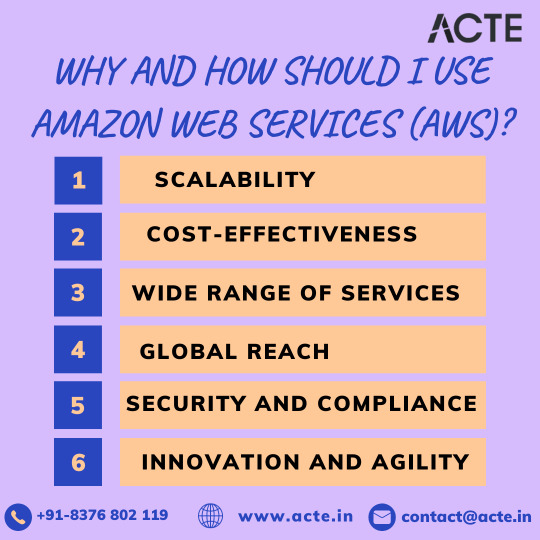

Navigating the Cloud: Unleashing the Potential of Amazon Web Services (AWS)

In the dynamic realm of technological progress, Amazon Web Services (AWS) stands as a beacon of innovation, offering unparalleled advantages for enterprises, startups, and individual developers. This article will delve into the compelling reasons behind the adoption of AWS and provide a strategic roadmap for harnessing its transformative capabilities.

Unveiling the Strengths of AWS:

1. Dynamic Scalability: AWS distinguishes itself with its dynamic scalability, empowering users to effortlessly adjust infrastructure based on demand. This adaptability ensures optimal performance without the burden of significant initial investments, making it an ideal solution for businesses with fluctuating workloads.

2. Cost-Efficient Flexibility: Operating on a pay-as-you-go model, AWS delivers cost-efficiency by eliminating the need for large upfront capital expenditures. This financial flexibility is a game-changer for startups and businesses navigating the challenges of variable workloads.

3. Comprehensive Service Portfolio: AWS offers a comprehensive suite of cloud services, spanning computing power, storage, databases, machine learning, and analytics. This expansive portfolio provides users with a versatile and integrated platform to address a myriad of application requirements.

4. Global Accessibility: With a distributed network of data centers, AWS ensures low-latency access on a global scale. This not only enhances user experience but also fortifies application reliability, positioning AWS as the preferred choice for businesses with an international footprint.

5. Security and Compliance Commitment: Security is at the forefront of AWS's priorities, offering robust features for identity and access management, encryption, and compliance with industry standards. This commitment instills confidence in users regarding the safeguarding of their critical data and applications.

6. Catalyst for Innovation and Agility: AWS empowers developers by providing services that allow a concentrated focus on application development rather than infrastructure management. This agility becomes a catalyst for innovation, enabling businesses to respond swiftly to evolving market dynamics.

7. Reliability and High Availability Assurance: The redundancy of data centers, automated backups, and failover capabilities contribute to the high reliability and availability of AWS services. This ensures uninterrupted access to applications even in the face of unforeseen challenges.

8. Ecosystem Synergy and Community Support: An extensive ecosystem with a diverse marketplace and an active community enhances the AWS experience. Third-party integrations, tools, and collaborative forums create a rich environment for users to explore and leverage.

Charting the Course with AWS:

1. Establish an AWS Account: Embark on the AWS journey by creating an account on the AWS website. This foundational step serves as the gateway to accessing and managing the expansive suite of AWS services.

2. Strategic Region Selection: Choose AWS region(s) strategically, factoring in considerations like latency, compliance requirements, and the geographical location of the target audience. This decision profoundly impacts the performance and accessibility of deployed resources.

3. Tailored Service Selection: Customize AWS services to align precisely with the unique requirements of your applications. Common choices include Amazon EC2 for computing, Amazon S3 for storage, and Amazon RDS for databases.

4. Fortify Security Measures: Implement robust security measures by configuring identity and access management (IAM), establishing firewalls, encrypting data, and leveraging additional security features. This comprehensive approach ensures the protection of critical resources.

5. Seamless Application Deployment: Leverage AWS services to deploy applications seamlessly. Tasks include setting up virtual servers (EC2 instances), configuring databases, implementing load balancers, and establishing connections with various AWS services.

6. Continuous Optimization and Monitoring: Maintain a continuous optimization strategy for cost and performance. AWS monitoring tools, such as CloudWatch, provide insights into the health and performance of resources, facilitating efficient resource management.

7. Dynamic Scaling in Action: Harness the power of AWS scalability by adjusting resources based on demand. This can be achieved manually or through the automated capabilities of AWS Auto Scaling, ensuring applications can handle varying workloads effortlessly.

8. Exploration of Advanced Services: As organizational needs evolve, delve into advanced AWS services tailored to specific functionalities. AWS Lambda for serverless computing, Amazon SageMaker for machine learning, and Amazon Redshift for data analytics offer specialized solutions to enhance application capabilities.
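To make step 7 (dynamic scaling) more concrete, here is a minimal Python sketch of a proportional scaling rule. It is an illustration only, not the algorithm AWS Auto Scaling uses internally; the function name `desired_capacity`, its parameters, and the default instance limits are all hypothetical.

```python
import math


def desired_capacity(current_instances: int,
                     observed_metric: float,
                     target_metric: float,
                     min_instances: int = 1,
                     max_instances: int = 10) -> int:
    """Return an instance count that moves the per-instance metric toward the target.

    Hypothetical helper: scales the fleet in proportion to how far the
    observed metric (for example average CPU %) is from the target.
    """
    if current_instances < 1:
        raise ValueError("current_instances must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_instances * observed_metric / target_metric)
    # Clamp to the configured fleet limits.
    return max(min_instances, min(max_instances, raw))


if __name__ == "__main__":
    # 4 instances at 90% average CPU with a 60% target suggests 6 instances.
    print(desired_capacity(4, 90.0, 60.0))
```

The clamp reflects the common practice of bounding a fleet with minimum and maximum sizes so that a noisy metric cannot scale the application to zero or run up unbounded cost.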

Closing Thoughts: Empowering Success in the Cloud

In conclusion, Amazon Web Services transcends the definition of a mere cloud computing platform; it represents a transformative force. Whether you are navigating the startup landscape, steering an enterprise, or charting an individual developer's course, AWS provides a flexible and potent solution.

Success with AWS lies in a profound understanding of its advantages, strategic deployment of services, and a commitment to continuous optimization. The journey into the cloud with AWS is not just a technological transition; it is a roadmap to innovation, agility, and limitless possibilities. By unlocking the full potential of AWS, businesses and developers can confidently navigate the intricacies of the digital landscape and achieve unprecedented success.


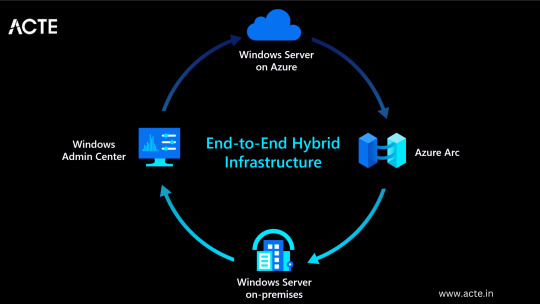

A Complete Guide to Mastering Microsoft Azure for Tech Enthusiasts

With the rapid advancement of technology, businesses around the world are shifting towards cloud computing to enhance their operations and stay ahead of the competition. Microsoft Azure, a powerful cloud computing platform, offers a wide range of services and solutions for various industries. This comprehensive guide aims to provide tech enthusiasts with an in-depth understanding of Microsoft Azure, its features, and how to leverage its capabilities to drive innovation and success.

Understanding Microsoft Azure

Azure is Microsoft's cloud computing platform and set of services. It provides reliable and scalable solutions for businesses to build, deploy, and manage applications and services through Microsoft-managed data centers. Azure offers a vast array of services, including virtual machines, storage, databases, networking, and more, enabling businesses to optimize their IT infrastructure and accelerate their digital transformation.

Cloud Computing and its Significance

Cloud computing has revolutionized the IT industry by providing on-demand access to a shared pool of computing resources over the internet. It eliminates the need for businesses to maintain physical hardware and infrastructure, reducing costs and improving scalability. Microsoft Azure embraces cloud computing principles to enable businesses to focus on innovation rather than infrastructure management.

Key Features and Benefits of Microsoft Azure

Scalability: Azure provides the flexibility to scale resources up or down based on workload demands, ensuring optimal performance and cost efficiency.

Vertical Scaling: Increase or decrease the size of resources (e.g., virtual machines) within Azure.

Horizontal Scaling: Expand or reduce the number of instances across Azure services to meet changing workload requirements.

Reliability and Availability: Microsoft Azure ensures high availability through its globally distributed data centers, redundant infrastructure, and automatic failover capabilities.

Service Level Agreements (SLAs): Guarantees high availability, with SLAs covering different services.

Availability Zones: Distributes resources across multiple data centers within a region to ensure fault tolerance.

Security and Compliance: Azure incorporates robust security measures, including encryption, identity and access management, threat detection, and regulatory compliance adherence.

Azure Security Center (now Microsoft Defender for Cloud): Provides centralized security monitoring, threat detection, and compliance management.

Compliance Certifications: Azure complies with various industry-specific security standards and regulations.

Hybrid Capability: Azure seamlessly integrates with on-premises infrastructure, allowing businesses to extend their existing investments and create hybrid cloud environments.

Azure Stack: Enables organizations to build and run Azure services on their premises.

Virtual Network Connectivity: Establish secure connections between on-premises infrastructure and Azure services.

Cost Optimization: Azure provides cost-effective solutions, offering pricing models based on consumption, reserved instances, and cost management tools.

Azure Cost Management: Helps businesses track and optimize their cloud spending, providing insights and recommendations.

Azure Reserved Instances: Allows for significant cost savings by committing to long-term usage of specific Azure services.

Extensive Service Catalog: Azure offers a wide range of services and tools, including app services, AI and machine learning, Internet of Things (IoT), analytics, and more, empowering businesses to innovate and transform digitally.

Learning Path for Microsoft Azure

To master Microsoft Azure, tech enthusiasts can follow a structured learning path that covers the fundamental concepts, hands-on experience, and specialized skills required to work with Azure effectively. I advise looking at the ACTE Institute, which offers a comprehensive Microsoft Azure Course.

Foundational Knowledge

Familiarize yourself with cloud computing concepts, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

Understand the core components of Azure, such as Azure Resource Manager, Azure Virtual Machines, Azure Storage, and Azure Networking.

Explore Azure architecture and the various deployment models available.

Hands-on Experience

Create a free Azure account to access the Azure portal and start experimenting with the platform.

Practice creating and managing virtual machines, storage accounts, and networking resources within the Azure portal.

Deploy sample applications and services using Azure App Service, Azure Functions, and Azure container services such as Azure Container Instances.

Certification and Specializations

Pursue Azure certifications to validate your expertise in Azure technologies. Microsoft offers role-based certifications, including Azure Administrator, Azure Developer, and Azure Solutions Architect.

Gain specialization in specific Azure services or domains, such as Azure AI Engineer, Azure Data Engineer, or Azure Security Engineer. These specializations demonstrate a deeper understanding of specific technologies and scenarios.

Best Practices for Azure Deployment and Management

Deploying and managing resources effectively in Microsoft Azure requires adherence to best practices to ensure optimal performance, security, and cost efficiency. Consider the following guidelines:

Resource Group and Azure Subscription Organization

Organize resources within logical resource groups to manage and govern them efficiently.

Leverage Azure Management Groups to establish hierarchical structures for managing multiple subscriptions.

Security and Compliance Considerations

Implement robust identity and access management mechanisms, such as Azure Active Directory (now Microsoft Entra ID).

Enable encryption at rest and in transit to protect data stored in Azure services.

Regularly monitor and audit Azure resources for security vulnerabilities.

Ensure compliance with industry-specific standards, such as ISO 27001, HIPAA, or GDPR.

Scalability and Performance Optimization

Design applications to take advantage of Azure’s scalability features, such as autoscaling and load balancing.

Leverage Azure CDN (Content Delivery Network) for efficient content delivery and improved performance worldwide.

Optimize resource configurations based on workload patterns and requirements.

Monitoring and Alerting

Utilize Azure Monitor and Azure Log Analytics to gain insights into the performance and health of Azure resources.

Configure alert rules to notify you about critical events or performance thresholds.
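As a rough illustration of what a threshold-based alert rule evaluates, the following Python sketch fires when the average of the most recent samples exceeds a limit. It does not call Azure Monitor; the function `should_alert`, the window size, and the sample values are assumptions made for this example.

```python
from statistics import mean
from typing import Sequence


def should_alert(samples: Sequence[float], threshold: float, window: int = 5) -> bool:
    """Return True when the mean of the last `window` samples exceeds `threshold`.

    Hypothetical helper: averaging over a window avoids alerting on a
    single short spike.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if len(samples) < window:
        # Not enough data yet to evaluate the rule.
        return False
    return mean(samples[-window:]) > threshold


if __name__ == "__main__":
    cpu_percent = [50, 60, 95, 96, 97, 98, 99]  # illustrative values only
    print(should_alert(cpu_percent, threshold=90))
```

Evaluating over a window rather than a single data point is a common way to reduce noisy notifications; the right window length depends on the workload.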

Backup and Disaster Recovery

Implement appropriate backup strategies and disaster recovery plans for essential data and applications.

Leverage Azure Site Recovery to replicate and recover workloads in case of outages.

Mastering Microsoft Azure empowers tech enthusiasts to harness the full potential of cloud computing and revolutionize their organizations. By understanding the core concepts, leveraging hands-on practice, and adopting best practices for deployment and management, individuals become equipped to drive innovation, enhance security, and optimize costs in a rapidly evolving digital landscape. Microsoft Azure’s comprehensive service catalog ensures businesses have the tools they need to stay ahead and thrive in the digital era. So, embrace the power of Azure and embark on a journey toward success in the ever-expanding world of information technology.

#microsoft azure#cloud computing#cloud services#data storage#tech#information technology#information security


The Rise of Zero-Touch IT Infrastructure in Disaster Recovery Planning

In an era where operational downtime can result in significant financial losses and reputational damage, the importance of disaster recovery (DR) has moved to the forefront of business strategy. As organizations navigate increasingly complex IT ecosystems, one concept has begun to revolutionize how they prepare for and recover from disruptions: Zero-Touch IT Infrastructure.

This blog will dive deep into how Zero-Touch IT is reshaping disaster recovery planning, its benefits, components, use cases, and why forward-thinking businesses are adopting this approach for resilient operations.

🔗 Explore end-to-end IT infrastructure services tailored for resiliency

Understanding Zero-Touch IT Infrastructure

Zero-Touch IT Infrastructure refers to systems that can be automatically configured, deployed, monitored, maintained, and, when necessary, recovered with minimal or no human intervention. This level of automation reduces manual dependencies and ensures that critical infrastructure can remain operational even during crises.

It leverages advanced tools like:

AI-driven monitoring

Infrastructure-as-Code (IaC)

Cloud-native applications

Self-healing systems

Remote configuration and orchestration tools

🔗 Build intelligent IT infrastructure with automation-ready architecture

The Need for Automation in Disaster Recovery

Disaster scenarios (natural calamities, cyberattacks, hardware failures, or human errors) can occur without warning. Traditional DR processes often involve manual backups, hardware swapping, and delayed decision-making, which can:

Increase recovery time, making the Recovery Time Objective (RTO) harder to meet

Cause extended service disruption

Complicate troubleshooting

Require trained on-site personnel

Zero-Touch disaster recovery, however, eliminates most of these friction points through proactive and pre-configured automation.

🔗 Strengthen your business continuity strategy with Leading Network Systems

How Zero-Touch Infrastructure Supports Disaster Recovery

Let’s break down the key ways this model elevates disaster resilience:

1. Automated Failover and Backup

Modern infrastructure can detect service anomalies and trigger automated failover to backup servers or cloud environments. This ensures minimal downtime without manual action.

For example, if your primary data center goes offline, zero-touch systems can instantly reroute traffic to a secondary location—without waiting for IT intervention.
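The rerouting decision described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the `Site` type, the `pick_active_site` function, and the health-check callback are hypothetical names, and a real deployment would typically implement this in DNS, a load balancer, or an orchestration tool rather than application code.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Site:
    name: str
    priority: int  # lower number = preferred site


def pick_active_site(sites: Sequence[Site],
                     is_healthy: Callable[[Site], bool]) -> Optional[Site]:
    """Return the highest-priority healthy site, or None if every site is down."""
    for site in sorted(sites, key=lambda s: s.priority):
        if is_healthy(site):
            return site
    return None


if __name__ == "__main__":
    sites = [Site("primary-dc", 1), Site("secondary-dc", 2)]
    down = {"primary-dc"}  # simulate the primary data center going offline
    active = pick_active_site(sites, lambda s: s.name not in down)
    print(active.name if active else "no healthy site")
```

Passing the health check in as a callback keeps the selection logic testable without network access, which also makes it easy to rehearse failover in a drill.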

🔗 Ensure seamless data center transitions with smart failover design

2. Self-Healing Networks

Using AI and machine learning, Zero-Touch environments can identify issues (e.g., memory leaks, disk failures) and automatically resolve them, such as restarting services or reallocating resources.

This reduces the reliance on human monitoring and improves Mean Time to Resolution (MTTR).
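A very small version of the self-healing idea is a watchdog that restarts a service after several consecutive failed health checks. The sketch below uses a plain counter rather than AI or machine learning, and the `Watchdog` class, its callbacks, and the failure limit of three are assumptions chosen for illustration.

```python
from typing import Callable


class Watchdog:
    """Restart a service after `max_failures` consecutive failed health checks."""

    def __init__(self,
                 check: Callable[[], bool],
                 restart: Callable[[], None],
                 max_failures: int = 3) -> None:
        if max_failures < 1:
            raise ValueError("max_failures must be at least 1")
        self.check = check
        self.restart = restart
        self.max_failures = max_failures
        self.failures = 0

    def tick(self) -> bool:
        """Run one health check. Return True if a restart was triggered."""
        if self.check():
            self.failures = 0  # a healthy result resets the streak
            return False
        self.failures += 1
        if self.failures >= self.max_failures:
            self.restart()
            self.failures = 0
            return True
        return False


if __name__ == "__main__":
    results = iter([False, False, False, True])  # simulated check results
    dog = Watchdog(check=lambda: next(results),
                   restart=lambda: print("restarting service"))
    for _ in range(4):
        dog.tick()
```

Requiring consecutive failures before acting avoids restarting a healthy service because of one transient error.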

🔗 Implement intelligent monitoring to future-proof your network

3. Remote Configuration and Provisioning

During a disaster, physical access to infrastructure might not be possible. With Zero-Touch deployment tools, IT teams can remotely:

Configure virtual machines

Provision backup services

Restore databases

Push patches and updates

All this can be done securely from anywhere in the world.

🔗 Leverage remote-ready infrastructure management solutions

4. Scalable Cloud Recovery

By integrating with cloud-based platforms, Zero-Touch DR can auto-scale infrastructure based on load, ensuring business continuity during surges triggered by failover events.

This avoids overprovisioning and keeps costs in check.

🔗 Seamlessly scale infrastructure with hybrid and cloud-ready systems

5. Continuous Testing and DR Simulation

One of the most powerful aspects is the ability to simulate disaster recovery scenarios regularly—without disrupting live systems.

This provides confidence that, in a real event, the failover and recovery will work as expected.

🔗 Test and validate your disaster recovery plans proactively

Industries Benefiting Most from Zero-Touch DR

While all businesses can benefit from faster recovery, the following industries are especially vulnerable to downtime:

🔹 Banking & Finance

Delays in transactions, data breaches, or outages can lead to millions in losses and regulatory penalties.

🔹 Healthcare

Life-saving decisions depend on access to electronic health records and diagnostics.

🔹 Telecommunications

Users expect 24/7 connectivity—any outage hurts trust and revenue.

🔹 Manufacturing & Logistics

Downtime can halt production lines and delay shipments across supply chains.

🔗 Deploy industry-specific disaster recovery solutions with Leading Network Systems

Key Components of a Zero-Touch DR System

Building a successful Zero-Touch environment requires a blend of technologies and strategy:

✅ Infrastructure as Code (IaC)

IaC allows you to define and manage infrastructure through code. This means entire environments can be spun up or restored by executing a script.

Popular tools include Terraform, Ansible, and AWS CloudFormation.
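The core idea behind these tools is comparing a declared, desired state with what actually exists and deriving the changes needed. The Python sketch below illustrates that comparison only; it is not how Terraform, Ansible, or CloudFormation are implemented, and the `plan` function and resource names are hypothetical.

```python
from typing import Any, Dict, List

Resources = Dict[str, Dict[str, Any]]


def plan(desired: Resources, actual: Resources) -> Dict[str, List[str]]:
    """Compute which resources to create, update, or delete to reach `desired`."""
    return {
        "create": sorted(name for name in desired if name not in actual),
        "update": sorted(name for name in desired
                         if name in actual and desired[name] != actual[name]),
        "delete": sorted(name for name in actual if name not in desired),
    }


if __name__ == "__main__":
    desired = {"web-vm": {"size": "small"}, "db-vm": {"size": "large"}}
    actual = {"web-vm": {"size": "medium"}, "old-vm": {"size": "small"}}
    print(plan(desired, actual))
```

Because the desired state lives in version-controlled code, the same plan can be recomputed against an empty environment after a disaster, which is what makes restoring an entire environment from a script possible.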

🔗 Modernize your setup with code-based infrastructure deployment

✅ Unified Management Portals

Central dashboards help monitor and control infrastructure health across multiple locations. This is critical during emergencies where quick decisions matter.

🔗 Get a unified view of infrastructure status with integrated platforms

✅ Cloud Disaster Recovery (CDR)

Instead of relying solely on physical backups, cloud platforms offer faster recovery with minimal hardware dependency. Snapshots can be scheduled, encrypted, and deployed on-demand.

🔗 Add redundancy with flexible cloud disaster recovery setups

✅ AI & Machine Learning Analytics

Predictive analytics and anomaly detection allow the system to act before failure occurs, improving uptime and resilience.

🔗 Utilize AI-powered infrastructure for proactive issue resolution

✅ Zero-Trust Security Integration

Security remains critical. Implementing Zero Trust frameworks ensures only authenticated systems and users can trigger DR actions.

🔗 Protect your critical infrastructure with Zero-Trust architecture

Benefits of Zero-Touch Disaster Recovery

Let’s summarize why more organizations are transitioning to this model:

| Benefit | Impact |
| --- | --- |
| Instant failover | Reduced RTO and improved business continuity |
| No manual dependencies | Consistent response in any crisis |
| Faster troubleshooting | Less downtime and lower MTTR |
| Scalable and cloud-ready | Efficient cost management |
| Regular testing | Increased DR reliability |
| Security integrated | No compromise during automated processes |

🔗 Upgrade your business continuity plan with Zero-Touch capabilities

Steps to Transition to Zero-Touch DR

Thinking of making the shift? Here’s how to get started:

1. Audit Your Current IT Environment

Understand what infrastructure, applications, and workflows need to be disaster-resilient. Identify weaknesses in current DR processes.

🔗 Request a full IT infrastructure audit today

2. Define SLAs and Recovery Objectives

Establish your Recovery Time Objective (RTO) and Recovery Point Objective (RPO). These metrics help determine the right automation tools and platforms.
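A simple way to reason about these two metrics is to compare them with what the current setup can deliver. The Python sketch below assumes that worst-case data loss is roughly one backup interval and that recovery time has been measured in a drill; the function names and the example figures are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is about one backup interval, so it must not exceed the RPO."""
    return backup_interval <= rpo


def meets_rto(measured_recovery: timedelta, rto: timedelta) -> bool:
    """The recovery time measured in a drill must not exceed the RTO."""
    return measured_recovery <= rto


if __name__ == "__main__":
    # Illustrative targets: lose at most 1 hour of data, recover within 30 minutes.
    rpo, rto = timedelta(hours=1), timedelta(minutes=30)
    print(meets_rpo(timedelta(hours=4), rpo))     # backups every 4 hours
    print(meets_rto(timedelta(minutes=20), rto))  # drill took 20 minutes
```

In this example, backing up every four hours would miss a one-hour RPO even though recovery itself is fast enough, which points toward more frequent backups or continuous replication rather than faster restore tooling.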

3. Implement Automation Frameworks

Adopt tools that support Infrastructure as Code, automated provisioning, monitoring, and response.

4. Integrate Cloud and Redundant Systems

Back up critical systems to cloud platforms and ensure they’re accessible across regions.

5. Test and Simulate Regularly

Schedule failover drills and automated scenario testing to ensure your Zero-Touch systems function under pressure.

🔗 Get expert support for DR simulations and testing

Challenges and Considerations

While the benefits are clear, there are also considerations:

Initial setup complexity

Budgeting for cloud and automation tools

Training IT teams on automation practices

Ensuring security during automated processes

That’s why working with an experienced infrastructure partner is essential.

🔗 Consult with Leading Network Systems to get Zero-Touch DR right

Conclusion

Disaster recovery has evolved. It’s no longer just about having backups—it’s about ensuring your entire IT infrastructure can bounce back with zero friction. Zero-Touch IT Infrastructure is setting the new standard by bringing automation, speed, and intelligence into every corner of disaster planning.

As business demands increase and downtime becomes less tolerable, now is the time to modernize your recovery strategy.

✅ Let Leading Network Systems help you build a Zero-Touch IT infrastructure for a future-ready, resilient enterprise


The Synology DiskStation DS1823xs+ is made for a variety of server roles, such as backup, file storage, email servers, and domain controllers. It is a flexible all-in-one solution for large-scale file storage, data sharing and backup, synchronization, surveillance, and multimedia streaming: powerful, high-capacity, versatile storage that fits in any environment. It is an ideal solution for individuals and small to medium-sized businesses seeking a reliable and scalable storage solution.

Form Factor: NAS Tower Server

Internal File System Format: EXT4, Btrfs (Btrfs File System for Advanced LUN iSCSI Service)

Ports: 1 x RJ-45 10GbE LAN; 2 x RJ-45 1GbE LAN (with Link Aggregation / Failover support); 1 x Out-of-Band Management LAN; 3 x USB 3.2; 2 x eSATA

Processor: Ryzen V1780B 4-Core 3.35GHz CPU, Up To 3.6GHz Turbo

Memory: 32GB DDR4 ECC SODIMM Memory

Storage: 160TB (8 x 20TB) SATA HDDs for High-Capacity Storage; 1TB (2 x 500GB) M.2 2280 NVMe SSD

Power: Single Power Supply

Operating System: Synology DSM

Software Features: Virtual Machine Manager, Security Advisor, Cache Acceleration, AES 256-bit Encryption, 2 Factor Authentication, Surveillance Station Suite, 4K Multimedia Server, Desktop Backup, Hyper Backup, Snapshot Replication, Synology Drive, and more. Powerful storage management, file syncing, surveillance, and backup software.

Packaging: The Synology NAS chassis comes in a sealed box. Hard drives and memory upgrades are included separately, not installed; installation is required.


High Availability Server Market Size, Share, Scope, Analysis, Forecast, Growth, and Market Dynamics Report 2032

The High Availability Server Market was valued at USD 13.67 billion in 2023 and is expected to reach USD 24.31 billion by 2032, growing at a CAGR of 6.61% over the forecast period 2024-2032.
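The growth rate can be checked against the two market values with the standard compound annual growth rate formula. The short Python sketch below assumes nine compounding years (from the 2023 base value to 2032); the function name `cagr` is introduced here for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # USD 13.67 billion in 2023 growing to USD 24.31 billion by 2032.
    rate = cagr(13.67, 24.31, 2032 - 2023)
    print(f"CAGR: {rate:.1%}")
```

Under that nine-year assumption the result is roughly 6.6%, which is consistent with the stated 6.61% figure.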

The High Availability Server Market is witnessing significant growth, driven by increasing demand for uninterrupted business operations, rising cybersecurity threats, and the growing need for data center resilience. Organizations across industries are investing in high availability (HA) servers to ensure seamless performance, prevent downtime, and enhance disaster recovery capabilities.

The High Availability Server Market continues to expand as enterprises shift toward cloud computing, virtualization, and data-driven decision-making. With the rise in remote work and digital transformation, businesses are prioritizing robust IT infrastructure to maintain 24/7 availability. The growing reliance on real-time applications, AI-powered analytics, and mission-critical workloads is further accelerating market adoption.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3935

Key Market Players:

IBM Corporation (IBM Power Systems, IBM z Systems)

Fujitsu (Fujitsu PRIMEQUEST, FUJITSU Server SPARC M12)

Cisco Systems (Cisco UCS C-Series, Cisco UCS B-Series)

Oracle Corporation (Oracle Exadata Database Machine, Oracle SPARC Servers)

HP Development Company L.P. (HP ProLiant Servers, HP Integrity Servers)

NEC Corporation (NEC Express5800, NEC UNIVERSE)

Unisys Global Technologies (Unisys ClearPath Forward, Unisys ES7000)

Dell Inc. (Dell PowerEdge Servers, Dell VRTX Servers)

Stratus Technologies (Stratus ftServer, Stratus everRun)

Centerserv (Centerserv High Availability Servers)

Huawei Technologies (Huawei FusionServer, Huawei KunLun Servers)

Microsoft Corporation (Microsoft Windows Server, Azure Stack HCI)

Lenovo Group (Lenovo ThinkSystem Servers, Lenovo ThinkAgile)

Supermicro (Supermicro SuperServer, Supermicro TwinPro)

Toshiba Corporation (Toshiba High Availability Servers, Toshiba Server Solutions)

Hitachi Vantara (Hitachi Compute Blade, Hitachi Virtual Storage Platform)

VCE (part of Dell Technologies) (Vblock Systems)

VMware (VMware vSphere, VMware vSAN)

Zebra Technologies (Zebra SmartEdge Servers, Zebra QL Servers)

Micron Technology (Micron High Availability Memory Solutions, Micron Storage Solutions)

Market Trends

Cloud-Based HA Servers: The shift toward cloud computing is driving demand for high availability server solutions that offer scalability, security, and cost efficiency.

Edge Computing Integration: Businesses are deploying HA servers at the edge to process data closer to users, reducing latency and enhancing operational efficiency.

AI and Automation in HA Servers: AI-driven predictive analytics and automated failover systems are improving server reliability and minimizing downtime.

Increased Focus on Cybersecurity: As cyber threats escalate, enterprises are implementing HA servers with advanced security features to safeguard critical data.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3935

Market Segmentation:

By Deployment Mode

Cloud-Based

On-Premises

By Organization Size

Large Enterprises

Small and Medium Enterprises

By Operating System

Windows

Linux

Others

By End-Use Industry

BFSI

IT & Telecommunication

Government

Healthcare

Manufacturing

Retail

Market Analysis

Rising Digital Transformation: Organizations across finance, healthcare, e-commerce, and telecom sectors are adopting HA servers to ensure uninterrupted digital services.

Growing Data Center Investments: Major IT firms and cloud service providers are expanding their high availability infrastructure to meet the rising demand for data processing and storage.

Regulatory Compliance and Risk Management: Industries dealing with sensitive data, such as banking and healthcare, are investing in HA solutions to meet compliance standards.

Small and Medium Enterprises (SMEs) Adoption: The increasing affordability of HA server solutions is driving adoption among SMEs seeking robust IT infrastructure.

Future Prospects

The High Availability Server Market is expected to keep growing at the forecast CAGR of 6.61% through 2032, fueled by advancements in AI, IoT, and hybrid cloud solutions. Businesses will continue to invest in HA infrastructure to ensure business continuity, enhance cybersecurity, and optimize IT efficiency. The rise of 5G networks and real-time data processing will further drive demand for highly reliable server environments.

Access Complete Report: https://www.snsinsider.com/reports/high-availability-server-market-3935

Conclusion

The High Availability Server Market is evolving rapidly, with enterprises prioritizing reliability, security, and efficiency in their IT operations. As organizations embrace cloud computing, edge technology, and AI-driven automation, HA servers will remain a critical component in ensuring seamless digital experiences. The future of the market looks promising, with continued innovation and widespread adoption across industries.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#High Availability Server Market#High Availability Server Market Scope#High Availability Server Market Share#High Availability Server Market Growth


Exploring Oracle Cloud Infrastructure: Driving Business Innovation and Efficiency

In the rapidly evolving digital landscape, businesses are increasingly leveraging cloud technologies to streamline operations and drive innovation. Among the leading cloud computing providers, Oracle Cloud Infrastructure (OCI) stands out by offering a robust suite of services designed to meet the unique demands of enterprises.

What Is Oracle Cloud Infrastructure?

Oracle Cloud Infrastructure is a comprehensive set of cloud services that deliver computing power, storage, and networking capabilities. It supports both traditional and modern workloads, offering flexible and secure solutions for businesses. With its high-performance infrastructure, OCI enables organizations to run mission-critical applications efficiently.

Key Features of Oracle Cloud Infrastructure

Oracle Cloud Infrastructure is built for enterprise-grade applications, offering superior performance, reliability, and scalability. Its multi-cloud capabilities allow businesses to integrate with other cloud platforms while maintaining centralized management. OCI also provides advanced data management solutions with Oracle Autonomous Database, delivering self-driving, self-securing, and self-repairing database capabilities. Enhanced security is a key feature, with built-in encryption, identity management, and compliance controls. Additionally, Oracle’s AI and machine learning tools empower businesses to derive actionable insights from their data.

Popular Oracle Cloud Services

Oracle Compute offers scalable virtual machines and bare metal servers, providing flexibility for various workloads. Oracle Cloud Storage delivers secure, reliable storage options for structured and unstructured data. Oracle Kubernetes Engine facilitates the deployment and management of containerized applications. Oracle Autonomous Database ensures high performance, security, and automatic management without human intervention. Oracle Analytics provides advanced data visualization and predictive insights to support data-driven decision-making.

Benefits of Using Oracle Cloud Infrastructure

Businesses leveraging Oracle Cloud Infrastructure experience numerous advantages. OCI’s high-performance architecture ensures low-latency and efficient operations for demanding applications. With its cost-effective pricing model, companies can optimize expenses by paying only for the resources they use. OCI’s built-in disaster recovery and failover solutions provide enhanced reliability and business continuity. Additionally, Oracle’s focus on security and compliance offers businesses peace of mind, ensuring their data remains protected.

Conclusion

Oracle Cloud Infrastructure offers a powerful and flexible environment for organizations seeking to accelerate their digital transformation. From autonomous database management to scalable compute resources, OCI provides the tools and infrastructure needed to drive innovation and achieve business goals.

Ready to explore how Oracle Cloud Infrastructure can transform your business operations? Partner with a leading cloud computing provider to unlock the full potential of the cloud today.

Boosting SAN Storage: Best Practices for Faster Storage Performance

Modern businesses rely on rapid, reliable data access, and SAN (Storage Area Network) storage systems play a critical role in meeting these demands. However, ensuring optimal SAN performance requires diligent planning, proactive management, and the application of best practices.

This guide is for IT professionals, system administrators, and data center managers who seek to improve their SAN storage performance. It covers actionable strategies, from hardware optimization to data traffic management, aimed at unleashing the full capabilities of your SAN infrastructure.

By the end of this article, you'll be equipped with the knowledge to maximize the efficiency of your SAN, reduce latency, and future-proof your enterprise storage systems.

Why SAN Storage Performance Matters

Your SAN system is the backbone of your enterprise data infrastructure, supporting mission-critical applications, virtual machines, databases, and more. Poor SAN performance can lead to bottlenecks, slower application response times, and dissatisfied users.

Key reasons to focus on SAN performance optimization include:

Minimizing Downtime: Faster storage access ensures smoother operations for business-critical workloads.

Enhancing User Experience: Rapid data retrieval leads to better application responsiveness, improving productivity.

Scalability and Future-Proofing: A highly optimized SAN can handle growing data demands without requiring constant upgrades.

Now, let's explore some proven best practices to boost SAN storage performance.

Best Practices for Faster SAN Storage Performance

1. Optimize Data Traffic Flow

Efficient data traffic management is the foundation of a high-performing SAN. Poor traffic allocation can lead to congestion and latency. Here's how to improve traffic flow within your network:

Implement Zoning: Zoning organizes data paths by segregating SAN devices (e.g., hosts and storage arrays). Use single-initiator zoning or port-based zoning depending on your infrastructure. This reduces traffic conflicts and enhances security.

Use Multipath I/O (MPIO): Configure redundant data paths between hosts and storage to optimize traffic and provide failover in case of a path failure.

Monitor Switch Bottlenecks: Analyze traffic patterns and upgrade switch ports or bandwidth where necessary to eliminate bottlenecks.
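To illustrate how multipath I/O spreads traffic and fails over, here is a minimal Python sketch of round-robin path selection. The `Path` and `RoundRobinMultipath` names and the path labels are hypothetical, chosen only for illustration; in practice MPIO is handled by the operating system's multipath driver rather than by application code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Path:
    name: str             # hypothetical label, e.g. "hba0->ctrlA"
    healthy: bool = True  # set to False to simulate a path failure


@dataclass
class RoundRobinMultipath:
    paths: List[Path]
    _next: int = 0        # index of the next path to try

    def pick(self) -> Path:
        """Return the next healthy path in rotation, skipping failed ones."""
        for _ in range(len(self.paths)):
            path = self.paths[self._next % len(self.paths)]
            self._next += 1
            if path.healthy:
                return path
        raise RuntimeError("no healthy path to storage")


mp = RoundRobinMultipath([Path("hba0->ctrlA"), Path("hba1->ctrlB")])
print([mp.pick().name for _ in range(4)])  # alternates between both paths
mp.paths[0].healthy = False                # simulate a failed path
print([mp.pick().name for _ in range(3)])  # all I/O now uses hba1->ctrlB
```

With both paths healthy, requests alternate between them; once one path is marked failed, every request goes to the surviving path without the caller changing anything.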

2. Leverage High-Performance Hardware

The hardware supporting your SAN greatly influences its performance. Investing in modern storage and networking components can yield significant improvements.

Choose Low-Latency SSDs: Replace spinning disks with SSDs or NVMe drives for lower latency and higher throughput.

Use High-Speed Networking: Implement Fibre Channel networks at 16Gbps or higher. Alternatively, adopt Ethernet-based iSCSI solutions with 25GbE or 100GbE speeds for cost-effective performance.

Optimize for RAID Levels: Select RAID levels based on your workload. For instance, RAID 10 provides both high performance and redundancy, making it ideal for high I/O workloads.
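When comparing RAID levels, it helps to see what each one costs in usable capacity. The sketch below uses the standard capacity formulas for RAID 0, 5, 6, and 10; the function name and the example disk counts are illustrative assumptions, and real arrays reserve additional space for spares and metadata.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity for identical disks under common RAID levels."""
    if level == "RAID0":        # striping only, no redundancy
        minimum, data_disks = 2, disks
    elif level == "RAID5":      # one disk's worth of parity
        minimum, data_disks = 3, disks - 1
    elif level == "RAID6":      # two disks' worth of parity
        minimum, data_disks = 4, disks - 2
    elif level == "RAID10":     # striped mirrors, half the disks hold copies
        if disks % 2:
            raise ValueError("RAID10 needs an even number of disks")
        minimum, data_disks = 4, disks // 2
    else:
        raise ValueError(f"unsupported level: {level}")
    if disks < minimum:
        raise ValueError(f"{level} needs at least {minimum} disks")
    return data_disks * disk_tb


# Example: eight 4 TB disks
for level in ("RAID0", "RAID5", "RAID6", "RAID10"):
    print(level, usable_capacity_tb(level, 8, 4.0))
# RAID0 32.0, RAID5 28.0, RAID6 24.0, RAID10 16.0
```

The RAID 10 figure shows the trade-off mentioned above: it gives up half the raw capacity in exchange for mirrored redundancy and strong write performance.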

3. Implement Storage Tiering

Storage tiering helps balance performance and cost by allocating data to the most appropriate storage medium.

Frequently accessed, high-priority data should reside on SSDs or NVMe storage tiers.

Less-used or archival data can be stored on slower, more economical disk tiers.

Modern SAN solutions often include automated tiering capabilities to dynamically optimize data placement.
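The tiering idea above can be sketched as a simple placement rule. This is an illustration only: the threshold of 1,000 reads per day, the tier names, and the volume names are assumptions, and automated tiering in real arrays uses vendor-specific heuristics.

```python
def assign_tier(reads_per_day: int, hot_threshold: int = 1000) -> str:
    """Place frequently read data on flash and everything else on disk."""
    return "flash" if reads_per_day >= hot_threshold else "disk"


def plan_placement(volumes: dict, hot_threshold: int = 1000) -> dict:
    """Map each volume name to a tier based on its daily read count."""
    return {name: assign_tier(reads, hot_threshold)
            for name, reads in volumes.items()}


# Hypothetical volumes and their daily read counts
volumes = {"orders-db": 50_000, "vm-images": 1_200, "archive-2019": 3}
print(plan_placement(volumes))
# {'orders-db': 'flash', 'vm-images': 'flash', 'archive-2019': 'disk'}
```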

4. Fine-Tune Workload Balancing

Balancing workloads across your storage resources prevents overloading any single element in the SAN and ensures even utilization.

Distribute I/O Loads: Leverage intelligent SAN features to spread workloads evenly across storage controllers and disks.

Monitor Queues: Persistently high queue depths and long wait times indicate that a resource is overloaded. Adjust workloads to prevent bottlenecks.

5. Adopt Deduplication and Compression

SAN storage efficiency and speed increase significantly when data sizes are reduced. Deduplication eliminates redundant data, while compression decreases its size, freeing up storage space. Advanced SAN platforms include these features natively, so be sure to enable them if your hardware supports them.
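A small Python sketch can show how block-level deduplication and compression work together. It is a simplified illustration under assumptions (fixed 4 KiB blocks, SHA-256 fingerprints, zlib compression, hypothetical function names); SAN platforms implement these features in firmware with their own block sizes and algorithms.

```python
import hashlib
import zlib


def dedupe_and_compress(data: bytes, block_size: int = 4096):
    """Split data into blocks, store each unique block once, compressed."""
    store = {}    # fingerprint -> compressed block
    recipe = []   # ordered fingerprints needed to rebuild the data
    for i in range(0, len(data), block_size):
        block = data[i:i + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if digest not in store:          # only new blocks consume space
            store[digest] = zlib.compress(block)
        recipe.append(digest)
    return store, recipe


def restore(store: dict, recipe: list) -> bytes:
    """Rebuild the original data from the block store and recipe."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)


data = b"A" * 4096 * 3 + b"B" * 4096      # three identical blocks plus one
store, recipe = dedupe_and_compress(data)
print(len(recipe), "logical blocks,", len(store), "stored blocks")
assert restore(store, recipe) == data
```

Here four logical blocks are reduced to two stored blocks, and each stored block is further shrunk by compression before it is written.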

6. Regularly Update Firmware and Software

Keeping your SAN's firmware and software up to date ensures compatibility with the latest features and fixes.

Update firmware for storage arrays, HBAs, and SSDs regularly.

Apply patches for SAN management software to guard against performance issues and security vulnerabilities.

7. Monitor and Analyze SAN Performance

Proactive monitoring is essential to identify and resolve emerging issues before they impact operations.

Leverage Analytics Tools: Use SAN-specific monitoring tools like Brocade Network Advisor or SolarWinds Storage Resource Monitor to track throughput, latency, and utilization.

Set Threshold Alerts: Configure automated alerts for critical performance metrics to enable faster response times for issues.

Conduct Regular Audits: Periodically review SAN logs to identify anomalies and ensure that zoning, multipathing, and overall configurations align with best practices.
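Threshold alerts like those described above boil down to comparing each sampled metric against a limit. The sketch below assumes hypothetical metric names and limits; dedicated monitoring tools expose their own configuration for this, so treat it as an illustration of the logic rather than any product's API.

```python
# Hypothetical limits; tune these to your own baseline measurements.
THRESHOLDS = {"latency_ms": 20.0, "utilization_pct": 85.0, "queue_depth": 32}


def check_thresholds(sample: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return one alert message per metric that exceeds its limit."""
    alerts = []
    for metric, limit in thresholds.items():
        value = sample.get(metric)       # metrics missing from the sample are skipped
        if value is not None and value > limit:
            alerts.append(f"{metric}={value} exceeds {limit}")
    return alerts


sample = {"latency_ms": 35.0, "utilization_pct": 60.0}
print(check_thresholds(sample))  # ['latency_ms=35.0 exceeds 20.0']
```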

8. Plan for Scalability

Growth is inevitable, and preparing your SAN for increased workload now saves time and resources in the future.

Use Virtualized Storage: Virtualization optimizes capacity and makes scalability easier without excessive hardware upgrades.

Leverage Cloud Integration: Hybrid SAN-cloud solutions allow you to scale storage seamlessly as demand grows.

9. Train Your Team

A well-informed IT team ensures SAN configurations and operations remain optimized. Provide regular training for staff to ensure they stay updated on the latest technologies and best practices.

10. Prioritize Security

A highly optimized SAN that lacks proper security can be disastrous. Segmented zoning, encryption, and firewalls are non-negotiable. Always implement strict access controls and monitor for vulnerabilities.

The Role of Backup and Disaster Recovery in SAN Performance

No high-performing SAN strategy is complete without reliable backup and disaster recovery protocols. Regularly back up your SAN configurations and ensure that restoration processes are optimized and tested. This mitigates potential downtime during unexpected failures.

Unlock Your SAN's Full Potential

Optimizing your SAN storage solution is no longer optional for enterprises determined to stay competitive in the digital age. From upgrading your hardware to fine-tuning storage configurations, there are plenty of ways to boost performance and streamline operations. Proactive management, coupled with a commitment to scalability and security, ensures that your SAN can evolve alongside your growing business.

Start implementing these best practices today, and watch your system efficiency (and user satisfaction) soar.

What’s The Best VPS For Developers In Nigeria?

If you're a Nigerian developer in search of a strong and stable VPS, selecting the right provider can be the difference between success and frustration. From speed to security and flexibility, a good VPS should provide all that you require to develop, test, and deploy applications seamlessly. One of the best options in Nigeria is Layer3Cloud; let's see why!

Key features of Layer3Cloud VPS

High-performance vServers

Layer3Cloud vServer offers developers high-speed and secure virtual machines, unconstrained by limits on processing power, memory, and storage. This makes for smooth operation for resource-hungry applications.

Virtual data center

Layer3Cloud's Virtual Data Center (vDC) service provides you with full administrative control of several VMs in an environment that is entirely private. You can control your infrastructure from one central location, allowing for resource-rich management and easy scalability.

Disaster recovery-as-a-service

Concerned about system crashes? Layer3Cloud, a PaaS provider in Nigeria, offers simple, secure replication of your virtual machines across multiple availability zones to minimize downtime and provide fault-tolerant failover.

Backup-as-a-service (BaaS)

Layer3Cloud offers quick, flexible, and secure backup options, so all your important data and virtualized applications are stored securely and can be restored quickly when necessary. With this virtual private server provider in Nigeria on your side, you no longer need to worry about backups.

Final thoughts

For developers in Nigeria, Layer3Cloud’s VPS solutions offer a combination of power, flexibility, and security. Whether you need high-performance VMs, reliable backups, or scalable storage, Layer3Cloud is a great choice. So, if you’re looking for a VPS that supports your development workflow, it might be time to give them a try!

For more information, you can visit our website https://www.layer3.cloud/ or call us at 09094529373

Deploying Red Hat Linux on AWS, Azure, and Google Cloud

Red Hat Enterprise Linux (RHEL) is a preferred choice for enterprises looking for a stable, secure, and high-performance Linux distribution in the cloud. Whether you're running applications, managing workloads, or setting up a scalable infrastructure, deploying RHEL on public cloud platforms like AWS, Azure, and Google Cloud offers flexibility and efficiency.

In this guide, we will walk you through the process of deploying RHEL on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Why Deploy Red Hat Linux in the Cloud?

Deploying RHEL on the cloud provides several benefits, including:

Scalability: Easily scale resources based on demand.

Security: Enterprise-grade security with Red Hat’s continuous updates.

Cost-Effectiveness: Pay-as-you-go pricing reduces upfront costs.

High Availability: Cloud providers offer redundancy and failover solutions.

Integration with DevOps: Seamlessly use Red Hat tools like Ansible and OpenShift.

Deploying Red Hat Linux on AWS

Step 1: Subscribe to RHEL on AWS Marketplace

Go to AWS Marketplace and search for "Red Hat Enterprise Linux."

Choose the version that suits your requirements (RHEL 8, RHEL 9, etc.).

Click on "Continue to Subscribe" and accept the terms.

Step 2: Launch an EC2 Instance

Open the AWS Management Console and navigate to EC2 > Instances.

Click Launch Instance and select your subscribed RHEL AMI.

Choose the instance type (e.g., t2.micro for testing, m5.large for production).

Configure networking, security groups, and storage as needed.

Assign an SSH key pair for secure access.

Review and launch the instance.

Step 3: Connect to Your RHEL Instance

Use SSH to connect: ssh -i your-key.pem ec2-user@your-instance-ip

Update your system: sudo yum update -y

Deploying Red Hat Linux on Microsoft Azure

Step 1: Create a Virtual Machine (VM)

Log in to the Azure Portal.

Click on Create a resource > Virtual Machine.

Search for "Red Hat Enterprise Linux" and select the appropriate version.

Click Create and configure the following:

Choose a subscription and resource group.

Select a region.

Choose a VM size (e.g., Standard_B2s for basic use, D-Series for production).

Configure networking and firewall rules.

Step 2: Configure VM Settings and Deploy

Choose authentication type (SSH key is recommended for security).

Configure disk settings and enable monitoring if needed.

Click Review + Create, then click Create to deploy the VM.

Step 3: Connect to Your RHEL VM

Get the public IP from the Azure portal.

SSH into the VM: ssh -i your-key.pem azureuser@your-vm-ip

Run system updates: sudo yum update -y

Deploying Red Hat Linux on Google Cloud (GCP)

Step 1: Create a Virtual Machine Instance

Log in to the Google Cloud Console.

Navigate to Compute Engine > VM Instances.

Click Create Instance and set up the following:

Choose a name and region.

Select a machine type (e.g., e2-medium for small workloads, n1-standard-4 for production).

Under Boot disk, click Change and select Red Hat Enterprise Linux.

Step 2: Configure Firewall and SSH Access

Enable HTTP/HTTPS traffic if needed.

Add your SSH key under Security.

Click Create to launch the instance.

Step 3: Connect to Your RHEL Instance

Use SSH via Google Cloud Console or terminal: gcloud compute ssh --zone your-zone your-instance-name

Run updates and configure your system: sudo yum update -y

Conclusion

Deploying Red Hat Linux on AWS, Azure, and Google Cloud is a seamless process that provides businesses with a powerful, scalable, and secure operating system. By leveraging cloud-native tools, automation, and Red Hat’s enterprise support, you can optimize performance, enhance security, and ensure smooth operations in the cloud.

Are you ready to deploy RHEL in the cloud? Let us know your experiences and any challenges you've faced in the comments below! For more details, visit www.hawkstack.com

Cloud Playout Software: The Future of TV Broadcasting and Streaming

Introduction

In the ever-evolving world of broadcasting, cloud playout software is emerging as a game-changer. Traditional playout systems relied on expensive hardware and complex infrastructure, but cloud-based solutions offer a more scalable, cost-effective, and flexible alternative. Whether for television networks, OTT (Over-the-Top) platforms, or online streaming services, cloud playout is transforming how content is delivered to audiences worldwide.

This article explores what cloud playout software is, its key benefits, challenges, and the future trends shaping the industry.

What is Cloud Playout Software?

Cloud playout software is a virtualized broadcasting solution that enables media companies to store, manage, and distribute content via the cloud. Unlike traditional hardware-based systems, cloud playout allows broadcasters to deliver content without the need for physical servers, reducing infrastructure costs and increasing operational efficiency.

Key Functions of Cloud Playout Software

Remote Access: Enables broadcasters to manage content from anywhere with an internet connection.

Automation: Schedules and plays content automatically without human intervention.

Scalability: Easily expands to accommodate growing audiences and content libraries.

Multi-Platform Distribution: Supports simultaneous broadcasting on TV, social media, and mobile apps.

Redundancy and Failover: Ensures uninterrupted streaming with backup systems in place.

Benefits of Cloud Playout Software

Implementing cloud-based playout solutions provides numerous advantages for broadcasters:

Cost Efficiency: Eliminates the need for expensive on-premises hardware, reducing capital expenditure.

Flexibility: Supports a wide range of formats and allows for quick content updates.

Reliability: Cloud-based redundancy ensures uninterrupted broadcasting.

Remote Operation: Broadcasters can manage content from anywhere, facilitating global collaboration.

Faster Deployment: Unlike traditional playout systems, cloud solutions can be set up quickly with minimal infrastructure requirements.

Challenges in Cloud Playout Implementation

Despite its advantages, transitioning to cloud playout software comes with challenges:

Internet Dependence: Requires a stable and high-speed internet connection to avoid buffering and downtime.

Security Concerns: Cloud systems must have strong cybersecurity measures to prevent unauthorized access and data breaches.

Technical Learning Curve: Teams may need training to adapt to cloud-based workflows.

Latency Issues: Some broadcasters may experience slight delays compared to traditional playout systems.

Types of Cloud Playout Solutions

1. Public Cloud Playout

Utilizes third-party cloud providers like AWS, Microsoft Azure, or Google Cloud.

Cost-effective and scalable but may have limited customization options.

2. Private Cloud Playout

Hosted on dedicated cloud infrastructure for greater security and control.

Ideal for large broadcasters with specific compliance requirements.

3. Hybrid Cloud Playout

Combines on-premises and cloud-based solutions for maximum flexibility.

Allows broadcasters to maintain critical operations in-house while leveraging cloud scalability.

The Future of Cloud Playout Software

The broadcasting industry continues to evolve, and cloud playout software is playing a vital role in shaping its future. Here are some emerging trends:

1. AI and Automation

Artificial Intelligence (AI) is improving content scheduling, ad insertion, and error detection.

Machine learning algorithms are being used to enhance viewer engagement.

2. 5G and Low-Latency Streaming

Faster internet speeds will enable real-time content delivery with minimal lag.

5G connectivity will enhance cloud-based live broadcasting.

3. IP-Based Broadcasting

Internet Protocol (IP) technology is replacing traditional satellite and cable systems.

Enables more flexible and cost-effective content distribution.

4. Cloud-Native Media Workflows

More broadcasters are adopting end-to-end cloud workflows for production, editing, and distribution.

Cloud-native solutions reduce operational complexity and costs.

Conclusion

Cloud playout software is revolutionizing broadcasting by providing a scalable, cost-effective, and reliable alternative to traditional playout systems. With advancements in AI, 5G, and IP-based broadcasting, the future of cloud playout is promising. Broadcasters looking to stay competitive should consider adopting cloud-based solutions to enhance efficiency and reach global audiences.

Frequently Asked Questions (FAQs)

1. What is the main advantage of cloud playout over traditional playout?

Cloud playout eliminates the need for expensive hardware, offering greater flexibility and scalability.

2. Can cloud playout software handle live broadcasting?

Yes, most cloud playout solutions support live streaming with low-latency delivery.

3. How secure is cloud playout software?

Security measures like encryption, firewalls, and access controls ensure data protection.

4. Does cloud playout work with multiple streaming platforms?

Yes, it allows simultaneous broadcasting across TV, social media, and OTT platforms.

5. What are the key factors to consider when choosing a cloud playout provider?

Consider factors like cost, scalability, security, customer support, and integration capabilities.

High Availability and Disaster Recovery with OpenShift Virtualization

In today’s fast-paced digital world, ensuring high availability (HA) and effective disaster recovery (DR) is critical for any organization. OpenShift Virtualization offers robust solutions to address these needs, seamlessly integrating with your existing infrastructure while leveraging the power of Kubernetes.

Understanding High Availability

High availability ensures that your applications and services remain operational with minimal downtime, even in the face of hardware failures or unexpected disruptions. OpenShift Virtualization achieves HA through features like:

Clustered Environments: By running workloads across multiple nodes, OpenShift minimizes the risk of a single point of failure.

Example: A database application is deployed on three nodes. If one node fails, the other two continue to operate without interruption.

Pod Auto-Healing: Kubernetes’ built-in mechanisms automatically restart pods on healthy nodes in case of a failure.

Example: If a virtual machine (VM) workload crashes, OpenShift Virtualization can restart it on another node in the cluster.

Disaster Recovery Made Easy

Disaster recovery focuses on restoring operations quickly after catastrophic events, such as data center outages or cyberattacks. OpenShift Virtualization supports DR through:

Snapshot and Backup Capabilities: OpenShift Virtualization provides options to create consistent snapshots of your VMs, ensuring data can be restored to a specific point in time.

Example: A web server VM is backed up daily. If corruption occurs, the VM can be rolled back to the latest snapshot.

Geographic Redundancy: Workloads can be replicated to a secondary site, enabling a failover strategy.

Example: Applications running in a primary data center automatically shift to a backup site during an outage.
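The rollback example above depends on choosing the right restore point. As a minimal sketch, assuming snapshots are identified only by their creation time and that the time of corruption is known, the selection logic could look like this (the function name and timestamps are hypothetical and not part of any OpenShift API):

```python
from datetime import datetime


def latest_snapshot_before(snapshots: list, incident_time: datetime) -> datetime:
    """Pick the most recent snapshot taken strictly before the incident."""
    candidates = [s for s in snapshots if s < incident_time]
    if not candidates:
        raise LookupError("no snapshot predates the incident")
    return max(candidates)


# Hypothetical daily snapshots taken at 02:00
snapshots = [datetime(2025, 3, day, 2, 0) for day in (1, 2, 3, 4)]
incident = datetime(2025, 3, 3, 14, 30)   # corruption detected mid-afternoon
print(latest_snapshot_before(snapshots, incident))  # 2025-03-03 02:00:00
```

Choosing the snapshot just before the incident, rather than simply the newest one, avoids restoring a copy that already contains the corruption.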

Key Features Supporting HA and DR in OpenShift Virtualization

Live Migration: Move running VMs between nodes without downtime, ideal for maintenance and load balancing.

Use Case: Migrating workloads off a node scheduled for an update to maintain uninterrupted service.

Node Affinity and Anti-Affinity Rules: Distribute workloads strategically to prevent clustering on a single node.

Use Case: Ensuring VMs hosting critical applications are spread across different physical hosts.

Storage Integration: Support for persistent volumes ensures data continuity and resilience.

Use Case: A VM storing transaction data on persistent volumes continues operating seamlessly even if it is restarted on another node.

Automated Recovery: Integration with tools like Velero for backup and restore enhances DR strategies.

Use Case: Quickly restoring all workloads to a secondary cluster after a ransomware attack.
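To make the anti-affinity idea concrete, the following Python sketch places VMs so that no two members of the same group share a host. It is an illustration of the rule only, with hypothetical VM, group, and node names; it is not how the Kubernetes scheduler is implemented, and in OpenShift the rule would be declared in the workload's affinity settings rather than coded by hand.

```python
from collections import defaultdict


def place_with_anti_affinity(vms: list, hosts: list) -> dict:
    """Assign each (vm_name, group) to a host; groups never share a host."""
    used = defaultdict(set)           # group -> hosts already holding a member
    load = {h: 0 for h in hosts}      # number of VMs placed on each host
    placement = {}
    for name, group in vms:
        candidates = [h for h in hosts if h not in used[group]]
        if not candidates:
            raise ValueError(f"no host left for {name} in group {group}")
        host = min(candidates, key=lambda h: load[h])  # least-loaded eligible host
        placement[name] = host
        used[group].add(host)
        load[host] += 1
    return placement


vms = [("db-1", "db"), ("db-2", "db"), ("db-3", "db"), ("web-1", "web")]
print(place_with_anti_affinity(vms, ["node-a", "node-b", "node-c"]))
# {'db-1': 'node-a', 'db-2': 'node-b', 'db-3': 'node-c', 'web-1': 'node-a'}
```

Because the three database VMs land on three different nodes, losing any single node takes down at most one of them.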

Real-World Implementation Tips

To make the most of OpenShift Virtualization’s HA and DR capabilities:

Plan for Redundancy: Deploy at least three control plane nodes and three worker nodes for a resilient setup.

Leverage Monitoring Tools: Tools like Prometheus and Grafana can help proactively identify issues before they escalate.

Test Your DR Plan: Regularly simulate failover scenarios to ensure your DR strategy is robust and effective.

Conclusion

OpenShift Virtualization empowers organizations to build highly available and disaster-resilient environments, ensuring business continuity even in the most challenging circumstances. By leveraging Kubernetes’ inherent capabilities along with OpenShift’s enhancements, you can maintain seamless operations and protect your critical workloads. Start building your HA and DR strategy today with OpenShift Virtualization, and stay a step ahead in the competitive digital landscape.

For more information, visit www.hawkstack.com

Synology DiskStation DS1823xs+, made for a variety of server roles, such as backup, file storage, email servers, and domain controllers!

Form Factor: NAS Tower Server

Internal File System Format: EXT4, Btrfs (Btrfs File System for Advanced LUN iSCSI Service)

Ports: 1 x RJ-45 10GbE; 2 x RJ-45 1GbE (with Link Aggregation / Failover support); 1 x Out-of-Band Management LAN; 3 x USB 3.2; 2 x eSATA

Processor: Ryzen V1780B 4-Core 3.35GHz CPU, Up To 3.6GHz Turbo

Memory: 32GB DDR4 ECC SODIMM Memory

Storage: 160TB (8 x 20TB) SATA HDDs for High-Capacity Storage; 1TB (2 x 500GB) M.2 2280 NVMe SSD

Power: Single Power Supply

Operating System: Synology DSM Software

Features: Virtual Machine Manager, Security Advisor, Cache Acceleration, AES 256-bit Encryption, 2 Factor Authentication, Surveillance Station Suite, 4K Multimedia Server, Desktop Backup, Hyper Backup, Snapshot Replication, Synology Drive, and more

Powerful storage management, file syncing, surveillance, and backup software. A flexible all-in-one solution for large-scale file storage, data sharing and backup, synchronization, surveillance, and multimedia streaming. Powerful, high-capacity, versatile storage that fits in any environment. An ideal solution for individuals and small to medium-sized businesses seeking a reliable and scalable storage solution.

The Synology NAS chassis comes in a sealed box. Hard drives and memory upgrades are included separately and are not installed; installation is required.

Strategic Cloud Migration: Tools, Future Utility, and Newt Global’s Approach

Cloud Migration is no longer a mere option but a key necessity for businesses aiming to achieve scalability, flexibility, and cost-efficiency. To navigate these complexities, leveraging the right tools is crucial. This article delves into the tools essential for cloud migration and their future utility in ensuring a seamless and efficient transition.

Key Tools for Cloud Migration

Cloud Assessment Tools

AWS Migration Evaluator: Formerly known as TSO Logic, this tool helps evaluate on-premises workloads and gives detailed cost estimates for running them in the AWS cloud. It helps in right-sizing resources, which can significantly reduce costs.

Azure Migrate: It gives insights into VM dependencies, readiness, and cost estimations.

Data Migration Tools

AWS Database Migration Service (DMS): Facilitates the migration of databases to AWS with minimal downtime. It supports continuous data replication, ensuring data consistency and integrity during the migration process.

Azure Database Migration Service: This tool supports seamless migration of multiple database types to Azure. It provides a comprehensive guide through the assessment, conversion, and migration phases.

Application Migration Tools

Google Cloud Migrate for Compute Engine: Assists in migrating virtual machines from on-premises or other clouds to Google Cloud. It offers automated and manual migration options to suit different needs.

AWS Application Migration Service: Simplifies and expedites the migration process by replicating the entire application stack, including OS, middleware, and application data, to the AWS cloud.

Cost Management Tools

AWS Cost Explorer: It helps identify cost-saving opportunities and optimize resource allocation.

Azure Cost Management and Billing: Tracks cloud usage and expenditures for Azure and other cloud providers. It provides detailed insights into spending patterns and potential savings.

Integration and Automation Tools

AWS Lambda: Enables the execution of code in response to events without provisioning or managing servers. It is ideal for integrating various services and automating workflows.

Azure Logic Apps: Facilitates the creation and automation of workflows that integrate apps, data, services, and systems. It supports numerous connectors, making integration seamless and efficient.

Future Utility of Cloud Migration Tools

As businesses continue to migrate to the cloud, the utility of these tools extends beyond the initial migration phase. Here’s how these tools will prove invaluable in the future:

1. Continuous Optimization

Post-migration, tools like AWS Cost Explorer and Azure Cost Management will help businesses continuously monitor and optimize their cloud expenditures. By providing real-time insights and recommendations, these tools enable proactive cost management.

2. Advanced Data Management

Data migration tools like AWS DMS and Azure Database Migration Service will evolve to support advanced data management capabilities. This includes real-time data replication, automated backups, and seamless integration with advanced analytics and AI services.

3. Automated Workflows and Integration

Automation tools like AWS Lambda and Azure Logic Apps will drive efficiency by automating repetitive tasks and integrating disparate systems. This capability will be essential for maintaining operational efficiency and agility in a cloud-centric environment.

4. Disaster Recovery and Business Continuity

Tools like AWS CloudEndure Disaster Recovery and Azure Site Recovery will be pivotal in ensuring business continuity. They provide real-time replication and automated failover, minimizing downtime and data loss during disruptions.

Future-Focused Cloud Migration with Newt Global

Newt Global's future-focused cloud migration approach emphasizes continuous optimization, advanced security, and automation to ensure long-term success in the cloud. They start with a thorough assessment using cutting-edge tools like AWS Migration Evaluator and Azure Migrate, followed by strategic planning tailored to the future needs of the business. By leveraging advanced data migration services such as AWS DMS and Azure Database Migration Service, they ensure seamless data transfer with minimal disruption. Post-migration, Newt Global employs tools like AWS Cost Explorer and Azure Cost Management for ongoing cost efficiency, while AWS Security Hub and Azure Security Center provide robust, continuous security and compliance management. Additionally, automation tools like AWS Lambda and Azure Logic Apps are utilized to enhance operational efficiency and scalability. Newt Global's approach ensures that businesses not only migrate smoothly but also remain agile, secure, and cost-effective in the evolving digital landscape.

Conclusion

By leveraging tools for assessment, data migration, application migration, security, cost management, and integration, businesses can ensure a seamless transition to the cloud. The future utility of these tools extends beyond migration, enabling scalability, continuous optimization, enhanced security, advanced data management, automated workflows, and robust disaster recovery.

As businesses continue to evolve in a digital-first world, the strategic use of these tools will be essential in harnessing the full potential of cloud computing, driving innovation, and achieving long-term success.

Thanks for reading.

For More Information, Visit Our Website: https://newtglobal.com/


The Hidden Backbone: Exploring the Untapped Power of SAN Storage

Storage Area Network (SAN) storage may not always grab headlines in the world of IT infrastructure, but it is often the unseen force driving high-performance operations for enterprises. If you’ve worked in environments demanding speed, scalability, and reliability, you’ve likely encountered SAN storage solutions as a critical enabler.

Yet, even among seasoned IT professionals, the nuances and immense potential of SAN storage often go underappreciated. This guide dives deep into the untapped power of SAN storage—what it is, how it works, and why it's an indispensable tool for building robust, future-ready infrastructures.

What is SAN Storage?

At its core, a Storage Area Network (SAN) is a high-speed network of storage devices. Unlike storage attached directly to computing devices (such as direct-attached storage, or DAS), a SAN connects storage at the network level, providing shared access to multiple users and systems.

Key characteristics of SAN include:

Dedicated Network: Operates as a separate network specialized for data storage, distinct from a Local Area Network (LAN).

Block-level Access: SAN delivers data in small, quickly accessible blocks, making it suitable for applications that require intensive I/O operations, like databases or ERP systems.

High Speed and Reliability: SANs typically use the Fibre Channel (FC) or iSCSI protocols, which are designed for the high-throughput, low-latency demands of modern workloads.
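To make block-level access concrete, here is a minimal Python sketch. It is not SAN client code: an ordinary temporary file stands in for a SAN volume (LUN), and the 4096-byte block size and the helper names `make_volume`, `read_block`, and `write_block` are assumptions chosen for illustration. The point is that data is addressed by block number rather than by file name.

```python
import os
import tempfile

BLOCK_SIZE = 4096  # assumed block size in bytes; real volumes vary


def make_volume(num_blocks, block_size=BLOCK_SIZE):
    """Create a zero-filled temp file that stands in for a SAN volume (LUN)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"\x00" * (num_blocks * block_size))
    return path


def read_block(path, block_number, block_size=BLOCK_SIZE):
    """Read exactly one block, addressed by its number."""
    with open(path, "rb") as f:
        f.seek(block_number * block_size)
        return f.read(block_size)


def write_block(path, block_number, data, block_size=BLOCK_SIZE):
    """Overwrite exactly one block in place."""
    if len(data) != block_size:
        raise ValueError("data must be exactly one block long")
    with open(path, "r+b") as f:
        f.seek(block_number * block_size)
        f.write(data)
```

A database engine works in much the same way: it asks for block 3 of a volume, not for a named file, which is why block storage suits I/O-intensive applications.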

Why SAN Storage Matters in Today's IT Landscape

1. Supporting Enterprise Scalability

Today’s enterprises generate vast amounts of data. Whether it’s transactional data, logs, or multimedia content, organizations need scalable solutions to manage growing data sets efficiently. With the ability to seamlessly pool storage devices, SAN storage can grow with your business demands without disrupting existing infrastructure.

SAN also supports features like thin provisioning, which allocates storage dynamically to optimize capacity utilization. This is invaluable for companies looking to strike a balance between scalability and cost-effective storage deployment.
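Thin provisioning can be sketched as a simple accounting model. The `ThinPool` class below is hypothetical and does not reflect any vendor's API. It assumes that physical capacity is consumed only when data is written, so the total provisioned size may exceed what the pool physically holds.

```python
class ThinPool:
    """Toy model of a thin-provisioned storage pool (illustrative only)."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.volumes = {}  # name -> [provisioned_gb, written_gb]

    def create_volume(self, name, provisioned_gb):
        # Creating a volume reserves no physical space up front.
        self.volumes[name] = [provisioned_gb, 0]

    def used_gb(self):
        return sum(written for _, written in self.volumes.values())

    def provisioned_gb(self):
        return sum(prov for prov, _ in self.volumes.values())

    def overcommit_ratio(self):
        return self.provisioned_gb() / self.physical_gb

    def write(self, name, gb):
        provisioned, written = self.volumes[name]
        if written + gb > provisioned:
            raise ValueError("volume is full")
        if self.used_gb() + gb > self.physical_gb:
            raise RuntimeError("pool is out of physical capacity")
        self.volumes[name][1] = written + gb
```

The model also shows the trade-off: an overcommitted pool must be monitored, because writes fail once physical capacity runs out even though each volume still appears to have free space.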

2. Enhancing Application Performance

Need lightning-fast access speed for mission-critical applications? SAN shines in delivering high read/write performance thanks to its block-level storage capabilities. By offloading storage operations onto a separate network, compute resources remain focused on application processing, minimizing latency and boosting overall performance.

For example:

Database Operations thrive on SAN storage, benefiting from its ability to handle high IOPS (Input/Output Operations Per Second).

Large Virtual Machine (VM) Deployments across VMware, Hyper-V, or Citrix environments are optimized via SAN’s ability to manage multiple workloads efficiently.
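IOPS figures only become meaningful alongside the I/O size. The short sketch below shows the arithmetic; the function names are made up for this example, and it assumes a uniform block size, which real workloads rarely have.

```python
def throughput_mib_per_s(iops, block_size_kib):
    """Throughput implied by an IOPS figure at a fixed I/O size."""
    return iops * block_size_kib / 1024


def iops_for_throughput(target_mib_per_s, block_size_kib):
    """IOPS needed to sustain a target throughput at a fixed I/O size."""
    return target_mib_per_s * 1024 / block_size_kib
```

For instance, 10,000 IOPS at 8 KiB per operation works out to 78.125 MiB/s, while sustaining 100 MiB/s with 4 KiB operations takes 25,600 IOPS.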

3. Simplified Management Across Multiple Systems

One of SAN’s standout features is its centralized management. Instead of juggling separate storage silos across various servers, IT administrators gain access to consolidated storage resources. Many SAN solutions include intuitive dashboards, making tasks like allocating storage, monitoring performance, and configuring backups streamlined and repeatable.

This simplicity becomes particularly valuable in environments with dynamic needs—where quick resource provisioning can mean the difference between hitting SLAs and causing delays.

4. Increased Redundancy and Data Protection

The value of data cannot be overstated, and SAN architecture inherently prioritizes redundancy. Its high-availability design ensures minimal downtime, even in the event of hardware failures. Common SAN features like RAID configurations, failover clustering, and synchronous/asynchronous replication fortify your data’s safety.
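As an illustration of how parity-based RAID levels such as RAID 5 survive a disk failure, the sketch below uses XOR parity over equal-sized blocks. It is a simplified model with hypothetical helper names; real arrays also handle striping, parity rotation, and rebuild scheduling.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_blocks):
    """The parity block is the XOR of all data blocks in the stripe."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks, parity_block):
    """Recover the single lost block from the survivors plus parity."""
    return xor_blocks(list(surviving_blocks) + [parity_block])
```

Because XOR is its own inverse, combining the parity block with the surviving data blocks yields the missing one. That is why a stripe tolerates the loss of exactly one member.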

For disaster recovery, SAN also integrates seamlessly with enterprise backup solutions, enabling snapshot-based backups and long-distance replication, ensuring business continuity even in worst-case scenarios.

Common Use Cases for SAN Storage

Virtualized Environments

Virtualization has long been a driving force behind SAN adoption. SAN capabilities align perfectly with the unpredictable workloads of virtual machines, offering high availability and dependable performance without sacrificing flexibility. Virtualized data centers need shared storage accessible by multiple hosts, and SAN is designed precisely for this.

High-frequency Trading

Financial markets generate enormous volumes of data, requiring real-time capture and computation. Given the speed at which stock transactions occur, even the slightest delay can spell significant losses. SAN storage ensures high-speed, reliable connectivity for trading platforms, reducing latency and keeping pace with fast-changing market conditions.

Media and Entertainment

From video production to rendering massive 3D projects, the media industry demands robust storage solutions. SAN not only handles large file sizes but also provides the multi-user access needed by creative teams working on simultaneous projects.

Healthcare and Research

Hospitals and research facilities rely on SAN storage for managing extensive patient records, imaging files, and genomic data. The combination of large capacity, security features, and stable performance makes it a favorite in these data-heavy sectors.

Questions to Ask Before Adopting SAN Storage

Implementing SAN storage isn’t a one-size-fits-all process. Consider the following questions to ensure SAN aligns with your organization’s needs:

What Workloads Are You Supporting? Applications requiring frequent and fast data access, like transactional databases, are ideal candidates.

What is Your Growth Trajectory? If your data demands are expected to double or triple soon, SAN’s scalability might be worth the investment.

Does Your IT Team Have the Expertise? SAN deployment and management require skill and familiarity with storage protocols; investing in training may be a necessary step.

What Budget Constraints Do You Face? While SAN solutions deliver exceptional value, organizations with lean budgets might need to weigh cost-benefit analyses compared to NAS or DAS options.

The Untapped Opportunities of SAN

Despite its sophistication, SAN storage often flies under the radar, overshadowed by flashier terms like hyper-converged infrastructure or AI-driven analytics. But SAN continues to evolve, offering faster interconnects (e.g., NVMe over Fibre Channel), improved management capabilities, and even integrations with private clouds.

By adopting SAN strategically, enterprises can ensure their data infrastructure remains robust, reliable, and future-proof.

Take the Next Step Toward SAN

SAN storage may be the hidden backbone of modern IT architecture, but its performance, scalability, and resilience make it anything but ordinary. For IT professionals and Storage Architects, understanding how to harness SAN effectively can transform your organization’s infrastructure.

Explore SAN innovations today and stay ahead of your competition. Start by consulting our free guide to implementing SAN solutions in enterprise environments for tailored insights.


Protect SAP Workloads with Workload Manager’s assessment

Use the assessment service offered by Workload Manager to protect your SAP setups.

Following best practices is essential as companies move their SAP workloads to the cloud. This article explores Google Cloud's Workload Manager and shows how its automated, rule-based analysis can proactively detect misconfigurations and deviations from best practices, helping to protect your SAP workloads.

The role of best practices in protecting SAP workloads

Configuring and maintaining large-scale cloud deployments can be difficult, particularly when operating systems, applications, and infrastructure are all involved. Complex setups, changing industry standards, and the risk of human error in manual work can all harm your SAP workloads and, ultimately, your business.

In the past, misconfigurations were often found and fixed only in reaction to a crisis, and frequently required laborious manual inspections. Workload Manager offers an SAP assessment service that can significantly improve how you manage SAP workloads on Google Cloud, enabling you to:

Minimise avoidable errors: Many problems and disruptions could have been prevented or mitigated by following best practices. Run scans to find potential issues before they become serious.

Safeguard go-lives: To help boost go-live success rates and address problems that can be challenging to fix later, make sure new deployments are validated and configured in accordance with best practices.

Detect drift: Perform routine scans to find any changes or misconfigurations over time.

Minimise operational overhead: To help reduce time spent on laborious manual inspections, automate the validation process.
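Drift detection amounts to comparing the current configuration against a known-good baseline. The Python sketch below shows that idea only. It does not use the Workload Manager API, and the function name and the settings in the usage example are hypothetical.

```python
def detect_drift(baseline, current):
    """Return {setting: (baseline_value, current_value)} for every difference.

    A setting missing from one side is reported with None on that side.
    """
    drift = {}
    for key in sorted(set(baseline) | set(current)):
        before = baseline.get(key)
        after = current.get(key)
        if before != after:
            drift[key] = (before, after)
    return drift
```

Running this on a schedule against each scan result would flag, for example, a disk type that changed from `"ssd"` to `"standard"` after go-live.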

Workload Manager

An introduction to the Workload Manager assessment service

Workload Manager's assessment service is a rule-based validation service that automatically evaluates your SAP workloads against a comprehensive set of operating system, SAP, and Google Cloud best practices.

As new insights are gained and best practices change, the SAP rule catalogue is regularly updated. The supplied rules explore important facets of your SAP setup in the following categories, going beyond basic configuration checks:

SAP General: Guidelines that are applicable to all kinds of SAP workloads, like support requirements and VM setup settings

SAP High Availability: Analyzing cluster setups, failover procedures, and system architectures, these checks help maximize availability and reliability.

HANA & SAP NetWeaver: Logic that automatically recognizes each resource's role and then verifies it against role-specific requirements, including approved machine sizes, disk types, and other details

SAP HANA Insights: In-depth analysis and optimizations for memory allocation, performance, compression, and other areas

SAP HANA Security Best Practices: Guidelines for assessing HANA's security posture, covering known vulnerabilities, encryption settings, and access control, among other things

Following a scan, an assessment report summarizes the findings and lets you drill down into each rule to see which specific resources passed and failed.

Every rule includes a description of the issue, its severity, and a recommendation with links to the relevant documentation to help you fix it. You can also choose to receive alerts by email or Pub/Sub on specific triggers, such as the discovery of a new issue.
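The shape of a rule-based assessment can be sketched in a few lines of Python. Everything here is hypothetical (the `Rule` fields, the `evaluate` function, the two example rules, and the resource settings) and is not Workload Manager's actual rule format or API. It only mirrors the structure described above: each rule carries a description, a severity, and a recommendation, and the report lists which resources passed and failed.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    rule_id: str
    description: str
    severity: str
    recommendation: str
    check: Callable[[Dict], bool]  # returns True when a resource passes


def evaluate(resources: Dict[str, Dict], rules: List[Rule]) -> List[Dict]:
    """Run every rule against every resource and build a per-rule report."""
    report = []
    for rule in rules:
        failed = sorted(n for n, cfg in resources.items() if not rule.check(cfg))
        passed = sorted(n for n in resources if n not in failed)
        report.append({
            "rule_id": rule.rule_id,
            "severity": rule.severity,
            "recommendation": rule.recommendation,
            "passed": passed,
            "failed": failed,
        })
    return report


# Illustrative rules only; the names and settings are assumptions.
EXAMPLE_RULES = [
    Rule("agent-installed", "Agent must be installed on every VM", "high",
         "Install and start the agent", lambda cfg: cfg.get("agent_installed", False)),
    Rule("ssd-disks", "Data disks should be SSD-backed", "medium",
         "Migrate data disks to SSD", lambda cfg: cfg.get("disk_type") == "ssd"),
]
```

Keeping rules as data, separate from the evaluation loop, is one way to let a rule catalogue grow without changing the code that runs it.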

How to use the Workload Manager assessment service

Install Google Cloud's Agent for SAP on each virtual machine in scope.

Google Cloud's Agent for SAP is a unified agent that handles several tasks associated with running SAP workloads on Google Cloud. Every virtual machine (VM) running SAP workloads on Google Cloud must have the agent installed and running (SAP Note 2456406 – SAP on Google Cloud Platform: Support Prerequisites).

The agent also offers optional features, such as collecting data for Workload Manager to analyze. To ensure that the agent is installed and configured appropriately, consult the following checklist: Install Google Cloud's Agent for SAP.

The assessment report also includes an automated check that the agent is installed and configured correctly on every in-scope virtual machine. If you are not sure whether you have completed the steps above, run an assessment as instructed below and look in the results for the rule labelled "Verify that Google Cloud's Agent for SAP is configured appropriately on all instances within the evaluation scope".

Prerequisites and first authorization

Make sure you have completed the following prerequisites before using the Workload Manager SAP assessment service:

Enable the Workload Manager API.

IAM Roles: To control access to the assessment service, specify the relevant IAM roles.