
LangChain: A New Era of Business Innovation

The progression of human-machine interaction has been a fascinating journey. In the beginning, human communication revolved around exchanging information with one another using language, facial expressions, gestures, and various other signals. As machines and computers emerged, humans began engaging in more structured interactions with them. Initially, this meant giving precise instructions to machines using punch cards, switches, and buttons. In recent years, the way humans and machines interact has changed significantly, moving towards unstructured interactions. This shift is primarily driven by advancements in Natural Language Processing (NLP). The growth in NLP has paved the way for the development of Large Language Models (LLMs), a kind of Artificial Intelligence (AI) program that understands, summarizes, generates, and predicts new content using deep learning techniques and extremely large datasets. After significant advancements in the LLM field, such as Google’s LaMDA chatbot and the open-source LLM known as BLOOM, OpenAI released ChatGPT, bringing LLMs to the forefront. Around the same period, LangChain emerged. Harrison Chase unveiled LangChain, an open-source project, in October 2022.

What is LangChain?

LangChain is a framework for creating applications driven by language models. It is intended to link a language model to other data sources and enable it to interact with the outside world. LangChain provides a set of tools, components, and interfaces that make it easier to build chat models and LLM-based systems. With the help of this open-source framework, programmers can create dynamic, data-responsive apps that use the most recent advances in natural language processing.

LangChain Structure

The primary idea is that we may “chain” together various components to develop use cases for LLMs that are more sophisticated. Chains may include a number of elements from various modules:

Prompt templates: Prompt templates serve as models for various prompts. Depending on the size of the context window and the input variables used as context, they can be adapted to various kinds of LLMs.

Models:

LLM

A text string is put into a large language model (LLM), which outputs another text string.

Chat models

These models take an input list of chat messages and output a chat message.

Text embedding models

Text is put into text embedding models, which then output a list of floats.

Agents: The “custom agents” functionality of LangChain is one of its more recent additions. According to Chase, agents are a strategy that “uses the language model as a reasoning engine” to decide how to interact with the outside environment based on user input.

Memory: Typically, LLMs lack long-term memory. LangChain helps developers in this area by providing memory components that can be integrated with LLMs.

Indexes: They describe how to organize documents so that LLMs may interact with them effectively. This module includes helpful features for interacting with documents and connecting to various vector databases.

Chains: For some straightforward applications, using an LLM alone is feasible, but for many more sophisticated ones, chaining LLMs—either with one another or with other experts—is necessary.
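To make the idea of chaining more concrete, here is a minimal sketch in plain Python. It does not use LangChain itself: `PromptTemplate`, `ConversationMemory`, `SimpleChain`, and `fake_llm` are hypothetical names defined here for illustration, and `fake_llm` simply stands in for a real model call. The sketch shows a prompt template, a model, and a memory component linked into one chain.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: text in, text out."""
    return f"[model reply to {len(prompt)} chars of prompt]"


@dataclass
class PromptTemplate:
    """A reusable prompt with named placeholders."""
    template: str

    def format(self, **variables: str) -> str:
        return self.template.format(**variables)


@dataclass
class ConversationMemory:
    """Keeps earlier turns so they can be fed back into later prompts."""
    turns: List[str] = field(default_factory=list)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append(f"User: {user}\nAssistant: {assistant}")

    def as_text(self) -> str:
        return "\n".join(self.turns)


@dataclass
class SimpleChain:
    """Chains template -> model and records each exchange in memory."""
    prompt: PromptTemplate
    llm: Callable[[str], str]
    memory: ConversationMemory = field(default_factory=ConversationMemory)

    def run(self, question: str) -> str:
        text = self.prompt.format(history=self.memory.as_text(), question=question)
        answer = self.llm(text)
        self.memory.add(question, answer)
        return answer


chain = SimpleChain(
    prompt=PromptTemplate(
        "Conversation so far:\n{history}\n\nQuestion: {question}\nAnswer:"
    ),
    llm=fake_llm,
)
first = chain.run("What is a vector store?")
second = chain.run("How does it relate to memory?")
print(second)
```

Because the second call sees the first exchange through the memory component, the prompt it sends is longer than the first one. Swapping `fake_llm` for a real model client would leave the rest of the chain unchanged.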

Integration of LangChain and the Pinecone vector database

According to the company, Pinecone provides a vector database for building personalization, ranking, and search solutions that are accurate, fast, and scalable. Using Pinecone together with OpenAI’s Large Language Models improves semantic search and gives LLMs a form of “long-term memory.” Combining Pinecone’s vector search capabilities with the embedding and completion endpoints of LLMs allows for sophisticated information retrieval.

Pinecone gives programmers the tools they need to create scalable, real-time vector similarity search-based recommendation and search systems. In contrast, LangChain offers modules for controlling and enhancing the use of language models in applications. Its primary principle is to enable data-aware applications in which the language model communicates with its surroundings and other data sources.

Integrating Pinecone with LangChain allows you to create complex applications that take advantage of both platforms’ strengths. It lets us add “long-term memory” to LLMs, significantly boosting the functionality of chatbots, autonomous agents, and question-answering systems, among others.

How does it work?

Using as few processing resources as possible, LangChain organizes massive volumes of data so that an LLM can easily refer to them. To make this work, a large data source is divided into “chunks,” which are then vectorized and inserted into a vector store.

Since the large text has been vectorized, we can retrieve only the data we need and pass it to the LLM to create a prompt-completion pair.

LangChain will ask the Vector Store for pertinent data when we insert a prompt. Consider it a smaller version of Google for your document. When the pertinent data has been located, we use it in unison with the LLM’s prompt to produce our response.
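The flow described above (chunk, vectorize, store, retrieve, then build the prompt) can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions, not LangChain or Pinecone code: `ToyVectorStore`, `chunk_text`, `embed`, and `build_prompt` are hypothetical names, and the "embedding" is just a word count rather than the output of a real embedding model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str) -> List[str]:
    """Split a document into sentence-sized chunks."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory store of (chunk, vector) pairs with similarity search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunks: List[str]) -> None:
        self.items.extend((c, embed(c)) for c in chunks)

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Combine the retrieved chunks with the user's question."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"


document = (
    "Refunds are issued within ten business days of the return being received. "
    "Shipping is free for orders above fifty dollars in the continental region. "
    "Support is available by email every weekday from nine to five."
)

store = ToyVectorStore()
store.add(chunk_text(document))
question = "When are refunds issued?"
top_chunks = store.search(question, k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

Only the chunk about refunds ends up in the prompt, which is the point of the approach: the LLM receives a small, relevant slice of the document instead of the whole text.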

How has LangChain impacted businesses?

Businesses have benefited from LangChain because it has allowed them to develop language model-powered software applications that can carry out various activities, including code analysis, document analysis, and summarization. By offering modular abstractions, integrations, and tools for interacting with language models and other data sources, LangChain streamlines the process of developing NLP applications.

Furthermore, you can link LLMs to other data sources using LangChain. This is significant because, although LLMs are trained on very large text and code datasets, they can only access the data present in those datasets. By linking them to additional data sources, you can give LLMs access to more information. Your applications may become more robust and adaptable as a result. Here are some more reasons why LangChain is important:

Improve LLMs with memory and context

LangChain enables developers to add Memory and Context to existing powerful LLMs, artificially introducing “reasoning” and handling more intricate jobs with greater precision.

Offers a novel method for creating user interfaces

Developers are particularly intrigued by LangChain because it offers a new way of creating user interfaces. Developers can use LLMs to produce each step or question in place of conventional UI elements, doing away with the requirement for manual step ordering.

Latest Knowledge

The LangChain team continually works to make the library faster, and keeping up with the library helps you stay current with the most recent LLM features.

Use cases of LangChain

Healthcare:

By lowering the human labor and complexity associated with reading and processing medical records, LangChain enables healthcare practitioners to access and analyze information more quickly and easily.

An example of a LangChain application for the healthcare industry is a chatbot for adverse drug reaction reports, which uses ChatGPT and LangChain to query and extract insights from those reports. It can assist medical practitioners in understanding the causes, consequences, and remedies of adverse drug reactions.

Finance:

It can help finance professionals understand the performance, trends, and risks of a company or an industry. Using ChatGPT and LangChain, a chatbot for financial contracts can query and extract insights from financial contracts. Understanding a contract or agreement’s conditions, responsibilities, and ramifications will greatly benefit finance professionals. Using a chatbot for financial statements, they can also assess a company’s or an entity’s financial health, profitability, and liquidity.

Commerce:

It can benefit e-commerce by using Redis, LangChain, and OpenAI to create an e-commerce chatbot that can assist clients in finding items of interest from a big catalog. Based on the user’s question, the chatbot can discover suitable products using vector similarity search and then utilize a language model to provide realistic responses.

Banking:

LangChain can benefit the banking sector by helping to build a blockchain banking platform with Distributed Ledger Technology (DLT) that allows quicker, cheaper, more secure, and more inclusive transactions. Using a language model, the platform can support decentralized finance, robo-advisory services, asset-backed digital tokens, non-fungible tokens, digital currencies issued by central banks, smart contracts, initial coin offerings, and other financial innovations.

LangChain and OpenAI can also be used to create a code analysis tool to evaluate banking software’s performance, security, and quality. The program can recognize and generate code snippets from language models, find errors and vulnerabilities, and provide suggestions for improvement.

Conclusion

In the past, most human-computer interaction took place through command-line interfaces or strict menu-driven systems. Computers needed precise instructions or structured inputs before people could communicate with them. Natural language interaction with computers is now possible thanks to NLP, LLMs, chatbots, LangChain, and other emerging technologies, which make that interaction easier and more accessible.

Imagine that you enter a large home improvement store. Usually, you would feel overwhelmed with all the options. But with your LangChain-enabled AI-powered personal shopping assistant, finding what you need is a breeze. You tell the assistant on your smartphone app that you’re remodeling your kitchen. The assistant, using advanced language processing, shows you trending paints, suggests cabinet handles that match your chosen paint, and offers a variety of backsplashes. It also provides product comparisons, checks inventory, and answers any questions you have. With this LangChain-enabled AI assistant, home improvement shopping becomes easy and personalized. Welcome to the future of convenient retail.

As the LangChain-enabled AI continues to learn and adapt, it can help in more than just shopping scenarios. It will assist in areas such as education, healthcare, and logistics, making everyday tasks easier and more efficient. The impact of LangChain’s application on human life will be substantial, leading to a future where AI understands and responds to our needs in a personalized and intuitive manner.


Re-imagining, Re-skinning, Re-creating: Exploring the Spectrum of UI/UX Design Evolution

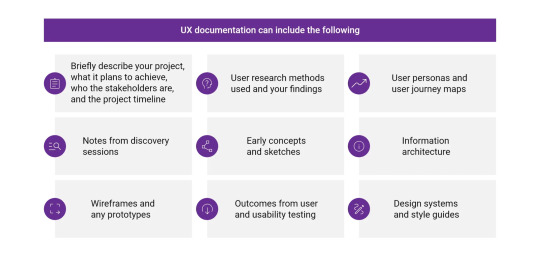

User interface (UI) and user experience (UX) design are constantly evolving disciplines driven by the need to create engaging and user-friendly digital experiences. As technology advances and user expectations change, there are different approaches designers can take to refresh and improve interfaces. In this article, we will delve into the UI/UX design process and understand the concepts of re-imagining, re-skinning, and re-creating, and how they contribute to the evolution of digital products.

Re-imagining: Unleashing Innovation and User-Centric Design

Re-imagining in UI/UX design involves a holistic approach that goes beyond incremental changes. It is about rethinking and redefining the entire user experience to address pain points, introduce innovative solutions, and meet emerging user needs. Re-imagining encourages designers to think creatively, challenge assumptions, and re-imagine the possibilities.

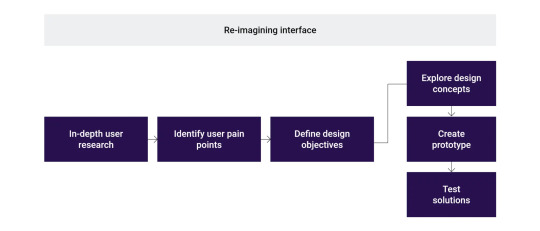

When re-imagining an interface, designers conduct in-depth user research, identify user pain points, and define clear design objectives. They then explore new design concepts, create prototypes, and test innovative solutions. Re-imagining often leads to significant changes in an interface’s structure, layout, and functionality, resulting in a transformed user experience that better aligns with user expectations. Sometimes re-imagining involves changing the user interface, and sometimes it requires changing the entire process that the user follows.

Re-skinning: Refreshing the Visual Experience

Re-skinning, also known as a facelift or visual overhaul, focuses on updating the visual elements of an existing UI without making significant changes to the underlying functionality. It is often employed when an interface looks outdated or no longer aligns with current design trends.

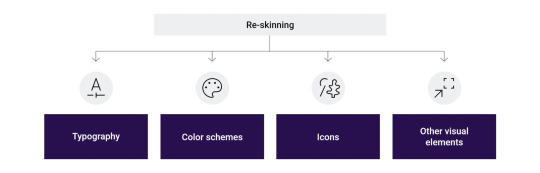

During re-skinning, designers update the color schemes, typography, icons, and other visual elements to give the interface a fresh and modern look. Re-skinning helps maintain visual consistency, align the interface with brand guidelines, and enhance the overall aesthetics. It is a cost-effective approach that can breathe new life into an interface and improve user perception without requiring extensive development work.

Re-creating: Transforming for Optimal User Engagement

Re-creating involves a comprehensive redesign of the UI and UX, going beyond visual changes. It is employed when an interface requires significant improvements, such as addressing usability issues, rethinking the information architecture, or introducing new features. During the re-creating process, designers conduct user research, redefine the user flow, and create wireframes and prototypes to test and validate new design concepts. The goal is to optimize the user experience by improving usability, enhancing navigation, and introducing innovative solutions. Re-creating often requires a more significant investment of time and resources compared to re-imagining or re-skinning. It offers the opportunity to transform the interface into a more intuitive, user-friendly, and engaging experience, resulting in increased user satisfaction and improved business outcomes.

Understanding the Spectrum

Re-imagining, re-skinning, and re-creating represent different approaches on a spectrum of UI/UX design evolution. They cater to different needs and objectives, depending on the current state of the interface and the goals of the design project. Re-imagining is about embracing innovation, challenging conventions, and pushing boundaries to create transformative experiences. It is ideal when the existing UI requires a fundamental redesign to address user pain points and introduce cutting-edge solutions.

Re-skinning focuses on refreshing the visual elements of an interface to give it a modern and updated look. It is suitable when the existing UI is visually outdated but still functions well, and the primary objective is to enhance aesthetics and maintain consistency.

Re-creating involves a comprehensive redesign encompassing an interface’s visual and functional aspects. It is the right approach when the existing UI has significant usability issues or requires a complete overhaul to align with current user expectations and business objectives.

Choosing the Right Approach

When considering which approach to take in a UI/UX design project, it is essential to assess the current state of the interface, understand user needs and pain points, and define clear design objectives.

Re-imagining is ideal when a radical transformation is needed to address user pain points, introduce innovation, and create a truly user-centric experience. It requires a deep understanding of users, careful analysis of data, and an open-minded approach to design thinking.

Re-skinning is suitable when the existing UI still functions well but needs a visual facelift to align with current design trends and brand guidelines. It is a surface-level approach that focuses more on improving aesthetics and maintaining consistency.

Re-creating is the right choice when the existing UI has significant usability issues, lacks scalability, or requires a complete redesign to meet evolving user needs. It involves a more extensive and time-consuming process but can result in a transformative user experience.

In the end, the selection of a strategy relies on the goals, constraints, and context of the design project. UI/UX designers must carefully evaluate the needs and objectives and select the most appropriate approach to drive meaningful improvements and create exceptional digital experiences.

Conclusion

Re-imagining, re-skinning, and re-creating represent different approaches in the UI/UX design process, each with its unique focus and objectives. Re-imagining encourages innovation and user-centric design thinking, re-skinning refreshes the visual experience, and re-creating involves a comprehensive redesign to optimize user engagement. By understanding the spectrum of design evolution and selecting the appropriate approach based on the specific project requirements, designers can create interfaces that meet user needs, align with business objectives, and deliver exceptional user experiences.


Human Journeys, Not Processes

When it comes to human experience, businesses often prioritize progress and efficiency, striving to optimize processes and maximize outcomes. However, in this pursuit, there is a risk of losing sight of the human element—the intricate and often unpredictable journeys customers embark on. By shifting the focus from progress to embracing authenticity and genuine human connection, companies can create meaningful and lasting experiences that resonate with customers on a deeper level.

The Human Touch in Customer Experience

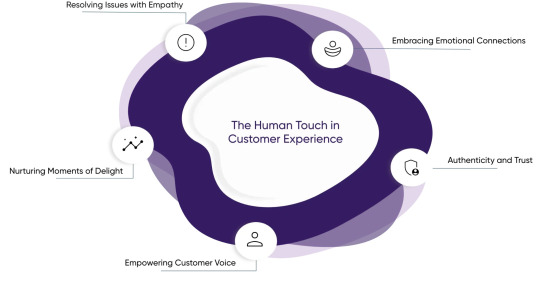

Embracing Emotional Connections

Customers are not just transactions but individuals with emotions, desires, and values. Recognizing and understanding the emotional aspects of their journeys allows businesses to connect with customers more profoundly. By fostering empathy, actively listening, and holistically addressing their customers’ needs, companies can create an emotional bond beyond mere transactions.

Authenticity and Trust

In a world saturated with marketing messages, customers crave authenticity. When businesses prioritize building genuine relationships over pushing sales, trust is established. By consistently delivering on promises, being transparent, and valuing open communication, companies can foster the trust that forms the foundation of long-term customer loyalty.

Empowering Customer Voice

Customers want to be heard and have their opinions valued. By actively seeking feedback, whether through surveys, reviews, or social media, businesses demonstrate their commitment to understanding and meeting customer expectations. Empowering customers to contribute to shaping products, services, and experiences creates a sense of ownership and strengthens the bond between the customer and the brand.

Nurturing Moments of Delight

Human journeys are filled with small moments that have the potential to create lasting memories. Businesses can create positive associations and leave a lasting impression by focusing on delighting customers at various touchpoints along their journey. It could be a personalized message, a surprise gift, or simply going the extra mile to exceed expectations. These small acts of thoughtfulness have the power to turn customers into brand advocates.

Resolving Issues with Empathy

No journey is without its challenges. When customers encounter issues or problems, how a company responds can make all the difference. By approaching these situations with empathy, actively listening, and working towards a satisfactory resolution, businesses can turn a negative experience into an opportunity to strengthen the customer relationship. Demonstrating a genuine concern for the customer’s well-being and taking responsibility for rectifying any issues builds trust and loyalty.

Conclusion

In a customer-centric world, businesses must shift their focus from internal processes to the journeys customers undertake. Companies can build deeper connections, deliver personalized experiences, and cultivate long-term loyalty by understanding and prioritizing human journeys. In a world where progress often overshadows the human touch, organizations prioritizing the authenticity of these journeys will succeed in building strong and loyal customer relationships. Ultimately, it is the human element that makes customer experiences genuinely remarkable and memorable. By placing the customer and their emotions at the forefront, organizations can differentiate themselves in a crowded marketplace and build lasting relationships that drive success.


Mastering DesignOps: Roles and Partnerships for Success

The expression “DesignOps” originates from DevOps, a cooperative method in software development and systems management that focuses on automation, agility, and efficiency. DesignOps is a discipline that focuses on the operational aspects of design, aiming to improve the efficiency, collaboration, and overall effectiveness of design teams. DesignOps roles and partnerships in UI/UX can vary from organization to organization, depending on each one’s specific needs. However, here are some typical roles and partnerships you may find in DesignOps:

DesignOps Manager / Lead

This role is responsible for overseeing the DesignOps function within an organization. They work closely with design teams, project managers, and other stakeholders to develop and implement efficient design processes, tools, and systems. They also ensure the design team has the necessary resources and support to deliver high-quality work on time.

Design Program Manager

A Design Program Manager works closely with cross-functional teams to manage and coordinate design initiatives and projects. They help define project goals, allocate resources, track progress, and ensure timely delivery of design outcomes. They also facilitate communication and collaboration between design teams and other departments, such as engineering, product management, and marketing.

Design Systems Manager

Design Systems Managers are responsible for developing and maintaining design systems, which are collections of reusable components, guidelines, and assets that ensure consistency and efficiency across different design projects. They collaborate with designers, developers, and other stakeholders to define design standards, create design libraries, and document guidelines for design implementation.

UX Research Operations

UX Research Operations professionals support the research efforts of the design team. They assist in organizing and managing user research studies, recruiting participants, coordinating research logistics, and analyzing and sharing research findings. They work closely with UX researchers and designers to ensure smooth and effective research processes.

Design Tooling Specialist

Design Tooling Specialists focus on selecting, implementing, and maintaining design tools and software that enhance the efficiency and effectiveness of design workflows. They stay current with the latest design tools and technologies and work closely with designers to provide training, support, and guidance on tool usage.

Partnerships in DesignOps typically involve collaboration with other departments and roles, such as:

Product Managers

DesignOps professionals work closely with product managers to align design processes with product development goals, define design requirements, and ensure that design work supports the overall product strategy.

Engineering Teams

Collaboration with engineering teams is essential for integrating design workflows with the development process. DesignOps professionals partner with engineers to establish effective handoff processes, ensure smooth implementation of designs, and address any technical constraints or challenges.

Marketing and Branding Teams

DesignOps professionals collaborate with marketing and branding teams to align design efforts with the organization’s brand guidelines, messaging, and marketing strategies. They work together to ensure consistent visual identity and messaging across different touchpoints.

Project Managers

Project managers are crucial in coordinating design projects and managing timelines and resources. DesignOps professionals collaborate closely with project managers to define project goals, allocate design resources, track progress, and ensure successful project delivery.

It’s important to note that the specific roles and partnerships in DesignOps can vary depending on the organization’s size, structure, and industry. Some organizations may have dedicated DesignOps teams, while others may integrate DesignOps responsibilities within existing roles or departments.


Effective software testing strategies for the financial sector

The world of financial services is going through a lot of changes as a result of technological improvements and digitization. The banking industry is heavily reliant on technologically enhanced products, and in order to provide high-quality client service, it is crucial that these products be reliable and performant. Additionally, all operations carried out by banking software must proceed without hitches or errors to guarantee safe and secure transactions. This raises the need for effective software testing strategies for the financial sector.

Applications created for the banking and financial industries typically have to adhere to a fairly tight set of standards. This results from the legal requirements that financial institutions must meet because they have power over their clients’ money. All these criteria, as well as the fundamental functional needs of banking software, should be taken into account when evaluating banking software.

Why do we need software testing in the financial sector?

The payment procedure could end in disaster if there are flaws or failures at any point. Hackers may be able to access and misuse private user data if a financial software program has a weakness. This is why financial institutions should place a high priority on end-to-end testing. It helps ensure a great user experience, customer safety, program functionality, acceptable load times, and data integrity. For a variety of reasons, the financial sector needs software testing:

Regulatory reporting

Financial firms frequently have to submit reports and audits to regulatory agencies in order to comply with regulations. Effective software testing ensures the required data is correct, comprehensive, and accessible for reporting needs. By implementing effective testing practices, organizations can confidently comply with regulatory reporting obligations and avoid fines or legal repercussions.

Customer satisfaction

Financial organizations heavily depend on customer trust and satisfaction. Customer churn can be caused by malfunctioning software, transaction mistakes, or security breaches. An effortless and satisfying user experience is made possible by effective software testing, which helps find and fix problems before they affect customers. Financial institutions may preserve consumer confidence and contentment by providing dependable and secure software.

Cost savings

Resolving bugs early in the software development lifecycle often costs less than fixing them after they have been discovered in production. Software testing aids in the early identification of problems, lowering the cost of rework, system downtime, and customer support. By using it to find performance bottlenecks and scalability problems, organizations can also optimize their infrastructure and resource allocation.

Risk mitigation

The financial industry is intrinsically fraught with risks. Software testing helps to reduce these risks by verifying that the program performs complicated financial computations and transactions accurately. It assists in identifying and resolving possible problems that can lead to monetary losses, reputational harm, or non-compliance with risk management procedures.

What are the stages in software testing?

When testing software, there are three main stages:

What Software Testing Strategies can be used in the financial sector?

Automation testing

Since they encounter various scenarios, most financial services applications need thorough testing. Test automation makes the process smoother and reduces the mistakes that can happen in manual testing. Automated test scripts and frameworks can be used for this.

Stress testing

Recreate high-stress situations to ascertain how the system will react in such circumstances. You can test the software’s robustness by subjecting it to high loads, quick transactions, or parallel user access. This aids in locating any possible flaws or failure locations.
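As a rough illustration of the parallel user access scenario, the sketch below fires many simultaneous withdrawals at a toy in-memory account and checks that the balance stays consistent. `Account` and `stress_withdrawals` are hypothetical names made up for this example, and a real stress test would target a deployed system with a dedicated load-testing tool rather than an in-process class.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Account:
    """Toy in-memory account; the lock guards the balance against concurrent updates."""

    def __init__(self, balance_cents: int) -> None:
        self.balance_cents = balance_cents
        self._lock = threading.Lock()

    def withdraw(self, amount_cents: int) -> bool:
        with self._lock:
            if amount_cents > self.balance_cents:
                return False  # reject overdrafts
            self.balance_cents -= amount_cents
            return True


def stress_withdrawals(account: Account, users: int, amount_cents: int) -> int:
    """Fire `users` withdrawals in parallel and return how many succeeded."""
    with ThreadPoolExecutor(max_workers=32) as pool:
        results = list(pool.map(lambda _: account.withdraw(amount_cents), range(users)))
    return sum(results)


account = Account(balance_cents=100_000)
succeeded = stress_withdrawals(account, users=500, amount_cents=300)

# Invariants: no overdraft, and every successful withdrawal is accounted for.
assert account.balance_cents >= 0
assert account.balance_cents == 100_000 - succeeded * 300
print(succeeded, account.balance_cents)
```

The useful part is the pair of invariant checks at the end. Under load, the exact interleaving of requests is unpredictable, so the test asserts properties that must hold regardless of ordering.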

Security testing

Security testing is often considered only near the end of the testing cycle, after the application’s functional and non-functional components have been evaluated. However, these dynamics and procedures must evolve. Financial applications now allow millions of dollars to be traded in the form of investments, goods, money, and other assets. This calls for proactive treatment of sensitive areas and close attention to potential financial breaches. By using security testing, you can look for issues and fix them in accordance with governmental and commercial regulations. Security testing helps check every platform, including mobile apps and web browsers, for vulnerabilities.

Regression testing

Regression testing is necessary as financial software is updated or improved to ensure that new changes don’t cause existing functionality to change or introduce new flaws. Create a comprehensive regression test suite that includes key features, and run regression tests often.
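One simple way to picture a regression suite is a table of inputs with expected outputs recorded from a known-good release, re-run after every change. The sketch below assumes a hypothetical `simple_interest` function and hypothetical golden values. It is not taken from any real banking system.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import List, Tuple


def simple_interest(principal: Decimal, annual_rate: Decimal, years: int) -> Decimal:
    """Interest = principal * rate * years, rounded half-up to cents."""
    interest = principal * annual_rate * years
    return interest.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical golden values: (principal, rate, years, expected interest).
REGRESSION_CASES: List[Tuple[Decimal, Decimal, int, Decimal]] = [
    (Decimal("1000.00"), Decimal("0.05"), 2, Decimal("100.00")),
    (Decimal("2500.00"), Decimal("0.035"), 1, Decimal("87.50")),
    (Decimal("199.99"), Decimal("0.10"), 3, Decimal("60.00")),  # rounding edge
    (Decimal("0.00"), Decimal("0.07"), 10, Decimal("0.00")),    # zero balance
]


def run_regression() -> List[str]:
    """Return a description of every case whose result changed."""
    failures = []
    for principal, rate, years, expected in REGRESSION_CASES:
        actual = simple_interest(principal, rate, years)
        if actual != expected:
            failures.append(f"{principal} @ {rate} x {years}: {actual} != {expected}")
    return failures


failures = run_regression()
print("regressions:", failures)
```

Using `Decimal` rather than floats is a deliberate choice for money: it keeps rounding explicit, so a change in rounding behavior shows up as a failed case instead of a silent drift.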

Performance testing

Applications for financial services are diversifying their market and product offerings, necessitating a greater understanding of the projected load on the application. Performance testing is, therefore, necessary throughout the entire development lifecycle. It aids in system load estimation, testing, and management, allowing for more appropriate application development.

Conclusion

Given the sensitivity of handling clients’ financial transactions, evaluating banking software and procedures is of the utmost importance. It necessitates technical mastery and a highly skilled team. Various software testing strategies, like security testing, performance testing, accessibility testing, API testing, and database testing, are essential alongside automated testing to guarantee the creation of error-free and superior apps.

Partnering with a professional software testing service provider like TVS Next might have considerable advantages for achieving thorough testing coverage and ensuring the greatest degree of quality assurance.


Machine Learning Trends for Financial and Healthcare Industries

Machine learning (ML) has surfaced as a game-changing influence in multiple industries, dramatically reshaping the banking, financial services, and healthcare landscape. With its proficiency in processing large quantities of data and generating predictions, machine learning is progressively becoming more valuable.

This blog will examine some of the most significant machine learning trends currently shaping the banking and financial services industry, including churn management, customer segmentation, underwriting, marketing analytics, regulatory reporting, and debt collection. We will also delve into the machine learning trends in the healthcare sector, highlighting disease risk prediction, patient personalization, and automated de-identification.

These trends are supported by insights from leading market research firms like Gartner and Forrester and consulting firms like McKinsey, BCG, Accenture, and Deloitte.

ML Trends in the Banking and Financial Services Industry

Churn Management:

Churn management is a critical concern for banking and financial service providers. Machine learning algorithms can analyze customer behavior, transaction history, and interaction patterns to identify potential churn indicators. By detecting early signs of customer dissatisfaction, businesses can proactively engage customers and offer tailored solutions to retain them.

Example: Citibank implemented a machine learning system to predict customer churn by analyzing transactional data and customer interactions. This approach helped Citibank reduce customer churn by 20% and increase customer retention.

Customer Segmentation:

Machine learning enables accurate customer segmentation, allowing banks and financial institutions to understand their customer base better. ML algorithms can analyze customer data, including demographics, transaction history, and online behavior, to identify distinct customer segments with specific needs and preferences. This information empowers businesses to create targeted marketing campaigns, personalized offerings, and tailored customer experiences.

Example: A leading financial institution employed machine learning to segment its customers based on their financial goals, spending patterns, and risk appetite. By tailoring their product offerings to each segment, the institution achieved a 15% increase in cross-selling and improved customer satisfaction.

Underwriting:

In the banking and financial services industry, underwriting is a critical process for assessing loan applications and managing risk. Machine learning algorithms can analyze large amounts of data, including credit scores, financial statements, and historical loan data, to automate and enhance the underwriting process. ML-powered underwriting systems can provide faster and more accurate risk assessments, leading to efficient decision-making and improved loan portfolio quality.

Example: LendingClub, an online lending platform, utilizes machine learning to assess borrower creditworthiness. By analyzing various data points, such as income, credit history, and loan purpose, LendingClub’s machine learning models have improved loan approval accuracy and reduced default rates.

Marketing Analytics:

Machine learning empowers banks and financial institutions to better understand customer behavior and preferences, enhancing marketing effectiveness. ML algorithms can analyze customer data, social media interactions, and campaign responses to identify trends, patterns, and customer preferences. This enables businesses to create targeted marketing strategies, optimize campaign performance, and improve customer acquisition and retention rates.

Example: Capital One employs machine learning to personalize marketing offers for credit card customers. By analyzing customer data, spending patterns, and demographic information, Capital One delivers tailored offers, resulting in increased response rates and improved customer engagement.

Regulatory Reporting:

Regulatory compliance is a significant concern for banks and financial institutions. Machine learning can automate and streamline the regulatory reporting process by analyzing and extracting relevant information from vast amounts of data. ML algorithms can ensure accuracy, identify anomalies, and provide real-time insights, enabling timely compliance with regulatory requirements.

Example: JPMorgan Chase leverages machine learning for regulatory reporting by automating data extraction and verification. This approach has improved accuracy, reduced reporting errors, and increased operational efficiency.

Debt Collection:

Machine learning can improve debt collection processes by identifying the most effective strategies and predicting the likelihood of repayment. ML algorithms can analyze customer payment history, communication patterns, and external data sources to prioritize collection efforts, tailor communication channels, and optimize resource allocation.

Example: American Express implemented machine learning algorithms to predict the likelihood of customers falling behind on payments. By proactively engaging at-risk customers and offering tailored payment plans, American Express reduced delinquency rates and improved collections efficiency.
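To make the prioritization idea concrete, here is a minimal sketch that ranks accounts by expected recovery. It assumes a repayment probability has already been produced by an upstream model; the `Account` class, its field names, and the scoring rule (balance multiplied by probability) are our own illustrative assumptions, not a description of any bank's system.

```python
from dataclasses import dataclass


@dataclass
class Account:
    account_id: str
    balance_due: float
    repay_probability: float  # assumed output of an upstream ML model, 0.0 to 1.0


def rank_for_collection(accounts):
    """Order accounts by expected recovery (balance x probability), highest first."""
    return sorted(
        accounts,
        key=lambda a: a.balance_due * a.repay_probability,
        reverse=True,
    )
```

A real collections strategy would likely weigh other factors too, such as contact cost and customer relationship value; the single score here only illustrates how a prediction can drive the order of work.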

ML Trends in Healthcare Industry

Disease Risk Prediction:

Machine learning algorithms can analyze large amounts of patient data, including medical records, genetics, and lifestyle factors, to accurately predict disease risks. By leveraging these predictions, healthcare providers can proactively intervene, develop personalized prevention plans, and improve patient outcomes.

Example: Google’s DeepMind developed a machine learning model to predict the risk of developing acute kidney injury (AKI). The model enabled healthcare professionals to identify at-risk patients earlier by analyzing patient data, allowing for timely intervention and reduced AKI incidence.

Patient Personalization:

Machine learning enables personalized healthcare by analyzing patient data to tailor treatment plans, medication dosages, and therapies to individual characteristics and needs. This approach, known as precision medicine, improves patient outcomes and minimizes adverse effects.

Example: Memorial Sloan Kettering Cancer Center employed machine learning to personalize cancer treatment recommendations. The algorithm helped oncologists determine the most effective and personalized treatment plans by analyzing patient data, including genetic information and treatment history.

Automating De-Identification:

To comply with privacy regulations, healthcare providers must de-identify patient data before sharing it for research or analysis. Machine learning can automate de-identification by accurately removing or encrypting personally identifiable information (PII) while preserving data utility.

Example: The National Institutes of Health (NIH) developed machine learning models to automate the de-identification of medical records. This approach increased efficiency, reduced human error, and ensured compliance with privacy regulations.
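As a rough illustration of what de-identification does to a record, the sketch below replaces a few identifier types with placeholder tags. Note that it uses simple regular expressions as a stand-in, not a machine learning model, and the three patterns are hypothetical examples that cover only a tiny fraction of what real PII detection must handle.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs
# far broader coverage (names, addresses, dates, record numbers, ...).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def deidentify(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder tag."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Replacing identifiers with typed tags, rather than deleting them, keeps the sentence structure intact, which helps preserve the utility of the text for later analysis.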

Conclusion

In conclusion, the financial services and healthcare industries are undergoing a significant transformation driven by machine learning technologies. As these machine learning trends evolve, we expect to see more sophisticated applications that enhance decision-making, improve operational efficiency, and deliver personalized customer experiences. By embracing machine learning, organizations in these sectors can unlock valuable insights from their data, streamline processes, and stay ahead of the competition.

However, it is essential for businesses to not only adopt these technologies but also invest in the necessary infrastructure, skilled workforce, and data management practices. This will ensure that they can fully harness the power of machine learning and capitalize on its potential to drive innovation and growth. As we move forward, the financial services and healthcare industries will undoubtedly continue to be at the forefront of machine learning advancements, setting new benchmarks for other sectors.

Practical Human-Centered Design: The Evolution of the Designer’s Role

Traditionally, designers have been responsible for creating visually appealing products and experiences. However, the designer’s role has changed with the growing emphasis on human-centered design. Designers are now responsible for understanding the needs and behaviors of the user and using that understanding to create solutions that meet those needs. This means that designers must have a deep understanding of the user and be able to apply that understanding to the design process.

What is Human-Centered Design (HCD)?

It is an approach that solves problems by prioritizing an understanding of the needs, desires, and behaviors of the end users who will use the product or service being designed. The goal of HCD is to create valuable and user-friendly things for the people who will interact with them.

Benefits of Practical Human-Centered Design

The design has several benefits, including:

As a UI/UX designer, I have personally experienced a transformative journey by adopting practical HCD principles. By prioritizing user needs and preferences, I have been able to enhance my career and growth in the following ways:

Conclusion

The designer’s role is changing, and human-centered design is becoming essential to the design process. It involves putting the user at the center of the design process and using that understanding to create practical solutions that meet their needs. By understanding the needs and behaviors of the user, designers can generate innovative solutions that improve the user experience, increase customer satisfaction, and drive business success.

User Research Methods Within Constraints

User Research is done to inspire your design, evaluate your solutions, and measure the impact of your product/project. When working with limited resources, conducting user research can seem daunting. While it’s true that having more resources at your disposal makes the task easier, there are still plenty of ways to effectively do user research, even while working within constraints. In this blog, we will take you through some essential user research methods to do effective research without breaking the bank or feeling overwhelmed.

Dive in to find out what steps you can take today to gather meaningful user insights despite restrictions.

How to do User Research with Budget Constraints

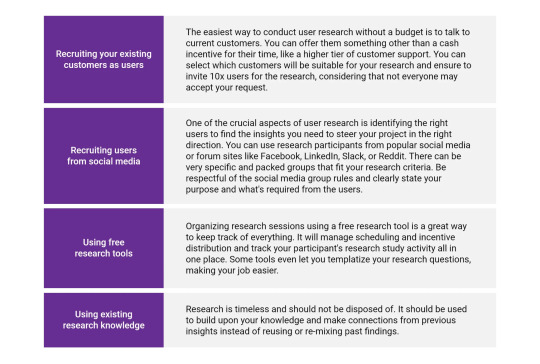

Research can be established with a minimal budget using these innovative user research methods.

How to do User Research with Time Constraints

Using a framework for optimizing engagement and data

Time is always a constraint for many companies. Several frameworks are available online, depending on the outcome they want to achieve. A framework also allows us to prioritize our teams’ research efforts better.

Using impactful users

Choosing the right users for the research is essential. Every user has a different perspective based on their experiences and exposure. For example, if your research is about building a new experience for drivers, you need to ensure your users know how to drive. Otherwise, you will not get meaningful results. Sometimes we need users with domain experience or expertise.

Prior documentation

Documentation is often not given the importance it deserves. We need to make sure we capture the different stages of the research and design so that everyone can understand which design decisions were made and why.

Conclusion

There will always be constraints that we have to work within, so we need to get creative in how we accomplish user research. Keep refining your process, starting with these tips, until it works for you and your team.

Uncovering User Need is 50% of Solving: ‘Define’ in Design Thinking

Are we trying to solve a customer’s problem? Do we know exactly what customer problem we are trying to solve? The ‘Define’ stage in Design Thinking is dedicated to defining the problem. In this blog, we will discuss user need statements, why they are essential, their format, and a few examples.

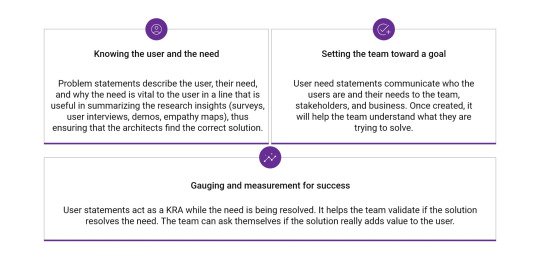

What is a user need statement?

A user need statement is an actionable problem statement that provides an overview of who a particular user is, what the user’s need is, and why that need is valuable to that user. It defines what we want to solve before we move on to generating possible solutions.

A problem statement identifies what needs to be changed, i.e., the gap between the current and desired state of the process or product.

How to build a good problem statement?

A good problem statement should always focus on the user. Here are some indicators that will help us create a good problem statement:

For example:

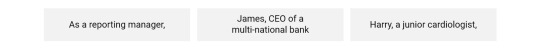

Suppose we frame our problem statement as follows: “As the reporting manager, I should have insights into the reportees reporting to me.” With this wording, we cannot be sure of the exact user need. Which insights does the reporting manager require? Should they be based on the number of reportees, their roles, the leaves they have availed, the projects they are assigned to, or something else? If we instead build the problem statement as below, we know who the user is, what they need, and why it matters to them.

As the reporting manager, I should have insights into the total number of leaves applied by my reportees (yearly/half-yearly/quarterly/monthly/weekly/day-to-day basis). I should also be able to drill down and view the details to keep project managers informed and to evaluate reportees’ KPIs.

Format

Traditionally, user need statements have 3 components:

These are then put together in the below pattern: User [the user persona] needs [the need] so that [the objective].

For example, [As a reporting manager, I] should be able [to approve leave requests applied by my reportee] so that [they can avail planned holiday(s)].
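The three-part pattern can also be captured as a small template, which makes it easy to keep statements consistent across a team. This is only a sketch: the `UserNeed` class and its field names are our own illustrative choices, not part of any design-thinking toolkit.

```python
from dataclasses import dataclass


@dataclass
class UserNeed:
    user: str       # the persona, e.g. "A reporting manager"
    need: str       # the need, phrased without naming a solution
    objective: str  # why the need matters to the user

    def statement(self) -> str:
        """Assemble the pattern: User needs [the need] so that [the objective]."""
        return f"{self.user} needs {self.need} so that {self.objective}."
```

Keeping the three parts as separate fields makes it obvious when one of them is missing or when the need has drifted into describing a feature.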

The user should refer to a specific persona or actual end user we’ve conducted discovery on. It is helpful to include a catchline that clarifies who the user is, whether the problem statement will be used by a group or an individual, what the user’s role is, etc.

The need should reflect the truth, should belong to users, should not be painted by the team, and should not be written as a solution. It should not include features, interface components, and specific technology. For example, possible goals may be:

We should recognize that users do not always know what they need, even if they may say so.

For example, a customer says that they copy content from a page in the application, paste it into Excel, and try to do calculations, which fail because of formatting issues. They want us to fix the formatting.

But the exact need is: As the accountant, I need a copy of the figures/table that I see on the screen in Excel format so that I can make further calculations if required using the data and save it for auditing purposes.

For a successful outcome, the insight or goal should fulfill the user’s needs while incorporating empathy. Instead of focusing solely on the surface-level benefits, it’s essential to consider the user’s deeper desires, emotions, fears, and motivations:

Problem statements can also take these formats:

Benefits

The process of making a user need statement and the actual statement itself have many benefits for the team and organization, including:

Conclusion

User need statements help communicate the end user’s problem we are about to solve and what value we are bringing in. When done together and correctly, they can act as a single source of truth for what the team or organization is trying to achieve.

How the healthcare industry can drive innovations through the power of cloud

Are you a hospital CEO, CIO, or director looking for innovative ways to optimize the efficiency of your healthcare organization? Healthcare organizations are beginning to look beyond traditional storage and technology capabilities and embracing cloud computing in healthcare to increase patient satisfaction, enhance collaboration within the team, improve administrative processes, drive cost savings, and more.

In this blog post, we’re taking a closer look at how cloud-driven innovations have positively impacted the healthcare industry. From enhanced patient experience to improved communication with clinicians, cloud-powered innovation can open up new possibilities for improving hospital operations!

How cloud-related healthcare innovations are transforming the industry

Cloud technology has been a game-changer for numerous industries, and healthcare is no exception. Healthcare providers are using cloud-based solutions to manage patient data, streamline processes, and improve overall care quality. One of the biggest benefits of cloud computing in healthcare is the ability to securely store and share large amounts of data. With the ability to access information from anywhere, doctors and nurses can quickly pull up patient files, review medical histories, and collaborate with colleagues in real-time. Cloud technology is also making testing and diagnosis more efficient by allowing for remote monitoring and analysis of patient data. These advancements are transforming the healthcare industry, enabling providers to consistently deliver the best possible care to their patients.

Patient data storage

If you are in the healthcare industry, you know how crucial it is to secure patient records. But did you know that cloud storage can help make this process a breeze? Not only does cloud storage save on costs, but it also enhances security measures. Since the data is stored on the cloud, it’s not vulnerable to physical theft or damage. Plus, you can access the data anytime, anywhere – making it all the more convenient for healthcare professionals on the go. With cloud storage, your patient data is in safe, secure hands. So, whether you’re a doctor, nurse, or administrator, it’s time to consider moving to cloud storage for your patient records.

Medical records access

With the help of cloud computing, doctors can access medical records in just a few clicks. Gone are the days when physicians had to physically search through piles of files and documents just to find a patient’s medical history. With cloud computing, medical records are stored online and can easily be accessed from any device with an internet connection. This not only saves time for doctors but also ensures that patient data is kept safe and secure. Doctors can quickly access important information such as test results, medication history, and treatment plans using cloud technology. It’s no wonder that so many medical facilities are turning to the cloud to streamline their operations and provide better patient care.

Access to healthcare

Telemedicine technology has revolutionized the way healthcare services are being delivered to patients. With the advent of telemedicine, patients who live in remote or underserved areas can now easily access quality medical care from the comfort of their homes. Telemedicine technology has allowed patients to consult with their doctors virtually, outside regular clinic hours, and with greater convenience. Patients can also benefit from remote monitoring devices that transmit data such as blood pressure readings and other vital signs, allowing healthcare providers to monitor their condition remotely.

Furthermore, telemedicine technology has opened up new opportunities for medical professionals to collaborate, share knowledge, and consult with each other on challenging cases, enabling better patient outcomes. It is clear that telemedicine technology has transformed the healthcare sector by increasing the availability of medical services for patients and facilitating better communication and collaboration between healthcare providers.

Accurate diagnostics

Artificial intelligence (AI) has made its way into the healthcare industry and is changing the game for healthcare professionals. With the help of AI tools, healthcare professionals can now make better decisions and improve diagnosis accuracy. These tools provide a wealth of information that doctors can use to make more informed decisions about patient care. For example, AI-powered tools can mine patient data, such as electronic health records, lab results, and images, to give doctors a more comprehensive view of a patient’s health. This allows healthcare professionals to make more accurate diagnoses and develop more effective treatment plans. As AI advances, we can expect to see even more innovative tools that will help transform healthcare delivery.

Improved patient experience

Visiting the doctor can often be a stressful and time-consuming process, but advancements in technology and changes to healthcare systems have led to a more convenient and positive experience for patients. Some of these changes include the ability to book appointments online, virtual visits with healthcare providers, and updated waiting room protocols to reduce wait times. These improvements provide convenience and lead to better patient outcomes and satisfaction. Patients are now able to receive the care they need in a more efficient and stress-free manner, ultimately enhancing their overall experience.

Conclusion

In conclusion, cloud computing in healthcare has revolutionized the industry. From cloud storage solutions that improve security and reduce costs to AI tools that aid in diagnosis accuracy, healthcare professionals now have access to a range of tools to make their work easier and more efficient. Advanced telemedicine technology has made quality healthcare services more accessible than ever before, giving patients greater convenience and shorter wait times for their appointments. Thanks to these advancements in cloud technologies, healthcare professionals and patients can benefit from increased data security and improved quality of care – making it a win-win for all involved.

Challenges In Software Testing And How To Overcome Them – Part 2

Software testing has become an integral part of the development process. It’s even more important nowadays that teams ensure the highest quality product is released, as software must work across multiple platforms and devices with different versions of operating systems. As a result, testing teams must be well-equipped with knowledge and technology solutions to keep up with this ever-evolving landscape. Unfortunately, these key challenges of software testing come with roadblocks that can leave QA leads feeling overwhelmed–until now!

In this blog post series, we’re taking a deep dive into some common issues seen in software testing and how best to overcome them. This is Part 2 of our series, so if you haven’t already read Part 1, check that out first before jumping in here. Now let’s get into it!

Lack of resources

Lack of resources in software testing can lead to several problems that can impact the quality of the software being tested, delay the testing process, and increase the risk of undiscovered defects. Lack of resources leads to insufficient test coverage, incomplete testing, inadequate testing environment, and limited test automation.

To overcome these issues, here are some strategies that software testing managers can use:

Lack of test prioritization

Test prioritization is the practice of testing the most important features or modules first. Prioritizing test cases is essential because not all test cases are equal in terms of their impact on the application or software being tested. Prioritizing helps to ensure that the most critical test cases are executed first, allowing for early identification of major defects or issues. This can also aid in risk management and determining the level of testing required for a particular release or build. By prioritizing test cases, testers can optimize their efforts, reduce testing time, and improve software quality.

When test cases are not appropriately prioritized, it can lead to inadequate testing coverage and result in missed defects. This can have a significant impact on the quality of the software, as well as customer satisfaction levels. To overcome this issue, software testing managers should prioritize testing activities to focus resources on the most critical areas of the software. Additionally, they should implement techniques such as risk-based prioritization or test case optimization to ensure that tests are being executed in the correct order. Finally, teams should continuously review and refine their test plans to remain up-to-date with changing requirements.
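One simple way to sketch risk-based prioritization is to score each test by the likelihood of failure multiplied by the business impact, then run the highest scores first. The 1-to-5 scales, the `PlannedTest` class, and the scoring formula below are assumptions chosen for illustration; teams often use richer models.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    failure_likelihood: int  # 1 (rare) to 5 (frequent), assumed scale
    business_impact: int     # 1 (minor) to 5 (critical), assumed scale

    @property
    def risk(self) -> int:
        # Simple risk score: likelihood multiplied by impact
        return self.failure_likelihood * self.business_impact


def prioritize(tests):
    """Return tests ordered from highest to lowest risk score."""
    # sorted() is stable, so tests with equal risk keep their original order
    return sorted(tests, key=lambda t: t.risk, reverse=True)
```

For example, a payment test scored 4 for likelihood and 5 for impact would run before a profile-page test scored 2 and 2.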

Lack of proper test planning

A test plan is a formal document that outlines the strategy, objectives, scope, and approach to be used in a software testing effort. It is typically created during the planning phase of the software development life cycle and serves as a guiding document for the entire testing team.

Without proper test planning, issues fall through the cracks or are duplicated unnecessarily. The four most common problems are unclear roles and responsibilities, unclear test objectives, ill-defined test documents, and the lack of a strong feedback loop.

By creating a well-structured and comprehensive test plan, testing teams can ensure they understand the testing objectives and work efficiently to deliver a high-quality software product.

Here are the elements you need to build an effective test plan:

Test environment duplication

The test environment plays a vital role in the success of software testing, as it provides an isolated environment to run tests and identify potential defects. To ensure reliable results, the test environment should be designed to replicate the software’s target deployment and usage conditions, such as hardware architecture and operating system. This allows testers to uncover defects caused by environmental differences, such as different browser versions or user input formats.

To duplicate the test environment, teams should first thoroughly document the requirements for the test environment. They should also create an inventory of all relevant hardware and software components, including versions and configurations. Once this is done, they can leverage cloud-based technologies such as virtual machines or containers to spin up multiple copies of identical environments quickly and cost-effectively. Additionally, they should configure automated systems to monitor and track changes made to the test environment during testing.
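Once the inventory of components and versions exists, checking whether a duplicated environment has drifted from the reference becomes a simple comparison. The sketch below assumes each inventory is a plain dictionary of component name to version string; the function name and data shape are hypothetical.

```python
def inventory_drift(reference: dict, candidate: dict) -> dict:
    """Return components whose versions differ or that exist in only one environment.

    Each value is a (reference_version, candidate_version) pair;
    None marks a component missing from that environment.
    """
    drift = {}
    for component in sorted(reference.keys() | candidate.keys()):
        ref_version = reference.get(component)
        cand_version = candidate.get(component)
        if ref_version != cand_version:
            drift[component] = (ref_version, cand_version)
    return drift
```

An empty result means the two inventories match. Anything else is a candidate explanation for a defect that shows up in one environment but not the other.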

Test data management

Test data is a set of values used to run tests on software programs. It allows testers to validate the behavior and performance of the software against expected outcomes. These data sets can be generated manually or automatically, depending on the complexity of the software under test. Test data can include parameters such as user credentials, system configurations, transaction histories, etc., which can accurately represent real-world scenarios.

If test data is not managed correctly, it can lead to unreliable test results and an inaccurate software assessment. Poorly managed test data can also cause tests to take longer to execute due to inconsistencies in datasets or redundant information. Additionally, incorrect assumptions may be made during testing since the results are not accurately represented. Finally, if test data is not properly archived, it can be challenging to reproduce defects and rerun tests when necessary.

Here are some key steps to consider when managing test data:

Undefined quality standards

Quality standards in software testing refer to a set of expectations for assessing the quality of the software under test. These standards are based on user requirements and industry best practices and typically include accuracy, reliability, performance, scalability, and security criteria.

If quality standards are undefined or unclear, it can confuse testers and developers and affect the overall testing quality. This can result in incorrect assumptions made during tests which may lead to undetected bugs and flaws in the final product.

To ensure good quality standards in testing, it is crucial to define objectives and expectations at the project’s outset. Meetings between stakeholders should also be held periodically throughout development to review progress against specific goals. Additionally, teams should have access to up-to-date test documentation so that they know exactly what is expected from them. Finally, regular code reviews should be conducted by experienced professionals who are knowledgeable about coding best practices.

Lack of traceability between requirements and test cases

Traceability in software testing is the process of keeping track of functional requirements and their associated tests. It is a vital part of quality assurance as it enables teams to confirm that all requirements are being tested properly and provides an audit trail in case any changes need to be made.

If there is no traceability in software testing, it can lead to problems such as undetected bugs and flaws in the final product. It also makes identifying gaps or errors in the process difficult due to the lack of an audit trail. Furthermore, without traceability, reviewing what has been tested against requirements and spotting redundant tests is hard.

Good traceability measures include maintaining detailed records of the different tests conducted, ensuring that all changes to requirements are tracked and recorded, and regularly reviewing tests against requirements. A Requirement Traceability Matrix (RTM) is a tool that maps test cases to their corresponding requirement to give teams an overview of what has been tested. This makes it easier to identify gaps or errors in the process. An RTM can also help identify redundant tests that may be inefficiently consuming resources.
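At its core, an RTM is a mapping from each requirement to the tests that cover it. The following minimal sketch builds that mapping from a hypothetical dictionary of test IDs to requirement IDs and reports requirements with no tests. The identifiers and function names are illustrative assumptions.

```python
from collections import defaultdict


def build_rtm(test_to_requirements: dict) -> dict:
    """Invert a test -> requirements mapping into requirement -> tests."""
    rtm = defaultdict(list)
    for test_id, requirement_ids in test_to_requirements.items():
        for requirement_id in requirement_ids:
            rtm[requirement_id].append(test_id)
    return dict(rtm)


def untested(requirements, rtm):
    """List the requirements that no test case maps to."""
    return [r for r in requirements if not rtm.get(r)]
```

Requirements that map to many tests are not necessarily over-tested, but they are a reasonable place to start when looking for redundant test cases.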

People issues

Conflicts between developers and testers can arise due to differences in their primary roles and objectives. Developers often focus on creating functional code, while testers focus more on product quality. These differing perspectives can lead to disagreements over issues such as when a test should be performed or how much time is allocated to testing.

To resolve these issues, both parties need open communication and a better understanding of each other’s goals. Additionally, setting clearer expectations of what needs to be achieved can help bring clarity and focus to the process. Finally, introducing metrics that measure overall effectiveness can help identify areas for improvement and act as an incentive for collaboration between developers and testers.

Release day of the week

Release management is essential to software development, as it aligns business needs with IT work. Automating day-to-day release manager activities can lead to a misconception that release management is no longer critical and can be replaced by product management.

When products and updates have to be released multiple times a day, manual testing becomes impossible to keep up with accurately. New features pose an even greater challenge with the needed levels of speed, accuracy, and additional testers. As the end of the working week approaches, there’s often a looming deadline between developers and the weekend – leaving little time for necessary tasks such as regression testing before deployment.

Deployment is nothing more than an additional step in the software lifecycle. You can deploy on any given day, provided you are willing to observe how your code behaves in production. If you’re just looking to deploy and get away from it all, then maybe hold off until another day, because tests alone will not give you a proper understanding of how your code will perform in a real environment. In the absence of DevOps AI that automates observation and performs rollbacks when necessary, teams must ensure their code works as intended, especially on Fridays.

Piecemeal deployments are key for releasing faster and improving code quality – though counterintuitive, doing something frightening repeatedly will help make it mundane and normalize it over time. Releasing frequently can help catch bugs sooner, making teams more efficient in the long run.

Lack of test coverage

Test coverage denotes how much of the software codebase has been tested. It is typically expressed as a percentage, indicating the amount of code being tested in relation to the total size and complexity of the codebase. Test coverage can be used to evaluate the quality of the tests and ensure that all areas of the project have been sufficiently tested, reducing potential risks or bugs in production.
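As a small worked example, the percentage can be computed from two counts. We assume line coverage here (lines executed by tests divided by total executable lines), which is only one of several ways to measure coverage; the function name is our own.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Line coverage as a percentage of executable lines exercised by tests."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= covered_lines <= total_lines:
        raise ValueError("covered_lines must be between 0 and total_lines")
    return 100.0 * covered_lines / total_lines
```

With 450 of 600 executable lines exercised, the function reports 75 percent. A high number shows that code was executed by tests, not that its behavior was meaningfully checked.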

Issues that can occur due to inadequate test coverage include software bugs, decreased reliability and stability of the software, increased risk of security vulnerabilities, and increased dependence on manual testing. Inadequate test coverage can also lead to inefficient development cycles, as unexpected errors may only be found later in the process. Finally, inadequate test coverage can lead to increased maintenance costs as more effort is needed to fix issues after release.

One way to address the problem of lack of test coverage is to ensure that all areas of the codebase are tested. This can be done by utilizing unit, integration, and system-level testing. It is also important to use automation for tests to ensure sufficient coverage. Additionally, it is essential to have rigorous code reviews to detect potential issues early on and set up software engineering guidelines that provide clear standards for coding practices and quality control.

Defect leakage

Defect leakage occurs when bugs and defects remain in a codebase even after tests have been conducted. This can lead to serious issues for software applications and must be addressed as soon as possible.

Defect leakage usually happens when there is insufficient test coverage, where not all areas of the codebase are properly tested. This means that some parts of the application will go unchecked, so potential flaws or bugs are missed. Additionally, if the requirements analysis process was incomplete, certain scenarios or conditions may not have been considered during testing. Inadequate bug reporting and tracking processes can also result in undiscovered defects slipping through testing.

The best way to prevent defect leakage is by ensuring all areas of the codebase are thoroughly tested utilizing unit testing, integration testing, and system-level testing. Automation should also be utilized wherever possible to ensure adequate coverage across the entire application. Additionally, rigorous code reviews should be done so any potential issues can be detected early on and corrected before they become more severe problems down the line. Finally, organizations should set software engineering guidelines that help developers create high-quality code while ensuring defects don’t slip through testing unnoticed.
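Teams that want to track defect leakage over time often express it as a percentage. The sketch below assumes one common convention, which is not taken from this post: defects found after release divided by all defects found (in testing plus after release). Some teams divide by the defects found in testing only, so agree on a definition before comparing numbers.

```python
def defect_leakage_percent(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that escaped testing, as a percentage.

    Assumed convention: leaked / (caught + leaked) * 100.
    """
    if found_in_testing < 0 or found_after_release < 0:
        raise ValueError("defect counts cannot be negative")
    total = found_in_testing + found_after_release
    if total == 0:
        return 0.0  # no defects recorded at all, so nothing leaked
    return 100.0 * found_after_release / total


# Hypothetical release: 45 defects caught by testing, 5 reported afterwards
example_leakage = defect_leakage_percent(45, 5)
```

A rising value from one release to the next suggests that testing is missing whole areas or scenarios, which points back to the coverage and requirements gaps described above.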

Defects marked as INVALID

Invalid bugs are software defects reported by testers or users but ultimately discarded because they don’t indicate a real issue. These bugs can lead to wasted resources and time as developers and testers work on them without making any progress.

Invalid bugs typically occur due to insufficient testing, where certain areas of the codebase have not been thoroughly tested or covered. This can lead to false positives where software appears to malfunction even though it works properly. Additionally, if the requirements analysis process was incomplete, it’s possible that certain scenarios or conditions weren’t considered during testing, which could also cause invalid bugs to be raised.

The best way to avoid invalid bugs is to ensure quality assurance processes are up-to-date and robust. Testers should perform thorough tests across all areas of the application before releasing a new version – unit testing, integration testing, system-level testing, etc. Automation should also be utilized wherever possible to ensure adequate coverage across the entire application and reduce room for human error in manual testing. Reports from users must be taken seriously – however, each report should be investigated carefully before concluding whether an issue needs further attention.

Running out of test ideas

If a software tester runs out of ideas, it can be a huge problem – they won’t be able to find any more defects. Thus, the quality assurance process will suffer. To overcome this issue, testers should use different methods and approaches to ensure that all application areas are thoroughly tested.

First off, it’s essential to have a clear understanding of the requirements and make sure that these have been considered when testing. It may be helpful for testers to use tools such as mind maps or fishbone diagrams to organize their thoughts before beginning testing. Additionally, testers should consider using automated testing tools or scripts to cover more ground effectively; repeating certain tests across multiple environments can help find potential bugs faster.

Another valuable approach for finding bugs is exploratory testing, where testers explore the application by trying out different scenarios and use cases that weren’t included in the initial test plans. This approach encourages creativity from the tester and can uncover unexpected issues which were previously unknown. Additionally, bug bounty programs can be set up, which allow external users to report any bugs they find on an application – this way, new ideas can be generated without requiring more input from internal testers.

Struggling to reproduce an inconsistent bug

Inconsistent bugs are software defects caused by an inconsistency between different versions or areas of the codebase. These bugs can be difficult to debug because it’s not always immediately obvious why one part of the application behaves differently from another.

Inconsistent bugs usually occur when changes are made to a product, but not every area is updated accordingly. This can lead to some areas being more up-to-date than others, creating discrepancies in behavior between them. Additionally, if developers have used different coding practices for different parts of the codebase, inconsistencies can also arise, such as using one function in one area and an alternative function in another even though both are meant to perform the same task.

The best way to fix inconsistent bugs is to ensure that all relevant code is up-to-date and consistent across each version and environment. Automation tools should be utilized wherever possible in order to distribute updates quickly and efficiently while keeping track of changes. Additionally, developers need to use similar coding practices throughout each area; this includes using consistent syntax and structure so that discrepancies don’t arise unintentionally. Finally, testers must keep a close eye on newly released versions by performing thorough tests across multiple environments; this will help identify any issues quickly before they become widespread.
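One way to catch the "two functions for the same task" situation is to run both implementations on the same inputs and report where they disagree. The following is a minimal sketch of that idea; the two `normalize_*` helpers are hypothetical stand-ins for duplicated logic in different parts of a codebase.

```python
from typing import Any, Callable, Iterable, List, Tuple


def find_disagreements(
    impl_a: Callable[[Any], Any],
    impl_b: Callable[[Any], Any],
    inputs: Iterable[Any],
) -> List[Tuple[Any, Any, Any]]:
    """Return (input, result_a, result_b) for every input where the results differ."""
    mismatches = []
    for value in inputs:
        result_a, result_b = impl_a(value), impl_b(value)
        if result_a != result_b:
            mismatches.append((value, result_a, result_b))
    return mismatches


# Hypothetical duplicated helpers that are both meant to clean up a username
def normalize_v1(name: str) -> str:
    return name.strip().lower()  # strips all surrounding whitespace


def normalize_v2(name: str) -> str:
    return name.lower().strip(" ")  # strips spaces only, so tabs survive


sample_names = ["Alice", " Bob ", "carol\t"]
example_mismatches = find_disagreements(normalize_v1, normalize_v2, sample_names)
```

Here the two helpers agree on ordinary input and only diverge on a trailing tab, which is exactly the kind of discrepancy that shows up as a hard-to-reproduce bug in one area of the application but not another.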

Blame Game

The blame game is a common problem in software testing projects that can lead to communication breakdowns between different teams and avoidable mistakes. In order to avoid this issue, it’s crucial for everyone involved to take responsibility for the tasks assigned to them and understand the importance of their role.

An excellent way to prevent blame game scenarios is by having transparent processes in place right from the start. Agree on who is responsible for what tasks, and ensure that progress updates are communicated frequently among all stakeholders. Additionally, holding regular reviews or retrospectives throughout the testing process can help identify issues early before they become serious problems – allowing any necessary changes to be made quickly and effectively.

Setting up an environment of trust is also key to avoiding blame game situations. Team members should feel comfortable discussing challenges without fear of criticism or judgment, resulting in higher-quality output, as issues can be discussed openly without worrying about assigning blame afterward. Additionally, testers should remain focused on understanding the reason behind any errors rather than just pointing out mistakes – this will empower them to suggest solutions instead of simply criticizing others’ work.

Conclusion

Software testing is a complex and challenging process, but with the right systems and processes in place, it’s possible to keep problems to a minimum. Building trust between different teams, setting up clear guidelines for all stakeholders, and utilizing automated testing tools are key components to ensuring that the software you produce meets the highest quality standard. With these approaches in place, it is possible to develop and launch robust applications without too much difficulty.

Challenges In Software Testing And How to Overcome Them – Part-1

Software testing is an essential but often underestimated step in software development. Ensuring that the finished product meets the users’ expectations and provides a valuable experience is necessary. However, certain challenges can arise during software testing, inhibiting success. By understanding these challenges and taking steps to mitigate them, businesses can ensure their software works as intended. In this two-part series, we will explore the common challenges in software testing and provide tips on overcoming them.

Lack of communication

Communication is a key aspect of every kind of business. In the tech industry, where both people and machines communicate, a lack of communication impacts businesses on various levels, like:

To overcome these challenges, effective communication should be practiced as routine in daily scrum connects and stand-up meetings. Managers should ensure all their team members have clear objectives and equal communication opportunities.

Missing or no documentation or insufficient requirements

Requirements in software testing refer to the specifications and expectations the client and stakeholders set out. These requirements typically include the expected outcomes, timeline, budget, level of quality assurance needed, and other important factors relating to software product development. Requirements should be clearly communicated and agreed upon between all stakeholders before beginning development, as they are critical for the successful implementation of a project.

The following statistics clearly depict the importance of requirements:

Testing teams can overcome challenges in software testing due to a lack of requirements by following these tips:

Diversity in the testing environment

Diversity in software testing is essential because it brings diverse perspectives and experiences to the testing process, which can help identify a broader range of defects, improve testing accuracy, and ensure the software product is suitable for all users.

If there’s no diversity in testing teams, it could lead to challenges in software testing like blind spots in testing, exclusion of user perspectives, inaccuracy in testing, biases in testing, and poor software quality.

Here are some key reasons why diversity in software testing is important:

Diversity in software testing promotes inclusivity and accuracy, ensuring that the software product is suitable for all users. According to several studies, businesses with diverse teams of employees get more substantial financial returns.

Inadequate testing

Coding, like testing, is one phase of the software development life cycle (SDLC), but the testing phase comes nearly at the end of it. All phases of the SDLC are equally essential to deliver high-quality software products. Tech history shows that inadequately tested software can be fatal to a product and can turn business profits into losses. Market leaders might lose market share because of defective, inadequately tested software products. Customers look for alternatives when brands they relied on release products without testing.

Inadequate testing can be overcome by:

Company’s culture

A company’s culture can substantially impact a software testing team’s morale, productivity, and effectiveness. If the organizational culture values speed over accuracy, it can lead to a testing team feeling rushed, resulting in errors that could have been detected earlier in the development process. A vibrant culture can assist people in thriving professionally, enjoying their job, and finding meaning in their work.

Cross-cultural challenges in software testing could be managed by following these tips:

Time zone differences

An increasing number of businesses are considering setting up a remote development team, which forces them to make many important decisions. How to manage and overcome time-zone differences is one of them.

Time zone differences can have several adverse effects on software testing teams, affecting the quality and timing of the testing effort. Here are some of the critical issues that can arise:

Here are some simple hacks to overcome time zone challenges:

By implementing these strategies, software testing teams can overcome time zone differences and collaborate effectively to ensure the timely delivery of high-quality software products.
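A practical first step is to work out how many working hours two sites actually share. The sketch below is an illustration under stated assumptions: each site works 09:00 to 17:00 local time, and each site is described by a fixed UTC offset (hypothetical values), so daylight saving changes are ignored.

```python
from datetime import date, datetime, time, timedelta, timezone


def working_window_utc(day, utc_offset_hours, start=time(9), end=time(17)):
    """Return a site's working window on a local calendar day, converted to UTC."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    begin = datetime.combine(day, start, tzinfo=tz)
    finish = datetime.combine(day, end, tzinfo=tz)
    return begin.astimezone(timezone.utc), finish.astimezone(timezone.utc)


def overlap_hours(day, offset_a, offset_b):
    """Hours of site A's working day that overlap with site B's working hours.

    Site B's previous, same, and next local days are all checked, because
    widely separated sites can overlap across a date boundary.
    """
    a_start, a_end = working_window_utc(day, offset_a)
    total = timedelta(0)
    for shift in (-1, 0, 1):
        b_start, b_end = working_window_utc(day + timedelta(days=shift), offset_b)
        overlap = min(a_end, b_end) - max(a_start, b_start)
        if overlap > timedelta(0):
            total += overlap
    return total.total_seconds() / 3600


# Hypothetical sites: UTC+0 and UTC+5:30 on an arbitrary date
example_overlap = overlap_hours(date(2024, 1, 15), 0, 5.5)
```

Knowing the shared window (two and a half hours in this made-up case) makes it easier to decide when to schedule hand-offs and joint meetings, and which work has to be planned so that it does not depend on a live conversation.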

Unstable test environment or irrelevant test environment

Test environments and assets are frequently hosted at geographically distant sites. The test teams depend on support teams to deal with hardware, software, firmware, networking, and build/firmware upgrade challenges. This often takes time and causes delays, particularly where the test and support teams are based in different time zones.

Unstable environments can potentially derail the overall release process, as frequent changes to the software environments can delay the overall release cycle and test timelines. Dedicated test environments are essential. Support teams should be available to troubleshoot issues popping up from test environments. Good test environment management improves the quality, availability, and efficiency of test environments to meet milestones and ultimately reduces time-to-market and costs.

Instability in test environments can be handled by following these tips and tricks:

Tools being force-fed

In many projects and organizations, existing tools play havoc with project deliverables. QA team members point out that a tool is outdated or not a suitable match for the project, and yet the unwanted tool remains a vital part of the project. The testing teams struggle without the latest tools required for their work. There are many cases where QA teams are not consulted when new tools are bought. When test engineers cannot use a tool that suits them, it leads to several challenges in software testing.