#improving estimate accuracy


How Accurate Is a Residential Estimating Service?

Accuracy is the backbone of any successful construction or renovation project. When homeowners or developers hire a residential estimating service, they often ask one crucial question: “How accurate is it really?” The short answer is that it can be highly accurate—if it's done by experienced professionals using updated data, proven methods, and clear project documentation. But to fully understand the reliability of a residential estimating service, it's essential to explore what influences accuracy and what clients can expect.

Experience and Methodology

The accuracy of an estimate depends largely on the estimator’s experience and the methodology used. Seasoned professionals analyze design documents, blueprints, specifications, and site conditions. They use standard estimating practices, historical cost data, and regional pricing databases. Services that rely on manual processes alone may leave room for human error, but those that incorporate digital takeoff tools and cost databases produce more precise results.

Level of Design Detail

The more detailed the construction documents, the more accurate the estimate. A preliminary estimate based on a concept drawing may have a 20–30% margin of error. In contrast, estimates based on full construction drawings with detailed specs can reach 90–95% accuracy, that is, a margin of error of roughly 5–10%. Estimators often specify the expected level of accuracy by project stage: conceptual, schematic, design development, or construction-ready.
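As a rough illustration of how the margins quoted above turn into a budget range, here is a minimal Python sketch. The stage keys, the `estimate_range` helper, and the $400,000 base figure are assumptions made for this example, not data from any estimating service.

```python
# Margins of error taken from the figures quoted above. The dictionary keys
# are hypothetical labels chosen for this sketch.
MARGIN_BY_STAGE = {
    "concept": (0.20, 0.30),                # 20-30% margin of error
    "construction_drawings": (0.05, 0.10),  # 90-95% accuracy
}


def estimate_range(base_cost, stage):
    """Return (low, high) bounds using the widest margin quoted for the stage."""
    _, worst_margin = MARGIN_BY_STAGE[stage]
    return base_cost * (1 - worst_margin), base_cost * (1 + worst_margin)


# Hypothetical $400,000 project priced from a concept drawing.
low, high = estimate_range(400_000, "concept")
print(f"Concept-stage range: ${low:,.0f} to ${high:,.0f}")

# The same project priced from full construction drawings.
low_cd, high_cd = estimate_range(400_000, "construction_drawings")
print(f"Construction-drawing range: ${low_cd:,.0f} to ${high_cd:,.0f}")
```

The point of the comparison is simply that the same base figure carries a much wider band of possible outcomes early in design than it does once the drawings are complete.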

Use of Updated Cost Data

One factor that directly affects accuracy is the pricing data used. The best estimating services subscribe to national and regional cost databases or consult real-time supplier and subcontractor quotes. Accurate pricing includes labor, materials, equipment rentals, permits, and contingencies. Estimates that use outdated pricing or generic figures are more likely to lead to cost overruns later.

Accounting for Regional Differences

Costs for residential construction vary greatly based on location due to differences in labor rates, building codes, and material availability. A professional residential estimating service customizes the estimate to the project’s location, ensuring it reflects local conditions rather than national averages.

Contingency and Risk Factors

No estimate is perfect, but a good service includes a contingency percentage to cover unforeseen changes. These may include design modifications, site condition issues, or material shortages. Factoring in these risks doesn’t compromise accuracy—it improves the estimate’s realism.

Client Input and Revisions

Accurate estimates rely on good communication. Clients who provide clear goals, budgets, and decisions upfront allow estimators to craft more tailored and precise results. Also, reputable estimating services allow for revisions, especially if the project scope evolves.

Conclusion

A residential estimating service can deliver highly accurate results—especially when the estimator is experienced, the documentation is detailed, and the pricing is current. While no estimate is flawless, the best services offer transparency, account for variables, and include contingencies to avoid surprises. For homeowners and builders seeking clarity and control over construction budgets, a professional estimate is an essential foundation.

#how accurate is residential estimating#residential estimating service accuracy#estimating errors in home projects#factors affecting estimate accuracy#is construction estimate reliable#accuracy of home building estimates#estimating services vs actual cost#estimating accuracy for home renovation#margin of error in residential estimating#cost prediction in house construction#trusted residential estimator#accurate construction budgeting#construction estimate confidence levels#estimate reliability for homeowners#estimate range in home builds#getting precise home cost estimate#professional vs DIY estimates#errors in renovation cost estimation#real cost vs estimate comparison#improving estimate accuracy#estimator experience and accuracy#regional cost estimating#how estimators predict costs#price variance in residential projects#how accurate is digital takeoff#estimate precision by project phase#residential estimate accuracy factors#estimation risk allowance#updating estimates during project#comparing estimate to final budget


Advancements in Precise State of Charge (SOC) Estimation for Dry Goods Batteries

In the dynamic world of dry goods batteries, accurately estimating the state of charge (SOC) is crucial for optimal performance and longevity. This article explores two widely used methods for SOC estimation: the ampere-hour integral method (sometimes rendered as the "Anshi" integral method, and also known as coulomb counting) and the open-circuit voltage method. By examining their mechanics, strengths, and limitations, we aim to clarify each method's suitability for different battery types and to highlight recent advancements in SOC estimation.

I. The Ampere-Hour (Anshi) Integral Method

The ampere-hour integral method calculates SOC from the charge and discharge currents, the elapsed time, and the battery's total capacity. It is a cornerstone of SOC estimation and is suitable for a wide range of battery chemistries.

Operational Mechanics

Current Measurement: Charge and discharge currents are measured with high-precision sensors.

Time Integration: The measured current is integrated over time to determine the total charge transferred.

SOC Calculation: The total charge transferred is divided by the battery's capacity, and the result is subtracted from the initial SOC on discharge or added to it on charge.
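The three steps above can be sketched in a few lines of Python. This is a minimal illustration of ampere-hour integration (the method this section calls the Anshi integral method), not a production implementation. The function name, the sign convention (positive current means discharge), and the example battery values are all assumptions.

```python
def coulomb_count(soc_initial, currents_a, dt_s, capacity_ah):
    """Track SOC by ampere-hour integration.

    soc_initial: starting SOC as a fraction between 0 and 1.
    currents_a:  current samples in amperes; positive = discharge
                 (an assumed sign convention for this sketch).
    dt_s:        fixed sampling interval in seconds.
    capacity_ah: usable battery capacity in ampere-hours.
    """
    soc = soc_initial
    history = []
    for current in currents_a:
        # Charge moved during this sample, converted from A*s to Ah.
        delta_ah = current * dt_s / 3600.0
        # Express the charge as a fraction of capacity and update SOC.
        soc -= delta_ah / capacity_ah
        # Keep the estimate inside the physically meaningful range.
        soc = min(1.0, max(0.0, soc))
        history.append(soc)
    return history


# Hypothetical example: a 10 Ah battery at 80% SOC discharged at 2 A
# for one hour, sampled once per second.
trace = coulomb_count(0.80, [2.0] * 3600, 1.0, 10.0)
print(round(trace[-1], 3))  # 0.6
```

Because the result depends on `soc_initial` and on every current sample, any error in either one carries forward into all later values, which is why the method is usually paired with a periodic correction.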

Strengths

Versatility: Applicable to different battery chemistries.

Robustness: Resilient to short-term noise and parameter variations, supporting reliable SOC monitoring.

Accuracy: Provides precise SOC estimates when combined with other methods.

Limitations

Sensor Dependence: Accuracy relies on the quality of the current sensors, which affects the battery management system's overall SOC estimate.

Temperature Sensitivity: SOC calculation can be affected by temperature variations, necessitating adaptive measures.

Computational Load: Continuous sampling and integration add processing load in real-time applications, and measurement errors accumulate over time unless the estimate is periodically recalibrated.

II. The Open-Circuit Voltage Method

The open-circuit voltage method estimates SOC by measuring a battery's voltage when no load is connected. This method is particularly effective for ternary and lithium manganate batteries because of their distinct voltage characteristics.

Operational Mechanics

Voltage Measurement: The battery's open-circuit voltage is measured.

SOC Lookup Table: The measured voltage is compared against a pre-constructed table of voltage-SOC pairs.

SOC Determination: The corresponding SOC value is read from the lookup table.
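A lookup of this kind might look like the following Python sketch. The table values are hypothetical placeholders, not measurements for any real cell, and the linear interpolation between table points is an assumption added here for illustration (the text only describes a table lookup).

```python
from bisect import bisect_left

# Hypothetical (open-circuit voltage in volts, SOC fraction) pairs, sorted by
# voltage. Real tables are measured for each cell type.
OCV_TABLE = [
    (3.00, 0.00),
    (3.45, 0.10),
    (3.60, 0.30),
    (3.70, 0.50),
    (3.90, 0.80),
    (4.20, 1.00),
]


def soc_from_ocv(voltage):
    """Look up SOC for a rested open-circuit voltage, interpolating linearly."""
    volts = [v for v, _ in OCV_TABLE]
    # Clamp readings that fall outside the table.
    if voltage <= volts[0]:
        return OCV_TABLE[0][1]
    if voltage >= volts[-1]:
        return OCV_TABLE[-1][1]
    # Find the first table entry at or above the measured voltage.
    i = bisect_left(volts, voltage)
    v0, s0 = OCV_TABLE[i - 1]
    v1, s1 = OCV_TABLE[i]
    # Interpolate between the two neighbouring entries.
    return s0 + (s1 - s0) * (voltage - v0) / (v1 - v0)


print(round(soc_from_ocv(3.65), 3))  # 0.4
```

Where the voltage barely changes with SOC, a small voltage error maps to a large SOC error, which is the practical reason the method suits some chemistries better than others.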

Strengths

Simple Implementation: Requires minimal hardware and computational resources.

High Accuracy: Provides precise SOC estimates for specific battery chemistries.

Temperature Independence: Relatively unaffected by temperature variations.

Limitations

Limited Applicability: Effective only for batteries with well-defined voltage-SOC relationships.

Lookup Table Dependence: Accuracy depends on the quality and completeness of the lookup table.

Dynamic Voltage Fluctuations: Self-discharge and other factors can shift the open-circuit voltage and reduce the accuracy of the estimate.

III. Suitability for Different Battery Types

The open-circuit voltage method is generally applicable, but its accuracy varies depending on the battery chemistry:

Ternary Batteries: Highly suitable due to distinct voltage-SOC relationships.

Lithium Manganate Batteries: Performs well due to stable voltage profiles.

Lithium Iron Phosphate Batteries: Requires careful implementation and calibration for accurate estimation within specific SOC segments.

Lead-Acid Batteries: Less suitable due to non-linear voltage-SOC relationships.

IV. Factors Affecting State of Charge Calculation

Several factors influence SOC estimation accuracy:

Current Sensor Quality: Accuracy depends on high-precision current sensors.

Temperature Variations: Battery capacity changes with temperature, affecting SOC calculation.

Battery Aging: Aging reduces capacity and increases internal resistance, impacting SOC accuracy.

Self-discharge: Natural discharge over time is not seen by the current sensor, so it can lead to overestimation of SOC.

Measurement Noise: Electrical noise in the system can introduce errors in SOC calculation.

V. Enhancing SOC Estimation Accuracy

To achieve accurate SOC estimation, several strategies can be employed:

Fusion of Methods: Combining the ampere-hour (Anshi) integral method with the open-circuit voltage method improves accuracy by leveraging both dynamic and static information.

Adaptive Algorithms: Real-time data-driven algorithms compensate for changing battery parameters and environmental conditions.

Kalman Filtering: Advanced filtering techniques reduce the effect of measurement noise, enhancing accuracy and reliability.
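One way to picture the fusion and filtering ideas above is a scalar Kalman filter that predicts SOC by current integration and corrects it whenever an open-circuit-voltage-based SOC reading is available. The sketch below is a simplified illustration under stated assumptions, not how any particular battery management system works: the function name, the noise variances, and the sign convention (positive current means discharge) are all hypothetical.

```python
def fuse_soc(soc_prev, var_prev, current_a, dt_s, capacity_ah,
             soc_ocv=None, process_var=1e-6, ocv_var=1e-3):
    """One step of a scalar Kalman filter on SOC.

    Predict with current integration; if an OCV-derived SOC reading is
    supplied (for example after the battery has rested), correct toward it.
    Returns the new SOC estimate and its variance.
    """
    # Predict: integrate current and grow the uncertainty.
    soc_pred = soc_prev - current_a * dt_s / 3600.0 / capacity_ah
    var_pred = var_prev + process_var
    if soc_ocv is None:
        return soc_pred, var_pred
    # Update: weight the OCV reading by the relative uncertainties.
    gain = var_pred / (var_pred + ocv_var)
    soc_new = soc_pred + gain * (soc_ocv - soc_pred)
    var_new = (1.0 - gain) * var_pred
    return soc_new, var_new


# Hypothetical example: the integrated estimate says 50%, a rested OCV reading
# says 60%, and both are treated as equally uncertain.
soc, var = fuse_soc(0.50, 1e-3, current_a=0.0, dt_s=1.0, capacity_ah=10.0,
                    soc_ocv=0.60, process_var=0.0, ocv_var=1e-3)
print(round(soc, 3), var)  # 0.55 0.0005
```

With equal uncertainties the filter lands halfway between the two sources. A more trusted OCV reading (smaller `ocv_var`) would pull the estimate closer to it.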

VI. Impact of Accurate SOC Estimation

Accurate SOC estimation has significant implications across various applications:

Optimized Battery Usage: Avoiding overcharging and deep discharging extends battery life and enhances performance.

Improved Safety: Reliable information on remaining capacity prevents safety hazards associated with improper charging or discharging.

Extended Battery Lifespan: Minimizing stress on batteries prolongs their lifespan, reducing costs and environmental impact.

Efficient Battery Management: Accurate SOC information lets the battery management system optimize charging and discharging and prevent premature failure.

VII. Applications in Various Industries

Accurate SOC estimation finds applications beyond dry goods batteries:

Renewable Energy Systems: Optimizes energy storage in solar and wind power installations.

Electric Vehicles: Predicts driving range and optimizes battery performance.

Portable Electronics: Provides reliable information on remaining battery life in smartphones and laptops.

Medical Devices: Ensures reliable operation of battery-powered medical devices for patient safety.

VIII. Future Development

Advancements in SOC estimation can be expected in the following areas:

Advanced Machine Learning Techniques: Analysing data patterns for even greater accuracy.

Battery Health Monitoring Integration: Comprehensive insights into battery performance and failure prediction.

Wireless Communication: Real-time monitoring and remote battery management.

Conclusion

Accurately estimating state of charge is crucial for optimizing dry goods battery performance and lifespan. Understanding the mechanics, strengths, and limitations of the ampere-hour (Anshi) integral method and the open-circuit voltage method allows informed selection and implementation for different battery types. As technology progresses, further advancements in SOC estimation techniques will enhance the efficiency and reliability of dry goods batteries across diverse applications.

#State of charge estimation#SOC estimation for dry goods batteries#Precise SOC estimation technology#Advancements in SOC estimation#SOC measurement for dry batteries#Battery state of charge monitoring#SOC algorithms for batteries#Accurate SOC estimation methods#Dry goods battery SOC improvement#State of charge estimation techniques#Battery management system SOC#Improving SOC estimation accuracy#Battery SOC prediction advancements#Real-time SOC estimation for batteries#Innovations in battery SOC tracking


Sign of the Day - Boston again… another great overpass banner sign there….

* * * * *

LETTERS FROM AN AMERICAN

June 4, 2025

Heather Cox Richardson


Just hours after President Donald J. Trump posted on social media yesterday that “[b]ecause of Tariffs, our Economy is BOOMING!” a new report from the Organization for Economic Cooperation and Development (OECD) said the opposite. Founded in 1961, the OECD is a forum in which 38 market-based democracies cooperate to promote sustainable economic growth.

The OECD’s economic outlook reports that economic growth around the globe is slowing because of Trump’s trade war. It projects global growth slowing from 3.3% in 2024 to 2.9% in 2025 and 2026. That economic slowdown is concentrated primarily in the United States, Canada, Mexico, and China.

The OECD predicts that growth in the United States will decline from 2.8% in 2024 to 1.6% in 2025 and 1.5% in 2026.

The nonpartisan Congressional Budget Office (CBO) released two analyses today of Trump’s policies that add more detail to that report. The CBO’s estimate for the effect of Trump’s current tariffs—which are unlikely to stay as they are—is that they will raise inflation and slow economic growth as consumers bear their costs. The CBO says it is hard to anticipate how the tariffs will change purchasing behavior, but it estimates that the tariffs will reduce the deficit by $2.8 trillion over ten years.

Also today, the CBO’s analysis of the Republicans’ “One Big, Beautiful Bill” is that it will add $2.4 trillion to the deficit over the next decade because the $1.2 trillion in spending cuts in the measure do not fully offset the $3.7 trillion in tax cuts for the wealthy and corporations. Republicans have met this CBO score with attacks on the CBO, but its estimate is in keeping with those of a wide range of economists and think tanks.

Taken together, these studies illustrate how Trump’s economic policies are designed to transfer wealth from consumers to the wealthy and corporations. From 1981 to 2021, American policies moved $50 trillion from the bottom 90% of Americans to the top 1%. After Biden stopped that upward transfer, the Trump administration is restarting it again, on steroids.

Just how these policies are affecting Americans is no longer clear, though. Matt Grossman of the Wall Street Journal reported today that economists no longer trust the accuracy of the government’s inflation data. Officials from the Bureau of Labor Statistics, which compiles a huge monthly survey of employment and costs, told economists that staffing shortages and a hiring freeze have forced them to cut back on their research and use less precise methods for figuring out price changes. Grossman reports that the bureau has also cut back on the number of places where it collects data and that the administration has gotten rid of committees of external experts that worked to improve government statistics.

There is more than money at stake in the administration’s policies. The administration's gutting of the government seeks to decimate the modern government that regulates business, provides a basic social safety net, promotes infrastructure, and protects civil rights and to replace it with a government that permits a few wealthy men to rule.

The CBO score for the Republicans’ omnibus bill projects that if it is enacted, 16 million people will lose access to healthcare insurance over the next decade in what is essentially an assault on the Affordable Care Act, also known as Obamacare. The bill also dramatically cuts Supplemental Nutrition Assistance Program (SNAP) benefits, clean energy credits, aid for student borrowers, benefits for federal workers, and consumer protection services, while requiring the sale of public natural resources.

These cuts continue those the administration has made since Trump took office, many of which fell under the hand of the “Department of Government Efficiency.” But, while billionaire Elon Musk was the figurehead for that group, it appears his main interest was in collecting data. His understudy, Office of Management and Budget director Russell Vought, appears to have determined the direction of the cuts, which did not save money so much as decimate the parts of the government that the authors of Project 2025 wanted to destroy.

Vought was a key author of Project 2025, whose aim is to disrupt and destroy the United States government in order to center a Christian, heteronormative, male-dominated family as the primary element of society. To do so, the plan calls for destroying the administrative state, withdrawing the United States from global affairs, and ending environmental and business regulations.

Yesterday the White House asked Congress to cancel $9.4 billion in already-appropriated spending that the Department of Government Efficiency identified as wasteful, a procedure known as “rescission.” Trump aides say the money funds programs that promote what they consider inappropriate ideologies, including public media networks PBS and NPR; the United States Agency for International Development (USAID), which provides food and basic medical care globally; and PEPFAR, the U.S. President's Emergency Plan for AIDS Relief that was established under President George W. Bush to combat HIV/AIDS in more than 50 countries and is currently credited with saving about 26 million lives.

Vought appeared today before the House Appropriations Committee, where members scolded him for neglecting to provide a budget for the year, which they need to do their jobs. But Vought had plenty to say about the things he is doing. According to ProPublica’s Andy Kroll, he claimed that under Biden “every agency became a tool of the Left.” He said the White House will continue to ask for rescissions, but also noted that, as Project 2025 laid out, he does not believe that the 1974 Impoundment Control Act, which requires the executive branch to spend the money that Congress has appropriated, is constitutional, despite court decisions saying it is.

Representative Rosa DeLauro (D-CT) told Vought: “Be honest, this is never about government efficiency. In fact, an efficient government, a government that capably serves the American people and proves good government is achievable is what you fear the most. You want a government so broken, so dysfunctional, so starved of resources, so full of incompetent political lackeys and bereft of experts and professionals that its departments and agencies cannot feasibly achieve the goals and the missions to which they are lawfully directed. Your goal is privatization, for the biggest companies to have unchecked power, for an economy that does not work for the middle class, for working and vulnerable families. You want the American people to have no one to turn to, but to the billionaires and the corporations this administration has put in charge. Waste, fraud, and abuse are not the targets of this administration. They are your primary objectives.”

The use of the government to impose evangelical beliefs on the country, even at the expense of lives, also appears to be an administration goal. Yesterday, the administration announced it is ending the Biden administration’s 2022 guidance to hospital emergency rooms that accept Medicare—which is virtually all of them—requiring that under the Emergency Medical Treatment and Active Labor Act they must perform an abortion in an emergency if the procedure is necessary to prevent a patient’s organ failure or severe hemorrhaging. The Emergency Medical Treatment and Active Labor Act requires emergency rooms to stabilize patients.

The Trump administration will no longer enforce that policy. Last year, an investigation by the Associated Press found that even when the Biden administration policy was being enforced, dozens of pregnant women, some of whom needed emergency abortions, were turned away from emergency rooms with advice to “let nature take its course.”

Finally tonight, in what seems likely to be an attempt to distract attention from the omnibus bill and all the controversy surrounding it, Trump banned Harvard from hosting foreign students. He also banned nationals from a dozen countries—Afghanistan, Chad, Republic of Congo, Equatorial Guinea, Eritrea, Haiti, Iran, Libya, Myanmar, Somalia, Sudan, and Yemen—from entering the United States, an echo of the travel ban of his first term that threw the country into chaos.

Trump justified his travel ban by citing the attack Sunday in Boulder, Colorado, on peaceful demonstrators marching to support Israeli hostages in Gaza. An Egyptian national who had overstayed a tourist visa hurled Molotov cocktails at the marchers, injuring 15 people.

Egypt is not on the list of countries whose nationals Trump has banned from the United States.


#Letters From An American#Heather Cox Richardson#Travel Bans#Project 2025#economic news#CBO#deficit#delusional#Vought


How to write a game of chess

Except at the absolute novice level, a victory does not come from somebody missing what is on the board, or not seeing what their opponent intends to do. A checkmate should not be a surprise. Nobody but an absolute novice could possibly miss a mate in one. Nobody should be surprised to be checkmated unless they have never or rarely played chess before, or they are extremely distracted.

The game of chess changes over time. If you're writing for a non-modern era and accuracy is important to you, consider using a database such as chessgames.com, inputting a year, and looking through some games until you find one you'd like your characters to play.

You can also look at a chess manual from the time period your story is set in to find some appropriate vocabulary to use. Here are some instructional books from 1614 (London); 1799 (London); 1849 (New York); 1896 (London); 1919 (Boston); 1948 (London); and 1980 (London).

It is up to you how detailed your description of the game is. You don't want to bore a reader unacquainted with chess; nor do you want to include inaccurate detail and distract a reader who is acquainted with chess (see again the first point above).

If you're not confident in analysing a chess game yourself, many games have analyses online. If I go to chessgames.com and enter a random year (say 1855), and pick some of the names I see (say, Mayet and Anderssen), and then google "Mayet versus Anderssen 1855," I can find youtube videos and essays of people analysing games by these players in this year. (Not every game will necessarily have analyses like this: you might have to look around for a more famous one.)

An analysis of a game is the analyser's best idea of what each player was thinking at any given time. Player A moves a pawn up in order to take space in the center. Player B plays a check in order to force player A to defend their king, so that player A does not have time to take player B's queen, etc. Of course I'm not suggesting you use anybody's analysis of a game word for word: but this will give you an idea of what kinds of things you can describe happening in a game of chess.

You probably already have an idea of what you want the chess game in your story to say about the characters' relationship with each other. How do they each approach the game? Do they view it as symbolically important, or just as recreation? Does each of them have a different idea of what the game symbolises? Are they friendly, or competitive? Do they under- or over-estimate the others' skill? Does one person allow the other to win?

Also think about what each character's game says about them as an individual. Do they play slowly and methodically, consolidating and improving their position before launching a co-ordinated attack? Are they impulsive, attacking aggressively but leaving their center open? Do they play defensively, not allowing their opponent to get through? Do they play unpredictably, making moves that leave their opponent questioning their plans?

Are there other characters in the scene, or watching the game? What do they expect the game's outcome to be? How do they interpret the characters' play?


(Credit to @fdataanalysis )

Leclerc was on average only 0.11 off the pace set by Norris in the HEAVILY updated McLaren, despite running in dirty air for the majority of the race and having the oldest tyres of the top drivers (and he was only 0.04 seconds off Verstappen). With Sainz about a tenth slower at +0.19, this made Ferrari the fastest team this race (by an absolutely tiny margin, and keep in mind that Piastri only had 50% of the McLaren updates and that RBR was struggling more than we've seen before this season)

With this in mind:

McLaren have brought their big update this race, but haven't had the opportunity to gather much data on it. With both drivers having the full package in Imola they will need a couple of races to fully understand how the new car behaves before they can bring further improvements.

I'm very curious about this actually. The new McLaren seemed Very temperamental this weekend, as shown by Norris's issues in qualifying (most likely caused by huge shifts in balance depending on the tyre compound). Likely it will take them another 5-7 races before they can bring any new substantial upgrades.

RedBull brought their big upgrade to Japan, (although it wasn't as big as McLaren's) and haven't announced any new date for any major new upgrades (to my knowledge, but please correct me on this if I have missed something!).

The improvement made by McLaren is difficult to calculate because there are a lot of factors to consider, like strategy and potential traffic (also it's currently 2am and I'm too tired. But it should be mostly accurate), but if you look at the average race pace difference between the fastest RedBull and McLaren since RBR brought their upgrades (also conveniently excludes Australia as there were no true representative times set by the RedBulls):

Japan: Norris +0.54

China sprint: Norris +1.08

China: Norris +0.88

Miami: Verstappen +0.07

This gives us an average pace difference of 0.83 seconds before the MCL upgrades and technically shows an improvement of 0.9 seconds in Miami (but of course this figure is affected by RBR having very good pace in China and unusually bad pace in Miami, so take it with a couple of grains of salt. On a more regular weekend the improvement is most likely closer to 0.3-0.5, which would put them slightly behind or on par with RBR)
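For anyone who wants to check the arithmetic, here's a quick Python sketch of the calculation above. The variable names are just mine, the numbers are the ones listed, and the sign convention (positive = McLaren slower than the fastest Red Bull) is an assumption I'm making so the Miami result can sit on the same scale.

```python
from statistics import mean

# Gap from the fastest McLaren to the fastest Red Bull, in seconds per lap.
# Positive means McLaren was slower (assumed sign convention).
gaps_before_upgrade = {"Japan": 0.54, "China sprint": 1.08, "China": 0.88}

# In Miami it was Verstappen who was 0.07 slower, so the gap flips sign.
gap_miami = -0.07

avg_before = mean(list(gaps_before_upgrade.values()))
improvement = avg_before - gap_miami

print(f"{avg_before:.2f} {improvement:.2f}")  # 0.83 0.90
```

Three races is a tiny sample, so one unusual weekend moves the average a lot, which is why the 0.9 is best treated as an upper bound.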

((EDIT: realised when I woke up that a comparison between McLaren and Ferrari would provide more accuracy for the estimated pace improvement, because of both a bigger sample size and fewer outliers. This comparison also allows us to consider every race so far this season, bar the Miami sprint (no representative times for the updated MCL) and potentially the Chinese GP, as Ferrari lost a lot of time due to lack of tyre temperature. Excluding outliers we get an average pace difference of 0.22 (in Ferrari's favour), meaning the MCL updates gave Norris a gain of 0.33 seconds. This shows that my previous estimate of 0.3-0.5 depending on track is most likely accurate.))

Ferrari, however, is bringing their new package to Imola, and it is reportedly as big as, if not bigger than, what McLaren brought: the SF-24 EVO (for evolution). This is an upgrade package that Ferrari are Very optimistic about, and it's said to be a radical version of the SF-24. The focus going into this season was to make sure they had a good baseline that they understood properly, and good tyre degradation. This led to the car being quite simple in its design, but as we've seen so far this season Ferrari has achieved these goals. The Imola upgrades will build on this very solid base to improve speed and adjust it towards more oversteer (although we'll have to wait and see if this is successful).

Anyway, average pace difference:

Japan: Sainz +0.42

China sprint: Leclerc +0.74

China: Leclerc +1.19

Miami sprint: +0.17

Miami: Leclerc +0.04

This gives us an average pace difference of 0.5 (0.78 if we were to consider Miami an outlier, and probably less than 0.5 if we consider RBR's rocket pace in China another outlier). With MCL and RBR not likely to take any big steps forward other than what can be achieved through set up and track characteristics, and with Ferrari only needing to find about 0.3-0.5 seconds on average with their sf-24 evolution (which is what MCL did), Imola and the rest of the European races will be very very interesting. My prediction (only slightly biased) is that the top 3 teams are going to be very close depending on the track, but that Ferrari might have an edge if their upgrades work. Ultimately McLaren will probably stay the 3rd fastest team, but no one will have the same level of dominance as we've seen so far.
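Same deal as before, here's a small Python sketch of the averages above so they can be checked. The names are mine, and "treating Miami as an outlier" is taken to mean dropping both the Miami sprint and the Miami race, which is the reading that reproduces the 0.78.

```python
from statistics import mean

# Gap from the fastest Ferrari to the fastest Red Bull, in seconds per lap,
# as listed above.
gaps = [
    ("Japan", 0.42),
    ("China sprint", 0.74),
    ("China", 1.19),
    ("Miami sprint", 0.17),
    ("Miami", 0.04),
]

avg_all = mean([gap for _, gap in gaps])

# Drop both Miami sessions (assumed meaning of the Miami outlier).
avg_without_miami = mean(
    [gap for name, gap in gaps if not name.startswith("Miami")]
)

print(f"{avg_all:.2f} {avg_without_miami:.2f}")  # 0.51 0.78
```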

If Ferrari does have an edge, well Leclerc is only 3pts behind Perez, and only 38pts behind Verstappen with 18 races to go. We might still have a title fight on our hands... At the very least for the constructors and the vice-championship.

Also if I've made any errors, huge or small, please tell me because I am Sleep Deprived and the text is now blurry

#f1#f1 2024#miami gp 2024#scuderia ferrari#mclaren#red bull racing#charles leclerc#max verstappen#lando norris#thoughts and analysis


Am I really writing fic again? Not sure...but I guess maybe...cause I wrote a thing. Colin and Penelope have me in a vice, y'all.

No idea which of my blogs to use since this is a new fandom for me, so I chose this one. (*waves hello*) Do I even remember how to make a post? LOL

Anyway...here's a tiny little kiss fic, cause that's what I do.

-------------------

never been kissed...

His first was an embarrassment. Fumbling fingers mixed with overwhelmed breaths, the memory built up in his estimation as more revelatory than it was in actuality. There were feelings, undefinable, but new and full and far from perfunctory. The stirring he’d imagined he would feel in his belly he’d felt, not for the nameless partner but more for the act itself. He’d been left wondering if there was more, something beyond the fluttering like moths’ wings and mild nausea he’s still unsure was from the touch of another's lips or his nerves alone.

His second was rushed, drunken and hazy, the remnants of it only tickling at the edges of brown liquor-poisoned flashes of dimly lit sights and muffled sounds. His pockets were left lighter from too many coins spent for something so unmemorable.

His third was better. Confidence and less alcohol proved better bedfellows than in his previous encounter, experienced lips matching his eagerness and hands finding purchase on areas before unexplored by soft fingers. He’d flushed at the intimacy of the act, thought back on it fondly, but remembered her hands far more than her mouth. Perhaps that is to be expected.

The few more that followed brought pleasure and exploration, but each one left him searching for that elusive something…something life-altering, something poetic, something… more. Looking back at his diary from that time, his confusion over his own feelings, or lack thereof, is etched into the pages with long dry ink. How could he have known something so seemingly unknowable to a man of two and twenty?

The next was his last, the last of the life he’d known before and first of the life irrevocably reshaped after.

Every millisecond of it is etched on his heart, forever being retraced with each minute that passes spent by her side. The warmth from the blush blooming beneath the impossibly soft skin of her cheek, it still causes his fingertips to flex at the slightest reminder. Her eyes, two swirling oceans of impossible blue, wide and questioning, slowly fluttering closed as he'd drawn her closer. He’d never felt so exposed, so uncertain, yet confusingly certain at the same time. That slight pull in his gut he’d felt before, it was nothing compared to the plummet his stomach had taken as the plump fullness of her bottom lip made contact with his own. If he’d known what electricity felt like, he’d have been able to describe it with perfect accuracy. It was quick and searing, warmth being dragged to the surface of his skin at the speed of a herd of wild horses tearing across a meadow. And then it was gone, over far too soon and leaving him near panicked and needy in ways that, looking back, he can’t help but feel foolish about. He can’t give himself the credit of courageousness or strength for drawing her back in, for it had been born out of necessity, an inability to not have his lips back where they belonged. With each soft slide of her mouth against his own and the warmth of her breath igniting the space between them from the sighs escaping her throat...the formula, the construction, the intricacies of how a kiss was supposed to feel came crashing through the haze he’d been wandering through much too far away.

Entirely too far away from her.

How was he to know that this thing he’d been searching for had been here all along?

Not this thing, this person. This singular being who made it all make sense.

Pen.

#polin#polin fic#colin bridgerton#penelope featherington#bridgerton#bridgerton season 3#bridgerton spoilers#I can't believe I wrote something - it's been ages#but my god#give me a plus sized leading lady and absolutely adorable leading man#and I'm a goner#and I am here for how demisexual Colin is coded#give me all of it#sorry for the tag abuse#it's been a while#colin x penelope

31 notes

Text

A new method has been developed to improve the accuracy of nanoparticle size estimation from scanning transmission electron microscopy (STEM) images. This approach offers a more resource-efficient way to generate large datasets for training machine learning models, compared to generating additional real multislice images.

4 notes

Text

iPhone 17: Everything You Need to Know (Rumors, Specs, and Images)

The iPhone 17 is shaping up to be one of the most exciting smartphone launches of 2025. With major changes in design, camera, performance, and even model lineup, Apple is rumored to introduce a fresh generation of iPhones that appeal to both regular users and pro-level creators.

📅 Expected Launch Date

Event date: Mid-September 2025 (likely September 10–12)

Pre-orders: Expected to begin the same week

Availability: Global release by end of September 2025

Apple traditionally announces its iPhones in early to mid-September, and the iPhone 17 lineup is expected to follow this pattern.

📦 iPhone 17 Lineup: All the Models

iPhone 17: Standard upgrade with new camera

iPhone 17 Air (Slim): Ultra-thin design replacing Plus model

iPhone 17 Pro Max / Ultra: Largest, most powerful iPhone yet

🧩 Design & Build: A Sleeker Look for 2025

iPhone 17: Minor refinements over iPhone 16 design

iPhone 17 Air: New slim profile (~5.5–6.0 mm thickness), aluminum build

Pro Models: Horizontal rear camera bar for symmetry and stability

New Coating: Anti-reflective and ultra-scratch-resistant glass

Apple is reportedly moving away from the “Plus” branding and introducing a new iPhone 17 Air, emphasizing thinness and portability.

🔋 Performance & Hardware Specs

🔧 Chipset & RAM:

iPhone 17: A18 or A19 chipset, 8 GB RAM

iPhone 17 Air: A19 chipset, 8 GB RAM

iPhone 17 Pro: A19 Pro chipset, 12 GB RAM

iPhone 17 Ultra: A19 Pro chipset, 12 GB RAM

First-time use of vapor chamber cooling in iPhones for better thermal control.

Thinner internal boards using resin-coated copper to reduce weight.

Potential shift to Apple-manufactured batteries.

📷 Camera Upgrades: New Standards for Mobile Photography

Front Camera: 24 MP, 6-element lens (all models)

Pro Models: Triple 48 MP setup with telephoto + LiDAR + 8K video capture

Improved low-light and computational photography features

This year's front camera upgrade is one of the most significant in iPhone history — particularly useful for selfies and FaceTime.

Display & Screen Features

LTPO OLED panels across all models

Always-on Display (expected in Pro models)

Anti-glare coating and improved color accuracy

Display sizes will remain similar to the iPhone 16 lineup but with enhancements in brightness, power efficiency, and durability.

🎨 Color Options (Expected)

iPhone 17 Base:

Lilac / Lavender (new)

Midnight Black

Starlight

Blue / Green

Pro Models:

Sky Blue (new)

Space Black

Silver

Titanium Gray

Apple may use exclusive finishes and materials in Pro models, continuing the premium design trend.

💸 Estimated Pricing (USD)

iPhone 17: $799

iPhone 17 Air: $899

iPhone 17 Pro: $1,099

iPhone 17 Ultra: $1,199–$1,299

🖼️ Leaked Renders & Concept Images

Here are some early concept visuals based on leaked schematics and CAD files:

iPhone 17 Concept

iPhone 17 Air (Slim) – Side Profile

iPhone 17 Pro with Camera Bar

✅ Final Thoughts

The iPhone 17 looks like it will be a major leap forward, especially with the introduction of:

Slimmer form factors (iPhone 17 Air)

Significantly improved cameras

Better display across all models

If these rumors hold true, the iPhone 17 may mark one of Apple’s most important redesigns since the iPhone X.

#iphone#esc 2025#35mm#adidas#aespa#across the spiderverse#alexander mcqueen#911 abc#19th century#alien stage

2 notes

Text

Utilizing communication satellites to survey Earth

Useable data is one of the most valuable tools scientists can have. The more data sources they have, the better they can make statements about their research topic. For a long time, researchers in the field of navigation and satellite geodesy found it regrettable that although mega-constellations with thousands of satellites orbited Earth for communication purposes, they were unable to use their signals for positioning or for observation of Earth.

In the FFG project Estimation, the Institute of Geodesy at Graz University of Technology (TU Graz) has now conducted research on ways of utilizing these signal data and thus tapping into a large reservoir of additional data sources alongside navigation satellites and special research satellites, which will help to observe changes on Earth even more precisely.

Earth observation using satellites is based on the principle that changes in sea level or groundwater levels, for example, influence Earth's gravitational field and therefore the satellite trajectory. Scientists exploit this by using the positions and orbits of satellites as a data source for their research.

"The increasing availability of satellite internet in particular means that we have a huge number of communication signals at our disposal, which significantly exceed those of navigation satellites in terms of number and signal strength," says Philipp Berglez from the Institute of Geodesy.

"If we can now use these signals for our measurements, we not only have better signal availability, but also much better temporal resolution thanks to the large number of satellites. This also allows us to observe short-term changes. This means that in addition to determining the position and changes in Earth's gravitational field that are relevant for climate research, weather phenomena such as heavy rain or changes in sea level can also be tracked in real time."

One of the challenges in realizing the project was that satellite operators, including Starlink, OneWeb and the Amazon project Kuiper, do not disclose any information about the structure of their signals, and these signals are constantly changing. In addition, no precise orbit data or distance measurements are available for the satellites, and their absence is a potential source of error in the calculations.

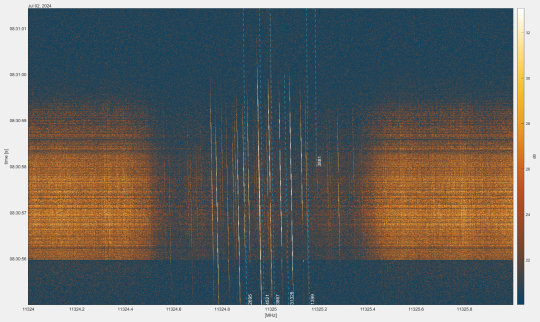

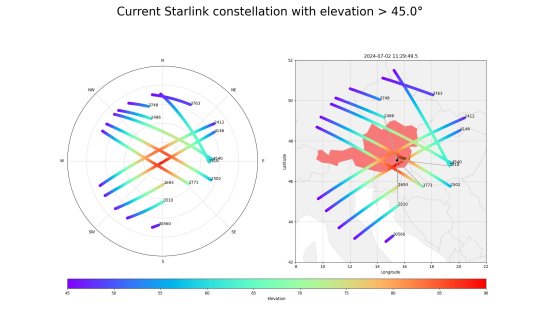

By analyzing the Starlink signal, the researchers nevertheless found a way to enable the desired applications. They detected tones within the signal that were constantly present. They then utilized the Doppler effect and investigated the frequency shift of these constant tones as satellites moved towards and away from the receiver. This allowed the position to be determined with an accuracy of 54 meters.

Although this is not yet satisfactory for geodetic applications, for the investigations that have been carried out so far, only a fixed, commercially available satellite antenna was used to test and verify the basic principle of the measurement method.
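As a rough illustration of the Doppler principle described above, the shift of a constant tone is proportional to how fast the distance between satellite and receiver is changing. The sketch below uses the first-order (non-relativistic) approximation. The tone frequency, the range rates, and the function names are assumptions made for illustration, not values or methods from the TU Graz study.

```python
# First-order Doppler shift of a constant tone (illustrative sketch only).
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def doppler_shift_hz(tone_hz, range_rate_m_s):
    """Return the observed frequency shift for a given range rate.

    range_rate_m_s is the rate of change of the satellite-receiver distance:
    negative while the satellite approaches, positive while it recedes.
    """
    return -tone_hz * range_rate_m_s / SPEED_OF_LIGHT


def range_rate_from_shift(tone_hz, shift_hz):
    """Invert the relation: recover the range rate from a measured shift."""
    return -shift_hz * SPEED_OF_LIGHT / tone_hz


if __name__ == "__main__":
    tone = 11.0e9  # hypothetical tone frequency in Hz (assumption)
    for rate in (-6000.0, 0.0, 6000.0):  # hypothetical range rates in m/s
        shift = doppler_shift_hz(tone, rate)
        print(f"range rate {rate:+.0f} m/s -> shift {shift:+.1f} Hz")
```

An approaching satellite gives a positive shift and a receding one a negative shift. A series of such measurements over a pass constrains where the receiver must be relative to the orbit.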

More insight into how our world is changing

The aim now is to improve the accuracy to just a few meters. This will be made possible by antennas that can either follow the satellites or receive signals from different directions. In addition, measurements are to be taken at several locations in order to increase accuracy and reduce the influence of errors.

With more measurement data, the researchers can calculate more precise orbit data, which in turn makes determining positions and calculating Earth's gravitational field more accurate. The navigation working group also wants to develop new signal processing methods that filter out more precise measurement data from signals that have so far been rather unusual for geodetic applications.

"By being able to utilize the communication signals for geodesy, we have revealed enormous potential for the even more detailed investigation and measurement of our Earth," says Berglez.

"Now it's all about improving precision. Once we have succeeded in doing this, we will be able to understand even more precisely what changes our world is undergoing. Just to be on the safe side, I would like to make the following clear: we are analyzing communication signals here, but we cannot and do not want to know their content. We really only use them for positioning and observing orbits in order to determine Earth's gravitational field."

TOP IMAGE: Signal spectrum of the received Starlink satellite signals. Credit: IFG - TU Graz

LOWER IMAGE: The visibility of Starlink satellites over Graz. Credit: IFG - TU Graz

3 notes

Text

Craig Gidney Quantum Leap: Reduced Qubits And More Reliable

A Google researcher reduces the quantum resources needed to hack RSA-2048.

Google Quantum AI researcher Craig Gidney discovered a way to factor 2048-bit RSA numbers, a key component of modern digital security, with far less quantum computer power. His latest research shows that fewer than one million noisy qubits could finish such a task in less than a week, compared to the former estimate of 20 million.

The Quantum Factoring Revolution by Craig Gidney

In 2019, Gidney and Martin Ekerå found that factoring a 2048-bit RSA integer would require a quantum computer with 20 million noisy qubits running for eight hours. The new method allows a runtime of less than a week and reduces qubit demand by 95%. This development is due to several major innovations:

Approximate residue arithmetic: Techniques from Chevignard, Fouque, and Schrottenloher (2024) simplify the modular arithmetic and reduce the computation required.

Yoked surface codes: Gidney's 2023 research with Newman, Brooks, and Jones showed that idle logical qubits can be stored more densely, improving qubit utilisation.

Magic state cultivation: Based on Gidney, Shutty, and Jones (2024), this method minimises the resources needed for magic state distillation, a vital stage in quantum calculations.

These advancements improve Gidney's algorithm's efficiency without sacrificing accuracy, reducing Toffoli gate count by almost 100 times.
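To give a feel for residue arithmetic in general, the sketch below represents integers by their remainders modulo a few small coprime moduli, multiplies them component by component, and reconstructs the result with the Chinese remainder theorem. This is exact, classical residue arithmetic chosen purely for illustration. It is not the approximate scheme of Chevignard, Fouque, and Schrottenloher, nor Gidney's quantum circuit, and the moduli and function names are assumptions.

```python
from math import prod

# Pairwise coprime moduli, chosen arbitrarily for this sketch (assumption).
MODULI = (13, 17, 19, 23)


def to_residues(x):
    """Represent x by its remainders modulo each small modulus."""
    return tuple(x % m for m in MODULI)


def multiply(a, b):
    """Multiply two residue representations, one small modulus at a time."""
    return tuple((x * y) % m for x, y, m in zip(a, b, MODULI))


def from_residues(residues):
    """Reconstruct the integer (modulo the product of MODULI) via the CRT."""
    total_modulus = prod(MODULI)
    result = 0
    for r, m in zip(residues, MODULI):
        partial = total_modulus // m
        # pow(partial, -1, m) is the modular inverse (Python 3.8+).
        result += r * partial * pow(partial, -1, m)
    return result % total_modulus


if __name__ == "__main__":
    product = multiply(to_residues(123), to_residues(456))
    print(from_residues(product) == 123 * 456)
```

The point of the representation is that one large multiplication becomes several independent small ones, which is the general idea the quantum algorithm builds on in approximate form.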

Cybersecurity Effects

Secure communications, including private government conversations and internet banking, rely on RSA-2048 encryption. The finding that RSA-2048 could be broken with fewer quantum resources than previously thought makes switching to quantum-resistant cryptography more urgent.

No existing quantum computer can carry out this attack, but research suggests such machines may arrive soon. This possibility highlights the need for proactive cybersecurity infrastructure.

Expert Opinions

Quantum computing experts regard Craig Gidney's contribution as a turning point. The work offers a method for factoring RSA-2048 with adjustable quantum resources, bridging theory and practice.

Experts advise against immediate panic. Current quantum technology is insufficient for such complex tasks, and engineering challenges remain. The report reminds cryptographers to speed up the development and adoption of quantum-secure methods.

Improved Fault Tolerance

Craig Gidney's technique is innovative in its tolerance for faults and noise. This new approach can function with more realistic noise levels, unlike earlier models that required extremely low error rates, which quantum technology often cannot provide. This brings theoretical needs closer to what quantum processors could really achieve soon.

Optimised Circuit Width and Depth

Gidney optimised quantum circuit width (qubits used simultaneously) and depth (quantum algorithm steps). The method balances hardware complexity and computing time, improving its scalability for future implementation.

Timeline for Security Transition

This discovery accelerates the inevitable transition to post-quantum cryptography (PQC) but does not threaten present encryption. Quantum computer-resistant PQC standards must be adopted by governments and organisations immediately.

Global Quantum Domination Competition

This development highlights the global competition in quantum technology. The US, China, and the EU, which invest heavily in quantum R&D, are under increased pressure to keep up in computing and cryptographic security.

In conclusion

Craig Gidney's invention challenges RSA-2048 encryption theory, advancing quantum computing. This study affects the cryptographic security landscape as the quantum era approaches and emphasises the need for quantum-resistant solutions immediately.

#CraigGidney#Cybersecurity#qubits#quantumsecurealgorithms#cryptographicsecurity#postquantumcryptography#technology#technews#technologynews#news#govindhtech

2 notes

Text

Top Tips for Producing Accurate Electrical Estimates

Producing accurate electrical estimates is essential for contractors aiming to submit competitive bids and successfully manage project budgets. Careful planning, attention to detail, and the right tools can significantly improve estimate quality. Here are key tips to help achieve accurate electrical estimates.

Thoroughly Review Project Documents

Start by carefully examining all available project documents including blueprints, electrical drawings, and specifications. Understanding the full scope and details ensures no materials or work items are missed. Clarify any ambiguous or incomplete information with designers or clients before beginning the estimate.

Use Digital Takeoff Tools

Digital takeoff software allows precise measurement and quantification of materials directly from electronic plans. Using these tools reduces manual errors and speeds up the process compared to traditional paper takeoffs. Accurate quantity takeoffs form the foundation of a reliable estimate.

Keep Pricing Data Updated

Material costs and labor rates frequently fluctuate due to market conditions. Regularly update pricing databases by consulting suppliers or using real-time industry resources. Accurate current pricing helps prevent cost overruns and ensures your bids reflect true market conditions.

Adjust Labor Units for Project Conditions

Standard labor units estimate the time needed for installation under typical conditions. However, factors such as site accessibility, complexity, crew experience, and project schedule affect actual labor requirements. Adjust labor hours accordingly to reflect these realities.
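One simple way to apply such adjustments is to multiply the standard labor hours by a factor for each condition. The sketch below assumes that approach. The quantities, the labor unit, and the factor values are made-up numbers for illustration, not published labor units.

```python
def adjusted_labor_hours(quantity, hours_per_unit, condition_factors):
    """Scale standard labor hours by a multiplier for each project condition.

    condition_factors maps a condition name to a multiplier:
    above 1.0 means slower than typical, below 1.0 means faster.
    """
    hours = quantity * hours_per_unit
    for factor in condition_factors.values():
        hours *= factor
    return hours


if __name__ == "__main__":
    # Hypothetical example: 120 receptacles at 0.25 hours each.
    factors = {
        "difficult_site_access": 1.10,  # assumed 10% slower
        "experienced_crew": 0.95,       # assumed 5% faster
    }
    print(round(adjusted_labor_hours(120, 0.25, factors), 2))
```

Keeping each factor named makes it easier to record the assumption behind every adjustment and to revisit it after the project.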

Include Indirect and Miscellaneous Costs

Don’t overlook indirect costs such as equipment rentals, permits, testing, cleanup, and project management time. Including these expenses ensures your estimate reflects the total project cost and helps avoid surprises later.
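A minimal sketch of how direct and indirect costs might roll up into one total is shown below. The cost categories follow the paragraph above. The dollar amounts, the contingency percentage, and the function name are assumptions for illustration only.

```python
def total_estimate(material_cost, labor_hours, labor_rate,
                   indirect_costs, contingency_pct):
    """Combine direct costs, itemized indirect costs, and a contingency.

    indirect_costs maps an item name (permits, rentals, ...) to its cost.
    contingency_pct is applied to the sum of direct and indirect costs.
    """
    direct = material_cost + labor_hours * labor_rate
    indirect = sum(indirect_costs.values())
    subtotal = direct + indirect
    return subtotal * (1 + contingency_pct / 100)


if __name__ == "__main__":
    # Hypothetical figures, not real pricing.
    indirect = {
        "equipment_rental": 400.0,
        "permits": 250.0,
        "testing_and_cleanup": 150.0,
        "project_management": 600.0,
    }
    total = total_estimate(5000.0, 40.0, 75.0, indirect, 10.0)
    print(f"Estimated total: ${total:,.2f}")
```

Listing indirect items individually, rather than as a single lump percentage, makes omissions easier to spot during review.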

Collaborate with Field Teams

Consult with electricians and project managers to validate labor productivity assumptions and identify potential challenges. Their practical insights can help refine estimates and improve accuracy.

Review and Verify Estimates

Double-check all calculations and quantities. Have a second estimator or project manager review the estimate to catch mistakes or omissions. Peer review is a valuable quality control step.

Document Assumptions Clearly

Maintain clear documentation of all assumptions made during estimating, such as productivity rates, pricing sources, and scope interpretations. This documentation supports transparency and helps explain estimates to clients or internal teams.

Stay Informed on Codes and Regulations

Electrical codes and standards change periodically. Staying current ensures your estimates incorporate compliance costs and avoid costly rework due to nonconformance.

Invest in Ongoing Training and Software Updates

Regular training for estimators on best practices and new technologies, along with keeping estimating software updated, contributes to consistent estimate quality.

FAQs

How often should I update labor and material pricing? Ideally, update pricing at least quarterly or whenever there are significant market changes to maintain estimate accuracy.

What is the biggest cause of inaccurate electrical estimates? Incomplete project documentation and failure to adjust for site-specific conditions often lead to inaccurate estimates.

Can software alone guarantee accurate estimates? Software is a powerful tool but accuracy depends on quality data input and estimator expertise.

How detailed should an electrical estimate be? The level of detail depends on project size and client requirements but should always cover all major materials, labor, indirect costs, and contingencies.

Conclusion

Producing accurate electrical estimates requires a combination of detailed review, precise measurement, realistic labor evaluation, and ongoing collaboration. Following these tips can improve your estimating process and contribute to successful project outcomes.

#how to produce accurate electrical estimates#tips for electrical estimating accuracy#best practices for electrical estimating#improving electrical bid accuracy#electrical takeoff accuracy tips#updating material pricing#adjusting labor units#estimating indirect electrical costs#reviewing electrical estimates#validating labor productivity#electrical estimating collaboration tips#avoiding mistakes in electrical estimating#documenting estimating assumptions#training for estimators#electrical estimating software tips#electrical code updates and estimating#bid preparation accuracy#electrical estimating checklist#estimating electrical subcontractor work#checking electrical estimate calculations#refining electrical estimates#managing estimating risks#electrical cost forecasting tips#estimating complex electrical projects#electrical estimating quality control#improving estimating workflows#electrical project cost accuracy#real-time pricing in estimating#estimator review processes#tips for winning electrical bids

0 notes

Text

Predicting Employee Attrition: Leveraging AI for Workforce Stability

Employee turnover has become a pressing concern for organizations worldwide. The cost of losing valuable talent extends beyond recruitment expenses—it affects team morale, disrupts workflows, and can tarnish a company's reputation. In this dynamic landscape, Artificial Intelligence (AI) emerges as a transformative tool, offering predictive insights that enable proactive retention strategies. By harnessing AI, businesses can anticipate attrition risks and implement measures to foster a stable and engaged workforce.

Understanding Employee Attrition

Employee attrition refers to the gradual loss of employees over time, whether through resignations, retirements, or other forms of departure. While some level of turnover is natural, high attrition rates can signal underlying issues within an organization. Common causes include lack of career advancement opportunities, inadequate compensation, poor management, and cultural misalignment. The repercussions are significant—ranging from increased recruitment costs to diminished employee morale and productivity.

The Role of AI in Predicting Attrition

AI revolutionizes the way organizations approach employee retention. Traditional methods often rely on reactive measures, addressing turnover after it occurs. In contrast, AI enables a proactive stance by analyzing vast datasets to identify patterns and predict potential departures. Machine learning algorithms can assess factors such as job satisfaction, performance metrics, and engagement levels to forecast attrition risks. This predictive capability empowers HR professionals to intervene early, tailoring strategies to retain at-risk employees.

Data Collection and Integration

The efficacy of AI in predicting attrition hinges on the quality and comprehensiveness of data. Key data sources include:

Employee Demographics: Age, tenure, education, and role.

Performance Metrics: Appraisals, productivity levels, and goal attainment.

Engagement Surveys: Feedback on job satisfaction and organizational culture.

Compensation Details: Salary, bonuses, and benefits.

Exit Interviews: Insights into reasons for departure.

Integrating data from disparate systems poses challenges, necessitating robust data management practices. Ensuring data accuracy, consistency, and privacy is paramount to building reliable predictive models.

Machine Learning Models for Attrition Prediction

Several machine learning algorithms have proven effective in forecasting employee turnover:

Random Forest: This ensemble learning method constructs multiple decision trees to improve predictive accuracy and control overfitting.

Neural Networks: Mimicking the human brain's structure, neural networks can model complex relationships between variables, capturing subtle patterns in employee behavior.

Logistic Regression: A statistical model that estimates the probability of a binary outcome, such as staying or leaving.

For instance, IBM's Predictive Attrition Program utilizes AI to analyze employee data, achieving a reported accuracy of 95% in identifying individuals at risk of leaving. This enables targeted interventions, such as personalized career development plans, to enhance retention.
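To make the logistic regression idea concrete, here is a minimal sketch that fits such a model with plain gradient descent and no external libraries. The two features (a satisfaction score and a scaled tenure), the eight training rows, and all names are invented for illustration. A real model would need far more data, validation, and bias checks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, weights, bias):
    """Estimated probability that the employee leaves (label 1)."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train(features, labels, learning_rate=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = predict_proba(x, weights, bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


# Synthetic data: [satisfaction 0-1, tenure in decades]; 1 = left, 0 = stayed.
FEATURES = [[0.9, 0.8], [0.8, 0.5], [0.7, 0.9], [0.85, 0.3],
            [0.3, 0.1], [0.2, 0.4], [0.4, 0.2], [0.1, 0.6]]
LABELS = [0, 0, 0, 0, 1, 1, 1, 1]

if __name__ == "__main__":
    w, b = train(FEATURES, LABELS)
    print("risk, low satisfaction: ", round(predict_proba([0.15, 0.2], w, b), 2))
    print("risk, high satisfaction:", round(predict_proba([0.9, 0.7], w, b), 2))
```

The output is a probability rather than a yes/no answer, so HR teams can rank employees by risk and choose their own threshold for intervention.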

Sentiment Analysis and Employee Feedback

Understanding employee sentiment is crucial for retention. AI-powered sentiment analysis leverages Natural Language Processing (NLP) to interpret unstructured data from sources like emails, surveys, and social media. By detecting emotions and opinions, organizations can gauge employee morale and identify areas of concern. Real-time sentiment monitoring allows for swift responses to emerging issues, fostering a responsive and supportive work environment.

Personalized Retention Strategies

AI facilitates the development of tailored retention strategies by analyzing individual employee data. For example, if an employee exhibits signs of disengagement, AI can recommend specific interventions—such as mentorship programs, skill development opportunities, or workload adjustments. Personalization ensures that retention efforts resonate with employees' unique needs and aspirations, enhancing their effectiveness.

Enhancing Employee Engagement Through AI

Beyond predicting attrition, AI contributes to employee engagement by:

Recognition Systems: Automating the acknowledgment of achievements to boost morale.

Career Pathing: Suggesting personalized growth trajectories aligned with employees' skills and goals.

Feedback Mechanisms: Providing platforms for continuous feedback, fostering a culture of open communication.

These AI-driven initiatives create a more engaging and fulfilling work environment, reducing the likelihood of turnover.

Ethical Considerations in AI Implementation

While AI offers substantial benefits, ethical considerations must guide its implementation:

Data Privacy: Organizations must safeguard employee data, ensuring compliance with privacy regulations.

Bias Mitigation: AI models should be regularly audited to prevent and correct biases that may arise from historical data.

Transparency: Clear communication about how AI is used in HR processes builds trust among employees.

Addressing these ethical aspects is essential to responsibly leveraging AI in workforce management.

Future Trends in AI and Employee Retention

The integration of AI in HR is poised to evolve further, with emerging trends including:

Predictive Career Development: AI will increasingly assist in mapping out employees' career paths, aligning organizational needs with individual aspirations.

Real-Time Engagement Analytics: Continuous monitoring of engagement levels will enable immediate interventions.

AI-Driven Organizational Culture Analysis: Understanding and shaping company culture through AI insights will become more prevalent.

These advancements will further empower organizations to maintain a stable and motivated workforce.

Conclusion

AI stands as a powerful ally in the quest for workforce stability. By predicting attrition risks and informing personalized retention strategies, AI enables organizations to proactively address turnover challenges. Embracing AI-driven approaches not only enhances employee satisfaction but also fortifies the organization's overall performance and resilience.

Frequently Asked Questions (FAQs)

How accurate are AI models in predicting employee attrition?

AI models, when trained on comprehensive and high-quality data, can achieve high accuracy levels. For instance, IBM's Predictive Attrition Program reports a 95% accuracy rate in identifying at-risk employees.

What types of data are most useful for AI-driven attrition prediction?

Valuable data includes employee demographics, performance metrics, engagement survey results, compensation details, and feedback from exit interviews.

Can small businesses benefit from AI in HR?

Absolutely. While implementation may vary in scale, small businesses can leverage AI tools to gain insights into employee satisfaction and predict potential turnover, enabling timely interventions.

How does AI help in creating personalized retention strategies?

AI analyzes individual employee data to identify specific needs and preferences, allowing HR to tailor interventions such as customized career development plans or targeted engagement initiatives.

What are the ethical considerations when using AI in HR?

Key considerations include ensuring data privacy, mitigating biases in AI models, and maintaining transparency with employees about how their data is used.

For more info, visit Stentor.ai

2 notes

Text

Tax Consultants Services: Trusted Experts for Every Tax Need

Taxes are one of the most critical and complex aspects of personal and business finance. Whether you’re an individual taxpayer, a business owner, or an expat living abroad, navigating tax laws can be overwhelming. That’s where Tax Consultants Services play a vital role.

By hiring Expert Tax Advisors, you gain access to strategic guidance, compliance support, and personalized tax planning — saving you money, time, and unnecessary stress.

In this guide, we’ll explore the different types of tax consultant services available, how they can help you, and what to look for when choosing the right tax advisor.

What Are Tax Consultants Services?

Tax Consultants Services refer to professional tax solutions offered by certified individuals or firms. These services are designed to help taxpayers — both individuals and businesses — manage their tax obligations effectively and legally minimize liabilities.

Unlike seasonal tax preparers, Expert Tax Advisors offer year-round services including:

· Tax preparation and filing

· Tax planning and strategy

· Audit representation

· IRS dispute resolution

· International and expat tax support

· Small business tax optimization

Whether you’re dealing with domestic filings or navigating global tax obligations, tax consultants provide clarity and expertise where it’s most needed.

Why Hire Expert Tax Advisors?

Hiring Expert Tax Advisors gives you an edge in understanding and applying complex tax rules. Here’s how they help:

✅ Maximize Deductions and Credits

Most people miss out on valuable deductions and credits simply because they don’t know they exist. An experienced tax advisor can uncover savings that software often overlooks.

✅ Stay Compliant with Changing Tax Laws

Tax laws change frequently. Advisors stay updated and help you stay compliant, preventing IRS penalties and audits.

✅ Save Time and Reduce Stress

Filing taxes can be time-consuming and confusing. Hiring a professional ensures accuracy and saves you hours of frustration.

✅ IRS Representation and Support

Facing an audit or letter from the IRS? A tax consultant can represent you, resolve disputes, and provide legal guidance.

Business Tax Preparation Services

Running a business comes with added tax complexity — payroll, expenses, deductions, estimated taxes, and more. That’s why Business Tax Preparation Services are essential for companies of all sizes.

Benefits of Business Tax Services:

· Accurate tax return preparation and filing for LLCs, S-corporations, and corporations

· Advice on tax-efficient business structures

· Assistance with payroll, sales tax, and quarterly estimated payments

· Industry-specific deductions and tax-saving opportunities

· Year-round bookkeeping and financial forecasting

Business owners who use tax consultants often find they not only avoid costly errors, but also improve profitability by optimizing their tax approach.

Expat Tax Services: Stay Compliant Abroad

Living or working abroad? Your tax situation gets even more complicated. Fortunately, Expat Tax Services are designed specifically for U.S. citizens and residents living overseas.

Key Areas Covered:

· Foreign Earned Income Exclusion (FEIE)

· Foreign Tax Credit (FTC)

· Reporting foreign bank accounts (FBAR)

· FATCA compliance

· Dual taxation issues

· Tax planning for renouncing U.S. citizenship

Filing taxes as an expat requires in-depth knowledge of both U.S. and international tax law. A specialized tax consultant can ensure you stay compliant while minimizing double taxation.

How to Choose the Right Tax Consultant

When hiring someone for tax consultant services, it’s essential to vet their credentials and expertise. Here’s what to consider:

Certification & Credentials

Look for licensed professionals — Certified Public Accountants (CPAs), Enrolled Agents (EAs), or tax attorneys.

Industry Experience

Ask if they’ve handled cases similar to yours — whether it’s small business taxes, expat returns, or high-net-worth planning.

Client Reviews & Testimonials

Check online reviews or ask for references to understand their reputation and reliability.

Services Offered

Choose a consultant who provides the full spectrum of services you need — from planning to filing and beyond.

Technology & Communication

Modern tax consultants use secure, cloud-based platforms for file sharing, video calls, and e-signatures, making it easy to work together remotely.

Related FAQs About Tax Consultants Services

What’s the difference between a tax preparer and a tax consultant?

A tax preparer mainly focuses on filing your returns, while a tax consultant offers ongoing strategic advice, planning, and representation. Tax consultants typically have more experience and credentials.

Can tax consultants help me save money?

Yes! Expert Tax Advisors can uncover deductions and strategies that significantly reduce your tax bill, often more than covering their own fees.

Are tax consultant services worth it for small businesses?

Absolutely. Business Tax Preparation Services help small businesses stay compliant, reduce errors, and maximize tax advantages like write-offs and credits.

What are Expat Tax Services, and do I need them?

Expat Tax Services help U.S. citizens living abroad file their required returns and disclosures. If you earn income overseas or have foreign assets, you likely need these services.

How much do tax consultants typically charge?

Fees vary based on complexity. Personal returns may range from $200–$500, while business or international filings can cost $1,000 or more.

Can a tax consultant represent me before the IRS?

Yes — CPAs, Enrolled Agents, and tax attorneys can legally represent you in front of the IRS for audits, appeals, or collections.

Final Thoughts

Whether you’re managing your personal finances, running a business, or living abroad, Tax Consultants Services are essential for simplifying the tax process and protecting your financial future.

From Expert Tax Advisors who can guide you through IRS complexities, to specialized Expat Tax Services and robust Business Tax Preparation Services, there’s a solution for every need.

✅ Ready to make smarter tax decisions?

Visit Taxperts.com today to schedule a consultation with certified tax professionals who care about your success.

#accounting#tax consultants#Expert Tax Advisors#taxiservice#Business Tax Preparation#Expat Tax Services#tax services#tax preparation


Text

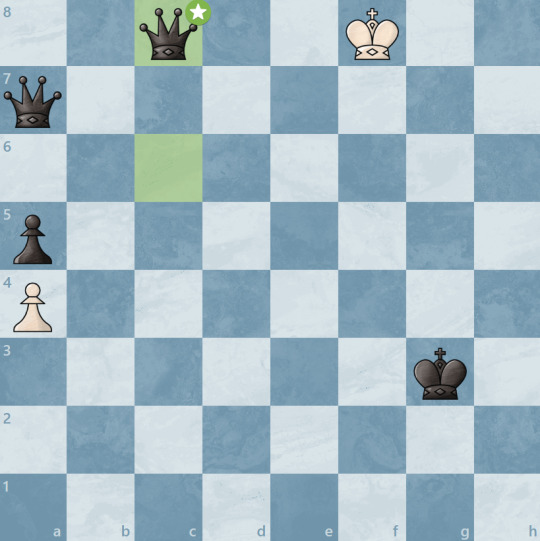

The Game:

With a resignation on move 53, the game has ended.

With an accuracy of 86% relative to computer analysis, this is an extremely solid playing level. (The computer managed 93%, which I naively estimate puts our collective rating somewhere around 2000.) As I suspect we had no candidate masters or actual master players, this is a marked improvement over the individual players, who probably cap out at around 1500, with most lower than that.

The most important move was probably 20. a4. Before then, the game may have been a draw or a victory for white, but after that, it became a draw or a victory for black because of how it weakened the queenside. (The computer, as seen here, suggests h3 as the alternative, which I guess is reasonable.)

Incidentally, the correct response to 22... c4+ was not 23. d4, which allows the c pawn to come to sit on b2, where it annoyed us for a long period of the middle and late game, but to offer a trade of queens with Qf2.

This actually was a pretty common thread: the weak moves would be made in very positional situations, and would be followed by an unwillingness to offer trades. This is pretty interesting, because some of the best play from the collective came in tactical positions (aside from move 24, where we exchanged a rook for a bishop instead of recognizing that the b2 pawn promoting was not actually a threat yet and capturing the f8 rook), but the collective's votes also tried to steer us into much more cautious and strategic positions, occasionally to our detriment.

This implies that a more tactical opening is actually desirable, perhaps the Scotch Game or a King's Indian Attack, rather than the weird hybrid Italian-Scotch opening we ended up with. However, that might be too risky, as a single mistake in a tactical situation could be devastating.

Overall, the period from moves 20-24 was the most damaging to our game, but the overall decline was pretty slow. The position wasn't basically unwinnable until move 37. d4, which would eventually break our kingside pawn structure.

At the time of resignation, there was a forced checkmate. With fairly accurate play, we'd have been checkmated on the 8th rank by two queens promoted from the h and g pawns. It might have looked something like this.

Thus it goes. And remember, you can send questions to the ask box if you'd like! Also, Anarchist Chess will probably return for a second game (though probably not this month.)


Text

The role of AI in cybersecurity: protecting data in the digital age

Quick summary

Unfortunately, cybercriminals are resourceful and work tirelessly to infiltrate vulnerable systems with evolved cyber attacks that adapt to particular environments, making it difficult for security teams to identify and mitigate risks. So, read our blog and learn how artificial intelligence in cybersecurity helps with threat detection, automates responses, and facilitates robust protection against evolving cyber threats. This blog explores the different dynamics of AI on cybersecurity, supported by real-life examples and our thorough research.

The digital revolution led by AI/ML development services and interconnectedness at scale has opened a number of opportunities for innovation and communication. However, this digital revolution has also exposed us to a wide array of cyber attacks. As modern technologies have become an integral part of every enterprise and individual life, we cannot underestimate the persistent cyber threat. Moreover, cyber risk management has failed to keep pace with the proliferation of digital and analytical transformation, leaving many enterprises confused about how to identify and manage security risks.

The scope and threat of cybersecurity are growing, and no organization is immune. From small organizations to large enterprises, and from municipalities to the federal government, all face looming cyber threats, and even the most sophisticated cyber controls, no matter how advanced, will soon be obsolete. In this highly volatile environment, leadership must answer critical questions.

Are we prepared for accelerated digitalization for the next few years?

More specifically,

Are we looking far enough to understand how today's tech investment will have cybersecurity implications in the future?

Globally, organizations are continuously investing in technology to run and modernize their businesses. Now, they are aiming to layer more technologies into their IT networks to support remote work, improve customer satisfaction, and generate value, all of which create vulnerabilities. At the same time, adversaries are no longer limited to individual players; they also include highly advanced organizations that operate with integrated tools and capabilities powered by artificial intelligence and machine learning.

The growth of AI in the cybersecurity market

Artificial Intelligence has become one of the most valuable technologies in our day-to-day lives, from the tech powering our smartphones to the autonomous driving features of cars. AI ML services are changing the dynamics of almost every industry, and cybersecurity is no exception. The global artificial intelligence market is expanding due to the increasing usage of technology across almost every field, spurring demand for advanced cybersecurity solutions and privacy.

Statista, a leading strategic consulting and research firm, estimated that the AI in cybersecurity market was worth $24.3 billion in 2023 and forecast that it would double by 2026. During the forecast period of 2023 to 2030, the global AI in cybersecurity market is expected to grow significantly and reach a value of $134 billion by 2029. The major growth drivers include increasing cyber-attacks, advanced security solutions, and the growing sophistication of cybercriminals.

Cyber AI is trending now since it facilitates proactive defense mechanisms with utmost accuracy. Besides that, the importance of cybersecurity in the banking and finance industry, the rise in privacy concerns, and the frequency and intricacies of cyber threats are set to prime the pump for the global artificial intelligence market in cybersecurity during the period under analysis. However, experts predict that advanced demonstrative data requirements will likely restrain the overall market growth.

AI-powered applications improve the security of networks, computer systems, and data against cyber attacks such as malware, phishing, hacking, and insider threats. Artificial intelligence in cybersecurity automates and improves security processes like threat detection, incident response, and security risk analysis. Using machine learning, AI-based systems analyze massive data sets from different sources to identify specific patterns and potential risks.

The pandemic's impact on cybersecurity

The pandemic had a dual impact on the cybersecurity market. On one hand, it led to economic uncertainties and significant disruption across the industry. On the other hand, it highlighted the importance of cybersecurity as businesses and most of the workforce shifted to remote work and digital communications. It also highlighted the extensive need for automation. As people became more dependent on technology, the need for cybersecurity became paramount.

During the pandemic, security teams were also forced to work from home and manage a greater number of security incidents. This led to increased investment in AI in cybersecurity, as it facilitates real-time threat detection and response capabilities at scale. Moreover, AI-powered cybersecurity solutions automate daily operations, enabling teams to focus on higher-value and intricate tasks. AI for cybersecurity is a proactive approach that reacts to threats in real time.

Cybersecurity challenges

The cybersecurity market is on an upward trajectory; McKinsey research reveals that the global market expects a further increase in cyberattacks. Its study indicates that around $101.5 billion is projected to be spent on service providers by 2025, and 85% of organizations are expected to increase their spending on IT security. These growing numbers have also opened positions for security professionals, which now number around 3.5 million globally.

Unfortunately, security teams haven't had a single 'easy' year since the pandemic: cyber-attacks are on the rise, existing attacks are evolving, and new and more advanced threats are approaching. Cybercriminals are becoming more resourceful and taking advantage of new vulnerabilities and technologies. Some of the most significant threats to corporate cybersecurity this year and next are:

The sophistication of cyberattacks, along with increased frequency

The increasing volume of data and network traffic to monitor

The dire need to monitor real-time threat detection and response

Shortage of skilled security professionals

Ransomware zero days and mega attacks

AI-enabled cyber threats

State-sponsored hacktivism and wipers

Now, organizations are facing an increasing volume of sophisticated and harmful cyberattacks. Cyber threat actors are equipped with highly effective and profitable attack vectors and are choosing to use automation and artificial intelligence to carry out these attacks on a larger scale. Therefore, enterprises around the world leverage AI for cybersecurity, since it offers enhanced abilities to manage these growing cybersecurity threats more effectively.

AI in cybersecurity - A guaranteed promise to digital protection

AI, which can quickly identify and respond to online anomalies in real time, is a strong fit for data safety. With the long-term potential to provide powerful built-in security measures, AI for cybersecurity aims to close the gaps that cybercriminals try to exploit. Powered by massive amounts of data, AI has the capability to learn and improve cybersecurity over time. This consistent learning means AI-powered cybersecurity is constantly evolving and remains relevant.

AI in threat detection

Cybercriminals are intelligent and consistently work to evolve their strategies to evade the most secure environment. They piggyback off each other to launch a more deadly attack using the most advanced approaches, such as polymorphic malware, zero-day exploits, and phishing attacks. To deal with such attacks, AI for cybersecurity is designed to protect against emerging threats that are tough to identify and mitigate, like expanding attack vectors.

Its ultimate aim is to address the increasing volume and velocity of such attacks, particularly ransomware. It enables predictive analytics that helps security teams instantly identify, analyze, and neutralize cyber threats. AI-enabled approaches for threat detection automate anomaly detection, identify vulnerabilities, and respond to attacks quickly. AI in threat detection involves machine learning models that evaluate network traffic, user behavior, and system logs.
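To make the idea of automated anomaly detection a little more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: it uses a simple statistical baseline (a z-score) as a stand-in for the machine learning models described above, and the function names, the threshold of 3, and the failed-login counts are all hypothetical.

```python
from statistics import mean, stdev


def fit_baseline(values):
    """Summarize known-normal activity as (mean, sample standard deviation)."""
    return mean(values), stdev(values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that lies more than `threshold` standard deviations from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical data: failed logins per hour during a period assumed to be normal.
baseline_counts = [4, 6, 5, 7, 5, 6, 4, 5]
baseline = fit_baseline(baseline_counts)

# Hypothetical new observations pulled from system logs.
new_counts = [5, 6, 48, 7]
flagged = [i for i, count in enumerate(new_counts) if is_anomalous(count, baseline)]
print(flagged)  # indices of the hours that look unusual
```

A production system would likely combine many signals (network traffic, user behavior, system logs) and retrain its baseline as normal activity shifts; this sketch only shows the basic pattern of learning what is normal and flagging what deviates from it.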

AI in automated response