Nitor Infotech, an Ascendion company, is a software product development services company that builds world-class products and solutions by combining cutting-edge technologies for web, cloud, data, and devices. With digitalization strategy as its centrepiece, Nitor helps businesses build disruptive solutions using readily deployable and customizable accelerators. Focused on innovation in healthcare and technology, Nitor provides solutions and services that significantly improve product time to market, helping businesses deliver quality healthcare applications and better customer engagement. Our offerings help companies engage patients, empower care teams, optimize clinical and operational effectiveness, and transform the care continuum.



Regression Testing Best Practices Every Developer Should Know

If you’ve ever made a small tweak to your code, maybe just a bug fix or a UI adjustment, and suddenly noticed something else breaking mysteriously, welcome to the world where regression testing becomes your best friend.

So, what is regression testing really? It’s the process of re-running previously executed test cases to ensure that new code changes haven't negatively impacted existing functionalities. And trust us, skipping it can lead to nightmarish bugs in production.

Let’s dive into some regression testing best practices that every developer, whether a beginner or seasoned, should know.

1. Automate Early and Often

Manual testing is okay for small projects, but for any application that needs to scale, automated regression testing is a game-changer. It saves time, improves accuracy, and ensures consistency across test runs. With the right test automation tools, you can turn repetitive tests into reliable safety nets.
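
To make "automated regression test" concrete, here is a minimal sketch using Python's built-in `unittest`. The `apply_discount` function and its expected values are hypothetical; the idea is to pin down behavior already known to be correct so that a later change which alters it fails immediately.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountRegressionTests(unittest.TestCase):
    """Pins current, known-good behavior so an unintended change fails loudly."""

    def test_known_good_outputs(self):
        # Expected values captured from a release that was verified as correct.
        cases = [(100.0, 10, 90.0), (59.99, 0, 59.99), (20.0, 100, 0.0)]
        for price, percent, expected in cases:
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_negative_discount(self):
        # Guards a hypothetical, previously fixed bug: negative discounts raised prices.
        with self.assertRaises(ValueError):
            apply_discount(100.0, -5)


def run_regression_suite() -> unittest.TestResult:
    """Load and run the suite programmatically, e.g. from a CI script."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountRegressionTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    # Non-zero exit code lets a pipeline treat failures as a broken build.
    raise SystemExit(0 if run_regression_suite().wasSuccessful() else 1)
```

In practice you would usually let a runner such as `python -m unittest` or pytest discover these tests instead of calling them by hand.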

2. Prioritize Test Cases Wisely

Not all test cases need to run every time. Focus on high-risk areas such as payment gateways, login functionality, or anything customer-facing. Prioritize based on business impact and likelihood of failure. This makes your regression runs faster and more efficient.
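
One simple way to act on this is to score each test by business impact times failure likelihood, then run the riskiest tests that fit a time budget. The sketch below assumes the team assigns 1-to-5 scores by hand; the test names, scores, and greedy selection are illustrative, not a standard algorithm from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class RegressionTest:
    name: str
    business_impact: int     # 1 (cosmetic) .. 5 (revenue- or security-critical)
    failure_likelihood: int  # 1 (stable, rarely touched) .. 5 (changed often, bug-prone)
    duration_s: float        # typical runtime in seconds

    @property
    def risk(self) -> int:
        return self.business_impact * self.failure_likelihood


def prioritize(tests, time_budget_s):
    """Pick the riskiest tests that fit in the time budget (greedy, highest risk first)."""
    ranked = sorted(tests, key=lambda t: (-t.risk, t.duration_s))
    selected, used = [], 0.0
    for test in ranked:
        if used + test.duration_s <= time_budget_s:
            selected.append(test.name)
            used += test.duration_s
    return selected


# Hypothetical suite: names and scores are illustrative.
EXAMPLE_SUITE = [
    RegressionTest("checkout_payment", 5, 4, 120),
    RegressionTest("login", 5, 3, 30),
    RegressionTest("profile_avatar_upload", 2, 2, 60),
    RegressionTest("footer_links", 1, 1, 10),
]

print(prioritize(EXAMPLE_SUITE, time_budget_s=170))
# ['checkout_payment', 'login', 'footer_links']
```

The low-risk `profile_avatar_upload` test is skipped here only because it does not fit the budget; a full run, for example nightly, would still include it.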

3. Use Stable Test Data

Flaky tests are often the result of unstable or inconsistent test data. Always ensure that your test data is controlled, predictable, and relevant to the scenarios you're testing. This improves the reliability of your automated test results.
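
A common way to keep generated test data predictable is to seed a private random generator and avoid reading the real clock. This sketch assumes a hypothetical customer record shape; the point is that every run produces identical data.

```python
import random
from datetime import datetime, timezone


def make_test_customers(count: int, seed: int = 1234) -> list:
    """Build the same hypothetical customer records on every run."""
    rng = random.Random(seed)  # private, seeded generator: unaffected by other random calls
    signup = datetime(2024, 1, 1, tzinfo=timezone.utc)  # fixed date instead of datetime.now()
    return [
        {
            "id": f"cust-{i:04d}",
            "country": rng.choice(["DE", "IN", "US"]),
            "credit_limit": rng.randint(500, 5000),
            "signup_date": signup,
        }
        for i in range(count)
    ]


# Two calls with the same seed produce identical data, so test outcomes can't drift.
assert make_test_customers(3) == make_test_customers(3)
```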

4. Maintain and Update Your Test Suite

As your application evolves, your test suite must evolve too. Regularly update or retire outdated test cases. A bloated test suite slows down your pipeline and dilutes the value of your regression testing efforts.

5. Integrate Testing into CI/CD Pipelines

Integrating regression testing into your CI/CD pipeline ensures you catch bugs early. It acts as a gatekeeper: if something breaks, the build fails, and you can fix it before deploying. This is the core of any mature software testing strategy.

6. Leverage Regression Testing Services (When Needed)

Sometimes, you need an external perspective. Many companies offer regression testing services that bring in fresh eyes, tools, and strategies. This is especially helpful when you're launching big updates or working with legacy code.

7. Monitor Test Results and Optimize

Don't just run tests; analyze them. Track failures, performance trends, and flaky test patterns. This data helps improve your automated regression testing over time.
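
As an illustration of mining test history, the sketch below treats a test as flaky if it both passed and failed on the same commit, and computes per-test failure rates. It assumes your CI can export results as `(test_name, commit_sha, passed)` tuples; that format is an assumption, not something a specific CI product emits.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return tests that both passed and failed on the same commit.

    Same code with a different outcome usually points to flakiness
    rather than a real regression.
    """
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if seen == {True, False}})


def failure_rates(runs):
    """Fraction of recorded runs in which each test failed."""
    totals, failures = defaultdict(int), defaultdict(int)
    for test_name, _commit, passed in runs:
        totals[test_name] += 1
        if not passed:
            failures[test_name] += 1
    return {name: failures[name] / totals[name] for name in totals}


# Hypothetical CI history.
HISTORY = [
    ("test_login", "a1", True), ("test_login", "a1", False), ("test_login", "b2", True),
    ("test_checkout", "a1", False), ("test_checkout", "b2", True),
    ("test_search", "a1", True), ("test_search", "b2", True),
]
print(find_flaky_tests(HISTORY))  # ['test_login']
```

Note that `test_checkout` is not flagged: it failed on one commit and passed on the next, which looks more like a regression that was fixed than like flakiness.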

To wrap up, regression testing isn't just a checkbox in your test plan. It's your defense against the domino effect of unintended consequences. Whether you're using in-house regression testing software, tapping into regression testing services, or building your own automation testing framework, the goal is simple: keep your app stable, your users happy, and your developers sane.

And the next time someone asks, "What are some regression testing best practices?" you'll know exactly what to say.

Looking to future-proof your software quality? Connect with us to leverage advanced regression testing techniques that ensure smarter, faster, and more reliable software releases.

#Regression Testing#CI/CD Testing#Regression Testing Tools#QA Best Practices#Regression Testing Services


What Is Agentic AI? A Quick Primer for Developers

Imagine an AI assistant that doesn’t just wait for your prompt but thinks ahead, makes decisions, and takes action on your behalf, proactively. That’s Agentic AI in a nutshell.

We’re moving beyond the era of passive bots and entering the world of Agentic AI, where intelligent systems can plan, reason, and act autonomously toward goals. If you're a developer or tech enthusiast, this is a game-changer for how we build intelligent applications and a strong signal of where the future of artificial intelligence is headed.

To truly understand the shift, we need to define what makes AI "agentic".

So, What Exactly Is Agentic AI?

Agentic AI refers to AI systems that exhibit agency: the ability to set goals, make context-aware decisions, and execute tasks with minimal human intervention. Unlike traditional AI assistants, which are reactive and need specific instructions, agentic systems can work toward a goal on their own, much like autonomous agents.

They're not just tools; they’re doers. Agentic AI is typically built on top of powerful large language models (LLMs) like GPT or Claude, but it goes a step further. Think of an LLM as the brain, while Agentic AI gives that brain a sense of purpose and direction.

At this point, you might wonder: “Isn’t this just another form of generative AI?”

How Is It Different from Generative AI?

Most of what we use today—like ChatGPT, DALL·E, and Copilot—falls under the generative AI umbrella. These systems are excellent at producing outputs: whether it's text, code, or visuals.

However, Agentic AI goes several steps further. Instead of stopping at generation, it continues with decision-making, task execution, and self-improvement. It can:

· manage multi-step workflows,

· adjust its strategy based on context, and

· even incorporate feedback to improve future performance.

In simple terms: generative AI creates; Agentic AI acts.

If you're someone who builds products, services, or tools, this paradigm shift deserves your attention. For an in-depth look at the AI revolution of 2025, watch this video: AI Showdown: Meet the Brainy Bots Taking Over 2025!

Why Developers Should Care

The rise of Agentic AI transforms what’s possible in intelligent applications. We're not just building smarter chatbots—we're creating systems that collaborate with humans and drive real-world outcomes.

Here are just a few use cases that highlight its potential:

● A customer support AI assistant that monitors tickets, prioritizes by urgency, and automatically resolves known issues

● An autonomous agent that scans the news daily, summarizes market trends, and generates actionable executive briefs

● Sales AI that initiates personalized outreach, follows up with leads, and evolves its messaging based on past response patterns

These are not futuristic ideas; they’re already being prototyped. The future of artificial intelligence is not passive. It’s autonomous, contextual, and strategic: exactly what Agentic AI promises.

So, what does it take to build Agentic AI?

The Architecture Behind It

You’ll need more than just a large language model. A functional agentic system includes:

● Memory: To retain context across interactions and decisions.

● Planning modules: To break down goals into executable steps.

● Tools and plugins: Such as CRMs, browsers, or APIs to perform actions in the real world.

● Feedback loops: To learn from successes and mistakes and get better over time.

Together, these components create an AI that is not only intelligent—but also purposeful and persistent.
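
To show how these four pieces fit together, here is a deliberately tiny agent loop in Python. It is a sketch under heavy assumptions: the `PLAYBOOK` dictionary stands in for an LLM-based planner, and the three ticket tools are hypothetical stubs rather than real CRM or API integrations.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical playbook standing in for an LLM planner that decomposes goals into steps.
PLAYBOOK = {"resolve_ticket": ["fetch_ticket", "classify_issue", "apply_known_fix"]}


@dataclass
class MiniAgent:
    tools: dict                                              # tool name -> Callable[[dict], dict]
    memory: list = field(default_factory=list)               # context kept across steps and runs
    failures: dict = field(default_factory=dict)             # feedback: failure count per tool
    max_failures: int = 2

    def plan(self, goal: str) -> list:
        """Planning: break the goal into steps, dropping tools that keep failing."""
        return [step for step in PLAYBOOK.get(goal, [])
                if self.failures.get(step, 0) < self.max_failures]

    def run(self, goal: str, state: dict) -> dict:
        for step in self.plan(goal):
            try:
                tool: Callable[[dict], dict] = self.tools[step]
                state = tool(state)                          # act through a tool
                self.memory.append(f"{step}: ok")
            except Exception as exc:
                self.failures[step] = self.failures.get(step, 0) + 1
                self.memory.append(f"{step}: failed ({exc})")
                break                                        # stop; the next run re-plans with feedback
        return state


# Hypothetical stub tools; real ones would call a ticketing system, an LLM, or an API.
def fetch_ticket(state: dict) -> dict:
    return {**state, "text": "I cannot reset my password"}


def classify_issue(state: dict) -> dict:
    category = "password_reset" if "password" in state["text"].lower() else "other"
    return {**state, "category": category}


def apply_known_fix(state: dict) -> dict:
    if state["category"] != "password_reset":
        raise ValueError("no known fix for this category")
    return {**state, "status": "resolved"}


agent = MiniAgent(tools={"fetch_ticket": fetch_ticket,
                         "classify_issue": classify_issue,
                         "apply_known_fix": apply_known_fix})
print(agent.run("resolve_ticket", {"id": 42})["status"])  # resolved
```

The feedback loop here is crude (a tool that fails twice is dropped from future plans), but it shows where learning from mistakes plugs into the plan-act-remember cycle.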

Here’s a quick summary for busy software developers:

● Agentic AI is the next step in AI evolution, shifting from reactive tools to autonomous agents.

● It builds on the capabilities of LLMs but adds memory, tools, planning, and goals.

● Unlike generative AI, which focuses on content creation, Agentic AI is built for action and execution.

● The future of artificial intelligence lies in systems that not only understand and generate—but also decide and do.

● If you’re a builder, this is the moment to explore the space, experiment, and rethink how you design intelligent systems.

Because the real magic doesn’t happen when AI gives you an answer. It happens when it solves your problem without being asked.

Ready to build the future with Agentic AI? Contact Nitor Infotech, an Ascendion company, to witness how we can develop autonomous, goal-driven AI solutions that act, not just respond.


BDD Explained Simply: Why It Matters in 2025

Ever feel like your product owner is speaking an ancient tongue while the developers speak an altogether fictional language, and QA is just quietly panicking in the corner?

That’s exactly the chaos that Behavior-Driven Development (BDD) was invented to avoid. If you’re wondering, “What is BDD?” think of it as the tech world’s version of couples' therapy. It gets everyone talking in the same language before the misunderstandings start costing money.

Let’s see how we can define it.

What is BDD?

Behavior-Driven Development (BDD) is a collaborative approach that encourages teams to write requirements in plain language, often using the Given-When-Then format.

This ensures that everyone, from developers to business analysts, shares a common understanding of the application's behavior. This understanding leads to more effective software testing and alignment with business goals.
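
Tools such as Cucumber and Behave work by matching each plain-language Given/When/Then line to a step definition in code. The toy runner below imitates that idea in plain Python; it is not the API of any BDD tool, and the shopping-cart scenario is a made-up example.

```python
import re

STEPS = []  # (compiled pattern, function) pairs: a tiny step-definition registry


def step(pattern):
    """Register a function for any Given/When/Then line matching `pattern`."""
    def decorator(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return decorator


@step(r"a cart containing (\d+) items?")
def given_cart(ctx, count):
    ctx["cart"] = int(count)


@step(r"the customer removes (\d+) items?")
def when_remove(ctx, count):
    ctx["cart"] -= int(count)


@step(r"the cart should contain (\d+) items?")
def then_cart(ctx, count):
    assert ctx["cart"] == int(count), f"expected {count}, got {ctx['cart']}"


def run_scenario(text):
    """Execute each Given/When/Then/And line against the matching step function."""
    ctx = {}
    for line in text.strip().splitlines():
        keyword, _, phrase = line.strip().partition(" ")
        if keyword not in ("Given", "When", "Then", "And"):
            continue  # e.g. the "Scenario:" title line
        for pattern, func in STEPS:
            match = pattern.fullmatch(phrase)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line.strip()}")
    return ctx


SCENARIO = """
Scenario: Removing items from the cart
  Given a cart containing 3 items
  When the customer removes 1 item
  Then the cart should contain 2 items
"""
print(run_scenario(SCENARIO))  # {'cart': 2}
```

The business-readable scenario stays the shared artifact; only the step functions are developer-owned code.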

Now that we’ve nailed down the basics, let’s take a quick trip through how BDD has grown, and why it still matters today.

The Evolution of BDD

Originally an extension of Test-Driven Development (TDD), BDD has grown to prioritize collaboration and clarity. While TDD focuses on writing tests before code, BDD emphasizes understanding the desired behavior before development begins. This shift has proven especially beneficial in complex projects where miscommunication can lead to costly errors.

As BDD evolved, so did comparisons. Let’s clear the air on how BDD stacks up against its cousin, TDD.

BDD vs. TDD

Although both BDD and Test-Driven Development (TDD) aim to improve code quality, they emphasize different things. TDD focuses on writing tests before code, mostly unit tests, to verify that each piece works correctly. BDD, on the other hand, centers on the application's behavior and promotes collaboration among all stakeholders.

With the differences out of the way, it’s time to dive into the guiding principles that make BDD tick.

Key Principles of BDD

Behavior Specification: Define how the application should behave in various scenarios.

Collaboration: Engage with all stakeholders in the development process.

Plain Language: Use understandable language to describe requirements, reducing ambiguity.

Understanding the “why” behind BDD helps us appreciate the real-world value it brings to modern development teams.

Benefits of BDD Today

Improved Communication: By using a shared language, BDD enhances understanding among team members.

Culture of Collaboration: Writing scenarios together fosters teamwork and shared ownership.

User-Centric Development: Focuses on delivering features that provide real value to users.

Early Issue Detection and Reduced Ambiguity: Identifies potential problems before coding begins.

Automated Testing: Integrates seamlessly with tools like Cucumber and SpecFlow for efficient test automation.

Faster Feedback: Provides immediate insights into the application's behavior, accelerating development cycles.

Enhanced Customer Satisfaction: Ensures the final product aligns with user expectations, boosting satisfaction.

But let’s be real: no approach is perfect. Here’s what to watch out for when implementing BDD.

Challenges and Considerations

While BDD offers many benefits, it's not without challenges:

Potential Verbosity: Writing detailed behavior specifications can be time-consuming.

Tool Complexity: Integrating BDD tools into existing workflows can add setup and configuration overhead.

Learning Curve: It could take some time for teams to get used to new procedures and tools.

Maintenance: Keeping scenarios up to date requires ongoing effort.

However, with proper training and adoption strategies, these challenges can be mitigated.

Of course, the right tools can make or break your BDD journey. Let’s look at what’s in the toolbox.

Popular Tools in BDD

The following are popular tools in BDD:

Cucumber: A popular tool with multilingual support

SpecFlow: Tailored for .NET environments

Behave: Suitable for Python projects

JBehave: Designed for Java applications

Now that you know the tools, let’s see how BDD plays out across a typical development lifecycle.

BDD Lifecycle

The BDD lifecycle unfolds like this:

Discovery: Collaboratively identify desired behaviors.

Formulation: Write scenarios in plain language.

Automation: Implement tests using BDD tools.

Validation: Execute tests and make adjustments based on feedback.

BDD isn’t just about writing tests. It’s about aligning your team, your code, and your users. In 2025, with increasingly complex systems and shorter release cycles, it’s your secret weapon for building software that doesn’t just “work” but works well for everyone.

What’s more, anything that means fewer awkward retro meetings is worth a try, right?

Want to build smarter, faster, and with fewer bugs? Explore Nitor Infotech’s product engineering services! Let’s turn your brilliant ideas into equally brilliant products! Contact us today!


Monolith to Microservices – How Database Architecture Must Evolve

The journey from monolith to microservices is like switching from a one-size-fits-all outfit to a tailored wardrobe: each piece has a purpose, fits perfectly, and works well on its own or with others. But here's the catch: while many teams focus on refactoring application code, they often forget the backbone that supports it all, the database architecture.

If you're planning a monolithic to microservices migration, your database architecture can't be an afterthought. Why? Because traditional monolithic architectures often tie everything to one central data store. When you break your app into microservices, you can't expect all those services to keep calling back to a single shared database. That creates a performance bottleneck and tight coupling, which is exactly the problem microservices are meant to solve.

What does evolving database architecture really mean?

In a monolithic setup, one large relational database holds everything—users, orders, payments; you name it. It's straightforward, but it creates bottlenecks as your app scales. Enter microservices database architecture, where each service owns its data. Without this, maintaining independent services and scaling seamlessly becomes difficult.

Here is what a microservices database architecture looks like:

Microservices Data Management: Strategies for Smarter Database Architecture

Each microservice might use a different database depending on its needs: NoSQL, relational, time-series, or even a sharded database architecture that splits data horizontally across systems.
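
For the sharded option, a service needs a deterministic rule for which shard owns each row. A minimal sketch, assuming `customer_id` as the shard key and four hypothetical shard names, is hash-based routing:

```python
import hashlib

SHARDS = ["orders-db-0", "orders-db-1", "orders-db-2", "orders-db-3"]  # hypothetical names


def shard_for(customer_id: str, shards=SHARDS) -> str:
    """Route a row to a shard by hashing its shard key (here, customer_id)."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


print(shard_for("cust-42"))  # always the same shard for the same customer
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings. With simple modulo routing, changing the number of shards remaps most keys; consistent hashing is a common way to reduce that reshuffling.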

Imagine each service with its own custom toolkit, tailored to handle its unique tasks. However, this transition isn't plug-and-play. You’ll need solid database migration strategies. A thoughtful data migration strategy ensures you're not just lifting and shifting data but transforming it to fit your new architecture.

Some strategies include:

· strangler pattern

· change data capture (CDC)

· dual writes during migration

Choose among them based on each service’s data consistency and availability requirements.
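
To illustrate the change data capture idea, the sketch below applies a stream of change events from the monolith's database to a new service's own store. The `ChangeEvent` shape and the in-memory store are assumptions for illustration; real CDC tools define their own event formats. It also assumes events arrive in log order, which is why tracking the last applied position is enough to make redelivered events harmless.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                # position in the source database's change log
    op: str                 # "insert", "update" or "delete"
    key: str                # primary key of the changed row
    row: Optional[dict]     # new row image; None for deletes


class ServiceStore:
    """The new microservice's own data store, kept in sync from the monolith's change log."""

    def __init__(self):
        self.rows = {}
        self.last_lsn = 0   # checkpoint: highest log position already applied

    def apply(self, events):
        # Assumes events arrive in log order, as CDC pipelines usually deliver them.
        for event in events:
            if event.lsn <= self.last_lsn:
                continue    # already applied: redelivery after a restart is harmless
            if event.op == "delete":
                self.rows.pop(event.key, None)
            else:           # insert and update both upsert the latest row image
                self.rows[event.key] = event.row
            self.last_lsn = event.lsn


EVENTS = [
    ChangeEvent(1, "insert", "order-1", {"total": 10}),
    ChangeEvent(2, "update", "order-1", {"total": 12}),
    ChangeEvent(3, "insert", "order-2", {"total": 5}),
    ChangeEvent(4, "delete", "order-2", None),
]
store = ServiceStore()
store.apply(EVENTS)
store.apply(EVENTS[:2])  # simulated redelivery of already-applied events
print(store.rows)  # {'order-1': {'total': 12}}
```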

What is the one mistake teams often make? Overlooking data integrity and synchronization. As you move to microservices database architecture, ensuring consistency across distributed systems becomes tricky. That’s why event-driven models and eventual consistency often become part of your database architecture design toolkit.
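
The event-driven approach can be sketched with an in-memory bus. Here a hypothetical order service owns its orders and publishes an `OrderPlaced` event, while a customer service keeps its own derived copy. Until the bus delivers the event, the two services disagree, which is eventual consistency in miniature.

```python
from collections import deque


class EventBus:
    """In-memory stand-in for a message broker: publish now, deliver later."""

    def __init__(self):
        self.queue = deque()
        self.subscribers = []

    def subscribe(self, handler):
        self.subscribers.append(handler)

    def publish(self, event):
        self.queue.append(event)

    def deliver_pending(self):
        while self.queue:
            event = self.queue.popleft()
            for handler in self.subscribers:
                handler(event)


class OrderService:
    def __init__(self, bus):
        self.orders = {}  # owned by the order service only
        self.bus = bus

    def place_order(self, order_id, customer_id, total):
        self.orders[order_id] = {"customer_id": customer_id, "total": total}
        self.bus.publish({"type": "OrderPlaced", "customer_id": customer_id, "total": total})


class CustomerService:
    def __init__(self, bus):
        self.lifetime_value = {}  # local copy derived from events, not a shared table
        bus.subscribe(self.on_event)

    def on_event(self, event):
        if event["type"] == "OrderPlaced":
            cid = event["customer_id"]
            self.lifetime_value[cid] = self.lifetime_value.get(cid, 0) + event["total"]


bus = EventBus()
orders = OrderService(bus)
customers = CustomerService(bus)
orders.place_order("o1", "c1", 40)
print(customers.lifetime_value)  # {} (not yet consistent)
bus.deliver_pending()
print(customers.lifetime_value)  # {'c1': 40}
```

This sketch skips durability. In production, the local write and the event publish need to be made reliable together (for example, with a transactional outbox); otherwise a crash between them can lose the event.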

Another evolving piece is your data warehouse architecture. In a monolith, it's simple to extract data for analytics. But with distributed data, you’ll need pipelines to gather, transform, and load data from multiple sources—often in real-time.
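
A batch version of such a pipeline can be sketched as extract, transform, load. The two extract functions return hypothetical hard-coded rows standing in for calls to each service's database or API, and the "warehouse" is just a dictionary. The key step is the join across services, which a monolith could have done in a single query.

```python
from collections import defaultdict


def extract_orders():
    # Hypothetical extract from the order service's own database or API.
    return [{"order_id": "o1", "customer_id": "c1", "total": 40.0},
            {"order_id": "o2", "customer_id": "c2", "total": 15.5},
            {"order_id": "o3", "customer_id": "c1", "total": 10.0}]


def extract_customers():
    # Hypothetical extract from the customer service, which owns customer data.
    return [{"customer_id": "c1", "region": "EU"},
            {"customer_id": "c2", "region": "US"}]


def transform(orders, customers):
    """Join data that no longer lives in one database and aggregate revenue per region."""
    region_of = {c["customer_id"]: c["region"] for c in customers}
    revenue = defaultdict(float)
    for order in orders:
        revenue[region_of.get(order["customer_id"], "UNKNOWN")] += order["total"]
    return [{"region": r, "revenue": round(v, 2)} for r, v in sorted(revenue.items())]


def load(rows, warehouse):
    # Full refresh of the reporting table, so re-running the job doesn't duplicate rows.
    warehouse["revenue_by_region"] = rows


warehouse = {}
load(transform(extract_orders(), extract_customers()), warehouse)
print(warehouse["revenue_by_region"])
```

For near-real-time analytics, the same transform would typically consume change events or domain events as they arrive instead of running as a periodic batch.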

Wrapping Up

Going from monolith to microservices isn’t just a code-level transformation—it’s a paradigm shift in how we design, access, and manage data. So, updating your database architecture is not optional; it's foundational. From crafting a rock-solid data migration strategy to implementing a flexible microservices data management approach, the data layer must evolve in sync with the application.

So, the next time you’re planning that big monolithic to microservices migration, remember: the code is only half the story. Your database architecture will make or break your success.

Pro Tip: Start small. Pick one service, define its database boundaries, and apply your database migration strategies thoughtfully. In the world of data, small, strategic steps work better than drastic shifts.

Contact us at Nitor Infotech to modernize your database architecture for a seamless move to microservices.


Explore expert insights on product engineering, innovation, and scalable software solutions to accelerate your product development journey.

#product engineering#software development#software services#software engineering#product development


End-to-End Product Engineering Services

As markets become more focused on customers, businesses are investing in better technology. This shift has made every stage of a tech product’s life more important. Companies that create products that meet market needs can grow quickly and succeed.

That’s why software product engineering services are in high demand. These services help improve a product’s features, quality, durability, and ease of maintenance. By following the right methods, businesses can build and launch products more affordably while enhancing customer experience.

What Is Product Engineering?

Product engineering refers to the end-to-end process of designing, developing, testing, and maintaining software products with a focus on usability, quality, and cost-efficiency. It integrates various disciplines like mechanical, software, and hardware engineering to create a functional and market-ready product. Unlike traditional software development, product engineering emphasizes a long-term product vision.

Product Engineering Process

Idea Generation: Finding problems or needs in the market.

Concept Design: Making basic drawings or models of the idea.

Prototyping: Building early versions to try out the idea.

Design & Development: Making the product look good and work well.

Testing & Validation: Checking if the product is safe, works properly, and meets standards.

Production: Making the product in large quantities.

Launch & Maintenance: Selling the product and improving it based on customer feedback.

By leveraging expert product engineering services, we at Nitor Infotech transform innovative ideas into robust, scalable solutions that drive business growth and customer satisfaction.

Our Product Engineering Services Expertise

1. BFSI: - We develop secure and scalable solutions to enhance digital transformation across banking, insurance, and financial institutions. Our expertise includes risk management, compliance, and customer experience optimization.

2. Healthcare: - We deliver patient-centric digital health solutions, focusing on EHR integration, telehealth, and AI-driven diagnostics to improve care outcomes and operational efficiency.

3. Retail: - We build personalized, omnichannel retail experiences using advanced analytics, e-commerce platforms, and inventory management systems to boost customer engagement and sales.

4. Manufacturing: - Our smart manufacturing solutions enable automation, predictive maintenance, and digital twins to enhance productivity, reduce downtime, and ensure quality control.

5. Supply chain: - We streamline supply chain operations through real-time tracking, demand forecasting, and intelligent logistics, ensuring transparency, agility, and cost efficiency.

Learn more about our product engineering services excellence.

#product engineering#product engineering services#generative ai#software development#blog#software services#software engineering


5 Real-World Use Cases of LLMs in Enterprise Solutions

Large Language Models (LLMs) have sprinted from research labs into boardrooms, rewriting how enterprises create value. Unlike narrow AI tools that tackle a single task, LLMs learn broad language patterns, then transfer that knowledge across domains with minimal retraining. Below are five concrete scenarios—drawn from production deployments—showing how companies are wielding LLMs to cut costs, boost revenue, and sharpen competitive edges.

1. Customer‑Support Copilots: Instant Answers, Happier Customers

In high‑volume contact centers, response time is king. Firms like Klarna and Shopify embed an LLM behind every chat window: the model triages incoming messages, drafts human‑like replies, and surfaces policy snippets for agents to approve or tweak. Because LLMs understand context, they can resolve tier‑one tickets (password resets, shipping status) without escalation, while flagging emotionally charged or compliance‑sensitive issues for human review. Early adopters report 30–40 percent reductions in average handle time and measurable gains in CSAT. The same copilots whisper suggestions to live agents, cutting onboarding from weeks to days. Crucially, feedback loops—thumbs‑up/down, resolution codes—feed back into the model, so support quality improves continuously.
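
The triage step described above can be sketched as a routing function that decides whether a drafted reply is sent automatically, shown to a live agent, or escalated. The sketch assumes an upstream LLM classifier supplies `predicted_intent` and `confidence`; the keyword list, intents, and 0.8 threshold are illustrative placeholders, not values from the deployments mentioned.

```python
# Illustrative guardrail settings; real values would come from policy and testing.
SENSITIVE_TERMS = ("lawyer", "refund dispute", "data breach", "furious", "cancel my account")
TIER_ONE_INTENTS = {"password_reset", "shipping_status"}


def route_ticket(message: str, predicted_intent: str, confidence: float,
                 threshold: float = 0.8) -> str:
    """Decide what happens to an LLM-drafted reply for one incoming message."""
    text = message.lower()
    if any(term in text for term in SENSITIVE_TERMS):
        return "human_review"   # emotionally charged or compliance-sensitive
    if predicted_intent in TIER_ONE_INTENTS and confidence >= threshold:
        return "auto_resolve"   # tier-one request the model handles confidently
    return "agent_assist"       # draft shown to a live agent to approve or edit


print(route_ticket("How do I reset my password?", "password_reset", 0.93))  # auto_resolve
```

Checking for sensitive content before anything else keeps a confident but inappropriate auto-reply from ever reaching an upset or at-risk customer.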

2. Document Intelligence & Contract Analytics: Turning Word Soup into Structured Gold

Enterprises drown in text: NDAs, statements of work, regulatory filings. Traditional optical character recognition extracts raw words; LLMs go further, identifying meaning. A global insurer trained a domain‑tuned model to pull indemnification clauses, renewal dates, and jurisdiction from multi‑format policies, then push that data into policy‑admin systems. Accuracy leapt from below 80 percent with rules engines to above 95 percent, eliminating thousands of manual review hours. In banking, an LLM paired with retrieval‑augmented generation (RAG) summarizes 100‑page credit agreements into one‑page risk briefs, highlighting covenant breaches. Lawyers still sign off, but billable hours drop, throughput rises, and deals close sooner.

3. Hyper‑Personalized Marketing Content: Infinite Variations, Consistent Voice

Marketers once sweated over A/B copy tests and localization spreadsheets. Now, teams feed an LLM brand guidelines and past top‑performing campaigns; the model generates subject lines, product descriptions, or LinkedIn posts tuned to persona, geography, and season. A fashion e‑commerce giant used this workflow to launch 12 languages in a single quarter, driving a 22 percent lift in click‑through without expanding headcount. Importantly, guardrails such as style prompts, toxicity filters, and human review dashboards ensure that on‑brand doesn’t become off‑color. The creative team pivots from writing every line to orchestrating concepts, freeing hours for strategy and experimentation.

4. Developer Productivity & Software Modernization: A Tireless Pair Programmer

LLMs fine‑tuned on code bases (think GitHub Copilot, Amazon CodeWhisperer) accelerate everything from boilerplate generation to legacy refactors. One Fortune 200 retailer plugged a private LLM into its CI/CD pipeline: developers highlight a COBOL function, receive Java equivalents plus unit tests, and iterate interactively. The pilot converted 1 million lines in six months, triple the original estimate, while slashing defect density by 25 percent. Beyond translation, LLMs answer “why does this fail on Kubernetes?” with suggestions culled from internal runbooks and Stack Overflow. Productivity metrics such as story points per sprint and lead time for changes trend upward, while seasoned engineers spend more time on architectural decisions than syntax wrangling.

5. Enterprise Knowledge Search & Decision Support: From PDF Graveyards to Conversational Insight

Decades of tribal knowledge often languish in PDFs, SharePoint sites, and ticket logs. LLM‑powered semantic search engines, fortified with RAG, let employees ask natural‑language questions— “What’s our VAT policy for SaaS sold in the EU?”—and receive paragraph‑level answers plus source citations. A multinational manufacturer indexed 15 terabytes of documents; engineers now troubleshoot equipment failures 40 percent faster because the system surfaces identical past incidents. Crucially, the architecture keeps proprietary data inside the firewall and logs every query, creating an audit trail critical for compliance. Over time, usage analytics reveal content gaps, informing documentation roadmaps.
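
Here is a minimal sketch of the retrieval half of RAG, with one swap worth naming plainly: it ranks documents by word-count cosine similarity instead of the learned embeddings and vector database a production system would use. The documents and IDs are made up, and the final LLM call is left out; the output is a grounded prompt with citable source IDs.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return up to k (doc_id, text) pairs most similar to the question."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id, text)
              for doc_id, text in documents.items()]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(question, passages):
    """Ground the model: answer only from the retrieved passages and cite them."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return ("Answer using only the sources below and cite them by id. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {question}")


# Hypothetical internal documents.
DOCS = {
    "tax-017": "VAT for SaaS sold to EU business customers uses the reverse charge mechanism.",
    "hr-004": "Employees accrue 25 days of paid leave per year.",
    "ops-311": "Pump P-7 vibration alarms were resolved by replacing the bearing.",
}
QUESTION = "What is our VAT policy for SaaS sold in the EU?"
print(build_prompt(QUESTION, retrieve(QUESTION, DOCS)))
```

Because every passage carries its ID into the prompt, the answer can cite sources, and each query plus its retrieved IDs can be logged for the audit trail described above.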

Across these use cases, recurring success factors emerge. First, domain adaptation matters: even the smartest foundation model benefits from a small corpus of company‑specific examples. Second, human‑in‑the‑loop guardrails—approval queues, explainability layers, policy filters—turn raw AI power into enterprise‑grade reliability. Third, retrieval‑augmented generation sidesteps hallucinations by grounding answers in verifiable documents. Finally, value compounds when LLMs integrate with existing workflows (CRMs, IDEs, ERPs) rather than live in silos.

#llm models#llm development#software development#software services#nitorinfotech#technology#gen ai#software#ai model


Sentiment Analysis: How It Helps Brands Understand Customers

Sentiment analysis may not be new, but its impact is more explosive than ever. As technology evolves, understanding the emotions behind words has become a game-changer for businesses and individuals alike.

Most of us know it as a powerful tool that uses AI and natural language processing (NLP) to understand customer emotions and opinions about brands.

That’s not all. It helps brands make informed decisions and enhance customer experience, creating the kind of experiences that genuinely delight customers.

Let’s explore how it works.

How Sentiment Analysis Works

Its key components are as follows:

Natural Language Processing (NLP): It analyzes text for emotional content.

Text Classification: It categorizes sentiment as positive, negative, or neutral.

Data Aggregation: It collects data from social media, reviews, and forums.
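
To make the classification and aggregation steps concrete, here is a lexicon-based sketch. The tiny word list is an assumption for illustration; real systems typically use trained NLP models, but the flow (score each mention, label it positive, negative, or neutral, then tally by source) is the same.

```python
import re
from collections import Counter

# Tiny illustrative lexicon; production systems use trained models instead.
LEXICON = {"love": 2, "great": 2, "fast": 1, "helpful": 1,
           "slow": -1, "broken": -2, "terrible": -2, "refund": -1}
NEGATORS = {"not", "never", "no"}


def classify(text: str) -> str:
    """Label text positive, negative or neutral from summed word scores."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value  # "not great" counts as negative
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def aggregate(mentions):
    """Tally labels per source; `mentions` is a list of (source, text) pairs."""
    summary = {}
    for source, text in mentions:
        summary.setdefault(source, Counter())[classify(text)] += 1
    return summary


print(classify("I love this app, support was helpful"))  # positive
```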

Let’s not leave out its advanced features:

Emotion detection:

Emotion detection goes beyond basic sentiment analysis. It identifies specific emotions such as happiness, anger, or sadness. This is achieved through more nuanced NLP techniques and machine learning models.

It helps in understanding the emotional nuances behind customer feedback. This allows for more targeted responses and improvements.

Nuanced Sentiment Analysis:

This involves techniques like aspect-based sentiment analysis. This focuses on specific aspects or features of a product or service. It can identify both positive and negative sentiments within the same text.

Nuanced sentiment analysis provides detailed insights into customer preferences and dissatisfaction areas. The result? A business can make precise improvements.
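
Aspect-based analysis can be sketched by splitting a review into clauses and scoring each clause against the aspects it mentions. The aspect keywords and opinion lexicon below are hypothetical and far too small for real use; they only show how one review can yield a positive score for one feature and a negative score for another.

```python
import re

# Hypothetical aspect keywords and opinion words.
ASPECTS = {"battery": {"battery", "charge"},
           "screen": {"screen", "display"},
           "price": {"price", "cost"}}
OPINIONS = {"great": 1, "amazing": 1, "sharp": 1, "affordable": 1,
            "poor": -1, "dies": -1, "expensive": -1, "dim": -1}


def aspect_sentiment(review: str) -> dict:
    """Score each product aspect from the clauses that mention it."""
    totals = {}
    # Split on punctuation and on "but", which often separates contrasting opinions.
    for clause in re.split(r"[.,;!?]|\bbut\b", review.lower()):
        words = set(re.findall(r"[a-z]+", clause))
        score = sum(OPINIONS.get(w, 0) for w in words)
        for aspect, keywords in ASPECTS.items():
            if words & keywords and score:
                totals[aspect] = totals.get(aspect, 0) + score
    # Aspects mentioned without any opinion words are left out.
    return {aspect: ("positive" if s > 0 else "negative") for aspect, s in totals.items()}


print(aspect_sentiment("The screen is amazing but the battery dies by noon."))
# {'screen': 'positive', 'battery': 'negative'}
```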

Bookmark our blog centered on sentiment analysis to understand how it is implemented.

Now we suggest you continue sipping your tea and discovering the benefits of sentiment analysis for brands!

Benefits of Sentiment Analysis for Brands

Here are the major benefits:

Enhanced Customer Experience

Strategic Decision Making

Brand Reputation Management

Competitive Advantage

Enhanced Customer Experience: Sentiment analysis helps identify pain points and areas for improvement. Imagine tailoring your services to meet customer needs more effectively. By listening to customer feedback, you can resolve issues in a flash and build a loyal customer base.

Strategic Decision Making: This tool informs product development and marketing strategies. It does this by providing insights into customer preferences and opinions. It helps you make data-driven decisions that resonate with your target audience. This leads to more effective campaigns and product launches.

Brand Reputation Management: Monitoring and addressing negative sentiments proactively are essential for maintaining a positive brand image. Sentiment analysis enables you to detect early warning signs of potential crises. Once you detect these signs, you can respond swiftly to mitigate damage.
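
One way to turn sentiment data into an early warning is to compare each day's share of negative mentions with a rolling baseline. The sketch below assumes you already have daily `(day, negative, total)` counts; the 7-day window, 2x factor, and 20-mention minimum are illustrative defaults to tune, not recommended values.

```python
def negative_spike_alerts(daily_counts, window=7, factor=2.0, min_mentions=20):
    """Flag days whose negative share is well above the preceding window's share.

    daily_counts: list of (day, negative, total) tuples in date order.
    """
    alerts = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        baseline_neg = sum(neg for _, neg, _ in history)
        baseline_total = sum(total for _, _, total in history)
        day, negative, total = daily_counts[i]
        if total < min_mentions or baseline_total == 0:
            continue  # too little data to judge
        baseline_share = baseline_neg / baseline_total
        if negative / total > factor * baseline_share:
            alerts.append(day)
    return alerts


# Hypothetical counts: a steady 10% negative share, then a spike on d8.
DAYS = [(f"d{i}", 10, 100) for i in range(1, 8)] + [("d8", 35, 100), ("d9", 12, 100), ("d10", 5, 10)]
print(negative_spike_alerts(DAYS))  # ['d8']
```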

Competitive Advantage: Analyzing competitor sentiment allows you to stay ahead in the market. Make sense of how customers perceive your competitors. Then, you can identify gaps in the market and craft brilliant strategies to outperform them!

Onwards to the use cases!

Use Cases for Sentiment Analysis

Take a look at these important use cases:

Guiding product development

Personalizing user engagement

Making the onboarding process simpler

Pinpointing friction in the customer journey

Guiding Product Development: Use sentiment insights to identify areas for improvement. What’s more, you can validate new product ideas.

Personalizing User Engagement: Tailor interactions based on customer emotions and preferences. Why? To enhance their experience, of course.

Simplifying Onboarding: Identify and address friction points in the onboarding process to ensure a smoother customer journey.

Pinpointing Friction in the Customer Journey: Analyze sentiment to identify and resolve bottlenecks. This will take overall customer satisfaction to the level you are dreaming of.

Sentiment analysis is crucial for building trust, loyalty, and a competitive edge. By welcoming sentiment analysis into your business strategy, you can unlock new opportunities for growth and customer satisfaction. Transform your brand's approach to customer engagement today!

Explore how Nitor Infotech can help integrate sentiment analysis into your business strategy.

#sentiment analysis#software development services#blog#software services#User Engagement#product development#software engineering#nitor infotech#software testing#technology


Drive business growth with CPQ (configure, price, quote) software that automates configuration, pricing, and quoting. Learn how to reduce errors, streamline sales cycles, and stay competitive in a dynamic market, and explore how industries like manufacturing, retail, and SaaS benefit from CPQ automation.

#automation company#digital transformation#business analytics#gen ai#data companies#software company development#ai automation#automation process#companies building#customer experience


Top Platform Engineering Practices for Scalable Applications

In today’s digital world, scalability is a crucial attribute for any platform. As businesses grow and demands change, building a platform that can adapt and expand is essential. Platform engineering practices focus on creating robust, flexible systems that not only perform under load but also evolve with emerging technologies. Here are some top practices to ensure your applications remain scalable and efficient.

1. Adopt a Microservices Architecture

A microservices architecture breaks down a monolithic application into smaller, independent services that work together. This approach offers numerous benefits for scalability:

Independent Scaling: Each service can be scaled separately based on demand. This ensures efficient resource utilization.

Resilience: Isolated failures in one service do not bring down the entire application. This improves overall system stability.

Flexibility: Services can be developed, deployed, and maintained using different technologies. This allows teams to choose the best tools for each job.

2. Embrace Containerization and Orchestration

Containerization, with tools like Docker, has become a staple of modern platform engineering. Containers package applications with all their dependencies, which ensures consistency across development, testing, and production environments. Orchestration platforms like Kubernetes further enhance scalability by automating the deployment, scaling, and management of containerized applications. Together, they enable rapid, reliable scaling and help maintain high availability.
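Kubernetes' Horizontal Pod Autoscaler scales a workload using the documented rule desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), and it skips a change when the ratio is within a tolerance (0.1 by default). The Python sketch below approximates that rule with min/max bounds; it is a simplification that ignores details such as stabilization windows and missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current replica count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, 90, 60))   # 5: 90% CPU against a 60% target
print(desired_replicas(4, 63, 60))   # 4: within the 10% tolerance, no change
print(desired_replicas(8, 120, 60))  # 10: 16 requested, capped at max_replicas
```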

3. Leverage Cloud-Native Technologies

Cloud-native solutions are designed to exploit the full benefits of cloud computing. This includes using Infrastructure as Code (IaC) tools such as Terraform or CloudFormation to automate infrastructure provisioning. Cloud platforms offer dynamic scaling, robust security features, and managed services that reduce operational complexity. Transitioning to cloud-native technologies lets teams focus on development while the underlying infrastructure adapts to workload changes.
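IaC tools like Terraform work declaratively: you describe the desired state, and the tool computes a plan of changes against what actually exists. The toy Python sketch below illustrates only that idea; the resource names and the plan() function are hypothetical and are not Terraform or CloudFormation APIs.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired state with actual state and list the changes needed."""
    changes: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for name, config in desired.items():
        if name not in actual:
            changes["create"].append(name)   # declared but missing
        elif actual[name] != config:
            changes["update"].append(name)   # exists but has drifted
    for name in actual:
        if name not in desired:
            changes["delete"].append(name)   # exists but no longer declared
    return changes


desired = {
    "web-server": {"size": "medium", "count": 3},
    "cache": {"size": "small", "count": 1},
}
actual = {
    "web-server": {"size": "medium", "count": 2},
    "old-batch-job": {"size": "large", "count": 1},
}
print(plan(desired, actual))
# {'create': ['cache'], 'update': ['web-server'], 'delete': ['old-batch-job']}
```

Because the desired state lives in version-controlled code, the same plan can be reviewed and re-applied consistently across environments.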

4. Implement Continuous Integration/Continuous Deployment (CI/CD)

A robust CI/CD pipeline is essential for maintaining a scalable platform. Continuous integration ensures that new code changes are automatically tested and merged. This reduces the risk of integration issues. Continuous deployment, on the other hand, enables rapid, reliable releases of new features and improvements. By automating testing and deployment processes, organizations can quickly iterate on their products. They can also respond to user demands without sacrificing quality or stability.
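The core CI/CD guarantee, that a change ships only if every earlier stage passes, can be sketched in a few lines of Python. The stage names and the run_pipeline() helper are hypothetical stand-ins for what a real CI system would do by calling build tools and test runners.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure, so a broken build never deploys."""
    completed = []
    for name, step in stages:
        if not step():
            print(f"Stage '{name}' failed; stopping the pipeline.")
            break
        completed.append(name)
    return completed


deployed = []


def deploy() -> bool:
    deployed.append("v2")  # stand-in for pushing a release
    return True


stages = [
    ("build", lambda: True),
    ("unit-tests", lambda: True),
    ("integration-tests", lambda: False),  # simulate a failing integration test
    ("deploy", deploy),
]

print(run_pipeline(stages))  # prints the failure message, then ['build', 'unit-tests']
print(deployed)              # [] because deploy never ran
```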

5. Monitor, Analyze, and Optimize

Scalability isn’t a one-time setup—it requires continuous monitoring and optimization. Implementing comprehensive monitoring tools and logging frameworks is crucial for:

tracking application performance,

spotting bottlenecks, and

identifying potential failures.

Metrics such as response times, error rates, and resource utilization provide insights that drive informed decisions on scaling strategies. Regular performance reviews and proactive adjustments ensure that the platform remains robust under varying loads.
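As a minimal sketch of turning raw request data into the metrics mentioned above, the Python below computes a nearest-rank p95 latency and a server-error rate, then compares them against budgets. The sample data and the 300 ms / 1% thresholds are illustrative assumptions, not recommended values.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests: list[tuple[float, int]]) -> dict[str, float]:
    """requests: (latency_ms, http_status) pairs, e.g. parsed from an access log."""
    latencies = [latency for latency, _ in requests]
    server_errors = sum(1 for _, status in requests if status >= 500)
    return {
        "p95_ms": percentile(latencies, 95),
        "error_rate": server_errors / len(requests),
    }


def needs_attention(summary: dict[str, float], p95_budget_ms: float = 300.0,
                    max_error_rate: float = 0.01) -> bool:
    """Flag the service if either metric breaks its (hypothetical) budget."""
    return summary["p95_ms"] > p95_budget_ms or summary["error_rate"] > max_error_rate


requests = [(120.0, 200)] * 18 + [(450.0, 200), (900.0, 503)]
summary = summarize(requests)
print(summary)                   # {'p95_ms': 450.0, 'error_rate': 0.05}
print(needs_attention(summary))  # True
```

Percentiles are used instead of averages because a handful of slow requests can hide behind a healthy-looking mean.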

6. Focus on Security and Compliance

As platforms scale, security and compliance become increasingly complex yet critical. Integrating security practices into every stage of the development and deployment process—often referred to as DevSecOps—helps identify and mitigate vulnerabilities early. Automated security testing and regular audits ensure that the platform not only scales efficiently but also maintains data integrity and compliance with industry standards.
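One simple form of automated security testing is scanning code for hardcoded credentials before it is merged. The Python sketch below flags lines containing strings shaped like long-term AWS access key IDs ("AKIA" followed by 16 uppercase letters or digits); the sample uses AWS's published example key. Dedicated secret scanners ship many such rules, so treat this as an illustration rather than a complete tool.

```python
import re

# Shape of a long-term AWS access key ID: "AKIA" plus 16 uppercase letters or digits.
AWS_KEY_PATTERN = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_hardcoded_keys(source: str) -> list[int]:
    """Return 1-based line numbers that appear to contain an AWS access key ID."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if AWS_KEY_PATTERN.search(line)
    ]


snippet = '''
import boto3
ACCESS_KEY = "AKIAIOSFODNN7EXAMPLE"
region = "us-east-1"
'''
print(find_hardcoded_keys(snippet))  # [3]
```

Run as a pre-commit hook or an early CI stage, a check like this catches leaked credentials before they reach the repository history.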

Scalable applications require thoughtful platform engineering practices that balance flexibility, efficiency, and security. When organizations adopt a microservices architecture, embrace containerization and cloud-native technologies, and implement CI/CD and continuous monitoring, they can build platforms capable of handling growing user demand. These practices streamline development and deployment, and they help ensure that your applications are prepared for the future.

Read more about how platform engineering powers efficiency and enhances business value.

#cicd#cloud native#software development#software services#software engineering#it technology#future it technologies

Exploring Black Box Testing: Techniques, Pros, and Cons

If you’ve ever wondered how software applications get tested without delving into their code, you’re thinking of black box testing. In software engineering, this type of testing evaluates the functionality of an application without examining its internal workings.

But how do testers approach black box testing in practice? Let’s look at some proven techniques.

How to use black box testing?

Boundary value analysis: It focuses on edge cases, such as maximum and minimum input values.

Equivalence partitioning: It divides input data into invalid and valid sets for efficient testing.

State transition testing: This tests how software behaves when it is transitioning between states.

Decision table testing: It maps inputs and expected outputs in complex scenarios.

Error guessing: It is based on the tester’s past knowledge and intuition to discover bugs in the system.
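Boundary value analysis and equivalence partitioning are easiest to see on a concrete rule. Assume a hypothetical form field that accepts ages from 18 to 60 inclusive; the Python sketch below tests it purely through inputs and expected outputs, without looking at how is_eligible_age() is implemented.

```python
def is_eligible_age(age: int) -> bool:
    """Hypothetical system under test: accepts ages 18-60 inclusive."""
    return 18 <= age <= 60


# Equivalence partitioning: one representative value per input class.
partitions = {
    "below range (invalid)": (10, False),
    "within range (valid)": (35, True),
    "above range (invalid)": (75, False),
}

# Boundary value analysis: values on and on either side of each boundary.
boundaries = {17: False, 18: True, 19: True, 59: True, 60: True, 61: False}


def run_black_box_checks() -> list[str]:
    failures = []
    for label, (value, expected) in partitions.items():
        if is_eligible_age(value) != expected:
            failures.append(f"partition '{label}' failed for {value}")
    for value, expected in boundaries.items():
        if is_eligible_age(value) != expected:
            failures.append(f"boundary {value} failed")
    return failures


print(run_black_box_checks())  # [] means every check passed
```

Partitioning keeps the number of cases small, while the boundary cases target the off-by-one mistakes (for example, writing `<` instead of `<=`) that representative values tend to miss.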

What are the types of testing under black box testing?

But where do we use these black box testing techniques? Here’s a look at their types and practical uses in testing.

Functional testing: This checks what the application does. Think of it as testing whether the buttons on a laptop work or whether the login screen does what it is supposed to. For example, can you log in with the right username and password, does the ‘reset password’ field work, and what happens if you forget your password? Retesting is done to confirm that something that was broken is now fixed.

Non-functional testing: This checks how the application performs. It’s like testing how strong or fast something is. Here are some smaller types of non-functional testing:

Performance testing: This tests how well the application performs under load. Can it handle a lot of users at the same time?

Accessibility testing: Is it easy for people with disabilities to use it (like hearing or vision impairments)?

Usability testing: Is the app easy to use and can it be used without instructions?

Security testing: Is your personal data safe with the app? Can someone hack into your system?

Regression testing: This makes sure that new changes in the application don’t break existing functionality.

Knowing the types of black box testing demonstrates its breadth, but what about the benefits of black box testing?

What are the benefits of black box testing?

Black box testing brings several advantages to software testing.

It simplifies the process by focusing on inputs and outputs rather than the code.

It enhances the overall software development quality by focusing on end-user scenarios.

Automation testing can speed up repetitive tasks, improving efficiency and reducing the cost of fixing bugs later.

No process is perfect, and black box testing is no exception. Here are some hurdles to consider.

What are the problems with black box testing?

Limited scope: Without access to the code and an understanding of the business domain, testers can miss edge cases.

Test case dependency: Black box testing depends on well-defined test cases, and those test cases must be evaluated continuously to ensure comprehensive coverage.

The pesticide paradox: Running the same tests repeatedly eventually stops uncovering new defects, much as pests become resistant to a pesticide that is used over and over. To avoid this, testers need to regularly diversify and update their test cases with new techniques and scenarios.
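One way to keep tests from going stale is to generate fresh inputs on every run and check properties that must always hold. The Python sketch below does this for a hypothetical normalize_username() function and logs the random seed so any failure can be reproduced. Libraries such as Hypothesis offer a far more thorough version of this property-based approach.

```python
import random


def normalize_username(name: str) -> str:
    """Hypothetical function under test: trims whitespace and lowercases."""
    return name.strip().lower()


def random_username(rng: random.Random) -> str:
    # Mix upper/lower case, digits, underscores, and surrounding spaces.
    core = "".join(rng.choice("abcdefXYZ019_") for _ in range(rng.randint(1, 12)))
    return " " * rng.randint(0, 3) + core + " " * rng.randint(0, 3)


def run_randomized_checks(seed: int, runs: int = 200) -> int:
    rng = random.Random(seed)  # record the seed so a failing run can be replayed
    for _ in range(runs):
        name = random_username(rng)
        result = normalize_username(name)
        # Properties that should hold for every input, not just hand-picked ones.
        assert result == result.strip(), repr(name)
        assert result == result.lower(), repr(name)
        assert normalize_username(result) == result, repr(name)  # idempotent
    return runs


seed = random.randrange(2**32)  # a new seed each run means new inputs each run
print(f"seed={seed}, checks passed: {run_randomized_checks(seed)}")
```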

Thankfully, automation tools can take over the execution of repetitive scenarios, freeing testers to focus on edge cases and exploratory testing.

Automation and black box testing

Incorporating automation tools into black box testing can significantly improve efficiency. Automated testing scripts are invaluable for performance testing or running regression suites.

Black box testing is an integral part of software development services, offering an end user-centric perspective to ensure performance and functionality. By using robust testing techniques and automation testing, software developers can create software that’s scalable, reliable and ready for modern users. Visit Nitor Infotech to learn more about our software development services.

#app development#application development#web application#software for apps#software development#it software development#user testing#black box testing

In this blog, we discuss six best practices for creating effective API documentation, with an emphasis on clarity, consistency, and practical examples. Dive in to learn the key practices that will help you create API documentation that is easy to understand.

Additionally, you’ll explore how Generative AI can significantly optimize the documentation process. It includes everything from generating code samples to troubleshooting. Also, learn how organizations can improve the usability and efficiency of their API documentation by using GenAI. This will ultimately enhance the developer experience.

#api documentation#api software#using api#api methods#api best practices#example api documentation#best api#api reference#api example#software documentation#technical document#api documentation tool#software services#Nitor Infotech

The blog discusses the role of AI scribes in revolutionizing healthcare management. It highlights how AI-powered tools streamline documentation, enhance accuracy, reduce administrative burdens, and improve patient care, enabling healthcare professionals to focus more on delivering quality medical services.

#medical scribe#artificial intelligence in healthcare#ai and healthcare#primary care physician#healthcare#nitorinfotech#software development#blog#software services

AI in DevSecOps: Revolutionizing Security Testing and Code Analysis

DevSecOps, short for Development, Security, and Operations, is an approach that integrates security practices into the DevOps workflow. Rather than treating security as a separate final step, it builds security checks into the workflow itself. Traditionally, software development focused on speed and efficiency, often leaving security to the final stages.

However, the rise in cyber threats has made it essential to integrate security into every phase of the software lifecycle. This evolution gave rise to DevSecOps, ensuring that security is not an afterthought but a shared responsibility across teams.

From DevOps to DevSecOps: The Main Goal

The shift from DevOps to DevSecOps emphasizes embedding security into continuous integration and delivery (CI/CD) pipelines. The main goal of DevSecOps is to build secure applications by automating security checks. This approach also fosters a culture where developers, operations teams, and security experts collaborate seamlessly.
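An automated security check in a CI/CD pipeline often boils down to a gate: collect scanner findings and fail the build if any meet a severity threshold. The Python sketch below assumes a hypothetical findings format with id, severity, and location fields; real scanners each have their own report formats that would need to be normalized first.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def security_gate(findings: list[dict], fail_at: str = "high") -> bool:
    """Return True if the build may proceed; False if any finding meets the threshold."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]
    for f in blocking:
        print(f"BLOCKING {f['id']} ({f['severity']}) in {f['location']}")
    return not blocking


# Hypothetical findings, e.g. normalized from a scanner's report.
findings = [
    {"id": "SQLI-001", "severity": "high", "location": "orders/api.py"},
    {"id": "HDR-004", "severity": "low", "location": "web/headers.py"},
]

if not security_gate(findings):
    print("Security gate failed: fix blocking findings before merging.")
```

Making the threshold a parameter lets teams start strict on critical issues only and tighten the gate over time.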

How is AI Reshaping the Security Testing & Code Analysis Industry?

Artificial intelligence and generative AI are transforming security testing and code analysis by enhancing precision, speed, and scalability. Manual code reviews and testing are time-consuming and error-prone. AI-driven solutions automate much of this work, enabling real-time vulnerability detection and smarter decision-making.

Let’s look at how AI does that in detail:

AI models analyze code repositories to identify known and unknown vulnerabilities with higher accuracy.

Machine learning algorithms predict potential attack vectors and their impact on applications.

AI tools simulate attacks to assess application resilience, saving time and effort compared to manual testing.

AI ensures code adheres to security and performance standards by analyzing patterns and dependencies.

As you can imagine, this brings several benefits:

Reducing False Positives: AI algorithms improve accuracy in identifying real threats.

Accelerating Scans: Scans that can take hours with traditional methods can often be completed in minutes with AI-powered tools.

Self-Learning Capabilities: AI systems evolve based on new data, adapting to emerging threats.
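"Fewer false positives" is easiest to reason about with precision and recall. The Python sketch below uses hypothetical triage results for two scanners: precision is the share of flagged issues that turn out to be real, and recall is the share of real issues that get flagged. A tool can trivially reduce false positives by flagging less, so both numbers matter.

```python
def precision_recall(flagged: set[str], confirmed: set[str]) -> tuple[float, float]:
    """Precision: share of flagged issues that are real. Recall: share of real issues flagged."""
    true_positives = len(flagged & confirmed)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(confirmed) if confirmed else 0.0
    return precision, recall


# Hypothetical triage results: V* are confirmed vulnerabilities, F* are false alarms.
confirmed = {"V1", "V2", "V3", "V4"}
scanner_a = {"V1", "V2", "V3", "F1", "F2", "F3", "F4", "F5"}
scanner_b = {"V1", "V2", "V3", "V4", "F1"}

print(precision_recall(scanner_a, confirmed))  # (0.375, 0.75)
print(precision_recall(scanner_b, confirmed))  # (0.8, 1.0)
```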

Now that we know about the benefits AI has, let’s look at some challenges AI could pose in security testing & code analysis:

AI systems require large datasets for training, which can expose sensitive information if not properly secured. This could cause disastrous data leaks.

AI models trained on incomplete or biased data may lead to blind spots and errors.

While AI automates many processes, over-reliance can result in missed threats that require human intuition to detect.

Cybercriminals are leveraging AI to create advanced malware that can bypass traditional security measures, posing a new level of risk.

Now that we know the current scenario, let’s look at what AI in DevSecOps might look like in the future:

The Future of AI in DevSecOps

AI’s role in DevSecOps will expand with emerging trends such as:

Advanced algorithms will proactively search for threats across networks to prevent attacks.

Future systems will use AI to detect vulnerabilities and automatically patch them without human intervention.

AI will monitor user and system behavior to identify anomalies, enhancing the detection of unusual activities.

Integrated AI platforms will facilitate seamless communication between development, operations, and security teams for faster decision-making.
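Behavioral anomaly detection often starts from a statistical baseline before any machine learning is applied. The Python sketch below flags a value that sits more than three standard deviations from its historical mean. It is a simple z-score check, not an AI model, and the failed-login counts are hypothetical.

```python
import statistics


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag value if it lies more than `threshold` standard deviations from the history mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs at least two points
    if stdev == 0:
        return value != mean  # flat history: any change is unusual
    return abs(value - mean) / stdev > threshold


# Hypothetical hourly counts of failed logins for one account.
failed_logins_per_hour = [4, 6, 5, 7, 5, 6, 4, 5]
print(is_anomalous(failed_logins_per_hour, 7))   # False
print(is_anomalous(failed_logins_per_hour, 40))  # True
```

Comparing new values against a historical window, rather than including them in the baseline, keeps a single spike from inflating the standard deviation and hiding itself.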

AI is revolutionizing DevSecOps by making security testing and code analysis smarter, faster, and more effective. While challenges like data leaks and algorithmic bias exist, its potential far outweighs the risks it poses.

To learn how our AI-driven solutions can elevate your DevSecOps practices, contact us at Nitor Infotech.

#continuous integration#software development#software testing#engineering devops#applications development#security testing#application security scanning#software services#nitorinfotech#blog#ascendion#gen ai

Are you eager to delve into the core of web development? Join us as we explore Backend for Frontend (BFF), a pattern that places an intermediary layer between front-end clients and back-end services, tailoring data for each distinct client, streamlining UI customization, and accelerating development. Learn how BFF quietly improves web development speed and performance, and stay informed about the ever-evolving web development landscape with Nitor Infotech.
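To illustrate the BFF idea, the Python sketch below shapes one hypothetical order record from a shared back-end service into two client-specific payloads: a compact one for a mobile list screen and a richer one for a desktop details page. In practice each BFF would usually be a separate service owned by the front-end team; plain functions keep the example self-contained.

```python
# Hypothetical order record returned by a shared back-end service.
ORDER = {
    "id": "A-1001",
    "status": "shipped",
    "items": [
        {"sku": "sku-1", "name": "Yoga Mat", "qty": 1, "price": 25.0},
        {"sku": "sku-2", "name": "Water Bottle", "qty": 2, "price": 12.5},
    ],
    "shipping_address": {"city": "Pune", "postcode": "411001"},
    "internal_audit_log": ["created", "paid", "packed", "shipped"],
}


def order_total(order: dict) -> float:
    return sum(item["qty"] * item["price"] for item in order["items"])


def mobile_bff(order: dict) -> dict:
    """Compact payload for a mobile order-list screen."""
    return {
        "id": order["id"],
        "status": order["status"],
        "item_count": sum(item["qty"] for item in order["items"]),
        "total": order_total(order),
    }


def web_bff(order: dict) -> dict:
    """Richer payload for a desktop order-details page; internal fields stay hidden."""
    return {
        "id": order["id"],
        "status": order["status"],
        "items": [
            {"name": item["name"], "qty": item["qty"], "line_total": item["qty"] * item["price"]}
            for item in order["items"]
        ],
        "total": order_total(order),
        "ship_to_city": order["shipping_address"]["city"],
    }


print(mobile_bff(ORDER))
# {'id': 'A-1001', 'status': 'shipped', 'item_count': 3, 'total': 50.0}
```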

#micro services#Backend for Frontend#web application development service#front end development#microservices architecture patterns#web app development#software development#software services#nitorinfotech#software engineering

A Comprehensive Introduction to Generative AI Application Development

Remember the last time you used your phone without using any application? Me neither. Web and mobile applications are essential tools that make our daily tasks easier, connect us with others, and bring countless services right to our fingertips.

From mobile apps that we use for deliveries to web apps that help enterprises solve major business problems, applications shape the digital landscape. Application development is the entire process through which an app is made. It involves understanding the business requirements, designing how the application will look, coding, testing, and finally deploying it.

Now that you know about application development, let’s explore how generative AI is revolutionizing the process, making it faster and more adaptable than ever.

What is Generative AI and how can we use it for Application Development?

Generative artificial intelligence (GenAI) is a type of AI that learns patterns from data and uses them to generate new content. It is predominantly built on deep-learning models, which can be trained on specific data to produce new content such as audio, images, text, code, videos, and much more.

It can even imitate an author’s style and create content that resembles it. You have probably heard of ChatGPT: it’s a generative AI chatbot that uses patterns learned from its training data to generate responses.

Application development is a challenging process which involves multiple stages from idea creation to prototyping to deployment and monitoring. The entire process can take months. GenAI can transform application development by making it faster and more efficient.

You can use generative AI for developing your application in the following ways –

Code assistance

Visualizing UI/UX ideas for prototypes

Sample test case generation

Automated testing, bug detection, and improving code quality before deployment.

Analyzing data to provide personalized content and to understand user behavior and application performance

Automatically generating documentation

Detection of security issues and potential threats

Continuous monitoring

Thus, we can see that generative AI simplifies different parts of application development, making the process smarter and more focused on user needs.

Using GenAI for application development sounds great, but you might want to know whether it’s worth it for you and your business. So, let’s turn to the business benefits.

Business benefits of using GenAI for Application Development

Faster Time to Market: Generative AI speeds up development by automating coding, prototyping, and testing. Companies using AI have seen a 30% faster time to market, letting them launch products quicker and stay ahead.

Cost Savings: Automating repetitive tasks with AI reduces the need for extra resources in coding and testing. Deloitte found that organizations using AI save up to 40% on operational costs, freeing up funds for other purposes.

Increased Developer Productivity: Generative intelligence boosts productivity by taking care of routine and repetitive tasks, allowing developers to focus on bigger goals. McKinsey reports that AI tools can improve developer productivity by 50%, leading to faster project progress.

Better Product Quality: AI tools catch bugs early in development, which means fewer issues after launch and a more stable product, resulting in improved user satisfaction.

Personalized User Experiences: Generative AI uses data insights to adapt apps to user preferences, helping boost engagement and retention, which supports growth.

Implementing GenAI in application development yields these significant business advantages, helping companies thrive in a fast-paced digital economy.

If you’d like to know more about our GenAI services and how they can optimize your application development process, reach out to us at Nitor Infotech.

#artificial intelligence#artificial ai#generative ai#gen ai#ai technology#ai models#generative ai tool#artificial intelligence models#app development#mobile app#mobile and apps#smartphone app development#websites application

Low-Code Development Made Simple: A Step-by-Step Guide

In a world where rapid innovation and digital transformation are paramount, traditional software development methods can lead to bottlenecks and delayed project timelines. Low-code development emerges as a solution, allowing organizations to build applications quickly and efficiently through visual interfaces and minimal coding.

Wondering how low-code development differs from traditional methods? Read on to explore the differences, key stages, and strategies for a smooth low-code experience.

Why choose Low-Code Development?

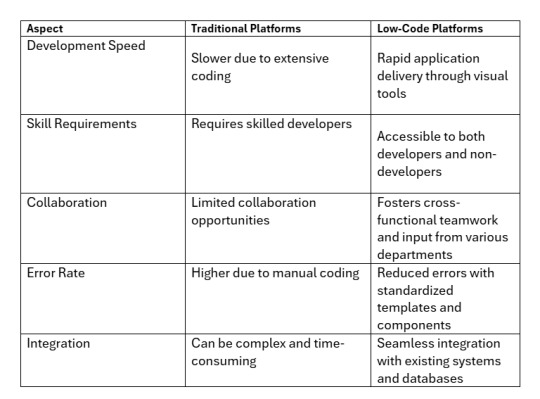

Here’s a table explaining why many prefer low-code development over traditional methods:

We hope you now have a clearer picture of your next steps! Now, let’s explore the key stages of the low-code development journey.

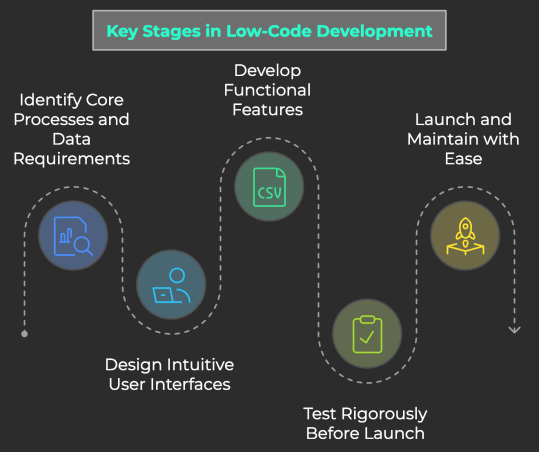

Key Stages in the Low-Code Development Journey

Navigating the low-code development journey involves several essential stages to ensure successful application creation. Let’s break down these stages using the example of developing a mobile fitness application.

1. Identify Core Processes and Data Requirements: Before building your fitness app, it’s crucial to clarify the core processes that will drive user engagement. For instance, you might want to include features like workout tracking, diet logging, and user progress monitoring. Mapping out these processes helps you determine the data you need, which in this case includes user input, activity metrics, and nutritional information.

2. Design Intuitive User Interfaces: Next, think about how users will interact with your app. For a fitness application, you’ll need user-friendly interfaces for logging workouts or meals. Low-code/no-code platforms let you design these interfaces using drag-and-drop functionality, so you can create customized fields that cater to user needs, such as exercise type, duration, and calorie intake.

3. Develop Functional Features: With your processes defined and interfaces in place, it’s time to construct the app. Low-code development empowers you to build robust features without extensive coding. For example, you can integrate a workout schedule that automatically adjusts based on user preferences, leveraging pre-built components to save time while enhancing functionality.

4. Test Rigorously Before Launch: Before launching your fitness app, thorough testing is crucial. Simulate user interactions to identify any technical issues or bugs. Low-code development platforms often provide testing environments where you can evaluate app performance under various scenarios.

5. Launch and Maintain with Ease: Once testing is complete, you’re ready to launch your fitness app. Low-code solutions simplify this process, allowing you to publish your application on various app stores with just a few clicks.

Additionally, these platforms facilitate ongoing updates and feature enhancements, enabling you to respond quickly to user feedback and keep your app relevant in a fast-paced market.
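Although low-code platforms generate most of this for you, it can help to see what steps 1 and 3 amount to. The Python sketch below models the fitness app's data requirements as dataclasses and builds a weekly schedule from the user's preferences; every class, field, and function name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Workout:
    exercise: str
    duration_min: int
    calories: int


@dataclass
class UserProfile:
    name: str
    sessions_per_week: int       # user preference
    preferred_duration_min: int  # user preference
    workouts: list[Workout] = field(default_factory=list)

    def log_workout(self, workout: Workout) -> None:
        self.workouts.append(workout)

    def total_calories(self) -> int:
        return sum(w.calories for w in self.workouts)


def build_schedule(profile: UserProfile, days: list[str]) -> dict[str, int]:
    """Spread the preferred number of sessions evenly across the given days."""
    sessions = max(1, min(profile.sessions_per_week, len(days)))
    step = len(days) / sessions
    return {days[int(i * step)]: profile.preferred_duration_min for i in range(sessions)}


week = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
user = UserProfile(name="Asha", sessions_per_week=3, preferred_duration_min=30)
user.log_workout(Workout("running", 30, 300))
print(build_schedule(user, week))  # {'Mon': 30, 'Wed': 30, 'Fri': 30}

# Changing a preference regenerates the schedule automatically.
user.sessions_per_week = 5
print(build_schedule(user, week))
```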

Next, let’s look at the common hurdles and how to overcome them!

Strategies for Overcoming Low-Code Development Challenges

Here are three common challenges along with their solutions to help you navigate the low-code journey effectively:

a. Limited Customization

Challenge: Low-code platforms may restrict customization, making it difficult to meet unique business needs.

Solution: Choose a flexible platform that allows for custom coding when necessary, enabling tailored solutions without compromising speed.

b. Integration Issues

Challenge: Integrating low-code applications with existing systems can be complicated, risking broken data flows and compatibility issues.

Solution: Select platforms with robust integration capabilities and APIs to facilitate seamless connections with other systems.

c. Governance and Security Concerns

Challenge: Increased access to development can lead to governance and security challenges.

Solution: Implement strict governance frameworks and security protocols to manage user access, data protection, and compliance effectively.
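A governance framework usually starts with role-based access control plus an audit trail. The Python sketch below shows a deny-by-default permission check that logs every decision; the roles and permission names are hypothetical, and a real low-code platform would enforce this through its own access-control settings.

```python
from datetime import datetime, timezone

# Hypothetical roles for a low-code platform; real platforms define their own.
ROLE_PERMISSIONS = {
    "citizen_developer": {"app:edit", "app:preview"},
    "reviewer": {"app:preview", "app:approve"},
    "admin": {"app:edit", "app:preview", "app:approve", "app:publish", "data:export"},
}

audit_log: list[dict] = []


def is_allowed(user: str, role: str, permission: str) -> bool:
    """Deny by default: unknown roles or permissions are rejected."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Record every decision so access can be reviewed during compliance audits.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


print(is_allowed("priya", "citizen_developer", "app:publish"))  # False
print(is_allowed("sam", "admin", "app:publish"))               # True
print(len(audit_log))                                           # 2
```

Separating who may build (citizen developers) from who may publish (admins) lets more people contribute without widening access to production.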

That’s all! By following these key stages and addressing the challenges in your low-code development journey, you can streamline your processes and create impactful applications that meet your users’ needs.

Learn more about cutting-edge tech developments with us at Nitor Infotech.

#application development#app softwares#mobile app development#mobile development company#low code#no code#software services#software development#blog#software engineering#Low-Code Development
