Doing creative things. Don't replace artists: augment them.


Week 20 Update

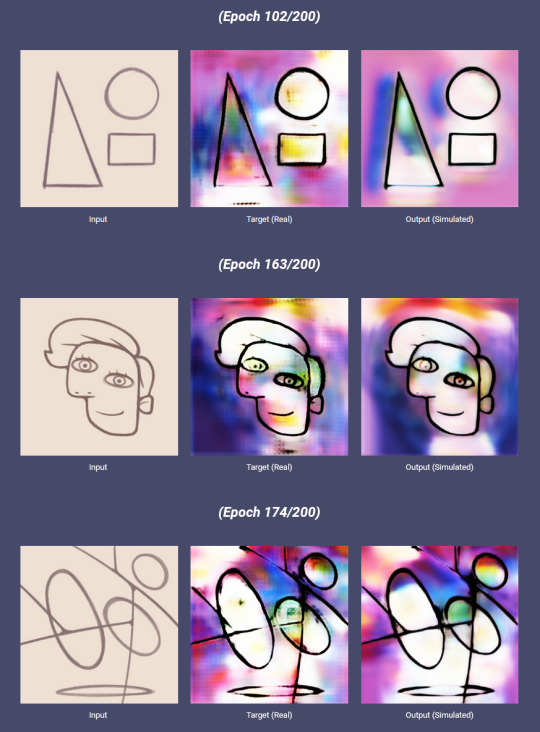

The final week is upon us. I have the 50/50 model up and running, and I plan to finish the full "ouroboros" style generator this coming week, to unveil at the exhibition. Here are some examples from various epochs of training, keeping in mind the order is "sketch/original generated image/newly regenerated image."

A cool effect you'll notice is the smoothing out of certain visual artifacts. I curated these images myself, so I had to hand-pick the pieces to use for the retraining set: some images were unintelligible, and I could not have those taint the retraining. However, I deemed some acceptable despite a few artifacts, and as you can see here, those artifacts get mellowed out as the model retrains, which is really cool.

I like having this kind of control over my sense of "selective breeding." It shows that, while I want to manufacture a somewhat independent "imagination," I, as the creator, am still needed. I want AI and machine learning to still be used as a tool too.


Week (1)9 Update

Things are really ramping up with the curatorial side, and I admit my project has been put on the back burner a bit as a result. However, I have made quite a few sketches in the background to feed into the generator, so that I can curate around half of the outputs to feed back into the dataset. My goal is to have the 50/50 generator model finished, then have the full "ouroboros" model lined up for week 10, so that I have time to fix any kinks I find with the generator.

Despite that, the great thing about this project is its unpredictable and imperfect nature. When considering machine learning and AI in general, we tend to think of reducing biases and ensuring a diverse dataset for general purposes--but that's the great thing about art: there is no true correct answer. In the chaotic and volatile state that comes from having a training dataset of only around 50 artworks, change is a lot more visible. It's truly a neat, chaotic, and expressive exploration at a small scale that opens the door of possibility. I hope that in the next update, be it next week or later this week, you can all observe the next model in the process!


Week (1)8 Update

Well, things are finally getting into motion! Saturday's meeting with the other curators was a great success! Still chugging along in the model training, although I have a nice little mockup of what's to come!

You can see the labels underneath are as follows:

・Original Sketch: My original sketch given as an input to the varying models. Sketches can have varying levels of looseness (such as construction lines still present).
・Sketch2Line Model: This is the first model I tested with, and merely shows an attempt to replicate my lineart. It was an important model that served as a stepping stone to thinking bigger than just "replicate my art."
・Sketch2Full Model: This model is the fully stable model trained to finish my sketches to full pieces, with a wide array of different pieces. This is an important checkpoint.
・89to11 Model: This model is named because it is 89% my art, 11% AI-assisted art, as I am starting to feed its own imagery back into the training sets. I hope to have later models that eventually feed more art back into itself, and I would love to explore how it evolves based on my own curation of art I deem "good enough to approve."
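For anyone curious how a split like 89to11 or 50to50 could be put together, here is a minimal Python sketch. It is only an illustration under my own assumptions, not the actual training code: the function name `mix_training_set`, the file names, and the idea of sampling to a fixed total size are all hypothetical.

```python
import random


def mix_training_set(own_art, approved_generated, generated_share, total, seed=0):
    """Sample `total` file names with roughly `generated_share` coming from generated art."""
    if not 0.0 <= generated_share <= 1.0:
        raise ValueError("generated_share must be between 0 and 1")
    n_generated = round(total * generated_share)
    n_own = total - n_generated
    if n_own > len(own_art) or n_generated > len(approved_generated):
        raise ValueError("not enough images to reach the requested split")
    rng = random.Random(seed)  # fixed seed so a split can be reproduced
    return rng.sample(own_art, n_own) + rng.sample(approved_generated, n_generated)


# Hypothetical file names, only to show the call.
own = [f"own_{i:03d}.png" for i in range(100)]
approved = [f"generated_{i:03d}.png" for i in range(60)]
split_89to11 = mix_training_set(own, approved, generated_share=0.11, total=100)
split_50to50 = mix_training_set(own, approved, generated_share=0.50, total=100)
print(len(split_89to11), len(split_50to50))
```

Only images that passed curation would go into `approved`, so the "good enough to approve" step stays a manual decision outside the code.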

I hope to have a 50to50 model, as well as a fully independent model, perhaps scrapping the 89to11 model, which would give me 5 columns to work with in the evolutionary model. I could do more, but that would consume quite a bit more paper in the printing process. I am also hoping to tackle different genres, such as character sketches, random objects, and abstraction, to fully stress test the model and see what it produces. After all, while I am concerned with quality, the main draw is what occurs, and that unexpected nature will soon be seen at the exhibition.


Week (1)7 Update

Okay, I have introduced some of the images back into the dataset, curating the best of the generated images to pass on as good enough to retrain itself on. While the majority of images are still my art (89%), the effects seen on the simulated outputs look really promising:

I have always been a fan of taking things we see as bad in machine learning, such as a small sample size or bias, and using them in a creative manner. Using a limitation for creative purposes is a concept that has always stuck with me, and these imperfect recreations of already imperfect generative art show a cool smoothing process that I think will be even cooler once I get more checkpoints. I think my plan in the next week is to finally REPLACE some bits of the dataset, and for exhibition, unveil the version of the model that has finally broken free of my own artwork, fully trained on its own generative art. It is still invariably connected to me, but it can now create without direct reference to me in its training set. So the plan for finals week is to maybe get it to a 50/50 split of training with my art and its own art. Exciting stuff!


Week (1)6 Update

Hey all, not much in news today for updates, but that is not a bad thing.

I'll be honest, I had a bit of an off week this time around: been feeling particularly low mentally speaking, but once again, thank you past me for making good progress that allowed me to take a sort of break week.

My HP Sprocket Plus died, and I have acquired a new HP Sprocket Select to replace it (turns out the Plus model is discontinued, who would have known?); I've also been having a tough time with personal matters.

Regardless, I feel I have really gotten to the core of what I want to do: for exhibition, I think showing off my drawings, with input and output, is the way to go. I will also show early progress to show the budding starts of it, and it will really sell the durational aspect of my project.

I think the most impactful part will be the moment I finally take the AI off of my own art, once I have enough curated test images to fully train on. In essence, I can "finish" the project off at the exhibition. As such, these weeks will merely continue the training, as I start to replace the dataset with more images of its own art. This will be really, really cool to see, and I'll probably leave hell week (week 20, or week 10 of this quarter) as a test week for the fully independent model.

Fun stuff ahead!


Week (1)5 Update

Big day today: midterm updates!

For me, most of the moving parts are in place: I have acquired an even better HP Sprocket Plus, so the pictures are a bit bigger, which is awesome (thank you mom for your HP admin connections).

I have also begun to see what it may be like to introduce generated art back into the training dataset. Since I am working with a purposefully small dataset, I need to be really careful in what I choose as "good." Because my model now takes my sketch as input and outputs a full piece, it is also easier to turn a larger volume of sketches into final images: in essence, I can make generated art relatively quickly. As such, I can curate the right pieces and observe the evolution.

So where does that leave us? I think what is nice is that I can use the remaining weeks to streamline the process but also simulate what could be happening as the machine learns more off of its own artwork, seeing what semblance of style it may try to push forth. Or I could find that it may take a long while before a meaningful shift can be found. Either way, I think it'll really help me in making such a process interesting. If viewed like a science experiment, I could even then say that the null hypothesis is true: the imagination cannot exist within the machine, as it needs too much input from me to exist properly.

In any case, exciting questions and theories pop up as I think more about other applications of AI for art. I also hope that in the coming weeks my role as one of the student curators can be fully realized: I truly want to do everything I can to contribute to an amazing exhibition!


Example of HP Sprocket printing as well as the result of current testing! I hope this week I can really start getting to sketching--depending on how many sketches I can get done, I may even decide to filter the results down to what I want the machine to consider a pass.

I also have a fun idea in the content I decide to draw. As of right now, I think it will be fun to study abstract works, really get the shapes in mind and let the AI fill in the rest. This is honestly really cool: I'm excited to see how it is both influenced by my subject matter as well as its own "creative" decisions in filling in that huge gap between sketch and full piece.

Week (1)4 Update

Hooray! Things are working quite smoothly: I was able to run the code locally on my machine, so no more messing with Google Colab and needing to rerun the cells every single session, nor reuploading certain files like the generator.

I have also acquired an HP Sprocket! As such, I am able to print out pieces on a small 2in x 3in piece of paper; these don't come cheap, so while it's fun to sketch, I have to be a bit more choosy with what I decide to print. Perhaps, depending on the quality of the pieces, I can curate the best generated artworks to print out. But yeah: I may need less wall space than I originally expected!

All in all, super excited, as I plan now to start training the AI on its own generated artwork, seeing how a semblance of its own decision making can become reinforced. Very exciting things are happening now!


Oh yeah, so far this has been functioning more like a dev blog, but I do plan on making a more consolidated website, with a link to this blog in case anyone later on would like to see the process and posts leading up to it. I have no qualms with people seeing such things, as who knows, maybe something in my experience can be helpful.

I'll probably be working on getting a Google Sites page more properly filled out with the aims of my project, especially as I start to laser focus my desires and motivations.

Week (1)3 Update

Hello again, and while I need to update this post with a reply later when I return home (and have access to better quality images), I want to give an update into the process!

One aspect, in terms of live drawing and later image exhibit after I have left (with a durational component over the days), is that I plan on getting my hands on an HP Sprocket printer. These are meant to print small images, which is my plan when showing off the images--they are meant to be looked at closely, but they are also meant to exist as part of a large collection of works to really show the steps of training when it comes to the model (and future retraining as the days pass by).

Originally I wanted to translate from sketch to line art, due to wanting a more accurate recreation--however, in this quarter, the idea has evolved into purposefully making big leaps in hopes of finding weird "errors" to be used creatively.

As such, this past week I experimented with training sets with the biggest leap: sketch to full piece. I had not initially considered it, as I was too scared to make such a big leap, especially without much data, but now I have embraced both limitations as a way to confront more avenues of creativity.

I also wanted to say that one idea I would really like to tackle is abstraction, in both the computing and the artistic sense. With my self-identification as creative first, academic second, this feels like a perfect marriage of both. I hope that by training this model on my character art (as I primarily draw characters), I can use that translation to abstract other full pieces and, in a sense, explore a genre of art I had not really considered doable on my part. One could argue I am making a mockery of abstraction in art, but I like to see it more as another foray into that realm, able to coexist with established forms.

I will definitely update with actual images later tonight, maybe even some tests, because this truly feels like a fun place to venture. After all, art should be enjoyable, right?


Week (1)2 Update!

Hello all again! I know the dev posts have been quite quiet since the end of last quarter: I took a well needed break from this project entirely, and I am raring to go as we finish off this quarter!

I want to start out with saying that I am quite close to being done honestly: a lot of the work now is creative experimentation, so pats on the back to myself for really doing the heavy lifting in the first half.

The state I left it at during our final week of the quarter is nicely summarized here:

During demos, I had the important criticism that it merely tries to replicate my lineart, but terribly. Thus ideas were thrown around about intentionally breaking it--giving it images it was not trained to take, and seeing where those results could lead. As such, I don't feel like I want to chase the line art anymore: I want to chase full pieces, and I want to fully embrace all the imperfections that can occur.

As such, we can see it is quite rough--even in training it still struggles to fully replicate, but again, that is the point, and I am okay with playing with the imperfections.

Breaking stuff is going to be a focal point of my experimentations.

So while I still have things to work out creatively, here's the big thing I want to share here in this update: the tentative layout of how I wish to exhibit.

I really want to run this similar to an artist alley situation, but with that fun twist, and a display of my works after I finish the live drawing. This is my fun and intentional take, plus the portability aspect would make it very easy to transport to other locations if need be. I also want to help my fellow colleagues if need be, as I care about their success just as much as mine.

So yeah, get ready for more experimentation!


Week 10: The Final Report for the Quarter

So, here we are, at week 10. I think the best thing for this post would be to summarize this quarter's timeline now.

Week 2: Artist statement and motivations have been established.

Week 3: Timeline established, with explorations into possible models such as Teachable Machine from Google, the text-to-image generators like Stable Diffusion and DALL-E, as well as the image-to-image cGAN known as Pix2Pix.

Week 4: Further locking into Pix2Pix, using tutorials from YouTube to learn more about cGANs in general and to understand a bit better how this model trains on data.

Week 5: Further learning of bits of Python coding, as well as a lot of troubleshooting: installed the needed libraries that were missing. My idea of injecting my sketches into a machine that can finish them into lineart is fully established--I just need to figure out how to actually train this model.

Week 6: Troubleshooting still occurring, and worried about midterm presentations. Got pushed to week 7, but progress seemed quite slow. There were some improvements made to the code, after realizing the nearly 4-year-old code had some weird kinks to work out, due to it utilizing an older version of Python.

Week 7: TRAINING SUCCESS! Pulled through on Monday night, and finally got through a training set in the nick of time. Tuesday I was able to give my midterm presentation with training results. Very exciting breakthrough week.

Week 8: Further dataset production (making more sketches for the model to train off of, but not a big priority currently). Looking into real-time implementation, but it involves other languages with other libraries I am not familiar with. Definitely will take some more learning.

Week 9: Got some nice pointers from the great Prof. Memo Akten--turns out I may be able to use Python to achieve what I want. Not much progress done this week other than image drawings, had other projects to work on from other classes.

And that brings us here to week 10. I am quite proud of where I am, and I'm amazed I actually got here. I had no idea I had the ability to actually get this up and running. I still want to do real time implementation for live drawing, and this will be explored in the next quarter. Even if I am unable to do this live drawing, there are still other real time methods I can do, even if just a performative way to show my drawing process, then feed it into the machine, and later display for everyone else. Regardless of what state things end up being, I am confident that when at the exhibition, you will all see my enthusiasm for this project shine.


Hoo boy; regarding my two main methods of trying to do real time implementation:

If I go the Memo Akten route, I will likely have to simulate a webcam and make some changes to how the video feed from my iPad works, so that the webcam footage is not transformed in any way, since I just need the canvas and sketch to show. I started to realize I need to learn/utilize C++ and the openFrameworks toolkit, so that is going to take some time when it comes to trying to learn enough.

The other option is keijiro's Pix2Pix Unity implementation, but then I would also need to speedlearn some Unity, to be able to edit the code to insert my own trained model. Another issue with using this implementation as well would be that I would have to draw in that program, which would require a completely different training dataset (since I trained my model with a brush that has a certain pressure/opacity/etc settings when drawing).

Obviously, plan C is something I don't wish to pursue, because I really want to do a real time implementation, but I could look into other ways to exhibit, such as durational animation, maybe animating epoch checkpoints to show an evolution in how the model has learned. I would leave this as my backup plan, as I know exactly how I would need to do this, and as such, would just need time to do so. Another option could be a fun timelapse video, removing the real time element but allowing for a fun look into what a real time implementation COULD look like.

Either way, whatever occurs, you can bet I will do my hardest to entertain, and to deliver a project I am proud to put my name on.

Problems

So I have reached my minimum checkpoint, that being getting the model to spit out images after being trained. Currently I want to do some implementation of real time.

I've been looking at other GitHub repos, but I admit the implementation notes are a bit above what I am currently used to--after all, it took me 4 weeks before I could properly get the model to finally train. I have a few options at the ready, like Memo Akten's branch that uses OpenCV, as well as another I found that uses Unity. I plan to work through these, and if somehow by the end of this I cannot get this real time option to work, I most definitely will pursue other avenues.

One backup plan I think would be really cool is to instead animate the evolution of my art as seen through the pix2pix epochs, becoming clearer over time. Time will tell...
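To make that backup plan a bit more concrete, here is a rough Python sketch of how the per-epoch sample images could be put in order and stitched into a looping GIF. Everything specific here is an assumption for illustration: the `epoch_<number>` file naming, the function names, and the use of the third-party Pillow library for writing the GIF.

```python
import re
from pathlib import Path


def order_by_epoch(paths):
    """Sort sample images by the epoch number embedded in their file names."""
    def epoch_of(path):
        # Assumed naming scheme: ".../epoch_<number>.png" (hypothetical).
        match = re.search(r"epoch_(\d+)", Path(path).name)
        if match is None:
            raise ValueError(f"no epoch number in {path!r}")
        return int(match.group(1))
    return sorted(paths, key=epoch_of)


def save_evolution_gif(paths, out_path, ms_per_frame=400):
    """Write the ordered frames to a looping GIF (requires Pillow to be installed)."""
    from PIL import Image  # third-party; imported here so the sorting works without it
    frames = [Image.open(p).convert("RGB") for p in order_by_epoch(paths)]
    frames[0].save(
        out_path,
        save_all=True,              # write every frame, not just the first
        append_images=frames[1:],
        duration=ms_per_frame,      # display time per frame in milliseconds
        loop=0,                     # 0 means loop forever
    )


# Numeric sorting matters: a plain string sort would put epoch_10 before epoch_2.
print(order_by_epoch(["out/epoch_10.png", "out/epoch_2.png", "out/epoch_1.png"]))
```

Calling `save_evolution_gif` on a folder of real epoch outputs would then give a small animation of the art getting clearer over time.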


Hrmm...now seeing actual non-test image results tells me I have a lot of work ahead of me in regards to making my own personal dataset...

No matter--I embrace this challenge!

Image Generation?

Hello again all; been a bit busy lately, so my progress has unfortunately been on the slower end. I really want to get my model to generate images based on sketches it has not seen before.

I have found a promising video linked to the first tutorial I used, where it uses Google Colab. https://www.youtube.com/watch?v=fXgodCC2O7o

Really hoping I can get something out, but I may need some more tinkering. Lots of uploading needs to be done...


Here are some of the outputs of the trained model! This was with just 26 images, and of course, I still have yet to test it on new sketches to see how this will fare, but I'm really excited to now have this huge wall knocked down!

As for the slides, I know the images are not labeled: the order of images goes as follows: sketch (to be translated), lineart (real), and lineart (simulated by generator). You can see that, despite the lacking dataset, it truly is learning. I cannot wait to mess around with feeding images into this trained model, and of course, further developing its capabilities.

I still have yet to decide what avenue I want this project to go for exhibition, but I definitely will be exploring real time possibilities, and if not possible, I will look into static presentations that will at least highlight the work I am making.

TRAINING SUCCESS

LET'S GO, I FINALLY HAVE IT TRAINING SO FAR!

At a later point I will reblog with some first impressions! I underestimated the amount of time it would take for training, but you will all start to see some of the training sets, and thus how the generator has been doing.
