#3D depth sensor

Text

I had a dream that I got my Magic Leap headset and then took it to a forest to create magical crystals and rainbows in the streams there and then I took it to the beach to decorate the sand with glowing shells and stars!!!!!!! and then woke up to realize I’ll soon be able to do these things FOR REAL!!!!!!!

#incessant meowing#I'VE BEEN WAITING SO LONG TO GET A HEADSET THAT WORKS OUTSIDE#the possibilities are mind boggling#i won't actually be bringing it to the beach because yknow. sand.#BUT STILL.... i've got some fuckign PLANS#most of them revolving around celebrating earth day next month#i still can't believe i'm actually getting one of these headsets to work with#it doesn't use passthrough video like other headsets#it actually projects fucking holographic images onto your retinas#it has a depth sensor that can scan your surroundings to generate a 3d map#and this 3d map is used to project shadows of virtual objects onto your surroundings and also generates collision#the lenses have a dimming feature that you can use to turn down the brightness on all or parts of reality#for various purposes involving holograms...... which i will be making......#?!?!?!#like WHAT!!!!!!!!#i wonder if i'll ever be able to wrap my head around any of this#one day this tech will be as commonplace as all the other miraculous computing devices around us we all take for granted#but right now??? i'm living in a scifi novel and i'm losing my mind about it

13 notes


Text

https://www.htfmarketintelligence.com/report/global-3d-depth-sensor-market

1 note


Photo

Furby Resources!

Last updated 2/15/2024 with How To Dye Furby Fur

I added a lot of things since originally posting, so you may want to delete your last reblog and replace it!

Incredible Google Drive folder with a huge variety of Furby content and history

Guide to Furby Fandom Tags

Tips For Buying Furbies

Adult Furby Price Guide | Furby Value Guide For Dummies

Furby Discord Server | Furby Wiki’s Discord Server (direct invite)

Archived Furby content on archive.org

Colorful Google Doc to track Furby projects and collections

Furby Certificate Of Adoption | Furby Adoption Certificate

Images ripped from flash games

Official Furby Tiled Backgrounds

Furby Carrier Pattern | If that doesn’t work patterns are sold on Etsy

All Official Furbys

Eye colors on official models | Common 2005 Furby Eye Colors

Differences between Curly Furby Babies and Sheep Furby Babies

High Quality Transparent Furby Masks

Furbtober Prompts

Pixel Furby Page Dividers

Furby Sticker Scans

THE FURBY ORGAN, A MUSICAL INSTRUMENT MADE FROM FURBIES

Lore

Furby Paradise Manga - 1 chapter scanned and translated

Desktop Furby - 2005 Burger King Freeware

A Deep Dive Into The Furby Fandom

Furby Island Movie Free On YouTube

Printable furbish-english dictionary | Official PDF Dictionary | All Known Furbish Words

Official Furby trainer's guide

Furby Songs YouTube Playlist

Dancing Furby Gameplay & Interacting with Furby - Game Boy Color Japan

Unofficial Guide To Furby Species And Biology

Furby Lore Zine

Every printable from Big Fun In Furbyland (contains lore, Furbish words, phrases, coloring pages and photos)

Make A (non-plush) Furby

Furby Bases Collection on deviantART | Extra Furby Base | Furby Bases on Toyhou.se

Design A Furby Shockwave Game (pictured above , also has a few old Furby mini games) | Can be played through Flashpoint which archives old web games

Official Coloring Pages

AdoptAFurby.com Coloring Pages | List Of Official Coloring Books

Color A Furby Online (pictured above)

Furby's Design-o-Matic (pictured above) | Works with Ruffle’s browser extension

Make A Furb Game (pictured above) | Preview Video

Furby Creator Games on Picrew

Origami Furby Tutorial

Big Fun In Furby Land CD-ROM has a very limited Furby maker pictured in a gif above (works with Windows 10, just right click, click “Mount”, then open Furby.exe it’s an application file)

Furby Patterns on Etsy

Real Life Furby Mods

FURBY TUTORIAL MASTERPOST

3D Printable Face Plates and Furbys

1998 Furby Pattern

Long Furby Pattern

How to Long Furb | Longifying Your Furby

How To Skin A 1998 Furby

Eye Chip Tutorial

How To Make Accurate 1998 Eye Chips

Furby Buddy Pattern

replacing a 1998 furby’s speaker

~ furby beret - crochet pattern ~

Curing Me Sleep Again (when you’re Furby won’t stay awake)

How To Skin A Shelby

How To Dye Furby Fur

Real Life Furby Care

How To Find Your Furby’s Birthday

Furby Name Generator | Another Furby Name Generator

How To Brush Your Furby In Depth Guide

How To Clean A Tilt Sensor

Sync Screw Adjustment

Please suggest additions!!

#furby#my furby#furby art#long furby#furbies#furby community#furbys#custom furby#furblr#furby fandom#webcore#furby resources#safe furby#allfurby#safefurby#eye contact

3K notes


Note

How does moon have human senses? (like taste, touch, smell, sound, hearing). Does moon have a tongue that allows them to taste things despite being a robot?

Lucky you, we love thinking about this stuff so we already have a number of explanations for these.

Hearing: Stereo microphones around where the ears connect to the head feed into an audio processor that determines a noise's placement in 3D space from the differences in acoustics.

Sight: Already drew a thing about this a bit ago! She has a spinning array of sonar depth sensors that essentially create a full 360 field of vision albeit without any color or light. The only data from this sensor is simply how close points are that can show the shape of things and their proximity. For the rest of the information there is a hidden camera on her visor to get light and color in front of her.

Smell: Moon actually cannot smell as she has no reason to breathe like a person does.

Taste: Her tongue is lined with sensors that can determine the chemical makeup of things in close proximity, emulating a "taste" sensation by tying a range of compounds to a variety of different signals.

Touch: Her various limbs have extremely sensitive positioning sensors that compare their actual position to their intended position, such that even a slight touch pushes on them enough that the sensors can work together to determine a general position of the point of impact.

#robotposting#oc:moon#Why is she capable of taste?#robot can have a little food sometimes#as a treat

194 notes


Text

Available never on the Switch! Hypnosis the Game!

• Hypnotist, subject, & switch modes

• Depth sensors & BT pocket watch included

• Spatial 3D sound & HD spirals

• Learn real hypnotic language as you play

• Use real techniques or fantasy

• Highly addictive

• Available in VR

46 notes


Text

Self-driving cars occasionally crash because their visual systems can't always process static or slow-moving objects in 3D space. In that regard, they're like the monocular vision of many insects, whose compound eyes provide great motion-tracking and a wide field of view but poor depth perception.

Except for the praying mantis.

A praying mantis' field of view also overlaps between its left and right eyes, creating binocular vision with depth perception in 3D space.
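To see why overlapping fields of view yield depth: when two viewpoints sit a known distance apart, the shift (disparity) of a feature between the two views gives its distance by triangulation. The sketch below assumes an idealised pair of parallel pinhole cameras; the function name and the numbers are illustrative only and are not taken from the researchers' design.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel pinhole cameras: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, views 10 cm apart, feature shifted by 35 px.
distance_m = depth_from_disparity(700, 0.1, 35)
```

Nearby objects produce large disparities and distant ones small disparities, which is why depth estimates from this kind of triangulation become less precise with range.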

Combining this insight with some nifty optoelectrical engineering and innovative "edge" computing -- processing data in or near the sensors that capture it -- researchers at the University of Virginia School of Engineering and Applied Science have developed artificial compound eyes that overcome vexing limitations in the way machines currently collect and process real-world visual data. These limitations include accuracy issues, data processing lag times and the need for substantial computational power.

Read more.

11 notes


Text

cameras & 3d

belatedly learning how to actually use a proper camera. it's funny I know a reasonable amount about geometric optics and I could explain mathematically how a camera works, but I hadn't managed to connect that to practical knowledge of 'how aperture priority mode works'.

so no wonder so many shots at the concert turned out blurry, I hadn't twigged that you're supposed to adjust the aperture and ISO until the auto shutter speed becomes reasonable (and the focal depth is appropriate for your intent). I also wasn't really using the different autofocus-area settings to full effect so I'm sure it sometimes focused on the wrong thing.

I had it on auto ISO and f/2.8 the whole time. does explain why it was fairly easy to get the bokeh since that's the lowest f-number available on this lens (apparently very good for a zoom lens). but I should probably have manually cranked up the ISO to better handle the darkness of the room.

still, I have learned what the buttons and dials on the camera do now so hopefully the next lot of photos will be nicer. and hopefully learning a bit more about photography will also help me get better at 3D rendering lol.

in a real camera, the ISO, exposure time and aperture size all affect the brightness of the image, and each comes with drawbacks. increasing aperture size increases the amount of bokeh (equivalently, narrows the focal plane). increasing ISO (sensitivity) increases the amount of grain in low light. increasing exposure time increases the amount of motion blur.
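to make the tradeoff concrete, here's a rough sketch using the standard exposure value formula (EV at ISO 100 = log2(N²/t) - log2(ISO/100)). the function names and the example settings are made up for illustration, and it ignores everything a real meter does.

```python
import math


def exposure_value(f_number: float, shutter_s: float, iso: float = 100) -> float:
    """Scene light level (EV at ISO 100) that these settings expose correctly for."""
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)


def shutter_for_same_brightness(shutter_s: float, iso: float, new_iso: float) -> float:
    """Shutter time that keeps image brightness constant at a fixed aperture when ISO changes."""
    return shutter_s * iso / new_iso


# hypothetical dark-room settings: f/2.8, 1/15 s, ISO 400
faster = shutter_for_same_brightness(1 / 15, 400, 3200)  # 1/120 s at ISO 3200
```

so three stops more ISO buys three stops less exposure time at the same brightness: less motion blur, more grain.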

3D graphics is simulating a camera, but it comes with its own parameters and language. in 3D, by default you get pinhole-perfect sharpness. in rasterisation, depth of field is a postprocessing shader which blurs the image based on depth. in pathtracing, it's a setting you can turn on which I believe affects how rays are traced from the camera. in Blender, you can input an f-number, but it's just another slider you can fiddle with so I don't tend to pay much attention to the exact number when I adjust it.

ISO and exposure time are not really a thing in 3D. the brightness of your scene is something you choose when converting from the scene-referred floating point output of the renderer to the final display-referred integer colour (blender offers a handful of presets here). every real camera sensor has a limited dynamic range, but since the rendering is done in floating point, in 3D you have a near-infinite dynamic range at the 'sensor' stage.

motion blur in 3D is completely optional - again it's something you'd do in a post-processing shader which blurs the image along motion vectors, or by adjusting your renderer settings in a path tracer to trace some rays at different simulated times. the graininess of the image in a pathtracing renderer is really more a function of how many samples you trace, which has no connection to the duration of the simulated exposure in the scene.
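a toy sketch of the 'grain depends on sample count' point, not a real renderer: each 'pixel' just averages random numbers standing in for light samples, and all the names here are made up for illustration. the noise falls off roughly as 1/sqrt(samples), so four times the samples gives about half the grain.

```python
import random
import statistics


def pixel_estimate(samples: int, rng: random.Random) -> float:
    """Average `samples` random contributions; the true mean is 0.5."""
    return sum(rng.random() for _ in range(samples)) / samples


def noise_level(samples: int, pixels: int = 2000, seed: int = 0) -> float:
    """Standard deviation of the estimate across many independent pixels."""
    rng = random.Random(seed)
    estimates = [pixel_estimate(samples, rng) for _ in range(pixels)]
    return statistics.pstdev(estimates)


# compare 16 samples per pixel against 64 samples per pixel
ratio = noise_level(16) / noise_level(64)  # expected to be close to 2
```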

so while 3D rendering prepares you for photography in some ways, it definitely leaves some gaps! but knowing a bit more about how real cameras work will be useful if I want to render something realistically or fake it in a painting.

11 notes


Text

DeerHead EMC-V1 baby pictures.. She doesn't even have all of her leg servos yet 🥺 (EMC stands for "Electro-Mechanical Chihuahua"!)

Her snout is a bit huge for this first run, but really this is just a draft while the 3D printer is broken; the LED matrix in her head was also defective right out of the package, as it turns out; so because it's also a little heavy, I probably won't end up using it! That's okay because at least for the Arduino Uno, running both 15 servos and the 64 pixel screen animations at once could be a bit much! I'm thinking of getting the hardware up and at 'em first, and making sure that I can program it to, say, do a little dance for now to show that I'm up for the challenge of animating servos with this driver library (right now I'm just testing with a live servo control program), and then I want to upgrade to a Raspberry Pi brain so that I can start giving her autonomy! When I do that, I'm going to want at least two buttons; a back and head button to sense affection and love! Or scolding with a tap on the head, but that might be much to program for something I'd never really need to do with a robot I programmed XD unless it ended up being more autonomous than I'm realistically imagining it being! I'll also want a gyro sensor, so that she'll be able to tell if she's fallen over (maybe combined with soft buttons on her front paws sensing that they aren't touching the ground), and self-right! She will also have a distance sensor on her chest to avoid bumping into things, and for a general sense of depth; and beside that, a microphone (connected to a voice recognition module? I feel like Raspberry Pi wouldn't need that, I haven't looked into it though), to understand voice commands.. Or just "I love you" whilst receiving cuddles 😄 and of course, there would be a speaker, likely in the back of the head or also near the distance sensor depending on the size; for this maybe I can use some old Furby Boom parts I have laying around 😆 I'd probably need a module for the speaker though, so the Pi knows it's a speaker, and that'd probably come with another speaker..
And to power it all? A six volt LiPo battery! Or would six volts be anywhere near enough for all that combined with running the programming of the robot? Such I'd have to research lol, but furthermore.. I welcome EMC-V1 to the world!!! And tomorrow my new servos will come in, hopefully she'll be able to walk too by then 😄

5 notes


Text

Apple Vision Pro: Reality Restyled, World goes Mad.

The tech world has gone berserk since Apple introduced the Apple Vision Pro, its first stab at spatial computing. This headset pushes past the usual boundaries of virtual reality, presenting a near-seamless convergence of the digital and the physical. Let us look at the characteristics that have fired up this frenzy: how it operates, its dazzling design, and the technological advances driving it all.

How it Works: Apple Vision Pro does not just cast an image onto your eyes; it generates a realistic three-dimensional experience. With the aid of LiDAR and depth sensors, it maps your surroundings in real time and embeds digital elements within physical reality. Picture playing a game of chess on an augmented coffee table with pieces that have real depth, or joining coworkers from all over the world in real time inside a shared virtual space. The possibilities are mind-boggling.

Design that Dazzles: Apple, a brand often treated as "high art", has not failed on form factor, as Vision Pro proves. The frame is lightweight aluminum that curves ergonomically around your face, and a single piece of laminated glass forms the front, housing all the intricate technology inside. Modular parts allow a comfortable fit for a variety of heads. It is one of many demonstrations of how Apple's designs merge function and form.

Technology Powerhouse: A dual-chip architecture drives this wonder. The M2 chip, well known from Apple's recent devices, packs phenomenal processing power, while the R1 is a dedicated chip that processes sensor data to cut lag. In other words, the combined power delivers smooth, lifelike experiences without jitter or delay.

Craze Unfolding: Crowds are waiting to see the future, drawn by Apple's reputation for innovation and by their hopes for Vision Pro. Gamers expect to immerse themselves in hyper-realistic realms, creative professionals imagine unmatched collaboration tools, and casual users dream of stepping into a favorite movie or even a memory, replayed and pulsating in 3D. This isn't just a device; it is a new paradigm in human-computer interaction.

Privacy Concerns: Even at the height of the excitement, however, a cloud of privacy concerns remains. Questions about data collection and use surround the headset's ability to track your gaze, map your surroundings, and identify where you are. Apple stresses user control and transparency, but trust has to be won, especially for such a sensitive technology.

However worrisome, the Apple Vision Pro is a giant leap. It is more than a posh headset: it opens the door to an entirely new age of computing where real and virtual fuse together. Whether it lives up to the hype remains to be seen, but one thing is certain: Apple Vision Pro has set the world ablaze, and everyone waits with great anticipation.

3 notes


Text

Buy an iPhone 14 Pro Max 5G 128GB from Spectronic UK at an Affordable Price

Spectronic UK is a top-rated e-commerce store that specializes in offering the latest Apple phones. We take pride in providing our customers with a wide range of phones with advanced features at highly competitive prices. You can order the iPhone 14 Pro Max 5G with 128GB of storage from our online store and get it at the best price.

Specifications:

Internal Memory- 128GB, 6GB RAM

📷Main Camera- Triple [48 MP, f/1.8, 24mm (wide), 1/1.28″, 1.22µm, dual pixel PDAF, sensor-shift OIS; 12 MP, f/2.8, 77mm (telephoto), 1/3.5″, PDAF, OIS, 3x optical zoom; 12 MP, f/2.2, 13mm, 120˚ (ultrawide), 1/2.55″, 1.4µm, dual pixel PDAF; TOF 3D LiDAR scanner (depth)]

📷Selfie Camera- 12 MP, f/1.9, 23mm (wide), 1/3.6″, PDAF, OIS (unconfirmed); SL 3D (depth/biometrics sensor)

🔋Battery- Li-Ion 4323 mAh, non-removable (16.68 Wh)

Colour- Deep Purple

In The Box- The Phone, USB Type-C to Lightning Cable, Documentation, Apple Sticker.

Experience the ease and convenience of online shopping with Spectronic UK by visiting our website and placing your order now!

2 notes


Link

It can be unlocked with wet hands

The top-end flagship smartphone Xiaomi 14 Ultra will receive an ultrasonic fingerprint sensor and will become the only smartphone in the line with such a solution. This was reported by insider Digital Chat Station. Judging by previously published information, the Xiaomi 14 Ultra should use an ultrasonic under-display fingerprint scanner manufactured by Goodix, not a Qualcomm solution. Unlike traditional optical fingerprint solutions, ultrasonic sensors emit pulses that capture the ridges of a fingerprint as 3D depth data, thereby achieving higher accuracy. When ultrasonic waves identify fingerprints, the screen does not need to be turned up to high brightness. More importantly, smartphones with ultrasonic fingerprint sensors can be unlocked with wet hands; phones with traditional optical fingerprint sensors will not unlock in such cases.

Previously, the same source reported that the main camera will support a variable aperture that can be adjusted from f/1.6 to f/4.0, controlling the amount of light reaching the image sensor. The 50-megapixel sensor of the main camera of the Xiaomi 14 Ultra will be the Sony LYT900, an optimized version of the Sony IMX989 with a 1-inch optical format. It is also stated that Dual Conversion Gain technology will improve image quality by reducing noise. The Xiaomi 14 Ultra is expected to be equipped with the Qualcomm Snapdragon 8 Gen 3 mobile platform and up to 1 TB of flash memory. It will be released in the first quarter of next year. Digital Chat Station was the first to accurately report the specifications and release dates of the Redmi K60 and Xiaomi 14.

#Android#battery_life#camera_features#device_release#Display#Gaming#MIUI#Mobile_communication#mobile_device#mobile_device_launch.#mobile_technology#phone_features#photography#processor#smartphone#smartphone_specifications#tech_specifications#Xiaomi#xiaomi_14_ultra#Xiaomi_14_Ultra_price#Xiaomi_14_Ultra_review#xiaomi_phone

2 notes


Text

iPhone 14 Pro Max Giveaway Free

An amazing new giveaway: the iPhone 14 and 14 Pro Max. You can get an Apple iPhone 14 for free by playing a quiz. This is a worldwide contest, meaning there are no country restrictions. Enter to win a free iPhone 14 Pro Max in this giveaway of Apple's latest smartphone.

Free iPhone 14 Pro Max Giveaway Today

Here is how to get an iPhone 14 for free: just enter your details in the form above. As soon as you fill out the form, you are automatically added to our giveaway list.

You do not need to pay anything to join this giveaway

Winners are chosen randomly

You may participate multiple times within a month

Bot Protected, Don’t try to cheat our system

Absolutely Free Delivery

Enter the giveaway contest for free and get a chance to win Apple's 5G smartphone. Complete the online registration form at the iPhone 14 giveaway link.

iPhone 14 Pro Max Features Specifications

Apple's new iPhone 14 Pro and Pro Max have been on the market for a while now. They improve on the older iPhone 13 Pro Max with faster performance, including in iOS. If you are interested in buying the iPhone 14 and want to know more about what to expect from it, read on:

Apple's new iPhone 14 should be a great improvement and a big hit among all iPhones. The Apple iPhone 14 has been in the news for a long time, and people are eagerly waiting for its launch as it will be a great upgrade.

iPhone 14 Pro Max display

6.7-inch Super Retina XDR

Dynamic Island FTW

Size: 6.7 inches

Resolution: 2796 x 1290 pixels, 19.5:9 ratio, 460 PPI

Technology: OLED

Refresh rate: 120Hz

Screen-to-body: 88.45 %

Features: HDR support, Oleophobic coating, Scratch-resistant glass

Ambient light sensor, Proximity sensor

iPhone 14 Pro Max Camera System

Main camera: 48 MP quad-pixel sensor. Specifications: Aperture size: F1.8; Focal length: 24 mm; Pixel size: 2.44 μm.

Second camera: 12 MP Telephoto, OIS, PDAF. Specifications: Optical zoom: 3.0x; Aperture size: F2.8; Focal length: 77 mm.

Third camera: 12 MP Ultra-wide, PDAF. Specifications: Aperture size: F2.2; Focal length: 13 mm; Pixel size: 1.4 μm.

Fourth camera: ToF 3D depth sensing

Video recording: 3840x2160 4K UHD 60 fps, 1920x1080 Full HD 240 fps, 1280x720 HD 30 fps

Front: 12 MP, Auto focus, HDR

Video capture: 3840x2160 4K UHD 24 fps

System chip: Apple A16 Bionic

Processor: Hexa-core CPU, 2x 3.46 GHz Everest + 4x Sawtooth

GPU: Apple GPU, 5-core graphics

RAM: 6GB

Internal storage: 128GB

Device type: Smartphone

OS: iOS (16.x)

Capacity: 4323 mAh

Type: Built‑in rechargeable lithium‑ion

Charging: Fast charging, Qi wireless charging, USB

MagSafe wireless charging

Max charge speed: Wireless: 15.0W

Storage: 128GB 6GB RAM, 256GB 6GB RAM, 512GB 6GB RAM, 1TB 6GB RAM

Bluetooth: 5.3

Wi-Fi: 802.11 a, b, g, n, ac, ax Wi-Fi 6; Wi-Fi Direct, Hotspot

Sensors: Accelerometer, Gyroscope, Compass, Barometer, LiDAR scanner

Hearing aid compatible: M3, T4

16 notes


Text

Buy a High-End Xiaomi 13 Ultra SmartPhone from Spectronic CA

The most reputable and popular online mobile store in Canada, Spectronic CA, offers the newest Xiaomi smartphones at competitive prices. Buy the Xiaomi 13 Ultra online from Spectronic CA with the following features:

Body: Glass front (Gorilla Glass Victus), eco-leather back, aluminium frame, IP68 dust/water resistant (up to 1.5m for 30 min)

📱 Display: 6.73-inch AMOLED HDR10+ display with a refresh rate of up to 120Hz

Chipset: Qualcomm SM8550-AB Snapdragon 8 Gen 2 (4 nm)

Internal Memory: 1TB 16GB RAM and UFS 4.0

📷 Main Camera: 50-megapixel, with four sensors (ultrawide, periscope telephoto, telephoto, 3D depth)

📷 Selfie Camera: 32-megapixel

🔋 Battery Type: Li-Po 5000 mAh

Colours: Black, Olive Green, White, Orange, Yellow, Blue

In The Box: Mi 13 Ultra, 90-watt fast charger, USB Type-C cable, Protective cover and Documentation.

You can go to the website to learn more. Place an order right away to receive the discount!

2 notes


Text

Apple Vision Pro: Features, Uses, Advantages & Disadvantages.

Apple Vision Pro is Apple's first virtual reality headset. It has a fully three-dimensional interface that can be operated with your hands, voice, and eyes. It includes Apple's first three-dimensional camera, allowing users to record, relive, and fully immerse themselves in 3D spatial images and films. The headset includes LiDAR and TrueDepth depth sensors, two high-resolution cameras, one four-megapixel color camera, eye and facial tracking, and other capabilities. The device is powered by visionOS, software built for gaming, media consumption, and communication. The display refreshes at up to 90 Hz, with a 96 Hz mode for specific use cases. Accessibility features additionally include eye and hand motion control.

#apple vision pro#vision pro#apple vision pro reviews#apple vision pro reactions#apple#apple vision pro headset#apple vision#use of apple vision pro#features or apple vision pro#apple VR#virtual reality#augmented reality#apple AR#apple vision pro unboxing#apple vision pro impressions#Advantages of apple vision pro#disadvantages of apple vision pro#pros and cons of apple vision pro

4 notes


Text

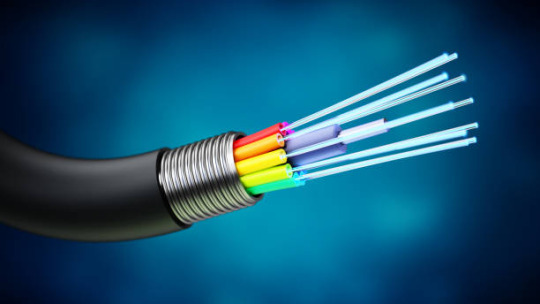

In-Depth Understanding of Fiber Optic Sensing Network

Fiber optic sensing networks are a growing trend in many applications. They support a large number of sensors on a single optical fiber, with high speed, high security, and low attenuation. This article provides some information about fiber optic sensing networks.

What is a Fiber Optic Sensing Network?

A fiber optic sensing network detects changes in temperature, strain, vibrations, and sound by using the physical properties of light as it travels along an optical fiber. The optical fiber itself is the sensor, resulting in thousands of continuous sensor points along the fiber length.

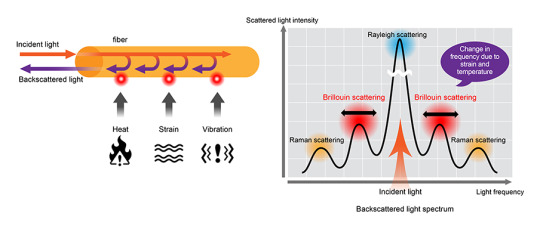

How Does a Fiber Optic Sensing Network Work?

A fiber optic sensing network works by measuring changes in the light backscattered inside the fiber when the fiber is exposed to changes in temperature, strain, or vibration.

Three kinds of backscatter are used. Rayleigh scattering is produced by fluctuations in the density inside the fiber. Raman scattering is produced by interaction with molecular vibrations inside the fiber; the intensity of its anti-Stokes component depends mainly on temperature. Brillouin scattering is caused by interaction with sound waves inside the medium; its frequency shift depends on strain and temperature.

Operating Principle of Fiber Optic Sensing Network

Optical Time Domain Reflectometry (OTDR)

In the OTDR principle, a laser pulse is generated by a solid-state or semiconductor laser and sent into the fiber. The backscattered light is analyzed for temperature monitoring. From the time it takes the backscattered light to return to the detection unit, it is possible to determine the location of the temperature event.
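The time-to-location step can be sketched as follows. Light travels to the event and back, so the distance is half the round-trip time multiplied by the speed of light in the fiber. The default group index of 1.468 is an assumed, typical value for silica fiber, and the function name is illustrative; a real instrument would use the index specified for the installed cable.

```python
C_VACUUM_M_PER_S = 299_792_458.0  # speed of light in vacuum


def event_distance_m(round_trip_s: float, group_index: float = 1.468) -> float:
    """Distance along the fiber to a backscatter event: z = c * t / (2 * n)."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Divide by 2 because the pulse travels out and the backscatter travels back.
    return C_VACUUM_M_PER_S * round_trip_s / (2.0 * group_index)


# A backscatter signal arriving 10 microseconds after the pulse was launched
# corresponds to an event roughly 1 km down the fiber.
distance = event_distance_m(10e-6)
```

The same relation sets the spatial resolution: a shorter laser pulse covers a shorter stretch of fiber at any instant, so events can be located more precisely.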

Optical Frequency Domain Reflectometry (OFDR)

The OFDR principle provides information about the local characteristics of temperature. This information is obtained by analyzing the backscattered signal as a function of frequency. It allows for efficient use of available bandwidth and enables distributed sensing with a maximum update rate in the fiber.

Fiber Optic Sensing Network Technologies

Distributed Temperature Sensing (DTS): DTS uses the Raman effect to measure temperature distribution over the length of a fiber optic cable using the fiber itself as the sensing element.

Distributed Acoustic Sensing (DAS): DAS uses Rayleigh scattering in the optical fiber to detect acoustic vibration.

Distributed Strain Sensing (DSS): DSS provides spatially resolved elongation (strain) shapes along an optical fiber by combining multiple sensing cables at different positions in the asset cross-section.

Distributed Strain and Temperature Sensing (DSTS): DSTS uses Brillouin scattering in optical fibers to measure changes in temperature and strain along the length of an optical fiber.

Electricity DTS: Reliable temperature measurement of high-voltage transmission lines is essential to help meet rising electricity demand. Fiber optic sensing, integrated into distributed temperature sensors on power lines, helps ensure optimal safety and performance in both medium- and long-distance systems.

Oil and Gas DTS: Many land and subsea oil operations rely heavily on DTS for improved safety and functionality in harsh environments. Fiber optic sensing ensures reliable performance and durability in high-temperature, high-pressure, and hydrogen-rich environments.

Oil and Gas DAS: The optical fiber in DAS creates a long sensor element that can detect high-resolution events throughout the entire length of the fiber.

Fiber Optic Navigation Sensing: Fiber optics are used in navigation systems to provide accurate information about location and direction. Aircraft, missiles, unmanned aerial vehicles (UAVs), and ground vehicles require advanced optical fiber navigation technology to ensure reliability and safety.

Fiber Optic Shape Sensing Technology: Reconstructs and displays the entire shape of an optical fiber in 2D and 3D. The technology enables cutting-edge applications such as robotic minimally invasive surgery, energy, virtual reality (VR), etc.

Wavelength Division Multiplexing (WDM) Technology: Uses Fiber Bragg Gratings (FBGs) with different reflection wavelengths (Bragg wavelengths) in one optical fiber, so that each grating can be told apart by the wavelength it reflects.
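As a rough illustration of how wavelength multiplexing separates sensors: each grating reflects at its Bragg wavelength, given by λ_B = 2 · n_eff · Λ, where n_eff is the effective refractive index and Λ is the grating period. A measured reflection peak can then be matched to the grating with the nearest nominal wavelength. The function names, sensor labels, and numbers below are hypothetical.

```python
def bragg_wavelength_nm(n_eff: float, grating_period_nm: float) -> float:
    """Reflection wavelength of a fiber Bragg grating: lambda_B = 2 * n_eff * period."""
    return 2.0 * n_eff * grating_period_nm


def identify_grating(peak_nm: float, nominal_nm: dict) -> str:
    """Return the name of the grating whose nominal Bragg wavelength is closest to the peak."""
    return min(nominal_nm, key=lambda name: abs(nominal_nm[name] - peak_nm))


# Three hypothetical gratings on one fiber, spaced 5 nm apart.
gratings = {"A": 1545.0, "B": 1550.0, "C": 1555.0}
sensor = identify_grating(1550.3, gratings)  # a peak shifted slightly by strain or temperature
```

The nominal wavelengths need enough spacing that the shift caused by strain or temperature never moves one grating's peak into its neighbour's range.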

Applications

A fiber optic sensing network is used to monitor pipelines, bridges, tunnels, roadways, and railways. It is also used in oil and gas, power and utility, safety and security, fire detection, industrial, civil engineering, transportation, military, smart city, minimally invasive surgery, Internet of Things (IoT), etc.

Conclusion

A fiber optic sensing network has high bandwidth, security, and stability, is immune to electromagnetic interference, and is lightweight, small in size, and easy to deploy. Sun Telecom specializes in providing one-stop total fiber optic solutions for all fiber optic application industries worldwide. Contact us with any needs.

2 notes


Text

What is Apple ARKit Overview and Its Features Highlights

The era of augmented reality and virtual reality has only recently begun, and their use cases are still expanding in 2019. Apple just launched the updated Apple ARKit, which enables AR developers to create more detailed AR experiences for mobile devices. Let's look at the highlights of Apple ARKit's features in more depth.

What is Apple ARKit:

AR stands for augmented reality, and ARKit is Apple's AR development platform for iOS mobile devices. Consumers don't need to work with it directly; the ARKit framework is used mostly by developers. For the iPad and iPhone, ARKit enables AR developers to create cutting-edge AR experiences.

Overview:

Apple created the ARKit library in 2017. It enabled the creation and construction of mobile apps employing AR technology.

We can view virtual items in the actual world through the camera using AR apps.

A 2D or 3D element is added to the user experience in augmented reality (AR) so that it can be seen in real time through a mobile camera and appear to be a part of the physical world.

Without leaving your home, you may use 2D and 3D AR to see how the flower pot will look on your drawing room table. With 2D AR, you can add overlays that react in real time to geographic location or visual elements.

ARKit 2.0 was presented by Apple during the Apple Worldwide Developers Conference in 2018. A slew of brand-new APIs and functionality for AR development are included in ARKit 2.0.

ARKit uses motion sensors in conjunction with visual data from cameras to track objects in the real world.

What’s new in ARKit 2?

New components in ARKit 2 make it even better to use. App developers now have new possibilities to build into their apps. Let's examine the advancements in ARKit 2.

Persistent Experiences

You can maintain an augmented reality environment and objects that are linked to real-world spaces and things, such as toys and office spaces, so you can pick up where you left off. Because ARKit now stores sessions, the user can begin playing chess on a table and resume later with the game still in progress.

Shared Experiences

Many people engage in internet gaming, and AR apps are no longer limited to a single user. Multiple users can now use their iOS devices at the same time to view augmented reality experiences or play multiplayer games.

Object Detection and Tracking

ARKit 1.5 added support for 2D image detection, letting you start an AR experience based on 2D images like signage, posters, or pieces of art. ARKit 2 extends this support so that you can incorporate moving objects like product boxes or magazines into your AR experiences. ARKit 2 also adds detection of known 3D objects, such as furniture, toys, and sculptures.

Conclusion:

This article covered the new features of ARKit 2. These additions advance app development and help mobile app developers showcase the creative side of AR. Recently, Clutch named XcelTec as the best AR/VR developer.

Visit to explore more on What is Apple ARKit Overview and Its Features Highlights

Get in touch with us for more!

Contact us on:- +91 987 979 9459 | +1 919 400 9200

Email us at:- [email protected]

1 note
