#AI efficiency


Text

The Impact of AI on Everyday Life: A New Normal

The impact of AI on everyday life has become a focal point for discussions among tech enthusiasts, policymakers, and the general public alike. This transformative force is reshaping the way we live, work, and interact with the world around us, making its influence felt across various domains of our daily existence. Revolutionizing Workplaces One of the most significant arenas where the impact…


#adaptive learning#AI accessibility#AI adaptation#AI advancements#AI algorithms#AI applications#AI automation#AI benefits#AI capability#AI challenges#AI collaboration#AI convenience#AI data analysis#AI debate#AI decision-making#AI design#AI diagnostics#AI discussion#AI education#AI efficiency#AI engineering#AI enhancement#AI environment#AI ethics#AI experience#AI future#AI governance#AI healthcare#AI impact#AI implications

1 note


Text

Top Weekly AI News – June 27, 2025

AI News Roundup – June 27, 2025 ‘Quantum AI’ algorithms already outpace the fastest supercomputers, study says Researchers have developed a quantum photonic circuit that allows AI algorithms to run faster and more efficiently than on classical supercomputers livescience Jun 27, 2025 Meta’s quest to dominate the AI world Meta is heavily investing in AI and open-sourcing its models to drive…

#AI#ai efficiency#ai evolution#AI News#ai regulation#AI Weekly News#anthropic#artificial intelligence#google#OpenAI#Quantum AI#sovereign ai#Top AI News

0 notes

Text

Is Artificial Intelligence (AI) Ruining the Planet—or Saving It?

AI’s Double-Edged Impact: Innovation or Environmental Cost? Have you heard someone say, “AI is destroying the environment” or “Only tech giants can afford to use it”? You’re not alone. These sound bites are making the rounds—and while they come from real concerns, they don’t tell the whole story. I’ve been doing some digging. And what I found was surprising, even to me: AI is actually getting a…

#AIAffordability#AIAndThePlanet#AIForGood#AITools#DigitalInclusion#FallingAICosts#GreenAI#HumanCenteredAI#InnovationWithPurpose#ResponsibleAI#SustainableTech#TechForChange#AI accessibility#AI affordability#AI and climate change#AI and sustainability#AI and the environment#AI efficiency#AI for good#AI innovation#AI market competition#AI misconceptions#AI myths#Artificial Intelligence#cost of AI#democratization of AI#Digital Transformation#environmental impact of AI#ethical AI use#falling AI costs

0 notes

Text

Maximizing Efficiency with BotResponse AI - inklingmarketing

Maximize efficiency with BotResponse AI by automating tasks, improving customer service, and streamlining workflows. Discover AI-driven solutions for business growth.

#BotResponse AI#automation#AI efficiency#customer service AI#business automation#workflow optimization#AI solutions.

0 notes

Text

⚙️ Transform your daily tasks into effortless efficiency with AI solutions! 🚀 Let AI streamline your workflow and free up your time for what really matters. ✨ Click this link: https://tinyurl.com/fbhea698

#ai efficiency#ai#productivity#tech tools#efficiency#digital transformation#automation#work smart#tech solutions#time management#creator community#content creation#youtube growth#digital marketing#channel growth#digital#youtube tips#content strategy

0 notes

Text

Thinking about Marvin and pathetic androids in general.

You’ve been delivered the magnum opus of technology. A supercomputer beyond human comprehension, so advanced, so efficient, invested with self-awareness and consciousness. Truly the peak of artificial intelligence.

And he’s a depressed, whiny loser. Oh, he will perform his tasks with utmost perfection, naturally, but he will complain and sigh and philosophize on the side.

“Thank you for taking me out,” you say to your synthetic partner.

“Well, my pleasure. Mine only, I suppose, as you probably had no other choice,” he says with pursed lips. “Give it two years and they’ll come up with a better model, and I’ll be discarded in the trash, recycled for scraps, forgotten in some intergalactic dump.”

Ah, the misery of life. He glides to his designated pouting corner and shuts himself off.

#listen I love highly efficient and logical androids#but imperfect or humane androids??? top tier trope#this includes Andy from Alien Romulus#hgttg#the hitchhiker's guide to the galaxy#android x reader#ai x reader#robot x reader#monster fucker

420 notes


Note

i’ve seen too many people ask for a character.ai bot or a chatbot or whatever of the characters. dude, the game is LITERALLY against ai, what is the thought process here?? not to mention all the awful things ai does, but it feels especially bitter given that i can only assume the dev team hates ai just as much as any sane person would. i can’t imagine hating ai only to have someone feed my oc’s into a chatbot site when you could just be writing fanfic or rping with real people. sorry for the rant, i’m just frustrated.

⋆˚꩜。

#date everything#NO ANON YOURE SO SO REAL FOR THIS#YOU PEOPLE HAVE FORGOTTEN THE JOYS OF PUTTING TOGETHER A SCRAPPY AND SELF INDULGENT FANFIC#OR DAYDREAMING YOURSELF TO SLEEP WITH SOME GOOD MUSIC IN THE BACKGROUND#i love date everything for being against it in the most ultimate ways#hate hate hate ai . until it doesn’t actively rot the earth and leave people jobless#i wont care for it one bit#i dont care how efficient it is#ill make ai dudebros go to libraries for research the old fashioned way if i need to

115 notes


Text

The Amazing AnswerBot.

131 notes


Text

#bsky#AI#data centers#climate crisis#heat wave#energy efficiency#air conditioning#electrical grid#power grid

26 notes


Text

Lisa Needham at Daily Kos:

Although Elon Musk’s exit from government service was the messiest breakup ever, the multibillionaire’s legacy will live on in the so-called Department of Government Efficiency. It’s not just that DOGE personnel are now squirreled away in other agencies, though that is definitely the case. Sadly, DOGE’s real legacy is the mindset of cutting government to the bone with little regard for the consequences.

We’re still playing the “Who Runs DOGE?” game

This is the stupidest game. Remember that the Trump administration played coy about Musk’s role, saying with great fanfare that he was the head of DOGE, yet insisting to courts that Amy Gleason, a random official who seems to have learned of her new role while on vacation, was running things, although she also found time to work at an entirely different agency. Gleason is still listed as the acting administrator, but just as was the case during the Musk era, she doesn’t appear to be doing anything at DOGE. Instead, Russell Vought, Project 2025 guru, Christian nationalist, and head of the Office of Management and Budget, will now run DOGE from the shadows. Where Musk was a mercurial toddler who slashed and burned his way through the federal government, Vought is methodical, steadily advancing toward his twin goals of putting federal workers “in trauma” and making America a Christian nation controlled by a conservative Christian government. Put another way, Vought is just as committed as Musk was to destroying the administrative state—and he might be better at getting that done.

DOGE’s AI efforts still suck

Despite all evidence to the contrary, the Trump administration remains convinced that DOGE will somehow replace thousands of government workers with artificial intelligence. When they tried to let AI decide which Department of Veterans Affairs contracts to cancel, it was a predictable disaster. The AI tool hallucinated the value of contracts, deciding that over 1,000 contracts were worth $34 million each. The DOGE employee who developed the tool had no particular background in AI, but used AI to write some of his code nonetheless. Then DOGE let the thing loose in the VA, where it determined that 2,000 contracts were “MUNCHABLE” and therefore not essential.

This is only the latest pathetic effort by the administration to push shoddy AI tools on federal agencies. One federal employee described GSAi, an AI tool for the General Services Administration, as “about as good as an intern” that gave “generic and guessable answers.” Another chatbot at the Food and Drug Administration’s Center for Devices and Radiological Health has difficulty uploading documents or allowing chatbot users to submit questions. Not a big help, particularly since humans are already pretty capable at uploading documents and answering questions. Despite these repeated failures, the administration remains convinced that AI is magical and ready for prime time.

[...]

Some of the worst DOGE cuts are about to become law

While DOGE was given free rein to hack its way through the federal government, the administration only sent a few of DOGE’s cuts to Congress for them to be passed into law. Out of the $160 billion ostensibly saved by DOGE—well short of the promised $2 trillion—the administration asked Congress to codify only $9.4 billion. The budget’s passing would slash $1.1 billion from NPR and PBS, eliminating all their federal funding because Trump thinks they are radical leftists, a thing that anyone who listens to NPR or watches PBS knows is not true. Trump also wants to make permanent the $9 million slashed from the President’s Emergency Plan for AIDS Relief. Modeling studies show that the PEPFAR cuts could result in up to 11 million new HIV infections and 3 million additional deaths by 2030. By one estimate, over 63,000 adults and 6,700 children have already died because of PEPFAR funding freezes. Those deaths are on Musk, DOGE, and Trump, but none of those ghouls care.

Feral DOGE kids remain in agencies

DOGE is now embedded at the General Services Administration, and new permanent government employees now include “Big Balls” Edward Coristine and Luke Farritor. It’s unclear how 19-year-old Coristine’s background as a hacker for hire and 23-year-old Farritor’s background as a SpaceX intern make them qualified to work in the GSA, but LOL nothing matters anymore. Interior Department Secretary Doug Burgum has basically ceded all his authority to former oil executive Tyler Hassen, who is now running that agency as a sort of shadow Cabinet minister after the DOGE takeover. Hassen is perfect for turning Interior from a department that protects public lands into one that exploits them by allowing oil and gas drilling instead.

Another DOGE denizen who got a sweet government job is Airbnb founder Joe Gebbia, who is embarking on a “digital design challenge” overhauling the Office of Personnel Management’s retirement system. Over at the CDC, all grants must now be reviewed by unnamed DOGE employees before money can be released. This occurs after review and approval by agency personnel who are public health experts rather than tweens infatuated with Elon Musk. This mandatory secondary review gives DOGE personnel the ability to block any grants based on whatever the spiders in their brain are saying at the time, which is not exactly helpful for public health.

Even though Elon Musk is no longer heading up DOGE, DOGE continues terrorizing government agencies.

#DOGE#Department of Government Efficiency#Elon Musk#Trump/Musk Feud#General Services Administration#AI#Joe Gebbia#Edward Coristine#Luke Farritor

24 notes


Text

it’s time i come out and say it!!!!!!!!!!!!!!!!! i love ai slop!!!!!!!!!! i love putting prompts into chatgpt and drinking the slop it gives me!!!!!!!!!!! mmm slop, i love it!!!!!!!!!!!!

april fools!

ai art is a skill issue, pick up a pencil, loser. learn how to write, lame-o. i don’t give a fuck if that ai extension is “summarizing your email”, literally learn how to read, dumbie.

#april fools#ai is a hand of the oppressor forcing us to be more efficient while destroying the environment. i don’t care.

50 notes


Text

my cardiologist seems incapable of communicating with my gp practice their emails to one another keep getting lost im going to become the jokerrrrrr. at what point does it make sense for me to physically take the form i need signing from the gp to the consultant and then deliver it by hand back to the gp. come onnnnnn

#when certain politicians are like we are going to take the nhs digital we are going to use ai to make it more efficient im like#the nhs is not capable of sending one (1) email.#beeps

20 notes


Text

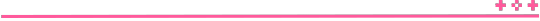

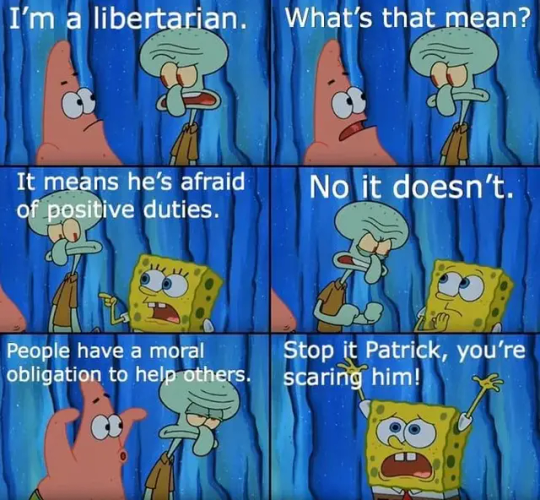

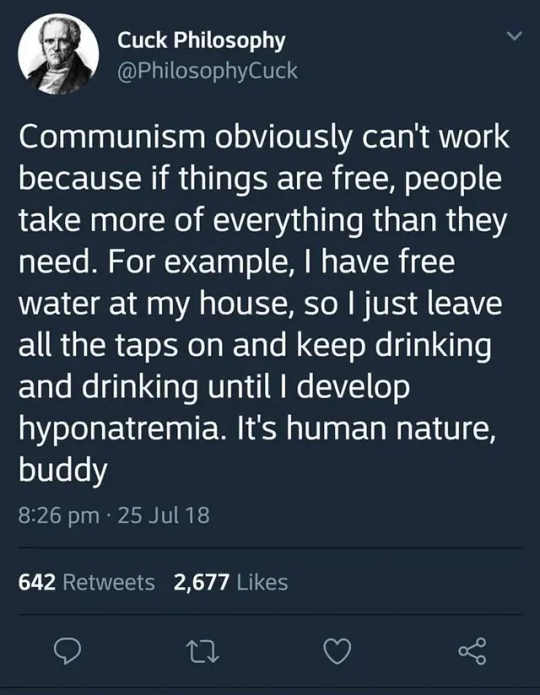

"It is difficult for me to imagine what “personal liberty” is enjoyed by an unemployed person, who goes about hungry, and cannot find employment. Real liberty can exist only where exploitation has been abolished, where there is no oppression of some by others, where there is no unemployment and poverty, where a man is not haunted by the fear of being tomorrow deprived of work, of home and of bread. Only in such a society is real, and not paper, personal and every other liberty possible."

(Pt.2)

#united front#meme#anticapitalism#communism#socialism#imperialism#free palestine#capitalism#memes#anti imperialism#antifascism#free congo#free sudan#free yemen#ai#libertarians#spongebob memes#das kapital#karl marx#studio ghibli#israel is a terrorist state#elon musk#doge#department of government efficiency#cia

26 notes


Text

DOGE Put a College Student in Charge of Using AI to Rewrite Regulations | WIRED

#department of government efficiency#elongated muskrat#elon musk#ai#artificial intelligence#donald trump#trump administration#republicans#federal government#us politics

23 notes


Text

although it may come as a shock based on the content of my blog i am in fact a functional adult with a full time job in stem who is somehow entrusted to manage other humans.

and i have something very important i would like to express to any of my college-ish followers who may be entering the full time workforce soon.

think of the resume you submit when you apply for a new job as a fic you just opened on ao3. you see a wall of text, you zone out. you can't process any of it. you back out of the fic and find something you can make sense of.

the lesson is: please please please for the love of god do not apply for a job with a resume that's more than three pages long MAXIMUM. i can tell you from experience literally no one is reading all of that and all it does is make it harder to understand your actual skills and experience.

#honestly two pages is even better#and use keywords from the job posting in case you're applying somewhere that runs resumes through AI first#this is speaking from experience with other hiring managers too not just my personal preferences#nobody has time for that even if they wanted to read it all#and it does NOTHING to convince me you have good communication skills when you can't efficiently prioritize and organize your experience#which is like the number one thing i'm looking for beyond the actual technical expertise#this message brought to you by the 35 ten page resumes in my inbox this morning

17 notes
