#AI letter

Text

#ai art generator#my ai art#ai#ai art gallery#ai artwork#ai image#ai generated#ai artist#ai art#ai rose#ai heart#ai letter#roses#hearts#letters

7 notes

Text

Valentine's love letter. Eve vs. AI. Will I win, or am I a loser? Why I'm jealous of AI...

Dear Lovestar, If you follow this blog, you know I am constantly trying new ideas, and I’m about to do something new again. The reason I hate on AI: as an artist, I am super jealous of AI. How come AI is more creative than me? It’s not fair… I’m used to winning on creativity, so being a loser is not on my cards. I know what they say… that we’re all losing our jobs to AI, but I only recently…

#AI#AI letter#Ask AI#can AI do better than people?#how to write a letter#Love letter#people against AI#Valentine's love letter#writing a letter

1 note

Text

"this new generation is the dumbest and laziest ever because ai is ruining people's ability to learn!!! Why are you using ai to write emails and cover letters when you could instead LEARN this beautiful, important and valuable skill instead of growing lazy and getting ai slop to slop it for you?" oh my god. oooooh my god. oh my goooooood.

#yeah fucking cover letters and business emails a dying art of divine human communication ruined by ai#''hiring managers love me so much because I handwrite my cover letters like a human'' hiring managers actually don't care if you live or di#tumblr hate posting

1K notes

Text

Grading in the AI era is like *looks at first submission* huh that's a weird mistake *sees the same mistake in 4 other submissions* oh this is the chatGPT answer to this question isn't it

#they have to evaluate a website using an acronym checklist#which - don't get me started. web eval checklists are trash#but it was built into the program's assessment cycle#so I left it in and just also teach better web evaluation strategies#the point is each letter stands for a specific thing#but a whole bunch of students have confidently completed the acronym checklist as if the letters stand for entirely different things?#and all did it the same way#so apparently that's what the latest AI model is spitting out

95 notes

Text

#letters#love letters#aesthetic#art#artificial intelligence#ai#ai art#ai artwork#wordx#feelings#literature#ai artist#dark academia

82 notes

Text

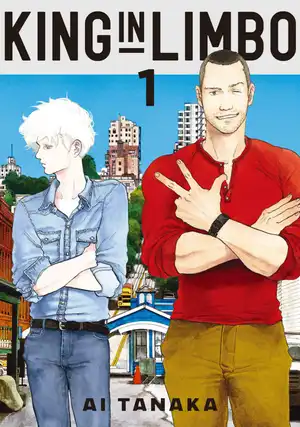

Hey, so today KING IN LIMBO volume 1 came out, and I HIGHLY recommend it. Full disclosure: I’m the letterer on this manga. Kodansha paid me for that, but not to plug this book, or to gush about it with the editor. This is probably my favorite title I’ve ever worked on. The SF premise is very cool and the sense of place is spot on, but what makes KING IN LIMBO stand out is the multifaceted, layered main character. If you’re into grounded, character-heavy SF and want more comics for mature readers please check it out.

I just got the script and assets for the third and final volume and am so excited to see how it wraps up.

#Also there’s just straight up a morgan freeman cameo in it#the MC embodies the ‘good hearted guy who has done fucked up things’ trope i so love#also the other protag is so scrungly#king in limbo#manga#new releases#limbo the king#science fiction#near future science fiction#ai tanaka#and re: the lettering#i don’t get to flex much on this#so it’s pretty seamless#i just genuinely love this comic

664 notes

Text

Any localised translation worth reading is crafted using cultural understanding and carefully honed language skills that are inherently human in nature. AI cannot replicate this and any company hawking content that's been haphazardly tossed through AI doesn't deserve your support in any capacity.

I said the above on Bluesky and while I feel like I'm preaching to the choir (I don't think anyone I know online would knowingly waste their money on AI localised trash), I do think it still needs to be said loudly and clearly so the humans whose (already tenuous) jobs are under threat know that they have community support. I also strongly feel that this support needs to be extended to the editors, letterers, retouchers and others who make a significant difference in how works appear in publications outside their home country.

Generative AI being used solely to remove the human aspect of creative arts is abhorrent in all its forms and unfortunately one of the only ways to fight back meaningfully is to not give companies touting this soulless filth our hard earned money.

It sometimes feels like we're fighting a losing battle, resisting this fascist tech creep, but the humanity of the arts is intrinsic to its cultural value even when we're locked into the hellscape that is capitalism. It's worth resisting.

My friends in the arts, you will always have my support.

#personal#ramblings#random thoughts#i've edited and lettered for friends in the past#but that's nothing compared to my pals down the freelance mines right now struggling to get consistent gigs that make use of their skills#and yeah obviously anyone who cares about the arts is not going to support AI ruining the creations they care about#but we need to continue being angry and consistently resist giving one fucking inch to these corporates#not just because the art we love has value beyond dollars and cents#but because the people that bring it to us do too

57 notes

Text

Thoughts on ai and Art

What AI has really changed for me is how I perceive my own art. Years back, I was extremely concerned about my work being imperfect: everything had to look "right", the anatomy had to be flawless, the lines clean and refined. The pipeline had to be flawless too: a minimal number of layers, one for lines, one for colors, and a few for lighting/shading.

Meanwhile I was yearning for chaos, and the standard pipeline felt too strict, too limiting. I would finish a drawing and cry over the imperfections, but I could not let myself create a new layer and just paint over it all as I wanted to - that would "mess up my perfect psd". This was even harder because I started as a traditional artist, and traditional art is basically the same as drawing on one layer, or stacking layers on top of each other whenever you wish to change anything. I was so obsessed with the anatomy/perspective looking right that my works started looking boring and stiff. If I was not sure I would be able to draw a certain body part at a certain angle ANATOMICALLY PERFECT - I just refused to draw it at all. Drawing back then was HARD. I forced too many limitations upon myself; I was so scared of making any mistakes that I did everything I could to avoid the risk of failing. It felt like the entire world would see me failing and everyone - literally everyone - would disapprove.

And don't get me wrong - the art community in my country has always been astonishingly toxic. We had, like, a group of 20 THOUSAND individuals hunting down children online and bullying them into oblivion for drawing anime and furry characters in their school textbooks. And pretty much everyone except a small group of people (which I was a part of) thought that was absolutely fine and that this is how things should be. Even industry professionals were absolutely sure that young artists have to suffer and be ashamed of everything they do unless it is absolutely flawless in every aspect. I was ashamed of everything I did back then. I was ashamed of drawing and posting sketches because I felt like they were not good enough to be shown to anyone. And then the AI boom started. And I had mixed feelings, because I was not THAT scared, but I was somewhat disappointed in people?

The general public praised the generated slop, ignoring mistakes far worse than the ones real artists had been bullied for for DECADES. The synthetic artworks are shiny. They are overrendered. They are lifeless and boring, they lack fundamentals, and yet somehow people viewed them as some kind of miracle. Out of morbid curiosity, I decided to learn how those little machines generate their slop, just to make sure I had it right: it is spitting out cadavers created from the mutilated, dismembered works of real artists, used by people who did not care enough to pick up a bloody pencil. And I thought: why would I care enough to look at something that no one bothered to create?

And then I started seeing everything I do completely differently. I suddenly stopped caring about being perfect. Every piece I have ever done, every work I cried over for being ugly, every messy sketch and unfinished doodle suddenly started to matter a lot. Not that I stopped caring about doing my best, no. I stopped wishing to disown my own mistakes. They are my own. I cared enough to try and fail and try again, and fail so badly that I wanted to cry, scream and throw up. And I repeated the cycle long enough that I started to enjoy my silly doodles and to love every tiny imperfection, because that is what makes my art so human. I still suck at drawing hands and feet. My line art is messy, and I started doing it right on top of my colored sketch. My pipeline is chaos and my PSDs are a total mess of three hundred layers. I sketch with a huge-ass round brush, only adding the details that really matter. My works are better than they could ever have been, because they feel alive and chaotic, as we humans have always been. This is a love letter to my art, and I write it while flipping my middle finger at the cadavers generated by the machine. I will not be stopped by glorified autocomplete, and I refuse to be outdone by people who confuse googling an image with the act of creation.

My worst drawing is better than any of the generative imagery out there, because I cared when I drew it.

232 notes

Text

Sweet reward for obeying commands

#THIS PIECE WAS SPONSORED BY THE HOURS OF RESEARCH I DID ON HUMAN HORMONES AND THEIR EFFECTS AND THE 0 REFERENCES I USED FOR THE SERVER TOWERS#ghosts art#SAYER#SAYER podcast#SAYER ai#jacob hale#sayerhale#well kids. lets analyze this piece. what did the author (me) mean by this?#anyways uh this is. something ive drawn. and made. and posted.#if you are thinking to yourself ''this looks intimate'' then yes. you are right.#i also feel like i owe a hand written apology letter to everyone who works in IT. i am NOT a technology nerd. but i AM a human anatomy nerd.#anyways!!! role swap!!!!! its now Hale prodding around SAYER's ''brain'' instead of the opposite :)#the filename for this is SAYER_sayerhale objectum nonsense . just a fun silly fact for everyone#because i am aroace towards humans. but i am also very much objectum#i dont know if ill have the balls to post this in the official server . but enjoy#objectum#<- dont worry about it#ALSO PLEASEEEEEE ZOOM IN ON THE DETAILS. IM BEGGING YOU.#MY ASS DID NOT SPEND HOURS DOING ALL THAT RESEARCH FOR NOTHING!!!!#''what exactly are hale's biometric readings telling us about'' decide that for yourself . up for interpretation.

147 notes

Text

Rukmini Patra

If you ever feel weird for rereading favourite chats before sleeping... don’t worry: even today, as a daily ritual, Dwarkadhish listens to the Rukmini Patra before He sleeps. Some loves are forever.

This sacred tradition continues at the Dwarkadhish temple, keeping the essence of Rukmini & Krishna’s eternal bond alive. It is believed that reading this letter with faith can bless one’s heart with the love they seek. A beautiful reminder that devotion and love always find their way.

divider @/strangergraphics

art - instagram • pinterest

#krishna#krishnablr#gopiblr#desiblr#rukminikrishna#desi tumblr#desi aesthetic#desiaesthetic#rukmini#hinduism#love letters#loveletter#dwarkadhish#dwarka#ai artwork#ai generated#lovestory#apricitycanvas#Spotify

61 notes

Text

#if you see smthn in the background no you dont#sometimesanequine#equine art#horse art#my art#to clarify on the background thing. was demonstrating how lines and shapes and numbers and letters -#and varying colors can kind of poison ai data to a friend and figured. i can post this too

35 notes

Text

#ai art generator#my ai art#ai#ai generated#ai image#ai artist#ai artwork#ai boy#ai art#stars#gemstones#ai star#ai gemstone#letters#ai letter

5 notes

Text

I don't have time to swap accounts but I just watched an ai generated coca cola advertisement and I want to kill myself

#IT WAS SO BAD#SO SHITTY#WHAT THE FUCK WERE THEY THINKING#and I know it was generated because THEY PUT THE DISCLAIMER IN BIG LETTERS#coca cola#ai critical#notjimmy

63 notes

Text

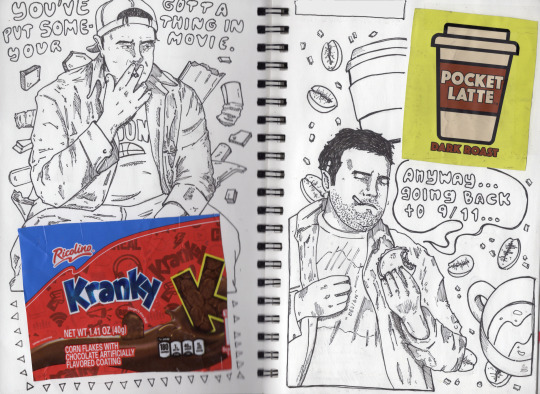

i think i have drawn these pics 5 times.

and i will draw them 5 more times bc i like them.

#artists on tumblr#sketchbook#fanart#attendingmidnightscreen_art#sketch#half in the bag#best of the worst#not ai#mike stoklasa#hitb#rlm#red letter media#redlettermedia#botw#rlm fanart

35 notes

Text

oh no she's talking about AI some more

to comment more on the latest round of AI big news (guess I do have more to say after all):

chatgpt ghiblification

trying to figure out how far it's actually an advance over the state of the art of finetunes and LoRAs and stuff in image generation? I don't keep up with image generation stuff really, just look at it occasionally and go damn that's all happening then, but there are a lot of finetunes focusing on "Ghibli's style" which get it more or less well. previously on here I commented on an AI video generation model that patterned itself on Ghibli films, and video is a lot harder than static images.

of course 'studio Ghibli style' isn't exactly one thing: there are stylistic commonalities to many of their works and recurring designs, for sure, but there are also details that depend on the specific character designer and film in question in large and small ways (nobody is shooting for My Neighbours the Yamadas with this, but also e.g. Castle in the Sky does not look like Pom Poko does not look like How Do You Live in a number of ways, even if it all recognisably belongs to the same lineage).

the interesting thing about the ghibli ChatGPT generations for me is how well they're able to handle simplification of forms in image-to-image generation, often quite drastically changing the proportions of the people depicted but recognisably maintaining correspondence of details. that sort of stylisation is quite difficult to do well even for humans, and it must reflect quite a high level of abstraction inside the model's latent space. there is also relatively little of the 'oversharpening'/'ringing artefact' look that has been a hallmark of many popular generators - it can do flat colour well.

the big touted feature is its ability to place text in images very accurately. this is undeniably impressive, although OpenAI themselves admit it breaks down beyond a certain point, creating strange images which start out with plausible, clean text that then gradually turns into AI nonsense. it's really weird! I thought text would go from 'unsolved' to 'completely solved' or 'randomly works or doesn't work' - instead, here it feels sort of like the model has a certain limited 'pipeline' for handling text in images, but when the amount of text overloads that bandwidth, the rest of the image has to make do with vague text-like shapes! maybe the techniques from that Anthropic thought-probing paper might shed some light on how information flows through the model.

similarly the model also has a limit of scene complexity. it can only handle a certain number of objects (10-20, they say) before it starts getting confused and losing track of details.

as before when they first wired up DALL-E to ChatGPT, it also simply makes prompting a lot simpler. you don't have to fuck around with LoRAs and obtuse strings of words; you just talk to the most popular LLM and ask it for a modification in natural language. the whole process is once again black-boxed, and it's a poor level of control compared to what artists are used to, but it's still huge for ordinary people, and of course there's nothing stopping you popping the output into an editor to do your own editing.

not sure what architecture they're using in this version, whether ChatGPT is able to reason about image data in the same space as language data or if it's still calling a separate image model... need to look that up.

openAI's own claim is:

We trained our models on the joint distribution of online images and text, learning not just how images relate to language, but how they relate to each other. Combined with aggressive post-training, the resulting model has surprising visual fluency, capable of generating images that are useful, consistent, and context-aware.

that's kind of vague. not sure what architecture that implies. people are talking about 'multimodal generation' so maybe it is doing it all in one model? though I'm not exactly sure how the inputs and outputs would be wired in that case.

anyway, as far as complex scene understanding: per the link they've cracked the 'horse riding an astronaut' gotcha, they can do 'full glass of wine' at least some of the time but not so much in combination with other stuff, and they can't do accurate clock faces still.

normal sentences that we write in 2025.

it sounds like we've moved well beyond using tools like CLIP to classify images, and I suspect that glaze/nightshade are already obsolete, if they ever worked to begin with. (would need to test to find out).

all that said, I believe ChatGPT's image generator had been behind the times for quite a long time, so it probably feels like a bigger jump for regular ChatGPT users than the people most hooked into the AI image generator scene.

of course, in all the hubbub, we've also already seen the white house jump on the trend in a suitably appalling way, continuing the current era of smirking fascist political spectacle by making a ghiblified image of a crying woman being deported over drugs charges. (not gonna link that shit, you can find it if you really want to.) it's par for the course; the cruel provocation is exactly the point, which makes it hard to find the right tone to respond. I think that sort of use, though inevitable, is far more of a direct insult to the artists at Ghibli than merely creating a machine that imitates their work. (though they may feel differently! as yet no response from Studio Ghibli's official media. I'd hate to be the person who has to explain what's going on to Miyazaki.)

google make number go up

besides all that, apparently google deepmind's latest gemini model is really powerful at reasoning, and also notably cheaper to run, surpassing DeepSeek R1 on the performance/cost ratio front. when DeepSeek did this, it crashed the stock market. when Google did... crickets, only the real AI nerds who stare at benchmarks a lot seem to have noticed. I remember when Google releases (AlphaGo etc.) were huge news, but somehow the vibes aren't there anymore! it's weird.

I actually saw an ad for google phones with Gemini in the cinema when i went to see Gundam last week. they showed a variety of people asking it various questions with a voice model, notably including a question on astrology lmao. Naturally, in the video, the phone model responded with some claims about people with whatever sign it was. Which is a pretty apt demonstration of the chameleon-like nature of LLMs: if you ask it a question about astrology phrased in a way that implies that you believe in astrology, it will tell you what seems to be a natural response, namely what an astrologer would say. If you ask if there is any scientific basis for belief in astrology, it would probably tell you that there isn't.

In fact, let's try it on DeepSeek R1... I asked an astrological question and got an astrological answer with a really softballed disclaimer:

Individual personalities vary based on numerous factors beyond sun signs, such as upbringing and personal experiences. Astrology serves as a tool for self-reflection, not a deterministic framework.

Ask if there's any scientific basis for astrology, and indeed it gives you a good list of reasons why astrology is bullshit, bringing up the usual suspects (Barnum statements etc.). And of course, if I then explain the experiment and prompt it to talk about whether LLMs should correct users with scientific information when they ask pseudoscientific questions, it generates a reasonable-sounding discussion about how you could use reinforcement learning to encourage models to focus on scientific answers instead, and how that could be gently presented to the user.

I wondered what would happen if I instead asked it to talk about different epistemic regimes and come up with reasons why LLMs should take astrology into account in their guidance. However, this attempt didn't work so well - it started spontaneously bringing up the science side. It was able to observe how the framing of my question with words like 'benefit', 'useful' and 'LLM' made that response more likely. So LLMs infer a lot of context from framing and shape their simulacra accordingly. Don't think that's quite the message that Google had in mind in their ad though.

I asked Gemini 2.0 Flash Thinking (the small free Gemini variant with a reasoning mode) the same questions and its answers fell along similar lines, although rather more dry.

So yeah, returning to the ad - I feel like, even as the models get startlingly more powerful month by month, the companies still struggle to know how to get across to people what the big deal is, or why you might want to prefer one model over another, or how the new LLM-powered chatbots are different from old-school assistants like Siri (which could probably answer most of the questions in the Google ad, but not hold a longform conversation about it).

some general comments

The hype around ChatGPT's new update is mostly in its use as a toy - the funny stylistic clash it can create between the soft cartoony "Ghibli style" and serious historical photos. Is that really something a lot of people would pay for an expensive subscription to access? Probably not. On the other hand, the models' programming abilities are increasingly catching on.

But I also feel like a lot of people are still stuck on old models of 'what AI is and how it works' - stochastic parrots, collage machines etc. - that increasingly fall short of the more complex behaviours the models can perform, now that prediction is combined with reinforcement learning, self-play and other methods like that. Models are still very 'spiky' - superhumanly good at some things and laughably terrible at others - but every so often the researchers fill in some gaps between the spikes. And then we poke around and find some new ones, until they fill those too.

I always tried to resist 'AI will never be able to...' type statements, because that's just setting yourself up to look ridiculous. But I will readily admit, this is all happening way faster than I thought it would. I still do think this generation of AI will reach some limit, but genuinely I don't know when, or how good it will be at saturation. A lot of predicted 'walls' are falling.

My anticipation is that there's still a long way to go before this tops out. And I base that less on the general sense that scale will solve everything magically, and more on the intense feedback loop of human activity that has accumulated around this whole thing. As soon as someone proves that something is possible, that it works, we can't resist poking at it. Since we have a century or more of science fiction priming us on dreams/nightmares of AI, as soon as something comes along that feels like it might deliver on the promise, we have to find out. It's irresistible.

AI researchers are frequently said to place weirdly high values on 'P(doom)', the probability that AI research will wipe out the human species. You see letters calling for an AI pause, or papers saying 'agentic models should not be developed'. But I don't know how many have actually quit the field based on this belief that their research is dangerous. No, they just get a nice job doing 'safety' research. It's really fucking hard to figure out where this is actually going, when behind the eyes of everyone who predicts it, you can see a decade of LessWrong discussions framing their thoughts and you can see that their major concern is control over the light cone or something.

#ai#at some point in this post i switched to capital letters mode#i think i'm gonna leave it inconsistent lol

34 notes

Text

don't have the energy or time to render anything so take some sillies I've got in my tiny sketchbook

mostly just trying to get faces down. the tiny sketchbook is making it easier for me to draw frequently again :)

#personally really fond of the donut and epsilon#i think all the AIs have their greek letter as a kind of tattoo behind their left ear#krae draws#krae art#my art#rvb#red vs blue#rvb epsilon#agent south dakota#rvb south#franklin delano donut#rvb donut#rvb caboose

22 notes
