#AWSCloud

Text

Understanding the Boot Process in Linux

Six Stages of Linux Boot Process

Press the power button on your system, and after a few moments you see the Linux login prompt.

Have you ever wondered what happens behind the scenes from the time you press the power button until the Linux login prompt appears?

The following are the six high-level stages of a typical Linux boot process.

BIOS: Basic Input/Output System, executes the MBR

MBR: Master Boot Record, executes GRUB

GRUB: Grand Unified Bootloader, executes the kernel

Kernel: executes /sbin/init

Init: executes runlevel programs

Runlevel: runlevel programs are executed from /etc/rc.d/rc*.d/

1. BIOS

BIOS stands for Basic Input/Output System

Performs some system integrity checks

Searches, loads, and executes the boot loader program.

It looks for the boot loader on a floppy, CD-ROM, or hard drive. You can press a key (typically F12 or F2, but it depends on your system) during the BIOS startup to change the boot sequence.

Once the boot loader program is detected and loaded into the memory, BIOS gives the control to it.

So, in simple terms BIOS loads and executes the MBR boot loader.

2. MBR

MBR stands for Master Boot Record.

It is located in the first sector of the bootable disk, typically /dev/hda or /dev/sda.

The MBR is 512 bytes in size. It has three components: 1) primary boot loader info in the first 446 bytes, 2) partition table info in the next 64 bytes, and 3) the MBR validation check (boot signature) in the last 2 bytes.

It contains information about GRUB (or LILO in old systems).

So, in simple terms MBR loads and executes the GRUB boot loader.
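The 446 + 64 + 2 byte layout described above can be checked with a short script. What follows is a minimal Python sketch, not part of the original article: the names parse_mbr and make_sample_sector are made up for illustration, and it works on a synthetic 512-byte sector rather than a real disk.

```python
import struct

SECTOR_SIZE = 512
BOOT_CODE_SIZE = 446          # primary boot loader area
PARTITION_TABLE_SIZE = 64     # four 16-byte partition entries
ENTRY_SIZE = 16
BOOT_SIGNATURE = b"\x55\xaa"  # the 2-byte validation check


def parse_mbr(sector: bytes) -> dict:
    """Split a 512-byte MBR sector into its three regions (hypothetical helper)."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR sector must be exactly 512 bytes")
    table_end = BOOT_CODE_SIZE + PARTITION_TABLE_SIZE
    boot_code = sector[:BOOT_CODE_SIZE]
    table = sector[BOOT_CODE_SIZE:table_end]
    signature = sector[table_end:]

    partitions = []
    for i in range(PARTITION_TABLE_SIZE // ENTRY_SIZE):
        entry = table[i * ENTRY_SIZE:(i + 1) * ENTRY_SIZE]
        status = entry[0]            # 0x80 marks the bootable partition
        partition_type = entry[4]    # 0x00 means the slot is unused
        lba_start, num_sectors = struct.unpack_from("<II", entry, 8)
        if partition_type != 0:
            partitions.append({
                "bootable": status == 0x80,
                "type": partition_type,
                "lba_start": lba_start,
                "num_sectors": num_sectors,
            })
    return {
        "boot_code_len": len(boot_code),
        "signature_ok": signature == BOOT_SIGNATURE,
        "partitions": partitions,
    }


def make_sample_sector() -> bytes:
    """Build a synthetic sector with one bootable Linux (type 0x83) partition."""
    entry = bytes([0x80, 0, 0, 0, 0x83, 0, 0, 0]) + struct.pack("<II", 2048, 204800)
    table = entry + bytes(PARTITION_TABLE_SIZE - ENTRY_SIZE)
    return bytes(BOOT_CODE_SIZE) + table + BOOT_SIGNATURE


if __name__ == "__main__":
    print(parse_mbr(make_sample_sector()))
```

To inspect a real disk, you would read the first 512 bytes of the device (for example /dev/sda, which normally requires root) and pass them to parse_mbr.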

3. GRUB

GRUB stands for Grand Unified Bootloader.

If you have multiple kernel images installed on your system, you can choose which one to execute.

GRUB displays a splash screen and waits for a few seconds; if you don’t enter anything, it loads the default kernel image as specified in the GRUB configuration file.

GRUB has knowledge of the filesystem (the older Linux loader LILO didn’t understand filesystems).

The GRUB configuration file is /boot/grub/grub.conf (/etc/grub.conf is a link to this). The following is a sample grub.conf from CentOS.

#boot=/dev/sda

default=0

timeout=5

splashimage=(hd0,0)/boot/grub/splash.xpm.gz

hiddenmenu

title CentOS (2.6.18-194.el5PAE)

root (hd0,0)

kernel /boot/vmlinuz-2.6.18-194.el5PAE ro root=LABEL=/

initrd /boot/initrd-2.6.18-194.el5PAE.img

As you can see from the above, it specifies the kernel and initrd images.

So, in simple terms GRUB just loads and executes Kernel and initrd images.
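To make the role of default= and the title stanzas concrete, here is a small Python sketch that picks out the entry GRUB would boot by default from a grub.conf like the sample above. It is an illustration only: default_entry is a hypothetical helper, it assumes default= holds a plain number, and it ignores everything except the root, kernel, and initrd lines.

```python
SAMPLE_GRUB_CONF = """\
#boot=/dev/sda
default=0
timeout=5
splashimage=(hd0,0)/boot/grub/splash.xpm.gz
hiddenmenu
title CentOS (2.6.18-194.el5PAE)
root (hd0,0)
kernel /boot/vmlinuz-2.6.18-194.el5PAE ro root=LABEL=/
initrd /boot/initrd-2.6.18-194.el5PAE.img
"""


def default_entry(grub_conf: str) -> dict:
    """Return the title/root/kernel/initrd of the default boot entry."""
    default_index = 0
    entries = []
    for raw in grub_conf.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("default="):
            # Assumption: a numeric index, counted from 0
            default_index = int(line.split("=", 1)[1])
        elif line.startswith("title "):
            # Each "title" line opens a new boot entry
            entries.append({"title": line[len("title "):]})
        elif entries:
            key, _, value = line.partition(" ")
            if key in ("root", "kernel", "initrd"):
                entries[-1][key] = value.strip()
    return entries[default_index]


if __name__ == "__main__":
    entry = default_entry(SAMPLE_GRUB_CONF)
    print(entry["title"])
    print(entry["kernel"])
    print(entry["initrd"])
```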

4. Kernel

Mounts the root file system as specified in the “root=” in grub.conf

Kernel executes the /sbin/init program

Since init is the first program executed by the Linux kernel, it has the process ID (PID) of 1. Run ‘ps -ef | grep init’ and check the PID.

initrd stands for Initial RAM Disk.

initrd is used by the kernel as a temporary root file system until the kernel is booted and the real root file system is mounted. It also contains the necessary drivers compiled inside, which help it access the hard drive partitions and other hardware.

5. Init

Looks at the /etc/inittab file to decide the Linux run level.

Following are the available run levels

0 – halt

1 – Single user mode

2 – Multiuser, without NFS

3 – Full multiuser mode

4 – unused

5 – X11

6 – reboot

Init identifies the default run level from /etc/inittab and uses it to load all the appropriate programs.

Execute ‘grep initdefault /etc/inittab’ on your system to identify the default run level.

If you want to get into trouble, you can set the default run level to 0 or 6. Since you know what 0 and 6 mean, you probably won’t do that.

Typically you would set the default run level to either 3 or 5.
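The grep above finds a line of the form id:N:initdefault:, where N is the default run level. As a rough sketch of the same lookup in Python (default_runlevel is a made-up helper name, and the sample text is illustrative rather than copied from a real system):

```python
from typing import Optional

SAMPLE_INITTAB = """\
# Default runlevel
id:3:initdefault:
si::sysinit:/etc/rc.d/rc.sysinit
"""


def default_runlevel(inittab_text: str) -> Optional[int]:
    """Return the run level named by the initdefault entry, or None."""
    for raw in inittab_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # inittab entries have the form id:runlevels:action:process
        fields = line.split(":")
        if len(fields) >= 3 and fields[2] == "initdefault":
            try:
                return int(fields[1])
            except ValueError:
                return None
    return None


if __name__ == "__main__":
    print(default_runlevel(SAMPLE_INITTAB))
```

On a system that still uses SysV init, you could pass the contents of /etc/inittab to the function instead of the sample string.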

6. Runlevel programs

When the Linux system is booting up, you might see various services getting started. For example, it might say “starting sendmail …. OK”. Those are the runlevel programs, executed from the run level directory as defined by your run level.

Depending on your default init level setting, the system will execute the programs from one of the following directories.

Run level 0 – /etc/rc.d/rc0.d/

Run level 1 – /etc/rc.d/rc1.d/

Run level 2 – /etc/rc.d/rc2.d/

Run level 3 – /etc/rc.d/rc3.d/

Run level 4 – /etc/rc.d/rc4.d/

Run level 5 – /etc/rc.d/rc5.d/

Run level 6 – /etc/rc.d/rc6.d/

Please note that there are also symbolic links for these directories directly under /etc. So, /etc/rc0.d is linked to /etc/rc.d/rc0.d.

Under the /etc/rc.d/rc*.d/ directories, you will see programs whose names start with S and K.

Programs that start with S are used during startup. S for startup.

Programs that start with K are used during shutdown. K for kill.

The numbers right next to S and K in the program names are the sequence numbers in which the programs should be started or killed.

For example, S12syslog starts the syslog daemon, which has the sequence number 12. S80sendmail starts the sendmail daemon, which has the sequence number 80. So, the syslog program will be started before sendmail.
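The S/K naming rule can be illustrated with a few lines of Python. This is only a sketch of the ordering logic, assuming two-digit sequence numbers as in the examples above; start_order is a hypothetical helper, not something the init scripts themselves provide.

```python
import re

# S or K, a two-digit sequence number, then the service name
_RC_NAME = re.compile(r"^([SK])(\d{2})(.+)$")


def start_order(names):
    """Return service names from S-prefixed scripts, in startup order."""
    parsed = []
    for name in names:
        match = _RC_NAME.match(name)
        if match and match.group(1) == "S":
            parsed.append((int(match.group(2)), match.group(3)))
    parsed.sort()  # lowest sequence number first, ties broken by name
    return [service for _, service in parsed]


if __name__ == "__main__":
    scripts = ["S80sendmail", "K05atd", "S12syslog"]
    print(start_order(scripts))
```

With the example list, syslog comes out ahead of sendmail, and the K-prefixed script is left out because it is only used at shutdown.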

There you have it. That is what happens during the Linux boot process.

For more details, visit www.qcsdclabs.com

#qcsdclabs#hawkstack#hawkstack technologies#linux#redhat#information technology#awscloud#devops#cloudcomputing

2 notes

Text

youtube

Top 5 Cloud Computing Skills for 2025 (Salaries Included)

In this video, he shares the top 5 Cloud Computing Skills for 2025, including the salaries for these skills and the Cloud Computing jobs you can apply for.

#education#Cloud Computing Skills#cloudcomputing#educate yourself#educate yourselves#security#technology#awscloud#aws cloud#ai machine learning#Top 5 Cloud Computing Skills for 2025#education for all#youtube#Youtube

2 notes

Text

🤝Hand holding support is available with 100% passing assurance🎯 📣Please let me know if you or any of your contacts need any certificate📣 📝or training to get better job opportunities or promotion in current job📝 📲𝗖𝗼𝗻𝘁𝗮𝗰𝘁 𝗨𝘀 : Interested people can whatsapp me directly ✅WhatsApp :- https://wa.aisensy.com/uemtSK 💯Proxy available with 100% passing guarantee.📌 🎀 FIRST PASS AND THAN PAY 🎀 ISC2 : CISSP & CCSP Cisco- CCNA, CCNP, Specialty ITILv4 CompTIA - All exams Google-Google Cloud Associate & Google Cloud Professional People Cert- ITILv4 PMI-PMP, PMI-ACP, PMI-PBA, PMI-CAPM, PMI-RMP, etc. EC Counsil-CEH,CHFI AWS- Associate, Professional, Specialty Juniper- Associate, Professional, Specialty Oracle - All exams Microsoft - All exams SAFe- All exams Scrum- All Exams Azure & many more… 📲𝗖𝗼𝗻𝘁𝗮𝗰𝘁 𝗨𝘀 : Interested people can whatsapp me directly ✅WhatsApp :- https://wa.aisensy.com/uemtSK

#ccna#ccnatraining#ccnacertification#cisco ccna#awscloud#aws course#devops#cybersecurity#salesforce#pmp certification#pmp training#pmp course#pmp exam

1 note

Text

Journey to Devops

The concept of “DevOps” has been gaining traction in the IT sector for a couple of years. It involves promoting teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article, we will explore the elements of becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

In order to pursue a career as a DevOps Engineer it is crucial to possess a grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby or PHP is essential. Additionally, having knowledge about operating systems, databases and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is extensively used by DevOps teams for code repository management. It tracks code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool used for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time when performing tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps us monitor the health and performance of our IT infrastructure. It also helps us to identify and resolve issues in real-time and ensure the high availability and reliability of IT systems as well.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It helps us automate the process of provisioning and configuring IT resources and ensures consistency between development and production environments.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

You can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and continuously learn and improve your skills. With the right skills, experience, and network, you can achieve great success in this field and earn valuable experience.

2 notes

Text

Google Cloud Professional Cloud Architect Certification. Become a GCP Cloud Architect, Latest GCP Exam and Case Studies.

Google Cloud Platform is one of the fastest-growing cloud service platforms offered today that lets you run your applications and data workflows at a 'Google-sized' scale.

Google Cloud Certified Professional Cloud Architect certification is one of the most highly desired IT certifications out today. It is also one of the most challenging exams offered by any cloud vendor today. Passing this exam will take many hours of study, hands-on experience, and an understanding of a very wide range of GCP topics.

Luckily, we're here to help you out! This course is designed to be your best single resource to prepare for and pass the exam to become a certified Google Cloud Architect.

Why should you get a Google Cloud Certification?

Here are a few results from Google's 2020 Survey:

89% of Google Cloud certified individuals are more confident about their cloud skills

GCP Cloud Architect was the highest paying certification of 2020 (2) and 2019 (3)

More than 1 in 4 of Google Cloud certified individuals took on more responsibility or leadership roles at work

Why should you aim for Google Cloud - GCP Cloud Architect Certification?

Google Cloud Professional Cloud Architect certification helps you gain an understanding of cloud architecture and Google Cloud Platform.

As a Cloud Architect, you will learn to design, develop, and manage robust, secure, scalable, highly available, and dynamic solutions to drive business objectives.

The Google Cloud Certified - Professional Cloud Architect exam assesses your ability to:

Design and architect a GCP solution architecture

Manage and provision the GCP solution infrastructure

Design for security and compliance

Analyze and optimize technical and business processes

Manage implementations of Google Cloud architecture

Ensure solution and operations reliability

Are you ready to get started on this amazing journey to becoming a Google Cloud Architect?

So let's get started!

Who this course is for:

You want to start your Cloud Journey with Google Cloud Platform

You want to become a Google Cloud Certified Professional Cloud Architect

#googlecloud#aws#cloud#cloudcomputing#azure#google#googlepixel#technology#machinelearning#awscloud#devops#bigdata#python#coding#googlecloudplatform#cybersecurity#gcp#developer#microsoft#linux#datascience#tech#microsoftazure#programming#amazonwebservices#amazon#software#pixel#xl#azurecloud

5 notes

Text

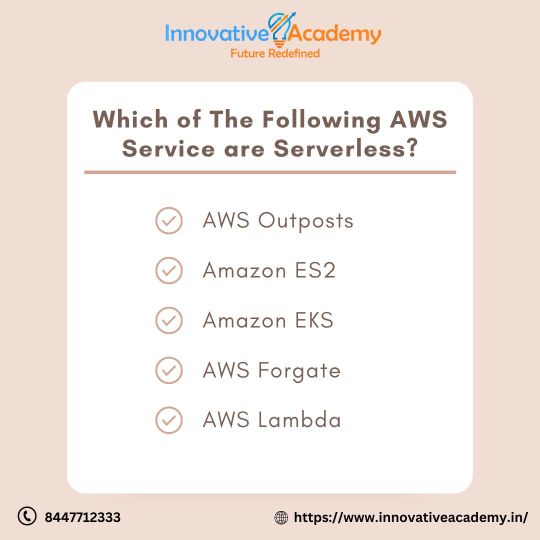

Quiz Time

Visit: https://innovativeacademy.in Call us: +91 8447712333

#InnovativeAcademy#microsoftcertifications#linux#linuxcertifications#cloudcomputing#awscloud#ccnacertifications#cybersecurity#coding#developer#RedHatTraining#redhatlinux#machinelearning#bigdata#googlecloud#iot#software#java#awstraining#ccna#javascript#gcp#security#CCNAQuiz#CISCO#Network#Microsoft#itcourses#quiz

2 notes

Text

Tsofttech is one of the best training centers in Hyderabad for online, classroom, and corporate training. We provide DevOps online training worldwide. Tsofttech is an excellent DevOps training center in Hyderabad. After the course, we offer support for certification, resume preparation, and interview preparation.

For More: https://tsofttech.com/devops-online-training

Attend a free demo on DevOps with AWS. Sign up now: https://bit.ly/3rp0W84 to boost your IT career to the next level with expert faculty. Register now to reserve your spot.

3 notes

Text

Exclusive Training institute for AWS and many courses | Wiculty

Wiculty’s AWS training and certification will help you master skills like AWS Cloud, Lambda, Redshift, EC2, IAM, S3, Global Accelerator and more. Also, in this AWS course, you will work with various tools of the AWS cloud platform and create a highly scalable SaaS application. Learn AWS from AWS certified experts to become an AWS solutions architect.

2 notes

Text

Simplifying Your Journey: AWS Cloud Migration with Arrow PC Network

In today's digital age, businesses are increasingly turning to cloud solutions to leverage scalability, flexibility, and cost-effectiveness. As a trusted technology partner, Arrow PC Network is committed to assisting enterprises in unlocking the full potential of cloud computing. This blog post explores the benefits and best practices of AWS cloud migration through the lens of Arrow PC Network, showcasing their expertise in seamlessly transitioning businesses to the cloud.

The Power of AWS Cloud:

The AWS cloud is a leading provider of on-demand cloud computing resources, offering a wide range of services, including compute power, storage, databases, analytics, machine learning, and more. Arrow PC Network recognizes the transformative power of AWS and helps businesses harness this potential to drive innovation, reduce operational costs, and enhance overall efficiency.

Why Migrate to AWS Cloud with Arrow PC Network?

Arrow PC Network distinguishes itself as an AWS migration partner by providing a comprehensive suite of services tailored to meet the unique needs of each business. From initial planning and assessment to the execution and post-migration support, their certified experts guide businesses through the migration journey with meticulous attention to detail.

Understanding the Business Needs:

Arrow PC Network begins the migration process by understanding the specific requirements and objectives of the business. They work closely with key stakeholders to analyze existing infrastructure, applications, and data to devise a personalized migration strategy that ensures minimal disruption to ongoing operations.

Picking the Right Migration Approach:

There is no one-size-fits-all approach to cloud migration. Arrow PC Network advises businesses on choosing the most suitable migration model, be it a "lift-and-shift" approach for rapid migration or a more complex re-architecting for optimizing performance and cost-effectiveness.

Data Security and Compliance:

Arrow PC Network prioritizes data security and compliance throughout the migration journey. They implement robust security measures, including encryption, access controls, and continuous monitoring, to safeguard sensitive information and meet industry-specific regulations.

Seamless Application Migration:

Migrating applications to the cloud requires careful planning and execution. Arrow PC Network's seasoned experts utilize automation tools and a phased approach to ensure a smooth transition, reducing the risk of downtime and data loss.

Optimizing Costs and Performance:

One of the primary benefits of cloud migration is cost optimization. Arrow PC Network assists businesses in selecting the most cost-efficient AWS services, right-sizing resources, and leveraging auto-scaling capabilities to achieve maximum performance while keeping operational expenses in check.

Post-Migration Support and Monitoring:

Cloud migration is not a one-time event; it's an ongoing journey. Arrow PC Network provides continuous support and monitoring to address any post-migration challenges, ensuring that the migrated environment remains secure, performant, and scalable.

Conclusion:

As businesses continue to embrace the cloud as a key driver of innovation and growth, Arrow PC Network stands as a reliable partner in guiding organizations through the complexities of AWS cloud migration. Their expertise, combined with a deep understanding of individual business needs, empowers enterprises to leverage the full potential of AWS and embark on a successful cloud journey.

Let Arrow PC Network be your trusted companion on your path to digital transformation with AWS cloud migration.

linkedin.com/in/karanjot-singh - Cloud Expert at Arrow PC Network

1 note

Text

#information technology#software#software development#testing#automation#awscloud#cybersecurity#digitaltransformation

0 notes

Text

AWS Cloud Training in Pune: The Smartest Move for Tech Enthusiasts

The world is moving to the cloud, and so should your career. As organizations adopt digital-first strategies, the need for cloud computing experts—especially those skilled in Amazon Web Services (AWS)—is rising fast. If you’re in Pune and ready to step into the world of cloud technology, AWS Cloud Training in Pune with WebAsha Technologies is your best move toward a high-growth IT career.

What is AWS? And Why Should You Learn It?

Amazon Web Services (AWS) is the most widely used cloud computing platform globally, offering secure, scalable, and cost-efficient infrastructure. It powers businesses in every domain—from tech startups to government agencies—enabling them to deploy applications, store data, and manage services in real-time.

Why AWS Skills Are Career Gold:

AWS holds over 30% of the global cloud market share

More than 1 million active users globally

High-paying job roles across industries

Certifications that are recognized worldwide

Why Choose WebAsha Technologies for AWS Cloud Training in Pune?

At WebAsha Technologies, we go beyond textbooks. We deliver career-oriented training that helps you not only learn AWS but also implement it in real-world scenarios. Whether you're a student, a working professional, or someone seeking a career change, our program is tailored to meet your goals.

What Makes Our AWS Training Stand Out?

Certified Trainers with real-time cloud project experience

Hands-On Learning with labs and practical simulations

Live Projects to prepare for real-world deployments

Updated Curriculum

Interview Preparation & Job Support

Who Can Join AWS Cloud Training in Pune?

This course is designed for learners from various backgrounds. You don’t need prior cloud experience—just curiosity and dedication.

Ideal for:

IT Professionals looking to upskill

Freshers eager to start a career in cloud computing

System Admins and Network Engineers

Software Developers and DevOps Engineers

Business Owners or Startup Founders with technical goals

Course Highlights – What You’ll Learn

Our AWS Cloud Training in Pune focuses on building your knowledge from the ground up and preparing you for AWS certifications like Solutions Architect Associate.

Core Modules Include:

Introduction to Cloud & AWS Overview

EC2: Compute Services

S3: Cloud Storage Solutions

VPC: Virtual Private Cloud Setup

IAM: User and Permission Management

RDS & DynamoDB: Databases on AWS

Elastic Load Balancers & Auto Scaling

Cloud Monitoring with CloudWatch

AWS Lambda & Serverless Computing

Real-World Project Deployment

Career Path After AWS Training

Once you complete the course, you can aim for industry-recognized certifications such as:

AWS Certified Cloud Practitioner

AWS Certified Solutions Architect – Associate

AWS Certified Developer – Associate

AWS Certified SysOps Administrator

Why Pune is the Perfect Place to Learn AWS

As one of India’s top IT cities, Pune hosts numerous tech parks and multinational companies. Cloud computing roles are booming here, and employers actively seek AWS-certified professionals. By completing AWS Cloud Training in Pune, you position yourself for local and global job opportunities.

Final Words: Let the Cloud Power Your Future

With cloud computing becoming essential in every sector, there has never been a better time to learn AWS. Enroll in AWS Cloud Training in Pune at WebAsha Technologies and take your first step toward a high-paying, future-ready career. Learn from experts, get hands-on experience, and earn certifications that can open doors globally.

0 notes

Text

Why ITIO Innovex AWS Solutions Outperform the Rest 🚀☁️

Not all AWS partners are created equal. Here’s why ITIO Innovex AWS is miles ahead:

✅ Custom-Built AWS Architectures – Not cookie-cutter setups. We tailor cloud environments that match your exact business needs—scalable, secure, and cost-optimized.

✅ FinTech & Crypto Expertise – We specialize in cloud infrastructure for Neo Banks, Payment Gateways, Crypto Exchanges, and more—so you get domain-specific solutions, not generic ones.

✅ Full Compliance by Design – PCI DSS, GDPR, ISO... baked into your AWS environment from Day 1. No post-deployment surprises.

✅ Blazing-Fast Deployment – Launch production-ready systems in days, not months.

✅ 24/7 DevOps & Cost Optimization – Constant monitoring, automatic scaling, and ongoing cloud spend audits to save you money without sacrificing performance.

⚡ AWS is powerful—but with ITIO Innovex, it becomes unstoppable. Let’s make your AWS stack work smarter, faster, and safer.

#AWS #CloudComputing #ITIOInnovex #CloudExperts #FinTechCloud #CryptoInfrastructure

#crypto#cybersecurity#digital banking licenses#digitalbanking#investors#white label crypto exchange software#bitcoin#digital marketing#fintech#financial advisor#awscloud#cloudcomputing#devops#digitalcurrency#altcoin#investment#blockchain#fintechcloud#cryptoinvesting#crypto industry

1 note

Text

Making a Game with Amazon Q

UPDATE: I can't redeem for the cool shirt because they don't send them to America and I didn't read that in the doc when I started, so I just wasted hours of time lmao.

Today, I got this email from Amazon AWS:

and I thought "eh why not, I get a cool shirt."

So, I tried it out. I read the article that Haowen Huang wrote about how he made his own platformer game with Amazon Q. I tried creating a similar environment with Python and Pygame, but I wanted a different game. The game I wanted to recreate was Flappy Bird. While it isn't a "retro game," I still think it fits the theme of a classic '8bit' game, and it's retro enough for me (don't get mad haha). So I started by installing the Amazon Q cask with Homebrew and setting up a Python project and virtual environment with PyCharm. After I did that, I opened the terminal and started a q chat. Just as a side note, I have to admit, I am a fan of the auto-fill menu options available in the terminal with Amazon Q installed. It looks nice and it is kinda useful (especially for cd). I also love how Amazon Q chat will ask you for your permission before doing any task.

Once opening the chat, I gave it the following prompt:

"Greetings! In this directory, I am using python 3.11 with pygame to try and make a 'retro' styled game. The game I want to make is Flappy Bird. While it's not entirely retro, I still think it fits the theme, and it's retro to me, so I think it counts. Can you generate me some code and maybe textures/sprites to recreate flappy bird?"

It then took FOREVER to generate roughly six images (.png) and they were ALL corrupt. I think it may have just generated a hash of a PNG, because the content was just text, no binary. With this failure, I started to think outside the box and asked myself, "what would be easy for AI to make?" I then asked it: "All of the images in the assets sub-directory are corrupted/invalid. How about instead of using images, we use vectors, more specifically SVGs? Can we please replace the old images with new SVGs and update the code to correspond with these changes?"

And it made one bird SVG. It tried to generate some code, but ran into issues when checking if I have specific packages installed with pip (because I don't have pip installed globally). It did not make anything else until prompted again:

"You made an amazing bird! But the rest of our code still needs updated and we need to replace all of the previous images. Please generate the remaining images in the assets directory, and rewrite the code. Don't worry about if we have packages installed or not, I can handle that myself; use whichever packages you deem necessary."

After this prompt, it made the rest of the SVGs, which all looked acceptable, and also generated some updated code and created its own requirements. Unfortunately, the SVG library it tried to use (cairo) would not work, and I did not want to have any native dependencies, so I asked it to fix that itself.

"Could you maybe rewrite the code to parse the SVGs without needing any sort of dependency like cairo? I don't like having to install anything extra and I'm getting errors for not having cairo installed (even though I do) and I would rather not troubleshoot that."

And it did exactly that. It removed the dependencies and I had a somewhat working game. Of course there were bugs. The two in this version were that the ground was not continuous (it would teleport at the end of the screen) and that when the bird hit the ground, the game would continue running and essentially soft-lock. I then asked Q to fix its issues:

"This runs great! Two slight issues. First one is that the ground kinda teleports to the new side once it goes off the screen, leaving a blank ground on the bottom of the screen. Second, when the bird hits the ground, the game does not end and it soft-locks. Could you update the code to fix this?"

After this, it fixed both of those issues, but introduced a new issue. In this change, Q decided to keep the ground moving, even when the game is finished. I asked it to fix that issue:

"Amazing work! One last tiny thing, when the game is over, the ground keeps moving, can you make it so the ground doesn't move when the game is over?"

And after that, the game seemed to work great! The last issue I had was that the score text in the top left was completely white and hard to see, so I asked Q to make it have an outline. Q asked if it was okay to remove pretty much all of the code, which seemed odd, so I backed up the code and allowed it. After I allowed it, Q did that exact action, then threw an error saying it couldn't find any code relating to scoring... after it had just removed it. It then continued to regenerate the whole file resulting in a broken game. However, it did generate a method called draw_text_with_outline which was great, because I could just restore my previous code and implement that method myself. It was very quick and easy. After I did that, I had a completely working Flappy Bird! Below is a video of the game functioning:

My takeaway from this was that Amazon Q can be helpful for getting started and creating a working base game, but it still suffers from issues common to other AIs: being forgetful, creating faults while trying to fix issues, and so on. However, with guidance, you can quickly get to a near-promising result. In total, I spent less than an hour getting this set up and I wrote one line of code in total (implementing the text method mentioned above).
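For readers curious about the two ground bugs described earlier (the ground "teleporting" at the screen edge, and the ground still moving after game over), here is one common way to handle them. This is a plain-Python sketch written for this post, not the code Amazon Q generated; the function names and the tile and screen sizes are made up, and the drawing itself (for example with Pygame) is left out.

```python
def scroll_ground(offset, speed, tile_width, game_over):
    """Advance the scroll offset, wrapping at one tile width.

    When the game is over the offset is returned unchanged,
    so the ground stops moving.
    """
    if game_over:
        return offset
    return (offset + speed) % tile_width


def ground_tile_xs(offset, tile_width, screen_width):
    """Return the x positions at which to draw ground tiles.

    Tiles are repeated from -offset until the right edge of the
    screen is covered, so no blank gap appears when the first
    tile slides off the left side.
    """
    xs = []
    x = -offset
    while x < screen_width:
        xs.append(x)
        x += tile_width
    return xs


if __name__ == "__main__":
    offset = 330
    offset = scroll_ground(offset, speed=10, tile_width=336, game_over=False)
    print(offset, ground_tile_xs(offset, tile_width=336, screen_width=400))
```

In a Pygame loop, you would call scroll_ground once per frame and blit the ground image at each x returned by ground_tile_xs.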

If you want to see the code that Amazon Q created, check out the repository below.

0 notes

Text

Edge Computing for Web Developers: How to Speed Up Your Apps

In today’s digital race, milliseconds matter.

Whether you’re building a real-time dashboard, an e-commerce platform, or a SaaS product, users expect one thing — speed. But traditional cloud setups, while powerful, aren’t always fast enough when data has to travel halfway across the globe.

Enter: Edge Computing — a game-changing strategy that moves computing closer to users and supercharges web performance.

What Is Edge Computing (And Why Should You Care)?

Imagine you’re ordering pizza. Would you rather get it from a kitchen next door or one 500 miles away?

That’s the difference between centralized cloud and edge computing.

Edge computing is about processing data as close to the user as possible — often on local servers or network nodes instead of a distant data center. For web developers, this means fewer delays, faster responses, and smoother user experiences.

And in an age where a one-second delay can drop conversions by 7%, that’s a big deal.

How Does It Actually Work?

Here’s the simple version:

You deploy some parts of your app (like APIs, static files, and authentication logic) to a central server and across multiple edge locations worldwide.

When a user in New York accesses your app, it loads from a nearby edge server, not from a main server in Singapore.

Result? Lower latency, less server load, and faster load times.

What Can Web Developers Use Edge Computing For?

Edge computing isn’t just for heavy tech infrastructure — it’s now developer-friendly and API-driven. Here’s how you can use it:

1. Deliver Static Assets Faster

CDNs (Content Delivery Networks) like Cloudflare, Vercel, or Netlify already do this — they serve your HTML, CSS, JS, and images from edge locations.

Bonus Tip: Combine with image optimization at the edge to slash load times.

2. Run Serverless Functions at the Edge

Think dynamic actions like form submissions, authentication, or geolocation-based content. Platforms like Cloudflare Workers, Vercel Edge Functions, and AWS Lambda@Edge let you run logic closer to your users.

Example: Show region-specific content without needing the user to wait for a central server to decide.

3. Improve API Response Times

You can cache API responses or compute lightweight operations at the edge to reduce back-and-forth trips to the origin server.

Imagine: A travel app loading nearby attractions instantly by computing distance at the edge, not centrally.
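As a rough illustration of caching API responses near the user, here is a tiny time-to-live (TTL) cache in Python. It is a sketch of the idea only: real edge platforms have their own cache APIs and are typically programmed in JavaScript, and the EdgeCache class, its parameters, and the fetch_from_origin callback are all hypothetical names.

```python
import time
from typing import Any, Callable, Dict, Tuple


class EdgeCache:
    """Serve cached responses until they are older than ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock            # injectable so the cache can be tested
        self._store: Dict[str, Tuple[float, Any]] = {}
        self.origin_calls = 0         # how many times we went back to the origin

    def get(self, key: str, fetch_from_origin: Callable[[str], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]             # fresh copy: no trip to the origin
        value = fetch_from_origin(key)
        self.origin_calls += 1
        self._store[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = EdgeCache(ttl_seconds=60)

    def slow_origin(path: str) -> dict:
        # Stand-in for a request to the central server
        return {"path": path, "attractions": ["museum", "park"]}

    cache.get("/api/attractions", slow_origin)
    cache.get("/api/attractions", slow_origin)
    print("origin calls:", cache.origin_calls)
```

The second lookup is answered from the cache, so the origin is contacted only once within the TTL window.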

4. Secure Your App Better

Edge networks can block threats before they ever reach your main server, including bots, DDoS attacks, and suspicious traffic.

It’s like having a security guard posted on every street corner, not just your front door.

But… Does Every App Need Edge Computing?

Not necessarily. If your app is local, low-traffic, or non-latency-sensitive, traditional cloud might be enough.

But if you’re scaling globally, working with real-time data, or want lightning-fast load speeds, edge computing is your secret weapon.

Real-World Impact: Numbers Don’t Lie

Vercel reported a 50% performance boost for apps deployed with edge functions.

Retailers using edge caching see a 20–30% decrease in bounce rates.

Streaming platforms improved video start times by up to 60% with edge delivery.

These aren’t just nice-to-haves — they’re competitive advantages.

Getting Started: Tools You Should Know

Here are a few platforms and tools that make edge computing accessible for developers:

Cloudflare Workers — Write JavaScript functions that run at the edge.

Vercel — Perfect for Next.js and frontend teams, with edge function support.

Netlify Edge Functions — Simplified edge logic built into your CI/CD.

AWS Lambda@Edge — Enterprise-grade, with tight AWS integration.

Pro tip: If you’re already using frameworks like Next.js, Nuxt, or SvelteKit, edge-ready deployments are often just one setting away.

Final Thoughts: Why This Matters to You

For developers: Edge computing lets you build faster, more responsive apps without reinventing your stack.

For business owners: It means happier users, lower customer loss, and more conversions.

In a world where speed = success, edge computing isn’t the future — it’s the edge you need today.

0 notes

Text

Become an AWS Cloud Architect with Evision Technoserve

Master the skills to design scalable, secure, and cost-effective cloud solutions using EC2, VPC, Load Balancers, and Auto Scaling. Get hands-on experience with real-world projects that prepare you for the industry. Our expert-led training ensures you're job-ready from day one.

0 notes