#Apache MXNet


Machine Learning Platforms: The Tools Driving the AI Revolution

Machine learning has become one of the most dynamic and transformative fields in modern technology. Behind every advance in artificial intelligence, from facial recognition to autonomous vehicles, are powerful software platforms that allow developers and researchers to create, train, and deploy AI models…

#Apache MXNet#data science#deep learning#AI development#AI frameworks#artificial intelligence#Keras#machine learning#machine learning platforms#AI programming#PyTorch#neural networks#Scikit-learn#TensorFlow


Best Open-Source AI Frameworks for Developers in 2025

Artificial Intelligence (AI) is transforming industries, and open-source frameworks are at the heart of this revolution. For developers, choosing the right AI tools can make the difference between a successful project and a stalled experiment. In 2025, several powerful open-source frameworks stand out, each with unique strengths for different AI applications—from deep learning and natural language processing (NLP) to scalable deployment and edge AI.

Here’s a curated list of the best open-source AI frameworks developers should know in 2025, along with their key features, use cases, and why they matter.

1. TensorFlow – The Industry Standard for Scalable AI

Developed by Google, TensorFlow remains one of the most widely used AI frameworks. It excels in building and deploying production-grade machine learning models, particularly for deep learning and neural networks.

Why TensorFlow?

Flexible Deployment: Runs on CPUs, GPUs, and TPUs, with support for mobile (TensorFlow Lite) and web (TensorFlow.js).

Production-Ready: Used by major companies for large-scale AI applications.

Strong Ecosystem: Extensive libraries (Keras, TFX) and a large developer community.

Best for: Enterprises, researchers, and developers needing scalable, end-to-end AI solutions.

2. PyTorch – The Researcher’s Favorite

Originally developed by Meta (formerly Facebook), PyTorch has gained massive popularity for its user-friendly design and dynamic computation graph, making it ideal for rapid prototyping and academic research.

Why PyTorch?

Pythonic & Intuitive: Easier debugging and experimentation compared to static graph frameworks.

Dominates Research: Preferred for cutting-edge AI papers and NLP models.

TorchScript for Deployment: Converts models for optimized production use.

Best for: AI researchers, startups, and developers focused on fast experimentation and NLP.
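"Dynamic computation graph" means the graph is recorded while ordinary Python code runs, so loops and `if` statements decide its shape on every call. The sketch below illustrates that define-by-run idea with a hypothetical scalar `Value` class and reverse-mode differentiation in plain Python. It is not PyTorch's API; in PyTorch the equivalent would be tensors created with `requires_grad=True` and a call to `.backward()`.

```python
class Value:
    """A scalar that records the operations applied to it (a toy autograd node)."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # nodes this value was computed from
        self._grad_fns = grad_fns  # map upstream gradient -> gradient for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Topologically order the graph that was recorded while the code ran.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk the recorded graph in reverse, accumulating gradients (chain rule).
        for node in reversed(order):
            for parent, fn in zip(node._parents, node._grad_fns):
                parent.grad += fn(node.grad)


def f(x):
    # Ordinary Python control flow decides which graph gets built.
    if x.data > 0:
        return x * x + x * 3
    return x * -1


x = Value(2.0)
y = f(x)
y.backward()
print(y.data, x.grad)  # 10.0 7.0  (f(2) = 4 + 6, f'(x) = 2x + 3)
```

Calling `f` with a negative input records a different, smaller graph, which is exactly what a static graph framework would have to express with special control-flow operators.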

3. Hugging Face Transformers – The NLP Powerhouse

Hugging Face has revolutionized natural language processing (NLP) by offering pre-trained models like GPT, BERT, and T5 that can be fine-tuned with minimal code.

Why Hugging Face?

Huge Model Library: Thousands of ready-to-use NLP models.

Easy Integration: Works seamlessly with PyTorch and TensorFlow.

Community-Driven: Open-source contributions accelerate AI advancements.

Best for: Developers building chatbots, translation tools, and text-generation apps.

4. JAX – The Next-Gen AI Research Tool

Developed by Google Research, JAX is gaining traction for high-performance numerical computing and machine learning research.

Why JAX?

Blazing Fast: Compiles code with the XLA compiler for GPU/TPU acceleration.

Auto-Differentiation: Simplifies gradient-based ML algorithms.

Composable Functions: Enables advanced research in AI and scientific computing.

Best for: Researchers and developers working on cutting-edge AI algorithms and scientific ML.
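To make "auto-differentiation" and "composable functions" concrete, here is a minimal plain-Python sketch, not JAX code. The hypothetical `grad` and `vectorize` helpers are function transformations, loosely analogous to `jax.grad` and `jax.vmap`: each takes a function and returns a new one, so they can be stacked. For brevity the sketch uses forward-mode dual numbers, whereas `jax.grad` uses reverse mode.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A dual number val + der*eps with eps**2 == 0; der carries the derivative."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (a + b eps)(c + d eps) = ac + (ad + bc) eps
        return Dual(self.val * other.val, self.val * other.der + self.der * other.val)

    __rmul__ = __mul__


def sin(x):
    # Chain rule: d/dx sin(u) = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def grad(f):
    """Transform f: float -> float into a function returning df/dx (forward mode)."""
    return lambda x: f(Dual(x, 1.0)).der


def vectorize(f):
    """Transform a scalar function into one that maps over a list of inputs."""
    return lambda xs: [f(x) for x in xs]


def f(x):
    return 3 * x * x + sin(x)  # f'(x) = 6x + cos(x)


df = grad(f)
print(df(0.0))  # 1.0
print(vectorize(df)([0.0, 1.0, 2.0]))  # composed transformations
```

Because each transformation returns an ordinary function, `vectorize(grad(f))` needs no special support; that composability is the property the bullet points describe.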

5. Apache MXNet – Scalable AI for the Cloud

Backed by Amazon Web Services (AWS), MXNet is designed for efficient, distributed AI training and deployment.

Why MXNet?

Multi-Language Support: Python, R, Scala, and more.

Optimized for AWS: Deep integration with Amazon SageMaker.

Lightweight & Fast: Ideal for cloud and edge AI.

Best for: Companies using AWS for scalable AI model deployment.

6. ONNX – The Universal AI Model Format

The Open Neural Network Exchange (ONNX) allows AI models to be converted between frameworks (e.g., PyTorch to TensorFlow), ensuring flexibility.

Why ONNX?

Framework Interoperability: Avoid vendor lock-in by switching between tools.

Edge AI Optimization: ONNX models can run efficiently on mobile and IoT devices through runtimes such as ONNX Runtime.

Best for: Developers who need cross-platform AI compatibility.

Which AI Framework Should You Choose?

The best framework depends on your project’s needs:

For production-scale AI → TensorFlow

For research & fast prototyping → PyTorch

For NLP → Hugging Face Transformers

For high-performance computing → JAX

For AWS cloud AI → Apache MXNet

For cross-framework compatibility → ONNX

Open-source AI tools are making advanced machine learning accessible to everyone. Whether you're a startup, enterprise, or researcher, leveraging the right framework can accelerate innovation.

#artificial intelligence#machine learning#deep learning#technology#tech#web developers#techinnovation#web#ai


Mobile App Development Solutions: The AI Revolution

In today's rapidly evolving tech landscape, integrating artificial intelligence (AI) into mobile applications has become a game-changer. For an AI-powered app developer, selecting the right framework is crucial to creating intelligent, efficient, and user-friendly applications. This article delves into some of the top AI frameworks that can elevate your AI-powered app development solutions and help you build cutting-edge apps.

TensorFlow

Developed by the Google Brain team, TensorFlow is an open-source library designed for dataflow and differentiable programming. It's widely used for machine learning applications and deep neural network research. TensorFlow supports multiple languages, including Python, C++, and Java, and is compatible with platforms like Linux, macOS, Windows, Android, and iOS (via TensorFlow Lite). Its flexibility and scalability make it a preferred choice for many developers. However, beginners might find its learning curve a bit steep, and some operations can be less intuitive compared to other frameworks.

PyTorch

Backed by Facebook's AI Research lab, PyTorch is an open-source machine learning library that offers a dynamic computational graph and intuitive interface. It's particularly favored in academic research and is gaining traction in industry applications. PyTorch supports Python and C++ and is compatible with Linux, macOS, and Windows. Its dynamic nature allows for real-time debugging, and it boasts a strong community with extensive resources. On the flip side, PyTorch's deployment options were previously limited compared to TensorFlow, though recent developments have bridged this gap.

Keras

Keras is a high-level neural networks API that runs on top of TensorFlow (since Keras 3, it can also run on JAX or PyTorch). It’s user-friendly, modular, and extensible, making it ideal for rapid prototyping. Keras supports Python and is compatible with Linux, macOS, and Windows. Its simplicity and ease of use are its main strengths, though it may not offer the same level of customization as lower-level frameworks.

Microsoft Cognitive Toolkit (CNTK)

CNTK is an open-source deep-learning framework developed by Microsoft. It allows for efficient training of deep learning models and is highly optimized for performance. CNTK supports Python, C++, and C# and is compatible with Linux and Windows. Its performance optimization is a significant advantage, but it has a smaller community compared to TensorFlow and PyTorch, which might limit available resources.

Apache MXNet

Apache MXNet is a flexible and efficient deep learning framework supported by Amazon. It supports both symbolic and imperative programming, making it versatile for various use cases. MXNet supports multiple languages, including Python, C++, Java, and Scala, and is compatible with Linux, macOS, and Windows. Its scalability and multi-language support are notable benefits, though it has a less extensive community compared to some other frameworks.
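The difference between imperative and symbolic programming can be sketched in plain Python. In the imperative style each statement computes a value immediately; in the symbolic style you first describe a computation graph and evaluate it only once data is bound, which gives a framework the chance to optimize the whole graph before running it. The `Sym` class and `var` helper below are hypothetical stand-ins for illustration, not MXNet's own NDArray or Symbol APIs.

```python
# Imperative style: each line runs immediately and produces a concrete value.
a = 2.0
b = 3.0
c = a * b + 1.0
print(c)  # 7.0


# Symbolic style: declare a graph of placeholders first, then bind data and run it.
class Sym:
    """A node in a deferred computation graph (toy stand-in for a symbolic API)."""

    def __init__(self, op, args=(), name=None):
        self.op, self.args, self.name = op, args, name

    def __add__(self, other):
        return Sym("add", (self, _lift(other)))

    def __mul__(self, other):
        return Sym("mul", (self, _lift(other)))

    def eval(self, bindings):
        if self.op == "var":
            return bindings[self.name]
        if self.op == "const":
            return self.args[0]
        left, right = (arg.eval(bindings) for arg in self.args)
        return left + right if self.op == "add" else left * right


def var(name):
    return Sym("var", name=name)


def _lift(x):
    # Wrap plain numbers as constant nodes so they can join the graph.
    return x if isinstance(x, Sym) else Sym("const", (x,))


graph = var("a") * var("b") + 1.0         # nothing is computed yet
print(graph.eval({"a": 2.0, "b": 3.0}))  # 7.0
print(graph.eval({"a": 4.0, "b": 0.5}))  # 3.0
```

The same graph object is reused with different inputs, which is the main practical appeal of the symbolic style; the imperative style is easier to debug line by line.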

Caffe

Developed by the Berkeley Vision and Learning Center, Caffe is a deep learning framework focused on expression, speed, and modularity. It's particularly well-suited for image classification and convolutional neural networks. Caffe supports C++, Python, and MATLAB and is compatible with Linux, macOS, and Windows. Its speed and efficiency are its main advantages, but it may not be as flexible for tasks beyond image processing.

Flutter

Flutter is an open-source UI framework developed by Google that enables developers to build native mobile apps for both Android and iOS. It also works well for apps that require high-performance rendering, complex custom UIs, and heavy animations. Flutter’s benefits include a single codebase for multiple platforms, a rich set of pre-designed widgets, and a hot-reload feature for rapid testing. However, its relatively young ecosystem means fewer libraries and resources compared to more established frameworks.

Softr

Softr is recognized for its ease of use and speed in building AI-powered applications. It allows developers to create applications without extensive coding, making it accessible for those looking to implement AI features quickly. While it offers rapid development capabilities, it might lack the depth of customization available in more code-intensive frameworks.

Microsoft PowerApps

Microsoft PowerApps enables the creation and editing of applications with AI integration. It's part of the Microsoft Power Platform and allows for seamless integration with other Microsoft services. This framework is beneficial for enterprises already utilizing Microsoft products, offering a cohesive environment for app development. However, it may present limitations when integrating with non-Microsoft services.

Google AppSheet

Google AppSheet is designed to turn spreadsheets into applications, providing a straightforward way to create data-driven apps. It’s particularly useful for businesses looking to mobilize their data without extensive development efforts. While it’s excellent for simple applications, it may not be suitable for more complex app development needs.

Choosing the Right Framework

Selecting the appropriate framework depends on various factors, including your project requirements, team expertise, and the specific features you intend to implement. Here are some considerations:

Project Complexity: For complex projects requiring deep customization, frameworks like TensorFlow or PyTorch might be more suitable.

Development Speed: If rapid development is a priority, tools like Flutter or Softr can expedite the process.

Platform Compatibility: Ensure the framework supports the platforms you’re targeting, whether it’s Android, iOS, or both.

Community Support: A robust community can be invaluable for troubleshooting and finding resources. Frameworks like TensorFlow and PyTorch have extensive communities.

Integration Needs: Consider how well the framework integrates with other tools and services you plan to use.

Conclusion

In conclusion, the landscape of AI-powered app development solutions offers a variety of frameworks tailored to different needs. Whether you're searching for the best free AI app builder or exploring a list of AI frameworks to refine your approach, the right choice depends on your specific development goals. By carefully evaluating your project’s requirements and the strengths of each framework, you can choose the most suitable tools to create innovative and efficient applications.

#hire developers#hire app developer#mobile app development#hire mobile app developers#ios app development#android app development#app developers#mobile app developers#ai app development


Breaking Down AI Software Development: Tools, Frameworks, and Best Practices

Artificial Intelligence (AI) is redefining how software is designed, developed, and deployed. Whether you're building intelligent chatbots, predictive analytics tools, or advanced recommendation engines, the journey of AI software development requires a deep understanding of the right tools, frameworks, and methodologies. In this blog, we’ll break down the key components of AI software development to guide you through the process of creating cutting-edge solutions.

The AI Software Development Lifecycle

The development of AI-driven software shares similarities with traditional software processes but introduces unique challenges, such as managing large datasets, training machine learning models, and deploying AI systems effectively. The lifecycle typically includes:

Problem Identification and Feasibility Study

Define the problem and determine if AI is the appropriate solution.

Conduct a feasibility analysis to assess technical and business viability.

Data Collection and Preprocessing

Gather high-quality, domain-specific data.

Clean, annotate, and preprocess data for training AI models.

Model Selection and Development

Choose suitable machine learning algorithms or pre-trained models.

Fine-tune models using frameworks like TensorFlow or PyTorch.

Integration and Deployment

Integrate AI components into the software system.

Ensure seamless deployment in production environments using tools like Docker or Kubernetes.

Monitoring and Maintenance

Continuously monitor AI performance and update models to adapt to new data.

Key Tools for AI Software Development

1. Integrated Development Environments (IDEs)

Jupyter Notebook: Ideal for prototyping and visualizing data.

PyCharm: Features robust support for Python-based AI development.

2. Data Manipulation and Analysis

Pandas and NumPy: For data manipulation and statistical analysis.

Apache Spark: Scalable framework for big data processing.

3. Machine Learning and Deep Learning Frameworks

TensorFlow: A versatile library for building and training machine learning models.

PyTorch: Known for its flexibility and dynamic computation graph.

Scikit-learn: Perfect for implementing classical machine learning algorithms.

4. Data Visualization Tools

Matplotlib and Seaborn: For creating informative charts and graphs.

Tableau and Power BI: Simplify complex data insights for stakeholders.

5. Cloud Platforms

Google Cloud AI: Offers scalable infrastructure and AI APIs.

AWS Machine Learning: Provides end-to-end AI development tools.

Microsoft Azure AI: Integrates seamlessly with enterprise environments.

6. AI-Specific Tools

Hugging Face Transformers: Pre-trained NLP models for quick deployment.

OpenAI APIs: For building conversational agents and generative AI applications.

Top Frameworks for AI Software Development

Frameworks are essential for building scalable, maintainable, and efficient AI solutions. Here are some popular ones:

1. TensorFlow

Open-source library developed by Google.

Supports deep learning, reinforcement learning, and more.

Ideal for building custom AI models.

2. PyTorch

Developed by Facebook AI Research.

Known for its simplicity and support for dynamic computation graphs.

Widely used in academic and research settings.

3. Keras

High-level API built on top of TensorFlow.

Simplifies the implementation of neural networks.

Suitable for beginners and rapid prototyping.

4. Scikit-learn

Provides simple and efficient tools for predictive data analysis.

Includes a wide range of algorithms like SVMs, decision trees, and clustering.

5. MXNet

Scalable and flexible deep learning framework.

Supports both imperative (dynamic) and symbolic programming.

Best Practices for AI Software Development

1. Understand the Problem Domain

Clearly define the problem AI is solving.

Collaborate with domain experts to gather insights and requirements.

2. Focus on Data Quality

Use diverse and unbiased datasets to train AI models.

Ensure data preprocessing includes normalization, augmentation, and outlier handling.
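As a minimal sketch of two of the preprocessing steps named above, the code below clips outliers with the interquartile-range (Tukey fence) rule and then applies z-score normalization, using only Python's `statistics` module. The fence multiplier `k=1.5` and the sample data are illustrative assumptions; augmentation is not shown, and real pipelines usually compute these statistics on the training split only and reuse them for new data.

```python
import statistics


def clip_outliers(values, k=1.5):
    """Clip values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def standardize(values):
    """Z-score normalization: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


# Hypothetical sensor readings; 95.0 looks like a data-entry error.
raw = [10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 10.0, 12.0, 13.0, 11.0, 95.0]
cleaned = clip_outliers(raw)  # here Q1 = 11, Q3 = 13, so 95.0 is clipped to 16.0
scaled = standardize(cleaned)
print(cleaned)
print([round(v, 2) for v in scaled])
```

Clipping before normalizing matters: left in place, the single extreme value would inflate the standard deviation and squash every other value toward zero.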

3. Prioritize Model Explainability

Opt for interpretable models when decisions impact critical domains.

Use tools like SHAP or LIME to explain model predictions.
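SHAP and LIME are separate libraries with their own APIs. As a simpler, dependency-free illustration of model-agnostic explanation, the sketch below swaps in a different technique, permutation importance: shuffle one feature column and measure how much the error grows. The `model` function and the data are hypothetical.

```python
import random


def model(row):
    # Hypothetical trained model: depends strongly on feature 0, not at all on feature 1.
    return 3.0 * row[0] + 0.0 * row[1]


def mse(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, seed=0):
    """Increase in error after shuffling one feature column (larger = more important)."""
    baseline = mse(rows, targets)
    column = [r[feature] for r in rows]
    random.Random(seed).shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mse(shuffled, targets) - baseline


rows = [[float(i), float(i % 3)] for i in range(10)]
targets = [model(r) for r in rows]
for feature in range(2):
    print(feature, permutation_importance(rows, targets, feature))
```

Feature 1 scores exactly zero because the model ignores it, which is the kind of signal that helps explain a model's predictions to stakeholders.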

4. Implement Robust Testing

Perform unit testing for individual AI components.

Conduct validation with unseen datasets to measure model generalization.
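Validation on unseen data can be sketched with a simple hold-out split: shuffle the data once with a fixed seed, set a fraction aside, fit only on the rest, and measure accuracy on the held-out part. The threshold "model", the 20% split, and the synthetic dataset are illustrative assumptions; in practice you would use a real model and often k-fold cross-validation.

```python
import random


def train_validation_split(data, val_fraction=0.2, seed=42):
    """Shuffle a copy of the data and hold out a fraction the model never trains on."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_val = int(len(items) * val_fraction)
    return items[n_val:], items[:n_val]


def fit_threshold(train):
    """Toy 'model': the midpoint between the class means of a 1-D feature."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, rows):
    return sum((x > threshold) == (y == 1) for x, y in rows) / len(rows)


data = [(x, int(x > 50)) for x in range(100)]  # synthetic labeled data
train, val = train_validation_split(data)
threshold = fit_threshold(train)  # fitted on training data only
print(len(train), len(val), accuracy(threshold, val))
```

Reporting accuracy on `val` rather than `train` is the point: the held-out score estimates how the model will behave on data it has never seen.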

5. Ensure Scalability

Design AI systems to handle increasing data and user demands.

Use cloud-native solutions to scale seamlessly.

6. Incorporate Continuous Learning

Update models regularly with new data to maintain relevance.

Leverage automated ML pipelines for retraining and redeployment.
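One way an automated pipeline can decide when to retrain is a drift check on incoming data. The sketch below compares the mean of recent values with the training mean in standard-error units and bumps a model version when the gap exceeds a threshold. The 3.0 threshold, the single-feature setup, and the `pipeline_step` function are hypothetical simplifications; production systems typically use dedicated drift tests and orchestration tools.

```python
import statistics


def needs_retraining(train_values, recent_values, threshold=3.0):
    """Flag drift when the recent mean moves more than `threshold` standard errors
    away from the training mean (a simple heuristic, not a formal test)."""
    mu = statistics.fmean(train_values)
    sigma = statistics.stdev(train_values)
    stderr = sigma / len(recent_values) ** 0.5
    z = abs(statistics.fmean(recent_values) - mu) / stderr
    return z > threshold


def pipeline_step(model_version, train_values, recent_values):
    """One pass of a hypothetical automated pipeline: retrain and bump the version on drift."""
    if needs_retraining(train_values, recent_values):
        # A real pipeline would retrain, validate, and redeploy the model here.
        return model_version + 1, train_values + recent_values
    return model_version, train_values


train = [float(x % 10) for x in range(100)]         # training feature values, mean 4.5
stable = [float(x % 10) for x in range(20)]         # same distribution
shifted = [float(x % 10) + 5.0 for x in range(20)]  # mean moved by 5.0
print(pipeline_step(1, train, stable)[0])   # 1
print(pipeline_step(1, train, shifted)[0])  # 2
```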

7. Address Ethical Concerns

Adhere to ethical AI principles, including fairness, accountability, and transparency.

Regularly audit AI models for bias and unintended consequences.
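A bias audit usually starts by comparing model outcomes across groups. As a minimal sketch, the code below computes the demographic parity gap, the difference in positive-prediction rates between groups. The predictions and group labels are made up for illustration, and demographic parity is only one of several fairness metrics, which can conflict with one another.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive predictions for each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical binary model outputs
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))          # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A large gap does not prove unfairness on its own, but it tells auditors where to look more closely.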

Challenges in AI Software Development

Data Availability and Privacy

Acquiring quality data while respecting privacy laws like GDPR can be challenging.

Algorithm Bias

Biased data can lead to unfair AI predictions, impacting user trust.

Integration Complexity

Incorporating AI into existing systems requires careful planning and architecture design.

High Computational Costs

Training large models demands significant computational resources.

Skill Gaps

Developing AI solutions requires expertise in machine learning, data science, and software engineering.

Future Trends in AI Software Development

Low-Code/No-Code AI Platforms

Democratizing AI development by enabling non-technical users to create AI-driven applications.

AI-Powered Software Development

Tools like GitHub Copilot will increasingly assist developers in writing code and troubleshooting issues.

Federated Learning

Enhancing data privacy by training AI models across decentralized devices.
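Federated learning can be illustrated with federated averaging (FedAvg): each device trains on its own data, and a server averages the resulting weights, weighted by each device's number of samples, so raw data never leaves the device. The one-parameter model, learning rate, and client datasets below are hypothetical, chosen only to keep the sketch small.

```python
def local_update(weights, data, lr=0.1, epochs=1):
    """Hypothetical client step: gradient descent on y = w * x with squared loss."""
    w = weights
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: average client models weighted by local dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Each device keeps its raw data; only the updated weight is sent to the server.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],              # local data consistent with y = 2x
    [(1.0, 2.0), (3.0, 6.0), (2.0, 4.0)],
]
global_w = 0.0
for _ in range(20):  # communication rounds
    updates = [local_update(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(d) for d in clients])
print(round(global_w, 3))  # 2.0
```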

Edge AI

AI models deployed on edge devices for real-time processing and low-latency applications.

AI in DevOps

Automating CI/CD pipelines with AI to accelerate development cycles.

Conclusion

AI software development is an evolving discipline, offering tools and frameworks to tackle complex problems while redefining how software is created. By embracing the right technologies, adhering to best practices, and addressing potential challenges proactively, developers can unlock AI's full potential to build intelligent, efficient, and impactful systems.

The future of software development is undeniably AI-driven—start transforming your processes today!


How to Develop an AI App at Minimal Cost?

In today's tech-driven landscape, developing an AI app can seem like a daunting and expensive task. However, with strategic planning and some insider knowledge, you can create a robust AI application without breaking the bank. Whether you're looking to partner with an AI development company, utilize app development services, or hire AI developers directly, here are some practical steps to minimize costs while maximizing output.

1. Define Your AI App’s Purpose Clearly

Before diving into the development process, it's crucial to have a clear and concise goal for your AI app. What problem is it solving? Who is it for? A well-defined purpose not only streamlines the development process but also reduces unnecessary expenditure on features that do not align with the core objectives.

2. Choose the Right Development Partner

Selecting the right AI development company is pivotal. Look for partners who not only offer competitive pricing but also have a proven track record in deploying AI applications. They should have a portfolio that demonstrates their expertise and efficiency. Don’t hesitate to ask for case studies or references. This step ensures that you're not only minimizing costs but also working with someone who can genuinely add value to your project.

3. Leverage Open-Source Tools and Platforms

One of the most cost-effective strategies is to utilize open-source AI frameworks and tools. Platforms like TensorFlow, PyTorch, and Apache MXNet offer powerful resources for AI app development at no cost. By leveraging these tools, you can significantly reduce the expenses related to the app’s foundational technology.

4. Opt for a Minimum Viable Product (MVP)

Starting with an MVP allows you to build your app with the minimum necessary features to meet the core objective. This approach not only cuts down on initial costs but also gives you a tangible product to test and refine based on user feedback. It’s a practical way to gauge the app’s viability without fully committing extensive resources.

5. Hire Competent Yet Cost-effective Talent

When looking to hire AI developers, consider a blend of expertise and cost. Hiring developers on a contract basis can be beneficial, because you pay for specialized skills only while the project needs them.

6. Focus on Scalability from the Start

Design your AI app with scalability in mind. This means choosing technologies and app development services that can grow with your user base without requiring complete redevelopment. Cloud services, for instance, can be a flexible and cost-effective solution, allowing you to pay only for the server resources you use.

7. Continuously Test and Iterate

Throughout the development process, continuously test the AI app to ensure it meets user needs and functions correctly. Regular testing helps catch and fix errors early, preventing costly fixes after the app’s launch. Tools like automated testing software can help reduce the time and money spent on this phase.

8. Engage the Community

Finally, don’t underestimate the power of community feedback. Engaging with potential users early in the process can provide invaluable insights that can help refine your app without extensive cost. Utilize social media, forums, and beta testing groups to gather feedback and iteratively improve your product.

Developing an AI app doesn’t have to be an exorbitant venture. By applying these strategic approaches, you can significantly reduce costs while creating an effective and scalable AI application. Remember, success in AI development is not just about having the best technology but also about smart planning and execution. Are you ready to turn your innovative idea into a reality, efficiently and affordably?


The Many Features of Apache MXNet GluonCV - #Ankaa

In preparation for my talk at the Philadelphia Open Source Conference, Apache Deep Learning 201, I wanted to have some good images for running various Apache MXNet Deep Learning Algorithms for Computer Vision. Using Apache open source tools – Apache NiFi 1.8 and Apache MXNet 1.3... https://ankaa-pmo.com/the-many-features-of-apache-mxnet-gluoncv/

#AI#ai tutorial#apache mxnet#apache nifi#deep learning#gluoncv#hortonworks#python#tutorial#yolo#News#IoT Development#Innovation


Microsoft and Amazon announce deep learning library Gluon

Microsoft has announced a new partnership with Amazon to create an open-source deep learning library called Gluon. The idea behind Gluon is to make artificial intelligence more accessible and valuable.

According to Microsoft, the library simplifies the process of making deep learning models and will enable developers to run multiple deep learning libraries. This announcement follows their…

#AI#Amazon#apache mxnet#artificial intelligence#AWS#deep learning#gluon#lstm#Machine Learning#Microsoft#microsoft cognitive toolkit#ONNX#open source#RNN


Machine Learning Training in Noida

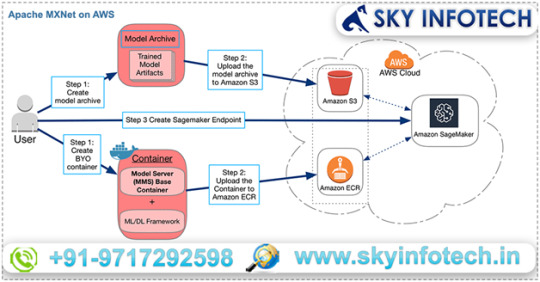

Apache MXNet on AWS: Fast Training for Machine Learning Applications

Need a fast, scalable training and inference framework? Try Apache MXNet. With its straightforward and concise API for machine learning, it is well suited to that need.

MXNet includes the Gluon interface, which lets developers of all skill levels get started easily with deep learning in the cloud, in mobile apps, and on edge devices. A few lines of Gluon code are enough to build recurrent LSTMs, linear regression models, and convolutional networks for speech recognition, recommendation, object detection, and personalization.
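For readers new to LSTMs, one step of the recurrent cell is commonly written as the update below, where $x_t$ is the input, $h_{t-1}$ and $c_{t-1}$ are the previous hidden and cell states, $\sigma$ is the logistic sigmoid, and $\odot$ is element-wise multiplication. This is the standard textbook formulation with a forget gate; the notation is assumed here rather than taken from the MXNet documentation, and a library implementation may differ in details such as gate ordering.

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate} \\
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g) && \text{candidate cell state} \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The additive update of $c_t$ is what lets gradients flow across many time steps, which is why LSTMs suit speech and other sequence tasks.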

Amazon SageMaker, a top-rated platform for building, training, and deploying machine learning models at scale, provides a fully managed way to get started with MXNet on AWS. AWS Deep Learning AMIs can also be used to build custom environments and workflows, not only with MXNet but with other frameworks as well: TensorFlow, Caffe2, Keras, Caffe, PyTorch, Chainer, and Microsoft Cognitive Toolkit.

Benefits of deep learning using MXNet

Ease-of-Use with Gluon

MXNet’s Gluon library provides a high-level interface for prototyping, training, and deploying deep learning models without sacrificing training speed. Gluon offers high-level abstractions for loss functions, predefined layers, and optimizers, and its flexible structure is intuitive to work with and easy to debug.
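To show what separate abstractions for layers, loss functions, and optimizers look like in a training loop, here is a plain-Python sketch that fits a linear regression. The `Dense`, `L2Loss`, and `SGD` classes are hypothetical toy stand-ins named after the corresponding Gluon concepts; they are not MXNet code, and Gluon computes gradients automatically rather than by hand as done here.

```python
class Dense:
    """Toy fully connected layer with one input and one output: y = w * x + b."""

    def __init__(self):
        self.params = {"w": 0.0, "b": 0.0}
        self.grads = {"w": 0.0, "b": 0.0}

    def forward(self, x):
        return self.params["w"] * x + self.params["b"]


class L2Loss:
    """Squared-error loss 0.5 * (pred - label)**2 and its gradient w.r.t. pred."""

    def __call__(self, pred, label):
        return 0.5 * (pred - label) ** 2

    def grad(self, pred, label):
        return pred - label


class SGD:
    """Plain stochastic gradient descent over a layer's parameters."""

    def __init__(self, layer, lr):
        self.layer, self.lr = layer, lr

    def step(self):
        for name in self.layer.params:
            self.layer.params[name] -= self.lr * self.layer.grads[name]


net, loss_fn = Dense(), L2Loss()
trainer = SGD(net, lr=0.05)
data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]  # samples of y = 2x + 1

for epoch in range(500):
    for x, y in data:
        pred = net.forward(x)
        d = loss_fn.grad(pred, y)
        # Backward pass for y = w * x + b: dL/dw = d * x, dL/db = d.
        net.grads["w"], net.grads["b"] = d * x, d
        trainer.step()

print(round(net.params["w"], 2), round(net.params["b"], 2))  # 2.0 1.0
```

Keeping the three roles separate means you can swap the loss or optimizer without touching the model definition, which is the design benefit the paragraph above attributes to Gluon.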

Greater Performance

Because MXNet can distribute deep learning workloads across multiple GPUs with almost linear scalability, very large projects can be completed in less time. Scaling adjusts automatically to the number of GPUs in a cluster. Developers can also save time and increase productivity by running serverless and batch-based inference.
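Multi-GPU scaling of this kind usually relies on data parallelism: each GPU computes gradients on its own slice of a batch, and the gradients are averaged before every update. The sketch below simulates this in plain Python to show that, with equal-sized shards, the averaged gradient matches the full-batch gradient. The model, data, and helper names are hypothetical, and the sketch says nothing about actual speed.

```python
def shard(batch, num_workers):
    """Split a batch into equal contiguous shards, one per (simulated) GPU.
    Assumes len(batch) is divisible by num_workers; any remainder is dropped."""
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def mean_gradient(w, samples):
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def data_parallel_gradient(w, batch, num_workers):
    # Each worker computes a gradient on its own shard (in parallel on real hardware);
    # an all-reduce then averages the results so every worker applies the same update.
    grads = [mean_gradient(w, s) for s in shard(batch, num_workers)]
    return sum(grads) / num_workers


batch = [(float(x), 3.0 * x) for x in range(8)]
full = mean_gradient(0.5, batch)
for workers in (1, 2, 4):
    print(workers, data_parallel_gradient(0.5, batch, workers), full)
```

Since every worker ends up with the same update, adding GPUs mainly shrinks the per-GPU work per step; communication overhead for the averaging is why scaling is "almost" rather than perfectly linear.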

For IoT & the Edge

Besides handling multi-GPU training and deployment of complex models in the cloud, MXNet can produce lightweight neural network model representations. These lightweight models can run on lower-powered edge devices such as a Raspberry Pi, laptop, or smartphone and process data remotely in real time.
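One common technique for producing lightweight models for edge devices is quantization, which stores weights as 8-bit integers plus a scale factor. The text above does not say which techniques MXNet applies, so treat this plain-Python sketch of symmetric int8 quantization, with hypothetical weights, as an illustration of the general idea only.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of float weights to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # each value fits in one signed byte instead of a 4-byte float32
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

The trade-off is a bounded rounding error (at most half the scale per weight) in exchange for roughly a 4x smaller model, which is what makes devices like a Raspberry Pi practical targets.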

Flexibility & Choice

MXNet supports a wide array of programming languages, including C++, R, Clojure, MATLAB, Julia, JavaScript, Python, Scala, and Perl, so you can get started in a language you already know. On the backend, however, all of these language bindings run on the same compiled C++ core, which delivers high performance regardless of the language used to build the models.


Top 12 Artificial Intelligence Tools & Frameworks

Here are the top 12 artificial intelligence tools and frameworks in 2023: Scikit-learn, TensorFlow, PyTorch, CNTK, Caffe, Apache MXNet, Keras, OpenNN, AutoML, H2O, Google ML Kit, and IBM Watson. These tools and frameworks are used for a variety of AI tasks, including machine learning, natural language processing, computer vision, and robotics. They are designed to make it easier for developers to…


Watch "CUDA Graphs support in Apache MXNet 1.8" on YouTube

NFT service mesh for tensorflow ai and pytorch ai

Update NFT and Nvidia platform management system AI for my intellectual property. Update all assets to Imperium property management system AI


Apache MXNet | A flexible and efficient library for deep learning.


AWS and machine learning

AWS (Amazon Web Services) is a collection of remote computing services (also called web services) that make up a cloud computing platform offered by Amazon.com. At the time of writing, these services operated from 12 geographic regions across the world.

AWS provides a variety of services for machine learning, including:

Amazon SageMaker is a fully-managed platform for building, training, and deploying machine learning models.

Amazon Machine Learning is a service that makes it easy for developers of all skill levels to use machine learning.

AWS Deep Learning AMIs are pre-built Amazon Machine Images (AMIs) that make it easy to get started with deep learning on Amazon EC2.

AWS Deep Learning Containers are Docker images pre-installed with deep learning frameworks that make it easy to run distributed training on Amazon ECS.

Additionally, AWS also provides services for data storage, data processing, and data analysis which are essential for machine learning workloads. These services include Amazon S3, Amazon Kinesis, Amazon Redshift, and Amazon QuickSight.

In summary, AWS provides a comprehensive set of services that allow developers and data scientists to build, train, and deploy machine learning models easily and at scale.

AWS also provides several other services that can be used in conjunction with machine learning. These include:

Amazon Comprehend is a natural language processing service that uses machine learning to extract insights from text.

Amazon Transcribe is a service that uses machine learning to transcribe speech to text.

Amazon Translate is a service that uses machine learning to translate text from one language to another.

Amazon Rekognition is a service that uses machine learning to analyze images and videos, detect objects, scenes, and activities, and recognize faces, text, and other content.

AWS also provides a number of tools and frameworks that can be used to build and deploy machine learning models, such as:

TensorFlow is an open-source machine learning framework that is widely used for building and deploying neural networks.

Apache MXNet is a deep learning framework that is fully supported on AWS.

PyTorch is an open-source machine learning library for Python that is also fully supported on AWS.

AWS SDKs are available for several programming languages, including Python, Java, and .NET, and make it easy to interact with AWS services from your application.

AWS also offers a number of programs and resources to help developers and data scientists learn about machine learning, including the Machine Learning University, which provides a variety of courses, labs, and tutorials on machine learning topics, and the AWS Machine Learning Blog, which features articles and case studies on the latest developments in machine learning and how to use AWS services for machine learning workloads.

In summary, AWS provides a wide range of services, tools, and resources for building and deploying machine learning models, making it a powerful platform for machine learning workloads at any scale.


Use deep learning frameworks natively in Amazon SageMaker Processing

Until recently, customers who wanted to use a deep learning (DL) framework with Amazon SageMaker Processing faced increased complexity compared to those using scikit-learn or Apache Spark. This post shows you how SageMaker Processing has simplified running machine learning (ML) preprocessing and postprocessing tasks with popular frameworks such as PyTorch, TensorFlow, Hugging Face, MXNet, and…


Who will speak at AI Journey - meet the speakers of the big conference on artificial intelligence

The AI Journey conference starts tomorrow. Participants can choose from 20+ thematic streams of talks and discussions on a wide range of topics. More than 250 world-class experts will speak at the conference, and Joseph Marc Blumenthal spoke with some of them beforehand. Here's what they said.

Jason Dai, Distinguished Research Engineer and Chief Architect for AI and Big Data at Intel; Founder and Member of the Steering Committee of the Apache Spark Project; Mentor of the MXNet Project

In my talk, I will discuss BigDL, an open-source Intel tool that provides an end-to-end AI pipeline for scaling distributed AI on big data. It lets data scientists and machine learning engineers write familiar Python notebooks on their PCs and then scale them to run on large clusters, processing large amounts of data in a distributed manner. The tool will be useful for professionals who want to apply AI to large-scale distributed datasets in production. BigDL is used in practice by many large companies, such as Mastercard, Burger King, Inspur, JD.com, and others.

At the conference, I would like to hear talks on how data analytics, machine learning, and deep learning are used in practice. I believe this topic matters to the big data and AI communities, because AI technologies are now used not only in experimental applications but also in real products.

Jürgen Schmidhuber, Chief Scientific Adviser, Institute for Artificial Intelligence (AIRI); Director of the Dalle Molle Institute for Artificial Intelligence Research (IDSIA)

The topic of my talk is the most popular artificial neural networks. Perhaps naively, I propose to measure the popularity of a neural network by the number of scientific citations it has received. Of course, this often depends on the popularity of the problem the network solves.
I think my talk will interest those who already know a little about machine learning and artificial neural networks, but it should also be understandable to those who do not consider themselves experts in these topics. More specifically, I will highlight the five most cited neural networks, which are used in a wide variety of applications. The first is Long Short-Term Memory (LSTM), the most cited neural network of the 20th century. The second is ResNet, the most cited neural network of the 21st century, which is in fact an open-gated version of our Highway Net, the first truly deep feedforward neural network with hundreds of layers. The third are AlexNet and VGG Net, the second and third most cited neural networks of the 21st century. Both build on our earlier DanNet, which in 2011 became the first deep convolutional network to win a computer vision competition and performed better than humans on a recognition task. The fourth is Generative Adversarial Networks (GANs), which are now used to create realistic images and works of visual art. GANs are a variant of our Adversarial Artificial Curiosity, first published in 1990, in which two neural networks compete against each other in a minimax game. Finally, the fifth is the family of Transformers, which are now widely used for natural language processing (NLP). Linear Transformers are formally equivalent to Fast Weight Programmers, which we developed in 1991. In the 2010s, a community of distinguished machine learning researchers, engineers, and practitioners pushed these developments forward at a furious pace. The result is remarkable technology that affects the lives of billions of people around the world. I hope that many people, especially young people, will want to learn more about modern AI and deep artificial neural networks and will watch the talks.
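The claim that linear Transformers are formally equivalent to Fast Weight Programmers can be checked numerically. The sketch below is an illustration under stated assumptions, not code from the talk: it assumes causal, unnormalized linear attention and an ELU(x)+1 feature map `phi`, and the function names `linear_attention` and `fast_weight_programmer` are hypothetical. It shows that summing attention-weighted values, y_i = Σ_{j≤i} v_j ⟨φ(k_j), φ(q_i)⟩, gives the same outputs as a fast-weight matrix updated by outer products W_i = W_{i-1} + v_i φ(k_i)ᵀ and then queried with y_i = W_i φ(q_i).

```python
import math
import random


def phi(x):
    """ELU(x) + 1 feature map (an assumed choice; any feature map gives the same equivalence)."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def linear_attention(queries, keys, values):
    """Causal, unnormalized linear attention: y_i = sum_{j<=i} v_j * <phi(k_j), phi(q_i)>."""
    outputs = []
    for i, q in enumerate(queries):
        fq = phi(q)
        y = [0.0] * len(values[0])
        for j in range(i + 1):
            weight = dot(phi(keys[j]), fq)
            y = [yc + weight * vc for yc, vc in zip(y, values[j])]
        outputs.append(y)
    return outputs


def fast_weight_programmer(queries, keys, values):
    """Fast weights: W_i = W_{i-1} + v_i phi(k_i)^T, then y_i = W_i phi(q_i)."""
    d_v, d_k = len(values[0]), len(phi(keys[0]))
    W = [[0.0] * d_k for _ in range(d_v)]  # fast-weight matrix, starts at zero
    outputs = []
    for q, k, v in zip(queries, keys, values):
        fk = phi(k)
        # "Program" the fast weights with an outer-product update v phi(k)^T.
        for r in range(d_v):
            for c in range(d_k):
                W[r][c] += v[r] * fk[c]
        fq = phi(q)
        # Query the current fast weights with phi(q).
        outputs.append([dot(row, fq) for row in W])
    return outputs


def random_vectors(n, d):
    return [[random.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]


if __name__ == "__main__":
    random.seed(0)
    Q, K, V = random_vectors(5, 3), random_vectors(5, 3), random_vectors(5, 2)
    a = linear_attention(Q, K, V)
    b = fast_weight_programmer(Q, K, V)
    # The largest difference should be at the level of floating-point rounding.
    print(max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)))
```

The two functions compute the same quantity in different orders. Linear attention revisits all past keys and values at every step, while the fast-weight version folds them into a fixed-size matrix, which is why linear Transformers can also be run as recurrent networks.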
Catherine Mohr, President of the Intuitive Foundation

At AI Journey, I will talk about the use of AI in surgery. As in other areas of medicine, AI assists the surgeon rather than replacing the doctor, and I look forward to sharing the nuances of applying the technology. Today AI can be useful in several areas of surgery. Machine learning models that analyze preoperative and intraoperative images can improve the surgeon's spatial orientation during an operation; in other words, the doctor better understands what is happening with the patient. Algorithms are also already helping surgeons detect and interpret pathologies. In addition, AI "trainers" can recognize the current stage of an operation and generate hints for the surgeon about the next stages. For nurses and technicians involved in perioperative patient care, algorithms help prepare the right equipment and materials for the next stage of the procedure. I know that some doctors are still skeptical about using AI in medicine. I don't think there is any need to worry: practice shows that algorithms can improve patient outcomes, shorten training time, and even extend surgeons' careers. These technologies are nothing to be afraid of. At the conference, I would like to hear a variety of viewpoints and learn about new opportunities for using AI in medicine, including ones that have not even occurred to me yet.
