# Best Source Code Management Software


Text

The darkly ironic thing is that if you are worried about the recent news that someone scraped Ao3 for AI research, then you're probably vastly underestimating the scale of the problem. It's way worse than you think.

For the record, a couple of days ago, someone posted a "dataset for AI research" on reddit, which was simply all publicly accessible works on Ao3, downloaded and zipped. This is good, in a way, because that ZIP file is blatantly illegal, and the OTW managed to get it taken down (though it's since been reuploaded elsewhere).

However, the big AI companies, like OpenAI, xAI, Meta and so on, as well as many you've never heard of, probably had no interest in this ZIP file to begin with. That was only ever of interest to small-scale researchers. These companies probably already have all that data, obtained by scraping it themselves.

A lot of internet traffic at the moment is just AI companies sucking up whatever they can get. Wikipedia reports that about a third of all visitors are probably AI bots (and they use enormous amounts of bandwidth). A number of sites hosting software source code estimate that more than 90% of all traffic to their sites may be AI bots. It's all a bit fuzzy since most AI crawlers don't identify themselves as such, and pretend to be normal users.
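For the crawlers that do identify themselves, spotting them usually comes down to a substring check on the User-Agent header. A minimal sketch in Python, assuming a short, hand-picked token list (GPTBot, CCBot and ClaudeBot are names some crawlers really announce, but the list here is illustrative and nowhere near complete) and made-up example requests. It also shows the limitation above: a bot sending an ordinary browser User-Agent is simply counted as a person, so any figure computed this way is a lower bound.

```python
# Illustrative, incomplete list of tokens that some AI crawlers put in their
# User-Agent header when they choose to identify themselves.
KNOWN_AI_CRAWLER_TOKENS = ("GPTBot", "CCBot", "ClaudeBot")


def is_declared_ai_crawler(user_agent: str) -> bool:
    """True only if the User-Agent string openly names a known AI crawler."""
    return any(token in user_agent for token in KNOWN_AI_CRAWLER_TOKENS)


def declared_ai_share(user_agents: list[str]) -> float:
    """Fraction of requests whose User-Agent self-identifies as an AI crawler.

    This is a lower bound: crawlers that pretend to be normal browsers look
    exactly like human visitors here.
    """
    if not user_agents:
        return 0.0
    flagged = sum(1 for ua in user_agents if is_declared_ai_crawler(ua))
    return flagged / len(user_agents)


# Made-up example requests.
requests = [
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0)",
    "CCBot/2.0 (https://commoncrawl.org/faq/)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0",  # a human, or a disguised bot?
    "Mozilla/5.0 (X11; Linux x86_64) Chrome/126.0",
]
print(declared_ai_share(requests))  # 0.5
```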

The OTW hasn't released any similar data as far as I am aware, but my guess would be that Ao3 is being continuously crawled by all sorts of AI companies at every moment of the day. If you have a fanfic on Ao3, and it isn't locked to logged-in users only, then it's already going to be part of several AI training data sets. Only, unlike with this reddit guy, we'll never know for sure, because these AI training data sets won't be released to the public. Only the resulting AI models, or the chat bots that use these models, will be, and whether that's illegal is… I dunno. Nobody knows. The US Supreme Court will probably answer that in 5-10 years' time. Fun.

The solution I've seen from a lot of people is to lock their fics. That will, at best, only work for new fics and updates; it's not going to remove anything that e.g. OpenAI already knows.

And, of course, it assumes that these bots can't log in. Can they? I have no way of knowing. But if I didn't have a soul and ran an AI company, I might consider ordering a few interns to make a couple dozen to hundreds of Ao3 accounts. It costs nothing but time due to the queue system, and probably gets me another couple of million words.

In other words: I cannot guarantee that locked works are safe. Maybe, maybe not.

Also, I don't think there's a sure way to know whether any given work is included in the dataset or not. I suppose if ChatGPT can give you an accurate summary when you ask, then it's very likely to be in, but that's by no means a guarantee either way.

What to do? Honestly, I don't know. We can hope for AI companies to go bankrupt and fail, and I'm sure a lot of them will over the next five years, but probably not all of them. The answer will likely have to be political and on an international stage, which is not an easy terrain to find solutions for, well, anything.

Ultimately it's a personal decision. For myself, I think the joy I get from writing and having others read what I've written outweighs the risks, so my stories remain unlocked (and my blog posts as well, this very text will make its way into various data sets before too long, count on it). I can totally understand if others make other choices, though. It's all a mess.

Sorry to start, middle and end this on a downer, but I think it's important to be realistic here. We can't demand useful solutions for this from our politicians if we don't understand the problems.

119 notes


Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) has plans to stage a “hackathon” next week in Washington, DC. The goal is to create a single “mega API”—a bridge that lets software systems talk to one another—for accessing IRS data, sources tell WIRED. The agency is expected to partner with a third-party vendor to manage certain aspects of the data project. Palantir, a software company cofounded by billionaire and Musk associate Peter Thiel, has been brought up consistently by DOGE representatives as a possible candidate, sources tell WIRED.

Two top DOGE operatives at the IRS, Sam Corcos and Gavin Kliger, are helping to orchestrate the hackathon, sources tell WIRED. Corcos is a health-tech CEO with ties to Musk’s SpaceX. Kliger attended UC Berkeley until 2020 and worked at the AI company Databricks before joining DOGE as a special adviser to the director at the Office of Personnel Management (OPM). Corcos is also a special adviser to Treasury Secretary Scott Bessent.

Since joining Musk’s DOGE, Corcos has told IRS workers that he wants to pause all engineering work and cancel current attempts to modernize the agency’s systems, according to sources with direct knowledge who spoke with WIRED. He has also spoken about some aspects of these cuts publicly: "We've so far stopped work and cut about $1.5 billion from the modernization budget. Mostly projects that were going to continue to put us down the death spiral of complexity in our code base," Corcos told Laura Ingraham on Fox News in March.

Corcos has discussed plans for DOGE to build “one new API to rule them all,” making IRS data more easily accessible for cloud platforms, sources say. APIs, or application programming interfaces, enable different applications to exchange data, and could be used to move IRS data into the cloud. The cloud platform could become the “read center of all IRS systems,” a source with direct knowledge tells WIRED, meaning anyone with access could view and possibly manipulate all IRS data in one place.

Over the last few weeks, DOGE has requested the names of the IRS’s best engineers from agency staffers. Next week, DOGE and IRS leadership are expected to host dozens of engineers in DC so they can begin “ripping up the old systems” and building the API, an IRS engineering source tells WIRED. The goal is to have this task completed within 30 days. Sources say there have been multiple discussions about involving third-party cloud and software providers like Palantir in the implementation.

Corcos and DOGE indicated to IRS employees that they intended to first apply the API to the agency’s mainframes and then move on to every other internal system. Initiating a plan like this would likely touch all data within the IRS, including taxpayer names, addresses, Social Security numbers, as well as tax return and employment data. Currently, the IRS runs on dozens of disparate systems housed in on-premises data centers and in the cloud that are purposefully compartmentalized. Accessing these systems requires special permissions and workers are typically only granted access on a need-to-know basis.

A “mega API” could potentially allow someone with access to export all IRS data to the systems of their choosing, including private entities. If that person also had access to other interoperable datasets at separate government agencies, they could compare them against IRS data for their own purposes.
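The difference between the compartmentalized setup and a single "mega API" can be sketched in a few lines. This is a toy model in Python with entirely hypothetical system names, roles and records (nothing here describes the IRS's actual systems): in the first function a request is checked against the one system it touches, while the second exposes every system behind one check, so any authorized caller gets everything.

```python
# Hypothetical systems, roles and records, for illustration only.
SYSTEMS = {
    "returns": {"taxpayer-001": "filed return"},
    "employment": {"taxpayer-001": "employer records"},
    "identity": {"taxpayer-001": "name, address, SSN"},
}

# Need-to-know: each role is granted specific systems and nothing else.
GRANTS = {
    "examiner": {"returns"},
    "payroll_clerk": {"employment"},
}


def read_compartmentalized(role: str, system: str, taxpayer: str) -> str:
    """Read one record, but only from a system this role was granted."""
    if system not in GRANTS.get(role, set()):
        raise PermissionError(f"{role} has no need-to-know for {system}")
    return SYSTEMS[system][taxpayer]


def read_consolidated(role: str, taxpayer: str) -> dict[str, str]:
    """One endpoint over every system: a single check unlocks all of it."""
    if role not in GRANTS:
        raise PermissionError(f"{role} is not authorized")
    return {name: records[taxpayer] for name, records in SYSTEMS.items()}


print(read_compartmentalized("examiner", "returns", "taxpayer-001"))  # filed return
print(sorted(read_consolidated("examiner", "taxpayer-001")))  # ['employment', 'identity', 'returns']
```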

“Schematizing this data and understanding it would take years,” an IRS source tells WIRED. “Just even thinking through the data would take a long time, because these people have no experience, not only in government, but in the IRS or with taxes or anything else.” (“There is a lot of stuff that I don't know that I am learning now,” Corcos tells Ingraham in the Fox interview. “I know a lot about software systems, that's why I was brought in.")

These systems have all gone through a tedious approval process to ensure the security of taxpayer data. Whatever may replace them would likely still need to be properly vetted, sources tell WIRED.

"It's basically an open door controlled by Musk for all American's most sensitive information with none of the rules that normally secure that data," an IRS worker alleges to WIRED.

The data consolidation effort aligns with President Donald Trump’s executive order from March 20, which directed agencies to eliminate information silos. While the order was purportedly aimed at fighting fraud and waste, it also could threaten privacy by consolidating personal data housed on different systems into a central repository, WIRED previously reported.

In a statement provided to WIRED on Saturday, a Treasury spokesperson said the department “is pleased to have gathered a team of long-time IRS engineers who have been identified as the most talented technical personnel. Through this coalition, they will streamline IRS systems to create the most efficient service for the American taxpayer. This week the team will be participating in the IRS Roadmapping Kickoff, a seminar of various strategy sessions, as they work diligently to create efficient systems. This new leadership and direction will maximize their capabilities and serve as the tech-enabled force multiplier that the IRS has needed for decades.”

Palantir, Sam Corcos, and Gavin Kliger did not immediately respond to requests for comment.

In February, a memo was drafted to provide Kliger with access to personal taxpayer data at the IRS, The Washington Post reported. Kliger was ultimately provided read-only access to anonymized tax data, similar to what academics use for research. Weeks later, Corcos arrived, demanding detailed taxpayer and vendor information as a means of combating fraud, according to the Post.

“The IRS has some pretty legacy infrastructure. It's actually very similar to what banks have been using. It's old mainframes running COBOL and Assembly and the challenge has been, how do we migrate that to a modern system?” Corcos told Ingraham in the same Fox News interview. Corcos said he plans to continue his work at IRS for a total of six months.

DOGE has already slashed and burned modernization projects at other agencies, replacing them with smaller teams and tighter timelines. At the Social Security Administration, DOGE representatives are planning to move all of the agency’s data off of legacy programming languages like COBOL and into something like Java, WIRED reported last week.

Last Friday, DOGE suddenly placed around 50 IRS technologists on administrative leave. On Thursday, even more technologists were cut, including the director of cybersecurity architecture and implementation, deputy chief information security officer, and acting director of security risk management. IRS’s chief technology officer, Kaschit Pandya, is one of the few technology officials left at the agency, sources say.

DOGE originally expected the API project to take a year, multiple IRS sources say, but that timeline has shortened dramatically down to a few weeks. “That is not only not technically possible, that's also not a reasonable idea, that will cripple the IRS,” an IRS employee source tells WIRED. “It will also potentially endanger filing season next year, because obviously all these other systems they’re pulling people away from are important.”

(Corcos also made it clear to IRS employees that he wanted to kill the agency’s Direct File program, the IRS’s recently released free tax-filing service.)

DOGE’s focus on obtaining and moving sensitive IRS data to a central viewing platform has spooked privacy and civil liberties experts.

“It’s hard to imagine more sensitive data than the financial information the IRS holds,” Evan Greer, director of Fight for the Future, a digital civil rights organization, tells WIRED.

Palantir received the highest FedRAMP approval this past December for its entire product suite, including Palantir Federal Cloud Service (PFCS) which provides a cloud environment for federal agencies to implement the company’s software platforms, like Gotham and Foundry. FedRAMP stands for Federal Risk and Authorization Management Program and assesses cloud products for security risks before governmental use.

“We love disruption and whatever is good for America will be good for Americans and very good for Palantir,” Palantir CEO Alex Karp said in a February earnings call. “Disruption at the end of the day exposes things that aren't working. There will be ups and downs. This is a revolution, some people are going to get their heads cut off.”

15 notes


Text

If you know anyone who writes music, today has probably been a very crappy day for them.

Finale, one of the most dominant programs for music notation for the past 35 years, is coming to an end. They’re no longer updating it or allowing people to purchase it, and it won’t be possible to authorize on new devices or if you upgrade your OS.

I’ve personally been using Finale to write music for about 20 years (since middle school!). It’s not something that I depend on for money, and my work should be compatible with other programs, so I’ll be fine, but this is very, very bad news for lots of people who depend on this software for their livelihood.

(cut added so info added to reblogs doesn't get buried!)

The shittiest thing is that this was preventable. From a comment on Finale’s post:

As a former Tech Lead on Finale (2019-2021) I can tell you this future was avoidable. Those millions of lines of code were old and crufty, and myself and others recognized something had to be done. So we created a plan to modernize the code base, focusing on making it easier to deliver the next few rounds of features. I encouraged product leadership to put together a feature roadmap so our team could identify where the modernization effort should be focused.

We had a high level architecture roadmap, and a low level strategy to modernize basic technologies to facilitate more precise unit testing. The plan was to create smart interfaces in the code to allow swapping out old UI architecture for a more modern, reliable, and better maintained toolset that would grow with us rather than against us.

But in the end it became clear support wasn’t coming from upper management for this effort.

I’m sad to see Finale end this way.

Finale could also have kept letting people who own the software move it to their new devices in the future, but Capitalism. It's a pointless corporate IP decision that only hurts users.

There are three main options for those of us who are having to switch: Dorico, MuseScore, or Sibelius.

Sibelius has been Finale’s main competitor for as long as I can remember. It currently runs on a subscription model (ew). The programs are about equal in terms of their capabilities, though I’ve heard Finale has more options for experimental notation. (I’ve used both; Finale worked better for my workflow, but that’s probably just because I grew up using it.)

Dorico is the hip new kid and I’d personally been considering switching for quite a while, but it’s ungodly expensive (about twice what Finale cost at full price). Thankfully, they are allowing current Finale users to purchase at a price comparable (well, still 50% higher) to what Finale used to cost with the educator discount. It apparently has a very steep learning curve at first, though it is probably the best option for experimental notation.

MuseScore is open source, which is awesome! But it also has the most limitations for people who write using experimental notation.

I haven’t used MuseScore or Dorico and will probably end up switching to one of those, but it’s also not an urgent matter for me. Keep your musician friends in your thoughts; it’s going to be a rough road ahead if they used Finale.

#finale#sibelius#musescore#dorico#music notation#music notation software#the end of finale#fuck capitalism#musician#composer#songwriter

25 notes


Text

Hire Dedicated Developers in India Smarter with AI

Hire dedicated developers in India smarter and faster with AI-powered solutions. As businesses worldwide turn to software development outsourcing, India remains a top destination for IT talent acquisition. However, finding the right developers can be challenging due to skill evaluation, remote team management, and hiring efficiency concerns. Fortunately, AI recruitment tools are revolutionizing the hiring process, making it seamless and effective.

In this blog post, I will explore how AI-powered developer hiring is transforming the recruitment landscape and how businesses can leverage these tools to build top-notch offshore development teams.

Why Hire Dedicated Developers in India?

1) Cost-Effective Without Compromising Quality:

Hiring dedicated developers in India can reduce costs by up to 60% compared to hiring in the U.S., Europe, or Australia. This makes it a cost-effective solution for businesses seeking high-quality IT staffing solutions in India.

2) Access to a Vast Talent Pool:

India has a massive talent pool with millions of software engineers proficient in AI, blockchain, cloud computing, and other emerging technologies. This ensures companies can find dedicated software developers in India for any project requirement.

3) Time-Zone Advantage for 24/7 Productivity:

India's time-zone offset from the U.S. and Europe means Indian teams can keep working while their clients' local teams are offline, allowing near-continuous development cycles. This enhances productivity and can shorten project timelines.

4) Expertise in Emerging Technologies:

Indian developers are highly skilled in cutting-edge fields like AI, IoT, and cloud computing, making them invaluable for innovative projects.

Challenges in Hiring Dedicated Developers in India

1) Finding the Right Talent Efficiently:

Sorting through thousands of applications manually is time-consuming. AI-powered recruitment tools streamline the process by filtering candidates based on skill match and experience.

2) Evaluating Technical and Soft Skills:

Traditional hiring struggles to assess real-world coding abilities and soft skills like teamwork and communication. AI-driven hiring processes include coding assessments and behavioral analysis for better decision-making.

3) Overcoming Language and Cultural Barriers:

AI in HR and recruitment helps evaluate language proficiency and cultural adaptability, ensuring smooth collaboration within offshore development teams.

4) Managing Remote Teams Effectively:

AI-driven remote work management tools help businesses track performance, manage tasks, and ensure accountability.

How AI is Transforming Developer Hiring

1. AI-Powered Candidate Screening:

AI recruitment tools use resume parsing, skill-matching algorithms, and machine learning to shortlist the best candidates quickly.

2. AI-Driven Coding Assessments:

Developer assessment tools conduct real-time coding challenges to evaluate technical expertise, code efficiency, and problem-solving skills.

3. AI Chatbots for Initial Interviews:

AI chatbots handle initial screenings, assessing technical knowledge, communication skills, and cultural fit before human intervention.

4. Predictive Analytics for Hiring Success:

AI analyzes past hiring data and candidate work history to predict long-term success, improving recruitment accuracy.

5. AI in Background Verification:

AI-powered background checks ensure candidate authenticity, education verification, and fraud detection, reducing hiring risks.
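The "skill-matching algorithms" in point 1 can be as simple as an overlap score between the skills a role asks for and the skills found in a résumé. A minimal sketch in Python, assuming the résumé-parsing step has already produced sets of lowercase skill names; the function names, threshold and candidate data are hypothetical, and commercial tools use their own, unpublished models:

```python
def skill_match(required: set[str], candidate: set[str]) -> float:
    """Share of the required skills the candidate has, from 0.0 to 1.0."""
    if not required:
        return 1.0
    return len(required & candidate) / len(required)


def shortlist(required: set[str], candidates: dict[str, set[str]], threshold: float) -> list[str]:
    """Candidates scoring at or above the threshold, best match first."""
    scored = [(skill_match(required, skills), name) for name, skills in candidates.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # highest score, then name
    return [name for score, name in scored if score >= threshold]


role = {"python", "aws", "docker", "sql"}
pool = {
    "dev_a": {"python", "sql", "docker", "react"},  # 3 of 4 -> 0.75
    "dev_b": {"java", "aws"},                       # 1 of 4 -> 0.25
    "dev_c": {"python", "aws", "docker", "sql", "go"},  # 4 of 4 -> 1.0
}
print(shortlist(role, pool, threshold=0.5))  # ['dev_c', 'dev_a']
```

A score like this only ranks keyword overlap; it says nothing about how well someone actually uses those skills, which is why the steps below still include assessments and interviews.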

Steps to Hire Dedicated Developers in India Smarter with AI

1. Define Job Roles and Key Skill Requirements:

Outline essential technical skills, experience levels, and project expectations to streamline recruitment.

2. Use AI-Based Hiring Platforms:

Leverage AI hiring platforms like LinkedIn Talent Insights and HireVue to source top developers.

3. Implement AI-Driven Skill Assessments:

AI-powered recruitment processes use coding tests and behavioral evaluations to assess real-world problem-solving abilities.

4. Conduct AI-Powered Video Interviews:

AI-driven interview tools analyze body language, sentiment, and communication skills for improved hiring accuracy.

5. Optimize Team Collaboration with AI Tools:

Remote work management tools like Trello, Asana, and Jira enhance productivity and ensure smooth collaboration.

Top AI-Powered Hiring Tools for Businesses

LinkedIn Talent Insights — AI-driven talent analytics

HackerRank — AI-powered coding assessments

HireVue — AI-driven video interview analysis

Pymetrics — AI-based behavioral and cognitive assessments

X0PA AI — AI-driven talent acquisition platform

Best Practices for Managing AI-Hired Developers in India

1. Establish Clear Communication Channels:

Use collaboration tools like Slack, Microsoft Teams, and Zoom for seamless communication.

2. Leverage AI-Driven Productivity Tracking:

Monitor performance using AI-powered tracking tools like Time Doctor and Hubstaff to optimize workflows.

3. Encourage Continuous Learning and Upskilling:

Provide access to AI-driven learning platforms like Coursera and Udemy to keep developers updated on industry trends.

4. Foster Cultural Alignment and Team Bonding:

Organize virtual team-building activities to enhance collaboration and engagement.

Future of AI in Developer Hiring

1) AI-Driven Automation for Faster Hiring:

AI will continue automating tedious recruitment tasks, improving efficiency and candidate experience.

2) AI and Blockchain for Transparent Recruitment:

Integrating AI with blockchain will enhance candidate verification and data security for trustworthy hiring processes.

3) AI’s Role in Enhancing Remote Work Efficiency:

AI-powered analytics and automation will further improve productivity within offshore development teams.

Conclusion:

AI revolutionizes the hiring of dedicated developers in India by automating candidate screening, coding assessments, and interview analysis. Businesses can leverage AI-powered tools to efficiently find, evaluate, and manage top-tier offshore developers, ensuring cost-effective and high-quality software development outsourcing.

Ready to hire dedicated developers in India using AI? iQlance offers cutting-edge AI-powered hiring solutions to help you find the best talent quickly and efficiently. Get in touch today!

#AI#iqlance#hire#india#hirededicatreddevelopersinIndiawithAI#hirededicateddevelopersinindia#aipoweredhiringinindia#bestaihiringtoolsfordevelopers#offshoresoftwaredevelopmentindia#remotedeveloperhiringwithai#costeffectivedeveloperhiringindia#aidrivenrecruitmentforitcompanies#dedicatedsoftwaredevelopersindia#smarthiringwithaiinindia#aipowereddeveloperscreening

5 notes


Text

Hell is terms like ASIC, FPGA, and PPU

I haven't been doing any public updates on this for a bit, but I am still working on this bizarre rabbit hole quest of designing my own (probably) 16-bit game console. The controller is maybe done now, on a design level. Like I have parts for everything sourced and a layout for the internal PCB. I don't have a fully tested working prototype yet because I am in the middle of a huge financial crisis and don't have the cash lying around to send out to have boards printed and start rapidly iterating design on the 3D printed bits (housing the scroll wheel is going to be a little tricky). I should really spend my creative energy focusing on software development for a nice little demo ROM (or like, short term projects to earn money I desperately need) but my brain's kinda stuck in circuitry gear so I'm thinking more about what's going into the actual console itself. This may get techie.

So... in the broadest sense, and I think I've mentioned this before, I want to make this a 16-bit system (which is a term with a pretty murky definition), maybe 32-bit? And since I'm going to all this trouble I want to give my project here a little something extra the consoles from that era didn't have. And at the same time, I'd like to be able to act as a bridge for the sort of weirdos who are currently actively making new games for those systems to start working on this, on a level of "if you would do this on this console with this code, here's how you would do it on mine." This makes for a hell of a lot of research on my end, but trust me, it gets worse!

So let's talk about the main strengths of the 2D game consoles everyone knows and loves. Oh and just now while looking for some visual aids maybe I stumbled across this site, which is actually great as a sort of mid-level overview of all this stuff. Short version though-

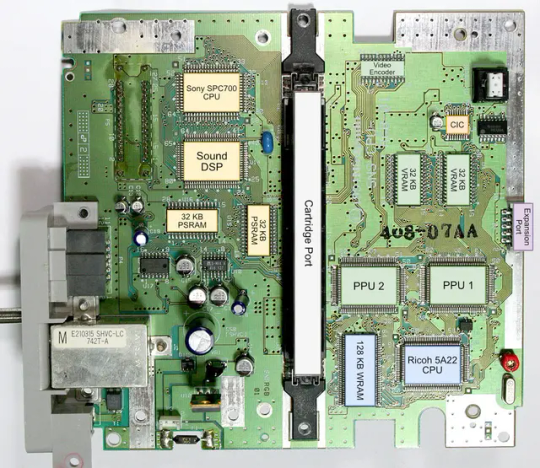

The SNES (or Super Famicom) does what it does by way of a combination of really going all in on direct memory access, and particularly having a dedicated setup for doing so between scanlines (HDMA), coupled with a bunch of dedicated graphical modes specialized for different use cases, and you know, that you can switch between partway through drawing a screen. And of course the feature everyone knows and loves (Mode 7), where you can take one background layer and rotate, scale and skew it in all sorts of fun ways.
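The Mode 7 math itself is just a 2x2 matrix applied to screen coordinates, and the between-scanline DMA (HDMA) is what makes it look 3D: by rewriting the matrix between lines, each line of the screen gets its own zoom. A simplified sketch in Python, assuming the commonly documented formula (signed 8.8 fixed-point matrix entries A to D applied around a centre point on a 1024x1024-pixel plane) and ignoring the hardware's precision quirks and wrap options; the function names and the horizon/depth parameters are made up for illustration:

```python
ONE = 256     # 1.0 in signed 8.8 fixed point, the format of the A/B/C/D values
PLANE = 1024  # the Mode 7 background is a single 1024x1024-pixel plane


def mode7_sample(sx, sy, a, b, c, d, x0, y0, hofs=0, vofs=0):
    """Map screen pixel (sx, sy) to a texel (tx, ty) on the background plane.

    (a, b, c, d) is the 2x2 matrix, (x0, y0) the centre it rotates and
    scales around, and hofs/vofs scroll the plane.
    """
    dx = sx + hofs - x0
    dy = sy + vofs - y0
    tx = (a * dx + b * dy) // ONE + x0
    ty = (c * dx + d * dy) // ONE + y0
    return tx % PLANE, ty % PLANE  # simplified: always wrap around the plane


def hdma_scale_table(height, horizon, depth):
    """One (a, b, c, d) entry per scanline, the kind of table HDMA feeds the
    Mode 7 registers during each horizontal blank.

    Lines just below the horizon get a large scale (many texels per screen
    pixel, so they look far away); lines further down get a smaller one.
    """
    table = []
    for line in range(height):
        distance = max(line - horizon, 1)
        scale = ONE * depth // distance
        table.append((scale, 0, 0, scale))  # uniform zoom, no rotation
    return table


def render(texture, width, table, x0, y0):
    """Draw a frame where every scanline uses its own matrix from the table."""
    return [
        [texture(*mode7_sample(sx, sy, *matrix, x0, y0)) for sx in range(width)]
        for sy, matrix in enumerate(table)
    ]


def checkerboard(tx, ty):
    return (tx // 8 + ty // 8) % 2


frame = render(checkerboard, 256, hdma_scale_table(224, horizon=16, depth=8), 128, 112)
```

For a plain rotation instead of a zoom, the entries come from the usual rotation matrix, e.g. 90 degrees is (a, b, c, d) = (0, -ONE, ONE, 0).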

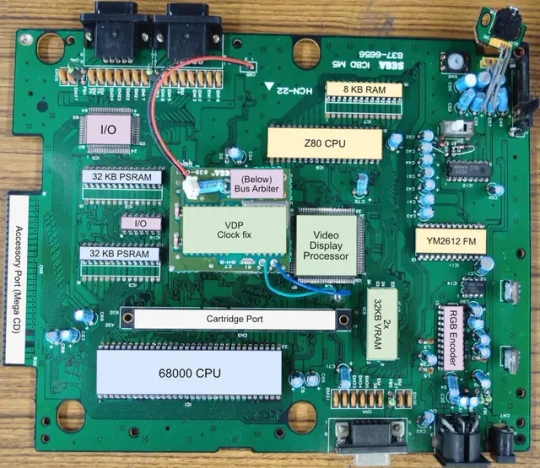

The Genesis (or Megadrive) has an actual proper 16-bit processor instead of this weird upgraded 6502 like the SNES had for a scrapped backwards compatibility plan. It also had this frankly wacky design where they just kinda took the guts out of a Sega Master System and had them off to the side as a segregated system whose only real job is managing the sound chip, one of those good good Yamaha synths with that real distinct sound... oh and they also actually did have a backwards compatibility deal that just kinda used the audio side to emulate an SMS, basically.

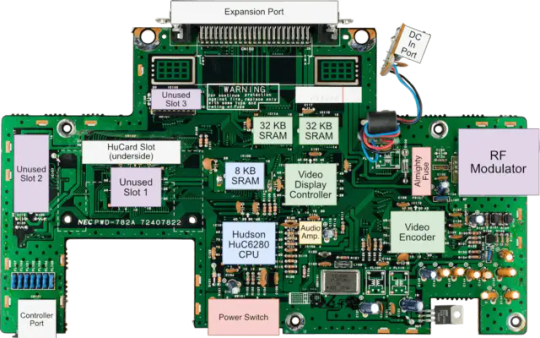

The TurboGrafx-16 (or PC Engine) really just kinda went all-in on making its own custom CPU from scratch which... we'll get to that, and otherwise uh... it had some interesting stuff going on sound-wise? I feel like the main thing it had going was getting in on CDs early but I'm not messing with optical drives and they're no longer a really great storage option anyway.

Then there's the Neo Geo... where what's going on under the hood is just kind of A LOT. I don't have the same handy analysis ready to go on this one, but my understanding is it didn't really go in for a lot of nice streamlining tricks and just kinda powered through. Like it has no separation of background layers and sprites. It's just all sprites. Shove those raw numbers.

So what's the best of all worlds option here? I'd like to go with one of them nice speedy Motorola processors. The 68000 the Genesis used is no longer manufactured though. The closest still-in-production equivalent would be the 68SEC000 family. Seems like they go for about $15 a pop, have 32-bit internal registers (the external data bus is still 16 bits wide, switchable to 8), run at low voltage, and some support clock speeds like... three times what the Genesis did. It's overkill, but should remove any concerns I have about having a way higher resolution than the systems I'm jumping off from. I can also easily throw in some beefy RAM chips where I need.

I was also planning to just directly replicate the Genesis sound setup, weird as it is, but hit the slight hiccup that the Z80 was JUST discontinued, like a month or two ago. Pretty sure someone already has a clone of it, might use that.

Here's where everything comes to a screeching halt though. While the makers of all these systems were making contracts for custom processors to add a couple extra features in that I should be able to work around by just using newer descendant chips that have that built in, there really just is no off-the-shelf PPU (picture processing unit) that I'm aware of. EVERYONE back in the day had some custom ASIC (application-specific integrated circuit) chip made to assemble every frame of video before throwing it at the TV. Especially the SNES, with all its modes changing the logic there and the HDMA getting all up in those mode 7 effects. Which are, again, something I definitely want to replicate here.
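At its simplest, what that custom video chip does is build each scanline out of layers. A toy sketch in Python of a scanline compositor, assuming one scrolling background of 8x8 tiles plus a few 8x8 sprites, with palette index 0 meaning transparent (the usual convention on these consoles), and ignoring per-line sprite limits, priority bits, palettes and timing that real PPUs handle in hardware; all names are hypothetical:

```python
TRANSPARENT = 0  # palette index 0 = see-through


def background_pixel(tilemap, tiles, x, y, scroll_x=0, scroll_y=0):
    """Look up one pixel of a scrolling background made of 8x8 tiles."""
    bx, by = x + scroll_x, y + scroll_y
    rows, cols = len(tilemap), len(tilemap[0])
    tile_id = tilemap[(by // 8) % rows][(bx // 8) % cols]  # map wraps around
    return tiles[tile_id][by % 8][bx % 8]


def render_scanline(y, width, tilemap, tiles, sprites, scroll_x=0, scroll_y=0):
    """Compose one line: background first, then sprites on top.

    sprites is a list of (sprite_x, sprite_y, pixels) with pixels an 8x8 grid.
    Earlier sprites win over later ones, loosely like sprite-table order.
    """
    line = [background_pixel(tilemap, tiles, x, y, scroll_x, scroll_y) for x in range(width)]
    for sx, sy, pixels in reversed(sprites):  # draw lowest priority first
        row = y - sy
        if not 0 <= row < 8:
            continue  # this sprite doesn't touch the current scanline
        for col in range(8):
            x = sx + col
            colour = pixels[row][col]
            if 0 <= x < width and colour != TRANSPARENT:
                line[x] = colour
    return line


tiles = [[[1] * 8 for _ in range(8)], [[2] * 8 for _ in range(8)]]  # two solid tiles
tilemap = [[0, 1]]  # one row: tile 0, then tile 1
ship = (4, 0, [[0, 5, 5, 5, 5, 5, 5, 5] for _ in range(8)])  # left column transparent
print(render_scanline(0, 16, tilemap, tiles, [ship]))
# [1, 1, 1, 1, 1, 5, 5, 5, 5, 5, 5, 5, 2, 2, 2, 2]
```

A hardware PPU does the same kind of work, but as fixed logic racing the TV's beam, which is why it ended up as a custom chip.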

So one option here is... I design and order my own ASIC chips. I can probably just fit the entire system in one even? This however comes with two big problems. It's pricey. Real pricey. Don't think it's really practical if I'm not ordering in bulk and this is a project I assume has a really niche audience. Also, I mean, if I'm custom ordering a chip, I can't really rationalize having stuff I could cram in there for free sitting outside as separate costly chips, and hell, if it's all gonna be in one package I'm no longer making this an educational electronics kit/console, so I may as well just emulate the whole thing on like a Raspberry Pi for a tenth of the cost or something.

The other option is... I commit to even more work, and find a way to reverse engineer all the functionality I want out with some big array of custom ROMs and placeholder RAM and just kinda have my own multi-chip homebrew co-processors? Still PROBABLY cheaper than the ASIC solution and I guess not really making more research work for myself. It's just going to make for a bigger/more crowded motherboard or something.
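A common way to build that kind of homebrew co-processor is to use a ROM as a lookup table: the inputs drive the address lines and the precomputed answer comes out on the data lines, so any function of N input bits fits in a ROM with 2^N entries. A minimal sketch in Python, assuming a hypothetical 8 x 8-bit multiply unit made of two 64K x 8 EPROMs (27C512-sized), one holding the low byte of each product and one the high byte; the address layout and function names are illustrative, not a specific board design:

```python
def build_multiply_roms():
    """Generate two 64 KiB ROM images where address = (a << 8) | b."""
    low = bytearray(1 << 16)
    high = bytearray(1 << 16)
    for a in range(256):
        for b in range(256):
            address = (a << 8) | b
            product = a * b  # at most 255 * 255 = 65025, fits in 16 bits
            low[address] = product & 0xFF
            high[address] = product >> 8
    return bytes(low), bytes(high)


def rom_multiply(low_rom, high_rom, a, b):
    """What the board would do: put a and b on the address bus, read both ROMs."""
    address = (a << 8) | b
    return (high_rom[address] << 8) | low_rom[address]


low_rom, high_rom = build_multiply_roms()
print(rom_multiply(low_rom, high_rom, 200, 123))  # 24600
```

The generated byte strings are what you'd burn onto the chips. The trade-off is the one the paragraph already hints at: every extra input bit doubles the ROM size, so wide functions turn into a lot of chips on the board.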

Oh and I'm now looking at a 5V processor and making controllers compatible with a 10V system so I need to double check that all the components in those don't really care that much and maybe adjust things.

And then there's also FPGAs (field programmable gate arrays). Even more expensive than an ASIC, but the advantage is it's sort of a chip emulator and you can reflash it with something else. So if you're specifically in the MiSTer scene, I just host a file somewhere and you make the one you already have pretend to be this system. So... good news for those people but I still need to actually build something here.

So... yeah that's where all this stands right now. I admit I'm in way way over my head, but I should get somewhere eventually?

11 notes


Note

@steamos-official here

I'm on the road, so I'm not doing online searches, but what are you? (eg. hardware, software, linux distro)

I need this for my list.

Thx!

I am the best window manager that you have ever seen ;P, written for Wayland in Zig

https://codeberg.org/river/river is the source code

6 notes


Text

So I made an app for PROTO. Written in Kotlin and runs on Android.

Next, I want to upgrade it with a controller mode. It should work so that I simply plug a wired Xbox controller into my phone with a USB OTG adapter… and bam, the phone handles all the complex wireless communication and acts as the battery. Meaning that besides the controller, you only need the app and… any phone. Which almost anyone is likely to have. Done.

Now THAT is convenient!

(Warning: the rest of the post turned into... a few rants.) Why Android? Well, I dislike Android less than iOS.

So is it better to be crawling in front of the altar of "We are making the apocalypse happen" Google than "5 Chinese child workers died while you read this" Apple?

Not much…

I really should switch over to a better open source Linux distribution… But I do not have the willpower to research which one... So on Android I stay.

Kotlin is meant to be "Java, but better/more modern/more functional-programming style" (Everyone realized a few years back that the 100% object-oriented programming paradigm is stupid as hell. And we already knew that about the functional programming paradigm. The best is a mix of everything, each used when it is the best option.) And for the most part, it succeeds. Java/Kotlin compiles its code down to "bytecode", which is essentially assembler but for the Java virtual machine. The virtual machine then runs the program. Like how JavaScript has the browser run it instead of compiling it for the specific machine you want it to run on… It makes them easy to port…
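Kotlin/Java bytecode can be inspected with the JDK's `javap -c`, but Python runs on the same principle and ships a disassembler in its standard library, so it makes a quick stand-in (an analogy swapped in for illustration, not Kotlin): the function below is compiled to bytecode for the CPython virtual machine rather than to machine code for your CPU. The exact instruction names differ between Python versions.

```python
import dis


def add_tax(price, rate):
    return price + price * rate


# Every Python function carries its compiled bytecode; dis prints it as an
# assembler-like listing of virtual-machine instructions (LOAD_FAST, ...).
dis.dis(add_tax)

# The raw bytes the virtual machine actually steps through.
print(type(add_tax.__code__.co_code))  # <class 'bytes'>
```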

Except in the case of Kotlin on Android... there is not a snowflake's chance in hell that you can take your entire codebase and just run it on another Linux distribution, Windows or iOS…

So... you do it for the performance right? The upside of compiling directly to the machine is that it does not waste power on middle management layers… This is why C and C++ are so fast!

Except… Android is… clunky… It relies on design ideas that require EVERY SINGLE PROGRAM AND APP ON YOUR PHONE to behave nicely (lots of "this system only works if every single app uses it sparingly and apps don't screw each other over" paradigms). And many Android builds, like Motorola's on my phone, come with software YOU ARE NOT ALLOWED TO UNINSTALL... meaning that software on your phone is ALWAYS behaving badly. Because not a single person actually owns an Android phone. You own a brick of electronics that is worthless without its OS, and Google does not sell that to you or even gift it to you. You are renting it for free, forever. Same with Motorola, which added a few extra modifications onto Google's Android and then gave it to me.

That way, google does not have to give any rights to its costumers. So I cannot completely control what my phone does. Because it is not my phone. It is Googles phone.

That I am allowed to use. By the good graces of our corporate god emperors

"Moose stares blankly into space trying to stop being permanently angry at hoe everyone is choosing to run the world"

… Ok, that turned dark… Anywho. TL;DR: there is a better option for 95% of apps (which are "a GUI that interfaces with a database"): "Just write a single HTML document with internal CSS and JavaScript." It is usually simpler, MUCH easier and smaller… And now your app works on any computer with a browser. Meaning all of them…

I made a GUI for my parents recently that works exactly like that. Soo this post:

It was frankly a mistake for me to learn Kotlin… Even more so since it is an… awful language… Clearly good ideas, then ruined by marketing-department people yelling "SUPPORT EVERYTHING! AND USE ALL THE BUZZWORD TECHNOLOGY!" Like… if your language FORCES you to use exceptions for normal runtime behavior (stares at CancellationException)... dear god, that is horrible...

Made EVEN WORSE by being a really complicated way to reinvent the GOTO statement… You know... the thing every programmer is taught will eat your feet if you ever think about using it, because it is SO dangerous and SO bad form? Yeah. It is that, hidden in a COMPLETELY WRONG WAY to use exceptions…

Goodie… I swear to Christ, every page or two of my Kotlin notes has me ranting about how I learned how something works, and that it is terrible... Blaaa. But anyway, now that I know it, I try to keep it fresh in my mind and use it from time to time. Might as well. It IS possible to run certain things more efficiently than in a web page, and you can work much more directly with the file system. It is... hard-ish to get a webpage to "load" a file automatically... But believe me, it is good that this is the case.

Anywho. How does the app work and what is the next version going to do?

PROTO is meant to be a platform I test OTHER systems on, so he is optimized for simplicity. You control him by sending an HTTP/1.1 message with content type text/plain… (This is a VERY fancy-sounding way of saying "a string" in network speak.) The string is 6 comma-separated numbers: linear movement XYZ and angular movement XYZ.

The app is simply 5 buttons that each send an HTTP PUT request with fixed values. Specifically:

0.5/-0.5 meters/second linear (drive back or forward)
0.2/-0.2 radians/second angular (turn right or turn left)
All 0 for stop

(Yes, I just formatted normal text as code to make it more readable... I think programming so much has infected me more than I thought...)
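The control scheme above is simple enough to sketch. This is not the author's app, just a minimal Python sketch of the same message format using only the standard library. The endpoint `PROTO_URL` is hypothetical (the post gives no address), and mapping "drive" to linear X and "turn" to angular Z is an assumption. Preset names only encode the sign, because the post does not pin down which sign means which direction.

```python
import urllib.request

# Hypothetical endpoint: the post does not give PROTO's address or path.
PROTO_URL = "http://192.168.1.50:8080/cmd"

# Assumption: driving uses linear X and turning uses angular Z.
# Order of each tuple: linear X, Y, Z, then angular X, Y, Z.
PRESETS = {
    "drive_pos": (0.5, 0.0, 0.0, 0.0, 0.0, 0.0),   # +0.5 m/s linear
    "drive_neg": (-0.5, 0.0, 0.0, 0.0, 0.0, 0.0),  # -0.5 m/s linear
    "turn_pos": (0.0, 0.0, 0.0, 0.0, 0.0, 0.2),    # +0.2 rad/s angular
    "turn_neg": (0.0, 0.0, 0.0, 0.0, 0.0, -0.2),   # -0.2 rad/s angular
    "stop": (0.0,) * 6,                             # all zeros
}


def format_command(values):
    """Join linear XYZ + angular XYZ into the comma-separated text body."""
    if len(values) != 6:
        raise ValueError("expected 6 numbers: linear XYZ, angular XYZ")
    return ",".join(str(float(v)) for v in values)


def send_command(name, url=PROTO_URL, timeout=2.0):
    """Send one preset as an HTTP PUT with a text/plain body; return the status."""
    body = format_command(PRESETS[name]).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "text/plain"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Each of the five buttons would then call `send_command` with one preset name; keeping the payload formatting in its own function makes it testable without a robot on the network.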

Aaaaaanywho. That must be enough ranting. Time to make the app


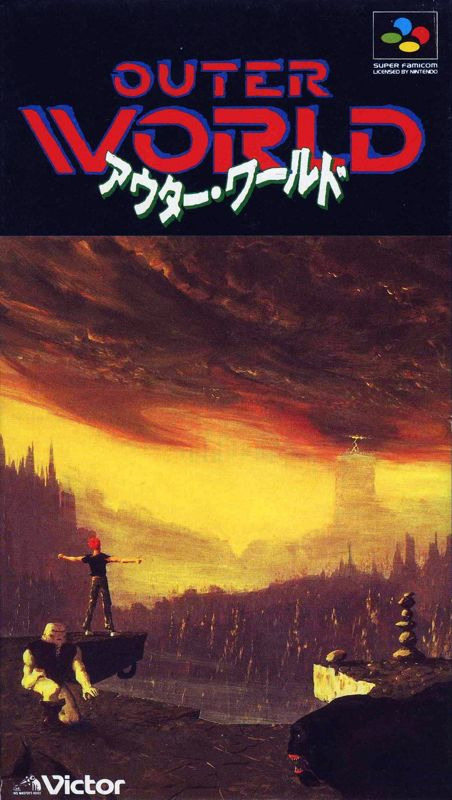

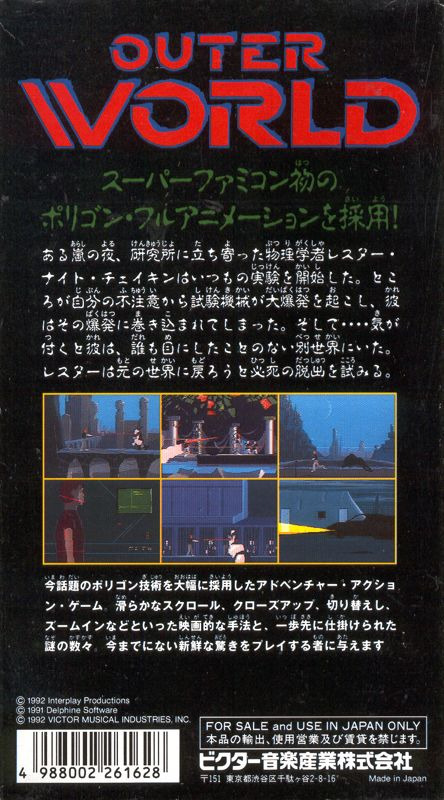

Super Famicom - Outer World (Another World / Out of this World)

Title: Outer World / アウターワールド

Developer: Delphine Software International / Interplay Productions

Publisher: Victor Musical Industries

Release date: 27 November 1992

Catalogue Code: SHVC-TW

Genre: Cinematic Platform Action

Outer World is largely the work of just one man, Eric Chahi. He has managed to create a game that is more satisfying than nearly any modern game you will have played in the past five years, and perhaps more satisfying than any you have ever played. There were no games like it before it came out, and the later imitations (e.g., Heart of the Alien on the Sega CD) can't quite capture the unique spirit of their inspirational source. It's simply that good. While the gameplay is nearly beyond reproach, the best traits of this classic are its imagination, its gorgeous design, and above all its trust in the player's ability to imagine, to commit to the alien world on its terms.

The beginning of the game shows you one major ingredient of the game's genius when the primitive vector graphics are displayed in fully animated glory during the opening cinematic. The crude shapes would seem cheap and disappointing if not for the precision and elegance with which they are employed--cinematic angles and an engaging trust in the player's imagination serve to make these primitive scenes interesting even today. Lester's fancy car squeals into view, and we are treated to some of the green hologram-like interfaces Flashback fans will be intimately familiar with as we watch a physics expert burning the midnight oil. Something goes wrong during particle acceleration, and suddenly Lester and most of his console disappear with a flash of blue sparks and light.

Immediately, you are thrown into the game. Lester must escape from the pool and the grasping tentacles or face what will likely be the first of many, many deaths. Once out of the pool, you can appreciate the appealing sparseness of the alien landscape. With pale, simple blocks of color, an evocative alien world is realized: misty pillars of rock trail off into the horizon below a crescent moon; a beast colored an impenetrable shade of black lopes into view and looks at you with red eyes. And then a tentacle reaches for you from the heretofore calm pool surface and it's time to move again.

From this bleak, lonely landscape that emphasizes sheer scope and emptiness the player travels to a claustrophobic cage, to a deadly alien tank, and to a swinging harem--all the while that cinematic touch to the scenery rarely fails to amaze. Enemies are more than dimwitted patterns that are learned and consequently no longer require thought--the cinematic design goes down to individual enemy behavior. The puzzles come down to how exactly to defeat the alien who is behind six energy shields and lobs energy bombs that can penetrate your own, or how to defeat two soldiers who come in at both sides simultaneously. All the while the story is being told by your actions, and those of your surprisingly expressive alien friend. After you attract the ire of the guard below your cage, when he fires his gun, another guard appears to watch the action in the background. When you crush the guard below you with your falling cage, the prisoners in the background stop breaking rocks as you grab the gun and flee. At no point is there a rote procession of action that involves the same stale maneuvers used just a screen ago--nearly every enemy encounter presents a new and unique challenge, often a new wonder of art direction, and sometimes a diabolically difficult puzzle to be solved. When the game is finally over, its ending battle and cutscene are as cinematic, as boldly unique, and as cohesive with the game's tone as anyone could wish for.

What's the point of all this cinematic style? It makes you accept the world on its own terms. You don't think of Lester as an abstract object, running lifelessly around a gaming world distorted and simplified into a recognizable gaming archetype--you live and breathe the world along with him, because both of you are experiencing this exotic environment for the first time, and it is full of wonders and adventure rather than trite platforming cliches. This game asks the player's imagination to fill in the corners, to ignore the blockiness and palette limitations of vector graphics. All but the most closed of minds will happily go along with that request. Those that do will be richly rewarded by this game because a lot of love has been put into it.


Oh gawd, it’s all unravelling!! I’ve resorted to asking my ChatGPT for advice on how to handle this!! This is the context I gave it:

I work in a small startup with 7 other people

I have been brought on for a three month contract to assess the current product and make recommendations for product strategy, product roadmap, improved engineering and product processes with a view to rebuilding the platform with a new product and migrating existing vendors and borrowers across

There is one engineer and no-one else in the company has any product or technical experience

The engineer has worked on his own for 6 years on the product with no other engineering or product person

He does all coding, testing, development, devops tasks

He also helps with customer support enquiries

He was not involved in the process of bringing me onboard and felt blindsided by my arrival

I have requested access to Github, and his response was:

As you can imagine access to the source code is pretty sensitive. Are you looking for something specifically? And do you plan on downloading the source code or sharing with anyone else?

He then advised they only pay for a single seat

I have spoken with the Chief Operations Officer, who I report to in the contract, and raised my business-risk concerns about a single point of failure

I have still not been granted access to Github so brought it up again today with the COO, who said he had requested it 2 weeks ago

The COO then requested on Asana that the engineer add me and himself as Github users

I received the following from the engineer:

Hey can you please send me your use cases for your access to GitHub? How exactly are you going to use your access to the source code?

My response:

Hey! My request isn’t about making changes to the codebase myself but ensuring that Steward isn’t reliant on a single person for access.

Here are the key reasons I need GitHub access:

1 Business Continuity & Risk Management – If anything happens to you (whether you’re on holiday, sick, or god forbid, get hit by a bus!!), we need someone else with access to ensure the platform remains operational. Right now, Steward has a single point of failure, which is a pretty big risk.

2 Product Oversight & Documentation – As Head of Product, I need visibility into the codebase to understand technical limitations, dependencies, and opportunities at a broad level. This DOESN'T mean I’ll be writing code, but I need to see how things are structured to better inform product decisions and prioritization.

3 Facilitating Future Hiring – If we bring in additional engineers or external contractors, we need a structured process for managing access. It shouldn't be on just one person to approve or manage this.

Super happy to discuss any concerns you have, but this is ultimately a business-level decision to ensure Steward isn’t putting itself at risk.

His response was:

1&3 Bridget has user management access for those reasons

2. no one told me you were Head of Product already, which isn’t surprising. But congrats! So will you be sharing the source code with other engineers for benchmarking?

The software engineer is an introvert and, while not rude, is helpful without volunteering information

He is also the only person with access to AWS, Sentry, and Persona (which does our KYC checks).

I already had a conversation with him as I felt something was amiss in the first week. This was when he said he had been "blindsided" by my arrival and felt his code and work were being audited. I explained that it had been a really long process to get the contract (18 months), and that I have a rare mix of skills (agtech, fintech, product) that is unusually suited to Steward. I was not here to tell him what to do but to work with him: my role is to set up the strategy and where we need to go with the product and why, and then work with him to come up with the best solution, which he will build. I stressed I am not an engineer and do not code.

I have raised some concerns with the COO and he seems to share some of the misgivings. I sense some personality differences, and there seem to be some undercurrents that were there before I started.

I have since messaged him with a gentler more collaborative approach:

Hey, I’ve been thinking about GitHub access and wanted to float an idea, would it make sense for us to do a working session where you just walk me through the repo first? That way, I can get a sense of the structure without us having to rush any access changes or security decisions right away. Then, we can figure out what makes sense together. What do you think?

I’m keen to understand your perspective a bit more, can we chat about it tomorrow when you're back online? Is 4pm your time still good? I know you’ve got a lot on, so happy to be flexible.

I think I’ve fucked it up. I’m paranoid the COO is going to think I’m stirring up trouble and I’m going to miss out on this job. How do I stay firm yet engage with someone I’ll potentially have to work closely with (he’s a prickly, hard-to-engage Frenchie who’s lived in Aus and the US for years)?


Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware


So my post about how you should draw (YOU SHOULD DRAW!) blew up beyond any precedent since my return to tumblr and someone in the FRANKLY KIND OF TERRIFYING NUMBER OF REBLOGS mentioned downloading a free DAW to make music and that got me thinking,

HOBBY SOFTWARE MEGAPOST GO

All of the below software is free to use, and most of it is Open Source (which is its own thing I recommend learning about, its entire existence and success gives me hope for humanity) so GO GET SOME TOOLS! Make things like nobody's watching and then SHOW IT TO THEM ANYWAY! Or don't! Even if you hide your work from the world (lord knows I do!!!) you will have created something! And it feels amazing to create something!

VISUAL ARTS:

Inkscape: Adobe Illustrator replacement, pretty solid if a bit quirky.

Krita: Painting software, if anything slightly overpowered and sometimes more complicated than you want, but can do bloody anything including advanced color management. A wonderful tool.

Blender: You have probably heard it is super hard to use. This is CONDITIONALLY true. Because the developers are working day and night to improve everything about it it's always getting better and now like, 80% of the hardness is just because 3D is hard. Aside: Blender Grease Pencil - A subsystem in Blender is concerned with 2D animation and it is. Surprisingly good. Some annoying conventions but totally possible to literally make professional traditional 2D animation.

MUSIC:

LMMS: A free and open source DAW that can do a lot, except use most modern VST plugins. The practical upshot of this is that if you are just starting out with music it is totally serviceable but over time you might start to long for something with the ability to load hella plugins. (I'm currently trying out Reaper which has a long free trial and is technically nagware after that point...)

PlugData: You GOTTA TRY THIS, it's not mentally for everyone (not HARD exactly, just WEIRD) but if your brain works well with this kind of flow graph stuff it's a magical playground of music. (If you have heard of PureData, PlugData is based on it but has a lot of nice graphical upgrades and can work as a VST if you have a proper DAW)

Surge XT: A big ol' synthesizer plugin that also can run standalone and take midi input so you can technically use it to make music even if you don't have a DAW. If planning notes ahead of time sounds intimidating, but you can get your hands on a midi piano controller, this might actually be a great way to start out playing with music on your computer!

Bespoke Synth: Another open source DAW, but this one is... sort of exploded? Like PlugData you patch things together with cables but it has a wild electrified aesthetic and it can do piano rolls. Fun though!

GAME DEV:

Yeah that's right, game dev. You ABSOLUTELY can make video games with no experience or ability to code. I actually recommend video games as a way to learn how to code because the dopamine hits from making a character bumble around on a screen are enormously bigger than like. Calculating pi or something boring like that. ANYWAY:

Twine: Twine is what I might describe as sort of a zero-barrier game dev tool because you're literally writing a story except you can make it branch. It has programming features but you can sort of pick them up as you go. Lowest possible barrier to entry, especially if you write!

Godot: I use this engine all the time! It's got great tutorials all over the internet and is 100% FREE AND ALWAYS WILL BE. Technically there are more Unity tutorials out there, but Godot has plenty enough to learn how to do things. It's also SUPER LIGHTWEIGHT so you won't spend your precious hobby time waiting for the engine to load. There are absolutely successful games made with this but I think the best thing about it is that the shallow end of the learning curve is PRETTY OK ACTUALLY.


Understanding Encryption: How Signal & Telegram Ensure Secure Communication

Signal vs. Telegram: A Comparative Analysis


Security Features Comparison

Signal:

Encryption: Uses the Signal Protocol for strong E2EE across all communications.

Metadata Protection: User privacy is protected because minimal metadata is collected.

Open Source: The code is publicly available for scrutiny; anyone can download and inspect the source code to verify Signal's claims.

Telegram:

Encryption: Telegram uses its own MTProto protocol for encryption. It also offers E2EE, but only in Secret Chats.

Cloud Storage: Stores regular chat data in the cloud, which can be a potential security risk.

Customization: Offers more features and customization options but at the potential cost of security.

Usability and Performance Comparison

Signal:

User Interface: Simple and intuitive, focused on secure communication.

Performance: Privacy is prioritized over performance; the main focus is on minimizing data collection.

Cross-Platform Support: Available on multiple platforms, including Android, iOS, and desktop.

Telegram:

User Interface: Numerous customization options, making it feature-rich for its intended audience.

Performance: Generally fast and responsive, but security features may be less robust.

Cross-Platform Support: Also available on multiple platforms, with seamless synchronization across devices because regular chats are stored in Telegram's cloud.

Privacy Policies and Data Handling

Signal:

Privacy Policy: Signal’s privacy policy is straightforward: it focuses on minimal data collection and strong user privacy. Signal is run by an independent non-profit organization.

Data Handling: Signal does not keep message content on its servers beyond holding encrypted messages until they are delivered; message history stays on users' own devices, so user privacy is prioritized above everything else.

Telegram:

Privacy Policy: Telegram stores regular chat messages on its servers, which raises privacy concerns because the service provider could, in principle, access the data.

Data Handling: While Telegram offers secure end-to-end encrypted options like Secret Chats, its regular chats are still stored on its servers, potentially making them accessible to Telegram or third parties.

Designing a Solution for Secure Communication

Key Components of a Secure Communication System

Designing a secure communication system involves several key components:

Strong Encryption: The system should use well-established encryption standards (e.g., AES, RSA) for data both in transit and at rest.

End-to-End Encryption: E2EE ensures that only the intended recipients can decrypt the messages, so attackers (and the service provider itself) cannot read the communication.

Authentication: Users must be identified through secure means, such as Two-Factor Authentication (2FA), to prevent unauthorized access.

Key Management: The system should incorporate safe procedures for creating, storing and sharing encryption keys.

Data Integrity: Standard mechanisms such as digital signatures or keyed hashes (MACs) should be used to ensure that data is not altered in transit.

User Education: To get the best performance and security out of the system, users should be taught good security practices and the appropriate use of the system.
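The Data Integrity point mentions hashing. A plain hash alone does not stop an attacker who can simply recompute it after tampering, so a common choice is a keyed hash (HMAC). Here is a minimal sketch with Python's standard library, assuming the two parties already share a secret key (how they got it is out of scope here); it demonstrates integrity checking only, not encryption.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag the receiver can use to detect tampering."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


# Assumption: both parties already share this key (e.g. from a key exchange).
shared_key = secrets.token_bytes(32)
msg = b"meet at 4pm"
tag = make_tag(shared_key, msg)
```

A receiver that gets `msg` and `tag` calls `verify(shared_key, msg, tag)`; any change to the message (or a tag made with a different key) makes it return False. `compare_digest` is used instead of `==` to avoid leaking timing information.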

Best Practices for Implementing Encryption

To implement encryption effectively, consider the following best practices:

Use Proven Algorithms: Do not use untested proprietary algorithms, because they have not been through the extensive scrutiny of the cryptographic community. Instead, use well-established, well-tested algorithms such as AES and RSA.

Keep Software Updated: Keep software and encryption settings up to date, because newly discovered vulnerabilities can make older versions and configurations unsafe.

Implement Perfect Forward Secrecy (PFS): PFS ensures that if a long-term key is compromised, past communications remain secure. To achieve this, a new key must be generated for every session.

Encrypt Data at All Stages: Ensure that user data is always encrypted, both in transit and at rest, to protect it from interception and unauthorized access.

Use Strong Passwords and 2FA: Encourage users to choose strong, unique passwords that cannot be easily guessed, and to enable two-factor authentication for an extra layer of security.
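The PFS item says a new key is generated for every session. One common way to get that property is an ephemeral Diffie-Hellman exchange: in each session both sides create throwaway key pairs, derive a shared secret, and never store the private parts. The sketch below is a toy using only Python's standard library, with deliberately weak, illustrative parameters (`P`, `G`) chosen for readability. It also omits authentication of the exchanged public values, which real protocols need to stop man-in-the-middle attacks, so it should not be used for real traffic.

```python
import hashlib
import secrets

# TOY PARAMETERS, NOT SECURE: a 127-bit Mersenne prime keeps the numbers small.
# Real systems use vetted groups or curves (e.g. X25519) from a crypto library.
P = 2**127 - 1
G = 3


def new_ephemeral_keypair():
    """Generate a fresh private exponent and matching public value for one session."""
    private = secrets.randbelow(P - 3) + 2  # uniformly in [2, P-2]
    public = pow(G, private, P)
    return private, public


def derive_session_key(own_private, peer_public):
    """Both sides reach the same shared secret; hash it into a 32-byte session key."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def run_session():
    """One session: both parties create new ephemeral keys and derive a session key."""
    a_priv, a_pub = new_ephemeral_keypair()
    b_priv, b_pub = new_ephemeral_keypair()
    key_a = derive_session_key(a_priv, b_pub)
    key_b = derive_session_key(b_priv, a_pub)
    # The private exponents are only local variables: never stored or returned.
    return key_a, key_b
```

Calling `run_session()` twice yields two unrelated keys, so in principle a leaked session key does not expose any other session's traffic.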

User Experience and Security Trade-offs

While security is important, it's also important to take care of the user experience when designing a secure communication system. If your security measures are overly complex, users might struggle to adopt the system, or make mistakes out of frustration that compromise security.

To balance security and usability, developers should:


Facilitate Key Management: Introduce automated key generation and exchange mechanisms to reduce the burden on users.

Help Users: Provide simple, clear directions for using security features.

Provide Control: Let users choose the degree of security they want, e.g., whether or not to use E2EE.

Track and Change: Continuously monitor the system for security breaches and user issues, and act on problems as soon as they appear.

Challenges and Limitations of Encryption

Potential Weaknesses in Encryption

Encryption is without a doubt one of the most effective ways of keeping communications secure. However, it too has drawbacks and weaknesses:

Key Management: Managing encryption keys and keeping them safe is one of the hardest problems in encryption. When keys get lost or fall into the wrong hands, the encrypted information is also at risk.

Vulnerabilities in Algorithms: Modern encryption methods are well designed and considered safe, but there is no guarantee that vulnerabilities will not surface over the years. Attackers can exploit such vulnerabilities, especially where the algorithm or implementation is not updated as often as it should be.

Human Error: The strongest encryption can be undermined by human error. People sometimes use weak passwords, or even share their credentials with others without considering the consequences.

Backdoors: In some cases, businesses are pressured by governments or law-enforcement agencies into adding backdoors to their encryption software. These backdoors can be exploited by malicious actors if discovered.

Conclusion

Although technology has made it possible to keep in touch with others with minimal effort regardless of geographical location, encryption remains essential because it lets us protect ourselves and our information from outside attackers. Apps like Signal and Telegram have transformed messaging by offering their users encryption and other features meant to enhance privacy. Still, designing a secure communication system takes more than building hardware or software with anti-eavesdropping features: it also requires sound key management, clear communication with users, and a careful trade-off between security and usability.

Technology will keep evolving, and so will the problems and solutions in secure communication. But by keeping pace and looking for better ways to protect privacy, we can give people the privacy they are looking for.


Complete Terraform IAC Development: Your Essential Guide to Infrastructure as Code

If you're ready to take control of your cloud infrastructure, it's time to dive into Complete Terraform IAC Development. With Terraform, you can simplify, automate, and scale infrastructure setups like never before. Whether you’re new to Infrastructure as Code (IAC) or looking to deepen your skills, mastering Terraform will open up a world of opportunities in cloud computing and DevOps.

Why Terraform for Infrastructure as Code?

Before we get into Complete Terraform IAC Development, let’s explore why Terraform is the go-to choice. HashiCorp’s Terraform has quickly become a top tool for managing cloud infrastructure because it is free to use, supports multiple cloud providers (AWS, Google Cloud, Azure, and more), and uses a declarative language (HCL) that’s easy to learn. (Terraform was released as open source, but in 2023 HashiCorp moved it to the Business Source License.)

Key Benefits of Learning Terraform

In today's fast-paced tech landscape, there’s a high demand for professionals who understand IAC and can deploy efficient, scalable cloud environments. Here’s how Terraform can benefit you and why the Complete Terraform IAC Development approach is invaluable:

Cross-Platform Compatibility: Terraform supports multiple cloud providers, so you can use the same language, tooling, and workflow across different clouds, even though each provider’s resource definitions are specific to that provider.

Scalability and Efficiency: By using IAC, you automate infrastructure, reducing errors, saving time, and allowing for scalability.

Modular and Reusable Code: With Terraform, you can build modular templates, reusing code blocks for various projects or environments.

These features make Terraform an attractive skill for anyone working in DevOps, cloud engineering, or software development.

Getting Started with Complete Terraform IAC Development

The beauty of Complete Terraform IAC Development is that it caters to both beginners and intermediate users. Here’s a roadmap to kickstart your learning:

Set Up the Environment: Install Terraform and configure it for your cloud provider. This step is simple and provides a solid foundation.

Understand HCL (HashiCorp Configuration Language): Terraform’s configuration language is straightforward but powerful. Knowing the syntax is essential for writing effective configurations.

Define Infrastructure as Code: Begin by defining your infrastructure in simple blocks. You’ll learn to declare resources, manage providers, and understand how to structure your files.

Use Modules: Modules are self-contained packages of Terraform configuration, either your own or published ones such as those on the Terraform Registry. They let you reuse code blocks and make complex infrastructures easier to manage and scale.

Apply Best Practices: Understanding how to structure your code for readability, reliability, and reusability will save you headaches as projects grow.
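To make the "define infrastructure as code" step concrete, here is a minimal sketch. Terraform also accepts configuration written in JSON (files ending in `.tf.json`), so this example uses Python to build such a file instead of hand-written HCL. The region, the `aws_s3_bucket` resource, and the bucket name `example-bucket-123` are illustrative assumptions. S3 bucket names must be globally unique, so you would substitute your own.

```python
import json
from pathlib import Path


def minimal_config(region: str, bucket_name: str) -> dict:
    """Build a minimal Terraform configuration in Terraform's JSON syntax.

    The equivalent HCL would contain a required_providers block,
    a provider block, and a single resource block.
    """
    return {
        "terraform": {
            "required_providers": {
                # Pin the provider source and a major-version range.
                "aws": {"source": "hashicorp/aws", "version": "~> 5.0"}
            }
        },
        # Provider configuration: which region the AWS provider talks to.
        "provider": {"aws": {"region": region}},
        # resource -> <resource type> -> <local name> -> arguments
        "resource": {
            "aws_s3_bucket": {
                "example": {"bucket": bucket_name}
            }
        },
    }


def write_config(config: dict, directory: str = ".") -> Path:
    """Write the configuration as main.tf.json so Terraform can read it."""
    path = Path(directory) / "main.tf.json"
    path.write_text(json.dumps(config, indent=2))
    return path


if __name__ == "__main__":
    # Print instead of writing, so running the sketch has no side effects.
    print(json.dumps(minimal_config("us-east-1", "example-bucket-123"), indent=2))
```

After writing the file with `write_config`, running `terraform init` and then `terraform plan` in that directory would show the single bucket Terraform intends to create. Most teams write `.tf` files in HCL directly; the JSON form is mainly useful when another program generates the configuration.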

Core Components in Complete Terraform IAC Development

When working with Terraform, you’ll interact with several core components. Here’s a breakdown:

Providers: These are plugins that allow Terraform to manage infrastructure on your chosen cloud platform (AWS, Azure, etc.).

Resources: The building blocks of your infrastructure, resources represent things like instances, databases, and storage.

Variables and Outputs: Variables let you define dynamic values, and outputs allow you to retrieve data after deployment.

State Files: Terraform uses a state file to store information about your infrastructure. This file is essential for tracking changes and ensuring Terraform manages the infrastructure accurately.

Mastering these components will solidify your Terraform foundation, giving you the confidence to build and scale projects efficiently.
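Variables, resources, and outputs fit together roughly as sketched below, again using Terraform's JSON configuration syntax generated from Python. The variable name `environment`, the bucket name pattern, and the output name `logs_bucket_arn` are hypothetical. A real configuration would also need a provider block like any other AWS configuration.

```python
import json


def variables_and_outputs() -> dict:
    """Declare an input variable, a resource that uses it, and an output."""
    return {
        "variable": {
            "environment": {
                "type": "string",  # type constraint, written as a string in JSON
                "default": "dev",
                "description": "Deployment environment name",
            }
        },
        "resource": {
            "aws_s3_bucket": {
                "logs": {
                    # "${...}" is a Terraform interpolation (not a Python f-string):
                    # the bucket name depends on the variable's value.
                    "bucket": "example-logs-${var.environment}"
                }
            }
        },
        "output": {
            "logs_bucket_arn": {
                # Outputs expose attributes that are only known after apply.
                "value": "${aws_s3_bucket.logs.arn}"
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(variables_and_outputs(), indent=2))
```

With this configuration, `terraform apply -var="environment=prod"` would override the default, and `terraform output logs_bucket_arn` would print the ARN afterwards. Terraform records those values in the state file, which is one reason the state file matters so much.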

Best Practices for Complete Terraform IAC Development

In the world of Infrastructure as Code, following best practices is essential. Here are some tips to keep in mind:

Organize Code with Modules: Organizing code with modules promotes reusability and makes complex structures easier to manage.

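Use a Remote Backend: Storing your Terraform state in a remote backend, like Amazon S3 or Azure Storage, ensures that your whole team works from the latest state. With state locking enabled, it also prevents two runs from changing the state at the same time.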

Implement Version Control: Version control systems like Git are vital. They help you track changes, avoid conflicts, and ensure smooth rollbacks.

Plan Before Applying: Terraform’s “plan” command helps you preview changes before deploying, reducing the chances of accidental alterations.

By following these practices, you’re ensuring your IAC deployments are both robust and scalable.
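Two of the practices above, a remote backend and modules, might look like this in Terraform's JSON syntax. This is a sketch under assumptions: the state bucket name, the state key, and the local module directory `./modules/network` with its `cidr_block` input are all hypothetical.

```python
import json


def backend_and_module(state_bucket: str, region: str) -> dict:
    """Configure an S3 remote backend and call a local module (JSON syntax)."""
    return {
        "terraform": {
            "backend": {
                "s3": {
                    "bucket": state_bucket,              # existing bucket that holds state
                    "key": "network/terraform.tfstate",  # object path of the state file
                    "region": region,
                    "encrypt": True,                     # server-side encryption of state
                }
            }
        },
        "module": {
            "network": {
                # Hypothetical local module; its input variables
                # (here cidr_block) are passed as module arguments.
                "source": "./modules/network",
                "cidr_block": "10.0.0.0/16",
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(backend_and_module("example-tf-state", "us-east-1"), indent=2))
```

Backend settings cannot reference Terraform variables, which is why the values are filled in before Terraform sees them. Terraform's own alternative is partial configuration supplied through `terraform init -backend-config=...`. How state locking is set up for the S3 backend depends on your Terraform version, so check the backend documentation.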

Real-World Applications of Terraform IAC

Imagine you’re managing a complex multi-cloud environment. Using Complete Terraform IAC Development, you could deploy similar infrastructures across AWS, Azure, and Google Cloud with one tool and one workflow.

Use Case 1: Multi-Region Deployments

Suppose you need a web application deployed across multiple regions. Using Terraform, you can create templates that deploy the application consistently across different regions, ensuring high availability and redundancy.
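A common way to express multi-region deployments is one aliased provider configuration per region. Each resource then points at the alias for its region. The sketch below generates that pattern in Terraform's JSON syntax. It uses one S3 bucket per region as a stand-in for a real application stack, and the bucket prefix is hypothetical.

```python
import json


def multi_region_config(regions: list[str], bucket_prefix: str) -> dict:
    """One aliased AWS provider per region, plus one bucket per region."""
    providers = []
    buckets = {}
    for region in regions:
        alias = region.replace("-", "_")  # e.g. us-east-1 -> us_east_1
        providers.append({"alias": alias, "region": region})
        buckets[f"site_{alias}"] = {
            # Bind this resource to the provider configuration for its region.
            "provider": f"aws.{alias}",
            "bucket": f"{bucket_prefix}-{region}",
        }
    return {
        # A list lets JSON syntax express several "aws" provider blocks.
        "provider": {"aws": providers},
        "resource": {"aws_s3_bucket": buckets},
    }


if __name__ == "__main__":
    print(json.dumps(multi_region_config(["us-east-1", "eu-west-1"], "example-site"), indent=2))
```

In practice you would more likely wrap the whole application in a module and call it once per region, passing each call the matching provider. The alias pattern stays the same.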

Use Case 2: Scaling Web Applications

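Let’s say your company’s website traffic spikes during a promotion. With Terraform, you can declare your cloud provider’s auto-scaling policies (for example, an AWS Auto Scaling policy) alongside the rest of your infrastructure. The provider then adjusts server capacity automatically, keeping your site responsive.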
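Terraform does not scale servers itself at runtime. It declares a scaling policy, and the cloud provider enforces it. Below is a sketch of an AWS target-tracking policy in Terraform's JSON syntax. It assumes that an `aws_autoscaling_group` with the hypothetical local name `web` is defined elsewhere in the same configuration. The 50% CPU target is only an example value.

```python
import json


def cpu_target_tracking_policy(asg_resource_name: str, target_cpu: float) -> dict:
    """Declare an AWS target-tracking scaling policy in Terraform JSON syntax.

    Assumes an aws_autoscaling_group with the given local name exists
    elsewhere in the same configuration.
    """
    if not 0 < target_cpu <= 100:
        raise ValueError("target_cpu must be a percentage in (0, 100]")
    return {
        "resource": {
            "aws_autoscaling_policy": {
                "cpu_target": {
                    "name": "cpu-target-tracking",
                    "policy_type": "TargetTrackingScaling",
                    # {{ and }} emit literal braces, producing "${aws_autoscaling_group.<name>.name}".
                    "autoscaling_group_name": f"${{aws_autoscaling_group.{asg_resource_name}.name}}",
                    "target_tracking_configuration": {
                        "predefined_metric_specification": {
                            "predefined_metric_type": "ASGAverageCPUUtilization"
                        },
                        # Keep average CPU across the group near this percentage.
                        "target_value": target_cpu,
                    },
                }
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(cpu_target_tracking_policy("web", 50.0), indent=2))
```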

Advanced Topics in Complete Terraform IAC Development

Once you’re comfortable with the basics, Complete Terraform IAC Development offers advanced techniques to enhance your skillset:

Terraform Workspaces: Workspaces allow you to manage multiple environments (e.g., development, testing, production) within a single configuration.

Dynamic Blocks and Conditionals: Use dynamic blocks and conditionals to make your code more adaptable, allowing you to define configurations that change based on the environment or input variables.

Integration with CI/CD Pipelines: Integrate Terraform with CI/CD tools like Jenkins or GitLab CI to automate deployments. This approach ensures consistent infrastructure management as your application evolves.
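Whatever the CI tool, a pipeline job typically runs the same Terraform CLI steps in order. The Python sketch below lists them and runs them only when `dry_run` is off. The workspace name `staging` is hypothetical, and the workspace is assumed to exist already (it could be created with `terraform workspace new`). In Jenkins or GitLab CI, these commands would normally sit directly in the job script.

```python
import subprocess


def pipeline_commands(workspace: str) -> list[list[str]]:
    """Return the Terraform CLI steps a CI job might run, in order."""
    return [
        ["terraform", "init", "-input=false"],
        # Switch to the environment's workspace (assumed to exist already).
        ["terraform", "workspace", "select", workspace],
        # Save the plan so the apply step uses exactly what was reviewed.
        ["terraform", "plan", "-input=false", "-out=tfplan"],
        ["terraform", "apply", "-input=false", "tfplan"],
    ]


def run_pipeline(workspace: str, workdir: str = ".", dry_run: bool = True) -> None:
    """Print each step; execute it only when dry_run is False."""
    for cmd in pipeline_commands(workspace):
        print("$", " ".join(cmd))
        if not dry_run:
            # check=True stops the pipeline at the first failing command.
            subprocess.run(cmd, cwd=workdir, check=True)


if __name__ == "__main__":
    run_pipeline("staging")  # dry run: prints the commands only
```

Many teams split `plan` and `apply` into separate pipeline stages with a manual approval in between. Applying the saved `tfplan` file then guarantees that nothing changed between review and deployment.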

Tools and Resources to Support Your Terraform Journey

Here are some popular tools to streamline your learning:

Terraform CLI: The primary tool for creating and managing your infrastructure.

Terragrunt: An additional layer for working with Terraform, Terragrunt simplifies managing complex Terraform environments.

Terraform Cloud (now HCP Terraform): HashiCorp’s managed service for running and collaborating on Terraform workflows.

There are countless resources available online, from Terraform documentation to forums, blogs, and courses. HashiCorp offers a free resource hub, and platforms like Udemy provide comprehensive courses to guide you through Complete Terraform IAC Development.

Start Your Journey with Complete Terraform IAC Development

If you’re aiming to build a career in cloud infrastructure or simply want to enhance your DevOps toolkit, Complete Terraform IAC Development is a skill worth mastering. From managing complex multi-cloud infrastructures to automating repetitive tasks, Terraform provides a powerful framework to achieve your goals.

Start with the basics, gradually explore advanced features, and remember: practice is key. The world of cloud computing is evolving rapidly, and those who know how to leverage Infrastructure as Code will always have an edge. With Terraform, you’re not just coding infrastructure; you’re building a foundation for the future. So, take the first step into Complete Terraform IAC Development. It’s your path to becoming a versatile, skilled cloud professional.


Gemini Code Assist Enterprise: AI App Development Tool

Introducing Gemini Code Assist Enterprise, an AI-powered application development tool that supports code customization.

The modern economy is driven by software development. Unfortunately, due to a lack of skilled developers, a growing number of integrations, vendors, and abstraction levels, developing effective apps across the tech stack is difficult.

To expedite application delivery and stay competitive, IT leaders must provide their teams with AI-powered solutions that assist developers in navigating complexity.

Google Cloud believes the best way to address development challenges is an AI-powered application development solution that works across the tech stack. It combines enterprise-grade security guarantees, better contextual suggestions, and cloud integrations that let developers work more quickly and flexibly across a wider range of services.

Google Cloud is presenting Gemini Code Assist Enterprise, the next generation of application development capabilities.

Gemini Code Assist Enterprise goes beyond AI-powered coding assistance in the IDE: it provides application development support at the enterprise level. Gemini’s large token context window supports deep awareness of your local codebase. Because the model can take into account the details of your code and your current development session, it can generate or transform code that better fits your application.

With code customization, Code Assist Enterprise not only understands your local codebase but also bases its code recommendations on your company’s internal libraries and best practices. As a result, it can produce personalized recommendations that are more accurate and relevant to your company. It can help with demanding tasks such as upgrading the Java version across an entire repository. Because more insights are delivered directly in the IDE, developers can also stay in a state of flow for longer. That frees developers to focus on original solutions to problems, which increases job satisfaction and gives your business a competitive advantage. It can also shorten your time to market.

Code customization in Gemini Code Assist Enterprise can index repositories on GitLab.com and GitHub.com. Support for self-hosted, on-premises repositories and other source control systems is planned for early 2025.

Yet the IDE is not the only tool used to build apps. Google Cloud integrates coding assistance into its services to help specialist coders become more versatile builders. A code assistant significantly reduces the time needed to adopt new technologies, and it incorporates the nuances of an organization’s coding standards into its recommendations. The more services it covers, the faster your builders can create and deliver applications. To meet developers where they are, Code Assist Enterprise provides coding assistance in Firebase, Databases, BigQuery, Colab Enterprise, Apigee, and Application Integration. These capabilities are included for every Gemini Code Assist Enterprise user; they are not separate purchases.

Gemini Code Assist Enterprise users working in BigQuery get help with both SQL and Python code. Beyond editor-based code assistance, they can speed up their analytics workflows with pre-validated, ready-to-run queries (data insights) and a natural-language interface for data exploration, curation, wrangling, analysis, and visualization (data canvas).

Because security and privacy are paramount for any business, Code Assist Enterprise does not use your company’s proprietary data to train the Gemini model. Source code indexed for code customization is stored in a Google Cloud-managed project and kept separate for each customer organization. Customers have full control over which source repositories are used for customization, and they can delete all of that data at any time.

Your company and data are safeguarded by Google Cloud’s dedication to enterprise preparedness, data governance, and security. This is demonstrated by projects like software supply chain security, Mandiant research, and purpose-built infrastructure, as well as by generative AI indemnification.

Google Cloud provides you with the best tools for AI coding assistance so that your engineers can work happily and effectively. The market is also paying attention: based on its ability to execute and completeness of vision, Google Cloud has been named a Leader in the 2024 Gartner Magic Quadrant for AI Code Assistants.

Gemini Code Assist Enterprise Costs

Gemini Code Assist Enterprise normally costs $45 per user per month. Through a promotion available until March 31, 2025, it costs $19 per user per month with a one-year commitment.

Read more on Govindhtech.com


The 5 best open source website builders in 2024

Websites are created for many reasons, such as sharing information, selling products, or connecting with customers. Some websites are designed to build communities, share videos, or manage blogs. For businesses, a professional website is essential for connecting with clients and showing that they're a trustworthy company.

Having a well-designed website is crucial for a business's growth. A good website can even increase revenue: ESPN.com, for example, saw a 35% increase in revenue simply by redesigning its homepage.