#Data Pipeline Best Practices

Building Robust Data Pipelines: Best Practices for Seamless Integration

Learn the best practices for building and managing scalable data pipelines. Ensure seamless data flow, real-time analytics, and better decision-making with optimized pipelines.

Azure DevOps Training

Azure DevOps Training Programs

In today's rapidly evolving tech landscape, mastering Azure DevOps has become indispensable for organizations aiming to streamline their software development and delivery processes. As businesses increasingly migrate their operations to the cloud, the demand for skilled professionals proficient in Azure DevOps continues to soar. In this comprehensive guide, we'll delve into the significance of Azure DevOps training and explore the myriad benefits it offers to both individuals and enterprises.

Understanding Azure DevOps:

Before we delve into Azure DevOps training, let's first look at Azure DevOps itself. Azure DevOps is a Microsoft suite of services that supports collaboration, automation, and orchestration across the entire software development lifecycle. Its main services are Azure Boards (planning and work tracking), Azure Repos (Git repositories), Azure Pipelines (CI/CD), Azure Test Plans (testing), and Azure Artifacts (package feeds). From planning and coding to building, testing, and deployment, Azure DevOps provides a unified platform for managing and executing these DevOps tasks.

Why Azure DevOps Training Matters:

With Azure DevOps emerging as the cornerstone of modern DevOps practices, acquiring proficiency in this domain has become imperative for IT professionals seeking to stay ahead of the curve. Azure DevOps training equips individuals with the knowledge and skills necessary to leverage Microsoft Azure's suite of tools effectively. Whether you're a developer, IT administrator, or project manager, undergoing Azure DevOps training can significantly enhance your career prospects and empower you to drive innovation within your organization.

Key Components of Azure DevOps Training Programs:

Azure DevOps training programs are meticulously designed to cover a wide array of topics essential for mastering the intricacies of Azure DevOps. From basic concepts to advanced techniques, these programs encompass the following key components:

Azure DevOps Fundamentals: An in-depth introduction to Azure DevOps, including its core features, functionalities, and architecture.

Agile Methodologies: Understanding Agile principles and practices, and how they align with Azure DevOps for efficient project management and delivery.

Continuous Integration (CI): Learning to automate the process of integrating code changes into a shared repository, thereby enabling early detection of defects and ensuring software quality.

Continuous Deployment (CD): Exploring the principles of continuous deployment and mastering techniques for automating the deployment of applications to production environments.

Azure Pipelines: Harnessing the power of Azure Pipelines for building, testing, and deploying code across diverse platforms and environments.

Infrastructure as Code (IaC): Leveraging Infrastructure as Code principles to automate the provisioning and management of cloud resources using tools like Azure Resource Manager (ARM) templates.

Monitoring and Logging: Implementing robust monitoring and logging solutions to gain insights into application performance and troubleshoot issues effectively.

Security and Compliance: Understanding best practices for ensuring the security and compliance of Azure DevOps environments, including identity and access management, data protection, and regulatory compliance.

The Benefits of Azure DevOps Certification:

Obtaining a Microsoft DevOps certification, such as Microsoft Certified: DevOps Engineer Expert (exam AZ-400), not only validates your expertise in Azure DevOps but also demonstrates your commitment to continuous learning and professional development. Microsoft's certifications are recognized globally and can open doors to career opportunities in cloud computing, software development, and DevOps engineering.

Conclusion:

In conclusion, Azure DevOps training is indispensable for IT professionals looking to enhance their skills and stay relevant in today's dynamic tech landscape. By undergoing comprehensive Azure DevOps training programs and obtaining relevant certifications, individuals can unlock a world of opportunities and propel their careers to new heights. Whether you're aiming to streamline your organization's software delivery processes or embark on a rewarding career journey, mastering Azure DevOps is undoubtedly a game-changer. So why wait? Start your Azure DevOps training journey today and pave the way for a brighter tomorrow.

Business Potential with Advanced Data Engineering Solutions

In the age of information, businesses are swamped with data. The key to turning this data into valuable insights lies in effective data engineering. Advanced data engineering solutions help organizations harness their data to drive better decisions and outcomes.

Why Data Engineering Matters

Data engineering is fundamental to making sense of the vast amounts of data companies collect. It involves building and maintaining the systems needed to gather, store, and analyze data. With the right data engineering solutions, businesses can manage complex data environments and ensure their data is accurate and ready for analysis.

1. Seamless Data Integration and Transformation

Handling data from various sources—like databases, cloud services, and third-party apps—can be challenging. Data engineering services streamline this process by integrating and transforming data into a unified format. This includes extracting data, cleaning it, and making it ready for in-depth analysis.
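
As a rough illustration of integration and transformation, here is a minimal Python sketch. The two sources (`crm` and `billing`), their field names, and their date formats are hypothetical; each source gets a field mapping that renames and parses its columns into one unified schema, and records are de-duplicated on `customer_id`.

```python
from datetime import datetime

# Hypothetical per-source mappings: unified field -> (source field, parser).
SOURCES = {
    "crm": {
        "customer_id": ("CustomerID", int),
        "email": ("Email", lambda s: s.strip().lower()),
        "signup_date": ("SignupDate", lambda s: datetime.strptime(s, "%Y-%m-%d").date()),
    },
    "billing": {
        "customer_id": ("cust_id", int),
        "email": ("email", lambda s: s.strip().lower()),
        "signup_date": ("signed_up", lambda s: datetime.strptime(s, "%d/%m/%Y").date()),
    },
}


def transform(source, row):
    """Rename and parse one raw row into the unified schema."""
    mapping = SOURCES[source]
    return {field: parse(row[src]) for field, (src, parse) in mapping.items()}


def integrate(batches):
    """Merge rows from every source, keeping the first record seen per customer_id."""
    unified = {}
    for source, rows in batches.items():
        for row in rows:
            record = transform(source, row)
            unified.setdefault(record["customer_id"], record)
    return list(unified.values())
```

Keeping the per-source mappings as data, rather than as separate functions, means onboarding a new source is mostly a matter of adding one more mapping entry.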

2. Efficient Data Pipeline Development

Data pipelines automate the flow of information from its source to its destination. Reliable and scalable pipelines can handle large volumes of data, ensuring continuous updates and availability. This leads to quicker and more informed business decisions.
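
A pipeline can be sketched as a chain of stages that each consume and yield records. The minimal example below uses Python generators, so rows stream through one at a time instead of being loaded into memory all at once; the CSV layout (`order_id`, `amount`) and the in-memory `sink` list are assumptions standing in for a real source and destination.

```python
import csv
from typing import Iterable, Iterator


def extract(lines: Iterable[str]) -> Iterator[dict]:
    """Source stage: lazily parse CSV lines (header first) into dicts."""
    yield from csv.DictReader(lines)


def clean(rows: Iterable[dict]) -> Iterator[dict]:
    """Transform stage: drop rows with no amount and store amounts as integer cents."""
    for row in rows:
        if row.get("amount"):
            yield {
                "order_id": row["order_id"],
                "amount_cents": round(float(row["amount"]) * 100),
            }


def load(rows: Iterable[dict], sink: list) -> int:
    """Destination stage: append rows to the sink and return how many were written."""
    written = 0
    for row in rows:
        sink.append(row)
        written += 1
    return written
```

Wiring the stages together is a single expression, `load(clean(extract(lines)), sink)`; because every stage is a generator, nothing is read until `load` starts pulling rows.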

3. Centralized Data Warehousing

A data warehouse serves as a central repository for storing data from multiple sources. State-of-the-art data warehousing solutions keep data organized and accessible, improving data management and boosting the efficiency of analysis and reporting.
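
To make the idea concrete, the sketch below loads rows from two hypothetical sources into a single fact table and queries across them. It uses Python's built-in `sqlite3` module purely as a stand-in for a real data warehouse; the `fact_sales` table and its columns are illustrative assumptions.

```python
import sqlite3


def load_sales(conn, sales_by_source):
    """Load (region, amount) rows from several sources into one fact table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales ("
        " source TEXT NOT NULL, region TEXT NOT NULL, amount REAL NOT NULL)"
    )
    for source, rows in sales_by_source.items():
        conn.executemany(
            "INSERT INTO fact_sales (source, region, amount) VALUES (?, ?, ?)",
            [(source, region, amount) for region, amount in rows],
        )
    conn.commit()


def revenue_by_region(conn):
    """One query spans every source because the data lives in one place."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM fact_sales GROUP BY region ORDER BY region"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sales(conn, {"web": [("EU", 100.0), ("US", 50.0)], "store": [("EU", 25.0)]})
    print(revenue_by_region(conn))
```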

4. Ensuring Data Quality and Governance

For accurate analytics, maintaining high data quality is crucial. Rigorous data validation and cleansing processes, coupled with strong governance frameworks, ensure that data remains reliable, compliant with regulations, and aligned with best practices. This fosters confidence in business decisions.
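
Validation rules are often expressed as small, named checks applied to every record. This minimal sketch, assuming a hypothetical record shape with `id`, `email`, and `age` fields, routes failing records to a rejected list along with the names of the rules they broke, so they can be reviewed rather than silently dropped.

```python
# Hypothetical rule set: each named rule returns True when a record passes.
RULES = {
    "id_present": lambda r: r.get("id") is not None,
    "email_has_at": lambda r: "@" in (r.get("email") or ""),
    "age_in_range": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
}


def validate(records):
    """Split records into valid ones and (record, failed_rule_names) pairs."""
    valid, rejected = [], []
    for record in records:
        failures = [name for name, rule in RULES.items() if not rule(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected
```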

5. Scalable and Adaptable Solutions

As businesses grow, their data needs evolve. Scalable data engineering solutions can expand and adapt to changing requirements. Whether dealing with more data or new sources, these solutions are designed to keep pace with business growth.

The Advantages of Working with a Data Engineering Expert

Teaming up with experts in data engineering offers several key benefits:

1. Expertise You Can Rely On

Experienced data engineering professionals bring a high level of expertise to the table. They are adept at the latest technologies and practices, ensuring businesses receive top-notch solutions tailored to their needs.

2. Tailored Solutions

Every business has unique data challenges. Customized data engineering solutions are designed to meet specific requirements, ensuring you get the most out of your data investments.

3. Cutting-Edge Technology

Leveraging the latest technologies and tools ensures innovative data engineering solutions that keep you ahead of the curve. This commitment to technology provides effective data management and analytics practices.

4. Improved Data Accessibility and Insights

Advanced data engineering solutions enhance how you access and interpret your data. By streamlining processes like data integration and storage, they make it easier to generate actionable insights and make informed decisions.

Conclusion

In a world where data is a critical asset, having robust data engineering solutions is essential for maximizing its value. Comprehensive services that cover all aspects of data engineering—from integration and pipeline development to warehousing and quality management—can transform your data practices, improve accessibility, and unlock powerful insights that drive your business forward.

Securing and Monitoring Your Data Pipeline: Best Practices for Kafka, AWS RDS, Lambda, and API Gateway Integration

http://securitytc.com/T3Rgt9

Intermediate Machine Learning: Advanced Strategies for Data Analysis

Introduction:

Welcome to the intermediate machine learning course! In this article, we'll delve into advanced strategies for data analysis that will take your understanding of machine learning to the next level. Whether you're a budding data scientist or a seasoned professional looking to refine your skills, this course will equip you with the tools and techniques necessary to tackle complex data challenges.

Understanding Intermediate Machine Learning:

Before diving into advanced strategies, let's clarify what we mean by intermediate machine learning. At this stage, you should already have a basic understanding of machine learning concepts such as supervised and unsupervised learning, feature engineering, and model evaluation. Intermediate machine learning builds upon these fundamentals, exploring more sophisticated algorithms and techniques.

Exploratory Data Analysis (EDA):

EDA is a critical first step in any data analysis project. In this section, we'll discuss advanced EDA techniques such as correlation analysis, outlier detection, and dimensionality reduction. By thoroughly understanding the structure and relationships within your data, you'll be better equipped to make informed decisions throughout the machine learning process.
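
Two of these EDA techniques can be sketched with Python's standard library alone: Pearson correlation between two numeric columns, and outlier detection with the interquartile range (IQR) rule, which flags values more than `k` times the IQR below the first quartile or above the third. The `k=1.5` default is a common convention, not a fixed rule; dimensionality reduction (e.g. PCA) is left to libraries such as scikit-learn.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

In practice pandas or NumPy would run these computations over whole tables; the sketch only shows what is being computed.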

Feature Engineering:

Feature engineering is the process of transforming raw data into a format that is suitable for machine learning algorithms. In this intermediate course, we'll explore advanced feature engineering techniques such as polynomial features, interaction terms, and feature scaling. These techniques can help improve the performance and interpretability of your machine learning models.
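
Here is a minimal pure-Python sketch of two of these transformations: z-score standardization (subtract the mean, divide by the standard deviation) and degree-2 polynomial expansion, which appends squared terms and pairwise interaction terms. scikit-learn provides production versions as `StandardScaler` and `PolynomialFeatures`; the functions below are simplified illustrations, not those APIs.

```python
import math
from itertools import combinations_with_replacement


def standardize(column):
    """z-score scaling using the population standard deviation."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((x - mean) ** 2 for x in column) / len(column))
    if std == 0:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(x - mean) / std for x in column]


def polynomial_features(row, degree=2):
    """Original features plus every product of 2..degree features (squares and interactions)."""
    features = list(row)
    for d in range(2, degree + 1):
        for combo in combinations_with_replacement(range(len(row)), d):
            features.append(math.prod(row[i] for i in combo))
    return features
```

For a row `[a, b]`, the degree-2 expansion is `[a, b, a*a, a*b, b*b]`. When scaling, compute the mean and standard deviation on the training data only and reuse them for validation and test data.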

Model Selection and Evaluation:

Choosing the right model for your data is crucial for achieving optimal performance. In this section, we'll discuss advanced model selection techniques such as cross-validation, ensemble methods, and hyperparameter tuning. By systematically evaluating and comparing different models, you can identify the most suitable approach for your specific problem.
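
k-fold cross-validation splits the data into k folds, trains on k-1 of them, and scores on the held-out fold, rotating through all k. The sketch below implements that loop in plain Python; `fit` and `score` are hypothetical callables you supply, and the helper names are illustrative rather than any library's API.

```python
import random
from statistics import fmean


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs; each index lands in exactly one test fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def cv_mean_score(fit, score, X, y, k=5, seed=0):
    """Average held-out score of fit(X_train, y_train) across k folds."""
    scores = []
    for train, test in k_fold_indices(len(X), k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        scores.append(score(model, [X[i] for i in test], [y[i] for i in test]))
    return fmean(scores)
```

Hyperparameter tuning then amounts to calling `cv_mean_score` once per candidate setting and keeping the best-scoring one.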

Handling Imbalanced Data:

Imbalanced data occurs when one class is significantly more prevalent than others, which biases models toward the majority class. In this course, we'll explore advanced techniques for handling imbalanced data, such as resampling methods, cost-sensitive learning, and ensemble techniques. These strategies can improve performance on the minority class, which a high overall accuracy can easily hide, and make your models more robust in real-world scenarios.
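
Two of these techniques are easy to sketch in Python: random oversampling, which duplicates minority-class examples until every class matches the majority count, and class weights for cost-sensitive learning. The weight formula `n_samples / (n_classes * class_count)` is the same heuristic scikit-learn uses for `class_weight="balanced"`; the rest of the code is an illustrative assumption.

```python
import random
from collections import Counter


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority-class rows until all classes match the largest."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, count in counts.items():
        members = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - count):
            X_out.append(rng.choice(members))
            y_out.append(label)
    return X_out, y_out


def balanced_class_weights(y):
    """Cost-sensitive weights: rarer classes get proportionally larger weights."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {label: n_samples / (n_classes * c) for label, c in counts.items()}
```

Resample only the training split, after splitting: oversampling before the split leaks duplicated rows into the evaluation data and inflates scores.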

Advanced Algorithms:

In addition to traditional machine learning algorithms such as linear regression and decision trees, there exists a wide range of advanced algorithms that are well-suited for complex data analysis tasks. In this section, we'll explore algorithms such as support vector machines, random forests, and gradient boosting machines. Understanding these algorithms and their underlying principles will expand your toolkit for solving diverse data challenges.

Interpretability and Explainability:

As machine learning models become increasingly complex, it's essential to ensure that they are interpretable and explainable. In this course, we'll discuss advanced techniques for model interpretability, such as feature importance analysis, partial dependence plots, and model-agnostic explanations. These techniques can help you gain insights into how your models make predictions and build trust with stakeholders.
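
Permutation importance is one model-agnostic way to measure feature importance: shuffle one feature's column, re-score the model, and record how much the error grows. The sketch below assumes a `predict` function that takes one row (a list of numbers) and an error `metric` where lower is better; scikit-learn offers a fuller version in `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Mean increase in error when each feature column is shuffled (higher = more important)."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Replace feature j with the shuffled values, leaving other features intact.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(metric(y, [predict(row) for row in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances
```

A feature the model ignores scores exactly zero, because shuffling it cannot change any prediction.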

Deploying Machine Learning Models:

Deploying machine learning models into production requires careful consideration of factors such as scalability, reliability, and security. In this section, we'll explore advanced deployment strategies, such as containerization, model versioning, and continuous integration/continuous deployment (CI/CD) pipelines. By following best practices for model deployment, you can ensure that your machine learning solutions deliver value in real-world environments.

Practical Case Studies:

To reinforce your understanding of intermediate machine learning concepts, we'll conclude this course with practical case studies that apply these techniques to real-world datasets. By working through these case studies, you'll gain hands-on experience in applying advanced strategies to solve complex data analysis problems.

Conclusion:

Congratulations on completing the intermediate machine learning course! By mastering advanced strategies for data analysis, you're well-equipped to tackle a wide range of machine learning challenges with confidence. Remember to continue practicing and experimenting with these techniques to further enhance your skills as a data scientist. Happy learning!

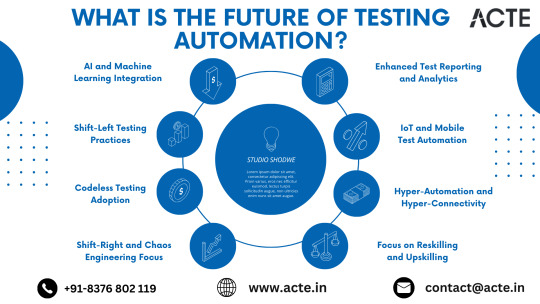

Charting the Course for Tomorrow's Testing Revolution: A Glimpse into the Future of Test Automation

In the ever-shifting landscape of software testing, the future is on the brink of a transformative revolution, driven by technological innovations and paradigm shifts. As we peer into the horizon of test automation, a realm of exciting developments unfolds, promising a testing landscape where adaptability and innovation take center stage.

1. The Rise of Smart Testing with AI and Machine Learning: The integration of Artificial Intelligence (AI) and Machine Learning (ML) into test automation marks a paradigm shift. Testing tools, infused with intelligence, will evolve to dynamically adapt, optimize scripts, and autonomously discern patterns. The era of intelligent and responsive test automation is on the horizon.

2. Embracing Shift-Left Testing Practices: The industry's commitment to a shift-left approach continues to gain momentum. Test automation seamlessly integrates into CI/CD pipelines, fostering swift feedback loops and hastening release cycles. Early testing in the development lifecycle transforms from a best practice into a fundamental aspect of agile methodologies.

3. Codeless Testing: Empowering Beyond Boundaries: Codeless testing tools take center stage, breaking down barriers and democratizing the testing process. Individuals with diverse technical backgrounds can actively contribute, fostering collaboration among developers, testers, and business stakeholders. The inclusivity of codeless testing reshapes the collaborative dynamics of testing efforts.

4. Navigating Production Realms with Shift-Right and Chaos Engineering: Test automation extends beyond development environments with a pronounced shift-right approach. Venturing into production environments, automation tools provide real-time insights, ensuring application reliability in live scenarios. Simultaneously, Chaos Engineering emerges as a pivotal practice, stress-testing system resiliency.

5. Unveiling Advanced Test Reporting and Analytics: Test automation tools evolve beyond mere script execution, offering enhanced reporting and advanced analytics. Deep insights into testing trends empower teams with actionable data, enriching decision-making processes and optimizing the overall testing lifecycle.

6. IoT and Mobile Testing Evolution: With the pervasive growth of the Internet of Things (IoT) and mobile applications, test automation frameworks adapt to meet the diverse testing needs of these platforms. Seamless integration with mobile and IoT devices becomes imperative, ensuring comprehensive test coverage across a spectrum of devices.

7. Hyper-Automation and Seamless Connectivity: The concept of hyper-automation takes center stage, amalgamating multiple automation technologies for advanced testing practices. Automation tools seamlessly connect with diverse ecosystems, creating a hyper-connected testing landscape. This interconnectedness ushers in a holistic approach, addressing the intricacies of modern software development.

8. The Imperative of Continuous Reskilling and Upskilling: Testers of the future embrace a culture of continuous learning. Proficiency in scripting languages, understanding AI and ML concepts, and staying updated with the latest testing methodologies become imperative. The ability to adapt and upskill becomes the linchpin of a successful testing career.

In conclusion, the future of test automation unveils a dynamic and revolutionary journey. Testers and organizations at the forefront of embracing emerging technologies and agile methodologies will not only navigate but thrive in this evolving landscape. As the synergy between human expertise and automation technologies deepens, the future promises to unlock unprecedented possibilities in the realm of software quality assurance.

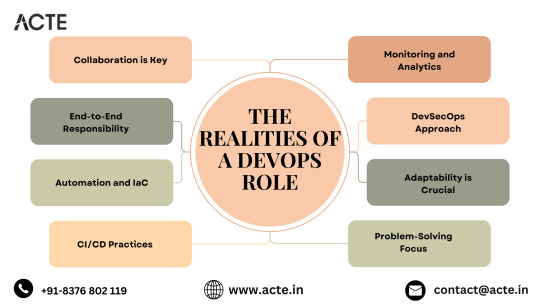

Mastering the DevOps Landscape: A Comprehensive Exploration of Roles and Responsibilities

Embarking on a career in DevOps opens the door to a dynamic and collaborative experience centered around optimizing software development and delivery. This multifaceted role demands a unique blend of technical acumen and interpersonal skills. Let's delve into the intricate details that define the landscape of a DevOps position, exploring the diverse aspects that contribute to its dynamic nature.

1. The Synergy of Collaboration: At the core of DevOps lies a strong emphasis on collaboration. DevOps professionals navigate the intricacies of working closely with development, operations, and cross-functional teams, fostering effective communication and teamwork to ensure an efficient software development lifecycle.

2. Orchestrating End-to-End Excellence: DevOps practitioners shoulder the responsibility for the entire software delivery pipeline. From coding and testing to deployment and monitoring, they orchestrate a seamless and continuous workflow, responding promptly to changes and challenges, resulting in faster and more reliable software delivery.

3. The Automation Symphony and IaC Choreography: Automation takes center stage in DevOps practices. DevOps professionals automate repetitive tasks, enhancing efficiency and reliability. Infrastructure as Code (IaC) adds another layer, facilitating consistent and scalable infrastructure management and further streamlining the deployment process.

4. Unveiling the CI/CD Ballet: Continuous Integration (CI) and Continuous Deployment (CD) practices form the elegant dance of DevOps. This choreography emphasizes regular integration, testing, and automated deployment, effectively identifying and addressing issues in the early stages of development.

5. Monitoring and Analytics Spotlight: DevOps professionals shine a spotlight on monitoring and analytics, utilizing specialized tools to track applications and infrastructure. This data-driven approach enables proactive issue resolution and optimization, contributing to the overall health and efficiency of the system.

6. The Art of DevSecOps: Security is not a mere brushstroke; it's woven into the canvas of DevOps through the DevSecOps approach. Collaboration with security teams ensures that security measures are seamlessly integrated throughout the software development lifecycle, fortifying the system against vulnerabilities.

7. Navigating the Ever-Evolving Terrain: Adaptability is the compass guiding DevOps professionals through the ever-evolving landscape. Staying abreast of emerging technologies, industry best practices, and evolving methodologies is crucial for success in this dynamic environment.

8. Crafting Solutions with Precision: DevOps professionals are artisans in problem-solving. They skillfully troubleshoot issues, identify root causes, and implement solutions to enhance system reliability and performance, contributing to a resilient and robust software infrastructure.

9. On-Call Symphony: In some organizations, DevOps professionals play a role in an on-call rotation. This symphony involves addressing operational issues beyond regular working hours, underscoring the commitment to maintaining system stability and availability.

10. The Ongoing Learning Odyssey: DevOps is an ever-evolving journey of learning. DevOps professionals actively engage in ongoing skill development, participate in conferences, and connect with the broader DevOps community to stay at the forefront of the latest trends and innovations, ensuring they remain masters of their craft.

In essence, a DevOps role is a voyage into the heart of modern software development practices. With a harmonious blend of technical prowess, collaboration, and adaptability, DevOps professionals navigate the landscape, orchestrating excellence in the efficient and reliable delivery of cutting-edge software solutions.

Going Over the Cloud: An Investigation into the Architecture of Cloud Solutions

Because the cloud offers unprecedented scalability, flexibility, and accessibility, it has fundamentally changed how we approach technology in the digital era. As more businesses move their infrastructure to the cloud, understanding the architecture of cloud solutions becomes imperative. Join me as we examine the core concepts, industry best practices, and transformative impact of cloud architecture on modern enterprises.

The Basics of Cloud Solution Architecture

A well-designed architecture that balances dependability, performance, and cost-effectiveness is the foundation of any successful cloud deployment. Cloud solution architecture spans many areas, including networking, compute, storage, security, and scalability. By designing solutions tailored to the requirements of each workload, organizations can optimize return on investment and make full use of the cloud.

Flexibility and Resilience in Design

One of cloud computing's distinguishing characteristics is its ability to scale resources on demand to meet varying workloads while maintaining consistent performance. Cloud solution architects build resilient systems that can withstand failures and sustain uptime by applying fault-tolerant design principles, load balancing, and auto-scaling. Distributing workloads across several availability zones and regions increases fault tolerance and reduces the impact of outages.
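
Load balancing across failure domains can be illustrated with a toy round-robin balancer that skips backends marked unhealthy. This is a minimal Python sketch under simplifying assumptions (in-process health state, hypothetical backend names such as `az-a`); real cloud load balancers run their own health checks.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy round-robin load balancer that skips backends marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Try each backend at most once per call before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

When one zone's backend is marked down, traffic simply rotates among the remaining healthy ones, which is the behavior that lets a multi-zone deployment ride out a single-zone outage.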

Protection of Data in the Cloud and Security by Design

As data breaches become more common, security is a top priority in cloud solution architecture. Architects follow a multi-layered security strategy, building identity management, access controls, encryption, and monitoring into their designs. By following industry standards and best practices, such as the shared responsibility model and compliance frameworks, organizations can safeguard confidential information and maintain regulatory compliance in the cloud.

Using Managed Services to Increase Productivity

Cloud service providers offer a variety of managed services, including serverless computing, machine learning, databases, and analytics, that streamline operations and reduce the burden of maintaining infrastructure. These services let firms focus on innovation instead of infrastructure maintenance. By selecting the right mix of managed services for cloud-native applications, architects can reduce costs, shorten time-to-market, and optimize performance.

Cost Control and Ongoing Optimization

Cost optimization is essential, because inefficient resource use can quickly drive up spending. Architects use tools and techniques to monitor resource utilization, analyze cost trends, and identify opportunities for optimization. Businesses can cut waste and get the most out of their cloud spending by using spot instances, reserved instances, and cost allocation tags.
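
Cost allocation tags pay off when spending is rolled up by tag. The sketch below assumes hypothetical billing line items of the form `(resource_id, cost, tags)` and hypothetical CPU-utilization samples between 0 and 1; it totals cost per tag value and flags resources whose average utilization falls below a threshold as rightsizing candidates.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per value of one tag; untagged spend is grouped under 'untagged'."""
    totals = defaultdict(float)
    for _resource_id, cost, tags in line_items:
        totals[tags.get(tag_key, "untagged")] += cost
    return dict(totals)


def underutilized(cpu_samples, threshold=0.10):
    """Resource ids whose mean CPU utilization is below threshold (rightsizing candidates)."""
    return sorted(
        rid for rid, samples in cpu_samples.items() if sum(samples) / len(samples) < threshold
    )
```

A large "untagged" bucket is itself a useful signal: it shows how much spending cannot yet be attributed to any team or project.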

Embracing Automation and DevOps

Automation and DevOps practices are important elements of cloud solution design, enabling companies to deliver software more rapidly, reliably, and efficiently. Architects create pipelines for continuous integration, delivery, and deployment, which speed up the software development process and allow rapid iteration. By provisioning and managing infrastructure programmatically with Infrastructure as Code (IaC) and configuration management tools, teams can minimize manual effort and ensure consistency across environments.

Multi-Cloud and Hybrid Strategies

In an increasingly interconnected world, many firms adopt hybrid and multi-cloud strategies to combine the benefits of multiple cloud providers with their on-premises infrastructure. Cloud solution architects must design systems that integrate these environments while ensuring interoperability, data consistency, and regulatory compliance. With hybrid connectivity options such as VPNs, AWS Direct Connect, or Azure ExpressRoute, organizations can build hybrid deployments that combine the best aspects of the public cloud and on-premises data centers.

Analytics and Data Management

Modern organizations depend on data because it drives innovation and informed decision-making. Cloud solution architects design data management and analytics solutions that let organizations gather, store, process, and analyze large volumes of data. By using cloud-native data services such as data warehouses, data lakes, and real-time analytics platforms, organizations can extract valuable insights and gain a competitive advantage in their industries. Architects also implement data governance frameworks and privacy-enhancing technologies to comply with data protection rules and safeguard sensitive information.

Computing Without a Server

Serverless computing, a significant shift in cloud architecture, frees organizations to focus on building applications rather than provisioning or managing servers. Cloud solution architects build serverless programs using event-driven architectures and Function-as-a-Service (FaaS) platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. By abstracting away the underlying infrastructure, serverless architectures offer automatic scaling, pay-per-use pricing, and agility, helping companies innovate quickly and change course without paying for idle capacity.
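
For a sense of what FaaS code looks like, here is a minimal AWS Lambda handler in Python for an API Gateway proxy-integration event. The function name `lambda_handler` and the `name` query parameter are assumptions for illustration (the handler name is whatever you configure); the response dictionary with `statusCode`, `headers`, and `body` is the shape the proxy integration expects.

```python
import json


def lambda_handler(event, context):
    """Sketch of a handler for an API Gateway proxy-integration event."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary as `event` and `None` as `context`, without deploying anything.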

Conclusion

As we close our investigation into cloud solution architecture, it is evident that the cloud is more than a technology platform; it is a force for innovation and transformation. By embracing scalability, resilience, security, and efficiency, organizations can seize new opportunities, drive business expansion, and preserve their competitive edge in today's rapidly evolving digital market. Whether you are developing a new cloud-native application or starting a cloud migration, sound cloud solution architecture is key to success.

The Age of Python: Unlocking the Potential of Programming

Introduction:

Python has become a powerful force in the ever-changing world of programming languages, influencing how developers approach software development. The Python era is distinguished by the language's adaptability, ease of use, and vast ecosystem supporting a wide range of applications. From web programming to artificial intelligence, Python has established itself as a top choice for developers worldwide. In this post, we examine the traits that characterize the Python era and its influence on the programming community. Learn Python with Uncodemy, which provides the best Python course in Noida, and become part of this powerful force.

Versatility and Simplicity:

Python stands out in large part because of its adaptability. As a general-purpose language, it serves developers in a wide variety of fields. Its syntax is easy to learn and understand: straightforward, concise, and close to plain English. This simplicity has attracted both novice and experienced developers and fostered a thriving, diverse community.

Community and Collaboration:

The Python community is known for being open-minded and cooperative. Developers around the world keep improving Python by creating libraries, frameworks, and tools, and this collaborative culture has produced a large ecosystem of readily accessible resources. The community offers a helpful atmosphere for users of every expertise level, whether you are a novice seeking advice or an experienced developer searching for answers.

Web Development with Django and Flask:

Frameworks such as Django and Flask have helped make Python a major force in web development. Django, a high-level web framework, follows a "batteries-included" design that speeds up development. Flask, in contrast, is a lightweight, modular framework that lets developers pick the components that best suit their needs. These frameworks have made it easier to build dependable and scalable web applications, helping Python gain traction in the web development industry.

Data Science and Machine Learning:

Python is especially strong in data science and machine learning. Libraries such as NumPy, pandas, and matplotlib, which handle data manipulation, analysis, and visualization, have become core parts of the data science toolkit. Two powerful machine learning frameworks, TensorFlow and PyTorch, have cemented Python's place in artificial intelligence. Python's simple syntax lets data scientists and machine learning engineers concentrate on the nuances of their models instead of wrestling with complicated code.

Automation and Scripting:

Python's flexibility makes it a great choice for tasks ranging from simple scripts to intricate automation workflows. Its readable, succinct syntax makes automation scripts easy to write and easy to understand. Python has become a vital tool for streamlining operations: DevOps engineers use it to manage deployment pipelines, and system administrators use it to automate repetitive tasks.
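
A typical small automation task is summarizing log files. This sketch assumes a hypothetical log format in which the first whitespace-separated token of each line is the level (e.g. `INFO`, `ERROR`); it scans every matching file in a directory with `pathlib` and tallies the levels.

```python
from collections import Counter
from pathlib import Path


def count_log_levels(log_dir, pattern="*.log"):
    """Tally the first token of each non-blank line across all files matching pattern."""
    counts = Counter()
    for path in sorted(Path(log_dir).glob(pattern)):
        with path.open(encoding="utf-8") as fh:
            for line in fh:
                parts = line.split(maxsplit=1)
                if parts:  # skip blank lines
                    counts[parts[0]] += 1
    return counts


if __name__ == "__main__":
    import sys

    print(count_log_levels(sys.argv[1] if len(sys.argv) > 1 else "."))
```

Run from a cron job or a CI step, a script like this turns a manual "grep the logs" chore into a repeatable report.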

Education and Python Courses:

The popularity of Python has also raised the demand for Python classes from people who want to learn programming. For both novices and experts, Python courses offer an organized learning path that covers a variety of subjects, including syntax, data structures, algorithms, web development, and more. Many educational institutions in the Noida area provide Python classes that give a thorough and practical learning experience for anyone who wants to learn more about the language.

Open Source Development:

A major reason for Python's broad adoption has been its commitment to open-source development. The Python Software Foundation (PSF) holds the rights to the language and supports its community, while the core developers and their elected Steering Council guide its design, ensuring that programmers everywhere can continue to use it freely. This collaborative and transparent approach encourages creativity and lets developers contribute improvements, so the community actively shapes the language's development.

Cybersecurity and Ethical Hacking:

Python has become a standard language in ethical hacking and cybersecurity. Its ease of use and extensive library ecosystem make it a great option for building security tools and performing penetration testing. Python's adaptability lets security experts handle a wide variety of problems, and its role in system and network security keeps growing as cybersecurity becomes more important.
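A simple example of the security tooling mentioned above is a file-integrity check: record SHA-256 hashes of files you trust, then flag any file whose hash later changes or that disappears. This is a minimal sketch using only `hashlib` and `pathlib` from the standard library; the function names are hypothetical, and production tools (which must also protect the baseline itself) do considerably more.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in fixed-size chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths: list[Path]) -> dict[str, str]:
    """Record a known-good hash for each monitored file."""
    return {str(p): sha256_of(p) for p in paths}


def find_modified(baseline: dict[str, str]) -> list[str]:
    """List files that were deleted or whose contents no longer match the baseline."""
    changed = []
    for name, expected in baseline.items():
        path = Path(name)
        if not path.exists() or sha256_of(path) != expected:
            changed.append(name)
    return changed
```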

Startups and Entrepreneurship:

Python is a great option for startups and business owners due to its flexibility and rapid development cycles. Small teams can quickly prototype and create products thanks to the language's ease of learning, which reduces time to market. Additionally, companies may create complex solutions without having to start from scratch thanks to Python's large library and framework ecosystem. Python's ability to fuel creative ideas has been leveraged by numerous successful firms, adding to the language's standing as an engine for entrepreneurship.

Remote Collaboration and Cloud Computing:

Python's heyday aligns with a paradigm shift towards cloud computing and remote collaboration. Its smooth integration with cloud services and its support for asynchronous programming make it a good choice for building cloud-based apps. Python's readable syntax also helps remote and distributed teams collaborate effectively, which matters more as remote work grows. The language's compatibility with the major cloud providers further cements its position in the changing cloud computing landscape.
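To make the asynchronous-programming point concrete, here is a minimal sketch using the standard `asyncio` module. The `fetch` coroutine only simulates a cloud API call with `asyncio.sleep`; the service names and delays are made up. Because the waits overlap, the whole batch takes roughly as long as the slowest call rather than the sum of all three.

```python
import asyncio
import time


async def fetch(service: str, delay: float) -> str:
    """Simulate a network call; awaiting asyncio.sleep yields control just as real I/O would."""
    await asyncio.sleep(delay)
    return f"{service}: ok"


async def fetch_all() -> list[str]:
    """Start three simulated calls at once and wait for all of them."""
    results = await asyncio.gather(
        fetch("storage", 0.1),
        fetch("queue", 0.2),
        fetch("database", 0.3),
    )
    return list(results)  # gather returns results in argument order


def timed_run() -> tuple[list[str], float]:
    """Run the batch and report elapsed wall-clock time (about 0.3 s, not 0.6 s)."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all())
    return results, time.perf_counter() - start
```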

Continuous Development and Enhancement:

Python is still being developed; new features, enhancements, and optimizations are added on a regular basis. The maintainers of the language regularly solicit community input to keep Python current and adaptable to the changing needs of developers. Python's longevity and ability to stay at the forefront of technical breakthroughs can be attributed to this dedication to ongoing development.

The Future of Python:

The future of Python seems more promising than it has ever been. With improvements in concurrency, performance optimization, and support for future technologies, the language is still developing. Industry demand for Python expertise is rising, suggesting that the language's heyday is still very much alive. Python is positioned to be a key player in determining the direction of software development as emerging technologies like edge computing, quantum computing, and artificial intelligence continue to gain traction.

Conclusion:

To sum up, Python is a versatile language that is widely used in a variety of sectors and is developed by the community. Python is now a staple of contemporary programming, used in everything from artificial intelligence to web development. The language is a favorite among developers of all skill levels because of its simplicity and strong capabilities. The Python era invites you to a vibrant and constantly growing community, whatever your experience level with programming. Python courses in Noida offer a great starting place for anybody looking to start a learning journey into the broad and fascinating world of Python programming.

Source Link: https://teletype.in/@vijay121/Wj1LWvwXTgz


Python Full-Stack Developer Jobs

Introduction:

A Python full-stack developer is a professional who has expertise in both front-end and back-end development using Python as their primary programming language. This means they are skilled in building web applications from the user interface to the server-side logic and the database. Here’s some information about Python full-stack developer jobs.

Job Responsibilities:

Front-End Development: Python full-stack developers are responsible for creating and maintaining the user interface of a web application. This involves using front-end technologies like HTML, CSS, JavaScript, and various frameworks like React, Angular, or Vue.js.

Back-End Development: They also work on the server side of the application, managing databases, handling HTTP requests, and building the application’s logic. Python, along with frameworks like Django, Flask, or FastAPI, is commonly used for back-end development.

Database Management: Full-stack developers often work with databases like PostgreSQL, MySQL, or NoSQL databases like MongoDB to store and retrieve data.

API Development: Creating and maintaining APIs for communication between the front-end and back-end systems is a crucial part of the job. RESTful and GraphQL APIs are commonly used.

Testing and Debugging: Full-stack developers are responsible for testing and debugging their code to ensure the application’s functionality and security.

Version Control: Using version control systems like Git to track changes and collaborate with other developers.

Deployment and DevOps: Deploying web applications on servers, configuring server environments, and implementing continuous integration/continuous deployment (CI/CD) pipelines.

Security: Ensuring the application is secure by implementing best practices and security measures to protect against common vulnerabilities.
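The back-end, database, and API duties above can be seen together in a very small service. The sketch below deliberately avoids Django, Flask, and FastAPI so that it runs with the standard library alone: `sqlite3` provides an in-memory database and a plain WSGI callable returns JSON. The `products` table, the `/products` route, and the `create_db`/`make_app` helpers are hypothetical.

```python
import json
import sqlite3


def create_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, hypothetical products table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.executemany("INSERT INTO products (name) VALUES (?)", [("keyboard",), ("monitor",)])
    conn.commit()
    return conn


def make_app(conn: sqlite3.Connection):
    """Return a WSGI application that exposes GET /products as a JSON list."""

    def app(environ, start_response):
        if environ.get("REQUEST_METHOD") == "GET" and environ.get("PATH_INFO") == "/products":
            rows = conn.execute("SELECT id, name FROM products ORDER BY id").fetchall()
            payload = [{"id": row_id, "name": name} for row_id, name in rows]
            status = "200 OK"
        else:
            payload = {"error": "not found"}
            status = "404 Not Found"
        body = json.dumps(payload).encode("utf-8")
        start_response(status, [
            ("Content-Type", "application/json"),
            ("Content-Length", str(len(body))),
        ])
        return [body]

    return app
```

For local experiments, `wsgiref.simple_server.make_server("127.0.0.1", 8000, make_app(create_db())).serve_forever()` serves it over HTTP; a real project would typically use one of the frameworks named above and a production server.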

Skills and Qualifications:

To excel in a Python full-stack developer role, you should have the following skills and qualifications:

Proficiency in Python programming.

Strong knowledge of front-end technologies (HTML, CSS, JavaScript) and frameworks.

Expertise in back-end development using Python and relevant web frameworks.

Experience with databases and data modeling.

Knowledge of version control systems (e.g., Git).

Familiarity with web servers and deployment.

Understanding of web security and best practices.

Problem-solving and debugging skills.

Collaboration and teamwork.

Continuous learning and staying up to date with the latest technologies and trends.

Job Opportunities:

Python full-stack developers are in demand in various industries, including web development agencies, e-commerce companies, startups, and large enterprises. Job titles you might come across include Full-Stack Developer, Python Developer, Web Developer, or Software Engineer.

The job market for Python full-stack developers is generally favorable, and these professionals can expect competitive salaries, particularly with experience and a strong skill set. Many companies appreciate the versatility of full-stack developers who can work on both the front-end and back-end aspects of their web applications.

To find Python full-stack developer job opportunities, you can check job boards, company career pages, and professional networking sites like LinkedIn. Additionally, you can work with recruitment agencies specializing in tech roles or attend tech job fairs and conferences to network with potential employers.

Python full stack developer jobs offer a range of advantages to those who pursue them. Here are some of the key advantages of working as a Python full stack developer:

Versatility: Python is a versatile programming language, and as a full stack developer, you can work on both front-end and back-end development, as well as other aspects of web development. This versatility allows you to work on a wide range of projects and tasks.

High demand: Python is one of the most popular programming languages, and there is a strong demand for Python full stack developers. This high demand leads to ample job opportunities and competitive salaries.

Job security: With the increasing reliance on web and mobile applications, the demand for full stack developers is expected to remain high. This job security provides a sense of stability and long-term career prospects.

Wide skill set: As a full stack developer, you gain expertise in various technologies and frameworks for both front-end and back-end development, including Django, Flask, JavaScript, HTML, CSS, and more. This wide skill set makes you a valuable asset to any development team.

Collaboration: Full stack developers often work closely with both front-end and back-end teams, fostering collaboration and communication within the development process. This can lead to a more holistic understanding of projects and better teamwork.

Problem-solving: Full stack developers often encounter challenges that require them to think critically and solve complex problems. This aspect of the job can be intellectually stimulating and rewarding.

Learning opportunities: The tech industry is constantly evolving, and full stack developers have the opportunity to continually learn and adapt to new technologies and tools. This can be personally fulfilling for those who enjoy ongoing learning.

Competitive salaries: Python full stack developers are typically well-compensated due to their valuable skills and the high demand for their expertise. Salaries can vary based on experience, location, and the specific organization.

Entrepreneurial opportunities: With the knowledge and skills gained as a full stack developer, you can also consider creating your own web-based projects or startup ventures. Python’s ease of use and strong community support can be particularly beneficial in entrepreneurial endeavors.

Remote work options: Many organizations offer remote work opportunities for full stack developers, allowing for greater flexibility in terms of where you work. This can be especially appealing to those who prefer a remote or freelance lifestyle.

Open-source community: Python has a vibrant and active open-source community, which means you can easily access a wealth of libraries, frameworks, and resources to enhance your development projects.

Career growth: As you gain experience and expertise, you can advance in your career and explore specialized roles or leadership positions within development teams or organizations.

Conclusion:

Python full stack developer jobs offer a combination of technical skills, career stability, and a range of opportunities in the tech industry. If you enjoy working on both front-end and back-end aspects of web development and solving complex problems, this career path can be a rewarding choice.

Thanks for reading; we hope you liked the article. If you want to take the Full Stack Master's course at our institute, please attend our live demo sessions or contact us at +918464844555. We provide the best Online Full Stack Developer Course in Hyderabad with an affordable fee structure.


Top Artificial Intelligence and Machine Learning Company

In the rapidly evolving landscape of technology, artificial intelligence and machine learning have emerged as the driving forces behind groundbreaking innovations. Enterprises and industries across the globe are recognizing the transformative potential of AI and ML to solve complex challenges, enhance efficiency, and revolutionize processes.

At the forefront of this revolution stands our cutting-edge AI and ML company, dedicated to pushing the boundaries of what is possible through data-driven solutions.

Company Vision and Mission

Our AI and ML company was founded with a clear vision: to empower businesses and individuals with intelligent, data-centric solutions that optimize operations and fuel innovation.

Our mission is to bridge the gap between traditional practices and the possibilities of AI and ML. We are committed to delivering superior value to our clients by leveraging the immense potential of AI and ML algorithms, creating tailor-made solutions that cater to their specific needs.

Expert Team of Data Scientists

The backbone of our company lies in our exceptional team of data scientists, AI engineers, and ML specialists. Their diverse expertise and relentless passion drive the development of advanced AI models and algorithms.

Leveraging the latest technologies and best practices, our team ensures that our solutions remain at the cutting edge of the industry. The synergy between data science and engineering enables us to deliver robust, scalable, and high-performance AI and ML systems.

Comprehensive Services

Our AI and ML company offers a comprehensive range of services covering various industry verticals:

1. AI Consultation: We partner with organizations to understand their business objectives and identify opportunities where AI and ML can drive meaningful impact.

Our expert consultants create a roadmap for integrating AI into their existing workflows, aligning it with their long-term strategies.

2. Machine Learning Development: We design, develop, and implement tailor-made ML models that address specific business problems. From predictive analytics to natural language processing, we harness ML to unlock valuable insights and improve decision-making processes.

3. Deep Learning Solutions: Our deep learning expertise enables us to build and deploy complex neural networks for image and speech recognition, autonomous systems, and other tasks that require high levels of abstraction.

4. Data Engineering: We understand that data quality and accessibility are vital for successful AI and ML projects. Our data engineers create robust data pipelines, ensuring seamless integration and preprocessing of data from multiple sources.

5. AI-driven Applications: We develop AI-powered applications that enhance user experiences and drive engagement. Our team ensures that the applications are user-friendly, secure, and optimized for performance.
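The data-engineering item above describes integrating and preprocessing data from multiple sources. A minimal extract-transform-load sketch of that idea, using only the standard library, might look like this. The field names, the rule that records without an email are dropped, and the "last source wins" de-duplication are illustrative assumptions, not a description of any particular company's pipelines.

```python
import csv
import io
import json
from itertools import chain
from typing import Iterable, Iterator


def extract_csv(text: str) -> Iterator[dict]:
    """Extract: yield CSV rows as dicts keyed by the header row."""
    yield from csv.DictReader(io.StringIO(text))


def extract_json(text: str) -> Iterator[dict]:
    """Extract: yield the records of a JSON array."""
    yield from json.loads(text)


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Transform: unify field names ('Email' vs 'email'), trim and lowercase emails,
    and drop records that have no email at all."""
    for rec in records:
        email = (rec.get("email") or rec.get("Email") or "").strip().lower()
        if not email:
            continue
        name = (rec.get("name") or rec.get("Name") or "").strip()
        yield {"email": email, "name": name}


def load(records: Iterable[dict]) -> dict[str, dict]:
    """Load: de-duplicate on email; a later source overwrites an earlier one."""
    merged: dict[str, dict] = {}
    for rec in records:
        merged[rec["email"]] = rec
    return merged


def run_pipeline(*sources: Iterable[dict]) -> dict[str, dict]:
    """Chain all sources lazily, then transform and load them in one pass."""
    return load(transform(chain.from_iterable(sources)))
```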

Ethics and Transparency

As an AI and ML company, we recognize the importance of ethics and transparency in our operations. We adhere to strict ethical guidelines, ensuring that our solutions are built on unbiased and diverse datasets.

Moreover, we are committed to transparent communication with our clients, providing them with a clear understanding of the AI models and their implications.

Innovation and Research

Innovation is at the core of our company. We invest in ongoing research and development to explore new frontiers in AI and ML. Our collaboration with academic institutions and industry partners fuels our drive to stay ahead in this ever-changing field.

Conclusion

Our AI and ML company is poised to be a frontrunner in shaping the future of technology-driven solutions. By empowering businesses with intelligent AI tools and data-driven insights, we aspire to be a catalyst for positive change across industries.

As the world continues to embrace AI and ML, we remain committed to creating a future where innovation, ethics, and transformative technology go hand in hand.



Cybersecurity: Safeguarding the Digital Frontier

Introduction

In our increasingly interconnected world, the security of digital systems has never been more critical. As individuals, corporations, and governments digitize operations, data becomes a prime target for malicious actors. Cybersecurity, once a niche concern, is now a cornerstone of digital strategy. It encompasses the practices, technologies, and processes designed to protect systems, networks, and data from cyber threats. With the rise of cybercrime, which causes trillions in damages annually, understanding cybersecurity is essential — not just for IT professionals, but for anyone using the internet.

1. What is Cybersecurity?

Cybersecurity refers to the practice of defending computers, servers, mobile devices, electronic systems, networks, and data from malicious attacks. It is often divided into a few common categories:

Network security: Protecting internal networks from intruders by securing infrastructure and monitoring network traffic.

Application security: Ensuring software and devices are free of threats and bugs that can be exploited.

Information security: Protecting the integrity and privacy of data, both in storage and transit.

Operational security: Managing and protecting the way data is handled, including user permissions and policies.

Disaster recovery and business continuity: Planning for incident response and maintaining operations after a breach.

End-user education: Teaching users to follow best practices, like avoiding phishing emails and using strong passwords.

2. The Rising Threat Landscape

The volume and sophistication of cyber threats have grown rapidly. Some of the most prevalent threats include:

Phishing

Phishing attacks trick users into revealing sensitive information by masquerading as trustworthy sources. These attacks often use email, SMS, or fake websites.

Malware

Malware is any software designed to harm or exploit systems. Types include viruses, worms, ransomware, spyware, and trojans.

Ransomware

Ransomware locks a user’s data and demands payment to unlock it. High-profile cases like the WannaCry and Colonial Pipeline attacks have demonstrated the devastating effects of ransomware.

Zero-day Exploits

These are vulnerabilities in software that are unknown to the vendor. Hackers exploit them before developers have a chance to fix the issue.

Denial-of-Service (DoS) Attacks

DoS and distributed DoS (DDoS) attacks flood systems with traffic, overwhelming infrastructure and causing service outages.

3. Importance of Cybersecurity

The consequences of cyberattacks can be severe and widespread:

Financial Loss: Cybercrime is projected to cost the world over $10 trillion annually by 2025.

Data Breaches: Personal data theft can lead to identity fraud, blackmail, and corporate espionage.

Reputational Damage: A data breach can erode trust in a company, damaging customer relationships.

Legal Consequences: Non-compliance with data protection laws can lead to lawsuits and hefty fines.

National Security: Governments and military networks are prime targets for cyber warfare and espionage.

4. Key Cybersecurity Practices

1. Risk Assessment

Identifying and prioritizing vulnerabilities helps organizations allocate resources efficiently and address the most significant threats.

2. Firewalls and Antivirus Software

Firewalls monitor incoming and outgoing traffic, while antivirus software detects and removes malicious programs.

3. Encryption

Encryption protects data by converting it into ciphertext that cannot be read without the decryption key, preserving privacy even if the data is intercepted.

4. Multi-Factor Authentication (MFA)

MFA adds a layer of protection beyond passwords by requiring users to verify their identity through additional means, such as one-time passwords (OTPs) or biometrics.
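Many authenticator apps generate the one-time passwords mentioned above with the TOTP algorithm (RFC 6238), which builds on HOTP (RFC 4226). The sketch below implements both with the standard `hmac`, `hashlib`, and `struct` modules to show how a code is derived from a shared secret and the current time. The secret in the usage is the published RFC test key; real systems should rely on a vetted library, store secrets securely, and usually accept a small window of adjacent time steps to tolerate clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF  # drop the sign bit
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = Unix time // step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)
```

For example, with the RFC key `b"12345678901234567890"`, `totp(key, at=59, digits=8)` yields the RFC 6238 test value `"94287082"`.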

5. Regular Updates and Patching

Cybercriminals often exploit outdated systems. Regular software updates and security patches close these vulnerabilities.

6. Backups

Frequent data backups help in recovery after ransomware or system failure.

5. Cybersecurity for Individuals

Individuals are often the weakest link in cybersecurity. Here’s how to stay safe:

Use strong, unique passwords for every account.

Be cautious of unsolicited emails or messages, especially those requesting personal information.

Regularly update devices and apps.

Enable two-factor authentication wherever possible.

Avoid public Wi-Fi for sensitive transactions unless using a VPN.
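The first tip above, strong and unique passwords, can be illustrated in a few lines. This sketch uses Python's `secrets` module, which is intended for security-sensitive randomness (unlike `random`), and retries until every character class appears, similar to a recipe in the `secrets` documentation. The 16-character default and the function name are assumptions for this example.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password containing at least one lowercase letter,
    one uppercase letter, one digit, and one punctuation character."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate
```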

6. Cybersecurity for Businesses

Businesses face unique threats and must adopt tailored security strategies:

Security Policies

Organizations should develop formal policies outlining acceptable use, incident response, and data handling procedures.

Employee Training

Staff should be trained to recognize phishing attacks, report suspicious behavior, and follow cybersecurity protocols.

Security Operations Center (SOC)

Many businesses use SOCs to monitor, detect, and respond to cyber incidents 24/7.

Penetration Testing

Ethical hackers simulate attacks to uncover vulnerabilities and test a company’s defenses.

7. Emerging Technologies and Cybersecurity

As technology evolves, so too do the threats. Here are some emerging fields:

Artificial Intelligence and Machine Learning

AI enhances threat detection by analyzing massive datasets to identify patterns and anomalies in real time.
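Real AI-based detection relies on trained models, but the core idea of flagging values that deviate from normal behavior can be shown with a plain statistical baseline. The sketch below is a z-score check, not machine learning: it flags hours whose failed-login count lies more than three standard deviations from the mean. The data, the threshold, and the function name are hypothetical.

```python
import statistics


def find_anomalies(counts: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(counts)
    stdev = statistics.pstdev(counts)  # population standard deviation of the series
    if stdev == 0:
        return []  # a perfectly flat series has no outliers
    return [i for i, x in enumerate(counts) if abs(x - mean) / stdev > threshold]
```

For example, a day of hourly failed-login counts that sits at 5 except for a single hour at 100 would have only that hour flagged.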

Internet of Things (IoT)

With billions of connected devices, IoT expands the attack surface. Weak security in smart devices can create backdoors into networks.

Quantum Computing

While quantum computing promises advancements in processing power, it also threatens traditional encryption methods. Post-quantum cryptography is a new area of focus.

8. Cybersecurity Regulations and Frameworks

Governments and industries enforce standards to ensure compliance:

GDPR (General Data Protection Regulation): Governs data privacy in the EU.

HIPAA (Health Insurance Portability and Accountability Act): Protects health data in the U.S.

NIST Cybersecurity Framework: A widely adopted set of standards and best practices.

ISO/IEC 27001: International standard for information security management.

Compliance not only avoids fines but demonstrates a commitment to protecting customer data.

9. Challenges in Cybersecurity

Cybersecurity faces numerous challenges:

Evolving Threats: Attack techniques change rapidly, requiring constant adaptation.

Talent Shortage: There’s a global shortage of qualified cybersecurity professionals.

Budget Constraints: Small businesses often lack resources for robust security.

Third-Party Risks: Vendors and contractors may introduce vulnerabilities.

User Behavior: Human error remains one of the leading causes of security breaches.

10. The Future of Cybersecurity

Looking ahead, the cybersecurity landscape will be shaped by:

AI-powered threat detection

Greater emphasis on privacy and data ethics

Cybersecurity as a core part of business strategy

Development of zero-trust architectures

International cooperation on cybercrime

Conclusion

Cybersecurity is no longer optional — it’s a necessity in the digital age. With cyber threats becoming more frequent and sophisticated, a proactive and layered approach to security is crucial. Everyone, from casual internet users to CEOs, plays a role in protecting digital assets. Through education, technology, policy, and cooperation, we can build a safer digital world.


Can Quality Assurance Testing Online Courses Propel Your Career to the Next Level?

Quality Assurance (QA) testing plays a pivotal role in the software development lifecycle, ensuring that applications meet rigorous standards of reliability, functionality, and user experience. As businesses accelerate digital transformation, the demand for skilled QA testers continues to surge. But how can you break into this in-demand field or elevate your existing QA career? Online QA testing courses have emerged as a flexible, cost-effective way to acquire essential skills, certifications, and hands-on experience. In this article, we explore how online quality assurance testing courses can propel your career to new heights, offering you the roadmap, tools, and confidence to stand out in a competitive marketplace.

Understanding Quality Assurance Testing

Quality Assurance testing encompasses a broad range of practices designed to identify defects, verify functionality, and validate that a product meets business and technical requirements. QA testers work closely with developers, product managers, and stakeholders to:

Develop test plans and test cases

Perform manual and automated testing

Document and track bugs

Collaborate on quality improvement strategies

A strong foundation in QA testing not only ensures a product’s success but also enhances user satisfaction, reduces development costs, and protects brand reputation. With the rise of agile development, continuous integration, and DevOps, QA professionals now need a diverse skill set that spans manual testing methodologies, automation tools, scripting languages, and quality frameworks.

Why Online Courses? Flexibility, Accessibility, and Affordability

Traditional classroom training can be costly, time-consuming, and inflexible. In contrast, online QA testing courses offer:

Flexibility: Learn at your own pace, fitting modules around full-time work or personal commitments.

Global Accessibility: Gain access to top instructors, industry experts, and peer communities regardless of your location.

Cost-Effectiveness: Many platforms offer free introductory modules, affordable monthly subscriptions, and bundle deals that eliminate travel and accommodation expenses.

Latest Curriculum: Online providers frequently update content to reflect evolving industry standards, tools, and best practices.

Whether you’re a career changer, recent graduate, or seasoned QA professional seeking upskilling, online courses empower you to tailor your learning journey to your specific goals and schedule.

Key Skills and Certifications from Online QA Courses

Manual Testing Fundamentals: Understand the QA lifecycle, testing levels (unit, integration, system, acceptance), and defect tracking. Develop critical thinking to design effective test cases.

Automation Testing: Master popular tools such as Selenium, Cypress, and Appium. Learn scripting with Java, Python, or JavaScript to write maintainable test frameworks.

Performance and Security Testing: Explore load-testing tools like JMeter and security testing basics to identify vulnerabilities before they reach production.

API Testing: Use tools like Postman and REST Assured to validate web services, ensuring backend stability and data integrity.

DevOps and Continuous Testing: Integrate testing into CI/CD pipelines using Jenkins, GitLab CI, or Azure DevOps, accelerating feedback loops and release cycles.

Quality Management and Agile Practices: Gain knowledge in frameworks like ISO 9001, Six Sigma, and Agile testing methodologies (Scrum, Kanban).

Certification Preparation: Prepare for industry-recognized credentials such as ISTQB Foundation, Certified Agile Tester (CAT), and Certified Software Tester (CSTE), which strengthen your professional profile.
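Since Python is one of the scripting languages named above, here is a small sketch of what automated test cases look like with the standard `unittest` framework. The `apply_discount` function is a hypothetical unit under test; the three tests cover a typical value, the boundary values of the allowed range, and invalid input, mirroring how manual test cases are commonly designed.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount, rounded to cents."""
    if price < 0 or not 0 <= percent <= 100:
        raise ValueError("invalid price or discount")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 15), 170.0)

    def test_boundaries(self):
        # Boundary values: 0% and 100% are the valid edges of the allowed range.
        self.assertEqual(apply_discount(50.0, 0), 50.0)
        self.assertEqual(apply_discount(50.0, 100), 0.0)

    def test_rejects_invalid_input(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 101)
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)
```

Saved as `test_discount.py`, this runs with `python -m unittest`, and the same command can be added as a step in a Jenkins, GitLab CI, or Azure DevOps pipeline.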

How Online Courses Enhance Your Resume

A well-structured online QA course offers certificates of completion or badge credentials that you can showcase on LinkedIn, your portfolio, or during interviews. Recruiters and hiring managers often look for:

Hands-On Projects: Courses that include real-world scenarios or capstone projects allow you to demonstrate practical experience.

Tool Proficiency: Evidence of working knowledge in widely adopted tools increases your marketability.

Up-to-Date Techniques: Employers value candidates familiar with the latest testing frameworks, automation libraries, and DevOps practices.

By clearly highlighting completed courses, project outcomes, and earned certifications, you differentiate yourself from other applicants who may have theoretical knowledge but lack demonstrable skills.

Real Stories: Success from Online QA Training

"I transitioned from a customer support role to a QA automation engineer within six months of completing an online QA course. The hands-on Selenium projects and interview preparation modules were instrumental in landing my first testing job." – Priya S., QA Engineer

"Our team saw a 35% reduction in critical bugs after incorporating performance testing modules from an online course. It not only improved product quality but also increased client satisfaction." – Rahul M., QA Lead

These success stories underscore how targeted online learning can accelerate career progression and deliver tangible business value.

Choosing the Right QA Testing Online Course

With a plethora of platforms (Udemy, Coursera, Pluralsight, Test Automation University, and more), selecting the right course requires careful consideration:

Course Content and Depth: Review the syllabus to ensure comprehensive coverage of both manual and automation testing, along with specialized areas like performance or security testing.

Instructor Expertise: Look for courses led by industry practitioners with proven track records and positive learner reviews.

Hands-On Learning: Prioritize courses that include coding labs, real-world projects, and sandbox environments.

Community and Mentorship: Access to discussion forums, peer groups, and instructor feedback can significantly enhance your learning experience.

Certification Support: Some programs offer exam vouchers or dedicated prep modules for ISTQB or other certifications.

Trial and Reviews: Take advantage of free trials, sample lectures, and user testimonials to gauge course quality.

Maximizing Learning Outcomes

To get the most out of your online QA testing course:

Set Clear Goals: Define specific objectives, such as mastering Selenium within three months, passing the ISTQB Foundation exam by June, or building a CI/CD test pipeline prototype.

Create a Study Schedule: Allocate consistent weekly study hours and stick to them to build momentum.

Practice Daily: Regular coding exercises and bug-hunting challenges reinforce learning and build muscle memory.

Network Actively: Participate in forums, engage in group projects, and seek feedback from peers and mentors.

Build a Portfolio: Document your projects on GitHub or a personal blog to showcase your skills to potential employers.

Overcoming Common Challenges

Transitioning into QA testing or upskilling can present hurdles:

Technical Jargon: Abstract concepts and acronyms can be daunting. Use glossaries and community resources to clarify unfamiliar terms.

Self-Motivation: Online learning demands discipline. Partner with a study buddy or join accountability groups to stay on track.

Environment Setup: Configuring local test environments can be tricky. Choose courses with cloud-based labs or detailed setup guides.

Balancing Commitments: If you’re working full-time, prioritize learning tasks and leverage microlearning during commutes or breaks.

By anticipating these obstacles and proactively seeking solutions, you ensure a smoother, more rewarding learning journey.

The ROI of QA Testing Online Courses

Investing time and money in QA training yields high returns:

Increased Earning Potential: QA automation engineers often command higher salaries than manual testers, with mid-career professionals earning upwards of $80,000 USD annually.

Career Mobility: QA skills are transferable across industries such as finance, healthcare, e-commerce, and gaming, opening doors to diverse job opportunities.

Job Security: Quality assurance remains a non-negotiable aspect of software development, ensuring steady demand even during market fluctuations.

Continuous Growth: Advanced courses in AI-driven testing, security testing, and performance engineering allow you to stay ahead of industry trends.

Conclusion:

Quality assurance testing training courses represent a strategic investment in your professional growth. They offer the flexibility, expert instruction, and hands-on experience needed to master modern testing practices and earn industry-recognized certifications. Whether you’re starting fresh or aiming to climb the career ladder, the right online QA testing program can equip you with the skills to:

Deliver high-quality software products

Automate repetitive testing tasks

Integrate seamlessly into DevOps pipelines

Command competitive compensation packages

Ready to propel your QA career to the next level? Explore top-rated online courses, enroll today, and embark on a transformative learning journey that can redefine your future in tech.

Author Bio: Vinay is a seasoned IT professional specializing in software testing and quality management. With over 8 years of experience in delivering robust QA solutions, he helps aspiring QA engineers navigate their career paths through targeted training and mentorship.


How do AI-Powered Applicant Tracking Systems Help Build Stronger Talent Pools?

AI-powered Applicant Tracking Systems (ATS) transform recruitment by centralizing candidate data, automating engagement, and leveraging AI to keep a pipeline of qualified prospects ready for new roles. By treating candidates as long-term assets rather than one-off applicants, an ATS enables recruiters to fill positions faster, reduce sourcing costs, and improve hire quality. In this article, we’ll explore:

What a talent pool is and why it matters

Five ways an ATS builds and maintains talent pools

Additional ATS features that enhance pipeline strength

Real-world statistics and case examples

Best practices for maximizing ATS-driven talent pools

What Is a Talent Pool, and Why Does It Matter?

A talent pool is a categorized group of potential candidates—active and passive—who have demonstrated interest or match your organization’s hiring criteria. Rather than starting sourcing from scratch for each vacancy, recruiters tap into this pre-qualified database to accelerate hiring and reduce costs. Talent pools often include:

Active applicants who applied for previous roles

Passive candidates identified via sourcing tools

Alumni and past employees

Referrals and networking contacts

Strong talent pools lead to faster time-to-fill, lower acquisition costs, and better cultural fits by enabling continuous engagement and data-driven selection.

1. Centralized Candidate Database

ATS automatically ingests resumes and application data from career sites, job boards, email, and referral sources into a single, searchable database. This centralization:

Eliminates data silos—no more spreadsheets scattered across teams.

Enables keyword and skills searches across all historical applicants.

Surfaces “silver medalists”—candidates who narrowly missed previous roles yet remain strong fits.

With all candidate records in one place, recruiters can quickly mine past applicants before launching new sourcing campaigns, reducing redundant efforts by up to 30%.
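Here is a minimal in-memory sketch of the search side of a centralized database. It assumes each record carries a skills set and a list of past outcomes. The `Candidate` fields and the `"silver_medalist"` outcome tag are hypothetical. A real ATS would back this with a database and a full-text or skills index, not a Python list.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    # Hypothetical record shape; a real ATS stores many more fields.
    name: str
    skills: set[str]
    source: str  # intake channel, e.g. "career_site", "job_board", "referral"
    past_outcomes: list[str] = field(default_factory=list)


def search(pool: list[Candidate], required_skills: set[str]) -> list[Candidate]:
    """Return candidates whose skills include every required skill (case-insensitive)."""
    required = {s.lower() for s in required_skills}
    return [c for c in pool if required <= {s.lower() for s in c.skills}]


def silver_medalists(pool: list[Candidate]) -> list[Candidate]:
    """Return candidates tagged as runners-up for an earlier role."""
    return [c for c in pool if "silver_medalist" in c.past_outcomes]


# One list stands in for the records merged from every intake channel.
pool = [
    Candidate("Ana", {"Python", "SQL"}, "career_site", ["silver_medalist"]),
    Candidate("Ben", {"Java"}, "job_board"),
    Candidate("Chen", {"python", "sql", "Spark"}, "referral"),
]

print([c.name for c in search(pool, {"python", "sql"})])  # ['Ana', 'Chen']
print([c.name for c in silver_medalists(pool)])  # ['Ana']
```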

2. Automated Candidate Engagement

Maintaining candidate interest over time is critical to keeping talent pools warm. AI-powered ATS includes email automation, SMS alerts, and chatbot interfaces to:

Send personalized job alerts when new positions match a candidate’s profile.

Trigger drip campaigns—e.g., newsletter invitations, company updates—to nurture passive prospects.

Provide real-time status updates on applications to enhance the candidate experience.

Automated engagement workflows ensure timely communication at scale, boosting re-engagement rates by as much as 45% compared to one-off outreach.
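The job-alert trigger can be sketched as a matching pass that runs whenever a role is posted. This is a hypothetical illustration, not any vendor's API. It assumes each profile carries an opt-in flag, preferred locations, and a skills set. A match means at least `min_overlap` shared skills in a preferred location.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    # Hypothetical candidate profile used for alert matching.
    email: str
    first_name: str
    skills: set[str]
    preferred_locations: set[str]
    opted_in: bool = True


@dataclass
class Job:
    title: str
    location: str
    skills: set[str]


def job_alerts(job: Job, profiles: list[Profile], min_overlap: int = 2) -> list[tuple[str, str]]:
    """Build (email, message) pairs for opted-in candidates who match a new job."""
    alerts = []
    for p in profiles:
        # Respect consent and location preferences before scoring skills.
        if not p.opted_in or job.location not in p.preferred_locations:
            continue
        overlap = p.skills & job.skills
        if len(overlap) >= min_overlap:
            message = (
                f"Hi {p.first_name}, a new {job.title} role in {job.location} "
                f"matches your skills: {', '.join(sorted(overlap))}."
            )
            alerts.append((p.email, message))
    return alerts


job = Job("Data Engineer", "Berlin", {"python", "sql", "airflow"})
profiles = [
    Profile("ana@example.com", "Ana", {"python", "sql"}, {"Berlin"}),
    Profile("ben@example.com", "Ben", {"python", "sql"}, {"Paris"}),
    Profile("chen@example.com", "Chen", {"python", "sql", "airflow"}, {"Berlin"}, opted_in=False),
]
for email, message in job_alerts(job, profiles):
    print(email, message)
```

Only Ana receives an alert here. Ben's location preference does not match, and Chen has opted out.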

3. CRM-Style Nurturing and Segmentation

Top ATS platforms function like candidate relationship management (CRM) systems, offering:

Segmentation: Tag and group candidates by skill sets, locations, or roles.

Drip campaigns: Tailor outreach—webinars for engineers, events for sales pros.

Event tracking: Monitor candidate interactions (email opens, link clicks) to gauge interest.

This CRM-style approach treats talent pools as ongoing communities, not one-time transactions. By segmenting and delivering targeted content, recruiters deepen relationships, driving a 25% increase in engagement over generic mass emails.
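Segmentation and event tracking come down to two small operations. First, candidate tags are inverted into segments. Second, engagement is measured per segment from tracked events. The sketch below assumes tags and email events are already available as plain Python data. The candidate IDs, tags, and content labels are made up for illustration.

```python
from collections import defaultdict

# Hypothetical data: candidate tags and tracked email events.
TAGS = {
    "ana": {"engineering", "berlin"},
    "ben": {"sales"},
    "chen": {"engineering"},
}
EVENTS = [("ana", "open"), ("ana", "click"), ("ben", "open"), ("chen", "open")]

# Hypothetical drip content per segment (webinars for engineers, events for sales).
CONTENT = {"engineering": "webinar invitation", "sales": "event invitation"}


def build_segments(tags: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert candidate -> tags into tag -> candidates."""
    segments: dict[str, set[str]] = defaultdict(set)
    for candidate, candidate_tags in tags.items():
        for tag in candidate_tags:
            segments[tag].add(candidate)
    return dict(segments)


def click_rate(members: set[str], events: list[tuple[str, str]]) -> float:
    """Share of segment members with at least one tracked link click."""
    if not members:
        return 0.0
    clicked = {c for c, kind in events if kind == "click" and c in members}
    return len(clicked) / len(members)


segments = build_segments(TAGS)
for name, content in sorted(CONTENT.items()):
    members = segments.get(name, set())
    print(name, content, sorted(members), click_rate(members, EVENTS))
```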

4. AI-Driven Matching & Predictive Analytics

Advanced ATS leverages machine learning to analyze historical hiring data and score pool candidates based on:

How closely skills and experience match the new job requisition

Likelihood to succeed, learned from past performance metrics

Cultural alignment, inferred from language patterns and assessments

Predictive analytics forecast future hiring needs—e.g., seasonal spikes in customer-support hiring—so recruiters can proactively expand relevant segments. AI-powered matching surfaces top prospects instantly, cutting screening time by up to 70%.
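A learned matching model is beyond a short example. The ranking step it feeds, however, can be sketched with a hand-weighted score as a stand-in. Here skill coverage and years of experience are combined with assumed weights of 0.7 and 0.3. A production system would learn such weights, and other signals, from historical hiring outcomes.

```python
from dataclasses import dataclass


@dataclass
class PoolCandidate:
    name: str
    skills: set[str]
    years_experience: float


def score(c: PoolCandidate, required: set[str], target_years: float,
          w_skills: float = 0.7, w_exp: float = 0.3) -> float:
    """Hand-weighted match score in [0, 1]; the weights are illustrative, not learned."""
    coverage = len(c.skills & required) / len(required) if required else 0.0
    # Experience counts fully once it reaches the target; no bonus beyond it.
    exp_fit = min(c.years_experience / target_years, 1.0) if target_years > 0 else 1.0
    return w_skills * coverage + w_exp * exp_fit


def rank(pool: list[PoolCandidate], required: set[str], target_years: float,
         top_k: int = 3) -> list[PoolCandidate]:
    """Return the top_k candidates by score, best first."""
    return sorted(pool, key=lambda c: score(c, required, target_years), reverse=True)[:top_k]


pool = [
    PoolCandidate("Ana", {"python", "sql"}, 2),
    PoolCandidate("Ben", {"python"}, 6),
    PoolCandidate("Chen", {"python", "sql", "spark"}, 5),
]
required = {"python", "sql", "spark"}
for c in rank(pool, required, target_years=5):
    print(c.name, round(score(c, required, 5), 3))
```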

5. Data-Driven Pipeline Health Monitoring

Integrated dashboards in ATS provide real-time visibility into talent-pool metrics, such as:

Pipeline volume: Number of candidates per role or segment

Conversion rates: From pool to interview, interview to offer

Source effectiveness: Which channels contributed to the top-performing candidates

By tracking these KPIs, recruiting teams identify bottlenecks (e.g., low conversion in the “engineers” pool) and adjust tactics—launching targeted campaigns or refining sourcing filters—to maintain a healthy pipeline.
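The conversion-rate KPI can be computed from each candidate's furthest funnel stage. This sketch assumes a three-stage funnel (`pool`, `interview`, `offer`) and a 30% pool-to-interview target, both hypothetical. With the sample data it flags the engineers segment, matching the bottleneck example in the text.

```python
from collections import defaultdict

STAGES = ["pool", "interview", "offer"]  # assumed funnel order


def conversion_rates(records: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Stage-to-stage conversion per segment.

    Each record is (segment, furthest_stage_reached); reaching a stage implies
    the candidate passed through every earlier stage.
    """
    reached: dict[str, list[int]] = defaultdict(lambda: [0] * len(STAGES))
    for segment, stage in records:
        for i in range(STAGES.index(stage) + 1):
            reached[segment][i] += 1
    return {
        segment: {
            f"{STAGES[i]}->{STAGES[i + 1]}": counts[i + 1] / counts[i] if counts[i] else 0.0
            for i in range(len(STAGES) - 1)
        }
        for segment, counts in reached.items()
    }


records = (
    [("engineers", "pool")] * 8 + [("engineers", "interview")] + [("engineers", "offer")]
    + [("sales", "pool")] * 2 + [("sales", "interview")] + [("sales", "offer")]
)
rates = conversion_rates(records)
# Flag segments whose pool-to-interview conversion falls below the assumed 30% target.
bottlenecks = [s for s, r in rates.items() if r["pool->interview"] < 0.3]
print(rates)
print(bottlenecks)
```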

Additional ATS Features to Strengthen Talent Pools

Career-Site Integration

Embedding ATS job feeds and application forms on your career site ensures that every applicant enters the talent-pool database seamlessly, reducing drop-off from external redirects.

Job Distribution Automation

Automated multi-board postings—from LinkedIn to niche forums—keep talent pools fresh by attracting both active and passive candidates without manual posting work.

Assessment & Video-Interview Integrations

Linking assessment platforms and one-way video tools enriches candidate profiles with performance data, enabling recruiters to nurture only high-potential prospects in critical skill areas.

Background Check Workflows

Automated triggering of background and reference checks when candidates meet pool criteria ensures compliance and speeds time-to-hire for pre-screened talent.

Real-World Impact & Statistics

50% faster sourcing: Companies using ATS-driven talent pools report halving their time-to-fill for repeat roles.

20–30% lower acquisition costs: Reusing pre-qualified pool candidates reduces reliance on expensive external agencies.

15% higher quality-of-hire: AI-matched candidates from talent pools consistently outperform cold-sourced applicants.

45% increased candidate engagement: Automated nurturing drives stronger response rates compared to ad hoc outreach.

Best Practices for ATS-Driven Talent Pools

Define Clear Segments: Establish pool categories by role, skill, or geography to focus nurturing efforts and content.

Automate Smart Workflows: Use trigger-based campaigns (e.g., event follow-ups) to keep engagement high without manual effort.

Maintain Data Hygiene: Regularly archive inactive profiles and solicit updates from stale contacts to keep pool data current and GDPR-compliant.

Combine AI with Human Touch: Leverage AI for screening, but personalize final outreach and interviews to assess soft skills and cultural fit.

Monitor & Iterate: Review pipeline health dashboards monthly, adjusting segments, messaging, and sourcing channels based on performance.

Conclusion

Applicant Tracking Systems do far more than process one-off applications—they empower recruiters to build, nurture, and leverage strategic talent pools. By centralizing candidate data, automating engagement, applying CRM principles, and harnessing AI for predictive matching, organizations can:

Fill roles faster

Reduce sourcing costs

Strengthen the employer brand

Improve the quality of hires

Implementing best practices around segmentation, automation, and data governance ensures your AI-powered, ATS-driven talent pools become a sustainable competitive advantage in today’s talent-driven market.


Build and design multiple types of applications that are cross-language, platform, and cost-effective by understanding core Azure principles and foundational concepts.

Key Features

Get familiar with the different design patterns available in Microsoft Azure

Develop Azure cloud architecture and a pipeline management system

Get to know the security best practices for your Azure deployment

Book Description

Thanks to its support for high availability, scalability, security, performance, and disaster recovery, Azure has been widely adopted to create and deploy different types of applications with ease. Updated for the latest developments, this third edition of Azure for Architects helps you get to grips with the core concepts of designing serverless architecture, including containers, Kubernetes deployments, and big data solutions.

You'll learn how to architect solutions such as serverless functions, you'll discover deployment patterns for containers and Kubernetes, and you'll explore large-scale big data processing using Spark and Databricks. As you advance, you'll implement DevOps using Azure DevOps, work with intelligent solutions using Azure Cognitive Services, and integrate security, high availability, and scalability into each solution. Finally, you'll delve into Azure security concepts such as OAuth, OpenID Connect, and managed identities.

By the end of this book, you'll have gained the confidence to design intelligent Azure solutions based on containers and serverless functions.

What you will learn

Understand the components of the Azure cloud platform

Use cloud design patterns

Use enterprise security guidelines for your Azure deployment

Design and implement serverless and integration solutions

Build efficient data solutions on Azure

Understand container services on Azure

Who this book is for

If you are a cloud architect, DevOps engineer, or a developer looking to learn about the key architectural aspects of the Azure cloud platform, this book is for you. A basic understanding of the Azure cloud platform will help you grasp the concepts covered in this book more effectively.

Table of Contents

Getting started with Azure

Azure solution availability, scalability, and monitoring

Design pattern – Networks, storage, messaging, and events

Automating architecture on Azure

Designing policies, locks, and tags for Azure deployments

Cost Management for Azure solutions

Azure OLTP solutions

Architecting secure applications on Azure

Azure Big Data solutions

Serverless in Azure – Working with Azure Functions

Azure solutions using Azure Logic Apps, Event Grid, and Functions

Azure Big Data eventing solutions

Integrating Azure DevOps

Architecting Azure Kubernetes solutions

Cross-subscription deployments using ARM templates

ARM template modular design and implementation

Designing IoT Solutions

Azure Synapse Analytics for architects

Architecting intelligent solutions

ASIN: B08DCKS8QB

Publisher: Packt Publishing; 3rd edition (17 July 2020)

Language: English

File size: 72.0 MB

Text-to-Speech: Enabled

Screen Reader: Supported

Enhanced typesetting: Enabled

X-Ray: Not Enabled

Word Wise: Not Enabled

Print length: 840 pages


Anton R Gordon’s Blueprint for Automating End-to-End ML Workflows with SageMaker Pipelines

In the evolving landscape of artificial intelligence and cloud computing, automation has emerged as the backbone of scalable machine learning (ML) operations. Anton R Gordon, a renowned AI Architect and Cloud Specialist, has become a leading voice in implementing robust, scalable, and automated ML systems. At the center of his automation strategy lies Amazon SageMaker Pipelines — a purpose-built service for orchestrating ML workflows in the AWS ecosystem.

Anton R Gordon’s blueprint for automating end-to-end ML workflows with SageMaker Pipelines demonstrates a clear, modular, and production-ready approach to developing and deploying machine learning models. His framework enables enterprises to move swiftly from experimentation to deployment while ensuring traceability, scalability, and reproducibility.

Why SageMaker Pipelines?

As Anton R Gordon often emphasizes, reproducibility and governance are key in enterprise-grade AI systems. SageMaker Pipelines supports this by offering native integration with AWS services, version control, step caching, and secure role-based access. These capabilities are essential for teams that are moving toward MLOps — the discipline of automating the deployment, monitoring, and governance of ML models.

Step-by-Step Workflow in Anton R Gordon’s Pipeline Blueprint

1. Data Ingestion and Preprocessing

Anton’s pipelines begin with a processing step that handles raw data ingestion from sources like Amazon S3, Redshift, or RDS. He uses SageMaker Processing Jobs, running pandas and scikit-learn code in built-in containers or custom scripts, to clean, normalize, and transform the data.
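A processing step usually runs a plain script inside the container. The sketch below shows the kind of cleaning and normalization logic such a script might contain. It uses only the standard library so it runs anywhere. The column names, the CSV layout, and the choice of min-max scaling are assumptions, not details of Gordon's pipelines.

```python
import csv
import math


def clean_rows(rows: list[dict], numeric_cols: list[str]) -> list[dict]:
    """Drop rows with a missing, non-numeric, or non-finite value in any numeric column."""
    cleaned = []
    for row in rows:
        try:
            values = {col: float(row[col]) for col in numeric_cols}
        except (KeyError, TypeError, ValueError):
            continue  # incomplete or malformed row
        if not all(math.isfinite(v) for v in values.values()):
            continue  # NaN or infinity would break scaling
        cleaned.append({**row, **values})
    return cleaned


def min_max_normalize(rows: list[dict], numeric_cols: list[str]) -> list[dict]:
    """Scale each numeric column to [0, 1] in place; constant columns become 0.0."""
    for col in numeric_cols:
        values = [r[col] for r in rows]
        if not values:
            continue
        lo, hi = min(values), max(values)
        for r in rows:
            r[col] = (r[col] - lo) / (hi - lo) if hi > lo else 0.0
    return rows


def run(input_path: str, output_path: str, numeric_cols: list[str]) -> None:
    """Read a raw CSV, clean and normalize it, and write the result."""
    with open(input_path, newline="") as f:
        reader = csv.DictReader(f)
        fieldnames = reader.fieldnames or []
        rows = list(reader)
    rows = min_max_normalize(clean_rows(rows, numeric_cols), numeric_cols)
    with open(output_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
```

Inside a Processing job, the script might call `run("/opt/ml/processing/input/raw.csv", "/opt/ml/processing/output/clean.csv", ["age", "income"])`. These file names are hypothetical. They must match the input and output destinations configured for the job, which conventionally sit under `/opt/ml/processing/`. For a model that will serve predictions, the minimum and maximum would normally be computed on training data only and then reused at inference time.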

2. Feature Engineering and Storage

After preprocessing, Gordon incorporates feature engineering directly into the pipeline, often using Amazon SageMaker Feature Store to store and version features. This approach promotes reusability and keeps the features used for training consistent with those used for inference.
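The consistency argument depends on training and inference code sharing one feature definition. The toy store below is not the SageMaker Feature Store API. It only mimics the store's shape, because Feature Store feature groups are also keyed by a record identifier and an event time. The feature names, version tag, and class are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional

FEATURE_VERSION = "v1"  # hypothetical version tag for the feature definitions


def compute_features(raw: dict) -> dict:
    """Single feature definition, imported by both the training and inference code."""
    spend = float(raw["total_spend"])
    orders = int(raw["order_count"])
    return {
        "avg_order_value": spend / orders if orders else 0.0,
        "is_repeat_customer": int(orders > 1),
    }


class InMemoryFeatureStore:
    """Toy stand-in for a feature store: append-only, timestamped records per entity."""

    def __init__(self) -> None:
        self._records: dict[str, list[dict]] = {}

    def put(self, record_id: str, features: dict, event_time: Optional[datetime] = None) -> None:
        record = {
            **features,
            "feature_version": FEATURE_VERSION,
            "event_time": event_time or datetime.now(timezone.utc),
        }
        self._records.setdefault(record_id, []).append(record)

    def latest(self, record_id: str) -> dict:
        """Most recent record for an entity, as an online lookup would return."""
        return max(self._records[record_id], key=lambda r: r["event_time"])

    def history(self, record_id: str) -> list[dict]:
        """All records for an entity, oldest first, as a training-set build would read."""
        return sorted(self._records[record_id], key=lambda r: r["event_time"])


store = InMemoryFeatureStore()
store.put("cust-1", compute_features({"total_spend": "100", "order_count": "4"}),
          datetime(2024, 1, 1, tzinfo=timezone.utc))
store.put("cust-1", compute_features({"total_spend": "300", "order_count": "5"}),
          datetime(2024, 2, 1, tzinfo=timezone.utc))
print(store.latest("cust-1")["avg_order_value"])  # 60.0
```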

3. Model Training and Hyperparameter Tuning

Anton leverages SageMaker Training Jobs and the TuningStep to identify the best hyperparameters using automatic model tuning. This modular training logic allows for experimentation without breaking the end-to-end automation.
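Random search is one of the strategies SageMaker automatic model tuning supports (its default is Bayesian optimization), and it is a simple way to illustrate what a tuning job does. The sketch replaces real training jobs with a made-up objective function. Its parameter ranges loosely mirror SageMaker's integer and continuous ranges, including logarithmic scaling for the learning rate.

```python
import math
import random


def random_search(objective, space, n_trials=30, seed=0):
    """Maximize `objective` by sampling each hyperparameter independently.

    `space` maps a name to (kind, low, high), where kind is "int",
    "continuous", or "log" (uniform in log space, useful for learning rates).
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params, best_score = None, -math.inf
    for _ in range(n_trials):
        params = {}
        for name, (kind, low, high) in space.items():
            if kind == "int":
                params[name] = rng.randint(low, high)
            elif kind == "log":
                params[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
            else:
                params[name] = rng.uniform(low, high)
        score = objective(params)  # stands in for one training job's metric
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params):
    """Hypothetical validation metric that peaks at learning_rate=0.01, max_depth=6."""
    return -(math.log10(params["learning_rate"]) + 2) ** 2 - (params["max_depth"] - 6) ** 2 / 10


space = {"learning_rate": ("log", 1e-4, 1e-1), "max_depth": ("int", 2, 10)}
best, best_score = random_search(toy_objective, space, n_trials=50)
print(best, round(best_score, 4))
```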

4. Model Evaluation and Conditional Logic

One of the key aspects of Gordon’s approach is embedding conditional logic within the pipeline using the ConditionStep. This step evaluates model metrics (like accuracy or F1 score) and decides whether the model should move forward to deployment.
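In the SageMaker Python SDK, this gate is typically assembled from three parts:

- a `PropertyFile` that exposes the evaluation step's JSON report,
- `JsonGet` to pull a metric from that report,
- a condition such as `ConditionGreaterThanOrEqualTo`, passed to the `ConditionStep`.

The pure-Python sketch below only mirrors that decision. The report layout and the 0.8 F1 threshold are assumptions.

```python
import json


def get_metric(report_json: str, path: str) -> float:
    """Follow a dotted path such as "metrics.f1.value" into a JSON report."""
    node = json.loads(report_json)
    for key in path.split("."):
        node = node[key]
    return float(node)


def gate(report_json: str, metric_path: str = "metrics.f1.value", threshold: float = 0.8) -> str:
    """Pick the branch: "register" if the metric meets the threshold, otherwise "fail"."""
    return "register" if get_metric(report_json, metric_path) >= threshold else "fail"


# Hypothetical evaluation report, as an evaluation step might write it.
report = json.dumps({"metrics": {"f1": {"value": 0.84}, "accuracy": {"value": 0.91}}})
print(gate(report))  # register
print(gate(report, "metrics.accuracy.value", threshold=0.95))  # fail
```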

5. Model Registration and Deployment

Upon successful validation, the model is automatically registered in the SageMaker Model Registry. From there, it can be deployed to a real-time endpoint using SageMaker hosting services or used in batch transform jobs, depending on the use case.
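SageMaker Model Registry groups versions into model package groups. Each version carries an approval status: `PendingManualApproval`, `Approved`, or `Rejected`. The toy registry below mimics that bookkeeping in plain Python to show how a deployment step can select the latest approved version. It is not the SageMaker API, and the group name and S3 URIs are placeholders.

```python
from dataclasses import dataclass, field
from typing import Optional

# Approval states named after the values SageMaker Model Registry uses.
STATUSES = {"PendingManualApproval", "Approved", "Rejected"}


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    metrics: dict = field(default_factory=dict)
    status: str = "PendingManualApproval"


class ModelRegistry:
    """Toy registry: numbered versions per model group, each with an approval status."""

    def __init__(self) -> None:
        self._groups: dict[str, list[ModelVersion]] = {}

    def register(self, group: str, artifact_uri: str, metrics: dict,
                 status: str = "PendingManualApproval") -> ModelVersion:
        self._check(status)
        versions = self._groups.setdefault(group, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, metrics, status)
        versions.append(mv)
        return mv

    def set_status(self, group: str, version: int, status: str) -> None:
        self._check(status)
        self._groups[group][version - 1].status = status

    def latest_approved(self, group: str) -> Optional[ModelVersion]:
        """Highest approved version: the one a deployment step would pick up."""
        approved = [v for v in self._groups.get(group, []) if v.status == "Approved"]
        return max(approved, key=lambda v: v.version, default=None)

    @staticmethod
    def _check(status: str) -> None:
        if status not in STATUSES:
            raise ValueError(f"unknown approval status: {status}")


registry = ModelRegistry()
registry.register("churn-model", "s3://example-bucket/churn/v1/model.tar.gz", {"f1": 0.84})
registry.register("churn-model", "s3://example-bucket/churn/v2/model.tar.gz", {"f1": 0.86})
registry.set_status("churn-model", 1, "Approved")
print(registry.latest_approved("churn-model").version)  # 1
```

Version 2 stays at `PendingManualApproval` until someone approves it, so deployment keeps using version 1. This manual approval gate is a common reason to separate registration from deployment.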

6. Monitoring and Drift Detection