#Data Visualisation Solutions


Text

Choosing the Right Data Visualisation Solution for Your Business Needs

Introduction

Businesses rely heavily on data visualisation solutions to extract meaningful insights and facilitate informed decision-making in the modern data-driven world. However, selecting the right solution can be overwhelming with the vast array of data visualisation tools available. A well-chosen visualisation tool can enhance business efficiency, improve collaboration, and provide deeper insights.

Understanding Data Visualisation Solutions

Data visualisation solutions transform raw data into graphical representations such as charts, graphs, dashboards, and maps. These solutions help businesses interpret complex data patterns, trends, and correlations more effectively. Choosing the right tool requires a thorough understanding of business objectives, data complexity, and user requirements.

Key Considerations When Choosing a Data Visualisation Solution

1. Business Objectives and Use Cases

Before selecting a tool, businesses must define their primary use cases. Are they looking to improve internal reporting, track key performance indicators (KPIs), or create real-time interactive dashboards? Understanding these objectives will narrow down suitable options.

2. Ease of Use and User Experience

A data visualisation solution should be user-friendly, catering to both technical and non-technical users. A tool with an intuitive interface and drag-and-drop functionalities can empower business users to generate insights without extensive technical expertise.

3. Integration with Existing Systems

The chosen tool must integrate seamlessly with existing databases, business intelligence (BI) platforms, and cloud services. Compatibility with data sources such as SQL databases, spreadsheets, and cloud applications ensures smooth data flow.

4. Customisation and Scalability

Businesses should consider whether the tool can be customised to meet specific needs and scale as the company grows. A scalable solution will support increasing data volumes and more complex analytics over time.

5. Advanced Analytical Capabilities

Some businesses require more than basic visualisation; they need predictive analytics, artificial intelligence (AI)-driven insights, and machine learning capabilities. Selecting a tool that provides these features can give businesses a competitive edge.

6. Collaboration and Sharing Features

For organisations with multiple teams, a tool that allows collaborative data exploration, report sharing, and real-time updates is essential. Cloud-based solutions enhance teamwork and remote access.

7. Security and Compliance

Data security is a top priority. Businesses must evaluate whether the solution meets compliance regulations (such as GDPR or HIPAA) and offers encryption, role-based access control, and secure authentication methods.

8. Cost and ROI Consideration

While budget constraints are important, businesses should weigh the cost against potential benefits. A higher-priced tool with robust features may offer greater long-term value compared to a cheaper, limited-scope solution.

Popular Data Visualisation Tools in the Market

Several tools cater to different business needs. Some of the most popular include:

Tableau – Best for advanced analytics and interactive dashboards.

Power BI – Ideal for Microsoft ecosystem users and enterprise-level reporting.

Google Data Studio (now Looker Studio) – Great for free, cloud-based reporting and collaboration.

D3.js – Suitable for developers who need custom visualisation capabilities.

Looker – Best for integrating BI and data analytics into workflows.

Conclusion

Choosing the right data visualisation solution requires careful evaluation of business needs, technical requirements, and budget. By considering factors such as ease of use, scalability, integration, and security, businesses can select a tool that maximises the value of their data. Investing in the right data visualisation solution empowers organisations to turn raw data into actionable insights, driving business growth and success.

0 notes

Text

IT solutions for insurance companies provide tailored technologies to streamline operations, enhance customer experience, and ensure regulatory compliance. These solutions often include policy management systems, claims processing software, CRM platforms, and data analytics tools to improve decision-making and risk management. By integrating digital tools, insurers can automate processes, reduce costs, and deliver personalized services efficiently.

0 notes

Text

Enhancing Project Management with Power BI Reporting and Dashboards

Efficient project management is the cornerstone of successful businesses, and having the right tools can make all the difference. Power BI, a leading business analytics platform, is revolutionizing project management by offering robust reporting and dashboard capabilities that provide real-time insights and data-driven decision-making.

Transforming Project Management with Power BI

Power BI empowers project managers by consolidating data from multiple sources into interactive dashboards. These dashboards enable teams to monitor project progress, track key performance indicators (KPIs), and identify potential bottlenecks—all in real time. With customizable reports, managers can tailor insights to align with specific goals, ensuring that every stakeholder has the information they need at their fingertips.

For example, Power BI’s real-time monitoring allows project managers to oversee task completion rates, budget utilization, and resource allocation. Alerts and automated notifications can be set up to identify risks early, ensuring timely interventions. By offering a centralized view of project data, Power BI eliminates the need for time-consuming manual updates, freeing teams to focus on what matters most—delivering successful projects.

Seamless Integration and Scalability

One of Power BI’s standout features is its ability to integrate seamlessly with tools like Microsoft Excel, Azure, and even ERP systems like Acumatica. This means project teams can work within a familiar ecosystem while leveraging the advanced analytics and visualization capabilities of Power BI. Whether your business is a small startup or a large enterprise, Power BI’s scalable solutions grow with you, ensuring consistent performance and reliability.

Empowering Teams with Exceptional Support

At Power BI Solutions, we specialize in delivering tailored dashboards and reports designed to meet the unique needs of your business. Our certified professionals provide end-to-end implementation and ongoing training, ensuring your team is equipped to unlock the full potential of Power BI. From automating reporting processes to creating actionable insights, we are dedicated to empowering businesses with data-driven project management solutions.

Conclusion

With its interactive dashboards, real-time reporting, and seamless integration, Power BI is transforming project management. By simplifying data analysis and enhancing decision-making, Power BI equips businesses to achieve their project goals efficiently and effectively.

0 notes

Text

How to Optimize Data Management for a Seamless Digital Transformation and ERP Implementation?

Digital transformation and ERP (Enterprise Resource Planning) strategies have become pivotal for businesses aiming to stay competitive in today’s fast-evolving landscape. At the core of these strategies lies data management — a critical aspect that ensures accurate, reliable, and actionable insights for decision-making.

Watch this comprehensive video on starting data management in your ERP strategy for an in-depth understanding.


If you’re wondering how to start data management in your digital transformation and ERP strategy, this guide provides actionable steps to help you begin your journey effectively.

Why Data Management is Crucial in Digital Transformation

Before diving into the “how,” it’s essential to understand the “why.” Here’s why data management is indispensable:

Data as the Backbone of ERP Systems: ERP systems thrive on clean, structured, and well-organized data. Without proper data management, the efficiency of ERP systems diminishes.

Informed Decision-Making: Reliable data leads to better analytics, which fuels strategic decisions.

Cost Optimization: Effective data management reduces redundancies, eliminates errors, and cuts costs in operations.

5 Steps to Start Data Management in Your Digital Transformation Journey

1. Assess Your Current Data Landscape

Before implementing any strategy, audit your current data repositories. Identify duplicate, incomplete, or irrelevant data that might be affecting your systems.

2. Define Your Data Governance Framework

A strong governance framework ensures consistency and accountability. This includes setting rules for data collection, usage, storage, and security.

3. Choose the Right Tools and Technologies

Invest in master data management (MDM) tools that integrate seamlessly with your ERP system. These tools enable:

Centralized data storage

Automated data cleansing

Real-time data updates

4. Involve Key Stakeholders

Data management isn’t an IT-only responsibility. Include leaders from finance, operations, and supply chain departments to ensure holistic alignment.

5. Provide Continuous Training

Educate your employees about the importance of clean data and how their inputs impact larger systems like ERP. A data-driven culture begins with informed employees.
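The audit described in step 1 can be sketched in a few lines of Python. This is a minimal illustration only: the record layout, the field names and the `audit` helper are all assumptions made for the example, not part of any particular ERP or MDM product.

```python
# Hypothetical customer records; the field names are illustrative only.
records = [
    {"id": 1, "name": "Acme Ltd", "email": "sales@acme.example"},
    {"id": 2, "name": "acme ltd ", "email": "sales@acme.example"},
    {"id": 3, "name": "Birch & Co", "email": ""},
    {"id": 4, "name": "Cedar GmbH", "email": "info@cedar.example"},
]

REQUIRED_FIELDS = ("name", "email")


def audit(rows, key_fields=REQUIRED_FIELDS):
    """Return the ids of rows that are incomplete or repeat an earlier row."""
    seen = set()
    duplicates, incomplete = [], []
    for row in rows:
        # Normalise whitespace and case so near-identical entries compare equal.
        key = tuple(str(row.get(f, "")).strip().lower() for f in key_fields)
        if any(not part for part in key):
            incomplete.append(row["id"])
        elif key in seen:
            duplicates.append(row["id"])
        else:
            seen.add(key)
    return {"duplicates": duplicates, "incomplete": incomplete}


report = audit(records)
print(report)
```

A real audit would usually run against the database itself and use fuzzier matching, but even a simple pass like this gives a first count of the records that need cleansing.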

How ERP Systems and Data Management Work Hand-in-Hand

High-Impact Benefits of Starting Data Management Now

Enhanced Business Agility: With structured data, businesses can respond quickly to changes.

Improved Compliance: Regulatory requirements like GDPR and CCPA demand clean and transparent data practices.

Better Customer Experiences: When your systems are fueled by high-quality data, you deliver superior services.

Conclusion

Starting your data management journey may seem daunting, but with the right approach and resources, it can transform your digital transformation and ERP strategy into a powerful business enabler.

To gain deeper insights and actionable advice on this topic, make sure to watch this detailed video here.

Take the first step today — because the future of your business depends on how well you manage your data today!

#digital transformation#data management in erp#erp services#piloggroup#data analytics#data governance#erp solutions provider#data visualization#data visualisation#youtube#data scientist#data management solutions#datadriven#Youtube

0 notes

Text

Deep in the Mediterranean, in search of quantum gravity

A study published in JCAP places new limits on quantum gravity using data from the underwater detector KM3NeT

Quantum gravity is the missing link between general relativity and quantum mechanics, the yet-to-be-discovered key to a unified theory capable of explaining both the infinitely large and the infinitely small. The solution to this puzzle might lie in the humble neutrino, an elementary particle with no electric charge and almost invisible, as it rarely interacts with matter, passing through everything on our planet without consequences.

For this very reason, neutrinos are difficult to detect. However, in rare cases, a neutrino can interact, for example, with water molecules at the bottom of the sea. The particles emitted in this interaction produce a “blue glow” known as Čerenkov radiation, detectable by instruments such as KM3NeT.

KM3NeT (the Cubic Kilometre Neutrino Telescope) is a large underwater observatory designed to detect neutrinos through their interactions in water. It is divided into two detectors, one of which, ORCA (Oscillation Research with Cosmics in the Abyss), was used for this research. It is located off the coast of Toulon, France, at a depth of approximately 2,450 meters.

However, merely observing neutrinos is not enough to draw conclusions about the properties of quantum gravity—we must also look for signs of “decoherence”.

As they travel through space, neutrinos can “oscillate”, meaning they change identity—a phenomenon scientists refer to as flavor oscillations. Coherence is a fundamental property of these oscillations: a neutrino does not have a definite mass but exists as a quantum superposition of three different mass states. Coherence keeps this superposition well-defined, allowing the oscillations to occur regularly and predictably. However, quantum gravity effects could attenuate or even suppress these oscillations, a phenomenon known as “decoherence”.
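To make the idea of suppressed oscillations concrete, here is a simplified two-flavor sketch in Python. It is not the analysis used in the study: real analyses use three flavors and matter effects, and the damping parameter `gamma_per_km` and all numerical values below are assumptions chosen purely for illustration. The textbook two-flavor oscillation probability is multiplied by an exponential damping factor, which is one common phenomenological way of modelling decoherence.

```python
import math


def appearance_probability(L_km, E_GeV, theta, dm2_eV2, gamma_per_km=0.0):
    """Two-flavor appearance probability with an optional damping term.

    L_km: distance travelled (km); E_GeV: neutrino energy (GeV);
    theta: mixing angle (rad); dm2_eV2: mass-squared difference (eV^2);
    gamma_per_km: hypothetical decoherence strength (1/km); 0 means fully coherent.
    """
    phase = 1.267 * dm2_eV2 * L_km / E_GeV      # standard oscillation phase
    damping = math.exp(-gamma_per_km * L_km)    # decoherence washes out the cosine term
    return 0.5 * math.sin(2 * theta) ** 2 * (1 - damping * math.cos(2 * phase))


# Illustrative values only.
for gamma in (0.0, 1e-4, 1e-2):
    p = appearance_probability(L_km=1000.0, E_GeV=5.0, theta=0.8,
                               dm2_eV2=2.5e-3, gamma_per_km=gamma)
    print(f"gamma = {gamma:g} per km -> P = {p:.4f}")
```

With `gamma_per_km = 0` the expression reduces to the usual sin²(2θ)·sin²(1.267 Δm² L/E). As the damping grows, the oscillating term fades and the probability settles at the constant average ½·sin²(2θ), which is the kind of suppression an experiment would look for.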

“There are several theories of quantum gravity which somehow predict this effect because they say that the neutrino is not an isolated system. It can interact with the environment,” explains Nadja Lessing, a physicist at the Instituto de Física Corpuscular of the University of Valencia and corresponding author of this study, which includes contributions from hundreds of researchers worldwide.

“From the experimental point of view, we know the signal of this would be seeing neutrino oscillations suppressed.” This would happen because, during its journey to us—or more precisely, to the KM3NeT sensors at the bottom of the Mediterranean—the neutrino could interact with the environment in a way that alters or suppresses its oscillations.

However, in Lessing and colleagues’ study, the neutrinos analyzed by the KM3NeT/ORCA underwater detector showed no signs of decoherence, a result that provides valuable insights.

“This,” explains Nadja Lessing, “means that if quantum gravity alters neutrino oscillations, it does so with an intensity below the current sensitivity limits.” The study has established upper limits on the strength of this effect, which are now more stringent than those set by previous atmospheric neutrino experiments. It also provides indications for future research directions.

“Finding neutrino decoherence would be a big thing,” says Lessing. So far, no direct evidence of quantum gravity has ever been observed, which is why neutrino experiments are attracting increasing attention. “There has been a growing interest in this topic. People researching quantum gravity are just very interested in this because you probably couldn’t explain decoherence with something else.”

IMAGE: The visualisation of a simulated event in the KM3NeT/ORCA detector. Credit CC BY-NC 4.0 https://creativecommons.org/licences/by-nc/4.0 Credits KM3NeT

9 notes


Note

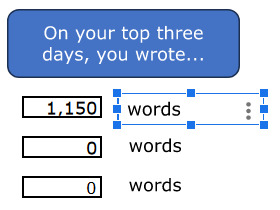

Thank you for all your help with this! your solution for the project totals worked perfectly :D

As for the difficulty deleting projects, thanks again for looking into it. I’m using Google sheets, and when I delete a project - any of them - the top stats sheet starts looking like this:

(The clipped words image was already like that) The chart sheet otherwise deleted the data just fine, but looks like this:

And totals like this:

The 2024 word count in yearly comps has that same REF image instead of a number, and the chart instead says ‘Add a series to start visualising your data’. In totals and comps data the information for the deleted project also shows the REF message, unless I manually delete that column as well (idk if that’s an issue but best be thorough). Daily graph and chart remove the data fine, and if I delete a project that has its own sheet it’s replaced with the add a series message, which I assume is supposed to happen. I haven’t noticed any other adverse effects.

(Seriously, thank you for helping me so much. You’re amazing, and even if you don’t find/know a work around for this you’ve been a huge help!)

Ah, okay, I seeeeeee. So, anywhere you see that #REF! message, it's because it's trying to reference information that's not there any more. In this case, you did the right thing by deleting the project column from the Totals sheet! If you delete a project column in the Daily sheet, you also need to delete its corresponding columns in the Comps. Data and Totals sheets. This is because both of those sheets pulled information from the deleted project column in the Daily sheet, and they'll still be trying to fulfil a formula that no longer has the necessary information to work.

Once those columns are deleted, follow the instructions for deleting a series from a chart on page 7 of the instructions booklet, and delete the project's series from the Totals chart.

Something you could do in the future, instead of deleting project columns from the Daily sheet, is to hide them (right-click on the letter above the column and choose "hide column" from the drop-down menu). Choosing this method means that a) you don't need to delete any project columns elsewhere, and b) "adding" more columns, should you ever need them, becomes a matter of unhiding the column. The only change you'll need to make then is deleting the project series from the Totals chart, as explained in the previous paragraph.

(And yes, deleting a project column will affect its corresponding sheet, if it has one. Pages 23–24 of the instructions explain how to make a new project-specific sheet, and you can follow those same instructions to link the sheet to a different project column, if you want to. Otherwise, feel free to hide/delete the sheet.)

However. I noticed that for some reason, removing any data from the totals chart converts the previously-invisible line for the totals into a stacked column instead. To fix that, right-click on the chart and select "Chart style" to open the Chart editor window. Then, under the "customise" tab, scroll down and click on the option that says "series". Pick "Totals" from the drop-down menu of series, and change where it says "column" to "line" and set the line opacity to 0 (Shown in the left image).

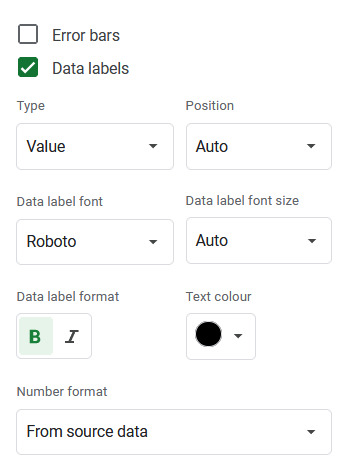

Below this section, there is a list of three tick-boxes labelled Error bars, Data labels and Trend line. Click the box for Data labels, and make sure the text colour is set to black. You'll then get a number for total words written that month appearing above the stacked columns. (Shown in right image)

Fixing the Top Stats sheet:

In the case of the current streak counter, the issue is in two places: with the formula that counts the current streak:

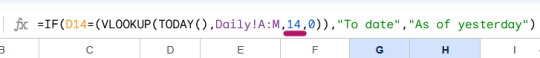

and the formula that decides whether to say "to date" or "as of yesterday":

I've underlined the bits you'll need to change. You can find more details on how to do this and how it works on pages 9–10 (Top Sheets explanation) and 18 (how the streak counter works) of the instructions document.

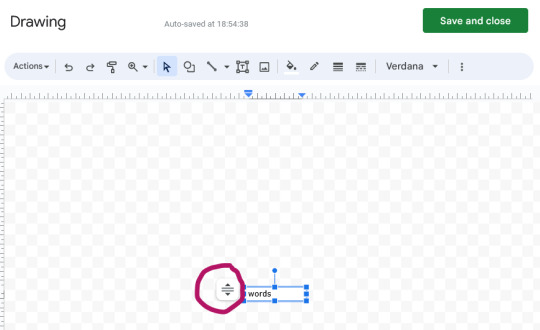

also. if those squashed "words" textboxes are annoying you (they would drive me nuts lol), I think the problem is that the text box isn't set to resize itself with the text (even though I thought i did that...). Try clicking on the box, then on the little three dots symbol and choose "Edit" from the menu that appears. When the Drawing window opens, select that icon next to the box (circled), and choose "Resize shape to fit text".

8 notes


Text

Future of data science?

Exciting developments await the future of data science as it evolves. Data scientists can expect to see greater integration of artificial intelligence (AI) and machine learning, facilitated by automation and AutoML, which will make data analysis more accessible. Proficiency in big data and real-time analytics will be crucial as the volume and complexity of data continue to expand. Additionally, ethical considerations such as privacy protection, bias detection, and responsible AI implementation will remain important focal points. Furthermore, data science will involve increased interdisciplinary collaboration that bridges technical expertise with domain knowledge and ethics.

Explainable AI will be necessary for building trust and meeting compliance requirements, while edge and IoT analytics will cater to the growing number of IoT devices. Data visualisation and storytelling skills will remain vital. Data scientists will need to adapt as organisations shift to hybrid and multi-cloud environments, and the field will require continuous learning. Quantum computing may bring the next leap for data science. Customisation and personalisation will also define the field, delivering specific solutions for particular business needs. In the data-driven era, it is the data scientists who will excel.

If you are considering preparing for this promising future, Edure provides a wide variety of comprehensive data science courses designed for students of all skill levels. Edure has a comprehensive curriculum that includes introductory courses in basic data science concepts, tools and techniques for beginners and advanced programs targeted for experienced professionals. These courses will give you the knowledge and the skills that will enable you to succeed in the data-driven world of tomorrow. Therefore, whether a beginner or an expert desiring to remain up to date in data science, Edure courses are valuable for launching into or deepening one’s involvement in this promising domain.

For more information, contact us

Edure | Learn to Earn

Aristo Junction, Thampanoor,

Thiruvananthapuram, Kerala , 695001

+91 9746211123 +91 9746711123

2 notes


Text

The Role of Business Intelligence in ERP Software

Enterprise Resource Planning (ERP) software providers like STERP (Shanti Technology), an excellent ERP software company in Madhya Pradesh, understand the value of Business Intelligence (BI) within this context. STERP, a leading provider of manufacturing ERP software in Indore, recognises the potential of business intelligence (BI) to turn collected data into a competitive advantage.

Business intelligence (BI) in the context of enterprise resource planning (ERP) refers to the processes involved in collecting, preparing, and analysing data from a wide variety of ERP subsystems. This suite of state-of-the-art methods and technologies produces insightful reports that may be used for strategic planning, performance monitoring, and operational optimisation.

STERP, a leading ERP software company in Madhya Pradesh and one of the top ERP solution providers in Indore, understands the significance of a robust BI system for monitoring key performance indicators (KPIs), tracking trends, identifying patterns, and uncovering hidden opportunities and risks. Data analytics can be put to use in businesses for potential gains in productivity, cost savings, customer satisfaction, and innovation.

STERP, one of the most distinguished ERP software companies in Madhya Pradesh, promises cutting-edge BI tools in all of its ERP packages. By providing intuitive dashboards, customizable reports, and real-time analytics, STERP provides its customers with a bird's eye view of their operations. Let's explore the role that business intelligence plays in enterprise resource planning systems.

Data Integration and Consolidation for Informed Decision-Making:

Integrated and consolidated data is crucial for businesses like STERP, one of the most reliable ERP software providers in Madhya Pradesh, to make well-informed decisions. As an industry leader in manufacturing ERP software in Indore, STERP is well aware of the need for combining and integrating data from several sources.

The term "consolidation" refers to the process of collecting and harmonizing data from several locations. In its capacity as one of the leading ERP software firms in Madhya Pradesh and ERP solution providers in Indore, STERP facilitates the consolidation of data from disparate sources into a single repository. Data centralization ensures that all firm decision-makers and executives are using the same, reliable information.
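As a rough illustration of consolidation, the sketch below merges records from two hypothetical sources into one repository keyed by a shared identifier. The `consolidate` function, the `sku` key and the sample data are assumptions for the example and do not describe how STERP's software works.

```python
def consolidate(*sources, key="sku"):
    """Merge records from several sources into one dict keyed by `key`.

    The first value seen for a field wins, so earlier sources take priority.
    """
    repository = {}
    for source in sources:
        for record in source:
            merged = repository.setdefault(record[key], {})
            for field, value in record.items():
                merged.setdefault(field, value)
    return repository


# Hypothetical extracts from two separate systems.
sales = [{"sku": "A1", "units_sold": 40}, {"sku": "B2", "units_sold": 15}]
inventory = [{"sku": "A1", "on_hand": 120}, {"sku": "C3", "on_hand": 7}]

repo = consolidate(sales, inventory)
for sku in sorted(repo):
    print(sku, repo[sku])
```

Every decision-maker who reads from `repo` sees the same merged record for item A1, rather than one figure in the sales system and another in inventory.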

Reporting and Analytics for Performance Monitoring:

In order to generate reports, it is necessary to construct and present organised data in an understandable and unambiguous way. STERP's ERP software makes it simple for businesses to tailor reports to their specific requirements, allowing for deeper analysis of sales, inventory, production, and finances.

By evaluating data and providing reports, STERP, a well-known manufacturing ERP software provider in Indore, aids numerous firms in gaining insight into their processes. Real-time dashboards and visualisations allow executives to identify bottlenecks, allocate resources effectively, streamline processes, and make educated strategic decisions.

Predictive Analytics and Forecasting for Strategic Planning:

Strategic decision-making at STERP, a distinct ERP software company in Madhya Pradesh, is significantly influenced by analytics and forecasting. As one of the most distinguished ERP solution providers in Indore, STERP recognises the significance of analytics and forecasting in directing business growth and strategy.

Utilising historical information, statistical algorithms, and machine learning techniques, predictive analytics allows for precise forecasting and prediction. In order to stay ahead of the competition, businesses can use predictive analytics to forecast demand, identify risks, determine the most efficient use of resources, and make other proactive decisions.
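One of the simplest statistical techniques of this kind is a least-squares trend line fitted to past values. The sketch below is only an illustration under that assumption: the `linear_forecast` helper and the monthly demand figures are made up, and real forecasting models also account for seasonality and uncertainty.

```python
def linear_forecast(history, steps_ahead=1):
    """Fit a least-squares line to equally spaced history and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
        / sum((x - mean_x) ** 2 for x in range(n))
    )
    intercept = mean_y - slope * mean_x
    # The last observation sits at x = n - 1; project steps_ahead beyond it.
    return intercept + slope * (n - 1 + steps_ahead)


# Hypothetical monthly demand figures.
monthly_demand = [100, 110, 120, 130]
print(linear_forecast(monthly_demand))
```

A forecast like this is only as good as the assumption that the past trend continues, so it works best as a starting point for planning, not as a guarantee.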

Self-Service BI and Empowering End Users:

Being one of the trusted ERP solution providers in Indore and a top manufacturing ERP software company in Indore, STERP appreciates the importance of self-service BI in empowering end users to make better, more efficient decisions.

Self-service BI allows end users to access and update data without involving IT or data analysts. To make data exploration, report preparation, and insight production accessible to users of all skill levels, STERP offers intuitive interfaces and clear tools. Users are empowered to make decisions at the moment based on accurate data without relying on IT, thanks to self-service BI.

Final Thoughts:

Business intelligence (BI) is crucial in ERP. Companies like STERP (Shanti Technology), a distinct ERP software company in Madhya Pradesh, appreciate the value of BI since it helps them to leverage data for strategic decision-making and planning. When businesses are able to consolidate and integrate their data, they are able to view the big picture of their operations, and the reporting and analytics functions give them insight into KPIs. In addition, businesses can use forecasting and predictive analytics to anticipate future trends, mitigate risks, and seize opportunities. Self-service BI provides end users with straightforward tools to access and analyse data on their own, fostering a culture of data-driven decision-making and increasing productivity.

#Manufacturing ERP software in Indore#ERP Software Company in Madhya Pradesh#ERP solution providers in Indore#ERP software Companies in Madhya Pradesh#manufacturer#ERP system#cloud ERP#ERP solutions#marketing#ERP software#engineering ERP#business#process

6 notes


Text

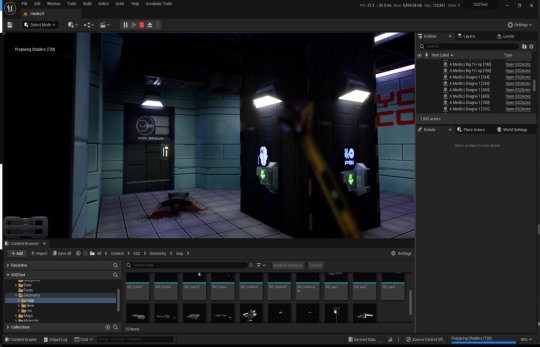

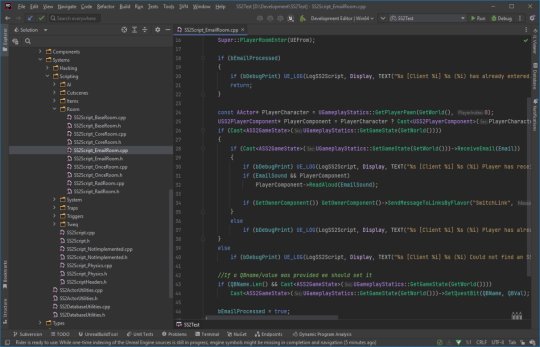

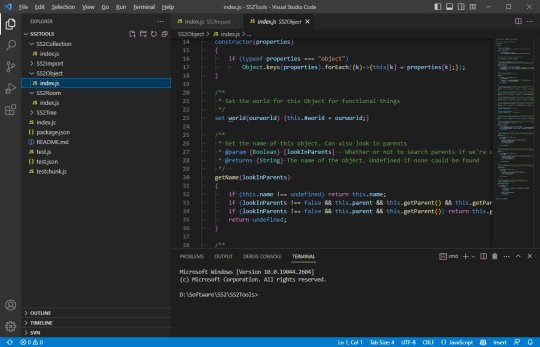

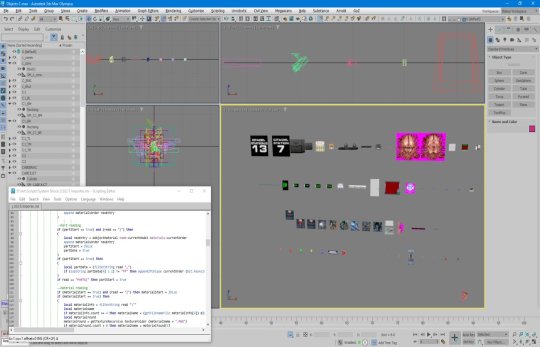

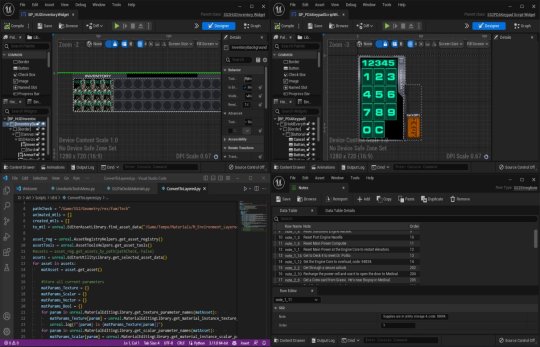

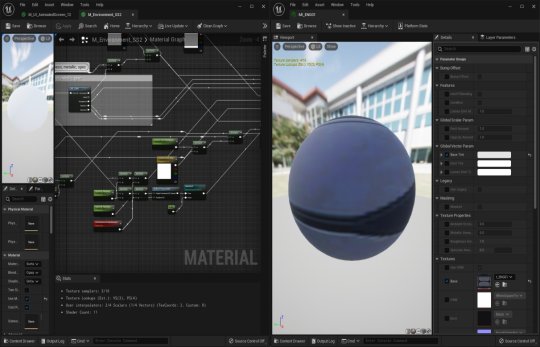

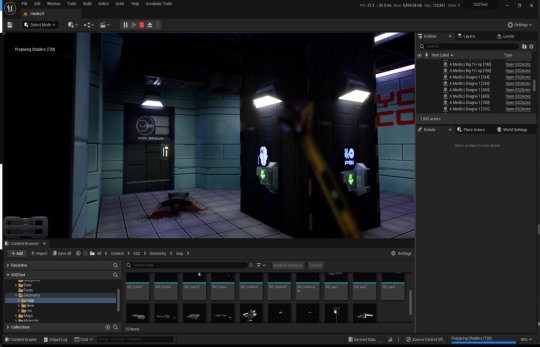

System Shock 2 in Unreal Engine 5

Tools, tools, tools

Back when I worked in the games industry, I was a tools guy by trade. It was a bit of a mix between developing APIs and toolkits for other developers, designing database frontends and automated scripts to visualise memory usage in a game's world, or reverse engineering obscure file formats to create time-saving gadgets for art creation.

I still tend to do a lot of that now in my spare time to relax and unwind, whether it's figuring out the binary data and protocols that makes up the art and assets from my favourite games, or recreating systems and solutions for the satisfaction of figuring it all out.

A Shock to the System

A while back I spent a week or so writing importer tools, logic systems and some basic functionality to recreate System Shock 2 in Unreal Engine 5. It got to the stage where importing the data from the game was a one-click process - I clicked import and could literally run around the game in UE5 within seconds, story-missions and ship systems all working.

Most of Dark engine's logic is supported but I haven't had the time to implement AI or enemies yet. Quite a bit of 3D art is still a bit sketchy, too. The craziest thing to me is that there are no light entities or baked lightmaps placed in the levels. All the illumination you can feast your eyes on is Lumen's indirect lighting from the emissive textures I'd dropped into the game. It has been a fun little exercise in getting me back into Unreal Engine development and I've learnt a lot of stuff as usual.

Here is a video of me playing all the way up to the ops deck (and then getting lost before I decided to cut the video short - it's actually possible to play all the way through the game now). Lots of spoilers in this video, obviously, for those that haven't played the game.


What it is

At its core, it's just a recreation of the various logic-subsystems in System Shock 2 and an assortment of art that has been crudely bashed into Unreal Engine 5. Pretty much all the textures, materials, meshes and maps are converted over and most of the work remaining is just tying them together with bits of C++ string. I hope you also appreciate that I sprinkled on some motion-blur and depth of field to enhance the gameplay a little. Just kidding - I just didn't get around to turning that off in the prefab Unreal Engine template I regularly use.

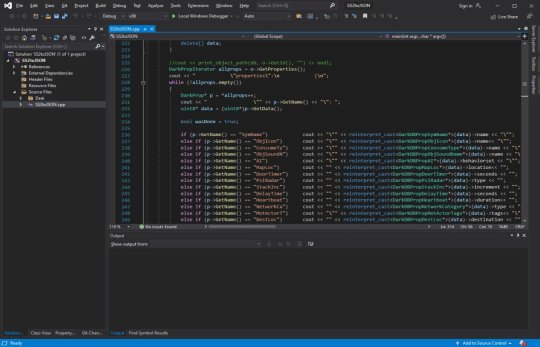

Tool-wise, it's a mishmash of different things working together:

There's an asset converter that organises the art into an Unreal-Engine-compatible pipeline. It's a mix of Python scripting, mind numbingly dull NodeJS and 3dsmaxscript that juggles data. It recreates all the animated (and inanimate) textures as Unreal materials, meshifies and models the map of the ship, and processes the objects and items into file formats that can be read by the engine.

A DB to Unreal converter takes in DarkDBs and spits out JSON that Unreal Engine and my other tools can understand and then brings it into the Engine. This is the secret sauce that takes all the levels and logic from the original game and recreates it in the Unreal-Dark-hybrid-of-an-engine. It places the logical boundaries for rooms and traps, lays down all the objects (and sets their properties) and keys in those parameters to materialise the missions and set up the story gameplay.

Another tool also weeds through the JSON that's been spat out previously and weaves it into complex databases in Unreal Engine. This arranges all the audio logs, mission texts and more into organised collections that can be referenced and relayed through the UI.

The last part is the Unreal Engine integration. This is the actual recreation of much of the Dark Engine in UE, ranging all the way from the PDA that powers the player's journey through the game, to the traps, buttons and systems that bring the Von Braun to life. It has save-game systems to store the state of objects, inventories and all your stats, levels and progress. This is all C++ and is built in a (hopefully) modular way that I can build on easily should the project progress.
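To give a feel for the JSON-sorting step, here is a small Python sketch. It is purely illustrative: the JSON layout, the `type` field and the `build_collections` helper are invented for the example and are not the actual export format or code from the project.

```python
import json
from collections import defaultdict

# Hypothetical export; the real tool's JSON schema is not shown in the post.
exported = """
[
  {"type": "audio_log", "id": "log_01", "text": "placeholder log text"},
  {"type": "mission_text", "id": "obj_01", "text": "placeholder objective"},
  {"type": "audio_log", "id": "log_02", "text": "another placeholder log"}
]
"""


def build_collections(raw_json):
    """Group exported entries by their type so the UI can look them up."""
    collections = defaultdict(list)
    for entry in json.loads(raw_json):
        collections[entry["type"]].append(entry)
    return dict(collections)


collections = build_collections(exported)
for kind, entries in collections.items():
    print(kind, [entry["id"] for entry in entries])
```

In the real pipeline the grouped entries would end up in Unreal Engine data assets; the grouping idea is the same.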

Where it's at

As I mentioned, the levels themselves are a one-click import process. Most of Dark engine's logic, quirks and all, is implemented now (level persistence and transitions, links, traps, triggers, questvars, stats and levelling, inventory, signals/responses, PDA, hacking, etc.) but I still haven't got around to any kind of AI yet. I haven't brought much in the way of animation in from the original game yet, either, as I need to work out the best way to do it. I need to pull together the separate systems and fix little bugs here and there and iron it out with a little testing at some point.

Lighting-wise, this is all just Lumen and emissive textures. I don't think it'll ever not impress me how big of a step forward this is in terms of realistic lighting. No baking of lightmaps, no manually placing lighting. It's all just emissive materials, global/indirect illumination and bounce lighting. It gets a little overly dark here and there (a mixture of emissive textures not quite capturing the original baked lighting, and a limitation in Lumen right now for cached surfaces on complex meshes, aka the level) so could probably benefit from a manual pass at some point, but 'ain't nobody got time for that for a spare-time project.

The Unreal Editor showcasing some of the systems and levels.

Where it's going

I kind of need to figure out exactly what I'm doing with this project and where to stop. My initial goal was just to have an explorable version of the Von Braun in Unreal Engine 5 to sharpen my game dev skills and stop them from going rusty, but it's gotten a bit further than that now. I'm also thinking of doing something much more in-depth video/blog-wise in some way - let me know in the comments if that's something you'd be interested in and what kind of stuff you'd want to see/hear about.

The DB to JSON tool that churns out System Shock 2 game data as readable info

Anyway - I began to expand out with the project and recreate assets and art to integrate into Unreal Engine 5. I'll add more as I get more written up.

#game development#development#programming#video game art#3ds max#retro gaming#unreal engine#ue5#indiedev#unreal engine 5#unreal editor#system shock 2#system shock#dark engine#remake#conversion#visual code#c++#json#javascript#nodejs#tools#game tools#Youtube

1 note


Text

AN ECONOMIC LOOK ON RUSSIA

The solution in 3 words

I could say

1) von

2) der

3) laid

Since I didn't do the other 16 sanctions

Honestly, reading about recession odds in the Guardian propaganda.. misled me

But it's true I'm not informed enough on Russia's economy

Yet some helpful concepts may be applied

Data:

- Russia's economy is stable by itself

And not unbalanced towards war

That's a good base because it points at external reasons

- globally the markets systems are dividing and isolating themselves

From the EU sanctions feud

To the USA tariff aggressions

Markets are now weapons and that's not Russia controlled

- these aggressions are targeting Russia too, but if zooming out they don't look like reactions to something to blame on Russia; they appear as a blaming pattern against Russia regardless of its behaviour, which in any case doesn't have to be justified or pre-approved by capitalist countries

+

but there's another FACT

That keeps being minimised, globally, and almost mocked at

And it's not yet visible because, attention, it is

CONSEQUENTIAL

The Fed's WALLER today dared a Saudi statement

"Tariffs impact on inflation are short and light"

BOOOOOMBOCLAT WHAT A SLUT

Because

And it's from the same group of personal interests that is demanding to ban me for "influence on markets"

But claiming tariffs are inert WTF 🤌🏽,

They ignore that

:

Tariffs influence economy

Therefore there's another data:

- global economic disruption by macroeconomics mishandling

And I hereby state such disruption is a SAUDI STRATEGY IMPLEMENTED BY USA AND EU

PROVINCES OF SALMAN

_____

Actions recommended

1) INTERNAL

- sectors with unfilled market spots

Internally the production can be expanded to fit marketing targets now out:

• Affordability the guideline but not only

• Innovation can be also imported and without sanctions

• increase of wages to sustain the demand now more important than inflation

For instance a new.. innovative car model scalable in pricing but low in costs

I'll design it.. we could make it in Morocco 😎

A big river is often made of many and smaller affluents.. to visualise it

It's an enormous job.. agreed ..DIVERSIFYING

2) EXTERNAL

- access to friendly markets

Linking it to the internal set

Because what is good can be good anywhere

- natural resources

Must become a PR card to the world without threats to Russia, which has to invest in deal making

- Geopolitical obstacles

Are the most important factor

And can be rooted in Arabia and the governments it controls however good they SIMULATE else

They SIMULATE but the fast play discovers them

• some geopolitical entities must be freed and stabilised: EU and USA by evidence

I repeat: by evidence

3) CURRENCY (ENGINEERING)

this is the most interesting and courageous try

Because

Increasing wages and sustaining internal demand requires cash, DISTRIBUTED

And the risk is

- inflation

And

- currency depreciation

The first is bad but in this competitive period the second isn't

To control Inflation

Recommending

• a CENTRALISED PRICE CONTROL SYSTEM

Prolly a first or so in economy

• coerced credit

Explaining: 400 BN seized can be for the time range and the geopolitical conditions still considered available 😎

If years from now are definitely lost then years from now it will be accounted

It's a weight in a weighter dish, the inflation

The leverage is over the trillion.. but bankers are better at those maths

I think this is in synthesis a complete even if not detailed approach for an immediate reboot

But

A more long termed strategy should frame it

😎✊🏿 Compañero

PS:

Salman in this moment

It's like a prostitute fearing to lose energy customers

So

If Russia pulls up the mini..

Salman will implode in anger

Why don't you give it a try 😎

0 notes

Text

How Do Data Visualisation Services Turn Raw Data into Strategic Insights?

Data visualisation services transform raw, complex data into insightful visuals like charts, dashboards, and graphs. Given the volume of data businesses generate daily, this makes information easier to interpret and enables faster, smarter decisions.

How Can a Data Visualization Company Help Your Business?

A professional data visualization company like Dataplatr offers the tools and expertise needed to convert data into actionable visuals. We tailor visual storytelling to your business goals—whether it's sales performance, customer engagement, or operational efficiency—ensuring your teams understand the story behind the numbers.

The Role of Data Visualisation in Modern Business

Data visualization consulting plays a transformative role. By representing data through intuitive visuals like dashboards, charts, and graphs, organizations can uncover trends, identify anomalies, and monitor performance in real time.

What Makes Data Visualization Consulting Essential for Strategic Growth?

Through data visualization consulting, businesses gain clarity in their decision-making process. Consultants identify key data sources, design effective dashboards, and help integrate them into your workflow. Our consulting approach ensures your team can track trends, risks, and opportunities.

Benefits of Data Visualization Consulting Services

Here are some top advantages of investing in data visualization consulting services:

Gain clear insights from massive datasets

Support real-time decision-making

Communicate data-driven results effectively

Boost stakeholder engagement

Align teams with visual KPIs and performance tracking

Why Choose Data Visualisation Consulting Services?

While internal tools can display basic visuals, data visualization consulting services offer a tailored approach aligned with your business goals. From tool selection (e.g., Looker, Tableau, Power BI) to implementation and custom dashboard development, Dataplatr ensures your data tells the right story to the right people at the right time.

Dataplatr: Your Strategic Data Visualization Partner

At Dataplatr, we combine industry expertise with cutting-edge technology to deliver powerful, intuitive data visualisations. As a reliable data visualization company, our solutions are scalable, interactive, and designed to achieve deeper business value.

0 notes

Text

Spend Visualization | Procurement Data Visualisation Analyst | PI Data Analytics

Explore the advantages of Procurement Data Visualisation with PI Data Analytics. Our innovative solutions turn raw data into actionable insights, allowing procurement teams to identify trends and areas for improvement. Data-driven decisions lead to better results.

0 notes

Text

Data visualization solutions are software tools and platforms that enable users to create meaningful visual representations of data. These solutions range from simple charting tools to sophisticated analytics platforms that can handle large datasets and create interactive visualizations. They are essential for businesses and organizations seeking to uncover insights, communicate trends, and make data-driven decisions more effectively. Popular examples include Tableau, Power BI, Google Data Studio (now Looker Studio), and D3.js, each offering unique features tailored to different user needs and levels of technical expertise.

0 notes

Text

Role of Technology in Enhancing Commercial Architectural Design

In today’s rapidly evolving construction industry, technology plays a pivotal role in reshaping how commercial spaces are conceptualized, designed, and delivered. The field of architectural commercial design has witnessed significant transformation due to cutting-edge tools and software, which enhance creativity, precision, and collaboration. Among these, Building Information Modeling (BIM) stands out as a revolutionary technology empowering architects, engineers, and builders worldwide.

In this blog, we look at how technology enhances commercial architectural design, with a particular emphasis on the influence of BIM and its related services. Whether you optimize your project with advanced design solutions or partner with a professional architectural BIM service company, the right technology can bring efficiency and success to your practice.

How Does Architectural Commercial Design Benefit from Technology?

Commercial architectural design is about creating commercial buildings that work for their users and for the business while remaining aesthetically pleasing. Whether through BIM or any other design process, technology allows design teams to move beyond 2D drawings into smarter, integrated ways of working for better results.

The Power of BIM in Commercial Architectural Design

BIM, short for Building Information Modeling, is a time-tested digital practice that uses intelligent 3D models of a building to visualise and manage every stage of a project, from concept to completion. BIM architectural services have become the de facto standard for commercial projects that need to lower risks, ensure accuracy, and maximize resources.

True 3D Visualisation: BIM offers precise and realistic 3D models that allow your architect and client to visualize exactly how a commercial space will appear, so they can make the best possible decisions early in the design process.

Enhanced Collaboration: By combining data from multiple disciplines, architectural BIM design services in Dubai and other regions bring architects, engineers, contractors, and clients together in close teamwork.

Clash Detection and Problem Solving: BIM software performs clash detection, identifying spaces where different systems such as electrical, plumbing, and structural elements might interfere with each other, thereby helping to avoid expensive delays and rework during construction.

Quick Turnaround on Project Status: Architectural 3D BIM services are useful for scheduling, cost estimation, and material procurement, keeping the project on track in terms of both time and cost.
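To make the clash detection idea above more concrete, here is a minimal Python sketch. It assumes each building element is simplified to an axis-aligned bounding box. Real BIM software works on detailed geometry with tolerances, so this only illustrates the principle. The `Box` class and the sample elements are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    """A building element reduced to an axis-aligned bounding box (metres)."""
    name: str
    discipline: str
    min_corner: tuple
    max_corner: tuple


def boxes_overlap(a: Box, b: Box) -> bool:
    """Two boxes clash only if their extents overlap on all three axes."""
    return all(
        a.min_corner[i] < b.max_corner[i] and b.min_corner[i] < a.max_corner[i]
        for i in range(3)
    )


def find_clashes(elements: list) -> list:
    """Return name pairs of overlapping elements from different disciplines."""
    return [
        (a.name, b.name)
        for a, b in combinations(elements, 2)
        if a.discipline != b.discipline and boxes_overlap(a, b)
    ]


# Hypothetical elements: a duct that runs through a structural beam.
elements = [
    Box("duct", "mechanical", (0.0, 0.0, 2.5), (5.0, 0.4, 2.9)),
    Box("beam", "structural", (2.0, -1.0, 2.7), (2.3, 3.0, 3.1)),
    Box("pipe", "plumbing", (0.0, 2.0, 0.0), (5.0, 2.1, 0.1)),
]
print(find_clashes(elements))
```

Checking every pair is fine for a handful of elements. A real model with many thousands of elements would need spatial indexing to keep the search fast.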

Why Partner with an Architectural BIM Service Company?

Selecting the right architectural BIM service provider is key to unlocking the full benefits of BIM. These companies provide customized BIM solutions based on the size and complexity of your project.

Technology Beyond BIM: Other Innovations Enhancing Commercial Design

With BIM at the fore, other technological advancements also help improve architectural commercial design:

Virtual Reality (VR) and Augmented Reality (AR) let both clients and designers experience a virtual walkthrough of commercial spaces before they are built.

Functional Adaptive Design is often facilitated by parametric design software, which allows architects to develop designs that will react and adapt to varying environmental and functional conditions.

Cloud Collaboration Platforms allow geographically separated project teams to work together by enabling real-time communication and sharing documents in a central location, increasing transparency and efficiency.

Architectural BIM Design Service Dubai: A Regional Perspective

Dubai is a global hub for innovative construction, where architectural BIM design services play a crucial role in delivering complex commercial projects efficiently. Local BIM service providers bring expertise in meeting strict regulatory requirements while incorporating cutting-edge design trends that define Dubai’s skyline.

By collaborating with a reliable architectural BIM service company in Dubai, businesses can ensure their commercial architectural design projects benefit from world-class technology and skilled professionals.

Key Benefits of Technology in Architectural Commercial Design

Improved Design Accuracy: Reduces errors and increases the quality of the finished build.

Decreased Project Expenses: Early clash detection and better resource planning reduce the money spent on rework.

Reduced Project Timeline: A highly optimized process cuts down on time spent on design and construction.

Improved Client Engagement: 3D models and VR experiences allow clients to quickly visualize designs, facilitating faster approvals.

Eco-Friendly Solutions: Technology makes it possible to integrate sustainable materials and energy-efficient solutions.

FAQs on Commercial Architectural Design

Q1: What is architectural commercial design?

A1: It is the process of creating functional and aesthetic commercial buildings according to the requirements of any business.

Q2: What are the benefits of BIM architectural services to commercial projects?

A2: They offer accurate 3D modelling, clash detection, improved collaboration, and better project management.

Q3: Why should I choose an architectural BIM service company?

A3: Experts ensure precise modeling, regulatory compliance, and efficient project execution.

Conclusion: Embracing Technology to Transform Architectural Commercial Design

Technology is no longer optional in today’s architectural landscape—it is essential for delivering innovative, efficient, and sustainable commercial buildings. The integration of Commercial Architectural Design services and other advanced tools empowers architects and clients to collaborate better, reduce risks, and bring visionary designs to life.

Whether you are planning a new commercial project or upgrading an existing one, leveraging BIM and partnering with a trusted architectural BIM service company can significantly enhance your outcomes. Embrace technology today to build smarter, faster, and more sustainably in the world of architectural commercial design.

Stay ahead with the latest insights on engineering innovation, technology, and project success! Follow our LinkedIn page for expert updates on Engineering Solutions that power the future.

#architectural commercial design#bim architectural services#architectural bim design service dubai#architectural 3d bim services#architectural bim services#architectural bim service company

1 note


Text

Unlock Precision with LiDAR Sensor, 3D Mapping, and Drone Survey Solutions by LiDAR Solutions

LiDAR Solutions leads the industry with advanced LiDAR sensor, 3D mapping, and drone survey services tailored for diverse projects. Using state-of-the-art technology, we provide accurate, detailed data that helps businesses make informed decisions efficiently.

Our cutting-edge LiDAR sensor technology captures precise spatial information by emitting laser pulses and measuring the reflected signals. This method allows us to create high-resolution maps of terrain and structures, offering unparalleled detail and accuracy. Whether you’re working on environmental assessments or infrastructure development, our LiDAR sensor applications deliver valuable insights.
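As a rough illustration of the pulse-and-reflection principle described above, the distance to a surface can be estimated from the round-trip time of a laser pulse. The Python sketch below assumes a simple time-of-flight model in vacuum and ignores atmospheric effects and sensor calibration. The function name is illustrative and not part of any vendor's software.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Estimate the distance (metres) to the surface that reflected a pulse."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A pulse that returns after one microsecond reflects off a surface
# roughly 150 m away.
print(range_from_round_trip(1e-6))
```

Repeating this measurement across many pulses and directions is what yields the point data from which terrain and structure maps are built.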

Combining this technology with 3D mapping, we generate comprehensive digital models that represent real-world environments in stunning detail. Our 3D mapping services support urban planning, construction, mining, and more by visualising complex surfaces and volumes with ease. This data helps you plan effectively and identify potential challenges before they arise.

Our drone survey services complement our LiDAR and mapping offerings by capturing aerial perspectives quickly and safely. Using UAVs equipped with advanced sensors, we perform thorough site inspections and data collection even in hard-to-access areas. Our drone survey solutions save time, reduce costs, and improve safety compared to traditional methods.

At LiDAR Solutions, we customise every project to fit your needs, ensuring seamless integration of LiDAR sensor, 3D mapping, and drone survey data into your workflow. Our experienced team guides you from consultation to delivery, providing expert support throughout.

If you want accurate, reliable spatial data for your project, trust LiDAR Solutions for your LiDAR sensor, 3D mapping, and drone survey needs. Reach out today and discover how our technology-driven services can elevate your next project with precision and efficiency.

0 notes

Text

Mastering Markets with Option Strategies and Gift Nifty Insights

In today’s rapidly changing trading landscape, finding the right tools and strategies can make the difference between profit and loss. One such tool gaining popularity among traders is an option strategy builder, an intelligent solution designed to simplify complex derivatives strategies. At the same time, Gift Nifty is becoming a key indicator for global sentiment on Indian equities, playing a vital role in pre-market analysis. Understanding both of these in the early stages of your trading journey can provide a major edge in navigating the markets effectively.

An option strategy builder is essential for anyone involved in options trading, especially for those who wish to simulate, test, and analyze different combinations without committing actual capital. This tool allows traders to visualise potential profits, losses, breakeven points, and risk levels. In parallel, Gift Nifty—formerly known as SGX Nifty—offers insights into the possible direction of the Indian stock market before it opens, making it an important factor in early decision-making.

Combining the insights from Gift Nifty with strategic planning via an option strategy builder provides a powerful approach. For example, if Gift Nifty is trading significantly higher overnight, a trader might consider bullish option strategies like bull call spreads or put credit spreads using the builder to forecast potential outcomes. These tools are especially useful for creating a balanced, data-driven approach before the market opens.
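To show the kind of calculation a strategy builder performs for the bull call spread mentioned above, here is a minimal Python sketch of its payoff at expiry. It assumes a single unit of the underlying and ignores fees, lot sizes, and early exercise. The strikes and premium are made-up numbers, and the function names are illustrative rather than taken from any platform.

```python
def bull_call_spread_payoff(spot: float, long_strike: float,
                            short_strike: float, net_debit: float) -> float:
    """Profit or loss at expiry for one bull call spread (per unit)."""
    if not long_strike < short_strike:
        raise ValueError("long strike must be below the short strike")
    long_call = max(spot - long_strike, 0.0)    # call bought at lower strike
    short_call = max(spot - short_strike, 0.0)  # call sold at higher strike
    return long_call - short_call - net_debit


def bull_call_spread_summary(long_strike: float, short_strike: float,
                             net_debit: float) -> dict:
    """Key figures a trader would read off the payoff chart."""
    return {
        "max_loss": net_debit,
        "max_profit": (short_strike - long_strike) - net_debit,
        "breakeven": long_strike + net_debit,
    }


# Hypothetical trade: buy the 100 call, sell the 110 call, pay 4 net.
print(bull_call_spread_summary(100.0, 110.0, 4.0))
for spot in (95.0, 104.0, 120.0):
    print(spot, bull_call_spread_payoff(spot, 100.0, 110.0, 4.0))
```

The loss is capped at the premium paid and the profit is capped at the strike width minus that premium, which is why the spread suits a moderately bullish view.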

The biggest advantage of using an option strategy builder is its ability to take emotion out of trading. Many novice traders struggle with impulsive decisions driven by fear or greed. These tools, however, focus entirely on data, risk-reward ratios, and technical analysis. You can enter various market scenarios and let the platform calculate how your strategy would perform. When you integrate this process with Gift Nifty readings, you’re not only reacting to global cues but also shaping your strategies proactively with a clearer perspective.

Understanding market movements through Gift Nifty isn’t just about the numbers—it’s about the sentiment behind them. Global investors use Gift Nifty as a barometer of confidence in the Indian economy. A sudden surge or dip can signify shifting investor expectations and prompt re-evaluation of option strategies. In this way, traders who monitor this pre-market indicator and pair it with strategic simulations tend to develop more disciplined and effective trading habits.

Another benefit of a solid strategy builder is the ability to learn through trial without loss. You can explore scenarios like market neutrality, extreme volatility, or calm sessions. Pairing such simulations with Gift Nifty movements gives traders a full-circle view—what’s driving the market and how they might position themselves without being caught off guard. This leads to better preparation and fewer surprises during live market hours.

Intradayscreener.com is one platform where traders can refine such approaches. The site offers an intuitive interface and powerful analytics that suit both beginners and experienced traders. It empowers users to dig deeper into data and set up actionable strategies aligned with market sentiments. What’s most valuable is the ability to combine real-time global indicators like Gift Nifty with calculated strategies for options trading—all in one place.

Of course, trading is not about following signals blindly. It’s about being prepared. A trader who enters the market with a pre-defined plan crafted using an option strategy builder is often more confident and patient. They know their entry, exit, and potential loss limits well in advance. When these strategies are backed by Gift Nifty trends, it gives the added advantage of a macro-level market view before making a single move.

In conclusion, mastering the market requires more than just luck or instinct. It requires preparation, analysis, and the right tools. When you bring together the precision of an option strategy builder and the global insights provided by Gift Nifty, you begin to develop an edge that most casual traders lack. Platforms like intradayscreener.com are helping more traders discover this advantage by offering the resources needed to strategize smartly and act confidently. By combining intelligence and insight, your trading journey can evolve from reactive guesses to proactive mastery.

0 notes