#Express SDK

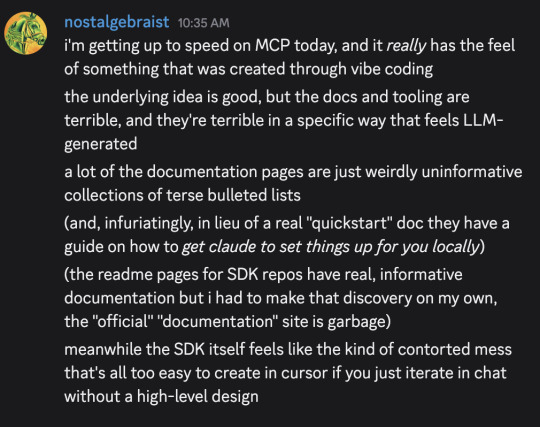

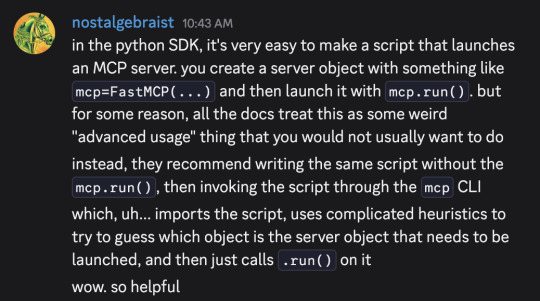

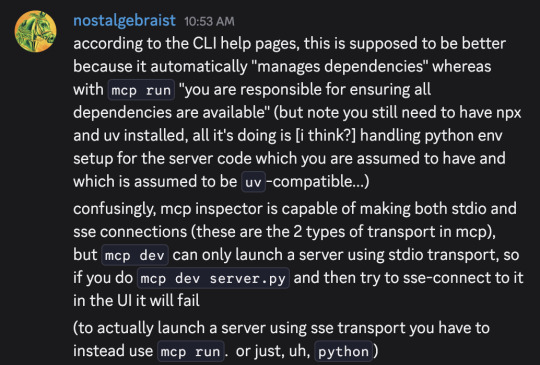

I typed out these messages in a discord server a moment ago, and then thought "hmm, maybe I should make the same points in a tumblr post, since I've been talking about software-only-singularity predictions on tumblr lately"

But, as an extremely lazy (and somewhat busy) person, I couldn't be bothered to re-express the same ideas in a tumblr-post-like format, so I'm giving you these screenshots instead

(If you're not familiar, "MCP" is "Model Context Protocol," a recently introduced standard for connections between LLMs and applications that want to interact with LLMs. Its official website is here – although be warned, that link leads to the bad docs I complained about in the first message. The much more palatable python SDK docs can be found here.)

EDIT: what I said in the first message about "getting Claude to set things up for you locally" was not really correct, I was conflating this (which fits that description) with this and this (which are real quickstarts with code, although not very good ones, and frustratingly there's no end-to-end example of writing a server and then testing it with a hand-written client or the inspector, as opposed to using with "Claude for Desktop" as the client)

AI Voice Cloning Market Size, Share, Analysis, Forecast, Growth 2032: Ethical and Regulatory Considerations

The AI Voice Cloning Market was valued at USD 1.9 Billion in 2023 and is expected to reach USD 15.7 Billion by 2032, growing at a CAGR of 26.74% from 2024-2032.
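The relationship between the start value, end value, and growth rate quoted above can be checked with a short calculation. This is a minimal sketch: the helper name `cagr` and the choice of nine compounding periods (2023 to 2032) are assumptions for illustration, and the report's own forecast window (2024-2032) may rest on a different base value.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Figures quoted in the paragraph above, in USD billions.
value_2023 = 1.9
value_2032 = 15.7

# Assumption: compounding runs over the 9 years from 2023 to 2032.
implied = cagr(value_2023, value_2032, periods=9)
print(f"Implied CAGR 2023-2032: {implied:.2%}")

# Projecting the 2023 value forward at the quoted 26.74% rate for 9 years.
projected_2032 = value_2023 * (1 + 0.2674) ** 9
print(f"2032 value at 26.74%: USD {projected_2032:.1f} billion")
```

The two results land close to the quoted figures; small differences are expected when the base year or rounding differs.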

The AI voice cloning market is rapidly reshaping the global communication and media landscape, unlocking new levels of personalization, automation, and accessibility. With breakthroughs in deep learning and neural networks, businesses across industries, from entertainment to customer service, are using synthetic voice technologies to enhance user engagement and reduce operational costs. The adoption of AI voice cloning is not just a technological leap but a strategic asset in redefining how brands communicate with consumers in real time.

The market is gaining momentum as ethical concerns and regulatory standards gradually align with its growing adoption. Innovations in zero-shot learning and multilingual voice synthesis are pushing the boundaries of what’s possible, allowing voice clones to closely mimic tone, emotion, and linguistic nuances. As industries continue to explore voice-first strategies, AI-generated speech is transitioning from novelty to necessity, providing solutions for content localization, virtual assistants, and interactive media experiences.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/5923

Key Market Players:

Amazon Web Services (AWS) – Amazon Polly

Google – Google Cloud Text-to-Speech

Microsoft – Azure AI Speech

IBM – Watson Text to Speech

Meta (Facebook AI) – Voicebox

NVIDIA – Riva Speech AI

OpenAI – Voice Engine

Sonantic (Acquired by Spotify) – Sonantic Voice

iSpeech – iSpeech TTS

Resemble AI – Resemble Voice Cloning

ElevenLabs – Eleven Multilingual AI Voices

Veritone – Veritone Voice

Descript – Overdub

Cepstral – Cepstral Voices

Acapela Group – Acapela TTS Voices

Market Analysis

The AI Voice Cloning Market is undergoing rapid evolution, driven by increasing demand for hyper-realistic voice interfaces, expansion of virtual content, and the proliferation of voice-enabled devices. Enterprises are investing heavily in AI-driven speech synthesis tools to offer scalable and cost-effective communication alternatives. Competitive dynamics are intensifying as startups and tech giants alike race to refine voice cloning capabilities, with a strong focus on realism, latency reduction, and ethical deployment. Use cases are expanding beyond consumer applications to include accessibility tools, personalized learning, digital storytelling, and more.

Market Trends

Growing integration of AI voice cloning in personalized marketing and customer service

Emergence of ethical voice synthesis standards to counter misuse and deepfake threats

Advancements in zero-shot and few-shot voice learning models for broader user adaptation

Use of cloned voices in gaming, film dubbing, and audiobook narration

Rise in demand for voice-enabled assistants and AI-driven content creators

Expanding language capabilities and emotional expressiveness in cloned speech

Shift toward decentralized voice datasets to ensure privacy and consent compliance

AI voice cloning supporting accessibility features for visually impaired users

Market Scope

The scope of the AI Voice Cloning Market spans a broad array of applications across entertainment, healthcare, education, e-commerce, media production, and enterprise communication. Its versatility enables brands to deliver authentic voice experiences at scale while preserving the unique voice identity of individuals and characters. The market encompasses software platforms, APIs, SDKs, and fully integrated solutions tailored for developers, content creators, and corporations. Regional growth is being driven by widespread digital transformation and increased language localization demands in emerging markets.

Market Forecast

Over the coming years, the AI Voice Cloning Market is expected to experience exponential growth fueled by innovations in neural speech synthesis and rising enterprise adoption. Enhanced computing power, real-time processing, and cloud-based voice generation will enable rapid deployment across digital platforms. As regulatory frameworks mature, ethical voice cloning will become a cornerstone in brand communication and media personalization. The future holds significant potential for AI-generated voices to become indistinguishable from human ones, ushering in new possibilities for immersive and interactive user experiences across sectors.

Access Complete Report: https://www.snsinsider.com/reports/ai-voice-cloning-market-5923

Conclusion

AI voice cloning is no longer a futuristic concept; it's today’s reality, powering a silent revolution in digital interaction. As it continues to mature, it promises to transform not just how we hear technology but how we relate to it. Organizations embracing this innovation will stand at the forefront of a new era of voice-centric engagement, where authenticity, scalability, and creativity converge. The AI Voice Cloning Market is not just evolving; it’s amplifying the voice of the future.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Face Liveness Detection SDK for Spoof-Proof Security

How a Face Liveness Detection SDK Beats Spoof Attacks

In a world where unlocking a phone with your face feels like sci-fi, the reality behind that magic is far more complex and vulnerable. Face authentication is no longer just about recognizing a face. It’s about knowing whether that face is real, live, and in front of the camera. This is where a Face Liveness Detection SDK steps into the spotlight, acting like a bouncer at the digital door, letting in the real deal and kicking out imposters.

Let’s explore what it is, why it’s important, and how it helps protect both your users and your reputation.

What Is a Face Liveness Detection SDK?

At its core, a face liveness detection SDK is a software development kit that developers can embed into mobile apps, websites, or biometric systems to detect whether a face presented to the camera is a real, live human or a spoof (like a photo, mask, or video replay). It’s not just looking for a face; it’s watching for signs of life.

Key Features

Here’s what most high-quality liveness detection SDKs offer:

Passive liveness detection (no blinking or head-turning needed)

Anti-spoofing against photos, videos, deepfakes, and masks

Real-time verification within seconds

Compatibility with iOS, Android, and web platforms

Compliance with global data privacy standards (like GDPR & CCPA)

This SDK works silently and swiftly in the background, with no cheesy prompts or awkward user interactions required. The goal? Seamless security.

Why Is Liveness Detection Crucial in Modern Face Authentication?

Passwords are passé. Face authentication is now the go-to for many industries, from fintech to healthcare. But where there's convenience, there's also risk. A Face Liveness Detection SDK prevents spoof attacks, which are surprisingly easy without the right tech in place.

Real-World Risks

Consider these scenarios:

Photo attacks: A printed photo or digital image fools basic facial recognition.

Video replay: Someone plays a video of the real user to unlock a system.

3D mask spoofing: Hyper-realistic masks impersonate a user’s facial structure.

In 2022, the iProov Biometric Threat Intelligence Report found that presentation attacks increased by over 300% compared to the previous year.

How a Face Liveness Detection SDK Works (Without Giving Hackers a Cheat Sheet)

Without going too far into the weeds (or tipping off the bad guys), here’s a peek into how these SDKs spot a real face versus a fake:

1. Texture and Light Analysis

Live skin has dynamic textures and reflects light differently than paper, screens, or silicone masks.

2. Micro-Movement Detection

Real faces have involuntary muscle twitches and eye micro-movements. Fakes? Not so much.

3. Depth Mapping

Using 2D or 3D sensors, the SDK checks for depth cues. Flat images just don’t cut it.

4. AI-Powered Behavior Tracking

Machine learning models look for inconsistencies in facial expressions, blinking, and head positioning.

Top Use Cases Across Industries

Face liveness detection isn’t just a cool trick; it’s rapidly becoming a regulatory requirement and a user expectation, particularly in security-conscious industries. Here’s how it’s being used across various sectors:

Banking: Remote account opening. Face liveness detection helps prevent identity fraud by verifying the user’s physical presence.

Healthcare: Telehealth logins. It secures access to patient records, ensuring only authorized users can log in.

E-commerce: KYC (Know Your Customer) during high-value purchases. This helps reduce fraudulent transactions, protecting both customers and merchants.

Education: Online exam proctoring. Face liveness detection ensures the presence of test-takers, maintaining the integrity of the exam process.

Government: ePassport verification. It strengthens border control by confirming the identity of travelers.

Spoiler alert: This isn’t just for big players. Even small apps can (and should) use a lightweight Face Liveness Detection SDK to boost trust and protect their users.

What to Look for in a Face Liveness Detection SDK

Not all SDKs are created equal. If you're evaluating options, here’s a checklist to keep handy:

Accuracy & Speed

Low false positives, fast responses. Users shouldn’t wait 10 seconds to be verified.

Passive Liveness

No weird prompts like “turn your head” or “blink twice.” Less friction = better UX.

Spoof Detection Versatility

Should block everything from printed photos to high-res video replays and 3D masks.

On-Device or Cloud Processing

Choose based on your app’s needs: on-device for privacy, cloud for scalability.

SDK Size & Performance

No bloatware, please. Look for a lean, well-documented SDK that won’t slow down your app.

Compliance & Ethics

Ensure the vendor follows ethical AI practices and is transparent about how data is used.
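For the accuracy item in the checklist above, one common way to compare vendors is to run labeled test presentations through each SDK and compute two error rates: APCER (the share of attack presentations wrongly accepted as live) and BPCER (the share of genuine presentations wrongly rejected). The sketch below assumes you already have pass/fail results from your own test set; the function name and the sample data are made up for illustration and do not come from any particular SDK.

```python
from typing import Iterable, Tuple


def error_rates(results: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Compute (APCER, BPCER) from (is_attack, accepted_as_live) pairs."""
    rows = list(results)
    attacks = [accepted for is_attack, accepted in rows if is_attack]
    bona_fide = [accepted for is_attack, accepted in rows if not is_attack]
    if not attacks or not bona_fide:
        raise ValueError("need at least one attack and one bona fide sample")
    # APCER: fraction of attack presentations that were accepted as live.
    apcer = sum(attacks) / len(attacks)
    # BPCER: fraction of genuine presentations that were rejected.
    bpcer = sum(not accepted for accepted in bona_fide) / len(bona_fide)
    return apcer, bpcer


# Made-up evaluation log: (is_attack, accepted_as_live).
sample_results = [
    (True, False), (True, False), (True, True), (True, False),   # 4 attacks
    (False, True), (False, True), (False, False), (False, True), (False, True),  # 5 genuine
]

apcer, bpcer = error_rates(sample_results)
print(f"APCER: {apcer:.0%}, BPCER: {bpcer:.0%}")
```

Lower is better for both numbers, and the two usually trade off against each other as the decision threshold moves.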

Case Study: How Fintech Apps Are Reducing Fraud with Liveness Detection

A mid-size mobile banking app in Southeast Asia integrated a liveness detection SDK after experiencing a spike in fraudulent account registrations. Within six months:

Fraudulent attempts dropped by 78%

Customer support tickets related to login issues fell by 42%

User trust and app ratings improved by 1.3 stars on average

This wasn’t magic; it was a well-placed layer of invisible security.

Conclusion: Liveness Detection Isn't Optional Anymore

Face recognition is powerful, but without liveness detection, it’s like locking your front door and leaving the window wide open. A face liveness detection SDK offers that missing layer of real-world, real-time protection that separates the serious from the spoofed. And if you’re serious about secure, seamless facial authentication, it’s time to start building smarter. Recognito is here to help you do just that.

Mobile App Development with Flutter: A Complete Guide

Flutter is a free, open-source UI software development kit (SDK) developed by Google. It enables developers to create natively compiled desktop, web, and mobile applications from a single codebase. Flutter is built on the Dart language and is often chosen for its rapid development, expressive UI, and strong performance. If you’re looking to learn the Best Flutter app development course, enroll…

The latest version of Meta's SDK for Quest adds thumb microgestures, letting you swipe and tap your index finger, and improves the quality of Audio To Expression. #AR #VR #Metaverse

Microsoft Azure Cognitive Services for Advanced Business Intelligence

Businesses are always searching for methods to keep ahead of the competition in the fast-paced commercial environment of today. One powerful tool that can help businesses leverage their data for smarter decision-making is Microsoft Azure Cognitive Services. By integrating artificial intelligence (AI) and machine learning (ML) into business operations, organizations can harness insights from their data like never before.

What are Microsoft Azure Cognitive Services?

Microsoft Azure Cognitive Services is a suite of APIs, SDKs, and services that enable businesses to incorporate AI and cognitive capabilities into their applications without needing deep expertise in data science or AI. These services provide advanced machine learning models, natural language processing, computer vision, and speech recognition, empowering businesses to improve customer experiences, automate processes, and extract meaningful insights from their data.

These services can be accessed through the Microsoft Azure Cognitive Services company, which offers an intuitive interface for companies to integrate and customize the services according to their needs.

How Azure Cognitive Services Boosts Business Intelligence

When it comes to advanced business intelligence, Azure Cognitive Services plays a pivotal role in helping businesses uncover trends, patterns, and actionable insights from raw data. Below are some key ways in which these services enhance business intelligence:

Enhanced Data Analysis: Businesses often deal with massive amounts of data, and analyzing it manually can be both time-consuming and prone to errors. Azure Cognitive Services can process and analyze data at scale, identifying trends and insights that human analysts may miss. With capabilities such as predictive analytics, companies can forecast trends and make data-driven decisions that lead to better business outcomes.

Natural Language Processing (NLP): Understanding customer sentiment, reviews, and feedback is critical for businesses today. Azure’s NLP tools can interpret and analyze text, enabling companies to gain insights into customer needs, preferences, and pain points. This can be especially useful in industries such as retail, where customer feedback is valuable for improving products and services.

Computer Vision for Visual Data Insights: Computer vision capabilities within Azure Cognitive Services allow businesses to analyze visual data from images or video. Whether it's identifying objects, reading barcodes, or analyzing facial expressions, this feature can be used in industries like retail, manufacturing, and healthcare for a deeper understanding of customer behavior or operational efficiency.

Speech Analytics and Voice Recognition: Azure Cognitive Services also offers powerful voice recognition tools that can transcribe audio data and provide valuable insights. These tools are particularly useful in sectors like customer service, where understanding the voice of the customer can lead to better service delivery and increased customer satisfaction.

Seamless Integration with Digital Marketing Services

Azure Cognitive Services can also be seamlessly integrated with digital marketing services, enabling businesses to create smarter marketing strategies. By analyzing customer data from social media, website interactions, and other digital channels, companies can refine their marketing efforts, personalize offers, and create content that resonates with their target audience. This level of personalization is crucial for driving conversions and improving customer engagement.

The Bottom Line

For businesses looking to stay competitive, adopting Microsoft Azure Cognitive Services is a smart choice. The platform empowers organizations with advanced AI capabilities, allowing them to make data-driven decisions that drive growth, enhance operational efficiency, and deliver superior customer experiences.

To learn more about how Microsoft Azure Cognitive Services can transform your business, visit the Microsoft Azure Cognitive Services company or explore Digital Marketing services.

Image Recognition with AWS Rekognition: A Beginner’s Tutorial

AWS Rekognition is a cloud-based service that enables developers to integrate powerful image and video analysis capabilities into their applications. With its deep learning models, AWS Rekognition can detect objects, faces, text, inappropriate content, and more with high accuracy. This tutorial will guide you through the basics of using AWS Rekognition for image recognition.

1. Introduction to AWS Rekognition

AWS Rekognition provides pre-trained and customizable computer vision capabilities. It can be used for:

Object and Scene Detection: Identify objects, people, or activities in images.

Facial Recognition: Detect, compare, and analyze faces.

Text Detection (OCR): Extract text from images.

Celebrity Recognition: Identify well-known people in images.

Moderation: Detect inappropriate or unsafe content.

2. Setting Up AWS Rekognition

Before using AWS Rekognition, you need to set up an AWS account and configure IAM permissions.

Step 1: Create an IAM User

Go to the AWS IAM Console.

Create a new IAM user with programmatic access.

Attach the AmazonRekognitionFullAccess policy.

Save the Access Key ID and Secret Access Key for authentication.

3. Using AWS Rekognition for Image Recognition

You can interact with AWS Rekognition using the AWS SDK for Python (boto3). Install it using:

```bash
pip install boto3
```

Step 1: Detect Objects in an Image

```python
import boto3

# Initialize AWS Rekognition client
rekognition = boto3.client("rekognition", region_name="us-east-1")

# Load image from local file
with open("image.jpg", "rb") as image_file:
    image_bytes = image_file.read()

# Call DetectLabels API
response = rekognition.detect_labels(
    Image={"Bytes": image_bytes},
    MaxLabels=5,
    MinConfidence=80
)

# Print detected labels
for label in response["Labels"]:
    print(f"{label['Name']} - Confidence: {label['Confidence']:.2f}%")
```

Explanation:

This script loads an image and sends it to AWS Rekognition for analysis.

The API returns detected objects with confidence scores.
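To make the shape of that response concrete, here is a small offline sketch that filters and sorts labels from a DetectLabels-style dictionary. The helper name `labels_above` and the sample values are made up for illustration; only the `Labels`, `Name`, and `Confidence` keys mirror the fields used in the script above.

```python
from typing import Any


def labels_above(response: dict[str, Any], threshold: float) -> list[tuple[str, float]]:
    """Return (name, confidence) pairs at or above threshold, highest first."""
    pairs = [
        (label["Name"], label["Confidence"])
        for label in response.get("Labels", [])
        if label["Confidence"] >= threshold
    ]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Hand-written sample shaped like a DetectLabels response (values are made up).
sample_response = {
    "Labels": [
        {"Name": "Dog", "Confidence": 98.7},
        {"Name": "Pet", "Confidence": 98.7},
        {"Name": "Grass", "Confidence": 85.2},
        {"Name": "Ball", "Confidence": 81.4},
    ]
}

for name, confidence in labels_above(sample_response, threshold=90):
    print(f"{name}: {confidence:.1f}%")
```

Filtering on the client side like this lets you request labels once with a low `MinConfidence` and apply different thresholds later without calling the API again.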

Step 2: Facial Recognition in an Image

To detect faces in an image, use the detect_faces API.

```python
# Reuses the rekognition client and image_bytes from Step 1
response = rekognition.detect_faces(
    Image={"Bytes": image_bytes},
    Attributes=["ALL"]  # Get all facial attributes
)

# Print face details
for face in response["FaceDetails"]:
    print(f"Age Range: {face['AgeRange']}")
    print(f"Smile: {face['Smile']['Value']}, Confidence: {face['Smile']['Confidence']:.2f}%")
    print(f"Emotions: {[emotion['Type'] for emotion in face['Emotions']]}")
```

Explanation:

This script detects faces and provides details such as age range, emotions, and facial expressions.

Step 3: Extracting Text from an Image

To extract text from images, use detect_text.

```python
# Reuses the rekognition client and image_bytes from Step 1
response = rekognition.detect_text(Image={"Bytes": image_bytes})

# Print detected text
for text in response["TextDetections"]:
    print(f"Detected Text: {text['DetectedText']} - Confidence: {text['Confidence']:.2f}%")
```

Use Case: Useful for extracting text from scanned documents, receipts, and license plates.

4. Using AWS Rekognition with S3

Instead of uploading images directly, you can use images stored in an S3 bucket.

```python
# Reuses the rekognition client from Step 1
response = rekognition.detect_labels(
    Image={"S3Object": {"Bucket": "your-bucket-name", "Name": "image.jpg"}},
    MaxLabels=5,
    MinConfidence=80
)
```

This approach is useful for analyzing large datasets stored in AWS S3.
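As a rough sketch of what analyzing a set of S3 objects might look like, the function below calls `detect_labels` once per object key and collects the label names. The function name `label_s3_images` and the `FakeRekognition` stand-in are hypothetical; the stand-in exists only so the example runs offline, and with real credentials you would pass `boto3.client("rekognition", ...)` instead.

```python
from typing import Any, Iterable


def label_s3_images(client: Any, bucket: str, keys: Iterable[str],
                    min_confidence: float = 80.0) -> dict[str, list[str]]:
    """Call detect_labels once per S3 object key and collect label names."""
    results: dict[str, list[str]] = {}
    for key in keys:
        response = client.detect_labels(
            Image={"S3Object": {"Bucket": bucket, "Name": key}},
            MaxLabels=5,
            MinConfidence=min_confidence,
        )
        results[key] = [label["Name"] for label in response["Labels"]]
    return results


class FakeRekognition:
    """Offline stand-in that mimics only the part of the response used here."""

    def detect_labels(self, Image, MaxLabels, MinConfidence):
        key = Image["S3Object"]["Name"]
        return {"Labels": [{"Name": f"label-for-{key}", "Confidence": 99.0}]}


# Hypothetical bucket and keys; swap in a real client to call the service.
print(label_s3_images(FakeRekognition(), "your-bucket-name", ["a.jpg", "b.jpg"]))
```

Passing the client in as a parameter keeps the loop easy to test without network access.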

5. Real-World Applications of AWS Rekognition

Security & Surveillance: Detect unauthorized individuals.

Retail & E-Commerce: Product recognition and inventory tracking.

Social Media & Content Moderation: Detect inappropriate content.

Healthcare: Analyze medical images for diagnostic assistance.

6. Conclusion

AWS Rekognition makes image recognition easy with powerful pre-trained deep learning models. Whether you need object detection, facial analysis, or text extraction, Rekognition can help build intelligent applications with minimal effort.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/

Enhancing Game Development with Kinetix's AI-Driven Emote Creation

In the dynamic world of game development, creating expressive and engaging character animations is crucial. Kinetix's AI-powered platform offers innovative solutions to streamline this process, particularly through its User-Generated Content (UGC) Emotes feature.

Problem Statement:

Developing diverse and dynamic character emotes can be resource-intensive, often requiring significant time and expertise to produce animations that resonate with players.

Application:

By integrating Kinetix's UGC Emotes SDK, game studios empower players to generate their own 3D avatar animations using AI. This not only enriches the gaming experience but also reduces the burden on developers to create a vast array of emotes.

Outcome:

Games that have adopted this technology have seen increased player engagement, as users enjoy the ability to personalize their avatars with unique animations. This customization fosters a deeper connection to the game world and enhances overall player satisfaction.

Industry Examples:

MMORPGs: Allowing players to create custom emotes enhances social interactions within the game.

Virtual Reality Platforms: User-generated animations contribute to more immersive and personalized experiences.

Social Gaming Apps: Facilitating unique expressions through custom emotes strengthens community bonds.

Essential Tools and Frameworks for Android App Developers in 2025

Android app development has always been an exciting field, but as we move into 2025, it continues to evolve with new technologies and frameworks making it easier, faster, and more efficient to build high-quality apps. For Android app development companies, staying up-to-date with the latest tools and frameworks is key to delivering cutting-edge applications that meet user demands and stand out in a competitive marketplace. Whether you’re an individual developer or part of a large team, having the right toolkit can significantly impact the quality and performance of the apps you create.

1. Android Studio: The Heart of Android Development

Android Studio has been the go-to Integrated Development Environment (IDE) for Android developers for years. Google’s official IDE continues to dominate the landscape in 2025, offering powerful features like code editing, debugging, performance tooling, and testing capabilities all in one place. The constant updates and optimizations make Android Studio an indispensable tool for developers, providing seamless support for Kotlin, Java, and the latest Android libraries.

With features like real-time collaboration, an intuitive user interface design tool, and easy access to Google’s Android SDK, it’s clear why Android Studio remains at the forefront of Android app development. It integrates well with other essential tools like Firebase, which enhances the app-building experience by simplifying database management, analytics, and authentication.

2. Kotlin: The Future of Android Development

Kotlin, introduced by JetBrains, officially supported by Google for Android in 2017, and made Google's preferred language for Android development in 2019, has gained massive traction among developers. By 2025, it’s almost impossible to find an Android app development company that doesn’t use Kotlin, and for good reason. The language is more concise and expressive compared to Java, which translates into cleaner code and faster development cycles.

Kotlin also supports functional programming features and ensures interoperability with existing Java code, making it a flexible choice for developers. If you’re starting a new project or migrating an existing one, Kotlin offers a streamlined, modern approach to coding for Android.

3. Jetpack Compose: A Modern Approach to UI Design

Creating beautiful and responsive user interfaces is crucial for the success of any Android app. Jetpack Compose, Google’s declarative UI toolkit, enables developers to design UIs with less boilerplate code and more flexibility. Instead of relying on XML layouts, Jetpack Compose uses Kotlin-based code to build dynamic UIs in a concise and functional manner.

By 2025, Jetpack Compose has become a standard tool in Android app development, allowing developers to create interactive and customizable UIs with ease. Whether you're building a simple screen or a complex layout, Jetpack Compose streamlines the entire process, resulting in faster and more efficient development.

4. Firebase: Backend as a Service

Firebase, a powerful platform provided by Google, continues to be a go-to solution for Android app developers in 2025. It offers a wide range of services such as real-time databases, cloud storage, push notifications, analytics, and user authentication—all in one place. Firebase saves developers time by providing scalable solutions that can handle everything from user management to server-side logic.

For developers working on data-heavy applications, Firebase’s NoSQL cloud database offers real-time synchronization and offline support, ensuring apps function smoothly even in low-connectivity scenarios. With its robust features and integrations, Firebase is a must-have tool for building high-performance Android apps in 2025.

5. Mobile App Cost Calculator: Budgeting Your App Development

When it comes to developing Android apps, knowing how to estimate the costs accurately is critical. With the increasing complexity of modern apps, a mobile app cost calculator has become a valuable tool for both developers and clients. This tool allows you to calculate the potential cost of developing an app based on various factors such as features, functionality, platform, design, and development time.

By using a mobile app cost calculator, you can create a realistic budget and ensure that your app is developed within the expected timeframe and financial scope. This is especially helpful for Android app development companies that need to provide cost estimates for their clients while ensuring profitability.

6. Retrofit: A Powerful Networking Library

Network communication is an integral part of most mobile applications, and for Android developers, Retrofit is one of the best tools to handle API calls. Retrofit simplifies the process of connecting to REST APIs, handling requests, and parsing responses into usable Java or Kotlin objects. With built-in support for Gson and other popular data parsers, Retrofit helps developers build scalable and efficient networking solutions for their apps.

In 2025, as mobile apps become more reliant on cloud-based services, Retrofit remains one of the best libraries to streamline network operations, ensuring a smooth and responsive user experience.

7. ProGuard & R8: Optimizing and Securing Your App

As Android apps become more complex, ensuring optimal performance and security is more important than ever. ProGuard, a code shrinker and obfuscator, helps protect your app from reverse engineering by obfuscating your code, making it harder for attackers to understand the inner workings of your app.

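R8, the newer code shrinker and optimizer introduced by Google, is the default in current versions of the Android Gradle plugin. It further improves app size and performance by removing unused code and, with resource shrinking enabled, unused resources. R8 reads the same ProGuard rules files, so existing ProGuard configurations carry over. With these rules in place, developers can keep their apps both harder to reverse engineer and lightweight, which is critical for delivering a seamless experience to users.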

8. CI/CD Tools: Streamlining Development and Deployment

Continuous Integration (CI) and Continuous Deployment (CD) tools have become indispensable for modern Android app development. These tools, such as Jenkins, CircleCI, and GitLab CI, automate the process of testing and deploying code changes, ensuring faster development cycles and fewer bugs.

CI/CD integration helps Android app development companies maintain high-quality standards while delivering frequent updates and features to users. In 2025, these tools are essential for ensuring that apps are bug-free, up-to-date, and ready for deployment at all times.

Conclusion

As the Android app development landscape continues to evolve, it’s crucial to stay informed about the latest tools and frameworks that can enhance productivity and improve the quality of your apps. Whether you’re a solo developer or part of a team in an Android app development company, adopting these essential tools can help you create top-tier apps that meet user expectations and stand out in a competitive market.

If you're looking for professional assistance in building your next Android app, consider reaching out to an experienced Android app development company that can guide you through the process from start to finish. With the right expertise and tools, your app can achieve remarkable success in 2025 and beyond.

SSE-KMS Support Available For Amazon S3 Express One Zone

AWS Key Management Service (AWS KMS) keys can now be used for server-side encryption (SSE-KMS) with Amazon S3 Express One Zone, a high-performance, single-Availability Zone (AZ) S3 storage class. All objects stored in S3 directory buckets are already encrypted by default by S3 Express One Zone using Amazon S3 managed keys (SSE-S3). Now, data at rest can also be encrypted using AWS KMS customer managed keys without affecting performance. This new encryption capability helps you meet compliance and regulatory requirements while using S3 Express One Zone, which is designed to deliver consistent single-digit millisecond data access for your most frequently accessed data and latency-sensitive applications.

For SSE-KMS encryption, S3 directory buckets let you specify a single customer managed key per bucket. Once the customer managed key has been set, you cannot change the bucket to use a different key. By contrast, S3 general purpose buckets allow you to use several KMS keys, either during S3 PUT requests or by modifying the bucket’s default encryption configuration. S3 Bucket Keys are always enabled when using SSE-KMS with S3 Express One Zone. S3 Bucket Keys are free and can reduce requests to AWS KMS by up to 99%, improving performance and lowering costs.

Utilizing Amazon S3 Express One Zone with SSE-KMS

To demonstrate this new capability, first create an S3 directory bucket in the Amazon S3 console by following the console instructions. This example uses apne1-az4 as the Availability Zone. In Base name, enter s3express-kms; a suffix that includes the Availability Zone ID is added automatically to form the final bucket name. Then select the checkbox to confirm that Data is stored in a single Availability Zone.

Under Default encryption, select Server-side encryption using AWS Key Management Service keys (SSE-KMS). Under AWS KMS Key, you have three options: Select from your AWS KMS keys, Enter AWS KMS key ARN, or Create a KMS key. In this example, pick a key from the list of existing AWS KMS keys, and then choose Create bucket.

Any new object you upload to this S3 directory bucket is now encrypted automatically with the selected AWS KMS key.

SSE-KMS in operation with Amazon S3 Express One Zone

To use SSE-KMS with S3 Express One Zone through the AWS Command Line Interface (AWS CLI), you need an AWS Identity and Access Management (IAM) user or role whose policy allows the CreateSession API operation. This permission is required to upload encrypted objects to your S3 directory bucket and to download them from it.
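The policy itself is not reproduced in this post. As a rough sketch only, a policy of this kind might look like the one built below. The account ID, Region, bucket name, and key ID are placeholders, and the choice of KMS actions (kms:GenerateDataKey for uploads, kms:Decrypt for downloads) is an assumption rather than the exact policy from the original walkthrough.

```python
import json

# Placeholder values for illustration only (assumptions, not from the post).
ACCOUNT_ID = "111122223333"
REGION = "ap-northeast-1"
BUCKET = "s3express-kms--apne1-az4--x-s3"
KEY_ID = "1234abcd-12ab-34cd-56ef-1234567890ab"


def build_policy(account_id: str, region: str, bucket: str, key_id: str) -> dict:
    """Return an IAM policy document allowing CreateSession and KMS data-key use."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the caller open a session against the directory bucket.
                "Effect": "Allow",
                "Action": ["s3express:CreateSession"],
                "Resource": f"arn:aws:s3express:{region}:{account_id}:bucket/{bucket}",
            },
            {
                # Assumed KMS permissions for encrypting and decrypting objects.
                "Effect": "Allow",
                "Action": ["kms:GenerateDataKey", "kms:Decrypt"],
                "Resource": f"arn:aws:kms:{region}:{account_id}:key/{key_id}",
            },
        ],
    }


policy = build_policy(ACCOUNT_ID, REGION, BUCKET, KEY_ID)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console or saved to a file once the placeholders are replaced with your own values.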

Examining the object’s properties with the HeadObject command shows that it is encrypted using SSE-KMS with the key created earlier.

You can then use GetObject to download the encrypted object.

Because your session has the required permissions, the object is downloaded and decrypted automatically.

For a second test, try to download the object as a different IAM user whose policy does not grant the required KMS key permissions. The attempt fails with an AccessDenied error, which shows that SSE-KMS encryption is working as intended.
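The command output for these checks is not included in this post. As a hedged sketch, the same HeadObject and GetObject checks could be made with the AWS SDK for Python (boto3) roughly as follows. The helper names are hypothetical, and the functions take an S3 client as a parameter so the block has no hard dependency on boto3.

```python
def describe_encryption(s3_client, bucket: str, key: str) -> dict:
    """Return the encryption fields that HeadObject reports for an object."""
    response = s3_client.head_object(Bucket=bucket, Key=key)
    return {
        # "aws:kms" indicates SSE-KMS; "AES256" would indicate SSE-S3.
        "algorithm": response.get("ServerSideEncryption"),
        # Identifier of the KMS key used to encrypt the object.
        "kms_key_id": response.get("SSEKMSKeyId"),
        # Whether an S3 Bucket Key was used for the object.
        "bucket_key_enabled": response.get("BucketKeyEnabled"),
    }


def download_object(s3_client, bucket: str, key: str) -> bytes:
    """Download an object; S3 decrypts it if the caller may use the KMS key."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return response["Body"].read()
```

With boto3 installed and credentials configured, you would pass `boto3.client("s3")` as `s3_client`. When the caller lacks the KMS permissions, the download call is expected to raise a `botocore.exceptions.ClientError` carrying the AccessDenied code.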

Important information

Getting started: You can enable SSE-KMS for S3 Express One Zone using the Amazon S3 console, the AWS CLI, or the AWS SDKs. Set the S3 directory bucket’s default encryption configuration to SSE-KMS and specify your AWS KMS key. Remember that only one customer managed key can be used over the lifetime of an S3 directory bucket.

Regions: SSE-KMS with customer managed keys is supported in every AWS Region where S3 Express One Zone is currently available.

Performance: Using SSE-KMS with S3 Express One Zone does not affect request latency. You continue to get the same single-digit millisecond data access.

Pricing: You pay AWS KMS charges to generate and retrieve the data keys used for encryption and decryption. For details, see the AWS KMS pricing page. In addition, when you use SSE-KMS with S3 Express One Zone, S3 Bucket Keys are enabled by default for all data plane operations except CopyObject and UploadPartCopy, and they cannot be disabled. This reduces AWS KMS request volume by up to 99%, improving both performance and cost-effectiveness.
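As a sketch of the "set the default encryption configuration" step when you use an SDK rather than the console, the configuration could be built and applied with boto3 along these lines. The helper names are hypothetical, and the key ARN you pass in is your own. The client is a parameter, so the block itself does not import boto3.

```python
def sse_kms_default_encryption(kms_key_arn: str) -> dict:
    """Build a ServerSideEncryptionConfiguration that defaults to SSE-KMS."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys are always on for directory buckets with SSE-KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


def apply_default_encryption(s3_client, bucket: str, kms_key_arn: str) -> None:
    """Apply the SSE-KMS default encryption configuration to a bucket."""
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=sse_kms_default_encryption(kms_key_arn),
    )
```

Because a directory bucket accepts only one customer managed key over its lifetime, it is worth double-checking the ARN before calling `apply_default_encryption`.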

Read more on Govindhtech.com

#SSEKMS#AmazonS3#AmazonS3ExpressOneZone#Amazon#S3Bucket#KeyManagementService#AmazonS3console#News#Technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Ola Maps reliability issues according to MapmyIndia CEO

Rohan Verma, CEO of MapmyIndia, has criticized ANI Technologies, the parent company of Ola, for what he describes as “gimmick” promises related to their navigational maps of India. Verma questioned the credibility of Ola’s map service, which allegedly involves a startup named Geospoc Pvt Ltd, after receiving a legal notice from Ola Electric.

Verma expressed skepticism about the quality of Ola’s maps, stating that he does not view them as a business threat because they lack sufficient quality. This skepticism is echoed by numerous users who have complained about the poor performance of Ola’s revamped taxi and electric vehicle apps. Despite the promotional claims, the actual product has been widely criticized for its subpar map quality.

This dispute follows a licensing agreement signed on July 23, 2021, between Ola Electric and MapmyIndia. The deal allowed Ola to use MapmyIndia’s APIs and SDKs. MapmyIndia alleges that Ola breached the terms of this agreement. In response, Ola has strongly denied these accusations, suggesting that they are an attempt by MapmyIndia to undermine their competition.

Verma emphasized that map development is a complex and resource-intensive process that requires significant expertise and investment. He noted that only a few companies have succeeded in this field internationally, and it is implausible for a company to suddenly produce high-quality maps without a solid track record. Verma also cautioned against relying on competing map services, urging consumers to be wary of products that might lack accuracy and reliability.

#MapmyIndia#OlaMaps#MapmyIndiaCEO#OlaMapsCriticism#IndianTech#NavigationApps#TechWarnings#IndianTechNews#MappingTechnology#OlaMapsGimmick#MapmyIndiaVsOla#TechOpinions#NavigationWarnings#TechIndustryInsights#MappingCritique

0 notes

Link

The latest version of Meta's SDK for Quest adds thumb microgestures, letting you swipe and tap your index finger, and improves the quality of Audio To Expression. #AR #VR #Metaverse

0 notes

Text

Top Reasons to Consider Flutter for Your Next App

In today’s fast-paced digital world, choosing the right framework for your app development project is crucial. With countless options available, it's essential to select a platform that streamlines development, ensures top-notch performance, and offers exceptional versatility. Enter Flutter—an innovative leader in the app development landscape.

What is Flutter?

Flutter is an open-source UI software development kit (SDK) created by Google. It enables developers to build visually stunning, native-like applications for mobile, web, and desktop platforms using a single codebase. Since its first stable release in December 2018, Flutter has rapidly gained popularity among developers for its powerful features, smooth performance, and fast development cycle.

The Power of Flutter: Key Advantages

1. Single Codebase for Multiple Platforms

Flutter’s standout feature is its ability to support cross-platform development without sacrificing native performance. Developers can write code once and deploy it across iOS, Android, web, and desktop platforms. This significantly reduces both time-to-market and development costs.

2. Expressive and Flexible UI

Flutter offers an extensive set of customizable widgets and design capabilities, allowing developers to create visually appealing, pixel-perfect interfaces that look great on any device and screen size. The framework’s hot reload feature facilitates real-time UI updates, enabling rapid iteration and experimentation during development.

3. Native-Like Performance

Unlike hybrid frameworks that rely on web views, Flutter draws its UI components itself on a canvas using its own rendering engine, which gives near-native performance and smooth animations. This approach delivers a seamless user experience and avoids many of the performance issues commonly associated with cross-platform development.

4. Access to Native Features

Flutter integrates seamlessly with platform-specific APIs and native functionalities, allowing developers to leverage device features like cameras, geolocation, and sensors. This ensures that Flutter apps not only look native but also behave natively, providing users with a familiar and intuitive experience.

5. Growing Ecosystem and Community Support

Flutter boasts a thriving ecosystem of plugins, packages, and third-party libraries, giving developers access to a vast array of tools and resources to expedite development and enhance app functionality. Additionally, a vibrant community of Flutter enthusiasts actively contributes to the framework’s growth, offering support, sharing knowledge, and fostering innovation.

Why Choose Flutter for Your Next App Development Project?

Accelerated Development Cycle

Flutter’s hot reload feature and expressive UI framework enable rapid prototyping, iteration, and debugging. This reduces time-to-market and enhances developer productivity.

Consistent User Experience

By leveraging Flutter’s consistent UI rendering across platforms, you can ensure a cohesive and seamless experience for users, no matter what device they’re using.

Cost Efficiency

With a single codebase for multiple platforms, Flutter significantly reduces the costs associated with building and maintaining separate codebases for iOS and Android.

Future-Proof Solutions

Flutter’s versatility extends beyond mobile app development, allowing you to target emerging platforms like web and desktop with minimal effort. This future-proofs your applications for evolving technological landscapes.

Conclusion

In a competitive digital environment where user experience is paramount, selecting the right framework for your app development project is essential. Flutter’s unique blend of performance, productivity, and versatility makes it the ideal choice for businesses and developers aiming to create high-quality, cross-platform applications. Embrace the power of Flutter and unlock endless possibilities for your next app development project.

0 notes

Text

Rigging Techniques for Character Animation

Character animation is a vital aspect of creating lifelike movements and expressions in animated films, video games, and other forms of digital media. One of the most critical steps in this process is rigging, which involves creating a skeletal structure that can control the character's movements. Rigging is an art and a science, requiring both technical proficiency and creative problem-solving. At an esteemed animation institute in Pune, students learn the intricacies of rigging, mastering both foundational and advanced techniques to bring characters to life. Here, we'll explore various rigging techniques for character animation, from basic principles to advanced methods, providing a comprehensive guide for animators at all levels.

#### Understanding Rigging

Rigging involves creating a digital skeleton for a character, known as a rig, which includes bones, joints, and control systems. These components work together to enable the animator to manipulate the character in a realistic and fluid manner. A well-rigged character can perform a wide range of movements, from simple actions like walking and running to complex motions such as dancing or fighting.

#### Basic Rigging Techniques

1. **Creating the Skeleton**:

- The first step in rigging is creating a basic skeleton that aligns with the character's anatomy. This skeleton consists of a series of bones connected by joints. Each bone corresponds to a specific part of the character, such as the arm, leg, or spine. The placement of these bones must be precise to ensure realistic movement.

2. **Joint Placement**:

- Proper joint placement is crucial for realistic animation. Joints should be placed at natural pivot points, such as the shoulders, elbows, knees, and hips. Misplaced joints can result in unnatural or awkward movements, so it's essential to study the character's anatomy and plan accordingly.

3. **Forward Kinematics (FK)**:

- FK is a technique where the animator controls each bone individually, starting from the root and moving outward. This method is intuitive and straightforward, making it suitable for animating simple, linear movements. However, FK can be time-consuming for complex animations as it requires adjusting multiple bones to achieve the desired pose.

4. **Inverse Kinematics (IK)**:

- IK lets the animator position the end effector (e.g., the hand or foot) while the software solves for the rotations of the intermediate joints. This method simplifies the animation process, especially for movements where the end effector needs to stay in a fixed position, such as a foot remaining on the ground while the body moves.
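The difference between FK and IK can be sketched with a two-bone chain in a 2D plane, such as an upper arm and forearm. This is a minimal illustration under simplifying assumptions (planar motion, two bones, one of the two possible elbow solutions), not how any particular animation package implements its solvers. The function names are hypothetical.

```python
import math


def forward_kinematics(l1, l2, theta1, theta2):
    """FK: given joint angles (radians), return the end effector position."""
    elbow_x = l1 * math.cos(theta1)
    elbow_y = l1 * math.sin(theta1)
    # The second bone's angle is relative to the first bone.
    end_x = elbow_x + l2 * math.cos(theta1 + theta2)
    end_y = elbow_y + l2 * math.sin(theta1 + theta2)
    return end_x, end_y


def inverse_kinematics(l1, l2, target_x, target_y):
    """IK: given a target position, return joint angles that reach it."""
    dist_sq = target_x ** 2 + target_y ** 2
    # Law of cosines gives the elbow bend needed for this distance.
    cos_theta2 = (dist_sq - l1 ** 2 - l2 ** 2) / (2 * l1 * l2)
    # Clamp so out-of-reach targets give a fully extended or folded chain.
    cos_theta2 = max(-1.0, min(1.0, cos_theta2))
    theta2 = math.acos(cos_theta2)
    # Aim the chain at the target, correcting for the elbow bend.
    theta1 = math.atan2(target_y, target_x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


# The animator places the "hand"; the solver finds the joint angles.
shoulder, elbow = inverse_kinematics(1.0, 1.0, 1.0, 1.0)
print(forward_kinematics(1.0, 1.0, shoulder, elbow))
```

With FK the animator would set `theta1` and `theta2` by hand. With IK the animator supplies only the target, and feeding the solved angles back through `forward_kinematics` lands the end effector on that target.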

#### Advanced Rigging Techniques

1. **Blend Shapes**:

- Blend shapes are used to create facial expressions and other deformations that cannot be achieved with bones alone. By morphing between different shapes, animators can produce a wide range of expressions and subtle movements. This technique is particularly useful for lip-syncing and emotional performances.

2. **Driven Keys**:

- Driven keys allow one attribute to control another, enabling complex interactions between different parts of the rig. For example, a driven key can be used to automatically adjust the position of the fingers when the hand is rotated. This technique helps streamline the animation process and ensures consistency.

3. **Set Driven Keys (SDK)**:

- In Maya, Set Driven Key is the tool used to author driven keys, so the two terms are closely related. A single driver attribute on a custom control can drive multiple attributes simultaneously. This method is especially useful for creating advanced facial rigs and other intricate animations.

4. **Constraints**:

- Constraints are used to link the movements of different objects or bones. For example, a parent constraint can be used to attach a prop to a character's hand so that it follows the hand's position and rotation (a point constraint would match position only). Constraints can also be used to create complex mechanical systems, such as gears and pulleys.

5. **Spline IK**:

- Spline IK is a technique used for animating flexible, snake-like movements, such as tails, tentacles, or spines. It involves using a spline curve to control the positions of the joints along the length of the object. This method provides smooth, flowing movements and is ideal for characters with elongated appendages.

6. **Muscle Systems**:

- For highly realistic animations, muscle systems can be used to simulate the underlying muscle structure of the character. These systems deform the character's mesh based on the movements of the bones, creating lifelike bulges and contractions. Muscle systems are particularly useful for animating creatures and human characters with realistic anatomy.
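Two of the techniques above, blend shapes and driven keys, reduce to simple arithmetic, which the following sketch illustrates. It assumes plain linear blending of vertex offsets and linear interpolation between driven keys with strictly increasing driver values. Production tools typically use animation curves with adjustable tangents, and the function names here are hypothetical.

```python
def blend_vertices(base, targets, weights):
    """Blend shapes: add each target's weighted offset from the base mesh."""
    result = []
    for i, base_vertex in enumerate(base):
        blended = list(base_vertex)
        for target, weight in zip(targets, weights):
            for axis in range(len(blended)):
                blended[axis] += weight * (target[i][axis] - base_vertex[axis])
        result.append(tuple(blended))
    return result


def driven_value(keys, driver):
    """Driven key: map a driver value to a driven value.

    keys is a list of (driver_value, driven_value) pairs sorted by
    driver_value; values outside the keyed range are held constant.
    """
    if driver <= keys[0][0]:
        return keys[0][1]
    if driver >= keys[-1][0]:
        return keys[-1][1]
    for (d0, v0), (d1, v1) in zip(keys, keys[1:]):
        if d0 <= driver <= d1:
            t = (driver - d0) / (d1 - d0)
            return v0 + t * (v1 - v0)


# A two-vertex "mesh" blended halfway toward a raised target shape.
base_mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
raised = [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
print(blend_vertices(base_mesh, [raised], [0.5]))

# Wrist rotation (driver, degrees) controlling finger curl (driven, degrees).
print(driven_value([(0.0, 0.0), (90.0, 60.0)], 45.0))
```

In the driven-key example the animator only rotates the wrist, and the finger curl follows from the keyed relationship, which is the consistency benefit described above.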

#### Best Practices for Rigging

1. **Plan Ahead**:

- Before starting the rigging process, it's essential to have a clear plan. Understand the character's anatomy, movement requirements, and any specific needs for the animation. Planning ahead can save time and prevent issues later in the process.

2. **Keep It Simple**:

- While advanced techniques can enhance your rig, it's essential to keep the rig as simple as possible. Overcomplicating the rig can make it difficult to animate and troubleshoot. Focus on creating a functional, efficient rig that meets the needs of the animation.

3. **Test Thoroughly**:

- Regularly test the rig throughout the creation process to ensure it performs as expected. Test a range of movements and poses to identify any issues early on. This approach helps catch problems before they become more challenging to fix.

4. **Use Reference**:

- Reference materials, such as anatomy books, videos, and real-life observations, can be invaluable when rigging a character. Study how real bodies move and deform to create more realistic and believable animations.

5. **Continuous Learning**:

- Rigging is a complex and ever-evolving field. Stay up-to-date with the latest techniques and tools by participating in online communities, attending workshops, and following industry trends.

#### Conclusion

Rigging is a foundational skill in character animation, enabling animators to bring their characters to life with realistic and expressive movements. By mastering basic and advanced rigging techniques, animators can create versatile rigs that meet the demands of any animation project. Whether you're a beginner or an experienced professional, understanding and implementing these techniques will enhance your ability to produce high-quality character animations.

0 notes