#Graphs of Amazon performance

Mastering Data Structures: A Comprehensive Course for Beginners

Data structures are one of the foundational concepts in computer science and software development. Mastering data structures is essential for anyone looking to pursue a career in programming, software engineering, or computer science. This article will explore the importance of a Data Structure Course, what it covers, and how it can help you excel in coding challenges and interviews.

1. What Is a Data Structure Course?

A Data Structure Course teaches students about the various ways data can be organized, stored, and manipulated efficiently. These structures are crucial for solving complex problems and optimizing the performance of applications. The course generally covers theoretical concepts along with practical applications using programming languages like C++, Java, or Python.

By the end of the course, students will gain proficiency in selecting the right data structure for different problem types, improving their problem-solving abilities.

2. Why Take a Data Structure Course?

Learning data structures is vital for both beginners and experienced developers. Here are some key reasons to enroll in a Data Structure Course:

a) Essential for Coding Interviews

Companies like Google, Amazon, and Facebook focus heavily on data structures in their coding interviews. A solid understanding of data structures is essential to pass these interviews successfully. Employers assess your problem-solving skills, and your knowledge of data structures can set you apart from other candidates.

b) Improves Problem-Solving Skills

With the right data structure knowledge, you can solve real-world problems more efficiently. A well-designed data structure leads to faster algorithms, which is critical when handling large datasets or working on performance-sensitive applications.

c) Boosts Programming Competency

A good grasp of data structures makes coding more intuitive. Whether you are developing an app, building a website, or working on software tools, understanding how to work with different data structures will help you write clean and efficient code.

3. Key Topics Covered in a Data Structure Course

A Data Structure Course typically spans a range of topics designed to teach students how to use and implement different structures. Below are some key topics you will encounter:

a) Arrays and Linked Lists

Arrays are one of the most basic data structures. A Data Structure Course will teach you how to use arrays for storing and accessing data in contiguous memory locations. Linked lists, on the other hand, involve nodes that hold data and pointers to the next node. Students will learn the differences, advantages, and disadvantages of both structures.
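The trade-off between the two structures can be sketched in a few lines. This is a minimal illustration, not course material: `Node` and `LinkedList` are hypothetical names, and Python's built-in `list` (which is array-backed) stands in for a plain array.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class Node:
    """One linked-list node: a value plus a reference to the next node."""
    value: int
    next: Optional["Node"] = None


class LinkedList:
    """Minimal singly linked list (illustrative, not a library class)."""

    def __init__(self) -> None:
        self.head: Optional[Node] = None

    def push_front(self, value: int) -> None:
        # O(1): only the head reference changes, no elements are shifted.
        self.head = Node(value, self.head)

    def __iter__(self) -> Iterator[int]:
        node = self.head
        while node is not None:
            yield node.value
            node = node.next


array = [10, 20, 30]
array.insert(0, 5)        # O(n): every existing element shifts right
third = array[2]          # O(1): direct index into array-backed storage

linked = LinkedList()
for v in (30, 20, 10):
    linked.push_front(v)
linked.push_front(5)      # O(1) insertion at the front
```

The array wins on indexed access, while the linked list wins on insertion at the front; reaching the third element of the linked list would require walking from the head.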

b) Stacks and Queues

Stacks and queues are fundamental data structures used to store and retrieve data in a specific order. A Data Structure Course will cover the LIFO (Last In, First Out) principle for stacks and the FIFO (First In, First Out) principle for queues, explaining their use in algorithms and in applications such as a web browser's back-button history (a stack) and task scheduling (a queue).
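As a short sketch of the two orderings, the example below uses a Python `list` as a stack and `collections.deque` as a queue. The page and task names are made up for illustration.

```python
from collections import deque

# Stack (LIFO): a browser's back history. The last page visited is the first one popped.
history = []
history.append("home")
history.append("search")
history.append("article")
last_page = history.pop()

# Queue (FIFO): a task scheduler. The first task submitted is the first one run.
tasks = deque()
tasks.append("send email")
tasks.append("resize image")
tasks.append("write log")
first_task = tasks.popleft()
```

`deque.popleft()` runs in constant time, whereas `list.pop(0)` has to shift every remaining element, which is why a deque is the usual choice for a queue in Python.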

c) Trees and Graphs

Trees are hierarchical structures, while graphs are more general networks of nodes connected by edges. A Data Structure Course teaches how trees, such as binary trees, binary search trees (BSTs), and AVL trees, are used to organize hierarchical data. Graphs are important for representing relationships between entities, such as in social networks, and are processed by algorithms like Dijkstra's shortest-path algorithm, breadth-first search (BFS), and depth-first search (DFS).
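To make the graph side concrete, here is a minimal BFS sketch over an adjacency list. The `friends` network and the function name `bfs_distances` are assumptions chosen for illustration.

```python
from collections import deque


def bfs_distances(graph: dict[str, list[str]], start: str) -> dict[str, int]:
    """Return the number of hops from start to every reachable node."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in distances:      # first visit is the shortest path
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


# Hypothetical social network stored as an adjacency list.
friends = {
    "ana": ["ben", "cy"],
    "ben": ["ana", "dee"],
    "cy": ["ana", "dee"],
    "dee": ["ben", "cy", "eli"],
    "eli": ["dee"],
}
hops = bfs_distances(friends, "ana")
```

Because BFS explores the graph level by level, the first time it reaches a node is also the shortest route to it in an unweighted graph. Weighted graphs need an algorithm such as Dijkstra's instead.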

d) Hashing

Hashing is a technique used to convert a given key into an index in an array. A Data Structure Course will cover hash tables, hash maps, and collision resolution techniques, which are crucial for fast data retrieval and manipulation.
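One common collision resolution technique is separate chaining, where each array slot holds a small list of entries. The class below is a toy sketch under that assumption (`ChainedHashTable` is a hypothetical name, and it never resizes); in practice Python's built-in `dict` does this job.

```python
class ChainedHashTable:
    """Toy hash table using separate chaining (illustrative only)."""

    def __init__(self, capacity: int = 8) -> None:
        self.buckets = [[] for _ in range(capacity)]

    def _index(self, key) -> int:
        # Hashing step: map the key to a bucket index in the array.
        return hash(key) % len(self.buckets)

    def put(self, key, value) -> None:
        bucket = self.buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)   # overwrite an existing key
                return
        bucket.append((key, value))        # empty bucket or collision: extend the chain

    def get(self, key, default=None):
        for existing_key, value in self.buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default


table = ChainedHashTable(capacity=2)   # tiny capacity forces collisions
for word, count in [("apple", 3), ("pear", 5), ("plum", 7)]:
    table.put(word, count)
table.put("pear", 6)                   # updates the existing entry
```

With three keys and only two buckets, at least two keys must share a bucket, yet every lookup still returns the right value because the chain is searched by key.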

e) Sorting and Searching Algorithms

Sorting and searching are essential operations for working with data. A Data Structure Course provides a detailed study of algorithms like quicksort, merge sort, and binary search. Understanding these algorithms and how they interact with data structures can help you optimize solutions to various problems.
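Binary search shows how a searching algorithm depends on the data being sorted first. The sketch below is a plain iterative version; the sample numbers are arbitrary.

```python
def binary_search(sorted_items: list[int], target: int) -> int:
    """Return the index of target in sorted_items, or -1 if absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1      # target can only be in the upper half
        else:
            high = mid - 1     # target can only be in the lower half
    return -1


data = sorted([42, 7, 19, 3, 88, 61])   # binary search requires sorted input
found = binary_search(data, 61)
missing = binary_search(data, 5)
```

Each comparison halves the remaining range, so the search takes O(log n) steps instead of the O(n) of a linear scan. Python's standard `bisect` module offers the same idea ready-made.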

4. Practical Benefits of Enrolling in a Data Structure Course

a) Hands-on Experience

A Data Structure Course typically includes plenty of coding exercises, allowing students to implement data structures and algorithms from scratch. This hands-on experience is invaluable when applying concepts to real-world problems.

b) Critical Thinking and Efficiency

Data structures are all about optimizing efficiency. By learning the most effective ways to store and manipulate data, students improve their critical thinking skills, which are essential in programming. Selecting the right data structure for a problem can drastically reduce time and space complexity.

c) Better Understanding of Memory Management

Understanding how data is stored and accessed in memory is crucial for writing efficient code. A Data Structure Course will help you gain insights into memory management, pointers, and references, which are important concepts, especially in languages like C and C++.

5. Best Programming Languages for Data Structure Courses

While many programming languages can be used to teach data structures, some are particularly well-suited due to their memory management capabilities and ease of implementation. Some popular programming languages used in Data Structure Courses include:

C++: Offers low-level memory management and is perfect for teaching data structures.

Java: Widely used for teaching object-oriented principles and offers a rich set of libraries for implementing data structures.

Python: Known for its simplicity and ease of use, Python is great for beginners, though it may not offer the same level of control over memory as C++.

6. How to Choose the Right Data Structure Course?

Selecting the right Data Structure Course depends on several factors such as your learning goals, background, and preferred learning style. Consider the following when choosing:

a) Course Content and Curriculum

Make sure the course covers the topics you are interested in and aligns with your learning objectives. A comprehensive Data Structure Course should provide a balance between theory and practical coding exercises.

b) Instructor Expertise

Look for courses taught by experienced instructors who have a solid background in computer science and software development.

c) Course Reviews and Ratings

Reviews and ratings from other students can provide valuable insights into the course’s quality and how well it prepares you for real-world applications.

7. Conclusion: Unlock Your Coding Potential with a Data Structure Course

In conclusion, a Data Structure Course is an essential investment for anyone serious about pursuing a career in software development or computer science. It equips you with the tools and skills to optimize your code, solve problems more efficiently, and excel in technical interviews. Whether you're a beginner or looking to strengthen your existing knowledge, a well-structured course can help you unlock your full coding potential.

By mastering data structures, you are not only preparing for interviews but also becoming a better programmer who can tackle complex challenges with ease.

NVIDIA AI Workflows Detect False Credit Card Transactions

A Novel AI Workflow from NVIDIA Identifies False Credit Card Transactions.

The process, which is powered by the NVIDIA AI platform on AWS, may reduce risk and save money for financial services companies.

By 2026, global credit card transaction fraud is predicted to cause $43 billion in damages.

Powered by accelerated data processing and advanced algorithms, a new NVIDIA AI workflow for fraud detection running on Amazon Web Services (AWS) can help combat this growing problem by improving AI’s ability to detect and prevent credit card transaction fraud.

In contrast to conventional techniques, the process, which was introduced this week at the Money20/20 fintech conference, helps financial institutions spot minute trends and irregularities in transaction data by analyzing user behavior. This increases accuracy and lowers false positives.

Users may use the NVIDIA AI Enterprise software platform and NVIDIA GPU instances to expedite the transition of their fraud detection operations from conventional computation to accelerated compute.

Companies that adopt comprehensive machine learning tools and strategies may see an estimated 40% improvement in fraud detection accuracy, helping them identify and stop fraudsters faster and limit the damage.

As a result, top financial institutions like Capital One and American Express have started using AI to develop exclusive solutions that improve client safety and reduce fraud.

With the help of NVIDIA AI, the new NVIDIA workflow speeds up data processing, model training, and inference while showcasing how these elements can be combined into a single, user-friendly software package.

The procedure, which is now geared for credit card transaction fraud, might be modified for use cases including money laundering, account takeover, and new account fraud.

Enhanced Processing for Fraud Identification

It is more crucial than ever for businesses in all sectors, including financial services, to use computational capacity that is economical and energy-efficient as AI models grow in complexity, size, and variety.

Conventional data science pipelines don’t have the compute acceleration needed to process the enormous amounts of data needed to combat fraud in the face of the industry’s continually increasing losses. Payment organizations may be able to save money and time on data processing by using NVIDIA RAPIDS Accelerator for Apache Spark.

Financial institutions are using NVIDIA’s AI and accelerated computing solutions to effectively handle massive datasets and provide real-time AI performance with intricate AI models.

The industry standard for detecting fraud has long been the use of gradient-boosted decision trees, a kind of machine learning technique that uses libraries like XGBoost.

Using the NVIDIA RAPIDS suite of AI libraries, the new NVIDIA AI workflow for fraud detection enhances XGBoost by adding graph neural network (GNN) embeddings as extra features to help reduce false positives.

The GNN embeddings are fed into XGBoost to build and train a model that can then be orchestrated with the NVIDIA Morpheus Runtime Core library and NVIDIA Triton Inference Server for real-time inferencing.
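The idea of adding graph-derived columns to tabular features can be sketched without any GPU libraries. The example below is only an illustration under loud assumptions: a single round of mean neighbor aggregation stands in for learned GNN embeddings, the transactions and edges are invented, and no XGBoost model is trained. It is not the NVIDIA workflow's code.

```python
def mean_neighbor_embedding(features, edges):
    """One round of mean aggregation over graph neighbors.

    A hand-rolled stand-in for learned GNN embeddings: each node's
    embedding is the average of its neighbors' raw feature vectors.
    """
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    embeddings = {}
    for node, own in features.items():
        linked = neighbors[node]
        if not linked:
            embeddings[node] = [0.0] * len(own)   # isolated node: no graph signal
            continue
        embeddings[node] = [
            sum(features[n][i] for n in linked) / len(linked)
            for i in range(len(own))
        ]
    return embeddings


# Hypothetical transactions: [amount in dollars, hour of day].
features = {
    "t1": [20.0, 9.0],
    "t2": [950.0, 3.0],
    "t3": [15.0, 14.0],
}
# Hypothetical edges: transactions that share a card or a merchant.
edges = [("t1", "t2"), ("t2", "t3")]

embeddings = mean_neighbor_embedding(features, edges)
# Augmented rows: original features followed by the graph-derived columns.
augmented = {t: features[t] + embeddings[t] for t in features}
```

In a real pipeline, rows shaped like `augmented` would be the training input for the gradient-boosted model, so the classifier sees both what a transaction looks like and what its neighborhood looks like.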

The NVIDIA Morpheus framework securely inspects and classifies all incoming data, tagging it with patterns and flagging potentially suspicious activity. NVIDIA Triton Inference Server simplifies inference for all types of AI model deployments in production while optimizing throughput, latency, and utilization.

NVIDIA AI Enterprise provides Morpheus, RAPIDS, and Triton Inference Server.

Leading Financial Services Companies Use AI

AI is assisting in the fight against the growing trend of online or mobile fraud losses, which are being reported by several major financial institutions in North America.

American Express started using artificial intelligence (AI) to combat fraud in 2010. The company uses fraud detection algorithms to track all client transactions worldwide in real time, producing fraud determinations in a matter of milliseconds. American Express improved model accuracy by using a variety of sophisticated algorithms, one of which used the NVIDIA AI platform, therefore strengthening the organization’s capacity to combat fraud.

The European digital bank Bunq uses generative AI and large language models to help detect fraud and money laundering. With NVIDIA accelerated computing, its AI-powered transaction-monitoring system trained models more than 100 times faster.

In March, BNY said that it was the first big bank to implement an NVIDIA DGX SuperPOD with DGX H100 systems. This would aid in the development of solutions that enable use cases such as fraud detection.

Systems integrators, software vendors, and cloud service providers can now integrate the new NVIDIA AI workflow for fraud detection to enhance their financial services applications and help protect their customers’ money, identities, and digital accounts. Read the NVIDIA Technical Blog post on enhancing fraud detection with GNNs and explore the NVIDIA AI workflow for fraud detection.

Read more on Govindhtech.com

#NVIDIAAI#AWS#FraudDetection#AI#GenerativeAI#LLM#AImodels#News#Technews#Technology#Technologytrends#govindhtech#Technologynews

Storing images in MySQL DB - explanation + Uploadthing example/tutorial

(Scroll down for an uploadthing with custom components tutorial)

My latest project is a photo editing web application (Next.js), so I needed to figure out how best to store images in my database. MySQL databases cannot store files directly, though they can store them as blobs (binary large objects). Another way is to store images on a filesystem (e.g. Amazon S3) separate from your database, and then just store the URL path in your db.

Why didn't I choose to store images with blobs?

Well, I've seen a lot of discussions on the internet about whether it is better to store images as blobs in your database or to keep them on a filesystem. In short, storing images as blobs is a good choice if you are storing small images and not many of them. It is safer than storing them in a separate filesystem, since databases can be backed up more easily, and since everything is in the same database, the integrity of the data is secured by the database itself (for example, if you delete an image from a filesystem, your database will not know, since it only holds the path to the image). But I ultimately chose to upload images to a filesystem because I wanted to store high-quality images without worrying about performance or database constraints. MySQL has a variety of size limits for data which I would have to override, and operations on blobs are harder/more costly for the database.
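To make the two options concrete, here is a rough sketch of what each one puts in the database. It makes a few assumptions: SQLite (from Python's standard library) stands in for MySQL so it runs anywhere, the table names are invented, and the URL is a made-up placeholder rather than a real upload result.

```python
import sqlite3

# SQLite stands in for MySQL so the sketch runs without a database server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE images_blob (id INTEGER PRIMARY KEY, data BLOB NOT NULL)")
conn.execute("CREATE TABLE images_url (id INTEGER PRIMARY KEY, url TEXT NOT NULL)")

image_bytes = b"\x89PNG\r\n\x1a\n" + bytes(1024)   # placeholder image payload

# Option 1: the bytes live inside the database row.
conn.execute("INSERT INTO images_blob (data) VALUES (?)", (image_bytes,))

# Option 2: the file lives elsewhere and the row holds only its URL.
# The URL below is a made-up placeholder, not a real upload result.
image_url = "https://files.example.com/edited-photo.png"
conn.execute("INSERT INTO images_url (url) VALUES (?)", (image_url,))

blob_size = conn.execute("SELECT length(data) FROM images_blob").fetchone()[0]
url_size = conn.execute("SELECT length(url) FROM images_url").fetchone()[0]
stored_url = conn.execute("SELECT url FROM images_url").fetchone()[0]
```

The blob row grows with the image, while the URL row stays a few dozen characters no matter how large the file is. That is the performance argument for the filesystem approach; the price is that the database can no longer guarantee the file still exists.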

Was it hard to set up?

Apparently, hosting images on a separate filesystem is kinda complicated? Like with S3? Or so I've heard, never tried to do it myself XD BECAUSE RECENTLY ANOTHER EASIER SOLUTION FOR IT WAS PUBLISHED LOL. It's called uploadthing!!!

What is uploadthing and how to use it?

Uploadthing has its own server API to which you (the client) post your file. The file is then sent to S3 to get stored, and after it is stored S3 returns the file's URL, which then goes through uploadthing's servers back to the client. After that you can store that URL in your own database.

Here is the graph I vividly remember taking from uploadthing github about a month ago, but can't find on there now XD It's just a graphic version of my basic explanation above.

The setup is very easy, you can just follow the docs which are very straightforward and easy to follow, except for one detail. They show you how to set up uploadthing with uploadthing's own frontend components like <UploadButton>. Since I already made my own custom components, I needed to add a few more lines of code to implement it.

Uploadthing for custom components tutorial

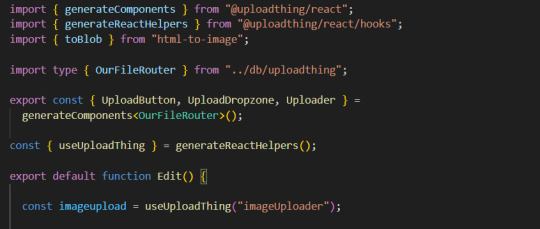

1. Imports

You will need to add an additional import generateReactHelpers (so you can use uploadthing functions without uploadthing components) and call it as shown below

2. For this example I wanted to save an edited image after clicking on the save button.

In this case, before calling the uploadthing API, I had to create a file and a blob (not to be confused with a MySQL blob) because it is actually an edited picture taken from canvas, not just an uploaded picture, so it's missing some info an uploaded image would usually have (name, format etc.). If you are storing an uploaded/already existing picture, this step is unnecessary. After uploading the file to uploadthing's API, I get its returned URL and send it to my database.

You can find the entire project here. It also has an example of uploading multiple files in pages/create.tsx

I'm still learning about backend so any advice would be appreciated. Writing about this actually reminded me of how much I'm interested in learning about backend optimization c: Also I hope the post is not too hard to follow, it was really hard to condense all of this information into one post ;_;

#codeblr#studyblr#webdevelopment#backend#nextjs#mysql#database#nodejs#programming#progblr#uploadthing

North America Gaming Steering Wheels Market, Share, Size, Trends, Future and Industry

"North America Gaming Steering Wheels Market - Size, Share, Demand, Industry Trends and Opportunities

North America Gaming Steering Wheels Market, By Weight (2.1-4.0 kg, 4.1-6.0 kg, 6.1-8.0 kg, 0-2.0 kg, More Than 8.0 Kg), Clutch Pedal (With Clutch Pedal, Without Clutch Pedal), Distribution Channel (Online, Offline) – Industry Trends & Forecast to 2030.

**Segments**

The North America Gaming Steering Wheels Market can be segmented based on platform compatibility, price range, and distribution channel. Platform compatibility segments include PC, PlayStation, Xbox, and others. PC gaming steering wheels are compatible with computers and offer a wide range of customization options for gamers. PlayStation-compatible steering wheels are designed specifically for Sony's gaming consoles, providing seamless integration and optimized performance. Xbox-compatible steering wheels cater to Microsoft's gaming consoles and offer unique features tailored to Xbox gamers. The "others" segment may include steering wheels compatible with multiple platforms or niche gaming systems.

Price range segments encompass budget, mid-range, and premium categories. Budget gaming steering wheels are typically entry-level products with essential features and basic functionalities. Mid-range steering wheels offer a balance between price and performance, appealing to casual and enthusiast gamers. Premium gaming steering wheels are high-end products with advanced features, superior build quality, and enhanced immersion, targeting professional gamers and enthusiasts seeking top-of-the-line experiences.

Distribution channel segments consist of online retail, offline retail, and third-party online platforms. Online retail channels include e-commerce websites and manufacturer websites, providing convenience, a wide product selection, and competitive pricing. Offline retail channels comprise brick-and-mortar stores such as electronics retailers and gaming specialty shops, offering hands-on experiences and immediate product availability. Third-party online platforms like Amazon and eBay serve as intermediaries between buyers and sellers, facilitating transactions and expanding market reach.

**Market Players**

- Logitech
- Thrustmaster
- Fanatec
- Hori
- SteelSeries
- Mad Catz
- Trust
- Subsonic
- Speedlink
- Guillemot Corporation

The North America Gaming Steering Wheels Market is witnessing steady growth driven by several key factors. The increasing popularity of simulation and racing games among gamers is a primary growth driver for the market. The demand for realistic gaming experiences has propelled the adoption of gaming steering wheels, as they offer enhanced control, immersion, and responsiveness compared to traditional controllers. Additionally, technological advancements in force feedback systems, pedal setups, and customization options have improved the overall gaming experience, attracting more consumers to invest in gaming steering wheels.

Furthermore, the rise of esports and competitive gaming has contributed to the market growth, as professional gamers and esports enthusiasts seek high-performance gaming peripherals to gain a competitive edge. Gaming steering wheels with advanced features such as customizable force feedback, precision controls, and compatibility with popular gaming titles have become essential tools for competitive gaming scenarios. The integration of virtual reality (VR) and augmented reality (AR) technologies in gaming steering wheels has also driven market expansion, offering immersive and realistic gameplay experiences.

However, the North America Gaming Steering Wheels Market faces challenges such as pricing pressures, competition from alternative input devices like gamepads and joysticks, and compatibility issues with evolving gaming platforms. Price sensitivity among consumers, especially in the budget and mid-range segments, poses a challenge for manufacturers to balance affordability with features and quality. Moreover, the availability of multifunctional gaming controllers and evolving gaming trends may divert consumer preferences away from dedicated gaming steering wheels, impacting market demand.

In conclusion, the North America Gaming Steering Wheels Market is poised for growth driven by the increasing demand for immersive gaming experiences, the rise of esports, and technological advancements in gaming peripherals. Manufacturers in the market are focusing on innovation, product differentiation, and strategic partnerships to cater to diverse consumer preferences and capitalize on emerging market opportunities.

The report provides insights on the following pointers:

Market Penetration: Comprehensive information on the product portfolios of the top players in the North America Gaming Steering Wheels Market.

Product Development/Innovation: Detailed insights on the upcoming technologies, R&D activities, and product launches in the market.

Competitive Assessment: In-depth assessment of the market strategies, geographic and business segments of the leading players in the market.

Market Development: Comprehensive information about emerging markets. This report analyzes the market for various segments across geographies.

Market Diversification: Exhaustive information about new products, untapped geographies, recent developments, and investments in the North America Gaming Steering Wheels Market.

Reasons to Buy:

Review the scope of the North America Gaming Steering Wheels Market with recent trends and SWOT analysis.

Outline of market dynamics coupled with market growth effects in coming years.

North America Gaming Steering Wheels Market segmentation analysis includes qualitative and quantitative research, including the impact of economic and non-economic aspects.

Demand and supply forces that are affecting the growth of the North America Gaming Steering Wheels Market.

Market value data (millions of US dollars) and volume (millions of units) for each segment and sub-segment.

and strategies adopted by the players in the last five years.

Browse Trending Reports:

Middle East And Africa Mobile Phone Accessories Market

Middle East And Africa Yeast Market

Europe Textile Films Market

Asia Pacific Textile Films Market

Middle East And Africa Textile Films Market

North America Textile Films Market

Asia Pacific Adalimumab Market

North America Adalimumab Market

Europe Adalimumab Market

Middle East And Africa Adalimumab Market

About Data Bridge Market Research:

Data Bridge set forth itself as an unconventional and neoteric Market research and consulting firm with unparalleled level of resilience and integrated approaches. We are determined to unearth the best market opportunities and foster efficient information for your business to thrive in the market. Data Bridge endeavors to provide appropriate solutions to the complex business challenges and initiates an effortless decision-making process.

Contact Us:

Data Bridge Market Research

US: +1 614 591 3140

UK: +44 845 154 9652

APAC : +653 1251 975

Email: [email protected]

"

Graph Database Market Size, Share, Analysis, Forecast & Growth 2032: Investment Trends and Funding Landscape

The Graph Database Market size was valued at US$ 2.8 billion in 2023 and is expected to reach US$ 15.94 billion in 2032 with a growing CAGR of 21.32 % over the forecast period 2024-2032.
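The quoted growth rate can be checked against the two valuations with the standard compound annual growth rate formula. The sketch below assumes nine compounding years (2023 to 2032); the function name `cagr` is just for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the report: US$ 2.8 billion (2023) to US$ 15.94 billion (2032).
rate = cagr(2.8, 15.94, years=2032 - 2023)
print(f"{rate:.2%}")
```

Under that nine-year assumption the result comes out at roughly 21.3% per year, which is consistent with the CAGR stated in the report.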

The graph database market is rapidly transforming the data management landscape by offering a highly efficient way to handle complex, connected data. With the ever-growing need for real-time insights and deep relationship mapping, businesses across sectors such as healthcare, finance, telecom, and retail are increasingly adopting graph databases to drive smarter, faster decision-making.

The graph database market is gaining strong momentum as organizations shift from traditional relational databases to graph-based structures to address modern data challenges. The rising importance of AI, machine learning, and big data analytics is fueling the need for more flexible, scalable, and intuitive data systems, an area where graph databases excel because they can uncover intricate patterns and connections with low latency and high performance.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3615

Key Market Players:

Oracle Corporation

Ontotext

OrientDB

Hewlett Packard Enterprise

Microsoft Corporation

Teradata Corporation

Stardog Union Inc.

Amazon Web Services, Inc.

Objectivity Inc.

MongoDB

TIBCO Software

Franz Inc.

TigerGraph Inc.

DataStax

IBM Corporation

Blazegraph

Openlink Software

MarkLogic Corporation

Market Analysis

The evolution of data complexity has made traditional relational databases insufficient for many modern applications. Graph databases, by storing data as nodes and edges, simplify complex relationships and enable dynamic querying across connected datasets. This makes them particularly valuable for fraud detection, recommendation engines, knowledge graphs, social network analysis, and enterprise data management.
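A recommendation query gives a feel for the node-and-edge model. The sketch below is a plain in-memory illustration, not a graph database: the customers, products, `BOUGHT` relationship, and `recommend` function are all invented for the example.

```python
from collections import defaultdict

# Nodes carry properties; edges are (source, relationship, target) triples.
nodes = {
    "alice": {"label": "Customer"},
    "bob": {"label": "Customer"},
    "laptop": {"label": "Product"},
    "mouse": {"label": "Product"},
    "dock": {"label": "Product"},
}
edges = [
    ("alice", "BOUGHT", "laptop"),
    ("bob", "BOUGHT", "laptop"),
    ("bob", "BOUGHT", "mouse"),
    ("bob", "BOUGHT", "dock"),
]

# Index edges in both directions so traversal never scans the full edge list.
outgoing = defaultdict(set)
incoming = defaultdict(set)
for source, relationship, target in edges:
    outgoing[(source, relationship)].add(target)
    incoming[(target, relationship)].add(source)


def recommend(customer: str) -> set[str]:
    """Products bought by customers who share a purchase with `customer`."""
    owned = outgoing[(customer, "BOUGHT")]
    suggestions = set()
    for product in owned:
        for other in incoming[(product, "BOUGHT")] - {customer}:
            suggestions |= outgoing[(other, "BOUGHT")]
    return suggestions - owned


alice_picks = recommend("alice")
```

The query walks customer to product to other customer to product by following stored edges. A relational schema would typically express the same question as several self-joins, which is the cost graph databases are designed to avoid.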

Graph database technology is being integrated with cloud platforms and advanced analytics solutions, further expanding its appeal. Startups and tech giants alike are investing in graph-based innovations, driving ecosystem growth and enhancing capabilities. Moreover, open-source projects and graph query languages like Cypher and Gremlin are contributing to the market’s technical maturity and adoption.

Market Trends

Rising adoption in fraud detection and cybersecurity analytics

Increased demand for real-time recommendation systems

Integration with AI and machine learning for advanced pattern recognition

Emergence of hybrid and multi-model database systems

Expansion of graph capabilities in cloud-native environments

Growing use of knowledge graphs in enterprise search and NLP

Surge in funding and acquisitions among graph database vendors

Adoption in government and public sector for intelligence operations

Market Scope

The graph database market encompasses a wide array of industries where connected data is critical. From telecommunications optimizing network infrastructures to healthcare improving patient outcomes through connected health records, the applications are diverse and expanding. As digital transformation accelerates, the need for intuitive, flexible data platforms is pushing enterprises to explore graph database technologies. Vendors are offering customized solutions for SMEs and large-scale deployments alike, with support for multiple data models and integration capabilities.

In addition to industry adoption, the market scope is defined by advancements in technology that allow for greater scalability, security, and usability. Developers are increasingly favoring graph databases for projects that involve hierarchical or network-based data. Education around graph data models and improvements in visualization tools are making these systems more accessible to non-technical users, broadening the market reach.

Market Forecast

The graph database market is poised for substantial long-term growth, driven by escalating demands for real-time data processing and intelligent data linkage. As organizations prioritize digital innovation, the role of graph databases will become even more central in enabling insights from interconnected data. Continuous developments in artificial intelligence, cloud computing, and big data ecosystems will further amplify market opportunities. Future adoption is expected to flourish not just in North America and Europe, but also in emerging economies where digital infrastructure is rapidly maturing.

Investments in R&D, increasing partnerships among technology providers, and the emergence of specialized use cases in sectors such as legal tech, logistics, and social media analysis are indicators of a thriving market. As businesses seek to gain competitive advantages through smarter data management, the adoption of graph databases is set to surge, ushering in a new era of contextual intelligence and connectivity.

Access Complete Report: https://www.snsinsider.com/reports/graph-database-market-3615

Conclusion

In an age where understanding relationships between data points is more critical than ever, graph databases are redefining how businesses store, query, and derive value from data. Their ability to model and navigate complex interdependencies offers a strategic edge in a data-saturated world. As innovation accelerates and digital ecosystems become increasingly interconnected, the graph database market is not just growing—it is reshaping the very foundation of data-driven decision-making. Organizations that recognize and embrace this shift early will be best positioned to lead in tomorrow’s connected economy.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Best Open-Source AI Frameworks for Developers in 2025

Artificial Intelligence (AI) is transforming industries, and open-source frameworks are at the heart of this revolution. For developers, choosing the right AI tools can make the difference between a successful project and a stalled experiment. In 2025, several powerful open-source frameworks stand out, each with unique strengths for different AI applications—from deep learning and natural language processing (NLP) to scalable deployment and edge AI.

Here’s a curated list of the best open-source AI frameworks developers should know in 2025, along with their key features, use cases, and why they matter.

1. TensorFlow – The Industry Standard for Scalable AI

Developed by Google, TensorFlow remains one of the most widely used AI frameworks. It excels in building and deploying production-grade machine learning models, particularly for deep learning and neural networks.

Why TensorFlow?

Flexible Deployment: Runs on CPUs, GPUs, and TPUs, with support for mobile (TensorFlow Lite) and web (TensorFlow.js).

Production-Ready: Used by major companies for large-scale AI applications.

Strong Ecosystem: Extensive libraries (Keras, TFX) and a large developer community.

Best for: Enterprises, researchers, and developers needing scalable, end-to-end AI solutions.

2. PyTorch – The Researcher’s Favorite

Meta’s PyTorch has gained massive popularity for its user-friendly design and dynamic computation graph, making it ideal for rapid prototyping and academic research.

Why PyTorch?

Pythonic & Intuitive: Easier debugging and experimentation compared to static graph frameworks.

Dominates Research: Preferred for cutting-edge AI papers and NLP models.

TorchScript for Deployment: Converts models for optimized production use.

Best for: AI researchers, startups, and developers focused on fast experimentation and NLP.

3. Hugging Face Transformers – The NLP Powerhouse

Hugging Face has revolutionized natural language processing (NLP) by offering pre-trained models like GPT, BERT, and T5 that can be fine-tuned with minimal code.

Why Hugging Face?

Huge Model Library: Thousands of ready-to-use NLP models.

Easy Integration: Works seamlessly with PyTorch and TensorFlow.

Community-Driven: Open-source contributions accelerate AI advancements.

Best for: Developers building chatbots, translation tools, and text-generation apps.

4. JAX – The Next-Gen AI Research Tool

Developed by Google Research, JAX is gaining traction for high-performance numerical computing and machine learning research.

Why JAX?

Blazing Fast: Optimized for GPU/TPU acceleration.

Auto-Differentiation: Simplifies gradient-based ML algorithms.

Composable Functions: Enables advanced research in AI and scientific computing.

Best for: Researchers and developers working on cutting-edge AI algorithms and scientific ML.

5. Apache MXNet – Scalable AI for the Cloud

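Backed by Amazon Web Services (AWS), MXNet was designed for efficient, distributed AI training and deployment. Note that the Apache MXNet project was retired to the Apache Attic in 2023, so check its maintenance status before starting new work on it.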

Why MXNet?

Multi-Language Support: Python, R, Scala, and more.

Optimized for AWS: Deep integration with Amazon SageMaker.

Lightweight & Fast: Ideal for cloud and edge AI.

Best for: Companies using AWS for scalable AI model deployment.

6. ONNX – The Universal AI Model Format

The Open Neural Network Exchange (ONNX) allows AI models to be converted between frameworks (e.g., PyTorch to TensorFlow), ensuring flexibility.

Why ONNX?

Framework Interoperability: Avoid vendor lock-in by switching between tools.

Edge AI Optimization: Runs efficiently on mobile and IoT devices.

Best for: Developers who need cross-platform AI compatibility.

Which AI Framework Should You Choose?

The best framework depends on your project’s needs:

For production-scale AI → TensorFlow

For research & fast prototyping → PyTorch

For NLP → Hugging Face Transformers

For high-performance computing → JAX

For AWS cloud AI → Apache MXNet

For cross-framework compatibility → ONNX

Open-source AI tools are making advanced machine learning accessible to everyone. Whether you're a startup, enterprise, or researcher, leveraging the right framework can accelerate innovation.

#artificial intelligence#machine learning#deep learning#technology#tech#web developers#techinnovation#web#ai

0 notes

Text

TI-84 Calculator: The Ultimate Tool for Math and Science Students

The TI-84 calculator is a staple in classrooms and exam halls around the world. Designed by Texas Instruments, the TI-84 is a graphing calculator known for its robust features, user-friendly interface, and long-standing reliability. Whether you're a high school student preparing for standardized tests, a college student tackling calculus, or a teacher guiding students through statistics, the TI-84 is a valuable tool for mastering complex mathematical concepts.

📌 What Is the TI-84 Calculator?

The TI-84 is part of Texas Instruments' line of graphing calculators. It has become one of the most widely used calculators in the U.S. education system. The calculator comes in different versions, including the TI-84 Plus, TI-84 Plus Silver Edition, and the newer TI-84 Plus CE, which features a sleek design and a full-color display.

Its popularity stems from its balance of power and simplicity. Unlike more complex devices that require extensive training, the TI-84 is easy to learn while still offering advanced functionality.

🔍 Key Features of the TI-84

Graphing Capabilities: The TI-84 can graph multiple equations simultaneously. Users can trace graphs, find intercepts, and analyze functions quickly.

Programming Support: With built-in support for TI-BASIC, users can create and run custom programs for math shortcuts, games, and more.

Apps and Functions: The calculator includes apps for financial calculations, polynomial root finding, conic sections, and probability simulations.

Data Analysis: It can perform statistical functions like mean, median, standard deviation, regression models, and more. Students can also enter and analyze lists of data easily.

Exam Friendly: Approved for use on the SAT, ACT, PSAT/NMSQT, and AP exams, the TI-84 is a safe bet for standardized testing.

Connectivity: The TI-84 connects to computers and other calculators via USB. This allows for data transfer, OS updates, and program sharing.

Color Display (TI-84 Plus CE): The CE version includes a bright, high-resolution color screen and is significantly slimmer than older models.
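If you want to check the calculator's statistics against a computer, the short Python sketch below computes the same kind of one-variable summary and a least-squares line. The data values and the helper name `linear_fit` are made up for illustration.

```python
import statistics

# Hypothetical data: hours studied and test scores for five students.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 55.0, 61.0, 64.0, 68.0]

# One-variable statistics on the scores list.
mean_score = statistics.mean(scores)
median_score = statistics.median(scores)
sample_sd = statistics.stdev(scores)  # sample standard deviation (divides by n - 1)


def linear_fit(xs, ys):
    """Least-squares line y = slope * x + intercept."""
    x_bar = statistics.mean(xs)
    y_bar = statistics.mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


slope, intercept = linear_fit(hours, scores)
print(mean_score, median_score, round(sample_sd, 3))
print(round(slope, 2), round(intercept, 2))
```

The sample standard deviation here corresponds to the value the calculator labels Sx in its one-variable statistics output.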

⚡ Battery and Portability

The TI-84 Plus CE uses a rechargeable lithium-ion battery that lasts for up to a month on a single charge. Older models use four AAA batteries, which are easy to replace. Both versions are durable and portable, designed to survive daily use in classrooms and during travel.

🧮 Why Students and Teachers Love It

The TI-84 remains a classroom favorite because it simplifies complex calculations and visualizes data and functions in a way textbooks cannot. Teachers appreciate the consistent interface and features that align with the curriculum, while students benefit from the confidence it brings during exams and assignments.

💡 Tips for Using the TI-84 Efficiently

Learn keyboard shortcuts for faster navigation.

Use apps like PlySmlt2 for solving polynomial equations quickly.

Save formulas or custom programs to avoid repetitive typing.

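Reset your RAM occasionally to clear up memory and avoid slowdowns. A RAM reset erases the programs and data stored in RAM, so archive anything you want to keep first.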

🧠 Conclusion

The TI-84 calculator is more than just a graphing device — it's a comprehensive learning tool for mathematics and science. Its reliable performance, wide range of functions, and exam approval make it a long-term investment for students and educators alike. Whether you're plotting graphs, crunching data, or solving equations, the TI-84 will be your trusted math companion.


0 notes

Text

Top Tools Used in Data Analytics in 2025 – A Complete Guide for Beginners

Data is everywhere. When you order food, shop online, watch a movie, or use an app, you are creating data. Companies use this data to understand people’s behavior, improve their services, and make better decisions. But how do they do it? The answer is data analytics.

To perform data analytics, we need the right tools. These tools help us to collect, organize, analyze, and understand data.

If you are a beginner who wants to learn data analytics or start a career in this field, this guide will help you understand the most important tools used in 2025.

What Is Data Analytics?

Data analytics means studying data to find useful information. This information helps people or businesses make better decisions. For example, a company may study customer buying patterns to decide what products to sell more of.

There are many types of data analytics, but the basic goal is to understand what is happening, why it is happening, and what can be done next.

Why Tools Are Important in Data Analytics

To work with data, we cannot just use our eyes and brain. We need tools to:

Collect and store data

Clean and organize data

Study and understand data

Show results through graphs and dashboards

Let us now look at the most popular tools used by data analysts in 2025.

Most Used Data Analytics Tools in 2025

Microsoft Excel

Excel is one of the oldest and most common tools used for data analysis. It is great for working with small to medium-sized data.

With Excel, you can:

Enter and organize data

Do basic calculations

Create charts and tables

Use formulas to analyze data

It is simple to learn and a great tool for beginners.

SQL (Structured Query Language)

SQL is a language used to talk to databases. If data is stored in a database, SQL helps you find and work with that data.

With SQL, you can:

Find specific information from large datasets

Add, change, or delete data in databases

Combine data from different tables

Every data analyst is expected to know SQL because it is used in almost every company.
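Here is a small sketch of those three tasks using Python's built-in `sqlite3` module, so you can try SQL without installing a database server. The table names, columns, and rows are invented for this example.

```python
import sqlite3

# An in-memory database with two small, made-up tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha', 'Pune'), (2, 'Ben', 'Delhi');
    INSERT INTO orders VALUES (1, 1, 250.0), (2, 1, 100.0), (3, 2, 75.0);
""")

# 1. Find specific information: orders above a threshold.
big_orders = conn.execute(
    "SELECT id, amount FROM orders WHERE amount > ? ORDER BY id", (90,)
).fetchall()

# 2. Change data: update one customer's city.
conn.execute("UPDATE customers SET city = ? WHERE name = ?", ("Mumbai", "Ben"))

# 3. Combine data from different tables: total spend per customer.
totals = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

print(big_orders)  # [(1, 250.0), (2, 100.0)]
print(totals)      # [('Asha', 350.0), ('Ben', 75.0)]
```

The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid SQL injection when values come from users.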

Python

Python is a programming language. It is one of the most popular tools in data analytics because it can do many things.

With Python, you can:

Clean messy data

Analyze and process large amounts of data

Make graphs and charts

Build machine learning models

Python is easy to read and write, even for beginners. It has many libraries that make data analysis simple.
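As a small taste of "cleaning messy data", the sketch below uses only Python's standard library. The sample values and the helper name `clean_numbers` are made up; in practice many analysts would reach for a library such as Pandas instead.

```python
import statistics

# Hypothetical survey answers: extra spaces, blanks, and non-numeric text.
raw_ages = ["34", " 29 ", "", "n/a", "41", "29", "thirty"]


def clean_numbers(values):
    """Keep only the entries that can be read as numbers."""
    cleaned = []
    for value in values:
        try:
            cleaned.append(float(value.strip()))
        except ValueError:
            # Skip blanks and text that is not a number.
            continue
    return cleaned


ages = clean_numbers(raw_ages)
summary = {
    "count": len(ages),
    "mean": statistics.mean(ages),
    "median": statistics.median(ages),
}
print(summary)  # {'count': 4, 'mean': 33.25, 'median': 31.5}
```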

Tableau and Power BI

Tableau and Power BI are tools that help you create visual stories with your data. They turn data into dashboards, charts, and graphs that are easy to understand.

Tableau is used more in global companies and is known for beautiful visuals.

Power BI is made by Microsoft and is used a lot in Indian companies.

These tools are helpful for presenting your analysis to managers and clients.

Google Sheets

Google Sheets is like Excel but online. It is simple and free to use. You can share your work easily and work with others at the same time.

It is good for:

Small projects

Group work

Quick calculations and reports

Many startups and small businesses use Google Sheets for simple data tasks.

R Programming Language

R is another programming language like Python. It is mainly used in research and academic fields where you need strong statistics.

R is good for:

Doing detailed statistical analysis

Working with graphs

Writing reports with data

R is not as easy as Python, but it is still useful if you want to work in scientific or research-related roles.

Apache Spark

Apache Spark is a big data tool. It is used when companies work with a large amount of data, such as millions of customer records.

It is good for:

Processing huge data files quickly

Using Python or Scala to write data analysis code

Working in cloud environments

Companies like Amazon, Flipkart, and banks use Apache Spark to handle big data.

Which Tools Should You Learn First?

If you are just getting started with data analytics, here is a simple learning path:

Start with Microsoft Excel to understand basic data handling.

Learn SQL to work with databases.

Learn Python for deeper analysis and automation.

Then learn Power BI or Tableau for data visualization.

Once you become comfortable, you can explore R or Apache Spark based on your interests or job needs.

Final Thoughts

Learning data analytics is a great decision in 2025. Every company is looking for people who can understand data and help them grow. These tools will help you start your journey and become job-ready.

The best part is that most of these tools are free or offer free versions. You can start learning online, watch tutorials, and practice with real data.

If you are serious about becoming a data analyst, now is the best time to begin.

You do not need to be an expert in everything. Start small, stay consistent, and keep practicing. With time, you will become confident in using these tools.

0 notes

Text

Essential Technical Skills for a Successful Career in Business Analytics

If you're fascinated by the idea of bridging the gap between business acumen and analytical prowess, then a career in Business Analytics might be your perfect fit. But what specific technical skills are essential to thrive in this field?

Building Your Technical Arsenal

Data Retrieval and Manipulation: SQL proficiency is non-negotiable. Think of SQL as your scuba gear, allowing you to dive deep into relational databases and retrieve the specific data sets you need for analysis. Mastering queries, filters, joins, and aggregations will be your bread and butter.

Statistical Software: Unleash the analytical might of R and Python. These powerful languages go far beyond basic calculations. With R, you can create complex statistical models, perform hypothesis testing, and unearth hidden patterns in your data. Python offers similar functionalities and boasts a vast library of data science packages like NumPy, Pandas, and Scikit-learn, empowering you to automate tasks, build machine learning models, and create sophisticated data visualizations.

Data Visualization: Craft compelling data stories with Tableau, Power BI, and QlikView. These visualization tools are your paintbrushes, transforming raw data into clear, impactful charts, graphs, and dashboards. Master the art of storytelling with data, ensuring your insights resonate with both technical and non-technical audiences. Learn to create interactive dashboards that allow users to explore the data themselves, fostering a data-driven culture within the organization.

Business Intelligence (BI) Expertise: Become a BI whiz. BI software suites are the command centers of data management. Tools like Microsoft Power BI, Tableau Server, and Qlik Sense act as a central hub, integrating data from various sources (databases, spreadsheets, social media) and presenting it in a cohesive manner. Learn to navigate these platforms to create performance dashboards, track key metrics, and identify trends that inform strategic decision-making.

Beyond the Basics: Stay ahead of the curve. The technical landscape is ever-evolving. Consider exploring cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) for data storage, management, and scalability. Familiarize yourself with data warehousing concepts and tools like Apache Spark for handling massive datasets efficiently.


Organizations Hiring Business Analytics and Data Analytics Professionals:

Information Technology (IT) and IT-enabled Services (ITES):

TCS, Infosys, Wipro, HCL, Accenture, Cognizant, Tech Mahindra (Business Analyst: Rs.400,000 - Rs.1,200,000, Data Analyst: Rs.500,000 - Rs.1,400,000)

Multinational Corporations with Indian operations:

IBM, Dell, HP, Google, Amazon, Microsoft (Business Analyst: Rs.500,000 - Rs.1,500,000, Data Analyst: Rs.600,000 - Rs.1,600,000)

Banking, Financial Services and Insurance (BFSI):

HDFC Bank, ICICI Bank, SBI, Kotak Mahindra Bank, Reliance Life Insurance, LIC (Business Analyst: Rs.550,000 - Rs.1,300,000, Data Analyst: Rs.650,000 - Rs.1,500,000)

E-commerce and Retail:

Flipkart, Amazon India, Myntra, Snapdeal, BigBasket (Business Analyst: Rs.450,000 - Rs.1,000,000, Data Analyst: Rs.550,000 - Rs.1,200,000)

Management Consulting Firms:

McKinsey & Company, Bain & Company, Boston Consulting Group (BCG) (Business Analyst: Rs.700,000 - Rs.1,800,000, Data Scientist: Rs.800,000 - Rs.2,000,000)

By mastering this technical arsenal, you'll be well-equipped to transform from data novice to data maestro. Consider pursuing an MBA in Business Analytics, like the one offered by Poddar Management and Technical Campus, Jaipur. These programs often integrate industry projects and internships, providing valuable hands-on experience with the latest tools and technologies.

0 notes

Text

AI Agent Development: A Comprehensive Guide to Building Intelligent Virtual Assistants

Artificial Intelligence (AI) is reshaping industries, and AI agents are at the forefront of this transformation. From chatbots to sophisticated virtual assistants, AI agents are revolutionizing customer service, automating tasks, and enhancing user experiences. In this guide, we will explore AI agent development, key components, tools, and best practices for building intelligent virtual assistants.

What is an AI Agent?

An AI agent is an autonomous software entity that perceives its environment, processes information, and takes actions to achieve specific goals. AI agents are commonly used in virtual assistants, customer service bots, recommendation systems, and even robotics.

Types of AI Agents

AI agents can be classified based on their capabilities and autonomy levels:

Reactive Agents – Respond to inputs but do not retain memory or learn from past interactions.

Limited Memory Agents – Store past interactions for better decision-making (e.g., chatbots with short-term memory).

Theory of Mind Agents – Understand user emotions and beliefs, improving personalized responses (still in development).

Self-Aware Agents – Theoretical AI that possesses self-awareness and reasoning (future concept).
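The difference between the first two types can be sketched in a few lines of Python. This is a toy illustration only: the class names, the rules, and the replies are invented, and a real agent would use an NLP model rather than string matching.

```python
from collections import deque


class ReactiveAgent:
    """Maps each input straight to a reply and remembers nothing."""
    RULES = {"hello": "Hi there!", "bye": "Goodbye!"}

    def respond(self, message):
        return self.RULES.get(message.lower().strip(), "Sorry, I did not understand.")


class LimitedMemoryAgent:
    """Keeps the last few user messages and can use them in later replies."""

    def __init__(self, memory_size=3):
        # A bounded deque drops the oldest message once it is full.
        self.memory = deque(maxlen=memory_size)

    def respond(self, message):
        text = message.strip()
        if text.lower().startswith("my name is "):
            reply = "Nice to meet you!"
        elif text.lower() == "what is my name?":
            name = self._recall_name()
            reply = f"Your name is {name}." if name else "I do not know yet."
        else:
            reply = "Tell me more."
        self.memory.append(text)
        return reply

    def _recall_name(self):
        # Search the remembered messages, newest first.
        for past in reversed(self.memory):
            if past.lower().startswith("my name is "):
                return past[len("my name is "):].strip()
        return None


agent = LimitedMemoryAgent()
print(agent.respond("My name is Ada"))    # Nice to meet you!
print(agent.respond("What is my name?"))  # Your name is Ada.
```

Because the memory is bounded, the agent forgets the name once enough newer messages have pushed it out, which is the "short-term memory" behaviour described above.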

Key Components of AI Agent Development

To build a functional AI agent, you need several core components:

1. Natural Language Processing (NLP)

NLP enables AI agents to understand, interpret, and generate human language. Popular NLP frameworks include:

OpenAI GPT models (e.g., ChatGPT)

Google Dialogflow

IBM Watson Assistant

2. Machine Learning & Deep Learning

AI agents rely on ML and deep learning models to process data, recognize patterns, and improve over time. Some common frameworks include:

TensorFlow

PyTorch

Scikit-learn

3. Speech Recognition & Synthesis

For voice assistants like Siri and Alexa, speech-to-text (STT) and text-to-speech (TTS) capabilities are essential. Tools include:

Google Speech-to-Text

Amazon Polly

Microsoft Azure Speech

4. Conversational AI & Dialogue Management

AI agents use dialogue management systems to maintain coherent and context-aware conversations. Technologies include:

Rasa (open-source conversational AI)

Microsoft Bot Framework

Amazon Lex
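One common way to keep a conversation coherent is slot filling: the agent tracks which pieces of information it still needs and asks for the next missing one. The sketch below shows that idea in plain Python. It is not the API of Rasa, Bot Framework, or Lex; the slot names, prompts, and function names are hypothetical, and the `slot_updates` argument stands in for whatever an NLP component would extract from the user's message.

```python
from dataclasses import dataclass, field

# Hypothetical booking task: both slots must be filled before confirming.
REQUIRED_SLOTS = ("city", "date")
PROMPTS = {"city": "Which city?", "date": "Which date?"}


@dataclass
class DialogueState:
    """Conversation context carried from one turn to the next."""
    slots: dict = field(default_factory=dict)


def next_action(state):
    """Decide what to do next based on the slots collected so far."""
    for slot in REQUIRED_SLOTS:
        if slot not in state.slots:
            return ("ask", slot)
    return ("confirm", None)


def handle_turn(state, slot_updates):
    """Merge newly extracted slots into the state and produce a reply."""
    state.slots.update(slot_updates)
    action, slot = next_action(state)
    if action == "ask":
        return PROMPTS[slot]
    return f"Booking for {state.slots['city']} on {state.slots['date']}. Confirm?"


state = DialogueState()
print(handle_turn(state, {}))                  # Which city?
print(handle_turn(state, {"city": "Paris"}))   # Which date?
print(handle_turn(state, {"date": "Friday"}))  # Booking for Paris on Friday. Confirm?
```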

5. Knowledge Base & Memory

AI agents can store and retrieve information to enhance responses. Common databases include:

Vector databases (e.g., Pinecone, FAISS)

Knowledge graphs (e.g., Neo4j)
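The core operation behind a vector database is similarity search: stored text is represented as a vector, and the entries closest to the query vector are returned. Here is a minimal pure-Python sketch of that idea. The stored facts and their three-number vectors are hand-written for illustration; a real system would get vectors from an embedding model and use a library such as FAISS for fast search.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical memory: (text, embedding) pairs with made-up vectors.
MEMORY = [
    ("Refunds are processed within 5 days.", [0.9, 0.1, 0.0]),
    ("Our office is open on weekdays.", [0.1, 0.9, 0.1]),
    ("Shipping is free over $50.", [0.2, 0.1, 0.9]),
]


def retrieve(query_vector, k=1):
    """Return the k stored texts most similar to the query vector."""
    ranked = sorted(
        MEMORY,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# A query vector that points mostly in the "refunds" direction.
print(retrieve([0.8, 0.2, 0.1]))  # ['Refunds are processed within 5 days.']
```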

6. Integration with APIs & External Systems

To enhance functionality, AI agents integrate with APIs, CRMs, and databases. Popular API platforms include:

OpenAI API

Twilio for communication

Stripe for payments

Steps to Build an AI Virtual Assistant

Step 1: Define Use Case & Goals

Decide on the AI agent’s purpose—customer support, sales automation, or task automation.

Step 2: Choose a Development Framework

Select tools based on requirements (e.g., GPT for chatbots, Rasa for on-premise solutions).

Step 3: Train NLP Models

Fine-tune language models using domain-specific data.

Step 4: Implement Dialogue Management

Use frameworks like Rasa or Dialogflow to create conversation flows.

Step 5: Integrate APIs & Databases

Connect the AI agent to external platforms for enhanced functionality.

Step 6: Test & Deploy

Perform extensive testing before deploying the AI agent in a real-world environment.

Best Practices for AI Agent Development

Focus on User Experience – Ensure the AI agent is intuitive and user-friendly.

Optimize for Accuracy – Train models on high-quality data for better responses.

Ensure Data Privacy & Security – Protect user data with encryption and compliance standards.

Enable Continuous Learning – Improve the AI agent’s performance over time with feedback loops.

Conclusion

AI agent development is revolutionizing business automation, customer engagement, and personal assistance. By leveraging NLP, ML, and conversational AI, developers can build intelligent virtual assistants that enhance efficiency and user experience. Whether you’re developing a simple chatbot or a sophisticated AI-powered agent, the right frameworks, tools, and best practices will ensure success.

0 notes

Text

Graph Database Market Dynamics, Trends, and Growth Factors 2032

The Graph Database Market was valued at US$ 2.8 billion in 2023 and is expected to reach US$ 15.94 billion by 2032, growing at a CAGR of 21.32% over the forecast period 2024-2032.
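The headline figures can be sanity-checked with the compound annual growth rate formula. The sketch below assumes nine years of compounding from the 2023 base value to 2032; the function names are chosen for this example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.21 means 21%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Assumption: growth compounds over 2032 - 2023 = 9 years.
years = 2032 - 2023
rate = cagr(2.8, 15.94, years)
projected_2032 = project(2.8, 0.2132, years)

print(f"Implied CAGR: {rate:.2%}")
print(f"Projected 2032 value: US$ {projected_2032:.2f} billion")
```

Under that assumption the implied rate rounds to the stated 21.32%, and compounding US$ 2.8 billion at 21.32% for nine years lands at roughly US$ 15.94 billion, so the three numbers are consistent with each other.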

The graph database market is growing rapidly because of the rising need to handle complex, interconnected data. Businesses across industries are using graph databases to model data relationships, improve decision-making, and gain deeper insights. The adoption of AI, machine learning, and real-time analytics is further driving demand for graph-based data management solutions.

The market continues to evolve as organizations seek efficient ways to manage highly connected data structures. Unlike traditional relational databases, graph databases store relationships directly, which makes queries that traverse many connections between data points faster. The surge in big data, social media analytics, fraud detection, and recommendation engines is fueling adoption across industries such as finance, healthcare, e-commerce, and telecommunications.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3615

Key Market Players:

Oracle Corporation

Ontotext

OrientDB

Hewlett Packard Enterprise

Microsoft Corporation

Teradata Corporation

Stardog Union Inc.

Amazon Web Services

Market Trends Driving Growth

1. Rising Demand for AI and Machine Learning Integration

Graph databases play a crucial role in AI and machine learning by enabling more accurate predictions, knowledge graphs, and advanced data analytics. Businesses are integrating graph technology to enhance recommendation systems, cybersecurity, and fraud prevention.

2. Increased Adoption in Fraud Detection and Risk Management

Financial institutions and e-commerce platforms are utilizing graph databases to detect fraudulent transactions in real time. By mapping and analyzing relationships between entities, these databases can uncover hidden patterns that indicate suspicious activities.
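To show what "mapping and analyzing relationships between entities" can look like, here is a toy Python sketch. It links accounts to the devices and cards they use, then walks the graph to find accounts that are indirectly connected. The account, device, and card identifiers are invented, and a production system would run this kind of traversal inside a graph database rather than in application code.

```python
from collections import defaultdict, deque

# Hypothetical observations: (account, shared attribute) pairs.
TRANSACTIONS = [
    ("acct_A", "device_1"),
    ("acct_B", "device_1"),
    ("acct_B", "card_9"),
    ("acct_C", "card_9"),
    ("acct_D", "device_2"),
]


def build_graph(edges):
    """Undirected graph as an adjacency map."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def linked_accounts(graph, start):
    """Breadth-first search for other accounts reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return sorted(n for n in seen if n.startswith("acct_") and n != start)


graph = build_graph(TRANSACTIONS)
print(linked_accounts(graph, "acct_A"))  # ['acct_B', 'acct_C']
print(linked_accounts(graph, "acct_D"))  # []
```

Here acct_A and acct_C never share anything directly, but both connect through acct_B, which is the kind of hidden pattern a table-by-table lookup can miss.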

3. Growth of Personalized Recommendation Engines

Tech giants like Amazon, Netflix, and Spotify rely on graph databases to power their recommendation engines. By analyzing user behavior and interconnections, companies can deliver highly personalized experiences that enhance customer satisfaction.

4. Expansion in Healthcare and Life Sciences

Graph databases are revolutionizing healthcare by mapping patient records, drug interactions, and genomic data. Researchers and healthcare providers can leverage these databases to improve diagnostics, drug discovery, and personalized medicine.

5. Surge in Knowledge Graph Applications

Enterprises are increasingly using knowledge graphs to organize and retrieve vast amounts of unstructured data. This trend is particularly beneficial for search engines, virtual assistants, and enterprise data management systems.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3615

Market Segmentation:

By Component

Software

Services

By Deployment

Cloud

On-Premise

By Type

Relational (SQL)

Non-Relational (NoSQL)

By Application

Identity and Access Management

Customer Analytics

Recommendation Engine

Master Data Management

Privacy and Risk Compliance

Fraud Detection and Risk Management

Others

By Analysis Type

Community Analysis

Connectivity Analysis

Centrality Analysis

Path Analysis
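Two of these analysis types are easy to illustrate on a tiny graph. The Python sketch below computes degree centrality (how connected each node is) and a shortest path between two nodes. The people and connections are made up for the example.

```python
from collections import deque

# Hypothetical social graph: each person maps to their direct connections.
GRAPH = {
    "Ana": {"Ben", "Cara"},
    "Ben": {"Ana", "Cara", "Dev"},
    "Cara": {"Ana", "Ben"},
    "Dev": {"Ben", "Eli"},
    "Eli": {"Dev"},
}


def degree_centrality(graph):
    """Centrality analysis: share of the other nodes each node touches directly."""
    n = len(graph)
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


def shortest_path(graph, source, target):
    """Path analysis: fewest-hops route found with breadth-first search."""
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back through the recorded predecessors.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(graph[node]):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


print(degree_centrality(GRAPH)["Ben"])     # 0.75
print(shortest_path(GRAPH, "Ana", "Eli"))  # ['Ana', 'Ben', 'Dev', 'Eli']
```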

Market Analysis and Growth Projections

The shift towards real-time data analytics and the increasing complexity of enterprise data management are key growth drivers. Leading database providers such as Neo4j, Amazon Neptune, and TigerGraph are investing in scalable and high-performance solutions to cater to growing demand.

Key industries driving adoption include:

Banking and Finance: Graph databases enhance fraud detection, risk analysis, and regulatory compliance.

Healthcare and Biotech: Used for genomic sequencing, drug discovery, and personalized treatment plans.

Retail and E-commerce: Enhancing customer engagement through personalized recommendations.

Cybersecurity: Detecting anomalies and cyber threats through advanced network analysis.

Despite its rapid growth, the market faces challenges such as data privacy concerns, high implementation costs, and the need for specialized skills. However, continuous advancements in cloud computing and database-as-a-service (DBaaS) solutions are helping businesses overcome these barriers.

Regional Analysis

1. North America Leading the Market

North America dominates the graph database market, driven by the presence of major tech companies, financial institutions, and government initiatives in AI and big data analytics. The U.S. and Canada are investing heavily in advanced data infrastructure.

2. Europe Experiencing Steady Growth

Europe is witnessing strong adoption, particularly in industries like healthcare, finance, and government sectors. Regulations such as GDPR are pushing organizations to adopt more efficient data management solutions.

3. Asia-Pacific Emerging as a High-Growth Region

Asia-Pacific is experiencing rapid growth due to increased digital transformation in China, India, and Japan. The rise of e-commerce, AI-driven applications, and cloud adoption are key factors driving demand.

4. Latin America and Middle East & Africa Showing Potential

Although these regions have a smaller market share, there is growing interest in graph databases for financial security, telecommunications, and government data management initiatives.

Key Factors Fueling Market Growth

Rising Complexity of Data Relationships: Traditional relational databases struggle with highly connected data structures, making graph databases the preferred solution.

Cloud-Based Deployments: The availability of cloud-native graph database solutions is making adoption easier for businesses of all sizes.

Real-Time Analytics Demand: Businesses require instant insights to improve decision-making, fraud detection, and customer interactions.

AI and IoT Expansion: The growing use of AI and Internet of Things (IoT) is creating a surge in data complexity, making graph databases essential for real-time processing.

Open-Source Innovation: Open-source graph database platforms are making technology more accessible and fostering community-driven advancements.

Future Prospects and Industry Outlook

1. Increased Adoption in Enterprise AI Solutions

As AI-driven applications continue to grow, graph databases will play a vital role in structuring and analyzing complex datasets, improving AI model accuracy.

2. Expansion of Graph Database-as-a-Service (DBaaS)

Cloud providers are offering graph databases as a service, reducing infrastructure costs and simplifying deployment for businesses.

3. Integration with Blockchain Technology

Graph databases are being explored for blockchain applications, enhancing security, transparency, and transaction analysis in decentralized systems.

4. Enhanced Cybersecurity Applications

As cyber threats evolve, graph databases will become increasingly critical in threat detection, analyzing attack patterns, and strengthening digital security frameworks.

5. Growth in Autonomous Data Management

With advancements in AI-driven automation, graph databases will play a central role in self-learning, adaptive data management solutions for enterprises.

Access Complete Report: https://www.snsinsider.com/reports/graph-database-market-3615

Conclusion

The Graph Database Market is on a high-growth trajectory, driven by its ability to handle complex, interconnected data with speed and efficiency. As industries continue to embrace AI, big data, and cloud computing, the demand for graph databases will only accelerate. Businesses investing in graph technology will gain a competitive edge in data-driven decision-making, security, and customer experience. With ongoing innovations and increasing enterprise adoption, the market is poised for long-term expansion and transformation.


#Graph Database Market#Graph Database Market Analysis#Graph Database Market Scope#Graph Database Market Share#Graph Database Market Trends

0 notes

Text

Pixtral Large 25.02: Amazon Bedrock Serverless Multimodal AI

AWS releases Pixtral Large 25.02 for serverless Amazon Bedrock.

Amazon Bedrock Pixtral Large

The Pixtral Large 25.02 model is now available on Amazon Bedrock as a fully managed, serverless offering. AWS was the first major cloud provider to offer Pixtral Large as a fully managed, serverless model.

Handling the computational demands of large foundation models (FMs) often requires careful infrastructure planning, specialised expertise, and continual optimisation. Many customers must either manage complex infrastructure themselves or trade off cost against performance when deploying sophisticated models.

Pixtral Large, Mistral AI's first multimodal model, combines strong language understanding with advanced vision capabilities. Its 128K context window makes it well suited to complex visual reasoning. The model performs well on the MathVista, DocVQA, and VQAv2 benchmarks, which reflects its strength in document analysis, chart interpretation, and natural image understanding.

Pixtral Large is also strongly multilingual. Global teams and applications can use English, French, German, Spanish, Italian, Chinese, Japanese, Korean, Portuguese, Dutch, and Polish. Python, Java, C, C++, JavaScript, Bash, Swift, and Fortran are among the 80 programming languages it can read and write.

Developers will appreciate the model's agent-centric design, which integrates with existing systems through function calling and JSON output formatting. Its strong system prompt adherence improves reliability in long-context scenarios and retrieval-augmented generation (RAG) applications.

With Pixtral Large in Amazon Bedrock, you can use this model without provisioning or managing any infrastructure. The serverless approach lets you scale usage with demand, without upfront commitments or capacity planning. You pay only for what you use, with no idle resources.

Cross-Region inference

Pixtral Large is now available in Amazon Bedrock across multiple AWS Regions through cross-Region inference.

With Amazon Bedrock cross-Region inference, you can access a single FM across multiple Regions with high availability and low latency for global applications. For example, a model deployed in both the US and Europe can be reached through Region-specific API endpoints that use different prefixes: us.model-id for the US and eu.model-id for Europe.

By keeping data processing within defined geographic boundaries, Amazon Bedrock can help you meet regulatory requirements, and it reduces latency by routing inference requests to the endpoint nearest the user. The system automatically handles load balancing and traffic routing across the Regional deployments, providing scalability and redundancy without manual monitoring.

How it works

As a developer advocate, I always look at how new capabilities can solve real problems. The new multimodal features in the Amazon Bedrock Converse API got a good test when someone asked me for help preparing for her physics exam.

She was struggling with the problems, and I realised this was an ideal use case for the new multimodal capabilities. I used the Converse API to build a simple application that could interpret photographs of a complex problem sheet containing graphs and mathematical notation. Once the physics exam materials were uploaded, I asked the model to explain how to work through each solution.

What happened next impressed us both. The model interpreted the diagrams, the mathematical notation, and the French-language text, and explained each problem step by step. It kept context throughout the conversation and handled follow-up questions about specific problems, which made the tutoring feel natural.

She went into the exam confident and prepared, which shows how Amazon Bedrock's multimodal capabilities can create meaningful experiences for users.

Start now

The new model is available through Regional API endpoints in US East (Ohio, N. Virginia), US West (Oregon), and Europe (Frankfurt, Ireland, Paris, Stockholm). Regional availability helps reduce latency and meet data residency requirements.

You can try the model in the AWS Management Console, or access it programmatically through the AWS CLI or an AWS SDK using the model ID mistral.pixtral-large-2502-v1:0.
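As a rough sketch of what programmatic access might look like, the Python code below builds a multimodal request for the Bedrock Converse API: one text question plus one image. It combines the model ID with the Regional prefix described earlier. The helper names, the question text, and the placeholder image bytes are assumptions made for this example, and the actual call is left as comments because it needs the `boto3` package and AWS credentials.

```python
MODEL_ID = "mistral.pixtral-large-2502-v1:0"


def inference_profile_id(region_prefix, model_id=MODEL_ID):
    """Prefix the model ID for cross-Region inference, e.g. 'us' or 'eu'."""
    return f"{region_prefix}.{model_id}"


def build_converse_request(question, image_bytes, image_format="png", region_prefix="us"):
    """Keyword arguments for a Converse call with one text and one image block."""
    return {
        "modelId": inference_profile_id(region_prefix),
        "messages": [
            {
                "role": "user",
                "content": [
                    {"text": question},
                    {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                ],
            }
        ],
    }


# Placeholder bytes only; a real request needs the contents of an image file.
request = build_converse_request(
    "Explain how to solve the first problem on this sheet, step by step.",
    b"placeholder-image-bytes",
)
print(request["modelId"])  # us.mistral.pixtral-large-2502-v1:0

# With boto3 installed and AWS credentials configured, the call would look like:
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   response = client.converse(**request)
#   print(response["output"]["message"]["content"][0]["text"])
```

Keeping the request builder separate from the network call makes it easy to inspect or test the payload before sending anything to AWS.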

This is a major step toward making powerful multimodal AI available to developers and organisations of all sizes. Combining AWS serverless infrastructure with Mistral AI's cutting-edge model lets you focus on building innovative applications without worrying about the underlying complexity.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#Pixtral Large#Amazon Bedrock#Mistral AI#Pixtral Large 25.02#Pixtral Large 25.02 model#multimodal model

Text

Dell Technologies Secures Major AI Server Deal with xAI

Amazon SageMaker simplifies the process of building, training, and deploying machine learning models.

Artificial Intelligence (AI) continues to be a driving force in the evolution of technology, influencing a wide range of industries by enhancing efficiency, decision-making, and innovation. As organizations increasingly harness the capabilities of AI, partnerships like the one between Dell Technologies and xAI highlight the importance of robust infrastructure to support advanced AI applications. This collaboration underscores the growing need for powerful AI servers that can handle vast datasets and complex algorithms, ultimately enabling businesses to capitalize on insights and streamline operations. The integration of AI into various sectors not only optimizes performance but also fosters the development of new technologies, paving the way for a smarter and more interconnected future.

OpenAI offers powerful language models for various applications.

TensorFlow provides extensive libraries for machine learning and deep learning.

PyTorch is popular for its dynamic computation graph and user-friendly interface.

Google Cloud AI enables scalable AI solutions with robust infrastructure.

IBM Watson specializes in enterprise AI solutions and natural language processing.

Hugging Face is known for its vast collection of pre-trained models and community support.

DataRobot automates machine learning processes for a variety of industries.

Kubeflow is focused on deploying machine learning workflows on Kubernetes.

Overview of the Dell Technologies and xAI Partnership

Dell Technologies is on the verge of finalizing a $5 billion contract with xAI, the artificial intelligence startup founded by Elon Musk. This collaboration will supply xAI with state-of-the-art AI servers, vital for enhancing the company’s technological capabilities. As artificial intelligence transforms numerous industries, this agreement signifies an important milestone, highlighting the dedication of major tech companies to invest in AI-driven advancements.

Significance of the Deal in the AI Landscape

The collaboration between Dell Technologies and xAI is not just a financial transaction; it represents a strategic alliance aimed at enhancing AI capabilities. The importance of this deal lies in its potential to influence the broader AI landscape. As competition accelerates among tech companies to dominate the AI sector, the partnership could inspire similar collaborations that focus on developing more robust and scalable AI solutions. Dell’s established reputation in hardware technology, coupled with xAI’s focus on groundbreaking AI research, positions them as key players in the evolving tech ecosystem.

Technological Advancements for xAI

With this agreement, xAI stands to gain access to state-of-the-art server technology that promises enhanced processing speeds, improved data storage capabilities, and increased scalability. These technological advancements are crucial for xAI, especially as it endeavors to innovate in fields such as machine learning and artificial intelligence. The robustness of Dell’s hardware infrastructure will provide the necessary support that xAI needs to accelerate its development cycles, ultimately enabling quicker and more efficient research outcomes.

Impact on Dell Technologies’ Market Position

For Dell Technologies, securing this deal could significantly strengthen its position in the competitive AI market. By partnering with an influential figure like Elon Musk, Dell not only showcases its capability to meet the complex demands of AI but also reaffirms its commitment to pioneering technology solutions. As the demand for AI servers rises, Dell could capture a larger share of this growing market, which has become increasingly vital for organizations across various sectors. Enhanced visibility in the AI domain may also open doors for Dell to forge similar partnerships with other tech entities.

Future Implications for AI and Machine Learning

The implications of this partnership extend beyond immediate technological advancements; it could herald a new era for AI and machine learning development. As xAI leverages Dell’s infrastructure, the potential for disruptive innovations becomes increasingly plausible. This collaborative dynamic could result in breakthroughs that influence how AI is integrated into daily operations across numerous industries, from healthcare to finance. Furthermore, the success of this partnership could serve as a blueprint for future collaborations within the tech industry, inspiring others to invest in specialized AI solutions and infrastructure.

Conclusion

The impending partnership between Dell Technologies and xAI is a noteworthy event in the tech landscape, reflecting the intense investment and interest in artificial intelligence. As both entities prepare to combine their strengths in hardware and AI research, the collaboration promises to yield significant developments that could reshape the future of technology. As industry observers watch closely, the outcomes of this agreement may well set new standards for what is achievable in AI and machine learning spheres.

Text

AWS NoSQL: A Comprehensive Guide to Scalable and Flexible Data Management

As big data and cloud computing continue to evolve, traditional relational databases often fall short of the demands of modern applications. AWS NoSQL databases offer a scalable, high-performance way to manage unstructured and semi-structured data efficiently. This blog explores AWS NoSQL databases in depth, highlighting their key benefits, use cases, and best practices for implementation.

An Overview of NoSQL on AWS

Unlike traditional SQL databases, NoSQL databases are designed with flexible schemas, horizontal scalability, and high availability in mind. AWS offers a range of managed NoSQL database services tailored to diverse business needs. These services empower organizations to develop applications capable of processing massive amounts of data while minimizing operational complexity.

Key AWS NoSQL Database Services

1. Amazon DynamoDB

Amazon DynamoDB is a fully managed key-value and document database engineered for ultra-low latency and exceptional scalability. It offers features such as automatic scaling, in-memory caching, and multi-region replication, making it an excellent choice for high-traffic and mission-critical applications.

2. Amazon DocumentDB (with MongoDB Compatibility)

Amazon DocumentDB is a fully managed document database service that supports JSON-like document structures. It is particularly well-suited for applications requiring flexible and hierarchical data storage, such as content management systems and product catalogs.

3. Amazon ElastiCache

Amazon ElastiCache delivers in-memory data storage powered by Redis or Memcached. By reducing database query loads, it significantly enhances application performance and is widely used for caching frequently accessed data.

4. Amazon Neptune

Amazon Neptune is a fully managed graph database service optimized for applications that rely on relationship-based data modeling. It is ideal for use cases such as social networking, fraud detection, and recommendation engines.

5. Amazon Timestream

Amazon Timestream is a purpose-built time-series database designed for IoT applications, DevOps monitoring, and real-time analytics. It efficiently processes massive volumes of time-stamped data with integrated analytics capabilities.

Benefits of AWS NoSQL Databases

Scalability – AWS NoSQL databases are designed for horizontal scaling, ensuring high performance and availability as data volumes increase.

Flexibility – Schema-less architecture allows for dynamic and evolving data structures, making NoSQL databases ideal for agile development environments.

Performance – Optimized for high-throughput, low-latency read and write operations, ensuring rapid data access.

Managed Services – AWS handles database maintenance, backups, security, and scaling, reducing the operational workload for teams.

High Availability – Features such as multi-region replication and automatic failover ensure data availability and business continuity.

Use Cases of AWS NoSQL Databases

E-commerce – Flexible and scalable storage for product catalogs, user profiles, and shopping cart sessions.

Gaming – Real-time leaderboards, session storage, and in-game transactions requiring ultra-fast, low-latency access.

IoT & Analytics – Efficient solutions for large-scale data ingestion and time-series analytics.

Social Media & Networking – Powerful graph databases like Amazon Neptune for relationship-based queries and real-time interactions.

Best Practices for Implementing AWS NoSQL Solutions

Select the Appropriate Database – Choose an AWS NoSQL service that aligns with your data model requirements and workload characteristics.

Design for Efficient Data Partitioning – Create well-optimized partition keys in DynamoDB to ensure balanced data distribution and performance.

Leverage Caching Solutions – Utilize Amazon ElastiCache to minimize database load and enhance response times for your applications.

Implement Robust Security Measures – Apply AWS Identity and Access Management (IAM), encryption protocols, and VPC isolation to safeguard your data.

Monitor and Scale Effectively – Use Amazon CloudWatch for performance monitoring and take advantage of auto-scaling capabilities to manage workload fluctuations efficiently.
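To make the partitioning advice above more concrete, here is a minimal sketch of one common technique, write sharding, in which a deterministic suffix is appended to a frequently written partition key so that writes spread across several partitions. The article does not say which technique it has in mind, so this is only one possible reading; the function names, the "#" separator, and the shard count are hypothetical.

```python
import hashlib

NUM_SHARDS = 10  # hypothetical shard count; tune to the write volume


def sharded_partition_key(base_key: str, item_id: str, num_shards: int = NUM_SHARDS) -> str:
    """Append a deterministic shard suffix so one hot key spreads over several partitions."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % num_shards
    return f"{base_key}#{shard}"


def all_shard_keys(base_key: str, num_shards: int = NUM_SHARDS) -> list:
    """List every shard key for base_key; a reader queries each one and merges the results."""
    return [f"{base_key}#{shard}" for shard in range(num_shards)]
```

The trade-off is that reads for a single logical key must fan out over every shard key returned by all_shard_keys, so this approach tends to suit write-heavy workloads better than read-heavy ones.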

Conclusion

AWS NoSQL databases are a robust solution for modern, data-intensive applications. Whether your use case involves real-time analytics, large-scale storage, or high-speed data access, AWS NoSQL services offer the scalability, flexibility, and reliability required for success. By selecting the right database and adhering to best practices, organizations can build resilient, high-performing cloud-based applications with confidence.

Text

DSA Channel: The Ultimate Destination for Learning Data Structures and Algorithms from Basics to Advanced

Mastering DSA is vital for software development and competitive programming in today's fast-moving digital world. Learners at every level, from beginner to advanced developer, will find what they need at the DSA Channel.

Why is DSA Important?

Data structures and algorithms are the core of software development. They make it possible to optimize code, which improves performance and makes complex problems solvable. A solid grasp of DSA is essential for job interviews and coding competitions, and it sharpens logical thinking. With proper guidance, even the basic concepts of DSA are rewarding and enjoyable to study.

What Makes DSA Channel Unique?

The DSA Channel exists to simplify data structures and algorithms and make them accessible to everyone. Here’s why it stands out:

The channel offers a step-by-step learning path that begins with arrays and linked lists and progresses to dynamic programming and graph theory.

Each theoretical concept is backed by practical coding examples, making it easier to understand and apply in real-life situations.

Major companies like Google, Microsoft, and Amazon test DSA knowledge as part of their recruiting process. Through the DSA Channel, candidates can practice with mock interviews and get advice on solving technical interview problems and on techniques for cracking interviews.