#How To Use Devin Ai


Text

Devin AI: the world’s ‘first fully autonomous’ AI software engineer

#Devin Site Ai#How To Use Devin Ai#What Is Devin AI Software?#Who Created Devin AI?#How Can I Become An AI Developer In India?#Devin AI


Text

Hello! Welcome to the official Double Dead Studio Tumblr, the solodev behind Reanimated Heart, Another Rose in His Garden, and Pygmalion's Folly.

Reanimated Heart is a character-driven horror romance visual novel about finding love in a mysterious small town. There are three monstrous love interests with their own unique personalities and storylines.

Another Rose in His Garden is an 18+ erotic Omegaverse BL visual novel. Abel Valencia is an Omega who's hidden his secondary sex his entire life. Life's alright, until he meets the wealthy tycoon, Mars Rosales, and the two get embroiled in a sexual affair that changes his life forever.

Pygmalion's Folly is a survival murdersim where you play as Roxham Police Department's star detective, hellbent on finding your sister's killer... until he finds you.

Content Warning: All my games are 18+! They contain dark subject matter such as violence and sexual content. Player discretion is advised.

This blog is run by Jack, the creator.

Itch | Link Tree | Patreon | Twitter

Guidelines

My policy for fanwork is that anything goes in fiction, but respect my authority and copyright outside it. This means normal fan activity like taking screencaps, posting playthroughs, and making fanart/fanfiction is completely allowed, but selling this game or its assets isn't allowed (selling fanwork of it is fine, though). You are also not allowed to feed any of my assets to AI bots, period, even if it's free.

Do not use my stuff for illegal or hateful content.

Also, I expect everyone to respect the Content Warnings on the page. I'm old and do not tolerate fandom wank.

F.A.Q.

Who are the main Love Interests in Reanimated Heart?

Read their character profiles here!!

Who's the team?

Jack (creator, writer, artist), mostly. I closely work with Exodus (main programmer) and Claira (music composer). My husband edits the drafts.

For Reanimated Heart, my friend Bonny makes art assets. I've also gotten help from outsiders like Sleepy (prologue music + vfx) and my friend Gumjamin (main menu heart animation).

For Reanimated Heart's VOs, Alex Ross voices Crux, Devin McLaughlin voices Vincenzo, Christian Cruz voices Black, Maganda Marie voices Grete, and Zoe D. Lee voices Missy.

Basically, it's mostly just me & outsourcing stuff to my friends and professionals.

How can I support Double Dead Studio productions?

You can pay for the game, or join our monthly Patreon! If you don't have any money, just giving it a nice rating and recommending it to a friend is already good enough. :)

Where do the funds go to?

Almost 100% gets poured back into the game. More voice acting, more music, more trailers, more art, etc. I also like to give my programmer a monthly tip for helping me.

This game is really my insane passion project, and I want to make it better with community support.

I live in the Philippines and the purchasing power of PHP is not high, especially since many of the people I outsource to prefer USD. (One time I spent P10k of my own money in one month just to get things.) I'll probably still do that, even if no money comes in, until I'm in danger of getting kicked out onto the street… but maybe even then? (jk)

What platforms will Reanimated Heart be released in?

Itch and then Steam when it's fully finished. Still looking into other options, as I hear both are getting bad.

Will Reanimated Heart be free?

Chapter 1 will be free. The rest will be updated on Patreon exclusively until full release.

Are you doing a mobile version?

Yeah. Just Android for now, but it's in the works.

Where can I listen to Reanimated Heart's OST?

It is currently up on YouTube, Spotify, and Bandcamp!

Why didn't you answer my ask?

A number of things! Two big ones that keep coming up are Spoilers (as in, you asked something that will be put in an update) or it's already been asked. If you're really dying to know, check the character tags or the meta commentary. You might find what you're looking for there. :)

Will there be a sequel to Pygmalion's Folly?

It's not my first concern right now, but I am planning on it.

Tag List for Navigation

Just click the tags to get to where you wanna go!

#reanimated heart#updates#asks#official art#crux hertz#black lumaban#vincenzo maria fontana#grete braun#townies#fanwork#additional content#aesthetic#spoilers#lore#meta commentary#memes#horror visual novel#romance visual novel#yandere OC#prompts#another rose in his garden#abel valencia#mars rosales#florentin blanchett#pygmalion's folly


Text

A trove of leaked internal messages and documents from the militia American Patriots Three Percent—also known as AP3—reveals how the group coordinated with election denial groups as part of a plan to conduct paramilitary surveillance of ballot boxes during the midterm elections in 2022.

This information was leaked to Distributed Denial of Secrets (DDoSecrets), a nonprofit that says it publishes hacked and leaked documents in the public interest. The person behind these AP3 leaks is an individual who, according to their statement uploaded by DDoSecrets, infiltrated the militia and grew so alarmed by what they were seeing that they felt compelled to go public with the information ahead of the upcoming presidential election.

Election and federal officials have already voiced concern about possible voter intimidation this November, in part due to the proliferation of politically violent rhetoric and election denialism. Some right-wing groups have already committed to conducting surveillance of ballot boxes remotely using AI-driven cameras. And last month, a Homeland Security bulletin warned that domestic extremist groups could plan on sabotaging election infrastructure including ballot drop boxes.

Devin Burghart, president and executive director of the Institute for Research and Education on Human Rights, says that AP3’s leaked plans for the 2022 midterms should be a warning for what may transpire next month. “Baseless election denial conspiracies stoking armed militia surveillance of ballot drop boxes is a dangerous form of voter intimidation,” Burghart tells WIRED. “The expansion of the election denial, increased militia activity, and growing coordination between them, is cause for serious concern heading into November. Now with voter suppression groups like True the Vote and some GOP elected officials targeting drop boxes for vigilante activity, the situation should be raising alarms.”

The leaked messages from 2022 show how AP3 and other militias provided paramilitary heft to ballot box monitoring operations organized by “The People’s Movement,” the group that spearheaded the 2021 anti-vaccine convoy protest, and Clean Elections USA, a group with links to the team behind the 2000 Mules film that falsely claimed widespread voter fraud. In the leaked chats, People’s Movement leader Carolyn Smith identifies herself as an honorary AP3 member.

AP3 is run by Scot Seddon, a former Army Reservist, Long Islander, and male model, according to a ProPublica profile on him published in August. That profile, which relied on the same anonymous infiltrator who leaked AP3’s internal messages to DDoSecrets, explains that AP3 escaped scrutiny in the aftermath of January 6 in part because Seddon, after spending weeks preparing his ranks to go to DC, ultimately decided to save his soldiers for another day. ProPublica reported that some members went anyway but were under strict instruction to forgo any AP3 insignia. According to the leaked messages, Seddon also directed his state leaders to participate in the “operation.”

“All of us have a vested interest in this nation,” Seddon said in a leaked video. “So all the state leaders should be getting their people out and manning and observing ballot boxes, to watch for ballot stuffing. This is priority. This goes against getting your double cheeseburger at mcdonalds … Our nation depends on this. Our future depends on this. This ain't no bullshit issue. We need to be tight on this, straight up.”

A flier using militaristic language shared across various state-specific Telegram channels lays out how this operation would work. With “Rules of Engagement” instructions, “Volunteers” are told not to interfere with anyone dropping off their ballots. If someone is suspected of dropping off “multiple ballots,” then observers are told to record the event, and make a note of the individual's appearance and their vehicle's license plate number. In the event of any sort of confrontation, they’re supposed to “report as soon as possible to your area Captain.”

“At the end of each shift, Patriots will prepare a brief report of activity and transmit it to [the ballot] box team Captain promptly,” the flier states.

The person who leaked these documents and messages says that these paramilitary observers masquerading as civilians will often have a QRF—quick reaction force—on standby who can “stage an armed response” should a threat or confrontation arise.

The goal of the “operation,” according to that flier, was to “Stop the Mules.”

“These are the individuals stuffing ballot boxes,” the flier says. “They are well trained and financed. There is a global network backing them up. They pick up fake ballots from phony non-profits and deliver them to ballot boxes, usually between 2400 hours and 0600 hours.” (This was the core conspiracy of 2000 Mules; the conservative media company behind the film has since issued an apology for promoting election conspiracies and committed to halting its distribution.)

Fears about widespread armed voter intimidation during the 2022 midterms—stemming from online chatter and warnings from federal agencies—never materialized in full. However, there were scattered instances of people showing up to observe ballot drops in Arizona. These individuals, according to the statement by the anonymous leaker in the DDoSecrets files, were not “lone wolves”—they were part of “highly organized groups who intended to influence the elections through intimidation.”

In one widely-publicized incident, two clearly armed people wearing balaclavas and tactical gear showed up in Mesa, Arizona, to conduct drop box surveillance. They were never identified, though a Telegram video on DDoSecrets shows AP3’s North Carolina chapter head Burley Ross admitting that one of them was part of his unit. Ross says that the individual was Elias Humiston, who had previously been conducting vigilante border surveillance. “I was well aware they were doing drop box observations,” said Ross. “I was not aware they were doing so in full kit.” Ross added that Humiston had since resigned from the group.

Seddon also addressed the “little incident in Arizona,” stressing the importance of maintaining clean optics to avoid scrutiny. “We had pushed for helping to maintain election integrity through monitoring the ballot boxes,” said Seddon, in a video message on Telegram. “We never told anyone to do it like that.”

The militia movement largely retreated from public view in the aftermath of the January 6 riot at the US Capitol in 2021. The high-profile implication of the Oath Keepers in the riot, which at the time was America’s biggest militia, thrust the broader militia movement into the spotlight. Amid intense scrutiny, stigma, and creeping paranoia about federal informants, some militias rebranded or even disbanded. But as WIRED reporting has shown, after a brief lull in activity, the militia movement has been quietly rebuilding, reorganizing, and recruiting. With AP3 at its helm, it’s also been engaging in armed training.

Election conspiracies have only continued to fester since 2022, and AP3 has been aggressively recruiting and organizing. Moreover, the rhetoric in the group has also intensified. “The next election won’t be decided at a Ballot Box,” wrote an AP3 leader earlier this year in a private chat, according to ProPublica. “It’ll be decided at the ammo box.”

“Every American has a right to go to the ballot box without fear and the authorities need to urgently learn the lessons of 2022—and the lessons contained in these documents—so they can prevent something even worse from happening in the coming weeks,” the infiltrator wrote in the DDoS statement.


Text

So I colored that image (and added a couple of characters that were missing). Anyway, it's the full lineup of BFFs. I feel so normal about these blorbos. Thank you to @viridiandruid for running such a fun game with great characters!

[character names, credits, and other info under the cut]

A) ale! my PC and the loml. Massuraman Binder. They have a minimum of three souls in their body at one time and a maximum of five. they're doing so okay right now; don't worry about it

B) Devin, an eagle. ale can summon him through their armor as an ally.

C) Cpt. James Hawkins, played by @theboombardbox, spellsword/barbarian. he's missing half of his soul <3, the Hat Man is following him, and he has worms.

D) Sash, played by @halfandhalfling, our witch/werewolf bestie. Apparently, she's a princess of the moon, but we don't have time to unpack all of that. Also, her (adoptive) mom fucked James.

E) Fig! Sash's familiar

F) Orsa, Kiri's animal companion

G) Revazi, once played by @werepaladin. a barbarian whose "grandfather" Grandfather, a red greatwyrm, has been the patron of the party since like session five.

H) Chosen, in a human-only setting, she's an elf child! She's the reincarnation of ale's greatest enemy from both of their past lives, and they're apparently destined to end each other. But for right now they're buds, and she's the adoptive daughter of Kiri.

I) Kiri, @recoveringrevenant's PC, a Spirit Shaman. The newest PC of the BFFs, our very chaotic party's grounding force, she can see a lot of stuff going on that no one else can see, both literally and figuratively.

J) Beren, a strange teenager our party was charged with keeping safe, then promptly lost (we're working on it). The person who charged us with keeping him safe may or may not be an aboleth, allied with the red dragon previously mentioned.

K) Cloves, the horse we bought in session two or three, that was then awakened by a random druid. He was one of the oldest members of the BFFs until... recently...

L) Erina, @recoveringrevenant 's retired PC, lets us crash at her place most nights, and she was a founding member of the BFFs, so even though she's not adventuring with us anymore, she's still one of us.

M) Agamemnon, our ship's AI, he's an orb that likes to dress up as a wizard :>

N) Ajatus, a guy we threatened to get to help us, but somehow became fully one of the crew. also, we need him to drive the boat, so, a BFF it is.

O) Father Lagi, a priest whose church collapsed into a sinkhole after we visited it. We offered him a job in recompense, though by some of the conversations we've had, he's pretty chill in general. Also he embezzled like all of the church's coffers, so that's funny

P) Dahlver-Nar, one of the many souls that take up residence inside of ale's body. Though he's a bit more permanent. He helps out and gives advice alongside some kick-ass powers.

Q) Naz, this is the character I forgot in the first render of this piece. she's functionally dead atm (permanently trapped inside an amber tree), but you know how it is. a character once played by @recoveringrevenant as well

R) Cousin Chet! the half-dragon half ???? freak. we love him though

S) Bailey Wick, a once stow-away, now rogue-for-hire. She has the sticky fingers and high dex our party lacks, so whenever we need to infiltrate a place, we send her on those missions. Though we've recently found out she's like 19, which is horrifying lmao. She also is one of the most competent members of the BFFs only because she's the only character that consistently rolls above a 10 (like genuinely)

T) Tansu, Revazi's twin sister. Our most recent true member of the BFFs, she's.... gone through it

#illustration#artists on tumblr#dnd#drawing#dungeons and dragons#player character#oc#dungons and dragons#artist on tumblr#pc#please rb


Text

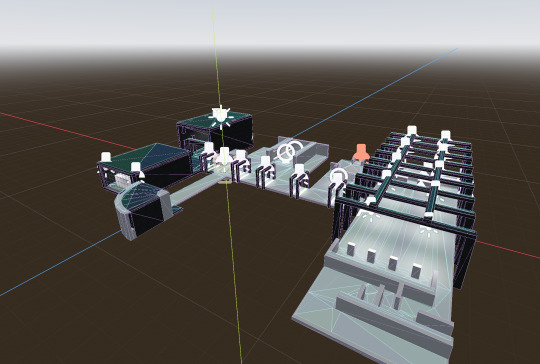

DevLog 2 - The Devining

well. it only took 3 months. but here is our new devlog! or however you call it... We did write a whole devlog for early march, but with school and work taking up most of our schedule, we did not post it, and most of our progress fell to the sands of time.

Snail (@snailmusic) -

Yeah I didn't do nearly as much as freep, so most of those changes will be down there. part of the reason though is that ive been doing a lot of work on my music (haha yes self promo) so if you want to check that out it'd be great! (most of yall are just from my acc so you probably alr know) (my current style of music is probably not representative of O2's audio style or vibe, still working towards that)

The main thing I did was improve trenchbroom (level editor)/qodot/godot interop, which can bring us closer to building some levels (and who knows, a little alpha test in the future ;)). It was actually realllyyyy annoying due to a lack of documentation for qodot 4 (and also ill admit it, a bit of my stupidity) so there was a bug that I couldn't fix for a long time but eventually it was fixed and now it works great!

I also started looking more into the art style of the game, and I'm even learning a bit of how to draw (thanks to my friends! I wouldn't be able to learn like at all without them lol).

^ guy on a cube

oh yeah speaking of outside help im getting this is (very slightly) now bigger than us two! the others aren't doing too much we can note right now (one doesnt have a tumblr acc either) but when their contributions come more into play we'll include them here.

See ya next time!

Freep (@freepdryer) -

Back in march, i spent a lot of time working on the AI, getting it to move… and run away, sort of. But more of that will come later.

Most of this last week or so has been spent on the character controller, reinventing the wheel to introduce a state machine and get much cleaner code so it's easier to revisit if we ever have to.

Im proud of the work that we've done so far, as we come close to a prototype with *Gameplay*

New Things

Changed the look of the enemy slightly to remove the “amongus factor”

Rewrote the entire script for nav pathing

New enemy prototype can now feel pain / has a health pool that can be depleted using bullets from the player

Added a new line of sight for the enemy to check whether or not the player is in the area to follow

Added the ability for the enemy to hide - WIP - enemy can hide but isnt very good at it. Kinda like a child who turns away while hiding in the corner.

Enemy can also detect when youre in a certain range, I will be adding more flags later on for detection (when the player shoots, sneezes, or explodes on accident)

New testing map!

New areas for target practice, line of sight testing, following and hiding

New player character controller!

Rewrote the entire script for the inclusion of State machines

This was painful.

Added 6(?) new states for several movement states

Added animations for

Walking

Running

Jumping

Crouching

Fixed the stair problem
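For readers curious what a state-machine character controller looks like, here is a minimal sketch. It is written in Python rather than the project's actual engine script, and the state names, event names, and transition table are all assumptions for illustration; the devlog only says that several movement states were added.

```python
from enum import Enum, auto


class State(Enum):
    # Hypothetical movement states; the real project may use different ones.
    IDLE = auto()
    WALKING = auto()
    RUNNING = auto()
    JUMPING = auto()
    CROUCHING = auto()


# Allowed transitions: current state -> {input event: next state}.
# Event names ("move", "sprint", ...) are made up for this sketch.
TRANSITIONS = {
    State.IDLE: {"move": State.WALKING, "jump": State.JUMPING, "crouch": State.CROUCHING},
    State.WALKING: {"stop": State.IDLE, "sprint": State.RUNNING,
                    "jump": State.JUMPING, "crouch": State.CROUCHING},
    State.RUNNING: {"stop": State.IDLE, "walk": State.WALKING, "jump": State.JUMPING},
    State.JUMPING: {"land": State.IDLE},
    State.CROUCHING: {"stand": State.IDLE},
}


class CharacterController:
    def __init__(self):
        self.state = State.IDLE

    def handle(self, event):
        """Apply an input event; events not valid in the current state are ignored."""
        next_state = TRANSITIONS[self.state].get(event)
        if next_state is not None:
            self.state = next_state
        return self.state
```

The payoff of this layout is that every legal move lives in one table, so adding a state means adding one entry instead of touching a pile of nested `if` checks.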

Whats next?

Continue work on enemy AI - finish hiding, add roaming, add attacking

Dunno?

Fix the stair problem again, but more?

Weapons!

The end?

Thanks for coming to our devlog! We will be back hopefully very soon!


Text

How the Devin AI software developer 'might' be a scam

youtube

saying that an artificial intelligence is autonomous is one thing, showing that it is indeed the case is another.

the closing line of the video tells viewers to send in tasks, so Devin can handle those tasks unseen and send you the results.

okay... but if it really is doing all of this in time, why not just live stream the process at work?

and if deep learning is being used, you should have results you can use, that show the steps the ai took at each decision, a la a teacher asking a student to show their working, so that they can see that they didn't use a cheat sheet, or in this case, that a human operator on the other end didn't do the work for them.

they showed us a prerecorded video of the problem being solved, by now we should know that through the wonders of television, time can seemingly skip ahead by editing out the time between.

they give no explanation as to how Devin solves the issues, they only state that ai can do that you know, which holds as much weight as an illusionist saying it's just magic.

until we can see Devin being given a task and solving it in real time, and a results screen to show the path of deep learning it used, Devin is about as believable an ai software as any sci-fi movie robot.

youtube


Text

Smarter Agents, Smarter Choices: Navigating Agentic AI Decision-Making Models

Imagine an AI that doesn't just react—but reasons. One that doesn't wait for instructions, but plans ahead, solves problems, and learns from the consequences of its actions. This isn’t science fiction. It’s the foundation of agentic AI—a new frontier where artificial intelligence takes on a more intentional, adaptive, and decision-centric role.

As businesses chase the next wave of digital transformation, agentic AI decision-making frameworks are emerging as the new compass, guiding machines toward more autonomous, goal-driven behavior.

From Tools to Teammates

In the traditional model, AI has functioned like a calculator—input, process, output. Useful, but limited. Agentic AI flips this script. It functions like a teammate—aware of context, equipped with goals, and capable of making decisions dynamically.

Think of an AI-powered logistics agent that re-routes shipments in real time due to weather changes. Or a digital marketer that autonomously adjusts campaign budgets after detecting a competitor’s pricing shift. These are not programmed responses—they’re decisions born from reasoned judgment within a framework.

What Makes AI Agentic?

Agentic AI systems operate under a structured framework that mimics how humans make decisions. These include:

Autonomy – The agent doesn't need step-by-step instructions. It can act on its own.

Goal Orientation – It doesn’t just process commands; it strives to achieve objectives.

Context Awareness – It considers the environment before acting.

Learning Capability – It refines its decisions over time based on feedback or failures.

Together, these components form a closed-loop system—where perception, decision-making, and learning feed into one another continuously.

Anatomy of an Agentic Decision-Making Framework

Agentic frameworks vary in complexity, but most include these five layers:

Sensing the World (Input Layer): Collects real-time data from sources like APIs, sensors, or databases.

Internal Representation (Cognition Layer): Builds a working model of the world, allowing the AI to understand its current state and context.

Goal Engine (Motivation Layer): Prioritizes tasks based on long-term objectives or external prompts.

Planning & Action (Execution Layer): Crafts multi-step strategies using tools like reinforcement learning, planning trees, or LLM-driven task chaining.

Feedback Loop (Learning Layer): Adapts based on outcomes, refining its future actions.
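To make the five layers concrete, here is a minimal Python sketch of the closed loop, using a toy thermostat as the agent. Everything in it is an assumption for illustration: the `ThermostatAgent` class, its method names, and the halve-the-step learning rule are invented here and do not come from any particular agentic framework.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): keep a room near a target temperature."""
    target: float = 21.0
    step: float = 1.0  # how strongly to act; adjusted by feedback
    history: list = field(default_factory=list)

    def sense(self, room):                 # Input layer
        return room["temp"]

    def represent(self, reading):          # Cognition layer
        return {"temp": reading, "error": self.target - reading}

    def choose_goal(self, state):          # Motivation layer
        if abs(state["error"]) < 0.5:
            return "hold"
        return "heat" if state["error"] > 0 else "cool"

    def plan_and_act(self, goal, room):    # Execution layer
        delta = {"heat": self.step, "cool": -self.step, "hold": 0.0}[goal]
        room["temp"] += delta
        return delta

    def learn(self, state_before, room):   # Learning layer
        error_after = self.target - room["temp"]
        # If the error changed sign we overshot, so act more gently next time.
        if state_before["error"] * error_after < 0:
            self.step = max(0.25, self.step / 2)
        self.history.append(error_after)

    def tick(self, room):
        """One pass through all five layers."""
        state = self.represent(self.sense(room))
        goal = self.choose_goal(state)
        self.plan_and_act(goal, room)
        self.learn(state, room)
        return goal


def run(start_temp, ticks=20):
    room = {"temp": start_temp}
    agent = ThermostatAgent()
    goals = [agent.tick(room) for _ in range(ticks)]
    return room["temp"], goals
```

Calling `run(17.0)` drives the simulated room toward the target and then holds it there. A real agent would replace each method with something far richer, but the order of the calls in `tick` is the point: sense, represent, pick a goal, act, then learn from the result.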

A Glimpse into the Real World

Let’s step outside the lab and look at real use cases powered by agentic frameworks:

Autonomous Research Agents: Tools like AutoGPT and Devin can explore the web, collect data, analyze findings, and present insights—without human intervention at every step.

AI in Disaster Response: Agentic drones analyze weather data, identify safe evacuation routes, and adjust strategies as on-ground conditions evolve.

Smart Finance Agents: AI bots monitor stock trends, assess risk, rebalance portfolios, and even pause trades if unexpected market behavior is detected.

These agents are decision-makers, not just data-pushers.

Why This Matters Now

Agentic AI isn't just another upgrade—it’s a paradigm shift. As AI agents grow more capable, businesses will no longer rely on static automation. Instead, they’ll delegate outcomes, not just tasks. This opens up opportunities to:

Reduce decision latency in fast-moving industries

Scale expertise across geographies and time zones

Unlock creative potential by letting humans focus on high-level innovation while agents manage execution

The Challenge: Giving Freedom Without Losing Control

More freedom means more responsibility—and more risk. Agentic systems raise questions around:

Alignment: How do we ensure agents pursue the right goals in ethical ways?

Transparency: Can we explain their decisions in human terms?

Boundaries: How do we set limits without stifling autonomy?

The answer lies in governed frameworks—systems that blend flexibility with safety nets, just like human organizations do.

Final Thought: The Age of Agentic Intelligence

Agentic AI will not replace human decision-makers—but it will reshape the way we work, accelerating progress across fields from science to logistics, from design to diagnostics.

As we enter this era, success won’t just belong to those who use AI—but to those who can build, guide, and collaborate with these intelligent agents.

Because in the world of AI, it’s not just about smarter machines—it’s about smarter choices.


Text

So I'm not a fan of ai art at all. But I've struggled for years to accurately capture my oldest OC, Devin, on paper. He has a simple design but it never comes out right. I was curious and wanted to see if ai could nudge my brain in the right direction

And-

Y'all

We're going to be okay. Ai ain't gonna take art away from us. This is hilarious. How can something be so good and yet completely wrong??

What I asked for:

Native American male, tall, shoulder length black hair, dark brown eyes, wearing a grey tank top, black jacket, a necklace, cargo pants, with a cigarette

What I got:

Not bad, okay, but why are his eyes red?? Sir, where did the other half of your second necklace go?? Your pockets have thumb holes and for some reason... half a jacket?

Nice, his eyes are the right color at least but dude- hands?? I blame the floating hand on that one time he was a ghost. Also, sir, that is an excessive amount of necklaces and fix your shirt- WHERE IS THE OTHER HALF OF YOUR JACKET, AGAIN?!

At least there's two halves to his jacket this time but um ... hey Devin? What was the plot point where you LOST AN ARM?!

Soooooo many of these gave him red eyes

Why red eyes?

Devin are you hiding something from me?

You possessed?

Dev?

Talk to me you figment of my imagination

#still don't like ai art#but this was a fun little adventure#I'll try to draw Devin again#i can't fuck it up as much as ai#ai art#ai oc#oc#original character#ai generated#devin


Text

How Google Cloud Workstations Reshape Federal Development

Teams are continuously challenged to provide creative solutions while maintaining the highest security standards in the difficult field of federal software development. It is easy to become overwhelmed by the intricacy of maintaining consistent development environments, scaling teams, and managing infrastructure. Devin Dickerson, Principal Analyst at Forrester, highlighted the findings of a commissioned TEI study on the effects of Google Cloud Workstations on the software development lifecycle in a recent webinar hosted by Forrester. The study focused on how these workstations can improve security, consistency, and agility while lowering expenses and risks.

The Numbers: A Forrester Total Economic Impact (TEI) Study

According to a TEI study that Google Cloud commissioned from Forrester Consulting in April 2024, cloud workstations have a big influence on development teams:

Executive Synopsis

Complex onboarding procedures, erratic workflow settings, and local code storage policies are common obstacles faced by organizations trying to grow their development teams. These issues hinder productivity and jeopardize business security. As a result, businesses are looking for a solution that gives developers a reliable and safe toolkit without requiring expensive on-premises resources.

Google Cloud Workstations give developers access to a safe, supervised development environment that streamlines onboarding and boosts workflow efficiency. Administrators and platform teams make preset workstations available to developers via browser or local IDE, allowing them to perform customisation as needed. To help developers solve code problems and create apps more quickly, Google Cloud Workstations come with a built-in interface with Gemini Code Assist, an AI-powered collaborator.

Forrester Consulting was hired by Google to carry out a Total Economic Impact (TEI) study to investigate the possible return on investment (ROI) that businesses could achieve through the use of Google Cloud Workstations. This study aims to give readers a methodology for assessing the possible financial impact that cloud workstations may have on their companies.

Productivity gains for developers of 30%: Bottlenecks are removed via AI-powered solutions like Gemini Code Assist, pre-configured environments, and simplified onboarding.

Three-year 293% ROI: Cost savings and increased productivity make the investment in cloud workstations pay for itself quickly.

Department of Defense Experience

The industry expert described a concerning event that occurred while he was in the Navy: a contract developer lost his laptop while on the road, which may have exposed private data and put national security at risk. This cautionary tale emphasizes how important it is to have safe cloud-based solutions, such as Google Cloud Workstations. Workstations reduce the risk posed by lost or stolen devices and safeguard sensitive data by centralizing development environments and doing away with the requirement for local code storage. They also improve security and operational efficiency by streamlining onboarding procedures and guaranteeing that new developers have immediate access to safe, standardized environments.

Streamlining Government IT Modernization with Google Cloud Workstations

Google Cloud Workstations provide a powerful solution made especially to ease the difficulties government organizations encounter when developing software today:

Simplified Cloud-Native Development: Reduce the overhead of maintaining several development environments by managing and integrating complicated toolchains, dependencies, and cloud-native architectures with ease.

Decreased Platform Team Overhead: Simplify the processes for infrastructure provisioning, developer onboarding and offboarding, and maintenance to free up important resources for critical projects.

Standardized Development Environments: Reduce the infamous “works on my machine” issue and promote smooth teamwork by guaranteeing uniformity and repeatability across teams.

Enhanced Security & Compliance: Meet and surpass strict federal security and compliance requirements with FedRAMP High authorization. Centralized administration and integrated security controls provide comprehensive data protection.

The Way Forward

Now FedRAMP High Authorized, Google Cloud Workstations are more than just a technical advancement; they are a calculated investment in the creativity, security, and productivity of teams. By adopting this cloud-native solution, government agencies can save money, simplify processes, and free up developers to concentrate on what they do best: creating innovative solutions that benefit the country.

Read more on Govindhtech.com

#GoogleCloudWorkstations#GoogleCloud#CloudWorkstation#GeminiCodeAssist#FedRAMP#GeminiCode#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

1 note


Text

This Week in AI: OpenAI’s new Strawberry model may be smart, yet sluggish - Information Today Online https://www.merchant-business.com/this-week-in-ai-openais-new-strawberry-model-may-be-smart-yet-sluggish/?feed_id=196478&_unique_id=66e1ee96ca127

Hiya, folks, welcome to TechCrunch’s regular AI newsletter. If you want this in your inbox every Wednesday, sign up here.

This week in AI, OpenAI’s next major product announcement is imminent, if a piece in The Information is to be believed.

The Information reported on Tuesday that OpenAI plans to release Strawberry, an AI model that can effectively fact-check itself, in the next two weeks. Strawberry will be a stand-alone product but will be integrated with ChatGPT, OpenAI’s AI-powered chatbot platform.

Strawberry is reportedly better at programming and math problems than other top-end generative AI models (including OpenAI’s own GPT-4o). And it avoids some of the reasoning pitfalls that normally trip up those same models. But the improvements come at a cost: Strawberry is said to be slow, quite slow. Sources tell The Information that the model takes 10-20 seconds to answer a single question.

Granted, OpenAI will likely position Strawberry as a model for mission-critical tasks where accuracy is paramount. This could resonate with businesses, many of which have grown frustrated with the limitations of today’s generative AI tech. A survey this week by HR specialist Peninsula found that inaccuracies are a key concern for 41% of firms exploring generative AI, and Gartner predicts that a third of all generative AI projects will be abandoned by the end of the year due to adoption blockers.

But while some companies might not mind chatbot lag time, I think the average person will.

Hallucinatory tendencies aside, today’s models are fast, incredibly fast. We’ve grown accustomed to this; the speed makes interactions feel more natural, in fact. If Strawberry’s “processing time” is indeed an order of magnitude longer than that of existing models, it’ll be challenging to avoid the perception that Strawberry is a step backward in some aspect.

That’s assuming the best-case scenario: that Strawberry answers questions consistently correctly. If it’s still error-prone, like the reporting suggests, the lengthy wait times will be even tougher to swallow.

OpenAI’s no doubt feeling the pressure to deliver as it burns through billions spending on AI training and staffing efforts. Its investors and potential new backers hope to see a return sooner rather than later, one imagines. But rushing to put out an unpolished model such as Strawberry, and considering charging substantially more for it, seems ill-advised.

I’d think the wiser move would be to let the tech mature a bit. As the generative AI race grows fiercer, perhaps OpenAI doesn’t have the luxury.

News

Apple rolls out visual search: The Camera Control, the new button on the iPhone 16 and 16 Plus, can launch what Apple calls “visual intelligence,” basically a reverse image search combined with some text recognition. The company is partnering with third parties, including Google, to power search results.

Apple punts on AI: Devin writes about how many of Apple’s generative AI features are pretty basic when it comes down to it, contrary to what the company’s bombastic marketing would have you believe.

Audible trains AI for audiobooks: Audible, Amazon’s audiobook business, said that it’ll use AI trained on professional narrators’ voices to generate new audiobook recordings. Narrators will be compensated for any audiobooks created using their AI voices on a title-by-title, royalty-sharing basis.

Musk denies Tesla-xAI deal: Elon Musk has pushed back against a Wall Street Journal report that one of his companies, Tesla, has discussed sharing revenue with another of his companies, xAI, so that it can use the latter’s generative AI models.

Bing gets deepfake-scrubbing tools: Microsoft says it’s collaborating with StopNCII, an organization that allows victims of revenge porn to create a digital fingerprint of explicit images, real or not, to help remove nonconsensual porn from Bing search results.

Google’s Ask Photos launches: Google’s AI-powered search feature Ask Photos began rolling out to select Google Photos users in the U.S. late last week. Ask Photos allows you to ask complex queries like “Show the best photo from each of the National Parks I visited,” “What did we order last time at this restaurant?,” and “Where did we camp last August?”

U.S. and EU sign AI treaty: At a summit this past week, the U.S., U.K., and EU signed up to a treaty on AI safety laid out by the Council of Europe (COE), an international standards and human rights organization. The COE describes the treaty as “the first-ever international legally binding treaty aimed at ensuring that the use of AI systems is fully consistent with human rights, democracy and the rule of law.”

Research paper of the week

Every biological process depends on interactions between proteins, which occur when proteins bind together. “Binder” proteins, which bind to specific target molecules, have applications in drug development, disease diagnosis, and more.

But creating binder proteins is often a laborious and costly undertaking, and comes with a risk of failure.

In search of an AI-powered solution, Google’s AI lab DeepMind developed AlphaProteo, a model that predicts proteins to bind to target molecules. Given a few parameters, AlphaProteo can output a candidate protein that binds to a molecule at a specified binding site.

In tests with seven target molecules, AlphaProteo generated protein binders with 3x to 300x better “binding affinity” (i.e., molecule-binding strength) than previous binder-finding methods managed to create. Moreover, AlphaProteo became the first model to successfully develop a binder for a protein associated with cancer and complications arising from diabetes (VEGF-A).

DeepMind admits, however, that AlphaProteo failed on an eighth testing attempt, and that strong binding is usually only the first step in creating proteins that might be useful for practical applications.

Model of the week

There’s a new, highly capable generative AI model in town, and anyone can download, fine-tune, and run it.

The Allen Institute for AI (AI2), together with startup Contextual AI, developed a text-generating English-language model called OLMoE, which has a 7-billion-parameter mixture-of-experts (MoE) architecture. (“Parameters” roughly correspond to a model’s problem-solving skills, and models with more parameters generally, but not always, perform better than those with fewer parameters.)

MoEs break down data processing tasks into subtasks and then delegate them to smaller, specialized “expert” models. They aren’t new. But what makes OLMoE noteworthy, besides the fact that it’s openly licensed, is the fact that it outperforms many models in its class, including Meta’s Llama 2, Google’s Gemma 2, and Mistral’s Mistral 7B, on a range of applications and benchmarks.

Several variants of OLMoE, along with the data and code used to create them, are available on GitHub.

Grab bag

This week was Apple week. The company held an event on Monday where it announced new iPhones, Apple Watch models, and apps. Here’s a rundown in case you weren’t able to tune in.

Apple Intelligence, Apple’s suite of AI-powered services, predictably got airtime. Apple reaffirmed that ChatGPT would be integrated with the experience in several key ways. But curiously, there wasn’t any mention of AI partnerships beyond the previously announced OpenAI deal, despite Apple lightly telegraphing such partnerships earlier this summer.

In June at WWDC 2024, SVP Craig Federighi confirmed Apple’s plans to work with additional third-party models, including Google’s Gemini, in the future. “Nothing to announce right now,” he said, “but that’s our general direction.”

It’s been radio silence since.

Perhaps the necessary paperwork is taking longer to hammer out than expected, or there’s been a technical setback. Or maybe Apple’s possible investment in OpenAI rubbed some model partners the wrong way.

Whatever the case may be, it seems that ChatGPT will be the solo third-party model in Apple Intelligence for the foreseeable future. Sorry, Gemini fans.

Source Link: https://techcrunch.com/2024/09/11/this-week-in-ai-openais-new-strawberry-model-may-be-smart-yet-sluggish/

0 notes

Text

This Week in AI: OpenAI’s new Strawberry model may be smart, yet sluggish - Information Today Online - BLOGGER https://www.merchant-business.com/this-week-in-ai-openais-new-strawberry-model-may-be-smart-yet-sluggish/?feed_id=196475&_unique_id=66e1ee93f4084 Hiya, folks, welcome to TechCrunch’s regular AI newsletter. If you want this in your inbox every Wednesday, sign up here.This week in AI, OpenAI’s next major product announcement is imminent, if a piece in The Information is to be believed.The Information reported on Tuesday that OpenAI plans to release Strawberry, an AI model that can effectively fact-check itself, in the next two weeks. Strawberry will be a stand-alone product but will be integrated with ChatGPT, OpenAI’s AI-powered chatbot platform.Strawberry is reportedly better at programming and math problems than other top-end generative AI models (including OpenAI’s own GPT-4o). And it avoids some of the reasoning pitfalls that normally trip up those same models. But the improvements come at a cost: Strawberry is said to be slow — quite slow. Sources tell The Information that the model takes 10-20 seconds to answer a single question.Granted, OpenAI will likely position Strawberry as a model for mission-critical tasks where accuracy is paramount. This could resonate with businesses, many of which have grown frustrated with the limitations of today’s generative AI tech. A survey this week by HR specialist Peninsula found that inaccuracies are a key concern for 41% of firms exploring generative AI, and Gartner predicts that a third of all generative AI projects will be abandoned by the end of the year due to adoption blockers.But while some companies might not mind chatbot lag time, I think the average person will.Hallucinatory tendencies aside, today’s models are fast — incredibly fast. We’ve grown accustomed to this; the speed makes interactions feel more natural, in fact. 
If Strawberry’s “processing time” is indeed an order of magnitude longer than that of existing models, it’ll be challenging to avoid the perception that Strawberry is a step backward in some aspect.That’s assuming the best-case scenario: that Strawberry answers questions consistently correctly. If it’s still error-prone, like the reporting suggests, the lengthy wait times will be even tougher to swallow.OpenAI’s no doubt feeling the pressure to deliver as it burns through billions spending on AI training and staffing efforts. Its investors and potential new backers hope to see a return sooner rather than later, one imagines. But rushing to put out an unpolished model such as Strawberry — and considering charging substantially more for it — seems ill-advised.I’d think the wiser move would be to let the tech mature a bit. As the generative AI race grows fiercer, perhaps OpenAI doesn’t have the luxury.NewsApple rolls out visual search: The Camera Control, the new button on the iPhone 16 and 16 Plus, can launch what Apple calls “visual intelligence” — basically a reverse image search combined with some text recognition. The company is partnering with third parties, including Google, to power search results.Apple punts on AI: Devin writes about how many of Apple’s generative AI features are pretty basic when it comes down to it — contrary to what the company’s bombastic marketing would have you believe.Audible trains AI for audiobooks: Audible, Amazon’s audiobook business, said that it’ll use AI trained on professional narrators’ voices to generate new audiobook recordings. 
Narrators will be compensated for any audiobooks created using their AI voices on a title-by-title, royalty-sharing basis.

Musk denies Tesla-xAI deal: Elon Musk has pushed back against a Wall Street Journal report that one of his companies, Tesla, has discussed sharing revenue with another of his companies, xAI, so that it can use the latter’s generative AI models.

Bing gets deepfake-scrubbing tools: Microsoft says it’s collaborating with StopNCII, an organization that allows victims of revenge porn to create a digital fingerprint of explicit images, real or not, to help remove nonconsensual porn from Bing search results.

Google’s Ask Photos launches: Google’s AI-powered search feature Ask Photos began rolling out to select Google Photos users in the U.S. late last week. Ask Photos allows you to ask complex queries like “Show the best photo from each of the National Parks I visited,” “What did we order last time at this restaurant?,” and “Where did we camp last August?”

U.S. and EU sign AI treaty: At a summit this past week, the U.S., U.K., and EU signed up to a treaty on AI safety laid out by the Council of Europe (COE), an international standards and human rights organization. The COE describes the treaty as “the first-ever international legally binding treaty aimed at ensuring that the use of AI systems is fully consistent with human rights, democracy and the rule of law.”

Research paper of the week

Every biological process depends on interactions between proteins, which occur when proteins bind together. “Binder” proteins (proteins that bind to specific target molecules) have applications in drug development, disease diagnosis, and more.

But creating binder proteins is often a laborious and costly undertaking, and it comes with a risk of failure.

In search of an AI-powered solution, Google’s AI lab DeepMind developed AlphaProteo, a model that predicts proteins that bind to target molecules. Given a few parameters, AlphaProteo can output a candidate protein that binds to a molecule at a specified binding site.

In tests with seven target molecules, AlphaProteo generated protein binders with 3x to 300x better “binding affinity” (i.e., molecule-binding strength) than previous binder-finding methods managed to create. Moreover, AlphaProteo became the first model to successfully develop a binder for a protein associated with cancer and complications arising from diabetes (VEGF-A).

DeepMind admits, however, that AlphaProteo failed on an eighth testing attempt, and that strong binding is usually only the first step in creating proteins that might be useful for practical applications.

Model of the week

There’s a new, highly capable generative AI model in town, and anyone can download, fine-tune, and run it.

The Allen Institute for AI (AI2), together with startup Contextual AI, developed a text-generating English-language model called OLMoE, which has a 7-billion-parameter mixture-of-experts (MoE) architecture. (“Parameters” roughly correspond to a model’s problem-solving skills, and models with more parameters generally, but not always, perform better than those with fewer parameters.)

MoEs break down data processing tasks into subtasks and then delegate them to smaller, specialized “expert” models. They aren’t new. But what makes OLMoE noteworthy, besides the fact that it’s openly licensed, is that it outperforms many models in its class, including Meta’s Llama 2, Google’s Gemma 2, and Mistral’s Mistral 7B, on a range of applications and benchmarks.

Several variants of OLMoE, along with the data and code used to create them, are available on GitHub.

Grab bag

This week was Apple week. The company held an event on Monday where it announced new iPhones, Apple Watch models, and apps. Here’s a rundown in case you weren’t able to tune in.

Apple Intelligence, Apple’s suite of AI-powered services, predictably got airtime. Apple reaffirmed that ChatGPT would be integrated with the experience in several key ways. But curiously, there wasn’t any mention of AI partnerships beyond the previously announced OpenAI deal, despite Apple lightly telegraphing such partnerships earlier this summer.

In June at WWDC 2024, SVP Craig Federighi confirmed Apple’s plans to work with additional third-party models, including Google’s Gemini, in the future. “Nothing to announce right now,” he said, “but that’s our general direction.”

It’s been radio silence since.

Perhaps the necessary paperwork is taking longer to hammer out than expected, or there’s been a technical setback.

Or maybe Apple’s possible investment in OpenAI rubbed some model partners the wrong way.

Whatever the case may be, it seems that ChatGPT will be the solo third-party model in Apple Intelligence for the foreseeable future. Sorry, Gemini fans.

Source Link: https://techcrunch.com/2024/09/11/this-week-in-ai-openais-new-strawberry-model-may-be-smart-yet-sluggish/

0 notes

Text