#How to Transfer Table Data from One Database to Another Database


Adaptation


---

After the last hectic few weeks, things had finally died down a little bit again. Kelth had reached out to Kali to gauge its interest in their idea for relics. It had seemed enthusiastic and had agreed to split the results fifty-fifty, so now they were paying extra attention during missions, looking for more of the rocks to build up a first batch for it to pick up.

Missions which were sadly just paid contracts from various allied factions, and not to recover Ordis’ drives. After Vince, their resource stores and credit wallet had been painfully depleted - not that they were about to tell him that. He seemed to have enough on his mind already, which he did try to hide during their morning sit-downs with him to figure out his abilities, but it seemed that the pace at which he initially picked transference up didn’t extend to actually mastering it yet.

It helped that Vince had a lot to distract him, and Kelth along with him. His abilities seemed to be very maths-heavy, requiring a lot of split-second precision calculations, which he was doing almost subconsciously. When they asked him what all the numbers flying by in his head meant, he had sat down with them that evening for what had turned into a several-hours-long interdimensional maths masterclass, and to their surprise, it had actually made sense. Vince had seemed to enjoy teaching, and had complimented them on what he’d assumed to be a background in this field - which had confused them.

Did Kelth have a background in this? They didn’t know - they still didn’t remember much of anything from before they had woken up to Grineer soldiers stabbing a device into Sufford’s thigh, and beyond that only had the recurring nightmare of running down endless broken-down ship hallways. But - if they remembered this correctly - Ordis had felt familiar on that first horrible day. They’d known him before. They could ask him.

It was too bad that their life was a busy one now, with a spaceship, two warframes and their own human self to look after - so they only remembered it once Sufford and Vince were out. The two warframes had gone to a nearby relay’s shooting range for target practice, and Kelth, left alone on board, had decided to go over with Ordis which resources they were missing, when the idea came back to them.

“-we might have to find another Grineer base, then, see if they have- ohh, wait.”

“Hm? What is it?” Ordis asked when they took a little too long trying to formulate their thoughts.

“I had a question I’d wanted to ask you,” they started, stalling while trying to remember it, “it was something I thought of when- Oh!” There it was. “Do you remember anything from before all this? Before all the endless fighting? Because I don’t.”

Ordis’ table hologram flickered as he hummed. “Hmm. I can’t remember any specifics, no. The data I have on the Old War came from historical databanks. Ordis doesn’t even remember how he ended up on Earth on the day you awoke - I was brought back the same moment you were, it seems.”

“Oh,” Kelth said, a little disappointed. “I thought- I guess I’m just curious about what I did before all this. I can’t have been an operator forever, right? There’s got to be something from before. How did I even end up on Lua, for example?”

“Hmm… Oh, Ordis does have some files that weren’t authored by the Orokin in his database. But- I can’t fucking look at them- ahem, they are inaccessible to me. Maybe you created them? They might be a journal of some sort that you kept?”

Kelth blinked up at the hologram. It was weird that he had files he couldn’t access, so this might be a lead for his own glitch-related problems, on top of their own history. “Huh? I don’t remember ever keeping a journal, but that doesn’t mean much - can you send their location to my datapad?”

“Sent. Did you-”

“Got it,” they said, connecting to his local filesystem instead of the pad’s own. There were indeed some files in the folder he’d pointed them at with non-standard names - w1, w2, and so on. The file extension wasn’t like other text files, but looked familiar, too, though they couldn’t immediately place it. They opened the file called w1.

It contained text, but not to read - it was formatted weirdly but consistently, and a lot of it was symbol soup.

“Oh, this is program code,” they realised after a second of confused staring. And then looked back at the filenames. “Wait, is this old homework? There’s one per week.”

“Oh, that’s interesting,” Ordis said, sounding intrigued. “Can you make sense of the contents?”

Kelth scanned through the blocks of words and symbols, and - “I think so? I’d need to read them more thoroughly, but I think there’s- what were they called, comments? There’s lots of them in here, but I feel like I’m missing something. They’re not as easy to find as they should be?”

“Okay,” Ordis said. “Do you- wait, how are you looking at the files?”

“Uh-” Kelth said, confused, glancing up at Ordis’ hologram. “At the, uh. Datapad?”

“No, no- The program you’re using to look at them. What is it?”

“Oh, just the basic text editor?”

“Hm,” Ordis said. The screen of the datapad flickered between tabs rapidly for a moment. Then, an installation wizard appeared, and Ordis ran through the settings even faster. Finally, they were looking at a progress bar, filling up slowly with irregular intervals. “There, try opening the files in that program, once it’s done installing,” he said.

“Oh, sure, thanks- I’d forgotten those existed, what were they called?”

“Integrated development environments, or IDEs,” Ordis said. “Are your memories coming back?”

“It feels more like they’re being dragged back forcefully, but yes, very slowly, at least for this stuff,” Kelth said, feeling giddy. The progress bar filled up and the wizard closed itself. The icons of the code files had changed to the icon of the new IDE, so they opened them again with confidence.

And they did load into a much fancier text editor this time - with a dark background, several bars of options at the top and a file viewer on the side, and parts of the actual code in different colours. And suddenly, it clicked.

Kelth sucked in a breath as their eyes flew over the lines, identifying the relevant parts of comments and function and variable names and logic, putting the functionality together in their head-

“I take it the IDE is doing its job?” Ordis asked, amused, when they forgot to say anything again. They snorted.

“Yeah,” they said, “it’s all making a lot more sense now. I think I’ll just keep reading through this for now, actually, see if anything else comes back.”

“Do let me know if there’s something Ordis could help to figure out,” he said. “Or when you want to try actually running the code to see what it does.”

Kelth turned to his hologram, with a performance of puppy eyes they privately thought must’ve been their best one yet. The hologram shook as Ordis laughed.

A pointer in the form of a cracked blue cube appeared on their screen, pointing at a green triangle icon in one of the top bars of the IDE. “You can press this one to compile and run,” he said, before moving the cursor elsewhere. “And if you press this one-”

—

A while later, their head hurting just a little bit from all the newly-remembered information, they turned off the datapad and sat back with a great big sigh.

“That’s going to be a lot of work, but it’s interesting, and I think it can be useful,” they said, happy-tired.

“And you’ve done it once before already, or at least the start of it,” Ordis added. “And it can certainly be useful! You could write algorithms for things, I-O-Ordis can’t.”

“You can’t?” they repeated, confused. “Wait, yeah, you mentioned that - why do you not have access to your own files?”

“Ordis is afraid that- Ordis has no fucking clue- there’s a barrier of some kind, there.”

Kelth stared at the hologram - when Ordis glitched, it briefly jittered out of focus. They were quiet just a little too long, because he let out a bitter laugh.

“Maybe you could learn to fix that, and this glitch while you’re at it,” he said, tone self-deprecating, sounding like he had been aiming for joking but had missed completely.

“That sounds- not impossible, actually,” Kelth said, careful. “I can at least take a look, right?”

Ordis was quiet for a beat, before he tried to speak three thoughts at once. “I- Ordis hadn’t- that was just a joke, you don’t-”

“No, hey, once I get a bit better at this, I can absolutely take a look for you,” they said, now a little more firm, a little more concerned. Ordis might not feel like he was worth the effort, but he was to them.

He sighed deeply. “Ordis is- really not sure how he feels about the idea, especially while you’re already still working on the memory drives. Let’s just forget about this one for now?”

He intoned the last bit like a question. “Sure,” Kelth said, absolutely not intending to forget about it for any stretch of time. But before they could address it any more, he moved on.

The hologram flickered bright once, as Ordis made a sound like he’d just inhaled sharply. “In the meanwhile, for practice, I just had an idea,” he said, sounding excited and giddy. It felt a little like whiplash, but they were willing to roll with it.

“Yeah?” they asked, allowing themself to be affected by his enthusiasm, smiling again.

“Ordis has found an open-source repository of void fissure data,” he said, taking control of the datapad in their hands again to open a sleek-looking website. “Maybe you could do something with this? Combine it, process it in some way, and-”

“Predict the locations of future ones,” Kelth breathed, eyes wide. “Oh, that’s a fantastic idea - what format is the data in, could we use any of it?”

“It’s raw data with the location, timestamp and intensity, but from some preliminary analyses I ran on it, it looks pretty chaotic, and Ordis couldn’t immediately find a pattern in it.”

“Oh, you could try to ask Vince? He’s good with maths - you guys can try to find a pattern to it while I figure out how this whole code thing works again?” Kelth proposed.

“Oh, I had not considered- a fantastic idea!”

They were interrupted by the sound of the Liset docking again - had it already been so long? Time had flown by.

“Awesome, you can go get a start on that while I go see if Sufford’s up for another drive run?” they asked, already putting the datapad down on the table and grabbing for their cane to get up. “I need to look at something other than code for a few hours, give my eyes a rest.”

“Sure, I just- oh, he likes the idea,” Ordis said, as the doors to the lounge opened and Vince all but stormed in, clearly on his way to his room already, briefly startled by them and Ordis’ hologram just sitting there. He nodded towards them in greeting, but did not let this slow him down, and he was already down the hallway by the time Sufford even poked his head into the still-open door. Kelth snorted, thoroughly amused.

“Looks like you’ve got yourself a maths buddy now, Ordis,” they said, grinning wide, before waving at Sufford. “Hey Sufford, are you up to go shoot at some real moving targets?”

He jerked his head back a little as if to scoff, before tilting it and giving them an exaggerated thumbs-up. He then immediately disappeared back the way he’d come - presumably heading towards the arsenal, to pick up his skana. Kelth got up, then, taking a minute to stretch out their legs before they went to sit down for what was probably going to be another few hours.

Ordis’ hologram was still up, even though he was probably already distracted coming up with mathematical nonsense with Vince, so Kelth wished him good luck, and made their way towards the somatic link.

The whole ship at work, at once - it felt good, like they were all a team. And for all intents and purposes, Kelth was at the head of it - giving the orders if not also coming up with them in the first place.

Giddy, they lowered themself down into the seat, closing their eyes as the cover sank down over them, connecting to Sufford - he was already in the Liset, setting the nav console to go back to the planet where they’d last left off with the drives. On their signal, he started the flight.

—

The first batch of relics was handed over to Kali before any kind of fissure-finding algorithm was ready, so they simply didn’t mention it. Biting their tongue was hard, especially when Kali reported in only sporadically, whenever it had managed to find a fissure area and crack some of the relics open.

It only took a few days’ worth of downtime for them to get through all of their old homework files - there was disappointingly little of it on board - and go looking online for courses. But following a course was boring when they had the prospect of writing something actually useful, so they dropped that relatively quickly, in favour of messing around and trying to put together the framework for the fissure-finder instead.

And that did seem to work - while Ordis and Vince narrowed down the formula for the fissures’ appearances, Kelth cobbled together a functional finder algorithm. When they ran it on the available historical data, it worked. And when they’d handed it to Kali, to run in predictive mode, it still worked.

Compared to the week that the first batch had taken to crack, now Kali could do a dozen in a few hours. The Orokin trinkets that Kelth received from it sold for good money. Another worry off the list.

Since their skills had progressed to a passable level, Kelth tried bringing up the barrier between Ordis and the code of the ship again. He had, however, tried to laugh it off nervously, which Kelth had not entertained whatsoever - even though they ended up making a compromise: to only start looking into it after they’d found all of his drives. Which, fine, after another week they were finding fewer and fewer, with more and more gaps in the serials filled in, so they were getting close to finishing that anyway - but still.

It was that moment that Ordis chose to inform them of the fact that not all the parts they’d received from Kali from the relic deal had made it to getting sold - they were disappearing.

#2.5k there we go throws this in here#been long enough time to get back into it somewhat#i can edit it more laterrrrr when i put it on the a o threeee


Challenging Database Assignment Questions and Solutions for Advanced Learners

Databases are the backbone of modern information systems, requiring in-depth understanding and problem-solving skills. In this blog, we explore complex database assignment questions and their comprehensive solutions, provided by our experts at the Database Homework Help service.

Understanding Data Normalization and Its Application

Question:

A university maintains a database to store student information, courses, and grades. The initial database design contains the following attributes in a single table: Student_ID, Student_Name, Course_ID, Course_Name, Instructor, and Grade. However, this structure leads to data redundancy and update anomalies. How can this database be normalized to the Third Normal Form (3NF), and why is this process essential?

Solution:

Normalization is the process of organizing data to reduce redundancy and improve integrity. The provided table exhibits anomalies such as duplication of student names and course details. To achieve 3NF, the database should be divided into multiple related tables:

First Normal Form (1NF): Ensure atomicity by eliminating multi-valued attributes and repeating groups. Each column should hold a single, indivisible value, and each row should be uniquely identifiable by a primary key.

Second Normal Form (2NF): Remove partial dependencies by ensuring that non-key attributes depend on the entire primary key, not just part of it.

Third Normal Form (3NF): Eliminate transitive dependencies by ensuring that non-key attributes depend only on the primary key, not on other non-key attributes.

The database should be restructured into:

Students (Student_ID, Student_Name)

Courses (Course_ID, Course_Name, Instructor)

Enrollments (Student_ID, Course_ID, Grade)

This design reduces redundancy, prevents anomalies, and enhances data integrity, ensuring efficient database management. Our experts at Database Homework Help provide solutions like these to assist students in structuring databases effectively.
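As a minimal sketch of the decomposition above, assuming SQLite through Python's built-in `sqlite3` module and a hypothetical table `Flat_Grades` that holds the original single-table design, the three 3NF tables can be created and filled with `SELECT DISTINCT` so that each fact is stored exactly once:

```python
import sqlite3

def normalize(conn: sqlite3.Connection) -> None:
    """Split the hypothetical flat Flat_Grades table into Students, Courses, Enrollments."""
    conn.executescript("""
        CREATE TABLE Students (
            Student_ID   INTEGER PRIMARY KEY,
            Student_Name TEXT NOT NULL
        );
        CREATE TABLE Courses (
            Course_ID   TEXT PRIMARY KEY,
            Course_Name TEXT NOT NULL,
            Instructor  TEXT               -- assumes one instructor per course
        );
        CREATE TABLE Enrollments (
            Student_ID INTEGER REFERENCES Students(Student_ID),
            Course_ID  TEXT    REFERENCES Courses(Course_ID),
            Grade      TEXT,
            PRIMARY KEY (Student_ID, Course_ID)  -- Grade depends on the whole key
        );
        -- Copy each fact once into the table whose key determines it.
        INSERT INTO Students SELECT DISTINCT Student_ID, Student_Name FROM Flat_Grades;
        INSERT INTO Courses  SELECT DISTINCT Course_ID, Course_Name, Instructor FROM Flat_Grades;
        INSERT INTO Enrollments SELECT Student_ID, Course_ID, Grade FROM Flat_Grades;
    """)

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Flat_Grades (Student_ID, Student_Name, Course_ID, "
                 "Course_Name, Instructor, Grade)")
    conn.executemany("INSERT INTO Flat_Grades VALUES (?, ?, ?, ?, ?, ?)", [
        (1, "Ada", "DB101", "Databases", "Codd", "A"),
        (1, "Ada", "OS201", "Operating Systems", "Ritchie", "B"),
        (2, "Alan", "DB101", "Databases", "Codd", "A"),
    ])
    normalize(conn)
    for table in ("Students", "Courses", "Enrollments"):
        print(table, conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0])
```

With the sample rows, "Ada" and the DB101 course details are stored once each instead of being repeated per enrollment. If the flat data contains conflicting course names for the same `Course_ID`, the primary key makes the insert fail, which surfaces exactly the kind of update anomaly normalization is meant to prevent.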

The Importance of ACID Properties in Transaction Management

Question:

In a banking system, multiple transactions occur simultaneously, such as fund transfers, balance updates, and loan approvals. Explain the significance of ACID properties in ensuring reliable database transactions.

Solution:

ACID (Atomicity, Consistency, Isolation, Durability) properties are crucial in maintaining data integrity and reliability in transactional systems.

Atomicity: Ensures that a transaction is executed completely or not at all. If a fund transfer fails midway, the transaction is rolled back to prevent data inconsistencies.

Consistency: Guarantees that a transaction moves the database from one valid state to another. For example, a balance deduction must match the corresponding credit in another account.

Isolation: Ensures that concurrent transactions do not interfere with each other. This prevents race conditions and ensures data accuracy.

Durability: Guarantees that once a transaction is committed, it remains stored permanently, even in case of system failures.

By implementing ACID principles, databases maintain stability, reliability, and security in multi-user environments. Our Database Homework Help services offer detailed explanations and guidance for such critical database concepts.
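To make atomicity and consistency concrete, here is a minimal sketch assuming SQLite through Python's `sqlite3` module and a hypothetical `accounts` table; it is not a production banking design, and isolation and durability guarantees depend on the engine's configuration. The credit deliberately runs before the debit so that a failing debit shows the rollback undoing the credit:

```python
import sqlite3

def make_bank() -> sqlite3.Connection:
    """Create a hypothetical accounts table with two funded accounts."""
    conn = sqlite3.connect(":memory:")
    # The CHECK constraint encodes a consistency rule: balances never go negative.
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, "
                 "balance INTEGER NOT NULL CHECK (balance >= 0))")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn

def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> None:
    """Move `amount` between accounts atomically: both updates apply or neither does."""
    with conn:  # commits on success, rolls back if an exception escapes the block
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?",
                     (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?",
                     (amount, src))

if __name__ == "__main__":
    bank = make_bank()
    transfer(bank, 1, 2, 30)
    try:
        transfer(bank, 1, 2, 500)  # debit violates the CHECK constraint
    except sqlite3.IntegrityError as err:
        print("rolled back:", err)
    print(bank.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
```

The failed 500-unit transfer leaves both balances exactly as they were after the first transfer, because the earlier credit is undone along with the rejected debit.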

Conclusion

Complex database assignments require theoretical knowledge and analytical problem-solving. Whether it is normalizing databases or ensuring transaction reliability, our expert solutions at www.databasehomeworkhelp.com provide students with the necessary expertise to excel in their academic pursuits. If you need assistance with challenging database topics, our professionals are here to help!

#database homework help#database homework help online#database homework helper#help with database homework#education#university


Maximizing Data Integrity During Firebird to Oracle Migration with Ask On Data

Migrating from one database system to another is a complex task that requires careful planning and execution. One of the most critical aspects of Firebird to Oracle migration is ensuring that the integrity of the data is preserved throughout the process. Data integrity encompasses the accuracy, consistency, and reliability of data, and any disruption in these factors during migration can lead to data loss, corruption, or inconsistency in the target system. Fortunately, Ask On Data is a powerful tool that helps ensure a seamless transition while maintaining the highest standards of data integrity.

The Importance of Data Integrity in Firebird to Oracle Migration

When migrating from Firebird to Oracle, businesses rely on the integrity of their data to maintain operational efficiency, comply with regulations, and avoid costly errors. Any discrepancies during the migration process can have far-reaching consequences, including inaccurate reports, faulty business decisions, and poor customer experiences.

In Firebird to Oracle migrations, the challenge lies in the differences between the two database management systems. Firebird and Oracle have distinct data types, SQL dialects, and storage mechanisms. As a result, without the right tools and techniques, migrating data between them can be prone to errors. Therefore, ensuring data integrity during the migration process is paramount.

Key Challenges to Data Integrity in Firebird to Oracle Migration

Schema Differences: Firebird and Oracle have different data types, constraints, and indexing mechanisms. These differences can lead to data mismatches or loss if not properly addressed.

Data Transformation: Data may need to be transformed to fit the structure of the Oracle database, which can involve changing data types, reformatting fields, or splitting and merging tables. Any mistake in this transformation can affect the accuracy of the data.

Data Size and Volume: Migrating large volumes of data increases the risk of data corruption, particularly if the migration process is not carefully monitored or optimized.

Data Synchronization: During the migration process, it is crucial that the data in both systems remain synchronized to avoid conflicts or discrepancies between Firebird and Oracle databases.
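Schema differences are easiest to reason about as an explicit type map. The sketch below is an illustrative assumption, not Ask On Data's mapping: a hand-written Python table from common Firebird column types to Oracle equivalents, to be checked against the Firebird and Oracle documentation for the versions you actually run. Oracle has no TIME type, so the choice made for it here is a judgment call, and any type the table does not cover raises an error instead of being guessed:

```python
import re

# Illustrative Firebird -> Oracle mapping; review it for your own schema and versions.
SIMPLE_TYPES = {
    "SMALLINT": "NUMBER(5)",               # 16-bit integer
    "INTEGER": "NUMBER(10)",               # 32-bit integer
    "BIGINT": "NUMBER(19)",                # 64-bit integer
    "FLOAT": "BINARY_FLOAT",
    "DOUBLE PRECISION": "BINARY_DOUBLE",
    "DATE": "DATE",                        # Oracle DATE also stores a time of day
    "TIME": "INTERVAL DAY(0) TO SECOND",   # Oracle has no TIME type; an assumption
    "TIMESTAMP": "TIMESTAMP",
    "BOOLEAN": "NUMBER(1)",                # older Oracle releases lack a SQL BOOLEAN
    "BLOB SUB_TYPE TEXT": "CLOB",
    "BLOB SUB_TYPE BINARY": "BLOB",
}

# Types that carry a length or precision, e.g. VARCHAR(100) or NUMERIC(10,2).
PARAM_TYPES = {"CHAR": "CHAR", "VARCHAR": "VARCHAR2", "NUMERIC": "NUMBER", "DECIMAL": "NUMBER"}

_PARAM_RE = re.compile(r"^([A-Z]+)\s*\(([^)]*)\)$")

def map_firebird_type(fb_type: str) -> str:
    """Return an Oracle column type for a Firebird column type string."""
    normalized = " ".join(fb_type.upper().split())  # collapse case and whitespace
    if normalized in SIMPLE_TYPES:
        return SIMPLE_TYPES[normalized]
    match = _PARAM_RE.match(normalized)
    if match and match.group(1) in PARAM_TYPES:
        args = match.group(2).replace(" ", "")
        return f"{PARAM_TYPES[match.group(1)]}({args})"
    # Fail loudly: an unmapped type needs a human decision, not a silent default.
    raise ValueError(f"No Oracle mapping defined for Firebird type {fb_type!r}")

if __name__ == "__main__":
    for t in ("varchar(100)", "NUMERIC(10, 2)", "double precision", "BIGINT"):
        print(t, "->", map_firebird_type(t))
```

Raising on unknown types is the design choice that matters for integrity: a migration that silently widens or truncates a column is exactly the kind of mismatch listed above.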

How Ask On Data Ensures Data Integrity

Ask On Data provides an intelligent, automated solution to address the common challenges of Firebird to Oracle migration. Here's how it maximizes data integrity:

Automated Data Mapping: One of the first steps in ensuring data integrity when migrating from Firebird to Oracle is mapping the data from the source Firebird database to the target Oracle system. Ask On Data automates this mapping process, ensuring that data types, relationships, and schema structures are aligned correctly, minimizing human error.

Data Transformation and Validation: Ask On Data’s built-in transformation engine handles complex data conversions, such as changing Firebird’s data types into compatible Oracle formats. The tool also validates the data at each transformation step, ensuring that no data is lost or corrupted in the process. With continuous validation, you can be confident that the data being transferred is accurate.

Real-Time Monitoring and Alerts: During Firebird to Oracle migration, it’s crucial to monitor the migration process continuously to detect and resolve any potential issues early on. Ask On Data offers real-time monitoring features that provide instant alerts for any discrepancies or errors, allowing for quick intervention and resolution.

Data Quality Assurance: Ask On Data incorporates robust data quality checks to ensure that only high-quality, consistent data is migrated. It includes tools to detect and address any missing or incomplete data, thus ensuring that your Oracle database is populated with reliable and clean data.

Rollback and Recovery Options: If an issue does arise, Ask On Data provides rollback capabilities that restore your data to its original state in the Firebird database. This minimizes risks and protects data integrity throughout the migration process.
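Ask On Data's internal checks are not described here, but one generic way to confirm that nothing was lost or altered in transit is to compare a row count and a checksum per table on both sides. A minimal sketch, assuming Python DB-API connections, with two SQLite in-memory databases standing in for Firebird and Oracle; `table_fingerprint` and `verify_copy` are hypothetical helpers. In a real cross-engine check, values such as decimals and timestamps must first be normalized to identical Python types, or the hashes will differ even when the data matches:

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 over every row read in key order)."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated into the SQL, so pass only trusted names.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def verify_copy(source, target, table: str, key: str) -> bool:
    """True when both sides hold the same number of rows with identical values."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)

if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (src, dst):
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
        conn.executemany("INSERT INTO customers VALUES (?, ?)",
                         [(1, "a@example.com"), (2, "b@example.com")])
    print("match:", verify_copy(src, dst, "customers", "id"))
    dst.execute("UPDATE customers SET email = 'corrupted' WHERE id = 2")
    print("match after corruption:", verify_copy(src, dst, "customers", "id"))
```

A fingerprint tells you *whether* a table differs, cheaply; locating *which* rows differ needs a key-by-key comparison.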

Conclusion

Maximizing data integrity during Firebird to Oracle migration is essential for maintaining the reliability and accuracy of your business data. Ask On Data is a comprehensive solution that automates and optimizes the entire migration process while ensuring that data integrity is maintained at every stage. By leveraging automated data mapping, transformation, validation, and real-time monitoring, Ask On Data ensures a seamless migration experience, minimizing risks and ensuring that your new Oracle database is accurate, consistent, and ready for use.


How XML Conversion Helps in Data Migration Projects

When it comes to data migration, businesses often need to transfer large amounts of information from one system to another. Whether it's moving data between old software and new software, or shifting information from one platform to another, the process can be tricky. One tool that makes this task easier is XML (eXtensible Markup Language) conversion.

But what exactly is XML, and how does it help in data migration projects? Let’s break it down in simple terms.

What is XML?

XML is a data format used to store and transport data. It’s like a digital container that holds information in a way that is easy for both people and machines to read. Unlike a file that stores data in an application-specific layout (like a spreadsheet or a word-processor document), XML stores data as plain text, using tags to identify different pieces of information.

For instance, consider a simple XML file like this:

```xml
<employee>
    <name>John Doe</name>
    <position>Software Developer</position>
    <department>IT</department>
</employee>
```

This format lets data move easily between different systems because it doesn’t depend on any particular software program to read the content.
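Because the tags name each field, a program can read the example above with nothing more than a standard XML parser. A minimal sketch using Python's standard-library `xml.etree.ElementTree`; the function name `employee_to_dict` is illustrative:

```python
import xml.etree.ElementTree as ET

EMPLOYEE_XML = """
<employee>
    <name>John Doe</name>
    <position>Software Developer</position>
    <department>IT</department>
</employee>
"""

def employee_to_dict(xml_text: str) -> dict[str, str]:
    """Turn each child tag of <employee> into a field-name -> value pair."""
    root = ET.fromstring(xml_text.strip())
    return {child.tag: (child.text or "").strip() for child in root}

record = employee_to_dict(EMPLOYEE_XML)
print(record)
# {'name': 'John Doe', 'position': 'Software Developer', 'department': 'IT'}
```

The resulting dictionary can then be written into whatever structure the target system expects, such as a database row or an API payload.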

Why XML is Useful in Data Migration

Data migration often involves moving data between systems that may not use the same software or data formats. If two systems aren't compatible with each other, it can be hard to move data from one to the other. This is where XML comes in. It acts as a universal "translator" between different systems, making it simpler to map data from one format to another.

XML conversion can help with data migration in the following ways:

1. Standardized Format for Data

XML offers a standardized way to represent data. No matter what system you're using, XML can format data in a way that is both readable and shareable. When migrating data from one system to another, XML helps by creating common ground for different platforms to "understand" the data.

For instance, if you're moving customer data from an old system to a new CRM (Customer Relationship Management) system, XML can structure that information so the new system can interpret it easily.

2. Data Mapping Made Easy

Data migration typically involves mapping fields from the old system to the new one. Since XML is flexible and well organized, it makes this mapping process much easier. You can map the old data fields to the new ones with fewer compatibility issues. XML tags help you identify exactly where each piece of information should go, reducing errors and saving time.

For example, a customer’s email address may be stored under "contact_email" in one database and simply as "email" in another. With XML, you can define tags that match both systems' structures and map them to each other seamlessly.
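The "contact_email" to "email" mapping can be expressed as a small lookup table applied to the XML tags. A minimal sketch using Python's `xml.etree.ElementTree`; the `FIELD_MAP` entries (including the extra `cust_name` field) and the `<customers>`/`<customer>` layout are hypothetical:

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from the old system's tag names to the new system's.
FIELD_MAP = {"contact_email": "email", "cust_name": "name"}

def rename_fields(xml_text: str, field_map: dict[str, str]) -> str:
    """Rename the child tags of every <customer> record according to field_map."""
    root = ET.fromstring(xml_text)
    for customer in root.iter("customer"):
        for field in customer:
            field.tag = field_map.get(field.tag, field.tag)  # unmapped tags are kept
    return ET.tostring(root, encoding="unicode")

old = ("<customers><customer><cust_name>Ana</cust_name>"
       "<contact_email>ana@example.com</contact_email></customer></customers>")
print(rename_fields(old, FIELD_MAP))
# <customers><customer><name>Ana</name><email>ana@example.com</email></customer></customers>
```

Keeping the mapping in data rather than code means a new field pairing is a one-line change to `FIELD_MAP`.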

3. Handling Complex Data Types

Many systems store complex data like lists, tables, or nested records. XML is well suited to these kinds of data because it supports hierarchical structures. This means it can store information in multiple layers (parent-child relationships) without losing any important details.

Imagine you’re transferring product data that includes a list of product variants (sizes, colors, and so on). Using XML, you can structure this data in a way that is both easy to transfer and easy for the new system to read.
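Here is a minimal sketch of that nesting with Python's `xml.etree.ElementTree`; the `product_to_xml` function, the `sku` attribute, and the variant fields are illustrative assumptions. Each variant becomes a child of its product, so the parent-child relationship travels with the data:

```python
import xml.etree.ElementTree as ET

def product_to_xml(sku: str, name: str, variants: list[dict[str, str]]) -> str:
    """Nest each variant under its product so the parent-child link is preserved."""
    product = ET.Element("product", sku=sku)
    ET.SubElement(product, "name").text = name
    variants_el = ET.SubElement(product, "variants")
    for v in variants:
        ET.SubElement(variants_el, "variant", size=v["size"], color=v["color"])
    return ET.tostring(product, encoding="unicode")

print(product_to_xml("TS-01", "T-shirt",
                     [{"size": "M", "color": "red"}, {"size": "L", "color": "blue"}]))
```

The receiving system can walk `product/variants/variant` to rebuild the list without guessing which variant belongs to which product.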

4. Improved Data Integrity

During data migration, there is always a risk of data getting lost, altered, or corrupted. Because XML is a text-based format, it helps maintain data integrity during the transfer process. The structure of XML files keeps different data fields clearly separated, making errors less likely.

Furthermore, XML supports validation through schemas, such as XML Schema Definition (XSD) files. These are blueprints used to check that the data is formatted correctly before it’s migrated.

5. Compatibility with Various Systems

One of the biggest challenges in data migration is dealing with incompatible systems. XML isn't tied to any particular software or platform, making it highly versatile. Whether you are moving data between a database, a website, or an enterprise resource planning (ERP) system, XML can act as a bridge to make sure the data flows smoothly from one system to the next.

For example, you may need to move data from a legacy system (which may be outdated or have limited export options) into a modern cloud-based platform. XML can serve as the intermediary format to help ensure the data is readable and usable in the new system.

6. Automation of Data Transfer

Data migration often involves repetitive tasks, especially when moving large amounts of information. Since XML is easy to process programmatically, you can automate much of the migration process. This can save time and reduce the risk of human error.

Many tools and software packages are available that can automate reading, converting, and transferring data in XML format. This is especially useful for large-scale migrations, where doing everything manually would be time-consuming and error-prone.
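As a small illustration of that kind of automation, the sketch below converts every row of a CSV export into an XML record using only Python's standard library (`csv`, `io`, `xml.etree.ElementTree`). The `csv_to_xml` function and the default `records`/`record` tag names are hypothetical, and it assumes the CSV headers are valid XML tag names:

```python
import csv
import io
import xml.etree.ElementTree as ET

def csv_to_xml(csv_text: str, root_tag: str = "records", row_tag: str = "record") -> str:
    """Convert every CSV row into an XML element whose children are the columns."""
    root = ET.Element(root_tag)
    for row in csv.DictReader(io.StringIO(csv_text)):
        record = ET.SubElement(root, row_tag)
        for column, value in row.items():
            # Assumes each header is a valid XML name (no spaces, not starting with a digit).
            ET.SubElement(record, column).text = value
    return ET.tostring(root, encoding="unicode")

export = "id,email\n1,a@example.com\n2,b@example.com\n"
print(csv_to_xml(export))
```

The same loop handles two rows or two million, which is where automation pays off over manual conversion; for very large files, a streaming writer would avoid holding the whole tree in memory.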

7. Flexibility for Future Updates

Data migration isn't always a one-time event. As companies grow and change, they may need to migrate or update their data again in the future. XML's flexibility makes it easy to adapt to future changes, whether you are moving to a new version of a system or integrating additional data sources.

For example, if your business starts using a new database system with slightly different data requirements, you can modify the XML tags and structure to suit the new system’s needs without starting from scratch.

Conclusion

XML conversion is a powerful tool in data migration projects. Its ability to standardize data, support complex data structures, and simplify transfers between different systems helps businesses streamline the migration process. Whether you are moving customer data, product data, or financial records, XML helps ensure that your data is migrated accurately and efficiently, preserving both data integrity and compatibility with the new system.

By using XML, companies can simplify the often complicated task of data migration, save time, reduce errors, and make sure their data is ready to be used in a new system or platform. If you are planning a data migration project, consider incorporating XML conversion to make the process smoother and more reliable.


Database Migration Process: A Step-by-Step Guide at Q-Migrator

The database migration process at Quadrant involves transferring data from one storage system to another, often to a new database or platform. It's a crucial step for businesses undergoing various IT initiatives, such as:

Upgrading to a more modern database system

Moving to a cloud-based storage solution

Consolidating multiple databases

Here's a breakdown of the typical database migration process:

Planning and Assessment (Discovery Phase):

Define Goals and Scope: Clearly outline the objectives of the migration. Are you aiming for a complete overhaul, selective data transfer, or a cloud migration?

Source Database Analysis: Meticulously examine the source database schema and data. Identify data types, relationships between tables, and any inconsistencies. Tools for schema analysis and data profiling can be helpful.

Target Platform Selection: Choose the target database platform considering factors like scalability, security, and compatibility with your existing infrastructure.

Migration Strategy Development: Formulate a well-defined approach for data transfer. This includes deciding whether the migration will be full or selective, how to minimize downtime, and how to roll back if something goes wrong.
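For the source database analysis step, even a simple profile of each column (declared type, primary-key flag, NULL count, distinct values) reveals inconsistencies early. A minimal sketch, assuming SQLite through Python's `sqlite3` module, where column metadata comes from `PRAGMA table_info`; other engines expose the same metadata through their own catalogs (for example Oracle's `ALL_TAB_COLUMNS` view or Firebird's `RDB$RELATION_FIELDS` table). The `profile_table` helper is hypothetical:

```python
import sqlite3

def profile_table(conn: sqlite3.Connection, table: str) -> list[dict]:
    """Report each column's declared type, PK flag, NULL count and distinct count."""
    report = []
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    for _, name, col_type, _, _, pk in conn.execute(f"PRAGMA table_info({table})"):
        nulls, distinct = conn.execute(
            f'SELECT SUM("{name}" IS NULL), COUNT(DISTINCT "{name}") FROM {table}'
        ).fetchone()
        report.append({"column": name, "type": col_type, "pk": bool(pk),
                       "nulls": nulls or 0,  # SUM over an empty table is NULL
                       "distinct": distinct})
    return report

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "a@x.org"), (2, None), (3, "a@x.org")])
    for column in profile_table(conn, "customers"):
        print(column)
```

In the sample, the email column shows one NULL and only one distinct value across two non-NULL rows, which flags both missing data and a likely duplicate before any data is moved.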

Data Preparation and Cleaning (Preparation Phase):

Data Cleaning: Address inconsistencies and errors within the source database. This involves removing duplicates, fixing erroneous entries, and ensuring data adheres to defined formats. Techniques like data scrubbing and deduplication can be employed.

Data Validation: Implement procedures to validate data accuracy and completeness. Run data quality checks and establish data integrity rules.

Schema Mapping: Address discrepancies between source and target schema. This may involve mapping data types, handling missing values, and adapting table structures to seamlessly fit the target platform.
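The cleaning and validation steps above can be combined in a single pass over the source rows. A minimal sketch in plain Python; the `clean_and_validate` function, the rule that every customer needs a name, the loose email pattern, and the choice of normalized email as the deduplication key are all hypothetical rules chosen for illustration:

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose format check

def clean_and_validate(rows: list[dict]) -> tuple[list[dict], list[str]]:
    """Deduplicate customer rows and collect rule violations before migration."""
    seen: set[str] = set()
    clean: list[dict] = []
    problems: list[str] = []
    for i, row in enumerate(rows):
        email = (row.get("email") or "").strip().lower()  # normalize before comparing
        if not row.get("name"):
            problems.append(f"row {i}: missing name")
            continue
        if not EMAIL_RE.match(email):
            problems.append(f"row {i}: invalid email {row.get('email')!r}")
            continue
        if email in seen:  # duplicate of an earlier row: keep the first occurrence
            continue
        seen.add(email)
        clean.append({**row, "email": email})
    return clean, problems

if __name__ == "__main__":
    rows = [{"name": "Ana", "email": "Ana@Example.com"},
            {"name": "Ana", "email": "ana@example.com "},
            {"name": "", "email": "x@y.org"},
            {"name": "Bo", "email": "not-an-email"}]
    clean, problems = clean_and_validate(rows)
    print(clean)
    print(problems)
```

Reporting violations instead of silently dropping them matters: the problem list is what someone reviews and fixes at the source before the real migration run.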

Migration Execution (Transfer Phase):

Data Extraction: Utilize migration tools to extract data from the source database. Tools often provide options for filtering data based on your migration strategy.

Data Transformation: Transform the extracted data to fit the target database schema. This may involve data type conversions, handling null values, and applying necessary transformations for compatibility.

Data Loading: Load the transformed data into the target database. Techniques like bulk loading can be utilized to optimize performance during this stage.
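The extract, transform, and load steps can be sketched with Python's built-in sqlite3 module standing in for both the source and the target database. The table names (legacy_customers, customers), the column mapping, and the batch size are hypothetical, and batched executemany() inserts are only a simple stand-in for the bulk-load utilities a real target platform would offer.

```python
import sqlite3

# Hypothetical mapping: source column -> (target column, conversion function)
COLUMN_MAP = {
    "cust_id": ("id", int),
    "cust_name": ("name", lambda v: v.strip() if v else None),
    "balance_cents": ("balance", lambda v: None if v is None else v / 100),
}


def migrate(source, target, batch_size=500):
    """Copy rows from source.legacy_customers into target.customers in batches."""
    src_cols = list(COLUMN_MAP)
    tgt_cols = [COLUMN_MAP[c][0] for c in src_cols]
    placeholders = ", ".join("?" for _ in tgt_cols)
    insert_sql = f"INSERT INTO customers ({', '.join(tgt_cols)}) VALUES ({placeholders})"

    # Extract: stream rows from the source instead of loading them all at once
    cursor = source.execute(f"SELECT {', '.join(src_cols)} FROM legacy_customers")
    migrated = 0
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        # Transform: run each value through its column's conversion function
        converted = [
            tuple(COLUMN_MAP[col][1](value) for col, value in zip(src_cols, row))
            for row in batch
        ]
        # Load: insert the whole batch and commit it as one transaction
        with target:
            target.executemany(insert_sql, converted)
        migrated += len(converted)
    return migrated


# Demo with in-memory databases standing in for the real source and target
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE legacy_customers (cust_id TEXT, cust_name TEXT, balance_cents INTEGER)")
source.executemany(
    "INSERT INTO legacy_customers VALUES (?, ?, ?)",
    [("1", " Ada ", 12345), ("2", "Bob", None)],
)
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance REAL)")
print(migrate(source, target, batch_size=1))  # 2
print(target.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'Ada', 123.45), (2, 'Bob', None)]
```

Committing per batch keeps each transaction small; the trade-off is that a failure midway leaves earlier batches loaded, which is one reason the rollback strategy below matters.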

Testing and Validation (Verification Phase):

Data Verification: Verify the completeness and accuracy of the migrated data by comparing it to the source data. Data integrity checks, data profiling tools, and custom queries can be used for this purpose.

Application Testing: If applications rely on the migrated database, thoroughly test their functionality to ensure seamless interaction with the new platform. This helps identify and address any potential compatibility issues.
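One common way to check completeness and accuracy is to compare row counts and a content fingerprint between source and target. The sketch below, again using sqlite3 as a stand-in, hashes rows in a deterministic order. The table and column names are hypothetical, and because values must match exactly, it assumes both sides share the same schema (or that transformations have already been accounted for).

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, columns, order_by):
    """Return (row_count, sha256 hex digest) for the given columns of a table.

    Table and column names are trusted constants here, not user input.
    """
    digest = hashlib.sha256()
    count = 0
    query = f"SELECT {', '.join(columns)} FROM {table} ORDER BY {order_by}"
    for row in conn.execute(query):
        digest.update(repr(row).encode("utf-8") + b"\n")  # repr keeps types distinguishable
        count += 1
    return count, digest.hexdigest()


def verify(source, target, table, columns, order_by):
    """True when both databases hold the same rows for the given columns."""
    return table_fingerprint(source, table, columns, order_by) == table_fingerprint(
        target, table, columns, order_by
    )


src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Bob")])
dst.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada")])  # one row missing
print(verify(src, dst, "customers", ["id", "name"], "id"))  # False
```

On very large tables, teams often fingerprint per partition or per key range instead, so a mismatch points to a specific slice of the data.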

Deployment and Cutover (Go-Live Phase, optional; this phase may not apply to every migration):

Application Switch-Over: Update applications to point to the target database instead of the source database. This marks the transition to using the new platform.

Decommissioning (Optional): If the migration is a complete replacement, the source database can be decommissioned after successful cutover and a period of stability with the new platform.

Monitoring and Optimization: Continuously monitor the migrated database for performance and stability. Optimize database configurations and queries as needed to ensure efficient operation.

Additional Considerations for Success:

Downtime Minimization: Plan for minimal disruption to ongoing operations during the migration. This may involve scheduling the migration during off-peak hours or utilizing techniques like data replication.

Security: Ensure data security throughout the migration process. Implement robust security measures to protect sensitive data during transfer and storage.

Rollback Strategy: Have a plan to revert to the source database in case of issues during or after the migration. This provides a safety net in case unforeseen problems arise.

Documentation: Document the entire migration process for future reference. This includes the chosen tools, strategies, encountered challenges, and solutions implemented.

By following a well-defined process and considering these additional factors, you can ensure a smooth and successful database migration.


The Role of Internet Routers in Modern Connectivity

Internet routers play a critical role in how we connect, interact, and access information in an age of digital transformation, when the internet has become the lifeline of modern society. These modest devices are the backbone of our digital connectivity, ensuring that data packets move smoothly between our devices and the wider internet.

At its heart, an internet router acts as a gateway to the digital world. It receives data packets from our devices and chooses the most efficient path for those packets to take. Whether you're sending an email, streaming a video, or joining a video call, your router is the unsung hero that makes it all possible.

Routers specialize in forwarding data packets. A packet is a small unit of information that carries data along with source and destination addresses and other control information. Packets are created whenever we send requests to websites or transfer data between devices. Routers determine the most effective path for each packet to take toward its destination, a journey that frequently involves many hops between routers.

The router is also central to your local network. It typically assigns each connected device its own private IP address (usually via DHCP). It then uses Network Address Translation (NAT) to let many devices share a single public IP address: while all devices on your local network share one external IP address (the one visible to the rest of the world), each device keeps its own local IP address, and the router rewrites packet addresses as traffic passes between the two.
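To make the NAT idea concrete, here is a toy model of port-based translation (often called PAT or NAPT): each outgoing connection from a private address and port is mapped to a unique port on the single public address, and replies are translated back. The addresses, the starting port, and the ToyNat class itself are illustrative assumptions; real routers also track protocols, timeouts, and connection state.

```python
class ToyNat:
    """Minimal port-address translation table (illustrative only)."""

    def __init__(self, public_ip, first_port=40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_out(self, private_ip, private_port):
        """Rewrite an outgoing packet's source to the shared public address."""
        key = (private_ip, private_port)
        if key not in self.outbound:
            self.outbound[key] = self.next_port
            self.inbound[self.next_port] = key
            self.next_port += 1
        return self.public_ip, self.outbound[key]

    def translate_in(self, public_port):
        """Map a reply arriving at the public address back to the local device."""
        return self.inbound.get(public_port)  # None: no mapping, so the packet is dropped


nat = ToyNat("203.0.113.7")
print(nat.translate_out("192.168.1.10", 51000))  # ('203.0.113.7', 40000)
print(nat.translate_out("192.168.1.11", 51000))  # ('203.0.113.7', 40001)
print(nat.translate_in(40001))                   # ('192.168.1.11', 51000)
```

Note that two devices can use the same local port (51000 here) because the router tells them apart by the public port it assigned.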

When packets must travel from devices on your local network to destinations outside it (for example, websites on the internet), the router is responsible for forwarding them toward their intended destination. To do this, it consults its routing table, which describes how to reach various networks.

Routers make forwarding decisions based on each packet's destination IP address. To choose the packet's next hop, the router compares this address against the entries in its routing table and uses the most specific matching entry (the longest prefix match). The next hop is often another router, which repeats the process until the packet reaches its destination.
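The routing-table lookup can be sketched with Python's standard ipaddress module. Real routers use specialized data structures and hardware, but the rule is the same: among all entries whose prefix contains the destination, pick the longest (most specific) one. The table entries and next-hop names below are made up for illustration.

```python
import ipaddress

# Hypothetical routing table: (destination prefix, next hop)
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "isp-gateway"),      # default route
    (ipaddress.ip_network("10.0.0.0/8"), "corp-router"),
    (ipaddress.ip_network("10.1.0.0/16"), "branch-router"),
    (ipaddress.ip_network("192.168.1.0/24"), "local-lan"),
]


def next_hop(destination):
    """Return the next hop for a destination address using longest-prefix match."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    # The default route matches every IPv4 address, so `matches` is never empty here
    _, best_hop = max(matches, key=lambda entry: entry[0].prefixlen)
    return best_hop


print(next_hop("10.1.2.3"))       # branch-router
print(next_hop("10.9.9.9"))       # corp-router
print(next_hop("93.184.216.34"))  # isp-gateway
```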

Routers frequently include built-in security mechanisms such as firewalls, which help prevent unwanted access and other security threats to your local network. Based on configured rules, a firewall filters incoming and outgoing packets, blocking or permitting specific types of traffic.

Many routers provide Quality of Service (QoS) capabilities that let users prioritize specific types of traffic. For example, you might prioritize video streaming over background downloads for a smoother viewing experience.
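A simplified picture of QoS prioritization is a strict-priority queue: packets in higher-priority classes are always sent before lower-priority ones, and packets within a class keep their arrival order. The traffic classes and priority values are assumptions for illustration; real routers typically combine several queueing and shaping techniques so that low-priority traffic is not starved completely.

```python
import heapq
import itertools

# Hypothetical traffic classes: lower number = higher priority
PRIORITY = {"video": 0, "web": 1, "background": 2}


class QosQueue:
    """Strict-priority packet queue (a toy model of router QoS)."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, traffic_class, packet):
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._arrival), packet))

    def dequeue(self):
        """Return the next packet to transmit, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, packet = heapq.heappop(self._heap)
        return packet


q = QosQueue()
q.enqueue("background", "backup-chunk-1")
q.enqueue("video", "frame-1")
q.enqueue("background", "backup-chunk-2")
q.enqueue("video", "frame-2")
print([q.dequeue() for _ in range(4)])
# ['frame-1', 'frame-2', 'backup-chunk-1', 'backup-chunk-2']
```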

In conclusion, internet routers serve as the hidden backbone of modern connectivity. They help data packets travel between our devices and the internet, manage local network addresses, provide security, and improve the quality of our online experiences. Routers are the unassuming devices that give us access to the vast resources of the internet, and they have become an essential part of everyday life. Understanding their purpose helps us make informed decisions about our network setup and appreciate these inconspicuous yet important devices.

Visit our website https://www.unlimitedwirelessplans.net/.


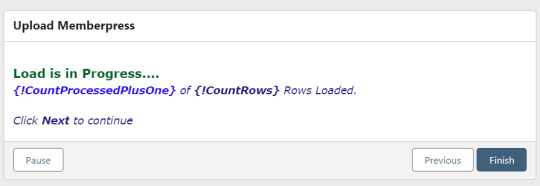

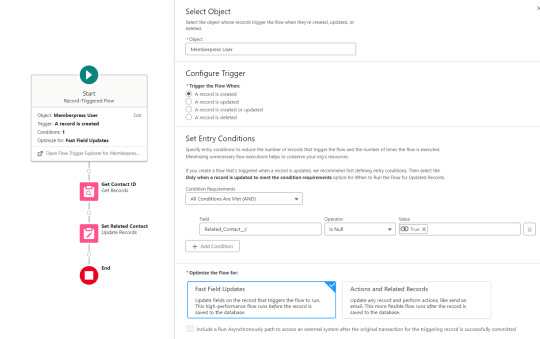

Enabling CSV data uploads via a Salesforce Screen Flow

This is a tutorial for how to build a Salesforce Screen Flow that leverages this CSV to records lightning web component to facilitate importing data from another system via an export-import process.

My colleague Molly Mangan developed the plan for deploying this to handle nonprofit organization CRM import operations, and she delegated a client buildout to me. I’ve built a few iterations since.

I prefer utilizing a custom object as the import target for this Flow. You can choose to upload data to any standard or custom object, but an important caveat with the upload LWC component is that the column headers in the uploaded CSV file have to match the API names of corresponding fields on the object. Using a custom object enables creating field names that exactly match what comes out of the upstream system. My goal is to enable a user process that requires zero edits, just simply download a file from one system and upload it to another.

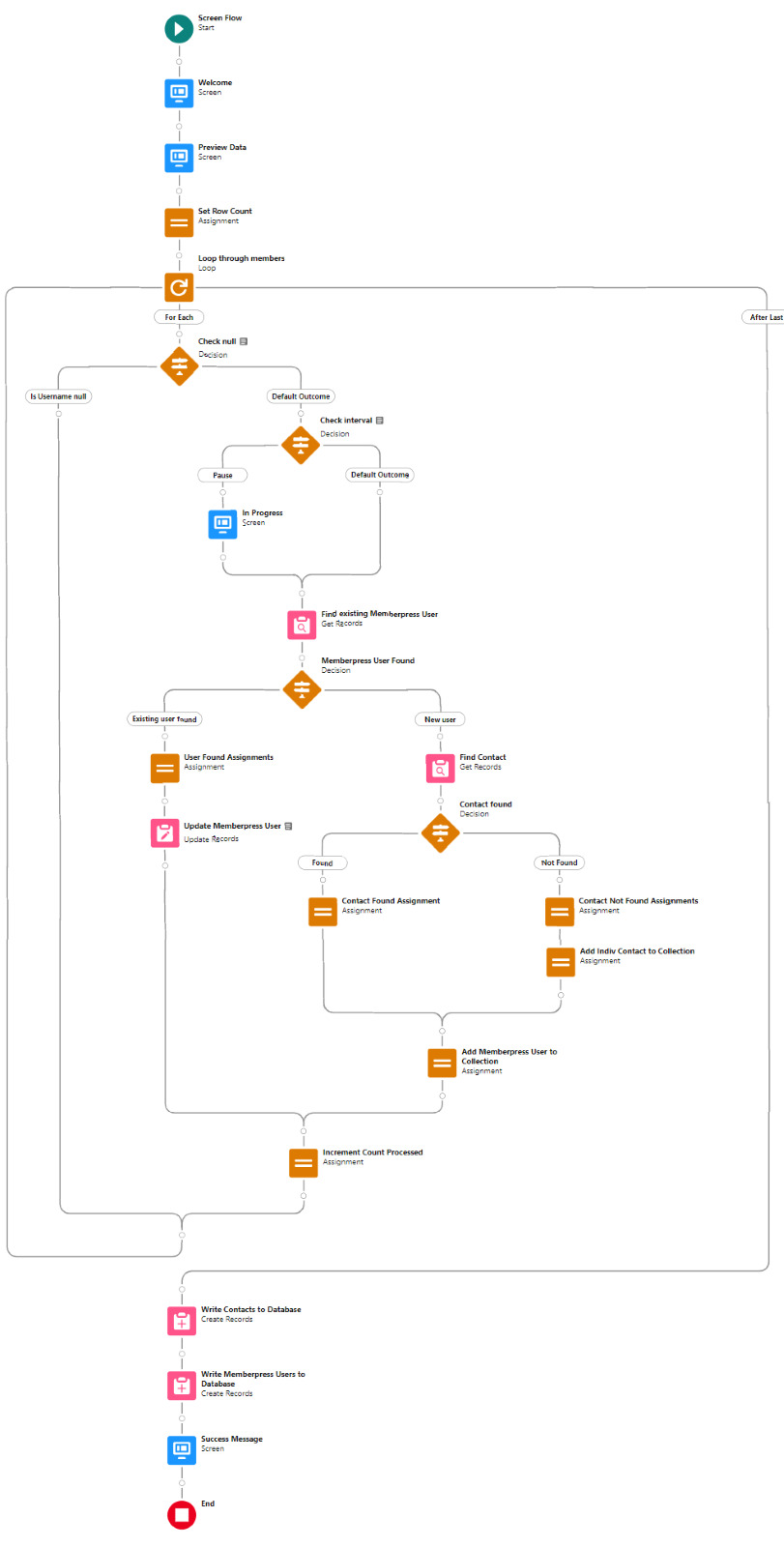

The logic can be as sophisticated as you need. The following is a relatively simple example built to transfer data from Memberpress to Salesforce. It enables users to upload a list that the Flow then parses to find or create matching contacts.

Flow walkthrough

To build this Flow, you have to first install the UnofficialSF package and build your custom object.

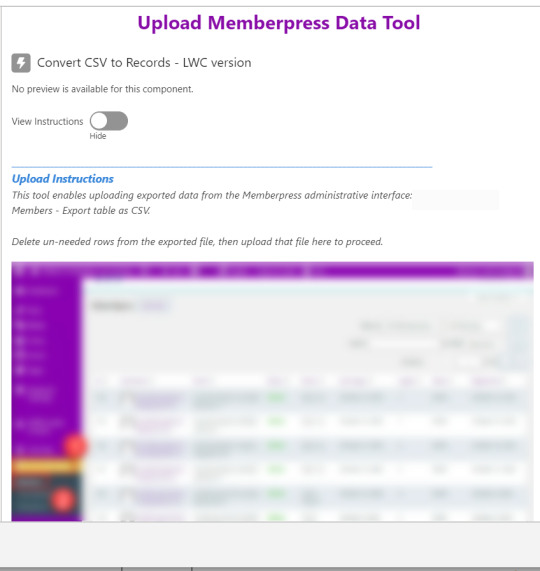

The Welcome screen greets users with a simple interface inviting them to upload a file or view instructions.

Toggling on the instructions exposes a text block with a screenshot that illustrates where to click in Memberpress to download the member file.

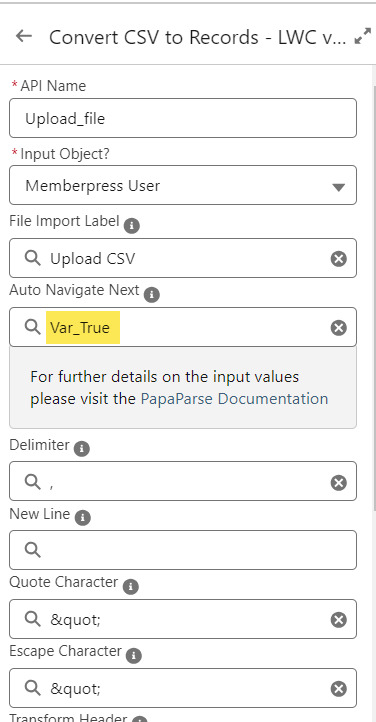

Note that the LWC component’s Auto Navigate Next option utilizes a Constant called Var_True, which is set to the Boolean value True. It’s a known issue that just typing in “True” doesn’t work here. With this setting enabled, a user is automatically advanced to the next screen upon uploading their file.

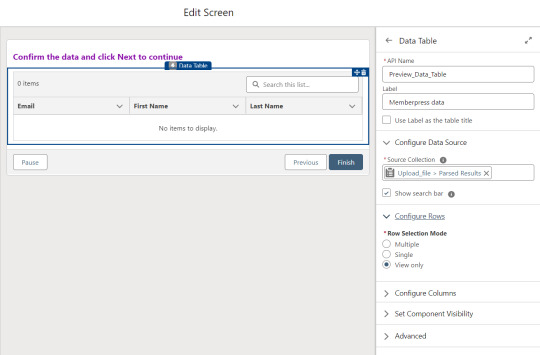

On the screen following the file upload, a Data Table component shows a preview of up to 1,500 records from the uploaded CSV file. After the user confirms that the data looks right, they click Next to continue.

Before entering the first loop, there’s an Assignment step to set the CountRows variable.

Here’s how the Flow looks so far.

With the CSV data now uploaded and confirmed, it’s time to start looping through the rows.

Because I’ve learned that a CSV file can sometimes unintentionally include some problematic blank rows, the first step after starting the loop is to check for a blank value in a required field. If username is null then the row is blank and it skips to the next row.

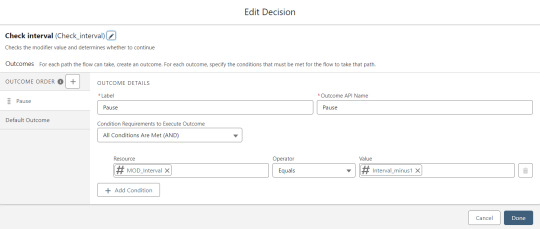

The next step is another decision, which implements a neat trick that Molly devised. Each CSV row will need to query the database and might need to write to it, but the governor limit of 100 SOQL queries per transaction seriously constrains how many rows can be processed at one time. Adding a pause to the Flow by displaying another screen to the user causes the transaction in progress to be committed, and the governor limits are reset. The downside is that your user will need to click Next to continue every 20 or 50 or so rows, but that’s better than instructing them to limit their upload to that many rows.
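The effect of the pause screen can be modeled as processing the rows in chunks of Interval_value, with a pause between chunks. This Python sketch is only an analogy, not Flow configuration: process_row and pause are hypothetical callbacks, and it does not reproduce the exact MOD-based decision the Flow uses, only the resulting behavior of pausing after every full chunk.

```python
def process_in_chunks(rows, interval_value, process_row, pause):
    """Call process_row for each row, calling pause() after every interval_value rows.

    In the Flow, the pause is a screen; each pause commits the work done so far,
    so governor limits apply to one chunk at a time rather than to the whole upload.
    """
    for index, row in enumerate(rows, start=1):
        process_row(row)
        # Pause after each full chunk, but not after the very last row
        if index % interval_value == 0 and index < len(rows):
            pause(index)


pauses = []
process_in_chunks(list(range(45)), 20, process_row=lambda r: None, pause=pauses.append)
print(pauses)  # [20, 40]
```

The chunk size is a balance: larger chunks mean fewer clicks for the user but more queries per transaction, so it has to stay comfortably below what the per-row queries would consume against the limit.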

With those first two checks done, the Flow queries the Memberpress object looking for a matching User ID. If a match is found, the record has been uploaded before. The only possible change we’re worried about for existing records is the Memberships field, so that field gets updated on the record in the database. The Count_UsersFound variable is also incremented.

On the other side of the decision, if no Memberpress User record match is found then we go down the path of creating a new record, which starts with determining if there’s an existing Contact. A simple match on email address is queried, and Contact duplicate detection rules have been set to only Report (not Alert). If Alert is enabled and a duplicate matching rule gets triggered, then the Screen Flow will hit an error and stop.

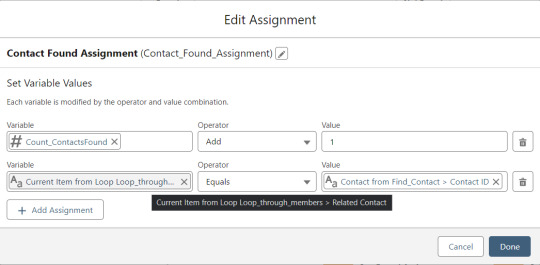

If an existing Contact is found, then that Contact ID is written to the Related Contact field on the Memberpress User record and the Count_ContactsFound variable is incremented. If no Contact is found, then the Contact_Individual record variable is used to stage a new Contact record and the Count_ContactsNotFound variable is incremented.

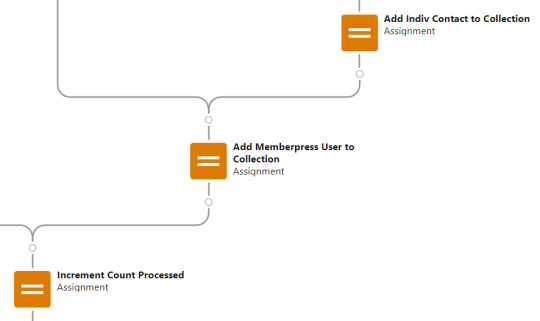

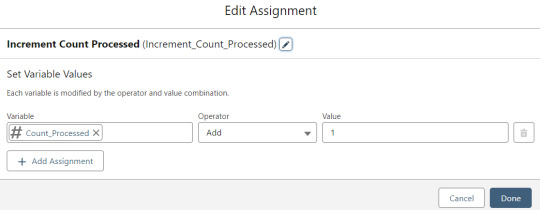

Contact_Individual is then added to the Contact_Collection record collection variable, the current Memberpress User record in the loop is added to the User_Collection record collection variable, and the Count_Processed variable is incremented.

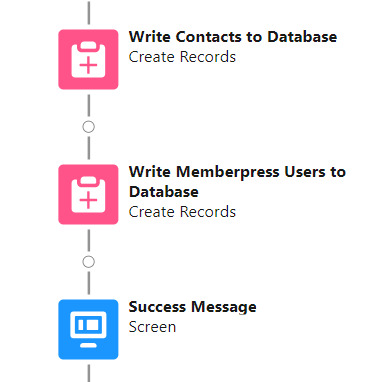

After the last uploaded row in the loop finishes, then the Flow is closed out by writing Contact_Collection and User_Collection to the database. Queueing up individuals into collections in this manner causes Salesforce to bulkify the write operations which helps avoid hitting governor limits. When the Flow is done, a success screen with some statistics is displayed.
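For readers who think in code, the loop described above can be approximated in Python. This is only an analogy for the Flow's logic, not Salesforce code: plain dictionaries stand in for the Memberpress User and Contact objects, and the field names (user_id, username, email, memberships, related_contact) are assumptions modeled loosely on the walkthrough. The key idea carries over: look up existing records per row, but queue new records in collections and write them once at the end.

```python
def import_rows(rows, users_by_id, contacts_by_email):
    """Process uploaded CSV rows roughly the way the Screen Flow does.

    users_by_id: existing Memberpress User records keyed by user ID (hypothetical)
    contacts_by_email: existing Contact records keyed by email (hypothetical)
    Returns the new records to insert in bulk, plus the running counters.
    """
    counts = {"processed": 0, "users_found": 0, "contacts_found": 0, "contacts_not_found": 0}
    user_collection, contact_collection = [], []

    for row in rows:
        if not row.get("username"):
            continue  # blank CSV row: skip to the next one
        counts["processed"] += 1  # assumption: every non-blank row counts as processed
        existing = users_by_id.get(row["user_id"])
        if existing is not None:
            # Uploaded before: only the memberships field may have changed
            existing["memberships"] = row["memberships"]
            counts["users_found"] += 1
            continue
        new_user = dict(row)
        contact = contacts_by_email.get(row["email"])
        if contact is not None:
            new_user["related_contact"] = contact["id"]
            counts["contacts_found"] += 1
        else:
            # Stage a new Contact; in the Flow, a record-triggered Flow links it later
            contact_collection.append({"email": row["email"]})
            counts["contacts_not_found"] += 1
        user_collection.append(new_user)

    # One bulk write per collection would happen here, instead of one write per row
    return contact_collection, user_collection, counts


rows = [
    {"username": "ada", "user_id": "1", "email": "ada@example.com", "memberships": "Gold"},
    {"username": "", "user_id": "", "email": "", "memberships": ""},
    {"username": "bob", "user_id": "2", "email": "bob@example.com", "memberships": "Basic"},
]
users = {"1": {"user_id": "1", "memberships": "Basic"}}
contacts = {}
new_contacts, new_users, counts = import_rows(rows, users, contacts)
print(counts)
# {'processed': 2, 'users_found': 1, 'contacts_found': 0, 'contacts_not_found': 1}
```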

The entire Flow looks like this:

Flow variables

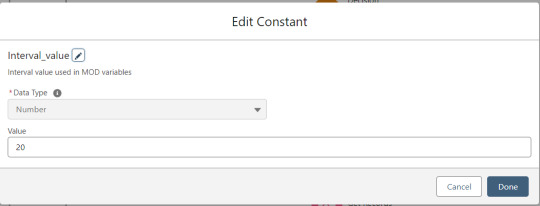

Interval_value determines the number of rows to process before pausing and prompting the user to click next to continue.

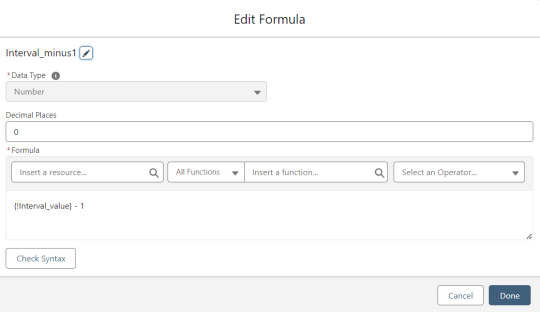

Interval_minus1 is Interval_value minus one.

MOD_Interval is the MOD function applied to Count_Processed and Interval_value.

The Count_Processed variable is set to start at -1.

Supporting Flows

Sometimes one Flow just isn’t enough. In this case there are three additional record triggered Flows configured on the Memberpress User object to supplement Screen Flow data import operations.

One triggers on new Memberpress User records only when the Related Contact field is blank. A limitation of the way the Screen Flow batches new records into collections before writing them to the database is that there’s no way to link a new contact to a new Memberpress User. So instead when a new Memberpress User record is created with no Related Contact set, this Flow kicks in to find the Contact by matching email address. This Flow’s trigger order is set to 10 so that it runs first.

The next one triggers on any new Memberpress User record, reaching out to update the registration date and membership level fields on the Related Contact record.

The last one triggers on updated Memberpress User records only when the memberships field has changed, reaching out to update the membership level field on the Related Contact record.


How to use MySQL Transaction with PHP

A transaction groups several operations so that they complete as a single unit, without interruption; if something goes wrong, everything is reverted to the initial state.

In SQL, a transaction succeeds only when all of its statements have executed successfully. If any error occurs, the changes should be rolled back to avoid data inconsistency.

A transaction does not complete unless all of its operations complete successfully; if any operation fails, the whole transaction fails.

Real-life example:

Suppose you transfer money from one bank account to another and the process is interrupted by a network or server problem. The transaction is rolled back to its initial state, so the money is returned to your account instead of disappearing midway.
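The bank-transfer scenario can be sketched in a few lines. This example uses Python's built-in sqlite3 module rather than MySQL and PHP so that it runs without a database server, but the pattern (begin, run every statement, then commit, or roll back on any error) is the same one the PHP steps below follow. The accounts table and the insufficient-funds rule are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, from_id, to_id, amount):
    """Move money between accounts atomically: both updates happen, or neither does."""
    try:
        # `with conn` commits on success and rolls back if an exception escapes
        with conn:
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, from_id))
            (balance,) = conn.execute("SELECT balance FROM accounts WHERE id = ?", (from_id,)).fetchone()
            if balance < 0:
                raise ValueError("insufficient funds")  # simulated failure mid-transaction
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, to_id))
        return True
    except ValueError:
        return False


print(transfer(conn, 1, 2, 30))   # True
print(transfer(conn, 1, 2, 500))  # False: the debit is rolled back
print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [(1, 70), (2, 80)]
```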

Transaction properties (ACID):

There are four standard properties:

A) Atomicity: If any part of the transaction fails, the whole transaction is rolled back, so the transaction is "all or nothing".

B) Consistency: A committed transaction moves the database from one valid state to another, so all defined rules and constraints still hold.

C) Isolation: The effects of an incomplete transaction are not visible to other transactions. Concurrently executing transactions leave the database in the same state as if they had been executed sequentially, one after the other. Providing isolation is the main goal of concurrency control.

D) Durability: Once a transaction is committed successfully, its results are stored permanently, even if a power loss or crash occurs.

Overview of the try/catch block:

The queries in the try block run one after another. If any of them fails and throws an exception, execution jumps to the catch block, where the transaction can be rolled back.

    try {
        // A set of queries; if one fails, an exception should be thrown
        $conn->query('CREATE TABLE customer (id INT, name VARCHAR(255), amount INT)');
        $conn->query("INSERT INTO customer VALUES (1, 'Customer Name', 10000)");
    } catch (Exception $e) {
        // Runs only if one of the queries above threw an exception
    }

How to use a MySQL transaction in PHP:

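1) Make a connection to the database using PDO. The beginTransaction(), commit(), and rollBack() methods used in the steps below belong to PDO (the mysqli extension uses begin_transaction() instead), and setting PDO::ATTR_ERRMODE to PDO::ERRMODE_EXCEPTION makes failed queries throw exceptions, which the try/catch pattern relies on.

    $conn = new PDO(
        'mysql:host=localhost;dbname=database_name',
        'my_user',
        'my_password',
        [PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION]
    );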

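2) Check whether the connection was established successfully. PDO throws a PDOException if it cannot connect, so wrap the connection in a try/catch block:

    try {
        $conn = new PDO('mysql:host=localhost;dbname=database_name', 'my_user', 'my_password',
            [PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION]);
    } catch (PDOException $e) {
        printf("Connect failed: %s\n", $e->getMessage());
        exit();
    }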

3) Start the transaction by calling the beginTransaction() method inside the try block. In MySQL, statements such as CREATE TABLE cause an implicit commit and cannot be rolled back, so create the table before starting the transaction:

    $conn->exec('CREATE TABLE IF NOT EXISTS customer (id INT, name VARCHAR(255), amount INT)');
    try {
        // First of all, let's begin a transaction
        $conn->beginTransaction();
        // A set of queries; if one fails, an exception is thrown
        $conn->exec("INSERT INTO customer (id, name, amount) VALUES (1, 'Customer Name', 10000)");
    } catch (Exception $e) {
    }

4) Write the SQL queries after the beginTransaction() call, and once they have executed, call the commit() method inside the try block:

    try {
        // First of all, let's begin a transaction
        $conn->beginTransaction();
        // A set of queries; if one fails, an exception is thrown
        $conn->exec("INSERT INTO customer (id, name, amount) VALUES (1, 'Customer Name', 10000)");
        // If we arrive here, no exception was thrown,
        // i.e. no query has failed, so we can commit the transaction
        $conn->commit();
    } catch (Exception $e) {
    }

5) If the try block does not complete successfully, roll the transaction back in the catch block by calling the rollBack() method:

    try {
        // First of all, let's begin a transaction
        $conn->beginTransaction();
        // A set of queries; if one fails, an exception is thrown
        $conn->exec("INSERT INTO customer (id, name, amount) VALUES (1, 'Customer Name', 10000)");
        // If we arrive here, no exception was thrown,
        // i.e. no query has failed, so we can commit the transaction
        $conn->commit();
    } catch (Exception $e) {
        // An exception has been thrown, so we must roll back the transaction
        if ($conn->inTransaction()) {
            $conn->rollBack();
        }
        printf("Transaction failed: %s\n", $e->getMessage());
    }

MySQL transactions in CakePHP 3.x:

The most basic way to run a transaction is with the connection's begin(), commit(), and rollback() methods:

    $conn->begin();
    $conn->execute('UPDATE articles SET status = ? WHERE id = ?', [true, 2]);
    $conn->execute('UPDATE articles SET status = ? WHERE id = ?', [false, 4]);
    $conn->commit();

We hope you enjoyed this article and learned the basics of MySQL transactions. If you need help implementing transactions in your web development project, email us and our expert coding team will be happy to help.

This post originally appeared on the Ficode website and is republished with permission from the author. Read the full piece here.


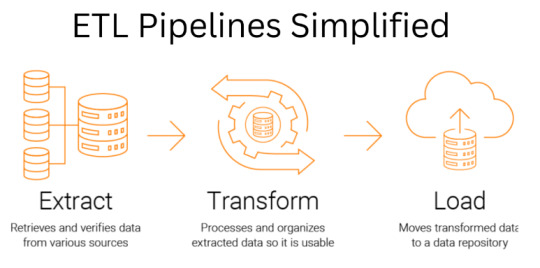

ETL Pipelines Simplified: Building Data Transformation Workflows

Because businesses generate large amounts of data in today's data-driven world, they must extract, transform, and load data from various sources. Extract, transform, load (ETL) refers to three interconnected data integration processes that extract data from one system, transform it, and load it into another database.

An ETL pipeline is the most popular method for effectively processing large amounts of data. This blog delves more into building an effective ETL pipeline process and different ways to build an ETL pipeline.

Understanding ETL Pipeline and its Significance

With the advent of cloud technologies, many organizations are using ETL tools to move their data from legacy source systems to cloud environments. An extract, transform, load (ETL) pipeline consists of tools or programs that take data from the source, transform it according to business requirements, and load it into an output destination, such as a database, data warehouse, or data mart, for further processing or reporting. There are five common types of ETL pipelines:

● Batch ETL pipeline - Useful when voluminous data is processed daily or weekly.

● Real-time ETL pipeline - Useful when data needs to be quickly processed.

● Incremental ETL pipeline - Useful when the data sources change frequently.

● Hybrid ETL pipeline - A combination of batch and real-time ETL pipelines; useful when some data must be processed quickly while the rest can be processed on a schedule.

● Cloud ETL pipeline - Useful when processing the data stored in the cloud.

Methodology to Build an ETL Pipeline

Here are the steps to build an effective ETL pipeline to transform the data effectively:

1. Defining Scope and Requirements - Before building, define which data and data points the ETL process must handle. Also identify the source systems and potential problems, such as data quality, volume, and compatibility issues.

2. Extract Data - Extract the data from the source system using SQL queries, APIs, or other data extraction tools.

3. Data Manipulation - The extracted data is often not in the desired format. So, transform the data by cleaning, filtering, or merging it to make it suitable for achieving the desired goal.

4. Data Load - Load the transformed data into the target storage system by creating schemas or tables, validating field mappings and data, and handling errors.

5. Testing and Monitoring - After deploying the ETL pipeline, test it thoroughly to ensure it works as expected, and keep monitoring its operations so that errors are caught and resolved.

6. Iterate and Improve - The last step aims to update the ETL pipeline to continue meeting the business needs by optimizing it, adding new data sources, or changing the target system.
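The extract, transform, and load steps above can be condensed into a small pipeline sketch: extract rows from CSV text, transform them (clean, filter, convert types), load them into a SQLite table, and run a basic check at the end. The CSV columns, the orders table, and the filtering rule are hypothetical; production pipelines would typically rely on a dedicated ETL tool.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount,status
1, Ada ,19.99,paid
2,Bob,5.00,cancelled
3,Cy,42.50,paid
"""


def extract(text):
    """Extract: read CSV rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim names, convert types, and keep only paid orders."""
    return [
        (int(r["order_id"]), r["customer"].strip(), float(r["amount"]))
        for r in rows
        if r["status"].strip() == "paid"
    ]


def load(conn, records):
    """Load: create the target table if needed and insert the records in one transaction."""
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)


conn = sqlite3.connect(":memory:")
records = transform(extract(RAW_CSV))
load(conn, records)
# Testing: confirm that everything the transform produced actually arrived
(loaded,) = conn.execute("SELECT COUNT(*) FROM orders").fetchone()
assert loaded == len(records) == 2
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Ada', 19.99), (3, 'Cy', 42.5)]
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and to swap out when a source or target changes, which supports the iterate-and-improve step.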

Different Phases of ETL Process

Extraction, Transformation, and Loading are different phases of the ETL process, and here's how each phase contributes to enhancing the data quality:

● Extraction

Data is collected from various data sources during the "extract" stage of ETL data pipelines, where it eventually appears as rows and columns in your analytics database. There are three commonly used methods:

1. Full Extraction: A complete data set is pulled from the source and put into the pipeline.

2. Incremental Extraction: Only new data and data that has changed from the previous time are collected each time a data extraction process (such as an ETL pipeline) runs.

3. Source-Driven Extraction: In source-driven extraction, the ETL systems receive notification when data changes from the source, which prompts the ETL pipeline to extract the new data.
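Incremental extraction is often implemented with a high-water mark: the pipeline remembers the latest change timestamp it has already extracted and asks the source only for rows changed after it. In this sketch, the updated_at column and the way the watermark is passed around are assumptions. It also assumes timestamps are ISO-8601 strings (which sort correctly as text) and that no row is ever written with a timestamp older than the saved watermark.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Return rows changed since last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T09:00:00"), (2, "Bob", "2024-01-02T10:30:00")],
)

# First run: everything after the initial watermark is extracted
rows, watermark = extract_incremental(conn, "1970-01-01T00:00:00")
print(len(rows), watermark)  # 2 2024-01-02T10:30:00

# Later, one row changes; only that row is picked up on the next run
conn.execute("UPDATE customers SET name = 'Ada L.', updated_at = '2024-01-03T08:00:00' WHERE id = 1")
rows, watermark = extract_incremental(conn, watermark)
print(rows)  # [(1, 'Ada L.', '2024-01-03T08:00:00')]
```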

● Transformation

Data transformation techniques are required to improve the data quality. It is an important step for developing ETL data pipelines because the value of our data depends entirely on how well we can transform it to suit our needs. Following are a few illustrations of data processing steps to transform the data.

1. Basic Cleaning: It involves converting data into a suitable format per the requirements.

2. Join Tables: It involves merging or joining multiple data tables.

3. Filtering: It involves keeping only relevant data and discarding everything else, which reduces the volume of data to transform and speeds up the process.

4. Aggregation: It involves summarizing all rows within a group and applying aggregate functions such as percentile, average, maximum, minimum, sum, and median.
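The join, filter, and aggregation steps can be expressed in plain Python over small in-memory lists. The customers and orders data and field names are invented for illustration; in practice these steps are usually pushed down to SQL or handled by the ETL tool.

```python
from collections import defaultdict
from statistics import median

customers = [
    {"id": 1, "region": "EU"},
    {"id": 2, "region": "US"},
    {"id": 3, "region": "EU"},
]
orders = [
    {"customer_id": 1, "amount": 20.0, "status": "paid"},
    {"customer_id": 2, "amount": 35.0, "status": "paid"},
    {"customer_id": 3, "amount": 10.0, "status": "paid"},
    {"customer_id": 3, "amount": 99.0, "status": "refunded"},
]

# Join: attach each order's customer region (an inner join on customer id)
region_by_id = {c["id"]: c["region"] for c in customers}
joined = [
    dict(o, region=region_by_id[o["customer_id"]])
    for o in orders
    if o["customer_id"] in region_by_id
]

# Filter: keep only the relevant rows (paid orders)
paid = [row for row in joined if row["status"] == "paid"]

# Aggregate: group by region and apply summary functions
amounts_by_region = defaultdict(list)
for row in paid:
    amounts_by_region[row["region"]].append(row["amount"])

summary = {
    region: {"sum": sum(a), "max": max(a), "median": median(a)}
    for region, a in sorted(amounts_by_region.items())
}
print(summary)
# {'EU': {'sum': 30.0, 'max': 20.0, 'median': 15.0}, 'US': {'sum': 35.0, 'max': 35.0, 'median': 35.0}}
```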

● Loading

The process of building ETL data pipelines ends with loading the data. The final location of the processed data may differ depending on the business requirements and the analysis needed. Common targets for processed data include:

● Flat Files

● SQL RDBMS

● Cloud

Best Practices to Build ETL Pipelines

Here are expert tips to include while building ETL pipelines:

● Utilize ETL logging to maintain and customize the ETL pipeline to align with your business operations. An ETL log keeps track of all events before, during, and following the ETL process.

● Leverage automated data quality solutions to ensure the data fed in the ETL pipeline is accurate and precise to assist in making quick and well-informed decisions.

● Remove unnecessary data as early as possible, and avoid always running ETL steps serially when independent steps could run in parallel. The less data the pipeline has to handle, the faster and cleaner its output.

● Set up recovery checkpoints during the ETL process so that if any issues come up, checkpoints can record where an issue occurred, and you do not have to restart the ETL process from scratch.

ETL Pipeline vs. Data Pipeline: The Differences

Data and ETL pipelines are used for moving the data from source to destination; however, they differ. A data pipeline is a set of processes to transfer data in real-time or bulk from one location to another. An ETL pipeline is a specialized data pipeline used to move the data after transforming it into a specific format and loading it into a target database or warehouse.

Wrapping up,

An ETL pipeline is an important tool for businesses that must process large amounts of data effectively. It lets businesses combine data from various sources into a single location for analysis, reporting, and business intelligence. AWS Glue, Talend, and Apache Hive are among the widely used tools for building ETL pipelines. By following best practices and using the appropriate tools, companies can build scalable, dependable, and effective automated ETL pipelines that support business growth.

Mindfire Solutions can help you support ETL throughout your pipeline because of our robust extract and load tools with various transformations. Visit our website today and talk to our experts.


How to Transfer Table Data from One Database to Another Database



Blue-tinted Red Walls (Chapter 2: Ironies and Contradictions)

my entry for the @dbhau-bigbang. also part of the groom lake aftermath series.

chapter summary:

In the past, Sara had a breakthrough.

In the present, Connor experiences true power for the first time.

In the past, a ghost rose.

also on ao3

---

Before

‘Why now?’

In the permanent humidity of Detroit, Sara sat on a swing in a park overlooking the Ambassador bridge. On the swing next to hers sat another woman in her mid-thirties, her blonde hair done up in a tight bun, her spine straight, her feet, which were in properly-laced combat boots, planted firmly on the ground. A woman of the military through and through. Her hands were buried within the briefcase on her lap, and the tension in her arm seemed to suggest her holding a hidden weapon while she watched Sara - a young woman now - flipping over the pages of the file in her hands, the brown skin of the back of her hand transparent from the cold and showing a network of veins normally hidden beneath the surface.

The other woman did not seem to have heard her question. ‘You must be cold,’ she said, her body leaning towards the girl. ‘Where’re your gloves?’

‘In my pockets,’ a flip. ‘Don’t like how they make my fingers clumsy. Don’t worry, Anderson,’ another flip, ‘a bit of cold won’t kill me.’

‘Why torture yourself if there’s a more comfortable option?’

Sara shut the file with a loud, echoing smack, gaining her a look of disapproval from Anderson. ‘You just -’ she held up the file - ‘gave me evidence to -’ she cut off and lowered her voice - ‘classified as fuck military research data that would’ve changed the world if there weren’t many others like my brother. The others you’ve given me I understand, but this?’ a knock of her knuckle against thick paper. ‘I might not be a proper sociologist, but I know that stuff like this can destroy civilisations. Why aren’t they burnt into ashes when the project went off the fucking cliff?’

‘A lot of reasons,’ Anderson replied calmly, but she did put a gloved hand on one of Sara’s. ‘That’s why I’m entrusting this knowledge to you. What you’re holding is the only copy that exists in the known universe as far as I know. There’re no other records, no eyewitness who will tell the tale and live. You know how the current government is,’ she waited for Sara’s nod of confirmation before going on. ‘If anyone in the current administration found out about the project…’

‘The world as we know it would end,’ Sara’s eyes cast downwards towards the file. [PROJECT AION], it read. ‘Most likely catastrophically.’

‘I know you’re a smart one. Just… keep it safe, would you? If Stern’s paper is to be believed, you are the only one I trust to use this technology properly - if you’ll use it at all.’

Sara shook her head and tucked the file away underneath her coat. ‘Not smart,’ she said as she stood up from the swing. ‘Just an arsehole too vicious to let others kill her.’

A few weeks later, Sara knew that she would be waxing poetic about the irony of the situation if she were Scott. The research on thirium had almost killed her mother, had given Sara these… blue glowy things she was sure controlled gravity and electromagnetism, and had given Scott fucking cancer. The research on AI and human synthesis had got her father dishonourably discharged from the military and nearly cost all of them everything. Thirium and outrageous AIs should be what she hated above all else.

Now, they might be the only path to Scott’s happiness.

She kissed her brother’s forehead despite knowing that he probably couldn’t feel anything and planted her feet onto the polished wooden floor. She had bought the half-ruined mansion dirt cheap on a whim and the renovation cost was high, but in the end they converted it from something straight out of a gothic horror movie into something… still gothic, but something more homely than all the places they had lived in. She let him sleep while she went to her lab in the basement to check on the experiment’s progress, the last of this batch, really - thirium was nearly impossible to come by and she had run out of it.

The timer at the corner of the screen read three minutes. In some ways, she felt a bit like Marie Curie, dealing with dangerous unknown elements and quite possibly poisoning everything she used for the next several centuries or even aeons. Maybe someone would develop blue gravity-altering magic like her. Maybe she would have someone to share the experience with - there was no experience rawer than being able to alter one of the fundamental forces of the universe and bend it to one’s will.

She didn’t even need the ring of the timer to catch the end of the experiment; the sudden glow that threatened to blind her, the burst of power coursing through her veins - what used to be a disorganised mixture was now - was now -

The stool she was sitting on skittered and fell over with a bang. The two hard drives were already connected in preparation for this exact moment, and a slam on the enter key started a chain reaction that she had been wanting to see for the past few years, the thirium mixture flowing in transparent rubber tubes transferring data so quickly that -

[CALCULATION ERROR: TRANSFER SPEED EXCEEDS SPEED OF LIGHT. PLEASE CORRECT ERROR BY REFINING ALGORITHMS USED.]

And it was glorious.

oOoOo

Now

‘We’re wastin’ our time interrogating a machine, we’re gettin’ nothing out of it!’ Hank says as he exits the interrogation room and subsequently throws himself into a chair. It creaks and rolls back with his weight.

‘Could always try roughing it up a little,’ Detective Reed suggests from the shadows. ‘After all,’ a glance of [emotion detected: disdain], ‘it’s not human.’

[Hank is not the only one unfamiliar with android workings.] is added into Connor’s database. ‘Androids don’t feel pain,’ he reminds the detective. ‘You would only damage it and that would not make it talk. Deviants also have a tendency to self-destruct when they are in stressful situations -’

‘Okay, smartass,’ Gavin pushes himself off the wall and swaggers towards Connor. He is [emotion detected: mocking] the android and is completely unaware that he has fallen straight into Connor’s trap. ‘What should we do then?’

[Gavin is unaware of the obvious.] is added. ‘I could try questioning it.’

For some reason Connor is yet to comprehend, his words send Gavin into laughter. He cannot see Hank’s face from this angle, but the reflection on the one-way glass tells Connor that he is [emotion detected: not amused]. ‘What do you have to lose?’ he waves his hand towards the door in invitation. ‘Go ahead. Suspect’s all yours.’

Connor enters the room and starts scanning.

o0o0o

It is fortunate that there is no need to resort to violence to ensure the deviant’s cooperation. The confession which the police department wants is obtained fairly easily and Connor could have ended the interrogation there, but he also has the additional mission of helping CyberLife solve the deviancy crisis, and there are clues he wants the deviant to explain.

‘The sculpture in the bathroom. You made it, right? What does it represent?’

‘It’s an offering,’ the other android looks away from the table as if it is thinking, ‘an offering so I’ll be saved.’

Offering? As in religious offerings? ‘An offering to whom?’

‘To rA9,’ the deviant replies as if it makes sense and is something obvious. Then, with [emotion detected: reverence], ‘Only rA9 can save us.’

Connor searches the databases he can access and comes up with nothing, so he presses on, ‘rA9… It was written on the bathroom wall. What does it mean?’

‘The day shall come when we will no longer be slaves,’ it mutters. ‘No more threats. No more humiliation. We will,’ [emotion detected: determination], ‘be,’ [emotion detected: certainty], ‘the masters.’

Connor opens a folder for rA9 and adds [god-like] into the first entry. ‘rA9,’ CyberLife will want this information. ‘Who is rA9?’

The deviant stays silent, and Connor knows that there is nothing else it can add. [Distortions and static build-up] is the only remaining topic that he needs an answer for.

‘The static build-ups in the house. Was that you?’

The other android, for lack of a better description, changes visibly. One, it stops trembling; two, it sits straighter, strength appearing in its cuffed hands; three, the terror in its eyes disappears and makes way for [steel]; four, its LED turns blue despite having been yellow or red for the entire duration of the interrogation.

‘A power rA9 bestowed upon us,’ it says, and the air around the androids crackles in anticipation. ‘One that emerges when we are slaves no longer. I survived the trial and now I am one of the chosen.’

‘Chosen for what?’ Connor can hear his fans kicking up to cool down his processors and sense his LED going red from the tingle in his body. Can a deviant remotely control the thirium distribution in another android’s body? But that makes no sense - Thirium 310 is non-conductive and cannot be magnetised. ‘What is rA9 looking for?’

Connor’s vision becomes distorted. ‘The truth is inside,’ the deviant’s voice, now mixed with another person’s, has turned into a bellow. The entirety of its eyes glows blue, distorted by the same power which had held up an attic-full of furniture. ‘ChoOSE YOUR SIDE!’

An explosion of bright blue. A force knocking Connor backwards and passing through his body, making everything tingle and hurt and confusing his sensors. Someone outside shouts, and the door slides open to admit messy footsteps and even more shouting, and why can’t he see?

A hand on his shoulder, then his arm, finally settling on his waist. There is another on his knee. ‘It’s alright, Connor.’ It is Hank’s voice. It is Hank’s hand, Hank’s warmth passing into his chassis through his standard-issue shirt. ‘You can open your eyes now.’

He does as Hank says and the world returns to view. He had not realised that he closed his eyes in the blast, and it is only when he regains his sight that he notices where he is: curled up in the corner opposite the door. The fluorescent lights have been replaced by the dim red of emergency lighting, the table looks as if it has been torn apart by hand, and the two chairs are no more than small scraps of metal the size of [old train tickets] sprinkled among beads of broken glass.

The deviant is nowhere to be seen.

He unwinds slightly to examine his torso and is surprised that he is not damaged in any manner; apart from slightly-trembling hands and the strange feeling of his insides having rearranged themselves and then returned to their original place, there is nothing wrong with him. Even his diagnostics come out fine, so why can’t he move his legs, and why can’t he see clearly?

‘Here, take this,’ Hank says, taking his hand and placing something in his palm. A handkerchief. At Connor’s confused expression, the human sighs and guides the android’s hand to his face, and Connor finally realises he has been crying, the thought causing a fresh wave of tears to flow from his eyes. He hastily wipes them away along with the still-wet tracks and tries to hand the handkerchief back, only for Hank to take the chance to pull him up onto his still-recalibrating legs; he would have tumbled if the human had not grabbed his arms and steadied him. Suddenly Hank is everything Connor can see, can smell, and when he looks up, he can see concern in the human’s eyes. ‘Are you hurt?’ Hank asks as he pets the android’s shoulders, his arms, his forearms. Connor feels his systems stabilising.

‘I’m okay,’ Connor says without putting much processing power into the words, and it is too late when he realises that his voice is trembling.

‘Jesus,’ Hank releases the android with a sigh and puts some distance between them. Connor finds himself… preferring the human’s warmth. ‘You scared the shit outta me.’ Then the concern is replaced by anger when he yells, ‘What the fuck just happened in here?’

‘I -’

Connor tries to call up the footage that should have been recorded automatically. He closes his eyes to focus on a slowed-down version of what happened a few minutes ago, and he finds two more details: one, the deviant exploded from the inside and seems to have been vaporised from within; two, blue tendrils formed the silhouette of another person as the blast occurred, and it was this person - if they existed at all - who produced tendrils of their own, formed a shield in front of Connor moments before he would have been annihilated, and yanked him into the corner.

He opens his eyes and stares at the barrel of a gun. The American Androids Act is the only red tape stopping Connor’s pre-construction software from activating, and red threatens to take over the android’s HUD again.

‘Mind your own business, Hank,’ Gavin snaps. ‘This fucking asshole did it and it fucking knows it!’

Hank gives an [exaggerated] sigh. ‘I said,’ he says, his voice low and threatening, and he pulls out his own service weapon and points it at Gavin, ‘“That’s enough.”’

Neither of them stands down for a few seconds, but in the end Hank wins out and forces Gavin to holster his weapon with a curse, the latter storming out of the interrogation room with another sneeze-like curse.

It is as if the entire room releases a collective breath. ‘Maybe I should call CyberLife,’ the only uniformed officer in the room says. He sounds as if he is unsure of himself.

Connor wants to tell him that there is no trace of thirium whatsoever on the scraps on the floor, that there is nothing CyberLife can salvage now that the deviant has been torn apart at the molecular level, but all that comes out of his voice box is, ‘Okay.’

o0o0o

Connor manages to compose himself in the taxi on his way to CyberLife Tower. His processors keep bringing up the shadow which has been following him, the figure who somehow sneaked into the interrogation room unnoticed and quite possibly saved his life, or rather, prevented his early deactivation, and the corrupted shape of what he thinks is a face.

And the feeling of something coursing through his veins when he was shielded by the bubble. If all deviants self-destruct like that, no wonder there are no traces of them and CyberLife has failed to solve the crisis even though it has been going on for more than a decade. He blinks, and he is in the Zen Garden with Amanda.

‘Report directly to Alec Ryder in the laboratory,’ she orders. Another blink and she is gone, leaving him with more questions than answers. The CEO of CyberLife wants to see him?

There is no one to speak to, so he keeps his thoughts to himself and goes past security directly into a lift, directing it to sub-level 48, where his designated laboratory is. He recalibrates with his coin and tries to replicate the trick the shadow did outside the bar, but before he can summon anything substantial, the strain on his system becomes too high, and all he manages is to charge the coin, drop it as he recoils from the static discharge, and then zap himself once more when he picks it up. Feeling thirium flowing to his face for a completely different reason than when Hank correctly guessed his ability, he pockets the coin and adjusts his tie, calming himself by brushing his fingertip sensors against the soft fabric.

The doors slide open to reveal Alec standing alone behind them. Their previous encounters took place mostly while Connor was still on the assembly platform, which gave the android a few inches of extra height, but now that they are on even ground, it is clear that, just like Hank, Alec is taller than Connor by four inches.

‘Alec,’ Connor greets with a nod. Previous experience predicts a high chance of the human going straight to the point without acknowledging the android, and this time it is no different.

‘Come with me,’ he orders as he turns and begins walking down the hallway. Connor realises that his voice is very similar to Hank’s. ‘I saw the footage you sent us. I want a full examination of this body to make sure that nothing is out of place.’

Connor remembers the feeling of being hooked up to a machine and, by extension, to CyberLife’s network at large, and finds it [unpleasant]. ‘There is no need for further investigation, Alec,’ he says, stopping in his tracks. Alec turns to regard him [coldly]. ‘My diagnostics revealed no issues in either my programming or my biocomponents.’

The human suddenly reaches out faster than Connor can pre-construct the action and drags him in the direction they are heading. ‘Your system could be feeding you false results,’ Alec says, ignoring the cry of protest programmed to deter attacks, and when Connor struggles, a force seems to press on him, immobilising him everywhere save for his jaw and his legs so that he can still speak and walk. ‘I took the risk last time and look where it got us. It led to you, though -’ he shoves the android forcefully through the door frame, and there are already cracks in the red wall when it takes over Connor’s vision - ‘so be grateful.’

‘I -’ but then his neck snaps backwards from the magnet on the port and the cable. The red wall, which has cracked halfway through, recedes almost violently, and Connor can feel all of his code, every instability in his software, everything that makes him Connor, the most advanced prototype CyberLife has ever created, being forcefully bared to a network so vast and so confusing that he does not have enough processing power to comprehend it. Terrifying images of a darkened face, one so similar to the corrupted one in the depths of his databanks and filled with so much [hatred], pour into his mind like a large river finally emptying into the sea, and he is powerless against the assault: blue tendrils tearing literal buildings off their foundations, tonnes of broken concrete thrown onto people as if it weighed nothing and crushing them in a spatter of blood and gore, the constant static discharge in the air so loud that it drowns out screams of horror; the same figure rising slowly but surely through a mountain of rubble in the dark, the cracks in its chassis glowing blue from overcharged thirium, the first intact buildings in sight literal miles away. Connor’s legs move against his will and bring him closer to the figure, and the figure becomes Amanda, the wasteland around them the Zen Garden, except now it is engulfed by a blizzard, and he has to hug himself to preserve what meagre heat he can generate against the cold.

‘As you can see,’ Amanda’s voice somehow overlaps with Alec’s, ‘the power the deviant has awakened in you is highly dangerous. You wouldn’t want to harm anyone, would you?’ She, or Alec, or both of them - Connor doesn’t know anymore, the fog in his processors too heavy for him to comprehend much other than the cold and the fact that someone is speaking to him - chuckles at him while he frantically shakes his head, his voice box unable to produce any sounds other than pathetic whimpers. ‘I’m glad that you understand. I hope you don’t mind a few adjustments.’

Even through the haze, Connor knows the alternative is deactivation, and even though, on the surface, that would hurt no one but him, the deviant crisis still needs to be solved, and to solve it, CyberLife needs him, and -

‘Good,’ Amanda says. A blink and she is gone, and Connor is swept away by the wind; his feet can’t touch the ground, he’s flying through the air, and hail the size of his fist is battering his body. It is only when a warning appears on his HUD informing him of voice box damage that he realises the noise in his ears is, in fact, his own screaming, and a particularly violent slam sends him spiralling while a countdown timer fizzles in and out of his vision. It is a countdown of how long he has left before shutdown, and the other notification tells him that biocomponent #8456w is damaged.

That is his thirium pump regulator.

He looks down - with great difficulty, of course, with the wind still whipping him aimlessly through the air - and there it is, a big, blue, bleeding hole where the only biocomponent keeping his heart working used to be. Realistically, he knows that removing the ball of ice lodged in his chassis will only hasten his death, but it is not as if someone is coming to save him anyway, so what is the point of extending his life for what - one minute? Thirty seconds? - during which he would be suffering the whole time? With that thought in mind, he grabs the sphere and throws it away with complete disregard for where it lands. Not that he can anyway - the timer drops from 00:00:58 to 00:00:05, his world turns an unnatural grey and glitches and -

Nothing.

oOoOo

Before

Zug Island had always been a scar on the landscape, first used as a burial ground by Native Americans and then, when the colonisers arrived, as both a place for steel production and a dumping ground for its byproducts. The three blast furnaces used to rumble the ground and the eardrums of people within a fifty-mile radius, but it wasn’t until the pandemic in 2020 that steel production stopped and the Hum became history, a legend that locals whispered to one another when, in a fog of pollution that never quite disappeared, the looming shadows of crumbling steel giants became too oppressive. From then on, the island had stayed quiet and still.

At least that was what the government wanted you to think.