#IBM Bluemix course


Text

Enhance your experience with Node.js and the IBM Bluemix SDK and battle your competitors!

So we hear you’ve made amends with the kingdom of Node.js... But while you were getting all friendly and drinking beers together, enemies from all over Europe have become eager to take your place. There is only one way to stay friends: be better than your opponents!

How to win? We send you to battle in the kingdom of Coderpower, a platform for developers. Learn, practice and compete to enhance your coding skills across six challenges. Each takes one hour of battling. Every Wednesday, a new challenge brings you one step closer to becoming the overall leader in Europe, with a €1000 prize pool at stake!

Of course, we understand that you want to train a bit first in order to become ruler of the Bluemix and Node.js Battle. Get in shape with the warm-ups and tutorials we provide here. Not only do you make your friendship stronger, you also build up experience and improve your chances of winning. For each of the six challenges, there is a smartphone-controlled SmartPlane to win. And if you end up the best coder of all… you can win €500 in cash! Join now and battle immediately to show the enemy your Node.js skills!

Summary of the Challenge

6 weeks, 6 challenges to manipulate the IBM Bluemix SDK in the Bluemix & Node.js Battle! Every Wednesday a new challenge lets you enhance your skills and brings you one step closer to being the overall leader of the challenge. One SmartPlane is offered each week. If you are the fiercest battler of all, we offer you €500 in cash!


Photo

Wow! Love this! 😍 #Repost @programunity: Rate this setup 👇🤓🚀 Photo by: @carmencodes_

[29/100] The Hot Dog, Not Hot Dog app 🌭 #100daysofSwift

Happy Wednesday everyone! How is your week so far? I don’t know why I keep thinking today is Tuesday 🤔. My week is going okay. I have a lot going on at work, which keeps me busy almost the whole day, and I started watching a new show called The Good Doctor. Have you watched it before? 👨🏻⚕

Today in my course I built a Hot Dog, Not Hot Dog app like the one from the show Silicon Valley 📱. When we tap the camera button in the navigation bar, the camera opens and you can take a photo of something; the app then shows whether the image was classified as a hot dog or not a hot dog 🌭.

In this lesson we saw how to take the image that the user captured in the imagePickerController, convert it into a CIImage, and pass that CIImage into the detect image method 📸. Inside this method, we loaded our model using the imported Inception V3 model and then created a request that asks the model to classify whatever data we pass 📝. This data is defined using a handler. Finally, we used that image handler to perform the classification request; once that process completes, a callback gets triggered, we get back a request or an error, and we print out the results of the classification 📇. That’s pretty much how we figure out whether our image is a hot dog or not a hot dog.

I’m excited for my next lesson: we will be building this Hot Dog app again, but using IBM Bluemix and Swift. 👉🏼 Have you used IBM Bluemix in the past? Have a great day everyone! ☺💋

View on Instagram: https://ift.tt/2JH8j0u


Text

News explorer watson


A Watson Explorer Application Builder news article widget.

A basic Bluemix application exposing the Watson AlchemyAPI service as a web service.

This tutorial will walk through the creation and deployment of three components.

The Application Builder proxy up and running.

Completion of the Watson Explorer AppBuilder tutorial.

Watson Explorer - Installed, configured, and running.

Please see the Introduction for an overview of the integration architecture, and the tools and libraries that need to be installed to create Java-based applications in Bluemix. By the end of the tutorial you will have enhanced the example-appbuilder app with a news widget that provides links to recent news articles and blog updates relevant to individual entities, as well as a trend widget that provides a visualization of the frequency with which individual entities appear in the news.


The goal of this tutorial is to demonstrate how to get started with an integration between Watson Explorer and the Watson AlchemyAPI service available on IBM Watson Developer Cloud.


The full AlchemyAPI reference is available on the AlchemyAPI website. There are additional cognitive functions available via the AlchemyAPI service in Bluemix that will be covered in other integration examples. In this tutorial, we demonstrate development of two Watson Explorer Application Builder widgets that leverage the AlchemyAPI News API to produce a 360-degree app that allows end users to keep their fingers on the pulse of their entities. The Watson AlchemyAPI News API offers sophisticated search over a curated dataset of news and blogs that has been enriched with AlchemyAPI's text analysis. IBM Watson Explorer combines search and content analytics with unique cognitive computing capabilities available through external cloud services such as Watson Developer Cloud to help users find and understand the information they need to work more efficiently and make better, more confident decisions.

This course offers hands-on labs giving students exposure to the various aspects of configuring analytics collections and creating custom annotators that can be used in solutions involving Watson Explorer Analytical Components functionality.

Integrating the Watson Developer Cloud AlchemyAPI News Service with Watson Explorer

This course will also look at creating and deploying a custom annotator using Content Analytics Studio. In this course we will look at the results of analytics collections through the product's Content Miner application. This primary functionality is found in an analytics collection's crawling, parsing, indexing, annotating, and searching components. This course is designed to introduce the technical student to using the content analytics, annotator, and content mining functionality. The Advanced Edition also includes the Foundational Components functionality, which is covered in a separate 4-day course (O3100). This functionality is found in Watson Explorer Advanced Edition.

In this course, you will learn the core features and functionality of Watson Explorer Analytical Components.


Photo

Online Certification in IoT, Cloud Computing, and Edge AI By E&ICT Academy, IIT Guwahati

For beginners, individuals, and working professionals, industry experts from The IoT Academy, in collaboration with the IIT Guwahati's E&ICT Academy have designed a course offering a foundational base related to concepts like IoT, Cloud Computing, and Edge AI.

Details of The Course

The modules of this online cloud computing course cover major concepts such as:

● IoT Architecture, IoT Protocols

● IoT security, Node-RED

● Cloud Computing, AWS

● IBM Bluemix, Machine Learning with IoT, Edge AI, Node.js & Edge Impulse

● It gives a strong foundation to try out the applied concept for solving real-time issues

Our cloud computing course can help you master concepts related to cloud computing and implement them in various sectors. You will gain an in-depth understanding of cloud architecture, deployment, services, and much more, to help you address business infrastructure issues.

Certificate of Completion by E&ICT Academy, IIT Guwahati

After successfully completing this program, you will receive an Online Certification in IoT, Cloud Computing and Edge AI by E&ICT Academy, IIT Guwahati.

Highlights of the Program

● 80 Hrs of Instructor-led Training

● Certification from E&ICT Academy, IIT Guwahati

● Code Reviews & Personalized Feedback by Mentors

● 1:1 Doubt Resolution in Live Sessions

● Designed by leading academic and industry experts

● Industry-relevant skills for IoT, Cloud Computing & Edge AI

● Hands-on and Industry-Grade Projects

● The IoT Academy Dedicated Career Services

Fee: ₹ 20,000 + GST

(Avail scholarships up to 20%)

Duration - 80 Hrs of Instructor-led Training

Session - Online


Details: https://www.theiotacademy.co/online-certification-in-iot-cloud-computing-and-edge-ai-by-eict-academy-iit-guwahati


Text


New Post has been published on http://cryptonewz.com/ibm-government-and-blockchain-sector-should-work-together-to-enhance-national-security/

IBM: Government and Blockchain Sector Should Work Together to Enhance National Security

IBM Vice President for Blockchain Technologies Jerry Cuomo recently testified before the Commission on Enhancing National Cybersecurity on how the blockchain can benefit transactions, eWeek reports.

Cuomo is persuaded that the technology could potentially cause a tectonic shift in the way financial systems are secured and that government, technology companies and industries should work together to advance blockchain technology to enhance national security.

The Commission on Enhancing National Cybersecurity, announced in April, is tasked with making detailed recommendations on actions that can be taken over the next decade to enhance cybersecurity awareness and protections throughout the private sector and at all levels of government, to protect privacy, to ensure public safety and economic and national security, and to empower Americans to take better control of their digital security. Sam Palmisano, former CEO of IBM, is the commission’s vice-chair.

President Barack Obama issued Executive Order 13718 to establish the commission in February. The executive order tasked the National Institute of Standards and Technology (NIST) to provide the commission with such expertise, services, funds, facilities, staff, equipment and other support services necessary to carry out its mission.

“The Commission will make detailed short-term and long-term recommendations to strengthen cybersecurity in both the public and private sectors, while protecting privacy, ensuring public safety and economic and national security, fostering discovery and development of new technical solutions, and bolstering partnerships between federal, state and local government and the private sector in the development, promotion and use of cybersecurity technologies, policies and best practices,” notes the official commission website at NIST.

The commission held an open meeting on May 16 in New York City. Cuomo’s statement, which appears in the Panelist Statements document issued by the commission, is republished in the IBM Think blog with the title “Blockchain: Securing the Financial Systems of the Future.”

Cuomo noted that 80 years ago a public-private partnership between the U.S. government and IBM created the Social Security system, which was the most advanced financial system of the time. “Today, as financial transactions become increasingly digital and networked, government and industry must once again combine forces to make the financial systems of the future more efficient, effective and secure than those of the past,” he said.

A similar partnership between government and industry, centered on innovative applications of distributed ledger technology, could enhance national security. Cuomo is persuaded that the government has a key role to play in funding blockchain research and providing official identity certification services for the emergent blockchain economy. In particular, according to the IBM executive, the NIST should define standards for interoperability, privacy and security, and government agencies should become early adopters of blockchain applications.

Cuomo identifies four key priority areas for government-supported developments in distributed ledger technology for national security: a new identity management system able to provide robust proof of identity; automatic systems able to track changes made to data and verify data provenance with time stamps and annotations; secure transaction processing; and a blockchain-based system to securely and confidentially share intelligence on cyber-threats and cyber-terrorism.

“We need to create a new social compact, where business, with input from government, architects the future of financial services,” concluded Cuomo. “We at IBM look forward to working with our partners in government, industry and academia to get this done.”

Of course, Cuomo defended IBM’s positions on current distributed ledger issues and development prospects. After bashing the “public enemy” Bitcoin for its openness, anarchy and potential for anonymity, Cuomo defended the “permissioned blockchain” approach favored by IBM and other major industry players.

“Blockchain came to prominence because it’s the core technology underlying the infamous Bitcoin cryptocurrency, but, while Bitcoin is an anonymous network, industries and government agencies are exploring the use of blockchain in networks where the participants are known,” said Cuomo. “We call this a ‘permissioned blockchain.’”

IBM is a premier member of the Linux Foundation’s Hyperledger Project, a collaborative effort started in December to establish, build and sustain an open, but “permissioned”, non-Bitcoin blockchain. In February, Bitcoin Magazine reported that IBM is making tens of thousands of lines of code available to the Hyperledger Project. In April, IBM announced new cloud services based on the company’s Hyperledger code and Bluemix, IBM’s cloud Platform as a Service (PaaS).

“IBM is playing a central role in the development of a permissioned blockchain,” said Cuomo. “We’re a founding member of the Linux Foundation’s open-source Hyperledger Project, where we’re helping to build the foundational elements of business-ready blockchain architecture with a focus on privacy, confidentiality and auditability. We have joined consortia that are developing industry-specific blockchain implementations. And we’re pioneering the use of blockchain in our own operations.”

The post IBM: Government and Blockchain Sector Should Work Together to Enhance National Security appeared first on Bitcoin Magazine.


Text

Top Platform as a service (PaaS) cloud solutions

Choosing the best PaaS (Platform as a Service) cloud provider is difficult because the services offered by most providers seem similar. The following are among the top PaaS providers.

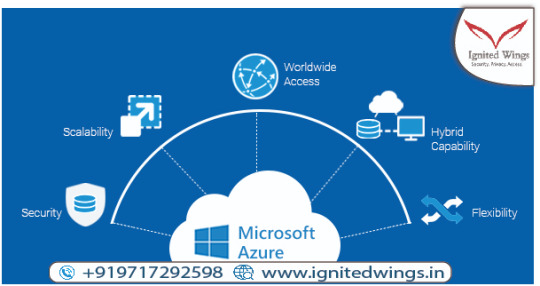

Microsoft Azure

The biggest benefit of Microsoft Azure is that it supports a wide range of operating systems, tools, languages, and frameworks, which makes the developer’s job a lot easier. Some of the available languages and options are Node.js, PHP, .NET, Python, Java and Ruby. Another significant benefit of using Azure is the availability of Visual Studio for creating and deploying applications. Visual Studio supports storage account diagnostics, local debugging of application code, and other troubleshooting features.

IBM Bluemix

The idea behind IBM Bluemix, IBM’s open-source PaaS based on Cloud Foundry, is to provide greater security and control to users. To extend the functionality of apps, users can rely on third-party and community services. Another benefit is that existing infrastructure can be seamlessly migrated to Bluemix. The languages available include Ruby on Rails, PHP, Python, and Ruby Sinatra. Bluemix can support other languages through buildpacks.

Amazon Web Services Elastic Beanstalk

Elastic Beanstalk is meant for deploying and scaling web applications developed with Ruby, .NET, PHP, Java, Python, Node.js, and Docker. The biggest benefit is that AWS frequently adds new tools, so the latest tools are always available to users. IaaS users are allowed to use PaaS to build apps, which blurs the demarcation between PaaS and IaaS.

Red Hat OpenShift

Red Hat offers a whole array of options for developers, including hosted, private, and open-source PaaS projects. That means Red Hat has an option to offer at whatever level the user is. One of the standout benefits is automated workflows, which help developers scale automatically in order to handle peak workloads.

Salesforce

Developers can build multi-tenant applications using PaaS options from Salesforce. Development is carried out using a language called Apex and nonstandard, purpose-built tools. Heroku originally supported only the Ruby programming language, but it now supports Scala, Clojure, Java, Node.js, Python and PHP. One of the drawbacks is that the number of add-ons varies and, similarly, load requirements vary too. This can cause cost fluctuations, making it difficult to plan in advance.

Training courses related to Microsoft Azure, Salesforce and Amazon Web Services are in great demand. Ignited Wings is well regarded as an option for those who want AWS, Salesforce and Microsoft Azure training in Noida.


Link

Machine Learning with Microsoft Azure

Machine learning and cloud computing are trending topics with many job opportunities. If you are interested in both, this course is for you: it lets you deploy your machine learning skills in the cloud. There are various cloud platforms, but only a few are popular, such as Azure, AWS, IBM Bluemix and GCP. Microsoft Azure is the cloud platform on which we are going to deploy machine learning skills.

In this course you will learn the following topics:

● Concept of Machine Learning
● Cognitive Services
● Difference between Artificial Intelligence and Machine Learning
● Simple chatbot integrated in HTML websites
● Echo Bot
● Facebook chatbot
● Question and Answer Maker
● LUIS (Language Understanding)
● Text Analytics
● Detecting Language
● Analyzing images and video
● Recognizing handwritten text
● Generating thumbnails
● Content Moderator
● Translate
● and many more things

ALL THE BEST!!

https://www.couponudemy.com/blog/machine-learning-with-microsoft-azure/


Text

The FinTech Future of IBM i

Written by Tamara R. Campbell-Teghillo

With fintech companies moving toward the creation of new transaction models for blockchain support of payment and lending transactions, IBM has launched new developer tools, software, and training programs targeted at financial services industry software developers. Version 7.3 of IBM i was released in April 2016. Requiring little to no onsite IT administration during standard operations, IBM i is making blockchain programming endeavors possible.

IBM Bluemix Garage developers are using the Bluemix PaaS (platform as a service) capabilities to test network solutions on the cloud designed to unlock the potential of blockchain. The Hyperledger Project, set up to advance blockchain technology as a cross-industry, enterprise-level, open standard for distributed ledgers, will be critical to the development of the latest fintech IaaS (infrastructure as a service) technologies as they emerge.

The collaboration of software developers on blockchain framework and platform projects stands to promote the transparency and interoperability of fintech IaaS. Providing the support required to bring blockchain technologies into adoption by mainstream commercial entities, Bluemix Garage developers are keen on IBM i database software programming as a turnkey solution for operating systems on Power Systems and PureSystems servers.

The recent release of fintech and blockchain courses by the IBM Learning Lab offers training and use cases for financial operations analysts and developers. Working in partnership with blockchain education programs and coding communities, IBM is engaging with the best cognitive developer talent to capture ideas for the next generation of APIs, artificial intelligence apps, and business process solutions from the IBM i COMMON community.


Text

sslnotify.me - yet another OpenSource Serverless MVP

After ~10 years of experience in managing servers at small and medium scale, and despite the fact that I still love doing sysadmin stuff and constantly try to learn new and better ways to approach and solve problems, there's one thing that I think I've learned very well: it's hard to get the operations part right, very hard.

Instead of dealing with not-so-interesting problems like patching critical security bugs or adding/removing users or troubleshooting obscure network connectivity issues, what if we could just cut to the chase and invest most of our time in what we as engineers are usually hired for, i.e. solving non-trivial problems?

TL;DR - try the service and/or check out the code

sslnotify.me is an experimental web service developed following the Serverless principles. You can inspect all the code needed to create the infrastructure, deploy the application, and of course the application itself on this GitHub repository.

Enter Serverless

The Serverless trend is the latest to join the list of paradigms which are supposedly going to bring ops bliss into our technical lives. Infrastructure as a Service didn't spare us from the need for operational work (arguably it sometimes made it even harder), and Platform as a Service wasn't able to address important concerns like technical lock-in and price optimization. Function as a Service is brand new and feels like a fresh way of approaching software development.

These are some of the points I find particularly beneficial in FaaS:

- very fast prototyping speed
- sensible reduction in management complexity
- low (mostly zero while developing) cost for certain usage patterns
- natural tendency to design highly efficient and scalable architectures

This in a nutshell seems to be the FaaS promise so far, and I'd like to find out how much of this is true and how much is just marketing.

Bootstrapping

The Net is full of excellent documentation for anyone who wants to get started with some new technology, but what I find most beneficial myself is to get my hands dirty with a real project, as I recently did with Go and OpenWhisk.

Scratching an itch also generally works well for me as a source of inspiration, so after issuing a Let's Encrypt certificate for this OpenWhisk web service I was toying with, I thought it would be nice to be alerted when the certificate is about to expire. To my surprise, a Google search for such a service resulted in a list of very crappy or overly complicated web sites which, in the best case, hide the only functionality I needed among a bloat of other services.

That's basically how sslnotify.me was born.

Choosing the stack

The purpose of the project was indeed to learn new tools and get some hands-on experience with FaaS, but to do so in the simplest possible way (i.e. following the KISS principle as much as possible). Keep in mind that many of the choices might not necessarily be the "best", the most efficient or the most elegant ones; they represent what looked like the simplest and most straightforward option to me as problems arose.

That said, this is the software stack taken from the project README:

- Chalice framework - to expose a simple REST API via AWS API Gateway
- Amazon Web Services:
  - Lambda (Python 2.7.10) - for almost everything else
  - DynamoDB (data persistency)
  - S3 (data backup)
  - SES (email notifications)
  - Route53 (DNS records)
  - CloudFront (delivery of frontend static files via https, redirect of http to https)
  - ACM (SSL certificate for both frontend and APIs)
  - CloudWatch Logs and Events (logging and reporting, trigger for batch jobs)
- Bootstrap + jQuery (JS frontend)
- Moto and Py.test (unit tests, work in progress)

I know, at first sight this list is quite a slap in the face of the beloved KISS principle, isn't it? I have to agree, but what might seem an overcomplication in terms of technologies is, in my opinion, mitigated by the almost-zero maintenance and management required to keep the service up and running. Let's dig a little more into the implementation details to find out.

Architecture

sslnotify.me is basically a daily monitoring and notification system: via a simple web page, the user can subscribe and unsubscribe to email notifications that are sent if and when a chosen SSL certificate is due to expire within a certain number of days, which the user can also specify.
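The "expires within N days" rule can be sketched in a few lines of Python. This is only an illustration of the rule, not the code behind sslnotify.me or sslexpired.info: the function names are made up for this example, and it assumes the expiry date arrives in the `notAfter` format that Python's `ssl` module reports for a peer certificate.

```python
import ssl
from datetime import datetime, timezone

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after, now):
    """Whole days between `now` and the certificate's notAfter timestamp.

    `not_after` uses the format of ssl.getpeercert()["notAfter"],
    e.g. "Jun 15 12:00:00 2017 GMT"; `now` is a timezone-aware datetime.
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch
    return int((expires_at - now.timestamp()) // SECONDS_PER_DAY)


def should_alert(not_after, now, threshold_days):
    """True when the certificate expires within `threshold_days` or is already expired."""
    return days_until_expiry(not_after, now) <= threshold_days


if __name__ == "__main__":
    today = datetime.now(timezone.utc)
    print(should_alert("Jun 15 12:00:00 2017 GMT", today, threshold_days=30))
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the rule easy to unit test.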

Under the hood, the JS frontend interacts with the backend available at the api.sslnotify.me HTTPS endpoint to register/unregister the user and to deliver the feedback form content, and it queries the sslexpired.info service when the user clicks the Check now button.

The actual SSL expiration check is done by this other service, which I previously developed with OpenWhisk and deployed on the IBM Bluemix platform so that it could be used independently as a validation backend, and to learn a bit more Golang along the way, but that's another story...

The service's core functionality can be seen as a simple daily cronjob (the cron lambda triggered by CloudWatch Events) which sets things in motion to run the checks and deliver notifications when an alert state is detected.

To give you a better idea of how it works, this is the sequence of events behind it:

- a CloudWatch Event invokes the cron lambda asynchronously (at 6:00 UTC every day)
- the cron lambda invokes the data lambda (blocking) to retrieve the list of users and domains to be checked
- the data lambda connects to DynamoDB, gets the content of the user table, and sends it back to the invoking lambda (cron)
- for each entry in the user table, the cron lambda asynchronously invokes one checker lambda, then writes an OK log to the related CloudWatch Logs stream and exits
- each checker lambda executes independently and concurrently, sending a GET request to the sslexpired.info endpoint to validate the domain; if no alert condition is present, it logs OK and exits, otherwise it asynchronously invokes a mailer lambda and exits
- any triggered mailer lambda runs concurrently, connects to the SES service to deliver the alert to the user via email, logs OK and exits
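The fan-out in this sequence can be sketched as plain Python with the platform calls injected, so the control flow is visible without any AWS account. Everything named here is hypothetical (the `invoke` wrapper, the function names "data", "checker" and "mailer", and the `domain`/`days` fields); it is not taken from the project's code. On AWS, such a wrapper could be built on boto3's Lambda client `invoke` call, using `InvocationType="RequestResponse"` for the blocking call and `InvocationType="Event"` for the asynchronous ones.

```python
def run_cron(invoke, log):
    """Sketch of the cron lambda: fetch the user table, start one checker per entry.

    `invoke(name, payload, blocking)` is a hypothetical wrapper around the
    platform's "call another function" primitive.
    """
    users = invoke("data", {"action": "list_users"}, blocking=True)
    for user in users:
        # Fire and forget: each checker runs independently of the others.
        invoke("checker", user, blocking=False)
    log("OK")
    return len(users)


def run_checker(user, is_expiring, invoke, log):
    """Sketch of one checker lambda: alert only when the certificate is expiring.

    `is_expiring(domain, days)` stands in for the GET request to the
    validation endpoint; its shape is an assumption made for this example.
    """
    if is_expiring(user["domain"], user["days"]):
        invoke("mailer", user, blocking=False)
        return "ALERT"
    log("OK")
    return "OK"
```

Keeping the blocking call limited to the single data lookup means the cron function's run time does not grow with the time each individual check takes.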

Besides the main cron logic, there are a couple of other simpler cron-like processes:

- a daily reporter lambda, collecting logged errors or exceptions and bounce/feedback emails delivered (if any) since the day before, and sending me an email with the content (if any)
- an hourly backup of the DynamoDB tables to a dedicated S3 bucket, implemented by the data lambda

Challenges

Everyone interested in Serverless seems to agree on one thing: being in its infancy, it is still not very mature, especially with regard to the tooling around it. Among other novelties, you'll have to understand how to do logging right, how to test properly, and so on. It's all true, but thankfully there's a lot going on on this front: frameworks, tutorials, hands-on guides and whitepapers are popping up at a mind-blowing rate, and I'm quite sure it's just a matter of time before the ecosystem is ready for prime time.

Here, though, I'd like to focus on something else which I found interesting and which, surprisingly to me, doesn't get much attention yet. Before starting to put the actual pieces together, I honestly underestimated the complexity that even a very, very simple service like this could hide, not so much in the actual coding required (that was the easy part) as from the infrastructure setup perspective. I actually think that what's in the Terraform file here is the interesting part of this project, and that it might be helpful to whoever is starting from scratch.

For example, take setting up SES properly: it is not trivial at all. You have to take care of DNS records to make DKIM work, set up proper bounce handling and so on, and I couldn't find much help just googling around. So I had to go the hard way, i.e. reading the official AWS documentation in detail, which unfortunately is sometimes not that exhaustive, especially if you plan to do things right, meaning hitting the APIs in a programmatic way instead of clicking around the web console (what a heresy!) as shown in almost every single page of the official docs.

One thing that really surprises me all the time is how broken the security defaults suggested there are. For example, when setting up a new lambda, the docs usually show something like this as the policy for the lambda role:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents",
            "logs:DescribeLogStreams"
          ],
          "Resource": [
            "arn:aws:logs:*:*:*"
          ]
        }
      ]
    }

I didn't check, but I think it's what you actually get when creating a new lambda from the web console. Mmmm... read-write permission to ANY log group and stream... seriously? Thankfully, with Terraform it's not that hard to set the lambdas' permissions right, i.e. to let them create and write only to their dedicated stream, and in practice it looks something like this:

    data "aws_iam_policy_document" "lambda-reporter-policy" {
      statement {
        actions = [
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ]
        resources = ["${aws_cloudwatch_log_group.lambda-reporter.arn}"]
      }
      [...]

Nice and clean, and definitely safer. For more details on how Terraform was used, you can find the whole setup here. It also takes care of creating the needed S3 buckets, especially the one for the frontend, which is actually delivered via CloudFront using our SSL certificate, and of linking the SSL certificate to the API backend too.
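To make the difference between the two log policies concrete, here is a small Python sketch that builds the narrow policy as a plain dictionary and flags `Resource` entries that are wildcarded at the region, account or resource level. It is an illustration only, not part of the project's Terraform setup; the helper names and the example ARN (with AWS's placeholder account ID) are assumptions, and the checker assumes `Resource` is given as a list.

```python
import json


def scoped_log_policy(log_group_arn):
    """Policy document allowing log writes to a single log group only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": [log_group_arn],
            }
        ],
    }


def overly_broad_resources(policy):
    """Return Resource entries whose region, account or resource part is "*"."""
    broad = []
    for statement in policy["Statement"]:
        for resource in statement["Resource"]:
            # ARN layout: arn:partition:service:region:account-id:resource
            parts = resource.split(":", 5)
            if resource == "*" or len(parts) < 6 or "*" in parts[3:6]:
                broad.append(resource)
    return broad


# Hypothetical ARN, for illustration only.
REPORTER_LOG_GROUP = "arn:aws:logs:eu-west-1:123456789012:log-group:/aws/lambda/reporter"

if __name__ == "__main__":
    print(json.dumps(scoped_log_policy(REPORTER_LOG_GROUP), indent=2))
```

A check like this could run in a test suite to catch a policy that drifts back toward `arn:aws:logs:*:*:*`.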

Unfortunately I couldn't reduce the 'point and click' work to zero: some was needed to validate ownership of the sslnotify.me domain (obviously a no-go for Terraform), and some to deal with the fact that CloudFront distributions take a very long time to be in place.

Nevertheless, I'm quite happy with the result, and I hope this can help whoever is going down this path to get up to speed quicker than I did. For example, I was also surprised not to find any tutorial on how to set up an HTTPS static website using cloud services: there are lots of HTTP-only examples, but with ACM and Let's Encrypt out in the wild and totally free of charge, there's really no excuse left for not serving web traffic over HTTPS in 2017.

What's missing

The more I write, the more I feel there's much more to say. For example, I haven't touched on the frontend part at all yet, mostly because I had almost zero experience with CSS, JS, CORS, sitemaps, and holy moly how much you need to know just to get started with something so simple... the web part is really hard for me, and thankfully I got great support from some very special friends while dealing with it.

Anyway, this was conceived as an MVP (minimum viable product) from the very beginning, so obviously there's huge room for improvement almost everywhere, especially on the frontend side of the fence. If you feel like giving some advice or, better, patching the code, please don't hold back and go ahead! I highly value any feedback I receive and I'll be more than happy to hear what you think about it.

Conclusion

I personally believe Serverless is here to stay, and it's fun to work with. The event-driven paradigm pushes you to think about how to develop highly efficient applications made up of short-lived processes, which are easy to scale (by the platform, without you lifting a finger) and naturally adapt to usage patterns, with the obvious benefits of cost reduction (you pay for the milliseconds your code is executed) and almost zero work needed to keep the infrastructure up and running. The platform under the hood now takes care of provisioning resources on demand and scaling out as many parallel lambda executions as needed to serve the actual user load; on top of this (or, better, at the bottom of it), built-in functionalities like DynamoDB TTL fields, S3 object lifecycles or CloudWatch log expirations spare us from writing the same kind of scripts, again and again, to deal with basic tech needs like purging old resources.
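As an illustration of the TTL point, here is a minimal sketch of how an item can carry its own expiry. DynamoDB TTL expects a numeric attribute holding a Unix timestamp in seconds; the attribute name `expires_at`, the helper name and the 30-day retention are assumptions made for this example, not taken from the project.

```python
import time
from typing import Optional


def with_ttl(item: dict, days: int, now: Optional[float] = None,
             attribute: str = "expires_at") -> dict:
    """Return a copy of item with an expiry timestamp in epoch seconds."""
    current = time.time() if now is None else now
    expiring = dict(item)  # leave the caller's dict untouched
    expiring[attribute] = int(current) + days * 86400
    return expiring


# Example: a record that the table may purge roughly 30 days from now.
record = with_ttl({"domain": "example.com"}, days=30)
```

Once TTL is enabled on the table for that attribute, the platform deletes expired items on its own some time after the timestamp passes, so no purge script is needed.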

That said, keeping it simple is in practice very hard to follow, and this case was no different. It's important not to get fooled into thinking FaaS is the panacea for every complexity pain, because it's not (and this is valid as general advice: you can replace FaaS with any cool tech out there and it still holds true). There are still many open questions and challenges waiting down the road. For example, setting up an environment in a safe and predictable way is not as easy as it may look at first sight, even if you're an experienced engineer; there are lots of small details you'll have to learn and figure out in order to make the whole setup rock solid, but it's definitely doable and worth the initial pain of going through pages and pages of documentation. The good part is that it's very easy to start experimenting with it, and the generous free tiers offered by all the cloud providers make it even easier to step in.

What's next

Speaking of free tiers, the amazing 300 USD Google Compute Cloud credit, together with the enthusiasm of some friends already using it, convinced me to play with this amazing toy they call Kubernetes. Let's see if I'll be able to come up with something interesting to share; given the amount of docs and tutorials out there I highly doubt it, but never say never. Till next time... try Serverless and have fun with it!

2 notes

Text

Let's start thinking about the future of artificial intelligence

In this week's news, it was impossible to miss the announcement by Axelle Lemaire, Secretary of State for Digital Affairs and, since last October, for Innovation, of the launch of the "France IA" mission to define a national artificial intelligence strategy for France. The initiative makes a lot of sense, given France's strengths in this field (mathematics, engineers, research...), but it comes late, less than four months before a new government... Let's hope it at least manages to complete its first stage, identifying the players, and to get these strengths talked about, even if it cannot yet federate those players "French Tech"-style, for example with a "France IA" label demonstrating the quality of French know-how. The fields of AI are very broad, but all of them are strategic for the evolution of industry and digital services. They mainly comprise:

machine learning

computer vision, in real time or through image/video analysis

intelligent robots (as opposed to merely programmed ones)

virtual assistants

language recognition (speech to speech)

gesture recognition

Speaking of artificial intelligence, the GAFA+MI platforms are no longer merely thinking about it. They are operational! Their organizations have recruited the best specialists, they have bought or invested in disruptive startups, and they are racing each other to find the business model that will dominate:

Google has developed a strategy of enriching its services with Google Now, which aims to be your personal assistant, but also with Google Maps and the debut of Allo, which brings its AI into instant messaging to become a virtual assistant.

Amazon surprised the whole industry with "Echo" (which GreenSI has already covered), a device in the home powered by Alexa's AI, moving this virtual assistance into everyday life rather than mobility (where the smartphone remains the number one device, until the car takes over).

Facebook

Apple, the pioneer with Siri, the virtual assistant on the iPhone, but which in this field too has struggled to renew itself and innovate for the past few years.

Microsoft, with a resolutely cloud-focused strategy since the arrival of Satya Nadella, in which the Cortana Intelligence Suite and the "Azure Cognitive Services" are made available to companies that develop applications so they can enrich them with AI.

IBM, which started earlier with Watson in 2011 (Watson became an IBM division in 2014), has a similar strategy but one aimed entirely at large enterprises, even though its services are available online on BlueMix, its "platform as a service" (PaaS).

GreenSI takes a more clear-cut position on Facebook, which seems somewhat behind these players in terms of operational achievements. "M" was announced in 2014 and a lab opened (in Paris) in 2015, yet its AI is present only through "bots" in Messenger, whose intelligence really lies in the ecosystem of startups such as Chatfuel that make them work. And this despite well-oiled communication at the end of last year, with the "intimate" video of Mark Zuckerberg talking to Jarvis, which runs his home.

But if we look back a few years, games on Facebook took off thanks to companies like Zynga, not through in-house development. Let's also remember that Facebook nearly missed the mobile shift in 2011. Its in-house application was not up to scratch. It was the acquisition of Instagram (2012) and then WhatsApp (2014) that gave it legitimacy and sent its share price soaring from mid-2013, once analysts were reassured that Facebook could capture the mobile advertising windfall. Facebook's strategy therefore seems, for now, much more focused on its ecosystem and on co-innovation. That could change when Facebook exploits the conversations of its 1.7 billion monthly active members (1 billion on mobile) to better understand humankind...

Among the other players, we can also mention those lying in ambush, who will join the leading pack opportunistically through an acquisition or new services, but who for now act through investment funds that are taking positions: Salesforce (Digital Genius, MetaMind), Samsung and, why not, the French group AXA, which through AXA Ventures is taking a very close interest in the subject in areas that could equip healthcare (Neura, BI Beats, Medlanes) or property rental (price methods, now Wheelhouse).

In terms of applications, virtual assistants and intelligent (and connected) objects, in B2C and B2B, will certainly drive usage in the short term. For virtual assistants, expect to see "chatbots" flourish on websites to improve the quality of online service, some of them running on Facebook. In terms of industries, healthcare attracts 15% of startup investment (according to CB Insights), closely followed by customer experience and data analysis. The B2C market will therefore be driven by healthcare, and B2B by the latter two.

Nor is AI anything new: its origin is often traced to Alan Turing's "Computing Machinery and Intelligence" in 1950, and the first medical diagnosis algorithms date from the 1970s (Mycin). Our former national gem Ilog, founded in 1987, was swallowed by IBM in 2009. So we are seeing worldwide effervescence, with investment rising since 2010. The warning lights of French strategy must therefore have been flashing for a long time when Axelle Lemaire walked past them and, despite an unfavorable context, finally decided to take up the challenge with Thierry Mandon (Research).

Yes, the "France of engineers" has a certain lead on the subject. For even if it flirts with the bottom of the PISA ranking in middle-school mathematics, its research and higher education are at the highest level in the world. The ambassadors of this excellence count among their ranks Cédric Villani, Fields Medal winner in 2010, whose official photo went around the world and who perfectly embodies both modernity and tradition in science.

Artificial intelligence is also a genuine societal issue that goes far beyond the digital field, since it aims to develop systems that imitate or replace humans. Its role in society will therefore certainly be debated, field by field and industry by industry, in the coming years. The algorithm is already in the spotlight of debate, with the very naive question of its neutrality, but AI goes further, since it hands control to a machine. AI will be accepted when, for example, it saves lives by speeding up decisions and reducing the risk of diagnostic error, but it will certainly be strongly challenged when the Michelin plants in Clermont-Ferrand have more robots than unionized humans...

This debate was launched this week at the World Economic Forum in Davos, where Satya Nadella took part in a discussion on artificial intelligence: "What benefits can society as a whole expect from AI?" The Microsoft CEO defended the idea that those who hold AI platforms should steer them toward tasks that help humans rather than eliminate jobs. Beyond Microsoft's very laudable intention, and the proven humanism of its leader, let's not be fooled, because this question is the one that separates a Microsoft from a Google in joining the very exclusive circle of the GAFAs... Microsoft or IBM, in B2B, sell their technology to companies that will decide how it is used. It is still hard to believe they will turn down a sale when a factory boss comes to them to equip his plants with their technology.

It is also likely that the investors who, since 2011, have been putting hundreds of millions into the development of startups are primarily targeting the uses that will best increase the value of their stake, without necessarily taking the future of humanity into account.

Google, Facebook, IBM, Microsoft and Amazon have also created an alliance to establish "good practices" in this field and to better inform the general public. And at the Frontiers conference last October (see the GreenSI post), the White House organized a debate and made commitments on AI and robots.

So yes, France faces another risk of falling behind: that of not taking part in the thinking on the ethics of AI, which is a major issue for the coming years.

That thinking will translate tomorrow into standard practices that could be imposed on everyone and, above all, might fail to limit the uses we would not want to see develop. For in this game the global platforms will certainly be the most influential, and it will then be too late to try to ban this or that platform from French, or European, soil.

AI is of course not an enemy but a formidable ally in the service of corporate strategy, and certainly of the development of new intelligent services for industry and the digital city. Above all, it is a skill that pairs very well with sensors and with the growing volume of data captured by information systems. For companies in 2017, GreenSI therefore recommends making this the year of reflection, to anticipate a technology that, beyond transforming usage, will change the relationship between humans and machines. Another way of rethinking one's competitive advantage.

from www.GreenSI.fr http://ift.tt/2jQmZxb

1 note

Text

Cloud Computing with IBM Bluemix

Explore the benefits of cloud computing with Bluemix, the PaaS offering from IBM Cloud. Learn about the available services under the Platform and Infrastructure categories, and what developers can do with each service. Instructor Harshit Srivastava runs down the compute, storage, and network and security offerings, as well as solutions for mobile, DevOps, artificial intelligence, serverless, blockchain, and continuous delivery. Then he provides practical examples of Bluemix in action: creating an AI-powered chatbot with Watson Assistant; building and deploying web apps with Cloud Foundry; creating a DevOps toolchain for continuous delivery; using block storage and containers; and leveraging boilerplate code. Plus, learn how to use tools such as Bluemix Analytics Engine and Streaming Analytics to gain deeper insights on everything from big data analysis to streaming data from social media.

Topics include:

Infrastructure with Bluemix

Platforms: DevOps, mobile, IoT, and more

Creating a chatbot with Watson Assistant

Deploying projects on Bluemix

Continuous delivery

Block storage

Containers

Boilerplate

Data and analytics

Duration: 2h 40m
Author: Harshit Srivastava
Level: Intermediate
Category: Developer
Subject Tags: Programming Languages
Software Tags: IBM Cloud
ID: 6e34e6a23ecf48c369ceac7f5d656500

Course Content: (Please leave comment if any problem occurs)

Welcome

The post Cloud Computing with IBM Bluemix appeared first on Lyndastreaming.

source https://www.lyndastreaming.com/cloud-computing-with-ibm-bluemix/?utm_source=rss&utm_medium=rss&utm_campaign=cloud-computing-with-ibm-bluemix

0 notes

Text

Azure Machine Learning Certification- AZ AI100

Machine learning and cloud computing are trending topics and offer a lot of job opportunities.

If you are interested in machine learning as well as cloud computing, then this course is for you. It will let you put your machine learning skills to work by deploying in the cloud. There are various cloud platforms, but only a few are popular, such as Azure, AWS, IBM Bluemix and GCP.

Microsoft Azure a cloud…

View On WordPress

0 notes

Photo

Online Certification in IoT, Cloud Computing and Edge AI By E&ICT Academy, IIT Guwahati

The Certification in IoT, Cloud Computing, and Edge AI is conceived and designed for freshers, undergraduates, and working professionals by industry experts from E&ICT Academy, IIT Guwahati.

Work on 10+ industry projects and a range of tools while mastering popular concepts such as IoT, Artificial Intelligence, Cloud Computing, Machine Learning with IoT, IoT Security, IoT Gateways, IoT Protocols, and event-driven applications.

Overview of the Program

The modules of this online cloud computing course cover topics such as IoT Architecture, IoT Protocols, IoT Security, Node-RED, Cloud Computing, AWS, IBM Bluemix, Machine Learning with IoT, Edge AI, Node.js, and Edge Impulse. It gives a solid framework for applying the relevant concepts to real-world problems.

● You will learn how to move an existing application to the cloud without disrupting its ongoing operation, as well as how to choose the right cloud deployment platforms or services to deliver cloud solutions according to the client's requirements.

● With online cloud computing, you will learn how to design enterprise-grade applications that can be scaled in the cloud as needed.

Certificate of Completion by E&ICT Academy, IIT Guwahati

After completing this program, you will receive an Online Internet of Things, Cloud Computing, and Edge AI certificate from E&ICT Academy, IIT Guwahati, as the Knowledge Partner. This certificate will validate your mastery of IoT, Cloud Computing, and Edge AI.

E&ICT Academy, IIT Guwahati has designed an industry-endorsed program for IoT, Cloud Computing, and Edge AI Engineers.

Program Benefits

● E&ICT Academy, IIT Guwahati certification

● Live Sessions for 1:1 Doubt Resolution

● Mentors provide code reviews and personalized feedback.

● Developed by top academic and industrial specialists

● Industry-relevant IoT, Cloud Computing, and Edge AI competencies

● Hands-on and Professional-Grade Projects

● Career Services at the IoT Academy

Fee: ₹ 20,000 + GST

(Avail scholarships up to 20%)

Duration - 80 Hrs of Instructor-led Training

Session - Online

Learn More: https://www.theiotacademy.co/online-certification-in-iot-cloud-computing-and-edge-ai-by-eict-academy-iit-guwahati

0 notes

Text

IBM Cloud Training Courses Kuala Lumpur, Malaysia

IBM CLOUD

AAA, OAuth, and OIDC in IBM DataPower V7.5

RM820.00

8 Hours

Developing Cloud-Native Applications for Bluemix

RM1,595.00

16 Hours

0 notes

Text

Master CHATBOT development w/o coding : IBM Watson Assistant

http://bit.ly/2DC6QWS Master CHATBOT development w/o coding : IBM Watson Assistant. Develop a chatbot using IBM Watson Assistant. Learn the Watson Text to Speech, Speech to Text and Visual Recognition services. In this course you will learn about building a chatbot from scratch. We will explore a range of Cognitive services offered by IBM on the Bluemix cloud and see how we can use the power of the Watson AI services on offer. We begin our journey by learning the fundamental concepts for building a chatbot: Intents, Entities and Dialog. They are vital components in developing a chatbot application. We will then implement those concepts and, one by one, build Intents, Entities and Dialog for our chatbot application. We will also learn to integrate our chatbot into a web or mobile application using the IBM Watson Assistant APIs, and to create intents, entities and dialogs programmatically. You will also learn the Watson Speech to Text and Text to Speech services, and how to create Visual Recognition models using the IBM Watson Visual Recognition Service. After completing the course you will be in a position to apply the concepts learned and build your very own chatbot application powered by IBM Watson.
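To show how the three concepts fit together, here is a toy sketch in plain Python. It is not the Watson Assistant API and uses no IBM service: the intents, entities, keywords and replies are all made up for illustration, and the keyword matching stands in for the trained classifier a real service would use.

```python
import re

# Hypothetical training data: each intent has keywords,
# each entity maps a value to its synonyms.
INTENTS = {
    "order_pizza": {"order", "pizza", "buy"},
    "opening_hours": {"open", "hours", "close"},
}
ENTITIES = {
    "size": {"small": {"small"}, "large": {"large", "big"}},
}


def tokenize(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def detect_intent(tokens: set):
    """Intent: what the user wants (most keyword overlap, or None)."""
    best = max(INTENTS, key=lambda name: len(INTENTS[name] & tokens))
    return best if INTENTS[best] & tokens else None


def detect_entities(tokens: set) -> dict:
    """Entities: the details that qualify the intent."""
    found = {}
    for entity, values in ENTITIES.items():
        for value, synonyms in values.items():
            if synonyms & tokens:
                found[entity] = value
    return found


def respond(text: str) -> str:
    """Dialog: choose a reply from the detected intent and entities."""
    tokens = tokenize(text)
    intent = detect_intent(tokens)
    entities = detect_entities(tokens)
    if intent == "order_pizza":
        if "size" in entities:
            return f"One {entities['size']} pizza coming up."
        return "Which size would you like?"
    if intent == "opening_hours":
        return "We are open from 11:00 to 22:00."
    return "Sorry, I did not understand that."
```

For example, `respond("I want to order a big pizza")` matches the `order_pizza` intent and the `size` entity, so the dialog step can answer directly, while `respond("order pizza")` lacks the entity and triggers a follow-up question.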

0 notes