#Like the API's and libraries exist already

Text

willing to bet it's an attempt to incentivise users to stay with the official app, instead of using some of the open source teledildonics frameworks that exist?

why does my butt plug have dailies

#One of our planned projects for post-surgery is a secure peer to peer to control system for friend groups to link into xD#Like the API's and libraries exist already#And we want an excuse to get some experience with networking security and gui design#Granted the expected user count will be 1 (us)#But it's a fun idea to be able to send someone a python script they can use to fuck us (literally)

16K notes

Note

Hello!

This might be a weird question, but since you work in IT, do you use AI tools like ChatGPT or Claude a lot, or not at all? I’ve been learning programming for a few months, and honestly, it’s super hard. I’m definitely not a genius, so I use AI a lot to help me figure out what I’m doing and generate code.

The problem is that other students kind of judge and look down on people who use these tools, and it’s making me feel bad about it. Should I stop using AI altogether? I just don’t know how to manage without help or researching all the time.

If you have any tips, they'd really help me out 🙏

Thanks for reading this!

Hey anon! Well, the thing is that the IT industry as a whole is pushing to integrate AI into its products, so industry-wise it has become sort of inevitable. That being said, because we are still early in the adoption of AI, I personally don't use it, as I don't have much of a need for it in my current projects. However, GitHub Copilot is a tool that a lot of my colleagues like to use to assist with their code, and IDEs like IntelliJ have also begun to integrate AI coding assistance into their software. Some of my colleagues do use ChatGPT to ask very obscure and intricate questions about some topics, less to get a direct answer and more to get a general idea of what they should be looking at, which will segue into my next point.

So, code generation. The thing is, before the advent of ChatGPT, there already existed plenty of tools that generate boilerplate templates for code. As a software engineer, you don't want to be wasting time reinventing the wheel, so we are already accustomed to using tools to generate code. Where your work actually comes in is writing the logic that is very specific to the way that your project functions. The way I see ChatGPT is that it's a bit smarter than the general libraries and APIs we already use to generate code, but it still doesn't take the entire scope of your project into consideration.

The point I am getting at here is that I don't necessarily think there is a problem in generating code, whether you are using AI or anything else. The real question is: do you understand what the code is doing, why it works, and how it will affect your project? Can you take what ChatGPT gives you and actually optimize it to the specifics of your project, or do you just inject it, see that it works, and go on your merry way without another thought as to why it worked? So, as a student, I would suggest trying not to use ChatGPT to generate code, because it defeats the purpose of learning to code.

Software engineering as a whole is tough! It is actually the nature of the beast that, at times, you will spend hours trying to solve a specific problem, and oftentimes the solution at the end is to add one line in one very specific place, which can feel anticlimactic after so much effort. However, what you get from all those hours of debugging, researching, and asking questions is a wealth of knowledge that you can add to your toolbox, and that is what is most important as a software developer. The IT landscape is rapidly changing; you might be expected to pick up a different programming language and a different framework within weeks, you might suddenly be saddled with a new project you've never seen in your life, or you might suddenly have something new like AI thrown at you and have to take it into consideration in your current work. You can only keep up with this sort of environment if you have a good understanding of programming fundamentals. So, try not to lean too much on things like ChatGPT, because it will get you through today but hurt you down the line (in tech interviews, for example).

6 notes

Text

Vogon Media Server v0.43a

So I decided I did want to deal with the PDF problem.

I didn't resolve the large memory footprint problem yet, but I think today's work puts me closer to a solution. I've written a new custom PDF reader on top of the PDF.js library from Mozilla. This reader is much simpler than the full one built into Firefox, but it uses the same underlying rendering libraries, so it should be relatively accurate (for desktop users). The big benefit is that it matches the existing CBZ reader's controls and behavior, unifying the application and bringing in additional convenience features like history tracking and an automatic link to the next issue/book when you reach the end of the current one.

The history tracking on the PDFs is a big win, in my opinion, as I have a lot of comics in PDF form that I can now throw into series and read.

Word of warning. PDFs bigger than a few MB can completely overload mobile browsers, resulting in poor rendering with completely missing elements, or a full browser crash. I'm betting the limit has a lot to do with your device's RAM, but I don't have enough familiarity to know for sure. These limitations are almost the same as the limits for the Mozilla written reader, so I think it has more to do with storing that much binary data in a browser session than how I am navigating around their APIs.

As for addressing that problem, I suspect that if I can get TCPDF to read the metadata (i.e. the number of pages), I can use the library to write single-page PDF files to memory and serve those instead of the full file. I'm already serving the PDF as a base64-encoded binary blob rather than from the file system directly. Single-page files don't solve the problem of in-document links, but since I provide the full Mozilla reader as a backup (accessible by clicking the new "Switch Reader" button), you can always swap readers if you need that.
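For a rough sense of what base64 serving costs: base64 turns every 3 bytes into 4 characters, so the encoded payload is about a third larger than the file before the browser even decodes it. The sketch below is only an illustration in Python (TCPDF is a PHP library, and none of this comes from the Vogon code base); the function names and the stand-in payload are made up for the example.

```python
import base64
import math


def encode_for_browser(pdf_bytes: bytes) -> str:
    """Return the base64 text a server could embed in a response body."""
    return base64.b64encode(pdf_bytes).decode("ascii")


def encoded_size(raw_size: int) -> int:
    """Length of the padded base64 text for raw_size bytes."""
    return 4 * math.ceil(raw_size / 3)


if __name__ == "__main__":
    # Stand-in payload: a PDF-like header plus zero bytes, not a real document.
    fake_pdf = b"%PDF-1.4\n" + bytes(3_000_000)
    text = encode_for_browser(fake_pdf)
    print(len(fake_pdf), len(text), round(len(text) / len(fake_pdf), 2))
```

If single-page files do become possible, the same overhead applies per page, but each payload would be a fraction of the whole document.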

As noted earlier today, the install script is still not working correctly so unless you have a working install already you'll have to wait until I get my VMs set up to be able to test and fix that. Good news on that front, the new media server builds much much faster than the old PI, so fast iteration should be very doable.

But, if you want to look at the code: https://github.com/stephentkennedy/vogon_media_server

6 notes

Text

Integrating Third-Party APIs in .NET Applications

In today’s software landscape, building a great app often means connecting it with services that already exist—like payment gateways, email platforms, or cloud storage. Instead of building every feature from scratch, developers can use third-party APIs to save time and deliver more powerful applications. If you're aiming to become a skilled .NET developer, learning how to integrate these APIs is a must—and enrolling at the Best DotNet Training Institute in Hyderabad, Kukatpally, KPHB is a great place to start.

Why Third-Party APIs Matter

Third-party APIs let developers tap into services built by other companies. For example, if you're adding payments to your app, using a service like Razorpay or Stripe means you don’t have to handle all the complexity of secure transactions yourself. Similarly, APIs from Google, Microsoft, or Facebook can help with everything from login systems to maps and analytics.

These tools don’t just save time—they help teams build better, more feature-rich applications.

.NET Makes API Integration Easy

One of the reasons developers love working with .NET is how well it handles API integration. Using built-in tools like HttpClient, you can make API calls, handle responses, and even deal with errors in a clean and structured way. Plus, with async programming support, these interactions won’t slow down your application.

There are also helpful libraries like RestSharp and features for handling JSON that make working with APIs even smoother.

Smart Tips for Successful Integration

When you're working with third-party APIs, keeping a few best practices in mind can make a big difference:

Keep Secrets Safe: Don’t hard-code API keys—use config files or environment variables instead.

Handle Errors Gracefully: Always check for errors and timeouts. APIs aren't perfect, so plan for the unexpected.

Be Aware of Limits: Many APIs have rate limits. Know them and design your app accordingly.

Use Dependency Injection: For tools like HttpClient, DI helps manage resources and keeps your code clean.

Log Everything: Keep logs of API responses—this helps with debugging and monitoring performance.
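The tips above are not specific to .NET, so here is a minimal sketch of three of them (keys from the environment, retrying on rate limits and timeouts, logging each failure) written in Python rather than C#. Everything named here is hypothetical: `PAYMENT_API_KEY`, `RateLimited`, and the `fetch` callable stand in for whatever your real client and provider use.

```python
import os
import time


class RateLimited(Exception):
    """Raised by a fetch function when the API answers with HTTP 429."""


def load_api_key(name: str = "PAYMENT_API_KEY", env=os.environ) -> str:
    """Read the key from the environment instead of hard-coding it."""
    key = env.get(name)
    if not key:
        raise RuntimeError(f"{name} is not set")
    return key


def call_with_retry(fetch, max_attempts: int = 4, base_delay: float = 0.5,
                    sleep=time.sleep):
    """Call fetch(); on a rate limit or timeout, back off exponentially and retry.

    The delay doubles each time (0.5s, 1s, 2s, ...). The last failure is re-raised.
    """
    for attempt in range(max_attempts):
        try:
            return fetch()
        except (RateLimited, TimeoutError) as exc:
            if attempt == max_attempts - 1:
                raise
            delay = base_delay * (2 ** attempt)
            # Log the failure so it can be found later when debugging.
            print(f"attempt {attempt + 1} failed ({exc!r}); retrying in {delay}s")
            sleep(delay)
```

Passing `sleep` and `env` as parameters keeps the helpers easy to test without waiting or touching real environment variables; in a .NET application the same roles would typically be played by configuration providers and an injected `HttpClient`.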

Real-World Examples

Here are just a few ways .NET developers use third-party APIs in real applications:

Adding Google Maps to show store locations

Sending automatic emails using SendGrid

Processing online payments through PayPal or Razorpay

Uploading and managing files on AWS S3 or Azure Blob Storage

Conclusion

Third-party APIs are a powerful way to level up your .NET applications. They save time, reduce complexity, and help you deliver smarter features faster. If you're ready to build real-world skills and become job-ready, check out Monopoly IT Solutions—we provide hands-on training that prepares you for success in today’s tech-driven world.

#best dotnet training in hyderabad#best dotnet training in kukatpally#best dotnet training in kphb#best .net full stack training

0 notes

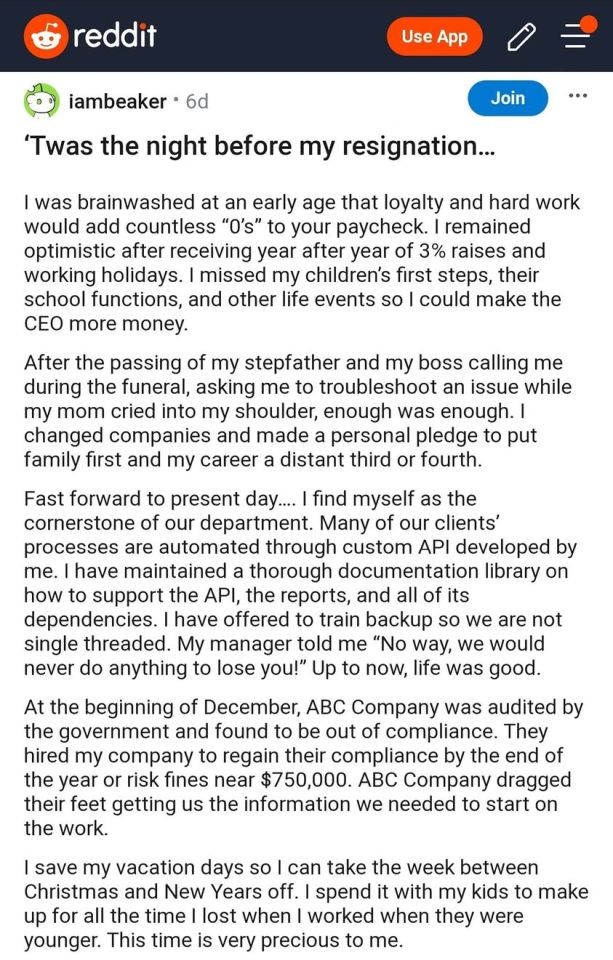

Photo

There are so, so, so many reasons why this incredibly fake story is incredibly fake. But I just keep reading the API technobabble and I can't stop laughing.

Mf out here bragging about how not only is their code unreadable and unmaintainable, but also their documentation is unreadable and undiscoverable. The problem that this creative writer has is that they need to be indispensable in this story, but unfortunately an indispensable programmer is uniquely gifted at making themself superfluous as quickly and efficiently as possible in as many contexts as possible.

The "nobody is familiar with Python" part is probably my favorite. Python is one of the easiest programming languages to learn. It's so easy that it's the language that engineers (real engineers like chemical engineers, biomedical engineers, material engineers, not software developers) use to help them automate things in their work. Anyone who is familiar with any other programming language can pick up Python in under an hour. You who are familiar with zero programming languages could probably pick up a decent amount of Python in a month. Try it! It is probably easier than you think.

More reasons why this fake story is fake:

This is posted on r/antiwork. It's one of the subreddits infamous for fake stories of bad bosses

It's a multipart series. One of the tropes of fake Reddit stories is the escalating updates. Creative writers hear an encore and they keep going back for more

It's a bit ambiguous how long this person has been in industry, but given the context of missing multiple children's school functions, I think 10+ years of experience is a cautious estimate. That is long enough in software for you to be a team lead, if not a people manager yourself. This person should be training junior devs. They explicitly say that they aren't

Software is incredibly collaborative. There's no way a manager would turn down an offer to train new devs on the existing tooling

Moreover, there's no way the code got pushed to production without several eyes on it. Most companies do either code reviews or pair programming or both. It makes no sense that zero other people understand what's going on with this code. Unless it's really buggy

The fact that someone tried to use it and it corrupted a CSV file (??) shows that it's actually really buggy. If the software was so good, anyone would be able to run it

That goes double for the documentation being so bad that nobody knows how to read it. The entire purpose of documentation is to explain how code works. You failed at your one job.

If the only documentation is something that's hard to find, that looks bad on OOP for two reasons: 1) Documentation is normally put inline next to the code precisely for the reason that it would be easy to find. Don't want to see what a nightmare their code with no inline docs looks like. 2) Their programming practices are so bad that their other documentation is hard to find. The program should have a file called README that either has all the documentation or tells you where to find all the documentation.

This violates NDA so bad

"Out of compliance" for what? Which regulation? Why do they have a deadline to regain compliance? They should already be suffering whatever fines or consequences or whatever for already being out of compliance. It would make more sense if they were at risk of being out of compliance if they didn't implement XYZ by January

There's a lot of weird wording here that indicates a lack of familiarity with software: "complex API", "documentation library", "single threaded". That's not how we use those terms

If you're a software developer for a company the size of Disney (ABC's parent) then what OOP asked for is your starting salary straight out of undergrad. Def not a raise for a senior engineer who's been in industry 10+ years. Def not more than their manager is making.

At a company that size, your direct manager has no ability to decide what the terms of your hiring agreement would be. Def not over text. It would need to go through HR and probably legal as well

"Legal checked the contract and there's a clause stating" lmao get outta here!

#Reddit creative writing exercise#codeblr#progblr#You can learn almost any programming language for free online if you first learn the essential software engineer skill of#googling what you need#Im hesitant to pick a specific python tutorial because I havent needed a python tutorial in over a decade#I dont know which of the modern ones are good#Freecodecamp seems to be fine for JavaScript#Similar but imo superior language to start with

247K notes

Text

Hugging Face partners with Groq for ultra-fast AI model inference

New Post has been published on https://thedigitalinsider.com/hugging-face-partners-with-groq-for-ultra-fast-ai-model-inference/

Hugging Face has added Groq to its AI model inference providers, bringing lightning-fast processing to the popular model hub.

Speed and efficiency have become increasingly crucial in AI development, with many organisations struggling to balance model performance against rising computational costs.

Rather than using traditional GPUs, Groq has designed chips purpose-built for language models. The company’s Language Processing Unit (LPU) is a specialised chip designed from the ground up to handle the unique computational patterns of language models.

Unlike conventional processors that struggle with the sequential nature of language tasks, Groq’s architecture embraces this characteristic. The result? Dramatically reduced response times and higher throughput for AI applications that need to process text quickly.

Developers can now access numerous popular open-source models through Groq’s infrastructure, including Meta’s Llama 4 and Qwen’s QwQ-32B. This breadth of model support ensures teams aren’t sacrificing capabilities for performance.

Users have multiple ways to incorporate Groq into their workflows, depending on their preferences and existing setups.

For those who already have a relationship with Groq, Hugging Face allows straightforward configuration of personal API keys within account settings. This approach directs requests straight to Groq’s infrastructure while maintaining the familiar Hugging Face interface.

Alternatively, users can opt for a more hands-off experience by letting Hugging Face handle the connection entirely, with charges appearing on their Hugging Face account rather than requiring separate billing relationships.

The integration works seamlessly with Hugging Face’s client libraries for both Python and JavaScript, though the technical details remain refreshingly simple. Even without diving into code, developers can specify Groq as their preferred provider with minimal configuration.
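As a hedged illustration of what "minimal configuration" can look like from the Python client: the sketch below assumes a recent `huggingface_hub` release whose `InferenceClient` accepts a `provider` argument, a token stored in an `HF_TOKEN` environment variable, and `Qwen/QwQ-32B` as the model id for the QwQ-32B model mentioned above. The helper names are invented for this example.

```python
import os


def build_chat_request(prompt: str, model: str = "Qwen/QwQ-32B") -> dict:
    """Keyword arguments for a chat-completion call; the model id is an assumption."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def ask_via_groq(prompt: str) -> str:
    """Send the prompt through Hugging Face with Groq selected as the provider."""
    # Imported lazily so build_chat_request works without the package installed.
    from huggingface_hub import InferenceClient

    # HF_TOKEN is an assumed variable name holding a Hugging Face access token.
    client = InferenceClient(provider="groq", api_key=os.environ["HF_TOKEN"])
    response = client.chat.completions.create(**build_chat_request(prompt))
    return response.choices[0].message.content
```

Calling `ask_via_groq("Summarise this paragraph")` needs network access and a valid token, so treat it as a starting point to check against the current client documentation.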

Customers using their own Groq API keys are billed directly through their existing Groq accounts. For those preferring the consolidated approach, Hugging Face passes through the standard provider rates without adding markup, though they note that revenue-sharing agreements may evolve in the future.

Hugging Face even offers a limited inference quota at no cost—though the company naturally encourages upgrading to PRO for those making regular use of these services.

This partnership between Hugging Face and Groq emerges against a backdrop of intensifying competition in AI infrastructure for model inference. As more organisations move from experimentation to production deployment of AI systems, the bottlenecks around inference processing have become increasingly apparent.

What we’re seeing is a natural evolution of the AI ecosystem. First came the race for bigger models, then came the rush to make them practical. Groq represents the latter—making existing models work faster rather than just building larger ones.

For businesses weighing AI deployment options, the addition of Groq to Hugging Face’s provider ecosystem offers another choice in the balance between performance requirements and operational costs.

The significance extends beyond technical considerations. Faster inference means more responsive applications, which translates to better user experiences across countless services now incorporating AI assistance.

Sectors particularly sensitive to response times (e.g. customer service, healthcare diagnostics, financial analysis) stand to benefit from improvements to AI infrastructure that reduce the lag between question and answer.

As AI continues its march into everyday applications, partnerships like this highlight how the technology ecosystem is evolving to address the practical limitations that have historically constrained real-time AI implementation.

(Photo by Michał Mancewicz)

#Accounts#ai#ai & big data expo#AI assistance#AI development#AI Infrastructure#ai model#AI systems#amp#Analysis#API#applications#approach#architecture#Artificial Intelligence#automation#Big Data#Building#california#chip#chips#Cloud#code#Companies#competition#comprehensive#conference#consolidated#consolidated approach#customer service

0 notes

Text

Hiring Solana Developers 101: A Beginner’s Guide

As blockchain adoption accelerates, enterprises are exploring high-performance platforms like Solana as the infrastructure for their decentralized applications. Solana attracts developers and entrepreneurs with its high speed and low fees. Development on Solana requires specialized expertise, however, so knowing where and how to hire Solana developers is an important factor in your project's success. Whether you are building a DeFi platform, an NFT marketplace, or a Web3 tool, this guide walks you through the whole hiring process.

Why Solana? A Quick Overview

Solana stands apart in the ecosystem due to its scalability and efficiency. Where other chains struggle with congestion and high gas fees, Solana advertises a theoretical throughput of around 65,000 transactions per second, achieved by combining Proof of History (PoH) with Sealevel, its parallel execution engine. This makes it well suited to real-time applications, gaming, financial protocols, and high-volume NFT drops.

Solana also has a rapidly growing ecosystem. Projects like Magic Eden, Phantom Wallet, and Marinade Finance demonstrate its potential. Knowing that Solana programs are written in Rust or C, and that its runtime environment differs markedly from other chains, will help you find developers who can take full advantage of these benefits.

When Do You Need a Solana Developer?

Hiring a Solana developer becomes essential when your use case requires decentralized logic or blockchain-based storage. Common situations include:

Building a dApp: Whether it is a game, a financial tool, or something else, any decentralized application on Solana needs a developer who is an expert in smart contract design.

NFT Projects: Developers can help you build, mint, and operate NFTs and marketplaces that run natively on Solana.

DeFi Protocols: From staking contracts to liquidity pools, you'll need specialists to write and deploy secure contracts for finance.

Data-Oriented Applications: Developers can build an analytics dashboard or an analytics tool that interacts with Solana nodes and RPC endpoints.

Porting from Ethereum: When moving an already existing app to Solana for lower fees, skilled developers will be the ones to tackle all compatibility and performance adjustments.

Top Skills to Look For in Solana Developers

Getting somebody who just "knows blockchain" is just not enough; for development on the Solana network, you will have to hire a developer with the following skill set:

Rust Programming Proficiency: Solana smart contracts, called on-chain programs, are mostly written in Rust, which has a steep learning curve but offers strong performance and safety. The developer should be able to demonstrate practical experience with the language.

Anchor Framework Proficiency: Anchor framework eases development by providing macros and eliminating boilerplate code, thus accelerating dApp delivery. A developer experienced with Anchor works faster and writes much cleaner, testable code.

Frontend and Wallet Integration: Expertise in linking Solana smart contracts to frontend apps through JavaScript or TypeScript libraries (e.g., Solana Web3.js) is essential. Integration with wallets such as Phantom, Solflare, or Backpack will also be needed for realistic testing.

Security Best Practices: With hacks and exploits happening so frequently in Web3, it is critical that your developer knows how to audit code, write secure logic, and use tools such as Solana Playground or Devnet for safe testing.

Solana Dev Tools: Knowledge of CLI tools, explorer APIs, RPC calls, and test validators makes for easier development and debugging.
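For readers who have not seen one, this is roughly what an "RPC call" in the list above looks like on the wire, and it can double as a quick screening question. The sketch builds a JSON-RPC `getBalance` request and converts the reply from lamports to SOL (1 SOL is 1,000,000,000 lamports). The helper names are invented for illustration, and nothing here sends a network request.

```python
import json

LAMPORTS_PER_SOL = 1_000_000_000
DEVNET_URL = "https://api.devnet.solana.com"  # public Devnet RPC endpoint


def build_get_balance_request(pubkey: str, request_id: int = 1) -> str:
    """Serialize a JSON-RPC 2.0 getBalance call for a base58 account address."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "getBalance",
        "params": [pubkey],
    })


def parse_balance_sol(response_text: str) -> float:
    """Read the lamport balance from a getBalance reply and convert it to SOL."""
    body = json.loads(response_text)
    if "error" in body:
        raise RuntimeError(body["error"].get("message", "RPC error"))
    return body["result"]["value"] / LAMPORTS_PER_SOL
```

A candidate comfortable with the tooling should be able to explain each field, POST the request body to `DEVNET_URL` with any HTTP client, and say why Devnet rather than Mainnet is the right place to try it.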

Where to Find Solana Developers

Finding the right developer takes effort, but with a strategic approach you can reach talented individuals or teams. Here is where to begin your search:

Freelance Platforms: Sites like Upwork, Toptal, and Fiverr Pro are places to find developers who may have Solana experience. Look for profiles that are verified; portfolios and reviews of clients are also important.

Community Hubs: Join the official Solana Discord, look for active developers on GitHub, and take part in discussions on Stack Overflow, Solana Stack Exchange, or Reddit r/solana.

Web3 Job Boards: Such niche sites as CryptoJobs, Web3.career, and Remote3 let you post jobs and connect with developers who are into blockchain tech already.

Hackathons & Meetups: Solana hosts hackathons nearly every year that attract top emerging talent. You can scout developers who have won or placed in these competitions.

Development Agencies: If you are short on time, partner with a Solana-focused agency that can provide full-stack dApp development, project managers, and comprehensive QA support.

How to Evaluate and Interview Candidates

A poor hiring decision can lead to delays, low-quality code, or in some cases security vulnerabilities. Here's how to screen top Solana talent:

Look at Their Portfolio: Ask for previous work on Solana or other dApps. Look for live demos, GitHub repositories, and smart contracts deployed on Mainnet or Devnet.

Assess Technical Fit: Ask about Solana's architecture, handling of state changes, cross-program invocations (CPIs), and optimizations for rent and compute units.

Give Them a Mini Project or Coding Assignment: Consider small tasks such as creating a simple token, setting up an SPL wallet, or coding a staking contract. In addition to code quality, evaluate their approach to solving problems.

Test Communication Skills: Working remotely? Documenting work, reporting bugs, and collaborating through Git, Slack, or Notion matter as much as technical skills.

Common Hiring Models

Each hiring model has advantages and disadvantages, depending on how complex your project is, how much you intend to budget, and how tight your production timeline is. Here is a quick breakdown:

Freelancer: A good fit for a short piece of work or MVP development. Flexibility and cost are in their favor, but freelancers may not be available for long-term commitments.

In-house Developers: Ideal for startups and growing companies with a continuous blockchain need. They can also get involved in your product strategy.

Agencies or Dev Shops: These tend to suit non-technical founders or companies needing full-scale development. Design, QA, project management, and maintenance might also be part of the package.

Look at what you want to achieve, and pick the one suited for your development roadmap and operational needs.

Conclusion

Solana is one of the more scalable and less expensive blockchains available, which makes it a strong choice for developers of demanding Web3 applications. Because of the complexity of the environment, a generalist developer is not enough; you need one versed in Solana's unique architecture, tools, and best practices.

If you want to develop a commercially viable dApp or ramp up an NFT platform, hiring the right talent is essential. With this guide, you now know how to evaluate skills fairly, vet candidates, and ultimately hire professional Solana developers who can take your vision from concept to reality with speed, security, and scalability.

0 notes

Text

How to Perform Technical SEO Audits on Websites Using Python Scripts

Conducting regular technical SEO audits is essential for maintaining a healthy website that ranks well on search engines. Traditionally, SEO audits rely on tools and manual checks, but with Python you can automate and customize your SEO analysis efficiently. In this guide, we’ll explore how to perform detailed technical SEO audits on websites using Python scripts, so that your website’s structure, performance, and crawlability are optimized for better ranking.

Why Use Python for Technical SEO Audits?

Python is a versatile and beginner-friendly programming language widely adopted in SEO for automation and data analysis. Here are key reasons why Python is excellent for technical SEO audits:

Automation: Run repetitive SEO checks quickly without manual interference.

Customization: Tailor scripts to target specific SEO factors unique to your site.

Integration: Combine with SEO APIs like Google Search Console, Ahrefs, or Screaming Frog data.

Scalability: Audit multiple pages or entire domains with minimal effort.

Key Components of a Technical SEO Audit Using Python

To automate a technical SEO audit, your Python script should check key website elements, including:

HTTP Status Codes: Identify broken links and server errors.

Page Titles & Meta Descriptions: Check length, existence, and uniqueness.

Heading Tags (H1, H2): Assess usage and structure.

Internal Linking: Analyze link structure and depth.

Page Load Speed: Monitor site performance metrics.

Mobile-Friendliness: Ensure responsive design and usability on all devices.

Robots.txt and sitemap.xml: Verify configurations and accessibility.

Step-by-Step Guide to Creating Your Python SEO Audit Script

1. Set Up Your Environment

Start by setting up Python and essential SEO libraries:

Install Python 3.x if not already installed.

Use pip to install libraries:

```
pip install requests beautifulsoup4 pandas matplotlib
```

2. Check HTTP Status Codes

Detect broken URLs and server issues using the requests module.

```python
import requests

def check_status(url):
    """Return the HTTP status code for url, or None if the request fails."""
    try:
        response = requests.get(url, timeout=5)
        return response.status_code
    except requests.RequestException:
        # Covers timeouts, connection errors, and invalid URLs
        return None
```

3. Extract and Analyze Meta Tags and Headings

Use BeautifulSoup to scrape and analyze page titles, meta descriptions, and header tags.

```python
from bs4 import BeautifulSoup

def analyze_meta_and_headings(html):
    """Extract the title, meta description, and H1/H2 counts from a page."""
    soup = BeautifulSoup(html, 'html.parser')
    # soup.title.string is None for an empty <title>, so fall back in that case too
    title = soup.title.string if soup.title and soup.title.string else 'No Title'
    meta_desc_tag = soup.find('meta', attrs={'name': 'description'})
    meta_desc = (meta_desc_tag.get('content', 'No Meta Description')
                 if meta_desc_tag else 'No Meta Description')
    h1_tags = [h.get_text(strip=True) for h in soup.find_all('h1')]
    h2_tags = [h.get_text(strip=True) for h in soup.find_all('h2')]
    return {
        'title': title,
        'meta_description': meta_desc,
        'h1_count': len(h1_tags),
        'h2_count': len(h2_tags),
    }
```

4. Crawl Internal Links

Gather internal links to analyze website structure and crawl depth.

```python
from urllib.parse import urljoin, urlparse
from bs4 import BeautifulSoup

def get_internal_links(html, base_url):
    """Return the set of absolute URLs on the page that share base_url's host."""
    soup = BeautifulSoup(html, 'html.parser')
    base_host = urlparse(base_url).netloc
    internal_links = set()
    for link in soup.find_all('a', href=True):
        # Resolve relative hrefs such as '/about' against the base URL
        absolute = urljoin(base_url, link['href'])
        if urlparse(absolute).netloc == base_host:
            internal_links.add(absolute)
    return internal_links
```

5. Save Results and Generate Report

Use pandas to organize audit findings and export as CSV or Excel for deeper analysis.

```python
import pandas as pd

data = [
    {
        'URL': 'https://example.com',
        'Status': 200,
        'Title': 'Example Domain',
        'Meta Description': 'No Meta Description',
        'H1 Count': 1,
        'H2 Count': 0,
    },
    # Add more page data here
]

df = pd.DataFrame(data)
df.to_csv('seo_audit_report.csv', index=False)
```

Example Python SEO Audit Workflow

Input the website’s root URL.

Fetch the homepage and run check_status().

Analyze meta data and headings.

Extract internal links.

Recursively crawl internal links with controlled depth.

Compile results into a spreadsheet or HTML report.

Benefits of Automating Technical SEO Audits with Python

Time Efficiency: Automate routine checks to save hours.

Accuracy: Reduce human errors in repetitive audits.

Scalability: Handle audits for large websites with thousands of URLs.

Insightful Data: Generate actionable reports tailored to your SEO strategy.

Practical Tips for Writing Effective SEO Audit Scripts

Use request headers with a user-agent to avoid blocking.

Implement error handling to handle timeouts and connection errors gracefully.

Respect robots.txt directives to avoid scanning restricted pages.

Throttle requests to prevent server overload and IP bans.

Regularly update scripts to accommodate website structure changes and new SEO standards.

Sample SEO Audit Results Overview

| Page URL | Status Code | Title Length | Meta Description Length | H1 Tags | Internal Links Count |
| --- | --- | --- | --- | --- | --- |
| https://example.com | 200 | 24 | 0 (missing) | 1 | 15 |
| https://example.com/about | 200 | 15 | 120 | 1 | 10 |
| https://example.com/contact | 404 | 0 | 0 | 0 | 5 |

Conclusion

Technical SEO audits are the backbone of website optimization. By leveraging Python scripts, SEO professionals and webmasters can streamline their audit process, uncover critical insights, and maintain a site that’s well-structured, fast, and crawl-friendly. Whether you are a beginner or an experienced developer, integrating Python into your SEO toolkit lets you perform faster, more accurate, and repeatable audits tailored to your site’s unique needs. Start simple, build your scripts piece by piece, and watch your website’s SEO health improve over time.
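The workflow step "recursively crawl internal links with controlled depth" and the robots.txt tip are described above but not shown in code. Here is one possible sketch, under a few assumptions: it uses the standard library's `html.parser` and `urllib.robotparser` instead of BeautifulSoup so it runs without extra installs, it takes a `fetch` callable (hypothetical, e.g. a thin wrapper around `requests.get`) so the crawl logic can be tried offline, and the `seo-audit-sketch` agent name is made up.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags (stdlib stand-in for BeautifulSoup)."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(root_url, fetch, robots_lines=(), max_depth=2, agent="seo-audit-sketch"):
    """Breadth-first crawl of same-host pages, at most max_depth links from the root.

    fetch(url) must return the page HTML as a string, or None on failure.
    robots_lines is the site's robots.txt content split into lines.
    Returns a list of (url, depth) pairs in visit order.
    """
    robots = RobotFileParser()
    robots.parse(list(robots_lines))
    host = urlparse(root_url).netloc
    seen = {root_url}
    queue = deque([(root_url, 0)])
    visited = []
    while queue:
        url, depth = queue.popleft()
        if not robots.can_fetch(agent, url):
            continue  # respect Disallow rules
        html = fetch(url)
        if html is None:
            continue
        visited.append((url, depth))
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            absolute = urljoin(url, href).split("#")[0]
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append((absolute, depth + 1))
    return visited
```

A breadth-first queue with a `seen` set avoids the infinite loops that naive recursion hits on sites whose pages link back to each other. To throttle requests, the `fetch` callable can sleep briefly before each download.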

0 notes

Text

How Contract Lifecycle Management Software Transforms Your Contract Workflow

Contracts are the backbone of every business relationship and managing them should not be a bottleneck. As organizations juggle multiple agreements across departments, manual methods often lead to delays, compliance risks, and missed opportunities. That’s where Contract Lifecycle Management Software steps in—not just as a solution but as a strategic enabler that streamlines and optimizes your entire contract workflow.

This article explores how Contract Lifecycle Management Software revolutionizes the way contracts are created, negotiated, approved, and stored, ensuring your business operates with agility and precision at every stage.

From Requisition to Signature—Simplified and Streamlined

Traditional contract workflows can be chaotic, with scattered documents, siloed communication, and inconsistent approval processes. With a centralized platform like SimpliAuthor by SimpliContract, organizations can go from requisition to signed, Simpli faster. This powerful tool brings structure and automation to every step, reducing cycle times and eliminating manual bottlenecks.

At the drafting stage, templates and clause libraries ensure legal consistency while reducing redundant work. Collaboration becomes seamless, as stakeholders can co-author, comment, and edit in real-time within the same system. Integrated workflows ensure that reviews and approvals are automated and traceable, so nothing slips through the cracks.

Centralized AI-Powered Contract Repository

One of the standout features of modern Contract Lifecycle Management Software is the AI-infused repository.

All contracts, past and present, are stored in a single centralized digital repository, making them easily accessible to authorized users. This eliminates the need to dig through emails or shared folders to locate specific agreements.

With the industry’s most capable AI search built into the platform, users can quickly find key terms, renewal dates, and obligations across thousands of documents. This empowers legal, procurement, and sales teams with actionable insights, improving visibility and reducing risk.

Integration with Your Tech Stack

Efficiency multiplies when your contract platform integrates seamlessly with your existing systems—CRM, ERP, e-signature tools, and more. The best Contract Management Vendors offer flexible APIs and pre-built integrations that ensure smooth data flow and reduce the need for duplicate entry.

SimpliAuthor, for example, extends your current tech ecosystem by syncing contracts and metadata across tools you already use. This allows teams to initiate contracts directly from Salesforce, finalize terms with DocuSign, and push updates to your ERP system—all without switching platforms.

Smarter Negotiations with AI Assistance

Negotiating contracts is a critical phase where delays often occur. Contract Lifecycle Management Software accelerates this phase by offering AI-powered suggestions, redline tracking, and automated compliance checks. Users can compare clauses against company standards, detect risky language, and ensure legal alignment—all in real-time.

This results in faster negotiations, fewer errors, and a better experience for both internal and external stakeholders. With a clear audit trail, compliance and accountability are always ensured.

Closing the Loop—Post-Signature Efficiency

The contract lifecycle doesn’t end at the signature. Managing post-signature obligations is equally vital. Effective Contract Management Vendors ensure that once contracts are signed, they are tracked for renewals, expirations, and compliance milestones. Automated alerts and dashboards help teams stay on top of key dates and deliverables, minimizing the risk of missed obligations or revenue leakage.

Conclusion

From the initial request to post-signature follow-up, Contract Lifecycle Management Software transforms the way contracts are handled—making them faster, more accurate, and far more efficient. With solutions like SimpliAuthor, businesses can unify their contract process, reduce risks, and empower every team involved in the contract journey.

Choosing the right contract management vendor is crucial to unlocking this transformation. Look for platforms that offer AI-driven features, seamless integration, and end-to-end lifecycle support. When implemented correctly, contract management becomes not just a back-office function, but a strategic driver of business performance.

0 notes

Text

Flutter vs. React Native in 2025: Which Should You Choose?

In the evolving world of mobile application development services, two frameworks continue to dominate developer discussions in 2025 — Flutter and React Native. Both platforms offer cross-platform capabilities, robust community support, and fast development cycles, but deciding which to use depends on your project goals, team expertise, and long-term vision.

Let’s break down the pros, cons, and most recent updates to help you make the right decision.

🚀 What’s New in 2025?

🔹 Flutter in 2025

Flutter, developed by Google, has seen significant upgrades this year. Its support for multiplatform apps (web, mobile, desktop) is more stable than ever, with recent Flutter releases emphasizing performance improvements and smaller build sizes.

Strengths:

Native-like performance due to Dart compilation

Single codebase for Android, iOS, Web, and Desktop

Rich UI widgets with high customization

Strong support for Material and Cupertino design

What’s new in 2025:

Enhanced DevTools for performance monitoring

Integrated AI components via Google's ML APIs

Faster cold-start performance on mobile apps

🔹 React Native in 2025

Backed by Meta (Facebook), React Native remains a strong contender thanks to its large community and JavaScript ecosystem. In 2025, React Native has tightened integration with TypeScript, and modular architecture has made apps more maintainable and scalable.

Strengths:

Hot reloading and fast iteration cycles

Large plugin ecosystem

Shared logic with web apps using React

Active open-source support

What’s new in 2025:

TurboModules fully implemented

Fabric Renderer is default, boosting UI speed

Easier integration with native code via JSI (JavaScript Interface)

📊 Performance & Stability

Flutter often delivers smoother animations and faster app startup because Dart is compiled ahead of time to native code and the UI is drawn by Flutter's own rendering engine rather than through a JavaScript bridge.

React Native has narrowed the performance gap significantly with Fabric and TurboModules, but complex UIs may still perform better in Flutter.

🛠️ Development Speed & Ecosystem

Flutter provides a cohesive, “batteries-included” approach with everything bundled, which can reduce time spent finding third-party libraries.

React Native leverages the enormous JavaScript and React ecosystem, making it ideal for teams already using React for web development.

🎨 UI and Design Flexibility

Flutter has a clear edge when it comes to UI. Its widget-based architecture allows for highly customizable designs that look consistent across platforms. React Native relies more on native components, which can lead to slight inconsistencies in appearance between iOS and Android.

🤝 Community & Hiring Talent

React Native has a larger pool of developers due to its ties with JavaScript.

Flutter is catching up fast, especially among startups and companies focused on design-forward apps.

✅ When to Choose Flutter

You need a high-performance app with complex animations.

You want a unified experience across mobile, web, and desktop.

Your team is comfortable learning Dart or is focused on Google’s ecosystem.

✅ When to Choose React Native

Your team already uses React and JavaScript.

You need to rapidly prototype and iterate with existing web talent.

You're integrating heavily with native modules or third-party services.

💼 Final Thoughts

Both frameworks have matured immensely by 2025. The right choice depends on your specific project needs, existing team expertise, and your product roadmap. Whether you're building a lightweight MVP or a performance-intensive product, either tool can serve you well — with the right planning.

If you're unsure where to start, partnering with a reliable mobile application development company can help you assess your needs and build a roadmap that aligns with your business goals.

#mobile app development company#mobile app development services#mobile application development company#mobile application development services#mobile application services#mobile application company

0 notes

Text

Real-World Projects You’ll Work on During a Data Analytics Course in Delhi

If you're planning to start your career in data analytics, joining a good training course is the first step. But not just any course—you need one that helps you build real skills by working on real-world projects. That’s exactly what Uncodemy offers in its Data Analytics Course in Delhi. In this article, we’ll walk you through the types of real-world projects you’ll work on during your journey at Uncodemy. These projects will not only make your learning exciting but will also prepare you for real jobs in the industry.

Why Real-World Projects Matter in a Data Analytics Course

Before diving into the types of projects, let’s understand why they are so important:

Hands-on experience: You learn best by doing. Real projects help you understand how to apply what you've learned in real business situations.

Job-ready skills: Employers prefer candidates who have already worked on real-world problems.

Portfolio building: Projects give you something to show on your resume or during interviews.

Confidence boost: When you complete real-world tasks, you feel more confident about your skills.

Now, let’s look at some of the exciting and useful real-world projects you’ll work on during the Data Analytics Course by Uncodemy in Delhi.

1. Sales Analysis for a Retail Store

Project Overview:

You’ll work on sales data from a retail company. The goal is to find out which products sell the most, which days have the highest sales, and what customer patterns exist.

Tools Used:

Excel

Python

Tableau or Power BI

What You’ll Learn:

Data cleaning and preparation

Creating dashboards and visual reports

Analyzing trends and making suggestions to improve sales

This project helps you understand how businesses make decisions based on sales data.

2. Customer Segmentation Using Clustering

Project Overview:

In this project, you’ll work on customer data from an e-commerce company. You will use clustering techniques to group customers based on their behavior.

Tools Used:

Python (using libraries like Pandas, NumPy, and Scikit-learn)

Jupyter Notebook

What You’ll Learn:

K-means clustering

Data visualization

Feature selection and preprocessing

This project is important because customer segmentation helps companies create better marketing strategies.

3. Analyzing Social Media Data

Project Overview:

You’ll collect and analyze social media data (like tweets or Facebook posts) to understand public opinion about a brand or product.

Tools Used:

Python

Natural Language Processing (NLP)

APIs like Twitter API

What You’ll Learn:

Sentiment analysis

Text data cleaning

Visualization of public opinion

Social media is a goldmine of information, and companies love hiring people who can understand it.

4. HR Analytics – Employee Retention

Project Overview:

You’ll analyze HR data to find out why employees are leaving a company and what can be done to reduce attrition.

Tools Used:

Excel

Python

Tableau

What You’ll Learn:

Identifying key reasons for employee turnover

Building dashboards to present findings

Making data-driven suggestions for HR improvement

This project shows how data analytics can help improve employee satisfaction and save company costs.

5. Credit Card Fraud Detection

Project Overview:

You will work on a dataset containing credit card transactions. Your task is to detect unusual patterns that may indicate fraud.

Tools Used:

Python

Machine Learning (Logistic Regression, Decision Trees)

Jupyter Notebook

What You’ll Learn:

Data imbalance techniques

Model training and evaluation

Real-time fraud detection logic

This project is not only interesting but also very important in industries like banking and finance.

6. Movie Recommendation System

Project Overview:

You will build a basic recommendation system like the ones used by Netflix or Amazon. The goal is to suggest movies to users based on their past viewing habits.

Tools Used:

Python

Pandas

Collaborative Filtering techniques

What You’ll Learn:

Recommender systems

User-item interaction matrix

Personalization in analytics

This type of project is perfect for learning how modern platforms use data to improve customer experience.
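To make the "user-item interaction matrix" and collaborative filtering ideas a little more concrete, here is a small sketch in plain Python. The ratings, user names, and function names are invented for illustration and are not course material; a real project would more likely use Pandas and a much larger dataset.

```python
from math import sqrt

# Hypothetical user-item interaction matrix: user -> {movie: rating on a 1-5 scale}
ratings = {
    "asha": {"Inception": 5, "Interstellar": 4, "Up": 1},
    "ravi": {"Inception": 4, "Interstellar": 5, "Coco": 2},
    "meera": {"Up": 5, "Coco": 4, "Inception": 1, "Soul": 5},
}


def cosine_similarity(a, b):
    """Cosine similarity between two users' rating vectors (unrated movies count as 0)."""
    dot = sum(a[m] * b[m] for m in set(a) & set(b))
    norm_a = sqrt(sum(r * r for r in a.values()))
    norm_b = sqrt(sum(r * r for r in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=3):
    """User-based collaborative filtering: score unseen movies by similarity-weighted ratings."""
    mine = ratings[user]
    totals, weights = {}, {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(mine, theirs)
        if sim <= 0:
            continue  # ignore users with nothing in common
        for movie, rating in theirs.items():
            if movie in mine:
                continue  # only recommend movies the user has not rated
            totals[movie] = totals.get(movie, 0.0) + sim * rating
            weights[movie] = weights.get(movie, 0.0) + sim
    scored = [(movie, totals[movie] / weights[movie]) for movie in totals]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


suggestions = recommend("asha", ratings)
```

Each suggestion is a (movie, predicted rating) pair, highest prediction first. With this toy data the prediction for a movie rated by only one neighbour simply equals that neighbour's rating, which is one reason real systems need many more users per item.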

7. Web Analytics for a Blog or Website

Project Overview:

You’ll analyze traffic data from a blog or website using Google Analytics or simulated data.

Tools Used:

Google Analytics

Excel or Python

Power BI

What You’ll Learn:

Page views, bounce rate, and traffic sources

Visitor behavior tracking

Strategies to increase user engagement

This project helps you understand how websites make data-driven changes to improve their reach.

8. Healthcare Data Analysis

Project Overview:

Work on patient or hospital data to find trends in diseases, treatments, or hospital visits.

Tools Used:

Excel

Python

Tableau

What You’ll Learn:

Understanding healthcare KPIs

Data filtering and sorting

Creating actionable insights for hospitals

Healthcare is one of the fastest-growing fields for data analysts.

9. Weather Data Analysis

Project Overview:

You will analyze historical weather data to understand patterns, extreme events, or seasonal changes.

Tools Used:

Python

APIs for weather data

Visualization tools

What You’ll Learn:

Time-series analysis

Data extraction from APIs

Report generation

This project is fun and teaches you how data works with time-based information.

10. Stock Market Data Analysis

Project Overview:

Work on historical stock market data to find trends, make predictions, or compare different companies.

Tools Used:

Python (Pandas, Matplotlib)

Yahoo Finance API

What You’ll Learn:

Time-series forecasting

Data visualization

Financial KPIs

This project is ideal for those interested in finance or stock trading.

11. Real Estate Price Prediction

Project Overview:

You’ll build a model to predict property prices based on location, size, number of rooms, and other factors.

Tools Used:

Python

Machine Learning (Linear Regression, Random Forest)

Jupyter Notebook

What You’ll Learn:

Regression techniques

Data preparation

Model accuracy testing

This kind of project shows how analytics is used in real estate and housing markets.

How These Projects Help You Get Hired

These real-world projects are more than just assignments. They are stepping stones to your career. When you complete them, you will:

Be more confident in job interviews

Have a strong portfolio to show hiring managers

Be able to answer practical questions during technical interviews

Show proof of real-world experience on your resume

At Uncodemy, we make sure that every student gets to work on real projects so they’re not just job seekers—they’re job-ready professionals.

Why Choose Uncodemy for Your Data Analytics Course in Delhi?

Here are a few reasons why Uncodemy stands out:

Industry Experts: Learn from professionals who have real-world experience.

100% Practical Learning: Less theory, more hands-on practice.

Live Projects: Not just dummy data, but real or simulated projects.

Job Assistance: Get help with resume building, mock interviews, and job placements.

Flexible Batches: Weekend, weekday, and online options available.

Final Thoughts

Learning data analytics is one of the best career moves you can make today. But just learning theory is not enough. You need practical, real-world experience, and that’s exactly what Uncodemy offers through its Data Analytics Course in Delhi.

By working on real projects like sales analysis, customer segmentation, fraud detection, and stock market predictions, you’ll become a skilled analyst ready to face real industry challenges. You won’t just be learning—you’ll be doing.

If you’re ready to start your journey toward becoming a data analyst, join Uncodemy today and get real skills that get real jobs.

0 notes

Text

Using an LLM API As an Intelligent Virtual Assistant for Python Development

The world of Python development is constantly evolving, demanding efficiency, creativity, and a knack for problem-solving. What if you had a tireless, knowledgeable assistant right at your fingertips, ready to answer your questions, generate code snippets, and even help you debug? Enter the era of Large Language Model (LLM) APIs, powerful tools that can transform your development workflow into a more intelligent and productive experience.

Forget endless Google searches and sifting through Stack Overflow threads. Imagine having an AI companion that understands your coding context and provides relevant, insightful assistance in real-time. This isn't science fiction; it's the potential of integrating LLM APIs into your Python development process.

Beyond Autocomplete: What an LLM API Can Do for You:

Think of an LLM API not just as a sophisticated autocomplete, but as a multifaceted intelligent virtual assistant capable of:

Code Generation on Demand: Need a function to perform a specific task? Describe it clearly, and the LLM can generate Python code snippets, saving you valuable time and effort.

Example Prompt: "Write a Python function that takes a list of dictionaries and sorts them by the 'age' key."

Intelligent Code Completion: Going beyond basic syntax suggestions, LLMs can understand the context of your code and suggest relevant variable names, function calls, and even entire code blocks.

Answering Coding Questions Instantly: Stuck on a syntax error or unsure how to implement a particular library? Ask the LLM directly, and it can provide explanations, examples, and even point you to relevant documentation.

Example Prompt: "What is the difference between map() and apply() in pandas?"

Explaining Complex Code: Faced with legacy code or a library you're unfamiliar with? Paste the code snippet, and the LLM can provide a clear and concise explanation of its functionality.

Example Prompt: "Explain what this Python code does: [x**2 for x in range(10) if x % 2 == 0]"

Refactoring and Code Improvement Suggestions: Want to make your code more readable, efficient, or Pythonic? The LLM can analyze your code and suggest improvements.

Example Prompt: "Refactor this Python code to make it more readable: [your code snippet]"

Generating Docstrings and Comments: Good documentation is crucial. The LLM can automatically generate docstrings for your functions and suggest relevant comments to explain complex logic.

Example Prompt: "Write a docstring for this Python function: [your function definition]"

Debugging Assistance: Encountering an error? Paste the traceback, and the LLM can help you understand the error message, identify potential causes, and suggest solutions.

Example Prompt: "I'm getting a TypeError: 'int' object is not iterable. What could be the issue in this code: [your code snippet and traceback]"

Learning New Libraries and Frameworks: Trying to grasp the basics of a new library like Flask or Django? Ask the LLM for explanations, examples, and common use cases.

Example Prompt: "Explain the basic routing mechanism in Flask with a simple example."
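For a sense of scale, two of the example prompts above (the sort-by-age function and the list comprehension) correspond to only a few lines of Python. The snippet below is a hand-written sketch, not actual LLM output; the function name sort_by_age and the sample data are assumptions made for illustration.

```python
def sort_by_age(people):
    """Return a new list of dicts ordered by each dict's 'age' value."""
    return sorted(people, key=lambda person: person["age"])


people = [{"name": "Ana", "age": 31}, {"name": "Bo", "age": 24}]
by_age = sort_by_age(people)

# The comprehension from the "explain this code" prompt...
squares = [x**2 for x in range(10) if x % 2 == 0]

# ...and the plain loop it abbreviates.
expanded = []
for x in range(10):
    if x % 2 == 0:  # keep even numbers only
        expanded.append(x**2)

print(by_age)
print(squares)  # [0, 4, 16, 36, 64]
```

Whatever an assistant returns for prompts like these, running it against a small input of your own, as done here, is a quick way to confirm it does what was asked.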

Integrating an LLM API into Your Workflow:

Several ways exist to leverage an LLM API for Python development:

Direct API Calls: You can directly interact with the API using Python's requests library or dedicated client libraries provided by the LLM service. This allows for highly customized integrations within your scripts and tools.

IDE Extensions and Plugins: Expect to see more IDE extensions and plugins that seamlessly integrate LLM capabilities directly into your coding environment, providing real-time assistance as you type.

Dedicated Code Editors and Platforms: Some emerging code editors and online platforms are already embedding LLM features as core functionalities.

Command-Line Tools: Command-line interfaces that wrap LLM APIs can provide quick and easy access to AI assistance without leaving your terminal.
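As a rough illustration of the "Direct API Calls" option, the sketch below builds and sends a JSON request to an LLM service. Everything service-specific is an assumption: the endpoint URL, the payload fields (prompt, max_tokens), and the bearer-token header are placeholders, so check your provider's documentation for the real values. It uses the standard library's urllib to stay dependency-free; the requests library mentioned above would work similarly.

```python
import json
import urllib.request

# Hypothetical endpoint and payload shape; substitute your provider's documented values.
API_URL = "https://llm.example.com/v1/complete"


def build_request(prompt, api_key, url=API_URL):
    """Build (but do not send) a JSON POST request carrying the prompt."""
    body = json.dumps({"prompt": prompt, "max_tokens": 256}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def ask(prompt, api_key, timeout=30):
    """Send the request and return the decoded JSON response."""
    request = build_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Building the request needs no network access, so it is easy to inspect or unit-test.
request = build_request("Explain list comprehensions in one sentence.", "YOUR_API_KEY")
```

Keeping request construction separate from sending makes the integration testable offline and keeps the API key handling in one place.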

The Benefits of Having an AI Assistant:

Increased Productivity: Generate code faster, debug more efficiently, and spend less time on repetitive tasks.

Faster Learning Curve: Quickly grasp new concepts, libraries, and frameworks with instant explanations and examples.

Improved Code Quality: Receive suggestions for better coding practices, refactoring, and documentation.

Reduced Errors: Catch potential bugs and syntax errors earlier in the development process.

Enhanced Creativity: Brainstorm ideas and explore different approaches with an AI partner.

The Future is Intelligent:

While LLM APIs are not a replacement for skilled Python developers, they represent a powerful augmentation of our abilities. By embracing these intelligent virtual assistants, we can streamline our workflows, focus on higher-level problem-solving, and ultimately become more effective and innovative Python developers. The future of coding is collaborative, with humans and AI working together to build amazing things. So, explore the available LLM APIs, experiment with their capabilities, and unlock a new level of intelligence in your Python development journey.

1 note


Text

Understanding Integration Testing: A Key Step Towards Robust Software

In the fast-paced world of software development, ensuring that individual components work together seamlessly is critical. Integration testing is the stage in the software testing process where multiple modules are combined and tested as a group. This blog will explore the importance of integration testing, common approaches, and how tools like Bugasura can streamline the process.

What is Integration Testing?

Integration testing verifies that different software modules, which have already been unit-tested individually, work collectively as intended. It aims to expose issues arising from the interactions between integrated units, such as data flow errors, interface mismatches, or incorrect behavior across module boundaries.

Why is Integration Testing Important?

Detects Interface Defects: Even if individual components function correctly in isolation, their interactions can introduce bugs. Integration testing identifies such defects early.

Ensures Module Compatibility: It ensures that newly developed modules align with existing ones, preventing compatibility issues during system deployment.

Improves System Reliability: By validating data exchange and functional cooperation, integration testing enhances system robustness and performance.

Reduces Post-Deployment Failures: Catching integration issues early minimizes the risk of system crashes or malfunctions in production environments.

Common Approaches to Integration Testing

Several strategies exist for conducting integration testing, depending on the project complexity and development methodology:

Big Bang Approach:

All modules are integrated simultaneously and tested as a whole.

Suitable for small systems but can delay defect detection in larger systems.

Incremental Testing:

Modules are integrated and tested step-by-step.

Subcategories include:

Top-Down Approach: Starts testing from top-level modules down to lower-level ones using stubs.

Bottom-Up Approach: Begins from lower-level modules upward, employing drivers to simulate higher-level modules.

Sandwich Testing: A hybrid approach combining top-down and bottom-up testing.

Continuous Integration (CI):

Automated integration testing is performed frequently, often triggered by code changes.

Popular in Agile and DevOps environments.
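The top-down approach described above can be illustrated with a small sketch in which a stub stands in for a lower-level module that has not been integrated yet. The OrderService class and its payment gateway are hypothetical names invented for this example; the stub is built with Python's standard unittest.mock.

```python
from unittest.mock import Mock


class OrderService:
    """Top-level module under test; depends on a lower-level payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def checkout(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        receipt = self.gateway.charge(amount)
        return {"status": "paid" if receipt["ok"] else "declined", "amount": amount}


def test_checkout_with_stubbed_gateway():
    # The stub replaces the not-yet-integrated lower-level module.
    gateway_stub = Mock()
    gateway_stub.charge.return_value = {"ok": True}

    result = OrderService(gateway_stub).checkout(25)

    assert result == {"status": "paid", "amount": 25}
    # Verify the interaction across the module boundary, not just the return value.
    gateway_stub.charge.assert_called_once_with(25)


test_checkout_with_stubbed_gateway()
```

In a bottom-up setup the roles flip: the real gateway would be exercised by a small driver script that plays the part of OrderService until the higher-level module is ready.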

Challenges in Integration Testing

Complex Dependencies: As systems grow, module dependencies become intricate, complicating integration scenarios.

Third-Party Components: Integrating external libraries or APIs can introduce unpredictable issues.

Data Consistency: Ensuring consistent data flow across modules is crucial but often challenging.

Test Environment Setup: Creating a realistic testing environment that mirrors production can be time-consuming.

How Bugasura Enhances Integration Testing

Bugasura, a modern bug-tracking and testing tool, can simplify and optimize the integration testing process:

Real-Time Bug Reporting: Instantly capture and document issues arising during integration testing.

Collaborative Testing: Enable seamless communication between developers and testers to resolve integration defects faster.

Visual Testing Workflow: Gain clarity on module interactions and dependencies, aiding in pinpointing integration breakdowns.

Integrations with CI/CD Pipelines: Align Bugasura with your development workflow to detect and manage integration issues continuously.

Final Thoughts

Integration testing is indispensable for ensuring that software modules work cohesively. By implementing structured testing approaches and leveraging tools like Bugasura, teams can detect integration flaws early, enhance system stability, and accelerate software delivery. As you refine your testing strategy, make integration testing a cornerstone of your quality assurance process. https://bugasura.io/blog/integration-testing-for-software-releases/

0 notes

Text

Replace C language! Many Python developers are joining the Rust team

In the future, more and more libraries will use Python as the front end (improving programming efficiency) and Rust as the back end (improving performance).


Rust is replacing C as the “backend” for high-performance Python packages. What is the reason behind this?

First, let’s consider the motivation. Python is easy to write but slow to execute. That makes data processing libraries especially hard to write, because it is difficult to get high performance out of pure Python. Yet Python is the primary language for machine learning and data engineering. So when you try to write a library for data engineers or machine learning engineers, you run into the following problem:

Although we need to write APIs in Python, high-performance data processing tasks cannot be done solely in Python. This means that you have the following options for writing a library:

Either you learn and use C, or someone else learns C, writes a library, and you rely on that library to perform low-level operations. Someone familiar with C might ask, “Is there anything wrong with this?” Many library authors outsource their numerical computation to NumPy or SciPy, and you might think, “I can learn C.”

However, the situation is not so ideal. It’s convenient to outsource some tasks to libraries like NumPy, SciPy, etc., but it requires all functions to be vectorized and you can’t write your code in a for loop. You also have to worry about certain operations being blocked by the Global Interpreter Lock (GIL), and there are various other issues. Not everything you want to do can be easily found in a library that already exists.

So there is another option: write the library from scratch in C and add the Python bindings afterwards. However, if you come from a Python background, C feels very low-level, and learning the language takes effort. Null pointer dereferences, buffer overflows, memory leaks… these are traps you can fall into when using C, and they are unfamiliar to programmers whose first language was Python.

Rust

Rust is fast and manages memory efficiently without a garbage collector, and its ownership rules catch data races at compile time, which makes concurrent and parallel programming easier. Rust has great tools, a friendly compiler, and a large and active developer community. Rust makes your programs faster and lets you make more friends while learning.

Most importantly, Rust is easier to learn than C for Python developers.

It offers a better beginner experience and makes it easier for newcomers to write “safe” code. The learning curve is smoother, allowing you to gradually master more advanced language features over time.

As a result, over the past few years more and more high-performance libraries have chosen Python as their front end and Rust as their back end. Examples:

Lance: A high-performance, low-cost vector database. Founders Chang She and Lei Xu originally wrote the codebase in C++, but decided to switch to Rust so they would no longer need to work with CMake. Here’s why Chang made this decision.

“The decision to switch from C++ to Rust was because I could work more efficiently, without losing performance, and didn’t have to deal with CMake. I basically started learning Rust from scratch, and while I was learning it, Lei and I rewrote about 4 months’ worth of C++ code in Rust. Each time we write and release a new feature in Rust, we become more confident that we won’t get a segfault every other command. I don’t have to worry about it happening.”

Not only is Rust well-suited for data processing, it can also serve as a backend for many other Python packages with high performance requirements.

Pydantic: A Python validation library for developers. The Pydantic team rewrote the second version in Rust and saw a 20x performance improvement for even simple models. Besides performance improvements, Rust has several other benefits. Pydantic founder Samuel Colvin cites several advantages.

“Another thing about Rust is that in addition to its speed, code written in Rust is usually easier to use and maintain. In particular, Rust catches and handles all possible errors, whereas the Python (and TypeScript) type systems tend to ignore these errors, so I have no idea which exceptions can be raised in which situations when calling ‘foobar()’. Basically I have to try iteratively to find possible failures.”

Combining Python and Rust

In the future, more and more libraries will use Python as the front end and Rust as the back end. Overall, Python developers today have a better and smoother approach to building high-performance libraries.

0 notes

Text

Windows File Handles

Need to rant about an issue I've come across today -- Windows has far too many types of file handle.

First you have the stdc and win32 FILE* and HANDLE respectively. Having these two be separate made sense historically (and still somewhat nowadays) as DOS and Win32 applications were broadly structured as separate -- with the latter preferring Win32-specific solutions as stdc was/is viewed as being TUI-centric with wholly graphical applications being Windows' original purpose; people would just drop back to DOS to use console applications. Whilst a tad annoying to have two -- the historical reasoning wasn't entirely without reason.

Moving onto the first of the weirder ones -- HFILE-oriented functions (_lopen, _hread/_lread etc.) deal with their own handle type intended only for compat with these functions in 16-bit Windows with its different handle width and IO function implementations. This would be fine so far as compat went but there is no reason these handles needed to be entirely disconnected from Win32 HANDLEs as it actively breaks compat with functions now designated for "low level IO handles" (which we'll address later). This means that this set of functions operate on a handle type needlessly distinct from the other Win32 HANDLE type -- they're both literally just integers -- in the name of "compatibility" which got broken a few years later anyway; API design and maintenance, ladies and gentlemen.

Now for "low level IO handles" -- otherwise known as FDs. These are a type of file handle stored purely as int and used with what are now seen as the POSIX compatibility functions introduced into NT's unix subsystem -- eventually incorporated into Win32/the Windows CRT directly. These are your _read, _fstat, _fdopen etc. (Microsoft's hypocritical approach to C compliance with these stupid "oldname" techniques is a matter for another day). However -- when HFILE functions were still broadly used, there were some unix-y functions provided with many DOS C libraries which could work with them (such as fstat f.e.). So when these unix-y functions were introduced they were compatible with the very similar DOS functions which already had unix-y compat and stored their handles as ints right? Of course not. The new UNIX-y compatibility functions effectively overrode the old DOS versions and replaced the kind of file handle they consume -- thus leading to a compatibility breakage with a class of functions whose continued existence was solely in the name of compatibility. Great job Microsoft.
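For readers less familiar with the FD layer, the split between a bare int descriptor and a stream object wrapped around it can be seen from Python, whose os module exposes thin equivalents of the open/fstat/fdopen family. This is only an analogy sketched under that assumption: Python's stream objects are not C FILE* values, and the temporary file name is made up for the demo.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo.txt")

# Low-level descriptor: a plain int, as with the _open/_read family.
fd = os.open(path, os.O_CREAT | os.O_WRONLY)
assert isinstance(fd, int)
os.write(fd, b"hello")
os.close(fd)

# Reopen, query the raw descriptor, then wrap it in a buffered stream (cf. _fdopen).
fd = os.open(path, os.O_RDONLY)
size = os.fstat(fd).st_size  # fstat works directly on the descriptor
with os.fdopen(fd, "rb") as stream:  # the stream now owns fd and closes it on exit
    data = stream.read()
    same_fd = stream.fileno()  # the descriptor is still recoverable from the stream

print(size, data, same_fd == fd)
```

On Windows specifically, msvcrt.get_osfhandle(fd) returns the Win32 HANDLE behind a descriptor, which is one more layer in the same stack of handle types.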

Then we have the WME/WinMM HMMIO class of file functions. I'm not going to go into too much detail on WME here because we'd be here all day -- but basically it was Windows 3's version of the "multimedia OS" craze which would come to dominate the 90s (and was more effectively realised in 9x). One aspect of this led to a separate IO system supporting memory files, file cookie-like behaviour so one could implement specific file formats via standard handles (as well as effective CD usage) and of course normal file IO. HMMIO files are not compatible with any other kind of file handle and have their own set of functions for interaction -- add another to the pile.

These are all that I've encountered personally (at least recently) in Windows' native system APIs when doing work on AftGangAglay (https://aftgangaglay.github.io/). There may be more kinds of handle I've missed/forgotten (I seem to remember a COM-based file stream interface but I can't find it at time of writing) but it's certainly more than enough for MS to reconsider what they're up to. My entire project is founded on the continued efforts of software systems to maintain compatibility, which is a noble goal; however, as we've seen, some of these were introduced only to be abandoned instead of simply extending existing behaviours in the first place -- or were overridden by newer behaviours. Microsoft seems to be allergic to using existing standards in modern Windows -- but it all really got started in the Windows 3 era, when they started rampantly overriding stdc/unix-y functionality they already had in MS-DOS with their own inferior API, on the ill-formed perception that compatibility with a standard would adversely affect how programmers wrote for their principally graphical system.


Upgaming Enters into a Strategic Partnership with Slotegrator

Leading enterprise solutions provider Upgaming is making waves once again, entering into a strategic partnership with Slotegrator, a respected name in iGaming game aggregation. Through this collaboration, Upgaming’s already robust game aggregation software will become even richer with the addition of Slotegrator’s extensive game content, known as APIgrator. This means Upgaming’s users can now explore a wider variety of games, from classic slots to live dealer tables, sourced from over 100 global providers.

For Upgaming, this partnership represents a key step forward in its mission to deliver a world-class gaming experience. By integrating Slotegrator’s diverse portfolio, Upgaming can offer clients a broader range of options, catering to different player preferences and enhancing the overall value of its platform. Slotegrator’s APIgrator is a comprehensive solution that brings quality and variety in one package, allowing Upgaming to expand its game library quickly and effectively.

This move is especially timely for Upgaming, whose game aggregation software has already gained industry-wide recognition. Winning the SiGMA Eurasia 2023 award and earning a shortlisting as the “Best Aggregator 2024” at the SiGMA Europe Awards, Upgaming has solidified its reputation as a trusted, innovative solution provider. Adding Slotegrator’s offerings further boosts Upgaming’s competitive edge, making its platform more appealing to new and existing clients alike.

Another advantage of Upgaming’s aggregation platform is its simple, efficient integration process. Whether clients are looking to add the casino aggregation software to their existing platform via Casino API or opting for the full Enterprise iGaming platform, Upgaming’s solution can be seamlessly incorporated in just a matter of days. This ease of access allows operators to quickly elevate their game selection without lengthy setup times.

This strategic partnership underscores Upgaming and Slotegrator’s shared commitment to growth and innovation in the iGaming industry. By joining forces, they’re setting a new standard for what a well-rounded, high-quality game library should look like. With the promise of fresh, engaging content and a streamlined integration process, Upgaming and Slotegrator are empowering operators to deliver an unmatched gaming experience to players worldwide.

With this collaboration, Upgaming continues its trajectory of success, enhancing its platform and ensuring its clients always have access to top-tier game content that engages and excites. To get more information about the ongoing partnership, read the article from the original source: Upgaming Enters into a Strategic Partnership with Slotegrator.
